Abstract

Background:

Physician scores on examinations decline with time after graduation. However, whether this translates into declining quality of care is unknown. Our objective was to determine how physician experience is associated with negative outcomes for patients admitted to hospital.

Methods:

We conducted a retrospective cohort study involving all patients admitted to general internal medicine wards over a 2-year period at all 7 teaching hospitals in Alberta, Canada. We used files from the Alberta College of Physicians and Surgeons to determine the number of years since medical school graduation for each patient’s most responsible physician. Our primary outcome was the composite of in-hospital death, or readmission or death within 30 days postdischarge.

Results:

We identified 10 046 patients who were cared for by 149 physicians. Patient characteristics were similar across physician experience strata, as were primary outcome rates (17.4% for patients whose care was managed by physicians in the highest quartile of experience, compared with 18.8% in those receiving care from the least experienced physicians; adjusted odds ratio [OR] 0.88, 95% confidence interval [CI] 0.72–1.06). Outcomes were similar between experience quartiles when further stratified by physician volume, most responsible diagnosis or complexity of the patient’s condition. Although we found substantial variability in length of stay between individual physicians, there were no significant differences between physician experience quartiles (mean adjusted for patient covariates and accounting for intraphysician clustering: 7.90 [95% CI 7.39–8.42] d for most experienced quartile; 7.63 [95% CI 7.13–8.14] d for least experienced quartile).

Interpretation:

For patients admitted to general internal medicine teaching wards, we saw no negative association between physician experience and outcomes commonly used as proxies for quality of inpatient care.

Many jurisdictions have instituted compulsory recertification of physicians on the assumption that quality of care declines with experience. Although a systematic review reported that 32 of 62 studies found decreasing performance with increasing physician experience, most of these studies evaluated performance on examinations or hypothetical vignettes rather than actual quality of care provided to patients, and most of the studies were done decades ago, before the widespread availability of tools to readily facilitate evidence-based medicine.1

Experience is strongly related to better outcomes in surgery and obstetrics, but studies examining the association between physician experience and quality of care for medical patients have reported mixed results.1–8 Many of the studies reporting an inverse association between experience and quality of care have focused on the provision of “guideline recommended tests or therapies” as a proxy for quality of care. However, guideline recommendations might not be appropriate in every situation.

An evaluation of broader quality metrics may be more appropriate to answer this question. For example, in-hospital mortality and readmission rates or mortality postdischarge are commonly used as markers for quality of inpatient care, are endorsed by the Centers for Medicare & Medicaid Services and are included in the Patient Protection and Affordable Care Act.9,10 However, to our knowledge, few studies have examined the association between these broader quality metrics and physician experience, and these studies have been limited. They either focused on single diagnoses,11 excluded older adult patients,2 examined data from only 1 hospital8 or combined data7 for both surgeons and physicians.

Patients admitted to general internal medicine services at Alberta teaching hospitals are distributed between wards purely on the basis of bed availability, and attending physicians rotate every 1–4 weeks. For these reasons, the distribution of patients between attending physicians is quasirandom. We took advantage of this natural experiment to evaluate the association between attending physician experience (years since medical school graduation) and outcomes for patients admitted to general internal medicine wards in Alberta.

Methods

Data sources

This study complied with the guidelines of the Declaration of Helsinki and was approved by the Health Research Ethics Board at the University of Alberta; the need for patient-level informed consent was waived.

We used deidentified linked data from the Alberta Health Discharge Abstract Database, the Alberta Health Care Insurance Plan Registry, and the Ambulatory Care Database. We used the Alberta College of Physicians and Surgeons master file to identify the year of graduation for each attending physician (www.cpsa.ab.ca).

Study cohort

We identified all adults with an admission to general internal medicine services at the 7 Alberta teaching hospitals between Oct. 1, 2009, and Sept. 30, 2010 (the 12 months before a reorganization of care at one of the teaching hospitals, the General Internal Medicine Care Transformation, was implemented), and between Apr. 1, 2011, and Mar. 31, 2012 (the 12 months after the change). As previously reported,12 the General Internal Medicine Care Transformation did not affect our primary outcome: in-hospital mortality and rate of death or readmission in the first 30 days postdischarge. All 7 teaching hospitals are located in either Edmonton (4) or Calgary (3).

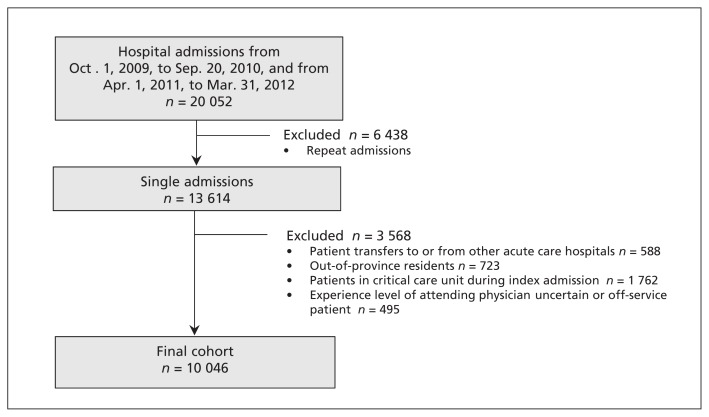

We excluded patients from out of province, patients who were transferred from or to another inpatient service (e.g., the intensive care unit, a different service in the same hospital, another acute care hospital or a rehabilitation hospital) and patients with lengths of stay greater than 30 days. In addition, because readmitted patients tend to be readmitted directly to the service that discharged them (thereby potentially introducing a selection bias because readmitted patients have poorer outcomes than first-time admissions), we only collected data for the first admission for any patient during the study period (Figure 1).

Figure 1:

Derivation of the study cohort.

Design

The general internal medicine services at all 7 teaching hospitals are structured similarly (with Royal College of Physicians and Surgeons of Canada–accredited clinical teaching units run by attending staff and learners at multiple levels of training) and admit undifferentiated medical patients from the emergency department.

Covariates

Patient comorbidities were identified using diagnostic codes from the International Classification of Diseases, 9th revision (Clinical Modification) and International Classification of Diseases, 10th revision, from the index admission and all other admissions, visits to emergency departments or ambulatory care visits in the 12 months before their index admission. The accuracy of these data has been previously validated in Alberta databases.13,14

We derived Charlson Comorbidity Index scores and calculated the LACE score for each patient at the time of discharge from their index admission to hospital. LACE is a 4-item score that was derived15 in a prospective cohort study in Ontario and subsequently validated in Alberta.16 The score incorporates length of stay for the index admission to hospital (L), acuity of admission (A), Charlson Comorbidity Index score (C) and emergency department use in the previous 6 months (E). The LACE score can be used for risk-adjusting outcomes in the first 30 days after discharge from hospital. Each patient was also assigned a resource intensity weight by health authority nosologists independent of our study. This measure is a multiplier indicating how many resources a patient required compared with an average inpatient in the same province. An individual patient's resource intensity weight is higher if their length of stay exceeds that expected for their disease-related group or if they require additional procedures, such as thoracentesis, paracentesis, biopsy, feeding tube insertion or total parenteral nutrition.
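The 4 LACE components can be combined into a single score as in the sketch below. The point bands are not given in this paper; they are the bands we understand from the original derivation study (reference 15) and should be checked against that source before use. All function and parameter names are hypothetical.

```python
# Point bands assumed from the LACE derivation study (ref. 15); verify before use.

def length_points(los_days: int) -> int:
    """L: length of stay of the index admission, in whole days."""
    if los_days < 1:
        return 0
    if los_days <= 3:
        return los_days  # 1, 2 or 3 days score 1, 2 or 3 points
    if los_days <= 6:
        return 4
    if los_days <= 13:
        return 5
    return 7  # 14 days or longer


def acuity_points(emergent: bool) -> int:
    """A: 3 points if the admission was emergent (unplanned)."""
    return 3 if emergent else 0


def comorbidity_points(charlson: int) -> int:
    """C: Charlson score 0-3 scores as-is; a score of 4 or more scores 5 points."""
    return charlson if charlson <= 3 else 5


def ed_points(ed_visits_6_months: int) -> int:
    """E: emergency department visits in the previous 6 months, capped at 4 points."""
    return min(ed_visits_6_months, 4)


def lace_score(los_days: int, emergent: bool, charlson: int, ed_visits_6_months: int) -> int:
    """Total LACE score, ranging from 0 to 19 under the assumed bands."""
    return (length_points(los_days) + acuity_points(emergent)
            + comorbidity_points(charlson) + ed_points(ed_visits_6_months))


print(lace_score(7, True, 2, 1))  # 5 + 3 + 2 + 1, prints 11
```

Splitting the score into one function per component keeps each band easy to audit against the published scoring table.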

Outcomes

Our primary end point — death during the index admission, or death or readmission within 30 days after discharge — was designed to account for competing risks and can be adjusted for risk using the LACE score. In addition, we explored differences in length of stay across quartiles of physician experience both in terms of observed and expected lengths of stay for each patient during the study period. We generated expected length of stay for each patient independent of our study using Canadian Institute for Health Information estimates, which take into account case mix group, age and inpatient resource intensity weights (see www.cihi.ca). The estimates are based on the most current 2 years of information available in the Canadian Institute for Health Information Discharge Abstract Database for all acute care hospitals in Canada.
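A minimal sketch of how the composite primary end point could be derived for one patient from linked records follows. The record fields are hypothetical, and treating the 30-day window as running from the day of discharge through day 30 inclusive is our assumption; the paper does not state how window boundaries were handled.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

FOLLOW_UP_DAYS = 30  # window length from the text; inclusive boundaries are an assumption


@dataclass
class IndexAdmission:
    discharge_date: date
    died_in_hospital: bool
    post_discharge_death: Optional[date] = None  # date of death after discharge, if any
    first_readmission: Optional[date] = None     # date of first readmission, if any


def within_follow_up(event: Optional[date], discharge: date) -> bool:
    """True if the event falls 0 to 30 days after discharge (inclusive, by assumption)."""
    if event is None:
        return False
    return 0 <= (event - discharge).days <= FOLLOW_UP_DAYS


def primary_outcome(adm: IndexAdmission) -> bool:
    """Death during the index admission, or death or readmission within 30 days."""
    if adm.died_in_hospital:
        return True  # in-hospital death counts directly, so it cannot compete with readmission
    return (within_follow_up(adm.post_discharge_death, adm.discharge_date)
            or within_follow_up(adm.first_readmission, adm.discharge_date))


early = IndexAdmission(date(2011, 1, 10), False, first_readmission=date(2011, 1, 30))
late = IndexAdmission(date(2011, 1, 10), False, first_readmission=date(2011, 2, 15))
print(primary_outcome(early), primary_outcome(late))  # prints True False
```

Folding death into the end point is what lets the composite sidestep the competing-risk problem: a patient who dies cannot be "protected" from readmission in the outcome count.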

Statistical analyses

We evaluated the association between patient outcomes and attending physician experience, stratified into quartiles based on years since medical school graduation. All general internal medicine physicians had to complete at least 4 years of postgraduate training in internal medicine before being licensed in Alberta.
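Table 1 reports the resulting quartile bands. The sketch below assigns a physician to a quartile given whole years since graduation. How the cutpoints themselves were derived (for example, whether quartiles were computed over physicians or over patients) is not stated, so the sketch simply hard-codes the reported bands; the names are hypothetical.

```python
import bisect

# Upper bounds (years since graduation) of quartiles 1-3, as reported in Table 1:
# quartile 1 = 4-10 yr, 2 = 11-17 yr, 3 = 18-22 yr, 4 = 23 yr or more.
QUARTILE_UPPER_BOUNDS = [10, 17, 22]


def experience_quartile(years_since_graduation: int) -> int:
    """Map whole years since graduation to quartile 1-4 using the Table 1 bands."""
    return bisect.bisect_left(QUARTILE_UPPER_BOUNDS, years_since_graduation) + 1


print([experience_quartile(y) for y in (4, 10, 11, 22, 23, 55)])  # prints [1, 1, 2, 3, 4, 4]
```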

We treated physician experience quartile as a categorical variable to allow for nonlinearity and compared patient characteristics across quartiles using standard analysis of variance for continuous variables and χ2 tests for categorical variables. We calculated adjusted odds ratios (ORs) and corresponding 95% confidence intervals (CIs) comparing physician experience quartiles using logistic generalized linear mixed models, treating physician as a random effect and adjusting for age, sex, LACE score (Charlson Comorbidity Index score for the outcome of in-hospital death) and hospital as fixed effects. We modelled length of stay similarly, but with an identity (linear) link function. We calculated adjusted length of stay for each physician experience quartile using least squares means, with physician as a random effect and age, sex, Charlson Comorbidity Index score, hospital, time period (2011–2012 v. 2009–2010) and the interaction between hospital and time period as fixed effects. As a sensitivity analysis, we reran the models with years of physician experience as a continuous variable rather than as quartiles.
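To illustrate the χ2 comparison of a categorical characteristic across the 4 quartiles, the sketch below recomputes the test for male sex from the counts in Table 1. The authors used SAS; this standalone Python version is only an illustration, and it uses the closed-form upper tail of the χ2 distribution with 3 degrees of freedom (a 2 × 4 table) to avoid external dependencies.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    return stat


def chi2_sf_3df(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 3 df."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Men and women by physician experience quartile (counts from Table 1).
men = [1192, 1271, 1428, 1262]
totals = [2394, 2471, 2720, 2461]
women = [total - m for total, m in zip(totals, men)]

stat = chi_square_statistic([men, women])
p_value = chi2_sf_3df(stat)
print(round(stat, 2), round(p_value, 2))  # prints 3.77 0.29
```

The result agrees with the p value of 0.3 reported for male sex in Table 1.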

We undertook 3 preplanned sensitivity analyses. First, we stratified patients by complexity of admission (using resource intensity weights) into high, medium and low complexity categories. Second, to create more similar patient cohorts across physician groups, we examined the experience–outcome associations in a subcohort of patients admitted to hospital with one of the 5 most common discharge diagnoses from general internal medicine wards in Alberta (i.e., chronic obstructive pulmonary disease, pneumonia, heart failure, urinary tract infection and venous thromboembolism). Third, because inpatient volume is associated with length of stay,17 and to account for a potential interaction between physician experience and volume, we dichotomized physicians by their total patient volume over the 2 years we studied and performed our primary analysis within each subgroup independently. Physicians with more admissions than the median were classified as “higher volume,” and those with the median number of admissions or fewer as “lower volume.” All statistical analyses were done using SAS version 9.4 (SAS Institute, Cary, NC).
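The volume dichotomization rule, in which physicians exactly at the median fall into the lower-volume group, can be sketched as follows. The physician identifiers and admission counts in the example are hypothetical.

```python
from statistics import median


def classify_volume(admissions_by_physician: dict[str, int]) -> dict[str, str]:
    """Split physicians at the median admission count; ties at the median are 'lower volume'."""
    cutoff = median(admissions_by_physician.values())
    return {
        physician: "higher volume" if count > cutoff else "lower volume"
        for physician, count in admissions_by_physician.items()
    }


# Hypothetical physicians and 2-year admission counts.
example = {"phys_A": 40, "phys_B": 55, "phys_C": 70}
print(classify_volume(example))
# prints {'phys_A': 'lower volume', 'phys_B': 'lower volume', 'phys_C': 'higher volume'}
```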

Results

We identified 10 046 patients (mean age 63.4 [standard deviation 19.5] yr; 51.3% men) who were admitted to a general internal medicine teaching ward during the study period, met our inclusion criteria (Figure 1) and were cared for by 149 physicians. Physician experience ranged from 4 to 55 years (median 17 [interquartile range 10–25] yr). Although some comorbidities (such as hypertension and diabetes) were more common in patients cared for by the least experienced clinicians, others (such as liver disease and cerebrovascular disease) were more common in patients cared for by more experienced clinicians. Overall, general estimates of patient severity (i.e., Charlson Comorbidity Index scores, resource intensity weights, LACE scores and discharge dispositions) were similar across strata of physician experience (Table 1).

Table 1:

Characteristics of study participants, by quartile of physician experience

| Characteristic | Overall (149 physicians) | Quartile 1 (4–10 yr; 40 physicians) | Quartile 2 (11–17 yr; 38 physicians) | Quartile 3 (18–22 yr; 33 physicians) | Quartile 4 (≥ 23 yr; 38 physicians) | p value* |
|---|---|---|---|---|---|---|
| No. of patients | 10 046 | 2394 | 2471 | 2720 | 2461 | |
| Age, yr, mean ± SD | 63.4 ± 19.5 | 64.1 ± 19.1 | 62.8 ± 19.5 | 62.3 ± 19.7 | 64.5 ± 19.6 | < 0.001 |
| Male sex, no. (%) | 5153 (51.3) | 1192 (49.8) | 1271 (51.4) | 1428 (52.5) | 1262 (51.3) | 0.3 |
| Top 5 most responsible diagnoses, no. (%) | 2506 (24.9) | 648 (27.1) | 612 (24.8) | 656 (24.1) | 590 (24.0) | 0.045† |
| COPD | 766 (7.6) | 221 (9.2) | 187 (7.6) | 176 (6.5) | 182 (7.4) | |
| Pneumonia | 512 (5.1) | 135 (5.6) | 118 (4.8) | 148 (5.4) | 111 (4.5) | |
| Heart failure | 517 (5.1) | 120 (5.0) | 120 (4.9) | 130 (4.8) | 147 (6.0) | |
| Urinary tract infection | 364 (3.6) | 104 (4.3) | 98 (4.0) | 92 (3.4) | 70 (2.8) | |
| Venous thromboembolism | 347 (3.5) | 68 (2.8) | 89 (3.6) | 110 (4.0) | 80 (3.3) | |
| Diagnosis fields populated in Discharge Abstract Database, no., mean (SD) | 7.4 (4.6) | 7.7 (4.8) | 7.7 (4.8) | 7.0 (4.5) | 7.4 (4.4) | < 0.001 |
| Charlson Comorbidity Index score, mean ± SD | 2.6 ± 3.2 | 2.7 ± 2.9 | 2.7 ± 3.3 | 2.6 ± 3.5 | 2.6 ± 3.1 | 0.4 |
| Features of index admission, mean ± SD | | | | | | |
| Resource intensity weight‡ | 1.4 ± 1.2 | 1.4 ± 1.2 | 1.3 ± 1.3 | 1.4 ± 1.1 | 1.4 ± 1.2 | 0.5 |
| LACE score | 10.4 ± 3.1 | 10.6 ± 3.1 | 10.2 ± 3.1 | 10.4 ± 3.1 | 10.6 ± 3.0 | < 0.001 |
| Expected length of stay, d | 7.3 ± 5.2 | 7.6 ± 5.8 | 7.2 ± 5.3 | 7.3 ± 5.0 | 7.2 ± 4.6 | 0.03 |
| Actual length of stay, d | 7.6 ± 6.2 | 7.6 ± 6.2 | 7.1 ± 6.1 | 7.6 ± 6.0 | 8.2 ± 6.3 | < 0.001 |
| Discharge disposition, no. (%) | | | | | | 0.2§ |
| Death | 541 (5.4) | 133 (5.6) | 135 (5.5) | 137 (5.0) | 136 (5.5) | |
| Transferred to facility with inpatient care | 241 (2.4) | 66 (2.8) | 47 (1.9) | 60 (2.2) | 68 (2.8) | |
| Transferred to long-term care facility | 700 (7.0) | 169 (7.1) | 163 (6.6) | 190 (7.0) | 178 (7.2) | |
| Transferred to hospice | 32 (0.3) | 4 (0.2) | 9 (0.4) | 8 (0.3) | 11 (0.4) | |
| Discharged home with support services | 1688 (16.8) | 388 (16.2) | 419 (17.0) | 428 (15.7) | 453 (18.4) | |
| Discharged home | 6645 (66.1) | 1581 (66.0) | 1655 (67.0) | 1846 (67.9) | 1563 (63.5) | |
| Left against medical advice | 199 (2.0) | 53 (2.2) | 43 (1.7) | 51 (1.9) | 52 (2.1) | |

Note: COPD = chronic obstructive pulmonary disease, LACE = length of stay, acuity of admission, comorbidities and emergency department visits, SD = standard deviation.

*Calculated using analysis of variance (continuous variables) or χ2 test (binary and categorical variables).

†p value comparing the overall proportion of discharges (binary) with a “top 5” diagnosis across physician experience groups.

‡Resource intensity weight values provide a measure of a patient’s relative resource consumption compared with the cost of an average typical inpatient and are generated by the Canadian Institute for Health Information for each case mix group.

§Overall p value for discharge disposition (categorical) across physician experience groups.

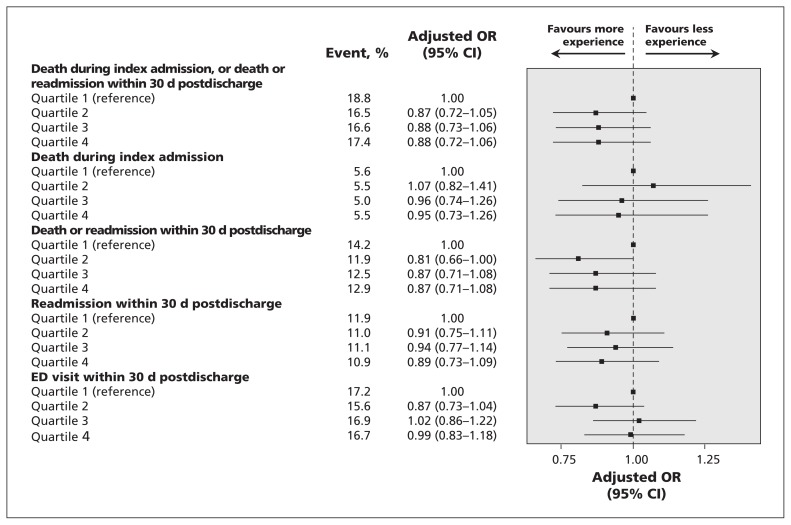

All of the patient outcomes we examined were similar across physician experience quartiles (Table 2). For example, 17.4% of patients whose care was managed by physicians in the highest quartile of experience died in hospital or were readmitted or died within 30 days of discharge (our primary outcome), compared with 18.8% of those cared for by the least experienced physicians (p = 0.24, Table 2). Even after covariate adjustment, there were no significant differences in outcomes across physician experience strata (e.g., primary outcome adjusted OR 0.88 [95% CI 0.72–1.06] for patients cared for by the most experienced physicians compared with those cared for by the least experienced physicians) (Figure 2). We found similar results when we considered physician experience as a continuous variable (primary outcome adjusted OR 0.997 [95% CI 0.991–1.004], p = 0.45 for each 1-year increase in physician experience).
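Because the continuous-variable model reports an OR per year of experience, the OR implied for a longer span follows by exponentiation, assuming the per-year effect is constant on the log-odds scale (the usual assumption when experience enters a logistic model linearly). The sketch below uses an illustrative 10-year span; this is arithmetic on the reported estimate rather than an analysis the authors report, and exponentiating the CI bounds this way gives only a rough interval.

```python
import math


def or_over_years(or_per_year: float, years: float) -> float:
    """OR implied for a difference of `years`, assuming a constant per-year log-odds effect."""
    return math.exp(years * math.log(or_per_year))


# Reported per-year adjusted OR and its 95% CI bounds; 10 years is an illustrative span.
for label, per_year in [("estimate", 0.997), ("lower CI", 0.991), ("upper CI", 1.004)]:
    print(label, round(or_over_years(per_year, 10), 3))
```

Even over a decade of additional experience, the implied interval still spans 1, in line with the null finding.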

Table 2:

Outcomes during the index admission and during the first 30 days postdischarge

| Outcome | Overall, % | Quartile 1 (4–10 yr), %* | Quartile 2 (11–17 yr), %* | Quartile 3 (18–22 yr), %* | Quartile 4 (≥ 23 yr), %* | p value |
|---|---|---|---|---|---|---|
| Death during index admission, or death or readmission within 30 d postdischarge | 17.3 | 18.8 | 16.5 | 16.6 | 17.4 | 0.2 |
| Death during index admission | 5.4 | 5.6 | 5.5 | 5.0 | 5.5 | 0.8 |
| Death or readmission within 30 d for any cause†‡ | 12.8 | 14.2 | 11.9 | 12.5 | 12.9 | 0.2 |
| Readmission within 30 d for any cause‡ | 11.2 | 11.9 | 11.0 | 11.1 | 10.9 | 0.7 |
| Death within 30 d postdischarge†‡ | 3.0 | 3.6 | 2.3 | 3.1 | 2.9 | 0.1 |
| Emergency department visit within 30 d postdischarge‡ | 16.6 | 17.2 | 15.6 | 16.9 | 16.7 | 0.5 |
| Discharged back to same level of care as preadmission‡ | 93.1 | 92.8 | 94.1 | 93.2 | 92.5 | 0.2 |
| Outpatient physician visit within 30 d of discharge† | 72.2 | 72.9 | 71.1 | 72.1 | 72.6 | 0.6 |
| No. of outpatient physician visits in the first 30 d postdischarge, mean ± SD‡ | 2.53 ± 1.98 | 2.57 ± 2.02 | 2.53 ± 2.03 | 2.51 ± 1.89 | 2.53 ± 1.97 | 0.8 |
| Length of stay, d (95% CI) | | | | | | |
| Mean actual | 7.62 (7.50–7.74) | 7.62 (7.37–7.87) | 7.10 (6.86–7.34) | 7.55 (7.33–7.78) | 8.22 (7.97–8.47) | < 0.001 |
| Physician-adjusted actual§ | – | 7.30 (6.64–7.95) | 7.53 (6.88–8.19) | 7.53 (6.84–8.22) | 8.04 (7.37–8.71) | 0.5 |
| Patient- and physician-adjusted actual¶ | – | 7.63 (7.13–8.14) | 7.71 (7.21–8.22) | 7.82 (7.30–8.34) | 7.90 (7.39–8.42) | 0.9 |

*Unless otherwise stated.

†Mortality data postdischarge are not available for patients discharged after Dec. 1, 2011; these patients are therefore excluded from this calculation.

‡Denominator excludes patients who died during the index admission to hospital.

§Physician-adjusted length of stay obtained from a generalized linear mixed model containing physician experience group (fixed effect) and controlling for physician as a random effect.

¶Patient- and physician-adjusted length of stay obtained from the same generalized linear mixed model with physician as a random effect, but also including age, sex, Charlson Comorbidity Index score, hospital, time period (2011–2012 v. 2009–2010) and the hospital × time period interaction.

Figure 2:

Patient outcomes across quartiles of physician experience. The referent group for each comparison is patients cared for by the least experienced physician quartile (quartile 1). Odds ratios less than 1 favour physicians with more experience. Adjusted odds ratios were obtained using logistic generalized linear mixed models, with physician as a random effect and patient age, sex, hospital and LACE score as fixed effects (for death during the index admission, Charlson Comorbidity Index score was used instead of LACE score). CI = confidence interval; LACE = length of stay, acuity of admission, comorbidities and emergency department (ED) visits; OR = odds ratio.

Mean length of stay was longer for patients cared for by the most experienced clinicians than for those cared for by less experienced physicians (Table 2), but length of stay varied substantially between individual physicians within each experience quartile. After we adjusted for patient covariates and accounted for clustering of patients within physicians, the differences between quartiles were no longer significant (mean adjusted length of stay 7.90 [95% CI 7.39–8.42] d for the most experienced quartile v. 7.63 [95% CI 7.13–8.14] d for the least experienced quartile, p = 0.90).
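Why accounting for clustering can render an unadjusted difference nonsignificant can be illustrated with the Kish design effect, 1 + (m − 1) × ICC, where m is the mean number of patients per physician and ICC is the intraclass correlation of the outcome within physicians. This is rough intuition only: the authors used mixed models rather than a design-effect correction, and the ICC values below are hypothetical because the paper does not report them.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Kish design effect: variance inflation from clustering, 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


patients, physicians = 10046, 149
mean_patients_per_physician = patients / physicians  # roughly 67

for icc in (0.01, 0.05):  # hypothetical ICC values, not reported in the paper
    deff = design_effect(mean_patients_per_physician, icc)
    effective_n = patients / deff
    print(f"ICC {icc}: design effect {deff:.2f}, effective sample size {effective_n:.0f}")
```

When each physician contributes dozens of patients, even a small ICC inflates the variance of between-quartile comparisons considerably, which widens the CIs around adjusted length of stay.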

Sensitivity analyses

Our preplanned sensitivity analyses examining subgroups defined by patient complexity, most responsible diagnosis and physician volume yielded results similar to those of our main analyses, with no significant differences between physician experience quartiles.

Although out-of-hospital death is a potential competing risk for each of the secondary outcomes, we felt its effect was unlikely to bias our results because it was uncommon (3.0% overall; Table 2), it did not differ significantly between the experience quartiles (p = 0.13), and almost all patients who died outside of hospital had first visited a hospital (30.6%), an emergency department (32.8%) or an outpatient physician (36.7%).

Discussion

We found that, at least in teaching hospitals, physician experience was not negatively associated with commonly cited proxies for quality of inpatient care. Moreover, although there were apparent differences in lengths of stay — with more experienced physicians keeping patients longer — the differences were not significant after adjusting for differences in patient case mix. These results are similar to those of Goodwin and colleagues in their study on variability in length of stay and in-hospital mortality.18

Our findings are consistent with those of Burns and Wholey,7 who found that physician experience was not associated with inpatient mortality, and those of Reid and colleagues,2 who found that although care patterns vary, most of this variation is due to case mix differences. Very little variation (< 3%) was attributable to physician characteristics.2 Although Norcini and colleagues11 reported that there was a significant positive association between years of physician experience and inpatient mortality for patients with acute myocardial infarction, the effect size was minimal: nearly 100 times smaller than the impact of physician specialty and more than 1000 times smaller than the effect of patient characteristics.

Southern and colleagues8 reported significant associations between increasing physician experience, longer length of stay and higher mortality; however, their analysis was based on 6572 admissions (not unique patients), 59 physicians and 1 hospital. We examined data from 7 hospitals, 149 physicians and more than 10 000 unique patients. In addition, we had 90% power to exclude a 20% relative difference between the lowest and highest physician experience quartiles for in-hospital mortality or death or readmission within 30 days of discharge, a difference deemed clinically important by the Centers for Medicare & Medicaid Services.9,10
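The stated power can be roughly checked with a normal-approximation power calculation for comparing 2 independent proportions, using the quartile 1 primary outcome rate (18.8%), a 20% relative reduction and the quartile sample sizes in Table 1. This sketch ignores clustering of patients within physicians and covariate adjustment, both of which would lower the effective power, and the paper does not describe how its own power calculation was done.

```python
import math
from statistics import NormalDist


def two_proportion_power(p1, p2, n1, n2, alpha=0.05):
    """Approximate power of a two-sided z-test for two independent proportions."""
    std_normal = NormalDist()
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = abs(p1 - p2) / se
    # Probability of rejecting in either tail under the alternative.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


p_q1 = 0.188            # primary outcome rate, quartile 1 (Table 2)
p_q4_alt = 0.8 * p_q1   # hypothetical 20% relative reduction
power = two_proportion_power(p_q1, p_q4_alt, n1=2394, n2=2461)  # Table 1 sizes
print(f"approximate power: {power:.2f}")
```

With these inputs the approximation comes out somewhat above 90%, consistent with the stated figure before any allowance for clustering.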

Although some may question the generalizability of our findings beyond Alberta teaching hospitals, our sociodemographics, most common admitting diagnoses, case mix, in-hospital mortality and postdischarge readmission rates are comparable to those of most hospitals elsewhere in North America.19–21 Some may speculate that an inverse association between quality and experience is more likely at nonteaching hospitals, because attending physicians at teaching hospitals are exposed to trainees and are thereby more likely to remain “up-to-date”; however, we would counter that this hypothesis should be tested rather than accepted at face value. In fact, in a recent systematic review including 15 studies that involved more than 108 000 patients, we found no substantive differences in outcomes (including length of stay, mortality and readmission rate) between general internal medicine patients admitted to nonteaching hospitals and those admitted to teaching hospitals.22 Regardless, we believe that studies should be done to examine the association between physician experience and quality of care in nonteaching hospitals.

Limitations

Our study has limitations owing to the nature of administrative data. These data do not capture clinical markers of disease severity or functional capacity. However, we adjusted for physician and patient covariates, specialty consultation and resource intensity weight during the index admission to hospital as proxies for disease severity. In addition, in our analysis of outcomes in the first 30 days after discharge, we adjusted for LACE scores, an index that incorporates length of index hospital stay and number of previous visits to the emergency department (proxy markers for frailty and disease severity).15

We did not have detailed process-of-care measures and instead used in-hospital mortality and postdischarge outcomes as proxies for quality of care. However, these quality metrics are endorsed by both the Canadian Institute for Health Information and the Centers for Medicare & Medicaid Services, and are included in the Patient Protection and Affordable Care Act.9,10 Furthermore, we would argue that a focus on process-of-care indicators may be misleading, because not all processes of care should be applied to every patient. Indeed, qualitative studies have found that experienced clinicians are more likely to deviate from guideline recommendations based on their judgement of which patients are most or least likely to benefit.23

Administrative data are not granular enough to determine whether the attending physician or the housestaff wrote discharge orders or came up with diagnoses and management plans. However, interphysician variability in length of stay was marked, suggesting that housestaff were not as influential in mitigating differences in quality of care or outcomes between attending physicians as some may assume.

We examined all-cause readmissions rather than “preventable” readmissions, recognizing that relatively few readmissions are truly preventable24,25 and that not all readmissions should be viewed as evidence of poor quality of care.26,27 Currently, no validated algorithms exist to identify preventable readmissions using administrative data alone.

We acknowledge that years in practice is a proxy for physician experience that does not address physician expertise. Previous studies have shown that physicians with higher scores on qualifying or recertification examinations provide more guideline-concordant care.28,29 Future studies should test other proxies for physician experience, including volume and complexity of case loads. Our sensitivity analyses suggest no experience–outcome differences within strata defined by volume or patient complexity, although these subgroup analyses were underpowered.

Conclusion

We found no evidence that patients under the care of more experienced clinicians working in teaching hospitals had poorer outcomes than patients cared for by less experienced clinicians. Although our findings cannot be considered definitive because our data are limited to inpatients at teaching hospitals, our results raise questions as to the assumptions underlying physician recertification programs based solely on number of years in practice. A more robust evaluation of physician-specific patient outcomes is needed to personalize maintenance of competence programs to meet the needs of individual physicians, regardless of their vintage.

Acknowledgements

This study is based in part on data provided by Alberta Health to Alberta Health Services Data Integration, Measurement, and Reporting Branch. The interpretation and conclusions contained herein are those of the researchers and do not necessarily represent the views of the Government of Alberta or Alberta Health Services. Neither the Government of Alberta, Alberta Health nor Alberta Health Services expresses any opinion in relation to this study. FAM had full access to all the data used for this study and takes responsibility for the analysis and interpretation.

Footnotes

CMAJ Podcasts: editor’s summary at https://soundcloud.com/cmajpodcasts/150316-res

Competing interests: None declared.

This article has been peer reviewed.

Disclaimer: Jayna Holroyd-Leduc is an associate editor for CMAJ and was not involved in the editorial decision-making process for this article.

Contributors: All authors designed the study; Jeffrey Bakal and Erik Youngson performed the analyses; all authors were involved in interpretation of the data; Finlay McAlister wrote the first draft of the paper, and all authors were involved in revisions and approving the final manuscript for publication.

Funding: This study was supported by operating grants from the Canadian Institutes of Health Research and Alberta Innovates – Health Solutions. None of the funding agencies had any input into design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. FAM holds career salary support from Alberta Innovates – Health Solutions and the Capital Health/University of Alberta Chair in Cardiovascular Outcomes Research.

References

1. Choudhry NK, Fletcher RH, Soumerai SB. Systematic review: the relationship between clinical experience and quality of health care. Ann Intern Med 2005;142:260–73.
2. Reid RO, Friedberg MW, Adams JL, et al. Associations between physician characteristics and quality of care. Arch Intern Med 2010;170:1442–9.
3. Pham HH, Schrag D, Hargraves JL, et al. Delivery of preventive services to older adults by primary care physicians. JAMA 2005;294:473–81.
4. Christian AH, Mills T, Simpson SL, et al. Quality of cardiovascular disease preventive care and physician/practice characteristics. J Gen Intern Med 2006;21:231–7.
5. Block AE, Solomon DH, Cadarette SM, et al. Patient and physician predictors of post-fracture osteoporosis management. J Gen Intern Med 2008;23:1447–51.
6. Funkhouser E, Houston TK, Levine DA, et al. Physician and patient influences on provider performance. β-blockers in postmyocardial infarction management in the MI-Plus Study. Circ Cardiovasc Qual Outcomes 2011;4:99–106.
7. Burns LR, Wholey DR. The effects of patient, hospital, and physician characteristics on length of stay and mortality. Med Care 1991;29:251–71.
8. Southern WN, Bellin EY, Arnsten JH. Longer lengths of stay and higher risk of mortality among inpatients of physicians with more years in practice. Am J Med 2011;124:868–74.
9. Axon RN, Williams MV. Hospital readmission as an accountability measure. JAMA 2011;305:504–5.
10. Epstein AM. Revisiting readmissions – changing the incentives for shared accountability. N Engl J Med 2009;360:1457–9.
11. Norcini JJ, Kimball HR, Lipner RS. Certification and specialization: do they matter in the outcome of acute myocardial infarction? Acad Med 2000;75:1193–8.
12. McAlister FA, Bakal J, Majumdar SR, et al. Safely and effectively reducing inpatient length of stay: a controlled study of the General Internal Medicine Care Transformation Initiative. BMJ Qual Saf 2014;23:446–56.
13. Quan H, Sundararajan V, Halfon P, et al. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Med Care 2005;43:1130–9.
14. Quan H, Li B, Saunders DL, et al. Assessing validity of ICD-9-CM and ICD-10 administrative data in recording clinical conditions in a unique dually-coded database. Health Serv Res 2008;43:1424–41.
15. van Walraven C, Dhalla IA, Bell C, et al. Derivation and validation of an index to predict early death or unplanned readmission after discharge from hospital to the community. CMAJ 2010;182:551–7.
16. Au AG, McAlister FA, Bakal JA, et al. Predicting the risk of unplanned readmission or death within 30 days of discharge after a heart failure hospitalization. Am Heart J 2012;164:365–72.
17. Parekh V, Saint S, Furney S, et al. What effect does inpatient physician specialty and experience have on clinical outcomes and resource utilization on a general medical service? J Gen Intern Med 2004;19:395–401.
18. Goodwin JS, Lin YL, Singh S, et al. Variation in length of stay and outcomes among hospitalized patients attributable to hospitals and hospitalists. J Gen Intern Med 2013;28:370–6.
19. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med 2009;360:1418–28.
20. Kaboli PJ, Go JT, Hockenberry J, et al. Associations between reduced hospital length of stay and 30-day readmission rate and mortality: 14 year experience in 129 Veterans Affairs hospitals. Ann Intern Med 2012;157:837–45.
21. Vashi AA, Fox JP, Carr BG, et al. Use of hospital-based acute care among patients recently discharged from hospital. JAMA 2013;309:364–71.
22. Au AG, Padwal RS, Majumdar SR, et al. Outcomes in teaching versus nonteaching General Internal Medicine services: systematic review. Acad Med 2014;89:517–23.
23. Elstad EA, Lutfey KE, Marceau LD, et al. What do physicians gain (and lose) with experience? Qualitative results from a cross-national study of diabetes. Soc Sci Med 2010;70:1728–36.
24. van Walraven C, Wong J, Hawken S, et al. Comparing methods to calculate hospital-specific rates of early death or urgent readmission. CMAJ 2012;184:E810–7.
25. van Walraven C, Bennett C, Jennings A, et al. Proportion of hospital readmissions deemed avoidable: a systematic review. CMAJ 2011;183:E391–402.
26. Gorodeski EZ, Starling RC, Blackstone EH. Are all readmissions bad readmissions? N Engl J Med 2010;363:297–8.
27. Benbassat J, Taragin M. Hospital readmissions as a measure of quality of health care: advantages and limitations. Arch Intern Med 2000;160:1074–81.
28. Tamblyn R, Abrahamowicz M, Brailovsky C, et al. Association between licensing examination scores and resource use and quality of care in primary care practice. JAMA 1998;280:989–96.
29. Holmboe ES, Wang Y, Meehan TP, et al. Association between maintenance of certification examination scores and quality of care for Medicare beneficiaries. Arch Intern Med 2008;168:1396–403.