Abstract

A program’s infrastructure is often cited as critical to public health success. The Component Model of Infrastructure (CMI) identifies evaluation as essential under the core component of engaged data. An evaluation plan is a written document that describes how to monitor and evaluate a program, as well as how to use evaluation results for program improvement and decision making. The evaluation plan clarifies how to describe what the program did, how it worked, and why outcomes matter. We use the Centers for Disease Control and Prevention’s (CDC) “Framework for Program Evaluation in Public Health” as a guide for developing an evaluation plan. Just as using a roadmap facilitates progress on a long journey, a well-written evaluation plan can clarify the direction your evaluation takes and facilitate achievement of the evaluation’s objectives.

A program’s infrastructure is often cited as a critical component of public health success.1,2 The Component Model of Infrastructure (CMI) identifies evaluation as an essential part of program infrastructure under the core component of engaged data.3 A written evaluation plan that is thoughtful, transparent, and collaboratively developed is the preferred method for effective evaluation planning.4

The Office on Smoking and Health at the Centers for Disease Control and Prevention (OSH/CDC) has a long history of supporting evaluation and evaluation capacity building as a central component of state tobacco control program infrastructure.5–7 For example, a written evaluation plan was required of states that applied under the funding opportunity announcement for the Communities Putting Prevention to Work cooperative agreements. The CDC’s expectation was that funded programs would develop functional evaluation plans to guide the implementation of useful evaluations of their Communities Putting Prevention to Work activities. To promote evaluation capacity building, OSH and the Division of Nutrition, Physical Activity, and Obesity created an evaluation plan development workbook.4 The workbook is a how-to guide intended to assist public health program managers, administrators, health educators, and evaluators in developing a joint understanding of what constitutes an evaluation plan, why it is important, and how to develop an effective evaluation plan. The workbook guides the user through the plan development process using the 6 steps of the CDC’s “Framework for Program Evaluation in Public Health”8 and provides tools, worksheets, and a resource list. Although the workbook was created to provide guidance to tobacco and obesity prevention programs, it can be used by a broad public health audience to guide their evaluation plan development process.

Area 4 of the 7 Certified Health Education Specialists’ responsibilities9 is “conduct evaluation and research related to health education.”(p4) More specifically, Competency 4.1, “develop an evaluation/research plan,” provides a list of 14 subcompetencies or steps in developing an evaluation plan.9(p4) This easy-to-read, abridged version of the workbook addresses these competencies in a concrete manner that can increase health education specialists’ professional capacities. This article summarizes the major steps in the evaluation plan development process and provides relevant examples for health educators. It may be used alone to develop an evaluation plan. We refer the reader to the workbook for more in-depth instruction, tools, worksheets, and resource suggestions.4

WHAT IS AN EVALUATION PLAN?

An evaluation plan is a written document that describes how you will monitor and evaluate your program, as well as how you intend to use evaluation results for program improvement and decision making. The evaluation plan clarifies how you will describe the “what,” the “how,” and the “why it matters” for your program.

The “what” describes your program and how its activities are linked to its intended effects. It serves to clarify the program’s purpose and anticipated outcomes.

The “how” addresses the process for implementing a program and provides information about whether the program is operating with fidelity to the program’s design.

The “why it matters” provides the rationale for your program and its intended impact on public health. This is also sometimes referred to as the “so what?” question. Being able to demonstrate that your program has made a difference is critical to program sustainability.

An evaluation plan is similar to a roadmap. It clarifies the steps needed to assess the processes and outcomes of a program. An effective evaluation plan is more than a list of indicators in your program’s work plan. It is a dynamic tool that should be updated on an ongoing basis to reflect program changes and priorities over time.

WHY DO YOU WANT AN EVALUATION PLAN?

Just as using a roadmap facilitates progress on a long journey, an evaluation plan can clarify the direction of your evaluation based on the program’s priorities and resources and the time and skills needed to accomplish the evaluation. The process of developing a written evaluation plan in cooperation with an evaluation stakeholder workgroup (ESW) will foster collaboration; give a sense of shared purpose to the stakeholders; create transparency through the implementation process; and ensure that stakeholders have a common vision and understanding of the purpose, use, and users of the evaluation results. The use of evaluation results must be planned, directed, and intentional and should be included as part of the evaluation plan.10

WHAT ARE THE KEY STEPS IN DEVELOPING AN EVALUATION PLAN USING CDC’S FRAMEWORK FOR PROGRAM EVALUATION?

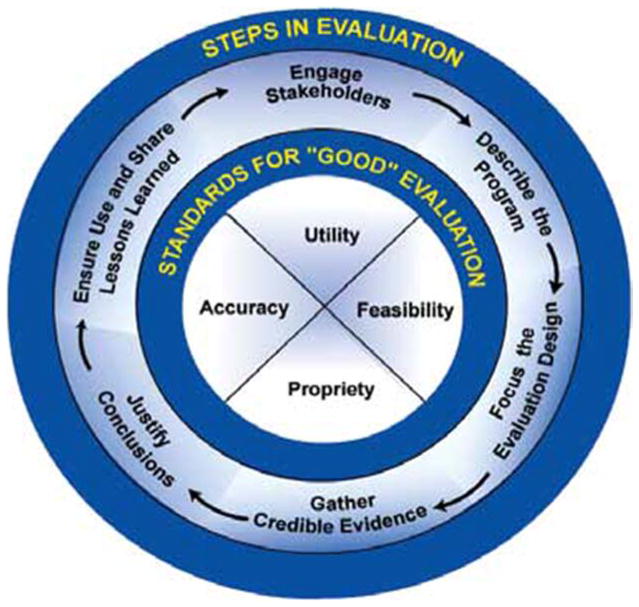

There are numerous ways to frame and organize your evaluation plan. We use the CDC’s “Framework for Program Evaluation in Public Health” as a guide for the planning process and for outlining what to include in the written evaluation plan.8 The CDC framework is a guide on how to effectively evaluate public health programs and how to use your evaluation’s findings for program improvement and decision making (Figure 1). Using the framework to organize your plan will facilitate including concrete elements that promote transparent and thoughtful implementation of the evaluation. The workbook and this abridged version provide resources on how to apply the framework, which was designed for implementing an evaluation, to developing the plan itself. Though the framework is described in terms of steps, the actions are not always linear and are often completed cyclically. The development of an evaluation plan is an ongoing process; you may need to revisit a step during the process or complete several steps concurrently.

FIGURE 1. The CDC framework for program evaluation.

Step 1: The Process of Participatory Evaluation Planning or Engaging the Stakeholders

A primary feature of an evaluation plan is the identification and acknowledgement of the roles and responsibilities of an ESW. The ESW includes members who have a stake or vested interest in the evaluation findings and those who are the intended users of the evaluation.10,11 The ESW may also include others who have a direct or indirect interest in program implementation. Engaging stakeholders in the ESW enhances intended users’ understanding and acceptance of the utility of evaluation information. Stakeholders are much more likely to buy into and support the evaluation if they are involved in the evaluation process from the beginning.

How Are Stakeholders’ Roles Described in the Plan?

For the ESW to be truly integrated in the development of the evaluation plan, ideally, it will be identified in the evaluation plan. The form this takes may vary based on program needs. If it is important politically, a program might want to specifically name each member of the workgroup, their affiliation, and specific role(s) in the workgroup. Being transparent about the role and purpose of the ESW can facilitate buy-in for the evaluation plan. In addition, you may want to include the preferred method of communication and the timing of that communication for each stakeholder or group. A stakeholder chart or table can be a useful tool to include in your evaluation plan.

Step 2: Describing the Program in the Evaluation Plan

The next step in the evaluation plan is to describe the program. A program description clarifies the program’s purpose, stage of development, activities, capacity to improve health, and implementation context. A shared understanding among health educators, program staff, evaluators, and the ESW of the program and of what the evaluation can and cannot deliver is essential to implementation of evaluation activities and use of evaluation results. A narrative description in the written plan helps ensure a full and complete shared understanding of the program and provides a ready reference for stakeholders. A logic model may be used to succinctly synthesize the main elements of a program. The program description is essential for focusing the evaluation design and selecting the appropriate methods. Too often groups jump to evaluation methods before understanding what the program is designed to achieve or what the evaluation should deliver. The description will be based on your program’s objectives and context, but most descriptions include, at a minimum:

- A statement of need to identify the health issue addressed

- Inputs or program resources needed to implement program activities

- Program activities linked to program outcomes through theory or best practice program logic

- Stage of development of the program to reflect program maturity

- Environmental context within which the program is implemented

In terms of describing the stage of development of the program, the developmental stages that programs typically move through are planning, implementation, and maintenance. For policy or environmental initiatives, which programs and health educators often evaluate, the stages might look somewhat like this:

Planning

- 1. Environment and asset assessment

- 2. Policy or environmental change development

- 3. Policy or environmental change developed but not yet approved

Implementation

- 4. Policy or environmental change approved but not implemented

- 5. Policy or environmental change in effect for less than 1 year

Maintenance

- 6. Policy or environmental change in effect for 1 year or longer
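
For readers who track program status in a spreadsheet or script, the six stages above can be sketched as a small lookup. This is only an illustration: the function name `classify_stage` and its input flags are hypothetical simplifications, not part of the CDC framework or the workbook, and a real program may need to revisit earlier stages.

```python
from typing import Optional

# Stage numbers, phases, and labels are taken from the list above.
STAGES = {
    1: ("Planning", "Environment and asset assessment"),
    2: ("Planning", "Policy or environmental change development"),
    3: ("Planning", "Policy or environmental change developed but not yet approved"),
    4: ("Implementation", "Policy or environmental change approved but not implemented"),
    5: ("Implementation", "Policy or environmental change in effect for less than 1 year"),
    6: ("Maintenance", "Policy or environmental change in effect for 1 year or longer"),
}


def classify_stage(assessment_done: bool, draft_complete: bool, approved: bool,
                   months_in_effect: Optional[int] = None) -> int:
    """Return a stage number from 1 to 6.

    The four inputs are hypothetical status flags chosen for this sketch.
    months_in_effect stays None until the change has actually taken effect.
    """
    if months_in_effect is not None:
        return 6 if months_in_effect >= 12 else 5
    if approved:
        return 4
    if draft_complete:
        return 3
    if assessment_done:
        return 2
    return 1


# Example: a policy that was approved and has been in effect for 7 months.
stage = classify_stage(True, True, True, months_in_effect=7)
phase, label = STAGES[stage]
print(f"Stage {stage} ({phase}): {label}")
```

Keeping the stage explicit in this way makes it easier to note in the plan which evaluation questions are appropriate now and which must wait for a later stage.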

When it comes to evaluation, the stages of development are not always a “once-and-done” sequence of events. For example, once a program has progressed past the initial planning stage, it may experience occasions where environment and asset assessment are still needed. Additionally, in a multiyear program, the evaluation plan should consider both future evaluation data sets and baseline information that will be needed so that the evaluators can be prepared for more distal impact and outcome projects.

Step 3: Focusing Your Evaluation Plan and Your ESW

In this part of the plan, you will articulate the purposes of the evaluation, its uses, and the program description. This will aid in narrowing the evaluation questions and focusing the evaluation for program improvement and decision making. The scope and depth of any program evaluation is dependent on program and stakeholder priorities and the feasibility of conducting the evaluation given the available resources. The program staff should work together with the ESW to determine the priority and feasibility of the evaluation’s questions and identify the uses of evaluation results before designing the evaluation plan. In this step, you may begin to notice the iterative process of developing the evaluation plan as you revisit aspects of step 1 and step 2 to inform decisions made in step 3.

Even with an established multiyear plan, step 3 should be revisited with your ESW annually (or more often if needed) to determine whether priorities and feasibility issues still hold for the planned evaluation activities. This highlights the dynamic nature of the evaluation plan. Ideally, your plan should be intentional and strategic by design and generally cover multiple years for planning purposes, but the plan is not set in stone. It should also be flexible and adaptive. It is flexible because resources and priorities change and adaptive because opportunities and programs change. For example, you may have a new funding opportunity and a short-term program added to your overall program. The written plan can document where you have been and where you are going with the evaluation as well as why changes were made to the plan.

Budget and Resources

Discussion of the budget and the resources (financial and human) that can be allocated to the evaluation will be included in your feasibility discussion. In the Best Practices for Comprehensive Tobacco Control Programs, it is recommended that at least 10% of your total program resources be allocated to surveillance and program evaluation.5 The questions and subsequent methods selected will have a direct relationship to the financial resources available, evaluation team members’ skills, and environmental constraints. Stakeholder involvement may facilitate advocating for the resources needed to implement the evaluation necessary to answer priority questions. However, sometimes you might not have the resources necessary to fund the evaluation questions you would most like to answer. A thorough discussion of feasibility and recognition of real constraints will facilitate a shared understanding of what the evaluation can and cannot deliver. The process of selecting the appropriate methods to answer the priority questions and discussing feasibility and efficiency is iterative. Steps 3, 4, and 5 in planning the evaluation will often be visited concurrently in a back-and-forth progression until the group comes to consensus.
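
The feasibility arithmetic can be made concrete with a short sketch. The 10% share comes from the recommendation cited above; everything else here (the dollar figures, the example questions, and the simple rule of funding questions in priority order) is a hypothetical assumption for illustration. In practice the ESW reaches these decisions through discussion, not a formula.

```python
def evaluation_allocation(total_budget: int, percent: int = 10) -> int:
    """Whole-dollar amount set aside for surveillance and evaluation."""
    return total_budget * percent // 100


def select_questions(prioritized, available):
    """Walk the questions in priority order and fund each one that still fits.

    prioritized: list of (question, estimated_cost) pairs, highest priority first.
    Returns (funded, deferred, remaining_dollars).
    """
    funded, deferred = [], []
    remaining = available
    for question, cost in prioritized:
        if cost <= remaining:
            funded.append(question)
            remaining -= cost
        else:
            deferred.append(question)
    return funded, deferred, remaining


# Hypothetical program with a $400,000 total budget.
available = evaluation_allocation(400_000)

# Hypothetical priority questions with rough cost estimates.
questions = [
    ("Was the policy implemented as designed?", 15_000),
    ("Did exposure decline after implementation?", 30_000),
    ("Are stakeholders using interim findings?", 8_000),
]

funded, deferred, remaining = select_questions(questions, available)
print("Available:", available)
print("Funded:", funded)
print("Deferred:", deferred)
print("Unallocated:", remaining)
```

Listing the deferred questions alongside the funded ones gives the ESW a written record of what the evaluation cannot deliver with current resources.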

Step 4: Planning for Gathering Credible Evidence

Now that you have solidified the focus of your evaluation and identified the questions to be answered, it will be necessary to select and document the appropriate methods that fit the evaluation questions you have chosen. Sometimes evaluation is guided by a favorite method and the evaluation is forced to fit that method. This could lead to incomplete or inaccurate answers to evaluation questions. Ideally, the evaluation questions inform the methods. If you follow the steps in this outline, you will collaboratively choose the evaluation questions with your ESW that will provide you with information that will be used for program improvement and decision making. The most appropriate method to answer the evaluation questions should then be selected, and the process you used to select the methods should be described in your plan. Additionally, it is prudent as part of the articulation of the methods to identify a timeline and the roles and responsibilities of those overseeing the evaluation implementation, whether they are program or stakeholder staff.

To accomplish this step of choosing appropriate methods to answer your evaluation questions, you will need to:

- keep in mind the purpose, logic model/program description, stage of development of the program, evaluation questions, and what the evaluation can and cannot deliver.

- determine the method(s) needed to fit the question(s). There are a multitude of options including, but not limited to, qualitative, quantitative, mixed methods, multiple methods, naturalistic inquiry, experimental, and quasi-experimental.

- think about what will constitute credible evidence for stakeholders or users.

- identify sources of evidence (e.g., persons, documents, observations, administrative databases, surveillance systems) and appropriate methods for obtaining quality (i.e., reliable and valid) data.

- identify roles and responsibilities along with timelines to ensure the project remains on time and on track.

- remain flexible and adaptive and, as always, transparent.

Evaluation Plan Methods Grid

One tool that is particularly useful in your evaluation plan is an evaluation plan methods grid (Table 1). Not only is this tool helpful for aligning evaluation questions with methods, indicators, performance measures, data sources, roles, and responsibilities, it can also facilitate a shared understanding of the overall evaluation plan with stakeholders. Having this table in the evaluation plan helps readers visualize how the evaluation will be implemented, which is a key feature of having an evaluation plan. The tool can take many forms and should be adapted to fit your specific evaluation and context.

TABLE 1. Example Evaluation Plan Methods Grid

| Evaluation Question | Indicator/Performance Measure | Method | Data Source | Frequency | Responsibility |
|---|---|---|---|---|---|
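
As one way to keep the grid current, the columns of Table 1 can be held as structured rows and rendered on demand. The sketch below is an assumption about how a program might do this, not a tool from the workbook; the class `GridRow`, the helper names, and the example rows are hypothetical.

```python
from dataclasses import dataclass, astuple

# Column headers copied from Table 1.
HEADERS = ("Evaluation Question", "Indicator/Performance Measure", "Method",
           "Data Source", "Frequency", "Responsibility")


@dataclass
class GridRow:
    question: str
    indicator: str
    method: str
    data_source: str
    frequency: str
    responsibility: str


def render_grid(rows):
    """Render the rows as a pipe-delimited table with the Table 1 headers."""
    lines = ["| " + " | ".join(HEADERS) + " |",
             "|" + "---|" * len(HEADERS)]
    for row in rows:
        lines.append("| " + " | ".join(astuple(row)) + " |")
    return "\n".join(lines)


def incomplete_rows(rows):
    """Return the questions whose row still has at least one blank cell."""
    return [r.question for r in rows
            if not all(cell.strip() for cell in astuple(r))]


# Hypothetical example rows; the second is missing an assigned owner.
rows = [
    GridRow("Was the policy implemented as designed?",
            "Percent of sites meeting all policy requirements",
            "Site observation", "Observation checklists",
            "Quarterly", "Program evaluator"),
    GridRow("Are stakeholders using interim findings?",
            "Number of documented program changes",
            "Document review", "Meeting minutes",
            "Annually", ""),
]

print(render_grid(rows))
print("Needs attention:", incomplete_rows(rows))
```

A check like `incomplete_rows` is a simple prompt for the ESW: any question without a method, data source, or responsible person is not yet ready to implement.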

Step 5: Planning for Conclusions

Justifying conclusions includes analyzing the information you collected, interpreting, and drawing conclusions from your data. This step is needed to turn the data collected into meaningful, useful, and accessible information. It is critical to think through this process and outline procedures to be implemented and the necessary timeline in the evaluation plan. Programs often incorrectly assume that they no longer need the ESW integrally involved in decision making around formulating conclusions and instead look to the “experts” to complete the analyses and interpretation of the program’s data. However, engaging the ESW in this step is critical to ensuring the meaningfulness, credibility, and acceptance of evaluation findings and conclusions. Actively meeting with stakeholders and discussing preliminary findings helps to guide the interpretation phase. In fact, stakeholders often have novel insights or perspectives to guide interpretation that evaluation staff may not have, leading to more thoughtful conclusions.

Planning for analysis and interpretation is directly tied to the timetable begun in step 4. Errors or omissions in planning this step can create serious delays in producing the final evaluation report and may result in missed opportunities (e.g., having current data available for a legislative session) if the report has been timed to correspond with significant events (e.g., program or national conferences).

Moreover, it is critical that your evaluation plan includes time for interpretation and review of the conclusions by stakeholders to increase transparency and validity of your process and conclusions. The emphasis here is on justifying conclusions, not just analyzing data. This is a step that deserves due diligence in the planning process. A note of caution: As part of a stakeholder-driven process, there is often pressure for data interpretation to reach beyond the evidence when conclusions are drawn. It is the responsibility of the evaluator and the ESW to ensure that conclusions are drawn directly from the evidence. This is a topic that should be discussed with the ESW in the planning stages along with reliability and validity issues and possible sources of biases. If possible and appropriate, triangulation of data should be considered and remedies to threats to the credibility of the data should be addressed as early as possible.

Step 6: Planning for Dissemination and Sharing of Lessons Learned

Another often overlooked step in the planning stage is step 6, which encompasses planning for use of evaluation results, sharing of lessons learned, communication, and dissemination of results.

Based on the uses for your evaluation, you will need to determine who should learn about the findings and how they should learn the information. Typically this is where the final report is published. The impact and value of the evaluation results will increase if the program and the ESW take personal responsibility for getting the results to the right people and in a usable, targeted format.10 This absolutely must be planned for and documented in the evaluation plan. It will be important to consider the audience in terms of timing, style, tone, message source, and method and format of delivery. Remember that stakeholders will not suddenly become interested in your product just because you produced a report. You must sufficiently prepare the market for each product and for use of the evaluation results.10

Communication and Dissemination Plans

An intentional communication and dissemination approach should be included in your evaluation plan. As previously stated, the planning stage is the time for the program and the ESW to begin to think about the best way to share the lessons you will learn from the evaluation. The communication–dissemination phase of the evaluation is a 2-way process designed to support use of the evaluation results for program improvement and decision making. In order to achieve this outcome, a program must translate evaluation results into practical applications and must systematically distribute the information through a variety of audience-specific strategies.

The first step in writing an effective communications plan is to define your communication goals and objectives. Given that the communication objectives will be tailored to each priority audience, you need to consider with your ESW who the primary audiences are (e.g., the ESW, the funding agency, the general public, or other groups).

Once the goals, objectives, and priority audiences of the communication plan are established, you should consider the best ways to reach the intended audiences by considering which communication–dissemination methods or formats will best serve your goals and objectives. Will the program use newsletters/fact sheets, oral presentations, visual displays, videos, storytelling, and/or press releases? Carefully consider the best tools to use by getting feedback from your ESW, by learning from others’ experiences, and by reaching out to priority audiences to gather their preferences. An excellent resource to facilitate creative techniques for reporting evaluation results is Torres et al.’s Evaluation Strategies for Communicating and Reporting.12

Complete the communication planning step by establishing a timetable for sharing evaluation findings and lessons learned. The communication and dissemination chart provided in Table 2 can be useful in helping the program to chart the written communications plan.

TABLE 2. Example Communication and Dissemination Chart

| Target Audience (Priority) | Objectives for the Communication | Tools | Timetable |
|---|---|---|---|
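
The timetable column of Table 2 lends itself to a simple reminder list. The following sketch assumes each planned communication has a single due date; the class `Communication`, the function `due_soon`, the `interim` flag, and all example entries and dates are hypothetical and serve only to show the idea.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Communication:
    audience: str         # Target Audience (Priority)
    objective: str        # Objectives for the Communication
    tools: list           # Tools, e.g., fact sheet, oral presentation
    due: date             # Timetable, simplified here to one due date
    interim: bool = False  # True when sharing results before the final report


def due_soon(plan, today, window_days=30):
    """Return communications due within the window, earliest first."""
    cutoff = today + timedelta(days=window_days)
    upcoming = [c for c in plan if today <= c.due <= cutoff]
    return sorted(upcoming, key=lambda c: c.due)


# Hypothetical plan entries.
plan = [
    Communication("Funding agency", "Report progress on priority questions",
                  ["fact sheet"], date(2024, 3, 15)),
    Communication("General public", "Share success stories",
                  ["press release"], date(2024, 6, 1)),
    Communication("Evaluation stakeholder workgroup", "Review interim findings",
                  ["oral presentation"], date(2024, 3, 10), interim=True),
]

for item in due_soon(plan, today=date(2024, 3, 1)):
    tag = "interim" if item.interim else "final"
    print(item.due.isoformat(), item.audience, "/", ", ".join(item.tools), f"({tag})")
```

Marking interim items separately keeps them visible in the plan so that results can inform course corrections before the final report.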

It is important to note that you do not have to wait until the final evaluation report is written in order to share your evaluation results. A system for sharing interim results to facilitate program course corrections and decision making should be included in your evaluation plan.

Communicating results is not enough to ensure the use of evaluation results and lessons learned. The evaluation team and program staff need to proactively take action to encourage the use and widespread dissemination of the information gleaned through the evaluation project. It is helpful to strategize with stakeholders early in the evaluation process about how your program will ensure that findings are used to support program improvement efforts and informed decision making. Program staff and the ESW must take personal responsibility for ensuring the dissemination of and application of evaluation results.

ONE LAST NOTE

The impact of the evaluation results can reach far beyond the evaluation report. If stakeholders are involved throughout the process, communication and participation may be enhanced. If an effective feedback loop is in place, program improvement and outcomes may be enhanced. If a strong commitment to sharing lessons learned and success stories is in place, then other programs may benefit from the information gleaned through the evaluation process. Changes in thinking, understanding, programs, and organizations may stem from thoughtful evaluative processes.10 The use of evaluation results and impacts beyond the formal findings of the evaluation report start with the planning process and the transparent, written evaluation plan. In addition, all of the above facilitate the actual use of evaluation data, which is a core component of essential, foundational, and functioning program infrastructure as defined by the CMI.3

Acknowledgments

Thank you to the authors of the workbook: S Rene Lavinghouze, Jan Jernigan, LaTisha Marshall, Adriane Niare, Kim Snyder, Marti Engstrom, Rosanne Farris.

Footnotes

The findings and conclusions in this presentation are those of the author and do not necessarily represent the views of the Centers for Disease Control and Prevention.

Contributor Information

S. Rene Lavinghouze, Centers for Disease Control and Prevention.

Kimberly Snyder, ICF International.

References

- 1. Chapman R. Organization development in public health: a foundation for growth [white paper]. http://www.leadingpublichealth.com/writings&resources.html. Published 2010. Accessed September 25, 2012.

- 2. Department of Health and Human Services. Public health infrastructure. Healthy People 2020 Topics & Objectives Web site. http://www.healthypeople.gov/2020/topicsobjectives2020/overview.aspx?topicid=35. Updated September 6, 2012. Accessed September 25, 2012.

- 3. Lavinghouze SR, Rieker P, Snyder K. Reconstructing infrastructure: from model to measurement tool. Paper presented at: 26th American Evaluation Association National Conference; October 24–27, 2012; Minneapolis, MN.

- 4. Centers for Disease Control and Prevention. Developing an Effective Evaluation Plan: Setting the Course for Effective Program Evaluation. Atlanta, GA: Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health; Division of Nutrition, Physical Activity, and Obesity; 2011. http://www.cdc.gov/tobacco/tobacco_control_programs/surveillance_evaluation/evaluation_plan/index.htm. Accessed May 29, 2013.

- 5. Centers for Disease Control and Prevention. Best Practices for Comprehensive Tobacco Control Programs, 2007. Atlanta, GA: US Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health; 2007.

- 6. Centers for Disease Control and Prevention. Introduction to Process Evaluation in Tobacco Use Prevention and Control. Atlanta, GA: US Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health; 2008.

- 7. Mercer SL, MacDonald G, Green LW. Participatory research and evaluation: from best practices for all states to achievable practices within each state in the context of the Master Settlement Agreement. Health Promot Pract. 2004;5(3):167S–178S. doi: 10.1177/1524839904264681.

- 8. Centers for Disease Control and Prevention. Framework for program evaluation in public health. MMWR Morb Mortal Wkly Rep. 1999;48(RR11):1–40. http://www.cdc.gov/mmwr/preview/mmwrhtml/rr4811a1.htm. Accessed September 25, 2012.

- 9. National Commission for Health Education Credentialing, Inc. Areas of responsibilities, competencies, and sub-competencies for the health education specialists. 2010. http://www.nchec.org/_files/_items/nch-mr-tab3-110/docs/areas%20of%20responsibilities%20competencies%20and%20sub-competencies%20for%20the%20health%20education%20specialist%202010.pdf. Accessed January 22, 2013.

- 10. Patton MQ. Utilization-Focused Evaluation. 4th ed. Thousand Oaks, CA: Sage Publications; 2011.

- 11. Knowlton LW, Philips CC. The Logic Model Guidebook: Better Strategies for Great Results. Thousand Oaks, CA: Sage Publications; 2009.

- 12. Torres R, Preskill H, Piontek ME. Evaluation Strategies for Communicating and Reporting. 2nd ed. Thousand Oaks, CA: Sage Publications; 2004.