Abstract

Musical enjoyment for cochlear implant (CI) recipients is often reported to be unsatisfactory. Our goal was to determine whether the musical experience of postlingually deafened adult CI recipients could be enriched by presenting the bass and treble clef parts of short polyphonic piano pieces separately to each ear (dichotic). Dichotic presentation should artificially enhance the lateralization cues of each part and help the listeners to better segregate them and thus provide greater clarity. We also hypothesized that perception of the intended emotion of the pieces and their overall enjoyment would be enhanced in the dichotic mode compared with the monaural mode (both parts in the same ear) and the diotic mode (both parts in both ears). Twenty-eight piano pieces specifically composed to induce sad or happy emotions were selected. The tempo of the pieces, which ranged from lento to presto, covaried with the intended emotion (from sad to happy). Thirty participants (11 normal-hearing listeners, 11 bimodal CI and hearing-aid users, and 8 bilaterally implanted CI users) participated in this study. Participants were asked to rate the perceived clarity, the intended emotion, and their preference of each piece in different listening modes. Results indicated that dichotic presentation produced small but significant improvements in subjective ratings of perceived clarity. We also found that preference and clarity ratings were significantly higher for pieces with fast tempi compared with slow tempi. However, no significant differences between diotic and dichotic presentation were found for the participants’ preference ratings or their judgments of intended emotion.

Keywords: Cochlear implant, music perception, emotions, auditory scene analysis

Introduction

Many cochlear implant (CI) recipients consider music an important factor contributing to their quality of life (Middlebrooks & Onsan, 2012; Schulz & Kerber, 1994). However, a large proportion also report a decrease in musical enjoyment and unsatisfactory music perception postimplantation (Gfeller, Christ, Knutson, Witt, & Mehr, 2003; Limb & Rubinstein, 2012). Although a significant research effort has resulted in improved device design, signal processing, training programs, and fittings (for a review, see Faulkner & Pisoni, 2013), the enjoyment of music remains a challenge for many CI recipients, and the overall quality and pleasantness of music are often judged as poor by CI users when compared with those with normal hearing (Limb & Rubinstein, 2012; Looi, McDermott, McKay, & Hickson, 2008).

Modern multichannel CI sound processing strategies degrade the acoustic signal by altering the fine-structure information in the stimulus waveform and preserving only the temporal envelope extracted from up to 22 frequency bands (Zeng, 2004). This results in poor pitch perception that affects the recognition and understanding of melody (for a review, see McDermott, 2011), poor perception of timbre that affects sound quality (Kong, Mullangi, & Marozeau, 2012; Kong, Mullangi, Marozeau, & Epstein, 2011), and poor ability to localize sound objects (Seeber, Baumann, & Fastl, 2004). In combination, degraded perception of pitch, timbre, and localization cues contributes to a greatly decreased ability to perceive individual parts of music separately. This ability, called auditory scene analysis (Bregman, 1990), has been studied extensively in normal-hearing (NH) listeners (e.g., Carlyon, 2004; Oxenham, 2008), but there are only a few studies in CI users (e.g., Chatterjee, Sarampalis, & Oba, 2006; Cooper & Roberts, 2009; Hong & Turner, 2009).

While listening to music through headphones, the perceived location of each auditory source can be varied to a position confined between the two ears of the listener. This sensation, called lateralization, can be varied by changing binaural cues such as the interaural difference in the level and arrival time of sounds (Blauert, 1997). This effect is used extensively in music mixing to create a stereo image of each instrument. When the instruments are perceived in different locations, the music played by each instrument is easier to segregate. For instance, the lines of music played by two guitars could be segregated based on how they were mixed into the left and right channels of a recording. In the most extreme case, 100% of the signal of one instrument could be sent to only one channel and the rest of the instruments to the other channel (a great example can be heard in Eleanor Rigby by the Beatles).

Hearing impairment degrades the processing of binaural cues, leading to poorer localization ability (Noble, Byrne, & Lepage, 1994) and changes in how lateralization cues are perceived (Florentine, 1976). As implantation criteria are relaxed (Gifford, 2011; Gifford, Dorman, Shallop, & Sydlowski, 2010), there are increasing numbers of people with bimodal fittings (BM: one CI and a hearing aid in the contralateral ear) and bilateral fittings (BL: two CIs). Unfortunately, the time delay and the nonlinear amount of amplification vary between devices, and it is unlikely that traditional mixing will provide sufficient lateralization cues to help hearing-impaired people segregate sounds (Francart, Brokx, & Wouters, 2008, 2009). Furthermore, our previous research has suggested that CI users need a large physical difference between auditory streams in order to segregate them (Marozeau, Innes-Brown, & Blamey, 2013a, 2013b). However, it is possible to propose new musical mixes that should enhance the difference between sources by assigning them to completely separate audio channels. We, therefore, hypothesized that polyphonic music might be better perceived by CI users if some of the musical lines could be sent to one ear and the rest to the other.

Enhancing the segregation of sources should allow CI users better access to musical information. Such enhancement may allow better perception of the musical structure, rhythm, mode (major or minor scale), and the overall emotional content. In NH listeners, emotions in the happy or sad dimension are related to the tempo (the speed of music, measured in beats per minute) and mode: A major mode and a fast tempo evoke happiness, whereas a minor mode and a slow tempo stir a sad emotion (Hevner, 1935; Peretz, Gagnon, & Bouchard, 1998). Although CI users are able to perceive simple monophonic rhythm relatively well compared with NH listeners (Kong, Cruz, Jones, & Zeng, 2004), they show limitations in the ability to correctly identify musical emotions. Hopyan, Gordon, and Papsin (2011) asked children (CI users and NH listeners) to listen to short musical excerpts and point to a smiling or frowning face depending on whether they thought the music was happy or sad. While the NH children identified the emotion accurately (97.3% correct), children with CIs were significantly less accurate (77.5% correct), but still significantly above chance. Volkova, Trehub, Schellenberg, Papsin, and Gordon (2013) examined the ability of prelingually deaf children with bilateral CIs to identify emotions (happiness and sadness) in speech and music. Those children were able to identify happiness and sadness in both speech and music with high accuracy but were significantly less accurate than their normally hearing peers (the pieces of music were taken from the corpus of Vieillard et al., 2008). Although it has been shown that CI users can accurately discriminate simple rhythmical patterns, their perception of the tempo of a polyphonic musical pattern is less well known. As argued by Bregman (1990), rhythmic experience can be influenced by the degree of segregation between two streams. It is, therefore, possible that if CI users fuse multiple musical streams (melodic lines) into a single stream, they might perceive the music as having a faster tempo than NH listeners. Therefore, we hypothesized that by separating musical streams between the two ears, the additional musical information available would help them to improve the recognition of musical emotion.

We also hypothesized that separating musical streams would enhance the enjoyment of music. First, listening preference ratings are significantly higher when musical material is reproduced with more than one channel, compared with monaural reproduction (Choisel & Wickelmaier, 2007). In addition, the emotional content of music is one of the main motivations for listening to music (Balkwill & Thompson, 1999; Panksepp, 1995). Empirical evidence of a link between preference and emotion has been shown in many studies (North & Hargreaves, 1997; Ritossa & Rickard, 2004; Schubert, 2007). Therefore, if emotional cues could be more accurately perceived, music enjoyment should be enhanced.

The aim of this study was to test the following three hypotheses: Assigning two musical streams to different ears would (a) improve subjective ratings of clarity (measured with a clear or unclear rating), (b) improve the accuracy of identifying the happy or sad emotional content of the piece (measured with a happy or sad rating), and (c) enhance the enjoyment of the music (measured with a like or dislike rating). As we expected a different outcome depending on residual acoustic hearing, these hypotheses were tested in both BM and BL CI users.

Materials and Methods

Participants

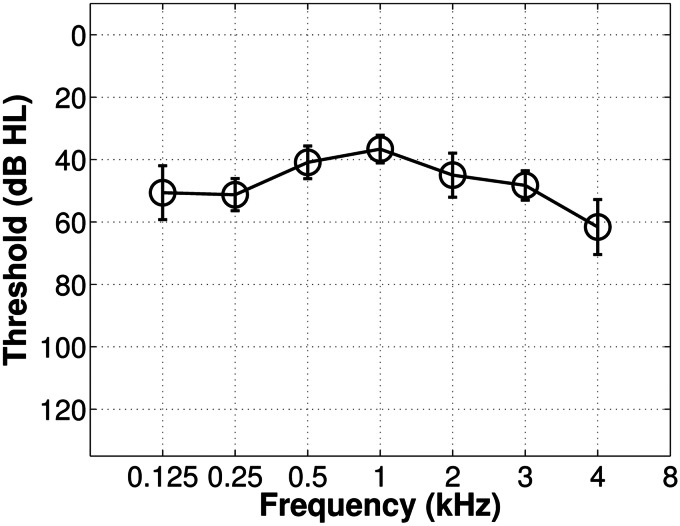

Thirty participants were involved in this study. Eleven were NH listeners (7 females, age range 19–31, average ± SD, 25.3 ± 3.3 years). All NH listeners had hearing thresholds at octave frequencies from 125 Hz to 8 kHz below 20 dB HL. Eleven were BM listeners (4 females, age range 58–77, 69.4 ± 6.2 years) with functional residual aided hearing (see audiogram in Figure 1) and eight were BL listeners (5 females, age range 35–72, 58.9 ± 13.1 years). All CI users were postlingually deafened adults with more than 1 year of implant use in the best ear. Both BL and BM listeners were asked to set their device(s) to the settings they normally used when listening to music. All participants reported being nonmusicians. Every CI user had a Nucleus implant with the advanced combination encoder sound processing strategy. CI users were recruited through the Cochlear Implant Clinic at the Royal Victorian Eye and Ear Hospital, and NH listeners through local networks. All the participants gave written informed consent and were compensated for their travel expenses.

Figure 1.

Aided audiogram for the bimodal listeners. Circles represent the mean threshold (dB HL) at each frequency, and error bars show standard deviations.

Stimuli

Twenty-eight lyric-free piano pieces were chosen from a set of stimuli especially composed by a professional musician to induce specific and well-defined emotions (Vieillard et al., 2008). Half of them were designed to convey a happy emotional response and the other half a sad response. The pieces were 10 to 15 s in duration, unfamiliar to our participants, and were made up of a treble clef part, which outlined the melody (usually played with the right hand), and a bass clef part, which outlined the harmony (usually played with the left hand). Each part (or musical stream) was generated separately from a MIDI file. All the notes were set to the same velocity and were played back using high-quality samples of an acoustic piano (Ableton Live 8). The experiment was controlled using MAX/MSP (Cycling ’74), and stimuli were played through a MOTU IO-24 soundcard and headphones (AKG K601) for the NH listeners. Stimuli were sent to CI sound processors using the accessory input, and to hearing aids using a loudspeaker (Genelec 8020B) 1.2 m in front of the listener's head (see Note). To set the loudness levels, all listeners first listened to the same short musical piece in each ear separately. Then, using a small touchscreen, they adjusted the level until the subjective loudness was the same across ears before the main experiment began.

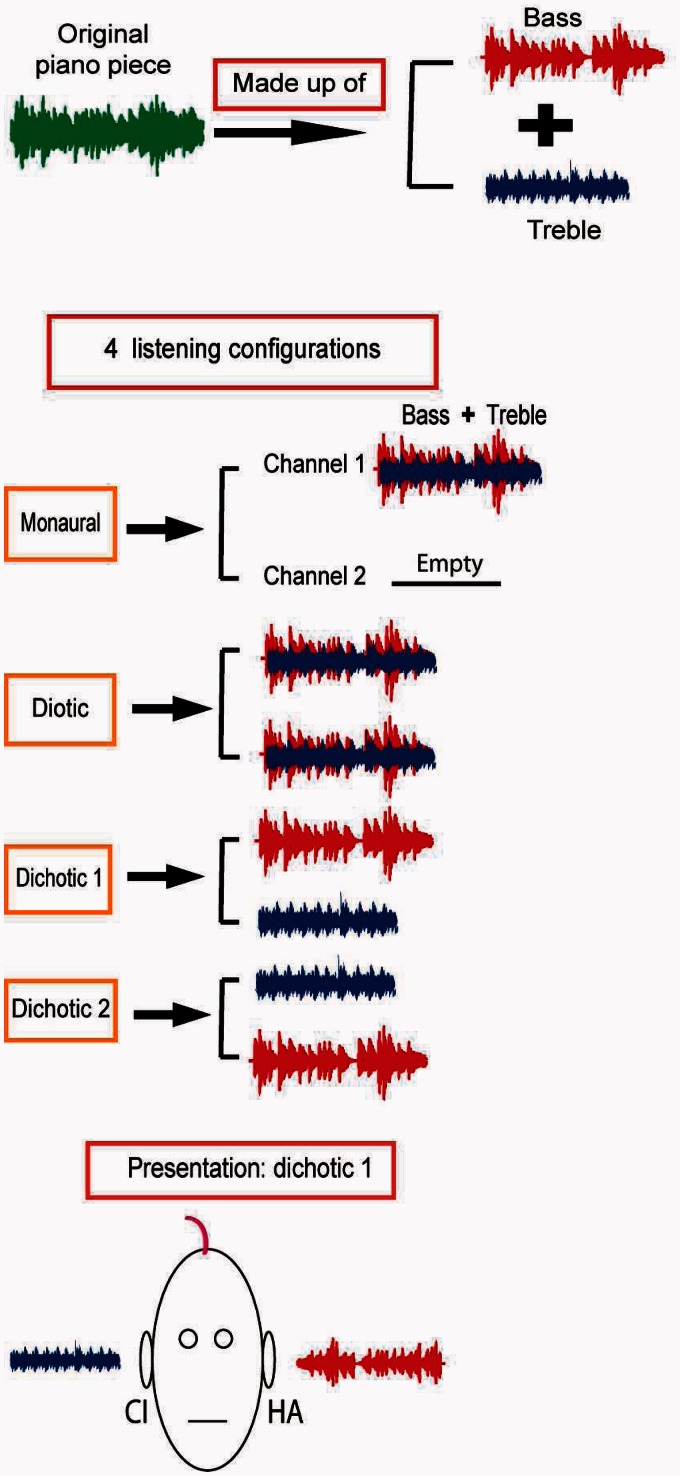

Each piece was presented in four listening configurations: (a) monaural: the treble and the bass parts were combined and sent to one ear only (the “best” ear for the BL listeners, and the implanted ear for BM listeners), (b) diotic: the treble and bass parts were combined and sent to both ears, (c) dichotic 1: the treble part was sent to the best ear (or cochlear implant for BM listeners) and the bass part to the opposite ear, and (d) dichotic 2: same as dichotic 1 but with the parts swapped (see Figure 2). In total, 112 stimulus variants (28 pieces × 4 configurations) were created.
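The four configurations amount to a routing of the two parts onto two output channels. The following Python sketch illustrates that routing only; it is not the MAX/MSP patch used in the experiment. The function names, the representation of each part as a list of samples, and the convention that the first channel is the best (or implanted) ear are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Signal = List[float]
# Assumed convention: (best or implanted ear, opposite ear)
Stereo = Tuple[Signal, Signal]


def mix(a: Signal, b: Signal) -> Signal:
    """Sum two equal-length mono parts sample by sample."""
    if len(a) != len(b):
        raise ValueError("parts must have the same length")
    return [x + y for x, y in zip(a, b)]


def listening_configurations(treble: Signal, bass: Signal) -> Dict[str, Stereo]:
    """Route the treble and bass parts as in the four listening configurations."""
    both = mix(treble, bass)
    silence = [0.0] * len(both)
    return {
        "monaural": (both, silence),                # both parts, one ear only
        "diotic": (both, list(both)),               # both parts, both ears
        "dichotic 1": (list(treble), list(bass)),   # treble to best ear
        "dichotic 2": (list(bass), list(treble)),   # parts swapped
    }


if __name__ == "__main__":
    configs = listening_configurations([1.0, 2.0], [0.5, 0.5])
    for name, (ear_a, ear_b) in configs.items():
        print(name, ear_a, ear_b)
    # 28 pieces in 4 configurations give the 112 variants mentioned above
    print(28 * len(configs))
```

Keeping the two parts as separate signals until this final step is what makes the dichotic configurations possible; once the parts are mixed, they cannot be assigned to different ears.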

Figure 2.

Creation of the stimuli. The piano pieces are made up of a treble clef and a bass clef. Depending on the listening configuration, the treble and bass are combined differently. Here is shown an example of the dichotic configuration in a BM listener.

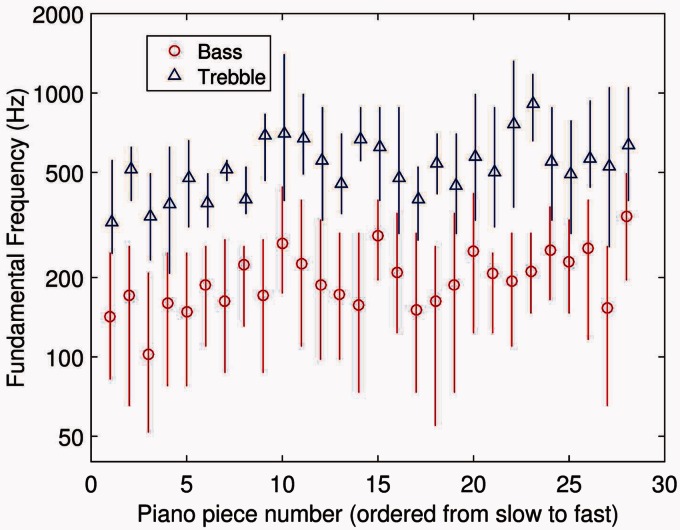

The bass and treble clef parts overlap in pitch in 9 of the 28 pieces, but only for a few notes. Figure 3 shows the range (vertical line) and the average (symbol) F0 of the notes in the pieces. The average F0 was calculated by taking the average of the MIDI note values in each piece. Overall, the average F0 increased moderately but significantly with tempo (R² = .37, p < .001), showing that the composer tended to use higher pitched notes in pieces with fast tempi. For each increase of 14 beats per minute (BPM), the average F0 increased by one semitone. However, the average F0 difference between the bass and treble clefs was not significantly correlated with the tempo (R² = .02; p = .45). MIDI files for the pieces were downloaded from http://www.brams.umontreal.ca/plab/downloads/Emotional_Clips.zip.
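As an illustration of the average F0 computation, the sketch below averages MIDI note numbers and converts the result to hertz. The text does not state how the mean note value was converted to a frequency, so the standard equal-tempered mapping (MIDI note 69 = A4 = 440 Hz) is assumed here, and the function names are hypothetical. The last helper simply restates the reported regression trend of one semitone per 14 BPM.

```python
from typing import Sequence


def midi_to_hz(note: float, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency of a (possibly fractional) MIDI note number."""
    return a4_hz * 2.0 ** ((note - 69.0) / 12.0)


def average_f0_hz(midi_notes: Sequence[float]) -> float:
    """Average the MIDI note values first, then convert the mean to Hz."""
    if not midi_notes:
        raise ValueError("at least one note is required")
    mean_note = sum(midi_notes) / len(midi_notes)
    return midi_to_hz(mean_note)


def semitone_shift_for_tempo_change(delta_bpm: float) -> float:
    """Reported trend: the average F0 rises by one semitone per 14 BPM."""
    return delta_bpm / 14.0


if __name__ == "__main__":
    print(average_f0_hz([60, 72, 75]))          # mean note is 69
    print(semitone_shift_for_tempo_change(28))  # two semitones
```

Averaging in the MIDI (log-frequency) domain rather than in hertz means that each semitone contributes equally to the mean, regardless of register.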

Figure 3.

Note range (vertical line) and the average (symbol) F0 of each piece (the treble clef in blue triangle and the bass clef in red circle).

Procedure

As participants were nonmusicians, the instructions explained the two-part structure of the pieces by showing a picture of two hands playing a piano. It was explained that the right hand usually played the treble part and the left one the bass part and that the two parts were always present in the piece but could be presented to the same or different ears. A training session was administered, in which participants listened to examples of the bass and treble parts. These example stimuli were not reused in the experiment. After training, the main experiment began.

The pieces were played back in random order within a single block. After each piece finished, participants were asked to rate the piece using three dichotomously anchored, continuous rating scales implemented as sliders on a touchscreen. The first slider was labeled happy/sad, and participants were asked to indicate the degree to which they thought the piece was intended by the composer to be happy or sad. The second was labeled like/dislike, and participants were asked to indicate how much they liked or disliked the piece. The last slider was labeled clear/unclear, and participants were asked to indicate how clearly or unclearly they could hear the two parts of the piece separately (the left and right hands). When all three ratings were complete, the next trial was initiated by the participant pressing a button labeled “next”.

Statistical Analysis

The slider position was encoded with integer values between 1 (happy, like, clear) and 100 (sad, dislike, unclear). As CI users are known to have good perception of rhythm, and tempo is known to affect both the emotional response (happy pieces usually have a faster tempo than sad ones) and the ability to segregate sequential notes (a faster tempo induces more sequential segregation than a slower one; Bregman, 1990), the pieces were analyzed as a function of their tempo.

All tempi were estimated by asking eight additional NH listeners to tap on a button with the beat of each piece. The average across the eight NH listeners was used as the reference tempo for further analysis.
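A tapping-based tempo estimate of this kind can be sketched as follows. The text specifies only that listeners tapped along with the beat and that the average across the eight listeners was used; deriving each listener's tempo from the mean inter-tap interval is an assumption, as are the function names.

```python
from statistics import mean
from typing import Sequence


def bpm_from_taps(tap_times_s: Sequence[float]) -> float:
    """Tempo in BPM from one listener's tap times (seconds, ascending)."""
    if len(tap_times_s) < 2:
        raise ValueError("at least two taps are needed")
    intervals = [b - a for a, b in zip(tap_times_s, tap_times_s[1:])]
    if any(i <= 0 for i in intervals):
        raise ValueError("tap times must be strictly increasing")
    # Assumption: tempo is 60 s divided by the mean inter-tap interval
    return 60.0 / mean(intervals)


def reference_tempo(taps_per_listener: Sequence[Sequence[float]]) -> float:
    """Average the per-listener tempo estimates for one piece."""
    return mean(bpm_from_taps(taps) for taps in taps_per_listener)


if __name__ == "__main__":
    listener_a = [0.0, 0.5, 1.0, 1.5]  # taps every 0.5 s
    listener_b = [0.0, 1.0, 2.0]       # taps every 1.0 s (half-time)
    print(bpm_from_taps(listener_a))
    print(reference_tempo([listener_a, listener_b]))
```

The second listener in the usage example taps at half the rate of the first, which shows how such disagreements pull the averaged reference tempo between the two candidate beat levels.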

The data were analyzed using JMP (version 11.1.1) with a linear mixed model with the following fixed effects: group (BM or BL), listening configuration (monaural, diotic, dichotic 1, and dichotic 2), tempo (as a continuous variable), and their two-way and three-way interactions. The participants and their interactions with the tempi and listening configurations were included as random effects. When significant, the differences between the listening configurations were further tested with Tukey HSD post hoc analyses. As the goal of this study was to test whether the listening configuration would affect the perception of CI users, NH listeners were analyzed separately. The effect size (η²) was reported for significant factors.

Results

NH Listeners

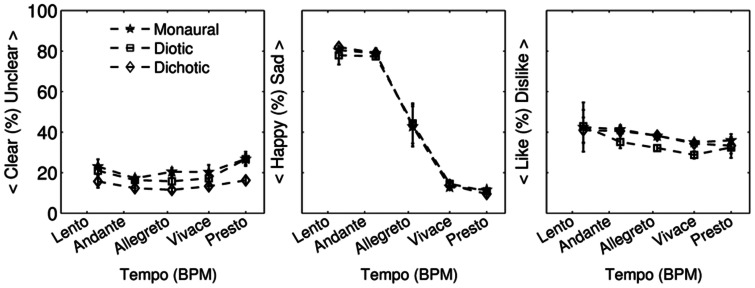

Figure 4 shows the average clear or unclear, happy or sad, and like or dislike ratings as a function of the tempo for the NH group. For clarity of presentation, the tempo of each piece was classified into five categories as follows: (a) lento < 73 BPM, (b) andante 74–98 BPM, (c) allegretto 99–132 BPM, (d) vivace 133–168 BPM, and (e) presto > 169 BPM, and the two dichotic configurations were averaged together. However, the analysis was performed with the continuous tempo values and with both dichotic listening configurations. The effect of listening condition was significant and small for the clear or unclear rating (F(3, 30) = 8.357, p < .001, η² = 0.027) but not significant for the like or dislike rating (F(3, 30) = 2.549, p = .074) or the happy or sad rating (F(3, 30) = 0.881, p = .462). Post hoc analysis revealed that for the clear or unclear ratings, the two dichotic conditions were not significantly different from each other. However, both dichotic conditions were significantly different from the monaural and diotic conditions (p < .01). The effect of tempo was significant and large for the happy or sad rating (F(1, 10) = 418.164, p < .001, η² = 0.463) and not significant for the clear or unclear rating (F(1, 10) = 3.751, p = .082) or the like or dislike rating (F(1, 10) = 1.22, p = .295).
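The tempo binning used for the figures can be expressed as a small lookup. The listed ranges leave gaps at exactly 73 and 169 BPM and do not say how non-integer reference tempi were handled, so this sketch assumes each category starts at its listed lower bound (74, 99, 133) and that presto starts at 170 BPM; the function name is hypothetical.

```python
from bisect import bisect_right

# Assumed lower bounds of andante, allegretto, vivace, and presto (BPM)
_LOWER_BOUNDS = [74, 99, 133, 170]
_CATEGORIES = ["lento", "andante", "allegretto", "vivace", "presto"]


def tempo_category(bpm: float) -> str:
    """Map a tempo in BPM onto one of the five plotting categories."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    # bisect_right counts how many lower bounds are <= bpm
    return _CATEGORIES[bisect_right(_LOWER_BOUNDS, bpm)]


if __name__ == "__main__":
    for bpm in (60, 73.5, 74, 120, 168, 169, 185):
        print(bpm, tempo_category(bpm))
```

These categories serve presentation only; as stated above, the statistical analysis used the continuous tempo values.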

Figure 4.

Average ratings for the NH listeners. Blue pentagram, green square, and red diamond lines depict three listening configurations (the two dichotic modes are averaged together).

As the pieces were composed to be either “happy” or “sad,” it is possible to calculate the ratio of stimuli correctly identified as such. Each stimulus was identified as “happy” for a participant if it was rated with a value below 50% on the happy or sad slider or was otherwise identified as “sad.” Table 1 shows that on average, NH listeners identified 97% of the happy pieces as happy and 94% of the sad pieces as sad.
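The identification rule just described can be sketched in a few lines of Python. The rule itself (a rating below 50 counts as "happy", anything else as "sad") comes from the text; the function names and the trial representation as (intended emotion, rating) pairs are assumptions, and the ratings in the usage example are made up purely to exercise the code.

```python
from typing import Dict, Iterable, Tuple


def identified_emotion(rating: int) -> str:
    """Slider runs from 1 (happy) to 100 (sad); below 50 counts as happy."""
    return "happy" if rating < 50 else "sad"


def percent_correct(trials: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Percentage of trials whose response matches the composer's intention.

    Each trial is (intended emotion, slider rating); results are reported
    separately for the happy and the sad pieces.
    """
    counts = {"happy": [0, 0], "sad": [0, 0]}  # [correct, total]
    for intended, rating in trials:
        counts[intended][1] += 1
        if identified_emotion(rating) == intended:
            counts[intended][0] += 1
    return {
        emotion: 100.0 * correct / total
        for emotion, (correct, total) in counts.items()
        if total > 0
    }


if __name__ == "__main__":
    # Illustrative ratings only, not data from the study
    example = [("happy", 10), ("happy", 60), ("sad", 80), ("sad", 50)]
    print(percent_correct(example))
```

A rating of exactly 50 is counted as "sad" under this rule, which slightly favors the sad category for responses near the midpoint.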

Table 1.

Percentage of Stimuli Correctly Identified as Happy or Sad for Each Listening Condition and Participant Group.

| Listening condition | Composer intention | NH | BM | BL |
|---|---|---|---|---|
| Monaural | Happy | 100 | 97 | 87 |
| | Sad | 95 | 81 | 78 |
| Diotic | Happy | 99 | 99 | 96 |
| | Sad | 92 | 79 | 81 |
| Dichotic 1 | Happy | 100 | 99 | 98 |
| | Sad | 98 | 70 | 75 |
| Dichotic 2 | Happy | 100 | 97 | 95 |
| | Sad | 92 | 77 | 71 |
| Average | Happy | 97 | 97 | 93 |
| | Sad | 94 | 77 | 76 |

Note. Each piece was labelled as “happy” or “sad” by the composer. Each stimulus was identified as “happy” for a participant if it was rated below 50% on the happy/sad rating, or was otherwise identified as “sad.” NH = normal hearing; BM = bimodal fitting; BL = bilateral fitting.

Cochlear Implant Users

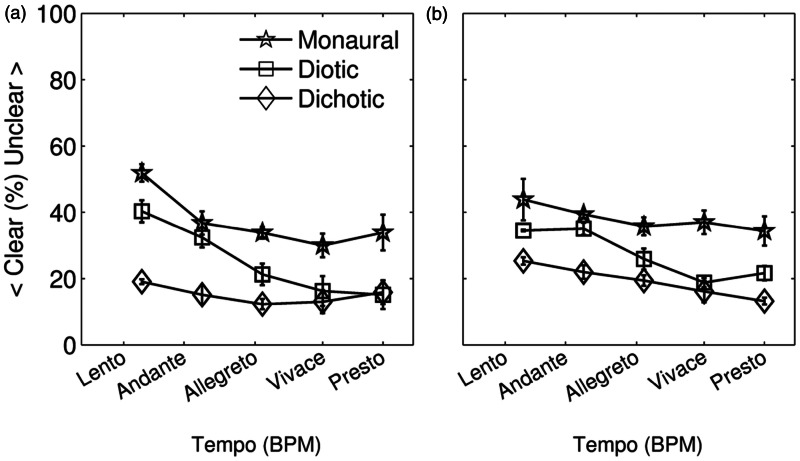

Clear or unclear ratings

Figure 5 shows the average clear or unclear ratings as a function of tempo and listening configuration. Overall, the listeners rated the pieces as relatively clear, with all average ratings below 52 points. This rating might have been influenced by the overall F0 separation between the treble and bass parts. The main effect of listening configuration was significant and moderate (F(3, 51) = 17.023, p < .001, η² = 0.087). The main effect of tempo was also significant and small (F(1, 17) = 25.891, p < .001, η² = 0.011), showing that the faster the tempo, the clearer the ratings. Furthermore, the interaction between listening configuration and tempo was significant and small (F(3, 51) = 3.374, p = .025, η² = 0.005). No effect of group was found (neither as a main effect nor in any interaction), indicating no significant difference between BM and BL participants. Post hoc tests in both CI groups showed that ratings were significantly lower (more clear) in the two dichotic configurations compared with the diotic and monaural configurations (p < .001). Ratings in the diotic configuration were also significantly more clear than in the monaural configuration (p < .001). No significant difference was found between the two dichotic configurations. This result supports our first hypothesis.

Figure 5.

Average clear or unclear ratings for the CI users (BM, panel a and BL, panel b). Blue, green, and red lines depict three listening configurations (the two dichotic modes are averaged together).

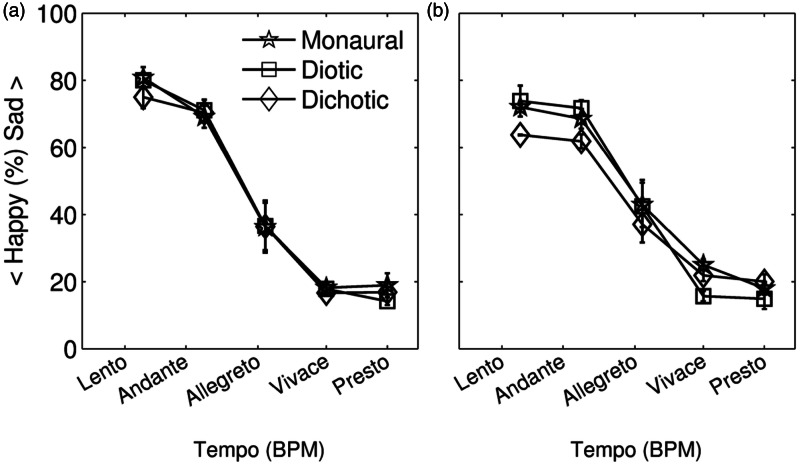

Happy or sad ratings

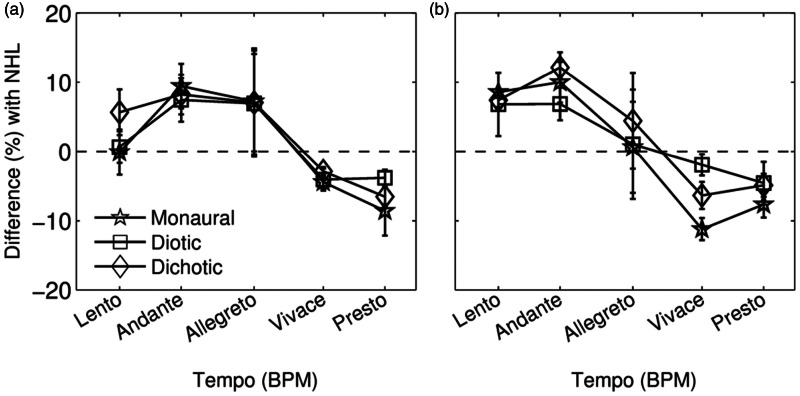

Figure 6 shows the average happy or sad ratings for the BM (Panel A) and BL (Panel B) listeners, and Table 1 shows the percentage of pieces correctly identified. On average, both groups were surprisingly accurate at judging the intended emotion of each piece. The percentage of pieces correctly identified as “happy” ranged between 87% and 99%, and the percentage of pieces correctly identified as “sad” between 70% and 81%, depending on the listening configuration and the listener group. Statistical analysis showed that only the main effect of tempo was significant and large (F(1, 17) = 151.21, p < .001, η² = 0.38). No other main effect or interaction was found for the group or the listening configuration. CI users’ responses were very similar to those of the NH listeners (Figure 4). Figure 7 shows the difference between ratings of the BM and BL groups and the NH group. This figure shows that most of the differences between CI users and NH listeners were for the pieces with moderate tempi. The largest average difference in ratings was 12 points. As the effect of the listening configuration was not significant, the second hypothesis was not supported.

Figure 6.

Average happy or sad ratings for the CI users (BM, panel a and BL, panel b). Blue, green, and red lines depict three listening configurations (the two dichotic modes are averaged together).

Figure 7.

Average ratings for the BM (Panel a) and the BL (Panel b) groups compared (in %) to the average of the NH listeners. Blue pentagram, green square, and red diamond lines depict three listening configurations (the two dichotic modes are averaged together).

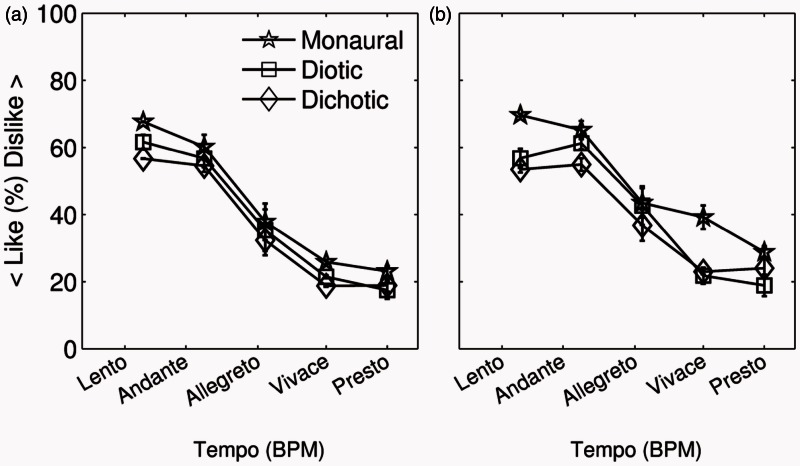

Like or dislike ratings

The average like or dislike ratings for the CI users are shown in Figure 8. The main effect of listening configuration was significant and small (F(3, 51) = 6.699, p < .001, η² = 0.012). The main effect of tempo was significant and large (F(1, 51) = 41.24, p < .001, η² = 0.205). No significant effect was found for the group. When the tempo was presto, both BL and BM groups rated the piece on average as more liked than when the tempo was lento. Post hoc tests showed that participants judged the music presented in the monaural listening configuration as significantly less likable than the music presented in the diotic configuration (p = .017) or in the dichotic configurations (p < .0001). No significant difference was found between the two dichotic configurations. The average like or dislike ratings were higher for both dichotic configurations compared with the diotic configuration. However, as the differences were not significant (p > .1 for diotic vs. dichotic 1 and for diotic vs. dichotic 2), the third hypothesis was not supported.

Figure 8.

Average like or dislike ratings for the CI users (BM, panel a and BL, panel b). Blue pentagram, green square, and red diamond lines depict three listening configurations (the two dichotic modes are averaged together).

Discussion

This study aimed to improve musical enjoyment for adult CI users. We increased the perceptual distance (and thus the salience of the lateralization stream segregation cue) between the treble and bass clef parts of simple piano melodies by presenting the parts to different ears. We evaluated the effect of four different listening configurations (monaural, diotic, and two dichotic configurations) on three perceptual ratings (clear or unclear, happy or sad, and like or dislike) for BM and BL listeners.

Hypothesis 1: Improving Subjective Ratings of Clarity

The first hypothesis was supported: Assigning the bass and treble clef parts to different ears improved subjective ratings of clarity. The NH group and both CI groups reported that the pieces were significantly clearer (better polyphonic perception) in the dichotic configurations compared with the monaural and diotic configurations. The mean improvement in clarity ratings from the monaural to the dichotic configurations across all tempi for the two CI groups was approximately 20 points. The lack of a significant difference between the BL and BM groups, as well as the lack of interaction with listening configuration, indicates that both groups could benefit from the enhancement of lateralization cues. The lack of difference between the two dichotic configurations is more surprising, at least for the BM group, as we might have expected that the configuration in which the bass part is sent to the hearing aid and the treble to the CI would be more efficient than the inverse, due to the limited ability of the CI to code low-frequency acoustic information.

Hypothesis 2: Improving Identification of Emotional Content

The second hypothesis was not supported: Assigning the bass and treble clef parts to different ears did not improve the accuracy of identifying the happy or sad emotional content of the piece. Both BM and BL participants had very similar happy or sad ratings to the NH listeners across all listening configurations. However, it is possible that the listening configuration effect existed but was overpowered by the strong effect of tempo. Furthermore, as shown in Figure 7, CI users could identify the musical emotion surprisingly well, so it is possible that the rating differences were limited by a ceiling effect. It would be interesting to replicate the experiment with all the stimuli at the same tempo to avoid this bias. If such an experiment also failed to show a significant effect of listening configuration, this would strongly refute the second hypothesis.

Hypothesis 3: Improving Enjoyment of Music

The third hypothesis was not supported. Although the analysis revealed a significant effect of listening configuration, and CI users’ enjoyment ratings were significantly lower in the monaural condition compared with both binaural conditions, the post hoc analyses showed no significant difference in ratings between the diotic and dichotic configurations. Assigning the bass and treble clef parts to different ears did not improve enjoyment of the music when compared with a simple bilateral listening configuration. This result suggests that presenting the music to both ears was sufficient to enhance music appreciation. Similar results were previously found in a study that reported a higher appreciation of the piano for BL users compared with unilateral users (Veekmans, Ressel, Mueller, Vischer, & Brockmeier, 2009). The same study also showed that 91% of BL users reported listening to music “to be happy” compared with only 30% of unilateral users. It is worth noting that the NH listeners significantly preferred the diotic condition, and that no differences were found between the monaural and the two dichotic configurations. Among the four listening conditions, the diotic is by far the most common way piano pieces are experienced, which could explain the preference rating of the NH listeners. On the other hand, CI users are more familiar with large differences in the audio inputs between the two ears.

As shown in Figure 8, the effect size of the listening configuration was small in comparison with the effect of the tempo. A linear regression analysis indicated a regression slope of 0.33 for the CI listeners (i.e., for an increase of 100 BPM, the like or dislike rating increased by 33 points). This effect was surprising, as we might not expect a strong effect of tempo on music preference. For example, a recent study by Schellenberg and von Scheve (2012) revealed no strong preferences of NH music listeners toward fast songs. They compared 200 songs that were in the top 40 from 2005 to 2009. More than half of the most well-liked songs were in a minor key (57.5%), and their average tempo ranged widely between andante and vivace (average tempo of 99.9 BPM, SD = 24.6). Several explanations can be suggested for this large effect in the CI users. First, the faster the tempo, the more musical information is conveyed. This could possibly lead to a greater appreciation of music. Second, faster tempo pieces may have more complex rhythmic information. As CI users have relatively intact perception of rhythm, they may better appreciate a rhythmically interesting piece. Third, because the CI users are likely to have very poor pitch perception and little or no ability to perceive different harmonies, tempo may be the primary perceptual dimension along which the pieces vary, leading them to rate the pieces according to this dimension, without regard to the additional dimensions, such as mode (major and minor), harmony, or melodic content, used by NH listeners.

Conclusion

In this article, we proposed a strategy to improve musical perception and enjoyment for CI recipients. The strategy involved presenting the treble and bass clefs of simple piano music to opposite ears, under the three hypotheses that dichotic presentation would (a) improve perceived clarity, (b) improve judgments of musical emotion, and (c) increase listening pleasure. Ratings of the clarity of piano pieces were improved in CI users by sending the treble and bass clef to separate ears, supporting the first hypothesis. We propose that this separation acts as a stream segregation cue (lateralization cue), increasing the perceptual difference between the two parts. The two parts were also rated as more clear when the tempo was fast. When rating the emotional content of the pieces, CI users had similar ratings to the NH listeners overall, but the listening configuration had no effect, thus providing no support for the second hypothesis. For the CI users, the pieces were not liked more in dichotic than in diotic presentation mode, in contrast to the predictions of the third hypothesis. However, CI users’ ratings were higher when the two parts were presented either diotically or dichotically compared with monaurally, but this effect was small when compared with the effect of the tempo.

Acknowledgments

We would like to thank Professor Isabelle Peretz for her valuable help in the design of the experiment. We also wish to thank the participants involved in this study for their tireless contribution, Drs Chris James and Kuzma Strelnikov for their comments on an early version of the article, and Dr Camilla Thyregod for her help in the statistical analysis. This study was part of Nicolas Vannson’s final year research project for the completion of his Master of audiology, supervised by Drs Marozeau and Innes-Brown.

Note

As we are studying lateralization cues, it would have been better to send the stimuli to the hearing aid through a direct audio cable. However, this would have required us to have a cable for each brand and model. In our experiment, although the sound is first generated by a loudspeaker, it is processed and presented to the participant through his or her hearing aid.

Declaration of Conflicting Interests

The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Nicolas Vannson is an employee of Cochlear France S.A.S.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by the National Health and Medical Research Council of Australia. The Bionics Institute acknowledges the support it receives from the Victorian Government through its Operational Infrastructure Support Program.

References

- Balkwill L.-L., Thompson W. F. (1999) A cross-cultural investigation of the perception of emotion in music: Psychophysical and cultural cues. Music Perception 17: 43–64.

- Blauert J. (1997) Spatial hearing: The psychophysics of human sound localization, Cambridge, MA: The MIT Press.

- Bregman A. S. (1990) Auditory scene analysis: The perceptual organization of sound, Cambridge, MA: The MIT Press.

- Carlyon R. P. (2004) How the brain separates sounds. Trends in Cognitive Sciences 8(10): 465–471.

- Chatterjee M., Sarampalis A., Oba S. I. (2006) Auditory stream segregation with cochlear implants: A preliminary report. Hearing Research 222(1–2): 100–107.

- Choisel S., Wickelmaier F. (2007) Evaluation of multichannel reproduced sound: Scaling auditory attributes underlying listener preference. The Journal of the Acoustical Society of America 121(1): 388–400.

- Cooper H. R., Roberts B. (2009) Auditory stream segregation in cochlear implant listeners: Measures based on temporal discrimination and interleaved melody recognition. The Journal of the Acoustical Society of America 126(4): 1975–1987. doi:10.1121/1.3203210.

- Faulkner K. F., Pisoni D. B. (2013) Some observations about cochlear implants: Challenges and future directions. Neuroscience Discovery 1(1): 9.

- Florentine M. (1976) Relation between lateralization and loudness in asymmetrical hearing losses. Journal of the American Audiology Society 1(6): 243–251.

- Francart T., Brokx J., Wouters J. (2008) Sensitivity to interaural level difference and loudness growth with bilateral bimodal stimulation. Audiology and Neurotology 13(5): 309–319.

- Francart T., Brokx J., Wouters J. (2009) Sensitivity to interaural time differences with combined cochlear implant and acoustic stimulation. Journal of the Association for Research in Otolaryngology 10(1): 131–141.

- Gfeller K., Christ A., Knutson J., Witt S., Mehr M. (2003) The effects of familiarity and complexity on appraisal of complex songs by cochlear implant recipients and normal hearing adults. Journal of Music Therapy 40(2): 78.

- Gifford R. H. (2011) Who is a cochlear implant candidate? The Hearing Journal 64(6): 16–18.

- Gifford R. H., Dorman M. F., Shallop J. K., Sydlowski S. A. (2010) Evidence for the expansion of adult cochlear implant candidacy. Ear and Hearing 31(2): 186–194.

- Hevner K. (1935) The affective character of the major and minor modes in music. The American Journal of Psychology 47(1): 103–118.

- Hong R. S., Turner C. W. (2009) Sequential stream segregation using temporal periodicity cues in cochlear implant recipients. The Journal of the Acoustical Society of America 126(1): 291–299. doi:10.1121/1.3140592.

- Hopyan T., Gordon K., Papsin B. (2011) Identifying emotions in music through electrical hearing in deaf children using cochlear implants. Cochlear Implants International 12(1): 21–26.

- Kong Y.-Y., Cruz R., Jones J. A., Zeng F.-G. (2004) Music perception with temporal cues in acoustic and electric hearing. Ear and Hearing 25(2): 173–185.

- Kong Y.-Y., Mullangi A., Marozeau J. (2012) Timbre and speech perception in bimodal and bilateral cochlear-implant listeners. Ear and Hearing 33(5): 645–659. doi:10.1097/AUD.0b013e318252caae.

- Kong Y.-Y., Mullangi A., Marozeau J., Epstein M. (2011) Temporal and spectral cues for musical timbre perception in electric hearing. Journal of Speech, Language, and Hearing Research 54: 981–994. doi:1092-4388_2010_10-0196.

- Limb C. J., Rubinstein J. T. (2012) Current research on music perception in cochlear implant users. Otolaryngologic Clinics of North America 45(1): 129–140.

- Looi V., McDermott H., McKay C., Hickson L. (2008) The effect of cochlear implantation on music perception by adults with usable pre-operative acoustic hearing. International Journal of Audiology 47(5): 257–268. doi:10.1080/14992020801955237.

- Marozeau J., Innes-Brown H., Blamey P. J. (2013a) The acoustic and perceptual cues in melody segregation for listeners with a cochlear implant. Frontiers in Psychology 4: 790.

- Marozeau J., Innes-Brown H., Blamey P. J. (2013b) The effect of timbre and loudness on melody segregation. Music Perception 30(3): 259–274.

- McDermott H. (2011) Music perception. In: Zeng F.-G., Popper A. N., Fay R. R. (eds) Auditory prostheses: New horizons, New York, NY: Springer, pp. 305–339.

- Middlebrooks J. C., Onsan Z. A. (2012) Stream segregation with high spatial acuity. The Journal of the Acoustical Society of America 132(6): 3896–3911.

- Noble W., Byrne D., Lepage B. (1994) Effects on sound localization of configuration and type of hearing impairment. The Journal of the Acoustical Society of America 95(2): 992–1005.

- North A. C., Hargreaves D. J. (1997) Liking, arousal potential, and the emotions expressed by music. Scandinavian Journal of Psychology 38(1): 45–53.

- Oxenham A. J. (2008) Pitch perception and auditory stream segregation: Implications for hearing loss and cochlear implants. Trends in Amplification 12(4): 316–331.

- Panksepp J. (1995) The emotional sources of “chills” induced by music. Music Perception 13: 171–207.

- Peretz I., Gagnon L., Bouchard B. (1998) Music and emotion: Perceptual determinants, immediacy, and isolation after brain damage. Cognition 68(2): 111–141.

- Ritossa D. A., Rickard N. S. (2004) The relative utility of ‘pleasantness’ and ‘liking’ dimensions in predicting the emotions expressed by music. Psychology of Music 32(1): 5–22.

- Schellenberg E. G., von Scheve C. (2012) Emotional cues in American popular music: Five decades of the top 40. Psychology of Aesthetics, Creativity, and the Arts 6(3): 196–203.

- Schubert E. (2007) The influence of emotion, locus of emotion and familiarity upon preference in music. Psychology of Music 35(3): 499–515.

- Schulz E., Kerber M. (1994) Music perception with the MED-EL implants. Advances in Cochlear Implants. 326–332.

- Seeber B. U., Baumann U., Fastl H. (2004) Localization ability with bimodal hearing aids and bilateral cochlear implants. The Journal of the Acoustical Society of America 116(3): 1698–1709.

- Veekmans K., Ressel L., Mueller J., Vischer M., Brockmeier S. J. (2009) Comparison of music perception in bilateral and unilateral cochlear implant users and normal-hearing subjects. Audiology and Neurotology 14(5): 315–326. doi:000212111.

- Vieillard S., Peretz I., Gosselin N., Khalfa S., Gagnon L., Bouchard B. (2008) Happy, sad, scary and peaceful musical excerpts for research on emotions. Cognition & Emotion 22(4): 720–752.

- Volkova A., Trehub S. E., Schellenberg E. G., Papsin B. C., Gordon K. A. (2013) Children with bilateral cochlear implants identify emotion in speech and music. Cochlear Implants International 14(2): 80–91.

- Zeng F. G. (2004) Trends in cochlear implants. Trends in Amplification 8(1): 1–34.