Abstract

We propose a novel framework for perceptual grouping based on the idea of mixture models, called Bayesian Hierarchical Grouping (BHG). In BHG we assume that the configuration of image elements is generated by a mixture of distinct objects, each of which generates image elements according to some generative assumptions. Grouping, in this framework, means estimating the number and the parameters of the mixture components that generated the image, including estimating which image elements are “owned” by which objects. We present a tractable implementation of the framework, based on the hierarchical clustering approach of Heller and Ghahramani (2005). We illustrate it with examples drawn from a number of classical perceptual grouping problems, including dot clustering, contour integration, and part decomposition. Our approach yields an intuitive hierarchical representation of image elements, giving an explicit decomposition of the image into mixture components, along with estimates of the probability of various candidate decompositions. We show that BHG accounts well for a diverse range of empirical data drawn from the literature. Because BHG provides a principled quantification of the plausibility of grouping interpretations over a wide range of grouping problems, we argue that it provides an appealing unifying account of the elusive Gestalt notion of Prägnanz.

Keywords: perceptual grouping, Bayesian inference, hierarchical representation, visual perception, Prägnanz, computational model

Perceptual grouping is the process by which image elements are organized into distinct clusters or objects. The problem of grouping is inherently difficult because the system has to choose the “best” grouping interpretation among an enormous number of candidates (the number of possible partitions of N elements, for example, grows exponentially with N). Moreover, exactly what makes one interpretation “better” than another is a notoriously subtle problem, epitomized by the ambiguity surrounding the Gestalt term Prägnanz, usually translated as “goodness of form.” Hence despite an enormous literature (see Wagemans, Elder, et al., 2012; Wagemans, Feldman, et al., 2012, for modern reviews), both the underlying goals of perceptual grouping—exactly what the system is trying to accomplish—as well as the computational mechanisms the human system employs to accomplish these goals, are poorly understood.

Many models have been proposed for specific subproblems of perceptual grouping. Models of contour integration, the process by which visual elements are grouped into elongated contours, are particularly well-developed (e.g. Ernst et al., 2012; Field, Hayes, & Hess, 1993; Geisler, Perry, Super, & Gallogly, 2001). However, these models often presuppose a variety of Gestalt principles such as proximity (Wertheimer, 1923) and good continuation (Wertheimer, 1923), whose motivations are themselves poorly understood. Figure-ground organization, in which the system interprets qualitative depth relations among neighboring surfaces, is also heavily studied, but again models often invoke a diverse collection of rules, including closure, symmetry, parallelism, and so forth (e.g. Kikuchi & Fukushima, 2003; Sajda & Finkel, 1995). Other problems of perceptual grouping have, likewise, been studied both computationally and empirically. Notwithstanding the success of many of these models in accounting for the phenomena to which they are addressed, most are narrow in scope, and difficult to integrate with other problems of perceptual grouping. An overarching or unifying paradigm for perceptual grouping does not as yet exist.

Of course, it cannot be assumed that the various problems of perceptual grouping do, in fact, involve common mechanisms or principles. “Perceptual grouping” might simply be an umbrella term for a set of essentially unrelated though similar processes. (According to Helson, 1933, the Gestalt literature identified 114 distinct grouping principles.) (See also Pomerantz, 1986) Nevertheless, it has long been observed that many aspects of perceptual grouping seem to involve similar or analogous organizational preferences (Kanizsa, 1979), suggesting the operation of a common underlying computational mechanism—as reflected in Gestalt attempts to unify grouping via Prägnanz (Wertheimer, 1923). But attempts to give a concrete definition to this term have not converged on a clear account. Köhler (1950) sought an explanation of Prägnanz at the neurophysiological level, while van Leeuwen (1990a, 1990b) argued that it should reflect a theory of mental representation. Despite this long history, the idea of an integrated computational framework for perceptual grouping, in which each of a variety of narrower grouping problems could be understood as a special case, remains elusive.

A number of unifying approaches have centered around the idea of simplicity, often referred to as the minimum principle. Hochberg and McAlister (1953) and Attneave (1954) were the first to apply ideas from information theory to perceptual organization, showing how the uncertainty or complexity of perceptual interpretations could be formally quantified, and arguing that the visual system chooses the simplest among available alternatives. Leeuwenberg (1969) developed a more comprehensive model of perceptual complexity based on the idea of the length of the stimulus description in a fixed coding language, now referred to as Structural Information Theory (SIT). More recently his followers have applied similar ideas to a range of grouping problems, including amodal completion (e.g. Boselie & Wouterlood, 1989; van Lier, van der Helm, & Leeuwenberg, 1994, 1995) and symmetry perception (e.g. van der Helm & Leeuwenberg, 1991, 1996). The concreteness of the formal complexity minimization makes these models a clear advance over the often vague prescriptions of the Gestalt theory. But they suffer from a number of fundamental problems, including the ad hoc nature of the fixed coding language adopted, the lack of a tractable computational procedure, and a variety of other problems (Wagemans, 1999).

Recently, a number of Gestalt problems have been modeled in a Bayesian framework, in which degree of belief in a given grouping hypothesis is associated with the posterior probability of the hypothesis conditioned on the stimulus data (e.g. Kersten, Mamassian, & Yuille, 2004). Contour integration, for example, has been shown to conform closely to a rational Bayesian model given suitable assumptions about contours (Claessens & Wagemans, 2008; Elder & Goldberg, 2002; Ernst et al., 2012; Feldman, 1997a, 2001; Geisler et al., 2001). Similarly, the Gestalt principle of good continuation has been formalized in terms of Bayesian extrapolation of smooth contours (Singh & Fulvio, 2005, 2007). But the Bayesian approach has not yet been extended to the more difficult problems of perceptual organization, and a unifying approach has not been developed. A more comprehensive Bayesian account of perceptual grouping would require a way of expressing grouping interpretations in a probabilistic language, and tractable techniques for estimating the posterior probability of each interpretation. The Bayesian framework is well-known to relate closely to complexity minimization, essentially because maximization of the Bayesian posterior is related to minimization of the Description Length (i.e. the negative log of the posterior; see Chater, 1996; Feldman, 2009; Wagemans, Feldman, et al., 2012). The Bayesian approach, too, is often argued to solve the bias-variance problem, giving a solution with optimal complexity given the data and prior knowledge (MacKay, 2003). Hence a comprehensive Bayesian account of grouping promises to shed light on the nature of the minimum principle.

In what follows, we introduce a unified Bayesian framework for perceptual grouping called Bayesian Hierarchical Grouping (BHG) based on the idea of mixture models. Mixture models have been used to model a wide variety of problems, including motion segmentation (Gershman, Jäkel, & Tenenbaum, 2013; Weiss, 1997), visual short-term memory (Orhan & R. A. Jacobs, 2013) and categorization (Rosseel, 2002; Sanborn, Griffiths, & Navarro, 2010). But other than our own work on narrower aspects of the problem of perceptual grouping (Feldman et al., 2013, 2014; Froyen, Feldman, & Singh, 2010, under review), mixture models have yet to be applied to this problem in general. BHG builds on our earlier work but goes considerably farther in encompassing a broad range of grouping problems and introducing a coherent and consistent algorithmic approach. BHG uses agglomerative clustering techniques (in much the same spirit as Ommer & Buhmann, 2003, 2005, in computer science) to estimate the posterior probabilities of hierarchical grouping interpretations. We illustrate and evaluate BHG by demonstrating it on a diverse range of classical problems of perceptual organization, including contour integration, part decomposition and contour completion, and also show that BHG generalizes naturally beyond these problems. In contrast to many past computational models of specific grouping problems, BHG does not take Gestalt principles for granted as premises, but attempts to consider in a more principled way exactly what is being estimated when the visual system decomposes the image into distinct groups. To preview, our proposal is that the visual system assumes that the image is composed of a combination (mixture) of distinct generative sources (or objects), each of which generates some visual elements via a stochastic process. 
In this view, the problem of perceptual grouping is to estimate the nature of these generating sources, and thus to decompose the image into the coherent groups, each of which corresponds to a distinct generative source.

Below we will first outline the computational framework of BHG. We then show how BHG accounts for a number of distinct aspects of perceptual grouping, relying on a variety of data drawn from the literatures of contour integration, part decomposition and contour completion, as well as some “instant psychophysics” (i.e., perceptually natural results on some simple cases).

The computational framework

In recent years Bayesian models have been developed to explain a variety of problems in visual perception. The goal of these models, broadly speaking, is to quantify the degree of belief that ought to be assigned to each potential interpretation of image data. In these models each possible interpretation cj ∈ C = {c1 … cJ} of an image D is associated with a posterior probability p(cj|D), which according to Bayes’ rule is proportional to the product of a prior probability p(cj) and a likelihood p(D|cj) (for introductions see Feldman, 2014; Kersten et al., 2004; Mamassian & Landy, 2002). Similarly, we propose a framework in which perceptual grouping can be viewed as a rational Bayesian procedure by regarding it as a kind of mixture estimation (see also Feldman et al., 2014; Froyen et al., under review). In this framework, the goal is to use Bayesian inference to estimate the most plausible decomposition of the image configuration into constituent “objects.”1 Here we give a brief overview of the approach, sufficient to explain the applications that follow, with mathematical details left to the appendices.

An image is a mixture of objects

A mixture model is a probability distribution that is composed of the weighted sum of some number of distinct component distributions or sources (McLachlan & Basford, 1988). That is, a mixture model is a combination of distinct data sources, all mixed together, without explicit labels—like a set of unlabeled color measurements drawn from a sample of apples mixed with a sample of oranges. The problem for the observer, given data sampled from the mixture, is to decompose the dataset into the most likely combination of components (e.g., a set of reddish things and a set of orange things). Because the data derive from a set of distinct sources, the distribution of values can be highly multimodal and heterogeneous, even if each source has a simple unimodal form. The fundamental problem for the observer is to estimate the nature of the sources (e.g. parameters such as means and variances) while simultaneously estimating which data originate from which source.

The main idea behind our approach is to assume that the image itself is a mixture of objects2. That is, we assume that the visual elements we observe are a sample drawn from a mixture model in which each object is a distinct data-generating source. Technically, let D = {x1 … xN} denote the image data (with each xn representing a 2-dimensional vector in ℝ², e.g. the location of a visual element). We assume that the image (really, the probability distribution from which image elements are sampled) is a sum of K components

$$p(x_n \mid \phi) = \sum_{k=1}^{K} p(x_n \mid \theta_k)\, p(c_n = k \mid \mathbf{p}) \tag{1}$$

In this expression cn ∈ c = {c1 … cN} are the assignments of data to source components, p is a parameter vector of a multinomial distribution with p(cn = k|p) = pk, θk are the parameters of the k-th object, and ϕ = {θ1, … θK, p}. The observer’s goal is to estimate the posterior distribution over the hypotheses cj, assigning a degree of belief to each way of decomposing the image data into constituent objects—that is, to group the image.
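As a minimal sketch of the mixture in Eq. 1, the snippet below evaluates the density of a two-component mixture and the probability that a given element is “owned” by each component. It assumes isotropic bivariate Gaussian objects with known parameters, which is a simplification for illustration; the function names and the numerical values are hypothetical and are not taken from the model's implementation.

```python
import math

def gaussian_pdf_2d(x, mean, var):
    """Isotropic bivariate Gaussian density with variance `var` per axis."""
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    return math.exp(-(dx * dx + dy * dy) / (2.0 * var)) / (2.0 * math.pi * var)

def mixture_density(x, weights, means, variances):
    """Mixture density: sum over components of p_k * p(x | theta_k)."""
    return sum(w * gaussian_pdf_2d(x, m, v)
               for w, m, v in zip(weights, means, variances))

def ownership(x, weights, means, variances):
    """Posterior probability that x was generated by each component."""
    joint = [w * gaussian_pdf_2d(x, m, v)
             for w, m, v in zip(weights, means, variances)]
    total = sum(joint)
    return [j / total for j in joint]

# Illustrative (assumed) parameters: two equally weighted objects.
weights = [0.5, 0.5]
means = [(0.0, 0.0), (4.0, 0.0)]
variances = [1.0, 1.0]

r = ownership((1.0, 0.0), weights, means, variances)
```

In the full problem neither the parameters nor the assignments are known, so both have to be estimated jointly; the sketch only shows the forward direction of the model.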

The framework derives its flexibility from the fact that the objects (generating sources) can be defined in a variety of ways depending on assumptions and context. In a simple case like dot clustering, the image data might be assumed to be generated by a mixture of Gaussian objects, that is, simple clusters defined by a mean μk and covariance matrix Σk (see Froyen et al., under review). Later, we introduce a more elaborate object definition appropriate for other types of grouping problems, such as contour integration and part decomposition. For contour integration, for example, we define an image as a mixture of contours, with contours formalized as elongated mixture components. For part decomposition, we define a shape as a “mixture of parts.” In fact, all the object definitions we introduce below are variations of a single flexible object class, which as we will show can be tailored to generate image data in the form of contours, clusters, or object parts. So notwithstanding the differences among these distinct kinds of perceptual grouping, in our framework they are all treated in a unified manner.

To complete the Bayesian formulation, we define priors over the object parameters p(θ|β) and over the mixing distribution p(p|α). The first of these defines our expectations about what “objects” in our context tend to look like. The second is more technical, defining our expectations about how distinct components tend to “mix.” (It is the natural conjugate prior for the mixing distribution, the Dirichlet distribution with parameter α.) Using these two priors we can rewrite the mixture model (Eq. 1) to define the probability of a particular grouping hypothesis cj. The likelihood of a particular grouping hypothesis is obtained by marginalizing over the object parameters θ, p(D|cj, β) = ∏k ∫p(Dk|θ)p(θ|β)dθ, where Dk is the subset of the data assigned to object k. This yields the posterior of interest

$$p(c_j \mid D, \alpha, \beta) \propto p(D \mid c_j, \beta)\, p(c_j \mid \alpha) \tag{2}$$

where p(cj|α) = ∫p(cj|p)p(p|α)dp is a standard Dirichlet integral (see Rasmussen, 2000, for derivation). This equation defines the degree of belief in a particular grouping hypothesis.

Note that the posterior can be decomposed into two intuitive factors: the likelihood p(D|cj, β) which expresses how well the grouping hypothesis cj fits the image data D; and a prior which in effect quantifies the complexity of the grouping hypothesis cj. As in all Bayesian frameworks, these two components trade off. Decomposing the image into more groups allows each group to fit its constituent image data better, but at a cost in complexity; decomposing the image into fewer, larger groups is simpler, but doesn’t fit the data as well. In principle, Bayes’ rule allows this tradeoff to be optimized, allowing the observer to find the right balance between a simple grouping interpretation and a reasonable fit to the image data.

Unfortunately, as in many Bayesian approaches, computing the full posterior over grouping hypotheses cj can become intractable as N increases, even for a fixed number of components K (Gershman & Blei, 2012). Moreover, we do not generally know the number K of clusters, meaning that K must be treated as a free parameter to be estimated, making the problem even more complex. To accommodate this we extend the finite mixture model into a so-called Dirichlet process mixture model, commonly used in machine learning and statistics (Neal, 2000). Several approximation methods have been proposed to compute posteriors for these models, such as Markov-Chain Monte Carlo (McLachlan & Peel, 2004) or variational methods (Attias, 2000). In the current paper, we adapt a method introduced by Heller and Ghahramani (2005), called Bayesian Hierarchical Clustering (BHC), to the problem of perceptual grouping, resulting in a framework we refer to as Bayesian Hierarchical Grouping (BHG).

One of the main features of BHG is that it produces a hierarchical representation of the organization of image elements. Of course, the idea that perceptual organization tends to be hierarchical has a long history (e.g. Baylis & Driver, 1993; Lee, 2003; Marr & Nishihara, 1978; Palmer, 1977; Pomerantz, Sager, & Stoever, 1977). Machine learning too has often employed hierarchical representations of data (see Bishop, 2006, for a useful overview). Formally, a hierarchical structure corresponds to a tree where the root node represents the image data at the most global level, i.e. the grouping hypothesis that postulates that all image data is generated by one underlying object. Subtrees then describe finer and more local relations between image data, all the way down to the leaves, which each explain only one image datum xn. While this formalism has become increasingly popular (Amir & Lindenbaum, 1998; Feldman, 1997b, 2003; Shi & Malik, 2000), no general method currently exists for actually building this tree for a particular image. In this paper we introduce a computational framework, Bayesian Hierarchical Grouping (BHG), that creates a fully hierarchical representation of a given configuration of image elements.

Bayesian hierarchical grouping

In this section we give a slightly technical synopsis of BHG (details can be found in the Appendix). Like other agglomerative hierarchical clustering techniques, BHC (Heller & Ghahramani, 2005) begins by assigning each data point its own cluster, and then progressively merges pairs of clusters to create a hierarchy. BHC differs from traditional agglomerative clustering methods in that it uses a Bayesian hypothesis test to decide which pair of clusters to merge. The technique serves as a fast approximate inference method for Dirichlet process mixture models (Heller & Ghahramani, 2005).

Given the dataset D = {x1 … xN}, the algorithm is initiated with N trees Ti each containing one data point Di = {xn}. At each stage, the algorithm considers merging every possible pair of trees. Then, by means of a statistical test explained below, it chooses two trees Ti and Tj to merge, resulting in a new tree Tk, with its associated merged dataset Dk = Di ∪ Dj (Fig. 1A). Testing all possible pairs is intractable, so to reduce complexity BHG considers only those pairs that have “neighboring” data points. Neighbor relations are defined via adjacency in the Delaunay triangulation, a computationally efficient method of defining spatial adjacency (de Berg, Cheong, van Kreveld, & Overmars, 2008) that has been found to play a key role in perceptual grouping mechanisms (Watt, Ledgeway, & Dakin, 2008). Considering only neighboring trees substantially reduces computational complexity, from 𝒪(N²) in the initial phase of Heller & Ghahramani’s original algorithm to 𝒪(N log(N)) in ours (see Appendix A).

Figure 1.

Illustration of the Bayesian hierarchical clustering process. (A) Example tree decomposition (see also Heller & Ghahramani, 2005) for the 1D grouping problem on the right; (B) Tree slices, i.e. different grouping hypotheses. (C) Tree decomposition as computed by the clustering algorithm for the dot-clusters on the right, assuming bivariate Gaussian objects; (D) Tree slices for the dot-clusters.

In considering each merge, the algorithm compares two hypotheses in a Bayesian hypothesis testing framework. The first hypothesis ℋ0 is that all the data in Dk are generated by only one underlying object p(Dk|θ), with unknown parameters θ. In order to evaluate the probability of the data given this hypothesis p(Dk|ℋ0) we introduce priors p(θ|β) over the objects as described above, in order to integrate over the to-be-estimated parameters θ,

$$p(D_k \mid \mathcal{H}_0) = \int p(D_k \mid \theta)\, p(\theta \mid \beta)\, d\theta \tag{3}$$

For simple objects such as Gaussian clusters this integral can be computed analytically, but with more complex objects it becomes intractable and approximate methods must be used (see Appendix B).

The second hypothesis, ℋ1, is the sum of all possible partitionings of Dk into two or more objects. However, again exhaustive evaluation of all such hypotheses is intractable. The Bayesian hierarchical clustering algorithm circumvents this problem by considering only partitions that are consistent with the tree structure of the two trees Ti and Tj to be merged. For example, for the tree structure in Fig. 1A the possible tree-consistent partitionings are shown in Fig. 1B. As is clear from the figure, this constraint eliminates many possible partitions. The probability of the data under ℋ1 can now be computed by taking the product over the subtrees p(Dk|ℋ1) = p(Di|Ti)p(Dj|Tj). As will become clear below, p(Di|Ti) can be computed recursively as the tree is constructed.

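To obtain the marginal likelihood of the data under the tree Tk, we need to combine p(Dk|ℋ0) and p(Dk|ℋ1). This yields the probability of the data integrated across all tree-consistent partitions, including the one-object hypothesis ℋ0. Weighting these hypotheses by the prior on the one-object hypothesis p(ℋ0) yields an expression for p(Dk|Tk), which applies recursively to the subtrees Ti and Tj,

$$p(D_k \mid T_k) = p(\mathcal{H}_0)\, p(D_k \mid \mathcal{H}_0) + \bigl(1 - p(\mathcal{H}_0)\bigr)\, p(D_i \mid T_i)\, p(D_j \mid T_j) \tag{4}$$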

Note that p(ℋ0) is also computed bottom-up as the tree is built and is based on a Dirichlet process prior (Eq. 6, for details see Heller & Ghahramani, 2005). (We discuss this prior in greater depth below.) Finally, the probability of the merged hypothesis p(ℋ0|Dk) can be found via Bayes’ rule,

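$$p(\mathcal{H}_0 \mid D_k) = \frac{p(\mathcal{H}_0)\, p(D_k \mid \mathcal{H}_0)}{p(D_k \mid T_k)} \tag{5}$$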

This probability is then computed for all Delaunay-consistent pairs, and the pair with the highest merging probability is merged. In this way the algorithm greedily3 builds the tree until all data is merged into one tree. Given the assumed generative models of objects, this tree represents the most “reasonable” decomposition of the image data into distinct objects.
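The merge procedure described above can be sketched in a few dozen lines. The sketch below is a simplification under stated assumptions, not the implementation used for the results in this paper: data are one-dimensional, objects are Gaussians with known variance `s2` and a normal prior on the mean (so the marginal likelihood is analytic), every pair of trees is tested rather than only Delaunay neighbors, and the prior p(ℋ0) is computed with the bottom-up recursion of Heller and Ghahramani (2005), d_k = αΓ(n_k) + d_i d_j and p(ℋ0) = αΓ(n_k)/d_k. All names and hyperparameter values are hypothetical.

```python
import math

def log_marginal(xs, m0=0.0, t2=25.0, s2=0.25):
    """log p(D | H0) for x ~ N(mu, s2), mu ~ N(m0, t2), via the chain rule
    over one-step-ahead predictive densities (assumed toy object model)."""
    logp, total = 0.0, 0.0
    for i, x in enumerate(xs):
        prec = 1.0 / t2 + i / s2              # posterior precision of mu after i points
        mean = (m0 / t2 + total / s2) / prec  # posterior mean of mu
        var = 1.0 / prec + s2                 # predictive variance of the next point
        logp += -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)
        total += x
    return logp

def log_add(a, b):
    """Numerically stable log(exp(a) + exp(b))."""
    hi, lo = max(a, b), min(a, b)
    return hi + math.log1p(math.exp(lo - hi))

class Tree:
    def __init__(self, data, log_d, log_p_tree, children=(), log_r=0.0):
        self.data, self.log_d, self.log_p_tree = data, log_d, log_p_tree
        self.children, self.log_r = children, log_r  # log_r = log p(H0 | D_k)

def leaf(x, alpha):
    return Tree([x], math.log(alpha), log_marginal([x]))

def merge(ti, tj, alpha):
    data = ti.data + tj.data
    log_a = math.log(alpha) + math.lgamma(len(data))  # alpha * Gamma(n_k)
    log_d = log_add(log_a, ti.log_d + tj.log_d)       # d_k
    log_h0 = (log_a - log_d) + log_marginal(data)     # p(H0) p(D_k | H0)
    log_h1 = (ti.log_d + tj.log_d - log_d) + ti.log_p_tree + tj.log_p_tree
    log_p_tree = log_add(log_h0, log_h1)              # p(D_k | T_k)
    return Tree(data, log_d, log_p_tree, (ti, tj), log_h0 - log_p_tree)

def build_tree(xs, alpha=0.1):
    """Greedily merge the pair with the highest p(H0 | D_k) until one tree remains."""
    trees = [leaf(x, alpha) for x in xs]
    while len(trees) > 1:
        best = max((merge(a, b, alpha)
                    for i, a in enumerate(trees) for b in trees[i + 1:]),
                   key=lambda t: t.log_r)
        trees = [t for t in trees if t not in best.children] + [best]
    return trees[0]

def cut(tree):
    """Split top-down wherever the one-object hypothesis has p(H0 | D_k) < .5."""
    if not tree.children or tree.log_r > math.log(0.5):
        return [sorted(tree.data)]
    return cut(tree.children[0]) + cut(tree.children[1])

root = build_tree([0.0, 0.3, 0.6, 5.0, 5.3, 5.6], alpha=0.1)
clusters = sorted(cut(root))
```

With these assumed settings the two well-separated triplets of points are merged internally with high probability, while the final merge at the root receives a very low p(ℋ0|Dk), so cutting the tree there yields two groups.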

Examples of such trees are shown in Fig. 1. Fig. 1A illustrates a tree for the classical one-dimensional grouping problem (Wertheimer, 1923) (Fig. 1B). The algorithm first groups the closest pair of image elements, then continues as described above until all image elements are incorporated into the tree. Fig. 1C and D similarly illustrate two-dimensional dot clustering (Froyen et al., under review). The results shown in the figure assume objects consisting of bivariate Gaussian distributions of image elements.

Tree-slices and grouping hypotheses

During the construction of the tree one can greedily find a decomposition that is at least a local maximum of the posterior by splitting the tree once p(ℋ0|Dk) < .5. However, we are more often interested in the distribution over all the possible grouping hypotheses rather than choosing a single winner. In order to do so we need to build the entire tree, and subsequently take what are called tree slices at each level of the tree (Fig. 1B and D), and compute their respective probabilities p(cj|D, α, β). Since the current algorithm proposes an approximation of the Dirichlet process mixture model (see Heller & Ghahramani, 2005, for proof), we make use of a Dirichlet process prior (Rasmussen, 2000, and independently discovered by Anderson, 1991) to compute the posterior probability of each grouping hypothesis (or tree slice). This prior is defined as

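$$p(c_j \mid \alpha) = \frac{\Gamma(\alpha)\, \alpha^{K} \prod_{k=1}^{K} \Gamma(n_k)}{\Gamma(N + \alpha)} \tag{6}$$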

where nk is the number of datapoints explained by object with index k. When α > 1 there is a bias towards more objects, each explaining a small number of image data; while when 0 < α < 1 there is a bias towards fewer objects, each explaining a large number of image data. Inserting Eq. 6 into Eq. 2 we can compute the posterior distribution across all tree-consistent decompositions of data D

$$p(c_j \mid D, \alpha, \beta) \propto p(c_j \mid \alpha) \prod_{k=1}^{K} p(D_k \mid \beta) \tag{7}$$

where p(Dk|β) is the marginal likelihood over θ for the data in cluster k of the current grouping hypothesis.
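The effect of α on the prior over grouping hypotheses can be checked numerically. The sketch below assumes the standard Dirichlet process partition prior, p(c|α) = Γ(α) α^K ∏k Γ(nk) / Γ(N + α), and normalizes prior times marginal likelihood over a set of candidate tree slices. The function names are hypothetical, and the per-cluster log marginal likelihoods are taken as given inputs rather than computed from an object model.

```python
import math

def log_dp_prior(cluster_sizes, alpha):
    """log of Gamma(alpha) * alpha^K * prod_k Gamma(n_k) / Gamma(N + alpha)."""
    n_total = sum(cluster_sizes)
    k = len(cluster_sizes)
    return (math.lgamma(alpha) + k * math.log(alpha)
            + sum(math.lgamma(n) for n in cluster_sizes)
            - math.lgamma(n_total + alpha))

def slice_posteriors(slices, alpha):
    """Posterior over candidate tree slices.

    Each slice is a list of (n_k, log_marginal_k) pairs, one per object;
    the score of a slice is log prior + sum of log marginal likelihoods."""
    scores = [log_dp_prior([n for n, _ in s], alpha) + sum(lm for _, lm in s)
              for s in slices]
    top = max(scores)                       # subtract the max for stability
    weights = [math.exp(s - top) for s in scores]
    z = sum(weights)
    return [w / z for w in weights]

# Prior mass of one object versus three singleton objects for N = 3.
one_object_small_alpha = math.exp(log_dp_prior([3], 0.1))
three_objects_small_alpha = math.exp(log_dp_prior([1, 1, 1], 0.1))
```

For three elements there are five partitions (one with a single object, three that split off one element, one with three singletons), and their prior probabilities sum to one, which is a convenient sanity check on the normalizing term.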

Prediction and completion

For any grouping hypothesis, BHG can compute the probability of a new point x* given the existing data D, called the posterior predictive distribution p(x*|D, cj).4 As in Eq. 1, the new datum is generated from a mixture model consisting of the K components that make up this particular grouping hypothesis. More specifically, new data are generated as a weighted sum of predictive distributions p(x*|Dk) = ∫p(x*|θ)p(θ|Dk, β)dθ (where Dk is the data associated with object k),

$$p(x^{*} \mid D, c_j) = \sum_{k=1}^{K} p(x^{*} \mid D_k)\, \pi_k \tag{8}$$

Here πk is the posterior predictive of the Dirichlet prior, defined as πk = (α + nk) / Σi (α + ni) (see Bishop, 2006, p. 478 for a derivation).

The posterior predictive distribution has a particularly important interpretation in the context of perceptual grouping: it allows the model to make predictions about missing data, such as how shapes continue behind occluders (amodal completion). Several examples are shown below.
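A minimal sketch of the posterior predictive for a fixed grouping hypothesis follows. It assumes one-dimensional Gaussian objects with known variance `s2` and a normal prior on the mean, and it assumes mixing weights equal to the posterior mean of a symmetric Dirichlet, (α + nk) / Σi (α + ni). Both choices, the function names, and the hyperparameter values are illustrative assumptions rather than the object model used for the results below.

```python
import math

def predictive_1d(x_new, data, m0=0.0, t2=25.0, s2=0.25):
    """p(x* | D_k) for x ~ N(mu, s2) with prior mu ~ N(m0, t2)."""
    n = len(data)
    prec = 1.0 / t2 + n / s2                  # posterior precision of mu
    mean = (m0 / t2 + sum(data) / s2) / prec  # posterior mean of mu
    var = 1.0 / prec + s2                     # predictive variance
    return math.exp(-(x_new - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def mixing_weights(sizes, alpha):
    """Assumed weights: posterior mean of a symmetric Dirichlet."""
    total = sum(alpha + n for n in sizes)
    return [(alpha + n) / total for n in sizes]

def mixture_predictive(x_new, clusters, alpha):
    """Weighted sum of per-object predictive densities."""
    weights = mixing_weights([len(c) for c in clusters], alpha)
    return sum(w * predictive_1d(x_new, c) for w, c in zip(weights, clusters))

groups = [[0.0, 0.3, 0.6], [5.0, 5.3, 5.6]]
near = mixture_predictive(0.3, groups, alpha=0.1)  # inside the first group
gap = mixture_predictive(2.8, groups, alpha=0.1)   # between the two groups
```

Under this hypothesis a new element is much more probable inside either group than in the gap between them, which is the sense in which the predictive distribution says where missing data are expected.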

The objects

In the BHG framework, objects (generating sources) can be defined in a way suitable to the problem at hand. For a simple dot clustering problem, we might assume Gaussian objects governed by a mean μk and a covariance matrix Σk. We have previously found that such a representation accurately and quantitatively predicts human cluster enumeration judgments (Froyen et al., under review). Fig. 1C–D shows sample BHG output for this problem. In this problem, because the prior and likelihood are conjugate, the marginal p(D|ℋ0) can be computed analytically.

However, more complex grouping problems such as part decomposition and contour integration call for more elaborate object definitions. As a general object model, assume that objects are represented as B-spline curves G = {g1 … gK} (Fig. 2), each governed by a parameter vector qk. (B-splines (see Farouki & Hinds, 1985) are a convenient and widely used mathematical representation of curves.) For each spline, datapoints xn are sampled from univariate Gaussian distributions perpendicular to this curve,

$$p(x_n \mid \theta_k) = \mathcal{N}(d_n \mid \mu_k, \sigma_k) \tag{9}$$

where dn = ‖xn − gk(n)‖ is the distance between the datapoint xn and its perpendicular projection to the curve gk(n), also referred to as the rib length.5 μk and σk are respectively the mean rib length and the rib length variance for each curve. Put together, the parameter vector for each component is defined as θk = {μk, σk, qk}. Fig. 2 shows how this object definition yields a wide variety of forms, ranging from contour-like objects (when μk = 0, Fig. 2A) to axial shapes (when μk > 0, making “wide” shapes, Fig. 2B). Objects with μk = 0 but larger variance σk will tend to look like dots generated from a cluster. For elongated objects, note that the generative function is symmetric along the curve (Fig. 2), constraining objects to obey a kind of local symmetry (Blum, 1973; Brady & Asada, 1984; Feldman & Singh, 2006; Siddiqi, Shokoufandeh, Dickinson, & Zucker, 1999).
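To make the rib-length likelihood concrete, the sketch below replaces the B-spline with a single straight segment, which is an assumption made purely to keep the projection step simple. It computes the distance from each point to its closest point on the segment and scores that distance under a univariate Gaussian with mean `mu` and variance `var`. The names and the sample points are hypothetical.

```python
import math

def rib_length(point, a, b):
    """Distance from `point` to its closest point on the segment a-b
    (a straight-line stand-in for the generating curve)."""
    ax, ay = a
    bx, by = b
    px, py = point
    vx, vy = bx - ax, by - ay
    t = ((px - ax) * vx + (py - ay) * vy) / (vx * vx + vy * vy)
    t = min(1.0, max(0.0, t))  # clamp the projection to the segment ends
    return math.hypot(px - (ax + t * vx), py - (ay + t * vy))

def log_rib_likelihood(points, a, b, mu, var):
    """Sum over points of log N(d_n | mu, var), with d_n the rib length."""
    return sum(-0.5 * math.log(2 * math.pi * var)
               - (rib_length(p, a, b) - mu) ** 2 / (2 * var)
               for p in points)

axis = ((0.0, 0.0), (4.0, 0.0))
contour_dots = [(0.5, 0.02), (1.5, -0.01), (2.5, 0.03)]  # hug the curve
outline_dots = [(0.5, 1.0), (1.5, -1.05), (2.5, 0.95)]   # sit about 1 unit away

contour_as_contour = log_rib_likelihood(contour_dots, *axis, mu=0.0, var=0.01)
contour_as_shape = log_rib_likelihood(contour_dots, *axis, mu=1.0, var=0.01)
outline_as_contour = log_rib_likelihood(outline_dots, *axis, mu=0.0, var=0.01)
outline_as_shape = log_rib_likelihood(outline_dots, *axis, mu=1.0, var=0.01)
```

Dots lying on the curve are better explained by a mean rib length near zero, whereas dots lying on either side at a roughly constant distance are better explained by a positive mean rib length. Because only the distance enters the likelihood, the two sides of the curve are treated symmetrically.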

Figure 2.

The generative function of our model depicted as a field. Ribs sprout perpendicularly from the curve (red) and the length they take on is depicted by the contour plot. (A) For contours ribs are sprouted with a μ close to zero, resulting in a Gaussian fall-off along the curve. (B) For shapes ribs are sprouted with μ > 0 resulting in a band surrounding the curve.

In the Bayesian framework the definition of an object class requires a prior p(θ|β) on the parameter vector θ governing the generative function (Eq. 9). In the illustrations below we use a set of priors that create a simple but flexible object class. First, we introduce a bias on the “compactness” of the objects by adopting a prior on the squared arclength Fk1 (also referred to as elastic energy) of the generating curve k, Fk1 ~ exp(λ1), resulting in a preference for shorter curves. Similarly we introduce a prior governing the “straightness” of the objects by introducing a prior over the squared total curvature Fk2, also referred to as bending energy, of the curve k (Mumford, 1994), Fk2 ~ exp(λ2), resulting in a preference for straighter rather than curved objects (for computations of both Fk1 and Fk2 see Appendix B). We also assume a normal-inverse-chi-squared prior (conjugate of the normal distribution) over the function that generates the data points from the curves (Eq. 9), with parameters {μ0, κ0, ν0, σ0}. μ0 is the expectation of the rib length and κ0 defines how strongly we believe this; σ0 is the expectation of the variance of the rib length, and ν0 defines how strongly we believe this. Taken together these priors and their hyperparameter vector β = {λ1, λ2, μ0, κ0, ν0, σ0} induce a simple but versatile class of objects suitable for a wide range of spatially-defined perceptual grouping problems.

In summary, the BHG framework makes a few simple assumptions about the form of objects in the world, and then estimates a hierarchical decomposition of the image data into objects (or, more correctly, assigns posterior probabilities to all potential decompositions within a hierarchy). In what follows we give examples of results drawn from a variety of grouping problems, including contour integration, part decomposition and shape completion, and show how the approach explains a number of known perceptual phenomena and fits data drawn from the literature.

Results

We next show results from BHG computations for a variety of perceptual grouping problems, including contour integration, contour grouping, part decomposition and shape completion.

Contours

In what follows we show several examples of how BHG can be applied to contour grouping. In the first three examples, the problem in effect determines the set of alternative hypotheses, and we show how BHG gauges the relative degree of belief among the available grouping interpretations. In the rest of the examples, we illustrate the capabilities of BHG more fully by allowing it to generate the hypotheses themselves rather than choosing from a given set of alternatives.

To apply BHG to the problem of grouping contours, we need to define an appropriate data-generating model. To model contours, we use the generic object class defined above with the hyperparameters set in a way that expresses the idea that contours are narrow, elongated objects (μ0 = 0 and κ0 = 1 × 10⁴, σ0 = .01, and ν0 = 20) that do not extend very far or bend very much (λ1 = 0.16 and λ2 = 0.05). Finally we set the parameter of the Dirichlet process prior to α = 0.1, expressing a modest bias towards keeping objects together rather than splitting them up. Naturally, alternative settings of these parameters may be appropriate for other contexts.

Dot lattices

Ever since Wertheimer (1923), researchers have used dot lattices (Fig. 3) to study the Gestalt principle of grouping by proximity (e.g. Kubovy & Wagemans, 1995; Oyama, 1961; Zucker, Stevens, & Sander, 1983). A dot lattice is a collection of dots arranged on a grid-like structure, invariant to at least two translations a (with length |a|), and b (with length |b| ≥ |a|). These two lengths and the angle γ between them define the dot lattice and influence how the dots in the lattice are perceived to be organized. A number of proposals have been made about grouping in dot lattices, with one of the few rigorously defined mathematical models being the pure-distance law (Kubovy, Holcombe, & Wagemans, 1998; Kubovy & Wagemans, 1995), which dictates that the tendency to group one way or the other will be determined solely by the ratio |υ|/|a|. Here |υ| can be |b| or the length of the diagonals of the rectangle formed by |a| and |b|.

Figure 3.

BHG predictions for simple dot lattices. As input the model received the location of the dots as seen in the bottom two rows, where the ratio of the vertical |b| over the horizontal |a| dot distance was manipulated. The graphs on top show the log posterior ratio of seeing vertical versus horizontal contours as a function of the ratio |b|/|a|. (left plot) Object definition included error on arclength; (right plot) Object definition included a quadratic error on arclength.

In the computational experiments that follow below the angle γ was set to 90°, while also keeping the orientation of the entire lattice fixed, allowing us to restrict our attention to the two dominant percepts of rows and columns (see Fig. 3). In these lattices |a| (inter-dot distance in the horizontal direction) is fixed and |b| (inter-dot distance in the vertical direction) is varied such that |b|/|a| ranges from 1 to 2. The BHG framework allows grouping to be modeled as follows.

Following Eq. 7 the posterior distribution over the two hypotheses is then

| (10) |
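As a sketch of the normalization involved, assume that Eq. 7 has the usual form in which the posterior of a grouping hypothesis is proportional to its marginal likelihood times its prior. Writing c_v for the vertical (columns) hypothesis and c_h for the horizontal (rows) hypothesis, and suppressing the conditioning on hyperparameters, the two-hypothesis posterior would then read:

```latex
p(c_v \mid D) \;=\;
  \frac{p(D \mid c_v)\, p(c_v)}
       {p(D \mid c_v)\, p(c_v) \;+\; p(D \mid c_h)\, p(c_h)},
\qquad
p(c_h \mid D) \;=\; 1 - p(c_v \mid D).
```

Under this form the normalizing constant cancels in the posterior ratio, so the log posterior ratio depends only on the two unnormalized terms.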

The results for a 5 × 5 lattice are shown in Fig. 3A. We plotted the log of the posterior ratio, log[p(cv|D)/p(ch|D)], where cv and ch denote the vertical (columns) and horizontal (rows) grouping hypotheses, as a function of the ratio |b|/|a| (similarly to Kubovy et al., 1998). Fig. 3A–B shows how the posterior ratio decreases monotonically with the ratio |b|/|a|, consistent with human data (Kubovy & Wagemans, 1995). In other words, the posterior probability of the horizontal contours (rows) interpretation increases monotonically with |b|/|a|. The exact functional form of the relationship depends on the details of the object definition. If our object definition includes an error on arclength, this implies a linear relationship between the ratio |b|/|a| and the log posterior ratio, precisely as predicted by the pure-distance law (Fig. 3A). The examples in this paper use a quadratic penalty on arclength (see App. B) for computational convenience, in which case we get a slightly non-linear prediction, depicted in Fig. 3B.
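To make the plotted quantity concrete, the following minimal sketch converts two log marginal likelihoods into a log posterior ratio and a posterior probability. The function name `posterior_vertical` is hypothetical, and the equal default priors are a placeholder assumption; in BHG the priors would come from the Dirichlet process prior. The input values are made up for illustration.

```python
import math

def posterior_vertical(log_lik_v, log_lik_h,
                       log_prior_v=math.log(0.5), log_prior_h=math.log(0.5)):
    """Return (log posterior ratio, p(c_v | D)) for two competing hypotheses.

    Inputs are log marginal likelihoods and log priors of the vertical (v)
    and horizontal (h) hypotheses. Equal priors are an illustrative default.
    """
    log_ratio = (log_lik_v + log_prior_v) - (log_lik_h + log_prior_h)
    # The posterior is the logistic function of the log ratio; branch on the
    # sign so that exp() never overflows.
    if log_ratio >= 0:
        p_v = 1.0 / (1.0 + math.exp(-log_ratio))
    else:
        e = math.exp(log_ratio)
        p_v = e / (1.0 + e)
    return log_ratio, p_v

# Made-up log likelihoods: the horizontal hypothesis explains the data better.
log_ratio, p_v = posterior_vertical(-12.0, -10.0)
```

A negative log ratio corresponds to a preference for rows, and a log ratio of zero to complete ambiguity between rows and columns.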

Good continuation

Good continuation, the tendency for collinear or nearly collinear sets of visual items to group together, is another Gestalt principle that has been extensively studied (Feldman, 1997a, 2001; Smits & Vos, 1987; Smits, Vos, & Van Oeffelen, 1985). Here we show that BHG can make quantitatively accurate predictions about the strength of the tendency towards collinear grouping, by comparing its predictions to measured judgments of human subjects. Feldman (2001) conducted an experiment in which subjects were shown dot patterns consisting of six dots (e.g. Fig. 4A and B) and asked to indicate if they saw one or two contours, corresponding to a judgment about whether the dots were perceived as grouping into a single smooth curve or two distinct curves with an “elbow” (non-smooth junction) between them. To model these data, we ran BHG on all the original stimuli and computed the probability (Eq. 10) of the two alternative grouping hypotheses: c1 (all dots generated by one underlying contour) and c2 (dots generated by two distinct contours; see footnote 6). We then compared this posterior to pooled human judgments for all 343 dot configurations considered in Feldman (2001), and found a strong monotonic relationship between model and data (Fig. 4). Because of the binomial nature of both variables, we took the log odds ratio of each. The log odds of the human judgments and log posterior ratio of the model predictions were highly correlated (LRT = 229.3, df = 1, p < .001, R² = 0.6650; BF = 2.93775 × 10⁷⁷; see footnote 7).
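The log odds transform used for this comparison can be sketched as follows. The numbers are invented for illustration and are not data from Feldman (2001). A plain Pearson correlation stands in here for the likelihood-ratio test and Bayes factor reported above, and the clipping constant `eps` is an assumption added to keep the transform finite at proportions of 0 or 1.

```python
import math

def log_odds(p, eps=1e-6):
    """Log odds of a probability, clipped away from 0 and 1 to stay finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical values: proportion of "two contours" responses per stimulus,
# and the model posterior p(c2 | D) for the same stimuli.
human_p2 = [0.10, 0.35, 0.60, 0.90]
model_p2 = [0.05, 0.30, 0.70, 0.95]

r = pearson_r([log_odds(p) for p in model_p2],
              [log_odds(p) for p in human_p2])
```

For a two-hypothesis posterior, the log odds of p(c2|D) equals the log posterior ratio log p(c2|D) − log p(c1|D), so both axes of such a comparison are on the same unbounded scale.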

Figure 4.

BHG’s performance on data from Feldman (2001). (A,B) Sample stimuli with likely responses (stimuli not drawn to scale). (C) Log odds of the pooled subject responses plotted as a function of the log posterior ratio of the model log p(c2|D) − log p(c1|D), where each point depicts one of the 343 stimulus types shown in the experiment. Both indicate the probability of seeing two contours p(c2|D). Model responses are linearized using an inverse cumulative Gaussian.

Association field

The interaction between good continuation and proximity is often expressed as an “association field” (Field et al., 1993; Geisler et al., 2001), and is closely related to the idea of co-circular support neighborhoods (Parent & Zucker, 1989; Zucker, 1985). Given the generative model described above, the BHG framework automatically introduces a Bayesian interaction between proximity and collinearity without any special mechanism. To illustrate this effect, we manipulated the distance D and relative orientation θ of two segments each composed of five dots (Fig. 5A). To quantify the degree of grouping between the two segments, we considered two hypotheses: one c1 in which all 10 dots are generated by a single underlying contour; and another c2 in which the two segments are each generated by distinct contours. The posterior probability of the former p(c1|D), computed using Eq. 10, expresses the tendency for the two segments to be perceptually grouped together in the BHG framework (Fig. 5B). As can be seen in the figure, the larger the angle between the two segments, and/or the further they are from each other, the less probable c1 becomes. The transition between ungrouped and grouped segments can be seen in Fig. 5B as a transition from blue (low p(c1|D)) to red (high p(c1|D)).

Figure 5.

The “association field” between two line segments (each containing 5 dots) as quantified by BHG. (A) Manipulation of the distance and angle between these two line segments. The blue line depicts the one-object hypothesis, and the two green lines depict the two-objects hypothesis. (B) The association field reflecting the posterior probability p(c1|D) of the one-object hypothesis.

Contour grouping

The examples above illustrate how BHG gauges the degree to which a given configuration of dots coheres as a perceptual group. But the larger and much more difficult problem of perceptual grouping is to determine how elements should be grouped in the first place—that is, which of the vast number of qualitatively distinct ways of grouping the observed elements is the most plausible. Here we illustrate how the framework can generate grouping hypotheses by means of its hierarchical clustering machinery and estimate the posterior distribution over those hypotheses. We first ran BHG on a set of simple edge configurations (Fig. 6). One can see that the framework decomposes these into intuitive segments at each level of the hierarchy. Fig. 6A illustrates a case in which the MAP (maximum a posteriori) hypothesis is that all edges are generated by the same underlying contour, while the hypothesis one step down the hierarchy divides it into two intuitive segments. The latter hypothesis, however, has a lower posterior. Another example of an intuitive hierarchy can be seen in Fig. 6D, where the MAP estimate consists of three segments. The decomposition one level up (the 2-contour hypothesis) joins the two abutting segments, but has a lower posterior. These simple cases show that the model can build up an intuitive space of grouping hypotheses and assign them posterior probabilities.

Figure 6.

BHG results for simple dot contours. The first column shows the input images and their MAP segmentation. Input dots are numbered from left to right. The second column shows the tree decomposition as computed by BHG. The third column shows the posterior probability distribution over all tree-consistent decompositions (i.e. grouping hypotheses).

Fig. 7 shows a set of more challenging cases in which dots are arranged in a variety of configurations in the plane. The figure shows the MAP interpretation drawn from BHG (color coded), meaning the single interpretation assigned highest posterior. In most cases, the decomposition is highly intuitive. In Fig. 7B the long vertical segment is broken into two parts. Although this might seem less intuitive, it follows from the assumptions embodied in the model as specified above. Specifically, the framework includes a penalty for the length of the curve in the form (λ1) which is applied to the entire curve. Exactly how dot spacing influences contour grouping is part of a more complex question of how spacing and regularity of spacing influence grouping (e.g. Feldman, 1997a; Geisler et al., 2001).

Figure 7.

The MAP grouping hypothesis for more complex dot configurations. Distinct colors indicate distinct components in the MAP. (B) An example illustrating some shortcomings of the model. The preference for shorter segments causes some apparently coherent segments to be oversegmented.

Parts of objects

The decomposition of whole objects into perceptually distinct parts is an essential aspect of shape interpretation with an extensive literature (see e.g. Biederman, 1987; Cohen & Singh, 2006; De Winter & Wagemans, 2006; Hoffman & Richards, 1984; Palmer, 1977; Singh & Hoffman, 2001). Part decomposition is known to be influenced by a wide variety of specialized factors and rules, such as the minima rule (Hoffman & Richards, 1984), the short-cut rule (Singh, Seyranian, & Hoffman, 1999), and limbs and necks (Siddiqi & Kimia, 1995). But each of these rules has known exceptions and idiosyncrasies (see De Winter and Wagemans, 2006; Singh and Hoffman, 2001), and no unifying account is known. Part decomposition is conventionally treated as a completely separate problem from the grouping problems considered above. But as we now show, it can be incorporated elegantly into the BHG framework by treating it as a kind of grouping problem, in which we aim to group the elements that make up the object’s boundary into internally coherent components. When treated this way, part decomposition seems to follow the same basic principles—e.g. evaluation of the strength of alternate hypotheses via Bayes’ rule—as do other kinds of perceptual grouping.

Feldman and Singh (2006) proposed an approach to shape representation in which shape parts are understood as stochastic mixture components that generate the observed shape. Their approach is based on the idea of the shape skeleton, a probabilistic generalization of Blum’s (1967, 1973) medial axis representation. In this framework, the shape skeleton is a hierarchically organized set of axes, each of which generates contour elements in a locally symmetric fashion laterally from both sides. As mentioned above, the random lateral vector is referred to as a rib (because it sprouts sideways from a skeleton) and the distance between the contour element and the axis is called the rib length. In the Feldman and Singh (2006) framework, the goal is to estimate the skeleton that, given an observed set of contour elements, is most likely to have generated the observed elements (the maximum a posteriori (MAP) skeleton). Critically, each distinct axial component of the MAP skeleton “explains” or “owns” (is interpreted as having generated) distinct contour elements, so a skeleton estimate entails a decomposition of the shape into parts. In this sense, part decomposition via skeleton estimation is a special case of mixture estimation, in which the shape itself is understood as “a mixture of parts.” Skeleton-based part decomposition seems to correspond closely to intuitive part decompositions for many critical cases (Feldman et al., 2013). But the original approach (Feldman & Singh, 2006) lacked a principled and tractable estimation procedure. Here we show how this approach to part decomposition can be incorporated into the BHG mixture estimation framework, yielding an effective hierarchical decomposition of shapes into parts.

To apply BHG, we begin first by sampling the shape’s boundary to create a discrete approximation consisting of a set of edges D = {x1 … xN}. Figure-ground assignment is assumed known (that is, we know which side of the boundary is the interior; the skeleton is assumed to explain the shape “from the inside”). Next, we choose hyperparameters that express our generative model of a part (i.e. reflect our assumptions about what a part looks like). The main difference compared to contours is that we now do not want the mean rib length to be zero. That is, in the case of parts we assume that the mean rib length can be assigned freely with a slight bias towards shorter mean rib lengths to incorporate the idea that parts are more likely to be narrow (μ0 = 0; κ0 = .001). The remaining generative parameters were set to reflect the assumption that parts should preferably have smooth boundaries (σ0 = .001; ν0 = 10). The hyperparameters biasing the shape of the axes themselves were set to identical values as in the contour integration case (λ1 = .16; λ2 = .05). Finally the mixing hyperparameter was set to α = .001.
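For reference, the two hyperparameter settings reported in the text (contours and parts) can be collected side by side. The container below is a hypothetical convenience, not part of any published BHG implementation, and the field comments paraphrase the roles described in the text rather than give formal definitions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ObjectClassHyperparameters:
    """Hypothetical container for the hyperparameters quoted in the text."""
    mu0: float      # prior mean of the mean rib length
    kappa0: float   # strength of the bias towards mu0
    sigma0: float   # prior scale for the rib-length variance
    nu0: float      # strength of the prior on the rib-length variance
    lambda1: float  # axis shape: penalizes long axes
    lambda2: float  # axis shape: penalizes bending
    alpha: float    # Dirichlet process (mixing) parameter

# Contour grouping: narrow, elongated objects.
CONTOUR = ObjectClassHyperparameters(
    mu0=0.0, kappa0=1e4, sigma0=0.01, nu0=20.0,
    lambda1=0.16, lambda2=0.05, alpha=0.1)

# Part decomposition: mean rib length nearly free, smooth boundaries.
PART = ObjectClassHyperparameters(
    mu0=0.0, kappa0=0.001, sigma0=0.001, nu0=10.0,
    lambda1=0.16, lambda2=0.05, alpha=0.001)
```

Seen this way, the two applications differ mainly in κ0, which changes by seven orders of magnitude: a large value pins the mean rib length near zero for contours, while a small value leaves it almost unconstrained for parts.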

Fig. 8 shows an example of BHG applied to a multipart shape. The model finds the most probable (MAP) part decomposition (Fig. 8C) and the entire structural hierarchy (Fig. 8B). In other words BHG finds what we argue is the “perceptually natural description” of the shape at different levels of the structural hierarchy (Fig. 8D and E). The MAP part decomposition for several shapes of increasing number of parts is shown in Fig. 9. Note that each axis represents a part.

Figure 8.

Shape decomposition in BHG. (A) A multipart shape, and (B) resulting tree structure depicted as a dendrogram. Colors indicate MAP decomposition, corresponding to the boundary labeling shown in (C). D and E show (lower probability) decompositions at other levels of the hierarchy.

Figure 9.

MAP skeleton as computed by the BHG for shapes of increasing complexity. The axis depicts the expected complexity, DL (Eq. 11), of each of the shapes based on the entire tree decomposition computed.

Fig. 10 shows some sample results. BHG correctly handles difficult cases such as a leaf on a stem (Fig. 10A) and dumbbells (Fig. 10B) while still maintaining the hierarchical structure of the shapes (Siddiqi et al., 1999). Fig. 10D shows a typical animal. In particular, Fig. 10C shows the algorithm’s robustness to contour noise. This shape, the “prickly pear” from Richards, Dawson, and Whittington (1986), is especially interesting because a qualitatively different type of noise is added to each part of the shape, which cannot be correctly handled by standard scale-space techniques. Furthermore, a desirable side-effect of our approach is the absence of ligature regions. A ligature is the “glue” that binds two or more parts together (e.g. connecting the leaf to its stem in Fig. 10A). Such regions have been identified (August, Siddiqi, & Zucker, 1999) as the cause of internal instabilities in the conventional medial axis transform (Blum, 1967), diminishing its usefulness for object recognition. Past medial axis models had to cope with this problem by explicitly identifying and deleting such regions (e.g. August et al., 1999). In our approach, by contrast, they do not appear at all in the BHG part decompositions, so they do not have to be dealt with separately.

Figure 10.

Examples of MAP tree-slices for: (A) leaf on a branch, (B) dumbbells, (C) “prickly pear” from Richards, Dawson, and Whittington (1986), and (D) Donkey. (Example D has higher dot density because the original image was larger.)

Shape complexity

As a side effect, the BHG model also provides a natural measure of shape complexity, a factor that often arises in experiments but which lacks a principled definition. For example, we have found that shape complexity diminishes the detectability of shapes in noise (Wilder, Singh, & Feldman, under review). Rissanen (1989) showed that the negative of the logarithm of probability −log p, called the Description Length (DL), provides a good measure of complexity because it expresses the length of the description in an optimal coding language (see also Feldman & Singh, 2006). To compute the DL of a shape we first integrate over the entire grouping hypothesis space C = {c1 … cJ}:

| (11) |
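A form of this marginalization that is consistent with the surrounding definitions is sketched below. It assumes that the likelihood of each grouping hypothesis depends on the object hyperparameters β and that its prior depends on the Dirichlet process parameter α:

```latex
p(D \mid \alpha, \beta) \;=\; \sum_{j=1}^{J} p(D \mid c_j, \beta)\, p(c_j \mid \alpha),
\qquad
\mathrm{DL} \;=\; -\log p(D \mid \alpha, \beta).
```

Because the hypothesis space is discrete, the integration over C reduces to a sum over the J hypotheses.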

The DL is then defined as DL = −log p(D|α,β). Fig. 9 shows how, as we make a shape perceptually more complex (here by increasing the number of parts), the DL increases monotonically. This metric is universal to our framework and can be used to express the complexity of any image given any object definition. In general, the DL expresses the complexity of any stimulus given the generative assumptions about the object classes that are assumed to have generated it.
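Numerically, a DL of this kind is best computed in the log domain, since the marginal likelihoods involved are typically very small. The sketch below assumes that per-hypothesis log likelihoods and log priors are already available. The function names are hypothetical, the input values are made up, and natural logarithms are used, so the result is in nats.

```python
import math

def log_sum_exp(values):
    """Stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def description_length(log_likelihoods, log_priors):
    """DL = -log sum_j p(D | c_j) p(c_j), computed from log-domain inputs."""
    joint = [ll + lp for ll, lp in zip(log_likelihoods, log_priors)]
    return -log_sum_exp(joint)

def hypothesis_posteriors(log_likelihoods, log_priors):
    """Posterior probability of each hypothesis, sharing the same normalizer."""
    joint = [ll + lp for ll, lp in zip(log_likelihoods, log_priors)]
    log_z = log_sum_exp(joint)
    return [math.exp(j - log_z) for j in joint]

# Two made-up hypotheses with likelihoods 0.2 and 0.1 and equal priors.
log_liks = [math.log(0.2), math.log(0.1)]
log_priors = [math.log(0.5), math.log(0.5)]
dl = description_length(log_liks, log_priors)            # -log(0.15)
posteriors = hypothesis_posteriors(log_liks, log_priors)
```

The same normalizer serves both purposes: its negative logarithm is the DL, and dividing each joint term by it yields the posterior over hypotheses.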

Part salience

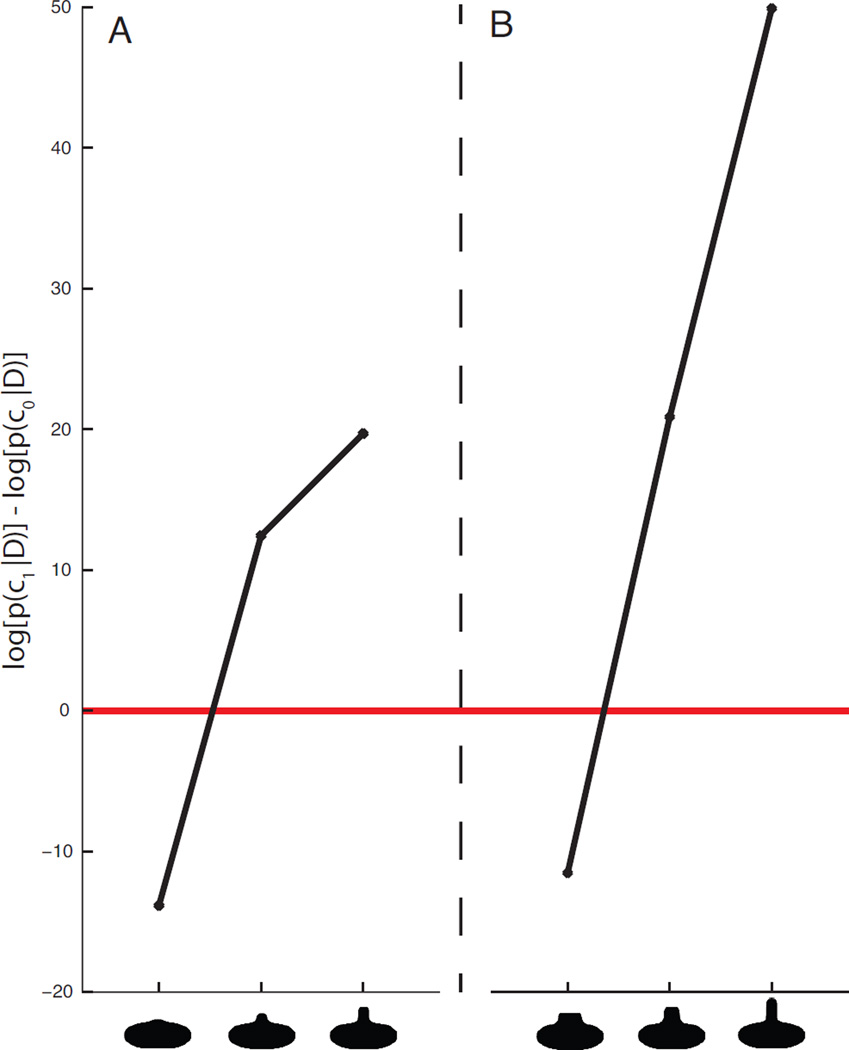

Hoffman and Singh (1997) proposed that representation of object parts is graded, in that parts can vary in the degree to which they appear to be distinct perceptual units within the shape. This variable, called part salience, is influenced by a number of geometric factors, including the sharpness of the region’s boundaries, its degree of protrusion (defined as the ratio of the part’s perimeter to the length of the part cut), and its size relative to the entire shape. Within BHG we can define part salience in a more principled way by comparing two grouping hypotheses: the hypothesis c1 in which the part was last present within the computed hierarchy, and the hypothesis c0 one step up in the hierarchy, in which the part ceases to exist as a distinct entity. The higher the posterior probability of c1 relative to that of c0, the stronger the part’s role in the inferred explanation of the shape. In the examples that follow we defined part salience as the log ratio between the posterior probabilities of these hypotheses, which captures the weight of evidence in favor of the part. Fig. 11 shows some sample results. Note that the log posterior ratio increases with both part length (Fig. 11A) and protrusion (Fig. 11B), even though neither is an overt factor in its computation. The implication is that the known influence of both factors is in fact a side-effect of the unifying Bayesian account.
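Written out, this definition of part salience amounts to the following, where the symbol S is introduced here only for illustration:

```latex
S \;=\; \log \frac{p(c_1 \mid D)}{p(c_0 \mid D)}
  \;=\; \log p(c_1 \mid D) \;-\; \log p(c_0 \mid D).
```

Positive values of S indicate that the hypothesis containing the part as a distinct component is more probable than the hypothesis in which it has been merged.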

Figure 11.

Log posterior ratio between tree-consistent 1- and 2-component hypotheses, as a function of (A) part length and (B) part protrusion.

To demonstrate quantitatively how BHG captures part salience, we compared our model’s performance to published human-subject data on part identification. Cohen and Singh (2007) tested the contribution of various geometric factors to part salience. Here we focus on their experiment concerning part protrusion. In this experiment, subjects were shown a randomly generated shape on each trial, with one of 12 levels of part protrusion (3 [base widths] × 4 [part lengths]), after which they were shown a test part taken from this shape (see Fig. 12A). Subjects were asked to indicate in which of four display quadrants this part was present in the original, complete shape. Cohen and Singh (2007) found that subjects’ accuracy in this task increased monotonically with increasing protrusion of the test part. For each of the 12 levels of part protrusion, subjects were shown 50 different randomly generated shapes. In order to compare our model’s performance to subjects’ accuracy, we ran BHG on 20 randomly selected shapes for each level of part protrusion. We then looked for the presence of the test part in the hierarchy generated by BHG and computed the log posterior ratio between the hypotheses c1 and c0 described above (Eq. 10). Because subject responses were binomial, we computed the log odds ratio. Fig. 12 shows that the log odds of the subjects’ accuracy on the task increases monotonically with the log posterior ratio of the test part. The log posterior ratio of the test part was found to be a good predictor of the log odds of the subjects’ accuracy at part identification (LRT = 50.594, df = 1, R² = 0.4109; BF = 8.0271 × 10¹⁴; see footnote 7).

Figure 12.

(A) Representative stimuli used in the Cohen and Singh (2007) experiment relating part-protrusion to part saliency. As part protrusion increases, so does subjective part saliency. (Parts are indicated by a red part cut.) (B) Log odds of subject accuracy as a function of log posterior ratio log p(c1|D) − log p(c0|D) as computed by the model. (Error bars depict the 95% confidence interval across subjects. The red curve depicts the linear regression.)

Shape completion

Shape completion refers to the visual interpolation of contour segments that are separated by gaps, often caused in natural images by occlusion. In dealing with partially occluded object boundaries, the visual system needs to solve two problems (Singh & Fulvio, 2007; Takeichi, Nakazawa, Murakami, & Shimojo, 1995). First, it needs to determine if two contour elements should be grouped together into the representation of a single, extended contour (the grouping problem). Second, it has to determine what the shape of the contour within the gap region should be (the shape problem). Here we focus primarily on the shape problem, and show how our model can make perceptually natural predictions about the missing shape of a partly occluded contour.

Local and global influences

Most previous models of completion base their predictions on purely local contour information, namely the position and orientation of the contour at the point where it disappears behind the occluder (called the inducer; Ben-Yosef & Ben-Shahar, 2012; Fantoni & Gerbino, 2003; Williams & Jacobs, 1997). Such models cannot explain the influence of non-local factors such as global symmetry (Sekuler, Palmer, & Flynn, 1994; van Lier et al., 1995) and axial structure (Fulvio & Singh, 2006). Non-local aspects of shape are, however, notoriously difficult to capture mathematically. But the BHG framework allows shape to be represented at any and all hierarchical levels, allowing it to make predictions that combine local and global factors in a comprehensive fashion.

In the BHG framework, completion is based on the posterior predictive distribution over missing contour elements, which assigns probability to potential completions conditioned on the estimated model. Assuming that figure-ground segmentation has already been established, we first compute the hierarchical representation of the occluded shape (given the object definitions set up in the context of part decomposition above) with the missing boundary segment, then we compute the posterior predictive (Eq. 8) based on the best grouping hypothesis, i.e. MAP tree-slice. This induces a probability distribution over the field of potential positions of contour elements in the occluded area as influenced by the global shape. This field should thus be understood as a global shape prior over possible completions, which could be used by future models in combination with some good-continuation constraint based on the local inducers to infer a particular completion. For example, consider a simple case in which a circle is occluded by a square (Fig. 13A). The model predicts that the interpolated contour must lie along a circular path with the same curvature as the rest of the circle. The model makes similarly intuitive predictions even with multi-part shapes (Fig. 13B). The model can also handle cases where grouping is ambiguous (the grouping problem mentioned above), i.e. in which the projection of the partly occluded object is fragmented in the image by the occluder (Fig. 13C). The model can even make a prediction in cases where there is not enough information for a local model to specify a boundary (Fig. 13D). All these completions follow directly from the framework, with no fundamentally new mechanisms. The completion problem, in this framework, is just an aspect of the effective quantification of the “goodness” or Prägnanz of grouping hypotheses, operationalized via the Bayesian posterior.

Figure 13.

Completion predictions from the posterior predictive distribution, based on the MAP skeleton (as computed by BHG).

Dissociating global and local predictions

Some of the model predictions mentioned above could also have been made by a purely local model. However, global and local models often predict vastly different shape completions, and the strongest evidence for global influences rests on such cases. For example Sekuler et al. (1994) found that certain symmetries in the completed shape facilitated shape completion. More generally, van Lier et al. (1994, 1995) found that the regularity of the completed shape, as formulated in their regularity-based framework, likewise influences completion. The shapes in Fig. 14, containing so-called fuzzy regularities, further illustrate the necessity for global accounts (van Lier, 1999). As we keep the inducer orientation and position constant we can increase the complexity of a tubular shape’s contour (Fig. 14A and C). A model based merely on local inducers would expect the completed contour to look exactly the same in both cases, but to most observers it does not. Because BHG takes the global shape into account, it predicts a more uncertain (i.e. noisy) completion when the global shape is noisier (Fig. 14B, D, and E). The capacity of the BHG framework to precisely quantify such influences raises the possibility that, in future work, we might be able to fully disentangle local and global influences and determine how they combine to determine visual shape completion.

Figure 14.

A simple tubular shape was generated with different magnitudes (s.d.) of contour noise. Note that the local inducers are identical in both input images (A and C). For noiseless contours (A) the posterior predictive distribution over completions has a narrow noise distribution (B), while for noisy contours (C) the distribution has more variance (D). Panel (E) shows the relationship between the noise on the contour and the completion uncertainty as reflected by the posterior predictive distribution.

Discussion

In this paper we proposed a principled and mathematically coherent framework for perceptual grouping, based on a central theoretical construct (mixture estimation) implemented in a unified algorithmic framework (Bayesian hierarchical clustering). The main idea, building on proposals from our previous papers (Feldman et al., 2014; Froyen et al., under review), is to recast the problem of perceptual grouping as a mixture estimation problem. We model the image as a mixture (in the technical sense) of objects, and then introduce effective computational techniques for estimating the mixture—or, in other words, decomposing the image into objects. Above, we illustrated the framework for several key problems in perceptual grouping: contour integration, part decomposition and shape completion. In this section we elaborate on several properties of the framework, and point out some of its advantages over competing approaches.

Object classes

The flexibility of the BHG framework lies in the freedom to define object classes. In the literature, the term “object” encompasses a wide variety of image structures, simply referring to whatever units result from grouping operations (Feldman, 2003). In our framework, objects are re-conceived generatively as stochastic image-generating processes, which produce image elements under a set of probabilistic assumptions (Feldman & Singh, 2006). Formally, object classes are defined by two components: the generative (likelihood) function, p(D|θ), which defines how image elements are generated for a given class, and the prior over parameters, p(θ|β), which modulates the values the parameters themselves are likely to take on. Taken together, these two components define what objects in the class tend to look like.

In all the applications demonstrated above, we assumed a generic object class appropriate for spatial grouping problems, which generalizes the skeletal generating function proposed in Feldman and Singh (2006). Objects drawn from this class contain elements generated at stochastically chosen distances from an underlying generating curve, whose shape is itself chosen stochastically from a curve class. (The curve may have length zero, in which case the generating curve is a point, and the resulting shape approximately circular.) Depending on the parameters, this object class can generate contour fragments, dot clouds, or shape boundaries. This broad class unifies a number of types of perceptual units, such as contours, dot clusters, and shapes, that are traditionally treated as distinct in the grouping literature, but which we regard as a connected family. For example, in our framework contours are in effect elongated shapes with short ribs, dot clusters are shapes with image elements generated in their interiors, and so forth. Integrating these object classes under a common umbrella makes it possible to treat the corresponding grouping rules, contour integration, dot clustering, and the others mentioned above, as special cases of a common mechanism.

One can, of course, extend the current object definition into 3D (El-Gaaly et al., 2015), or imagine alternative object definitions, such as Gaussian objects (Froyen et al., under review; Juni, Singh, & Maloney, 2010). Furthermore, object classes can be defined in non-spatial domains, using such features as color, texture, and contrast (Blaser, Pylyshyn, & Holcombe, 2000). As long as the object classes can be expressed in the appropriate technical form, the Bayesian grouping machinery can be pressed into service.

Hierarchical structure

BHG constructs a hierarchical representation of the image elements based on the object definitions and assumption set. Hierarchical approaches to perceptual grouping have been very influential (e.g. Baylis & Driver, 1993; Lee, 2003; Palmer, 1977; Pomerantz et al., 1977). At the coarsest level of representation our approach represents all the image elements as one object. For shapes this is similar to the notion of a model axis by Marr and Nishihara (1978), providing only coarse information, such as size and orientation, about the entire shape (Fig. 15A). Lower down in the hierarchy, individual axes correspond to something more like classical shape primitives such as geons (Biederman, 1987) or generalized cones (Binford, 1971; Marr, 1982) (Fig. 15A–C). Note though that our axial representation not only describes the shape of each part but also entails a statistical characterization of the image data that supports the description. This is what allows descriptions of missing parts (Fig. 13). Even further down the hierarchy, more and more detailed aspects of image structure are represented (Fig. 15B–C).

Figure 15.

Prediction fields for the shape in Fig. 8 for three different levels of the hierarchy. In order to illustrate how underlying objects also represent the statistical information about the image elements they explain, the prediction/completion field was computed for each object separately without normalization, so that the highest point for each object is equalized.

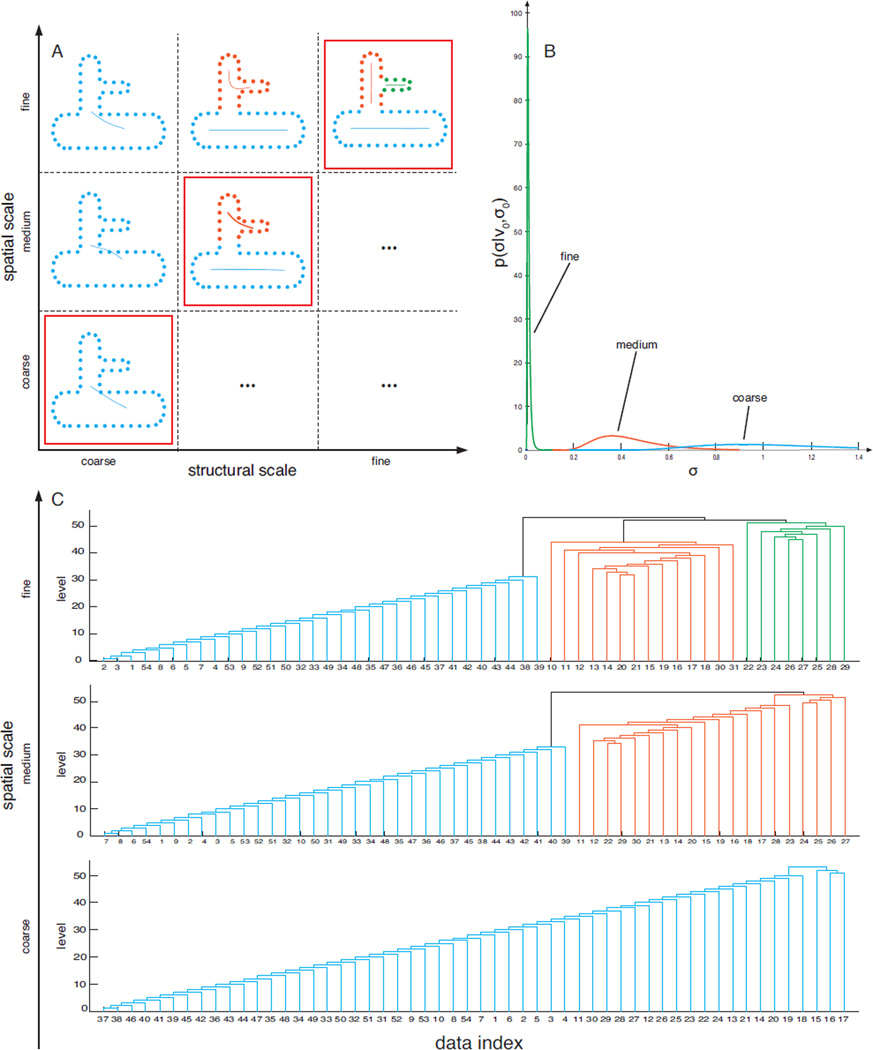

Structural versus spatial scale

Two different notions of scale can be distinguished in the literature. Most commonly, scale is defined in terms of spatial frequency, invoking a hierarchy of operators or receptive fields of different sizes, with broader receptive fields integrating image data from larger regions, and finer receptive fields integrating smaller regions. This notion of spatial scale has been incorporated into a number of models of perceptual grouping, including figure/ground (e.g. Jehee, Lamme, & Roelfsema, 2007; Klymenko & Weisstein, 1986) and shape representation (e.g. Burbeck & Pizer, 1994). Pure spatial scale effects have, however, been shown not to account for essential aspects of grouping (Field et al., 1993; Jáñez, 1984). In contrast, any hierarchical representation of image structure implicitly defines a notion of structural scale, referring to the position of a particular structural unit along the hierarchy (Feldman, 2003; Marr & Nishihara, 1978; Palmer, 1977; Siddiqi et al., 1999). Typically structural scale assumes a tree structure, with nodes at various levels describing various aggregations of image units, the top node incorporating the entire image, and leaf nodes referring to individual image elements.

These two notions of scale have not generally been explicitly distinguished in the literature, but we would argue that they are distinct. Elements at a common level of structural scale need not, in fact, have similar spatial frequency content, while elements with similar spatial scales may well occupy distinct levels of the organizational hierarchy. The BHG framework incorporates both notions of scale in a manner that keeps their distinct contributions clear (Fig. 16A). In BHG, spatial scale is modulated in effect by the prior over the variance of the rib length, p(σ|σ0, ν0) (also see Appendix B). Large values of σ0 make object hypotheses more tolerant to variance in the spatial location of image elements. Fig. 16B illustrates the effect of changing this prior on the resulting interpretation. Structural scale, in contrast, is defined by the level in the estimated hierarchy. Fig. 16C illustrates how the hierarchy changes as spatial scale is modified. At fine spatial scales, the inferred hierarchy includes three hypotheses at distinct structural scales, with the three-part hypothesis being the most probable (Fig. 16A). But at a coarser spatial scale a different hierarchical interpretation is drawn, with the two-part hypothesis the most probable and the three-part hypothesis, as found at the finer spatial scale, completely absent. This example illustrates how structural and spatial scale are related but distinct, describing different aspects of the grouping mechanism.

Figure 16.

Relating structural and spatial scale in our model by means of the shape in Fig. 8. A. Relationship between structural and spatial scale, depicting their orthogonality. The red squares depict the most probable structural grouping hypothesis for each spatial scale. B. Priors over the variance of the riblength, σ, for each spatial scale. C. Hierarchical structure as computed by our framework, depicted as a dendrogram for each spatial scale. The most probable hypothesis is shown in color.

Selective organization

Selective organization refers to the fact that the visual system overtly represents some subsets of image elements but not others (Palmer, 1977). The way BHG considers grouping hypotheses and builds hierarchical representations realizes the notion of selective organization in a coherent way. In our model only N grouping hypotheses are considered, whereas the total number of possible grouping hypotheses c is exponential in N. Grouping hypotheses selected at one level of the hierarchy depend directly on those chosen at lower levels (grouping hypotheses are “tree-consistent”), leading to a clean and consistent hierarchical structure. Inevitably, this means that numerous grouping hypotheses are not represented at all in the hierarchy. Such selective organization is empirically supported by the observation that object parts not represented in the hierarchy are more difficult to retrieve (Cohen & Singh, 2007; Palmer, 1977).
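To give a rough sense of the gap between the hypotheses represented in the hierarchy and the full space of groupings, the sketch below compares N with the number of set partitions of N elements (the Bell number). The function name `bell_numbers` is ours and purely illustrative; the count of N hypotheses for the hierarchy is simply taken from the text above, and the code is not part of the BHG implementation.

```python
def bell_numbers(n_max):
    """Return [B(1), ..., B(n_max)], where B(n) counts the partitions of n elements.

    Uses the Bell triangle: each row starts with the last entry of the
    previous row, and each further entry adds the entry above-left.
    """
    row = [1]      # first row of the Bell triangle
    bells = [1]    # B(1) = 1
    for _ in range(n_max - 1):
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[-1])
    return bells


# Compare the number of hypotheses kept in the hierarchy (N, as stated in the
# text) with the number of all possible partitions of N image elements.
for n, b in enumerate(bell_numbers(10), start=1):
    print(f"N = {n:2d}   hierarchy: {n:2d}   all partitions: {b}")
```

Even for ten image elements the full partition space already contains over a hundred thousand candidates, which is why most groupings cannot be explicitly represented.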

Advantages of the Bayesian framework

The Bayesian approach used here has several advantages over traditional approaches to perceptual grouping. First, it allows us to assign different degrees of belief, i.e. probabilities, to different grouping hypotheses, capturing the often graded responses found in subject data. Previous non-probabilistic models often only converge on one particular grouping hypothesis (e.g. Williams & D. W. Jacobs, 1997), or are unable to assign graded degrees of belief to different grouping hypotheses at all (e.g. Compton & Logan, 1993). Second, Bayesian inference makes optimal use of available information modulo the assumptions adopted by the observer (Jaynes, 2003). In the context of perceptual grouping, this means that a Bayesian framework provides the optimal way of grouping the image, given both the image data and the particular set of assumptions about object classes adopted by the observer. The posterior over grouping hypotheses, in this sense, represents the most “reasonable” way of grouping the image, or more accurately, the most reasonable way of assigning degrees of belief to distinct ways of grouping the image.
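As a minimal sketch of what assigning graded degrees of belief involves, the code below normalizes a handful of candidate grouping hypotheses into a posterior distribution. The log-likelihood and prior values are invented for illustration, and the function name `posterior_over_hypotheses` is hypothetical; only the normalization step (Bayes' rule computed in log space) is being shown, not how BHG obtains these quantities.

```python
import math


def posterior_over_hypotheses(log_likelihoods, log_priors):
    """Normalize log p(D|H) + log p(H) over a finite set of hypotheses H."""
    log_joint = [ll + lp for ll, lp in zip(log_likelihoods, log_priors)]
    # Log-sum-exp: subtract the maximum before exponentiating to avoid underflow.
    m = max(log_joint)
    log_evidence = m + math.log(sum(math.exp(v - m) for v in log_joint))
    return [math.exp(v - log_evidence) for v in log_joint]


# Hypothetical values for three candidate groupings (e.g. one, two, or three parts).
example_log_likelihoods = [-120.4, -118.9, -119.6]
example_log_priors = [math.log(0.5), math.log(0.3), math.log(0.2)]
for k, p in enumerate(posterior_over_hypotheses(example_log_likelihoods,
                                                example_log_priors), start=1):
    print(f"hypothesis {k}: posterior = {p:.3f}")
```

The output is a graded distribution rather than a single winning interpretation, which is the property contrasted above with non-probabilistic models.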

Conclusion

In this article we have presented a novel, principled, and mathematically coherent framework for understanding perceptual grouping, called Bayesian Hierarchical Grouping (BHG). BHG defines the image as a mixture of objects, and thus reformulates the problem of perceptual grouping as a mixture estimation problem. In the BHG framework, perceptual grouping means estimating how the image is most plausibly decomposed into distinct stochastic image sources—objects—and deciding which image elements belong to which sources, that is, which elements were generated by which object. The generality of the framework stems from the freedom it allows in how objects are defined. In the formulation discussed above, we used a single simple but flexible object definition, allowing us to apply BHG to such diverse problems as contour integration, dot clustering, part-decomposition, and shape completion. But the framework can easily be extended to other problems and contexts simply by employing alternative object class definitions.

BHG has a number of advantages over conventional approaches to grouping. First, its generality allows a wide range of grouping problems to be handled in a unified way. Second, as illustrated above, with only a small number of parameters it can explain a wide array of human grouping data. Third, in contrast to classical approaches, it allows grouping interpretations to be assigned degrees of belief, helping to explain a range of graded percepts and ambiguities, some of which were exhibited above. Fourth, it provides hierarchically structured interpretations, helping to explain human grouping percepts that arise at a variety of spatial and structural scales. Finally, in keeping with its Bayesian roots, the framework assigns degrees of belief to grouping interpretations in a rational and principled way.

Naturally, the BHG model framework has some limitations. One broad limitation is the general assumption that image data is independent and identically distributed (i.i.d.) conditioned on the mixture model, meaning essentially that image elements are randomly drawn from the objects present in the scene. Such an assumption is nearly universal in statistical inference, but certainly limits the generality of the approach, and may be especially inappropriate in dynamic situations. We note a few additional limitations more specific to our framework and algorithm.

First, in its current implementation the framework cannot easily be applied to natural images. Standard front-ends for natural images (e.g. edge detectors or V1-like operator banks) yield extremely noisy outputs, and natural scenes contain object classes for which we have not yet developed suitable generative models. (Algorithms for perceptual grouping are routinely applied to real images in the computer vision literature, but such schemes are notoriously limited compared to human observers, and are generally unable to handle the subtle Gestalt grouping phenomena discussed here.) Extending our framework so that it can be applied to natural images is a primary goal of our future research.

Second, broad though our framework may be, it is limited to perceptual grouping, and does not solve other fundamental interrelated problems such as 3D inference. Extending our framework to 3D is actually conceptually simple, albeit computationally complex, in that it simply requires that the generative model be generalized to 3D, while the estimation and hypothesis comparison mechanisms would remain essentially the same. Indeed in recent work (El-Gaaly et al., 2015) we have taken steps towards such an extension, allowing us to decompose 3D objects into parts.

Finally, it is unclear how exactly the framework could be carried out by neural hardware. However, Beck, Heller, and Pouget (2012) have recently derived neurally plausible implementations of variational inference (a technique for providing Bayesian estimates of mixtures; Attias, 2000) within the context of Probabilistic Population Codes. Because such techniques allow mixture decomposition by neural networks, they suggest a promising route towards a plausible neural implementation of the theoretical model we have laid out here.

We end with a comment on the “big picture” contribution of this paper. The perceptual grouping literature contains a wealth of narrow grouping principles covering specific grouping situations. Some of these, such as proximity (Kubovy et al., 1998; Kubovy & Wagemans, 1995) and good continuation (e.g. Feldman, 2001; Field et al., 1993; Parent & Zucker, 1989; Singh & Fulvio, 2005), have been given mathematically concrete and empirically persuasive accounts. But (with a few intriguing exceptions such as Geisler & Super, 2000; Ommer & Buhmann, 2003; Song & Hall, 2008; Zhu, 1999) similarly satisfying accounts of the broader overarching principles of grouping (if indeed any exist) remain comparatively vague. Terms such as Prägnanz, “coherence,” “simplicity,” “configural goodness,” and “meaningfulness” are repeatedly invoked without concrete definitions, often accompanied by ritual protestations about the difficulty of defining them. In this paper we have taken a step towards solving this problem by introducing an approach to perceptual grouping that is at once principled, general, and mathematically concrete. In BHG, the posterior distribution quantifies the degree to which each grouping interpretation “makes sense,” that is, the degree to which it is both plausible a priori and fits the image data. The data reviewed above suggest that this formulation effectively quantifies the degree to which grouping interpretations make sense to the human visual system. This represents an important advance towards translating the insights of the Gestalt psychologists, still routinely cited a century after they were first introduced (Wagemans, Elder, et al., 2012; Wagemans, Feldman, et al., 2012), into rigorous modern terms.

Acknowledgments

This research was supported by NIH EY021494 to JF and MS, NSF DGE 0549115 (Rutgers IGERT in Perceptual Science), and a Fulbright fellowship to VF. We are grateful to Steve Zucker, Melchi Michel, John Wilder, Brian McMahan, and the members of the Feldman and Singh lab for their many helpful comments.

Appendix A

Delaunay-consistent pairs

Bayesian Hierarchical Clustering (BHC) is a pairwise clustering method in which, at each iteration, merges between all possible pairs of trees Ti and Tj are considered. Given a dataset D = {x1 … xN}, the algorithm is initialized with N trees Ti, each containing one data point, Di = {xi}. As N increases, the number of pairs to be checked during this first iteration increases quadratically with N; more specifically, #pairs = (N² − N)/2 (Fig. A1), resulting in a complexity of 𝒪(N²). In each of the following iterations the hypothesis for merging only needs to be computed for pairs between existing trees and newly merged trees from iteration t − 1. However, computing the hypothesis for merging, p(Dk|ℋ0), for each possible pair is computationally expensive. Therefore, in our implementation of BHC, we limit the pairs checked to a local neighbourhood as defined by the Delaunay triangulation. In other words, a data point xn is only considered for merging with data point xm if it is a neighbour of that point. To initialize the BHC algorithm we compute the Delaunay triangulation over the dataset D. From this we compute, for each data point xn, a binary neighbourhood vector bn of length N indicating which other data points xn shares a Delaunay edge with. Together these vectors form a sparse symmetric neighbourhood matrix. In contrast, if all pairs were considered this matrix would consist of all ones except for zeros along the diagonal. Using this neighbourhood matrix we can then define which pairs are to be checked at the first iteration. The number of pairs checked at this initial stage is considerably lower than when all pairs are considered. Specifically, when we simulated the number of Delaunay-consistent pairs checked at this first iteration on a randomly scattered dataset, the number of pairs increased linearly with N (Fig. A1). Combined with the lower-bound complexity of Delaunay triangulation, 𝒪(N log N), this results in a lower-bound complexity of 𝒪(N log N). In all of the following iterations the neighbourhood matrix is updated to reflect how merging trees also causes neighbourhoods to merge. To implement this we created a second matrix, D, called the token-to-cluster matrix, of size N × [(N − 1) + N]. The rows index the data points and the columns the clusters they can belong to: N columns for the singleton clusters in which the points start, and N − 1 for the clusters created by merges over the course of the iterations. Given this matrix and the neighbourhood matrix we can then define which pairs to test in each of the iterations following the initial one. Note that when all Delaunay-consistent pairs have been exhausted, our implementation reverts to testing all pairwise comparisons.
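The neighbourhood bookkeeping described above can be sketched as follows. This is a simplified illustration under several assumptions, not our actual implementation: the Delaunay edges are taken as a given input (the edge list below is a made-up example rather than a computed triangulation), neighbourhoods are stored as Python sets instead of the binary neighbourhood and token-to-cluster matrices, and all function names are hypothetical. Merged clusters receive the ids N, N + 1, …, mirroring the extra N − 1 columns of the token-to-cluster matrix.

```python
def all_pairs_count(n):
    """Number of pairs checked at the first iteration when all pairs are considered."""
    return (n * n - n) // 2


def neighbour_sets(n, edges):
    """Build symmetric neighbourhoods from a list of (assumed Delaunay) edges."""
    neighbours = {i: set() for i in range(n)}
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    return neighbours


def candidate_pairs(neighbours):
    """Pairs of clusters that share an edge, i.e. the only merges to be tested."""
    return sorted({(min(a, b), max(a, b))
                   for a, nbrs in neighbours.items() for b in nbrs})


def merge(neighbours, i, j, new_id):
    """Merge clusters i and j into new_id; the new neighbourhood is the union."""
    merged = (neighbours.pop(i) | neighbours.pop(j)) - {i, j}
    for k in merged:
        neighbours[k] -= {i, j}   # k no longer borders the old clusters...
        neighbours[k].add(new_id)  # ...but borders the merged one
    neighbours[new_id] = merged
    return neighbours


# Hypothetical edge list for N = 5 points (stands in for a Delaunay triangulation).
example_edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3), (2, 4), (3, 4)]
example_nb = neighbour_sets(5, example_edges)
print("all pairs:", all_pairs_count(5))
print("neighbour-consistent pairs:", len(candidate_pairs(example_nb)))
merge(example_nb, 0, 1, new_id=5)
print("pairs after merging 0 and 1:", candidate_pairs(example_nb))
```

After a merge, only pairs involving the new cluster need a freshly computed merge hypothesis; the remaining candidate pairs are unchanged from the previous iteration.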

Figure A1. Difference between checking all pairs and only Delaunay-consistent pairs at the first iteration of the BHG. As the number of data points, N, increases, the number of pairs grows at different rates for Delaunay-consistent pairs (green) and all pairs (blue).

Appendix B

B-spline curve estimation

Within our approach it is necessary to compute the marginal p(D|β) = ∫θ p(D|θ)p(θ|β) dθ. For simple objects such as Gaussian clusters this can be solved analytically. However, for the more complex objects discussed here, integrating over the entire parameter space becomes intractable. The parameter vector for our objects is θ = {q, μ, σ}. Integrating over the Gaussian part of the parameter space (μ and σ) is again straightforward and can be done analytically. Integrating over all possible B-spline curves, as defined by the parameter vector q, is on the other hand intractable for our purposes. We therefore pick the parameter vector q that maximizes Eq. 15, while integrating over the Gaussian components. In what follows we describe how we estimate the B-spline curve for a given dataset D.

B-spline curves were chosen for their versatility in taking many possible shapes by only defining a few parameters. Formally a parametric B-spline curve is defined as

| (12) |