Abstract

In medical therapies involving multiple stages, a physician’s choice of a subject’s treatment at each stage depends on the subject’s history of previous treatments and outcomes. The sequence of decisions is known as a dynamic treatment regime, or treatment policy. We consider dynamic treatment regimes in settings where each subject’s final outcome can be defined as the sum of longitudinally observed values, each corresponding to a stage of the regime. Q-learning, which is a backward induction method, is used to first optimize the last stage treatment, then sequentially optimize each previous stage treatment until the first stage treatment is optimized. During this process, model-based expectations of outcomes of late stages are used in the optimization of earlier stages. When the outcome models are misspecified, bias can accumulate from stage to stage and become severe, especially when the number of treatment stages is large. We demonstrate that a modification of standard Q-learning can help reduce the accumulated bias. We provide a computational algorithm, estimators, and closed-form variance formulas. Simulation studies show that the modified Q-learning method has a higher probability of identifying the optimal treatment regime even in settings with misspecified models for outcomes. The method is applied to identify optimal treatment regimes in a study for advanced prostate cancer, and to estimate and compare the final mean rewards of all possible discrete two-stage treatment sequences.

Keywords: Backward induction, Multi-stage treatment, Optimal treatment sequence, Q-learning, Treatment decision making

1. Introduction

A dynamic treatment regime is a mathematical formalism for what physicians do routinely when making therapeutic decisions sequentially. The physician chooses a first treatment using diagnostic information, administers it, and observes the patient’s response. A second decision is based on the diagnostic information, first treatment, and newly observed response. This process may be continued, using the patient’s history up to the current stage for each decision, until either a satisfactory outcome is achieved or no further treatment is considered acceptable. The dynamic treatment regime is the sequence of decision rules embedded in the sequence of alternating observations and treatments.

Methods for evaluating dynamic treatment regimes have been used increasingly for patients undergoing long-term care involving multi-stage therapies. It is challenging to identify optimal decision rules in such multi-stage treatment settings because of the complicated relationships between the alternating sequences of observed outcomes and treatments. The decision at each treatment stage depends on all observed historical data, and influences all future outcomes and treatments. In turn, outcomes at each stage are affected by all previous treatments, and influence all future treatment decisions. It is well known that simply optimizing the immediate outcome of each stage, which is called myopic or greedy optimization, may not achieve the best final outcome. Simulation studies in Section 3 demonstrate this point.

Despite these complications, many approaches have been proposed to identify, estimate, or optimize dynamic treatment regimes based on observational data. Most methods have their origins in the seminal papers by Robins and colleagues [1, 2, 3, 4, 5] and Murphy [6], including inverse probability of treatment weighting (IPTW) and g-estimation for structural nested models. Many variants of IPTW and g-estimation have been proposed [7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. They show the importance, when evaluating causal treatment effects, of correcting for bias introduced by physicians’ adaptive treatment decisions based on each patient’s current health status. Recent applications include estimation of survival for dynamic treatment regimes in a sequentially randomized prostate cancer trial [17], and in a partially randomized trial of chemotherapy for acute leukemia [18]. Clinical trial designs that compare multi-stage treatment strategies by adaptively re-randomizing patients have been proposed [19, 20, 21, 22].

We address the multi-stage decision-making problem in settings where the final outcome to be optimized can be expressed as the sum of values observed at each stage. An important application is one in which survival time is the final outcome and the value observed at each stage is the survival time accrued during that stage. Another application is a study where the cumulative outcome is the amount of time that the patient’s health index was within a specific target range. In such situations, a natural approach is to assume a conditional model for the outcome of each stage. However, because each outcome depends on previous treatments and outcomes, this approach may lead to a very complex model for the final outcome, which in turn makes global optimization of the treatment sequence difficult or intractable.

An alternative approach, called Q-learning [23, 24, 25, 26, 27, 28, 29, 30], is to use backward induction [31] to first optimize the last stage treatment, then sequentially optimize the treatment in each previous stage. At each stage, a model is assumed for the cumulative rewards from this stage onward, with all future stages already optimized. This approach is well suited for global optimization, but depends on correct specification of the reward models at all stages. At each stage, misspecified models bias the optimization, and a severe problem is that this bias is carried over from each stage to the optimization of the previous stage. Consequently, even a small bias at each stage may produce a large cumulative bias for the optimization of the entire regime.

In this article, we show that a slight modification of standard Q-learning can reduce cumulative bias. At each stage, when computing the rewards from the “already-optimized” future stages, standard Q-learning uses the maximum of model-based values, whereas the modified Q-learning uses the actual observed rewards plus the estimated loss due to suboptimal actions. If the optimal actions were actually taken at all future stages, then the estimated loss is zero, and the actual observed rewards are used. Consequently, compared with standard Q-learning, the modified Q-learning is more likely to use the originally observed rewards instead of model-based ones; it is thus more robust to model misspecification, and less likely to carry bias from stage to stage during the backward induction. This is demonstrated by simulation studies in Section 3.

When applying the modified Q-learning to a prostate cancer study in Section 4, we provide a by-product that is convenient for the practical use of both standard and modified Q-learning. When the treatment options are discrete, it is often of interest to estimate the mean rewards of all treatment sequences. Q-learning, however, does not require fully specified reward functions for all possible treatment strategies. This keeps the models simple, but it also means that Q-learning does not directly provide all the estimates of interest in this situation, which appears to be a shortcoming. Nevertheless, in Section 4.3, we show how to use Q-learning (standard or modified) to estimate the mean rewards for all possible multi-stage (discrete) treatment sequences, and to make inference about the reward differences between any two treatment sequences. To the best of our knowledge, this use of Q-learning, although useful in practice, has not appeared in the literature.

The article is organized as follows. The modified Q-learning method and its properties are described in Section 2. Its performance for identifying optimal treatment regimes in settings with misspecified reward models is evaluated by simulation in Section 3. We apply the method in Section 4 to analyze data from a prostate cancer trial. A summary and discussion of the modified Q-learning method are presented in Section 5. Mathematical details are provided in Appendices.

2. Backward Induction For Sequential Treatment Optimization

We use the framework of potential (counterfactual) outcomes of all possible treatment options for each individual, and make the usual assumptions [32] that (i) an individual’s potential outcome under the treatment actually received is the observed outcome (consistency), (ii) given the history at each stage, the treatment decision is independent of the potential outcomes (sequential randomization or no unmeasured confounders), and (iii) all treatment strategies being considered have a positive probability of being observed (positivity). To identify the optimal action at each stage of backward induction, the estimated reward is computed for each possible action assuming that the actions at all future stages will be optimal. This is done by fitting a parametric model for the counterfactual future reward as a function of actions and current history. The final cumulative reward is the estimate of what the patient’s total reward would be if all actions were optimal. In the sequel, we use the terms “payoff” and “reward” interchangeably.

2.1. Notation and Method

For each subject i = 1,⋯, n and stage s = 1,⋯, K, where n denotes the sample size and K the total number of stages, let Zi,s denote the time-dependent covariates measured at the beginning of stage s, Ai,s the treatment or action, and Yi,s the observed outcome. Without loss of generality, we assume that larger values of Yi,s are preferable. We denote the corresponding vectors of these variables through s stages by Z̄i,s = (Zi,1,⋯, Zi,s), Āi,s = (Ai,1,⋯, Ai,s), and Ȳi,s = (Yi,1,⋯, Yi,s). At stage 1, the subject’s history is simply H̄i,1 = Zi,1. For each subsequent stage s ≥ 2, the history is H̄i,s = (Z̄i,s, Āi,s−1, Ȳi,s−1). We denote the optimal action at stage s by $A^{opt}_{i,s}$, and the counterfactual outcome that would occur if this optimal action were taken by $Y^{opt}_{i,s}$. For all s < K, we define the counterfactual cumulative outcome for stages s through K under $(A_{i,s}, A^{opt}_{i,s+1}, \ldots, A^{opt}_{i,K})$ as follows:

$$Y^{*}_{i,s} = Y_{i,s} + \sum_{j=s+1}^{K} Y^{opt}_{i,j}, \tag{1}$$

where each $Y^{opt}_{i,j}$ is conditional on all the historical information observed prior to stage j, including previous treatments and responses; for simplicity, we have suppressed the conditioning in the notation. In words, this equation says that the cumulative outcome from stage s onward is the outcome observed at stage s under the action actually taken, plus the outcomes that would occur at stages s + 1 through K if the optimal action were taken at each of those stages.

That is, $Y^{*}_{i,s}$ is the future payoff, starting at stage s, if all actions from s + 1 to K are optimized but the actual (possibly suboptimal) action Ai,s is taken at stage s. For the final stage, because there are no future actions to optimize, we define $Y^{*}_{i,K} = Y_{i,K}$. In our notation, $Y^{*}_{i,s}$ and $Y^{opt}_{i,s}$ are random variables, rather than mean functions as denoted by other authors.

We next define Δi,s to be the total future loss from stages s to K if action Ai,s is taken instead of $A^{opt}_{i,s}$ while all actions from stage s + 1 to K are optimal. Thus, Δi,s = 0 if $A_{i,s} = A^{opt}_{i,s}$, whereas Δi,s > 0 if Ai,s is not optimal. This Δi,s is essentially Murphy’s regret function [6]. Robins defined a similar blip function by comparison with a “zero” treatment instead of the optimal one [5]. We use the counterfactual $Y^{*}_{i,s}$ in (1) and the loss Δi,s to define the cumulative future reward to patient i, from stage s to stage K, for taking the optimal action from stage s onward as

$$R_{i,s} = Y^{*}_{i,s} + \Delta_{i,s}. \tag{2}$$

The basic idea is that the reward Ri,s is obtained from $Y^{*}_{i,s}$ by adding back the future loss due to taking a suboptimal action at stage s. For example, if Yi,s is the increment in survival time for stage s, then Ri,s is the sum of the stagewise survival times from stage s onward when all current and future actions are optimal, given the past treatment and response history.

Given this structure for the future reward Ri,s at each stage s, the counterfactual cumulative outcome in equation (1) can be written in the more compact form

$$Y^{*}_{i,s} = Y_{i,s} + R_{i,s+1}, \quad s < K. \tag{3}$$

If all optimal actions and Δi,s’s were known, one could simply work backward and compute Ri,s for each s = K, K − 1,⋯, 1, and thus obtain the final payoff Ri,1. To derive $A^{opt}_{i,s}$ in the steps of the backward induction, we exploit the decomposition given by equations (1)–(3) by assuming an additive parametric regression model for $Y^{*}_{i,s}$ as a function of Ai,s and the most recent history $H_{i,s}$. We formulate the regression model in terms of $H_{i,s}$ rather than the complete history H̄i,s to control the number of parameters, so that the method can be applied feasibly. This requires a Markovian assumption. The fitted model provides estimates of the Δi,s’s, and thus identifies the optimal actions $A^{opt}_{i,s}$ and the optimal payoffs.
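As a small illustration of this recursion, the Python sketch below works backward through equations (3) and (2) for a single subject, under the idealized assumption stated above that the losses Δi,s are known; in practice they are estimated. The function name `backward_rewards` and the 0-based stage indexing are our own choices.

```python
import numpy as np


def backward_rewards(y, delta):
    """Work backward through equations (3) and (2) for one subject.

    Assumes the losses delta[s] >= 0 are known (in practice they are estimated).
    y[s]     : observed outcome at stage s + 1 (0-based indexing)
    delta[s] : loss from the action actually taken at stage s + 1
    Returns (y_star, r), the arrays of Y*_{i,s} and R_{i,s} for all stages.
    """
    y = np.asarray(y, dtype=float)
    delta = np.asarray(delta, dtype=float)
    K = len(y)
    y_star = np.empty(K)
    r = np.empty(K)
    for s in range(K - 1, -1, -1):
        # Equation (3): Y*_s = Y_s + R_{s+1}; at the last stage Y*_K = Y_K.
        y_star[s] = y[s] + (r[s + 1] if s < K - 1 else 0.0)
        # Equation (2): R_s = Y*_s + Delta_s.
        r[s] = y_star[s] + delta[s]
    return y_star, r
```

For example, with outcomes (1.0, 2.0, 0.5) and losses (0, 0.3, 0.2), the recursion gives R1 = 3.5 + 0.5 = 4.0, the total observed outcome plus the total loss.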

Appendix A provides details of the parametric regression model that we assume for $Y^{*}_{i,s}$, and Appendix D illustrates the special case of three treatment stages with three treatment options at each stage. An important aspect of our approach is that we assume a regression model for $Y^{*}_{i,s}$ directly, rather than assuming simple models for Ys, 1 ≤ s ≤ K, which could result in a model for $Y^{*}_{i,s}$ that is too complicated to optimize.

2.2. Backward Induction

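Our method requires the cumulative causal effect of treatment j versus l at stage s, which we define formally, for j > l, as

$$D_{i,s}(j, l) = E\left(Y^{*}_{i,s} \mid A_{i,s} = j, H_{i,s}\right) - E\left(Y^{*}_{i,s} \mid A_{i,s} = l, H_{i,s}\right).$$

In words, this cumulative causal effect is the expected difference in the payoff from stage s onward between giving treatment j and giving treatment l at stage s, given the subject’s recent history, when optimal actions are taken at all later stages.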

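Substituting the parameter estimates obtained from the fitted regression model, given in Appendix A, gives the estimated expected cumulative payoff $\hat{E}(Y^{*}_{i,s} \mid A_{i,s}, H_{i,s})$, the estimated causal effects D̂i,s(j, l), and the estimated cumulative future rewards R̂i,s. The backward induction is carried out as follows:

Step 1. Start with s = K and set $\hat{Y}^{*}_{i,K} = Y_{i,K}$.

- Step 2. For the current stage s,

- Step 2.1 Fit the regression model (15) for $\hat{Y}^{*}_{i,s}$ to obtain (β̂s, ψ̂s) and thus $\hat{E}(Y^{*}_{i,s} \mid A_{i,s}, H_{i,s})$.

- Step 2.2 Use the estimated causal effects D̂i,s(j, l) given by (16) to identify the estimated optimal action $\hat{A}^{opt}_{i,s}$.

- Step 2.3 Define the estimated future loss due to taking action Ai,s to be $\hat{\Delta}_{i,s} = \hat{E}(Y^{*}_{i,s} \mid A_{i,s} = \hat{A}^{opt}_{i,s}, H_{i,s}) - \hat{E}(Y^{*}_{i,s} \mid A_{i,s}, H_{i,s})$.

- Step 2.4 By (2), Step 2.3 gives the estimate $\hat{R}_{i,s} = \hat{Y}^{*}_{i,s} + \hat{\Delta}_{i,s}$.

Step 3. If s > 1, decrement s → s − 1. By (3), set $\hat{Y}^{*}_{i,s} = Y_{i,s} + \hat{R}_{i,s+1}$, and go to Step 2.1. If s = 1, stop.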

At the end of these steps, the estimated optimal treatments $\hat{A}^{opt}_{i,s}$ for all subjects at all stages have been identified, and R̂i,1 is the estimated total payoff from taking these estimated optimal actions. With this algorithm, the optimization is global rather than myopic or local.
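The backward induction steps can be sketched in Python for the special case of two treatment options per stage. This is a minimal sketch under stated assumptions, not the authors’ implementation: actions are coded 0/1, and in place of model (15) of Appendix A we use a hypothetical linear working model in which $Y^{*}_{i,s}$ has main effects $x_{i,s}^{T}\beta_s$ and an action interaction $A_{i,s}\,x_{i,s}^{T}\psi_s$, fitted by ordinary least squares, where $x_{i,s}$ is a user-chosen feature vector built from the recent history. With two options, the estimated causal effect of action 1 versus 0 is simply $x_{i,s}^{T}\hat{\psi}_s$.

```python
import numpy as np


def modified_q_learning(X, A, Y):
    """Backward induction of Section 2.2 for two treatment options (0/1) per stage.

    Assumed working model (hypothetical, not the paper's model (15)):
        Y*_s = X[s] @ beta_s + A_s * (X[s] @ psi_s) + error, fitted by OLS.
    X : list of K arrays, X[s] of shape (n, p_s), features from the recent history
    A : (n, K) array of 0/1 actions actually taken
    Y : (n, K) array of observed stagewise outcomes
    Returns (a_opt, r1): estimated optimal actions (n, K) and estimated payoff R_1 (n,).
    """
    n, K = Y.shape
    a_opt = np.zeros((n, K), dtype=int)
    y_star = Y[:, K - 1].astype(float)              # Step 1: Y*_K = Y_K
    for s in range(K - 1, -1, -1):
        x, a = X[s], A[:, s]
        design = np.hstack([x, a[:, None] * x])     # main effects and A_s interactions
        coef, *_ = np.linalg.lstsq(design, y_star, rcond=None)  # Step 2.1
        d_hat = x @ coef[x.shape[1]:]               # D_hat(1, 0) = x' psi_hat
        a_opt[:, s] = (d_hat > 0).astype(int)       # Step 2.2
        loss = np.abs(d_hat) * (a != a_opt[:, s])   # Step 2.3: zero if a is optimal
        r_hat = y_star + loss                       # Step 2.4, equation (2)
        if s > 0:
            y_star = Y[:, s - 1] + r_hat            # Step 3, equation (3): observed Y
    return a_opt, r_hat
```

For the two-stage simulation in Section 3, X would hold the columns (1, Z1) for stage 1 and (1, Z1, A1, Y1) for stage 2, matching models (4) and (5). Because every loss is nonnegative, the returned payoff is never below the observed total Y1 + ⋯ + YK.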

Asymptotic properties of the estimators are given in Appendix B. The sandwich formula [33] is used to account for the extra variation due to plugging estimates from a later treatment stage into the regression models for an earlier stage.

2.3. Comparison with Standard Q-learning

The method described above is a robust modification of standard Q-learning [23, 28]. For all treatment stages except the last, to estimate counterfactual outcomes under optimal actions, standard Q-learning uses predicted values from previously fitted linear models plus the estimated loss due to suboptimal actions. In contrast, our modified Q-learning method uses the values actually observed plus the estimated loss. Let $\tilde{Y}^{*}_{i,s}$ denote the standard Q-learning method’s objective function, which plays the role of our $Y^{*}_{i,s}$. For the first step of the backward induction, $\tilde{Y}^{*}_{i,K} = \hat{Y}^{*}_{i,K} = Y_{i,K}$.

Standard Q-learning carries out backward induction using the same steps as our algorithm through Step 2.3, but it uses the estimated stage K reward

$$\tilde{R}_{i,K} = \hat{Y}_{i,K} + \hat{\Delta}_{i,K}, \quad \text{where } \hat{Y}_{i,K} = \hat{E}(Y_{i,K} \mid A_{i,K}, H_{i,K}).$$

In contrast, our estimated stage K reward is

$$\hat{R}_{i,K} = Y_{i,K} + \hat{\Delta}_{i,K}.$$

The difference is that standard Q-learning uses the predicted values $\hat{Y}_{i,K}$, while our modified Q-learning method uses the observed values $Y_{i,K}$. This difference is carried to the next stage, s = K − 1, through the formulations $\tilde{Y}^{*}_{i,K-1} = Y_{i,K-1} + \tilde{R}_{i,K}$ and $\hat{Y}^{*}_{i,K-1} = Y_{i,K-1} + \hat{R}_{i,K}$. Similar differences accumulate as the backward induction iterates from s = K − 1 to s = 1.
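The contrast between the two stage-K rewards can be written out in a few lines. This sketch covers only the step from stage K back to stage K − 1; the function and argument names are hypothetical, and the arithmetic works element-wise on floats or NumPy arrays.

```python
def stage_k_pseudo_outcomes(y_prev, y_last, y_last_fit, loss_last):
    """Outcomes passed from stage K back to stage K - 1.

    y_prev     : observed Y_{i,K-1}
    y_last     : observed Y_{i,K}
    y_last_fit : fitted value hat Y_{i,K} from the stage-K model
    loss_last  : estimated loss hat Delta_{i,K} >= 0 from the action actually taken
    Returns (modified, standard) pseudo-outcomes for stage K - 1.
    """
    r_modified = y_last + loss_last        # hat R_{i,K}: observed outcome plus loss
    r_standard = y_last_fit + loss_last    # tilde R_{i,K}: fitted value plus loss
    # Equation (3) applied to each reward: Y*_{K-1} = Y_{K-1} + R_K
    return y_prev + r_modified, y_prev + r_standard
```

With Y_{i,K−1} = 1, Y_{i,K} = 2, a fitted value of 3.5 and zero loss (the stage-K action was already optimal), the modified pseudo-outcome is 3, exactly the observed total, while the standard one is 4.5. With a fitted value of 1.5 instead, the standard pseudo-outcome, 2.5, falls below the observed total.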

The modified Q-learning method has the following advantages. First, $\hat{Y}^{*}_{i,s}$ uses observed outcomes whenever possible for any s ≤ K, whereas $\tilde{Y}^{*}_{i,s}$ uses model-based expectations for any s < K. Retaining the original outcomes makes the modified Q-learning rely less on the specification of the models in (15), and thus improves robustness. Our simulation study in the next section shows this when model (15) is misspecified, for example, when some relevant covariates are not included in the data set: $\hat{Y}^{*}_{i,s}$ performs more robustly than $\tilde{Y}^{*}_{i,s}$.

The second advantage of the modified Q-learning method follows from the fact that definition (3), together with the nonnegativity of the estimated losses, ensures $\hat{Y}^{*}_{i,s} \ge \sum_{j=s}^{K} Y_{i,j}$. This means that the predicted reward under optimal treatment regimes for stage s + 1 onward is always at least the observed reward under the actual regimes, which may be suboptimal. This desirable property does not always hold for standard Q-learning because, in practice, one may observe $\tilde{Y}^{*}_{i,s} < \sum_{j=s}^{K} Y_{i,j}$ for some s < K. This happens simply because, for some subjects, the predicted rewards under optimal treatment regimes for stage s + 1 onward are less than their observed actual rewards. Furthermore, for s < K, if a patient has received the optimal treatments for stage s + 1 onward, then with the modified Q-learning, because Δi,r = 0 for all r ≥ s + 1, the potential outcome under the treatment sequence $\{A_{i,s}, A^{opt}_{i,s+1}, \ldots, A^{opt}_{i,K}\}$ for this patient is $\hat{Y}^{*}_{i,s} = \sum_{j=s}^{K} Y_{i,j}$, the observed reward from stage s onward. This agrees with the consistency assumption stated at the beginning of Section 2, which requires that the counterfactual outcomes under the actually observed actions equal the observed outcomes. It is a natural assumption, commonly required in causal inference [34]. In contrast, with standard Q-learning, $\tilde{Y}^{*}_{i,s}$ may not equal $\sum_{j=s}^{K} Y_{i,j}$ even if a patient receives the optimal treatments for stage s + 1 onward. That is, as an estimate of the counterfactual outcome, $\tilde{Y}^{*}_{i,s}$ may violate the consistency assumption on an individual basis, although it satisfies this assumption in expectation when the reward models are correctly specified. We compare the performance of standard and modified Q-learning by simulation in the next section.

3. Simulation Studies

The correct specification of reward models is very important for Q-learning [32]. In this section, we use simulations to show that, in some scenarios in which the reward models are misspecified, the modified Q-learning outperforms standard Q-learning. For simplicity, we evaluate two-stage treatment sequences, with sample sizes 50, 100, 200 and 400. We consider three scenarios, and each simulation scenario is replicated 1,000 times.

3.1. Scenario I

In Scenario I, we assume an unobserved variable V ~ Normal(0, 2²). For the first treatment stage, we generate covariate Z1 ~ Normal(0, 1), treatment A1 ~ Bernoulli(0.5), and outcome Y1 = Z1(A1 − 0.5) + V + ε1, with ε1 ~ Normal(0, 1). The second-stage treatment is A2 ~ Bernoulli(0.5), and the outcome is Y2 = −2Z1(A1 − 0.5) + (A1 − 0.5)(A2 − 0.5) − V + ε2, with ε2 ~ Normal(0, 1). The final cumulative outcome is Y = Y1 + Y2. Given the observed data (Z1, A1, Y1, A2, Y2) for all subjects, the goal is to find the optimal two-stage treatment regime that maximizes Y.
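A minimal NumPy sketch of the Scenario I generating mechanism follows; the function name and the dictionary return format are our own choices. NumPy’s `normal` takes a standard deviation, so V ~ Normal(0, 2²) is drawn with scale 2. The unobserved V is deliberately not returned.

```python
import numpy as np


def simulate_scenario_one(n, seed=None):
    """Draw one Scenario I data set; the unobserved V is not returned."""
    rng = np.random.default_rng(seed)
    v = rng.normal(0.0, 2.0, n)                 # V ~ Normal(0, 2^2): scale is the SD
    z1 = rng.normal(0.0, 1.0, n)                # Z1 ~ Normal(0, 1)
    a1 = rng.binomial(1, 0.5, n)                # randomized stage-1 treatment
    y1 = z1 * (a1 - 0.5) + v + rng.normal(0.0, 1.0, n)
    a2 = rng.binomial(1, 0.5, n)                # randomized stage-2 treatment
    y2 = (-2.0 * z1 * (a1 - 0.5) + (a1 - 0.5) * (a2 - 0.5)
          - v + rng.normal(0.0, 1.0, n))
    return {"z1": z1, "a1": a1, "y1": y1, "a2": a2, "y2": y2}
```

Because Y1 contains +V and Y2 contains −V, V cancels in Y = Y1 + Y2. By our calculation, the variance of Y1 is 0.25 + 4 + 1 = 5.25, while that of Y1 + Y2 is only 0.25 + 0.0625 + 2 = 2.3125, and large simulated samples from this sketch should come close to both values.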

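In the simulation, both treatments A1 and A2 are randomized. The optimal stage-2 treatment is $A_2^{opt} = A_1$, because (A1 − 0.5)(A2 − 0.5) equals 0.25 when A2 = A1 and −0.25 otherwise. The reward for stage 2 under $A_2^{opt}$ is then R2 = −2Z1(A1 − 0.5) + 0.25 − V + ε2. Recalling that $Y_1^{*} = Y_1 + R_2$ by (3), we obtain $Y_1^{*} = -Z_1(A_1 - 0.5) + 0.25 + \epsilon_1 + \epsilon_2$, in which V cancels. We assume the following model to optimize A1.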

$$Y_1^{*} = \beta_{10} + \beta_{11}Z_1 + A_1(\psi_{10} + \psi_{11}Z_1) + \epsilon. \tag{4}$$

The true values for the above parameters are β1 = (β10, β11)T = (0.25, 0.5)T and ψ1 = (ψ10, ψ11)T = (0, −1)T. The optimal stage-1 treatment is therefore $A_1^{opt} = I(Z_1 < 0)$. If we used a myopic strategy that optimizes A1 by maximizing Y1 = Z1(A1 − 0.5) + V + ε1, we would get the wrong solution $A_1 = I(Z_1 > 0)$.

To apply the modified Q-learning, we first fit the following model,

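$$Y_2 = \beta_{20} + \beta_{21}Z_1 + \beta_{22}A_1 + \beta_{23}Y_1 + A_2(\psi_{20} + \psi_{21}Z_1 + \psi_{22}A_1 + \psi_{23}Y_1) + \epsilon. \tag{5}$$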

From the data generation mechanism, it can be derived that the true values for the above parameters are β2 = (β20, β21, β22, β23)T = (0.25, 0, −0.5, −0.857)T and ψ2 = (ψ20, ψ21, ψ22, ψ23)T = (−0.5, 0, 1, 0)T. The coefficient β23 = −0.857 is the effect of V on Y2 through Y1. Details of this derivation are provided in Appendix C. After fitting the above model to obtain β̂2 and ψ̂2, the estimated optimal stage-2 treatment is

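$$\hat{A}_2^{opt} = I(\hat{\psi}_{20} + \hat{\psi}_{21}Z_1 + \hat{\psi}_{22}A_1 + \hat{\psi}_{23}Y_1 > 0). \tag{6}$$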

Then let

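$$\hat{Y}_1^{*} = Y_1 + Y_2 + \left|\hat{\psi}_{20} + \hat{\psi}_{21}Z_1 + \hat{\psi}_{22}A_1 + \hat{\psi}_{23}Y_1\right| I(A_2 \neq \hat{A}_2^{opt}), \tag{7}$$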

where |·| denotes absolute value. We use the outcome $\hat{Y}_1^{*}$ to fit the model in (4). After the estimators β̂1 and ψ̂1 are obtained, the estimated optimal stage-1 treatment is

$$\hat{A}_1^{opt} = I(\hat{\psi}_{10} + \hat{\psi}_{11}Z_1 > 0). \tag{8}$$
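The true value β23 = −0.857 can be checked with a population least-squares calculation. This is our own sketch, not the derivation in Appendix C, and it assumes that model (5) regresses Y2 on (1, Z1, A1, Y1) and on their products with A2. In Scenario I, W = Z1(A1 − 0.5) has mean zero and is uncorrelated with 1, Z1 and A1, and so is Y1; the A2 terms of Y2, together with −0.5A1 + 0.25, are fitted exactly (giving ψ20 = −0.5, ψ22 = 1, β20 = 0.25 and β22 = −0.5); and A2 is independent of everything else. The coefficient of Y1 is therefore the simple projection coefficient of the remaining part of Y2 on Y1:

```latex
\begin{aligned}
W &= Z_1(A_1 - 0.5), \qquad \operatorname{Var}(W) = E(Z_1^2)\,E\{(A_1 - 0.5)^2\} = 0.25,\\
Y_1 &= W + V + \epsilon_1, \qquad
Y_2 = -2W + \underbrace{A_2(A_1 - 0.5) - 0.5A_1 + 0.25}_{(A_1 - 0.5)(A_2 - 0.5)} - V + \epsilon_2,\\
\beta_{23} &= \frac{\operatorname{Cov}(-2W - V + \epsilon_2,\; W + V + \epsilon_1)}{\operatorname{Var}(W + V + \epsilon_1)}
= \frac{-2(0.25) - 4}{0.25 + 4 + 1} = \frac{-4.5}{5.25} \approx -0.857.
\end{aligned}
```

By this calculation, the terms 0.5 and 0.25 come from W, which model (5) does not contain, so β23 absorbs part of the Z1(A1 − 0.5) effect in addition to the effect of V.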

The simulation results for samples of size 200 are given in Table 1 and Table 2 (Scenario I panel of each table). In general, the bias is small, and the empirical and asymptotic standard errors (SE and ASE) match well, with coverage probabilities of the 95% confidence intervals all close to nominal. The modified Q-learning correctly identified the optimal stage-1 and stage-2 treatments 91.1% and 88.4% of the time, respectively. The modified Q-learning also estimated the parameters well for the other sample sizes (n = 50, 100, and 400; results not shown).
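The summary columns of Tables 1 and 2 can be computed from replicate-level output as sketched below. The sketch assumes a Wald interval (estimate ± 1.96 × estimated SE) for CP and the sample standard deviation (ddof = 1) for SE; both are our assumptions about details the text does not state, and the function name is hypothetical.

```python
import numpy as np


def summarize_replicates(estimates, std_errors, true_value, z=1.96):
    """Est, SE, ASE and CP over simulation replicates (one entry per replicate)."""
    est = np.asarray(estimates, dtype=float)
    se = np.asarray(std_errors, dtype=float)
    covered = np.abs(est - true_value) <= z * se     # true value inside est +/- z * se
    return {
        "Est": est.mean(),          # mean of estimates
        "SE": est.std(ddof=1),      # empirical standard error
        "ASE": se.mean(),           # average of standard error estimates
        "CP": covered.mean(),       # coverage probability
    }
```

Applied to the 1,000 replicates of one scenario and one parameter, this yields one row of the tables.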

Table 1.

Parameter estimates for treatment stage 1

| Parameter | True | Modified Q-learning Est | SE | ASE | CP | Standard Q-learning Est | SE | ASE | CP | g-estimation* Est | SE | ASE | CP | Regret minimization* Est | SE | ASE | CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Scenario I: Pr(A1 = 1) = Pr(A2 = 1) = 0.5 |
| β10 | 0.25 | 0.282 | 0.180 | 0.243 | 0.988 | 0.281 | 0.192 | 0.202 | 0.952 |
| β11 | 0.5 | 0.489 | 0.171 | 0.200 | 0.980 | −0.068 | 0.157 | 0.147 | 0.039 |
| ψ10 | 0 | −0.008 | 0.270 | 0.341 | 0.988 | −0.004 | 0.281 | 0.282 | 0.953 | −0.009 | 0.269 | 0.256 | 0.947 | 0.009 | 0.264 | 0.256 | 0.928 |
| ψ11 | −1 | −0.969 | 0.264 | 0.263 | 0.933 | 0.139 | 0.178 | 0.178 | 0.000 | −0.980 | 0.263 | 0.249 | 0.926 | −1.012 | 0.274 | 0.258 | 0.922 |
| Scenario II: logit{Pr(A1 = 1)} = Z1, logit{Pr(A2 = 1)} = 0.3Y1 |
| β10 | 0.25 | 0.274 | 0.199 | 0.263 | 0.989 | 0.044 | 0.205 | 0.217 | 0.851 |
| β11 | 0.5 | 0.483 | 0.193 | 0.217 | 0.970 | −0.072 | 0.177 | 0.159 | 0.074 |
| ψ10 | 0 | 0.008 | 0.274 | 0.369 | 0.993 | 0.009 | 0.291 | 0.304 | 0.942 | 0.008 | 0.275 | 0.276 | 0.945 | 0.021 | 0.293 | 0.282 | 0.934 |
| ψ11 | −1 | −0.963 | 0.288 | 0.287 | 0.938 | 0.147 | 0.202 | 0.194 | 0.000 | −0.990 | 0.329 | 0.315 | 0.926 | −1.051 | 0.346 | 0.347 | 0.948 |
| Scenario III: logit{Pr(A1 = 1)} = Z1 + V, logit{Pr(A2 = 1)} = 0.3Y1 + V |
| β10 | 0.25 | 0.419 | 0.194 | 0.378 | 0.996 | 0.289 | 0.192 | 0.348 | 0.999 |
| β11 | 0.5 | 0.556 | 0.177 | 0.229 | 0.977 | 0.098 | 0.168 | 0.179 | 0.393 |
| ψ10 | 0 | −0.086 | 0.253 | 0.474 | 0.998 | −0.087 | 0.256 | 0.429 | 0.995 | −0.056 | 0.270 | 0.274 | 0.945 | −0.038 | 0.284 | 0.301 | 0.949 |
| ψ11 | −1 | −0.982 | 0.261 | 0.281 | 0.950 | −0.066 | 0.206 | 0.196 | 0.002 | −0.991 | 0.264 | 0.284 | 0.959 | −1.016 | 0.287 | 0.339 | 0.96 |

g-estimation and regret minimization specify treatment selection probabilities (not shown), but do not need β10 or β11;

Est, mean of estimates; SE, empirical standard error;

ASE, average of standard error estimates; ASE for g-estimation and regret minimization obtained by 200 bootstrap samples.

CP, coverage probability of 95% confidence interval.

Table 2.

Parameter estimates for treatment stage 2

| Parameter | True | Standard or modified Q-learning* Est | SE | ASE | CP | g-estimation** Est | SE | ASE | CP | Regret minimization** Est | SE | ASE | CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Scenario I: Pr(A1 = 1) = Pr(A2 = 1) = 0.5 |
| β20 | 0.25 | 0.255 | 0.207 | 0.210 | 0.949 |
| β21 | 0 | 0.002 | 0.170 | 0.149 | 0.907 |
| β22 | −0.5 | −0.495 | 0.298 | 0.297 | 0.947 |
| β23 | −0.857 | −0.858 | 0.063 | 0.065 | 0.960 |
| ψ20 | −0.5 | −0.510 | 0.294 | 0.297 | 0.952 | −0.510 | 0.284 | 0.289 | 0.952 | −0.509 | 0.282 | 0.292 | 0.947 |
| ψ21 | 0 | −0.003 | 0.240 | 0.212 | 0.919 | −0.004 | 0.229 | 0.227 | 0.946 | −0.014 | 0.214 | 0.219 | 0.955 |
| ψ22 | 1 | 0.997 | 0.413 | 0.420 | 0.949 | 0.997 | 0.378 | 0.396 | 0.959 | 1.027 | 0.410 | 0.420 | 0.948 |
| ψ23 | 0 | 0.004 | 0.091 | 0.093 | 0.952 | 0.004 | 0.091 | 0.094 | 0.950 | −0.002 | 0.100 | 0.097 | 0.932 |
| Scenario II: logit{Pr(A1 = 1)} = Z1, logit{Pr(A2 = 1)} = 0.3Y1 |
| β20 | 0.25 | 0.019 | 0.217 | 0.220 | 0.823 |
| β21 | 0 | 0.006 | 0.194 | 0.165 | 0.904 |
| β22 | −0.5 | −0.501 | 0.321 | 0.319 | 0.942 |
| β23 | −0.857 | −0.842 | 0.070 | 0.069 | 0.939 |
| ψ20 | −0.5 | −0.517 | 0.309 | 0.316 | 0.965 | −0.514 | 0.310 | 0.318 | 0.955 | −0.476 | 0.325 | 0.324 | 0.944 |
| ψ21 | 0 | −0.008 | 0.268 | 0.230 | 0.906 | −0.004 | 0.269 | 0.258 | 0.933 | −0.002 | 0.266 | 0.271 | 0.960 |
| ψ22 | 1 | 1.023 | 0.455 | 0.456 | 0.943 | 1.019 | 0.453 | 0.464 | 0.945 | 0.996 | 0.488 | 0.488 | 0.938 |
| ψ23 | 0 | −0.005 | 0.097 | 0.096 | 0.954 | −0.005 | 0.107 | 0.107 | 0.949 | 0.001 | 0.114 | 0.119 | 0.957 |
| Scenario III: logit{Pr(A1 = 1)} = Z1 + V, logit{Pr(A2 = 1)} = 0.3Y1 + V |
| β20 | 0.25 | 0.639 | 0.233 | 0.235 | 0.617 |
| β21 | 0 | 0.343 | 0.182 | 0.157 | 0.422 |
| β22 | −0.5 | −1.31 | 0.394 | 0.364 | 0.391 |
| β23 | −0.857 | −0.724 | 0.0871 | 0.0873 | 0.669 |
| ψ20 | −0.5 | −1.32 | 0.401 | 0.363 | 0.407 | −0.965 | 0.409 | 0.399 | 0.774 | −1.089 | 0.393 | 0.392 | 0.667 |
| ψ21 | 0 | −0.539 | 0.245 | 0.218 | 0.315 | −0.020 | 0.327 | 0.314 | 0.934 | 0.046 | 0.316 | 0.353 | 0.966 |
| ψ22 | 1 | 1.725 | 0.548 | 0.503 | 0.697 | 1.020 | 0.591 | 0.579 | 0.932 | 0.969 | 0.606 | 0.625 | 0.953 |
| ψ23 | 0 | −0.010 | 0.124 | 0.123 | 0.948 | −0.005 | 0.202 | 0.206 | 0.953 | −0.009 | 0.222 | 0.238 | 0.950 |

Standard and modified Q-Learning methods use the same estimating equations and give the same results for the last treatment stage;

g-estimation and regret minimization specify treatment selection probabilities (not shown), but do not need β’s;

Est, mean of estimates; SE, empirical standard error;

ASE, average of standard error estimates; ASE for g-estimation and regret minimization obtained by 200 bootstrap samples.

CP, coverage probability of 95% confidence interval.

We apply standard Q-learning to the same data sets. Both standard and modified Q-learning fit the same regression model (5) for treatment stage 2. Naturally, they obtain exactly the same stage-2 results (Table 2), but differ in their stage-1 estimates (Table 1). As shown in equation (7), the outcome used by the modified Q-learning is the actually observed value Y2 plus the estimated loss due to suboptimal stage-2 actions. In contrast, the outcome used by standard Q-learning for stage 1 is the predicted value Ŷ2 from stage 2 plus the same estimated loss, as follows:

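$$\tilde{Y}_1^{*} = Y_1 + \hat{Y}_2 + \left|\hat{\psi}_{20} + \hat{\psi}_{21}Z_1 + \hat{\psi}_{22}A_1 + \hat{\psi}_{23}Y_1\right| I(A_2 \neq \hat{A}_2^{opt}), \tag{9}$$

where $\hat{Y}_2$ is the fitted value of Y2 from model (5).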

Using $\tilde{Y}_1^{*}$ in place of $\hat{Y}_1^{*}$, the same linear regression model (4) is fit to identify the optimal stage-1 treatments. Note that $\hat{Y}_1^{*}$ retains the information from V by using the original data Y2, whereas $\tilde{Y}_1^{*}$ discards this information by using the model-based value Ŷ2. Because of this difference, the two methods obtain different stage-1 estimates (Table 1). Standard Q-learning gives biased estimates of β11 and ψ11. Consequently, the probability that it correctly identifies the optimal stage-1 treatments is only 38.2%, much lower than that achieved by the modified Q-learning (91.1%). In summary, standard Q-learning uses model-based values to construct counterfactual outcomes, and is therefore prone to bias introduced by model misspecification. As the number of treatment stages increases, model-based values are used more often during the backward induction, and this bias problem becomes more severe. In contrast, the modified Q-learning achieves robustness against model misspecification by using the original data instead of model-based values whenever possible.
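The contrast between equations (7) and (9) can be reproduced with a short sketch. Assumptions: models (4) and (5) are fitted by ordinary least squares with the covariates written above, and the function names are hypothetical. By our own population calculation for Scenario I (not reported in the paper), the modified fit should approach ψ11 = −1 in large samples, while the standard fit should approach about 1 − 0.857 ≈ 0.14, which is consistent with the mean of 0.139 in Table 1.

```python
import numpy as np


def ols(design, y):
    """Ordinary least-squares coefficients."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def stage_one_psi(z1, a1, y1, a2, y2):
    """psi_1 = (psi10, psi11) from model (4) fitted to outcomes (7) and (9).

    Returns (psi_modified, psi_standard).
    """
    ones = np.ones_like(z1)
    x2 = np.column_stack([ones, z1, a1, y1])            # covariates of model (5)
    coef2 = ols(np.hstack([x2, a2[:, None] * x2]), y2)
    beta2, psi2 = coef2[:4], coef2[4:]
    blip2 = x2 @ psi2
    a2_opt = (blip2 > 0).astype(int)                    # rule (6)
    loss2 = np.abs(blip2) * (a2 != a2_opt)              # absolute-value term in (7), (9)
    y2_fit = x2 @ beta2 + a2 * blip2                    # model-based value of Y2
    y1_modified = y1 + y2 + loss2                       # equation (7): observed Y2
    y1_standard = y1 + y2_fit + loss2                   # equation (9): predicted Y2
    x1 = np.column_stack([ones, z1])                    # covariates of model (4)
    design1 = np.hstack([x1, a1[:, None] * x1])
    return ols(design1, y1_modified)[2:], ols(design1, y1_standard)[2:]
```

A positive ψ̂11 from the standard fit reverses the stage-1 rule (8), which is why its probability of correct identification can fall below that of random selection.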

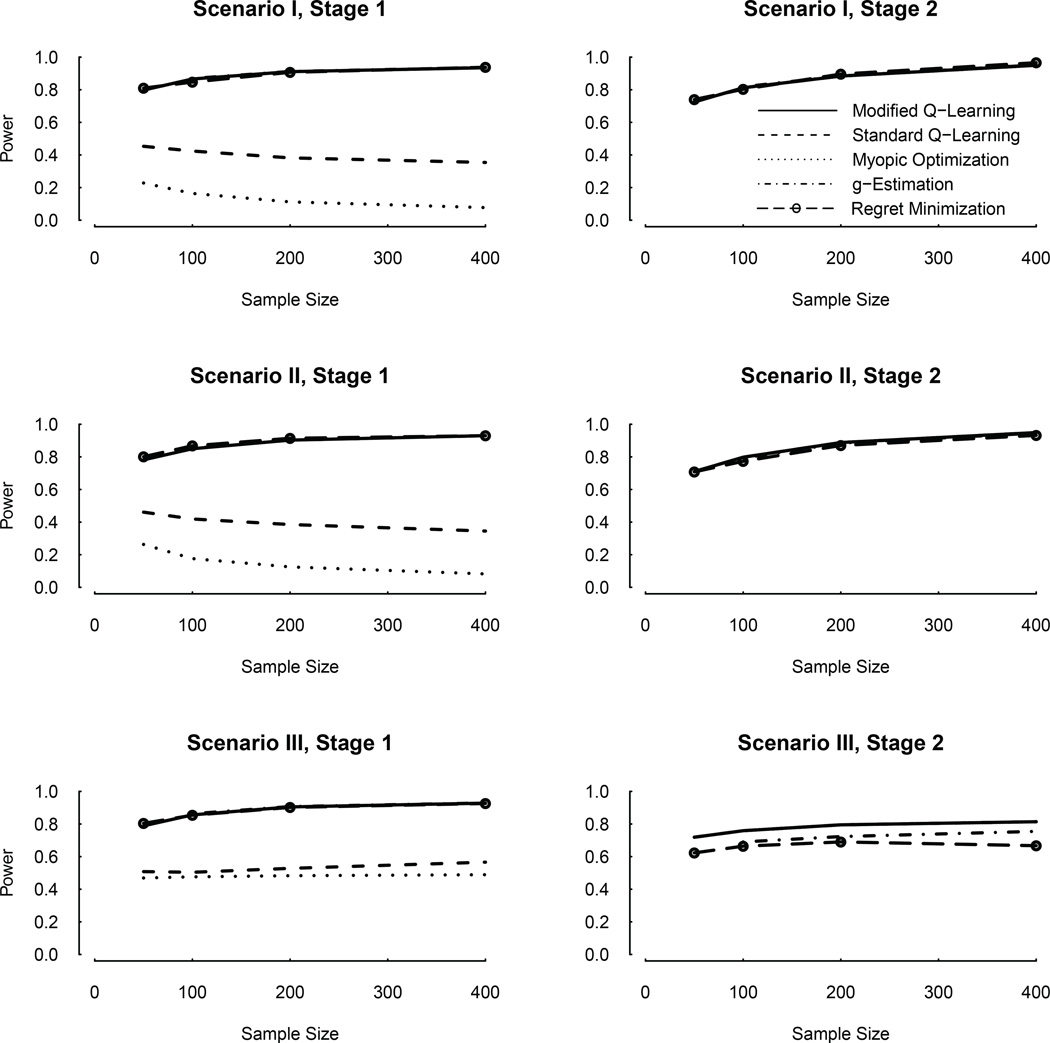

Since the main goal of Q-learning is to correctly identify optimal treatments, we conducted additional simulations for a range of sample sizes and compared the performance of modified Q-learning with that of standard Q-learning. We also compared them with the myopic strategy that uses Y1 to optimize A1 and Y2 to optimize A2. Figure 1 shows the probabilities of correctly identifying the optimal stage-1 and stage-2 treatments under a range of sample sizes. The modified Q-learning has a larger probability than standard Q-learning of correctly identifying the optimal stage-1 treatments. Both the modified and standard Q-learning perform much better than the myopic strategy.

Figure 1.

Comparisons between the modified and standard Q-learning, myopic optimization, g-estimation and regret minimization: probability (power) of correctly identifying the optimal stage-1 and stage-2 treatments in Scenarios I, II, and III. Note: For stage 1, in all scenarios, the power curves for the modified Q-learning, g-estimation and regret minimization almost overlap. For stage 2, in all scenarios, the power curves for the modified and standard Q-learning and myopic optimization overlap, so that only the solid curve (modified Q-learning) is visible; they almost overlap with those for g-estimation and regret minimization in Scenarios I and II, and are higher than those for g-estimation and regret minimization in Scenario III.
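The failure of the myopic strategy at stage 1 can be seen directly. The sketch below is an illustration under our own assumptions, not the authors’ code: it fits the working form of model (4) with the immediate outcome Y1 in place of $Y_1^{*}$ and picks the action that maximizes the fitted Y1. In Scenario I this recovers approximately I(Z1 > 0), the opposite of the optimal rule I(Z1 < 0). The function name is hypothetical.

```python
import numpy as np


def myopic_stage_one_rule(z1, a1, y1):
    """Stage-1 action maximizing the fitted immediate outcome Y1 (myopic strategy)."""
    x = np.column_stack([np.ones_like(z1), z1])
    design = np.hstack([x, a1[:, None] * x])             # same form as model (4)
    coef, *_ = np.linalg.lstsq(design, y1, rcond=None)   # outcome is Y1, not Y1*
    psi = coef[2:]                                        # interaction coefficients
    return (x @ psi > 0).astype(int)
```

Since E(Y1 | Z1, A1) = Z1(A1 − 0.5) in Scenario I, the fitted interaction is close to +1, so the rule chooses A1 = 1 exactly when Z1 > 0.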

It is also interesting to note that, in this setting with misspecified reward models, the power of the modified Q-learning to select the optimal treatment increases with sample size, but this is not true for either standard Q-learning or myopic optimization. This shows that, under model misspecification, a large sample size cannot remedy a non-robust or incorrectly designed optimization algorithm, and may even make things worse. Specifically, since there are only two treatment options at each stage in this simulation setting, a purely random choice between them is correct with probability 50%. The less-than-50% power of standard Q-learning and myopic optimization in Figure 1 reveals that they are severely biased under misspecified reward models. It also explains why their empirical power decreases with sample size: their true power is less than 50% as n → ∞, and approaches 50% (equivalent to purely random selection) as n → 0.

3.2. Scenarios II & III, and other optimization methods

Treatments in Scenario I above are randomized. We also consider other treatment selection models. In Scenario II, treatment assignment probabilities depend on observed covariates and outcomes, namely, logit{Pr(A1 = 1)} = Z1 and logit{Pr(A2 = 1)} = 0.3Y1. In Scenario III, treatment assignments further depend on the unobserved variable V: logit{Pr(A1 = 1)} = Z1 + V and logit{Pr(A2 = 1)} = 0.3Y1 + V. All other aspects of the data generation are the same as in Scenario I. The analyses described in the previous subsection for Scenario I, by standard and modified Q-learning and by the myopic optimization method, are also conducted for Scenarios II and III.
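The three treatment assignment mechanisms can be written as small helper functions; the names are hypothetical. Because Pr(A2 = 1) depends on Y1, which in turn depends on A1, the stage-1 probability must be used to draw A1 (and then Y1) before the stage-2 probability is evaluated.

```python
import numpy as np


def expit(x):
    """Inverse of the logit function."""
    return 1.0 / (1.0 + np.exp(-x))


def stage_one_probability(scenario, z1, v):
    """Pr(A1 = 1) under Scenarios I-III; v is the unobserved variable V."""
    z1 = np.asarray(z1, dtype=float)
    if scenario == "I":
        return np.full(z1.shape, 0.5)
    if scenario == "II":
        return expit(z1)
    if scenario == "III":
        return expit(z1 + v)
    raise ValueError(f"unknown scenario: {scenario!r}")


def stage_two_probability(scenario, y1, v):
    """Pr(A2 = 1) under Scenarios I-III; y1 must be generated after A1 is drawn."""
    y1 = np.asarray(y1, dtype=float)
    if scenario == "I":
        return np.full(y1.shape, 0.5)
    if scenario == "II":
        return expit(0.3 * y1)
    if scenario == "III":
        return expit(0.3 * y1 + v)
    raise ValueError(f"unknown scenario: {scenario!r}")
```

For example, `rng.binomial(1, stage_one_probability("II", z1, v))` draws Scenario II stage-1 treatments, since NumPy’s `Generator.binomial` accepts an array of probabilities.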

As suggested by a reviewer, we also compare the proposed estimator with the estimators of Murphy [6] and Robins [5]. Moodie et al. [25] gave a clear description of these estimators together with R functions implementing them, which we use below, combining their notation with ours.

In our data-generation setting, the g-estimator (Robins, 2004) starts with the following estimating functions. Denote by 𝔥j the observed history prior to Aj, j = 1, 2. Let γ1(𝔥1, a1; ψ) = a1(ψ10 + ψ11z1) and γ2(𝔥2, a2; ψ) = a2(ψ20 + ψ21z1 + ψ22a1 + ψ23y1) be the blip functions (Robins, 2004) defining the expected differences in outcome between treatments A1 = a1 and A1 = 0, and between A2 = a2 and A2 = 0, respectively. Consequently, the optimal stage-2 treatment is $A_2^{opt} = I(\psi_{20} + \psi_{21}z_1 + \psi_{22}a_1 + \psi_{23}y_1 > 0)$, and the optimal stage-1 treatment is $A_1^{opt} = I(\psi_{10} + \psi_{11}z_1 > 0)$. By the data generation described earlier, ψ1 = (ψ10, ψ11)T = (0, −1)T and ψ2 = (ψ20, ψ21, ψ22, ψ23)T = (−0.5, 0, 1, 0)T. Denote S1(a1) = a1(1, z1)T and S2(a2) = a2(1, z1, a1, y1)T. Define

| (10) |

| (11) |

We also assume the following treatment selection models,

The resulting estimate α̂ is then plugged into the estimating equation below to estimate ψ and hence identify the optimal treatment regimes,

| (12) |

Murphy (2003) defined a regret function as the loss due to taking a sub-optimal action. In the notation above, the regret functions for the two stages can be written as μj(𝔥j, aj) = maxa{γj(𝔥j, a) − γj(𝔥j, aj)} (Moodie et al, 2007). If an optimal action is taken, i.e., $a_j = a_j^{opt}$, then the regret is zero, namely, μj(𝔥j, aj) = 0. The true regret functions are μ1(𝔥1, a1) = |z1|I{a1 ≠ I(z1 < 0)} and μ2(𝔥2, a2) = |a1 − 0.5|I(a2 ≠ a1). Following Murphy (2003) and Moodie et al (2007), the logistic functions below are used to approximate these piecewise linear functions,
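The definition μj = maxa γj(a) − γj(aj) can be checked against the closed-form true regrets quoted above. The sketch below computes regrets from the true blips (ψ1 = (0, −1), ψ2 = (−0.5, 0, 1, 0)) on a small grid and compares them with the two closed forms. The helper names are hypothetical. The z1 and y1 terms of the stage-2 blip are omitted because their true coefficients are zero.

```python
def regret(blip, a, options=(0, 1)):
    """mu(h, a) = max over a' of blip(a') minus blip(a), for a fixed history h."""
    return max(blip(b) for b in options) - blip(a)

def true_mu1(z1, a1):
    return abs(z1) * (a1 != int(z1 < 0))

def true_mu2(a1, a2):
    return abs(a1 - 0.5) * (a2 != a1)

def check_regrets(z_grid=(-2.0, -0.3, 0.0, 0.7)):
    """Compare regrets computed from the true blips with the closed forms in the text."""
    for z1 in z_grid:
        for a1 in (0, 1):
            blip1 = lambda a, z=z1: a * (0.0 - 1.0 * z)        # psi1 = (0, -1)
            assert abs(regret(blip1, a1) - true_mu1(z1, a1)) < 1e-12
            for a2 in (0, 1):
                blip2 = lambda a, x=a1: a * (-0.5 + 1.0 * x)   # psi2 = (-0.5, 0, 1, 0)
                assert abs(regret(blip2, a2) - true_mu2(a1, a2)) < 1e-12
    return True
```

Both closed forms are piecewise linear in the parameters with a kink where the optimal action switches. This is the non-smoothness that the logistic approximations below are meant to handle.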

| (13) |

| (14) |

The true values of the above ψ’s are the same as those for g-estimation.

For all the methods, the parameter estimates and their empirical and asymptotic standard errors are reported in Tables 1 and 2. The standard and modified Q-learning and the myopic optimization use more parameters in their outcome models (both β’s and ψ’s), whereas g-estimation and regret minimization use fewer parameters in their outcome models (only the ψ’s). However, the latter two also use logistic regression models for treatment selection, whose estimated parameters are not reported. As in Moodie et al (2007), the bootstrap (200 samples drawn with replacement from the original data) is used to compute the asymptotic standard errors for g-estimation and regret minimization. In Tables 1 and 2, the standard Q-learning estimators show substantial bias for the stage-1 parameters β11 and ψ11 in all three Scenarios. The modified Q-learning, g-estimation and regret minimization have only very small bias in Scenarios I and II, but some bias in Scenario III, in which both the outcome and treatment assignment models are misspecified.
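The bootstrap step can be sketched generically. This is not the R code of Moodie et al [25]. `bootstrap_se` is a hypothetical helper that works for any estimator mapping a data array (rows = subjects) to a scalar or a vector of parameter estimates, for example a g-estimation or regret-minimization fit. The default of 200 resamples matches the text.

```python
import numpy as np

def bootstrap_se(data, estimator, n_boot=200, seed=0):
    """Nonparametric bootstrap standard error(s) of estimator(data).

    data: array whose rows are subjects; estimator maps such an array to a
    scalar or a 1-D array of parameter estimates.
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = data.shape[0]
    draws = []
    for _ in range(n_boot):
        rows = rng.integers(0, n, size=n)   # n subjects drawn with replacement
        draws.append(estimator(data[rows]))
    draws = np.asarray(draws, dtype=float)
    return draws.std(axis=0, ddof=1)        # SD across bootstrap replicates
```

Resampling whole rows keeps each subject's stage-1 and stage-2 records together, which preserves the within-subject dependence that the two-stage estimators rely on.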

The power of each method to correctly identify the optimal treatments is depicted in Figure 1. For stage 1, in all three Scenarios, the modified Q-learning, g-estimation and regret minimization perform almost the same, whereas the myopic optimization and standard Q-learning perform poorly. For stage 2, the standard and modified Q-learning and the myopic optimization have the same performance. Compared with them, g-estimation and regret minimization have slightly lower power in Scenario III, but the same power in Scenarios I and II. The case n = 50 for g-estimation is not shown in this Figure because of singular matrices and other computational issues.

4. Application to a Prostate Cancer Study

We applied the modified Q-learning to analyse data from a clinical trial of advanced prostate cancer conducted at MD Anderson Cancer Center from 1998 to 2006 to evaluate multi-stage therapeutic strategies [17, 19]. One hundred and fifty patients with advanced prostate cancer were randomized at enrollment to receive one of four chemotherapy combinations, abbreviated as CVD, KA/VE, TEC, and TEE, during an initial treatment period of 8 to 24 weeks. Thereafter, response-based assignment to the second stage treatment was made. Patients with a favorable response to the initial treatment stayed on the same treatment during the second stage (“respond ↦ stay”), while patients who did not have a favorable response were randomized among the three remaining treatments (“no response ↦ switch”). Since 47 patients did not follow this protocol due to severe toxicity, progressive disease or other reasons, Wang et al [17] defined viable dynamic treatment regimes that include such discontinuation and account for both efficacy and toxicity. This evaluation was based on an expert score defined from the bivariate efficacy and toxicity outcomes in each stage. The scores for the first and second stages were denoted by Y1 and Y2, respectively. It was further specified that patients who went off treatment during the first stage received a score of Y2 = 0 for stage 2. We used the modified Q-learning to identify the optimal treatments for the two stages that maximized Y = Y1 + Y2. The data set included the following covariates: patient age, radiation treatment (yes or no), length of time hormone therapy was received (in months) before registration, location of evidence of disease at enrollment, strata (low or high risk), baseline PSA level, and alkaline phosphatase and hemoglobin concentrations.

4.1. Stage-2 estimation

By design, patients with a favorable response in stage 1 had that treatment repeated, and we assumed that they received the optimal A2. Since patients whose first stage treatment failed were re-randomized, this produced a saturated factorial design with twelve different two-stage treatment sequences. Due to the limited sample size, we fit a model with twelve indicators for the twelve treatment sequences, without including their interactions with patients’ characteristics. The fitted model showed that, for patients who received TEC in stage-1 and did not have a favorable response, the best stage-2 treatment was CVD. For patients who did not receive TEC in stage-1 and did not have a favorable response, the best stage-2 treatment was TEC. The computation of potential stage-2 scores under the above optimal stage-2 treatment is shown in Table 3. If the stage-1 treatment failed, the score indicated in Table 3 is added to each patient’s actual stage-2 score, Y2, to obtain a hypothetical optimal score, R2, which is used in the next step of the analysis. For patients who had a favorable response in stage-1 treatment, we set R2 = Y2. For patients who went off treatment during the first stage, since they did not receive any stage-2 treatment, they could not be used in the estimation of stage-2 treatment effects. They were still included in the analyses for stage 1 and overall outcomes by assigning R2 = 0. This had an impact on the interpretation of the identified optimal regimes, as shown in the next Subsection.

Table 3.

Estimates of Δ2 in computation of optimal potential stage-2 score R2 = Y2 + Δ2

| Stage-1 treatment | Optimal stage-2 treatment conditional on stage-1 treatment | Actual stage-2: CVD | Actual stage-2: KA/VE | Actual stage-2: TEC | Actual stage-2: TEE |
|---|---|---|---|---|---|
| CVD | TEC | NA | 0.03 | 0 | 0.24 |
| KA/VE | TEC | 0.5 | NA | 0 | 0.55 |
| TEC | CVD | 0 | 0.175 | NA | 0.25 |
| TEE | TEC | 0.125 | 0.125 | 0 | NA |
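The stage-2 bookkeeping of Section 4.1 can be written as a lookup on Table 3. The following is a minimal sketch. The dictionary layout and the function names are hypothetical, and the three branches follow the rules stated in the text: R2 = 0 for patients who went off treatment in stage 1, R2 = Y2 for responders, and R2 = Y2 + Δ2 for non-responders.

```python
# Delta_2 values from Table 3: DELTA2[stage-1 treatment][actual stage-2 treatment]
DELTA2 = {
    "CVD":   {"KA/VE": 0.03, "TEC": 0.0, "TEE": 0.24},
    "KA/VE": {"CVD": 0.5, "TEC": 0.0, "TEE": 0.55},
    "TEC":   {"CVD": 0.0, "KA/VE": 0.175, "TEE": 0.25},
    "TEE":   {"CVD": 0.125, "KA/VE": 0.125, "TEC": 0.0},
}

def optimal_stage2(a1):
    """Optimal stage-2 treatment after a failed A1: the entry with Delta_2 = 0."""
    row = DELTA2[a1]
    return min(row, key=row.get)

def potential_r2(a1, a2, y2, responded, went_off):
    """Hypothetical optimal stage-2 score R2 for one patient."""
    if went_off:
        return 0.0               # no stage-2 treatment was received
    if responded:
        return y2                # stayed on A1, assumed optimal
    return y2 + DELTA2[a1][a2]   # non-responder: add the loss from Table 3
```

For example, a TEC non-responder who received TEE with Y2 = 1.0 gets R2 = 1.0 + 0.25 = 1.25.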

4.2. Stage-1 estimation

After the stage-2 estimation, we defined the potential final outcome $R_1 = Y_1 + R_2$.

For the four stage-1 treatments, we fit a linear regression model with all main effects and interactions associated with the stage-1 treatment, using the potential final outcome defined above as the response. All covariates mentioned at the beginning of Section 4 were considered. Using the Akaike information criterion (AIC) for stepwise variable selection, we found that age appeared to be the only significant covariate. Interactions between age and treatments were not statistically significant. Age was centered at 65 years, which is roughly the mean. The fitted model given in Table 4 shows that the stage-1 treatments may be ranked in the order TEC, KA/VE, TEE, CVD, and that they can roughly be put into two groups, {TEC, KA/VE} and {TEE, CVD}, with a substantial difference between the groups but little difference within either group. Combining these results with those in Table 3, which show the optimal stage-2 treatment conditional on the stage-1 treatment, we conclude that the optimal treatment sequence (strategy) for these patients is as follows. Start with initial treatment TEC. If a patient achieves a favorable response, continue to treat with TEC in the second stage. Otherwise, i.e., if a patient does not achieve a favorable response to the initial treatment, treat with CVD in the second stage. We denote this regime by (TEC, CVD). Other regimes are denoted similarly.

Table 4.

Linear regression for the effects of stage-1 treatments on the potential final outcome if their corresponding optimal stage-2 treatments had been received

| Term | Estimate | SE | p-value |
|---|---|---|---|
| Intercept | 1.248 | 0.1004 | < 0.001 |
| Age | −0.0109 | 0.0053 | 0.039 |
| KA/VE vs. CVD | 0.2366 | 0.1286 | 0.066 |
| TEC vs. CVD | 0.2757 | 0.1294 | 0.033 |
| TEE vs. CVD | 0.0475 | 0.1370 | 0.729 |

The estimates in Table 4 are not for stage-1 outcomes only, but rather for the mean final rewards if the stage-2 treatments had been optimized conditional on the stage-1 treatment. For example, compared with CVD, the initial treatment TEC could have improved the mean final outcome score by 0.2757 (standard error = 0.1294), if all subjects had received their respective optimal stage-2 treatments conditional on their stage-1 treatments. Referring to Table 3, the optimal two-stage treatment strategy is (TEC, CVD) for subjects who receive TEC in stage 1, and (CVD, TEC) for subjects whose stage-1 treatment is CVD. The difference of 0.2757 in Table 4 between initial treatments TEC and CVD is therefore the difference between the two regimes (TEC, CVD) and (CVD, TEC). This difference is statistically significant (p = 0.033).
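The ranking and the reported p-values follow from the Table 4 coefficients by simple arithmetic. The sketch below recomputes the fitted mean final score by stage-1 treatment and age, along with two-sided normal-approximation Wald p-values. The normal reference distribution is our assumption, since the paper does not state how its p-values were computed. It reproduces the reported values to about three decimals for TEC and TEE. Names are hypothetical.

```python
import math

# Coefficients from Table 4; CVD is the reference stage-1 treatment and
# age is centered at 65 years.
INTERCEPT, AGE_SLOPE = 1.248, -0.0109
EFFECT = {"CVD": 0.0, "KA/VE": 0.2366, "TEC": 0.2757, "TEE": 0.0475}

def predicted_final_score(a1, age):
    """Fitted mean final score if the optimal stage-2 treatment had followed a1."""
    return INTERCEPT + AGE_SLOPE * (age - 65) + EFFECT[a1]

def wald_p(estimate, se):
    """Two-sided normal-approximation p-value, 2 * (1 - Phi(|estimate / se|))."""
    return math.erfc(abs(estimate / se) / math.sqrt(2.0))

# Rank stage-1 treatments by fitted mean final score at age 65
ranking = sorted(EFFECT, key=lambda t: predicted_final_score(t, 65), reverse=True)
```

Because the model has no age-by-treatment interaction, the TEC versus CVD gap is 0.2757 at every age. The ranking is TEC, KA/VE, TEE, CVD, as stated in Section 4.2.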

4.3. Estimation for the mean rewards of 12 regimes

Similar to standard Q-learning, the modified Q-learning does not require fully specified reward functions for all possible treatment strategies. For the above example, combining the results in Tables 3 and 4, we have estimated the mean rewards of the following four regimes: (TEC, CVD), (KA/VE, TEC), (TEE, TEC) and (CVD, TEC). However, we have not obtained estimates for the other regimes, e.g., (TEC, TEE); there are eight such regimes. This might be viewed as an inconvenience of Q-learning or the modified Q-learning. One might introduce extra models to estimate the mean rewards of the other eight regimes. However, we show below that this is unnecessary.

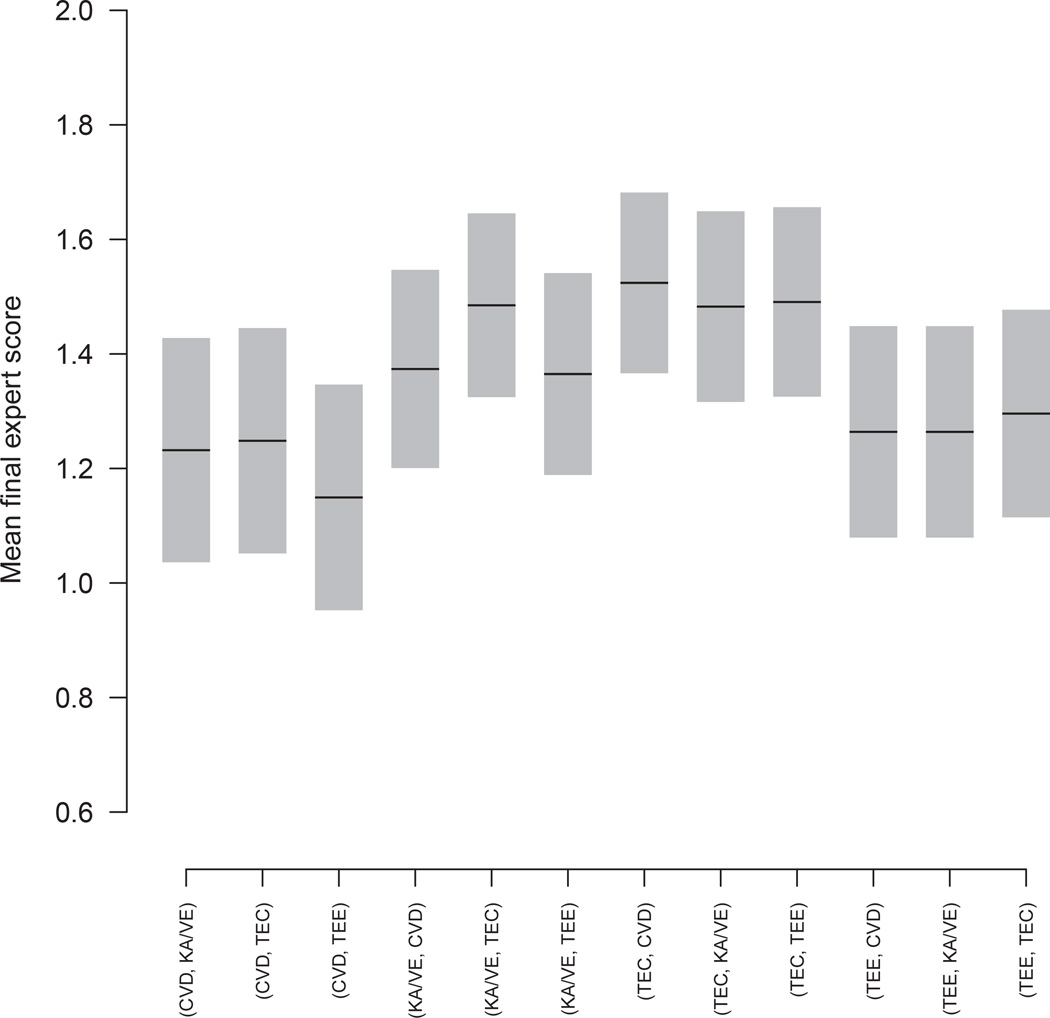

In Table 3, our purpose was to identify the optimal regimes, so we used the optimal stage-2 treatments as references and computed the potential loss Δ2 due to not taking the optimal stage-2 treatment. When our purpose is instead to compute the mean final rewards of other regimes, we replace the optimal stage-2 treatments in Table 3 by the stage-2 treatments of the regimes we wish to evaluate, and use these as the new references. We then compute the new potential loss (or gain), denoted $\Delta_2^*$, due to not taking the new reference treatment in stage 2, and finally compute the final reward for each regime using the new reference treatment in stage 2. These values are given in Table 5; for convenience, Table 3 is copied to the top of Table 5. The middle part of Table 5 gives the new values for estimating the mean final rewards of the regimes (CVD, KA/VE), (KA/VE, CVD), (TEC, TEE) and (TEE, CVD), which are referred to as target regimes. With these $\Delta_2^*$, we define a new final reward $R_1^* = Y_1 + R_2^*$, with $R_2^* = Y_2 + \Delta_2^*$, and then fit regression models for $R_1^*$ in the same way as for the original potential final outcome. The bottom part of Table 5 uses yet another set of stage-2 reference treatments to estimate the final mean rewards of the regimes (CVD, TEE), (KA/VE, TEE), (TEC, KA/VE) and (TEE, KA/VE). Throughout Table 5, the stage-2 reference treatments have $\Delta_2^* = 0$ (or Δ2 = 0). If the label of the stage-2 reference treatment is j (hence $\Delta_2^*(j) = 0$), then a different stage-2 treatment k has $\Delta_2^*(k) = \Delta_2(k) - \Delta_2(j)$. Both Tables 3 and 5 apply to patients who had an unfavorable stage-1 response. Their diagonal elements are therefore not given: by the trial design, only patients who achieved a favorable stage-1 response could receive the same treatment in stage 2 as in stage 1. The results for all 12 possible regimes are shown in Figure 2.
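Re-referencing is a subtraction within each row of Table 3: with reference j, each actual stage-2 treatment k gets Δ2(k) − Δ2(j). The sketch below applies this rule to two Table 3 rows and reproduces two rows of Table 5. The names `rereference` and `regime_r2` are hypothetical.

```python
# Two rows of Table 3 (Delta_2 relative to the optimal stage-2 treatment).
DELTA2 = {
    "CVD": {"KA/VE": 0.03, "TEC": 0.0, "TEE": 0.24},
    "TEC": {"CVD": 0.0, "KA/VE": 0.175, "TEE": 0.25},
}

def rereference(row, ref):
    """Delta_2*(k) = Delta_2(k) - Delta_2(ref): loss (or gain) relative to ref."""
    return {k: d - row[ref] for k, d in row.items()}

def regime_r2(a1, target_a2, a2, y2):
    """Stage-2 score a non-responder would have had under regime (a1, target_a2)."""
    return y2 + rereference(DELTA2[a1], target_a2)[a2]
```

For the target regime (CVD, KA/VE), this gives 0 for KA/VE, 0 − 0.03 for TEC and 0.24 − 0.03 for TEE, matching the corresponding row of Table 5. Because Table 3 contains only differences, a single stage-2 fit is enough to re-reference every regime.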

Table 5.

Estimates of Δ2 (first four rows, copied from Table 3 with the optimal stage-2 treatments as references) and of the re-referenced values Δ2(k) − Δ2(j) (remaining rows), where j is the reference stage-2 treatment and k is the actual stage-2 treatment

| Target regime | Stage-1 treatment | Reference stage-2 treatment | Actual stage-2: CVD | Actual stage-2: KA/VE | Actual stage-2: TEC | Actual stage-2: TEE |
|---|---|---|---|---|---|---|
| (CVD, TEC) | CVD | TEC | NA | 0.03 | 0 | 0.24 |
| (KA/VE, TEC) | KA/VE | TEC | 0.5 | NA | 0 | 0.55 |
| (TEC, CVD) | TEC | CVD | 0 | 0.175 | NA | 0.25 |
| (TEE, TEC) | TEE | TEC | 0.125 | 0.125 | 0 | NA |
| (CVD, KA/VE) | CVD | KA/VE | NA | 0 | 0 − 0.03 | 0.24 − 0.03 |
| (KA/VE, CVD) | KA/VE | CVD | 0 | NA | 0 − 0.5 | 0.55 − 0.5 |
| (TEC, TEE) | TEC | TEE | 0 − 0.25 | 0.175 − 0.25 | NA | 0 |
| (TEE, CVD) | TEE | CVD | 0 | 0.125 − 0.125 | 0 − 0.125 | NA |
| (CVD, TEE) | CVD | TEE | NA | 0.03 − 0.24 | 0 − 0.24 | 0 |
| (KA/VE, TEE) | KA/VE | TEE | 0.5 − 0.55 | NA | 0 − 0.55 | 0 |
| (TEC, KA/VE) | TEC | KA/VE | 0 − 0.175 | 0 | NA | 0.25 − 0.175 |
| (TEE, KA/VE) | TEE | KA/VE | 0.125 − 0.125 | 0 | 0 − 0.125 | NA |

Figure 2.

Means and 90% confidence intervals of the final outcome (expert score) for the 12 treatment strategies of the form (A, B): start with treatment A; if successful, stay with A; otherwise switch to treatment B. A and B each take one of the four values CVD, KA/VE, TEC and TEE, with A ≠ B.

For this particular example, standard Q-learning gives very similar results (not shown). An advantage of both standard and modified Q-learning is that they can identify optimal dynamic treatment regimes for each individual. This can be done by including interactions between individual level covariates and treatments. For example, if in the above analysis we include an interaction between patient age and stage-1 treatment, then we can identify the age-specific optimal treatment regimes. If we include an interaction between stage-1 score and stage-2 treatment in the model in Subsection 4.1, then such an identified optimal stage-2 treatment will depend on stage-1 score. These are all desirable explorations to maximize benefit for each patient. However, due to the limited sample size, this may not yield stable results and thus is not presented here.

Recall that, for patients who went off treatment during stage 1 due to toxicity or progressive disease, and thus did not receive any stage-2 treatment, we set R2 = 0. By doing this, all 150 patients were included in the above analyses. This practical modification of the original treatment plan is consistent with the idea of “viable treatment regimes” of Wang et al [17]. For example, if a patient received TEC in stage 1 and then went off treatment due to toxicity, progressive disease or other reasons without receiving any stage-2 treatment, the data from this patient are used in estimating the final reward of three regimes, namely (TEC, CVD), (TEC, KA/VE) and (TEC, TEE).

5. Discussion

We have demonstrated a robust modification of Q-learning for optimizing a multi-stage treatment sequence in settings where the payoff is a cumulative outcome and intermediate values at each stage are available. The modified Q-learning preserves more randomness in the observed outcomes, and thus is more robust against model mis-specification, has higher power to identify optimal treatments, and satisfies the consistency assumption. If the treating physician happens to adopt the treatment regime that is optimal for a given patient’s condition, the optimal outcome assumed by the modified Q-learning is precisely the observed outcome.

Optimization of a K-stage treatment regime is difficult, since conditioning on the treatment history can result in very complicated models. This is a common problem for all optimization algorithms for multi-stage treatments [6, 5]. We handle this problem by making a Markov assumption; this kind of assumption has also been used in other simulation studies [6]. In reality, this assumption may be violated, and the robustness of the results to such violations is unknown. If the sample size permits, it is best to also explore models without the Markov assumption, i.e., to include a large number of interaction terms that bring earlier-stage history into the reward models. In cancer research, practical values of K are about 2 to 5, corresponding to disease recurrences. In other areas of application where K may be much larger, the advantages of the modified Q-learning, i.e., satisfying the consistency assumption and being robust against model misspecification, may become more prominent.

An attractive feature of both standard and modified Q-learning is that they do not need to model treatment selection probabilities. Most other methods require this additional structure, including history-adjusted marginal structural models [35] and A-learning [6]. Modeling treatment selection requires very subtle arguments. It has been argued that small misspecifications in such selection models can accumulate over treatment stages and thus cause severe bias and convergence problems [36]. Avoiding such treatment selection models is therefore an advantage.

Acknowledgments

Contract/grant sponsor: U.S.A. National Institutes of Health grants U54 CA096300, U01 CA152958, 5P50 CA100632, R01 CA 83932 and 5P01 CA055164.

Appendix A

Regression Model for the Modified Q-Function

For each s = 1,⋯, K, let βs be a parameter vector of the main effects of on . Using j = 1 as the reference treatment group, for each j = 2,⋯, Js, where Js is the number of treatment options at stage s, let be a parameter vector of the interactive effects of action Ai,s = j and on . Let {ei, i = 1,⋯, n} be a vector of i.i.d. random errors. Denoting the indicator of an event E by I(E), the regression model for is

| (15) |

where the main effects are

and the multiplier of I(Ai,s = j) in the sum of the interaction terms is

Thus, each parameter indexed by j is the comparative effect of Ai,s = j versus action Ai,s = 1. Under the model (15), we define the cumulative causal effect of treatment j versus l at stage s, where j > l, as

| (16) |

which depends on the interaction parameters , and recent history , but not on the main effects βs.

Given estimates β̂s and of the parameters in (15), denote the resulting estimates of by , estimated causal effects by D̂i,s(j, l), and estimated cumulative future rewards by R̂i,s.

Appendix B

Asymptotic Properties

The linear models in (15) are easy to fit. However, due to the use of later stage estimates in models for earlier stages, the covariance formulas for the estimated regression parameters are not straightforward. Below we provide closed-form sandwich formula estimators for the covariance matrices. For simplicity, we assume the total number of treatment stages K = 2 in the following derivation, denote by Sk the matrix formed by , and assume treatment Ak is binary, for k = 1, 2. The results can be generalized to K > 2.

Rewrite the right-hand side of the regression model in (15) as

| (17) |

where Sk1 ∈ ℝpk and Sk2 ∈ ℝqk are sub-vectors of Sk. We allow variable selection, so the model in (15) may not include the full set of variables in . Denote , and θk0 its true value, where βk ∈ ℝpk is the main effect of the current state variables on the outcome, and ψk ∈ ℝqk are the interactions between current state variables and treatment. Note that , and is the potential cumulative outcome given S1,i, A1,i and . The two-stage backward induction proceeds as follows. Starting with the second stage, we have

where is the stage-2 design matrix and Y⃗2 = (Y21, …, Y2n)T. Then estimate the second stage individual optimal outcome by R̂2 = (R̂21,…, R̂2n)T, where

| (18) |

With this optimized outcome at stage 2, the potential cumulative outcome, given S1,i, A1,i and , is , where . After this, we estimate the first stage parameters by

where .

The asymptotic properties of these parameter estimates are presented below, under the following technical conditions.

- A1. The true value for θ2, denoted by , minimizes

and the true value for θ1, denoted by , minimizes

where 𝒫0 = limn ℙn denotes the true probability measure. We assume that the limits exist and are finite in the above expressions.

- A2. The true value (θ10, θ20) is an interior point of a bounded, open, convex subset Θ ⊂ ℝm, where m = ∑k(pk + qk). For k = 1, 2, with probability one, 𝒬k(Sk, Ak; θk) is at least twice continuously differentiable with respect to θk, and the Hessian matrix, exists and is positive-definite.

- A3. With probability one, for k = 1, 2.

Condition A1 says that θ10 and θ20 are the true values that minimize the loss function at each step. If 𝒬k takes the form of the linear model (17), condition A2 is equivalent to non-singularity of the design matrix for k = 1, 2. Condition A3 rules out non-regularity. In case of , it has been shown that multi-stage estimators, including standard Q-learning, may be biased and the above asymptotic properties may not hold, so that special treatment is required [13, 37]. Here, we do not consider such complications.

Denote the estimating equation for θ2 as ℙnΨ2(θ2; S2, A2) = 0, where

Theorem 1

Under conditions A1–A3,

where V2(θ2) = D2(θ2)−1B2(θ2){D2(θ2)−1}T with D2(θ2) = −E[∂Ψ2(θ2; S2, A2)/(∂θ2)] and B2(θ2) = E[Ψ2(θ2; S2, A2)Ψ2(θ2; S2, A2)T].

Proof

It is a direct application of the sandwich formula [33] and is omitted.
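For a linear stage-2 model fit by least squares, Theorem 1 reduces to White's heteroskedasticity-consistent (HC0) covariance [33]. The sketch below assumes the estimating function Ψ2 = S2(Y2 − S2ᵀθ2). It computes D2 and B2 by sample averages and returns D2⁻¹B2D2⁻ᵀ/n as the covariance of θ̂2 itself. It does not implement the Theorem 2 adjustment for the dependence of stage 1 on ψ̂2. `ols_sandwich` is a hypothetical name.

```python
import numpy as np

def ols_sandwich(X, y):
    """Least-squares fit with sandwich covariance D^{-1} B D^{-T} / n.

    Estimating function Psi_i(theta) = X_i (y_i - X_i' theta), so
    D = -E[dPsi/dtheta] = E[X X'] and B = E[Psi Psi'].
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = X.shape[0]
    theta = np.linalg.solve(X.T @ X, X.T @ y)
    psi = X * (y - X @ theta)[:, None]       # n x p matrix of Psi_i(theta_hat)
    D = X.T @ X / n
    B = psi.T @ psi / n
    D_inv = np.linalg.inv(D)
    cov = D_inv @ B @ D_inv.T / n            # covariance of theta_hat itself
    return theta, cov
```

Algebraically this equals (XᵀX)⁻¹XᵀΩ̂X(XᵀX)⁻¹ with Ω̂ the diagonal matrix of squared residuals, so it stays valid when the error variance depends on the covariates.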

Since the estimation of θ1 depends on ψ̂2, let Ψ2,2 denote the sub-equation of Ψ2 and D2,2 denote the sub-matrix of D2, both corresponding to ψ2 at β2 = β20. Then, ψ̂2 − ψ20 ≈ D2,2(ψ20)−1ℙn[Ψ2,2(ψ20; S2, A2)], where β20 and ψ20 are true values of β2 and ψ2.

The estimating equation for θ1 is

Let

Theorem 2

Under conditions A1–A3,

where V1(θ10, θ20) can be estimated by

with D1(θ1) = −E[∂Ψ1(θ1; S1, A1)/(∂θ1)].

Proof

Again, it is a direct application of the sandwich formula [33] and is omitted.

Appendix C

Simulation Model

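In general, suppose we have random variables X1,⋯, Xp and Y, and we would like to regress Y on X1,⋯, Xp with the model $Y = \beta_1 X_1 + \cdots + \beta_p X_p + e$. If X1,⋯, Xp are orthogonal to each other, i.e., $E(X_j X_k) = 0$ for $j \neq k$, then $\beta_j = E(X_j Y)/E(X_j^2)$ for j = 1,⋯, p.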

In Section 3, we have Z1 ~ Normal(0, 1), A1 ~ Bernoulli(0.5), V ~ Normal(0, 2²), Y1 = Z1(A1 − 0.5) + V + ε1 with ε1 ~ Normal(0, 1), A2 ~ Bernoulli(0.5), and Y2 = −2Z1(A1 − 0.5) + (A1 − 0.5)(A2 − 0.5) − V + ε2 with ε2 ~ Normal(0, 1). We fit the following regression model.

To find the true values of the β’s in the above model, we consider regressing Y2 on the following orthogonal set of random variables {1, Z1, (A1 − 0.5), Y1, (A2 − 0.5), (A2 − 0.5)Z1, (A2 − 0.5)(A1 − 0.5), (A2 − 0.5)Y1}. That is to say, we consider the model

By using the formula mentioned at the beginning of this Appendix, we obtain

For the above coefficients, the only one that is not straightforward is

Note e2 = ε2 − 2Z1(A1 − 0.5) − V + 0.857Y1. It can be verified that e2 is orthogonal to all explanatory variables on the right hand side of the above equation, justifying its validity as a residual term.
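The orthogonal-regressor formula βj = E(XjY)/E(Xj²) can be checked by Monte Carlo. The sketch below simulates the Section 3 model and computes each coefficient from sample moments for the orthogonal set above. It also runs an ordinary least-squares fit for comparison. Analytically, the Y1 coefficient is Cov(Y2, Y1)/Var(Y1) = (−2·0.25 − 4)/(0.25 + 4 + 1) = −4.5/5.25 ≈ −0.857, and the (A2 − 0.5)(A1 − 0.5) coefficient is 1. The function name and the sample size are our choices.

```python
import numpy as np

def appendix_c_coefficients(n=400_000, seed=0):
    """Monte Carlo check of the population coefficients derived in Appendix C."""
    rng = np.random.default_rng(seed)
    z1 = rng.normal(0.0, 1.0, n)
    a1 = rng.binomial(1, 0.5, n)
    v = rng.normal(0.0, 2.0, n)                  # V ~ Normal(0, 2^2)
    y1 = z1 * (a1 - 0.5) + v + rng.normal(0.0, 1.0, n)
    a2 = rng.binomial(1, 0.5, n)
    y2 = (-2.0 * z1 * (a1 - 0.5) + (a1 - 0.5) * (a2 - 0.5) - v
          + rng.normal(0.0, 1.0, n))
    c1, c2 = a1 - 0.5, a2 - 0.5
    # Orthogonal set {1, Z1, A1-0.5, Y1, A2-0.5, (A2-0.5)Z1,
    #                 (A2-0.5)(A1-0.5), (A2-0.5)Y1}
    X = np.column_stack([np.ones(n), z1, c1, y1, c2, c2 * z1, c2 * c1, c2 * y1])
    # beta_j = E(X_j Y2) / E(X_j^2), estimated by sample moments
    beta_orth = (X * y2[:, None]).mean(axis=0) / (X ** 2).mean(axis=0)
    beta_ols = np.linalg.lstsq(X, y2, rcond=None)[0]
    return beta_orth, beta_ols
```

The two estimates agree up to Monte Carlo error because the regressors are orthogonal in the population, even though e2 still contains Z1(A1 − 0.5) and V.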

Appendix D

An Illustration for > 2 Stages and > 2 Treatment Options in Each Stage

Section 2 and Appendix A provide a general description for any number of stages (K) and any number of treatment options in each stage (Js), s = 1,⋯, K. As suggested by a referee, we illustrate this with an example for ease of understanding. We skip the data generation and pick a particular regression model for each stage. Suppose we observe (Zs, As, Ys) for each stage s = 1, 2, 3, with As = 1, 2 or 3 indicating three different treatment options at each stage s. Here As = 1 and As′ = 1 do not necessarily denote the same medical treatment for stages s ≠ s′. The modified Q-learning proceeds as follows.

Let . Fit a linear regression model as below to find out the optimal treatment for stage 3 conditional on current covariates Z3, previous treatment A2 and outcome Y2.

Then the conditional optimal treatment is the one that maximizes the mean reward in stage 3, which is mathematically described as,

| (19) |

Then we estimate the potential optimal reward R3 in stage 3 each individual would have achieved had he/she received his/her conditional optimal treatment as indicated above. If an individual actually received his/her conditional optimal treatment, the estimated reward R̂3 is set to be the observed Y3 by our modified Q-learning. Otherwise, R̂3 is set to be Y3 plus the difference between the rewards for the optimal and actual treatments.
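The stage-3 update just described, R̂3 = Y3 + maxj Q̂3(j) − Q̂3(A3), works for any number of treatment options once the fitted mean rewards are arranged as a subject-by-treatment matrix. The following is a minimal sketch under that layout assumption, with hypothetical names. It leaves the observed outcome untouched for subjects who already received their conditional optimal treatment.

```python
import numpy as np

def modified_pseudo_outcome(y, q_hat, a):
    """R_hat = Y + max_j Q_hat(j) - Q_hat(A), computed subject by subject.

    y     : observed stage outcomes, shape (n,)
    q_hat : fitted mean rewards, shape (n, J); column j - 1 is treatment j
    a     : received treatment labels in 1..J, shape (n,)
    """
    q_hat = np.asarray(q_hat, dtype=float)
    a = np.asarray(a, dtype=int)
    q_received = q_hat[np.arange(len(a)), a - 1]
    return np.asarray(y, dtype=float) + q_hat.max(axis=1) - q_received

def optimal_treatment(q_hat):
    """Conditional optimal treatment label (1..J) for each subject."""
    return np.asarray(q_hat).argmax(axis=1) + 1
```

The same two functions serve stages 2 and 1 when y is replaced by the corresponding cumulative outcome and q_hat by the fitted values of that stage's model.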

By doing the above, the optimal stage-3 treatments conditional on historical information are identified. Here the Markov assumption is used in the above linear regression model, so that it depends on A2 and Y2 but not further on A1 or Y1. Without such an assumption, the above linear regression would have too many predictors and would require an extremely large sample size for a reasonable fit. This Markov assumption is made for practical rather than theoretical reasons.

Now consider optimizing treatments for stage 2. Define . Similarly as above, first fit a linear model as below,

Then find out the optimal stage-2 treatment conditional on current covariates Z2, previous treatment A1 and outcome Y1, as below.

| (20) |

The potential total reward from stage 2 onwards (i.e., sum of rewards from stages 2 and 3), R2, had a subject received his optimal stage 2 treatment and his corresponding optimal stage 3 treatment, can be estimated as below,

For stage 1, similarly as above, define . Then fit a linear regression model

This gives estimates of the optimal stage-1 treatments conditional on Z1, as below,

| (21) |

Under this optimal stage-1 treatment, and corresponding optimal stages 2 and 3 treatments, the total optimal reward is R1, which can be estimated by,

The above procedures derive the optimal treatments by backward induction. Once the estimation results are obtained, the optimal treatment decision rules are applied in practice as follows. First use (21) to find the optimal treatment conditional on covariate Z1. Suppose this gives A1 = 1. After the patient receives treatment A1 = 1, the stage-1 outcome Y1 is observed. At the beginning of stage 2, covariate Z2 is observed. At this point, the optimal stage-2 treatment can be determined by (20) based on Z2, A1 = 1 and the observed Y1. Suppose the optimal treatment conditional on these variables is A2 = 3. After the patient receives treatment A2 = 3, the stage-2 outcome Y2 is observed. At the beginning of stage 3, covariate Z3 is observed, and the optimal stage-3 treatment is determined by (19) based on Z3, A2 = 3 and the observed Y2. Finally, the stage-3 outcome Y3 is observed. This optimal treatment identification method is intended to maximize Y = Y1 + Y2 + Y3.
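The forward use of the fitted rules can be sketched as a loop in which each decision uses only information available at that moment. In the sketch below, `q_funcs` are hypothetical callables standing in for the fitted models behind (21), (20) and (19), and `observe_outcome` stands in for the clinic, which reveals each stage's outcome only after the treatment is given. Consistent with the Markov assumption, each stage sees only the previous stage's treatment and outcome.

```python
def apply_regime(q_funcs, z_obs, observe_outcome, options=(1, 2, 3)):
    """Apply fitted stage-wise rules forward in time for one patient.

    q_funcs[s](z, a_prev, y_prev, a): fitted mean reward from stage s onward
        (hypothetical callables standing in for the fitted stage models).
    z_obs[s]: covariate available at the start of stage s.
    observe_outcome(s, a): stage-s outcome, known only after a is given.
    """
    a_prev, y_prev = None, None      # no previous stage before stage 1
    treatments, outcomes = [], []
    for s, q in enumerate(q_funcs):
        # choose the option with the largest fitted reward given current information
        a = max(options, key=lambda opt: q(z_obs[s], a_prev, y_prev, opt))
        y = observe_outcome(s, a)
        treatments.append(a)
        outcomes.append(y)
        a_prev, y_prev = a, y        # Markov: next stage sees only this stage
    return treatments, sum(outcomes)
```

With toy rules that favor treatment 1 at stage 1, treatment 3 at stage 2 when Y1 < 0, and repeating A2 at stage 3, a patient with Y1 < 0 follows the sequence (1, 3, 3), as in the walk-through above.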

References

- 1.Robins J. A new approach to causal inference in mortality studies with a sustained exposure period: application to control of the healthy worker survivor effect. Mathematical Modelling. 1986;7:9–12. [Google Scholar]

- 2.Robins J. The control of confounding by intermediate variables. Statistics in Medicine. 1989;8:679–701. doi: 10.1002/sim.4780080608. [DOI] [PubMed] [Google Scholar]

- 3.Robins JM. Analytic methods for estimating hiv treatment and cofactor effects. In: Ostrow D, Kessler R, editors. Methodological Issues of AIDS Mental Health Research. New York: Plenum Publishing; 1993. pp. 213–290. [Google Scholar]

- 4.Robins JM. Causal inference from complex longitudinal data. In: Berkane M, editor. Latent Variable Modeling and Applications to Causality. New York: Springer-Verlag; 1997. pp. 69–117. [Google Scholar]

- 5.Robins JM. Optimal structural nested models for optimal sequential decisions. In: Lin D, Heagerty P, editors. Proceedings of the Second Seattle Symposium on Biostatistics. New York: Springer; 2004. pp. 189–326. [Google Scholar]

- 6.Murphy SA. Optimal dynamic treatment regimes (with discussion) Journal of the Royal Statistical Society, Series B. 2003;65:331–366. [Google Scholar]

- 7.Hernán M, Brumback B, Robins J. Marginal structural models to estimate the causal effect of zidovudine on the survival of hiv-positive men. Epidemiology. 2000;11:561–570. doi: 10.1097/00001648-200009000-00012. [DOI] [PubMed] [Google Scholar]

- 8.Murphy SA, van der Laan MJ, Robins JM Group CPPR. Marginal mean models for dynamic regimes. Journal of the American Statistical Association. 2001;96:1410–1423. doi: 10.1198/016214501753382327. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Lunceford JK, Davidian M, Tsiatis AA. Estimation of survival distributions of treatment policies in two-stage randomization designs in clinical trials. Biometrics. 2002;58:48–57. doi: 10.1111/j.0006-341x.2002.00048.x. [DOI] [PubMed] [Google Scholar]

- 10.Bang H, Robins J. Doubly robust estimation in missing data and causal inference models. Biometrics. 2005;61:962–973. doi: 10.1111/j.1541-0420.2005.00377.x. [DOI] [PubMed] [Google Scholar]

- 11.Petersen M, Sinisi S, van der Laan M. Estimation of direct causal effects. Epidemiology. 2006;17.3:276–284. doi: 10.1097/01.ede.0000208475.99429.2d. [DOI] [PubMed] [Google Scholar]

- 12.Robins J, Orellana L, Rotnitzky A. Estimation and extrapolation of optimal treatment and testing strategies. Statistics in Medicine. 2008;27:4678–4721. doi: 10.1002/sim.3301. [DOI] [PubMed] [Google Scholar]

- 13.Moodie EM, Richardson TS. Estimating optimal dyanamic regimes: correcting bias under the null. Scandinavian Journal of Statistics. 2009;37:126–146. doi: 10.1111/j.1467-9469.2009.00661.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Zhao Y, Zeng D, Socinski M, Kosorok M. Reinforcement learning strategies for clinical trials in non-small cell lung cancer. Biometrics. 2011;67:1422–1433. doi: 10.1111/j.1541-0420.2011.01572.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Goldberg Y, Kosorok M. Q-learning with censored data. Annals of Statistics. 2012;40:529–560. doi: 10.1214/12-AOS968. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zhao Y, Zeng D, Rush A, Kosorok M. Estimating individualized treatment rules using outcome weighted learning. Journal of the American Statistical Association. 2012;107:1106–1118. doi: 10.1080/01621459.2012.695674. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Wang L, Rotnitzky A, Lin X, Millikan RE, Thall PF. Evaluation of viable dynamic treatment regimes in a sequentially randomized trial of advanced prostate cancer. Journal of American Statistical Association. 2012;107:493–508. doi: 10.1080/01621459.2011.641416. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wahed A, Thall P. Evaluating joint effects of induction-salvage treatment regimes on overall survival in acute leukemia. Journal of Royal Statistical Society, Series C. 2013;62:67–83. doi: 10.1111/j.1467-9876.2012.01048.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Thall P, Millikan R, Sung HG. Evaluating multiple treatment courses in clinical trials. Statistics in Medicine. 2000;19:1011–1028. doi: 10.1002/(sici)1097-0258(20000430)19:8<1011::aid-sim414>3.0.co;2-m. [DOI] [PubMed] [Google Scholar]

- 20.Lavori PW. A design for testing clinical strategies: Biased adaptive within-subject randomization. Journal of the Royal Statistical Society, Series A. 2000;163:29–38. [Google Scholar]

- 21.Lavori PW, Dawson R. Dynamic treatment regimes: Practical design considerations. Statistics in Medicine. 2001;20:1487–1498. doi: 10.1191/1740774s04cn002oa. [DOI] [PubMed] [Google Scholar]

- 22.Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (most) and the sequential multiple assignment randomized trial (smart): New methods for more potent e-health interventions. American Journal of Preventive Medicine. 2007;32:S112–S118. doi: 10.1016/j.amepre.2007.01.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Watkins CJCH, Dayan P. Q-learning. Machine Learning. 1992;8:279–292. [Google Scholar]

- 24.Murphy SA. A generalization error for q-learning. Journal of machine learning research. 2005;6:1073–1097. [PMC free article] [PubMed] [Google Scholar]

- 25.Moodie EEM, Richardson TS, Stephens DA. Demystifying optimal dynamic treatment regimes. Biometrics. 2007;63:447–455. doi: 10.1111/j.1541-0420.2006.00686.x. [DOI] [PubMed] [Google Scholar]

- 26. Laber E, Qian M, Lizotte D, Murphy S. Statistical inference in dynamic treatment regimes. arXiv preprint arXiv:1006.5831. 2010.

- 27. Song R, Wang W, Zeng D, Kosorok MR. Penalized Q-learning for dynamic treatment regimes. arXiv preprint arXiv:1108.5338. 2011. doi: 10.5705/ss.2012.364.

- 28. Nahum-Shani I, Qian M, Almirall D, Pelham W, Gnagy B, Fabiano G, Waxmonsky J, Yu J, Murphy S. Q-learning: a data analysis method for developing adaptive interventions. Psychological Methods. 2012;17:478–494. doi: 10.1037/a0029373.

- 29. Zhang B, Tsiatis AA, Laber EB, Davidian M. Robust estimation of optimal dynamic treatment regimes for sequential treatment decisions. Biometrika. 2013;100:681–691. doi: 10.1093/biomet/ast014.

- 30. Schulte PJ, Tsiatis AA, Laber EB, Davidian M. Q- and A-learning methods for estimating optimal dynamic treatment regimes. Statistical Science. 2014. doi: 10.1214/13-STS450. To appear.

- 31. Bellman R. Dynamic Programming. Princeton, NJ: Princeton University Press; 1957.

- 32. Robins J. Robust estimation in sequential ignorable missing data and causal inference models. Proc. Bayesian Statist. Sci. Sec. Am. Statist. Ass. 2000:6–10.

- 33. White H. A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica. 1980;48:817–838.

- 34. Henderson R, Ansell P, Alshibani D. Regret-regression for optimal dynamic treatment regimes. Biometrics. 2010;66:1192–1201. doi: 10.1111/j.1541-0420.2009.01368.x.

- 35. Petersen ML, Deeks SG, Martin JN, van der Laan MJ. History-adjusted marginal structural models to estimate time-varying effect modification. U.C. Berkeley Division of Biostatistics Working Paper Series. 2005. Working Paper 199.

- 36. Rosthøj S, Fullwood C, Henderson R. Estimation of optimal dynamic anticoagulation regimes from observational data: a regret-based approach. Statistics in Medicine. 2006;25:4197–4215. doi: 10.1002/sim.2694.

- 37. Chakraborty B, Murphy S, Strecher V. Inference for nonregular parameters in optimal dynamic treatment regimes. Statistical Methods in Medical Research. 2010;19:317–343. doi: 10.1177/0962280209105013.