Abstract

Both the occurrence and intensity of facial expressions are critical to what the face reveals. While much progress has been made towards the automatic detection of facial expression occurrence, controversy exists about how to estimate expression intensity. The most straightforward approach is to train multiclass or regression models using intensity ground truth. However, collecting intensity ground truth is even more time-consuming and expensive than collecting binary ground truth. As a shortcut, some researchers have proposed using the decision values of binary-trained maximum margin classifiers as a proxy for expression intensity. We provide empirical evidence that this heuristic is flawed in practice as well as in theory. Unfortunately, there are no shortcuts when it comes to estimating smile intensity: researchers must take the time to collect and train on intensity ground truth. However, if they do so, high reliability with expert human coders can be achieved. Intensity-trained multiclass and regression models outperformed binary-trained classifier decision values on smile intensity estimation across multiple databases and methods for feature extraction and dimensionality reduction. Multiclass models even outperformed binary-trained classifiers on smile occurrence detection.

Keywords: Nonverbal behavior, Facial expression, Facial Action Coding System, Smile intensity, Support vector machines

1. Introduction

The face is an important avenue of communication capable of regulating social interaction and providing the careful observer with a wealth of information. Facial expression analysis has informed psychological studies of emotion (Darwin, 1872, Ekman et al., 1980, Zeng et al., 2009), intention (Fridlund, 1992, Keltner, 1996), physical pain (Littlewort et al., 2009, Prkachin and Solomon, 2008), and psychopathology (Cohn et al., 2009, Girard et al., 2013), among other topics. It is also central to computer science research on human-computer interaction (Cowie et al., 2001, Pantic and Rothkrantz, 2003) and computer animation (Pandzic and Forchheimer, 2002).

There are two general approaches to classifying facial expression (Cohn and Ekman, 2005). Message-based approaches seek to identify the meaning of each expression; this often takes the form of classifying expressions into one or more basic emotions such as happiness and anger (Ekman, 1999, Izard, 1971). This approach involves a great deal of interpretation and fails to account for the fact that facial expressions serve a communicative function (Fridlund, 1992), can be controlled or dissembled (Ekman, 2003), and often depend on context for interpretation (Barrett et al., 2011). Sign-based approaches, on the other hand, describe changes in the face during an expression rather than attempting to capture its meaning. By separating description from interpretation, sign-based approaches achieve more objectivity and comprehensiveness.

The most commonly used sign-based approach for describing facial expression is the Facial Action Coding System (FACS) (Ekman et al., 2002), which decomposes facial expressions into component parts called action units. Action units (AU) are anatomically based and correspond to the contraction of specific facial muscles. AU may occur alone or in combination with others to form complex facial expressions. They may also vary in intensity (i.e., magnitude of muscle contraction). The FACS manual provides coders with detailed descriptions of the shape and appearance changes necessary to identify each AU and its intensity.

Much research using FACS has focused on the occurrence and AU composition of different expressions (Ekman and Rosenberg, 2005). For example, smiles that recruit the orbicularis oculi muscle (i.e., AU 6) are more likely to occur during pleasant circumstances (Ekman et al., 1990, Frank et al., 1993) and smiles that recruit the buccinator muscle (i.e., AU 14) are more likely to occur during active depression (Reed et al., 2007, Girard et al., 2013).

A promising subset of research has begun to focus on what can be learned about and from the intensity of expressions. This work has shown that expression intensity is linked to both the intensity of emotional experience and the sociality of the context (Ekman et al., 1980, Fridlund, 1991, Hess et al., 1995). For example, Hess et al. (1995) found that participants displayed the most facial expression intensity when experiencing strong emotions in the company of friends. Other studies have used the intensity of facial expressions (e.g., in yearbook photos) to predict a number of social and health outcomes years later. For example, smile intensity in a posed photograph has been linked to later life satisfaction, marital status (i.e., likelihood of divorce), and even years lived (Abel and Kruger, 2010, Harker and Keltner, 2001, Hertenstein et al., 2009, Oveis et al., 2009, Seder and Oishi, 2012). It is likely that research has only begun to scratch the surface of what might be learned from expressions’ intensities.

Intensity estimation is also critical to the modeling of an expression’s temporal dynamics (i.e., changes in intensity over time). Temporal dynamics is a relatively new area of study, but has already been linked to expression interpretation, person perception, and psychopathology. For example, the speed with which a smile onsets and offsets has been linked to interpretations of the expression’s meaning and authenticity (Ambadar et al., 2009), as well as to ratings of the smiling person’s attractiveness and personality (Krumhuber et al., 2007). Expression dynamics have also been found to be behavioral markers of depression, schizophrenia, and obsessive-compulsive disorder (Mergl et al., 2003, 2005, Juckel et al., 2008).

Efforts in automatic facial expression analysis have focused primarily on the detection of AU occurrence (Zeng et al., 2009), rather than the estimation of AU intensity. In shape-based approaches to automatic facial expression analysis, intensity dynamics can be measured directly from the displacement of facial landmarks (Valstar and Pantic, 2006, 2012). Shape-based approaches, however, are especially vulnerable to registration error (Chew et al., 2012), which is common in naturalistic settings. Appearance-based approaches are more robust to registration error, but require additional steps to estimate intensity. We address the question of how to estimate intensity from appearance features.

In an early and influential work on this topic, Bartlett et al. (2003) applied standard binary expression detection techniques to estimate expressions’ peak intensity. This and subsequent work (Bartlett et al., 2006a,b) encouraged the use of the margins of binary-trained maximum margin classifiers as proxies for facial expression intensity. The assumption underlying this practice is that the classifier’s decision value will be positively correlated with the expression’s intensity. However, this assumption is theoretically problematic because nothing in the formulation of a maximum margin classifier guarantees such a correlation (Yang et al., 2009). Indeed, many factors other than intensity may affect a data point’s decision value, such as its typicality in the training set, the presence of other facial actions, and the recording conditions (e.g., illumination, pose, noise). The decision-value-as-intensity heuristic is purely an assumption about the data. The current study tests this assumption empirically, and compares it with the more labor-intensive but theoretically informed approaches of training multiclass and regression models using intensity ground truth.

1.1. Previous Work

Since Bartlett et al. (2003), many studies have used classifier decision values to estimate expression intensity (Bartlett et al., 2006a,b, Littlewort et al., 2006, Reilly et al., 2006, Koelstra and Pantic, 2008, Whitehill et al., 2009, Yang et al., 2009, Savran et al., 2011, Shimada et al., 2011, 2013). However, only a few of them have quantitatively evaluated their performance by comparing their estimations to manual (i.e., “ground truth”) coding. Several studies (Bartlett et al., 2006b, Whitehill et al., 2009, Savran et al., 2011) found that decision value and expression intensity were positively correlated during posed expressions. However, such correlations have typically been lower during spontaneous expressions. In a highly relevant study, Whitehill et al. (2009) focused on the estimation of spontaneous smile intensity and found a high correlation between decision value and smile intensity. However, this finding was based on only five short video clips, and it is unclear how the ground truth intensity coding was obtained.

Recent studies have also used methods other than the decision-value-as-intensity heuristic for intensity estimation, such as regression (Ka Keung and Yangsheng, 2003, Savran et al., 2011, Dhall and Goecke, 2012, Kaltwang et al., 2012, Jeni et al., 2013b) and multiclass classifiers (Mahoor et al., 2009, Messinger et al., 2009, Mavadati et al., 2013). These studies have found that the predictions of support vector regression models and multiclass classifiers were highly correlated with expression intensity during both posed and spontaneous expressions. Finally, several studies (Cohn and Schmidt, 2004, Deniz et al., 2008, Messinger et al., 2008) used extracted features to estimate expression intensity directly. For example, Messinger et al. (2008) found that mouth radius was highly correlated with spontaneous smile intensity in five video clips.

Very few studies have compared different estimation methods using the same data and performance evaluation methods. Savran et al. (2011) found that support vector regression outperformed the decision values of binary support vector machine classifiers on the intensity estimation of posed expressions. Ka Keung and Yangsheng (2003) found that support vector regression outperformed cascading neural networks on the intensity estimation of posed expressions, and Dhall and Goecke (2012) found that Gaussian process regression outperformed both kernel partial least squares and support vector regression on the intensity estimation of posed expressions. Yang et al. (2009) also compared decision values with an intensity-trained model, but used their outputs to rank images by intensity rather than to estimate intensity.

Much of the previous work has been limited in three ways. First, many studies (Deniz et al., 2008, Dhall and Goecke, 2012, Ka Keung and Yangsheng, 2003, Yang et al., 2009) adopted a message-based approach, which is problematic for the reasons described earlier. Second, the majority of this work (Deniz et al., 2008, Dhall and Goecke, 2012, Ka Keung and Yangsheng, 2003, Savran et al., 2011, Yang et al., 2009) focused on posed expressions, which limits the external validity and generalizability of their findings. Third, most of these studies were limited in terms of the ground truth they compared their estimations to. Some studies (Bartlett et al., 2003, 2006a,b) only coded expressions’ peak intensities, while others (Mahoor et al., 2009, Messinger et al., 2008, 2009, Whitehill et al., 2009) obtained frame-level ground truth, but only for a handful of subjects. Without a large amount of expert-coded, frame-level ground truth, it is impossible to truly gauge the success of an automatic intensity estimation system.

1.2. The Current Study

The current study challenges the use of binary classifier decision values for the estimation of expression intensity. Primarily, we hypothesize that intensity-trained (i.e., multiclass and regression) models will outperform binary-trained (i.e., two-class) models for expression intensity estimation. Secondarily, we hypothesize that intensity-trained models will also outperform binary-trained models on binary expression detection, though by a smaller (but still significant) margin.

We compared these approaches using multiple methods for feature extraction and dimensionality reduction, using the same data and the same performance evaluation methods. We also improve upon previous work by using a sign-based approach, two large datasets of spontaneous expressions, and expert-coded ground truth. Smiles were chosen for this in-depth analysis because they are the most commonly occurring facial expression (Bavelas and Chovil, 1997), are implicated in affective displays and social signaling (Hess et al., 2000, 2005), and appear in much of the previous work on both automatic intensity estimation and the psychological exploration of facial expression intensity.

2. Methods

2.1. Participants and Data

In order to increase the sample size and explore the generalizability of the findings, data were drawn from two separate datasets. In both datasets, participant facial behavior was recorded and FACS coded during a non-scripted, spontaneous dyadic interaction. The datasets differ in terms of the context of the interaction, the demographic makeup of the sample, constraints placed upon data collection (e.g., illumination, frontality, and head motion), base rates of smiling, tracking methods, and inter-observer reliability of manual FACS coding. Because of how its segments were selected, the BP4D database also had more frequent and intense smiles.

2.1.1. BP4D Database

FACS coded video was available for 30 adults (50% female, 50% white, mean age 20.7 years) from the Binghamton-Pittsburgh 4D (BP4D) spontaneous facial expression database (Zhang et al., 2014). Participants were filmed with both a 3D dynamic face capturing system and a 2D frontal camera (520×720 pixel resolution) while engaging in eight tasks designed to elicit emotions such as anxiety, surprise, happiness, embarrassment, fear, pain, anger, and disgust. Facial behavior from the 20-second segment with the most frequent and intense facial expressions from each task was coded from the 2D video. The BP4D database is publicly available.

2.1.2. Spectrum Database

FACS coded video was available for 33 adults (67.6% female, 88.2% white, mean age 41.6 years) from the Spectrum database (Cohn et al., 2009). The participants suffered from major depressive disorder (American Psychiatric Association, 1994) and were recorded during clinical interviews to assess symptom severity over the course of treatment (Hamilton, 1967). A total of 69 interviews were recorded using four hardware-synchronized analogue cameras. Video from a camera roughly 15 degrees to the participant’s right was digitized into 640×480 pixel arrays for analysis. Facial behavior during the first three interview questions (about depressed mood, feelings of guilt, and suicidal ideation) was coded; these segments were an average of 100 seconds long. The Spectrum database is not publicly available due to confidentiality restrictions.

2.2. Manual Expression Annotation

2.2.1. AU Occurrence

For both the BP4D and Spectrum databases, participant facial behavior was manually FACS coded from video by certified coders. Inter-observer agreement (the degree to which coders saw the same AUs in each frame) was quantified using F1 score (van Rijsbergen, 1979). For the BP4D database, 34 commonly occurring AU were coded from onset to offset; inter-observer agreement for AU 12 occurrence was F1=0.96. For the Spectrum database, 17 commonly occurring AU were coded from onset to offset, with expression peaks also coded; inter-observer agreement for AU 12 occurrence was F1=0.71. For both datasets, onsets and offsets were converted to frame-level occurrence (i.e., present or absent) codes for AU 12.
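As a minimal sketch of the two steps just described, the Python below converts onset/offset annotations into frame-level AU 12 codes and scores agreement between two coders with F1. It assumes that onsets and offsets are inclusive frame indices; the function names (`events_to_frames`, `f1_agreement`) are hypothetical. Because F1 is symmetric in false positives and false negatives, either coder can serve as the reference.

```python
def events_to_frames(events, n_frames):
    """Convert (onset, offset) pairs into per-frame codes: 1 = AU 12 present, 0 = absent.
    Assumption: onset and offset are inclusive frame indices."""
    codes = [0] * n_frames
    for onset, offset in events:
        for frame in range(onset, offset + 1):
            codes[frame] = 1
    return codes


def f1_agreement(reference, other):
    """Frame-level F1 = 2TP / (2TP + FP + FN) between two sets of binary codes."""
    tp = sum(1 for r, o in zip(reference, other) if r == 1 and o == 1)
    fp = sum(1 for r, o in zip(reference, other) if r == 0 and o == 1)
    fn = sum(1 for r, o in zip(reference, other) if r == 1 and o == 0)
    denom = 2 * tp + fp + fn
    # Assumption: if neither coder marks any frame, treat agreement as perfect
    return 2 * tp / denom if denom else 1.0


coder_a = events_to_frames([(2, 5)], 10)
coder_b = events_to_frames([(3, 6)], 10)
```

Here the two coders each mark a four-frame event but disagree by one frame at each boundary, giving 3 true positives, 1 false positive, and 1 false negative, so F1 = 6/8 = 0.75.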

2.2.2. AU Intensity

The manual FACS coding procedures described earlier were used to identify the temporal location of AU 12 events. Separate video clips of each event were generated and coded for intensity by certified coders using custom continuous measurement software. This coding involved assigning each video frame a label of “no smile” or “A” through “E” representing trace through maximum intensity (Fig. 1) as defined by the FACS manual (Ekman et al., 2002). Inter-observer agreement was quantified using intraclass correlation (ICC) (Shrout and Fleiss, 1979). Ten percent of clips were independently coded by a second certified FACS coder; inter-observer agreement was ICC=0.92.

Fig. 1.

Smile (AU 12) intensity levels from no contraction (left) to maximum contraction (right)

2.3. Automatic Expression Annotation

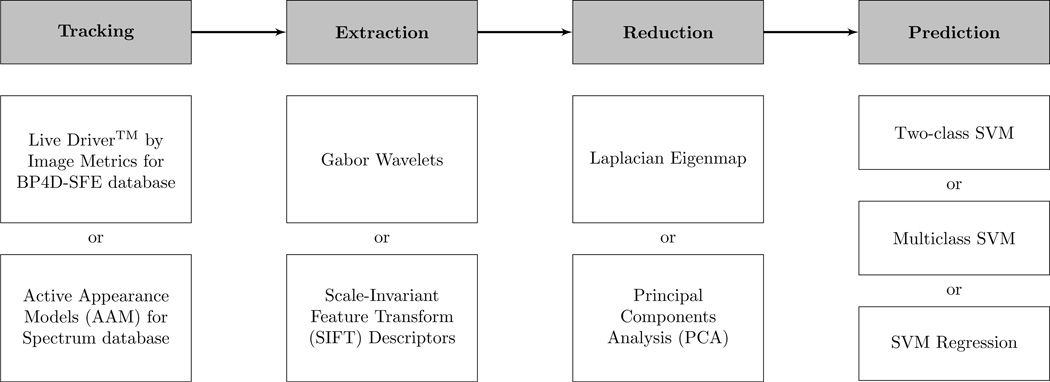

Smiles were automatically coded for both occurrence and intensity using each combination of the techniques listed in Fig. 2 for tracking, extraction, reduction, and prediction.

Fig. 2.

Techniques Used for Automatic Expression Annotation

2.3.1. Tracking

Facial landmark points indicate the location of important facial components (e.g., eye and lip corners). For the BP4D database, sixty-four facial landmarks were tracked in each video frame using Live Driver™ from Image Metrics (Image Metrics, 2013). Overall, 4% of video frames were untrackable, mostly due to occlusion or extreme out-of-plane rotation. A global normalizing (i.e., similarity) transformation was applied to the data for each video frame to remove variation due to rigid head motion. Finally, each image was cropped to the area surrounding the detected face and scaled to 128×128 pixels.

For the Spectrum database, sixty-six facial landmarks were tracked using active appearance models (AAM) (Cootes et al., 2001). AAM is a powerful approach that combines the shape and texture variation of an image into a single statistical model. Approximately 3% of video frames were manually annotated for each subject and then used to build the AAMs. The frames then were automatically aligned using a gradient-descent AAM fitting algorithm (Matthews and Baker, 2004). Overall, 9% of frames were untrackable, again mostly due to occlusion and rotation. The same normalization procedures used on the Live Driver landmarks were also used on the AAM landmarks. Additionally, because AAM includes landmark points along the jawline, we were able to remove non-face information from the images using a convex hull algorithm.

2.3.2. Extraction

Two types of appearance features were extracted from the tracked and normalized faces. Following previous work on expression detection (Chew et al., 2012) and intensity estimation (Bartlett et al., 2003, Savran et al., 2011), Gabor wavelets (Daugman, 1988, Fellenz et al., 1999) were extracted in localized regions surrounding each facial landmark point. Gabor wavelets are biologically inspired filters that operate in a similar fashion to simple receptive fields in mammalian visual systems (Jones and Palmer, 1987). They have been found to be robust to misalignment and changes in illumination (Liu et al., 2001). By applying a filter bank of eight orientations and five scales (i.e., 17, 23, 33, 46, and 65 pixels) at each localized region, specific changes in facial texture and orientation (which map onto facial wrinkles, folds, and bulges) were quantified. Scale-invariant feature transform (SIFT) descriptors (Lowe, 1999, Vedaldi and Fulkerson, 2008) were also extracted in localized regions surrounding each facial landmark point. SIFT descriptors are partially invariant to illumination changes. By computing histograms of local gradient orientations around each facial landmark, the SIFT descriptors also quantified changes in facial texture and orientation.
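A Gabor filter bank of eight orientations and five scales can be sketched in pure Python. The source reports only the five window sizes; the mapping from window size to wavelength (size / 2) and Gaussian width (size / 6), the isotropic envelope, and the use of only the real (even-symmetric) part are assumptions made for illustration, not the parameters used in the study. Many implementations instead take the magnitude of the complex response. All function names are hypothetical.

```python
import math

ORIENTATIONS = 8
SCALES_PX = (17, 23, 33, 46, 65)  # window sizes reported in the text


def gabor_kernel(size, theta, gamma=1.0, psi=0.0):
    """Real Gabor kernel of width `size` pixels at orientation `theta` (radians).
    Assumptions: wavelength = size / 2, sigma = size / 6, aspect ratio gamma = 1."""
    lam = size / 2.0
    sigma = size / 6.0
    half = (size - 1) / 2.0  # centre coordinate; works for odd and even widths
    kernel = []
    for i in range(size):
        y = i - half
        row = []
        for j in range(size):
            x = j - half
            # Rotate coordinates so the carrier wave runs along orientation theta
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / lam + psi))
        kernel.append(row)
    return kernel


def filter_bank():
    """All 5 x 8 = 40 (size, orientation index, kernel) triples."""
    return [(size, k, gabor_kernel(size, k * math.pi / ORIENTATIONS))
            for size in SCALES_PX for k in range(ORIENTATIONS)]


def response_at(image, row0, col0, kernel):
    """Correlate `kernel` with the image patch centred on a landmark at (row0, col0).
    Pixels outside the image count as 0; even-width kernels are off by half a pixel."""
    size = len(kernel)
    half = (size - 1) // 2
    total = 0.0
    for i in range(size):
        for j in range(size):
            r, c = row0 - half + i, col0 - half + j
            if 0 <= r < len(image) and 0 <= c < len(image[0]):
                total += kernel[i][j] * image[r][c]
    return total
```

Applied to an image of vertical stripes, the orientation-0 kernel at the matching wavelength responds far more strongly than the kernel rotated by 90 degrees, which is the orientation selectivity that lets such a bank register wrinkles and folds.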

2.3.3. Reduction

Both types of features were high-dimensional, which makes classification and regression difficult and resource-intensive. Two approaches for dimensionality reduction were compared on their ability to yield discriminant features for classification. For each model, only one of these approaches was used. The sample and feature sizes were motivated by the computational limitations imposed by each method.

Laplacian Eigenmap (Belkin and Niyogi, 2003) is a nonlinear technique used to find the low dimensional manifold that the original (i.e., high dimensional) feature data lies upon. Following recent work by Mahoor et al. (2009), supervised Laplacian Eigenmaps were trained on a randomly selected sample of 2500 frames and used in conjunction with spectral regression (Cai et al., 2007). Two manifolds were trained for the data: one using two classes (corresponding to FACS occurrence codes) and another using six classes (corresponding to the FACS intensity codes). The two-class manifolds were combined with the two-class models and the six-class manifolds were combined with the multiclass and regression models (described below). The Gabor and SIFT features were each reduced to 30 dimensions per video frame using this technique.
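The core of a supervised Laplacian Eigenmap followed by spectral regression can be sketched with NumPy. This is not the exact graph construction of Mahoor et al. (2009): here, as an assumption, labels enter by down-weighting heat-kernel edges between frames of different classes (factor `beta`), the generalized eigenproblem L y = λ D y is solved through the symmetric normalized Laplacian, and a ridge regression (`alpha`) maps the raw features onto the embedding so that unseen frames can be projected. The function names are hypothetical. The dense n × n graph illustrates why such methods are trained on a subsample (2500 frames in the study).

```python
import numpy as np


def supervised_laplacian_eigenmap(X, labels, n_components=1, beta=0.1, alpha=1e-3):
    """Return (embedding of the training frames, linear projection matrix A)."""
    # Squared Euclidean distances between all pairs of frames (O(n^2) memory)
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    t = sq.mean()                                # heat-kernel width (assumption)
    W = np.exp(-sq / t)
    np.fill_diagonal(W, 0.0)
    # Supervision (assumption): weaken edges joining frames of different classes
    same_class = labels[:, None] == labels[None, :]
    W = np.where(same_class, W, beta * W)
    d = W.sum(axis=1)
    L = np.diag(d) - W
    # Solve L y = lambda D y via the symmetric normalized Laplacian D^-1/2 L D^-1/2
    d_isqrt = 1.0 / np.sqrt(d)
    _, vecs = np.linalg.eigh(d_isqrt[:, None] * L * d_isqrt[None, :])
    # Column 0 is the trivial constant solution (eigenvalue 0), so skip it
    Y = d_isqrt[:, None] * vecs[:, 1:n_components + 1]
    # Spectral regression: ridge fit from features (plus bias column) to the embedding
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    A = np.linalg.solve(Xb.T @ Xb + alpha * np.eye(Xb.shape[1]), Xb.T @ Y)
    return Y, A


def project(X, A):
    """Map (possibly unseen) frames into the learned low-dimensional space."""
    return np.hstack([X, np.ones((X.shape[0], 1))]) @ A
```

The regression step is what makes the approach practical: the eigenproblem is solved once on the subsample, and every remaining frame is reduced with a single matrix product.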

Principal Component Analysis (PCA) (Jolliffe, 2005) is a linear technique used to project a feature vector from a high dimensional space into a low dimensional space. Unsupervised PCA was used to find the smallest number of dimensions that accounted for 95% of the variance in a randomly selected sample of 100,000 frames. This technique reduced the Gabor features to 162 dimensions per video frame and reduced the SIFT features to 362 dimensions per video frame.
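Selecting the smallest number of principal components that explains 95% of the variance can be sketched with NumPy's SVD. The helper names (`pca_95`, `pca_project`) are hypothetical; the components are fit on a sample of frames and then applied to every frame.

```python
import numpy as np


def pca_95(X, target=0.95):
    """Return (mean, components, n_keep) for the fewest components whose
    cumulative explained variance reaches `target`."""
    mean = X.mean(axis=0)
    # Squared singular values of the centred data are proportional to component variances
    _, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    # First index at which the cumulative share reaches the target, plus one
    n_keep = min(int(np.searchsorted(np.cumsum(explained), target)) + 1, len(s))
    return mean, Vt[:n_keep], n_keep


def pca_project(X, mean, components):
    """Project frames onto the retained components."""
    return (X - mean) @ components.T
```

Because PCA is unsupervised, the number of retained dimensions depends only on the feature distribution, which is consistent with the Gabor and SIFT features ending up with different sizes (162 and 362).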

2.3.4. Prediction

Three techniques for supervised learning were used to predict the occurrence and intensity of smiles using the reduced features. Two-class models were trained on the binary FACS occurrence codes, while multiclass and regression models were trained on the FACS intensity codes. Data from the two databases were not mixed and contributed to separate models.

Following previous work on binary expression detection (Fasel and Luettin, 2003, Whitehill et al., 2009), two-class support vector machines (SVM) (Vapnik, 1995) were used for binary classification. We used a kernel SVM with a radial basis function kernel in all our approaches. SVMs were trained using two classes corresponding to the FACS occurrence codes described earlier. Training sets were created by randomly sampling 10,000 frames with roughly equal representation for each class. The choice of sample size was motivated by the computational limitations imposed by model training during cross-validation. Classifier and kernel parameters (i.e., C and γ, respectively) were optimized using a “grid-search” procedure (Hsu et al., 2003) on a separate validation set. The decision values of the SVM models were real values proportional to the signed distance of each frame’s high-dimensional feature point from the class-separating hyperplane. These values were used for smile intensity estimation and also discretized using the standard SVM threshold of zero to provide predictions for binary smile detection (i.e., negative values were labeled absence of AU 12 and positive values were labeled presence of AU 12). Some researchers have proposed converting the SVM decision value to a pseudo-probability using a sigmoid function (Platt, 1999), but because the SVM training procedure is not intended to encourage this, it can result in a poor approximation of the posterior probability (Tipping, 2001).
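The sketch below illustrates how an RBF-kernel SVM turns a frame's features into a decision value and how the zero threshold yields binary AU 12 predictions. The support vectors, dual coefficients, and bias would come from a trained SVM; here they are hypothetical inputs, as are the function names. The parameter grid uses the exponentially spaced ranges suggested in Hsu et al.'s practical guide; the source does not state which ranges were actually searched.

```python
import math


def rbf_kernel(x, z, gamma):
    """Radial basis function kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def decision_value(x, support_vectors, dual_coefs, bias, gamma):
    """f(x) = sum_i (alpha_i * y_i) K(x_i, x) + b, where dual_coefs hold alpha_i * y_i.
    f(x) is proportional to the signed distance from the separating hyperplane."""
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coefs)) + bias


def detect_au12(value):
    """Zero threshold: positive -> present (1); otherwise absent (0).
    Treating exactly zero as absent is an assumption."""
    return 1 if value > 0 else 0


def param_grid():
    """(C, gamma) pairs: C = 2^-5 ... 2^15 and gamma = 2^-15 ... 2^3, in steps of 2^2."""
    return [(2.0 ** c, 2.0 ** g)
            for c in range(-5, 16, 2) for g in range(-15, 4, 2)]
```

Note that nothing in `decision_value` refers to intensity: a frame far from the hyperplane may simply be atypical or well illuminated, which is the theoretical objection raised in the Introduction.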

Following previous work on expression intensity estimation using multiclass classifiers (Messinger et al., 2009, Mahoor et al., 2009, Mavadati et al., 2013), the SVM framework was extended for multiclass classification using the “one-against-one” technique (Hsu and Lin, 2002). In this technique, if k is the number of classes, then k(k − 1)/2 subclassifiers are constructed and each one is trained on data from two classes; classification is then resolved using a subclassifier voting strategy. Multiclass SVMs were trained using six classes corresponding to the FACS intensity codes described earlier. Training sets were created by randomly sampling 10,000 frames with roughly equal representation for each class. Classifier and kernel parameters (i.e., C and γ, respectively) were optimized using a “grid-search” procedure (Hsu et al., 2003) on a separate validation set. The output values of the multiclass classifiers were integers corresponding to each frame’s estimated smile intensity level. These values were used for smile intensity estimation and also discretized to provide predictions for binary smile detection (i.e., values of 0 were labeled absence of AU 12 and values of 1 through 5 were labeled presence of AU 12).
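The one-against-one scheme for the six intensity classes (0 = no smile, 1 to 5 = A to E) can be sketched as follows. The pairwise classifiers are hypothetical callables standing in for trained two-class SVMs, and breaking vote ties toward the lower class index is an arbitrary choice made here.

```python
from collections import Counter
from itertools import combinations

N_CLASSES = 6  # "no smile", A, B, C, D, E -> 0 .. 5


def one_vs_one_predict(x, pairwise_classifiers, n_classes=N_CLASSES):
    """pairwise_classifiers maps (i, j), i < j, to a callable returning i or j for x.
    Each of the k(k - 1)/2 subclassifiers casts one vote; the most votes wins."""
    votes = Counter()
    for i, j in combinations(range(n_classes), 2):
        votes[pairwise_classifiers[(i, j)](x)] += 1
    # Ties go to the lower class index (arbitrary choice)
    return max(range(n_classes), key=lambda c: (votes[c], -c))


def intensity_to_binary(intensity):
    """0 -> AU 12 absent; 1 through 5 -> AU 12 present."""
    return 0 if intensity == 0 else 1


# Hypothetical stand-ins: on a scalar feature, pick whichever class is nearer
toy_classifiers = {
    (i, j): (lambda x, i=i, j=j: j if x >= (i + j) / 2 else i)
    for i, j in combinations(range(N_CLASSES), 2)
}
```

With k = 6, fifteen subclassifiers vote; for a scalar feature of 3.2, all five subclassifiers involving class 3 vote for it, so the predicted intensity is C (3) and the binary prediction is "present".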

Following previous work on expression intensity estimation using regression (Jeni et al., 2013b, Kaltwang et al., 2012, Savran et al., 2011, Dhall and Goecke, 2012, Ka Keung and Yangsheng, 2003), epsilon support vector regression (ε-SVR) (Vapnik, 1995) was used. As others have noted (Savran et al., 2011), ε-SVR is appropriate for expression intensity estimation because its ε-insensitive loss function is robust and generates a smooth mapping. ε-SVRs were trained using a metric derived from the FACS intensity codes described earlier. The intensity scores of “A” through “E” were assigned a discrete numerical value from 1 to 5, with “no smile” assigned the value of 0. Although this mapping deviates from the non-metric definition of AU intensity in the FACS manual, wherein the range of some intensity scores is larger than others, it enables us to provide a more efficient computational model that works well in practice. Training sets were created by randomly sampling 10,000 frames with roughly equal representation for each class. Model and kernel parameters (i.e., C and γ, respectively) were optimized using a “grid-search” procedure (Hsu et al., 2003); the epsilon parameter was left at the default value (ε=0.1). The output values of the regression models were real values corresponding to each frame’s estimated smile intensity level. This output was used for smile intensity estimation and also discretized using a threshold of 0.5 to provide predictions for binary smile detection (i.e., values below 0.5 were labeled absence of AU 12 and higher values were labeled presence of AU 12).
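A minimal sketch of the regression targets, the class-balanced training sample, and the 0.5 threshold described above. The helper names are hypothetical; classes with fewer frames than their quota contribute all of their frames, and treating a prediction of exactly 0.5 as "present" is an assumption.

```python
import random

# FACS intensity codes mapped onto the numeric scale described in the text
INTENSITY_TO_VALUE = {"no smile": 0, "A": 1, "B": 2, "C": 3, "D": 4, "E": 5}


def balanced_sample(frames_by_class, total=10_000, seed=0):
    """Draw roughly equal numbers of frames per class.
    Classes smaller than their quota contribute all of their frames."""
    rng = random.Random(seed)
    quota = total // len(frames_by_class)
    sample = []
    for label, frames in frames_by_class.items():
        chosen = rng.sample(frames, min(quota, len(frames)))
        sample.extend((frame, label) for frame in chosen)
    rng.shuffle(sample)
    return sample


def svr_to_binary(prediction, threshold=0.5):
    """Discretize an epsilon-SVR output: below 0.5 -> absent (0), otherwise present (1)."""
    return 0 if prediction < threshold else 1
```

Balancing matters most for the multiclass and regression models, because high-intensity smiles (D, E) are far rarer than "no smile" frames and would otherwise be swamped in a random sample.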

It is important to note the differences between the three approaches that were tested. In the two-class approach, the five intensity levels of a given AU were collapsed into a single positive class. In the multiclass approach, each of the intensity levels was treated as a mutually exclusive but unrelated class. Finally, in the regression approach, each intensity level was assigned a discrete numerical value and modeled on a continuous dimension. These differences are clarified by examination of the respective loss functions. The penalty of incorrect estimation in the regression approach is based on the distance between the prediction value y and the ground truth label t, given a tolerance band of width ε (Equation 1). In contrast, the penalty of misclassification in the two-class approach is based on the classifier’s decision value y (Equation 2). In this case, the ground truth label is collapsed into present at any intensity level (t = 1) or absent (t = −1). Because the multiclass approach is built from such two-class subclassifiers, its loss behaves similarly.

$$L_{\varepsilon}(y, t) = \max\left(0,\ \lvert y - t \rvert - \varepsilon\right) \qquad (1)$$

$$L_{\mathrm{hinge}}(y, t) = \max\left(0,\ 1 - t\,y\right), \qquad t \in \{-1, +1\} \qquad (2)$$
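The ε-insensitive loss of the regression approach and the hinge loss of the two-class approach can be compared numerically. In this sketch (function names hypothetical), a frame coded at intensity A and one coded at E both collapse to t = 1 for the two-class model, so a decision value of 1.2 incurs zero hinge loss for either; the ε-insensitive loss for a regression output of 1.2 is small for A but large for E.

```python
def eps_insensitive_loss(y, t, eps=0.1):
    """Regression penalty: zero inside the +/- eps band around the target t."""
    return max(0.0, abs(y - t) - eps)


def hinge_loss(y, t):
    """Two-class penalty for decision value y and label t in {-1, +1}."""
    return max(0.0, 1.0 - t * y)


# Same prediction (1.2), two ground-truth intensities: A (1) and E (5)
two_class = [hinge_loss(1.2, 1), hinge_loss(1.2, 1)]            # both collapse to t = +1
regression = [eps_insensitive_loss(1.2, 1), eps_insensitive_loss(1.2, 5)]
```

The two-class loss is identical for both frames, so training gives the model no reason to order A below E; only the regression loss grows with the distance between prediction and coded intensity.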

2.3.5. Cross-validation

To prevent model over-fitting, stratified k-fold cross-validation (Geisser, 1993) was used. Cross-validation procedures typically involve partitioning the data and iterating through the partitions such that all the data is used but no iteration is trained and tested on the same data. Stratified cross-validation procedures ensure that the resultant partitions have roughly equal distributions of the target class (in this case AU 12). This property is desirable because many performance metrics are highly sensitive to class skew (Jeni et al., 2013a). By using the same partitions across methods, the randomness introduced by repeated repartitioning can also be avoided.

Each video segment was assigned to one of five partitions. Segments, rather than participants, were assigned to partitions to allow greater flexibility for stratification. However, this choice allowed independent segments from the same participant to end up in multiple partitions. As such, this procedure was a less conservative control for generalizability. For each iteration of the cross-validation procedure, three partitions were used for training, one partition was used for validation (i.e., optimization), and one partition was used for testing.
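The segment-level partitioning and the three/one/one rotation of training, validation, and test partitions can be sketched as follows. Stratifying by sorting segments on their AU 12 base rate and dealing them round-robin is one simple heuristic assumed here; the source does not say how stratification was implemented, and which partition serves as the validation set in each iteration is likewise an assumption. Function names are hypothetical.

```python
def stratified_partitions(segments, n_parts=5):
    """segments: list of (segment_id, au12_base_rate) pairs.
    Sort by base rate and deal round-robin so every partition gets a similar spread."""
    ordered = sorted(segments, key=lambda seg: seg[1])
    parts = [[] for _ in range(n_parts)]
    for idx, (seg_id, _) in enumerate(ordered):
        parts[idx % n_parts].append(seg_id)
    return parts


def rotations(n_parts=5):
    """Yield (train partitions, validation partition, test partition) per iteration.
    Every partition is the test set exactly once; no iteration reuses data across roles."""
    for test in range(n_parts):
        val = (test + 1) % n_parts
        train = [p for p in range(n_parts) if p not in (test, val)]
        yield train, val, test
```

Because whole segments move together, frames from the same segment never straddle training and testing, although (as noted above) different segments from the same participant can.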

2.4. Performance Evaluation

The majority of previous work on expression intensity estimation has utilized the Pearson product-moment correlation coefficient (PCC) to measure the correlation between intensity estimations and ground truth coding. PCC is invariant to linear transformations, which is useful when using estimations that differ in scale and location from the ground truth coding (e.g., decision values). However, this same property is problematic when the estimations are similar to the ground truth (e.g., multiclass classifier predictions), as it introduces an undesired handicap. For instance, a classifier that always estimates an expression to be two intensity levels stronger than it is will have the same PCC as a classifier that always estimates the expression’s intensity level correctly.
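A short pure-Python illustration of this handicap: an estimator that is always two intensity levels too high still achieves PCC = 1, even though every frame is off by two levels. The intensity values and function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_abs_error(x, y):
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


truth = [0, 1, 2, 3, 2, 1]            # manual intensity codes (0 = no smile, 1 to 5 = A to E)
shifted = [t + 2 for t in truth]      # always two levels too strong
```

Both `pearson(truth, truth)` and `pearson(truth, shifted)` equal 1, while the mean absolute error of the shifted estimates is 2 levels.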

For this reason, we performed our analyses using another performance metric that grants more control over its relation to linear transformations: the intraclass correlation coefficient (ICC) (Shrout and Fleiss, 1979). Equation 3 was used to compare the multiclass SVM and ε-SVR approaches to the manual intensity annotations, as their outputs were consistently scaled; it was calculated using Between-Target Mean Squares (BMS) and Within-Target Mean Squares (WMS). Equation 4 was used for the decision value estimations because it takes into account differences in scale and location; it was calculated using Between-Target Mean Squares (BMS) and Residual Mean Squares (EMS). For both formulas, k is equal to the number of coding sources being compared; in the current study, there are two: the automatic and manual codes. ICC ranges from −1 to +1, with more positive values representing higher agreement.

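$$\mathrm{ICC}(1,1) = \frac{\mathrm{BMS} - \mathrm{WMS}}{\mathrm{BMS} + (k - 1)\,\mathrm{WMS}} \qquad (3)$$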

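$$\mathrm{ICC}(3,1) = \frac{\mathrm{BMS} - \mathrm{EMS}}{\mathrm{BMS} + (k - 1)\,\mathrm{EMS}} \qquad (4)$$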

The majority of previous work on binary expression detection has utilized receiver operating characteristic (ROC) analysis. When certain assumptions are met, the area under the curve (AUC) is equal to the probability that the classifier will rank a randomly chosen positive instance higher than a randomly chosen negative instance (Fawcett, 2006). The fact that AUC captures information about the entire distribution of decision points is a benefit of the measure, as it removes the subjectivity of threshold selection. However, in the case of automatic expression annotation, a threshold must be chosen in order to create predictions that can be compared with ground truth coding. In light of this issue, we performed our analyses using a threshold-specific performance metric: the F1 score, which is the harmonic mean of precision and recall (Equation 5) (van Rijsbergen, 1979). F1 score is computed using true positives (TP), false positives (FP), and false negatives (FN); it ranges from 0 to 1, with higher values representing higher agreement between coders.

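$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}} \qquad (5)$$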
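To make the contrast between AUC and F1 concrete, the sketch below computes AUC directly as the probability that a randomly chosen positive frame outscores a randomly chosen negative one (ties count half), and F1 at a chosen threshold. The scores, labels, and function names are hypothetical.

```python
def auc_pairwise(scores, labels):
    """AUC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else 0.5 if p == q else 0.0
    return wins / (len(pos) * len(neg))


def f1_at_threshold(scores, labels, threshold=0.0):
    """Label frames with score > threshold as present, then compute 2TP / (2TP + FP + FN)."""
    pred = [1 if s > threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(pred, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(pred, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(pred, labels) if p == 0 and y == 1)
    return 2 * tp / (2 * tp + fp + fn)


scores = [-1.5, -0.4, 0.3, 0.8, 1.6, -0.2]   # hypothetical decision values
labels = [0, 0, 1, 1, 1, 1]                  # manual AU 12 codes
```

Every positive frame outscores every negative one, so AUC = 1.0 regardless of threshold; yet F1 is 6/7 at the standard threshold of zero (the frame at −0.2 is missed) and 1.0 at a threshold of −0.3. AUC alone therefore says nothing about the frame-level codes a system actually emits.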

2.5. Data Analysis

Main effects and interaction effects among the different methods were analyzed using two univariate general linear models (IBM Corp, 2012), one for binary smile detection and one for smile intensity estimation. F1 and ICC were each entered as the sole dependent variable in their respective models, and database, extraction type, reduction type, and classification type were entered as “fixed factor” independent variables. The direction of significant differences was explored using marginal means for all variables except classification type. For classification type, post hoc Tukey HSD tests (IBM Corp, 2012) were used to explore differences between the three types of classification.

3. Results

3.1. Smile Intensity Estimation

Across all methods and databases, the average intensity estimation performance was ICC=0.64. However, performance varied widely between databases and methods, from a low of ICC=0.23 to a high of ICC=0.92.

The overall general linear model for smile intensity estimation was significant (Table 1). Main effects of database, extraction method, and supervised learning method were apparent. Intensity estimation performance was significantly higher for the BP4D database than for the Spectrum database, and intensity estimation performance using SIFT features was significantly higher than that using Gabor features. Intensity estimation performance using multiclass and regression models was significantly higher than that using the two-class approach. There was no significant difference in performance between Laplacian Eigenmap and PCA for reduction.

Table 1.

General Linear Model Results for Smile Intensity Estimation

| Factor | Level | ICC | F | p |
| --- | --- | --- | --- | --- |
| Database | BP4D | 0.765 | 158.206 | .00 |
| | Spectrum | 0.521 | | |
| Extraction | Gabor | 0.602 | 17.892 | .00 |
| | SIFT | 0.684 | | |
| Reduction | Laplacian | 0.639 | 0.197 | .66 |
| | PCA | 0.648 | | |
| Prediction | Two-class | 0.467ᵃ | 83.360 | .00 |
| | Multiclass | 0.739ᵇ | | |
| | Regression | 0.724ᵇ | | |

| Interaction Effects | F | p |
| --- | --- | --- |
| Database × Extraction | 4.627 | .03 |
| Database × Reduction | 3.958 | .05 |
| Database × Model | 8.873 | .00 |
| Reduction × Model | 13.391 | .00 |

These main effects were qualified by four significant interaction effects. First, the difference between SIFT features and Gabor features was greater in the Spectrum database than in the BP4D database. Second, while Laplacian Eigenmap performed better in the Spectrum database, PCA performed better in the BP4D database. Third, while multiclass models performed better in the Spectrum database, regression models performed better in the BP4D database. Fourth, PCA reduction yielded higher intensity estimation performance when combined with two-class models, but lower performance when combined with multiclass and regression models.

3.2. Binary Smile Detection

Across all methods and databases, the average binary detection performance was F1=0.64. However, performance varied between databases and methods, from a low of F1=0.40 to a high of F1=0.81.

The overall general linear model for binary smile detection was significant (Table 2). Main effects of database, extraction method, and supervised learning method were apparent. Detection performance on the BP4D database was significantly higher than that on the Spectrum database, and detection performance using SIFT features was significantly higher than that using Gabor features. Detection performance was significantly higher using multiclass models than using two-class models.

Table 2.

General Linear Model Results for Binary Smile Detection

| Factor | Level | F1 Score | F | p |
| --- | --- | --- | --- | --- |
| Database | BP4D | 0.772 | 440.209 | .00 |
| | Spectrum | 0.504 | | |
| Extraction | Gabor | 0.618 | 9.740 | .00 |
| | SIFT | 0.658 | | |
| Reduction | Laplacian | 0.642 | 0.501 | .48 |
| | PCA | 0.633 | | |
| Prediction | Two-class | 0.616ᵃ | 4.175 | .02 |
| | Multiclass | 0.661ᵇ | | |
| | Regression | 0.636 | | |

| Interaction Effects | F | p |
| --- | --- | --- |
| Reduction × Model | 5.753 | .00 |

These main effects were qualified by a significant interaction effect between reduction method and supervised learning method. PCA reduction yielded higher detection performance when combined with two-class models, but lower detection performance when combined with multiclass and regression models. There was no main effect of dimensionality reduction method and no other interactions were significant.

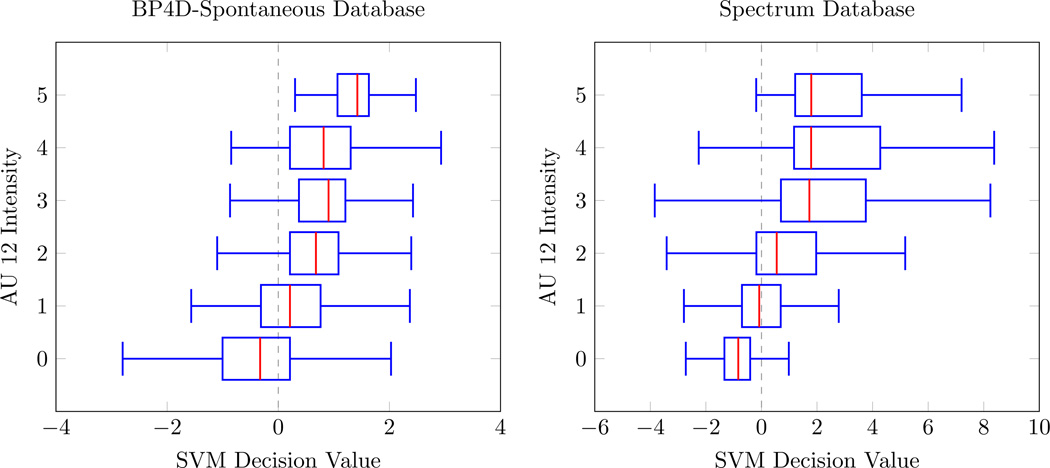

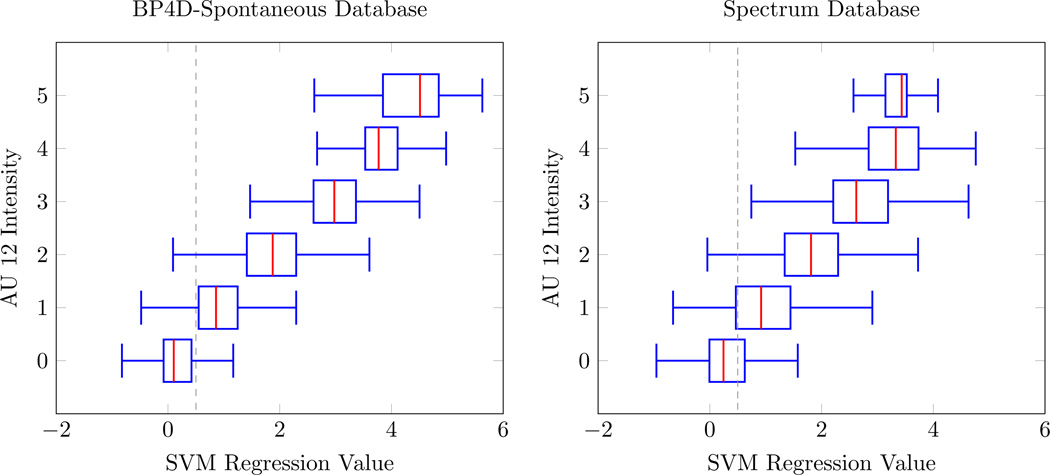

3.3. Distribution of Output Values

The decision values of the best-performing two-class model for each database are presented in Figure 4 as box plots (Frigge et al., 1989). Each blue box spans the first to the third quartile of the values at one smile intensity level, and the red line in each box marks the median. The blue whiskers extend to the most extreme values lying within 1.5 times the interquartile range below the first quartile and above the third quartile. The regression values of the best-performing regression model for each database are presented in the same way in Figure 5.

Fig. 4.

SVM Decision Values by AU 12 Intensity in Two Databases

Fig. 5.

SVM Regression Values by AU 12 Intensity in Two Databases
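The box-plot conventions described for Figures 4 and 5 can be reproduced with the Python standard library. This sketch assumes the "inclusive" quartile method of `statistics.quantiles`; quartile definitions differ between packages (Frigge et al., 1989 compare several), so the software behind the figures may place box edges slightly differently. The function name `box_plot_summary` and the decision values are hypothetical.

```python
import statistics


def box_plot_summary(values, whisker=1.5):
    """Quartiles, whisker ends, and outliers for one box in a Tukey-style box plot."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - whisker * iqr
    upper_fence = q3 + whisker * iqr
    inside = [v for v in values if lower_fence <= v <= upper_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        # Whiskers stop at the most extreme observations still inside the fences.
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": sorted(v for v in values if v < lower_fence or v > upper_fence),
    }


# Hypothetical decision values grouped by manual AU 12 intensity (0 = no smile, 1 = "A").
values_by_level = {
    0: [-2.1, -1.7, -1.5, -1.2, -0.9, -0.8, 0.4, 3.0],
    1: [-1.0, -0.4, 0.0, 0.2, 0.5, 0.9, 1.3, 6.0],
}
for level, values in values_by_level.items():
    print(level, box_plot_summary(values))
```

In this toy data the first quartile for level 1 is negative, so at least a quarter of those values fall on the non-smile side of the SVM decision boundary at zero.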

Examination of Figure 4 reveals a slight right-leaning tendency, indicating that more positive SVM decision values are on average more likely to be higher intensity. However, there is substantial overlap between the distributions and a great deal of “clumping” between intensity levels; the distributions for levels 2 through 4 (i.e., “B” through “D”) are very similar for the BP4D dataset, while the distributions for levels 3 through 5 (i.e., “C” through “E”) are very similar for the Spectrum dataset. Finally, the observed range of values spans −3.6 to 5.5 for BP4D and −8.4 to 8.1 for Spectrum.

Examination of Figure 5 reveals a stepped and right-leaning pattern, indicating that more positive regression values are on average more likely to be higher intensity. There is some overlap between the distributions, although the interquartile ranges for each group are largely distinct. The one exception is the clumping of levels 4 and 5 (i.e., “D” and “E”), especially in the Spectrum dataset. The observed range of values spans −3.1 to 5.7 for BP4D and −1.2 to 4.8 for Spectrum.
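One way to make the "largely distinct" versus "clumping" reading explicit is to check whether the boxes (first to third quartile) of adjacent intensity levels overlap. The sketch below does that for hypothetical regression outputs; the overlap criterion, the "inclusive" quartile method, and the names `interquartile_range` and `adjacent_iqr_overlap` are illustrative assumptions rather than a procedure reported in this study.

```python
import statistics


def interquartile_range(values):
    """Return (Q1, Q3) using the 'inclusive' quartile method."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q1, q3


def adjacent_iqr_overlap(values_by_level):
    """Map each pair of adjacent intensity levels to True if their boxes (Q1..Q3) overlap."""
    levels = sorted(values_by_level)
    overlaps = {}
    for lower, upper in zip(levels, levels[1:]):
        a_q1, a_q3 = interquartile_range(values_by_level[lower])
        b_q1, b_q3 = interquartile_range(values_by_level[upper])
        # Two closed intervals overlap when the larger start does not exceed the smaller end.
        overlaps[(lower, upper)] = max(a_q1, b_q1) <= min(a_q3, b_q3)
    return overlaps


# Hypothetical regression outputs grouped by manual intensity level.
regression_values = {
    0: [-0.2, 0.0, 0.1, 0.3, 0.4],
    1: [0.8, 1.0, 1.1, 1.2, 1.4],
    2: [1.9, 2.0, 2.2, 2.3, 2.5],
    3: [2.1, 2.2, 2.4, 2.5, 2.7],
}
print(adjacent_iqr_overlap(regression_values))
# {(0, 1): False, (1, 2): False, (2, 3): True}
```

Here the top two levels "clump" in the sense used above, while the lower levels remain separated.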

4. Discussion

4.1. Smile Intensity Estimation

Intensity estimation performance varied between databases, feature extraction methods, and supervised learning methods. Performance was higher in the BP4D database than in the Spectrum database. It is not surprising that performance differed between the two databases, given how much they differed in terms of participant demographics, social context, and image quality. Further experimentation will be required to pinpoint exactly what differences between the databases contributed to this drop in performance, but we suspect that illumination conditions, frontality of camera placement, and participant head pose were involved. It is also possible that the participants in the Spectrum database were more difficult to analyze due to their depressive symptoms. Previous research has found that nonverbal behavior (and especially smiling) changes with depression symptomatology (e.g., Girard et al., 2014). There were also differences between databases in terms of social context that likely influenced smiling behavior; Spectrum was recorded during a clinical interview about depression symptoms, while BP4D was recorded during tasks designed to elicit specific and varied emotions. Participants in the Spectrum database smiled less frequently (20.5% of frames) and less intensely (average intensity 1.5) than did participants in the BP4D database (56.4% of frames and average intensity 2.4). The inter-observer reliability for manual smile occurrence coding was also higher in the BP4D database (F1=0.96) than in the Spectrum database (F1=0.71). These differences may have affected the difficulty of smile intensity estimation.

More surprising was that intensity estimation performance was higher for SIFT features than for Gabor features. This finding is encouraging from a computational load perspective, considering that the toolbox implementation of SIFT used in this study (Vedaldi and Fulkerson, 2008) was many times faster than our custom implementation of Gabor. However, it is possible that SIFT was particularly well suited to our form of registration with dense facial landmarking. Although we did not test this hypothesis in the current study, it would be informative to compare these two methods of feature extraction in conjunction with a method of registration using sparse landmarking (e.g., holistic face detection or eye tracking). It is also important to note that the difference between SIFT and Gabor features was larger in the Spectrum database than in BP4D.

For dimensionality reduction, intensity estimation performance was not significantly different between Laplacian Eigenmap and PCA. This may indicate that the structure of the features used in this study was adequately captured by a linear projection, so that nonlinear manifold learning was unnecessary. This finding is also encouraging from a computational load perspective, as PCA is a much faster and simpler technique. However, the success of each dimensionality reduction technique depended on the database and on the classification method used: Laplacian Eigenmap was better suited to the Spectrum database and to multiclass and regression models, whereas PCA was better suited to the BP4D database and to two-class models.

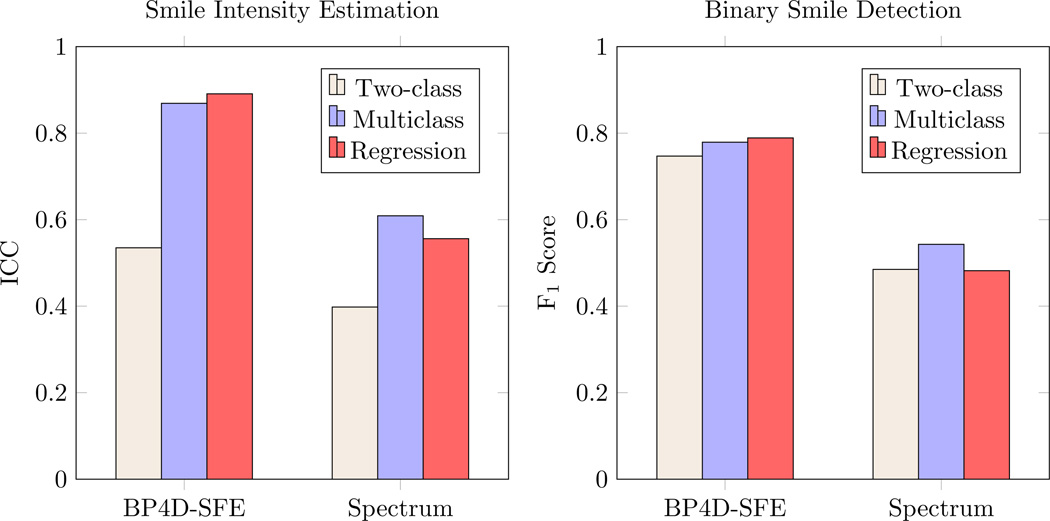

Most relevant to our main hypothesis are the findings regarding supervised learning method. In line with our hypothesis that the decision-value-as-intensity heuristic is flawed in practice, the intensity-trained multiclass and regression models performed significantly better at intensity estimation than the decision values of two-class models. However, it is important to note that the intensity estimation performance yielded by binary-trained models was not negligible. Consistent with previous reports, decision values showed a low to moderate correlation with smile intensity.

4.2. Binary Smile Detection

Binary detection performance also varied between databases, feature extraction methods, and supervised learning methods. These differences were very similar to those for expression intensity estimation. Binary detection performance was higher for the BP4D database than for the Spectrum database, higher for SIFT features than for Gabor features, and no different between Laplacian Eigenmap and PCA for reduction.

Examination of the decision values for the two-class models (Fig. 4) reveals substantial overlap between the distributions of “no smile” and “A” level smiles. Furthermore, the first quartile of “A” level smiles is negative in both datasets, meaning that at least a quarter of these trace smiles fall on the non-smile side of the SVM decision boundary; this contributes to substantial misclassification. Examination of the confusion matrices for the multiclass models reveals a similar pattern: detecting “trace” level smiles is difficult.

Surprisingly, detection performance was higher for multiclass models than for two-class models; this difference was modest but statistically significant (Fig. 3). This suggests that the best classifier for binary detection is not necessarily the one trained on binary labels. As far as we know, this is the first study to attempt binary expression detection using an intensity-trained classifier. Although collecting frame-level intensity ground truth is labor-intensive, our findings indicate that this investment is worthwhile for both binary expression detection and expression intensity estimation.

Fig. 3.

Average Performance for Three Approaches to Supervised Learning in Two Databases
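Because intensity labels nest the binary labels (level 0 is “no smile” and levels 1 to 5 are “A” to “E”), an intensity-trained classifier can be scored for binary detection by collapsing its predictions. The sketch below assumes that any predicted level above 0 counts as a smile; the exact collapsing rule is not stated in this section, and the helper names `collapse_to_binary` and `f1_score` and the frame labels are hypothetical.

```python
def collapse_to_binary(levels):
    """Map intensity levels (0 = no smile, 1-5 = 'A'-'E') to occurrence labels."""
    return [1 if level > 0 else 0 for level in levels]


def f1_score(y_true, y_pred):
    """F1 = 2TP / (2TP + FP + FN) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical frame-level manual codes and multiclass predictions.
manual = [0, 0, 1, 2, 3, 0, 1, 4, 5, 0]
predicted = [0, 1, 0, 2, 3, 0, 0, 3, 5, 0]

score = f1_score(collapse_to_binary(manual), collapse_to_binary(predicted))
print(round(score, 3))  # 0.727: both misses are trace ("A") smiles predicted as 0
```

The level 4 frame predicted as level 3 still counts as a correct detection: errors among nonzero intensity levels do not affect the binary score, only confusions with level 0 do.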

4.3. Conclusions

We provide empirical evidence that the decision-value-as-intensity heuristic is flawed in practice as well as in theory. Unfortunately, there are no shortcuts when it comes to estimating smile intensity: researchers must take the time to collect and train on intensity ground truth. However, if they do so, high reliability with expert human FACS coders can be achieved. Intensity-trained multiclass and regression models outperformed binary-trained classifier decision values on smile intensity estimation across multiple databases and methods for feature extraction and dimensionality reduction. Multiclass models even outperformed binary-trained classifiers on binary smile detection. Examination of the distribution of classifier decision values indicates that there is substantial overlap between smile intensity levels and that low intensity smiles are frequently confused with non-smiles. A much cleaner set of distributions can be achieved by training a regression model explicitly on the intensity levels.

4.4. Limitations and Future Directions

The primary limitations of the current study were that it focused on a single facial expression and a single supervised learning framework. Future work should explore the generalizability of these findings by comparing other supervised learning methods and other facial expressions. Another limitation is the discrepancy between the numbers of reduced features yielded by Laplacian Eigenmap and PCA. Future work might standardize the number of features or forgo dimensionality reduction entirely (at the cost of computation time or kernel complexity). Finally, future work would benefit from a comparison of additional techniques for facial landmark registration, feature extraction, and dimensionality reduction.

Supplementary Material

Acknowledgments

The authors wish to thank Nicole Siverling, Laszlo A. Jeni, Wen-Sheng Chu, Dean P. Rosenwald, Shawn Zuratovic, Kayla Mormak, and anonymous reviewers for their generous assistance. Research reported in this publication was supported by the U.S. National Institute of Mental Health under award MH096951 and the U.S. National Science Foundation under award 1205195.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Abel EL, Kruger ML. Smile Intensity in Photographs Predicts Longevity. Psychological Science. 2010;21:542–544. doi: 10.1177/0956797610363775.

- Ambadar Z, Cohn JF, Reed LI. All smiles are not created equal: Morphology and timing of smiles perceived as amused, polite, and embarrassed/nervous. Journal of Nonverbal Behavior. 2009;33:17–34. doi: 10.1007/s10919-008-0059-5.

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed. Washington, DC: 1994.

- Barrett LF, Mesquita B, Gendron M. Context in emotion perception. Current Directions in Psychological Science. 2011;20:286–290.

- Bartlett MS, Littlewort G, Braathen B, Sejnowski TJ, Movellan JR. A prototype for automatic recognition of spontaneous facial actions. In: Becker S, Obermayer K, editors. Advances in Neural Information Processing Systems. MIT Press; 2003.

- Bartlett MS, Littlewort G, Frank MG, Lainscsek C, Fasel IR, Movellan JR. Automatic recognition of facial actions in spontaneous expressions. Journal of Multimedia. 2006a;1:22–35.

- Bartlett MS, Littlewort G, Frank MG, Lainscsek C, Fasel IR, Movellan JR. Fully Automatic Facial Action Recognition in Spontaneous Behavior. IEEE International Conference on Automatic Face & Gesture Recognition; Southampton. 2006b. pp. 223–230.

- Bavelas JB, Chovil N. Faces in dialogue. In: Russell JA, Fernandez-Dols JM, editors. The psychology of facial expression. New York: Cambridge University Press; 1997. pp. 334–346.

- Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation. 2003;15:1373–1396.

- Cai D, He X, Zhang WV, Han J. Regularized locality preserving indexing via spectral regression; Conference on Information and Knowledge Management, ACM; 2007. pp. 741–750.

- Chew SW, Lucey P, Lucey S, Saragih J, Cohn JF, Matthews I, Sridharan S. In the Pursuit of Effective Affective Computing: The Relationship Between Features and Registration. IEEE Transactions on Systems, Man, and Cybernetics. 2012;42:1006–1016. doi: 10.1109/TSMCB.2012.2194485.

- Cohn JF, Ekman P. Measuring facial action by manual coding, facial EMG, automatic facial image analysis. In: Harrigan JA, Rosenthal R, Scherer KR, editors. The new handbook of nonverbal behavior research. New York, NY: Oxford University Press; 2005. pp. 9–64.

- Cohn JF, Kruez TS, Matthews I, Ying Y, Minh Hoai N, Padilla MT, Feng Z, De la Torre F. Detecting depression from facial actions and vocal prosody; Affective Computing and Intelligent Interaction; Amsterdam. 2009. pp. 1–7.

- Cohn JF, Schmidt KL. The timing of facial motion in posed and spontaneous smiles. International Journal of Wavelets, Multiresolution and Information Processing. 2004;2:57–72.

- Cootes TF, Edwards GJ, Taylor CJ. Active appearance models. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2001;23:681–685.

- Cowie R, Douglas-Cowie E, Tsapatsoulis N, Votsis G, Kollias S, Fellenz W, Taylor JG. Emotion recognition in human-computer interaction. IEEE Signal Processing Magazine. 2001;18:32–80.

- Darwin C. The expression of emotions in man and animals. 3rd ed. New York: Oxford University Press; 1872.

- Daugman JG. Complete discrete 2-D Gabor transforms by neural networks for image analysis and compression. IEEE Transactions on Acoustics, Speech and Signal Processing. 1988;36:1169–1179. doi: 10.1109/29.9030.

- Deniz O, Castrillon M, Lorenzo J, Anton L, Bueno G. Smile Detection for User Interfaces. In: Bebis G, Boyle R, Parvin B, Koracin D, Remagnino P, Porikli F, Peters J, Klosowski J, Arns L, Chun YK, Rhyne TM, Monroe L, editors. Advances in Visual Computing. Berlin, Heidelberg: Springer Berlin Heidelberg; 2008. pp. 602–611. Lecture Notes in Computer Science, vol. 5359.

- Dhall A, Goecke R. Group expression intensity estimation in videos via Gaussian Processes; International Conference on Pattern Recognition; 2012. pp. 3525–3528.

- Ekman P. Basic emotions. In: Dalgleish T, Power M, editors. Handbook of cognition and emotion. UK: John Wiley & Sons; 1999. pp. 45–60.

- Ekman P. Darwin, deception, and facial expression. Annals of the New York Academy of Sciences. 2003;1000:205–221. doi: 10.1196/annals.1280.010.

- Ekman P, Davidson RJ, Friesen WV. The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology. 1990;58:342–353.

- Ekman P, Friesen WV, Ancoli S. Facial signs of emotional experience. Journal of Personality and Social Psychology. 1980;39:1125–1134.

- Ekman P, Friesen WV, Hager J. Facial action coding system: A technique for the measurement of facial movement. Salt Lake City, UT: Research Nexus; 2002.

- Ekman P, Rosenberg EL. What the face reveals: Basic and applied studies of spontaneous expression using the facial action coding system (FACS). 2nd ed. New York, NY: Oxford University Press; 2005.

- Fasel B, Luettin J. Automatic facial expression analysis: a survey. Pattern Recognition. 2003;36:259–275.

- Fawcett T. An introduction to ROC analysis. Pattern Recognition Letters. 2006;27:861–874.

- Fellenz W, Taylor JG, Tsapatsoulis N, Kollias S. Comparing template-based, feature-based and supervised classification of facial expressions from static images. Computational Intelligence and Applications. 1999.

- Frank MG, Ekman P, Friesen WV. Behavioral markers and recognizability of the smile of enjoyment. Journal of Personality and Social Psychology. 1993;64:83–93. doi: 10.1037//0022-3514.64.1.83.

- Fridlund AJ. Sociality of solitary smiling: Potentiation by an implicit audience. Journal of Personality and Social Psychology. 1991;60:12.

- Fridlund AJ. The behavioral ecology and sociality of human faces. In: Clark MS, editor. Review of Personality and Social Psychology. Sage Publications; 1992. pp. 90–121.

- Frigge M, Hoaglin D, Iglewicz B. Some implementations of the boxplot. The American Statistician. 1989;43:50–54.

- Geisser S. Predictive inference. New York, NY: Chapman and Hall; 1993.

- Girard JM, Cohn JF, Mahoor MH, Mavadati S, Rosenwald DP. Social Risk and Depression: Evidence from manual and automatic facial expression analysis; IEEE International Conference on Automatic Face & Gesture Recognition; 2013.

- Girard JM, Cohn JF, Mahoor MH, Mavadati SM, Hammal Z, Rosenwald DP. Nonverbal social withdrawal in depression: Evidence from manual and automatic analyses. Image and Vision Computing. 2014;32:641–647. doi: 10.1016/j.imavis.2013.12.007.

- Hamilton M. Development of a rating scale for primary depressive illness. British Journal of Social and Clinical Psychology. 1967;6:278–296. doi: 10.1111/j.2044-8260.1967.tb00530.x.

- Harker L, Keltner D. Expressions of Positive Emotion in Women’s College Yearbook Pictures and Their Relationship to Personality and Life Outcomes Across Adulthood. Journal of Personality and Social Psychology. 2001;80:112–124.

- Hertenstein M, Hansel C, Butts A, Hile S. Smile intensity in photographs predicts divorce later in life. Motivation and Emotion. 2009;33:99–105.

- Hess U, Adams RB, Jr, Kleck RE. Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition and Emotion. 2005;19:515–536.

- Hess U, Banse R, Kappas A. The intensity of facial expression is determined by underlying affective state and social situation. Journal of Personality and Social Psychology. 1995;69:280–288.

- Hess U, Blairy S, Kleck RE. The influence of facial emotion displays, gender, and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior. 2000;24:265–283.

- Hsu CW, Chang CC, Lin CJ. A practical guide to support vector classification. Technical Report. 2003.

- Hsu CW, Lin CJ. A comparison of methods for multiclass support vector machines. IEEE Transactions on Neural Networks. 2002;13:415–425. doi: 10.1109/72.991427.

- IBM Corp. IBM SPSS Statistics for Windows. Version 21.0. 2012.

- Image Metrics. Live Driver SDK. 2013. URL: http://image-metrics.com/.

- Izard CE. The face of emotion. New York, NY: Appleton-Century-Crofts; 1971.

- Jeni LA, Cohn JF, De la Torre F. Facing imbalanced data: Recommendations for the use of performance metrics; International Conference on Affective Computing and Intelligent Interaction; 2013a.

- Jeni LA, Girard JM, Cohn JF, De la Torre F. Continuous AU intensity estimation using localized, sparse facial feature space; Proceedings of the IEEE International Conference on Automated Face & Gesture Recognition Workshops, IEEE; 2013b. pp. 1–7.

- Jolliffe I. Principal component analysis. In: Encyclopedia of statistics in behavioral science. John Wiley & Sons, Ltd.; 2005.

- Jones JP, Palmer LA. An evaluation of the two-dimensional Gabor filter model of simple receptive fields in cat striate cortex. Journal of Neurophysiology. 1987;58:1233–1258. doi: 10.1152/jn.1987.58.6.1233.

- Juckel G, Mergl R, Prassl A, Mavrogiorgou P, Witthaus H, Moller HJ, Hegerl U. Kinematic analysis of facial behaviour in patients with schizophrenia under emotional stimulation by films with “Mr. Bean”. European Archives of Psychiatry and Clinical Neuroscience. 2008;258:186–191. doi: 10.1007/s00406-007-0778-3.

- Ka Keung L, Yangsheng X. Real-time estimation of facial expression intensity; IEEE International Conference on Robotics and Automation; 2003. pp. 2567–2572.

- Kaltwang S, Rudovic O, Pantic M. Continuous Pain Intensity Estimation from Facial Expressions. In: Bebis G, Boyle R, Parvin B, Koracin D, Fowlkes C, Wang S, Choi MH, Mantler S, Schulze J, Acevedo D, Mueller K, Papka M, editors. Advances in Visual Computing. Berlin, Heidelberg: Springer; 2012. pp. 368–377. Lecture Notes in Computer Science, vol. 7432.

- Keltner D. Evidence for the Distinctness of Embarrassment, Shame, and Guilt: A Study of Recalled Antecedents and Facial Expressions of Emotion. Cognition and Emotion. 1996;10:155–172.

- Koelstra S, Pantic M. Non-rigid registration using free-form deformations for recognition of facial actions and their temporal dynamics; IEEE International Conference on Automatic Face & Gesture Recognition; 2008. pp. 1–8.

- Krumhuber E, Manstead AS, Kappas A. Temporal Aspects of Facial Displays in Person and Expression Perception: The Effects of Smile Dynamics, Head-tilt, and Gender. Journal of Nonverbal Behavior. 2007;31:39–56.

- Littlewort G, Bartlett MS, Fasel IR, Susskind J, Movellan JR. Dynamics of facial expression extracted automatically from video. Image and Vision Computing. 2006;24:615–625.

- Littlewort GC, Bartlett MS, Lee K. Automatic coding of facial expressions displayed during posed and genuine pain. Image and Vision Computing. 2009;27:1797–1803.

- Liu C, Louis S, Wechsler H. A Gabor Feature Classifier for Face Recognition; IEEE International Conference on Computer Vision; 2001. pp. 270–275.

- Lowe DG. Object recognition from local scale-invariant features; IEEE International Conference on Computer Vision; 1999. pp. 1150–1157.

- Mahoor MH, Cadavid S, Messinger DS, Cohn JF. A framework for automated measurement of the intensity of non-posed Facial Action Units. Miami: Computer Vision and Pattern Recognition Workshops; 2009. pp. 74–80.

- Matthews I, Baker S. Active Appearance Models Revisited. International Journal of Computer Vision. 2004;60:135–164.

- Mavadati SM, Mahoor MH, Bartlett K, Trinh P, Cohn JF. DISFA: A spontaneous facial action intensity database. IEEE Transactions on Affective Computing. 2013.

- Mergl R, Mavrogiorgou P, Hegerl U, Juckel G. Kinematical analysis of emotionally induced facial expressions: a novel tool to investigate hypomimia in patients suffering from depression. Journal of Neurology, Neurosurgery, and Psychiatry. 2005;76:138–140. doi: 10.1136/jnnp.2004.037127.

- Mergl R, Vogel M, Mavrogiorgou P, Gobel C, Zaudig M, Hegerl U, Juckel G. Kinematical analysis of emotionally induced facial expressions in patients with obsessive-compulsive disorder. Psychological Medicine. 2003;33:1453–1462. doi: 10.1017/s0033291703008134.

- Messinger DS, Cassel TD, Acosta SI, Ambadar Z, Cohn JF. Infant Smiling Dynamics and Perceived Positive Emotion. Journal of Nonverbal Behavior. 2008;32:133–155. doi: 10.1007/s10919-008-0048-8.

- Messinger DS, Mahoor MH, Chow SM, Cohn JF. Automated measurement of facial expression in infant-mother interaction: A pilot study. Infancy. 2009;14:285–305. doi: 10.1080/15250000902839963.

- Oveis C, Gruber J, Keltner D, Stamper JL, Boyce WT. Smile intensity and warm touch as thin slices of child and family affective style. Emotion. 2009;9:544–548. doi: 10.1037/a0016300.

- Pandzic IS, Forchheimer R. The Origins of the MPEG-4 Facial Animation Standard. Wiley Online Library; 2002.

- Pantic M, Rothkrantz LJM. Toward an affect-sensitive multimodal human-computer interaction. Proceedings of the IEEE. 2003;91:1370–1390.

- Platt J. Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Advances in large margin classifiers. 1999;10:61–74.

- Prkachin KM, Solomon PE. The structure, reliability and validity of pain expression: Evidence from patients with shoulder pain. Pain. 2008;139:267–274. doi: 10.1016/j.pain.2008.04.010.

- Reed LI, Sayette MA, Cohn JF. Impact of depression on response to comedy: A dynamic facial coding analysis. Journal of Abnormal Psychology. 2007;116:804–809. doi: 10.1037/0021-843X.116.4.804.

- Reilly J, Ghent J, McDonald J. Investigating the dynamics of facial expression. Advances in Visual Computing. 2006:334–343.

- van Rijsbergen CJ. Information Retrieval. 2nd ed. London: Butterworth; 1979.

- Savran A, Sankur B, Taha Bilge M. Regression-based intensity estimation of facial action units. Image and Vision Computing. 2011.

- Seder JP, Oishi S. Intensity of Smiling in Facebook Photos Predicts Future Life Satisfaction. Social Psychological and Personality Science. 2012;3:407–413.

- Shimada K, Matsukawa T, Noguchi Y, Kurita T. Appearance-Based Smile Intensity Estimation by Cascaded Support Vector Machines. ACCV 2010 Workshops. 2011:277–286.

- Shimada K, Noguchi Y, Kurita T. Fast and Robust Smile Intensity Estimation by Cascaded Support Vector Machines. International Journal of Computer Theory and Engineering. 2013;5:24–30.

- Shrout PE, Fleiss JL. Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin. 1979;86:420. doi: 10.1037//0033-2909.86.2.420.

- Tipping M. Sparse Bayesian learning and the relevance vector machine. Journal of Machine Learning Research. 2001;1:211–244.

- Valstar MF, Pantic M. Fully Automatic Facial Action Unit Detection and Temporal Analysis; Conference on Computer Vision and Pattern Recognition Workshops; 2006.

- Valstar MF, Pantic M. Fully automatic recognition of the temporal phases of facial actions. IEEE Transactions on Systems, Man, and Cybernetics. 2012;42:28–43. doi: 10.1109/TSMCB.2011.2163710.

- Vapnik V. The nature of statistical learning theory. New York, NY: Springer; 1995.

- Vedaldi A, Fulkerson B. VLFeat: An open and portable library of computer vision algorithms. 2008.

- Whitehill J, Littlewort G, Fasel IR, Bartlett MS, Movellan JR. Toward practical smile detection. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31:2106–2111. doi: 10.1109/TPAMI.2009.42.

- Yang P, Qingshan L, Metaxas DN. RankBoost with l1 regularization for facial expression recognition and intensity estimation; IEEE International Conference on Computer Vision; 2009. pp. 1018–1025.

- Zeng Z, Pantic M, Roisman GI, Huang TS. A survey of affect recognition methods: audio, visual, and spontaneous expressions. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31:39–58. doi: 10.1109/TPAMI.2008.52.

- Zhang X, Yin L, Cohn JF, Canavan S, Reale M, Horowitz A, Liu P, Girard JM. BP4D-Spontaneous: a high-resolution spontaneous 3D dynamic facial expression database. Image and Vision Computing. 2014;32:692–706.
