Abstract

Analysis of vocal expression is a critical endeavor for psychological and clinical sciences, and is an increasingly popular application for computer-human interfaces. Despite this, and advances in the efficiency, affordability and sophistication of vocal analytic technologies, there is considerable variability across studies regarding what aspects of vocal expression are studied. Vocal signals can be quantified in a myriad of ways and their underlying structure, at least with respect to “macroscopic” measures from extended speech, is presently unclear. To address this issue, we evaluated psychometric properties, notably structural and construct validity, of a systematically-defined set of global vocal features. Our analytic strategy focused on: a) identifying redundant variables among this set, b) employing Principal Components Analysis (PCA) to identify non-overlapping domains of vocal expression, c) examining the degree to which vocal variables modulate as a function of changes in speech task, and d) evaluating the relationship between vocal variables and cognitive (i.e., verbal fluency) and clinical (i.e., depression, anxiety, hostility) variables. Spontaneous speech samples from 11 independent studies of young adults (> 60 seconds in length), employing one of three different speaking tasks, were examined (N = 1350). Confounding variables (i.e., sex, ethnicity) were statistically controlled for. The PCA identified six distinct domains of vocal expression. Collectively, vocal expression (defined in terms of these domains) modulated as a function of speech task and was related to cognitive and clinical variables. These findings provide empirically-grounded implications for the study of vocal expression in psychological and clinical sciences.

Keywords: voice, expression, prosody, acoustic, frequency, intensity

Vocal communication is a foundational tool for exchanging both explicit and implicit information between people, and is an important indicator of trait-related individual differences (Roy, Bless, & Heisey, 2000) and emotional and cognitive states (Cohen, Dinzeo, Donovan, Brown, & Morrison, 2014; Giddens, Barron, Clark, & Warde, 2010; Sobin & Alpert, 1999). Moreover, vocal communication is affected in a broad array of mental illnesses, such as schizophrenia (Cohen, Alpert, Nienow, Dinzeo, & Docherty, 2008), depression (Cannizzaro, Harel, Reilly, Chappell, & Snyder, 2004) and anxiety (Cohen, Kim, & Najolia, 2013; Laukka et al., 2008). For these reasons, vocal communication is important for both clinical and psychological sciences. Regarding the assessment of vocal communication, objective technologies that employ computerized acoustic analysis of digitized speech samples have existed for decades. The use of these technologies is a boon for many reasons, not the least of which involves near perfect “inter-rater” reliability (assuming similar analytic procedures), and high test-retest reliability (assuming similar recording conditions and speaking tasks) in healthy (Shriberg et al., 2010) and communication-disordered populations (Kim, Kent, & Weismer, 2011). Vocal analytic technologies are also important for computer-human interfaces – notably involving self-automation, emotion-assessment applications and telemedicine software (Cohen & Elvevag, 2014; Esposito & Esposito, 2012; Krajewski, Batliner, & Golz, 2009). At present, there is a lack of consensus regarding the optimal measures of vocal expression for psychological and clinical sciences. We sought to redress this issue by evaluating psychometric properties of key measures extracted from a large database of spontaneous speech procured from young adults.

Although a large literature on vocal expression exists, establishing a consensus on which measures to employ is quite difficult. In part, this reflects complexity in how vocal expression is conceptualized more generally. Consider that aspects of vocal expression vary considerably as a function of a wide range of contextual and individual difference variables, including: sex (Scherer, 2003), affective (Sobin & Alpert, 1999; Tolkmitt & Scherer, 1986; Batliner, Steidl, Hacker, & Nöth, 2008), arousal (Cohen, Hong, & Guevara, 2010; Johnstone et al., 2007), social (Nadig, Lee, Singh, Bosshart, & Ozonoff, 2010), speaking task (Scherer, 2003; Huttunen, Keranen, Vayrynen, Paakkonen, & Leino, 2011) and cognitive (Cohen, Dinzeo, et al., 2014) factors. Thus, the importance of an isolated speech measure may be relegated to specific contexts or groups of individuals. In effect, there is no isomorphic measure of vocal expressivity.

Even the structure of vocal measures is complicated and likely varies depending on whether “microscopic” or “macroscopic” aspects of speech are examined. Acoustic analysis is a technology that generally focuses on “micro” or brief vocal samples (e.g., utterances), in part, because it provides “high resolution” information about the physical processes involved in communication (Kent & Kim, 2003; Shriberg, et al., 2010). Theoretically based structural models of vocal expression at this “micro” level of analysis exist and generally focus on the physical functions involved in speaking and on physical anomalies that occur as part of communication disorders (Kent & Kim, 2003). Conceptually driven taxonomies of emotional expression (for example, based on changes during relatively brief speaking epochs) have also been developed (Banse & Scherer, 1996; Scherer, 1986; Sobin & Alpert, 1999). A complementary, but different, approach to understanding speech focuses on “macroscopic” features of vocal communication involving extended speech samples (generally > 30 seconds). This approach yields aggregate statistics characterizing how speech is produced, as well as absolute values and signal variability across entire speech samples. While the aforementioned “micro” approaches can provide much more nuanced information about the signal, the latter approach is indispensable for capturing more stable phenomena, particularly those requiring extended sampling. For example, there is evidence to suggest that pauses may become longer and more erratic as a function of “on-line” cognitive abilities being unavailable or restricted in some manner (Cohen, Dinzeo, et al., 2014). Hence, increased pause length may be a useful index of cognitive resource depletion due to fatigue (e.g., in airplane pilots; Huttunen, et al., 2011) or cognitive deficits more generally (e.g., in psychiatric or neurological disorders; Cohen & Elvevag, 2014; Cohen, McGovern, Dinzeo, & Covington, 2014).
Given the dynamic nature of pause behavior, analysis of brief vocal samplings is inadequate for approximating an individual’s cognitive abilities. From a pragmatic perspective, “macro” analytic approaches are typically automated in a way that “microscopic” approaches aren’t, as they focus on vocal features that are less influenced by individual outliers (and hence, don’t typically require manual data inspection). For these reasons, automated “macroscopic” methods are important for a broad range of psychological and clinical applications, and are the focus of this study.

It is worth providing a brief primer on acoustic analysis for readers with limited familiarity with this topic. “Macroscopic” speech indices typically focus on four different physical properties or signals (Alpert, Merewether, Homel, & Marz, 1986; Cohen, et al., 2010; Cohen, Minor, Najolia, & Lee Hong, 2009): a) the fundamental frequency (i.e., F0) – the lowest frequency originating from the vocal folds, which determines the subjectively perceived vocal “pitch”, b) the first formant frequency (F1) – important for vowel expression that is shaped by vertical tongue articulation, c) the second formant frequency (F2) – also important for vowel expression that is shaped by horizontal (i.e., back and forth) tongue articulation, and d) intensity (i.e., volume). In terms of characterizing these variables, various measures of speech production can be computed, defined as the absence of signal (e.g., average pause length), the presence of signal (e.g., average utterance length), variability of the signal (e.g., standard deviation of pause or utterance length) and number of discrete signal events (e.g., number of pauses). Similarly, a seemingly infinite number of summary measures (e.g., mean, standard deviation, range) can be computed for the F0, F1, F2 and intensity values. Moreover, variability statistics can be computed across different epochs, such that variability can be examined on small time scales (e.g., signal perturbation; change on the order of assessment “frames”; 10-50 milliseconds), within utterances (e.g., consecutive voiced frames; typically 250 – 1500 ms), across key sections of the speech sample, or across the sample in its entirety.

It should be clear that a massive number of acoustic variables can be computed from speech. This is an issue in evaluating findings across literatures where inconsistency in variables is likely the rule rather than the exception. Consider a recent meta-analysis of published studies evaluating vocal deficits in patients with schizophrenia versus nonpsychiatric controls (Cohen, Mitchell, & Elvevag, 2014). Across 13 studies appropriate for review, a total of 10 different variables were reported. These variables appeared an average of 2.5 times across the thirteen studies, and each individual study reported an average of only 1.92 variables. Even more importantly, there were dramatic differences in effect sizes reported across variables, even among those that were conceptually related, suggesting that some but not all variables are important for understanding this disorder.

Recent efforts employing sophisticated data reduction strategies have focused on “macroscopic” level speech data. Of particular note, Batliner (2011) employed feature vector analysis of 4,000 speech (acoustic and linguistic) features from a large corpus of speech samples from child-robotic interactions to derive a more modest set of variables (e.g., duration of speech, intensity, pitch, formant spectrum, voice quality). Other similar efforts, employing a range of statistical strategies, have been conducted (e.g., Schuller, Seppi, Batliner, Maier & Steidl, 2007a; Vogt & Andre, 2005), and ongoing exchanges have been established to aid in organizing analytic approaches (e.g., Eyben, Weninger, Groß, & Schuller, 2013; Schuller, Batliner, Seppi, Steidl, Vogt, & Amir, 2007b; Schuller, Steidl, Batliner, Vinciarelli, Scherer, Ringeval, & Kim, 2013). While this work provides critical insight for the application of acoustic technologies, information regarding the psychometrics of these variables, notably in terms of the incremental validity of individual variables, internal consistency and factor structure, is not explicitly clear. Moreover, most prior studies focus on the predictive power of acoustic variables in classifying emotional states – an important endeavor in that emotional expression is closely tied to vocal expression. However, vocal expression is a function of a wide range of contextual, cognitive and clinical variables as well, and exploring how vocal expression is tied to these variables is a critical complement to understanding their validity.

In the present study, we sought to evaluate the psychometric properties of global acoustic features of spontaneous speech as a function of speech task (i.e., tapping different cognitive and contextual functions) and clinical (i.e., hostility, depression, anxiety) and neuropsychological (i.e., verbal fluency) variables. We focused on global vocal signals, involving F0, F1, F2 and intensity, and vocal production variables, using a systematically-defined and limited set of variables. Our selection process is elaborated on in the methods section. It is noteworthy that the number of variables examined is not necessarily important: in Batliner, Steidl, Schuller, Seppi, Laskowski, and Aharonson (2006), performance of a classification system using 32 vocal features was similar to that using 1000 features. Our focus on a limited set of features facilitated a more qualitative evaluation of the potential overlap, independence and redundancy of variables than could be achieved in studies analyzing large variable sets (e.g., by reporting zero-order correlation matrices). We subjected non-redundant vocal variables to Principal Components Analysis (PCA) for data reduction purposes. PCA was used because it is a relatively straightforward and interpretable analytic strategy that has been employed in studies of brief vocal utterances (Slavin & Ferrand, 1995; Yamashita et al., 2013). The resulting factors were subjected to validity analysis – examining a) the degree to which the factor scores changed as a function of different speaking tasks, b) their relative associations to a clinical neuropsychological test of speech production (i.e., semantic verbal fluency test), and c) their associations with measures of clinical symptomatology (i.e., depression, anxiety and hostility).

Method

Participants

Data were aggregated from 11 separate studies conducted at large public universities. As part of these studies, participants were asked to provide spontaneous speech samples. Descriptive statistics and study data are provided in Table 1. In total, data were available for 1350 undergraduate students who reported English was their primary language. Approximately two-thirds of this sample was female (i.e., 65.58%) and three-quarters was Caucasian (i.e., 78.65%). Each of the studies was approved by the appropriate Institutional Review Boards and all subjects provided written informed consent prior to beginning the study.

Table 1. Descriptive and study characteristics.

| Study | N | N Speech Samples | % Female | % Caucasian | Speech Length | Speech Task |
|---|---|---|---|---|---|---|
| 1 | 79 | 1 | 49.9% | 84.8% | 200 sec | Superficial |
| 2 | 121 | 3 | 70.4% | 86.4% | 20 sec | Restricted |
| 3 | 227 | 1 | 68.1% | 80.3% | 100 sec | Superficial |
| 4 | 139 | 4 | 79.1% | 79.9% | 60 sec | Introspective |
| 5 | 154 | 1 | 68.0% | 72.2% | 90 sec | Superficial |
| 6 | 114 | 1 | 66.7% | 68.3% | 90 sec | Superficial |
| 7 | 122 | 1 | 65.3% | 84.7% | 200 sec | Superficial |
| 8 | 115 | 2 | 57.9% | 82.1% | 100 sec | Superficial |
| 9 | 96 | 4 | 69.0% | 87.0% | 60 sec | Introspective |
| 10 | 82 | 1 | 60.2% | 79.5% | 60 sec | Superficial |
| 11 | 101 | 4 | 66.8% | 60.0% | 60 sec | Introspective |

Speaking Tasks

Across studies, participants were asked to produce speech in one of three different tasks that varied in topical scope, involving: 1) “Superficial” – discussing daily routines, hobbies and/or living situations, 2) “Restricted” – discussing experiences and reactions to neutral images from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2005) (e.g., door, lamp), and 3) “Introspective” – discussing autobiographical memories that were neutral in tone (e.g., life events or changes that were not inherently pleasant or unpleasant). For all tasks, instructions and stimulus presentation (e.g., IAPS slides) were automated on a computer and participants were encouraged to speak as much as possible. Research assistants read all instructions to the participants, but were not allowed to speak while the participant was being recorded. Additional information regarding the tasks is provided in Table 1. We expected systematic differences in vocal expression across the three tasks. Based on prior research from our lab using these tasks (Cohen, et al., 2010) and from the extant literature more generally (Scherer, 2003; Huttunen, et al., 2011), we reasoned that the Restricted task was a particularly challenging task, at least cognitively, in that participants were asked to produce speech that was restricted in topic and in response to stimuli that were artificial in nature. Thus, we expected that vocal production and signal (e.g., F0) variability within speech would be shortest/lowest for this task. Conversely, the Superficial and Introspective tasks involved content that was general and more automatic (i.e., content that is relatively easy to retrieve from autobiographical stores) in nature, so we expected vocal production and signal variability for these tasks to be greater/higher than for the Restricted task.

Acoustic Analysis of Speech

The Computerized Assessment of Natural Speech protocol (CANS), developed by our lab to assess vocal expression from spontaneous speech, was employed here. Speech was digitally recorded at a 16-bit resolution and a sampling frequency of 44,100 Hertz using headset microphones. The CANS protocol takes advantage of Praat software (Boersma & Weenink, 2013), a shareware program that has been used extensively in speech pathology and linguistic studies, as well as macros developed by our lab. Sound files are organized into “frames” for analysis, which for the present study was set at a rate of 100 per second. During each frame, F0, F1, F2 and intensity are quantified. Based on prior research examining optimization filters for measuring fundamental frequency in automated research (Vogel, Maruff, Snyder, & Mundt, 2009), we applied a low (i.e., 75 Hertz) and high (i.e., 300 Hertz)-pass filter. All frequency values were converted to semi-tones due to their non-linear nature. As noted in the introduction, there is a nearly limitless number of acoustic variables that can be computed. We employed a systematic approach to defining our acoustic variables. This approach to characterizing vocal signals was informed by work from studies of clinical populations both from our lab (Cohen, et al., 2008; Cohen, et al., 2010; Cohen, et al., 2009; Cohen, Morrison, Brown, & Minor, 2012) and others (e.g., Cannizzaro, et al., 2004; Alpert, et al., 1986; Laukka, et al., 2008), and is utilized in communication sciences more generally (e.g., Johnstone, et al., 2007; Tolkmitt & Scherer, 1986). Furthermore, this approach is consistent with the larger theoretical speech prosody literature (Huttunen, et al., 2011; Scherer, 2003; Sobin & Alpert, 1999), and provides a straightforward conceptual framework for understanding signal properties. It is not, by any means, exhaustive (see discussion section for potential limitations).
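The semitone conversion mentioned above can be illustrated with the standard logarithmic formula, 12 × log2(f / reference). This is a minimal sketch only: the reference frequency used in the CANS protocol is not stated in the text, so `ref_hz` is left as a required, hypothetical parameter, and the function name is illustrative.

```python
import math


def hz_to_semitones(freq_hz: float, ref_hz: float) -> float:
    """Convert a frequency in Hertz to semitones relative to ref_hz.

    ref_hz is an assumption of this sketch; the source does not report
    which reference frequency was used.
    """
    if freq_hz <= 0 or ref_hz <= 0:
        raise ValueError("frequencies must be positive")
    return 12.0 * math.log2(freq_hz / ref_hz)


# The 75-300 Hertz analysis window reported in the text spans two octaves,
# i.e., 24 semitones, regardless of the reference chosen.
window_width = hz_to_semitones(300.0, 75.0)
```

On this scale, equal frequency ratios map onto equal distances, so a doubling of frequency corresponds to 12 semitones at any point in the range.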

For each of the F0, F1, F2 and intensity signals, we computed mean and variability values. Variability was defined at three different temporal levels: 1) “frame” (i.e., “Perturbation”) - signal change in consecutive frames (see footnote 1), 2) “local” - variability within utterances, and 3) “global” - variability across utterances (i.e., across the speech sample). Local and global variability were each defined in terms of two commonly-used computations: standard deviation and range scores (i.e., average difference between the highest and lowest values within utterances). Additionally, speech production was examined in terms of the presence (i.e., utterance) or absence (i.e., pause) of signal. “Utterances” were defined as an epoch of F0 signal greater than 150 milliseconds in length with no contiguous pause greater than 50 milliseconds, whereas “Pauses” were defined in terms of 50 milliseconds of signal absence. Both mean and standard deviation values were computed for these variables. An additional summary variable of speech production (i.e., percent silence) was included in this study. These variables are listed in Table 2.

Table 2. Vocal variables examined in this study.

| Variable | Description | Variable | Description |
|---|---|---|---|
| Pause Variables | | | |
| Silence Percent | Percentage of time without F0 signal | Pause Number | Total number of pauses |
| Pause SD | Standard deviation of pause length excluding the first and last pauses. | Pause Mean | Average pause length in milliseconds (ms), excluding the first and last pauses. |
| Utterance Variables | | | |
| Utterance Mean | Average utterance length in milliseconds (ms) | Utterance SD | Standard deviation of utterance length in milliseconds (ms) |
| Fundamental Frequency (F0) Variables | | | |
| F0 Mean | M computed within each utterance and averaged across all utterances | F0 SD Local | Average of SDs computed within each utterance. |
| F0 SD Global | SD of SDs computed within each utterance. | F0 Range Local | Average of range scores computed within each utterance. |
| F0 Range Global | SD of range scores computed within each utterance. | F0 Perturbation | Absolute value of average change in consecutively voiced frames within utterance |
| First Formant (F1) Variables | | | |
| F1 Mean | M computed within each utterance and averaged across all utterances | F1 SD Local | Average of SDs computed within each utterance. |
| F1 SD Global | SD of SDs computed within each utterance. | F1 Range Local | Average of range scores computed within each utterance. |
| F1 Range Global | SD of range scores computed within each utterance. | | |
| Second Formant (F2) Variables | | | |
| F2 Mean | M computed within each utterance and averaged across all utterances | F2 SD Local | Average of SDs computed within each utterance. |
| F2 SD Global | SD of SDs computed within each utterance. | F2 Range Local | Average of range scores computed within each utterance. |
| F2 Range Global | SD of range scores computed within each utterance. | | |
| Intensity Variables | | | |
| Intensity Mean | M computed within each utterance and averaged across all utterances | Intensity SD Local | Average of SDs computed within each utterance. |
| Intensity SD Global | SD of SDs computed within each utterance. | Intensity Range Local | Average of range scores computed within each utterance. |
| Intensity Range Global | SD of range scores computed within each utterance. | Intensity Perturbation | Absolute value of average change in consecutively voiced frames within utterance |

Note – M = mean; SD = standard deviation; Range = Maximum – Minimum
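To make the utterance, pause, local and global definitions concrete, the following sketch segments a frame-level F0 track and computes the SD Local and SD Global scores described in Table 2. It is an illustration under stated assumptions, not the CANS implementation: the function names are hypothetical, unvoiced frames are represented as `None`, an unvoiced run of at least 50 ms is treated as ending an utterance (shorter runs are bridged), and the sample (n - 1) standard deviation is used.

```python
from statistics import mean, stdev

FRAME_MS = 10  # 100 frames per second, as stated in the text


def segment_utterances(f0_track, min_utt_ms=150, min_pause_ms=50):
    """Split a frame-level F0 track into utterances.

    f0_track: one entry per frame; None marks a frame without F0 signal.
    Assumption: an unvoiced run of at least min_pause_ms ends an utterance,
    and shorter unvoiced runs are bridged.
    """
    pause_frames = min_pause_ms // FRAME_MS
    spans = []  # (first_frame, last_frame, voiced_values)
    values, gap = [], 0
    first = last = 0
    for i, f0 in enumerate(f0_track):
        if f0 is not None:
            if not values:
                first = i
            values.append(f0)
            last, gap = i, 0
        elif values:
            gap += 1
            if gap >= pause_frames:
                spans.append((first, last, values))
                values, gap = [], 0
    if values:
        spans.append((first, last, values))
    # Keep only epochs longer than the minimum utterance duration.
    return [v for (a, b, v) in spans if (b - a + 1) * FRAME_MS > min_utt_ms]


def local_and_global_sd(utterances):
    """Local = mean of within-utterance SDs; Global = SD of those SDs.

    Requires at least two utterances, each with at least two voiced frames.
    """
    sds = [stdev(u) for u in utterances]
    return mean(sds), stdev(sds)
```

The same two-step aggregation (a statistic within each utterance, then its mean or SD across utterances) applies to the range scores and to the F1, F2 and intensity signals.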

Verbal Fluency

Measures of verbal fluency were included in three of the studies examined and were included here to evaluate the convergent validity of our speech production measures. For two of these studies, semantic fluency tests (i.e., fruits and vegetables) from the Repeatable Battery for the Assessment of Neuropsychological Status (Randolph, 1998) were administered. For the third study, a different semantic fluency test (e.g., animal naming) was used (Green et al., 2004). Data for other types of verbal fluency (e.g., phonological fluency) and other speaking tasks more generally (e.g., repetition tests) were not collected as part of these studies, and hence were not available for analysis here. Scores were standardized by study to account for potential differences in raw scores across tests. Based on evidence that speech rate has been significantly associated with increased verbal fluency in children and adolescents (Martins, Vieira, Loureiro, & Santos, 2007), we predicted that greater verbal fluency ability would be associated with shorter pauses in speech. Note that empirical support for this notion is not overwhelming, particularly in that Martins, et al. (2007) reported that verbal fluency was not associated with number of extended pauses (> 4000 ms).
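Standardizing scores by study can be sketched as a within-study z-score. The example below is illustrative only; the record layout and function name are hypothetical, and the sample (n - 1) standard deviation is an assumption, since the text does not specify the computation.

```python
from collections import defaultdict
from statistics import mean, stdev


def zscore_within_study(records):
    """records: list of (study_id, raw_score) pairs.

    Returns z-scores in the original order, each computed against the
    mean and SD of the participant's own study.
    """
    by_study = defaultdict(list)
    for study, score in records:
        by_study[study].append(score)
    stats = {s: (mean(v), stdev(v)) for s, v in by_study.items()}
    return [(score - stats[study][0]) / stats[study][1]
            for study, score in records]
```

After this step, a score of 0 denotes the average performance within a given study, so tests with different raw-score scales can be pooled.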

Mental Health Symptoms

Clinical symptoms were measured using the Brief Symptom Inventory (BSI; Derogatis & Melisaratos, 1983), which measures a broad range of psychopathology during the past seven days. We were particularly interested in depression (i.e., “feeling no interest in things”), anxiety (i.e., “feeling tense or keyed up”) and hostility (i.e., “having urges to break or smash things”) from this instrument, as these symptoms and emotional conditions have been related to vocal expression in other studies. Specifically, we predicted that both depression and anxiety would be associated with less speech production and speech variability (Cannizzaro, et al., 2004; Cohen, Kim, & Najolia, 2013); that anxiety would also be associated with greater F0 perturbation variability (Laukka et al., 2008); and that hostility would be associated with greater signal variability (Sobin & Alpert, 1999). The BSI has well-documented psychometric properties and has been used in hundreds of published, peer-reviewed studies to date.

Analyses

Analyses were conducted in five steps. First, we evaluated demographic (i.e., sex, ethnicity) variables in their relationship to each of the 28 vocal variables in order to evaluate their potential impact on subsequent analyses. Non-Caucasian groups were collapsed into a single group due to their relatively small sample sizes. Second, we computed a zero-order correlation matrix between the 28 vocal variables to identify redundancies (defined as r values > .85) that could be excluded from further analyses. Third, the remaining variables were subjected to Principal Component Analysis (PCA) to further reduce the number of items. Because our data were potentially inter-correlated, oblique rotations (i.e., Promax) were used. Variables with notable cross-loading (weights > .40 on multiple factors) or without loading (weights < .40 on any factor) were excluded from the final PCA solution – though they were examined in subsequent analyses. We reran the PCA in men and women and in Caucasians and non-Caucasians to ensure that the structure did not grossly vary across sex and ethnicity groups. Fourth, we evaluated the degree to which vocal variables changed as a function of speaking task using both ANOVAs and Logistic Regression. For the latter analyses, the speaking task was entered as a dichotomous dependent variable (i.e., Superficial versus Restricted, and Introspective versus Restricted) in two separate regressions, and PCA factors identified above were entered in a first (and single) step. In a second step, we entered the non-redundant vocal variables excluded from the final PCA solution. This second step allowed for the evaluation of whether the excluded vocal variables contribute meaningful variance to speaking task above that made by the factor scores. Pseudo-R² values (i.e., Cox & Snell) were used to evaluate the relative contributions of each step.
Finally, we employed hierarchical regressions to determine the relative contributions that vocal factors (step 1) and excluded vocal items (step 2) made to cognitive (i.e., verbal fluency) and clinical (i.e., depression, anxiety, hostility) variables. For normalization purposes, all “extreme scores” (i.e., more than 3.5 standard deviations (SD) above or below the mean) were truncated to values of 3.5 SD, and all variables were normalized (i.e., skew statistic < 2.0) prior to being included in the PCA. Because of the large sample size (and hence, high degree of statistical power), effect sizes are reported and evaluated when appropriate. For the fourth and fifth steps, vocal variables were transformed to statistically control for sex and ethnicity (using standardized residuals computed from linear regressions). Unless otherwise noted, all variables are normally distributed.
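Two of the data-preparation steps described above, truncating extreme scores and removing the influence of sex and ethnicity, can be sketched as follows. This is a hedged illustration rather than the procedure actually run: the function names are hypothetical, truncation is assumed to be symmetric (values beyond ±3.5 SD set to ±3.5), and "standardized residual" is assumed to mean the ordinary least squares residual divided by the residual standard error.

```python
from statistics import mean, stdev


def clip_extreme_z(values, limit=3.5):
    """Z-score values, then truncate scores beyond +/- limit SD to the limit."""
    m, s = mean(values), stdev(values)
    return [max(-limit, min(limit, (v - m) / s)) for v in values]


def standardized_residuals(y, covariates):
    """Residuals of y regressed on covariates (plus an intercept), divided
    by the residual standard error. covariates: one row of numbers per case,
    e.g., [sex, ethnicity] coded 0/1 (coding is an assumption here)."""
    X = [[1.0] + [float(c) for c in row] for row in covariates]
    n, k = len(X), len(X[0])
    # Augmented normal equations [X'X | X'y]
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    beta = [A[i][k] / A[i][i] for i in range(k)]
    resid = [yi - sum(b * x for b, x in zip(beta, r)) for r, yi in zip(X, y)]
    se = (sum(e * e for e in resid) / (n - k)) ** 0.5
    return [e / se for e in resid]
```

The residualized scores are uncorrelated with the covariates by construction, which is what allows later task and symptom effects to be interpreted independently of sex and ethnicity.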

Results

Demographic Variables

Of the 28 variables examined, six were statistically different between men and women – though only four of these differences exceeded a small effect size (d > .20). Women versus men had higher mean F0, F1 and F2 values (d’s > .50), less F0 perturbation (d = .42) and longer and more variable utterances (d’s < .20) (all p’s < .05).

Caucasians versus non-Caucasians were statistically different on 17 of the 28 variables, though none of the consequent group differences exceeded a small effect size. Caucasians showed less variability in virtually all F0, F1, F2 and intensity variables (p’s < .05). Sex and ethnicity were considered potential confounds for this study.
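For readers less familiar with the effect size metric used here, Cohen's d can be computed as the difference between group means divided by the pooled standard deviation. The sketch below assumes this pooled-SD variant; the text does not state which variant was used, and the function name is illustrative.

```python
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled (n - 1 weighted) standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a)
                  + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5
```

With samples of this size, even very small values of d can reach statistical significance, which is why the d > .20 benchmark is applied alongside p values.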

Redundancy Analysis

Across the F0, F1, F2 and intensity variables, the Range Local variables were redundant with the relevant SD Local scores and were excluded from the PCA, as they were judged to be less stable indicators of variability compared to the SD scores (e.g., computed based on two versus all data points in an utterance). Pause and Utterance SD scores were also redundant with their relevant Mean scores, and the Intensity Range Global score was redundant with the Intensity SD Global. These scores were also excluded from the PCA. Correlations among the remaining, non-redundant variables are presented in a zero-order matrix (see Table 3).

Table 3. Zero-order correlation matrix of non-redundant vocal variables.

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1. Silence | 1.00 | |||||||||||||||||||

| 2. Pause M | 0.69 | 1.00 | ||||||||||||||||||

| 3. Pause N | −0.24 | −0.38 | 1.00 | |||||||||||||||||

| 4. Utterance M | −0.63 | −0.12 | −0.23 | 1.00 | ||||||||||||||||

| 5. F0 M | −0.17 | 0.00 | −0.15 | 0.26 | 1.00 | |||||||||||||||

| 6. F0 SD L | −0.20 | −0.02 | −0.03 | 0.31 | 0.41 | 1.00 | ||||||||||||||

| 7. F0 SD G | −0.09 | −0.02 | 0.03 | 0.12 | 0.45 | 0.84 | 1.00 | |||||||||||||

| 8. F0 Range G | −0.26 | −0.09 | −0.13 | 0.35 | 0.45 | 0.65 | 0.78 | 1.00 | ||||||||||||

| 9. F0 Perturb | 0.31 | 0.23 | 0.00 | −0.26 | −0.35 | 0.03 | −0.09 | −0.15 | 1.00 | |||||||||||

| 10. F1 M | −0.17 | −0.14 | 0.15 | 0.05 | 0.30 | 0.15 | 0.16 | 0.13 | −0.25 | 1.00 | ||||||||||

| 11. F1 SD L | −0.23 | −0.07 | 0.10 | 0.32 | −0.20 | 0.13 | 0.02 | 0.12 | 0.13 | 0.03 | 1.00 | |||||||||

| 12. F1 SD G | 0.16 | 0.00 | 0.22 | −0.27 | −0.43 | −0.13 | −0.07 | −0.08 | 0.20 | −0.01 | 0.57 | 1.00 | ||||||||

| 13. F1 Range G | −0.18 | −0.15 | 0.21 | 0.10 | −0.47 | −0.10 | −0.14 | −0.02 | 0.14 | −0.13 | 0.47 | 0.60 | 1.00 | |||||||

| 14. F2 M | −0.12 | −0.05 | −0.02 | 0.12 | 0.52 | 0.29 | 0.30 | 0.25 | −0.18 | 0.51 | 0.12 | −0.03 | −0.20 | 1.00 | ||||||

| 15. F2 SD L | −0.38 | −0.17 | 0.08 | 0.44 | 0.07 | 0.30 | 0.18 | 0.32 | −0.03 | −0.11 | 0.48 | 0.09 | 0.28 | −0.16 | 1.00 | |||||

| 16. F2 SD G | −0.15 | −0.23 | 0.24 | −0.04 | 0.12 | 0.14 | 0.21 | 0.23 | −0.12 | 0.05 | 0.09 | 0.18 | 0.17 | −0.01 | 0.51 | 1.00 | ||||

| 17. F2 Range G | −0.37 | −0.28 | 0.15 | 0.30 | 0.15 | 0.24 | 0.23 | 0.35 | −0.11 | −0.06 | 0.22 | 0.10 | 0.30 | 0.00 | 0.55 | 0.73 | 1.00 | |||

| 18. Intens M | −0.06 | 0.14 | −0.18 | 0.26 | −0.05 | 0.01 | −0.07 | −0.05 | −0.08 | −0.23 | 0.10 | −0.14 | 0.01 | −0.16 | 0.14 | −0.07 | 0.01 | 1.00 | ||

| 19. Intens SD L | −0.22 | −0.09 | 0.11 | 0.23 | −0.03 | 0.10 | 0.02 | 0.11 | 0.03 | −0.01 | 0.26 | 0.06 | 0.22 | 0.01 | 0.24 | 0.07 | 0.16 | 0.32 | 1.00 | |

| 20. Intens SD G | 0.00 | −0.02 | 0.10 | −0.04 | −0.05 | 0.00 | 0.00 | 0.01 | 0.02 | 0.00 | 0.07 | 0.11 | 0.12 | 0.01 | 0.01 | 0.04 | 0.03 | 0.04 | 0.77 | 1.00 |

| 21. Intens Perturb | 0.23 | 0.22 | 0.05 | −0.18 | 0.02 | 0.09 | 0.07 | −0.01 | 0.44 | 0.15 | −0.02 | 0.07 | 0.00 | 0.10 | −0.13 | −0.16 | −0.18 | −0.41 | 0.12 | 0.02 |

Note – M = mean; SD = standard deviation; L = local; G = global; Perturb = perturbation; Intens = Intensity
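The redundancy screen (flagging variable pairs with r > .85) can be sketched as a pass over all pairwise Pearson correlations. The variable names and data below are hypothetical, and applying the threshold to the absolute value of r is an assumption of this sketch.

```python
from itertools import combinations
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def redundant_pairs(variables, threshold=0.85):
    """variables: dict mapping variable name -> list of scores.

    Returns (name_a, name_b, r) for every pair with |r| above threshold.
    """
    pairs = []
    for a, b in combinations(variables, 2):
        r = pearson_r(variables[a], variables[b])
        if abs(r) > threshold:
            pairs.append((a, b, round(r, 2)))
    return pairs
```

Which member of a flagged pair to drop remains a substantive judgment, as in the decision above to retain the SD scores over the range scores.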

Factor Structure

An initial PCA was conducted with 21 variables, yielding 6 factors with eigenvalues greater than 1 that explained 71% of the variance. Inspection of the Pattern Matrices yielded six cross-loaded (i.e., multiple beta-weights > .40) or non-fitting items (i.e., all beta-weights < .40) (i.e., Silence Percent, F0, F1, F2, and Intensity Mean and Utterance M), for which removal yielded a better fit. This yielded a six-factor solution explaining 78.55% of the variance. Separate PCAs computed for men versus women (e.g., six factors explaining 77.78% and 76.26% of the variance respectively) and for Caucasians versus Non-Caucasians (e.g., six factors explaining 78.77% and 77.15% of the variance respectively) revealed identical structures, suggesting that the factor structure is invariant across sex and ethnicity. These data are included in Table 4. The component correlation matrix suggested that the factors were relatively independent, with only two of 21 possible correlations exceeding a value of .20 (r = .31, F1 & F2 Variability; r = .29, F0 & F2 Variability).
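The item-screening rule applied to the pattern matrix (exclude items with weights > .40 on multiple factors or on no factor) can be expressed as a small classifier. This sketch is illustrative: the function name and labels are hypothetical, and the cutoff is applied to the absolute value of each weight, which is an assumption.

```python
def screen_items(pattern, cutoff=0.40):
    """pattern: dict mapping item name -> list of factor weights.

    Labels each item 'retain' (exactly one salient weight),
    'cross-loaded' (several) or 'non-loading' (none).
    """
    labels = {}
    for item, weights in pattern.items():
        salient = sum(abs(w) > cutoff for w in weights)
        if salient == 1:
            labels[item] = "retain"
        elif salient > 1:
            labels[item] = "cross-loaded"
        else:
            labels[item] = "non-loading"
    return labels


# The first row uses the Pause mean weights reported in Table 4;
# the other two rows are invented purely for illustration.
example = {
    "Pause mean": [-0.06, -0.06, -0.02, -0.02, 0.33, -0.69],
    "hypothetical cross-loader": [0.55, 0.10, 0.48, 0.00, 0.05, 0.02],
    "hypothetical non-loader": [0.20, 0.31, -0.15, 0.10, 0.05, 0.38],
}
labels = screen_items(example)
```

Under this rule, Pause mean is retained despite its secondary weight of 0.33, because only one weight exceeds the cutoff.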

Table 4. Pattern Matrix from the Principal Component Analysis.

| | F0 Variability | F2 Variability | F1 Variability | Intensity Variability | Signal Perturbation | Pauses |
|---|---|---|---|---|---|---|
| F0 SD Global | 0.98 | −0.06 | −0.04 | −0.05 | 0.05 | 0.11 |
| F0 SD Local | 0.92 | 0.01 | −0.01 | 0.00 | 0.11 | 0.00 |
| F0 Range Global | 0.85 | 0.08 | 0.03 | 0.02 | −0.11 | −0.07 |
| F2 SD Global | −0.07 | 0.93 | −0.14 | −0.06 | 0.07 | 0.14 |
| F2 Range Global | 0.02 | 0.90 | −0.03 | 0.00 | −0.01 | 0.05 |
| F2 SD Local | 0.10 | 0.71 | 0.20 | 0.05 | −0.04 | −0.18 |
| F1 SD Local | 0.14 | −0.02 | 0.87 | 0.04 | −0.09 | −0.12 |
| F1 SD Global | −0.05 | −0.12 | 0.87 | −0.09 | 0.09 | 0.13 |
| F1 Range Global | −0.13 | 0.09 | 0.75 | 0.04 | 0.01 | 0.12 |
| Intensity SD Global | −0.05 | −0.07 | −0.05 | 0.95 | 0.00 | 0.04 |
| Intensity SD Local | 0.02 | 0.06 | 0.04 | 0.93 | 0.03 | −0.01 |
| Intensity Perturbation | 0.14 | −0.06 | −0.09 | 0.07 | 0.84 | 0.08 |
| F0 Perturbation | −0.08 | 0.11 | 0.12 | −0.04 | 0.82 | −0.11 |
| Pause number | 0.01 | 0.02 | 0.09 | 0.01 | 0.17 | 0.87 |
| Pause mean | −0.06 | −0.06 | −0.02 | −0.02 | 0.33 | −0.69 |

Vocal Variables Across Speaking Tasks

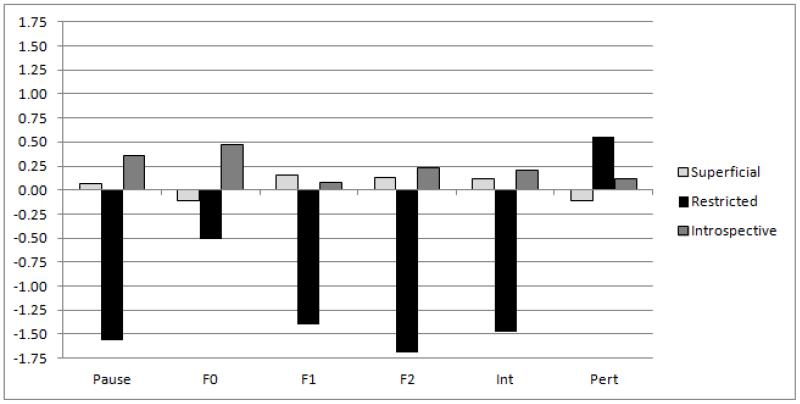

Each of the PCA-based vocal domain scores differed significantly between the Restricted speaking task and the Superficial and Introspective speaking tasks (see Figure 1). The effect sizes for the Pause, F1, F2 and Intensity variables were in the large range (d’s > 1.56), whereas those for F0 and Perturbation were more modest (d’s > .39). Logistic regressions are presented in Table 5. The chi-square values for all steps were significant, though inspection of the pseudo R-squared values suggests that the six PCA domains accounted for most of the explained variance. In the Restricted condition, pauses became shorter and F1 and Intensity Variability decreased. F2 and F0 Variability also decreased as compared to the Introspective and Superficial speech, respectively. In the second step, F1 Mean decreased during the Restricted condition for both regressions. Compared to the Introspective speech condition, Utterance Means became longer and Intensity increased.

Figure 1. Standardized differences in vocal domains as a function of speaking task
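The standardized differences reported above are expressed as Cohen's d. A minimal sketch of one common way to compute d, using the pooled standard deviation, is given below. The exact formula used in this study is not stated in this section, so the pooling choice is an assumption, and the scores are hypothetical rather than study data.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Hypothetical domain scores for two speaking tasks (not the study's data).
restricted = [-1.2, -0.8, -1.0, -0.6, -0.9]
introspective = [0.4, 0.9, 0.2, 0.7, 0.8]

d = cohens_d(introspective, restricted)
print(round(d, 2))
```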

Table 5. Logistic Regressions evaluating the relative contributions of vocal domains (identified from the PCA) and additional vocal variables (excluded from the PCA) to speaking task.

| | DV = Introspective v. Restricted Task | | | DV = Superficial v. Restricted Task | | |
|---|---|---|---|---|---|---|
| | B (SE B) | Wald | Exp(B) | B (SE B) | Wald | Exp(B) |
| Step 1. Vocal Factors | χ2 = 387.83*; ΔR2 = .58 | | | χ2 = 461.99*; ΔR2 = .37 | | |
| Pause | 2.11 (0.32) | 44.30* | 8.24 | 1.27 (0.18) | 49.75* | 3.55 |
| F0 Variability | 0.98 (0.32) | 9.33* | 2.65 | −0.16 (0.20) | 0.61 | 0.85 |
| F1 Variability | 0.65 (0.34) | 3.59 | 1.92 | 0.78 (0.21) | 14.16* | 2.18 |
| F2 Variability | 0.63 (0.34) | 3.38 | 1.88 | 1.06 (0.21) | 25.60* | 2.90 |
| Intensity Variability | 0.87 (0.38) | 5.13* | 2.38 | 0.72 (0.22) | 11.10* | 2.06 |
| Perturbation | 0.02 (0.26) | 0.00 | 1.02 | −0.23 (0.18) | 1.66 | 0.80 |
| Step 2. Additional Vocal Variables | χ2 = 31.32*; ΔR2 = .03 | | | χ2 = 86.25*; ΔR2 = .06 | | |
| Silence Percent | 1.31 (0.60) | 4.80 | 3.71 | −0.16 (0.45) | 0.13 | 0.85 |
| Utterance Mean | 0.71 (0.43) | 2.77 | 2.04 | −0.80 (0.36) | 4.79* | 0.45 |
| F0 Mean | −0.95 (0.59) | 2.62 | 0.39 | −0.05 (0.30) | 0.03 | 0.95 |
| F1 Mean | 1.59 (0.44) | 13.28 | 4.92 | 1.51 (0.29) | 27.82* | 4.54 |
| F2 Mean | −0.23 (0.40) | 0.34 | 0.79 | −0.17 (0.28) | 0.36 | 0.84 |
| Intensity Mean | 0.07 (0.33) | 0.04 | 1.07 | −0.61 (0.25) | 6.13* | 0.54 |

Note - ΔR2 = Cox and Snell pseudo R-squared

\* = p < .05
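Two quantities in Table 5 follow directly from standard formulas, sketched here under stated assumptions. Exp(B) is the exponentiated logistic coefficient (an odds ratio), and the Cox and Snell pseudo R-squared can be written as 1 − exp(−χ2/n), where χ2 is the model likelihood-ratio chi-square. The function names are illustrative, and the sample size passed to `cox_snell_r2` below is hypothetical because the per-regression n is not given in this section.

```python
from math import exp

def odds_ratio(b):
    """Exp(B): multiplicative change in the odds per one-unit increase in the predictor."""
    return exp(b)

def cox_snell_r2(model_chi2, n):
    """Cox and Snell pseudo R-squared from a likelihood-ratio chi-square and sample size."""
    return 1.0 - exp(-model_chi2 / n)

# Coefficients for the Pause factor as printed in Table 5.
print(round(odds_ratio(2.11), 2), round(odds_ratio(1.27), 2))

# Hypothetical chi-square and n, for illustration only.
print(round(cox_snell_r2(100.0, 200), 3))
```

Small differences between these values and the tabled Exp(B) entries are what one would expect when the coefficients have been rounded to two decimals before exponentiation.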

Clinical & Verbal Fluency Correlates of Vocal Variables

Table 6 contains the results of the hierarchical regressions. The PCA-based domains made significant contributions to the variance in verbal fluency, depression and hostility, whereas the contributions of the additional vocal scores were significant only for the hostility measure. Inspection of the beta weights revealed that increased pauses were associated with better verbal fluency performance, that increased vocal perturbation was associated with more severe depressive symptoms, and that no specific vocal factor was associated with hostility, though perturbation increased at a trend level as a function of increased hostility. With respect to the additional variables, higher F0 Mean scores were associated with more hostility. In sum, the PCA-based factors explained a modest but meaningful amount of variance in clinical and cognitive variables.

Table 6. Hierarchical linear regressions evaluating the relative contributions of vocal domains (identified from the PCA) and additional vocal variables (excluded from the PCA) to cognitive and clinical variables.

| | DV = Verbal Fluency | | | DV = Depression | | | DV = Anxiety | | | DV = Hostility | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | B (SE B) | β | t | B (SE B) | β | t | B (SE B) | β | t | B (SE B) | β | t |
| Step 1: Vocal Factors | ΔR2 = .05, ΔF = 2.49* | | | ΔR2 = .05, ΔF = 2.28* | | | ΔR2 = .03, ΔF = 1.14 | | | ΔR2 = .05, ΔF = 2.28* | | |
| Pause | 0.27 (0.07) | 0.23 | 3.73* | −0.35 (0.56) | −0.04 | −0.62 | −0.14 (0.49) | −0.02 | −0.29 | 0.32 (0.36) | 0.05 | 0.88 |
| F0 | 0.08 (0.08) | 0.07 | 1.10 | −0.02 (0.51) | 0.00 | −0.04 | 0.09 (0.45) | 0.01 | 0.19 | −0.51 (0.33) | −0.10 | −1.55 |
| F1 | 0.03 (0.07) | 0.03 | 0.49 | 0.82 (0.54) | 0.10 | 1.51 | 0.48 (0.48) | 0.07 | 1.00 | 0.14 (0.35) | 0.03 | 0.40 |
| F2 | −0.01 (0.08) | −0.01 | −0.13 | −0.65 (0.54) | −0.07 | −1.21 | −0.75 (0.48) | −0.10 | −1.57 | −0.57 (0.35) | −0.10 | −1.63 |
| Intensity | 0.00 (0.07) | 0.00 | 0.00 | −0.52 (0.54) | −0.06 | −0.95 | 0.49 (0.48) | 0.06 | 1.01 | 0.01 (0.35) | 0.00 | 0.03 |
| Perturbation | −0.01 (0.07) | −0.01 | −0.12 | 1.07 (0.45) | 0.15 | 2.40* | 0.25 (0.40) | 0.04 | 0.62 | 0.48 (0.29) | 0.11 | 1.65 |
| Step 2: Additional Vocal Variables | ΔR2 = .02, ΔF = .82 | | | ΔR2 = .01, ΔF = .59 | | | ΔR2 = .03, ΔF = 1.38 | | | ΔR2 = .05, ΔF = 1.99* | | |
| Silence Percent | −0.10 (0.27) | −0.09 | −0.37 | 2.09 (1.92) | 0.27 | 1.09 | 1.88 (1.69) | 0.27 | 1.11 | −1.81 (1.22) | −0.36 | −1.49 |
| Utterance Mean | 0.01 (0.24) | 0.01 | 0.05 | 1.47 (1.64) | 0.19 | 0.90 | 1.58 (1.44) | 0.23 | 1.09 | −1.43 (1.04) | −0.29 | −1.37 |
| F0 Mean | 0.05 (0.10) | 0.05 | 0.45 | −0.22 (0.65) | −0.04 | −0.34 | 1.32 (0.57) | 0.27 | 2.32 | 1.03 (0.41) | 0.28 | 2.51* |
| F1 Mean | 0.10 (0.07) | 0.11 | 1.41 | 0.56 (0.55) | 0.08 | 1.01 | −0.31 (0.49) | −0.05 | −0.64 | −0.10 (0.35) | −0.02 | −0.28 |
| F2 Mean | −0.05 (0.09) | −0.05 | −0.49 | 0.04 (0.51) | 0.01 | 0.08 | −0.20 (0.45) | −0.04 | −0.44 | −0.05 (0.32) | −0.01 | −0.14 |
| Intensity Mean | −0.06 (0.08) | −0.05 | −0.71 | 0.68 (0.53) | 0.10 | 1.27 | 0.28 (0.47) | 0.05 | 0.59 | 0.62 (0.34) | 0.14 | 1.84 |

\* = p < .05
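The step-wise statistics in Table 6 (change in R-squared and the associated F change) follow the usual hierarchical regression formula, sketched below. The function name and the example inputs are hypothetical; the study's sample sizes and the number of covariates entered before Step 1 are not restated in this section, so the values in the table cannot be reproduced from this sketch alone.

```python
def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """F statistic for the R-squared gained by adding k_added predictors.

    r2_reduced: R-squared before the step
    r2_full:    R-squared after the step
    n:          number of observations
    k_full:     total number of predictors after the step
    k_added:    number of predictors entered in this step
    """
    delta_r2 = r2_full - r2_reduced
    df_resid = n - k_full - 1            # residual degrees of freedom of the full model
    return (delta_r2 / k_added) / ((1.0 - r2_full) / df_resid)

# Hypothetical example: six vocal factors raise R-squared from .02 to .07 with n = 300.
print(round(f_change(0.02, 0.07, n=300, k_full=8, k_added=6), 2))
```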

Discussion

Computerized measures of spontaneous speech are improving in sophistication, and their presence in psychological and clinical science is increasing. Despite this, studies of their psychometric properties, notably in terms of incremental and structural validity, have been lacking. In a large corpus of speech samples from young adults, we evaluated 28 systematically-defined variables that have been used in the literature. Through redundancy analysis and PCA, we identified six broad domains tapping distinct aspects of vocal expression. These domains were robust across both sex and ethnicity. Validity analysis suggested that each of these variables was important in some fashion, either because it changed as a function of contextual factors (i.e., speech topic) or because of its significant association with verbal fluency or clinical symptom measures. Going forward, our recommendation is that future studies of “macroscopic” levels of vocal expression include measures of each major vocal signal, notably F0, F1, F2 and intensity, as well as the lack of signal (i.e., pauses).

With one notable exception (i.e., Perturbation; see below), specific measures of variability within a single signal provided little incremental validity over other signal-matched measures. Measures of signal variability based on range scores were generally redundant with those computed using standard deviation scores. Moreover, measures focused on local versus global features were highly correlated as well, and in the case of the F0 and intensity signals, were almost redundant. Conceptually speaking, local and global measures tap different phenomena; for example, an individual may show considerable variability within utterances alongside high levels of consistency across utterances. Nonetheless, the present findings suggest that, at least in studies of extended spontaneous speech, “macroscopic” measures of signal variability need not be separately reported or evaluated. Given the importance of F0 and intensity variability in clinical and psychological studies (e.g., Cannizzaro et al., 2004; Cohen et al., 2013; Laukka et al., 2008), further research confirming their potential redundancy is warranted.
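The conceptual distinction between local and global variability can be illustrated with a small sketch. It assumes one plausible operationalization, which may differ from the definitions used in this study: "local" variability is taken as the average standard deviation within utterances, and "global" variability as the standard deviation of utterance means. The function names and F0 values are hypothetical.

```python
from statistics import mean, stdev

def local_variability(utterances):
    """Average within-utterance standard deviation (assumed 'local' measure)."""
    return mean([stdev(u) for u in utterances])

def global_variability(utterances):
    """Standard deviation of utterance means (assumed 'global' measure)."""
    return stdev([mean(u) for u in utterances])

# Hypothetical F0 tracks (Hz): large swings within each utterance,
# but every utterance has the same mean.
utterances = [
    [100, 140, 100, 140],
    [102, 138, 102, 138],
    [101, 139, 101, 139],
]

print(local_variability(utterances), global_variability(utterances))
```

In this constructed case the local measure is large while the global measure is zero, which is the dissociation described above. The present findings suggest that such dissociations are uncommon in extended spontaneous speech.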

The exception to the aforementioned redundancy between variability measures involved Signal Perturbation – a measure of signal variability occurring at the “frame” level. Generally, measures of F0 and Intensity perturbation were not highly correlated with their respective local and global variability measures, and perturbation measures emerged as a distinct factor in the PCA. Moreover, the perturbation factor was associated with clinical measures in a relatively unique way. Of note, increasing signal perturbation was associated with both depression (significantly) and hostility (at a trend level). Interestingly, F0 perturbation has been associated with anxiety, or the so-called “jittery” voice (Fuller, Horii, & Conner, 1992). The reason for our null findings in this regard is not clear. Significant correlations notwithstanding, several factors likely attenuated the magnitude of these relationships. First, insofar as the samples examined here were not clinical in nature, depression, anxiety and hostility levels were likely restricted in range. Second, our measures of clinical symptoms were based on self-report, an important but relatively circumscribed domain of assessment. It is likely that depression, anxiety and hostility ratings derived from clinical interviews would yield more comprehensive estimates of symptom severity. Finally, the clinical measure tapped symptoms from a one-week epoch, so clinical scores did not necessarily reflect symptomatology at the time of the vocal assessment.

Additional redundancies were observed between the mean and variability values for both Pause and utterance length. Similarly, Silence Percent was considered redundant (or at least, superfluous) in that it was correlated with a number of speech production and variability measures, and its inclusion in the PCA complicated the structure (i.e., contributing to dual loadings). Interestingly, Silence Percent was not related to speaking task or to cognitive or clinical correlates above and beyond the PCA factors, suggesting that this variable provides little incremental validity beyond other vocal measures.

The degree to which mean values of F0, F1, F2 and intensity signals are informative above and beyond vocal variability and pause measures is somewhat unclear. There were some isolated significant findings, such that F1, Intensity and Utterance Means changed as a function of speech topics. Collectively, however, the mean values explained very little variance above and beyond the vocal factors identified in the PCA. F0 values tended to be higher in people with greater self-reported hostility. Generally speaking, F0 and Intensity mean values are associated with emotional and clinical states in many studies of vocal expression (Batliner et al., 2008; Batliner et al., 2006; Cannizzaro et al., 2004; Cohen et al., 2010; Cohen et al., 2013; Johnstone et al., 2007; Laukka et al., 2008; Sobin & Alpert, 1999; Tolkmitt & Scherer, 1986), so it is somewhat surprising that these measures were not more highly associated in this study. Many prior studies have not controlled for sex and ethnicity in the same manner we did, and Mean F0 values are generally much higher in women. Many prior studies also employed a “microscopic” level of analysis or did not examine spontaneous speech. In this manner, concerns can be raised that the relationship between these variables is confounded by demographic variables or is attenuated in speech, particularly involving extended speech samples.

Several limitations warrant mention. First, the sample was relatively homogeneous with respect to age, ethnicity and education. Given that culture, age, and education can affect speech, it would be important to replicate the present findings in a more diverse sample. Second, our measures of convergent validity were by no means comprehensive. That is, our use of three different speech tasks does not begin to approximate the variety of contextual and speech factors that potentially influence speech outside the laboratory setting. Third, all of the speech samples examined in this study were conducted as part of a laboratory study with limited opportunities for interaction with the research assistant. It would be important to evaluate the psychometric properties of spontaneous speech under more ecologically valid conditions. Finally, the acoustic measures examined in this study were by no means exhaustive, and it is possible that the factor structure and clinical correlates would differ if other measures were used. The variables examined here covered the major conceptual components of vocal analysis discussed in the literature (i.e., five signals across varying temporal levels) – though it remains an empirical question as to whether variables computed using other means may show different results.

The human voice offers an important window into the state of many psychological operations of an individual. Insofar as vocal samples can be easily obtained and their analysis can be automated, application of vocal analysis, particularly involving spontaneous speech, has near-unlimited potential. A major obstacle in implementing vocal technologies involves analyzing and interpreting the vocal signal – that is, which of the many variables that can be extracted from the vocal signal should be used? The present data suggest that a limited number of domains are important for vocal analysis of extended speech samples, and offer an important, empirically-derived structure for future research and technological applications.

Footnotes

Our “perturbation” measures are conceptually similar to the measures of jitter and shimmer that are reported in the extant communication sciences literature in that they reflect variability on a temporally brief scale. For automation purposes, our measure was based on consecutive frames (10 ms.) as opposed to a “cycle” to “cycle” basis. In this regard, our measure is meant to reflect subtle perturbation/variability in signal as opposed to jitter and shimmer more generally.
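A frame-based perturbation index of the kind described in this footnote could be sketched as follows. The exact formula used in this study is not given here, so this is only an assumed form: the mean absolute change between consecutive frames, scaled by the mean frame value. The function name and frame values are hypothetical.

```python
def frame_perturbation(frames):
    """Mean absolute change between consecutive frames, relative to the mean frame value.

    `frames` holds one signal value (e.g., F0 in Hz or intensity in dB)
    per analysis frame, in temporal order.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    diffs = [abs(later - earlier) for earlier, later in zip(frames, frames[1:])]
    return (sum(diffs) / len(diffs)) / (sum(frames) / len(frames))

# Hypothetical F0 values (Hz) sampled every 10 ms.
steady = [100, 100, 100, 100]
unsteady = [100, 110, 100, 110]

print(frame_perturbation(steady), frame_perturbation(unsteady))
```

Because consecutive frames need not align with glottal cycles, an index like this reflects short-term signal variability generally rather than cycle-to-cycle jitter or shimmer.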

Contributor Information

Alex S. Cohen, Louisiana State University, Department of Psychology

Tyler L. Renshaw, Louisiana State University, Department of Psychology

Kyle R. Mitchell, Louisiana State University, Department of Psychology

Yunjung Kim, Louisiana State University, Department of Communication Sciences and Disorders.

References

- Alpert M, Merewether F, Homel P, Marz J. Voxcom: A system for analyzing natural speech in real time. Behavior Research Methods, Instruments & Computers. 1986;18(2):267. doi: 10.3758/BF03201035.

- Banse R, Scherer KR. Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology. 1996;70(3):614–36. doi: 10.1037/0022-3514.70.3.614.

- Batliner A, Steidl S, Hacker C, Nöth E. Private emotions versus social interaction: a data-driven approach towards analysing emotion in speech. User Modeling and User-Adapted Interaction. 2008;18(1-2):175–206. doi: 10.1007/s11257-007-9039-4.

- Batliner A, Steidl S, Schuller B, Seppi D, Vogt T, Wagner J, Amir N. Whodunnit–searching for the most important feature types signalling emotion-related user states in speech. Computer Speech & Language. 2011;25(1):4–28. doi: 10.1016/j.csl.2009.12.003.

- Batliner A, Steidl S, Schuller B, Seppi D, Laskowski K, Vogt T, Aharonson V. Combining efforts for improving automatic classification of emotional user states. Proc. IS-LTC. 2006:240–5. doi: 10.1.1.114.4354.

- Boersma P, Weenink D. Praat: doing phonetics by computer (Version 5.3.59). 2013. Retrieved from http://www.praat.org/

- Cannizzaro M, Harel B, Reilly N, Chappell P, Snyder PJ. Voice acoustical measurement of the severity of major depression. Brain and Cognition. 2004;56(1):30–5. doi: 10.1016/j.bandc.2004.05.003.

- Cohen AS, Alpert M, Nienow TM, Dinzeo TJ, Docherty NM. Computerized measurement of negative symptoms in schizophrenia. Journal of Psychiatric Research. 2008;42(10):827–36. doi: 10.1016/j.jpsychires.2007.08.008.

- Cohen AS, Dinzeo TJ, Donovan NJ, Brown CE, Morrison SC. Vocal acoustic analysis as a biometric indicator of information processing: Implications for neurological and psychiatric disorders. Psychiatry Research. 2014. doi: 10.1016/j.psychres.2014.12.054.

- Cohen AS, Elvevag B. Automated computerized analysis of speech in psychiatric disorders. Current Opinion in Psychiatry. 2014;27(3):203–9. doi: 10.1097/YCO.0000000000000056.

- Cohen AS, Hong SL, Guevara A. Understanding emotional expression using prosodic analysis of natural speech: refining the methodology. Journal of Behavior Therapy and Experimental Psychiatry. 2010;41(2):150–7. doi: 10.1016/j.jbtep.2009.11.008.

- Cohen AS, Kim Y, Najolia GM. Psychiatric symptom versus neurocognitive correlates of diminished expressivity in schizophrenia and mood disorders. Schizophrenia Research. 2013;146(1-3):249–53. doi: 10.1016/j.schres.2013.02.002.

- Cohen AS, McGovern JE, Dinzeo T, Covington M. Speech deficits in severe mental illness: A cognitive resource issue? Schizophrenia Research. In Press. doi: 10.1016/j.schres.2014.10.032.

- Cohen AS, Minor KS, Najolia GM, Lee Hong S. A laboratory-based procedure for measuring emotional expression from natural speech. Behavior Research Methods. 2009;41(1):204–12. doi: 10.3758/BRM.41.1.204.

- Cohen AS, Mitchell KR, Elvevag B. What do we really know about blunted affect and alogia?: A meta-analysis of objective assessments. Schizophrenia Research. 2014;159(2-3):533–8. doi: 10.1016/j.schres.2014.09.013.

- Cohen AS, Morrison SC, Brown LA, Minor KS. Towards a cognitive resource limitations model of diminished expression in schizotypy. Journal of Abnormal Psychology. 2012;121(1):109–18. doi: 10.1037/a0023599.

- Derogatis LR, Melisaratos N. The Brief Symptom Inventory: An introductory report. Psychological Medicine. 1983;13(3):595–605. doi: 10.1017/S0033291700048017.

- Esposito A, Esposito AM. On the recognition of emotional vocal expressions: Motivations for a holistic approach. Cognitive Processing. 2012;13(Suppl 2):S541–S550. doi: 10.1007/s10339-012-0516-2.

- Eyben F, Weninger F, Groß F, Schuller B. Recent developments in openSMILE, the Munich open-source multimedia feature extractor; Proceedings of the 21st ACM international conference on Multimedia; 2013, October; ACM; pp. 835–838. doi: 10.1145/2502081.2502224.

- Fuller BF, Horii Y, Conner DA. Validity and reliability of nonverbal voice measures as indicators of stressor-provoked anxiety. Research in Nursing & Health. 1992;15(5):379–89. doi: 10.1002/nur.4770150507.

- Giddens CL, Barron KW, Clark KF, Warde WD. Beta-adrenergic blockade and voice: A double-blind, placebo-controlled trial. Journal of Voice. 2010;24(4):477–89. doi: 10.1016/j.jvoice.2008.12.002.

- Green MF, Nuechterlein KH, Gold JM, Barch DM, Cohen J, Essock S, Marder SR. Approaching a consensus cognitive battery for clinical trials in schizophrenia: the NIMH-MATRICS conference to select cognitive domains and test criteria. Biological Psychiatry. 2004;56(5):301–7. doi: 10.1016/j.biopsych.2004.06.023.

- Huttunen K, Keranen H, Vayrynen E, Paakkonen R, Leino T. Effect of cognitive load on speech prosody in aviation: Evidence from military simulator flights. Applied Ergonomics. 2011;42(2):348–57. doi: 10.1016/j.apergo.2010.08.005.

- Johnstone T, van Reekum CM, Bänziger T, Hird K, Kirsner K, Scherer KR. The effects of difficulty and gain versus loss on vocal physiology and acoustics. Psychophysiology. 2007;44(5):827–37. doi: 10.1111/j.1469-8986.2007.00552.x.

- Kent RD, Kim YJ. Toward an acoustic typology of motor speech disorders. Clinical Linguistics and Phonetics. 2003;17(6):427–45. doi: 10.1080/0269920031000086248.

- Kim Y, Kent RD, Weismer G. An acoustic study of the relationships among neurologic disease, dysarthria type, and severity of dysarthria. Journal of Speech, Language, and Hearing Research. 2011;54(2):417–29. doi: 10.1044/1092-4388(2010/10-0020).

- Krajewski J, Batliner A, Golz M. Acoustic sleepiness detection: Framework and validation of a speech-adapted pattern recognition approach. Behavior Research Methods. 2009;41(3):795–804. doi: 10.3758/BRM.41.3.795.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-6. University of Florida; Gainesville, FL: 2005.

- Laukka P, Linnman C, Åhs F, Pissiota A, Frans Ö, Faria V, Furmark T. In a nervous voice: Acoustic analysis and perception of anxiety in social phobics’ speech. Journal of Nonverbal Behavior. 2008;32(4):195–214. doi: 10.1007/s10919-008-0055-9.

- Martins IP, Vieira R, Loureiro C, Santos ME. Speech rate and fluency in children and adolescents. Child Neuropsychology. 2007;13(4):319–32. doi: 10.1080/09297040600837370.

- Nadig A, Lee I, Singh L, Bosshart K, Ozonoff S. How does the topic of conversation affect verbal exchange and eye gaze? A comparison between typical development and high-functioning autism. Neuropsychologia. 2010;48(9):2730–9. doi: 10.1016/j.neuropsychologia.2010.05.020.

- Randolph C. RBANS Manual - Repeatable battery for the assessment of neuropsychological status. Psychological Corp (Harcourt); San Antonio, Texas: 1998.

- Roy N, Bless DM, Heisey D. Personality and voice disorders: A multitrait-multidisorder analysis. Journal of Voice. 2000;14(4):521–48. doi: 10.1016/S0892-1997(00)80009-0.

- Scherer KR. Vocal affect expression: A review and a model for future research. Psychological Bulletin. 1986;99(2):143–165. doi: 10.1037/0033-2909.99.2.143.

- Scherer KR. Vocal communication of emotion: A review of research paradigms. Speech Communication. 2003;40(1):227–56. doi: 10.1016/S0167-6393(02)00084-5.

- Shriberg LD, Fourakis M, Hall SD, Karlsson HB, Lohmeier HL, McSweeny JL, Wilson DL. Perceptual and acoustic reliability estimates for the Speech Disorders Classification System (SDCS). Clinical Linguistics and Phonetics. 2010;24(10):825–46. doi: 10.3109/02699206.2010.503007.

- Schuller B, Steidl S, Batliner A, Vinciarelli A, Scherer K, Ringeval F, Kim S. The INTERSPEECH 2013 computational paralinguistics challenge: social signals, conflict, emotion, autism. 2013.

- Schuller B, Batliner A, Seppi D, Steidl S, Vogt T, Wagner J, Aharonson V. The relevance of feature type for the automatic classification of emotional user states: low level descriptors and functionals. INTERSPEECH. 2007 Aug:2253–2256.

- Schuller B, Seppi D, Batliner A, Maier A, Steidl S. Towards more reality in the recognition of emotional speech; Acoustics, Speech and Signal Processing, 2007. ICASSP 2007. IEEE International Conference on; 2007, April; IEEE; pp. IV–941.

- Schuller B, Steidl S, Batliner A. The INTERSPEECH 2009 emotion challenge. INTERSPEECH. 2009 Sep;2009:312–315.

- Slavin DC, Ferrand CT. Factor analysis of proficient esophageal speech: Toward a multidimensional model. Journal of Speech & Hearing Research. 1995;38(6):1224–31. doi: 10.1044/jshr.3806.1224.

- Sobin C, Alpert M. Emotion in speech: The acoustic attributes of fear, anger, sadness, and joy. Journal of Psycholinguistic Research. 1999;28(4):167–365. doi: 10.1023/A:1023237014909.

- Tolkmitt FJ, Scherer KR. Effect of experimentally induced stress on vocal parameters. Journal of Experimental Psychology: Human Perception and Performance. 1986;12(3):302–13. doi: 10.1037//0096-1523.12.3.302.

- Vogel AP, Maruff P, Snyder PJ, Mundt JC. Standardization of pitch-range settings in voice acoustic analysis. Behavior Research Methods. 2009;41(2):318–24. doi: 10.3758/BRM.41.2.318.

- Vogt T, André E. Comparing feature sets for acted and spontaneous speech in view of automatic emotion recognition; Multimedia and Expo, 2005. ICME 2005. IEEE International Conference on; 2005, July; IEEE; pp. 474–477.

- Yamashita Y, Nakajima Y, Ueda K, Shimada Y, Hirsh D, Seno T, Smith BA. Acoustic analyses of speech sounds and rhythms in Japanese- and English-learning infants. Frontiers in Psychology. 2013;4(57):1–10. doi: 10.3389/fpsyg.2013.00057.