Abstract

Let {f(t) : t ∈ T} be a smooth Gaussian random field over a parameter space T, where T may be a subset of Euclidean space or, more generally, a Riemannian manifold. We provide a general formula for the distribution of the height of a local maximum, P{f(t0) > u | t0 is a local maximum of f(t)}, when f is non-stationary. Moreover, we establish asymptotic approximations for the overshoot distribution of a local maximum, P{f(t0) > u + v | t0 is a local maximum of f(t) and f(t0) > v}, as v → ∞. Assuming further that f is isotropic, we apply techniques from random matrix theory related to the Gaussian orthogonal ensemble to compute such conditional probabilities explicitly when T is Euclidean or a sphere of arbitrary dimension. Such calculations are motivated by the statistical problem of detecting peaks in the presence of smooth Gaussian noise.

Keywords: Height, overshoot, local maxima, Riemannian manifold, Gaussian orthogonal ensemble, isotropic field, Euler characteristic, sphere

1 Introduction

In certain statistical applications such as peak detection problems [cf. Schwartzman et al. (2011) and Cheng and Schwartzman (2014)], we are interested in the tail distribution of the height of a local maximum of a Gaussian random field. This is defined as the probability that the height of the local maximum exceeds a fixed threshold at that point, conditioned on the event that the point is a local maximum of the field. Roughly speaking, such conditional probability can be stated as

$$\mathbb{P}\{f(t_0) > u \mid t_0 \text{ is a local maximum of } f\}, \tag{1.1}$$

where {f(t) : t ∈ T} is a smooth Gaussian random field parameterized on an N-dimensional set T ⊂ ℝ^N whose interior is non-empty, t0 ∈ T° (the interior of T) and u ∈ ℝ. In peak detection problems, this distribution is useful in assessing the significance of local maxima as candidate peaks. In addition, this distribution has been of interest for describing fluctuations of the cosmic background in astronomy [cf. Bardeen et al. (1985) and Larson and Wandelt (2004)] and for describing the height of sea waves in oceanography [cf. Longuet-Higgins (1952, 1980), Lindgren (1982) and Sobey (1992)].

As written, the conditioning event in (1.1) has zero probability. To make the conditional probability well-defined mathematically, we follow the original approach of Cramér and Leadbetter (1967) for smooth stationary Gaussian processes in one dimension, and adopt instead the definition

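$$F_{t_0}(u) := \lim_{\varepsilon \to 0} \mathbb{P}\{f(t_0) > u \mid \exists \text{ a local maximum of } f(t) \text{ in } U_{t_0}(\varepsilon)\}, \tag{1.2}$$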

if the limit on the right hand side exists, where Ut0(ε) = t0 + (–ε/2, ε/2)^N is the N-dimensional open cube of side ε centered at t0. We call (1.2) the distribution of the height of a local maximum of the random field.
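The conditional definition can be illustrated numerically. The sketch below is not the paper's method: it assumes a hypothetical one-dimensional stationary Gaussian process built from a finite random trigonometric sum (the frequencies, grid and sample sizes are arbitrary choices), detects local maxima on a discrete grid, and pools them over independent realizations to form an empirical tail of the peak height. Under stationarity this pooled frequency is a natural empirical counterpart of the limit in (1.2).

```python
import math
import random


def simulate_field(freqs, grid, rng):
    """One realization of f(t) = K**-0.5 * sum_k (a_k cos(w_k t) + b_k sin(w_k t)).

    With a_k, b_k i.i.d. N(0, 1), f is a centered, unit-variance, stationary
    Gaussian process (an illustrative choice, not taken from the paper).
    """
    a = [rng.gauss(0.0, 1.0) for _ in freqs]
    b = [rng.gauss(0.0, 1.0) for _ in freqs]
    scale = 1.0 / math.sqrt(len(freqs))
    return [
        scale * sum(ak * math.cos(w * t) + bk * math.sin(w * t)
                    for ak, bk, w in zip(a, b, freqs))
        for t in grid
    ]


def local_max_heights(values):
    """Heights at interior grid points that exceed both neighbours."""
    return [values[i] for i in range(1, len(values) - 1)
            if values[i] > values[i - 1] and values[i] > values[i + 1]]


def empirical_height_tail(u, heights):
    """Fraction of pooled local maxima whose height exceeds u."""
    return sum(h > u for h in heights) / len(heights)


rng = random.Random(0)
freqs = [0.5 + 0.25 * j for j in range(8)]   # hypothetical spectrum
grid = [0.05 * i for i in range(400)]        # discretization of [0, 20)
heights = []
for _ in range(200):                         # independent realizations
    heights.extend(local_max_heights(simulate_field(freqs, grid, rng)))

tail = [empirical_height_tail(u, heights) for u in (-1.0, 0.0, 1.0, 2.0)]
```

The grid spacing limits how well discrete maxima approximate true local maxima, so the estimate should be read as a rough illustration only.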

Because this distribution is conditional on a point process, namely the set of local maxima of f, it falls under the general category of Palm distributions [cf. Adler et al. (2012) and Schneider and Weil (2008)]. Evaluating this distribution analytically has been known to be a difficult problem for decades. The only known results go back to Cramér and Leadbetter (1967), who provided an explicit expression for one-dimensional stationary Gaussian processes, and Belyaev (1967, 1972) and Lindgren (1972), who gave an implicit expression for stationary Gaussian fields over Euclidean space.

As a first contribution, in this paper we provide general formulae for (1.2) for non-stationary Gaussian fields and T being a subset of Euclidean space or a Riemannian manifold of arbitrary dimension. As opposed to the well-studied global supremum of the field, these formulae only depend on local properties of the field. Thus, in principle, stationarity and ergodicity are not required, nor is knowledge of the global geometry or topology of the set in which the random field is defined. The caveat is that our formulae involve the expected number of local maxima (albeit within a small neighborhood of t0), so actual computation becomes hard for most Gaussian fields except, as described below, for isotropic cases.

We also investigate the overshoot distribution of a local maximum, which can be roughly stated as

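$$\mathbb{P}\{f(t_0) > u + v \mid t_0 \text{ is a local maximum of } f \text{ and } f(t_0) > v\}, \tag{1.3}$$

where u > 0 and v ∈ ℝ. The motivation for this distribution in peak detection is that, since local maxima representing candidate peaks are called significant if they are sufficiently high, it is enough to consider peaks that are already higher than a pre-threshold v. As before, since the conditioning event in (1.3) has zero probability, we adopt instead the formal definition

$$\bar{F}_{t_0}(u, v) := \lim_{\varepsilon \to 0} \mathbb{P}\{f(t_0) > u + v \mid \exists \text{ a local maximum of } f(t) \text{ in } U_{t_0}(\varepsilon) \text{ and } f(t_0) > v\}, \tag{1.4}$$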

if the limit on the right hand side exists. It turns out that, when the pre-threshold v is high, a simple asymptotic approximation to (1.4) can be found because in that case, the expected number of local maxima can be approximated by a simple expression similar to the expected Euler characteristic of the excursion set above level v [cf. Adler and Taylor (2007)].

The appeal of the overshoot distribution had already been realized by Belyaev (1967, 1972), Nosko (1969, 1970a, 1970b) and Adler (1981), who showed that, in the stationary case, it is asymptotically equivalent to an exponential distribution. In this paper we give a much tighter approximation to the overshoot distribution which, again, depends only on local properties of the field and thus, in principle, requires neither stationarity nor ergodicity. However, stationarity does make it possible to obtain an explicit closed-form approximation whose error is super-exponentially small. In addition, the limiting distribution has the appealing property that it does not depend on the correlation function of the field, so these parameters need not be estimated in statistical applications.

As a third contribution, we extend the Euclidean results mentioned above for both (1.2) and (1.4) to Gaussian fields over Riemannian manifolds. The extension is not difficult once it is realized that, because all calculations are local, it is essentially enough to change the local geometry of Euclidean space by the local geometry of the manifold and most arguments in the proofs can be easily changed accordingly.

As a fourth contribution, we obtain exact (non-asymptotic) closed-form expressions for isotropic fields, both on Euclidean space and the N-dimensional sphere. This is achieved by means of an interesting recent technique employed in Euclidean space by Fyodorov (2004), Azaïs and Wschebor (2008) and Auffinger (2011) involving random matrix theory. The method is based on the realization that the (conditional) distribution of the Hessian ∇²f of an isotropic Gaussian field f is closely related to that of a Gaussian Orthogonal Ensemble (GOE) random matrix. Hence, the known distribution of the eigenvalues of a GOE is used to compute explicitly the expected number of local maxima required in our general formulae described above. As an example, we show the detailed calculation for isotropic Gaussian fields on ℝ². Furthermore, by extending the GOE technique to the N-dimensional sphere, we are able to provide explicit closed-form expressions on that domain as well, showing the two-dimensional sphere as a specific example.

The paper is organized as follows. In Section 2, we provide general formulae for both the distribution and the overshoot distribution of the height of local maxima for smooth Gaussian fields on Euclidean space. The explicit formulae for isotropic Gaussian fields are then obtained by techniques from random matrix theory. Based on the Euclidean case, the results are then generalized to Gaussian fields over Riemannian manifolds in Section 3, where we also study isotropic Gaussian fields on the sphere. Lastly, Section 4 contains the proofs of main theorems as well as some auxiliary results.

2 Smooth Gaussian Random Fields on Euclidean Space

2.1 Height Distribution and Overshoot Distribution of Local Maxima

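Let {f(t) : t ∈ T} be a real-valued, C² Gaussian random field parameterized on an N-dimensional set T ⊂ ℝ^N whose interior is non-empty. Let

$$f_i(t) = \frac{\partial f(t)}{\partial t_i}, \quad f_{ij}(t) = \frac{\partial^2 f(t)}{\partial t_i \partial t_j}, \quad \nabla f(t) = (f_1(t), \ldots, f_N(t))^T, \quad \nabla^2 f(t) = (f_{ij}(t))_{1 \le i, j \le N},$$

and denote by index(∇²f(t)) the number of negative eigenvalues of ∇²f(t). We will make use of the following conditions.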

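(C1). f ∈ C²(T) almost surely and its second derivatives satisfy the mean-square Hölder condition: for any t0 ∈ T, there exist positive constants L, η and δ such that

$$\mathbb{E}(f_{ij}(t) - f_{ij}(s))^2 \le L^2 \|t - s\|^{2\eta}, \quad \forall\, t, s \in U_{t_0}(\delta), \ i, j = 1, \ldots, N.$$

(C2). For every pair (t, s) ∈ T² with t ≠ s, the Gaussian random vector

$$(f(t), \nabla f(t), f_{ij}(t), f(s), \nabla f(s), f_{ij}(s), \ 1 \le i \le j \le N)$$

is non-degenerate.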

Note that (C1) holds when f ∈ C3(T) and T is closed and bounded.

The following theorem, whose proof is given in Section 4, provides the formula for Ft0(u) defined in (1.2) for smooth Gaussian fields over ℝ^N.

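Theorem 2.1 Let {f(t) : t ∈ T} be a Gaussian random field satisfying (C1) and (C2). Then for each t0 ∈ T° and u ∈ ℝ,

$$F_{t_0}(u) = \frac{\mathbb{E}\{|\det \nabla^2 f(t_0)| \, \mathbb{1}_{\{f(t_0) > u\}} \mathbb{1}_{\{\mathrm{index}(\nabla^2 f(t_0)) = N\}} \mid \nabla f(t_0) = 0\}}{\mathbb{E}\{|\det \nabla^2 f(t_0)| \, \mathbb{1}_{\{\mathrm{index}(\nabla^2 f(t_0)) = N\}} \mid \nabla f(t_0) = 0\}}. \tag{2.1}$$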

The implicit formula in (2.1) generalizes the results for stationary Gaussian fields in Cramér and Leadbetter (1967, p. 243), Belyaev (1967, 1972) and Lindgren (1972) in the sense that stationarity is no longer required.

Note that the conditional expectations in (2.1) are hard to compute, since they involve the indicator functions on the eigenvalues of a random matrix. However, in Section 2.2 and Section 3.2 below, we show that (2.1) can be computed explicitly for isotropic Gaussian fields.

The following result shows the exact formula for the overshoot distribution defined in (1.4).

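Theorem 2.2 Let {f(t) : t ∈ T} be a Gaussian random field satisfying (C1) and (C2). Then for each t0 ∈ T°, v ∈ ℝ and u > 0,

$$\bar{F}_{t_0}(u, v) = \frac{\mathbb{E}\{|\det \nabla^2 f(t_0)| \, \mathbb{1}_{\{f(t_0) > u + v\}} \mathbb{1}_{\{\mathrm{index}(\nabla^2 f(t_0)) = N\}} \mid \nabla f(t_0) = 0\}}{\mathbb{E}\{|\det \nabla^2 f(t_0)| \, \mathbb{1}_{\{f(t_0) > v\}} \mathbb{1}_{\{\mathrm{index}(\nabla^2 f(t_0)) = N\}} \mid \nabla f(t_0) = 0\}}.$$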

Proof The result follows from similar arguments for proving Theorem 2.1.

An advantage of the overshoot distribution is that we can explore its asymptotics as the pre-threshold v gets large. Theorem 2.3 below, whose proof is given in Section 4, provides an asymptotic approximation to the overshoot distribution of a smooth Gaussian field over ℝ^N. This approximation is based on the fact that, as the exceeding level tends to infinity, the expected number of local maxima can be approximated by a simpler form which is similar to the expected Euler characteristic of the excursion set.

Theorem 2.3 Let {f(t) : t ∈ T} be a centered, unit-variance Gaussian random field satisfying (C1) and (C2). Then for each t0 ∈ T° and each fixed u > 0, there exists α > 0 such that as v → ∞,

| (2.2) |

Here and in the sequel, ϕ(x) denotes the standard Gaussian density.

Note that the expectation in (2.2) is computable since it no longer involves the indicator function on the index of the Hessian. However, for non-stationary Gaussian random fields over ℝ^N with N ≥ 2, the general expression of the expectation in (2.2) would be complicated. Fortunately, as a polynomial in x, the coefficient of the highest-order term of the expectation above is relatively simple; see Lemma 4.2 below. This gives the following approximation to the overshoot distribution for general smooth Gaussian fields over ℝ^N.

Corollary 2.4 Let the assumptions in Theorem 2.3 hold. Then for each t0 ∈ T° and each fixed u > 0, as v → ∞,

| (2.3) |

Proof The result follows immediately from Theorem 2.3 and Lemma 4.2 below.

It can be seen that the result in Corollary 2.4 reduces to the exponential asymptotic distribution given by Belyaev (1967, 1972), Nosko (1969, 1970a, 1970b) and Adler (1981), but the result here also gives the approximation error and does not require stationarity. Compared with (2.2), (2.3) provides a less accurate approximation, since the error is only O(v^{–2}), but it has a simple explicit form.

Next we show some cases where the approximation in (2.2) becomes relatively simple and with the same degree of accuracy, i.e., the error is super-exponentially small.

Corollary 2.5 Let the assumptions in Theorem 2.3 hold. Suppose further that the dimension N = 1 or that the field f is stationary. Then for each t0 ∈ T° and each fixed u > 0, there exists α > 0 such that as v → ∞,

| (2.4) |

where HN–1(x) is the Hermite polynomial of order N – 1.

Proof (i) Suppose first N = 1. Since Var(f(t)) ≡ 1, differentiating gives E{f(t)f′(t)} ≡ 0 and E{f(t)f″(t)} = –Var(f′(t)). It follows that

Plugging this into (2.2) yields the desired result.

(ii) If f is stationary, it can be shown that [cf. Lemma 11.7.1 in Adler and Taylor (2007)],

Then (2.4) follows from Theorem 2.3 and the following formula for Hermite polynomials

An interesting property of the results obtained about the overshoot distribution is that the asymptotic approximations in Corollaries 2.4 and 2.5 do not depend on the location t0, even in the case where stationarity is not assumed. In addition, they do not require any knowledge of spectral moments of f except for zero mean and constant variance. In this sense, the distributions are convenient for use in statistics because the correlation function of the field need not be estimated.
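The two asymptotic forms can be compared numerically. The displays (2.3) and (2.4) are not reproduced in this text, so the sketch below rests on an assumption: that their leading terms are the ratios (u+v)^{N–1} e^{–(u+v)²/2} / (v^{N–1} e^{–v²/2}) and H_{N–1}(u+v) e^{–(u+v)²/2} / (H_{N–1}(v) e^{–v²/2}), with H_n the probabilists' Hermite polynomials. The function names are hypothetical.

```python
import math


def hermite(n, x):
    """Probabilists' Hermite polynomial He_n(x) via the three-term recurrence."""
    if n == 0:
        return 1.0
    prev, cur = 1.0, x
    for k in range(1, n):
        prev, cur = cur, x * cur - k * prev
    return cur


def overshoot_power(u, v, n_dim):
    """Assumed leading term of (2.3): a power ratio times a Gaussian factor."""
    return ((u + v) / v) ** (n_dim - 1) * math.exp(-u * v - u * u / 2.0)


def overshoot_hermite(u, v, n_dim):
    """Assumed leading term of (2.4); v must exceed the largest zero of He_{N-1}."""
    ratio = hermite(n_dim - 1, u + v) / hermite(n_dim - 1, v)
    return ratio * math.exp(-u * v - u * u / 2.0)


# For N = 3, pre-threshold v = 5 and overshoot u = 0.5 the two forms are close.
rel_diff = overshoot_hermite(0.5, 5.0, 3) / overshoot_power(0.5, 5.0, 3) - 1.0
```

Neither function takes t0 or any spectral moment as input, which mirrors the observation that the approximations depend only on N, u and v.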

2.2 Isotropic Gaussian Random Fields on Euclidean Space

We show here the explicit formulae for both the height distribution and the overshoot distribution of local maxima for isotropic Gaussian random fields. To our knowledge, this article is the first attempt to obtain these distributions explicitly for N ≥ 2. The main tools are techniques from random matrix theory developed in Fyodorov (2004), Azaïs and Wschebor (2008) and Auffinger (2011).

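Let {f(t) : t ∈ T} be a real-valued, C², centered, unit-variance isotropic Gaussian field parameterized on an N-dimensional set T ⊂ ℝ^N. Due to isotropy, we can write the covariance function of the field as E{f(t)f(s)} = ρ(∥t – s∥²) for an appropriate function ρ(·) : [0, ∞) → ℝ, and denote

$$\rho' = \rho'(0), \quad \rho'' = \rho''(0), \quad \kappa = -\rho'/\sqrt{\rho''}. \tag{2.5}$$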

By isotropy again, the covariance of (f(t), ∇f(t), ∇2f(t)) only depends on ρ′ and ρ″, see Lemma 4.3 below. In particular, by Lemma 4.3, we see that Var(fi(t)) = –2ρ′ and Var(fii(t)) = 12ρ″ for any i ∈ {1, . . . , N}, which implies ρ′ < 0 and ρ″ > 0 and hence κ > 0. We need the following condition for further discussions.

(C3). κ ≤ 1 (or equivalently ρ″ – ρ′2 ≥ 0).

Example 2.6 Here are some examples of covariance functions with corresponding ρ satisfying (C3).

(i) Powered exponential: ρ(r) = e^{–cr}, where c > 0. Then ρ′ = –c, ρ″ = c² and κ = 1.

(ii) Cauchy: ρ(r) = (1 + r/c)^{–β}, where c > 0 and β > 0. Then ρ′ = –β/c, ρ″ = β(β + 1)/c² and κ = √(β/(β + 1)).

Remark 2.7 By Azaïs and Wschebor (2010), (C3) holds when ρ(∥t – s∥²), t, s ∈ ℝ^N, is a positive definite function for every dimension N ≥ 1. The cases in Example 2.6 are of this kind.
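The values in Example 2.6 can be checked with a few lines of code. This is a minimal sketch assuming that (2.5) defines κ = –ρ′/√ρ″ with ρ′ and ρ″ the derivatives of ρ at 0, which is the form consistent with (C3) and with κ = 1 in case (i); the helper names are hypothetical.

```python
import math


def kappa(rho1, rho2):
    """kappa = -rho'/sqrt(rho''), the assumed form of (2.5)."""
    if rho1 >= 0.0 or rho2 <= 0.0:
        raise ValueError("expected rho' < 0 and rho'' > 0")
    return -rho1 / math.sqrt(rho2)


def satisfies_c3(rho1, rho2, tol=1e-12):
    """Condition (C3): rho'' - rho'^2 >= 0, up to a rounding tolerance."""
    return rho2 - rho1 ** 2 >= -tol


def powered_exponential(c):
    """(rho', rho'') at 0 for rho(r) = exp(-c r)."""
    return -c, c ** 2


def cauchy(c, beta):
    """(rho', rho'') at 0 for rho(r) = (1 + r/c)**(-beta)."""
    return -beta / c, beta * (beta + 1.0) / c ** 2


k_exp = kappa(*powered_exponential(2.0))      # boundary case of (C3)
k_cauchy = kappa(*cauchy(3.0, 1.0))           # equals sqrt(beta / (beta + 1))
```

In the Cauchy family κ depends on β only, so the scale parameter c drops out.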

We shall use (2.1) to compute the distribution of the height of a local maximum. As mentioned before, the conditional expectations on the right hand side of (2.1) are extremely hard to compute in general. In Section 4 below, we establish a connection between these expectations and a certain GOE matrix, which makes the computation tractable.

Recall that an N × N random matrix MN is said to have the Gaussian Orthogonal Ensemble (GOE) distribution if it is symmetric, with centered Gaussian entries Mij satisfying Var(Mii) = 1, Var(Mij) = 1/2 if i < j and the random variables {Mij, 1 ≤ i ≤ j ≤ N} are independent. Moreover, the explicit formula for the distribution QN of the eigenvalues λi of MN is given by [cf. Auffinger (2011)]
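The definition translates directly into a sampler. The following sketch draws GOE matrices with the stated variances and checks, by Monte Carlo, the elementary identity E[tr(M²)] = N + N(N – 1)/2 = N(N + 1)/2, which follows from summing the entry variances; the function names and sample size are illustrative choices.

```python
import math
import random


def sample_goe(n, rng):
    """Symmetric n x n matrix with independent N(0, 1) diagonal entries and
    N(0, 1/2) entries above the diagonal (mirrored below it)."""
    m = [[0.0] * n for _ in range(n)]
    off_diag_sd = math.sqrt(0.5)
    for i in range(n):
        m[i][i] = rng.gauss(0.0, 1.0)
        for j in range(i + 1, n):
            m[i][j] = m[j][i] = rng.gauss(0.0, off_diag_sd)
    return m


def trace_of_square(m):
    """tr(M^2), which equals the sum of squared eigenvalues for symmetric M."""
    n = len(m)
    return sum(m[i][j] * m[j][i] for i in range(n) for j in range(n))


rng = random.Random(1)
n, n_samples = 3, 20000
estimate = sum(trace_of_square(sample_goe(n, rng)) for _ in range(n_samples)) / n_samples
expected = n * (n + 1) / 2.0   # sum of the entry variances
```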

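$$Q_N(d\lambda) = \frac{1}{c_N} \prod_{i=1}^{N} e^{-\lambda_i^2/2} \, d\lambda_i \prod_{1 \le i < j \le N} |\lambda_i - \lambda_j| \, \mathbb{1}_{\{\lambda_1 \le \cdots \le \lambda_N\}}, \tag{2.6}$$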

where the normalization constant cN can be computed from Selberg's integral

$$c_N = \frac{1}{N!} (2\sqrt{2})^N \prod_{i=1}^{N} \Gamma\left(1 + \frac{i}{2}\right). \tag{2.7}$$

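We use the notation E^N_GOE to denote the expectation under the density QN(dλ), i.e., for a measurable function g,

$$\mathbb{E}^N_{GOE}[g(\lambda_1, \ldots, \lambda_N)] = \int_{\mathbb{R}^N} g(\lambda_1, \ldots, \lambda_N) \, Q_N(d\lambda).$$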

Theorem 2.8 Let {f(t) : t ∈ T} be a centered, unit-variance, isotropic Gaussian random field satisfying (C1), (C2) and (C3). Then for each t0 ∈ T° and u ∈ ℝ,

where κ is defined in (2.5).

Proof Since f is centered and has unit variance, the numerator in (2.1) can be written as

Applying Theorem 2.1 and Lemmas 4.6 and 4.7 below gives the desired result.

Remark 2.9 The formula in Theorem 2.8 shows that for an isotropic Gaussian field over ℝ^N, Ft0(u) only depends on κ. Therefore, we may write Ft0(u) as Ft0(u, κ). As a consequence of Lemma 4.4, Ft0(u, κ) is continuous in κ, hence the formula for the case κ = 1 (i.e. ρ″ – ρ′² = 0) can also be derived by taking the limit lim_{κ↑1} Ft0(u, κ).

Next we give an example of computing Ft0(u) explicitly for N = 2. The calculations for N = 1 and N > 2 are similar and thus omitted here. In particular, the formula for N = 1 derived by this method can be verified to be the same as in Cramér and Leadbetter (1967).

Example 2.10 Let N = 2. Applying Proposition 4.8 below with a = 1 and b = 0 gives

| (2.8) |

Applying Proposition 4.8 again with a = 1/(1 – κ2) and , one has

| (2.9) |

where Φ is the c.d.f. of the standard normal distribution. Let h(x) be the density function of the distribution of the height of a local maximum, i.e. h(x) = –F′t0(x). By Theorem 2.8, (2.8) and (2.9),

| (2.10) |

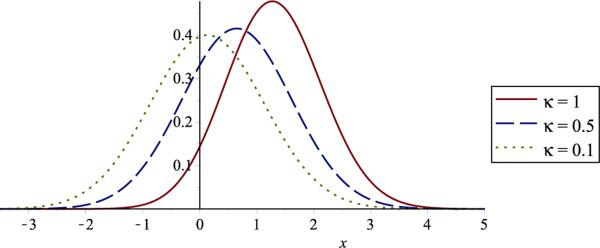

and hence Ft0(u) = ∫_u^∞ h(x) dx. Figure 1 shows several examples. Shown in solid red is the extreme case of (C3), κ = 1, which simplifies to

As an interesting phenomenon, it can be seen from both (2.10) and Figure 1 that h(x) → ϕ(x) if κ → 0.

Figure 1. Density function h(x) of the distribution Ft0 for isotropic Gaussian fields on ℝ².

Theorem 2.11 Let {f(t) : t ∈ T} be a centered, unit-variance, isotropic Gaussian random field satisfying (C1), (C2) and (C3). Then for each t0 ∈ T°, v ∈ ℝ and u > 0,

| (2.11) |

where κ is defined in (2.5).

Proof The result follows immediately by applying Theorem 2.2 and Lemma 4.7 below.

Note that the expectations in (2.11) can be computed similarly to (2.9) for any N ≥ 1; thus Theorem 2.11 provides an explicit formula for the overshoot distribution of isotropic Gaussian fields. On the other hand, since isotropy implies stationarity, the approximation to the overshoot distribution for large v is simply given by Corollary 2.5.

3 Smooth Gaussian Random Fields on Manifolds

3.1 Height Distribution and Overshoot Distribution of Local Maxima

Let (M, g) be an N-dimensional Riemannian manifold and let f be a smooth function on M. Then the gradient of f, denoted by ∇f, is the unique continuous vector field on M such that g(∇f, X) = Xf for every vector field X. The Hessian of f, denoted by ∇²f, is the double differential form defined by ∇²f(X, Y) = XYf – ∇_X Yf, where X and Y are vector fields and ∇ is the Levi-Civita connection of (M, g). To make the notation consistent with the Euclidean case, we fix an orthonormal frame {Ei}1≤i≤N, and let

| (3.1) |

Note that if t is a critical point, i.e. ∇f(t) = 0, then ∇2f(Ei, Ej)(t) = EiEjf(t), which is similar to the Euclidean case.

Let Bt0(ε) = {t ∈ M : d(t, t0) ≤ ε} be the geodesic ball of radius ε centered at t0, where d is the distance function induced by the Riemannian metric g. We also define Ft0(u) as in (1.2) and F̄t0(u, v) as in (1.4), with Ut0(ε) replaced by Bt0(ε).

We will make use of the following conditions.

(C1′). f ∈ C2(M) almost surely and its second derivatives satisfy the mean-square Hölder condition: for any t0 ∈ M, there exist positive constants L, η and δ such that

(C2′). For every pair (t, s) ∈ M2 with t ≠ s, the Gaussian random vector

is non-degenerate.

Note that (C1′) holds when f ∈ C3(M).

Theorem 3.1 below, whose proof is given in Section 4, is a generalization of Theorems 2.1, 2.2, 2.3 and Corollary 2.4. It provides formulae for both the height distribution and the overshoot distribution of local maxima for smooth Gaussian fields over Riemannian manifolds. Note that the formal expressions are exactly the same as in the Euclidean case, but now the field is defined on a manifold.

Theorem 3.1 Let (M, g) be an oriented N-dimensional C3 Riemannian manifold with a C1 Riemannian metric g. Let f be a Gaussian random field on M such that (C1′) and (C2′) are fulfilled. Then for each , u, and w > 0,

| (3.2) |

If we assume further that f is centered and has unit variance, then for each fixed w > 0, there exists α > 0 such that as v → ∞,

| (3.3) |

It is quite remarkable that the second approximation in (3.3) depends neither on the curvature of the manifold nor on the covariance function of the field, which need not have any stationarity properties other than zero mean and constant variance.

3.2 Isotropic Gaussian Random Fields on the Sphere

Similarly to the Euclidean case, we explore the explicit formulae for both the height distribution and the overshoot distribution of local maxima for isotropic Gaussian random fields on a particular manifold, the sphere.

Consider an isotropic Gaussian random field {f(t) : t ∈ 𝕊^N}, where 𝕊^N ⊂ ℝ^{N+1} is the N-dimensional unit sphere. For the purpose of simplifying the arguments, we will focus here on the case N ≥ 2. The special case of the circle, N = 1, requires separate treatment, but extending our results to that case is straightforward.

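The following theorem by Schoenberg (1942) characterizes the covariance function of an isotropic Gaussian field on the sphere [see also Gneiting (2013)].

Theorem 3.2 A continuous function C(·, ·) : 𝕊^N × 𝕊^N → ℝ is the covariance of an isotropic Gaussian field on 𝕊^N, N ≥ 2, if and only if it has the form

$$C(t, s) = \sum_{n=0}^{\infty} a_n P_n^{\lambda}(\langle t, s \rangle), \quad t, s \in \mathbb{S}^N,$$

where λ = (N – 1)/2, a_n ≥ 0, Σ_{n=0}^∞ a_n P_n^λ(1) < ∞, and P_n^λ are ultraspherical polynomials defined by the expansion

$$(1 - 2rx + r^2)^{-\lambda} = \sum_{n=0}^{\infty} r^n P_n^{\lambda}(x), \quad x \in [-1, 1].$$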

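Remark 3.3 (i). Note that [cf. Szegö (1975, p. 80)]

$$P_n^{\lambda}(1) = \binom{n + 2\lambda - 1}{n} \tag{3.4}$$

and λ = (N – 1)/2; therefore, Σ_{n=0}^∞ a_n P_n^λ(1) < ∞ is equivalent to Σ_{n=0}^∞ n^{N–2} a_n < ∞.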

(ii). When N = 2, λ = 1/2 and P_n^λ become Legendre polynomials. For more results on isotropic Gaussian fields on 𝕊², see the recent monograph by Marinucci and Peccati (2011).

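(iii). Theorem 3.2 still holds for the case N = 1 if we set [cf. Schoenberg (1942)] P_n^0(⟨t, s⟩) = T_n(⟨t, s⟩), where Tn are Chebyshev polynomials of the first kind defined by the expansion

$$\frac{1 - rx}{1 - 2rx + r^2} = \sum_{n=0}^{\infty} r^n T_n(x), \quad x \in [-1, 1].$$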

The arguments in the rest of this section can be easily modified accordingly.

The following statement (C1″) is a smoothness condition for Gaussian fields on the sphere. Lemma 3.4 below shows that (C1″) implies the previous smoothness condition (C1′).

(C1″). The covariance C(·, ·) of , N ≥ 2, satisfies

where , , and are ultraspherical polynomials.

Lemma 3.4 [Cheng and Xiao (2014)]. Let f be an isotropic Gaussian field on such that (C1″) is fulfilled. Then the covariance and hence (C1′) holds for f.

For a unit-variance isotropic Gaussian field f on satisfying (C1″), we define

| (3.5) |

Due to isotropy, the covariance of (f(t), ∇f(t), ∇2f(t)) only depends on C′ and C″, see Lemma 4.10 below. In particular, by Lemma 4.10 again, Var(fi(t)) = C′ and Var(fii(t)) = C′ + 3C″ for any i ∈ {1, . . . , N}. We need the following condition on C′ and C″ for further discussions.

(C3′). C″ + C′ – C′2 ≥ 0.

Remark 3.5 Note that (C3′) holds when C(·, ·) is a covariance function (i.e. positive definite function) for every dimension N ≥ 2 (or equivalently for every N ≥ 1). In fact, by Schoenberg (1942), if C(·, ·) is a covariance function on 𝕊^N for every N ≥ 2, then it is necessarily of the form

$$C(t, s) = \sum_{n=0}^{\infty} b_n \langle t, s \rangle^n, \quad t, s \in \mathbb{S}^N,$$

where bn ≥ 0. Unit variance of the field implies Σ_{n=0}^∞ bn = 1. Now consider the random variable X that assigns probability bn to the integer n. Then C′ = E(X), C″ = E[X(X – 1)], and C″ + C′ – C′² = Var(X) ≥ 0, hence (C3′) holds.
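The variance identity in Remark 3.5 is easy to verify numerically. The sketch below assumes that C′ and C″ in (3.5) are the first and second derivatives of x ↦ Σ bn xⁿ at x = 1 (the display (3.5) is not reproduced here, so this is an assumption); the coefficient vectors are made-up examples.

```python
def derivatives_at_one(b):
    """(C'(1), C''(1)) for C(x) = sum_n b[n] * x**n."""
    c1 = sum(n * bn for n, bn in enumerate(b))
    c2 = sum(n * (n - 1) * bn for n, bn in enumerate(b))
    return c1, c2


def c3_prime_slack(b):
    """Left-hand side of (C3'): C'' + C' - C'^2."""
    c1, c2 = derivatives_at_one(b)
    return c2 + c1 - c1 ** 2


def variance_of_index(b):
    """Variance of the random variable X with P(X = n) = b[n]."""
    mean = sum(n * bn for n, bn in enumerate(b))
    return sum((n - mean) ** 2 * bn for n, bn in enumerate(b))


b_mixed = [0.2, 0.3, 0.5]      # hypothetical weights summing to one
b_monomial = [0.0, 0.0, 1.0]   # C(t, s) = <t, s>^2, where X is deterministic

slack_mixed = c3_prime_slack(b_mixed)
slack_monomial = c3_prime_slack(b_monomial)
```

A single monomial gives a degenerate X, so the slack vanishes and (C3′) holds with equality.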

Theorem 3.6 Let {f(t) : t ∈ 𝕊^N}, N ≥ 2, be a centered, unit-variance, isotropic Gaussian field satisfying (C1″), (C2′) and (C3′). Then for each t0 ∈ 𝕊^N and u ∈ ℝ,

where C′ and C″ are defined in (3.5).

Proof The result follows from applying Theorem 3.1 and Lemmas 4.12 and 4.13 below.

Remark 3.7 The formula in Theorem 3.6 shows that for isotropic Gaussian fields over 𝕊^N, Ft0(u) depends on both C′ and C″. Therefore, we may write Ft0(u) as Ft0(u, C′, C″). As a consequence of Lemma 4.11, Ft0(u, C′, C″) is continuous in C′ and C″, hence the formula for the case C″ + C′ – C′² = 0 can also be derived by taking the limit of Ft0(u, C′, C″) as C″ + C′ – C′² ↓ 0.

Example 3.8 Let N = 2. Applying Proposition 4.8 with and b = 0 gives

| (3.6) |

Applying Proposition 4.8 again with and , one has

| (3.7) |

Let h(x) be the density function of the distribution of the height of a local maximum, i.e. h(x) = –F′t0(x). By Theorem 3.6, together with (3.6) and (3.7), we obtain

| (3.8) |

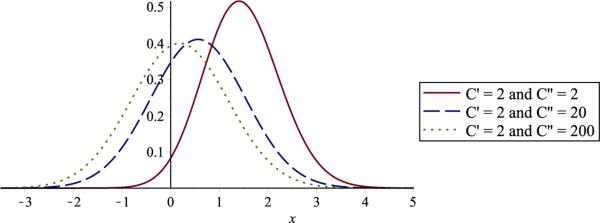

and hence Ft0(u) = ∫_u^∞ h(x) dx. Figure 2 shows several examples. The extreme case of (C3′), C″ + C′ – C′² = 0, is obtained when C(t, s) = ⟨t, s⟩^n, n ≥ 2. Shown in solid red is the case n = 2, which simplifies to

It can be seen from both (3.8) and Figure 2 that h(x) → ϕ(x) if max(C′, C′²)/C″ → 0.

Figure 2. Density function h(x) of the distribution Ft0 for isotropic Gaussian fields on 𝕊².

Theorem 3.9 Let {f(t) : t ∈ 𝕊^N}, N ≥ 2, be a centered, unit-variance, isotropic Gaussian field satisfying (C1″), (C2′) and (C3′). Then for each t0 ∈ 𝕊^N and u, v > 0,

where C′ and C″ are defined in (3.5).

Proof The result follows immediately by applying Theorem 3.1 and Lemma 4.13.

Because the exact expression in Theorem 3.9 may be complicated for large N, we now derive a tight approximation to it, which is analogous to Corollary 2.5 for the Euclidean case.

Let χ(Au(f, 𝕊^N)) be the Euler characteristic of the excursion set Au(f, 𝕊^N) = {t ∈ 𝕊^N : f(t) ≥ u}. Let ωj denote the spherical area of the j-dimensional unit sphere 𝕊^j, i.e., ωj = 2π^{(j+1)/2}/Γ((j + 1)/2). The lemma below provides the formula for the expected Euler characteristic of the excursion set.

Lemma 3.10 [Cheng and Xiao (2014)]. Let {f(t) : t ∈ 𝕊^N}, N ≥ 2, be a centered, unit-variance, isotropic Gaussian field satisfying (C1″) and (C2′). Then

where C′ is defined in (3.5), ρ0(u) = 1 – Φ(u), ρj(u) = (2π)^{–(j+1)/2} H_{j–1}(u) e^{–u²/2} with Hermite polynomials H_{j–1} for j ≥ 1 and, for j = 0, . . . , N,

| (3.9) |

are the Lipschitz-Killing curvatures of 𝕊^N.
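The ingredients of Lemma 3.10 that are stated explicitly, namely the functions ρj(u), and the standard area formula for the unit sphere, can be evaluated as follows. This is a minimal sketch that assumes the Hermite polynomials are the probabilists' ones; it does not attempt the Lipschitz-Killing curvatures in (3.9), which are not reproduced here, and the function names are hypothetical.

```python
import math


def hermite(n, x):
    """Probabilists' Hermite polynomial He_n(x) via the three-term recurrence."""
    if n == 0:
        return 1.0
    prev, cur = 1.0, x
    for k in range(1, n):
        prev, cur = cur, x * cur - k * prev
    return cur


def rho(j, u):
    """rho_0(u) = 1 - Phi(u); rho_j(u) = (2 pi)^(-(j+1)/2) He_{j-1}(u) exp(-u^2/2)."""
    if j == 0:
        return 0.5 * math.erfc(u / math.sqrt(2.0))
    return ((2.0 * math.pi) ** (-(j + 1) / 2.0)
            * hermite(j - 1, u) * math.exp(-u * u / 2.0))


def sphere_area(j):
    """Surface area of the j-dimensional unit sphere: 2 pi^((j+1)/2) / Gamma((j+1)/2)."""
    return 2.0 * math.pi ** ((j + 1) / 2.0) / math.gamma((j + 1) / 2.0)


densities_at_two = [rho(j, 2.0) for j in range(4)]   # rho_0, ..., rho_3 at u = 2
```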

Theorem 3.11 Let {f(t) : t ∈ 𝕊^N}, N ≥ 2, be a centered, unit-variance, isotropic Gaussian field satisfying (C1″) and (C2′). Then for each t0 ∈ 𝕊^N and each fixed u > 0, there exists α > 0 such that as v → ∞,

| (3.10) |

where C′ is defined in (3.5), ρj(u) and are as in Lemma 3.10.

Remark 3.12 Note that (3.10) depends on the covariance function only through its first derivative C′. In comparison, in Corollary 2.5 for the Euclidean case we only have the highest-order term of the expected Euler characteristic expansion because we do not consider the boundaries of T. On the sphere, we need all terms in the expansion since the sphere has no boundary.

Proof By Theorem 3.1,

Since f is isotropic, integrating the numerator and denominator above over 𝕊^N, we obtain

where the last line comes from applying the Kac-Rice Metatheorem to the Euler characteristic of the excursion set, see Adler and Taylor (2007, pp. 315-316). The result then follows from Lemma 3.10.

4 Proofs and Auxiliary Results

4.1 Proofs for Section 2

For u > 0, let μ(t0, ε), μN(t0, ε), and be the number of critical points, the number of local maxima, the number of local maxima above u and the number of local maxima below u in Ut0(ε) respectively. More precisely,

| (4.1) |

where index(∇2f(t)) is the number of negative eigenvalues of ∇2f(t).

In order to prove Theorem 2.1, we need the following lemma, which shows that, for the number of critical points over the cube of side length ε, the second factorial moment decays faster than the expectation as ε tends to 0. Our proof is based on arguments similar to those in the proof of Lemma 3 in Piterbarg (1996).

Lemma 4.1 Let {f(t) : t ∈ T} be a Gaussian random field satisfying (C1) and (C2). Then for each fixed t0 ∈ T°, as ε → 0,

$$\mathbb{E}\{\mu(t_0, \varepsilon)(\mu(t_0, \varepsilon) - 1)\} = o(\varepsilon^N).$$

Proof By the Kac-Rice formula for factorial moments [cf. Theorem 11.5.1 in Adler and Taylor (2007)],

| (4.2) |

where

By Taylor's expansion,

| (4.3) |

where is a Gaussian vector field, with properties to be specified. In particular, by condition (C1), for ε small enough,

where C1 is some positive constant. Therefore, we can write

Note that the determinant of the matrix ∇2f(t) is equal to the determinant of the matrix

For any i = 2, . . . , N + 1, multiply the ith column of this matrix by (si – ti)/∥si – ti∥2, take the sum of all such columns and add the result to the first column, obtaining the matrix

whose determinant is still equal to the determinant of ∇2f(t). Let r = max1≤i≤N |si – ti|,

Using properties of a determinant, it follows that

Let et,s = (s – t)T/∥s – t∥, then we obtain

| (4.4) |

where

By (C1) and (C2), there exists C2 > 0 such that

It is obvious that

Applying Taylor's expansion (4.3), we obtain that as ∥s – t∥ → 0,

where the last determinant is bounded away from zero uniformly in t and s due to the regularity condition (C2). Therefore, there exists C3 > 0 such that

where C3 η and are some positive constants. Recall the elementary inequality

It follows that

Proof of Theorem 2.1 By the definition in (1.2),

| (4.5) |

Let , then and , it follows that

Therefore, by Lemma 4.1, as ε → 0,

| (4.6) |

Similarly,

| (4.7) |

Next we show that

| (4.8) |

Roughly speaking, the probability that there exists a local maximum and the field exceeds u at t0 is approximately the same as the probability that there is at least one local maximum exceeding u. This is because in the limit, the local maximum occurs at t0 and is greater than u. We show the rigorous proof below.

Note that for any events A, B, C such that C ⊂ B,

By this inequality, to prove (4.8), it suffices to show

where the first probability above is the probability that the field exceeds u at t0 but all local maxima are below u, while the second one is the probability that the field does not exceed u at t0 but all local maxima exceed u.

Recall the definition of in (4.1), we have

| (4.9) |

where the second line follows from similar argument for showing (4.6). By the Kac-Rice metatheorem,

| (4.10) |

By (C1) and (C2), for small ε > 0,

for some positive constant C. On the other hand, by continuity, conditioning on f(t0) = x > u, tends to 0 a.s. as ε → 0. Therefore, for each x > u, by the dominated convergence theorem (we may choose as the dominating function for some ε0 > 0), as ε → 0,

Plugging these facts into (4.10) and applying the dominated convergence theorem, we obtain that as ε → 0,

which implies . By (4.9),

Similar arguments yield

Hence (4.8) holds and therefore,

| (4.11) |

where the last equality is due to (4.6) and (4.7). By the Kac-Rice metatheorem and Lebesgue's continuity theorem,

and similarly,

Plugging these into (4.11) yields (2.1).

Proof of Theorem 2.3 By Theorem 2.2,

| (4.12) |

We shall estimate the conditional expectations above. Since f has unit variance, taking derivatives gives

Since Λ(t0) is positive definite, there exists a unique positive definite matrix Qt0 such that Qt0Λ(t0)Qt0 = IN (Qt0 is the inverse of the square root of Λ(t0)), where IN is the N × N identity matrix. Hence
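The normalization Qt0Λ(t0)Qt0 = IN can be checked concretely. The sketch below treats a hypothetical 2 × 2 positive definite matrix in place of Λ(t0) and uses the closed form √A = (A + √(det A) I)/√(tr A + 2√(det A)), valid for 2 × 2 positive definite A, then inverts it; the numbers are illustrative only.

```python
import math


def inverse_sqrt_2x2(lam):
    """Positive definite Q with Q @ lam @ Q = I for a 2 x 2 positive definite lam."""
    (a, b), (_, d) = lam
    det = a * d - b * b
    if a <= 0.0 or det <= 0.0:
        raise ValueError("matrix must be positive definite")
    s = math.sqrt(det)                 # determinant of the square root R
    t = math.sqrt(a + d + 2.0 * s)     # trace of the square root R
    r = [[(a + s) / t, b / t], [b / t, (d + s) / t]]   # R @ R == lam
    # Invert R with the 2 x 2 adjugate formula, using det(R) = s.
    return [[r[1][1] / s, -r[0][1] / s], [-r[1][0] / s, r[0][0] / s]]


def matmul(x, y):
    """Product of two 2 x 2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


lam = [[2.0, 0.5], [0.5, 1.0]]         # hypothetical stand-in for Lambda(t0)
q = inverse_sqrt_2x2(lam)
should_be_identity = matmul(matmul(q, lam), q)
```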

By the conditional formula for Gaussian random variables,

Make the change of variables

where W(t0) = (Wij(t0))1≤i,j≤N. Then (W(t0)|f(t0) = x, ∇f(t0) = 0) is a Gaussian matrix whose mean is 0 and covariance is the same as that of (Qt0 ∇2f(t0)Qt0|f(t0) = x, ∇f(t0) = 0). Denote the density of Gaussian vector ((Wij(t0))1≤i≤j≤N|f(t0) = x, ∇f(t0) = 0) by ht0(w), , then

| (4.13) |

where (wij) is the abbreviation of matrix (wij)1≤i,j≤k. Note that there exists a constant c > 0 such that

Thus we can write (4.13) as

| (4.14) |

where Z(t, x) is the second integral in the first line of (4.14) and it satisfies

| (4.15) |

By the non-degenerate condition (C2), there exists a constant α′ > 0 such that as ∥(wij)∥ → ∞, . On the other hand, the determinant inside the integral in (4.15) is a polynomial in wij and x, and it does not affect the exponentially decay, hence as x → ∞, |Z(t, x)| = o(e–αx2) for some constant α > 0. Combine this with (4.13) and (4.14), and note that

we obtain that, as x → ∞,

Plugging this into (4.12) yields (2.2).

Lemma 4.2 Under the assumptions in Theorem 2.3, as x → ∞,

| (4.16) |

Proof Let Qt be the N × N positive definite matrix such that QtΛ(t)Qt = IN. Then we can write and therefore,

| (4.17) |

Since f(t) and ∇f(t) are independent,

It follows that

| (4.18) |

where is an N × N Gaussian random matrix such that and its covariance matrix is independent of x. By the Laplace expansion of the determinant,

where is the sum of the principal minors of order i in . Taking the expectation above and noting that since , we obtain that as x → ∞,

Combining this with (4.17) and (4.18) yields (4.16).

Lemma 4.3 [Azaïs and Wschebor (2008), Lemma 2]. Let {f(t) : t ∈ T} be a centered, unit-variance, isotropic Gaussian random field satisfying (C1) and (C2). Then for each t ∈ T and i, j, k, l ∈ {1, . . . , N},

| (4.19) |

where δij is the Kronecker delta and ρ′ and ρ″ are defined in (2.5).

Lemma 4.4 Under the assumptions in Lemma 4.3, the distribution of ∇²f(t) is the same as that of √(8ρ″) MN + 2√ρ″ ξ IN, where MN is a GOE random matrix, ξ is a standard Gaussian variable independent of MN and IN is the N × N identity matrix. Assume further that (C3) holds; then the conditional distribution of (∇²f(t)|f(t) = x) is the same as the distribution of √(8ρ″) MN + [2ρ′x + 2√(ρ″ – ρ′²) ξ] IN.

Proof The first result is a direct consequence of Lemma 4.3. For the second one, applying (4.19) and the well-known conditional formula for Gaussian variables, we see that (∇2f(t)|f(t) = x) can be written as Δ + 2ρ′xIN, where Δ = (Δij)1≤i,j≤N is a symmetric N × N matrix with centered Gaussian entries such that

Therefore, Δ has the same distribution as the random matrix , completing the proof.
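The kind of representation used in Lemma 4.4 can be checked by simulation. The sketch below rests on two assumptions that are not restated in this section: the GOE normalization Var(Mii) = 1 and Var(Mij) = 1/2 for i ≠ j, and a target covariance of the isotropic form E{HijHkl} = c(δijδkl + δikδjl + δilδjk) for a generic constant c > 0. Under these assumptions, the matrix √(2c) MN + √c ξ IN reproduces the target covariance. All names and the value of c are hypothetical.

```python
import numpy as np


def sample_goe(rng, n_samples, dim):
    """GOE draws with Var(M_ii) = 1 and Var(M_ij) = 1/2, i != j (assumed normalization)."""
    g = rng.standard_normal((n_samples, dim, dim))
    return (g + g.transpose(0, 2, 1)) / 2.0


def sample_hessian_model(rng, n_samples, dim, c):
    """Draws of sqrt(2c) * M + sqrt(c) * xi * I, with xi standard normal independent of M."""
    m = sample_goe(rng, n_samples, dim)
    xi = rng.standard_normal(n_samples)
    return np.sqrt(2.0 * c) * m + np.sqrt(c) * xi[:, None, None] * np.eye(dim)


def target_cov(c, i, j, k, l):
    """Assumed isotropic covariance c * (d_ij d_kl + d_ik d_jl + d_il d_jk)."""
    d = lambda a, b: 1.0 if a == b else 0.0
    return c * (d(i, j) * d(k, l) + d(i, k) * d(j, l) + d(i, l) * d(j, k))


rng = np.random.default_rng(1)
c = 1.5  # hypothetical constant
h = sample_hessian_model(rng, 200_000, 3, c)

# Compare empirical second moments with the assumed target for a few index tuples.
checks = [(0, 0, 0, 0), (0, 1, 0, 1), (0, 0, 1, 1), (0, 1, 0, 2), (0, 0, 0, 1)]
empirical = {
    idx: float(np.mean(h[:, idx[0], idx[1]] * h[:, idx[2], idx[3]]))
    for idx in checks
}
for idx in checks:
    print(idx, round(empirical[idx], 3), target_cov(c, *idx))
```

The shared scalar ξ is what produces the correlation between distinct diagonal entries, which an independent-entry GOE matrix alone cannot supply.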

Lemma 4.5 below is a revised version of Lemma 3.2.3 in Auffinger (2011). The proof is omitted here since it is similar to that of the reference above.

Lemma 4.5 Let MN be an N × N GOE matrix and X be an independent Gaussian random variable with mean m and variance σ2. Then,

| (4.20) |

where is the expectation under the probability distribution QN+1(dλ) as in (2.6) with N replaced by N + 1.

Lemma 4.6 Let {f(t) : t ∈ T} be a centered, unit-variance, isotropic Gaussian random field satisfying (C1) and (C2). Then for each t ∈ T,

Proof Since ∇2f(t) and ∇f(t) are independent for each fixed t, by Lemma 4.4,

where X is an independent centered Gaussian variable with variance 1/2. Applying Lemma 4.5 with m = 0 and , we obtain the desired result.
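The variance 1/2 of X in the proof above can be traced to a scaling step. As a sketch, assume that the Hessian is represented as aMN + bξIN for some constants a, b > 0 (a generic form assumed here for illustration; the specific constants are those of Lemma 4.4). Pulling the factor a out of the determinant gives:

```latex
\[
\det\bigl(aM_N + b\,\xi I_N\bigr)
  = a^{N}\det\Bigl(M_N + \tfrac{b}{a}\,\xi I_N\Bigr)
  = a^{N}\det\bigl(M_N + X I_N\bigr),
\qquad
X := \tfrac{b}{a}\,\xi \sim N\bigl(0,\; b^{2}/a^{2}\bigr).
\]
```

In particular, X is centered with variance 1/2 exactly when a² = 2b², and since X is symmetric about zero the sign in front of X IN is immaterial in this centered case.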

Lemma 4.7 Let {f(t) : t ∈ T} be a centered, unit-variance, isotropic Gaussian random field satisfying (C1), (C2) and (C3). Then for each t ∈ T and ,

Proof Since ∇f(t) is independent of both f(t) and ∇2f(t) for each fixed t, by Lemma 4.4,

| (4.21) |

When ρ″ − ρ′² > 0, (4.21) can be written as

where X is an independent Gaussian variable with mean and variance . Applying Lemma 4.5 yields the formula for the case ρ″ − ρ′² > 0.

When ρ″ − ρ′² = 0, i.e. , (4.21) becomes

This completes the proof.

The following result can be derived by elementary calculations from the GOE density (2.6); the details are omitted here.

Proposition 4.8 Let N = 2. Then for positive constants a and b,

4.2 Proofs for Section 3

Define μ(t0, ε), μN(t0, ε), and as in (4.1) with Ut0(ε) replaced by Bt0(ε) respectively. The following lemma, which will be used for proving Theorem 3.1, is an analogue of Lemma 4.1.

Lemma 4.9 Let (M, g) be an oriented N-dimensional C3 Riemannian manifold with a C1 Riemannian metric g. Let f be a Gaussian random field on M such that (C1′) and (C2′) are fulfilled. Then for any , as ε → 0,

Proof Let (Uα, φα)α∈I be an atlas on M and let ε be small enough such that φα(Bt0(ε)) ⊂ φα(Uα) for some α ∈ I. Set

Then it follows immediately from the fact that φα is a diffeomorphism and from the definition of μ that

Note that (C1′) and (C2′) imply that fα satisfies (C1) and (C2). Applying Lemma 4.1 gives

This verifies the desired result.

Proof of Theorem 3.1 Following the proof of Theorem 2.1, together with Lemma 4.9 and the argument by charts in its proof, we obtain

| (4.22) |

By the Kac-Rice metatheorem for random fields on manifolds [cf. Theorem 12.1.1 in Adler and Taylor (2007)] and Lebesgue's continuity theorem,

where Volg is the volume element on M induced by the Riemannian metric g. Similarly,

Plugging these facts into (4.22) yields the first line of (3.2). The second line of (3.2) follows similarly.

Applying Theorem 2.3 and Corollary 2.4, together with the argument by charts, we obtain (3.3).

Lemma 4.10 below is on the properties of the covariance of (f(t), ∇f(t), ∇2f(t)), where the gradient ∇f(t) and Hessian ∇2f(t) are defined as in (3.1) under some orthonormal frame {Ei}1≤i≤N on . Since it can be proved similarly to Lemma 3.2.2 or Lemma 4.4.2 in Auffinger (2011), the detailed proof is omitted here.

Lemma 4.10 Let f be a centered, unit-variance, isotropic Gaussian field on , N ≥ 2, satisfying (C1″) and (C2′). Then

| (4.23) |

where C′ and C″ are defined in (3.5).

Lemma 4.11 Under the assumptions in Lemma 4.10, the distribution of ∇2f(t) is the same as that of , where MN is a GOE matrix and ξ is a standard Gaussian variable independent of MN. If, in addition, (C3′) holds, then the conditional distribution of (∇2f(t)|f(t) = x) is the same as the distribution of .

Proof The first result is an immediate consequence of Lemma 4.10. For the second one, applying (4.23) and the well-known conditional formula for Gaussian random variables, we see that (∇2f(t)|f(t) = x) can be written as Δ – C′xIN, where Δ = (Δij)1≤i,j≤N is a symmetric N × N matrix with centered Gaussian entries such that

Therefore, Δ has the same distribution as the random matrix , completing the proof.
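For reference, the conditional formula for Gaussian variables invoked in the proofs of Lemmas 4.4 and 4.11 can be stated as follows. This is the standard result for a centered jointly Gaussian pair, written here with Y standing for the vector of Hessian entries and Z = f(t), which has unit variance; the symbols Y, Z and Σ are notation introduced for this sketch only.

```latex
\[
(Y \mid Z = x) \;\sim\; N\Bigl(\Sigma_{YZ}\,\Sigma_{ZZ}^{-1}\,x,\;\;
  \Sigma_{YY} - \Sigma_{YZ}\,\Sigma_{ZZ}^{-1}\,\Sigma_{ZY}\Bigr),
\qquad
\Sigma_{YZ} = \mathbb{E}\{YZ\},\quad
\Sigma_{ZZ} = \operatorname{Var}(Z) = 1 .
\]
```

With unit variance the conditional mean reduces to E{YZ} x, which is linear in x and explains the deterministic shift proportional to xIN in both proofs, while the conditional covariance does not depend on x and determines the law of the centered matrix Δ.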

By arguments similar to those used to prove Lemmas 4.6 and 4.7, with Lemma 4.11 in place of Lemma 4.4, we obtain the following two lemmas.

Lemma 4.12 Let be a centered, unit-variance, isotropic Gaussian field satisfying (C1″) and (C2′). Then for each ,

Lemma 4.13 Let be a centered, unit-variance, isotropic Gaussian field satisfying (C1″), (C2′) and (C3′). Then for each and ,

Acknowledgments

The authors thank Robert Adler of the Technion - Israel Institute of Technology for useful discussions and the anonymous referees for their insightful comments which have led to several improvements of this manuscript.

Footnotes

Research partially supported by NIH grant R01-CA157528.

Contributor Information

Dan Cheng, North Carolina State University.

Armin Schwartzman, North Carolina State University.

References

- 1. Adler RJ. The Geometry of Random Fields. Wiley; New York: 1981.

- 2. Adler RJ, Taylor JE. Random Fields and Geometry. Springer; New York: 2007.

- 3. Adler RJ, Taylor JE, Worsley KJ. Applications of Random Fields and Geometry: Foundations and Case Studies. 2012 In preparation.

- 4. Auffinger A. Ph.D. Thesis. New York University; 2011. Random Matrices, Complexity of Spin Glasses and Heavy Tailed Processes.

- 5. Azaïs J-M, Wschebor M. A general expression for the distribution of the maximum of a Gaussian field and the approximation of the tail. Stoch. Process. Appl. 2008;118:1190–1218.

- 6. Azaïs J-M, Wschebor M. Erratum to: A general expression for the distribution of the maximum of a Gaussian field and the approximation of the tail [Stochastic Process. Appl. 118 (7) (2008) 1190–1218]. Stoch. Process. Appl. 2010;120:2100–2101.

- 7. Bardeen JM, Bond JR, Kaiser N, Szalay AS. The statistics of peaks of Gaussian random fields. Astrophys. J. 1985;304:15–61.

- 8. Belyaev, Yu K. Bursts and shines of random fields. Dokl. Akad. Nauk SSSR. 1967;176:495–497; Soviet Math. Dokl. 8:1107–1109.

- 9. Belyaev, Yu K. Proc. Sixth Berkeley Symp. on Math. Statist. and Prob., Vol. II: Probability Theory. University of California Press; 1972. Point processes and first passage problems. pp. 1–17.

- 10. Cheng D, Xiao Y. Excursion probability of Gaussian random fields on sphere. 2014 arXiv:1401.5498.

- 11. Cheng D, Schwartzman A. Multiple testing of local maxima for detection of peaks in random fields. 2014 doi: 10.1214/16-AOS1458. arXiv:1405.1400.

- 12. Cramér H, Leadbetter MR. Stationary and Related Stochastic Processes: Sample Function Properties and Their Applications. Wiley; New York: 1967.

- 13. Fyodorov YV. Complexity of random energy landscapes, glass transition, and absolute value of the spectral determinant of random matrices. Phys. Rev. Lett. 2004;92:240601. doi: 10.1103/PhysRevLett.92.240601.

- 14. Gneiting T. Strictly and non-strictly positive definite functions on spheres. Bernoulli. 2013;19:1327–1349.

- 15. Larson DL, Wandelt BD. The hot and cold spots in the Wilkinson microwave anisotropy probe data are not hot and cold enough. Astrophys. J. 2004;613:85–88.

- 16. Lindgren G. Local maxima of Gaussian fields. Ark. Mat. 1972;10:195–218.

- 17. Lindgren G. Wave characteristics distributions for Gaussian waves – wave-length, amplitude and steepness. Ocean Engng. 1982;9:411–432.

- 18. Longuet-Higgins MS. On the statistical distribution of the heights of sea waves. J. Marine Res. 1952;11:245–266.

- 19. Longuet-Higgins MS. On the statistical distribution of the heights of sea waves: some effects of nonlinearity and finite band width. J. Geophys. Res. 1980;85:1519–1523.

- 20. Marinucci D, Peccati G. Random Fields on the Sphere. Representation, Limit Theorems and Cosmological Applications. Cambridge University Press; 2011.

- 21. Nosko VP. Proceedings USSR-Japan Symposium on Probability (Khabarovsk, 1969). Novosibirsk; 1969. The characteristics of excursions of Gaussian homogeneous random fields above a high level. pp. 11–18.

- 22. Nosko VP. On shines of Gaussian random fields. Vestnik Moskov. Univ. Ser. I Mat. Meh. 1970a;25:18–22.

- 23. Nosko VP. The investigation of level excursions of random processes and fields. Lomonosov Moscow State University, Faculty of Mechanics and Mathematics; 1970b. Unpublished dissertation for the degree of Candidate of Physics and Mathematics.

- 24. Piterbarg VI. Technical Report No. 478. Center for Stochastic Processes, Univ. North Carolina; 1996. Rice's method for large excursions of Gaussian random fields.

- 25. Schneider R, Weil W. Probability and Its Applications. Springer-Verlag; Berlin: 2008. Stochastic and integral geometry.

- 26. Schoenberg IJ. Positive definite functions on spheres. Duke Math. J. 1942;9:96–108.

- 27. Schwartzman A, Gavrilov Y, Adler RJ. Multiple testing of local maxima for detection of peaks in 1D. Ann. Statist. 2011;39:3290–3319. doi: 10.1214/11-AOS943.

- 28. Sobey RJ. The distribution of zero-crossing wave heights and periods in a stationary sea state. Ocean Engng. 1992;19:101–118.

- 29. Szegö G. Orthogonal Polynomials. American Mathematical Society; Providence, RI: 1975.