Abstract

Purpose

The purpose of this article is to review recent research from our laboratory on aging and the ear–brain system as it relates to hearing aid use and auditory rehabilitation. The material described here was presented as part of the forum on the brain and hearing aids at the 2014 HEaring Across the Lifespan (HEAL) conference.

Method

The method involves a narrative review of previously reported electroencephalography (EEG) and magnetoencephalography (MEG) data from our laboratory as they relate to the (a) neural detection of amplified sound and (b) ability to learn new sound contrasts.

Conclusions

Results from our studies add to the mounting evidence that there are central effects of biological aging as well as peripheral pathology that affect a person's neural detection and use of sound. What is more, these biological effects can be seen as early as middle age. The accruing evidence has implications for hearing aid use because effective communication relies not only on sufficient detection of sound but also on the individual's ability to learn to make use of these sounds in ever-changing listening environments.

Hearing aids (HAs) help people with hearing loss by making sounds more audible, but they do not guarantee satisfactory perception. Some HA users report positive outcomes, such as being able to use their HAs to effectively communicate with friends and family, whereas others report more negative outcomes. HA device-centered variables (e.g., directional microphones, compression and gain settings) and patient-centered variables (e.g., age, attention, motivation, and biology) are some of the factors believed to contribute to a person's HA experience. However, a large portion of the variance in HA outcomes remains unexplained. This presentation reviewed some of the ways in which neuroscience is advancing our understanding of HA use. The presentation was not intended to be comprehensive in its review of the literature on this topic; instead, it focused on findings obtained in recent years from the Brain and Behavior Laboratory at the University of Washington.

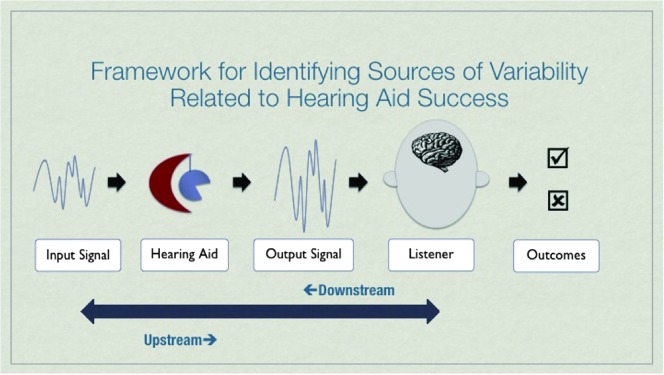

Tremblay and Miller (2014) put forth a framework for discussing the many potential variables associated with HA use, which are described in terms of upstream and downstream processes (see Figure 1). The term upstream is used here to refer to the ear-to-brain processes involved in relaying acoustic information from the periphery (ear) to, and within, the central auditory brain systems. The term downstream refers to processes associated with making use of sound; examples include the ability to segregate different sounds arriving at the cortex and learning how to identify a new sound contrast. Although the contribution of upstream processing to successful speech understanding has long been considered, the interplay between upstream and downstream functioning in relation to hearing instrument use is less understood (Kiessling et al., 2003). With this framework in mind, here we review two examples of how electroencephalography (EEG) and magnetoencephalography (MEG) studies are filling gaps in knowledge by defining how the human auditory system (a) neurally detects amplified sound and (b) learns to identify different sounds.

Figure 1.

Framework for identifying sources of variability related to hearing aid success. From “How Neuroscience Relates to Hearing Aid Amplification,” by K. L. Tremblay and C. W. Miller, 2014, International Journal of Otolaryngology, Volume 14, pp. 1–7. Article No. 641652. doi:10.1155/2014/641652. Reprinted with permission.

Upstream: The Neural Detection of Amplified Sound

What is received by the individual's auditory system is not the signal entering the HA but rather the modified signal that is entering the ear canal. HA devices alter the incoming signal (e.g., rise time, level, temporal envelope, and spectral content), which means that quantification of the signal at the output of the HA is important for understanding the biological processing of amplified sound. In a series of studies, Billings and colleagues (Billings, Papesh, Penman, Baltzell, & Gallun, 2012; Billings & Tremblay, 2011; Billings, Tremblay, Souza, & Binns, 2007) measured the output of HAs and found that the HA-processed signal contains noise that can disrupt the biological codes that convey information about a signal's level. For example, time-locked evoked cortical EEG activity (e.g., the P1-N1-P2 complex) typically increases in amplitude and decreases in latency with each increase in signal level. However, when sound levels are increased through HA gain, the expected growth function is not seen. The neural codes that signal HA gain are also affected by the type of HA signal processing (analog or digital; Marynewich, Jenstad, & Stapells, 2012; Tremblay, Billings, et al., 2006; Tremblay, Kalstein, et al., 2006).

Listener variables (e.g., age, attention, motivation, personality, lifestyle, and biology) can also contribute to HA use. Age in particular is a relevant variable because amplified sound passes through an ear-to-brain system that has been compromised by the central effects of biological aging (CEBA) and the central effects of peripheral pathology (CEPP; Willott, Chisolm, & Lister, 2001). CEBA refers to anatomic and physiologic changes in the brain (e.g., neuron loss, dysfunction of excitatory and inhibitory neurotransmitter systems) associated with aging. CEPP refers to changes in the central auditory system (e.g., synaptic alterations) resulting from peripheral pathology (e.g., cochlear degeneration, sound deprivation). One example of a biological consequence is a loss of temporal precision, which is important for encoding spectral and temporal aspects of the incoming sound. With advancing age, the neural conduction of sound upstream is negatively affected by declines in neural synchrony and thus temporal processing (Clinard & Tremblay, 2013; Frisina, 2001; Schneider & Pichora-Fuller, 2001; Tremblay, Piskosz, & Souza, 2003; for reviews, see Billings, Tremblay, & Willott, 2012; Ison, Tremblay, & Allen, 2010; Tremblay & Burkard, 2007). This means that the typical older person who wears an HA processes an incoming signal that has been altered by the HA, using an auditory system that has been affected by CEBA and CEPP. Hence, even though HAs or other types of personal amplifiers help by making sounds louder, they do not correct for this impaired biology. Put differently, amplified sound that is made audible through the use of an HA is not faithfully encoded by the peripheral and central auditory systems, and the resulting distorted speech signal is believed to contribute to impaired speech understanding even when sounds are sufficiently audible. This might help explain why HA users sometimes describe amplified signals as louder but not necessarily clearer, much like turning up the volume on a radio station that is off frequency.

Upstream/Downstream: Learning to Identify Different Sounds

If the typical older adult is listening to an altered amplified signal using an impaired auditory system, can the listener be taught to make better use of the amplified signal? The second part of the presentation focused on cognitive processes involved in learning and memory. We have examined the ability of individuals to relearn new sounds because it is possible that the degree of benefit a particular person receives from a hearing prosthesis depends on the ability of that patient's system to make use of the modified acoustic cues introduced by the HA. Moreover, people who do not experience significant benefits from an HA may have auditory systems that are less capable of representing new acoustic cues or learning how to relate these new neural patterns to an existing memory of the sounds of speech.

Using EEG and MEG methods, we have shown that the central auditory systems of people with normal hearing change after being exposed to sound as well as in response to sound learning. More specifically, enhanced cortical activity (e.g., P1-N1-P2) that is most pronounced over temporal-occipital scalp regions happens rapidly and is long lasting (Alain, Campeanu, & Tremblay, 2010; Tremblay, Inoue, McClannahan, & Ross, 2010; Tremblay, Ross, Inoue, McClannahan, & Collet, 2014). For some individuals, enhanced cortical activity can persist for months after the last auditory experience and is thought to relate to a person's auditory memory capacity (Alain et al., 2010; Tremblay et al., 2010, 2014). However, the neural capacity for change and retention appears to decline with advancing age (Ross & Tremblay, 2009). Even in middle age, auditory systems appear less responsive to repeated episodes of stimulus exposure.

If being exposed to sound generates changes in the way sound is encoded in the auditory cortex, then it is reasonable to assume that the brain might change after being fit with an HA and that people learn to make better use of new amplified sounds in the real world. However, there is little evidence to support this notion. Humes and Wilson (2003) tracked perceptual measures (e.g., speech-in-noise performance) in HA users following 1, 2, and 3 years of HA use, and there was no overwhelming evidence of perceptual gains. The greatest gains arising from HA use appear to come almost immediately from making previously inaudible signals audible. Likewise, Dawes, Munro, Kalluri, and Edwards (2014) reported finding no significant changes in evoked P1-N1-P2 activity following a period of HA stimulation; however, they did not examine scalp regions over the temporal lobe that are known to be responsive to sound (Tremblay et al., 2010). Therefore, the effects of HA amplification on cortical brain activity are still unclear; however, results thus far might explain why fitting people with HAs and sending them out to interact in the real auditory world does not appear to result in gradual benefit over time.

If a person does not acclimatize to HA use in a way that improves perception over time, can that person be taught to make better use of the amplified signal? Auditory training exercises have been used to improve perception as well as to study the potential modifications in neural activity. A decade of research from our laboratory shows that it is possible to improve a person's perception of a temporal cue contained in speech, called voice onset time, but training does not appear to change the neural representation of the timing code itself. Instead, we suggest that targeted listening training alters higher-order cognitive or downstream processes involved in categorizing sounds into objects and remembering them (Ross, Jamali, & Tremblay, 2013). When relating these findings to the typical HA user, a few important issues become relevant. First, the typical HA user is older and has likely experienced CEPP and CEBA. Second, the HA user is listening to a modified signal using an auditory system that has been biologically altered because of age and periods of auditory deprivation. Third, the ability to learn has been said to decline at a rate of about 1% per year after age 25 years (Knowles, 1980). Together, these types of age- and deprivation-related biological changes may explain why training exercises designed to enhance learning through upstream and downstream engagement have shown limited success (Chisolm, Saunders, McArdle, Smith, & Wilson, 2013; Henshaw & Ferguson, 2013).
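To give a sense of scale for the third point, the following is a minimal arithmetic sketch. The text gives only the rate (about 1% per year after age 25) and does not say whether the decline is linear or compounding, so both readings are shown. The function name, the normalization of learning ability to 1.0 at age 25, and the example ages are assumptions made for illustration; they are not taken from Knowles (1980).

```python
def relative_learning_ability(age, onset_age=25, annual_decline=0.01, compounding=False):
    """Return learning ability relative to 1.0 at onset_age (hypothetical scale)."""
    # No decline is assumed before the onset age.
    years = max(0, age - onset_age)
    if compounding:
        # Reading 1 (assumption): each year removes 1% of the previous year's value.
        return (1.0 - annual_decline) ** years
    # Reading 2 (assumption): each year removes 1% of the age-25 value, floored at 0.
    return max(0.0, 1.0 - annual_decline * years)


# Illustrative ages only; these are not data from any study.
for age in (25, 45, 65, 85):
    linear = relative_learning_ability(age)
    compound = relative_learning_ability(age, compounding=True)
    print(f"age {age}: linear {linear:.2f}, compounding {compound:.2f}")
```

Under either reading, a 65-year-old would be at roughly 60% to 67% of the age-25 value, which may help convey why training outcomes in older HA users could be limited.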

In summary, HAs help people with hearing loss by making sounds more audible, but they do not guarantee satisfactory perception. There is an abundance of literature describing CEBA and CEPP, but the contribution of these biological changes to successful HA use is not fully understood. Therefore, research and interventions directed at improving a patient's access to and use of sound are important future directions. In the meantime, information about CEBA and CEPP can be incorporated into patient counseling to explain why some people respond more or less favorably to current clinical interventions (i.e., HA amplification and auditory training). Information about the brain can also be used to motivate people to use their HAs and mindfully interact with sound.

Acknowledgments

We acknowledge funding from National Institute on Deafness and Other Communication Disorders Grant R01 DC012769-02 as well as the Virginia Merrill Bloedel Hearing Research Center Traveling Scholar program.

References

- Alain C., Campeanu S., & Tremblay K. (2010). Changes in sensory evoked responses coincide with rapid improvement in speech identification performance. Journal of Cognitive Neuroscience, 22, 392–403.

- Billings C. J., Papesh M. A., Penman T. M., Baltzell L. S., & Gallun F. J. (2012). Clinical use of aided cortical auditory evoked potentials as a measure of physiological detection or physiological discrimination. International Journal of Otolaryngology. doi:10.1155/2012/365752

- Billings C. J., & Tremblay K. L. (2011). Aided cortical auditory evoked potentials in response to changes in hearing aid gain. International Journal of Audiology, 50, 459–467.

- Billings C. J., Tremblay K. L., Souza P. E., & Binns M. A. (2007). Effects of hearing aid amplification and stimulus intensity on cortical auditory evoked potentials. Audiology and Neuro-Otology, 12, 234–246.

- Billings C. J., Tremblay K. L., & Willott J. W. (2012). The aging auditory system. In Tremblay K., & Burkard R. (Eds.), Translational perspectives in auditory neuroscience: Hearing across the lifespan—Assessment and disorders (pp. 62–83). San Diego, CA: Plural Publishing.

- Chisolm T., Saunders G., McArdle R., Smith S., & Wilson R. (2013). Learning to listen again: The role of compliance in auditory training for adults with hearing loss. American Journal of Audiology, 22, 339–342.

- Clinard C., & Tremblay K. L. (2013). What brainstem recordings may or may not be able to tell us about hearing aid–amplified signals. Seminars in Hearing, 34, 270–277.

- Dawes P., Munro K. J., Kalluri S., & Edwards B. (2014). Auditory acclimatization and hearing aids: Late auditory evoked potentials and speech recognition following unilateral and bilateral amplification. The Journal of the Acoustical Society of America, 135, 3560–3569.

- Frisina R. D. (2001). Possible neurochemical and neuroanatomical bases of age-related hearing loss—Presbycusis. Seminars in Hearing, 22, 213–225.

- Henshaw H., & Ferguson M. A. (2013). Efficacy of individual computer-based auditory training for people with hearing loss: A systematic review of the evidence. PLoS One, 8(5), e62836. doi:10.1371/journal.pone.0062836

- Humes L. E., & Wilson D. L. (2003). An examination of changes in hearing-aid performance and benefit in the elderly over a 3-year period of hearing-aid use. Journal of Speech, Language, and Hearing Research, 46, 137–145.

- Ison J., Tremblay K. L., & Allen P. (2010). Closing the gap between neurobiology and human presbycusis: Behavioral and evoked potential studies of age-related hearing loss in animal models and in humans. In Fay R. R., & Popper A. (Eds.), The aging auditory system: Springer handbook of auditory research (pp. 75–110). New York, NY: Springer.

- Kiessling J., Pichora-Fuller K., Gatehouse S., Stephens D., Arlinger S., Chisolm T., … von Wedel H. (2003). Candidature for and delivery of audiological services: Special needs of older people. International Journal of Audiology, 42, S92–S101.

- Knowles M. S. (1980). The modern practice of adult education. Chicago, IL: Follett.

- Marynewich S., Jenstad L. M., & Stapells D. R. (2012). Slow cortical potentials and amplification—Part I: N1-P2 measures. International Journal of Otolaryngology. doi:10.1155/2012/921513

- Ross B., Jamali S., & Tremblay K. L. (2013). Plasticity in neuromagnetic cortical responses suggests enhanced auditory object representation. BMC Neuroscience, 14, 151. doi:10.1186/1471-2202-14-151

- Ross B., & Tremblay K. (2009). Stimulus experience modifies auditory neuromagnetic responses in young and older listeners. Hearing Research, 248, 48–59.

- Schneider B. A., & Pichora-Fuller M. K. (2001). Age-related changes in temporal processing: Implications for listening comprehension. Seminars in Hearing, 22, 227–239.

- Tremblay K. L., Billings C. J., Friesen L. M., & Souza P. E. (2006). Neural representation of amplified speech sounds. Ear and Hearing, 27, 93–103.

- Tremblay K. L., & Burkard R. (2007). Aging and auditory evoked potentials. In Burkard R., Don M., & Eggermont J. (Eds.), Auditory evoked potentials: Scientific bases to clinical application (pp. 403–425). Philadelphia, PA: Lippincott Williams & Wilkins.

- Tremblay K. L., Inoue K., McClannahan K., & Ross B. (2010). Repeated stimulus exposure alters the way sound is encoded in the human brain. PLoS One, 5(4), e10283.

- Tremblay K. L., Kalstein L., Billings C. J., & Souza P. E. (2006). The neural representation of consonant–vowel transitions in adults who wear hearing aids. Trends in Amplification, 10, 155–162.

- Tremblay K. L., & Miller C. W. (2014). How neuroscience relates to hearing aid amplification. International Journal of Otolaryngology, 14, 1–7. Article No. 641652. doi:10.1155/2014/641652. Retrieved from http://www.hindawi.com/journals/ijoto/2014/641652/

- Tremblay K. L., Piskosz M., & Souza P. (2003). Effects of age and age-related hearing loss on the neural representation of speech cues. Clinical Neurophysiology, 114, 1332–1343.

- Tremblay K. L., Ross B., Inoue K., McClannahan K., & Collet G. (2014). Is the auditory evoked P2 response a biomarker of learning? Frontiers in Systems Neuroscience, 8, 28.

- Willott L., Chisolm T., & Lister J. (2001). Modulations of presbycusis: Current status and future directions. Audiology and Neuro-Otology, 6, 231–249.