Abstract

Purpose

This study compared the use of 2 different types of contextual cues (sentence based and situation based) in 2 different modalities (visual only and auditory only).

Method

Twenty young adults were tested with the Illustrated Sentence Test (Tye-Murray, Hale, Spehar, Myerson, & Sommers, 2014) and the Speech Perception in Noise Test (Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984; Kalikow, Stevens, & Elliott, 1977) in the 2 modalities. The Illustrated Sentence Test presents sentences with no context and sentences accompanied by picture-based situational context cues. The Speech Perception in Noise Test presents sentences with low sentence-based context and sentences with high sentence-based context.

Results

Participants benefited from both types of context and received more benefit when testing occurred in the visual-only modality than when it occurred in the auditory-only modality. Participants' use of sentence-based context did not correlate with use of situation-based context. Cue usage did not correlate between the 2 modalities.

Conclusions

The ability to use contextual cues appears to be dependent on the type of cue and the presentation modality of the target word(s). In a theoretical sense, the results suggest that models of word recognition and sentence processing should incorporate the influence of multiple sources of information and recognize that the 2 types of context have different influences on speech perception. In a clinical sense, the results suggest that aural rehabilitation programs might provide training to optimize use of both kinds of contextual cues.

During aural rehabilitation, patients with hearing loss are often taught to use specific strategies to rectify communication breakdowns and to promote conversational fluency. One strategy for repairing communication breakdowns is to ask the talker for contextual information, such as a context-rich keyword (e.g., “I missed that; what are you talking about?”). A strategy for promoting conversational fluency, and thereby preventing communication breakdowns from occurring, is to attend to situational cues and anticipate what a talker might say on the basis of the situation. For example, in the lobby of a movie theater, a patient might anticipate that a communication partner will make a remark about buying popcorn (see Tye-Murray, 2015, for an overview of communication strategies training and conversational fluency, repair strategies, and anticipatory strategies). The assumption is that contextual information will enhance a patient's ability to recognize the speech signal.

Context for enhancing speech recognition comes in many forms, including topical context (i.e., knowing the topic of a sentence), sentence-based context, and situational context (Boothroyd, Hanin, & Hnath, 1985; Goebel, Tye-Murray, & Spehar, 2012; Kalikow, Stevens, & Elliott, 1977). The goal of the current study was to examine how sentence-based and situation-based context enhance auditory-only and visual-only speech recognition in noise.

Sentence-based context can be derived from the content of a sentence, as it “imposes constraints on the set of alternative words that are available as responses at a particular location in a sentence, and … [means] that the intelligibility of words increases when the number of response alternatives decreases” (Kalikow et al., 1977, p. 1338). For example, in the sentence “I saw elephants at the zoo,” each word in the sentence provides further constraints for the final word. The phrase I saw could be followed by a seemingly infinite number of words, but when followed by the words elephants, at, and the, the word zoo becomes a very likely final word. The constraints also need not operate sequentially (e.g., knowing the word zoo would provide constraints for the word elephants). Sentence-based context provides two sources of information: semantic and syntactic. In this example, the word elephants and the fact that elephants can be seen at this location (e.g., an elephant would not likely be seen at a pool) provide semantic context for the final word, whereas the preposition and article at the provide syntactic context indicating that the final word is a noun. A test of sentence-based context is the Speech Perception in Noise Test (SPIN; Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984; Kalikow et al., 1977). The SPIN contains lists of high-predictability and low-predictability sentences. With these sentences, clinicians can examine an individual's use of sentence-based context by comparing how well the patient performs when the words in a sentence are highly predictive of the final word with how well the patient performs when the preceding content is minimally predictive.
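The shrinking set of response alternatives can be illustrated with a toy example. The six-word lexicon and its tags below are invented, and treating the chance of a correct guess as 1/N (N = remaining alternatives) is a deliberate simplification of the principle Kalikow et al. (1977) describe, not a model of how listeners actually use context.

```python
# Hypothetical mini-lexicon: the words and their tags are invented for illustration.
LEXICON = {
    "zoo": {"pos": "noun", "elephants_seen_here": True},
    "circus": {"pos": "noun", "elephants_seen_here": True},
    "pool": {"pos": "noun", "elephants_seen_here": False},
    "library": {"pos": "noun", "elephants_seen_here": False},
    "quickly": {"pos": "adverb", "elephants_seen_here": False},
    "yesterday": {"pos": "adverb", "elephants_seen_here": False},
}

def candidates(syntactic=False, semantic=False):
    """Return the final-word alternatives left after applying the chosen constraints."""
    words = []
    for word, tags in LEXICON.items():
        if syntactic and tags["pos"] != "noun":  # "at the ___" calls for a noun
            continue
        if semantic and not tags["elephants_seen_here"]:  # a place to see elephants
            continue
        words.append(word)
    return words

steps = [
    ("no constraints", False, False),
    ("syntactic only", True, False),
    ("syntactic + semantic", True, True),
]
for label, syn, sem in steps:
    words = candidates(syn, sem)
    print(f"{label}: {len(words)} alternatives, P(correct guess) = {1 / len(words):.2f}")
```

With both constraints applied, only zoo and circus remain, so a guess is correct half the time instead of one time in six.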

Situational context is derived from the surroundings during conversation (Goebel et al., 2012). For example, at a baseball game, a communication partner may assume that conversation will most likely revolve around topics concerning the stadium or recent plays on the field. This type of context was incorporated into the recently developed Illustrated Sentence Test (IST; Tye-Murray, Hale, Spehar, Myerson, & Sommers, 2014). In the IST, context is provided in the form of a context-rich picture containing relevant information about a target sentence and is then followed by presentation of the corresponding sentence (see Figure 1).

Figure 1.

Example of an Illustrated Sentence Test picture for the target sentence “The rain came through the open window.”

Many studies have shown that older and younger individuals benefit from sentence-based context provided via the auditory channel (Dubno, Ahlstrom, & Horwitz, 2000; Pichora-Fuller, 2008; Sommers & Danielson, 1999). For example, Sommers and Danielson (1999) studied the extent to which younger and older adults with normal hearing benefited from contextual information using the SPIN sentences. They calculated the percentage correct performance for final-word identification of the high-predictability and low-predictability sentences when presented at a difficult signal-to-noise ratio (SNR). An example of a high-predictability sentence is “Cut the bacon into strips.” An example of a low-predictability sentence is “Bob heard Tom called about the strips.” The authors found that both groups of participants (older and younger) received significant improvements from the addition of contextual information. Assessment of sentence-based context provided via the visual channel has proved problematic. One of the biggest pitfalls is a floor effect due to the difficulty of the lipreading task (Gagné, Seewald, & Stouffer, 1987). Gagné et al. (1987) reported that individuals who were tested with the SPIN sentences in a visual-only condition could not understand words well enough to perform above floor level, which limited the assessment of the use of sentence-based visual context.

Few, if any, studies have assessed the effects of situational context on speech recognition in an auditory-only condition, although some research has focused on its effects in a visual-only condition. Pelson and Prather (1974), Tye-Murray et al. (2014), and Goebel et al. (2012) all used a picture presented before the target sentence to provide situational context in a visual-only condition and found that participants performed above a floor level. Garstecki and O'Neill (1980) used contextual scenery projected behind a recorded talker, who spoke sentences that were presented in a visual-only condition.

As far as we know, no investigation has considered the use of the two types of context cues within the same group of participants or compared the use of context cues across presentation modalities. For this reason, we do not know whether individuals who can (or cannot) use one type of cue can similarly use the other type, and we do not know whether either type of contextual cue is equally effective in an auditory-only and a visual-only condition. An enhanced understanding of these issues could have both theoretical and clinical implications. In a theoretical sense, if we show that use of contextual cues is a general ability that manifests regardless of the type of cue or the modality of speech presentation, we might refine models of word recognition and sentence processing. In a clinical sense, the results might inform aural rehabilitation programs about how best to teach the use of repair strategies and anticipatory strategies.

The present study concerned the use of the two different types of contextual cues (sentence based and situation based) in two different target modalities (visual only and auditory only). The SPIN was used to measure how speech recognition improved in the presence of sentence-based context, whereas the IST was used to measure the effects of situation-based context. Both tests were administered to assess speech recognition in the visual-only and auditory-only modalities. Benefit was quantified as the difference between word recognition scores with and without context. We expected to find significant correlations between benefit for visual-only and auditory-only speech recognition when the type of context was held constant. This outcome would suggest that the use of context is not a modality-specific skill but rather a global skill used similarly regardless of the modality in which it is provided. We also examined whether the ability to benefit from context varies by the type of context when the modality is held constant, asking whether benefit scores from the IST and SPIN would covary or differ in the visual-only and auditory-only conditions. We hypothesized that these benefit scores would be significantly correlated, suggesting that the ability to use context is a global skill that does not depend on the type of context. Should this be true, we could suggest that individuals use contextual information similarly regardless of the type of context provided.

Method

Participants

Participants were 20 women aged 21 to 30 years (M = 23.73 years, SD = 1.86 years). All participants were screened for normal hearing, defined as pure-tone thresholds of 20 dB HL or better at octave frequencies from 250 through 8000 Hz. Screening was completed using a calibrated Madsen Aurical audiometer (Otometrics, Schaumburg, IL) and TDH-39p headphones (Telephonics, Farmingdale, NY). Participants were screened for corrected or uncorrected vision of 20/40 or better using a Snellen eye chart. Written consent was obtained from all participants. This study was approved by the Washington University School of Medicine Human Research Protection Office.

Stimuli

Situational Context

The IST (Tye-Murray et al., 2014), modeled after Pelson and Prather (1974), was used to assess the use of situational context. The IST provides the option of either presenting a corresponding context-rich illustration (context condition) or providing no illustration (no-context condition) before a test sentence is presented in the auditory-only or visual-only modality. In the context condition, a participant is presented with a picture that provides situational context for 1.5 s before seeing or hearing the target sentence. In the no-context condition, participants repeat what they see or hear without the benefit of an illustration. The sentences are spoken by a professional female actor with a general American dialect. The digital audio and video samples of the recordings were edited using Adobe Premiere Elements (Adobe, San Jose, CA) and adjusted to have equal root-mean-square amplitude using Adobe Audition. When the test is administered in a visual-only condition, the speaker's head and upper shoulders appear on the monitor screen and she speaks a sentence without sound. In an auditory-only condition, only her auditory signal is presented. The original test consists of 120 sentences comprising vocabulary used in the Bamford-Kowal-Bench Sentences (Bench, Kowal, & Bamford, 1979). In the present investigation, the IST stimuli were divided into four lists of 25 sentences (see Appendix) to accommodate the four test conditions: visual-only target with context, visual-only target with no context, auditory-only target with context, and auditory-only target with no context. All sentences were presented in six-talker babble at 62 dB SPL. Both the auditory-only and visual-only conditions were presented in noise so that any observed differences across conditions could not be attributed to informational masking from the babble (Helfer & Freyman, 2008) being present in one condition but not the other. In the auditory-only condition, sentences were presented at a −8 dB SNR (i.e., 54 dB SPL). On the basis of pilot testing, this SNR was expected to prevent both floor and ceiling effects.
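The level arithmetic above, and the equal-RMS normalization applied during editing, can be sketched in a few lines of Python. This is a minimal illustration assuming that levels are expressed in the same dB SPL reference and using toy sample values; the function names are hypothetical, and the code is not a reconstruction of the Adobe Audition processing actually used.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def scale_to_rms(samples, target_rms):
    """Rescale samples so that their RMS equals target_rms (equal-RMS normalization)."""
    gain = target_rms / rms(samples)
    return [x * gain for x in samples]

def snr_db(signal, noise):
    """Signal-to-noise ratio in dB computed from the RMS amplitudes of two waveforms."""
    return 20 * math.log10(rms(signal) / rms(noise))

def speech_level_db_spl(babble_db_spl, target_snr_db):
    """Speech presentation level that yields target_snr_db against the babble."""
    return babble_db_spl + target_snr_db

# Babble fixed at 62 dB SPL with a -8 dB SNR puts the sentences at 54 dB SPL.
print(speech_level_db_spl(62, -8))

# Toy waveforms (assumed values): scale "speech" to 10**(-8/20) times the noise RMS.
noise = [0.5, -0.5, 0.5, -0.5]
speech = scale_to_rms([1.0, -2.0, 3.0, -4.0], rms(noise) * 10 ** (-8 / 20))
print(round(snr_db(speech, noise), 6))
```

Because SNR in dB is a difference of levels, holding the babble at 62 dB SPL and lowering the speech to 54 dB SPL is the same as specifying a −8 dB SNR.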

Sentence-Based Context

The SPIN sentences were used to assess the use of sentence-based context. Audiovisual recordings of the SPIN sentences were created in order to have test stimuli that could be presented in either an auditory-only or a visual-only condition. The SPIN consists of 100 sentences (50 low predictability, 50 high predictability). The 50 high-predictability and 50 low-predictability sentences were each divided in half to create four lists of 25 sentences, and the first sentence of each list was used for practice. The result was two lists (24 sentences each) of high-predictability (context) sentences and two lists (24 sentences each) of low-predictability (no-context) sentences. The speaker was a female actor with a general American dialect. So that results from the SPIN stimuli could be compared with those obtained from the IST, the recordings were edited to reflect the four conditions described previously (visual-only target with context, visual-only target with no context, auditory-only target with context, and auditory-only target with no context).
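The list construction described above can be sketched as follows. The placeholder IDs (H01 to H50, L01 to L50) and the first-half/second-half split are assumptions for illustration; the text does not state how sentences were assigned to each half.

```python
def build_spin_lists(high, low):
    """Split each predictability set in half, reserving the first item of each
    resulting list for practice (hypothetical first-half/second-half split)."""
    lists = {}
    for label, items in (("high", high), ("low", low)):
        half = len(items) // 2
        for i, chunk in enumerate((items[:half], items[half:]), start=1):
            lists[f"{label}-{i}"] = {"practice": chunk[0], "test": chunk[1:]}
    return lists

# Placeholder IDs stand in for the actual SPIN sentences.
high = [f"H{n:02d}" for n in range(1, 51)]
low = [f"L{n:02d}" for n in range(1, 51)]

for name, lst in build_spin_lists(high, low).items():
    print(name, "practice:", lst["practice"], "test items:", len(lst["test"]))
```

Each of the four lists ends up with one practice sentence and 24 test sentences, matching the counts given above.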

To ensure reception of the sentence-based context in the SPIN sentences, contextual information (i.e., the entire sentence except the final word) was always delivered auditorily at a conversational level of 60 dB SPL. Recall that Gagné et al. (1987) showed that context was not effectively conveyed in a visual-only condition, which is why we did not attempt to deliver sentence-based context using the visual-only speech signal. To avoid having a face appear midsentence, the auditory signal of a sentence was coupled with a frozen visual image of the talker's head and shoulders. This image was taken from just before the onset of the final word in each sentence. In the auditory-only condition, six-talker babble was introduced at the onset of the final word at an SNR of −4 dB, a level that pilot testing determined would minimize ceiling and floor effects. In the visual-only condition, the audio from the target speaker ceased just prior to the final word and the frozen face became animated to speak the final word. The method for presenting context in the SPIN was the same whether the target word was in the visual-only or auditory-only condition. This consistent delivery of contextual information allowed a more direct comparison of the two types of context when the modality for perceiving the target was the same. Thus, in the IST, context was always provided by the picture, whether the target was lipread or heard. Likewise, in the SPIN, context was always provided in the auditory channel, whether the target word was lipread or heard.

Procedure

Participants were tested individually in a sound-treated booth. Following consent and screening, four lists (one list for each test condition, described previously) from both the IST and SPIN were administered. Test lists were counterbalanced to minimize list effects, and test order was counterbalanced to avoid learning effects. Participants sat in front of an Elo (Milpitas, CA) 17-in. touch-screen monitor, and verbal instructions were given before each test condition. All auditory stimuli were presented through loudspeakers positioned at ±45° azimuth from the participant.
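One common way to counterbalance four conditions is a cyclic Latin square, in which each participant's order is the previous participant's order rotated by one position. The text does not specify the scheme that was used, so the sketch below is an assumed design; the condition labels are abbreviated (VO = visual only, AO = auditory only).

```python
CONDITIONS = ["VO context", "VO no context", "AO context", "AO no context"]

def latin_square_order(participant_index, items):
    """Cyclic Latin square: rotate the sequence by one position per participant,
    so that across each block of len(items) participants every item occupies
    every serial position exactly once."""
    k = participant_index % len(items)
    return items[k:] + items[:k]

for p in range(4):
    print(p + 1, latin_square_order(p, CONDITIONS))
```

A cyclic square balances serial position but not which condition immediately follows which; a balanced (Williams) Latin square would also control first-order carryover if that were a concern.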

For the IST, eight practice sentences were given at the beginning of testing. Participants were instructed to listen to or lipread the entire target sentence and repeat it verbally to the examiner. If participants could not hear or lipread the entire sentence, they were instructed to repeat any words or phrases they understood. Guessing was encouraged. Scores were calculated as the percentage of keywords correct in each condition (excluding the articles a, an, and the).
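The keyword scoring rule can be made concrete with a small sketch. It assumes that every non-article word counts as a keyword, that a keyword is credited when the same word appears anywhere in the response (order ignored, each response word credited once), and that a condition score pools keywords across sentences. The text does not specify these details, so the helpers below are hypothetical.

```python
import re

ARTICLES = {"a", "an", "the"}

def keywords(sentence):
    """Lowercased words of a sentence, excluding the articles a, an, and the."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return [w for w in words if w not in ARTICLES]

def keyword_hits(target, response):
    """Return (keywords repeated correctly, total keywords) for one sentence."""
    targets = keywords(target)
    remaining = keywords(response)
    hits = 0
    for word in targets:
        if word in remaining:
            remaining.remove(word)  # credit each response word at most once
            hits += 1
    return hits, len(targets)

def condition_score(trials):
    """Percentage of keywords correct, pooled over (target, response) pairs."""
    hits = total = 0
    for target, response in trials:
        h, n = keyword_hits(target, response)
        hits += h
        total += n
    return 100 * hits / total

trials = [
    ("The rain came through the open window.", "the rain came through the window"),
    ("The girl ate the chocolate.", "the girl ate chocolate"),
]
print(condition_score(trials))
```

Here 7 of the 8 keywords are repeated (open is missed), giving 87.5% for this two-sentence example.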

For the SPIN sentences, one practice sentence was administered prior to each list and repeated until the participant felt comfortable with the task. This practice was intended to reduce any reaction to the presumably unfamiliar condition in which speech quickly transitions from an auditory-only plus frozen face presentation to a visual-only plus babble noise presentation. Participants were instructed to repeat the last word of the sentence that they listened to and/or lipread. Guessing was encouraged.

Results

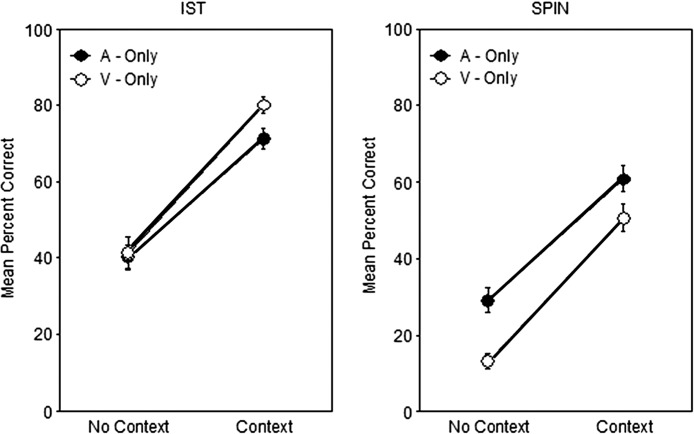

Results from all four conditions are shown in Figure 2 for both the IST and the SPIN. For the IST in a visual-only condition, participants scored 80.1% words correct on average (SD = 9.7, range = 53.7–92.1) in the context condition and 41.3% correct (SD = 18.7, range = 12.3–80.7) in the no-context condition. In an auditory-only condition, participants averaged 71.3% words correct (SD = 11.8, range = 45.7–88.6) in the context condition and 40.4% correct (SD = 13.0, range = 11.8–73.9) in the no-context condition.

Figure 2.

Average percentage correct scores for the Illustrated Sentence Test (IST; left) and the Speech Perception in Noise Test (SPIN; right). Error bars indicate standard error.

For the SPIN in a visual-only condition, participants scored 50.6% words correct on average (SD = 16.3, range = 25.0–87.5) in the context condition and 13.1% words correct (SD = 7.8, range = 0.0–33.3) in the no-context condition. In an auditory-only condition, participants averaged 60.8% words correct (SD = 14.9, range = 37.5–83.3) in the context condition and 29.2% correct (SD = 14.9, range = 8.3–58.3) in the no-context condition.

Participants benefited from both types of context in both test modalities. In a visual-only condition, participants improved an average of 38.8 percentage points on the IST between the no-context and context conditions; in an auditory-only condition, they improved 30.9 percentage points. On the SPIN, participants improved an average of 37.5 percentage points in the visual-only condition and 31.7 percentage points in the auditory-only condition when context was provided.
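Because each participant contributed a score in both the context and no-context conditions, the mean of the per-participant differences equals the difference between the condition means. The sketch below recomputes the benefit scores from the group means reported above. Those means are themselves rounded to one decimal, so a recomputed value can differ from the reported benefit in the last digit (31.6 here versus the reported 31.7 for the auditory-only SPIN).

```python
# Group means (% words correct: context, no context) as reported in the Results.
means = {
    ("IST", "visual-only"): (80.1, 41.3),
    ("IST", "auditory-only"): (71.3, 40.4),
    ("SPIN", "visual-only"): (50.6, 13.1),
    ("SPIN", "auditory-only"): (60.8, 29.2),
}

def benefit(context, no_context):
    """Benefit from context in percentage points: context minus no-context score."""
    return round(context - no_context, 1)

for (test, modality), (ctx, no_ctx) in means.items():
    print(f"{test:4} {modality:13} {benefit(ctx, no_ctx):5.1f}")
```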

Potential differences in the amount of benefit from context among the conditions were examined using a three-way repeated measures analysis of variance (ANOVA). Three repeated-measures factors were entered into the ANOVA: condition (context or no context), modality (auditory-only target or visual-only target), and context type (situation based or sentence based). As described previously, performance was better when context was provided, F(1, 19) = 946.5, p < .05, ηp² = .980. Results also indicated that overall performance in the IST was higher than performance in the SPIN, F(1, 19) = 135.0, p < .05, ηp² = .877, and that performance in the auditory-only and visual-only conditions was not significantly different, F(1, 19) = 3.2, p = .091, ηp² = .143.

There were two potential two-way interactions of interest to the current study. The first was whether the amount of benefit from context depended on the type of context (sentence based or situation based). This interaction was not significant, F(1, 19) < 1.0, p = .931, ηp² < .001, suggesting that, regardless of the modality, the amount of benefit from context was similar for the SPIN and IST materials. The second was whether the amount of benefit from context depended on the modality of the speech (visual only or auditory only). The amount of benefit from context differed between the visual-only and auditory-only conditions, F(1, 19) = 5.6, p < .05, ηp² = .228. Post hoc testing, corrected for multiple comparisons, indicated that the benefit from the addition of context was larger in the visual-only modality, t(38) = 2.3, p < .05. There was no three-way interaction among modality, context type, and presence of context, F(1, 19) = 0.116, p = .738, ηp² = .006. The lack of a three-way interaction indicated that the larger benefit in the visual-only modality did not depend on which test (and thus which type of context) was used.
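The reported effect sizes can be cross-checked against the F ratios, because for a single effect in an ANOVA, partial eta squared equals F·df_effect / (F·df_effect + df_error). The helper below is a minimal sketch of that identity applied to the three main effects reported above, each with 1 and 19 degrees of freedom; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

main_effects = [("context", 946.5), ("test (IST vs SPIN)", 135.0), ("modality", 3.2)]
for label, f in main_effects:
    print(f"{label:20} {partial_eta_squared(f, 1, 19):.3f}")
```

The modality effect comes out at .144 rather than the reported .143, a last-digit difference to be expected because F is reported rounded to 3.2.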

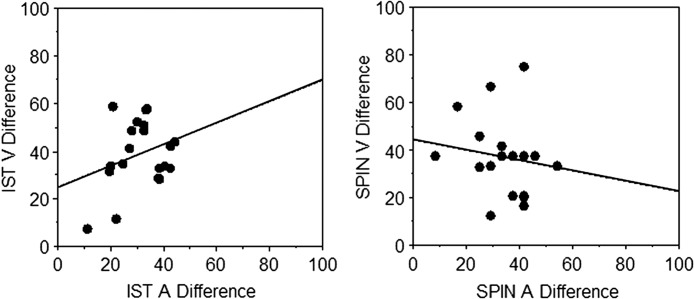

Correlational analyses were performed to determine whether participants received similar benefit from context, regardless of whether the context was presented during a visual-only or auditory-only test condition. As shown in the left panel of Figure 3, in the IST the ability to benefit from situational context in an auditory-only condition did not predict the ability to benefit from situational context in a visual-only condition, r(18) = .290, p = .22. Likewise, in the SPIN the ability to benefit from sentence context in an auditory-only condition did not predict the ability to benefit from sentence context in a visual-only condition, r(18) = −.183, p = .45 (right panel of Figure 3).

Figure 3.

Scatter plots with regression lines comparing the amount of benefit provided by context in the auditory-only modality (A difference) with the amount provided by the visual-only modality (V difference) for the Illustrated Sentence Test (IST; left) and the Speech Perception in Noise Test (SPIN; right). Neither the correlation for the IST nor the correlation for the SPIN was significant (rs = .290 and −.183, respectively; ps > .05).
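The correlational analyses rest on Pearson's r and its t test with n − 2 degrees of freedom (18 here, for 20 participants). The sketch below implements both in plain Python; the function names are illustrative. A two-tailed p value would then be obtained from the t distribution with n − 2 degrees of freedom, for example with SciPy's scipy.stats.pearsonr, which returns r and p together.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_for_r(r, n):
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))

# The IST correlation reported above: r(18) = .290 with n = 20 participants.
print(round(t_for_r(0.290, 20), 3))
```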

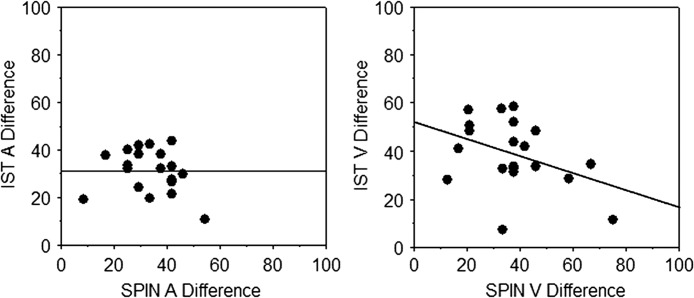

To determine whether the ability to use context in a single modality would generalize across types of contextual cues, we correlated benefit from situation-based context with benefit from sentence-based context within each modality. As shown in the left panel of Figure 4, within the visual-only condition the ability to benefit from sentence-based context did not predict the ability to benefit from situation-based context or vice versa, r(18) = .002, p = .99. As shown in the right panel of Figure 4, within the auditory-only condition the ability to use situation-based context while listening likewise did not predict the ability to use sentence-based context, r(18) = −.401, p = .08.

Figure 4.

Scatter plots with regression lines comparing the amount of benefit provided by context in the auditory-only (A difference) modality across the two types of contexts (left) and the visual modality (V difference; right). Neither the correlation between the IST and the SPIN for the auditory-only nor visual-only tests was significant (rs = .002 and −.401, respectively; ps > .05).

Discussion

In this investigation, participants benefited from both situation-based and sentence-based context cues when asked to recognize spoken sentences. Although they received benefit in both visual-only and auditory-only test conditions, participants on average received significantly more benefit in the visual-only than in the auditory-only condition. Notably, there did not appear to be any relation between one's ability to benefit from situation-based cues and one's ability to benefit from sentence-based cues. Benefit on the IST was not correlated with benefit on the SPIN, even though sentence-based cues could also have been used while responding to the IST sentences. Moreover, participants who benefited more from one type of cue in a visual-only condition did not necessarily benefit more from the same type of cue in an auditory-only condition: for neither the IST nor the SPIN was benefit correlated across the visual-only and auditory-only conditions. Last, participants varied greatly in their ability to benefit from either type of contextual cue in either test modality.

Recognizing sentences in a visual-only condition is notoriously difficult (e.g., Sommers, Spehar, & Tye-Murray, 2005), so at first blush it is tempting to attribute the greater benefit of contextual cues for visual-only than for auditory-only performance to task difficulty. However, performance in the no-context condition was comparable for both test modalities and for both tests, so there was equal room for improvement regardless of whether the tests were presented in a visual-only or an auditory-only test condition. One possibility is that because visual-only speech recognition is challenging whereas auditory-only speech recognition is relatively easy in the absence of hearing loss, contextual cues typically have a greater facilitating effect on word recognition during everyday activities when individuals attempt to recognize words through vision rather than through hearing. The present results may reflect this enhanced priming effect, developed through everyday experience.

The present results suggest that there is not a generalized ability to benefit from context during speech recognition because there was no correlation between ability to benefit from context for the IST and the SPIN and no correlation between ability to benefit from context in a visual-only and an auditory-only test condition. On the other hand, there was large variability in the amount of benefit that participants received, which suggests that some people are better at using context cues than are others.

The results have implications for models of word recognition and sentence processing. Even though it is well established that the semantic and syntactic structure of an utterance can aid speech perception (e.g., Kalikow et al., 1977; Miller & Selfridge, 1950), some models of word recognition rely on phonetic-level competition or frequency of occurrence to account for patterns of word recognition. For example, the acoustic lexical neighborhood model (Luce, 1986; Luce & Pisoni, 1998) suggests that words that have a higher frequency of occurrence (i.e., how often a particular word occurs during everyday language use) are more likely to be recognized and that words that have similar acoustic–phonetic qualities belong to a common lexical neighborhood. As such, all other things being equal, when a word is misheard it is more likely to have a low frequency of occurrence than a high frequency of occurrence and is more likely to be mistaken for a word within its own neighborhood (e.g., hat may be misheard as sat). Indeed, in sentence tests, children with normal hearing recognize sentences comprising lexically easy words more accurately than sentences comprising lexically difficult words (Conway, Deocampo, Walk, Anaya, & Pisoni, 2014).
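The neighborhood idea can be sketched with a common operationalization from this literature: a word's neighbors are the words that differ from it by a single phoneme substitution, addition, or deletion. For simplicity, the sketch below treats letters as stand-ins for phonemes, which is an assumption (spelling and pronunciation often diverge), and the tiny lexicon is invented.

```python
def one_edit_neighbors(word, lexicon):
    """Words in lexicon that differ from word by exactly one substitution,
    addition, or deletion of a segment (letters stand in for phonemes here)."""
    def one_edit(a, b):
        if a == b:
            return False
        if len(a) == len(b):  # single substitution
            return sum(x != y for x, y in zip(a, b)) == 1
        if abs(len(a) - len(b)) != 1:
            return False
        shorter, longer = sorted((a, b), key=len)
        # single addition/deletion: dropping one segment of the longer word
        return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))
    return [w for w in lexicon if one_edit(word, w)]

lexicon = ["sat", "cat", "hit", "hot", "at", "hats", "hand", "dog"]
print(one_edit_neighbors("hat", lexicon))
```

In this toy lexicon, hat has six neighbors (including sat, the example above), whereas hand and dog fall outside its neighborhood.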

In addition to lexical competition, however, psycholinguistic studies have yielded findings about sentence processing that suggest the recognition of individual words triggers syntactic and semantic information that is helpful to sentence processing (e.g., Boland & Cutler, 1996; Kim, Srinivas, & Trueswell, 2002). Situational cues provided by still photographs may also trigger syntactic and semantic information (January, Trueswell, & Thompson-Schill, 2009). Such findings suggest that speech perception entails far more than identifying a word and retrieving its core meaning. Likewise, the present results indicate that models of word recognition and sentence processing must incorporate the influence of multiple sources of information, including sentence-based and situation-based context, because both types of context led participants to better recognize target words in the test sentences. Moreover, because the ability to use one kind of context did not predict an ability to use the other kind, it appears that our models should not lump them together as if they have equivalent influences on speech perception. Whereas sentence-based context provides immediate, linguistic cues, situation-based cues are nonlinguistic and may be more open to interpretation.

It may be that participants varied so widely in their responses to context because of varying experience. For example, in the IST, an illustration of a family at a kitchen table (see Tye-Murray et al., 2014) might have cued words related to breakfast and morning activities (e.g., cereal, orange juice, school bus) in one participant and words related to dinner and evening activities (e.g., steak knife, salad dressing, bedtime) in another. Although less susceptible to interpretative effects, responses to sentences in the SPIN might also be affected by experience. For example, in the sentence “I saw elephants at the ______,” a participant who has been to the circus on many occasions might have been more likely to mishear the word zoo as the word circus than a participant who has never been to a circus.

Overall, the results indicate that the ability to use different types of context may not be a global skill. Instead, individuals may have differing abilities to use different types of context while using the same modality. The implication for aural rehabilitation is that training for using one type of context may not generalize to other types. This suggests that aural rehabilitation programs might incorporate multiple types of context and help patients learn how to use them in difficult communication situations. For example, focusing specifically on situational context, a clinician might think of ways to help the patient take advantage of context in a situation that the patient has identified as particularly troublesome. The patient might become familiar with the people, situational cues, or likely topics at a particular event before attending. The Client Oriented Scale of Improvement (Dillon, James, & Ginis, 1997) could be useful in identifying these situations. Further, explicit acknowledgment by the patient of information afforded by situational context as it occurs during conversation could reinforce the link between using context and improved speech recognition. Beyond considering multiple types of context, training might be modality specific so that patients can more effectively improve their use of context in both the auditory and visual channels. Finally, assessment tools such as those used here could help clinicians tailor contextual training to individual patient needs.

Acknowledgments

Support was provided by National Institutes of Health Grant AG018029. This research was completed as part of Stacey Goebel's capstone project at Washington University School of Medicine.

Appendix

IST Stimuli Divided Into Four Lists

Practice List

The family ate dinner at the table.

The baby sat in the drawer.

The rain came through the open window.

The girl ate the chocolate.

The mother washed the towels.

The water dripped into the bucket.

The children watched the chickens.

The mailman helped the lady.

List A

The doll was on the shelf.

The curtains fell off the window.

The boy's hand hurt.

The father dropped the knife.

The man cut the letter with scissors.

The dog chased the mailman.

The cat hid in the closet.

The children got into the car.

The farmer looked at the animals.

The strawberries were in the bowl.

The dog's dish was clean.

The blue chair broke.

The policeman was riding a bicycle.

The child was cleaning his room.

The baby was asleep on the bed.

The woman dropped her lunch.

The bus stopped at the house.

The mother was buying some paint.

The man closed the gate.

Tomatoes grow in the sun.

The father poured milk in the bottles.

Water helped the potatoes grow.

The chicken sat on her eggs.

He painted the house green.

She wore the hat and scarf to keep warm.

List B

The fence needed to be painted.

He brought his friends some drinks.

The child put water in the dog's bowl.

She was reading a book.

The garden had tomatoes.

The girl brushed the cat.

Yellow flowers grew in the garden.

The boy cut the long grass.

Mother put the picture in her purse.

The ice cream truck came down the road.

The mouse ran up the curtains.

She shopped for shoes.

The farmer picked up the eggs.

The family ate chocolate pudding.

The grass in the park was very green.

The flowers in the park were pretty.

The boy broke the mirror.

He needed a spoon for his ice cream.

She put the fork by the plate.

Mother washed the windows.

Gloves keep her hands warm.

The pond had lots of fish.

Cats like to sit in the sun.

He wore his raincoat in the rain.

Mother brushed the girl's hair.

List C

The cat chased the mouse out the door.

Father put the fruit in the bowl.

The policeman knocked on the door.

The dog ran up the stairs.

The clowns had orange and pink hair.

The boy made a basket in the game.

The children rode the bus to school.

He carried the boxes up the stairs.

She carried the boxes into the house.

The baby sucked her thumb.

Mother heard the boy shouting.

The farmer grew tomatoes.

The tomatoes fell on the rug.

The girl had two black cats.

The boy kicked the tomatoes.

People watched the dog running.

Mother poured cream into the pitcher.

The glass in the window broke.

Ice cream dripped from the bowl.

She showed the mailman her flowers.

The farmer chased the cow.

The children rode their bicycles to school.

She ate strawberries with lunch.

The snow made his hands cold.

He opened the door for the dog.

List D

He put a towel under the dripping bucket.

The ice cream was chocolate and strawberry.

They found a hole in the roof.

The children were crossing the street.

She put the potatoes into the pot.

Her friend gave her an orange hat.

The orange cat sat in the sun.

She put the dishes in boxes.

The boy opened the door for his mother.

The puppy jumped on the old shoe.

The dog heard the boy calling.

The children sat on the fence.

The woman put her hat on the bed.

She waited for her friend on the bench.

He put his shirt in the drawer.

She hung her shirt in the closet.

The child was jumping on the bed.

Father caught a fish in the pond.

Mother fed the baby a bottle.

The men were eating tomatoes and cheese.

She put eggs and bread on the plate.

The child set the plate on the table.

Father started the fire with a match.

He took a picture of the fire.

The boy threw his boot at the fire.

References

- Bench J., Kowal Å., & Bamford J. (1979). The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. British Journal of Audiology, 13, 108–112.

- Bilger R. C., Nuetzel J. M., Rabinowitz W. M., & Rzeczkowski C. (1984). Standardization of a test of speech perception in noise. Journal of Speech and Hearing Research, 27, 32–48.

- Boland J. E., & Cutler A. (1996). Interaction with autonomy: Multiple output models and the inadequacy of the Great Divide. Cognition, 58, 309–320.

- Boothroyd A., Hanin L., & Hnath T. (1985). A sentence test of speech perception, reliability, set equivalence and short-term learning (Speech and Hearing Science Report No. RC10). New York, NY: City University.

- Conway C. M., Deocampo J. A., Walk A. M., Anaya E. M., & Pisoni D. B. (2014). Deaf children with cochlear implants do not appear to use sentence context to help recognize spoken words. Journal of Speech, Language, and Hearing Research, 57, 2174–2190.

- Dillon H., James A., & Ginis J. (1997). Client Oriented Scale of Improvement (COSI) and its relationship to several other measures of benefit and satisfaction provided by hearing aids. Journal of the American Academy of Audiology, 8, 27–43.

- Dubno J. R., Ahlstrom J. B., & Horwitz A. R. (2000). Use of context by young and aged adults with normal hearing. The Journal of the Acoustical Society of America, 107, 538–546.

- Gagné J. P., Seewald R., & Stouffer J. L. (1987). List equivalency of SPIN forms in assessing speechreading abilities. Paper presented at the Annual Convention of the American Speech-Language-Hearing Association, New Orleans, LA.

- Garstecki D. C., & O'Neill J. J. (1980). Situational cue and strategy influence on speechreading. Scandinavian Audiology, 9, 147–151.

- Goebel S., Tye-Murray N., & Spehar B. (2012, September 10). A lipreading test that assesses use of context: Implications for aural rehabilitation. Academy of Rehabilitative Audiology Institute. Lecture conducted from Providence, RI.

- Helfer K. S., & Freyman R. L. (2008). Aging and speech-on-speech masking. Ear and Hearing, 29, 87–98.

- January D., Trueswell J. C., & Thompson-Schill S. L. (2009). Co-localization of Stroop and syntactic ambiguity resolution in Broca's area: Implications for the neural basis of sentence processing. Journal of Cognitive Neuroscience, 21, 2434–2444.

- Kalikow D. N., Stevens K. N., & Elliott L. L. (1977). Development of a test of speech intelligibility in noise using sentence materials with controlled word predictability. The Journal of the Acoustical Society of America, 61, 1337–1351.

- Kim A. E., Srinivas B., & Trueswell J. C. (2002). A computational model of the grammatical aspects of word recognition as supertagging. In Merlo P., & Stevenson S. (Eds.), The lexical basis of sentence processing: Formal, computational and experimental issues (pp. 109–135). Amsterdam, The Netherlands: John Benjamins.

- Luce P. A. (1986). A computational analysis of uniqueness points in auditory word recognition. Perception & Psychophysics, 39, 155–158.

- Luce P. A., & Pisoni D. B. (1998). Recognizing spoken words: The neighborhood activation model. Ear and Hearing, 19, 1–36.

- Miller G. A., & Selfridge J. A. (1950). Verbal context and the recall of meaningful material. The American Journal of Psychology, 63, 176–185.

- Pelson R. O., & Prather W. F. (1974). Effects of visual message-related cues, age, and hearing impairment on speech reading performance. Journal of Speech and Hearing Research, 17, 518–525.

- Pichora-Fuller K. (2008). Use of supportive context by younger and older adult listeners. International Journal of Audiology, 47, 72–82.

- Sommers M. S., & Danielson S. M. (1999). Inhibitory processes and spoken word recognition in young and older adults: The interaction of lexical competition and semantic context. Psychology and Aging, 14, 458–472.

- Sommers M. S., Spehar B., & Tye-Murray N. (2005). The effects of signal-to-noise ratio on auditory-visual integration: Integration and encoding are not independent [Abstract]. The Journal of the Acoustical Society of America, 117, 2574.

- Tye-Murray N. (2015). Foundations of aural rehabilitation: Children, adults, and their family members. Clifton, NY: Delmar Cengage Learning.

- Tye-Murray N., Hale S., Spehar B., Myerson J., & Sommers M. (2014). Lipreading in school-age children: The roles of age, hearing status, and cognitive ability. Journal of Speech, Language, and Hearing Research, 57, 556–565.