Abstract

Sea-based infrared search and track (IRST) is important for homeland security by detecting missiles and asymmetric boats. This paper proposes a novel scheme to interpret various infrared scenes by classifying the infrared background types and detecting the coastal regions in omni-directional images. The background type or region-selective small infrared target detector should be deployed to maximize the detection rate and to minimize the number of false alarms. A spatial filter-based small target detector is suitable for identifying stationary incoming targets in remote sea areas with sky only. Many false detections can occur if there is an image sector containing a coastal region, due to ground clutter and the difficulty in finding true targets using the same spatial filter-based detector. A temporal filter-based detector was used to handle these problems. Therefore, the scene type and coastal region information is critical to the success of IRST in real-world applications. In this paper, the infrared scene type was determined using the relationships between the sensor line-of-sight (LOS) and a horizontal line in an image. The proposed coastal region detector can be activated if the background type of the probing sector is determined to be a coastal region. Coastal regions can be detected by fusing the region map and curve map. The experimental results on real infrared images highlight the feasibility of the proposed sea-based scene interpretation. In addition, the effects of the proposed scheme were analyzed further by applying region-adaptive small target detection.

Keywords: infrared scene interpretation, background type, coastal region detection, line-of-sight, horizontal line, region-dependent detector

1. Introduction

Infrared search and track (IRST) is an important technology for protecting the homeland from sea skimming missiles, multiple rocket launchers (MRLs), coastal guns and asymmetric boats [1]. Although the real detection range of sea-based IRST is approximately 30 km (assuming a target height of 100 m and a sensor height of 30 m), IRST is adopted frequently in ships to complement radar because it can provide a high precision target location [2]. The main purpose of IRST is to achieve a high detection rate with a low false alarm rate. Several spatial filter-based [3,4,5] or temporal filter-based [6,7,8,9] small infrared target detection methods have been proposed. Spatial filter-based methods use a background estimation and subtraction approach. The background can be obtained using the least mean square filter [3], median filter [10], mean filter [4] or morphological filter [5]. Temporal filter-based methods use motion information to extract the targets from the background. The motion can be extracted using track-before-detect (TBD) [6], dynamic programming [11] and temporal profiles [7,9,12].

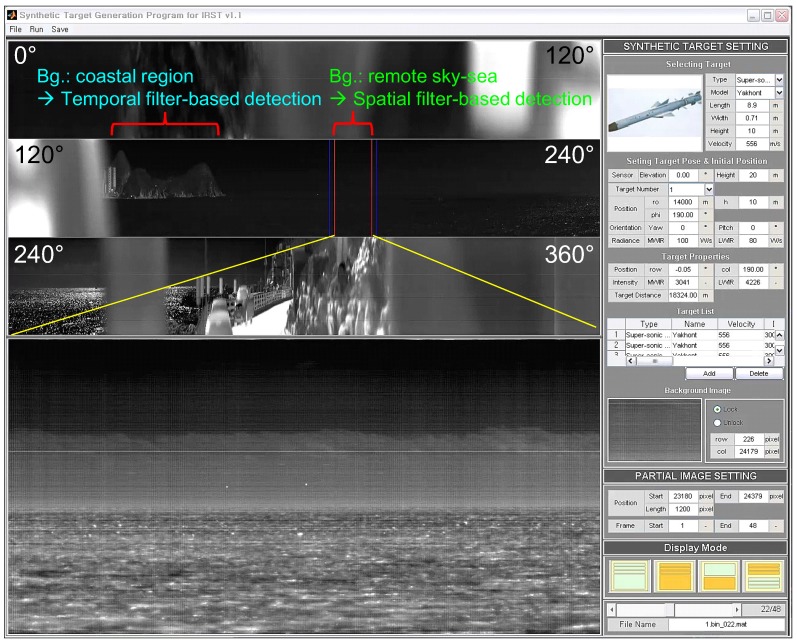

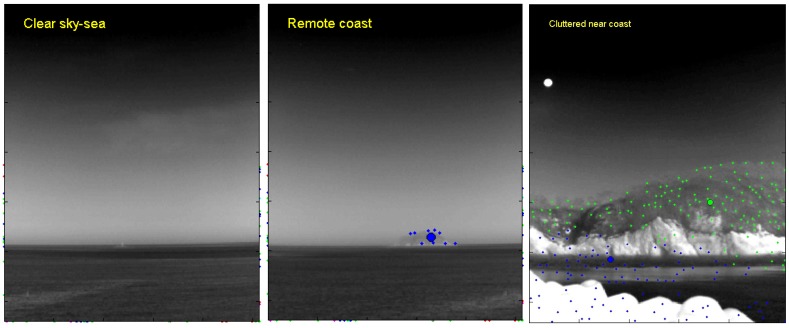

These small target detection approaches show good performance in their specific application domains. On the other hand, most of these small target detection algorithms use infrared images with a narrow field of view (NFOV) for specific backgrounds. According to the operational concept of the IRST system, omni-directional infrared images should be acquired and used for surveillance with both long-range capability and coastal environments [13]. In this paper, omni-directional infrared imaging systems refer to a field of view (FOV), not fish eye or wide FOV cameras. A parabolic mirror system in front of an infrared camera can provide FOV with reduced image resolution, which is not suitable for a long-range surveillance system [14]. Although omni-directional infrared imaging systems, such as Veille Air-Mer Panoramique Infrarouge (VAMPIR) [15] and Advanced Reliable Third Generation Naval IRST (ARTEMIS) [16,17], have been developed and deployed in several countries, there are almost no reports related to small target detection for omni-directional IRST systems. Only Weihua et al. have proposed a simple temporal difference-based detection method for aerial moving targets [18]. Figure 1 presents an omni-directional image, where the three rows represent a wide field of view ( each), and the fourth image shows an enlarged image for the specific sector. The panoramic image contains both sky-sea background and coastal regions.

Figure 1.

Motivation for the background classification and coastal region detection for sea-based infrared search and track: different types of small infrared target detection methods should be applied depending on the background to maximize the detection rate and to minimize the false alarm rate.

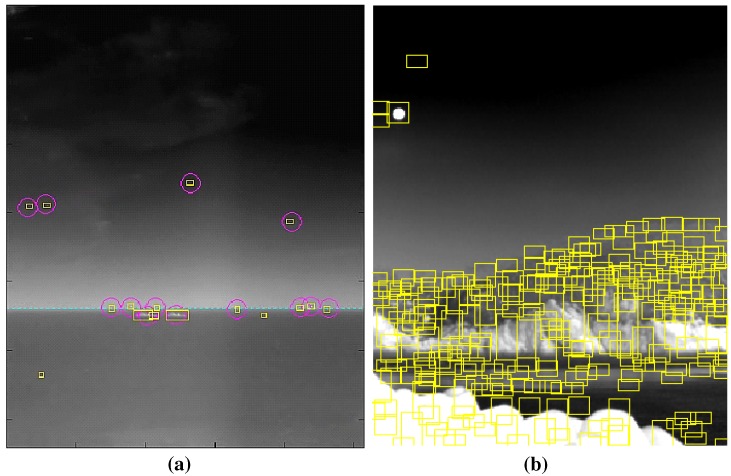

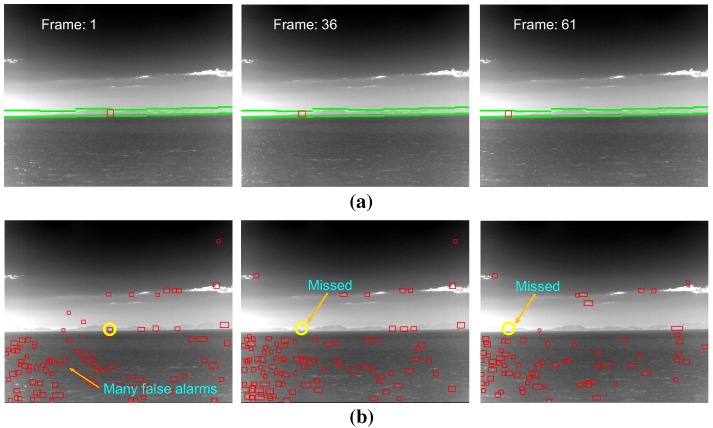

Different types of small infrared target detection methods are needed to satisfy both the detection rate and false alarm rate. The performance of the detection rate (DR) and false alarm rate (FAR) should be compared on the same public database. On the other hand, there is no public infrared database for small target detection problems. According to the authors’ experience, long-range targets normally exist around a horizontal line and are almost stationary with a very low signal-to-noise ratio. As shown in Figure 2a, spatial filter-based background estimation and subtraction methods can detect remote targets quite well [5,19,20,21]. If, however, the same method is applied to coastal regions, many false detections are produced due to ground clutter, as shown in Figure 2b. Normally, coastal regions are relatively close to a ship. This means that the target movement can be detected easily in an infrared image using temporal filters. Therefore, the detection of coastal regions is very important to the success of IRST operation in a real-world scenario by scene-dependent target detection.

Figure 2.

Comparison of spatial filter-based infrared target detection for different types of background: (a) sky-sea; (b) coast region. The yellow squares represent the detected target regions.

Although many studies have examined small infrared target detection, there are no reports related to infrared coastal region detection, except for Kim et al.’s initial work [22]. The method used a region segmentation and sky-coast line detection scheme. Kim et al. attempted to find the borderlines between the sky and coast regions using region contrast and statistical properties. In this study, the method was extended to an infrared scene interpretation by incorporating a background type classification with coastal region detection. Therefore, the contributions of this paper can be summarized as follows. First, a novel sea-based infrared scene interpretation method is proposed for the first time by cascading the background type classification and coastal region detection. Second, a novel sea-based infrared scene classification method is proposed using the relationships between the geometric horizon and the image horizon. Third, a new coastal region extraction method is proposed by the horizon-guided image fusion of the region map and the curve map. Fourth, the infrared scene interpretation method was validated using real infrared images. Finally, the effects of the infrared scene interpretation were found by applying a scene-dependent small target detection strategy for the synthetic database and real target sequence. Section 2 introduces the overall structure of the proposed infrared scene interpretation method, where the scene type classification method is explained. In addition, a precise coastal region detection method is presented for the coastal background type. In Section 3, the experimental results on real scenes with synthetic targets validate the power of the proposed architecture. Section 4 concludes and discusses the paper.

2. Proposed Infrared Scene Interpretation System

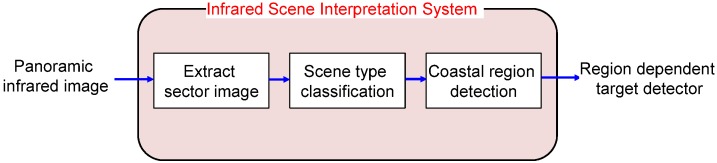

The key components of an omni-directional infrared search and track are sector image extraction, scene type classification and coastal region detection, as shown in Figure 3. An IRST sensor scans the scenes continuously, and each image sector containing a field of view is extracted and transferred to the target processing board. The next step is to determine the types of sector images. The scene types are sky-sea, remote coast, homogeneous near coast and cluttered near coast. The scene classification is based on the relationship between the geometric horizon position and the image horizon position. The proposed coastal region detector is activated iff the scene type contains a coastal region. Information about the coastal region is used in the following small target detector.

Figure 3.

Proposed infrared scene interpretation system.

2.1. Properties of the Sea-Based IRST Background

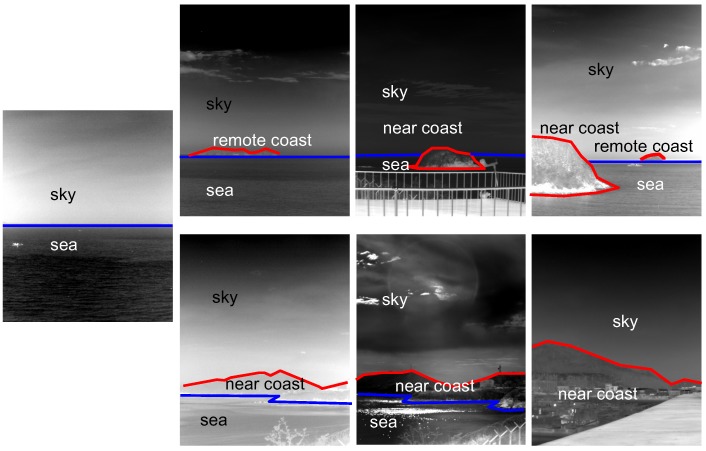

Sea-based IRST background images should be observed and analyzed carefully to obtain important domain knowledge. Figure 4 shows representative images used in the sea-based IRST system. Most of the background consists of a sky-sea region, sky-coast-sea or sky-near coast. Direct observations lead to three properties.

Figure 4.

Proposed infrared scene interpretation system.

The shapes of the sky, coast and sea regions are wide, because the imaging view point is slanted.

The order of the background is predictable, such as sky-sea, sky-coast-sea and sky-coast. The reverse order is not permitted.

A lower coast region generally occludes other remote regions due to the geometry of the camera projection.

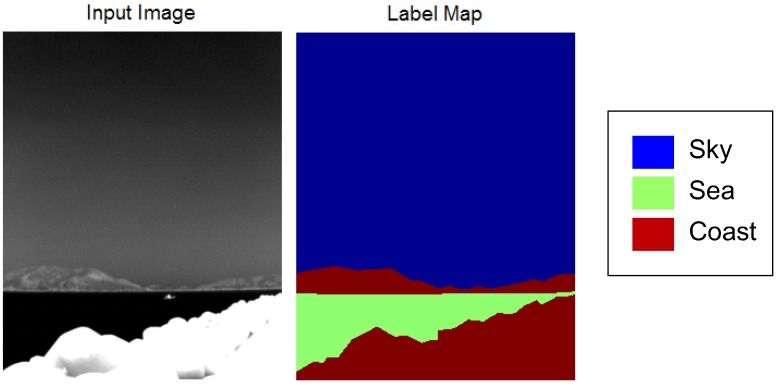

The next inspection is based on quantitative measurements of the temperature and texture distributions. Forty-one infrared background images were prepared and labeled. Figure 5 provides an example of an infrared background image and the corresponding label map consisting of sky, sea and coast regions.

Figure 5.

Example of the prepared test DB for a quantitative inspection.

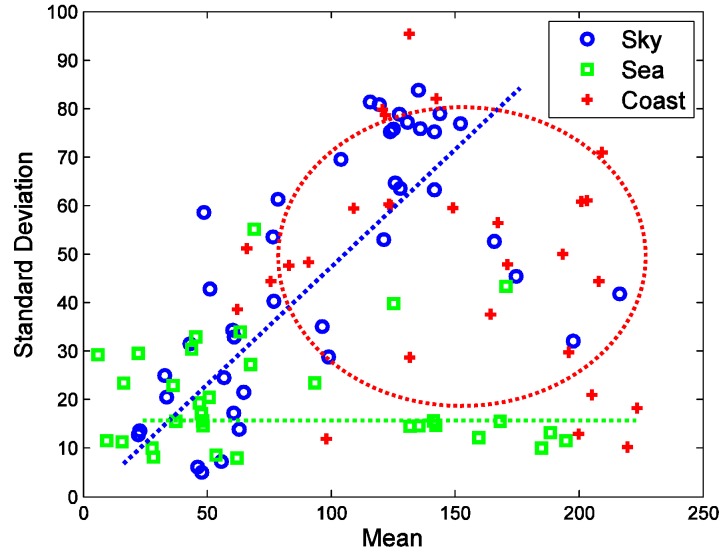

Figure 6 summarizes the distribution of the thermal average intensity and standard deviation. The sky region shows a wide thermal average intensity with a relatively low standard deviation. On the other hand, the sky region shows a proportional relationship between thermal intensity and the standard deviation. This originates from clear sky and warm clouds. Clear sky has a very low temperature with a low standard deviation. Warm clouds have a high temperature and a high standard deviation around the cloud edge. The coast region does not have any correlation between the thermal average intensity and the standard deviation. The coast region normally has a wide intensity and a wide standard deviation owing to its complex nature.

Figure 6.

Distribution of the thermal average intensity and standard deviation for each region.

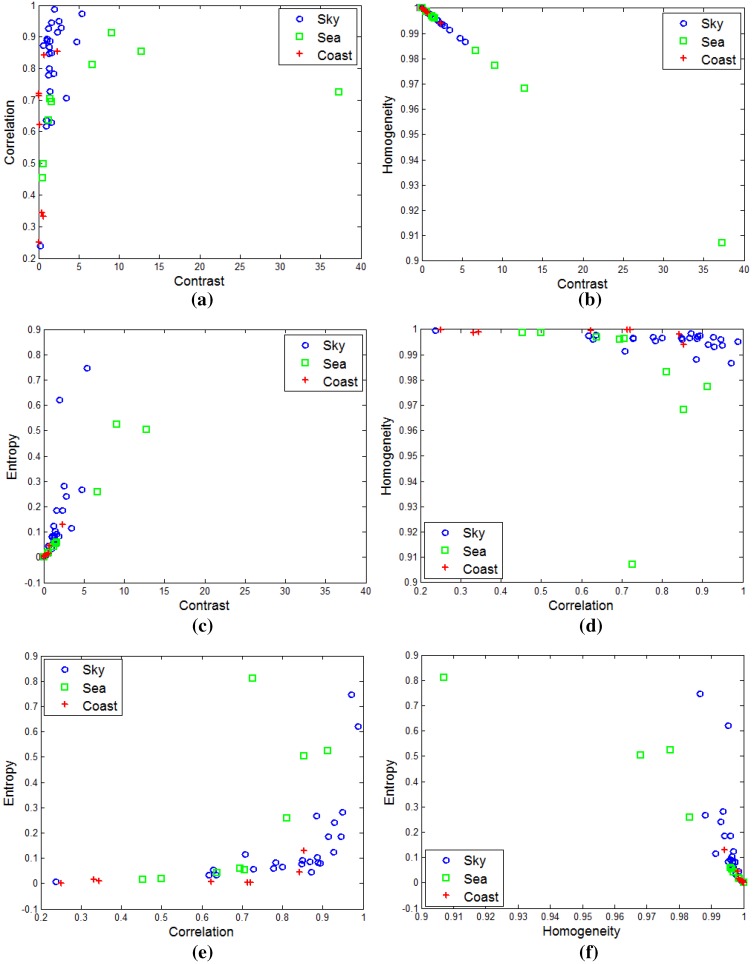

A quantitative inspection of the thermal texture can be estimated from the gray level co-occurrence matrix (GLCM) proposed by Blanton and Barner [23]. The GLCM () can be obtained by counting () the neighboring pixels with the specific gray values () in a probing image. The representative texture features are contrast, correlation, homogeneity and entropy [23]. Figure 7 shows the distribution of the GLCM-based texture using different tools for the sky, sea and coastal regions. The sea-based IRST images show no distinctive texture features. Most distributions are mixed and concentrated on the corners. Therefore, texture-based infrared image segmentation is not a suitable approach.

Figure 7.

Distributions of the gray level co-occurrence matrix (GLCM)-based thermal texture for each region: (a) contrast vs. correlation; (b) contrast vs. homogeneity; (c) contrast vs. entropy; (d) correlation vs. homogeneity; (e) correlation vs. entropy; (f) homogeneity vs. entropy.

2.2. Infrared Background Type Classification

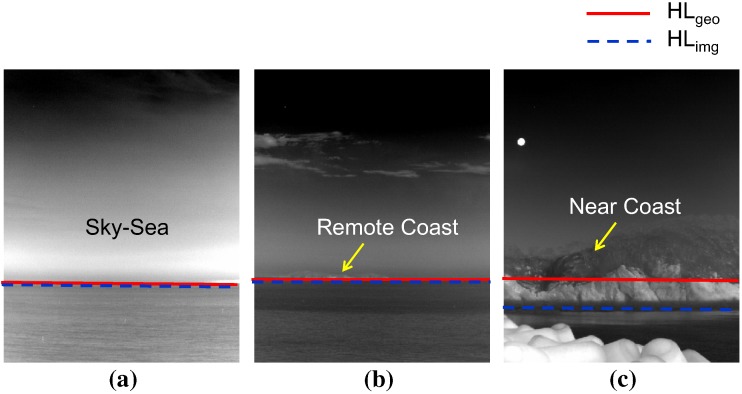

The sea-based infrared image types are normal sky-sea, remote coast and near coast background, as shown in Figure 8, according to the observation. The problem is how to classify the infrared background type using a simple, but robust method. The first idea is to use the horizontal information predicted by the sensor pose and the observed horizon position. As shown in Figure 8a,b, the horizon position (red solid line) predicted by sensor pose [24] is identical to the observed horizon (blue dotted line) for remote sea. In a near coast environment, the real horizontal line deviates from the predicted horizontal line, as shown in Figure 8c. The second key idea is to use the number of detection results to estimate the clutter density of the probing scene. Normally, a large number of false alarms is prohibited in an IRST system. On the other hand, the number of false detections can provide useful information regarding the coast types, as shown in Figure 2.

Figure 8.

Horizon observation of the sea-based infrared images and background types: (a) normal sky-sea; (b) remote coast; (c) near coast background.

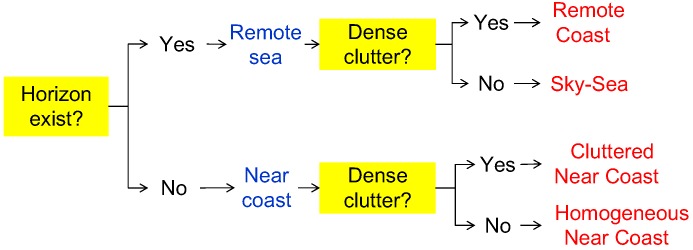

Figure 9 summarizes the proposed infrared scene classification method. The remote sea and near coast are determined depending on the existence of the horizon. In the remote sea case, the scene is classified as remote coast if there is dense clutter. Similarly, the scene is determined to be a cluttered near coast if there is dense clutter in the near coast.

Figure 9.

Proposed infrared scene classification method using the horizon and clutter density cues.

The first criterion is called the horizon evidence function () and is defined as Equation (1).

| (1) |

where denotes the sensor pose-based horizon prediction in the image and denotes the horizon position measured from the infrared image. If the difference margin is smaller than δ (normally four pixels), the real horizon can be confirmed to exist. was derived in [24] and is briefly introduced in this paper. Consider the IRST sensor height h, elevation angle α, field of view β and Earth radius R. The angle of the calculated horizontal line in an image can be found using Equation (2) assuming .

| (2) |

If the elevation angle is considered, the amount of sky region () can be calculated using Equation (3).

| (3) |

The geometrically-predicted horizontal line () can be found using Equation (4). The angle of the sea region () is just .

| (4) |

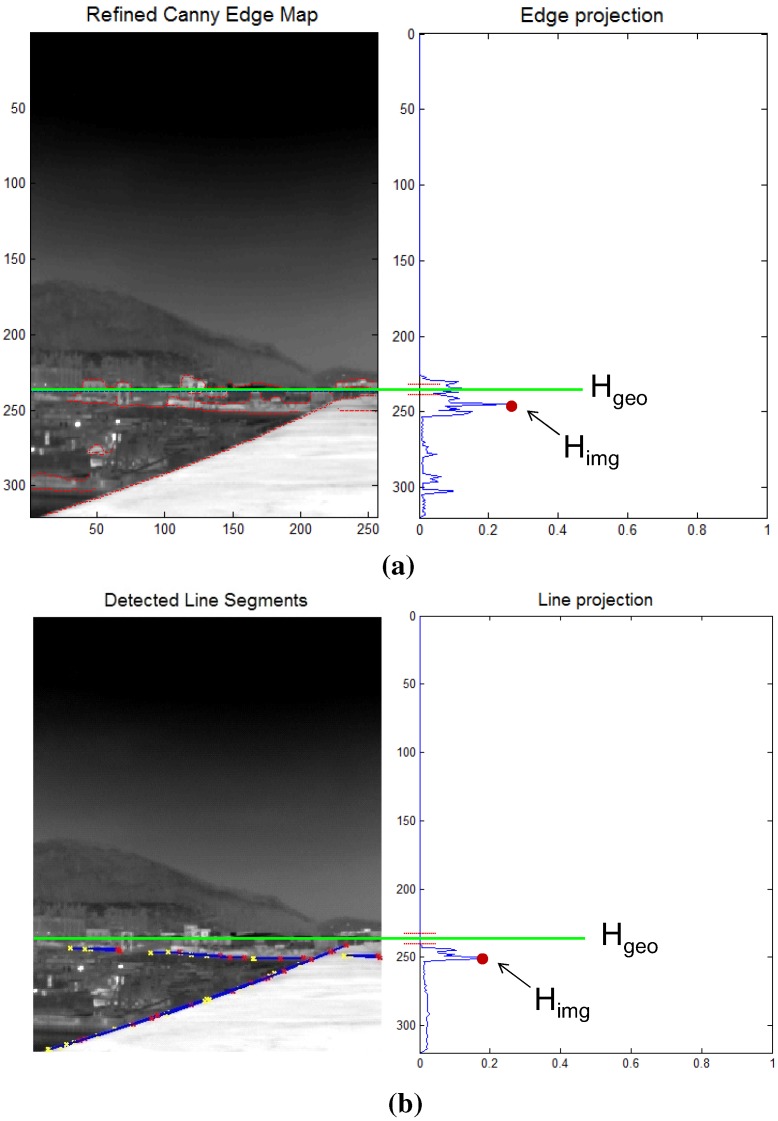

How can a horizon position () be found in an image? The camera roll angle is assumed to be zero to simplify the problem. The key idea of the estimation is to accumulate horizon-like pixel information () along each image row and to find a row index (i) that maximizes the horizon density using Equation (5). The probing image size is with a row index i and column index j. The edge map should be selected carefully to achieve the robustness of a horizon estimation in an image. If a well-known Canny edge detector [25] is applied, as shown in Figure 10a, the accumulated edge map shows an erroneous distribution of horizon density. Instead of a simple edge map, a line map is used, as shown in Figure 10b. The line segments are detected by applying a Hough transform [26] to the edge map. Therefore, the remote sea and near coast can be classified using Equation (1), Equation (4) and Equation (5).

| (5) |

Figure 10.

Estimation of the horizon in image () using the projection method in the near coast region: (a) edge map-based measurement; (b) line map-based measurement.

The second criteria is the clutter density to further classify the infrared background. The clutter density provides useful scene information on the coast types, as shown in the infrared scene type diagram (see Figure 9). The clutter density can be measured directly from the infrared images, such as the standard deviation [27]. On the other hand, the low level intensity-based variance shows very different behavior compared to coastal clutter. Therefore, this paper proposes a new clutter density () measurement, as expressed in Equation (6), where denotes a spatial filter-based small target detector with a pre-detection threshold in the hysteresis detector [24]. represents the area (pixels) occupied by pre-detection. If the density is larger than 5 clutters/(100 × 100 pixels), the scene is classified as cluttered coast. The mean-shift clustering method [28] is applied to the pre-detection results to estimate the area covered by clutter. Figure 11 presents representative examples of an infrared scene classification using the proposed rule-based algorithm with the horizon existence and clutter density function. Infrared images of the remote sky-sea, remote coast and cluttered near coast are classified correctly.

| (6) |

Figure 11.

Examples of scene classification using the proposed background type classification.

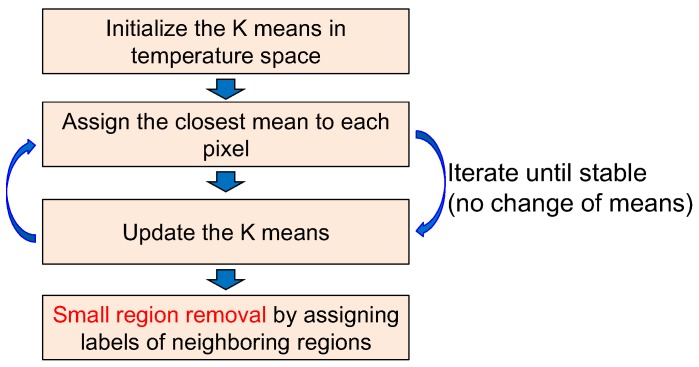

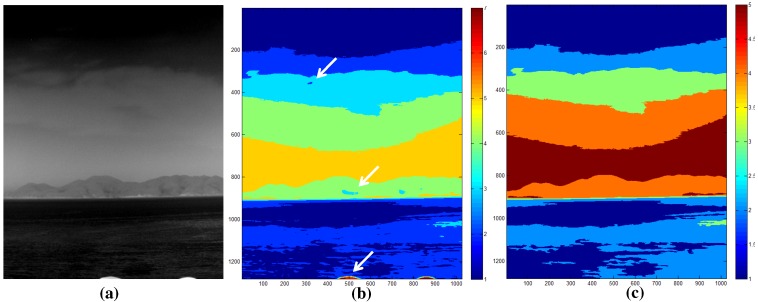

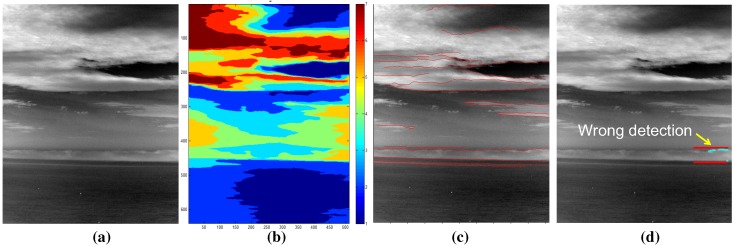

2.3. Coastal Region Detection

Given the information of the infrared background types, the coastal region should be detected for remote coast and nearest scenes. The state-of-the-art computer vision techniques were applied to find the coastal region in an infrared image. In the bottom-up approach, normalized graph cut (N-cut) is famous for its image segmentation capability [29]. N-cut regards an image as a set of nodes and edges. The strength of the edge increases if the similarity between pixels is strong. If it is not, the edge is cut to separate these pixels. Figure 12a shows the region segmentation result for an infrared image with the number of regions, three. Although the N-cut showed excellent region segmentation in the color image, it showed poor results in the infrared image because of the lack of multi-spectral (red, green, blue) information. Another well-known bottom-up method is the mean-shift segmentation [28]. The mean-shift finds clusters that maximize the local density. Figure 12b presents the application results using the mean-shift algorithm. This works quite well, but it misses the important coastal regions indicated by the arrow. The bottom-up approaches require huge processing time and miss important coastal regions. On the other hand, a top-down region segmentation approach uses prior information of the region of interest, such as the snake or active contour model [30]. The snake moves the initial contours to minimize both the external energy (edge similarity) and internal energy (elastic, bending energy). Figure 12d shows the final region segmentation result using the initial shape shown in Figure 12c. The top-down approach showed higher coastal region detection performance than the bottom-up approaches. On the other hand, it failed when there was weak contrast between the sky and coast region. Furthermore, it required the shape prior, and the initial position of the shape prior was selected manually, which is the weakest point of the algorithm.

Figure 12.

Limitations of the previous region segmentation approaches: (a) normalized graph cut (N-cut) with three prior regions; (b) mean-shift segmentation; (c) prior rectangle for snake; (d) after energy minimization in the snake algorithm.

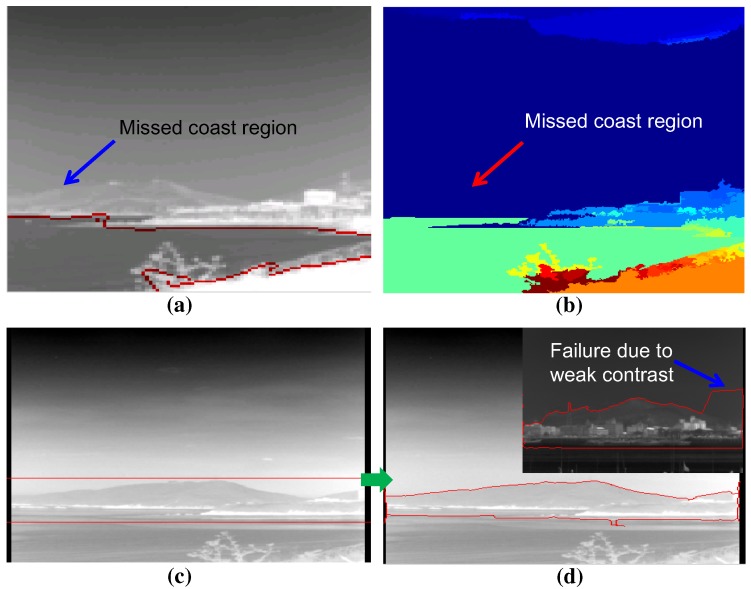

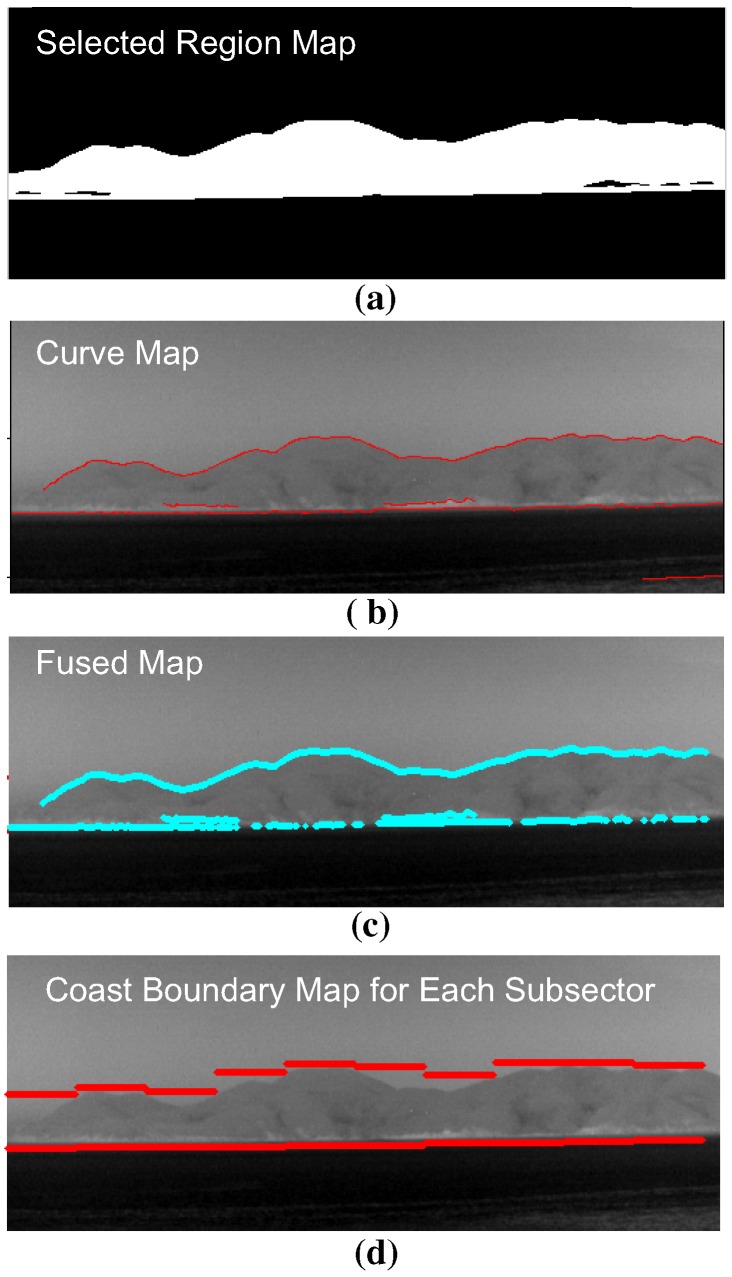

A novel coastal region detection method is proposed to overcome the limitations of previous approaches by fusing a region map and a curve map, as shown in Figure 13. A region map is obtained using temperature-based segmentation, and a curve map is obtained using temperature difference-based curve extraction. Two important key issues of coastal region detection are whether or not a coast exists, and if it exists, how to initialize the fusion process to detect the coast region. In this study, the information (scene type, horizon) obtained in the scene type classification block was used. The proposed coast region detector is initiated, if the scene type is determined to be a coastal region (remote, near). After the region map and curve map are extracted, the geometric horizontal line () is used to select the related coast-like region in the region map. The curves that surround the selected coast-like region are then selected. The final coast boundary map is generated by finding the sub-sector-wise local minima and maxima of the fused map.

Figure 13.

Proposed coast region detection flow given in the scene type information.

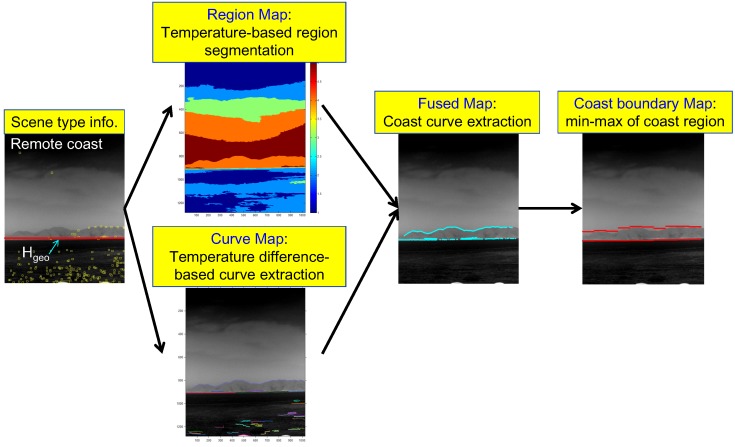

A region map () is generated using the K-means clustering algorithm, which is useful for segmenting regions according to the thermal energy [31]. k represents the region label at the pixel position (). The K-means clustering-based region segmentation method was selected instead of the complex N-cut or mean-shift to reflect the properties of the sky, coast and sea discussed in Section 2.1. The average temperature of the sea region is cold relative to the coast region, despite the sky region having a wide temperature range. Figure 14 shows the K-means clustering algorithm, where K denotes the number of temperature centers. If K is seven, the initial temperature means (digital number) are 0, 36, 72, 108, 144, 180, and 216 for an eight-bit resolution. The label of each pixel is assigned to the label of the closest mean. The iteration continues until convergence, as shown in Figure 15b for the test image of Figure 15a. Furthermore, small region removal by assigning the labels of the neighboring regions is processed to obtain meaningful regions, as shown in Figure 15c. Note that the small isolated regions indicated by the arrows in Figure 15b are removed clearly in Figure 15c.

Figure 14.

Region map extraction using K-means clustering and small region removal.

Figure 15.

Results of the region map extraction: (a) test image; (b) original K-means clustering; (c) final region map.

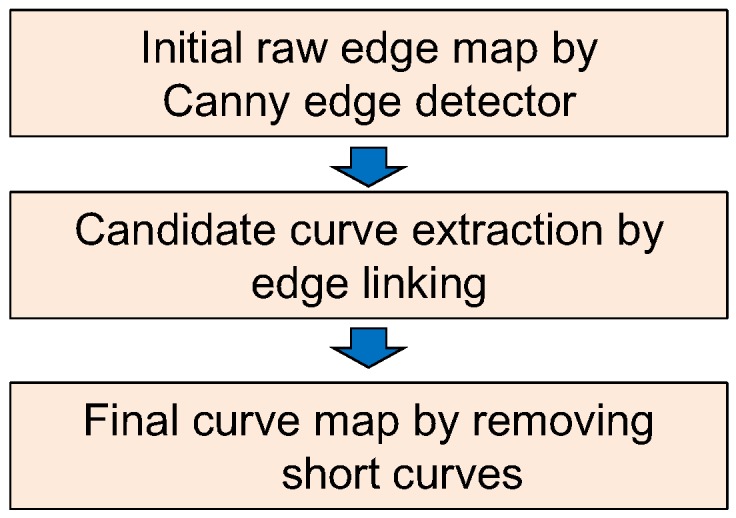

The sky-coast region and coast-sea region show different temperatures, which cause boundaries in the infrared images. A curve map () is calculated using the Canny edge detector and edge linking, as shown in Figure 16. The Canny edge detector [25] can determine the temperature differences using the Gaussian derivatives and non-maxima suppression, as shown in Figure 17b for the input image in Figure 17a. The curve fragments are extracted by applying the edge linking method with edge gap filling, as shown in Figure 17c, where different colors represent the curve fragments identified. The final curve map is obtained by limiting the minimum curve length because the sky-coast boundary and coast-sea boundary have a long curve length, as shown in Figure 17d.

Figure 16.

Curve map extraction using Canny edge detection, edge linking and short curve removal.

Figure 17.

Results of curve map extraction: (a) test image; (b) initial raw edge map by Canny edge detector; (c) contour extraction with a gap size of 2; (d) final curve map by removing the short curves.

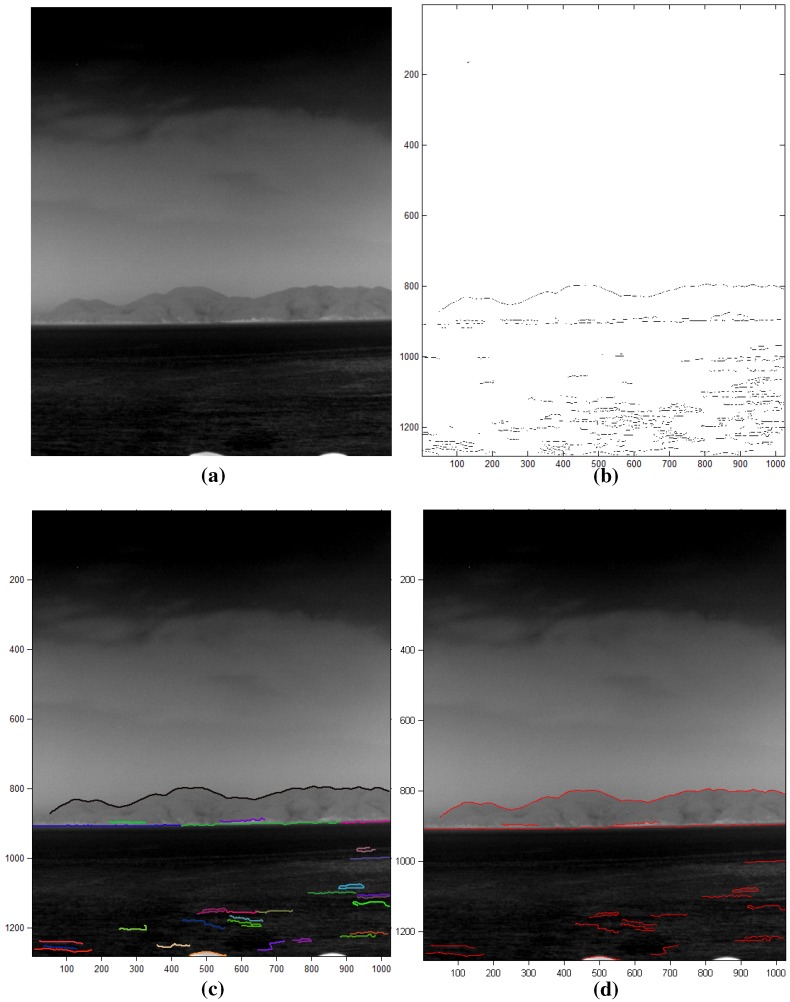

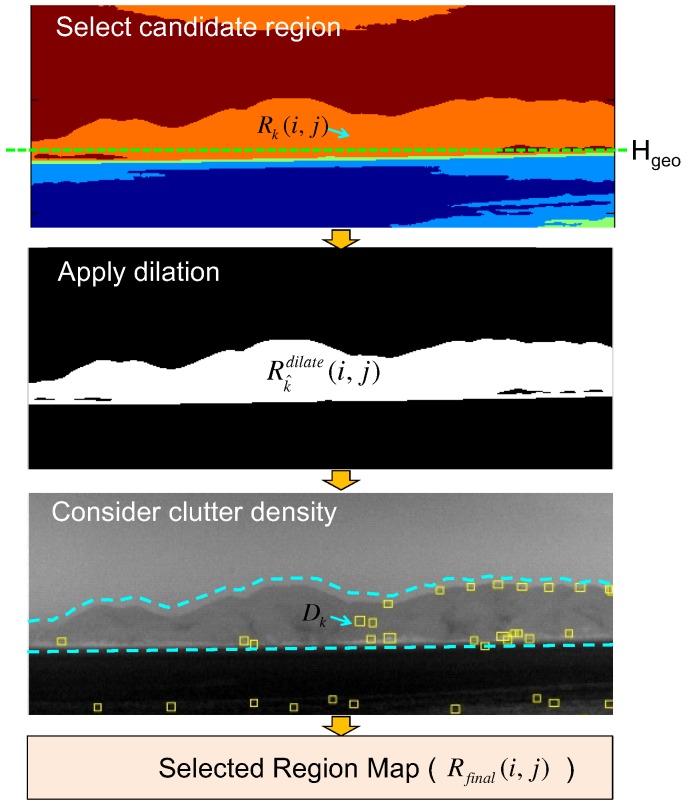

The fused map () in Figure 13 can be obtained from the selected region map () and curve map (), as shown in Figure 18. The selected region map is the result of the coast-like region selection considering both the geometric horizon line and clutter density, as shown in Figure 19.

Figure 18.

Fused map and coast boundary map generation flow using the selected region map and curve map: (a) selected region map using horizon information; (b) extracted curve map; (c) fused map generation by applying an AND operation to the selected region and curve map; (d) coast boundary representation.

Figure 19.

Region map selection flow using the geometric horizon and clutter density.

The candidate region label set () can be initialized using the predicted geometric horizon () along each column position as expressed in Equation (7).

| (7) |

The region () with a specific label () is expanded using a morphological dilation method [32] to include the coastal edges using Equation (8).

| (8) |

The initial region labels are refined further () using the density () of clutter of the related regions, as expressed in Equation (9). The clutter density of a region with an index of can be estimated by dividing the number of pre-detections () by the area of the specific region (), as expressed in Equation (10).

| (9) |

| (10) |

The finally selected region map () is generated by merging the surviving coast-like regions, as expressed in Equation (11).

| (11) |

The fused map () is obtained by applying AND fusion [33] of the selected region map () with the curve map (), as expressed in Equation (12).

| (12) |

3. Experimental Results

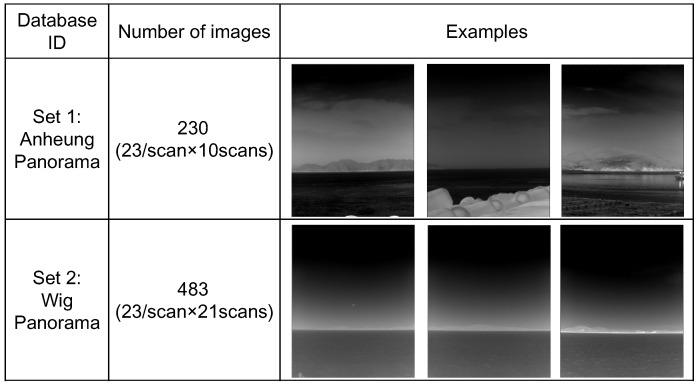

Panoramic IRST images were prepared to validate the proposed method, as shown in Figure 20. One panoramic image consisted of 23 sector images, where one sector image covered a field of view of . In addition, each sector image had an image resolution of and contains sky-sea, sky-coast-sea or sky-coast, depending on the viewing direction.

Figure 20.

Composition of the infrared database.

In the first experiment, the proposed infrared scene type classifier was evaluated for the two test sets. Table 1 lists the overall classification performance for each set. Three scene types (sky-sea, cluttered remote coast, cluttered near coast) were considered, because there were no related scenes with a homogeneous near coast, even though the proposed algorithm can classify them. The overall classification accuracy was approximately 95.6% for this database. Several miss-classifications occurred for the cluttered remote coast where a few pre-detections existed (classified as sky-sea). Figure 21 gives partial examples of the infrared scene type classification using the proposed horizon information and pre-detection results.

Table 1.

Infrared scene type classification performance for the test database using the proposed horizon and clutter density-based method.

| Dataset | Sky-Sea | Cluttered Remote Coast | Cluttered Near Coast | Accuracy (%) |

|---|---|---|---|---|

| Set 1 | 60/60 | 60/60 | 100/110 | 95.6% (220/230) |

| Set 2 | 42/42 | 294/315 | 126/126 | 95.7% (463/484) |

Figure 21.

Examples of successful infrared scene type classification.

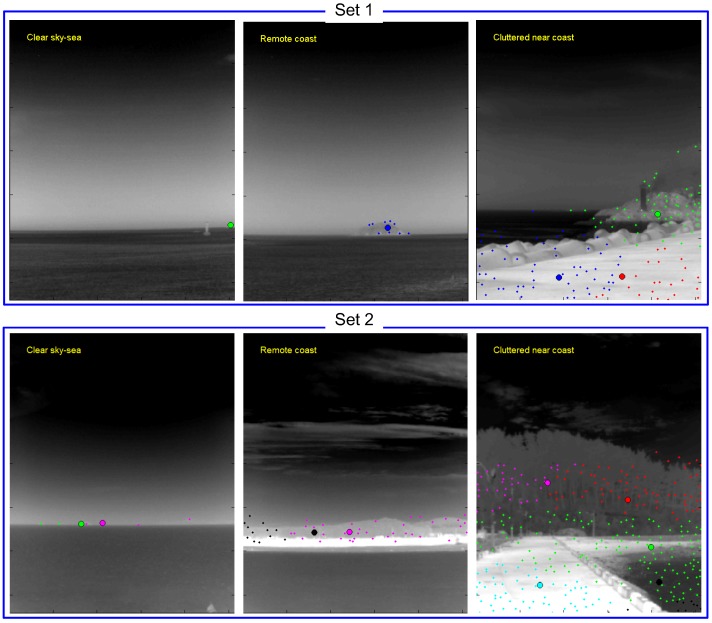

In the second experiment, the effect of the K value in the K-means segmentation was evaluated. The control parameter K is one of the most important parts in the proposed method. It can control the number of segmented regions and should be selected depending on the scenario. Figure 22 shows the effects of thermal image segmentation depending on the control parameter K. For a test image (Figure 22a), K with two cannot segment the coast region, as shown in Figure 22b. K with five or seven can segment it correctly (Figure 22c,d). However, K values larger than seven produce too many regions, which is not suitable for the proposed coast detection method, as shown in Figure 22e,f. Therefore, the optimal K values are five or seven, depending on the thermal image environments.

Figure 22.

The effects of the parameter K in the K-means segmentation: (a) test image; (b) K = 3; (c) K = 5; (d) K = 7; (e) K = 9; and (f) K = 12.

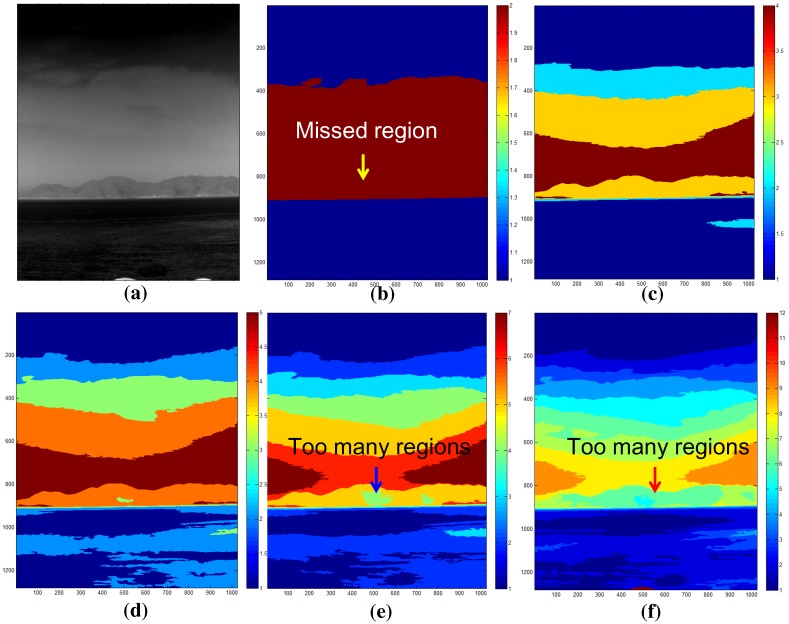

In the third experiment, the performance of the coastal region detection was evaluated for the test set. The mean-shift segmentation method [28] was used as a baseline method because it is used widely and has shown excellent performance in the computer vision community. Geometric horizon information-based coastal region selection was inserted after the original mean-shift segmentation to identify the coastal region. The infrared scene type information was assumed to have been given for both the baseline method and the proposed method. Therefore, the coastal region detection methods are activated if the scene type contains a coastal region. The coastal region detection rate is quite difficult to measure. In this study, a sub-sectional image-wise region overlap measure was used, as expressed in Equation (13) [34]. and represent the detected coastal region and ground truth coastal region, respectively. Two regions were determined to correspond if the region overlap was large enough (>0.5).

| (13) |

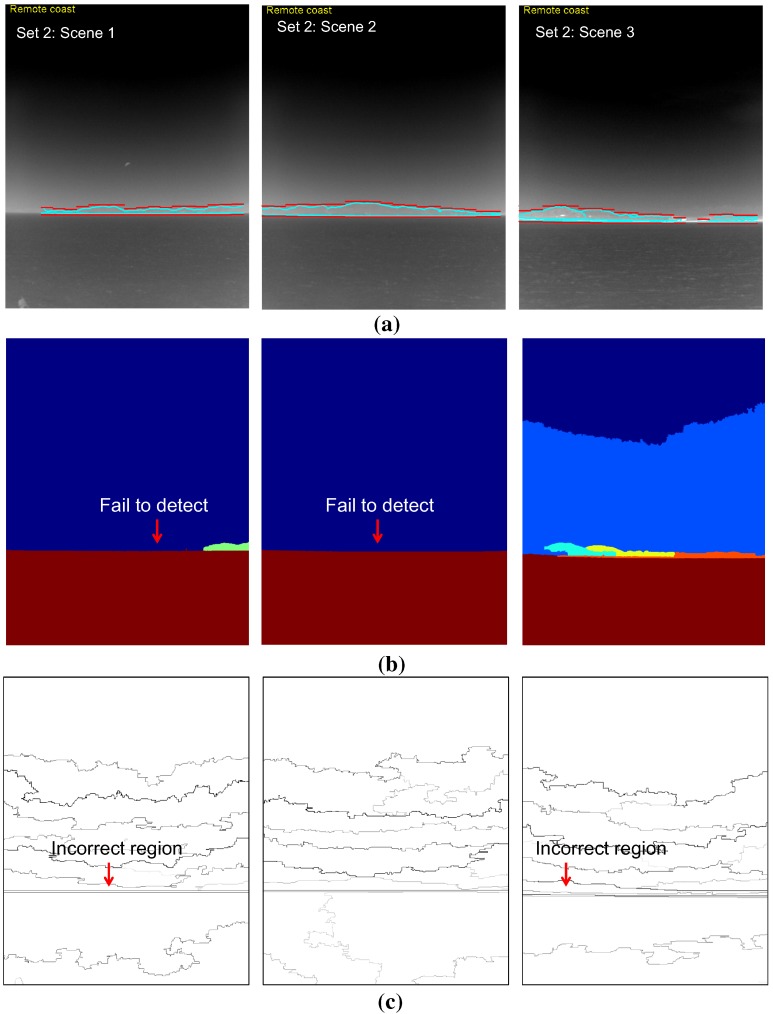

Table 2 lists the results of a quantitative evaluation of the coastal region detection using the proposed method and two baseline methods (mean-shift segmentation [28] and statistical region merging [35]) for the selected sky-coast-sea images. The proposed method outperformed (overall detection rate: 96.4%) the well-known mean-shift segmentation (overall detection rate: 34.8%) and statistical region merging (overall detection rate: 66.%). The parameters of the mean-shift segmentation were tuned to identify the coastal regions (spatial band width: 7, range band width: 7, minimum region area: 5000, gradient window radius: 7, edge strength threshold: 1). Those of the statistical region merging were set to the original method. Figure 23 and Figure 24 show the corresponding coastal region detection results for both methods. The proposed method failed on the right three consecutive sub-sectors in the Set 1:Scene 3 image, where the sky-coast line is ambiguous. The mean-shift segmentation was found in the coastal regions on the Set 1-Scene 1 with image segmentation and geometric horizon information. On the other hand, it failed on the remaining test images, because it could not segment out the ambiguous coast regions. In the quantitative evaluation, partial segmentation results of the coastal regions were regarded as a success for the failure cases. The strength of the contour represents the belief of the segmented region in statistical region merging method. The coastal regions can be found quite well compared to the mean-shift segmentation. On the other hand, it failed to find the coast regions in ambiguous sky-coast area.

Table 2.

Infrared coastal region detection performance for the test database using the proposed method and baseline method (mean-shift segmentation) [28]. DR denotes detection rate. and represent the number of detections and the number of ground truths, respectively.

| Test scene | Proposed | Mean-Shift Segmentation [28] | Statistical Region Mergin [35] | |||

|---|---|---|---|---|---|---|

| DR [%] | DR [%] | DR [%] | ||||

| Set 1:Scene 1 | 19/19 | 100% | 19/19 | 100% | 19/19 | 100% |

| Set 1:Scene 2 | 19/19 | 100% | 2/19 | 10.5% | 19/19 | 100% |

| Set 1:Scene 3 | 16/19 | 84.2% | 0/19 | 0% | 0/19 | 0% |

| Set 2:Scene 1 | 17/17 | 100% | 2/17 | 11.8% | 0/19 | 0% |

| Set 2:Scene 2 | 19/19 | 100% | 0/19 | 0% | 19/19 | 100% |

| Set 2:Scene 3 | 18/19 | 94.7% | 16/19 | 84.2% | 17/19 | 89.4% |

| Overall | 108/112 | 96.4% | 39/112 | 34.8% | 74/112 | 66.0% |

Figure 23.

Comparison of the coastal region detection results for Test Set 1: (a) proposed coastal region detection; (b) mean-shift segmentation-based method [28]: Scene 1 was successful; Scenes 2 and 3 failed (segmentation results were displayed as partial outputs.) [28]; (c) statistical region merging method [35].

Figure 24.

Comparison of the coastal region detection results for Test Set 2: (a) proposed coastal region detection; (b) mean-shift segmentation [28]: it failed for these test scenes; (c) statistical region merging method [35].

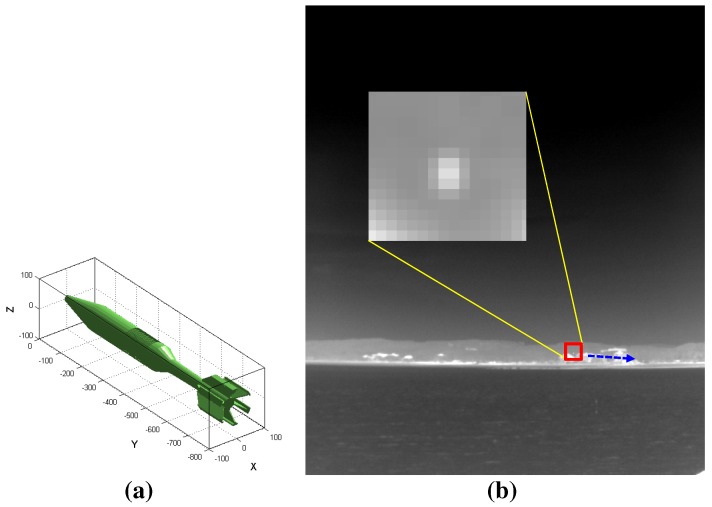

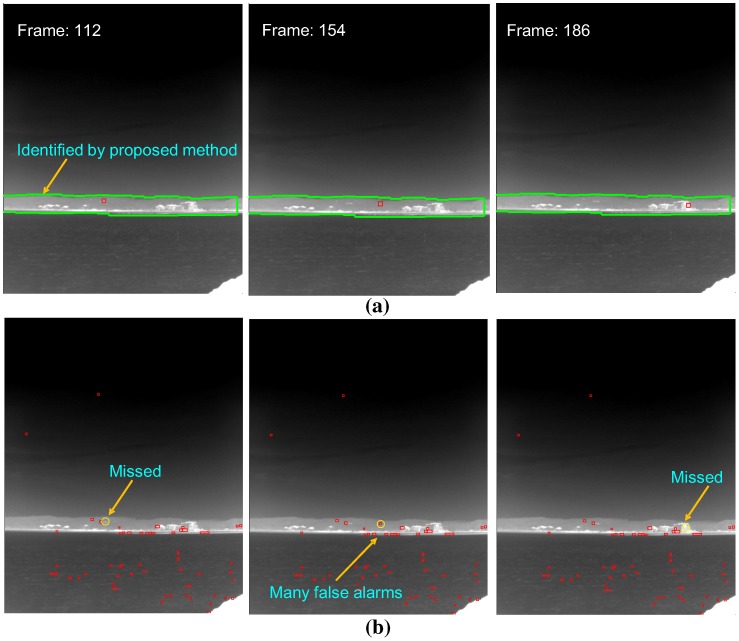

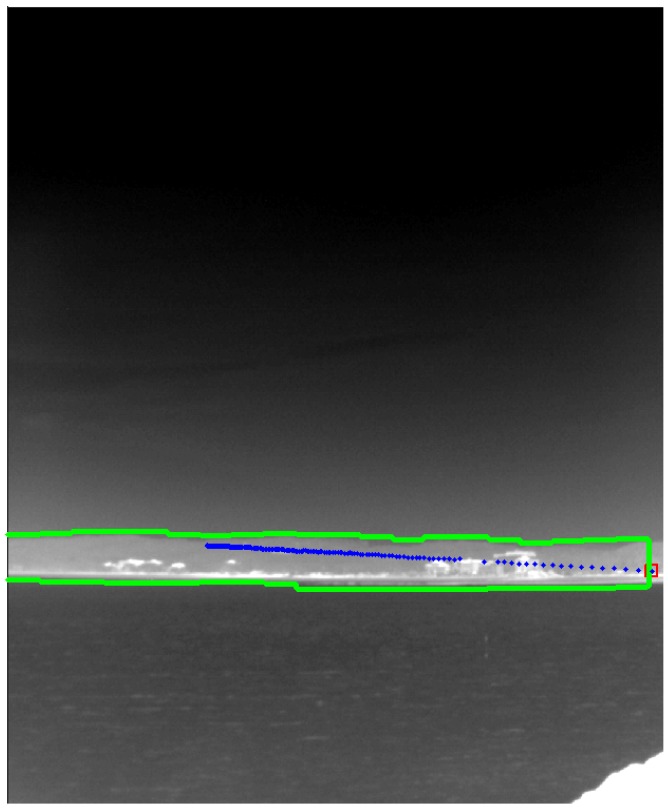

As a final experiment, the effects of the coastal region information were evaluated by applying small infrared target detection methods to the synthetic infrared test images and real WIGcraft sequence. The synthetic images were generated by inserting a 3D CAD model into the coast background [36], and the real WIG craft sequence was acquired using a CEDIPMWIRcamera. Figure 25 gives an example of the test sequence, where a synthetic target moves around a cluttered coast region. The target radiation was set to 150 W/sr, and the target moves along the coast region with a velocity of Mach 3 at a 2-km distance (target yaw angle , pitch angle ). The target detection scheme can be selected if the information on the coastal region is available. Normally, a spatial filter-based detector, such as top-hat [5], should be applied around the sky-sea horizontal region to detect the distant targets (almost stationary and dim). On the other hand, the proposed method can provide the scene type and the coast region information if the scene type is classified as the coast background. Because the coast regions are normally located near the sensor position, the target motion can be detected easily using the temporal filter-based method, such as the temporal contrast filter (TCF) [37]. Table 3 lists the target detection performance depending on the coast information for the two types of databases. The detection rate by applying TCF to the recognized coast region method was better than that of top-hat, where the coast information was unavailable. Furthermore, the false alarm rate (FAR) of the coast information-based method was reduced to zero/image. Figure 26 shows the related partial detection results depending on the coastal region information. Figure 27 presents the final footprints of target detection in the coastal region. Figure 28 shows the WIG craft detection results with the same test environments. Through the evaluation, the proposed coast region information can upgrade the target detection performance in the sea-based IRST problem.

Figure 25.

Generation of synthetic test images by inserting a 3D CAD model into the coast background image [36]: (a) 3D CAD model of a missile; (b) generated infrared image with the target motion.

Table 3.

Infrared coastal region detection performance for the test database using the proposed method and the baseline method (mean-shift segmentation) [28]. FAR denotes the false alarm rate. TCF, temporal contrast filter.

| DB Types | Performance Measure | With Coast Information by the Proposed Method Temporal Filter (TCF [37]) | Without Coast Information Spatial Filter (Top-Hat [5]) |

|---|---|---|---|

| Synthetic | Detection rate | 97.7% (171/175) | 89.7% (157/175) |

| DB | FAR | 0/image | 54/image |

| WIGcraft | Detection rate | 98.3% (60/61) | 85.3% (52/61) |

| DB | FAR | 0/image | 65/image |

Figure 26.

Effect of the coast region information in the infrared small target detection problem for the synthetic DB: (a) application of the temporal filter-based detector (TCF) for the identified coast region; (b) application of a spatial filter-based detector (top-hat). The yellow circles denote the ground truths, and the red rectangles represent the detection results.

Figure 27.

Final footprint of target detection using a temporal filter (TCF) in the identified coastal region. The blue dots represent the detected target locations.

Figure 28.

Effect of the coast region information in the infrared small target detection problem for the real WIG craft DB: (a) application of the temporal filter-based detector (TCF) for the identified coast region; (b) application of spatial filter-based detector (top-hat). The yellow circles denote the ground truths, and the red rectangles represent the detection results.

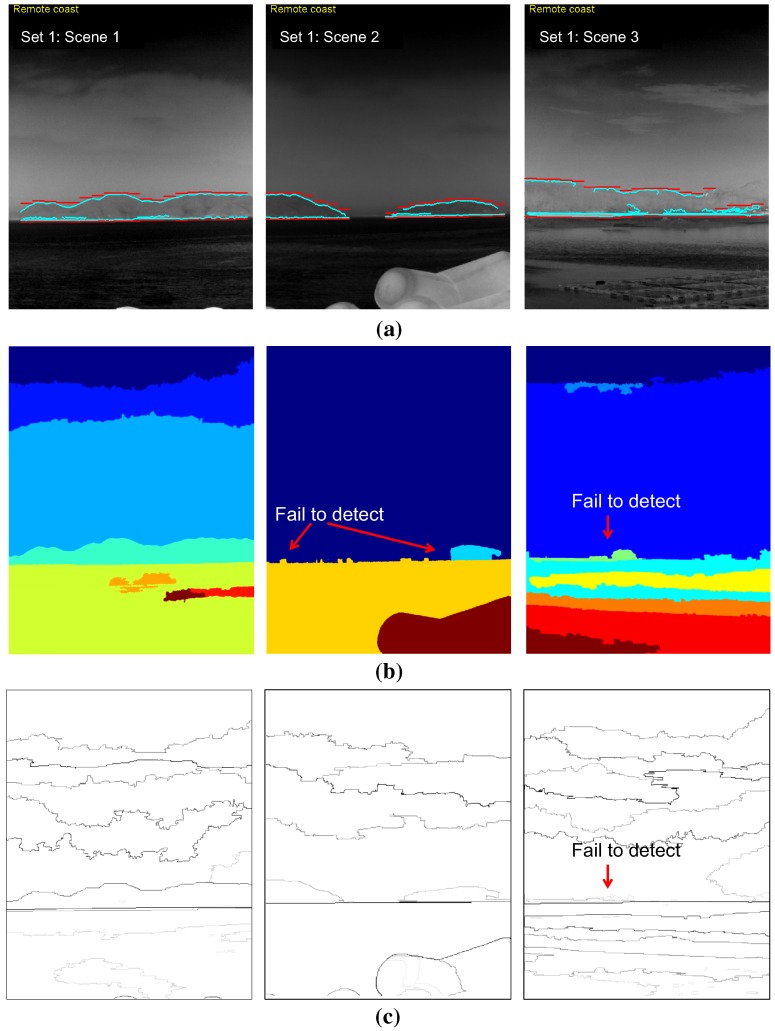

As validated in previous experiments, the proposed method works for a normal sky-coast-sea background. The proposed method produces partly false coast detection if there is a large portion of clouds in the sky and the clouds are close to the horizon, as shown in Figure 29. In this case, the false region map and cloud edge map lead to erroneous results.

Figure 29.

Examples of a failure case: (a) dense cloud clutter around horizon and sky; (b) extracted region map; (c) extracted edge map; (d) coast detection using the proposed method.

4. Conclusions and Discussion

Extracting the coast region is important for the successful operation of infrared search and track. This paper proposed a two-stage-based infrared scene interpretation by the consecutive scene type classification and coastal region detection. The coastal region detector was designed to be activated when the infrared scene contains the coast background. In the scene type classifier, the sea-based infrared background has no distinctive texture features according to gray level co-occurrence matrix (GLCM) analysis. On the other hand, the relationship between the geometrically-predicted horizon and image horizon provides a clue to the existence of a coastal region. In addition, the clutter density by pre-detection provides an additional clue to the clutter type of observed coast regions. The final coastal region detector is activated when the probing scene contains a near coast or remote coast. State-of-the-art computer vision techniques, such as normalized cut (N-cut) or mean-shift segmentation, showed poor region segmentation results due to the ambiguous sky-coast regions. This paper proposes a fusion-based method using a region map and a curve map. The region map was generated by K-means-based temperature segmentation, and the curve map was generated by a Canny edge detector and edge linking. The coast region was initiated by the geometric horizon information and confirmed whether the surrounding curves exist. The proposed method was evaluated by three experiments: scene type classification performance, coastal region detection performance and the effect of the coast region information on infrared small target detection. Various experiments on the real and synthetic infrared image showed convincing results. In the first evaluation, the proposed scene type classifier showed a classification accuracy of 95.7% for hundreds of test images. In the second evaluation, the proposed coastal region detection showed a detection rate of 96.4%, and the baseline method (mean-shift segmentation) showed a detection rate of 34.8%. In the final evaluation, the coastal region information enhanced the target detection rate and reduced the false alarm rate by applying a temporal filter-based method for the identified coast region. In the future, the test will be conducted on more and varied IRST operation scenarios.

Acknowledgments

This research was supported by the Basic Science Research Program through the National Research Foundationof Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (NRF-2014R1A2A2A01002299). It was also supported by the STRL (Sensor Target Recognition Laboratory) program of the Defense Acquisition Program Administration and Agency for Defense Development.

Author Contributions

The contributions were distributed between authors as follows: Sungho Kim wrote the text of the manuscript, programmed the target detection and fusion methods, performed the in-depth discussion of the related literature and confirmed the accuracy experiments that are exclusive to this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.De Jong A.N. IRST and perspective. Proc. SPIE. 1995;2552:206–213. [Google Scholar]

- 2.Ristic B., Hernandez M., Farina A., Hwa-Tung O. Analysis of radar allocation requirements for an IRST aided tracking of anti-ship missiles; Proceedings of the 9th International Conference on Information Fusion; Florence, Italy. 10–13 July 2006; pp. 1–8. [Google Scholar]

- 3.Sang H., Shen X., Chen C. Architecture of a configurable 2-D adaptive filter used for small object detection and digital image processing. Opt. Eng. 2003;48:2182–2189. doi: 10.1117/1.1588294. [DOI] [Google Scholar]

- 4.Warren R.C. Ph.D. Thesis. University of South Australia; Adelaide, Australia: 2002. Detection of Distant Airborne Targets in Cluttered Backgrounds in Infrared Image Sequences. [Google Scholar]

- 5.Wang Y.L., Dai J.M., Sun X.G., Wang Q. An efficient method of small targets detection in low SNR. J. Phys. Conf. Ser. 2006;48:427–430. doi: 10.1088/1742-6596/48/1/081. [DOI] [Google Scholar]

- 6.Rozovskii B., Petrov A. Optimal Nonlinear Filtering for Track-before-Detect in IR Image Sequences. Proc. SPIE. 1999;3809:152–163. [Google Scholar]

- 7.Thiam E., Shue L., Venkateswarlu R. Adaptive Mean and Variance Filter for Detection of Dim Point-Like Targets. Proc. SPIE. 2002;4728:492–502. [Google Scholar]

- 8.Zhang Z., Cao Z., Zhang T., Yan L. Real-time detecting system for infrared small target. Proc. SPIE. 2007;6786 doi: 10.1117/12.751305. [DOI] [Google Scholar]

- 9.Kim S., Sun S.G., Kim K.T. Highly efficient supersonic small infrared target detection using temporal contrast filter. Electron. Lett. 2014;50:81–83. doi: 10.1049/el.2013.2109. [DOI] [Google Scholar]

- 10.Sang N., Zhang T., Shi W. Detection of Sea Surface Small Targets in Infrared Images based on Multi-level Filters. Proc. SPIE. 1998;3373:123–129. [Google Scholar]

- 11.Chan D.S.K. A Unified Framework for IR Target Detection and Tracking. Proc. SPIE. 1992;1698:66–76. [Google Scholar]

- 12.Tzannes A.P., Brooks D.H. Point Target Detection in IR Image Sequences: A Hypothesis-Testing Approach based on Target and Clutter Temporal Profile Modeling. Opt. Eng. 2000;39:2270–2278. [Google Scholar]

- 13.Schwering P.B., van den Broek S.P., van Iersel M. EO System Concepts in the Littoral. Proc. SPIE. 2007;6542 doi: 10.1117/12.719217. [DOI] [Google Scholar]

- 14.Wong W.-K., Chew Z.-Y., Lim H.-L., Loo C.-K., Lim W.-S. Omnidirectional Thermal Imaging Surveillance System Featuring Trespasser and Faint Detection. Int. J. Image Proc. 2011;4:518–538. [Google Scholar]

- 15.Missirian J.M., Ducruet L. IRST: A key system in modern warfare. Proc. SPIE. 1997;3061:554–565. [Google Scholar]

- 16.Grollet C., Klein Y., Megaides V. ARTEMIS: Staring IRST for the FREMM frigate. Proc. SPIE. 2007;6542 doi: 10.1117/12.724485. [DOI] [Google Scholar]

- 17.Fontanella J.C., Delacourt D., Klein Y. ARTEMIS: First naval staring IRST in service. Proc. SPIE. 2010;7660 doi: 10.1117/12.852429. [DOI] [Google Scholar]

- 18.Weihua W., Zhijun L., Jing L., Yan H., Zengping C. A Real-time Target Detection Algorithm for Panorama Infrared Search and Track System. Procedia Eng. 2012;29:1201–1207. doi: 10.1016/j.proeng.2012.01.113. [DOI] [Google Scholar]

- 19.Dijk J., van Eekeren A.W.M., Schutte K., de Lange D.J.J. Point target detection using super-resolution reconstruction. Proc. SPIE. 2007;6566 doi: 10.1117/12.725074. [DOI] [Google Scholar]

- 20.Kim S. Min-local-LoG filter for detecting small targets in cluttered background. Electron. Lett. 2011;47:105–106. doi: 10.1049/el.2010.2066. [DOI] [Google Scholar]

- 21.Kim S., Lee J. Scale invariant small target detection by optimizing signal-to-clutter ratio in heterogeneous background for infrared search and track. Pattern Recognit. 2012;45:393–406. doi: 10.1016/j.patcog.2011.06.009. [DOI] [Google Scholar]

- 22.Kim S., Sun S.G., Kwon S., Kim K.T. Robust coastal region detection method using image segmentation and sensor LOS information for infrared search and track. Proc. SPIE. 2013;8744 doi: 10.1117/12.2015720. [DOI] [Google Scholar]

- 23.Blanton W.B., Barner K.E. Infrared Region Classification using Texture and Model-based Features; Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); Las Vegas, NV, USA. 31 March–4 April 2008; pp. 1329–1332. [Google Scholar]

- 24.Kim S., Lee J. Small Infrared Target Detection by Region-Adaptive Clutter Rejection for Sea-Based Infrared Search and Track. Sensors. 2014;2014:13210–13242. doi: 10.3390/s140713210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zhou P., Ye W., Wang Q. An Improved Canny Algorithm for Edge Detection. J. Comput. Inf. Syst. 2011;7:1516–1523. [Google Scholar]

- 26.Fernandes L., Oliveira M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recognit. 2007;41:299–314. doi: 10.1016/j.patcog.2007.04.003. [DOI] [Google Scholar]

- 27.Richter R., Davis J.S., Duggin M.J. Radiometric sensor performance model including atmospheric and IR clutter effects. Proc. SPIE. 1997;3062 doi: 10.1117/12.276692. [DOI] [Google Scholar]

- 28.Comaniciu D., Meer P. Mean Shift: A Robust Approach Toward Feature Space Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002;24:603–619. doi: 10.1109/34.1000236. [DOI] [Google Scholar]

- 29.Shi J., Malik J. Normalized Cuts and Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22:888–905. [Google Scholar]

- 30.Xu C., Prince J.L. Snakes, Shapes, and Gradient Vector Flow. IEEE Trans. Image Proc. 1998;7:359–369. doi: 10.1109/83.661186. [DOI] [PubMed] [Google Scholar]

- 31.Yao H., Duan Q., Li D., Wang J. An improved K-means clustering algorithm for fish image segmentation. Math. Comput. Model. 2013;58:790–798. doi: 10.1016/j.mcm.2012.12.025. [DOI] [Google Scholar]

- 32.Gonzalez R., Woods R. Digital Image Processing. 3rd ed. Prentice Hall; Saddle River, NJ, USA: 2008. [Google Scholar]

- 33.Klein L.A. Sensor and Data Fusion Concepts and Applications. 2nd ed. SPIE Press; Bellingham, WA, USA: 1999. [Google Scholar]

- 34.Mikolajczyk K., Tuytelaars T., Schmid C., Zisserman A., Matas J., Schaffalitzky F., Kadir T., Gool L.V. A Comparison of Affine Region Detectors. Int. J. Comput. Vis. 2005;65:43–72. doi: 10.1007/s11263-005-3848-x. [DOI] [Google Scholar]

- 35.Nock R., Nielsen F. Statistical Region Merging. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:1452–1458. doi: 10.1109/TPAMI.2004.110. [DOI] [PubMed] [Google Scholar]

- 36.Kim S., Yang Y., Choi B. Realistic infrared sequence generation by physics-based infrared target modeling for infrared search and track. Opt. Eng. 2010;49 doi: 10.1117/1.3509363. [DOI] [Google Scholar]

- 37.Kim S. High-speed incoming infrared target detection by fusion of spatial and temporal detectors. Sensors. 2015;15:7267–7293. doi: 10.3390/s150407267. [DOI] [PMC free article] [PubMed] [Google Scholar]