Abstract

For many Markov chains of practical interest, the invariant distribution is extremely sensitive to perturbations of some entries of the transition matrix, but insensitive to others; we give an example of such a chain, motivated by a problem in computational statistical physics. We have derived perturbation bounds on the relative error of the invariant distribution that reveal these variations in sensitivity.

Our bounds are sharp, we do not impose any structural assumptions on the transition matrix or on the perturbation, and computing the bounds has the same complexity as computing the invariant distribution or computing other bounds in the literature. Moreover, our bounds have a simple interpretation in terms of hitting times, which can be used to draw intuitive but rigorous conclusions about the sensitivity of a chain to various types of perturbations.

1. Introduction

The invariant distribution of a Markov chain is often extremely sensitive to perturbations of some entries of the transition matrix, but insensitive to others. However, most perturbation estimates bound the error in the invariant distribution by a single condition number times a matrix norm of the perturbation. That is, perturbation estimates usually take the form

$$ |\pi(\tilde F) - \pi(F)| \le \kappa(F)\, \|\tilde F - F\|, \tag{1} $$

where F and F̃ are the exact and perturbed transition matrices, π(F) and π(F̃) are the invariant distributions of these matrices, ∥·∥ is a matrix norm, |·| is either a vector norm or some measure of the relative error between the two distributions, and κ(F) is a condition number depending on F. For example, see [2,5–7,9,13,16,19–21] and the survey given in [3]. No bound of this form can capture wide variations in the sensitivities of different entries of the transition matrix.

Alternatively, one might approximate the difference between π(F̃) and π(F) using the linearization of π at F. (The derivative of π can be computed efficiently using the techniques of [6].) The linearization will reveal variations in sensitivities, but it yields only an approximation of the form

$$ \pi(\tilde F) = \pi(F) + \pi'(F)(\tilde F - F) + o(\|\tilde F - F\|), $$

not an upper bound on the error. That is, unless global bounds on π′(F) can be derived, linearization provides only a local estimate, not a global one.

In this article, we give upper bounds that yield detailed information about the sensitivity of π(F) to perturbations of individual entries of F. Given an irreducible substochastic matrix S, we show that for all stochastic matrices F, F̃ satisfying the entry-wise bound F, F̃ ≥ S,

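$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i,j \in \Omega,\ i \neq j} \left| \log\big(\tilde F_{ij} - S_{ij} + Q_{ij}(S)\big) - \log\big(F_{ij} - S_{ij} + Q_{ij}(S)\big) \right|, \tag{2} $$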

where Qij(S) is defined in Section 4. As a corollary, we also have

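$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i,j \in \Omega,\ i \neq j} Q_{ij}(S)^{-1}\, |\tilde F_{ij} - F_{ij}| \tag{3} $$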

for all stochastic F, F̃ ≥ S.

The difference in logarithms on the left-hand sides of (2) and (3) measures relative error. Usually, when x̃ ∈ (0, ∞) is computed as an approximation to x ∈ (0, ∞), the error of x̃ relative to x is defined to be either

$$ \frac{|\tilde x - x|}{x} \quad\text{or}\quad \max\left\{ \frac{\tilde x}{x}, \frac{x}{\tilde x} \right\}. \tag{4} $$

Instead, we define the relative error to be |log x̃ – log x|. Our definition is closely related to the other two: it is the logarithm of the second definition in (4), and by Taylor expansion,

$$ |\log \tilde x - \log x| = \frac{|\tilde x - x|}{x} + O\!\left( \left( \frac{\tilde x - x}{x} \right)^{2} \right), $$

so it is equivalent to the first in the limit of small error. We chose our definition because it allows for simple arguments based on logarithmic derivatives of π(F).
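The relationship between the three notions of relative error can be checked numerically. The following is a small sketch; the function names are illustrative and do not come from the text.

```python
import math

def rel_err_classical(x_tilde, x):
    """First definition in (4): |x_tilde - x| / x."""
    return abs(x_tilde - x) / x

def rel_err_ratio(x_tilde, x):
    """Second definition in (4): the larger of the two ratios."""
    return max(x_tilde / x, x / x_tilde)

def rel_err_log(x_tilde, x):
    """Logarithmic relative error |log x_tilde - log x| used in this article."""
    return abs(math.log(x_tilde) - math.log(x))

# For small errors the logarithmic and classical definitions nearly agree;
# for large errors they differ, but the log error is always log(ratio error).
for x_tilde in (1.001, 1.1, 2.0):
    print(x_tilde, rel_err_classical(x_tilde, 1.0), rel_err_log(x_tilde, 1.0))
```

The logarithmic error is symmetric in x̃ and x, which the classical definition is not.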

We call the coefficient Qij(S)⁻¹ in (3) the sensitivity of the ijth entry. Qij(S) has a simple probabilistic interpretation in terms of hitting times, which can sometimes be used to draw intuitive conclusions about the sensitivities. In Theorem 4, we show that our coefficients Qij(S)⁻¹ are within a factor of two of the smallest possible for which a bound of form (3) holds. Thus, our bound is sharp. (We note that our definition of sharp differs slightly from other standard definitions; see Remark 5.) In Theorem 5, we give an algorithm by which the sensitivities may be computed in O(L³) time, where L is the number of states in the chain. Therefore, computing the error bound has the same order of complexity as computing the invariant measure or computing most other perturbation bounds in the literature; see Remark 8.

Since our result takes an unusual form, we now give three examples to illustrate its use. We discuss the examples only briefly here; details follow in Sections 4 and 6. First, suppose that F̃ has been computed as an approximation to an unknown stochastic matrix F and that we have a bound on the error between F̃ and F, for example |F̃ij – Fij| ≤ αij. In this case, we define Sij := max{F̃ij – αij, 0}, and, provided S is irreducible, we have the estimate

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i \neq j} Q_{ij}(S)^{-1}\, \alpha_{ij} $$

for all F such that |F̃ij – Fij| ≤ αij. See Remark 7 for a more detailed explanation.

Now suppose instead that F ≥ αP where 0 < α < 1 and P is the transition matrix of an especially simple Markov chain, for example a symmetric random walk. Then we choose S := αP, and we compute or approximate Qij(αP) by easy to understand probabilistic arguments. This method can be used to draw intuitive but rigorous conclusions about the sensitivity of a chain to various types of perturbations. See Section 6.5 for details.

Finally, suppose that the transition matrix F has a large number of very small positive entries and that we desire a sparse approximation F̃ to F with approximately the same invariant distribution. In this case, we take S to be F with all its small positive entries set to zero. If the sensitivity Qij(S)⁻¹ is very large, it is likely that the value of Fij is important and cannot be set to zero. If Qij(S)⁻¹ is small, then setting F̃ij = 0 and F̃ii = Fii + Fij will not have much effect on the invariant distribution.

We are aware of two other bounds on relative error in the literature. By [9, Theorem 4.1],

$$ \frac{|\pi_i(\tilde F) - \pi_i(F)|}{\pi_i(F)} \le \kappa_i(F)\, \|\tilde F - F\|_\infty, \tag{5} $$

where κi(F) := ∥(I – Fi)⁻¹∥∞ for Fi the ith principal submatrix of F. This bound fails to identify the sensitive and insensitive entries of the transition matrix since the error in the ith component of the invariant distribution is again controlled only by the single condition number κi(F). Moreover, computing κi(F) for all i is of the same complexity as computing all of our sensitivities Qij(S)⁻¹; see Remark 8. Therefore, in many respects, our result provides more detailed information at the same cost as [9, Theorem 4.1]. On the other hand, we observe that [9, Theorem 4.1] holds for all perturbations: one does not have to restrict the admissible perturbations by requiring F̃ ≥ S as we do for our result. However, this is not always an advantage, since we anticipate that in many applications bounds on the error in F are available and, as we will see, the benefit from using this information is significant.

In [18, Theorem 1], another bound on the relative error is given. Here, the relative error in the invariant distribution is bounded by the relative error in the transition matrix. Precisely, if F, F̃ are irreducible stochastic matrices with Fij = 0 if and only if F̃ij = 0, then

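$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i \neq j,\ F_{ij} \neq 0} |\log \tilde F_{ij} - \log F_{ij}|. \tag{6} $$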

This surprising result requires no condition number depending on F, but it does require that F and F̃ have the same sparsity pattern, which greatly restricts the admissible perturbations. Our result may be understood as a generalization of (6) which allows perturbations changing the sparsity pattern.

Our result also bears some similarities with the analysis in [2, Section 4], which is based on the results of [9]. In [2], a state m of a Markov chain is said to be centrally located if Ei[τm] is small for all states i. (Here, τm is the first passage time to state m; see Section 2.) It is shown that if |Ei[τm] – Ej[τm]| is small, then πm(F) is insensitive to Fij in relative error. Therefore, if m is centrally located, πm(F) is not sensitive to any entry of the transition matrix. Our sensitivities can also be expressed in terms of first passage times, and they provide a better measure of the sensitivity of πm(F) to Fij than |Ei[τm] – Ej[τm]|; see Section 6.3.

Our bounds on derivatives of π(F) in Theorem 2 and our estimates (2) and (3) share some features with structured condition numbers [12, Section 5.3]. The structured condition number of an irreducible, stochastic matrix F is defined to be

Structured condition numbers yield approximate bounds valid for small perturbations. These bounds are useful, since for small perturbations, estimates of type (1) are often far too pessimistic. We remark that our results (2) and (3) give the user control over the size of the perturbation through the choice of S. (If S is nearly stochastic, then only small perturbations are allowed.) Therefore, like structured condition numbers, our results are good for small perturbations. In addition, our results are true upper bounds, so they are more robust than approximations derived from structured condition numbers.

Our interest in perturbation bounds for Markov chains arose from a problem in computational statistical physics; we present a drastically simplified version below in Section 6. For this problem, the invariant distribution is extremely sensitive to some entries of the transition matrix, but insensitive to others. We use the problem to illustrate the differences between our result, [18, Theorem 1], [9, Theorem 4.1], and the eight bounds on absolute error surveyed in [3]. Each of the eight bounds has form (1), and we demonstrate that the condition number κ(F) in each bound blows up exponentially with the inverse temperature parameter in our problem. By contrast, many of the sensitivities from our result are bounded as the inverse temperature increases. Thus, our result gives a great deal more information about which perturbations can lead to large changes in the invariant distribution.

2. Notation

We fix L ∈ ℕ, and we let X be a discrete time Markov chain with state space Ω = {1, 2, . . . , L} and irreducible, row-stochastic transition matrix F ∈ ℝ^{L×L}. Since F is irreducible, X has a unique invariant distribution π ∈ ℝ^L satisfying

$$ \pi^{T} F = \pi^{T}, \qquad \pi^{T} e = 1. $$

We let ei denote the ith standard basis vector in ℝ^L, e denote the vector of ones, and I denote the identity matrix. We treat all vectors, including π, as column vectors (that is, as L × 1 matrices). For S ∈ ℝ^{L×L} and j ∈ Ω, we let Sj : ej⊥ → ej⊥ be the operator defined by

$$ S_j x := (I - e_j e_j^{T})\, S x \quad \text{for } x \in e_j^{\perp}. $$

Instead of defining Sj as above, we could define Sj to be S with the jth row and column set to zero. We could also define Sj to be the jth principal submatrix. We chose our definition to emphasize that we treat Sj as an operator on ej⊥. If S and T are matrices of the same dimensions, we say S ≥ T if and only if Sij ≥ Tij for all indices i, j. For any v ∈ ℝ^L with v > 0, we define log v ∈ ℝ^L by (log v)i = log(vi).

For k ∈ {1, 2, . . . , L}, we define 1k to be the indicator function of the set {k}, and

$$ \tau_k := \min\{ t > 0 : X_t = k \} $$

to be the first return time to state k. We also define

$$ P_k[A] := P[A \mid X_0 = k] \quad\text{and}\quad E_k[Y] := E[Y \mid X_0 = k] $$

to be the probability of the event A conditioned on X0 = k and the expectation of the random variable Y conditioned on X0 = k, respectively. Finally, for Y a random variable and B an event, we let

$$ E[Y, B] := E[Y \chi_B], $$

where χB is the indicator function of the event B.

3. Partial derivatives of the invariant distribution

Given an irreducible, stochastic matrix F ∈ ℝ^{L×L}, let π(F) ∈ ℝ^L be the invariant distribution of F; that is, let π(F) be the unique solution of

$$ \pi(F)^{T} F = \pi(F)^{T}, \qquad \pi(F)^{T} e = 1. $$

We regard π as a function defined on the set of irreducible stochastic matrices, and in Lemma 1, we show that π is differentiable in a certain sense. We give a proof of the lemma in Appendix A.
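As a concrete illustration of π as a function of F, the sketch below solves the defining linear system for a small dense matrix. It assumes plain Gauss-Jordan elimination is adequate at this size; the helper names `solve` and `invariant_distribution` and the 0-based state indices are illustrative, not notation from the text.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [[float(x) for x in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[r][n] / M[r][r] for r in range(n)]

def invariant_distribution(F):
    """Return pi with pi^T F = pi^T and sum(pi) = 1 for an irreducible stochastic F."""
    n = len(F)
    # Rows k < n-1 encode sum_i pi_i (F[i][k] - delta_ik) = 0;
    # the last row replaces the redundant equation by the normalization.
    A = [[F[i][k] - (1.0 if i == k else 0.0) for i in range(n)] for k in range(n - 1)]
    A.append([1.0] * n)
    b = [0.0] * (n - 1) + [1.0]
    return solve(A, b)

# Two-state chain with F01 = a and F10 = b, whose invariant distribution is (b, a) / (a + b).
a, b = 0.5, 0.25
pi = invariant_distribution([[1 - a, a], [b, 1 - b]])
print(pi)
```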

Lemma 1

The function π admits a continuously differentiable extension to an open neighborhood of the set of irreducible stochastic matrices in . The extension may be chosen so that for all and so that if Ge = e, then

Remark 1

The set of stochastic matrices is not a vector space; it is a compact, convex polytope lying in the affine space {G ∈ ℝ^{L×L} : Ge = e}. As a consequence, we need the extension guaranteed by Lemma 1 to define the derivative of π on the boundary of the polytope, which is the set of all stochastic matrices with at least one zero entry. We introduce the extension only to resolve this unpleasant technicality, not to define π(F) for matrices which are not stochastic. In fact, all our results are independent of the particular choice of extension, as long as it meets the conditions in the second sentence of the lemma.

Our perturbation bounds are based on partial derivatives of π with respect to entries of F. As usual, the partial derivatives are defined in terms of a coordinate system, and we choose the off-diagonal entries of F as coordinates: any stochastic F is determined by its off-diagonal entries through the formula

$$ F_{ii} = 1 - \sum_{j \neq i} F_{ij}. $$

Accordingly, for i, j ∈ Ω with i ≠ j, we define

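$$ \frac{\partial \pi}{\partial F_{ij}}(F) := \frac{d}{d\varepsilon}\bigg|_{\varepsilon = 0} \pi\big( F + \varepsilon\, e_i (e_j - e_i)^{T} \big). \tag{7} $$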

These partial derivatives must be understood as derivatives of the extension guaranteed by Lemma 1. Otherwise, if Fij or Fii were zero, the right hand side of (7) would be undefined.

Remark 2

We chose to define partial derivatives by (7), since that definition leads to the global bounds on the invariant distribution presented in Section 4. Other choices are reasonable: for example, one might consider derivatives of the form

where k ≠ i and j ≠ i. However, to the best of our knowledge, only definition (7) leads easily to global bounds.

In Theorem 1, we derive a convenient formula for . Comparable results relating derivatives and perturbations of π to the matrix of mean first passage times were given in [2,8]. A formula for the derivative of the invariant distribution in terms of the group inverse of I – F was given in [15]; a general formula for the derivative of the Perron vector of a nonnegative matrix was given in [4].

Theorem 1

Let F be an irreducible stochastic matrix, and let X be a Markov chain with transition matrix F. Define π(F) and as above. We have

for all i, j ∈ Ω with i ≠ j.

Proof

Define and as in Lemma 1, let for all , and let . Define

Since Gεe = e, Lemma 1 implies

| (8) |

for all ε sufficiently close to zero.

We derive an equation for from (8); differentiating (8) with respect to ε gives

| (9) |

Recalling the definition of Fi from Section 2, (9) implies

Moreover, by [1, Chapter 6, Theorem 4.16],

| (10) |

Therefore, we have

| (11) |

We now interpret (11) in terms of the Markov chain Xt with transition matrix F. We observe that for any m ∈ Ω \ {i},

where τi := min{t > 0 : Xt = i} is the first passage time to state i. Therefore, for m, j ∈ Ω \ {i}, (11) yields

| (12) |

In fact, this formula also holds for m = i, since we have

for all j ∈ Ω \ {i}.

Finally, we convert our formula for to a formula for . We have

and so by (12),

| (13) |

Now by [17, Theorem 1.7.5], we have

| (14) |

Therefore, (13) implies

Our goal is to bound the relative errors of the entries of the invariant measure π(F), where for x̃, x ∈ (0, ∞), we define the relative error between x̃ and x to be

$$ |\log \tilde x - \log x|. \tag{15} $$

Our definition of relative error is unusual, but it is closely related to the common definitions, as we explain in the introduction. In Theorem 2, we derive sharp bounds on the logarithmic partial derivatives of the invariant distribution. We want bounds on logarithmic derivatives, since we will ultimately prove bounds on the relative error in π, with relative error defined by (15).

The following lemma will be used in the proof of Theorem 2.

Lemma 2

We have

and

Proof

For n ≥ 0, define Y_n := X_{τ_j+n}. For i ∈ Ω, let τ_i^X and τ_i^Y denote the first return times to i for X and Y, respectively. By the strong Markov property, (a) Y is a Markov process with the same transition matrix as X, (b) the distribution of Y0 is the point mass at j, and (c) conditional on Y0, Yn is independent of X0, X1, . . . , Xτj. Therefore,

(The second equality above follows from the definition of Y; the third equality follows from the strong Markov property, since the event is determined by X0, X1, . . . , Xτj.) This proves the first formula in the statement of the lemma; the second formula follows on summing the first over all m.

Using Lemma 2, we now prove our bounds on the logarithmic derivatives.

Theorem 2

We have

and

Proof

Using Theorem 1, Lemma 2, and (14), we have

| (16) |

| (17) |

We observe that the term in formula (16) is nonnegative, hence that term attains a minimum value of zero when m = i. Therefore, we have

| (18) |

with the minimum attained when m = i, since the other term in formula (16) does not depend on m. By a similar argument using (17),

| (19) |

and the maximum is attained when m = j.

Subtracting (18) from (19) gives

hence

Finally, by Lemma 2 and (14),

so

Corollary 1 gives a simplified version of the bound in Theorem 2. This estimate can be used to derive [18, Theorem 1], which we have stated in equation (6) above. We omit the derivation of (6).

Corollary 1

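Whenever Fij ≠ 0,

$$ \left| \frac{\partial \log \pi_m}{\partial F_{ij}}(F) \right| \le \frac{1}{F_{ij}} \quad \text{for all } m \in \Omega. $$

Proof

We have

$$ P_i[\tau_j < \tau_i] \ge P_i[X_1 = j] = F_{ij}, $$

and so the result follows by Theorem 2.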
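The derivation of (6) from Corollary 1 can be sketched as follows. The sketch assumes that Corollary 1 bounds each logarithmic partial derivative by 1/Fij and that (6) is the entrywise sum of log differences over the common sparsity pattern. The path G(t) is notation introduced here for illustration.

```latex
% Interpolate linearly between F and \tilde F. Every G(t) is stochastic and has the
% same sparsity pattern as F, hence is irreducible.
G(t) := (1-t)F + t\tilde F, \qquad t \in [0,1].

% Chain rule in the off-diagonal coordinates, then Corollary 1 applied at G(t):
\left| \frac{d}{dt} \log \pi_m(G(t)) \right|
  = \left| \sum_{i \neq j} \frac{\partial \log \pi_m}{\partial F_{ij}}(G(t))\,
      (\tilde F_{ij} - F_{ij}) \right|
  \le \sum_{i \neq j,\ F_{ij} \neq 0} \frac{|\tilde F_{ij} - F_{ij}|}{(1-t)F_{ij} + t\tilde F_{ij}}.

% Integrating over t \in [0,1] term by term:
|\log \pi_m(\tilde F) - \log \pi_m(F)|
  \le \sum_{i \neq j,\ F_{ij} \neq 0} \int_0^1
      \frac{|\tilde F_{ij} - F_{ij}|}{(1-t)F_{ij} + t\tilde F_{ij}}\, dt
  = \sum_{i \neq j,\ F_{ij} \neq 0} |\log \tilde F_{ij} - \log F_{ij}|.
```

The same integration-along-a-segment argument is used for the global bounds in Section 4.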

4. Global perturbation bounds

In this section, we use our bounds on the derivatives of the invariant distribution to prove global perturbation estimates. Our estimates assume that both the exact transition matrix F and the perturbed matrix F̃ are bounded below by some irreducible substochastic matrix S. As a consequence, coefficients Qij(S) depending on S arise.

We define Qij(S) in terms of a Markov chain with transition matrix depending on S, and our perturbation results are based on comparisons between this chain and other chains with transition matrices G ≥ S. Therefore, to avoid confusion, we let

$$ P_i[A](G) := P\big[ X^G \in A \mid X^G_0 = i \big] $$

denote the probability that X^G ∈ A conditioned on X^G_0 = i, for X^G a chain with transition matrix G. To give a specific example, we intend Pi[τj < τi](G) to mean the probability that X^G hits j before returning to i, conditional on X^G_0 = i.

We now define Qij(S).

Definition 1

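For S an irreducible and substochastic or stochastic matrix, let Xω be the Markov chain with state space Ω ∪ {ω} and transition matrix

$$ S^{\omega} := \begin{pmatrix} S & e - Se \\ 0 & 1 \end{pmatrix}. $$

We think of Xω as a chain with transition probability S, but augmented by an absorbing state ω to adjust for the fact that S is substochastic. For all i, j ∈ Ω with i ≠ j, we define

$$ Q_{ij}(S) := P_i\big[ \tau_j < \min\{\tau_i, \tau_\omega\} \big](S^{\omega}). $$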

Remark 3

We observe that for F stochastic,

$$ Q_{ij}(F) = P_i[\tau_j < \tau_i](F), $$

since the absorbing state ω does not communicate with the other states Ω when F is stochastic.
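For small examples, Qij(S) can be computed directly from its probabilistic meaning by first-step analysis: it is the probability that the augmented chain started at i hits j before returning to i or being absorbed in ω. The sketch below is a naive illustration of that meaning, not the O(L³) algorithm of Section 5. The names `solve` and `Q` and the 0-based indices are assumptions made for the example.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [[float(x) for x in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[r][n] / M[r][r] for r in range(n)]

def Q(S, i, j):
    """Probability of hitting j before returning to i or being absorbed, starting at i."""
    others = [k for k in range(len(S)) if k not in (i, j)]
    # h[k] = probability of reaching j before i or omega, starting from k in `others`.
    # First-step analysis: h[k] = S[k][j] + sum over l in others of S[k][l] * h[l].
    # Mass missing from a row of S is the jump to omega and contributes nothing.
    A = [[(1.0 if k == l else 0.0) - S[k][l] for l in others] for k in others]
    b = [S[k][j] for k in others]
    h = solve(A, b)
    return S[i][j] + sum(S[i][k] * hk for k, hk in zip(others, h))

F = [[0.0, 0.5, 0.5], [0.5, 0.0, 0.5], [0.5, 0.5, 0.0]]  # random walk on three states
S = [[0.5 * x for x in row] for row in F]                  # S = alpha F with alpha = 1/2
q_F, q_S = Q(F, 0, 1), Q(S, 0, 1)
print(q_F, q_S)
```

In this example the value for S = αF is smaller than the value for F, because part of each step's probability mass is lost to the absorbing state.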

We now show that Qij(S) is monotone as a function of S. This is the crucial step in deriving global perturbation bounds from the bounds on derivatives in Theorem 2.

Lemma 3

Let S be an irreducible substochastic matrix. If F is a stochastic or substochastic matrix with F ≥ S, then

$$ Q_{ij}(F) \ge Q_{ij}(S) > 0. $$

In addition, for any substochastic or stochastic matrix S,

$$ Q_{ij}(S) \ge S_{ij}. $$

Proof

Let be the set of all walks of length M in Ω which start at i, end at j, and visit i and j only at the endpoints. To be more precise, define

We observe that since F ≥ S,

Since S is irreducible, we also have that

Finally,

which concludes the proof.

Combining Theorem 2 with Lemma 3 yields our global perturbation estimate.

Theorem 3

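Let F, F̃ be stochastic matrices, let S be substochastic and irreducible, and assume that F, F̃ ≥ S. We have

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i,j \in \Omega,\ i \neq j} \left| \log\big(\tilde F_{ij} - S_{ij} + Q_{ij}(S)\big) - \log\big(F_{ij} - S_{ij} + Q_{ij}(S)\big) \right|. $$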

Remark 4

We allow S to be stochastic in Definition 1 and also in the hypotheses of Theorem 3. However, we observe that if S is stochastic, then the conclusion of the theorem is trivial since S is the unique stochastic F with F ≥ S.

Proof

Let G ∈ {tF̃+(1–t)F : t ∈ [0, 1]}. Since Qij(S) = Pi[τj < min{τi, τω}](Sω), we have

Therefore,

The first equality holds since by Lemma 1, the invariant distribution π is Fréchet differentiable on an open neighborhood of the set of irreducible, stochastic matrices. Directional derivatives can then be computed using partial derivatives as above. The denominator in the third line is positive since F, F̃ ≥ S implies

$$ G_{ij} - S_{ij} \ge 0, $$

and by Lemma 3, Qij(S) > 0.

Theorem 3 takes a somewhat complicated form, so in Corollary 2, we present a simplified version. The proof of Corollary 2 shows that the bound in Theorem 3 is always smaller than the bound in Corollary 2.

Corollary 2

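Let F, F̃ be stochastic matrices, let S be substochastic and irreducible, and assume that F, F̃ ≥ S. We have

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i,j \in \Omega,\ i \neq j} Q_{ij}(S)^{-1}\, |\tilde F_{ij} - F_{ij}|. $$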

Proof

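We have

$$ \left| \log\big(\tilde F_{ij} - S_{ij} + Q_{ij}(S)\big) - \log\big(F_{ij} - S_{ij} + Q_{ij}(S)\big) \right| \le Q_{ij}(S)^{-1}\, |\tilde F_{ij} - F_{ij}|, $$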

and so the result follows directly from Theorem 3.

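We show in Theorem 4 that both Theorem 3 and Corollary 2 are sharp. That is, we show that ρij(S) = Qij(S) is within a factor of two of the largest value of ρij(S) for which a bound of the form

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i \neq j} \left| \log\big(\tilde F_{ij} - S_{ij} + \rho_{ij}(S)\big) - \log\big(F_{ij} - S_{ij} + \rho_{ij}(S)\big) \right| $$

holds, and we show that ηij(S) = Qij(S)⁻¹ is within a factor of two of the smallest value of ηij(S) for which a bound of the form

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i \neq j} \eta_{ij}(S)\, |\tilde F_{ij} - F_{ij}| $$

holds.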

Remark 5

We note that some authors call a bound sharp if it is possible for equality to hold. For example, a bound of form (1) may be called sharp if for every stochastic F, there exists a stochastic F̃ so that equality holds. We prefer to call a bound sharp if it is the best bound of a given form, possibly up to a small constant factor. Thus, for bounds of the type which we consider, we require that for every S and every i ≠ j, Qij(S)⁻¹ is as small as possible.

Theorem 4

Let S be an irreducible substochastic matrix, and let i, j ∈ Ω with i ≠ j. For every ε > 0, there exist stochastic matrices F̃, F with F̃, F ≥ S so that

and for all (k, l) except (i, j) and (i, i).

Proof

Define

F is stochastic, F ≥ S, and Fj⊥,j = Sj⊥,j. Therefore,

We now distinguish two cases: and . In the first case, if F ≥ S and F is stochastic, then Fim = Sim for all m ∈ Ω. Therefore, for all stochastic F, F̃ ≥ S, and so the conclusion of the theorem holds. In the second case, we observe that implies Fii > Sii ≥ 0. Therefore, for any sufficiently small η > 0,

is stochastic, and Fη ≥ S. By Theorem 2, we have

It follows that for every ε > 0 there exists an η > 0 with

(The second inequality follows by an argument similar to the proof of Corollary 2.)

Theorem 3 and Corollary 2 take unusual forms, and at first glance the condition F, F̃ ≥ S may seem inconvenient. In Remarks 6 and 7, we explain how to use these estimates in the common case when only one matrix and a bound on the error are known.

Remark 6

For a given application, the best upper bounds are obtained by choosing the largest possible S. This is a consequence of Lemma 3. We apply this principle in Remark 7.

Remark 7

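Suppose that F̃ has been computed as an approximation to an unknown stochastic matrix F and that we have some bound on the error between F̃ and F. For example, suppose that for some matrix α ≥ 0,

$$ |F_{ij} - \tilde F_{ij}| \le \alpha_{ij} \quad \text{for all } i, j \in \Omega. $$

In this case, we define

$$ S_{ij} := \max\{ \tilde F_{ij} - \alpha_{ij},\, 0 \}. $$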

We observe that this choice of S is the largest possible so that F ≥ S for all F so that |Fij – F̃ij| ≤ αij. Therefore, by Lemma 3, the coefficients Qij(S) are as large as possible, giving the best possible upper bounds.

If S is irreducible, we have

$$ \|\log \pi(\tilde F) - \log \pi(F)\|_\infty \le \sum_{i \neq j} Q_{ij}(S)^{-1}\, \alpha_{ij}. $$

In general, if S is reducible, then no statement can be made about the error of the invariant distribution. In fact, if S is reducible, then there is a reducible, stochastic F with F ≥ S, and the invariant distribution of F is not even unique.
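The recipe of Remark 7 can be tried on a small example. The sketch below assumes the bound takes the form of a sum of αij / Qij(S) over off-diagonal entries, computes Qij(S) naively by first-step analysis rather than by the algorithm of Section 5, and uses made-up matrices. All helper names and the numerical values are illustrative assumptions.

```python
import math

def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [[float(x) for x in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[r][n] / M[r][r] for r in range(n)]

def Q(S, i, j):
    """Probability of hitting j before returning to i or being absorbed, starting at i."""
    others = [k for k in range(len(S)) if k not in (i, j)]
    A = [[(1.0 if k == l else 0.0) - S[k][l] for l in others] for k in others]
    h = solve(A, [S[k][j] for k in others])
    return S[i][j] + sum(S[i][k] * hk for k, hk in zip(others, h))

def invariant_distribution(F):
    """Return pi with pi^T F = pi^T and sum(pi) = 1."""
    n = len(F)
    A = [[F[i][k] - (1.0 if i == k else 0.0) for i in range(n)] for k in range(n - 1)]
    A.append([1.0] * n)
    return solve(A, [0.0] * (n - 1) + [1.0])

F_tilde = [[0.5, 0.3, 0.2], [0.2, 0.6, 0.2], [0.1, 0.4, 0.5]]  # computed approximation
alpha = 0.05                                                    # hypothetical uniform error bound
n = len(F_tilde)
S = [[max(x - alpha, 0.0) for x in row] for row in F_tilde]    # largest admissible lower bound
bound = sum(alpha / Q(S, i, j) for i in range(n) for j in range(n) if i != j)

# One admissible "true" matrix with |F - F_tilde| <= alpha entrywise.
F = [[0.54, 0.26, 0.2], [0.2, 0.6, 0.2], [0.1, 0.4, 0.5]]
pi, pi_tilde = invariant_distribution(F), invariant_distribution(F_tilde)
err = max(abs(math.log(a) - math.log(b)) for a, b in zip(pi_tilde, pi))
print(err, bound)
```

Because the bound must cover every F within the error box, it is expected to exceed the error observed for any single admissible F.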

5. An efficient algorithm for computing sensitivities

In Theorem 5 below, we show that the coefficients Qij(S) can be computed by inverting an L × L matrix and performing additional operations of cost O(L²). Therefore, the cost of estimating the error in π using either Theorem 3 or Corollary 2 is comparable to the cost of computing π. Moreover, the cost of computing our bounds is the same as the cost of computing most other bounds in the literature, for example, those based on the group inverse; see Remark 8.

Our first step is to characterize Qij(S) as the solution of a linear equation. We advise the reader that we will make extensive use of the notation introduced in Section 2. In addition, we define

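$$ S_{j^{\perp}, j} := (I - e_j e_j^{T})\, S e_j \quad\text{and}\quad S_{j, j^{\perp}} := e_j^{T} S\, (I - e_j e_j^{T}) \tag{20} $$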

to be the jth column and row of S with the jth entry set to zero, respectively.

Lemma 4

Let S be irreducible and substochastic or stochastic. For i, j ∈ Ω with i ≠ j, let be the vector defined by

for all k ∈ Ω \ {j}. Define Sj as in Section 2, and Sj⊥,j by (20). The operator is invertible on , and qij(S) is the unique solution of the equation

| (21) |

Proof

Let i, j ∈ Ω with i ≠ j. We have

and for k ≠ j,

Therefore,

| (22) |

We observe that equation (22) above can be expressed as

| (23) |

We now claim that if S is irreducible, then is invertible, which shows that qij is the unique solution of (23). By (10), . We now observe that

so converges, hence is invertible.

The proof of Theorem 5 uses the following lemma.

Lemma 5

For S substochastic and irreducible,

Proof

We recall that

Multiplying both sides by (I – Sj)–1 then yields

Now Sj ≥ 0 is a substochastic matrix, so by (10), . Therefore, , and by the Sherman-Morrison formula,

Thus,

| (24) |

Theorem 5

Let S ∈ ℝ^{L×L} be irreducible and substochastic. The set of all coefficients Qij(S) can be computed by inverting an L × L matrix and then performing additional operations of cost O(L²).

Proof

We begin with some definitions and notation. Define

(The right hand side above denotes the block decomposition of A with respect to the decomposition . Thus, for example, I – Sj is to be interpreted as an operator on ; cf. the definition of Sj in Section 2.) We observe that A(j) is invertible, since by (10), I – Sj is invertible, so

| (25) |

In the first step of the algorithm, we compute A(1)⁻¹, which costs O(L³) operations. Second, we compute SA(1)⁻¹. Given A(1)⁻¹, this can be done in O(L²) operations using the formula

(The formula is easily proved by direct calculation using (25).) Third, we compute Qi1(S) for all i ≠ 1. By Lemma 5 and (25), we have

| (26) |

Therefore, once A(1)⁻¹ has been computed, it costs O(L) operations to compute Qi1(S) for all i ≠ 1.

We compute the remaining coefficients Qij(S) for j ≠ 1 by a formula analogous to (26), but with A(j)⁻¹ in place of A(1)⁻¹. To do so efficiently, we use the Sherman-Morrison-Woodbury identity to derive a formula expressing A(j)⁻¹ in terms of A(1)⁻¹:

| (27) |

where

In the fourth step of the algorithm, we loop over all j ≠ 1. For each j, we first compute C(j)⁻¹, which requires a total of O(L) operations. We then compute A(j)⁻¹ej at a cost of O(L) operations using a formula derived from (27):

Next, we must compute for all i ≠ j. By (27),

so the cost of computing for each i ≠ j is O(L). Finally, we compute Qij(S) for all i ≠ j. By Lemma 5 and (25), we have

| (28) |

so this last step costs O(L).

The total cost of the algorithm described above is a single L × L matrix inversion plus O(L²) additional operations.

Remark 8

Most perturbation bounds in the literature have the same computational complexity as our bound. For example, some bounds are based on the group inverse of I – F [13,16]. The cost of computing the group inverse is O(L³) [6], so our bound has the same complexity as [13,16]. Computing the bound on relative error in [9] requires finding ∥(I – Fj)⁻¹∥∞ for all j; see (5). This could be done in O(L³) operations by methods similar to Theorem 5, so we conjecture that our bound and the bound of [9] have the same complexity. On the other hand, the bound on relative error in [18] (see (6)) requires almost no calculation at all.

Remark 9

We give the algorithm above to show that the cost of computing our bounds is comparable to the cost of other bounds, in principle. We do not claim that the algorithm is always reliable, since we have not performed a complete stability analysis. Nonetheless, in many cases, the computation of Qij(S) is stable even when the computation of π(F) is unstable. For example, suppose that S = αF for F a stochastic matrix and α ∈ (0, 1). (This would be a good choice of S if all entries of F were known with relative error 1 – α; cf. Remark 7.) Let ∥M∥∞ denote the operator norm of the matrix M with respect to the ℓ∞-norm,

$$ \|M\|_\infty := \max_{x \neq 0} \frac{\|Mx\|_\infty}{\|x\|_\infty}. $$

It is a standard result that

$$ \|M\|_\infty = \max_i \sum_j |M_{ij}|. $$

Therefore, since F is stochastic and 0 ≤ Sj ≤ S = αF, ∥Sj∥∞ ≤ α, and we have

$$ \|(I - S_j)^{-1}\|_\infty \le \sum_{k=0}^{\infty} \|S_j\|_\infty^{k} \le \frac{1}{1 - \alpha}. $$

Moreover,

$$ \|I - S_j\|_\infty \le 1 + \|S_j\|_\infty \le 2, $$

and so the condition number for the inversion of I – Sj satisfies

$$ \kappa_\infty(I - S_j) := \|I - S_j\|_\infty\, \|(I - S_j)^{-1}\|_\infty \le \frac{2}{1 - \alpha}. $$

We conclude that if α is not too close to one, then the algorithm is stable for any F. For example, if the entries of F are known with 2% error, then we choose S = 0.98F, and we have κ∞(I – Sj) ≤ 100.
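The conditioning claim can be checked on a toy example. The sketch below assumes the bound 2/(1 – α) on the ∞-norm condition number of I – Sj and represents Sj by the principal submatrix obtained by deleting row and column j, one of the equivalent descriptions mentioned in Section 2. The three-state matrix and the helper names are illustrative.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [[float(x) for x in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [M[r][n] / M[r][r] for r in range(n)]

def inf_norm(M):
    """Operator infinity-norm: the maximum absolute row sum."""
    return max(sum(abs(x) for x in row) for row in M)

def inverse(M):
    """Invert M column by column using the solver above."""
    n = len(M)
    cols = [solve(M, [1.0 if r == c else 0.0 for r in range(n)]) for c in range(n)]
    return [[cols[c][r] for c in range(n)] for r in range(n)]

alpha = 0.98
F = [[0.0, 0.5, 0.5], [0.5, 0.0, 0.5], [0.5, 0.5, 0.0]]
j = 0
keep = [k for k in range(len(F)) if k != j]
# I - S_j with S = alpha F, written on the states other than j.
A = [[(1.0 if k == l else 0.0) - alpha * F[k][l] for l in keep] for k in keep]
kappa = inf_norm(A) * inf_norm(inverse(A))
print(kappa, 2.0 / (1.0 - alpha))
```

For this small chain the actual condition number is far below the worst-case bound, which holds uniformly over all stochastic F.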

6. The hilly landscape example

In this section, we discuss an example in which the invariant distribution is very sensitive to some entries of the transition matrix, but insensitive to others. The example arose from a problem in computational statistical physics. We will use the example to compare our results with previous work, especially [2,9,18] and the bounds on absolute error summarized in [3].

6.1. Transition matrix and physical interpretation

Our hilly landscape example is a simple analogue of the dynamics of a single particle in contact with a heat bath. Define by

Take L ∈ ℕ, and let Ω := {1, 2, . . . , L} with periodic boundary conditions; that is, take Ω = ℤ/Lℤ. Given V, we define a probability distribution on Ω by

The measure π is in detailed balance with the Markov chain X having transition matrix defined by

(In the definition of F, FL,L+1 means FL,1 and F1,0 means F1,L, since we take Ω with periodic boundary conditions.)

We interpret X as the position of a particle which moves through the interval (0, 1] with periodic boundary conditions. If V(i) > V(j), we say that j is downhill from i. When the inequality is reversed we say that j is uphill from i. Under the dynamics prescribed by F, the particle is more likely to move downhill than uphill. In fact, as L tends to infinity, π becomes more and more concentrated near the minima of V. For large L, the particle spends most of the time near minima of V, and transitions of the particle between minima occur rarely.
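A chain of this general type can be sketched in a few lines. The formulas below are assumptions made for illustration and are not taken from the text: a piecewise-linear W-shaped potential with Lipschitz constant 1, the Gibbs weights exp(–L V(i/L)), and a nearest-neighbor Metropolis rule. States are indexed from 0, so state 0 plays the role of state L.

```python
import math

def V(x):
    """Hypothetical W-shaped potential: peaks at 0 and 1/2, valleys at 1/4 and 3/4."""
    x = x % 1.0
    return abs(x - 0.25) if x < 0.5 else abs(x - 0.75)

def hilly_chain(L):
    """Return (pi, F) for a nearest-neighbor Metropolis chain on a ring of L >= 3 states."""
    w = [math.exp(-L * V(i / L)) for i in range(L)]
    Z = sum(w)
    pi = [x / Z for x in w]
    F = [[0.0] * L for _ in range(L)]
    for i in range(L):
        for k in ((i + 1) % L, (i - 1) % L):
            # Propose each neighbor with probability 1/2; accept with the Metropolis ratio.
            F[i][k] = 0.5 * min(1.0, pi[k] / pi[i])
        F[i][i] = 1.0 - sum(F[i])  # rejected proposals keep the particle in place
    return pi, F

pi, F = hilly_chain(40)
# Detailed balance pi_i F_ik = pi_k F_ki holds for every pair of states.
max_violation = max(abs(pi[i] * F[i][k] - pi[k] * F[k][i])
                    for i in range(40) for k in range(40))
print(max_violation, pi[10] / pi[20])
```

With L = 40, the valleys sit at indices 10 and 30 and the peaks at 0 and 20, so the ratio printed last shows how strongly the weights favor a valley over a peak.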

6.2. Sensitivities for the hilly landscape transition matrix

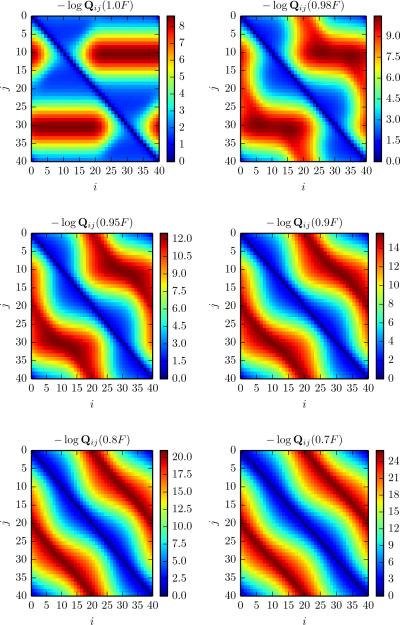

In Figure 1, we plot –logQij(αF) versus i and j for F the hilly landscape transition matrix with L = 40 and α ∈ {0.7, 0.8, 0.9, 0.95, 0.98, 1}. The purpose of this section is to give an intuitive explanation of the main features observed in the figure. Recall that the potential V is shaped roughly like a “W” with peaks at 0, ½, and 1 and valleys at ¼ and ¾. When L = 40, the peaks correspond to the indices 0, 20, and 40 in Ω, and the valleys correspond to 10 and 30. (To be precise, 0 and 40 are identical since we take periodic boundary conditions.)

Figure 1.

Sensitivities for the hilly landscape transition matrix.

Now consider the case α = 1. We observe that –log Q20,j(F) is small for all j, so Q20,j(F)⁻¹ is small, and π(F) is insensitive to perturbations which change the transition probabilities from the peak to other points. This is as expected, since the probability P20[τj < τ20](F) = Q20,j(F) of hitting a point j in the valley before returning to the peak should be fairly large. On the other hand, –log Q30,10(F) is enormous, so π(F) is sensitive to the transition probability from the valley to the peak. This is also as expected, since the probability P30[τ10 < τ30](F) of climbing from the valley to the peak without falling back into the valley should be small. To explain the small values of –log Qij(F) observed near the diagonal, we observe that for all i ∈ Ω and all L,

where Lip(V) = 1 is the Lipschitz constant of the potential V. Therefore, by Corollary 1,

The same estimate holds for Qi,i–1(F).

The coefficients Qij(αF) for α < 1 share many features with Qij(F). These coefficients would be relevant if F were known with relative error 1 – α; see Remark 7. The main difference between Qij(αF) and Qij(F) is that Qij(αF) is small whenever the minimum number of time steps required to transition between i and j is large. The reader is directed to Section 6.5 for discussion of a related phenomenon. Observe that this effect grows more dominant as α decreases. We also note that Qij(αF)⁻¹ is again small near the diagonal. In fact, by Lemma 3, we have

The same estimate holds for Qi,i–1(αF).

6.3. Mean first passage times and related relative error bounds

Section 4 of [2] also suggests bounds on relative error in terms of certain first passage times. A comparison is therefore in order. We record a simplified version of these results below.

Theorem 6

[2, Corollaries 4.1,4.2] Let F and F̃ be irreducible stochastic matrices. We have

where the expectations are taken for the chain with transition matrix F and δ is the Kronecker delta function. Therefore,

Taking the maximum over all m in the second sentence of Theorem 6 yields a perturbation result having a form similar to Theorem 3, but with

in place of Qij(S)⁻¹. In the next paragraph, we show for the hilly landscape transition matrix that for some values of i and j, βij grows exponentially with L while Qij(S)⁻¹ remains bounded. Thus, the results of [2] dramatically overestimate the error due to some perturbations.

To derive the estimate in the second sentence of Theorem 6 from the exact formula in the first sentence, one discards a factor of πi(F̃). Therefore, roughly speaking, the estimate is poor when πi(F̃) is small. To give a specific example, let F be the hilly landscape transition matrix, assume that L is even, and let i = L/2 and j = L/2 + 1. Observe that we have chosen i at a peak of the potential V, so

(Here, we use the symbols ≲ and ≳ to denote bounds up to multiplicative constants, so in the last line above, we mean that there is some C > 0 so that the left hand side is bounded above by C exp(–L) for all L.) Let . We have

by Theorem 6. Therefore, by Theorem 2,

and so

Now suppose that the substochastic matrix S appearing in our bound is chosen for each L so that Sij for i = L/2 and j = L/2 + 1 is bounded away from zero uniformly as L → ∞. For example, one might choose S to be a multiple of F as in Section 6.2 or a multiple of a simple random walk transition matrix as in Section 6.5. Then by Lemma 3, we have

so Qij(S)–1 is bounded. Thus, βij(F) is a poor estimate of the sensitivity of the ijth entry for this problem.
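As a concrete illustration of the first passage times entering Theorem 6, the following Python sketch computes the expectations E_i[τ_j] for a small chain by solving a linear system, and checks the classical identity π_j E_j[τ_j] = 1. The function names and the 3-state matrix are hypothetical choices made for illustration only; they are not taken from [2] or from the hilly landscape example.

```python
import numpy as np

def stationary(F):
    """Invariant distribution of an irreducible row-stochastic matrix F."""
    n = F.shape[0]
    # Solve pi (I - F) = 0 together with the normalization sum(pi) = 1.
    A = np.vstack([(np.eye(n) - F).T, np.ones((1, n))])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

def mean_first_passage(F, j):
    """Return m with m[i] = E_i[tau_j], where tau_j = min{t >= 1 : X_t = j}."""
    n = F.shape[0]
    keep = [k for k in range(n) if k != j]
    F_minus_j = F[np.ix_(keep, keep)]          # delete row and column j
    # For i != j, the hitting times solve (I - F_minus_j) h = 1.
    h = np.linalg.solve(np.eye(n - 1) - F_minus_j, np.ones(n - 1))
    m = np.empty(n)
    m[keep] = h
    m[j] = 1.0 + F[j, keep] @ h                # mean return time to j
    return m

# Hypothetical 3-state example (rows sum to one).
F = np.array([[0.5, 0.4, 0.1],
              [0.3, 0.6, 0.1],
              [0.2, 0.3, 0.5]])
pi = stationary(F)
for j in range(3):
    m = mean_first_passage(F, j)
    print(j, m, pi[j] * m[j])   # last entry should be close to 1
```

States with small invariant probability have long mean return times, which is the mechanism behind the poor behavior of the estimate at a peak of the potential.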

6.4. The spectral gap and related absolute error bounds

The survey article [3] lists eight condition numbers κi(F) for i = 1, 2, . . . , 8 for which bounds of the form

hold. (The Hölder exponents p and p′ vary with the choice of condition number.) Some of these condition numbers are based on ergodicity coefficients [20,21], some on mean first passage times [2], and some on generalized inverses of the characteristic matrix I – F [5,7,9,16,19]. We prove for the hilly landscape transition matrix F that κi(F) increases exponentially with L for all i. By contrast, we have already seen that many of the coefficients Qij(αF)–1 are bounded as L tends to infinity.

Our proof that the condition numbers increase exponentially is based on an analysis of the spectral gap of F. Let σ(F) denote the spectrum of F. The spectral gap γ is defined to be

$$\gamma := 1 - \max\{|\lambda| : \lambda \in \sigma(F) \setminus \{1\}\}. \tag{29}$$

We use the bottleneck inequality [14, Theorem 7.3] to show that the spectral gap of the hilly landscape transition matrix decreases exponentially with L. For convenience, assume that L is even, and let

The bottleneck ratio [14, Section 7.2] for the partition {E, Ec} is

(As in the last section, we use the symbols ≲ and ≳ to denote bounds up to multiplicative constants.) Therefore, by the bottleneck inequality, the mixing time tmix [14, Section 4.5] satisfies

By [14, Theorem 12.3],

Therefore,

| (30) |

and we see that the spectral gap decreases exponentially in L.
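The exponential decay of the spectral gap can be observed numerically. The sketch below is a minimal illustration under stated assumptions: it uses a hypothetical sawtooth potential with Lip(V) = 1 on a ring of L sites and a lazy Metropolis rule with proposal probability 1/4 in each direction. These stand in for the hilly landscape chain and need not coincide with it. Taking the spectral gap to be 1 − max{|λ| : λ ∈ σ(F) \ {1}}, the code compares it with twice the bottleneck ratio of one half of the ring, which is a standard Cheeger-type upper bound for a reversible chain with nonnegative spectrum.

```python
import numpy as np

def metropolis_ring(L):
    """Lazy Metropolis chain on a ring for a hypothetical sawtooth potential."""
    assert L % 4 == 0, "L must be divisible by 4 for this potential"
    k = np.arange(L)
    # Wells at L/4 and 3L/4, peaks at 0 and L/2; neighbors differ by exactly 1.
    V = np.abs((k % (L // 2)) - L // 4).astype(float)
    F = np.zeros((L, L))
    for i in range(L):
        for j in ((i - 1) % L, (i + 1) % L):   # periodic boundaries
            F[i, j] = 0.25 * min(1.0, np.exp(-(V[j] - V[i])))
        F[i, i] = 1.0 - F[i].sum()
    pi = np.exp(-V)
    pi /= pi.sum()
    return F, pi

def spectrum(F, pi):
    """Sorted real eigenvalues of a chain F that is reversible w.r.t. pi."""
    d = np.sqrt(pi)
    S = (d[:, None] * F) / d[None, :]          # D^{1/2} F D^{-1/2} is symmetric
    return np.sort(np.linalg.eigvalsh(0.5 * (S + S.T)))

def spectral_gap(F, pi):
    lam = spectrum(F, pi)                      # lam[-1] is the eigenvalue 1
    return 1.0 - np.max(np.abs(lam[:-1]))

def bottleneck_ratio(F, pi, E):
    """Flow from E to its complement divided by pi(E)."""
    mask = np.zeros(len(pi), dtype=bool)
    mask[E] = True
    inside, outside = np.flatnonzero(mask), np.flatnonzero(~mask)
    flow = (pi[inside][:, None] * F[np.ix_(inside, outside)]).sum()
    return flow / pi[inside].sum()

for L in (8, 16, 32):
    F, pi = metropolis_ring(L)
    gap = spectral_gap(F, pi)
    phi = bottleneck_ratio(F, pi, np.arange(L // 2))
    # Gershgorin: every eigenvalue is at least 2 * min_i F_ii - 1.
    lower = 2.0 * F.diagonal().min() - 1.0
    print(L, gap, 2.0 * phi, spectrum(F, pi)[0] >= lower - 1e-12)
```

The half-ring E = {0, ..., L/2 − 1} is separated from its complement by the two peaks, so the flow across the cut is proportional to the invariant probability of a peak.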

We now relate the condition numbers to the spectral gap. Using Equation (3.3) and the table at the bottom of page 147 in [3], and also [11, Corollary 2.6], we have

| (31) |

for all i = 1, . . . , 8. Now we claim that for sufficiently large L,

| (32) |

in which case (30) and (31) imply that all condition numbers grow exponentially with L. To see this, we first observe that since F is reversible, its spectrum is real [14, Lemma 12.2]. Moreover,

where Lip(V) = 1 is the Lipschitz constant of V. Therefore, using the Gershgorin circle theorem we have

| (33) |

for all λ ∈ σ(F). Inequality (33) shows that σ(F) is bounded away from –1 uniformly in L, and by (30),

It follows that for sufficiently large L, max{|λ| : λ ∈ σ(F) \ {1}} is attained for λ > 0. Thus, equation (32) holds, and we conclude using (30) and (31) that

for all i = 1, . . . , 8.

6.5. Bounds below by a random walk

Let Y be the random walk on Ω with transition matrix

(As above, since Ω has periodic boundaries, PL,L+1 means PL,1, etc.) In this section, we use Theorem 3 to relate Qij(P) with Qij(F) for F the hilly landscape transition matrix. First, using the lower bounds on entries of F derived in Section 6.2, we have

Therefore, by (35) and Lemma 3,

| (34) |

Now for any β ∈ (0, 1),

Let |i – j| denote the minimum number of time steps required for the chain to reach state j from state i. Adopting the notation used in the proof of Lemma 3, there is some path of length |i – j| for which

| (35) |

Combining (34) and (35) then yields

Acknowledgements

This work was funded by the NIH under grant 5 R01 GM109455-02. We would like to thank Aaron Dinner, Jian Ding, Lek-Heng Lim, and Jonathan Mattingly for many very helpful discussions and suggestions.

Appendix A. Proof of Lemma 1

Proof

Let F be irreducible and stochastic. By [6, Equation (3.1)], det(I – Fi) > 0 for all i ∈ Ω, and

$$\pi_i(F) = \frac{\det(I - F_i)}{\sum_{j \in \Omega} \det(I - F_j)}. \tag{36}$$

The right hand side of (36) yields the desired extension. To show this, we first observe that there exists an open neighborhood of F and a disc with such that implies (a) and (b) G has exactly one eigenvalue in and that eigenvalue is simple. There exists a neighborhood with property (a), since is continuous in G and . The existence of a neighborhood and disc with property (b) follows from standard results in perturbation theory, since F irreducible and stochastic implies that 1 is a simple eigenvalue of F; see [10, Ch. II, Theorem 5.14]. We now let be the union of the sets over all irreducible, stochastic F, and we define by extending the formula on the right hand side of (36). By (a), is continuously differentiable. By property (b), we know that if with Ge = e then e is a simple eigenvalue of G, and so . Following the proof of [6, Theorem 3.1], one may then use the identity

where adj(·) denotes the adjugate matrix, to show that .
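The determinant formula from [6] on which this proof rests can be checked numerically. The sketch below assumes that Fi denotes the principal submatrix of F obtained by deleting row and column i, and that the invariant distribution is proportional to det(I – Fi). The function name and the test matrix are hypothetical.

```python
import numpy as np

def stationary_via_minors(F):
    """Invariant distribution from the principal minors of I - F.

    Assumes F is irreducible and row-stochastic, so that each minor
    det(I - F_i) is positive and the normalized minors give pi.
    """
    n = F.shape[0]
    A = np.eye(n) - F
    minors = np.array([
        np.linalg.det(np.delete(np.delete(A, i, axis=0), i, axis=1))
        for i in range(n)
    ])
    return minors / minors.sum()

# Hypothetical 3-state example (rows sum to one).
F = np.array([[0.5, 0.4, 0.1],
              [0.3, 0.6, 0.1],
              [0.2, 0.3, 0.5]])
pi = stationary_via_minors(F)
print(pi, np.allclose(pi @ F, pi))
```

Because the formula is a ratio of polynomials in the entries of F, it remains well defined for nearby matrices that are not stochastic, provided the denominator does not vanish.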

Contributor Information

ERIK THIEDE, The University of Chicago, Department of Chemistry.

BRIAN VAN KOTEN, The University of Chicago, Department of Statistics.

JONATHAN WEARE, The University of Chicago, Department of Statistics and the James Franck Institute.

References

- 1. Berman A, Plemmons RJ. Nonnegative matrices in the mathematical sciences. Computer Science and Applied Mathematics. Academic Press (Harcourt Brace Jovanovich Publishers), New York, 1979.

- 2. Cho GE, Meyer CD. Markov chain sensitivity measured by mean first passage times. Linear Algebra Appl. 2000. pp. 21–28. Conference Celebrating the 60th Birthday of Robert J. Plemmons (Winston-Salem, NC, 1999).

- 3. Cho GE, Meyer CD. Comparison of perturbation bounds for the stationary distribution of a Markov chain. Linear Algebra Appl. 2001;335:137–150.

- 4. Deutsch E, Neumann M. On the first and second order derivatives of the Perron vector. Linear Algebra and its Applications. 1985;71(0):57–76.

- 5. Funderlic RE, Meyer CD Jr. Sensitivity of the stationary distribution vector for an ergodic Markov chain. Linear Algebra Appl. 1986;76:1–17.

- 6. Golub GH, Meyer CD Jr. Using the QR factorization and group inversion to compute, differentiate, and estimate the sensitivity of stationary probabilities for Markov chains. SIAM J. Algebraic Discrete Methods. 1986;7(2):273–281.

- 7. Haviv M, Van der Heyden L. Perturbation bounds for the stationary probabilities of a finite Markov chain. Adv. in Appl. Probab. 1984;16(4):804–818.

- 8. Hunter JJ. Stationary distributions and mean first passage times of perturbed Markov chains. Linear Algebra and its Applications. 2005;410(0):217–243. Tenth Special Issue (Part 2) on Linear Algebra and Statistics.

- 9. Ipsen ICF, Meyer CD. Uniform stability of Markov chains. SIAM J. Matrix Anal. Appl. 1994;15(4):1061–1074.

- 10. Kato T. Perturbation theory for linear operators. Classics in Mathematics. Springer-Verlag; Berlin: 1995. Reprint of the 1980 edition.

- 11. Kirkland S. On a question concerning condition numbers for Markov chains. SIAM Journal on Matrix Analysis and Applications. 2002;23(4):1109–1119.

- 12. Kirkland S, Neumann M. Group inverses of M-matrices and their applications. Chapman & Hall/CRC Applied Mathematics and Nonlinear Science Series. CRC Press; Boca Raton: 2013.

- 13. Kirkland SJ, Neumann M, Shader BL. Applications of Paz's inequality to perturbation bounds for Markov chains. Linear Algebra Appl. 1998;268:183–196.

- 14. Levin DA, Peres Y, Wilmer EL. Markov chains and mixing times. American Mathematical Society, Providence, RI, 2009. With a chapter by James G. Propp and David B. Wilson.

- 15. Meyer CD Jr. The role of the group generalized inverse in the theory of finite Markov chains. SIAM Review. 1975;17(3):443–464.

- 16. Meyer CD Jr. The condition of a finite Markov chain and perturbation bounds for the limiting probabilities. SIAM J. Algebraic Discrete Methods. 1980;1(3):273–283.

- 17. Norris JR. Markov chains, volume 2 of Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press; Cambridge: 1998.

- 18. O'Cinneide CA. Entrywise perturbation theory and error analysis for Markov chains. Numer. Math. 1993;65(1):109–120.

- 19. Schweitzer PJ. Perturbation theory and finite Markov chains. J. Appl. Probability. 1968;5:401–413.

- 20. Seneta E. Perturbation of the stationary distribution measured by ergodicity coefficients. Adv. in Appl. Probab. 1988;20(1):228–230.

- 21. Seneta E. Sensitivity analysis, ergodicity coefficients, and rank-one updates for finite Markov chains. In: Numerical solution of Markov chains, volume 8 of Probab. Pure Appl. Dekker; New York: 1991. pp. 121–129.