Animal studies have shown that congenital visual deprivation reduces the ability of neurons to integrate cross-modal inputs. Guerreiro et al. reveal that human patients who suffer transient congenital visual deprivation because of cataracts lack multisensory integration in auditory and multisensory areas as adults, and suppress visual processing during audio-visual stimulation.

Keywords: visual deprivation, sight restoration, multisensory integration, functional magnetic resonance imaging

Abstract

Developmental vision is deemed to be necessary for the maturation of multisensory cortical circuits. Thus far, this has only been investigated in animal studies, which have shown that congenital visual deprivation markedly reduces the capability of neurons to integrate cross-modal inputs. The present study investigated the effect of transient congenital visual deprivation on the neural mechanisms of multisensory processing in humans. We used functional magnetic resonance imaging to compare responses of visual and auditory cortical areas to visual, auditory and audio-visual stimulation in cataract-reversal patients and normally sighted controls. The results showed that cataract-reversal patients, unlike normally sighted controls, did not exhibit multisensory integration in auditory areas. Furthermore, cataract-reversal patients, but not normally sighted controls, exhibited lower activation within visual cortex during audio-visual stimulation than during visual stimulation. These results indicate that congenital visual deprivation affects the capability of cortical areas to integrate cross-modal inputs in humans, possibly because visual processing is suppressed during cross-modal stimulation. Arguably, the lack of vision in the first months after birth may result in a reorganization of visual cortex, including the suppression of noisy visual input from the deprived retina in order to reduce interference during auditory processing.

Introduction

Early sensory experience is deemed to play a crucial role in shaping the development of the neural circuitry underlying multisensory processes. Studies performed in lid-sutured monkeys have shown that visual and multisensory areas lose responsiveness to visual stimulation (Hyvärinen et al., 1981a, b; Carlson et al., 1987), whereas visual areas become partially responsive to non-deprived (e.g. tactile) modalities (Hyvärinen et al., 1981a). Studies performed in dark-reared cats have shown that multisensory neurons at cortical and subcortical sites lose their capability to integrate cross-modal inputs (Wallace et al., 2004; Carriere et al., 2007).

Individuals born with dense bilateral cataracts provide a unique opportunity to investigate the effect of congenital visual deprivation on multisensory processing in humans. Cataracts are clouded lenses that preclude patterned vision from reaching the retina until the lenses are surgically removed and replaced by intraocular lenses or other visual aids. After treatment, patterned vision finally reaches the retina. The few studies assessing multisensory functions in this population have shown that their ability to integrate more complex cross-modal stimuli (e.g. speech input) is impaired (Putzar et al., 2007, 2010b), despite a gain in reaction times for simple cross-modal stimuli (e.g. simultaneously presented light flashes and noise bursts) as compared to their unimodal counterparts (Putzar et al., 2012).

Thus far, only two studies have investigated the effect of a transient period of congenital visual deprivation on sensory cortical processing during auditory (Saenz et al., 2008) and visual (Putzar et al., 2010a) stimulation. In one of these studies (Saenz et al., 2008), Patient M.S., who was born blind due to retinopathy of prematurity and cataracts, and whose vision was partially restored after cataract removal in the right eye at the age of 43 years, was shown to exhibit both visual and auditory motion-selective responses in the typically visual motion-selective area MT, suggesting that cross-modal (i.e. auditory) responses co-exist with restored visual responses within visual cortex of sight-recovery individuals (cf. Hyvärinen et al., 1981a). In the other study (Putzar et al., 2010a), lip-reading-specific activation of auditory and multisensory temporal areas was observed in normally sighted controls but not in cataract-reversal patients, suggesting that auditory and multisensory areas lose responsiveness to visual stimulation (cf. Hyvärinen et al., 1981b; Carlson et al., 1987). Importantly, the extent to which developmental vision affects the neural bases of multisensory processing remains to be investigated in humans using cross-modal (e.g. simultaneously presented audio-visual) stimuli (Stein et al., 2010; Stein and Rowland, 2011).

A paradigm commonly used to investigate multisensory integration in humans consists of presenting individuals with cross-modal audio-visual speech stimuli, as well as with their unimodal visual (i.e. lip movements) and auditory (i.e. spoken words) counterparts, while undergoing functional MRI. By comparing cortical responses elicited by cross-modal stimuli with those elicited by unimodal stimuli, multisensory integration can be inferred (Calvert and Thesen, 2004; Beauchamp, 2005; Goebel and Van Atteveldt, 2009). Such studies have implicated auditory and multisensory regions within superior temporal areas—particularly, the superior temporal sulcus—as critical sites for multisensory audio-visual integration (Calvert et al., 2000; Stevenson and James, 2009). Furthermore, some studies have provided evidence for multisensory audio-visual integration in extrastriate visual areas (Calvert et al., 2000).

The goal of the present study was to assess the effect of congenital visual deprivation on the neural bases of multisensory processing. To this end, we compared responses of visual and auditory cortical areas between cataract-reversal patients and normally sighted controls during the visual, auditory and audio-visual presentation of speech stimuli (Putzar et al., 2007). We hypothesized that congenital visual deprivation affects the capability of cortical areas to integrate cross-modal inputs (Putzar et al., 2007, 2010b), such that cataract-reversal patients would show reduced multisensory integration in auditory areas relative to normally sighted controls. In addition, we assessed whether cortical responses in visual areas were modulated by auditory input.

Material and methods

Participants

Nine individuals with history of dense bilateral congenital cataracts [aged 18–51 years, mean = 35.67, standard deviation (SD) = 12.09, seven females], whose cataract-removal surgery took place between the ages of 3 and 24 months (mean = 12.67, SD = 8.37), and nine normally sighted individuals matched for age, gender, and years of education (aged 19–56 years, mean = 34.89, SD = 10.71, seven females) participated in this study. For further participant information, see the online Supplementary material. The study was approved by a local ethics committee (Ärztekammer Hamburg, Nr. 2502) and was conducted according to the Declaration of Helsinki.

Experimental design

The experiment reported here was part of a larger study testing 10 different conditions (parts of this study have been published elsewhere; Putzar et al., 2007, 2010a). In the present study, we were interested in comparing cortical responses to unimodal visual and auditory speech, as well as to cross-modal audio-visual speech, between cataract-reversal patients and normally sighted controls. Here we used auditory and audio-visual conditions with high signal-to-noise ratio to eliminate group differences in behavioural performance in auditory and audio-visual conditions [t(15) = 1.16, P = 0.264 and t(15) = 0.03, P = 0.978, respectively], although residual group differences in the visual condition remained [t(15) = 2.14, P = 0.049] (Putzar et al., 2007, 2010a). Therefore, we further equated behavioural performance across groups by selecting a subsample of seven cataract-reversal patients (aged 23–56 years, mean = 38.43, SD = 11.77, five females) and seven normally sighted controls (aged 18–51 years, mean = 35.86, SD = 12.08, five females), who were matched with respect to their accuracy in the visual condition.

Task and stimuli

The task and stimuli were those described in Putzar et al. (2007, 2010a). Participants performed a one-back task, in which they indicated via a button press whether the current word was the same as the previous word. The stimuli were video clips showing mouth movements without sound (henceforth, visual condition), no mouth movements (i.e. still video frame showing the speaker’s lips) but sound (henceforth, auditory condition), and mouth movements with sound (henceforth, audio-visual condition).

A block design was used: each block consisted of 12 words of the same condition, presented consecutively for ∼36 s (i.e. the trial duration varied depending on the video and/or audio track length). Each block was presented six times throughout the experiment and was followed by a 15.75-s baseline presentation (i.e. still video frame showing the speaker’s lips). The order of the conditions was randomized for each participant.
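As a rough illustration of the timing implied by this design, the following Python sketch lays out one participant's block sequence for the three conditions analysed here. It is only a sketch under stated assumptions: the function names are hypothetical, every block is given a fixed 36-s duration although the actual duration varied with clip length, the shuffle is unconstrained, and the remaining conditions of the larger study are ignored, so the real session was longer.

```python
import random

CONDITIONS = ["visual", "auditory", "audio-visual"]
BLOCKS_PER_CONDITION = 6
BLOCK_DURATION_S = 36.0      # approximate; the real duration varied with clip length
BASELINE_DURATION_S = 15.75  # still frame of the speaker's lips


def build_schedule(seed):
    """Return (condition, block_onset_s, baseline_onset_s) tuples in presentation order."""
    rng = random.Random(seed)
    blocks = CONDITIONS * BLOCKS_PER_CONDITION
    rng.shuffle(blocks)  # assumption: unconstrained random order per participant
    schedule = []
    t = 0.0
    for condition in blocks:
        baseline_onset = t + BLOCK_DURATION_S
        schedule.append((condition, t, baseline_onset))
        t = baseline_onset + BASELINE_DURATION_S
    return schedule


def total_duration_s(schedule):
    """Time from the first block onset to the end of the last baseline period."""
    _, _, last_baseline_onset = schedule[-1]
    return last_baseline_onset + BASELINE_DURATION_S


schedule = build_schedule(seed=1)
print(len(schedule), total_duration_s(schedule))
```

Under these assumptions, the 18 blocks and their baseline periods occupy 18 × (36 + 15.75) s = 931.5 s.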

Imaging acquisition and analysis

Imaging data were acquired with the protocol described in Putzar et al. (2010a) and analysed using BrainVoyager QX 2.8 (Brain Innovation, Maastricht, Netherlands). The following is a shortened description of the imaging analysis; for full details, see the Supplementary material. Functional preprocessing consisted of slice scan time correction, head motion correction and temporal high-pass filtering (removing linear trends and frequencies below three cycles per run).

Anatomical images were transformed into Talairach space and segmented at the grey/white matter boundary, allowing for cortical surface reconstruction of each participant’s brain hemisphere. The reconstructed cortical surfaces were aligned to a selected target brain (i.e. the template brain provided by BrainVoyager QX), based on the gyral/sulcal folding pattern. Functional images were co-registered to the anatomical images and transformed into surface space.

The data were analysed with a multi-subject general linear model comprising three predictors of interest separated for each subject: visual, auditory, and audio-visual stimulation. The still frame of the speaker’s lips was implicitly modelled as baseline in the design. A z-normalization was performed for each signal time course.
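The two modelling steps described above can be sketched in a few lines of Python. This is an illustration only, not the BrainVoyager QX implementation: the function names and the example values are hypothetical, the z-normalization assumes the population standard deviation, and the boxcar predictors are left unconvolved, whereas an actual analysis would convolve them with a haemodynamic response function.

```python
from statistics import mean, pstdev

PREDICTORS = ("visual", "auditory", "audio-visual")


def z_normalize(time_course):
    """Rescale a signal time course to zero mean and unit standard deviation."""
    mu = mean(time_course)
    sigma = pstdev(time_course)  # assumption: population SD
    if sigma == 0:
        raise ValueError("a constant time course cannot be z-normalized")
    return [(x - mu) / sigma for x in time_course]


def boxcar_design(labels):
    """One row per volume, one column per predictor of interest.

    Baseline volumes receive an all-zero row, so the baseline is modelled
    implicitly rather than with a predictor of its own.
    """
    return [[1.0 if label == p else 0.0 for p in PREDICTORS] for label in labels]


# Made-up example: six volumes with their condition labels and raw signal.
labels = ["baseline", "visual", "visual", "baseline", "audio-visual", "auditory"]
design = boxcar_design(labels)
signal = z_normalize([100.0, 104.0, 105.0, 99.0, 103.0, 101.0])
```

Because the baseline has no column of its own, each β-value expresses the response to a condition relative to the still-frame baseline.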

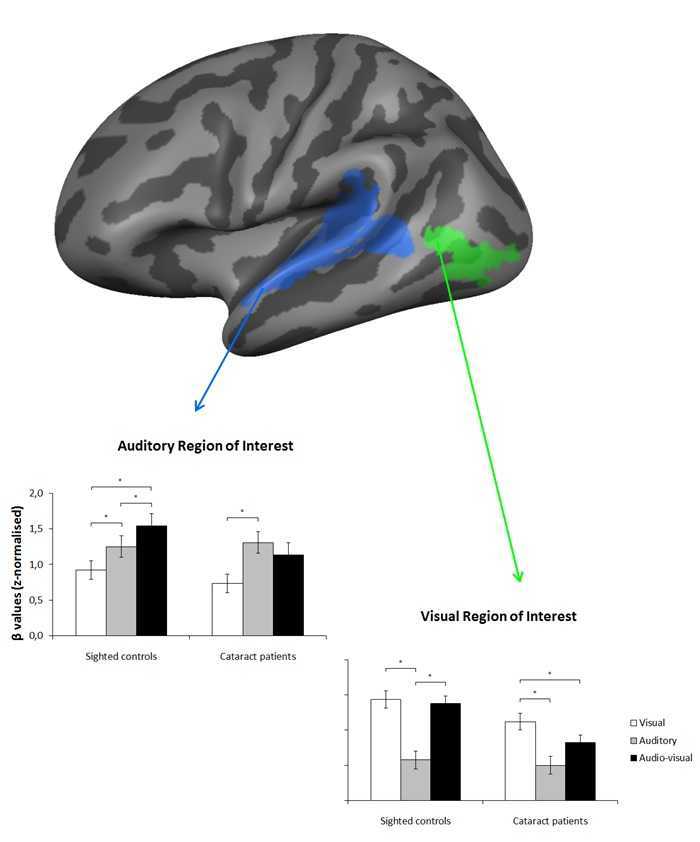

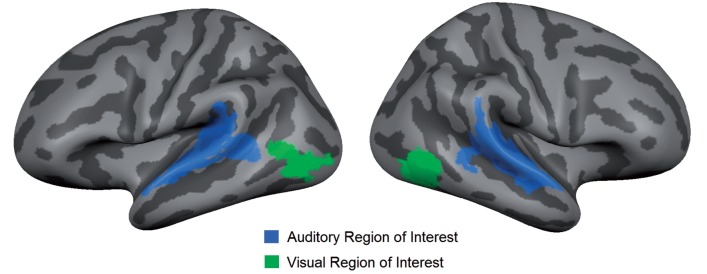

To increase statistical power (Supplementary material), we used a region of interest approach whereby we restricted our analyses to sensory areas that are responsive to the present task in the normally sighted group, namely primary and higher auditory cortex along the superior temporal gyrus and sulcus bilaterally (henceforth, auditory region of interest) and higher-order, ventral visual cortex in the vicinity of the occipitotemporal junction bilaterally (henceforth, visual region of interest) (Fig. 1).

Figure 1.

Regions of interest. This figure shows the selected regions of interest, displayed on the average cortical representation of all participants: primary and higher auditory cortex bilaterally (i.e. auditory region of interest) and higher, ventral visual cortex bilaterally (i.e. visual region of interest).

Z-normalized β-values from each condition were extracted from each region of interest, and submitted to a 2 (Group: cataract-reversal patients, normally sighted controls) × 2 (Hemisphere: left, right) × 3 (Sensory modality: visual, auditory, audio-visual) repeated measures ANOVA. Planned comparisons (two-tailed, paired-sample t-tests) were used to test the significance of differences (P < 0.05) between conditions. Multisensory integration was defined according to the maximum criterion (Beauchamp, 2005; Goebel and Van Atteveldt, 2009), whereby the response of a given cortical area during cross-modal stimulation should exceed its largest response during unimodal stimulation. For details on region of interest definition and analysis, see Supplementary material.
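The maximum criterion can be made concrete with a short Python sketch. It is a sketch under assumptions rather than the analysis code used here: the function names are hypothetical, the β-values are invented for illustration, and only the paired t statistic and its degrees of freedom are computed (obtaining a P-value would require a statistics library).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom for a minus b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def max_criterion(betas):
    """Compare the audio-visual response with the largest unimodal response.

    betas maps each condition name to a list of per-participant beta values.
    Returns the strongest unimodal condition, the t statistic and the df.
    """
    unimodal_means = {c: mean(v) for c, v in betas.items() if c != "audio-visual"}
    strongest = max(unimodal_means, key=unimodal_means.get)
    t, df = paired_t(betas["audio-visual"], betas[strongest])
    return strongest, t, df


# Invented beta values for seven hypothetical participants.
example = {
    "visual": [0.1, 0.2, 0.0, 0.1, 0.3, 0.2, 0.1],
    "auditory": [1.0, 1.2, 0.9, 1.1, 1.3, 1.0, 1.2],
    "audio-visual": [1.3, 1.4, 1.0, 1.5, 1.4, 1.3, 1.3],
}
strongest, t_value, df = max_criterion(example)
```

A positive and significant t statistic for the audio-visual response against the strongest unimodal response would satisfy the criterion; a non-significant or negative one would not.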

Behavioural analysis

To compensate for possible speed-accuracy trade-offs (Supplementary material), inverse efficiency scores were calculated (i.e. mean reaction time of the correct responses divided by the percentage of correct responses) and submitted to a 2 (Group: cataract-reversal patients, normally sighted controls) × 3 (Sensory modality: visual, auditory, audio-visual) repeated measures ANOVA. For details on behavioural analysis, see the Supplementary material.
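For illustration, the inverse efficiency score defined above can be computed as in the following Python sketch. The function name and the trial data are hypothetical; the divisor is the percentage of correct responses, as stated in the text, and all trials passed in are assumed to belong to a single participant and condition.

```python
def inverse_efficiency(reaction_times_ms, correct):
    """Mean reaction time of correct trials divided by the percentage correct.

    reaction_times_ms: one reaction time (in ms) per trial.
    correct: one boolean per trial, True if the response was correct.
    """
    if len(reaction_times_ms) != len(correct):
        raise ValueError("one accuracy flag is required per reaction time")
    correct_rts = [rt for rt, ok in zip(reaction_times_ms, correct) if ok]
    if not correct_rts:
        raise ValueError("no correct responses: the score is undefined")
    mean_rt = sum(correct_rts) / len(correct_rts)
    percent_correct = 100.0 * len(correct_rts) / len(correct)
    return mean_rt / percent_correct


# Made-up trials: three correct responses (mean 700 ms) out of four (75%).
ies = inverse_efficiency([600.0, 700.0, 800.0, 900.0], [True, True, True, False])
```

Lower scores indicate more efficient processing, because either faster correct responses or higher accuracy reduces the ratio.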

Results

Behavioural results

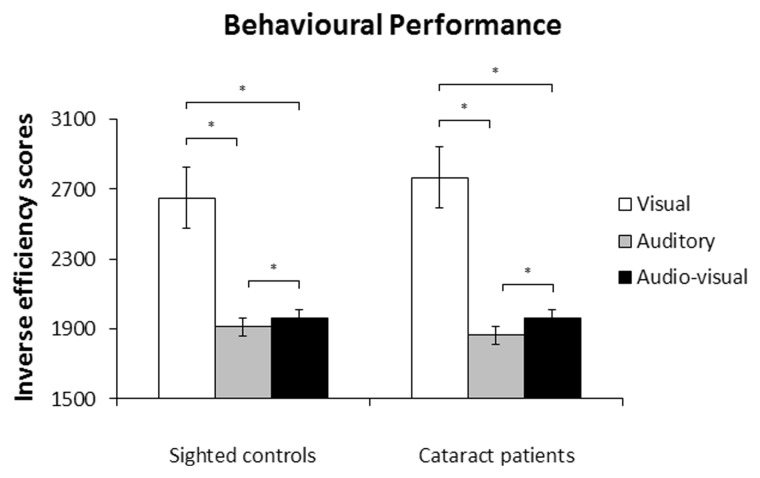

There was no effect of group [F(1,12) = 0.03, P = 0.858], such that overall inverse efficiency scores did not differ between cataract-reversal patients and normally sighted controls. There was an effect of sensory modality [F(1.11,13.37) = 57.93, P < 0.001], but group did not interact with this effect [F(1.11,13.37) = 0.52, P = 0.502], suggesting that inverse efficiency scores differed across sensory modalities, but not across groups (Fig. 2). Planned comparisons, collapsed across groups, revealed that inverse efficiency scores were lower—that is, processing efficiency was higher—in the audio-visual condition than in the visual condition (P < 0.001), as well as lower in the auditory condition than in the audio-visual condition (P = 0.024), and lower in the auditory condition than in the visual condition (P < 0.001).

Figure 2.

Behavioural performance. Mean inverse efficiency scores (i.e. mean reaction times, in ms, of correct responses divided by the percentage of correct responses) and standard errors of the mean for normally sighted controls and cataract-reversal patients. *P < 0.05.

Imaging results

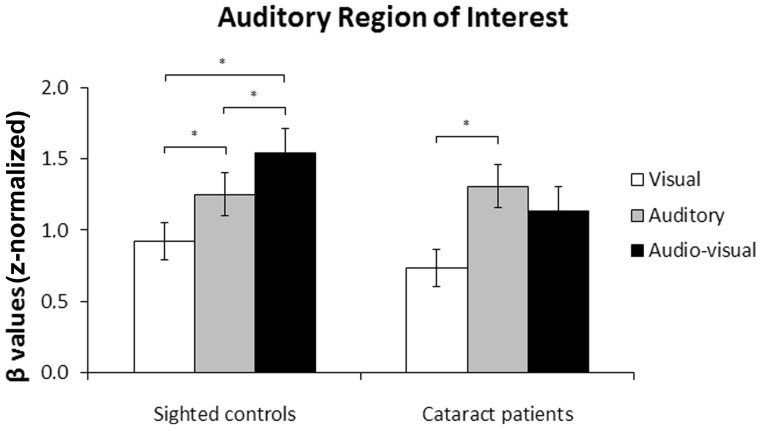

Auditory region of interest

There was no effect of group [F(1,12) = 0.89, P = 0.363], such that overall auditory region of interest activation did not differ between cataract-reversal patients and normally sighted controls. As there were no significant effects involving the factor hemisphere (P-values > 0.05), we present the auditory region of interest results collapsed across hemispheres.

There was an effect of sensory modality [F(2,24) = 22.02, P < 0.001], as well as an interaction between sensory modality and group [F(2,24) = 3.81, P = 0.037], revealing that the effect of sensory modality on the auditory region of interest differed across groups (Fig. 3). The effect of sensory modality was significant in the group of normally sighted controls [F(1,12) = 22.87, P < 0.001], indicating that auditory region of interest activation was higher during audio-visual stimulation than during auditory stimulation (P = 0.038), and higher during auditory stimulation than during visual stimulation (P = 0.024), as well as higher during audio-visual stimulation than during visual stimulation (P < 0.001). These results provide evidence for multisensory integration in normally sighted controls, as activation was higher during cross-modal stimulation than its maximal activation during unimodal (i.e. auditory) stimulation. The effect of sensory modality was also significant in the group of cataract-reversal patients [F(2,12) = 8.65, P = 0.005], such that auditory region of interest activation was higher during auditory stimulation than during visual stimulation (P = 0.009), but not different between audio-visual and auditory stimulation (P = 0.210), nor between audio-visual and visual stimulation (P = 0.130). These results provide no evidence for multisensory integration in cataract-reversal patients, as activation during cross-modal stimulation did not differ from its maximal activation during unimodal (i.e. auditory) stimulation.

Figure 3.

Auditory region of interest. Mean β-values and standard errors of the mean in the auditory region of interest for normally sighted controls and cataract-reversal patients. *P < 0.05.

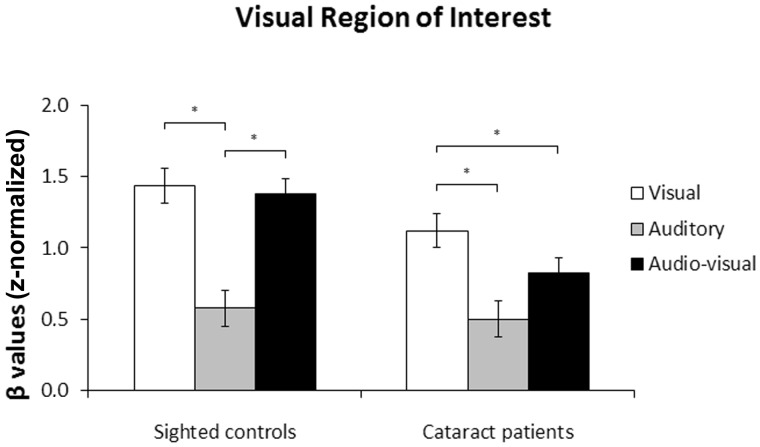

Visual region of interest

There was an effect of group [F(1,12) = 6.75, P = 0.023], indicating that overall visual region of interest activation was higher in normally sighted controls than in cataract-reversal patients.

There was no effect of hemisphere [F(1,12) = 0.16, P = 0.700], but group interacted with hemisphere [F(1,12) = 4.92, P = 0.047], such that overall visual region of interest activation was higher in normally sighted controls than in cataract-reversal patients in the left hemisphere [F(1,12) = 8.05, P = 0.015], but not in the right hemisphere [F(1,12) = 2.76, P = 0.123]. There were no other interactions involving hemisphere (Ps > 0.05). In the following, we therefore present the visual region of interest results collapsed across hemispheres.

There was an effect of sensory modality [F(2,24) = 31.15, P < 0.001], as well as a marginally significant interaction between sensory modality and group [F(2,24) = 3.04, P = 0.066], suggesting that the effect of sensory modality on the visual region of interest differed across groups (Fig. 4). Planned within-group comparisons confirmed that this was true: the effect of sensory modality was significant in the group of normally sighted controls [F(2,12) = 18.63, P < 0.001], such that visual region of interest activation was higher during visual stimulation than during auditory stimulation (P = 0.008), and higher during audio-visual stimulation than during auditory stimulation (P = 0.006), but not different between visual and audio-visual stimulation (P = 0.620). The effect of sensory modality was also significant in the group of cataract-reversal patients [F(2,12) = 14.25, P = 0.001], revealing that visual region of interest activation was higher during visual stimulation than during audio-visual stimulation (P = 0.012), as well as higher during visual stimulation than during auditory stimulation (P = 0.006), but only marginally higher during audio-visual stimulation than during auditory stimulation (P = 0.076). These results provide no evidence for multisensory integration in the visual cortex of normally sighted controls, as visual region of interest activation during cross-modal stimulation did not exceed its maximal activation during unimodal (i.e. visual) stimulation. In contrast, in cataract-reversal patients, visual region of interest activation was actually lower during audio-visual stimulation than during visual stimulation. Notably, an additional analysis using independent, anatomical regions of interest indicated that the marginally significant Sensory modality × Group interaction observed here was already present at the level of the primary visual cortex (i.e. Brodmann area 17) [F(2,23) = 2.77, P = 0.083].

Figure 4.

Visual region of interest. Mean β-values and standard errors of the mean in the visual region of interest for normally sighted controls and cataract-reversal patients. *P < 0.05.

Discussion

The present study investigated the effect of congenital visual deprivation on the neural bases of multisensory processing, by comparing visual and auditory cortical responses between cataract-reversal patients and normally sighted controls during the visual, auditory and audio-visual presentation of speech stimuli. Notably, we used auditory and audio-visual conditions with high signal-to-noise ratio to rule out the possibility that differences in cortical responses between groups were confounded by group differences in behavioural performance (Röder and Neville, 2003; Collignon et al., 2013). Although effective in eliminating group differences in behavioural performance, the use of a high signal-to-noise ratio did preclude behavioural multisensory gains. This is consistent with the observation that cross-modal stimuli are more likely to enhance performance relative to unimodal stimuli when the latter are relatively weak (i.e. inverse effectiveness; Stein and Stanford, 2008). Accordingly, we have previously shown, using the paradigm reported here, that during auditory and audio-visual conditions with low signal-to-noise ratio, cataract-reversal patients, unlike normally sighted controls, do not exhibit multisensory integration at the behavioural level (Putzar et al., 2007).

The imaging results revealed two important findings: first, unlike normally sighted controls—who, in line with previous studies (Calvert et al., 2000), exhibited multisensory integration in primary and higher auditory cortex within superior temporal regions—there was no evidence for multisensory integration in superior temporal areas in cataract-reversal patients. This is consistent with the observation that congenital visual deprivation affects multisensory interactions (Putzar et al., 2007, 2010b), as well as with the lack of responsiveness of superior temporal areas to visual stimuli (i.e. lip reading) in these individuals (Putzar et al., 2010a). Importantly, the present study extends these findings by demonstrating, for the first time, that the capability of superior temporal areas to integrate audio-visual speech stimuli is affected by a period of visual deprivation within the first months of life (i.e. 3–24 months), even though individuals in the present study experienced an extended period of visual recovery (i.e. 18–51 years). These results corroborate animal studies showing that the capability of multisensory neurons to integrate cross-modal stimuli is affected by congenital visual deprivation (Wallace et al., 2004; Carriere et al., 2007).

A second important finding obtained in this study was that cortical responses in higher-order, ventral visual cortex did not differ between visual and audio-visual stimulation in normally sighted controls, whereas in cataract-reversal patients they were actually lower during audio-visual stimulation than during visual stimulation (cf. Carriere et al., 2007). We speculate that this visual-deprivation-induced shift towards response suppression is due to a reorganization of visual areas during the period of visual deprivation, whereby noisy input from the deprived retina might be inhibited in order to reduce interference during auditory processing. Furthermore, we suggest that these inhibitory interactions contributed to the lack of multisensory interactions during audio-visual stimulation within auditory and multisensory cortex of cataract-reversal patients, as observed here.

In conclusion, the present study suggests that developmental vision within a putative sensitive period in human development is necessary for the maturation of the neural circuitry enabling the mutual enhancement of congruent cross-modal stimulation later in life.

Acknowledgements

We thank Ines Goerendt for her help with data collection, Björn Zierul for his assistance in running some of the imaging preprocessing steps, and Judith Eck for her comments on an earlier version of this article.

Funding

This research was supported by a grant from the European Research Council to Brigitte Röder (ERC-2009-AdG249425 – CriticalBrainChanges).

Supplementary material

Supplementary material is available at Brain online.

References

- Beauchamp MS. Statistical criteria in fMRI studies of multisensory integration [Review]. Neuroinformatics. 2005;3:93–113. doi: 10.1385/NI:3:2:093.

- Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol. 2000;10:649–57. doi: 10.1016/s0960-9822(00)00513-3.

- Calvert GA, Thesen T. Multisensory integration: methodological approaches and emerging principles in the human brain [Review]. J Physiol Paris. 2004;98:191–205. doi: 10.1016/j.jphysparis.2004.03.018.

- Carlson S, Pertovaara A, Tanila H. Late effects of early binocular visual deprivation on the function of Brodmann’s area 7 of monkeys (Macaca arctoides). Dev Brain Res. 1987;33:101–11. doi: 10.1016/0165-3806(87)90180-5.

- Carriere BR, Royal DW, Perrault TJ, Jr., Morrison SP, Vaughan JW, Stein BE, et al. Visual deprivation alters the development of cortical multisensory integration. J Neurophysiol. 2007;98:2858–67. doi: 10.1152/jn.00587.2007.

- Collignon O, Dormal G, Albouy G, Vandewalle G, Voss P, Phillips C, et al. Impact of blindness on the functional organization and the connectivity of the occipital cortex. Brain. 2013;136:2769–83. doi: 10.1093/brain/awt176.

- Goebel R, Van Atteveldt N. Multisensory functional magnetic resonance imaging: a future perspective [Review]. Exp Brain Res. 2009;198:153–64. doi: 10.1007/s00221-009-1881-7.

- Hyvärinen J, Carlson S, Hyvärinen L. Early visual deprivation alters modality of neuronal responses in area 19 of monkey cortex. Neurosci Lett. 1981a;26:239–43. doi: 10.1016/0304-3940(81)90139-7.

- Hyvärinen J, Hyvärinen L, Linnankoski I. Modification of parietal association cortex and functional blindness after binocular deprivation in young monkeys. Exp Brain Res. 1981b;42:1–8. doi: 10.1007/BF00235723.

- Putzar L, Goerendt I, Heed T, Richard G, Büchel C, Röder B. The neural basis of lip-reading capabilities is altered by early visual deprivation. Neuropsychologia. 2010a;48:2158–66. doi: 10.1016/j.neuropsychologia.2010.04.007.

- Putzar L, Goerendt I, Lange K, Rösler F, Röder B. Early visual deprivation impairs multisensory interactions in humans. Nat Neurosci. 2007;10:1243–5. doi: 10.1038/nn1978.

- Putzar L, Gondan M, Röder B. Basic multisensory functions can be acquired after congenital visual pattern deprivation in humans. Dev Neuropsychol. 2012;37:697–711. doi: 10.1080/87565641.2012.696756.

- Putzar L, Hötting K, Röder B. Early visual deprivation affects the development of face recognition and of audio-visual speech perception. Restor Neurol Neurosci. 2010b;28:251–7. doi: 10.3233/RNN-2010-0526.

- Röder B, Neville H. Developmental functional plasticity. In: Grafman J, Robertson IH, editors. Handbook of neuropsychology. Amsterdam: Elsevier; 2003. pp. 231–70.

- Saenz M, Lewis LB, Huth AG, Fine I, Koch C. Visual motion area MT+/V5 responds to auditory motion in human sight-recovery subjects. J Neurosci. 2008;28:5141–8. doi: 10.1523/JNEUROSCI.0803-08.2008.

- Stein BE, Burr D, Constantinidis C, Laurienti PJ, Meredith MA, Perrault TJ, Jr., et al. Semantic confusion regarding the development of multisensory integration: a practical solution. Eur J Neurosci. 2010;31:1713–20. doi: 10.1111/j.1460-9568.2010.07206.x.

- Stein BE, Rowland BA. Organization and plasticity in multisensory integration: early and late experience affects its governing principles [Review]. Prog Brain Res. 2011;191:145–63. doi: 10.1016/B978-0-444-53752-2.00007-2.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron [Review]. Nat Rev Neurosci. 2008;9:255–66. doi: 10.1038/nrn2331.

- Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: inverse effectiveness and the neural processing of speech and object recognition. NeuroImage. 2009;44:1210–23. doi: 10.1016/j.neuroimage.2008.09.034.

- Wallace MT, Perrault TJ, Jr., Hairston D, Stein BE. Visual experience is necessary for the development of multisensory integration. J Neurosci. 2004;24:9580–4. doi: 10.1523/JNEUROSCI.2535-04.2004.