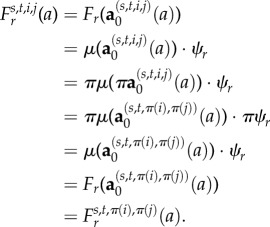

Abstract

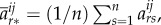

In evolutionary game theory, an important measure of a mutant trait (strategy) is its ability to invade and take over an otherwise-monomorphic population. Typically, one quantifies the success of a mutant strategy via the probability that a randomly occurring mutant will fixate in the population. However, in a structured population, this fixation probability may depend on where the mutant arises. Moreover, the fixation probability is just one quantity by which one can measure the success of a mutant; fixation time, for instance, is another. We define a notion of homogeneity for evolutionary games that captures what it means for two single-mutant states, i.e. two configurations of a single mutant in an otherwise-monomorphic population, to be ‘evolutionarily equivalent’ in the sense that all measures of evolutionary success are the same for both configurations. Using asymmetric games, we argue that the term ‘homogeneous’ should apply to the evolutionary process as a whole rather than to just the population structure. For evolutionary matrix games in graph-structured populations, we give precise conditions under which the resulting process is homogeneous. Finally, we show that asymmetric matrix games can be reduced to symmetric games if the population structure possesses a sufficient degree of symmetry.

Keywords: game theory, finite population, evolution

1. Introduction

One of the most basic models of evolution in finite populations is the Moran process [1]. In the Moran process, a population consisting of two types, a mutant type and a wild-type, is continually updated via a birth–death process until only one type remains. The mutant and wild-types are distinguished by only their reproductive fitness, which is assumed to be an intrinsic property of a player. A mutant type has fitness r > 0 relative to the wild-type (whose fitness relative to itself is 1), and in each step of the process an individual is selected for reproduction with probability proportional to fitness. Reproduction is clonal, and the offspring of a reproducing individual replaces another member of the population who is chosen for death uniformly at random. Eventually, this population will end up in one of the monomorphic absorbing states: all mutant type or all wild-type. In this context, a fundamental metric of the success of the mutant type is its ability to invade and replace a population of wild-type individuals [2].

In a population of size N, the probability that a single mutant in a wild-type population will fixate in the Moran process is

$$\rho = \frac{1 - r^{-1}}{1 - r^{-N}}. \tag{1.1}$$
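As a small numerical illustration, the classical fixation probability of a single mutant of relative fitness r in a well-mixed population of size N, (1 − 1/r)/(1 − 1/r^N), can be evaluated as in the sketch below. The function name is hypothetical, and the neutral case r = 1 is handled through its limiting value 1/N.

```python
def moran_fixation_probability(r: float, n: int) -> float:
    """Fixation probability of one mutant (relative fitness r) among n - 1
    wild-type individuals in the well-mixed Moran process."""
    if r <= 0 or n < 1:
        raise ValueError("r must be positive and n must be at least 1")
    if r == 1.0:
        # Neutral limit of (1 - 1/r) / (1 - 1/r**n) as r -> 1.
        return 1.0 / n
    return (1.0 - 1.0 / r) / (1.0 - r ** (-n))


if __name__ == "__main__":
    # Example: an advantageous mutant (r = 2) in a population of size 12.
    print(moran_fixation_probability(2.0, 12))
```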

In this version of a birth–death process, the members of the population are distinguished by only their types; in particular, there is no notion of spatial arrangement, i.e. the population is well mixed. Lieberman et al. [3] extend the classical Moran process to graph-structured populations, which are populations with links between the players that indicate who is a neighbour of whom. In this structured version of the Moran process, reproduction happens with probability proportional to fitness, but the offspring of a reproducing individual can replace only a neighbour of the parent. Since individuals are now distinguished by both their types (mutant or wild) and locations within the population, a natural question is whether or not the fixation probability of a single-mutant type depends on where this mutant appears in the population. Lieberman et al. [3] show that this fixation probability is independent of the location of the mutant if everyone has the same number of neighbours, i.e. the graph is regular [4]. In fact, remarkably, the fixation probability of a single mutant on a regular graph is the same as that of equation (1.1)—an observation first made in a special case by Maruyama [5]. This result, known as the Isothermal Theorem, is independent of the number of neighbours the players have (i.e. the degree of the graph).

The Moran process is frequency-independent in the sense that the fitness of an individual is determined by type and is not influenced by the rest of the population. However, the Moran model can be easily extended to account for frequency-dependent fitness. A standard way in which to model frequency-dependence is through evolutionary games [6–8]. In the classical set-up, each player in the population has one of two strategies, A and B, and receives an aggregate pay-off from interacting with the rest of the population. This aggregate pay-off is usually calculated from a sequence of pairwise interactions whose pay-offs are described by a pay-off matrix of the form

$$\begin{array}{c|cc} & A & B \\ \hline A & a & b \\ B & c & d \end{array}\,. \tag{1.2}$$

Each player's aggregate pay-off is then translated into fitness and the strategies in the population are updated based on these fitness values. Since a player's pay-off depends on the strategies of the other players in the population, so does that player's fitness. Traditionally, this population is assumed to be infinite, in which case the dynamics of the evolutionary game are governed deterministically by the replicator equation of Taylor & Jonker [6]. More recently, evolutionary games have been considered in finite populations [8,9], where the dynamics are no longer deterministic but rather stochastic. In order to restrict who interacts with whom in the population, these populations can also be given structure. Popular types of structured populations are graphs [3,10,11], sets [12] and demes [13,14].

We focus here on evolutionary games in graph-structured populations that proceed in discrete time steps. Such processes define discrete-time Markov chains, either with or without absorbing states (depending on mutation rates). Typically, in evolutionary game theory, one starts with a population of players and repeatedly updates the population based on some update rule such as birth–death [8], death–birth [10,15], imitation [16], pairwise comparison [17,18] or Wright–Fisher [19,20]. These update rules can be split into two classes: cultural and genetic (see [21]). Cultural update rules involve strategy imitation, whereas genetic update rules involve reproduction and inheritance. Without mutations, an update rule may be seen as giving a probability distribution over a number of strategy-acquisition scenarios: a player inherits a new strategy through imitation (cultural rules) or is born with a strategy determined by the parent(s) (genetic rules). Mutation rates disrupt these scenarios by placing a small probability of a player taking on a novel strategy. The way in which strategy-mutation rates are incorporated into an evolutionary process depends on both the class of the update rule and the specifics of the update rule itself. In a general sense, we say that strategy mutations are homogeneous if they depend on neither the players themselves nor the locations of the players. This notion of homogeneous strategy mutations is analogous to that of a symmetric game, which is a game for which the pay-offs depend on the strategies played but are independent of the identities and locations of the players.

The Isothermal Theorem seems to indicate that populations structured by regular graphs possess a significant degree of homogeneity, meaning that different locations within the population appear to be equivalent for the purposes of evolutionary dynamics. However, it is important to note that (i) fixation probability is just one metric of evolutionary success and (ii) the Moran process is only one example of an evolutionary process. For example, in addition to the probability of fixation, one could look at the absorption time, which is the average number of steps until one of the monomorphic absorbing states is reached. Moreover, one could consider frequency-dependent processes, possibly with different update rules, in which fitness is no longer an intrinsic property of an individual but is also influenced by the other members of the population. We show that the Isothermal Theorem does not extend to arbitrary frequency-dependent processes such as evolutionary games. Furthermore, we show that this theorem does not apply to fixation times; that is, even for the Moran process on a regular graph, the average number of updates until a monomorphic absorbing state is reached can depend on the initial placement of the mutant.

Given that the Isothermal Theorem does not extend to other processes defined on regular graphs, the next natural question is what is the meaning of a spatially homogeneous population in evolutionary game theory? In fact, we argue using asymmetric games [21] that the term ‘homogeneous’ should apply to an evolutionary process as a whole rather than to just the population structure. Even for populations that appear to be spatially homogeneous, such as populations on complete graphs, non-uniform distribution of resources within the population can result in heterogeneity of the overall process. Similarly, for symmetric games, heterogeneity can be introduced into the dynamics of an evolutionary process through strategy mutations. Therefore, a notion of homogeneity of an evolutionary game should take into account at least (i) population structure, (ii) pay-offs, and (iii) strategy mutations.

If the strategy-mutation rates are minuscule, then the population spends most of its time in monomorphic states. With small mutation rates, one can define an embedded Markov chain on the monomorphic states and use this chain to study the success of each strategy [22,23]. That is, when a mutation occurs, the population is assumed to return to a monomorphic state before another mutant arises. Thus, the states of interest are the monomorphic states and the states consisting of a single mutant in an otherwise-monomorphic population. We say that an evolutionary game is homogeneous if any two states consisting of a single mutant (A-player) in a wild-type population (B-players) are mathematically equivalent. We make precise what we mean by ‘mathematically equivalent’ in §2, but, informally, this equivalence means that any two such states are the same up to relabelling. In particular, all metrics, such as fixation probability, absorption time, etc., are the same for any two states consisting of a single A-mutant in a B-population. We show that an evolutionary game in a graph-structured population is homogeneous if the graph is vertex-transitive (‘looks the same’ from each vertex), the pay-offs are symmetric and the strategy mutations are homogeneous. This result holds for any update rule and selection intensity.

Finally, we explore the effects of population structure on asymmetric evolutionary games. In the weak selection limit, we show that asymmetric matrix games with homogeneous strategy mutations can be reduced to symmetric games if the population structure is arc-transitive (‘looks the same’ from each edge in the graph). This result is a finite-population analogue of the main result of McAvoy & Hauert [21], which states that a similar reduction to symmetric games is possible in sufficiently large populations. Thus, we establish that this reduction applies to any population size if the graph possesses a sufficiently high degree of symmetry. Our explorations, both for symmetric and asymmetric games, clearly demonstrate the effects of population structure, pay-offs and strategy mutations on symmetries in evolutionary games.

2. Markov chains and evolutionary equivalence

2.1. General Markov chains

The evolutionary processes we consider here define discrete-time Markov chains on finite state spaces. The notions of symmetry and evolutionary equivalence that we aim to introduce for evolutionary processes can actually be stated quite succinctly at the level of the Markov chain. We first work with general Markov chains, and later we apply these ideas to evolutionary games.

Definition 2.1 (Symmetry of states). —

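Suppose that $X = \{X_n\}_{n \geq 0}$ is a Markov chain on a (finite) state space, $\mathcal{S}$, with transition matrix T. An automorphism of X is a bijection $\varphi : \mathcal{S} \to \mathcal{S}$ such that $T_{\varphi(s), \varphi(s')} = T_{s, s'}$ for each $s, s' \in \mathcal{S}$. Two states $s, s' \in \mathcal{S}$ are said to be symmetric if there exists $\varphi \in \mathrm{Aut}(X)$, the set of automorphisms of X, such that $\varphi(s) = s'$.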

Definition 2.1 says that the states of the chain can be relabelled in such a way that the transition probabilities are preserved. This relabelling may affect the long-run distribution of the chain since it need not fix absorbing states, so we make one further refinement in order to ensure that if two states are symmetric, then they behave in the same way:
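The relabelling condition can be checked mechanically for a small chain. The sketch below is an illustration only: the function names are hypothetical, the transition matrix is a nested list, and the search over all permutations is feasible only for a handful of states. The example chain, a symmetric random walk on {0, 1, 2, 3} with absorbing endpoints, is an assumption of this sketch; its only non-trivial automorphism is the reflection, which swaps the two absorbing states and therefore illustrates why a further refinement is needed.

```python
from itertools import permutations


def is_automorphism(T, phi, tol=1e-12):
    """True if the relabelling phi (a sequence with phi[s] the image of s)
    is a bijection that preserves every transition probability."""
    n = len(T)
    if sorted(phi) != list(range(n)):
        return False
    return all(
        abs(T[s][t] - T[phi[s]][phi[t]]) <= tol
        for s in range(n)
        for t in range(n)
    )


def are_symmetric(T, s, t):
    """Brute-force test of whether some automorphism maps state s to state t."""
    n = len(T)
    return any(
        phi[s] == t and is_automorphism(T, phi)
        for phi in permutations(range(n))
    )


# Symmetric random walk on {0, 1, 2, 3}; states 0 and 3 are absorbing.
WALK = [
    [1.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0],
    [0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    print(are_symmetric(WALK, 1, 2))            # reflection maps 1 to 2
    print(is_automorphism(WALK, (0, 2, 1, 3)))  # swapping 1 and 2 alone fails
```

In this chain, states 1 and 2 are symmetric, but the reflection that relates them exchanges the absorbing states 0 and 3.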

Definition 2.2 (Evolutionary equivalence). —

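States $s$ and $s'$ are evolutionarily equivalent if there exists an automorphism of the Markov chain, $\varphi \in \mathrm{Aut}(X)$, such that

(i) $\varphi(s) = s'$;

(ii) if μ is a stationary distribution of X, then $\mu(\varphi(t)) = \mu(t)$ for every state $t$ of the chain.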

For a Markov chain with absorbing states, the notions of symmetry and evolutionary equivalence of states need not coincide (see example B.16). However, if the Markov chain has a unique stationary distribution (as would be the case if it were irreducible), then symmetry implies evolutionary equivalence:

Proposition 2.3. —

If X has a unique stationary distribution, then two states are symmetric if and only if they are evolutionarily equivalent.

We show in appendix B that a Markov chain symmetry preserves the set of stationary distributions (lemma B.7), so if there is a unique stationary distribution, then condition (ii) of definition 2.2 is satisfied automatically by any symmetry. Proposition 2.3 is then an immediate consequence of this result.

If $s$ and $s'$ are evolutionarily equivalent, then it is clear for absorbing processes that the probability that $s$ fixates in absorbing state $s''$ is the same as the probability that $s'$ fixates in state $s''$ (and similarly for fixation times). If the process has a unique stationary distribution, then the symmetry of $s$ and $s'$ implies that this distribution puts the same mass on $s$ and $s'$. These properties follow at once from the fact that the states $s$ and $s'$ are equivalent up to relabelling.

2.2. Markov chains defined by evolutionary games

Our focus is on evolutionary games on fixed population structures. If S is a finite set of strategies (or ‘actions’) available to each player, and if the population size is N, then the state space of the Markov chain defined by an evolutionary game in such a population is $S^N$. For evolutionary games without random strategy mutations, the absorbing states of the chain are the monomorphic states, i.e. the strategy profiles in which every player uses the same strategy. Thus, states $\mathbf{s}$ and $\mathbf{s}'$ are evolutionarily equivalent if they are symmetric relative to the monomorphic states, i.e. if some automorphism maps $\mathbf{s}$ to $\mathbf{s}'$ while fixing each monomorphic state. On the other hand, evolutionary processes with strategy mutations are typically irreducible (and have unique stationary distributions); in these processes, the notions of symmetry and evolutionary equivalence coincide by proposition 2.3.

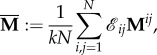

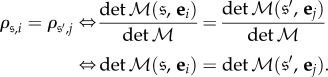

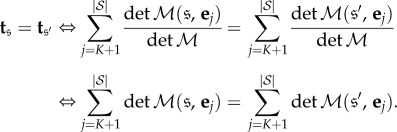

In order to state the definition of a homogeneous evolutionary process, we first need some notation. For $s, s' \in S$ and $i \in \{1, \dots, N\}$, we denote by $\mathbf{s}_{(s', i)}$ the state in $S^N$ whose ith coordinate is $s'$ and whose jth coordinate for $j \neq i$ is $s$; that is, all players are using strategy $s$ except for player i, who is using $s'$.

Definition 2.4 (Homogeneous evolutionary process). —

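An evolutionary process on $S^N$ is homogeneous if for each $s, s' \in S$, the states $\mathbf{s}_{(s', i)}$ and $\mathbf{s}_{(s', j)}$ (a single $s'$-player at location i or j, respectively, in a population of $s$-players) are evolutionarily equivalent for each $i, j \in \{1, \dots, N\}$. An evolutionary process is heterogeneous if it is not homogeneous.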

In other words, an evolutionary process is homogeneous if, at the level of the Markov chain it defines, any two states consisting of a single mutant in an otherwise-monomorphic population appear to be relabellings of one another. As noted in §2.1, all quantities with which one could measure evolutionary success are the same for these single-mutant states if the process is homogeneous.

3. Evolutionary games on graphs

We consider evolutionary games in graph-structured populations. Unless indicated otherwise, a ‘graph’ means a directed, weighted graph on N vertices. A directed graph is one in which the edges have orientations, meaning there may be an edge from i to j but not from j to i. Moreover, the edges carry weights, which we assume are non-negative real numbers. A directed, weighted graph is equivalent to a non-negative N × N matrix, $\mathcal{D}$, where there is an edge from i to j if and only if $\mathcal{D}_{ij} \neq 0$. If there is such an edge, then the weight of this edge is simply $\mathcal{D}_{ij}$. Since there is a one-to-one correspondence between directed, weighted graphs on N vertices and non-negative N × N real matrices, we refer to graphs and matrices using the same notation, describing $\mathcal{D}$ as a graph but using the matrix notation $\mathcal{D}_{ij}$ to indicate the weight of the edge from vertex i to vertex j.

Every graph considered here is assumed to be strongly connected, which means that for any two vertices, i and j, there is a directed path from i to j. This assumption is not very restrictive in evolutionary game theory since one can always partition a graph into its strongly connected components and study the behaviour of an evolutionary process on each of these components. Moreover, for evolutionary processes on graphs that are not strongly connected, it is possible to have both (i) recurrent non-monomorphic states in processes without mutations and (ii) multiple stationary distributions in processes with mutations. Some processes (such as the death–birth process) may not even be defined on graphs that are not strongly connected. Therefore, we focus on strongly connected graphs and make no further mention of the term ‘connected’.
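Strong connectivity of a weighted, directed graph given as a non-negative matrix can be tested with two graph searches, one along the edges and one against them. The following is a minimal sketch under the matrix convention described above; the function name and the nested-list representation are assumptions made for illustration.

```python
def is_strongly_connected(W):
    """W[i][j] > 0 means there is an edge from i to j. The graph is strongly
    connected if every vertex is reachable from vertex 0 and vertex 0 is
    reachable from every vertex."""
    n = len(W)

    def reaches_all(weight):
        # Depth-first search from vertex 0 using the supplied edge weights.
        seen = {0}
        stack = [0]
        while stack:
            i = stack.pop()
            for j in range(n):
                if weight(i, j) > 0 and j not in seen:
                    seen.add(j)
                    stack.append(j)
        return len(seen) == n

    forward = reaches_all(lambda i, j: W[i][j])   # follow edges i -> j
    backward = reaches_all(lambda i, j: W[j][i])  # follow edges in reverse
    return forward and backward


if __name__ == "__main__":
    directed_cycle = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
    directed_path = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
    print(is_strongly_connected(directed_cycle))
    print(is_strongly_connected(directed_path))
```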

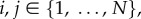

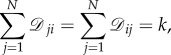

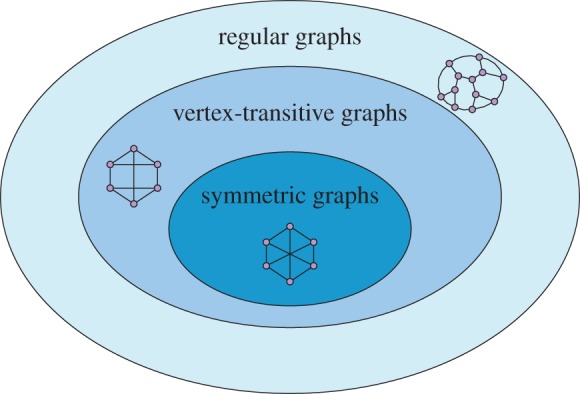

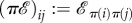

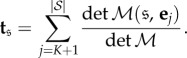

Since our goal is to discuss symmetry in the context of evolutionary processes, we first describe several notions of symmetry for graphs. The three types of graphs we treat here are regular, vertex-transitive and symmetric. Informally speaking, a regular graph is one in which each vertex has the same number of neighbouring vertices (and this number is known as the degree of the graph). A vertex-transitive graph is one that looks the same from any two vertices; based on the graph structure alone, a player cannot tell if he or she has been moved from one location to another. A symmetric (or arc-transitive) graph is one that looks the same from any two edges. That is, if two players are neighbours and are both moved to another pair of neighbouring vertices, then they cannot tell that they have been moved based on the structure of the graph alone. We recall in detail the formal definitions of these terms in appendix B. The relationships between these three notions of symmetry, as well as some examples, are illustrated in figure 1.

Figure 1.

Three different levels of symmetry for connected graphs. Regular graphs have the property that the degrees of the vertices are all the same. Vertex-transitive graphs look the same from each vertex and are necessarily regular. Symmetric (arc-transitive) graphs look the same from any two (directed) edges. Each of these containments is strict; there exist graphs that are regular but not vertex-transitive (figure 2) and vertex-transitive but not symmetric (figure 7a). (Online version in colour.)
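The gap between regularity and vertex-transitivity can be checked by brute force on small graphs. The sketch below assumes undirected, unweighted graphs given as 0/1 adjacency matrices, and its function names are hypothetical. The seven-vertex example (the complement of a disjoint triangle and four-cycle) is chosen here for illustration and does not appear in the text; it is 4-regular, yet no automorphism can move a triangle vertex to a four-cycle vertex.

```python
from itertools import permutations


def is_regular(A):
    """All vertices have the same degree (row sum of the adjacency matrix)."""
    return len({sum(row) for row in A}) == 1


def is_vertex_transitive(A):
    """Brute force: collect the images of vertex 0 under all automorphisms.
    Only practical for small graphs (n! permutations are scanned)."""
    n = len(A)
    orbit = {0}
    for p in permutations(range(n)):
        if p[0] in orbit:
            continue  # this image of vertex 0 is already known
        if all(A[i][j] == A[p[i]][p[j]] for i in range(n) for j in range(n)):
            orbit.add(p[0])
    return len(orbit) == n


def cycle_graph(n):
    return [[1 if (i - j) % n in (1, n - 1) else 0 for j in range(n)]
            for i in range(n)]


def complement_of_triangle_and_square():
    # Non-edges: triangle on {0, 1, 2} and four-cycle on {3, 4, 5, 6}.
    removed = {(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (5, 6), (3, 6)}
    return [[0 if i == j or (min(i, j), max(i, j)) in removed else 1
             for j in range(7)] for i in range(7)]


if __name__ == "__main__":
    G = complement_of_triangle_and_square()
    print(is_regular(G), is_vertex_transitive(G))
    print(is_regular(cycle_graph(5)), is_vertex_transitive(cycle_graph(5)))
```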

We now focus on evolutionary processes in graph-structured populations.

3.1. The Moran process

Consider the Moran process on a graph, $\mathcal{D}$. Lieberman et al. [3] show that if $\mathcal{D}$ is regular, then the fixation probability of a randomly placed mutant is given by equation (1.1), the fixation probability of a single mutant in the classical Moran process. This result (known as the Isothermal Theorem) proves that, in particular, this fixation probability does not depend on the initial location of the mutant. (We refer to this latter statement as the ‘weak’ version of the Isothermal Theorem.) Our definition of homogeneity in the context of evolutionary processes (definition 2.4) is related to this independence of initial location and has nothing to do with fixation probabilities in the classical Moran process. Naturally, the Isothermal Theorem raises the question of whether or not this location independence extends to absorption times (average number of steps until an absorbing state is reached) when $\mathcal{D}$ is regular.

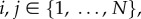

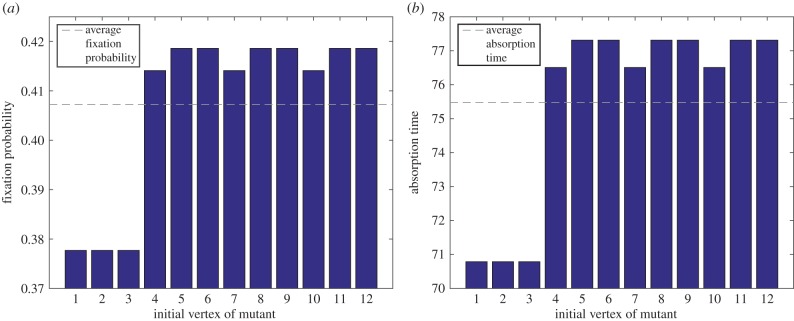

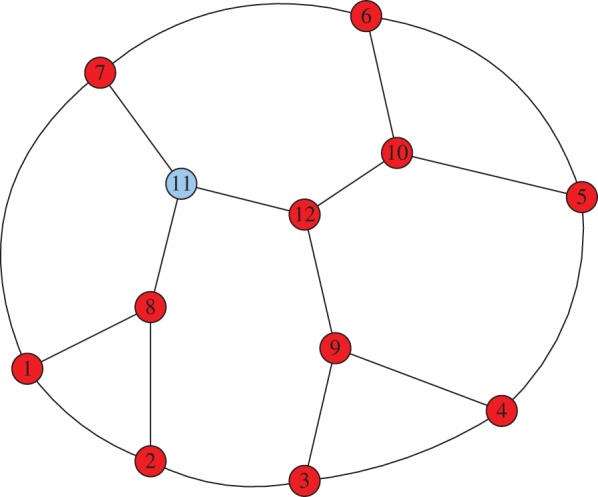

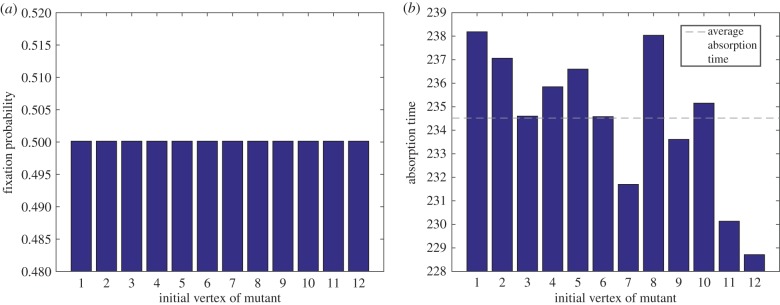

Suppose that the population structure, $\mathcal{D}$, is the Frucht graph of figure 2. The Frucht graph is an undirected, unweighted, regular (but not vertex-transitive) graph of size 12 and degree 3 [24]. The fixation probabilities and absorption times of a single mutant in a wild-type population are given in figure 3 as a function of the initial location of the mutant. The fixation probabilities do not depend on the initial location of the mutant, as predicted by the Isothermal Theorem, but the absorption times do depend on where the mutant arises. In fact, each initial location of the mutant yields a different absorption time. The details of these calculations are in appendix C. Therefore, even the weak form of the Isothermal Theorem fails to hold for absorption times. In particular, the Moran process on a regular graph need not define a homogeneous evolutionary process.
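Calculations of this kind can be reproduced for small graphs by solving the absorbing Markov chain exactly. The sketch below is not the procedure of appendix C. It is a minimal illustration that enumerates all 2^N configurations and solves the resulting linear systems with a plain Gaussian elimination, so it is practical only for small N. The function names and the seven-vertex regular (but not vertex-transitive) example graph are assumptions of this sketch. On any regular undirected graph, the computed fixation probabilities should agree with equation (1.1), whereas the absorption times need not coincide across vertices.

```python
from itertools import product


def solve(M, rhs):
    """Gaussian elimination with partial pivoting; rhs has several columns."""
    m = len(M)
    aug = [row[:] + b[:] for row, b in zip(M, rhs)]
    width = len(aug[0])
    for c in range(m):
        piv = max(range(c, m), key=lambda k: abs(aug[k][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for k in range(c + 1, m):
            f = aug[k][c] / aug[c][c]
            if f != 0.0:
                for j in range(c, width):
                    aug[k][j] -= f * aug[c][j]
    x = [[0.0] * (width - m) for _ in range(m)]
    for c in reversed(range(m)):
        for q in range(width - m):
            tail = sum(aug[c][j] * x[j][q] for j in range(c + 1, m))
            x[c][q] = (aug[c][m + q] - tail) / aug[c][c]
    return x


def moran_single_mutant_stats(A, r):
    """Fixation probability and mean absorption time (in updates) of a single
    mutant of fitness r, for each initial vertex, on the graph with adjacency
    (weight) matrix A. States are 0/1 tuples; 1 marks a mutant."""
    n = len(A)
    out_weight = [sum(row) for row in A]
    transient = [s for s in product((0, 1), repeat=n) if 0 < sum(s) < n]
    index = {s: k for k, s in enumerate(transient)}
    m = len(transient)
    all_mutant = (1,) * n
    M = [[0.0] * m for _ in range(m)]      # will hold I - Q
    rhs = [[0.0, 1.0] for _ in range(m)]   # columns: fixation, time
    for s in transient:
        k = index[s]
        M[k][k] += 1.0
        total = sum(r if x else 1.0 for x in s)
        for i in range(n):
            p_birth = (r if s[i] else 1.0) / total
            for j in range(n):
                if A[i][j] == 0:
                    continue
                p = p_birth * A[i][j] / out_weight[i]
                t = s[:j] + (s[i],) + s[j + 1:]  # offspring of i replaces j
                if t in index:
                    M[k][index[t]] -= p
                elif t == all_mutant:
                    rhs[k][0] += p
    sol = solve(M, rhs)
    single = [tuple(1 if j == i else 0 for j in range(n)) for i in range(n)]
    probs = [sol[index[s]][0] for s in single]
    times = [sol[index[s]][1] for s in single]
    return probs, times


def regular_not_vertex_transitive():
    # Complement of a disjoint triangle {0,1,2} and four-cycle {3,4,5,6}.
    removed = {(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (5, 6), (3, 6)}
    return [[0 if i == j or (min(i, j), max(i, j)) in removed else 1
             for j in range(7)] for i in range(7)]


if __name__ == "__main__":
    probs, times = moran_single_mutant_stats(regular_not_vertex_transitive(), 2.0)
    for v, (p, t) in enumerate(zip(probs, times)):
        print(v, round(p, 6), round(t, 6))
```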

Figure 2.

A single mutant (cooperator) at vertex 11 of the Frucht graph. In the Snowdrift Game, the probability that cooperators fixate depends on the initial location of this mutant on the Frucht graph (even if the intensity of selection is weak). (Online version in colour.)

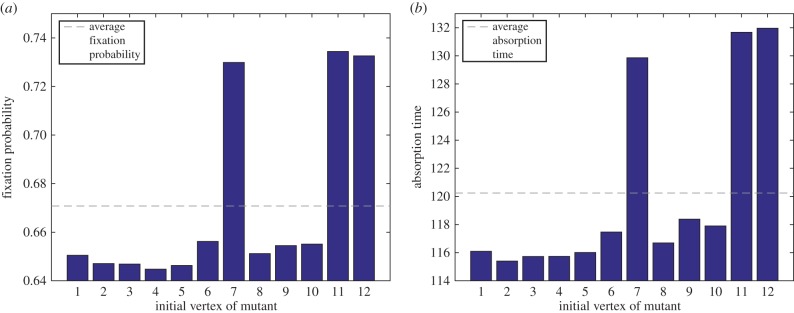

Figure 3.

(a) Fixation probability and (b) absorption time versus initial vertex of mutant for the Moran process on the Frucht graph. In both figures, the mutant has fitness r = 2 relative to the wild-type. As predicted by the Isothermal Theorem, the fixation probability does not depend on the initial location of the mutant. The absorption time (measured in number of updates) is different for each initial placement of the mutant on the Frucht graph. The precise values of these fixation probabilities and absorption times are in appendix C. (Online version in colour.)

This set-up involving two types of players, frequency-independent interactions, and a population structure defined by a single graph, can be generalized considerably:

3.2. Symmetric games

A powerful version of evolutionary graph theory uses two graphs to define relationships between the players: an interaction graph, $\mathcal{E}$, and a dispersal graph, $\mathcal{D}$ [25–29]. These graphs both have non-negative weights. As an example of how these two graphs are used to define an evolutionary process, we consider a birth–death process based on two-player, symmetric interactions:

Example 3.1. —

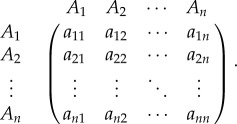

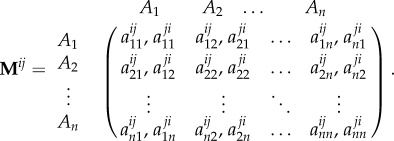

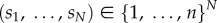

Consider a symmetric matrix game with n strategies, A1,…, An, and pay-off matrix

3.1 If

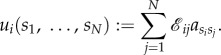

then the total pay-off to player i is

3.2 If

is the intensity of selection, then the fitness of player i is

3.3 In each time step, a player (say, player i) is chosen for reproduction with probability proportional to fitness. With probability

the offspring of this player adopts a novel strategy uniformly at random from {A1, …, An}; with probability 1 − ɛ, the offspring inherits the strategy of the parent. Next, another member of the population is chosen for death, with the probability of player j dying proportional to

The offspring then fills the vacancy created by the deceased neighbour, and the process repeats.

is called the ‘interaction’ graph since it governs pay-offs based on encounters, and

is called the ‘dispersal’ graph since it is involved in strategy propagation.
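One update of the birth–death process in this example can be sketched in a few lines. The sketch rests on two assumptions that should be read as illustrative rather than as the definitive forms of equations (3.2) and (3.3): the pay-off to player i is taken to be the sum over j of the interaction weight from i to j times the matrix entry for the pair of strategies, and fitness is taken to be exp(β × pay-off). Strategies are encoded as indices 0, …, n − 1, graphs as nested lists `E` (interaction) and `D` (dispersal), and all function names are hypothetical.

```python
import math
import random


def payoffs(E, M, s):
    """Total pay-off of each player: interaction weights times matrix entries
    (assumed form of the aggregate pay-off)."""
    n = len(s)
    return [sum(E[i][j] * M[s[i]][s[j]] for j in range(n)) for i in range(n)]


def fitnesses(E, M, s, beta):
    """Assumed pay-off-to-fitness mapping: exp(beta * pay-off)."""
    return [math.exp(beta * p) for p in payoffs(E, M, s)]


def birth_death_step(E, D, M, s, beta, eps, rng=random):
    """One update: birth proportional to fitness, mutation with probability
    eps, death of a player j chosen proportionally to D[i][j]. Row i of D
    must contain at least one positive weight."""
    n = len(s)
    parent = rng.choices(range(n), weights=fitnesses(E, M, s, beta))[0]
    if rng.random() < eps:
        strategy = rng.randrange(len(M))   # novel strategy, uniform
    else:
        strategy = s[parent]               # clonal inheritance
    dead = rng.choices(range(n), weights=D[parent])[0]
    new_state = list(s)
    new_state[dead] = strategy
    return tuple(new_state)


if __name__ == "__main__":
    complete3 = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
    game = [[1.0, 2.0], [3.0, 4.0]]        # illustrative pay-off values only
    state = (0, 1, 1)
    print(payoffs(complete3, game, state))
    print(birth_death_step(complete3, complete3, game, state, 1.0, 0.01))
```

Passing a seeded `random.Random` instance as `rng` makes a simulated trajectory reproducible.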

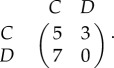

3.2.1. Heterogeneous evolutionary games

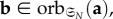

We now explore the ways in which population structure and strategy mutations can introduce heterogeneity into an evolutionary process. Consider the Snowdrift Game with strategies C (cooperate) and D (defect) and pay-off matrix

|

3.4 |

Suppose that both the interaction graph, $\mathcal{E}$, and the dispersal graph, $\mathcal{D}$, are the (undirected, unweighted) Frucht graph (figure 2). If the selection intensity is β = 1, then the fixation probability of a single cooperator in a population of defectors in a death–birth process depends on the initial location of the cooperator (figure 4). Since the Frucht graph is regular (but not vertex-transitive), this example demonstrates that the Isothermal Theorem does not extend to frequency-dependent games. In particular, symmetric games on regular graphs can be heterogeneous, and regularity of the graph does not imply that the ‘fixation probability of a randomly placed mutant’ is well defined. This dependence of the fixation probability on the initial location of the mutant is not specific to the Snowdrift Game or the death–birth update rule; one can show that it also holds for the Donation Game in place of the Snowdrift Game or the birth–death rule in place of the death–birth rule, for instance.

Figure 4.

(a) Fixation probability and (b) absorption time versus initial vertex of mutant (cooperator) for a death–birth process on the Frucht graph. In both figures, the game is a Snowdrift Game whose pay-offs are described by pay-off matrix (3.4), and the selection intensity is β = 1. Unlike the Moran process, this process is frequency-dependent, and it is evident that both fixation probabilities and absorption times (measured in number of updates) depend on the initial placement of the mutant. See appendix C for details. Notably, the three vertices (7, 11 and 12) for which both fixation probability and absorption time are highest are the only vertices in the Frucht graph not appearing in a three-cycle. (Online version in colour.)

With β = 1, the selection intensity is fairly strong, which raises the question of whether or not these fixation probabilities still differ if selection is weak. In fact, our observation for this value of β is not an anomaly: suppose that $\mathbf{s}$ and $\mathbf{s}'$ are states (indicating some non-monomorphic initial configuration of strategies), and that $\mathbf{a}$ and $\mathbf{a}'$ are monomorphic absorbing states (indicating states in which each player uses the same strategy). Let $\rho_{\mathbf{s}, \mathbf{a}}$ denote the probability that state $\mathbf{a}$ is reached after starting in state $\mathbf{s}$, and let $t_{\mathbf{s}}$ denote the average number of updates required for the process to reach an absorbing state after starting in state $\mathbf{s}$. Each of $\rho_{\mathbf{s}, \mathbf{a}}$ and $t_{\mathbf{s}}$ may be viewed as a function of β, and we have the following result:

Proposition 3.2. —

Each of the equalities

$$\rho_{\mathbf{s}, \mathbf{a}} = \rho_{\mathbf{s}', \mathbf{a}'} \tag{3.5a}$$

and

$$t_{\mathbf{s}} = t_{\mathbf{s}'} \tag{3.5b}$$

holds for either (i) every $\beta \geq 0$ or (ii) at most finitely many $\beta \geq 0$. Thus, if one of these equalities fails to hold for even a single value of β, then it fails to hold for all sufficiently small β > 0.

For a proof of proposition 3.2, see appendix A. This result allows one to conclude that if there are differences in fixation probabilities or times for large values of β (where these differences are more apparent), then there are corresponding differences in the limit of weak selection.

Even if a symmetric game is played in a well-mixed population, heterogeneous strategy mutations may result in heterogeneity of the evolutionary process. Consider, for example, the pairwise comparison process [17,18] based on the symmetric Snowdrift Game, (3.4), in a well-mixed population with N = 3 players. We model this well-mixed population using a complete, undirected, unweighted graph of size 3 for each of the two graphs that make up the evolutionary graph (figure 5). For each player i, let ɛi be the strategy-mutation (‘exploration’) rate for player i. These strategy mutations are incorporated into the process as follows: at each time step, a focal player (player i) is chosen uniformly at random to update his or her strategy. A neighbour (one of the two remaining players) is then chosen at random as a model player. If β is the selection intensity, πf is the pay-off of the focal player, and πm is the pay-off of the model player, then the focal player imitates the strategy of the model player with probability

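$$\left(1-\varepsilon_i\right)\frac{1}{1+e^{-\beta\left(\pi_m-\pi_f\right)}} \tag{3.6}$$

and chooses to retain his or her strategy with probability

$$\left(1-\varepsilon_i\right)\frac{1}{1+e^{+\beta\left(\pi_m-\pi_f\right)}} \tag{3.7}$$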

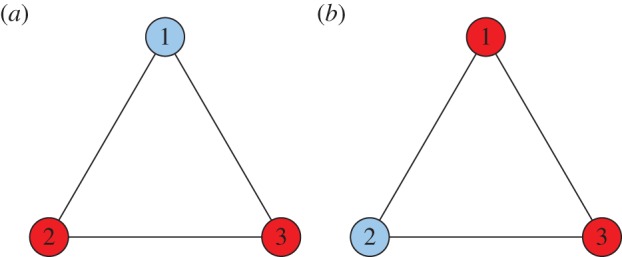

Figure 5.

Two states consisting of a single cooperator (mutant) among defectors (the wild-type) in a well-mixed population of size N = 3. Despite the spatial symmetry of this population, an evolutionary game on this graph can be heterogeneous as a result of heterogeneous strategy mutations or asymmetric pay-offs. (Online version in colour.)

With probability ɛi, the focal player adopts a new strategy uniformly at random from the set {C, D}, irrespective of the current strategy. Provided at least one of ɛ1, ɛ2 and ɛ3 is positive, the Markov chain on $\{C, D\}^3$ defined by this process is irreducible and has a unique stationary distribution, μ. Let ɛ1 = 0.01 and ɛ2 = ɛ3 = 0. Since the mutation rate depends on the location, i, the strategy mutations are heterogeneous. If the selection intensity is β = 1, then a direct calculation (to four significant figures) gives

| 3.8 |

where μ(C, D, D) (resp. μ(D, C, D)) is the mass μ places on the state (C, D, D) (resp. (D, C, D)). Therefore, by proposition 2.3 and definition 2.4, this evolutionary process is not homogeneous, despite the fact that the population is well mixed and the game is symmetric. This result is not particularly surprising, but it clearly illustrates the effects of heterogeneous strategy-mutation rates on symmetries of the overall process.
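The calculation described above can be sketched numerically. The following is a minimal illustration, not the authors' code: the Snowdrift pay-off values are hypothetical stand-ins for matrix (3.4), pay-offs are assumed to be summed over both opponents, and the helper names (`transition_matrix`, `stationary`) are introduced here for illustration only. The printed numbers therefore need not match (3.8), but the qualitative asymmetry between locations should be visible.

```python
import itertools
import math

# Hypothetical Snowdrift pay-offs (own strategy, opponent strategy); the
# entries of pay-off matrix (3.4) are not reproduced here, so these are assumed.
PAYOFF = {("C", "C"): 5.0, ("C", "D"): 3.0, ("D", "C"): 7.0, ("D", "D"): 0.0}
N = 3
STATES = list(itertools.product("CD", repeat=N))
INDEX = {s: k for k, s in enumerate(STATES)}


def payoff(state, i):
    """Pay-off to player i, summed over games with the other two players (assumed)."""
    return sum(PAYOFF[(state[i], state[j])] for j in range(N) if j != i)


def transition_matrix(beta, eps):
    """Row-stochastic matrix of the pairwise comparison process with mutation rates eps."""
    T = [[0.0] * len(STATES) for _ in STATES]
    for s in STATES:
        row = T[INDEX[s]]
        for i in range(N):  # focal player i is chosen with probability 1/N
            for new in "CD":  # mutation: uniform draw from {C, D}
                row[INDEX[s[:i] + (new,) + s[i + 1:]]] += eps[i] / (2 * N)
            for m in range(N):  # model player m, uniform over the other players
                if m == i:
                    continue
                weight = (1 - eps[i]) / (N * (N - 1))
                imitate = 1 / (1 + math.exp(-beta * (payoff(s, m) - payoff(s, i))))
                row[INDEX[s[:i] + (s[m],) + s[i + 1:]]] += weight * imitate
                row[INDEX[s]] += weight * (1 - imitate)
    return T


def stationary(T):
    """Solve mu T = mu with sum(mu) = 1 by Gauss-Jordan elimination."""
    n = len(T)
    A = [[T[j][i] - (i == j) for j in range(n)] + [0.0] for i in range(n)]
    A[-1] = [1.0] * (n + 1)  # replace one balance equation by the normalisation
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


mu = stationary(transition_matrix(beta=1.0, eps=(0.01, 0.0, 0.0)))
for s in [("C", "D", "D"), ("D", "C", "D"), ("D", "D", "C")]:
    print(s, round(mu[INDEX[s]], 6))
```

Because players 2 and 3 have the same mutation rate, the states (D, C, D) and (D, D, C) should receive the same mass, while (C, D, D) should not.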

3.2.2. Homogeneous evolutionary games

The behaviour of an evolutionary process sometimes depends heavily on the choice of update rule. As a result, a particular problem in evolutionary game theory is often stated (such as the evolution of cooperation) and subsequently explored separately for a number of different update rules. For example, consider the Donation Game (an instance of the Prisoner's Dilemma) in which cooperators pay a cost, c, in order to provide the opponent with a benefit, b. Defectors pay no costs and provide no benefits. On a large regular graph of degree k, Ohtsuki et al. [10] show that selection favours cooperation in the death–birth process if b/c > k, but selection never favours cooperation in the birth–death process. Therefore, the approach of exploring a problem in evolutionary game theory separately for several update rules has its merits. On the other hand, one might expect that high degrees of symmetry in the population structure, pay-offs and strategy mutations induce symmetries in an evolutionary game for a variety of update rules.

Before stating our main theorem for symmetric matrix games, we must first understand the basic components that make up an evolutionary game. Evolutionary games generally have two timescales: interactions and updates. In each (discrete) time step, every player in the population has a strategy, and this strategy profile determines the state of the population. Neighbours then interact (quickly) and receive pay-offs based on these strategies and the game(s) being played. The total pay-off to a player determines his or her fitness. In the update step of the process, the strategies of the players are updated stochastically based on the fitness profile of the population, the population structure and the strategy mutations. Popular examples of evolutionary update rules are birth–death, death–birth, imitation, pairwise comparison and Wright–Fisher. Since interactions happen much more quickly than updates, there is a separation of timescales.

The most difficult part of an evolutionary game to describe in generality is the update step. If S is the strategy set of the game and N is the population size, then a state of the population is simply an element of $S^N$, i.e. a specification of a strategy for each member of the population. Implicit in the state space of the population being $S^N$ is an enumeration of the players. That is, if $\mathbf{s} = (s_1, \ldots, s_N)$ is an N-tuple of strategies, then this profile indicates that player i uses strategy $s_i$. For our purposes, we need only one property to be satisfied by the update rule of the process, which we state here as an axiom of an evolutionary game:

Axiom. —

The update rule of an evolutionary game is independent of the enumeration of the players.

Remark 3.3. —

As an example of what this axiom means, consider a death–birth process in which a player is selected uniformly at random for death and is replaced by the offspring of a neighbour. A neighbour is chosen for reproduction with probability proportional to fitness, and the offspring of this neighbour inherits the strategy of the parent with probability 1 − ɛ and takes on a novel strategy with probability ɛ for some ɛ > 0. If all else is held constant (fitness, mutations, etc.), the fact that a player is referred to as the player at location i is irrelevant. To make this precise, let $\mathfrak{S}_N$ be the symmetric group on N letters. If $\pi \in \mathfrak{S}_N$ is a permutation that relabels the locations of the players by sending i to $\pi^{-1}(i)$, then the strategy of the player at location $\pi^{-1}(i)$ after the relabelling is the same as the strategy of the player at location i before the relabelling. In particular, if $\mathbf{s}$ is the state of the population before the relabelling, then $\pi\mathbf{s}$ is the state of the population after the relabelling, where $(\pi\mathbf{s})_i = s_{\pi(i)}$ for each i. The probability that player $\pi^{-1}(i)$ is selected for death and replaced by the offspring of player $\pi^{-1}(j)$ after the relabelling is the same as the probability that player i is selected for death and replaced by the offspring of player j before the relabelling. Thus, for this death–birth process, the probability of transitioning between states $\mathbf{s}$ and $\mathbf{s}'$ before the relabelling is the same as the probability of transitioning between states $\pi\mathbf{s}$ and $\pi\mathbf{s}'$ after the relabelling. In this sense, a relabelling of the players induces an automorphism of the Markov chain defined by the process (in the sense of definition 2.1), and the axiom states that this phenomenon should hold for any evolutionary update rule.
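As a rough numerical illustration of this remark, the sketch below checks whether a relabelling preserves every one-step transition probability of a death–birth process without mutation. The pay-off values, the exponential pay-off-to-fitness mapping, the summed pay-offs and all function names are assumptions made for the example. The relabelling acts on states by (πs)_i = s_{π(i)}; it is a symmetry of the chain when it also preserves the graph (a rotation of a 4-cycle) and fails to be one otherwise (the same rotation applied to a path).

```python
import itertools
import math

PAYOFF = [[0.0, 7.0], [3.0, 5.0]]  # hypothetical: PAYOFF[own][other], 1 = mutant


def db_transition(adj, s, t, beta=0.1):
    """Probability of moving from state s to state t in one death-birth step."""
    n = len(adj)
    # Assumed mapping: fitness = exp(beta * pay-off summed over neighbours).
    fit = [math.exp(beta * sum(PAYOFF[s[i]][s[j]] for j in adj[i])) for i in range(n)]
    prob = 0.0
    for i in range(n):  # player i dies with probability 1/n
        total = sum(fit[j] for j in adj[i])
        for j in adj[i]:  # neighbour j reproduces with probability fit[j] / total
            if s[:i] + (s[j],) + s[i + 1:] == t:
                prob += fit[j] / (n * total)
    return prob


def relabel(pi, s):
    """Relabelled state, (pi s)_i = s_{pi(i)}."""
    return tuple(s[pi[i]] for i in range(len(s)))


def is_chain_symmetry(adj, pi):
    """True if relabelling by pi preserves every transition probability."""
    states = list(itertools.product((0, 1), repeat=len(adj)))
    return all(
        abs(db_transition(adj, s, t)
            - db_transition(adj, relabel(pi, s), relabel(pi, t))) < 1e-12
        for s in states for t in states
    )


cycle = [[1, 3], [0, 2], [1, 3], [2, 0]]
path = [[1], [0, 2], [1, 3], [2]]
rotation = [1, 2, 3, 0]  # an automorphism of the cycle, but not of the path

print(is_chain_symmetry(cycle, rotation))
print(is_chain_symmetry(path, rotation))
```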

In order to state our main result for symmetric games, we note that an evolutionary graph, Γ, in this setting consists of two graphs. We say that Γ is regular if both of these graphs are regular. For vertex-transitivity of Γ (resp. symmetry of Γ), we require slightly more than each of the two graphs being vertex-transitive (resp. symmetric); we require them to be simultaneously vertex-transitive (resp. symmetric). First of all, we need to define what an automorphism of Γ is. A permutation, π, of the vertices acts on each of the two graphs by relabelling its vertices, with the relabelled graph defined entrywise for each i and j. Using this action, we define an automorphism of an evolutionary graph as follows:

Definition 3.4 (Automorphism of an evolutionary graph). —

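An automorphism of Γ is an action, π, of this kind under which both graphs of Γ are invariant; that is, relabelling the vertices according to π leaves each of the two graphs unchanged.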

We denote by Aut(Γ) the set of automorphisms of Γ.

We now have the definitions of vertex-transitive and symmetric evolutionary graphs:

Definition 3.5. —

Γ is vertex-transitive if, for each i and j, there exists π ∈ Aut(Γ) such that π(i) = j.

Definition 3.6. —

Γ is symmetric if its two graphs coincide and this common graph is a symmetric graph.

Finally, using the notion of an automorphism of Γ, we have our main result:

Theorem 3.7. —

Consider an evolutionary matrix game on a graph, Γ, with symmetric pay-offs and homogeneous strategy mutations. If π ∈ Aut(Γ), then the states with a single mutant at vertex i and π(i), respectively, in an otherwise-monomorphic population, are evolutionarily equivalent. That is, in the notation of definition 2.4, the single-mutant states at vertices i and π(i) are evolutionarily equivalent for each i.

The proof of theorem 3.7 may be found in appendix B. The proof relies on the observation that the hypotheses of the theorem imply that the two states in question, each consisting of a single A-player in a population of B-players, can be obtained from one another by relabelling the players. Thus, in light of the argument in remark 3.3, relabelling the players induces an automorphism on the Markov chain defined by the evolutionary game. Since any relabelling of the players leaves the monomorphic states fixed, there is an evolutionary equivalence between any two such states in the sense of definition 2.2. Note that this theorem makes no restrictions on the selection strength or the update rule.

Corollary 3.8. —

An evolutionary game on a vertex-transitive graph with symmetric pay-offs and homogeneous strategy mutations is itself homogeneous.

Remark 3.9. —

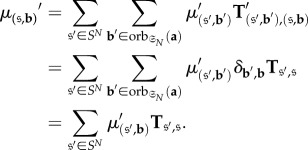

By theorem 3.7, two mutants appearing on a graph might define evolutionarily equivalent states even if the graph is not vertex-transitive. For example, the Tietze graph [30], like the Frucht graph, has 12 vertices and is regular but not vertex-transitive. However, unlike the Frucht graph, the Tietze graph has some non-trivial automorphisms. By theorem 3.7, any two vertices in the Tietze graph that are related by an automorphism have the property that the two corresponding single-mutant states are indistinguishable. An example of two evolutionarily equivalent states on this graph is given in figure 6. In appendix C, for the Snowdrift Game with death–birth updating, we give the fixation probabilities and absorption times for all configurations of a single cooperator among defectors, which further illustrates the effects of graph symmetries on an evolutionary process.

Figure 6.

The Tietze graph with two different initial configurations. Like the Frucht graph, the Tietze graph is regular of degree k = 3 (but not vertex-transitive) with N = 12 vertices. Unlike the Frucht graph, the Tietze graph possesses non-trivial automorphisms. In (a), a cooperator is at vertex 6 and all other players are defectors. In (b), a cooperator is at vertex 11 and, again, the other players are defectors. Despite the fact that the Tietze graph is not vertex-transitive, the single-mutant states defined by (a) and (b) are evolutionarily equivalent. Graphically, this result is clear since one obtains (a) from (b) by flipping the graph (i.e. applying an automorphism), and such a difference between the two states does not affect fixation probabilities, times, etc. However, it is not true that any two single-mutant states are evolutionarily equivalent. For example, in the Snowdrift Game with β = 0.1 and death–birth updating, the single-mutant state with a cooperator at vertex 1 (resp. vertex 6) has a fixation probability of 0.3777 (resp. 0.4186). Therefore, the two single-mutant states with cooperators at vertices 1 and 6, respectively, are not evolutionarily equivalent, so this process is not homogeneous. (Online version in colour.)
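The role of automorphisms can be illustrated on graphs small enough to solve by hand. The sketch below is an illustration under stated assumptions rather than the calculation behind figure 6: it uses a 4-cycle and a 4-vertex star instead of the Tietze graph, hypothetical pay-off values, summed pay-offs, an exponential pay-off-to-fitness mapping and death–birth updating without mutation. The function names are introduced here for the example. On the cycle (vertex-transitive) all single-mutant states should have the same fixation probability; on the star (not vertex-transitive) the three leaves are related by automorphisms and should agree with one another but not with the centre.

```python
import itertools
import math

PAYOFF = [[0.0, 7.0], [3.0, 5.0]]  # hypothetical symmetric game: PAYOFF[own][other]


def solve(A):
    """Gauss-Jordan elimination on an augmented matrix; returns the solution vector."""
    n = len(A)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col and A[r][col] != 0.0:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


def single_mutant_fixation(adj, beta=0.1):
    """Fixation probability of type 1 from each single-mutant state (death-birth)."""
    n = len(adj)
    states = list(itertools.product((0, 1), repeat=n))
    index = {s: k for k, s in enumerate(states)}
    m = len(states)
    A = [[0.0] * (m + 1) for _ in range(m)]  # rows of (I - T) with right-hand side
    for s in states:
        k = index[s]
        A[k][k] = 1.0
        if sum(s) in (0, n):  # monomorphic states are absorbing
            A[k][m] = float(sum(s) == n)
            continue
        fit = [math.exp(beta * sum(PAYOFF[s[i]][s[j]] for j in adj[i])) for i in range(n)]
        for i in range(n):  # player i dies with probability 1/n
            total = sum(fit[j] for j in adj[i])
            for j in adj[i]:  # neighbour j reproduces with probability fit[j] / total
                t = s[:i] + (s[j],) + s[i + 1:]
                A[k][index[t]] -= fit[j] / (n * total)
    rho = solve(A)
    return [rho[index[tuple(int(v == i) for v in range(n))]] for i in range(n)]


cycle_rho = single_mutant_fixation([[1, 3], [0, 2], [1, 3], [2, 0]])
star_rho = single_mutant_fixation([[1, 2, 3], [0], [0], [0]])  # vertex 0 is the centre
print("cycle:", [round(x, 4) for x in cycle_rho])
print("star: ", [round(x, 4) for x in star_rho])
```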

Remark 3.10. —

For a given population size, N, and network degree, k, there may be many vertex-transitive graphs of size N with degree k. For each such graph, the fixation probability of a randomly occurring mutant is independent of where on the graph it occurs by theorem 3.7. However, this fixation probability depends on more than just N and k; it also depends on the configuration of the network. For example, figure 7 gives two vertex-transitive graphs of size N = 6 and degree k = 3. As an illustration, consider the Snowdrift Game (with pay-off matrix (3.4)) on these graphs with birth–death updating. If the selection intensity is β = 0.1, then the fixation probability of a single cooperator in a population of defectors is 0.1632 in (a) and 0.1722 in (b) (both rounded to four significant figures). These two fixation probabilities differ for all but finitely many values of β by proposition 3.2.

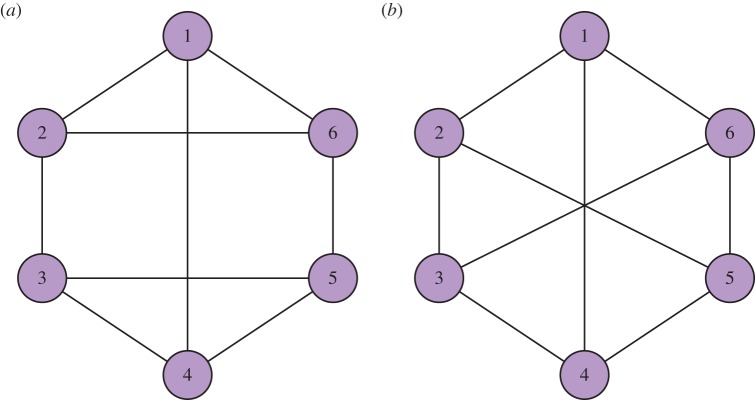

Figure 7.

Undirected, unweighted, vertex-transitive graphs of degree k = 3 with N = 6 vertices; (b) is a symmetric (arc-transitive) graph and (a) is not. (Online version in colour.)
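The comparison in remark 3.10 can be sketched for the two connected cubic graphs on six vertices, the triangular prism and the complete bipartite graph K3,3, which are assumed here to be the graphs of figure 7a and 7b, respectively. The pay-off values below are hypothetical stand-ins for matrix (3.4), and summed pay-offs with an exponential pay-off-to-fitness mapping are assumed, so the output is not expected to reproduce 0.1632 and 0.1722. The sketch only illustrates that the fixation probability is the same at every vertex within each graph yet can differ between the two graphs.

```python
import itertools
import math

PAYOFF = [[0.0, 7.0], [3.0, 5.0]]  # hypothetical Snowdrift values: PAYOFF[own][other], 1 = cooperator


def solve(A):
    """Gauss-Jordan elimination on an augmented matrix; returns the solution vector."""
    n = len(A)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col and A[r][col] != 0.0:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


def bd_single_mutant_fixation(adj, beta=0.1):
    """Fixation probability of a single cooperator at each vertex (birth-death)."""
    n = len(adj)
    states = list(itertools.product((0, 1), repeat=n))
    index = {s: k for k, s in enumerate(states)}
    m = len(states)
    A = [[0.0] * (m + 1) for _ in range(m)]  # rows of (I - T) with right-hand side
    for s in states:
        k = index[s]
        A[k][k] = 1.0
        if sum(s) in (0, n):  # monomorphic states are absorbing
            A[k][m] = float(sum(s) == n)
            continue
        fit = [math.exp(beta * sum(PAYOFF[s[i]][s[j]] for j in adj[i])) for i in range(n)]
        total = sum(fit)
        for j in range(n):  # player j reproduces with probability fit[j] / total
            for i in adj[j]:  # offspring replaces a uniformly chosen neighbour i
                t = s[:i] + (s[j],) + s[i + 1:]
                A[k][index[t]] -= fit[j] / (total * len(adj[j]))
    rho = solve(A)
    return [rho[index[tuple(int(v == i) for v in range(n))]] for i in range(n)]


prism = [[1, 2, 3], [0, 2, 4], [0, 1, 5], [4, 5, 0], [3, 5, 1], [3, 4, 2]]
k33 = [[3, 4, 5], [3, 4, 5], [3, 4, 5], [0, 1, 2], [0, 1, 2], [0, 1, 2]]
prism_rho = bd_single_mutant_fixation(prism)
k33_rho = bd_single_mutant_fixation(k33)
print("prism:", round(prism_rho[0], 4), " K3,3:", round(k33_rho[0], 4))
```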

Until this point, our focus has been on states consisting of just a single mutant in an otherwise-monomorphic population. One could also inquire as to when any two states consisting of two (or three, four, etc.) mutants are evolutionarily equivalent. It turns out that the answer to this question is simple: in general, the population must be well mixed in order for any two m-mutant states to be evolutionarily equivalent if m > 1. The proof that this equivalence holds in well-mixed populations follows from the argument given to establish theorem 3.7 (see appendix B). On the other hand, if the population is not well mixed, then one can find a pair of states with the first state consisting of two mutants on neighbouring vertices and the second state consisting of two mutants on non-neighbouring vertices. In general, the mutant type will have different fixation probabilities in these two states. For example, in the Snowdrift Game on the graph of figure 7b, consider two states: the first consists of cooperators on vertices 1 and 2 only, and the second consists of cooperators on vertices 1 and 3 only. If β = 0.1, then the fixation probability of cooperators under death–birth updating when starting at the first (resp. second) state is 0.3126 (resp. 0.2607). Therefore, despite the arc-transitivity of this graph, it is not true that any two states consisting of exactly two mutants are evolutionarily equivalent. Only in well-mixed populations are we guaranteed that any two such states are equivalent.

3.3. Asymmetric games

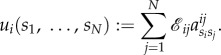

One particular form of pay-off asymmetry appearing in evolutionary game theory is ecological asymmetry [21]. Ecological asymmetry can arise as a result of an uneven distribution of resources. For example, in the Donation Game, a cooperator at location i might provide a benefit to his or her opponent based on some resource derived from the environment. Both this resource and the cost of donating it could depend on i, which means that different players have different pay-off matrices. These pay-off matrices depend on both the location of the focal player and the locations of the opponents. Thus, pay-offs for a player at location i against an opponent at location j in an n-strategy ‘bimatrix’ game [21,31,32] are given by the asymmetric pay-off matrix

| 3.9 |

Similar to equation (3.2), the total pay-off to player i for a given strategy profile is

| 3.10 |

We saw in §3.2 an example of a heterogeneous evolutionary game in a well-mixed population with symmetric pay-offs. Rather than looking at a symmetric game with heterogeneous strategy mutations, we now look at an asymmetric game with homogeneous strategy mutations. Consider the ecologically asymmetric Donation Game on the graph of figure 5 (used for both graphs of Γ) with a death–birth update rule. In this asymmetric Donation Game, a cooperator at location i donates bi at a cost of ci; defectors donate nothing and incur no costs. If β = 0.1, b1 = b2 = b3 = 4, c1 = 1 and c2 = c3 = 3, then the fixation probability of a single cooperator at location 1 (figure 5a) is 0.2232, while the fixation probability of a single cooperator at location 2 (figure 5b) is 0.1842 (both rounded to four significant figures). Therefore, even in a well-mixed population with homogeneous strategy mutations (none, in this case), asymmetric pay-offs can prevent an evolutionary game from being homogeneous.
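This example is small enough to check with a short script. The sketch below rests on two assumptions that are not stated in this passage: pay-offs are summed over both opponents, and fitness is exp(β × pay-off). The function names are introduced for illustration. Under these assumptions the computed fixation probabilities appear to agree closely with the values quoted above, and the states with a cooperator at location 2 or at location 3 coincide because c2 = c3.

```python
import itertools
import math

B = [4.0, 4.0, 4.0]  # benefit donated by a cooperator at each location
C = [1.0, 3.0, 3.0]  # cost paid (per interaction) by a cooperator at each location
N = 3


def solve(A):
    """Gauss-Jordan elimination on an augmented matrix; returns the solution vector."""
    n = len(A)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col and A[r][col] != 0.0:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


def fixation_probabilities(beta=0.1):
    """Cooperator fixation probability from every state (death-birth, complete graph)."""
    states = list(itertools.product((0, 1), repeat=N))  # 1 = cooperator
    index = {s: k for k, s in enumerate(states)}
    m = len(states)
    A = [[0.0] * (m + 1) for _ in range(m)]  # rows of (I - T) with right-hand side
    for s in states:
        k = index[s]
        A[k][k] = 1.0
        if sum(s) in (0, N):  # monomorphic states are absorbing
            A[k][m] = float(sum(s) == N)
            continue
        # Pay-off to i: benefits received minus costs paid, summed over both opponents.
        pay = [sum(B[j] * s[j] - C[i] * s[i] for j in range(N) if j != i) for i in range(N)]
        fit = [math.exp(beta * p) for p in pay]  # assumed pay-off-to-fitness mapping
        for i in range(N):  # player i dies with probability 1/N
            others = [j for j in range(N) if j != i]
            total = sum(fit[j] for j in others)
            for j in others:  # neighbour j reproduces with probability fit[j] / total
                t = s[:i] + (s[j],) + s[i + 1:]
                A[k][index[t]] -= fit[j] / (N * total)
    rho = solve(A)
    return {s: rho[index[s]] for s in states}


rho = fixation_probabilities()
print("cooperator at location 1:", round(rho[(1, 0, 0)], 4))
print("cooperator at location 2:", round(rho[(0, 1, 0)], 4))
```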

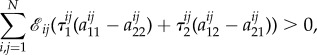

Asymmetric matrix games in large populations reduce to symmetric games if selection is weak [21]. In the limit of weak selection, McAvoy & Hauert [33] establish a selection condition for asymmetric matrix games in finite graph-structured populations that extends the condition (for symmetric games) of Tarnita et al. [34]:

Theorem 3.11 (McAvoy & Hauert [33]). —

There exists a set of structure coefficients,

independent of pay-offs, such that weak selection favours strategy

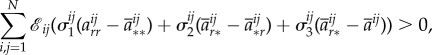

if and only if

3.11 where

and

Strictly speaking, theorem 3.11 is established for the case in which the two graphs of Γ are undirected, unweighted and coincide. However, the proof of theorem 3.11 extends immediately to the case in which these graphs are directed, weighted and possibly distinct, so we make no restrictive assumptions on them in the statement of this theorem here. In the simpler case n = 2, condition (3.11) takes the form

| 3.12 |

for some collection of structure coefficients. For the death–birth process in which both graphs of Γ are the graph of figure 7a, we calculate exact values for all of these structure coefficients (see appendix C). In particular, we find that their values vary with the locations i and j, so vertex-transitivity does not guarantee that the structure coefficients are independent of i and j. For the same process on the graph in figure 7b, we find that each coefficient takes the same value for each i and j, so these coefficients do not depend on i and j. (In general, even for well-mixed populations, τ1 and τ2 need not be the same; for the same process studied here but on the graph of figure 5, τ1 and τ2 take different values, each of which is the same for each i and j.) This lack of dependence on i and j is due to the fact that the graph of figure 7b is symmetric, and it turns out to be a special case of a more general result:

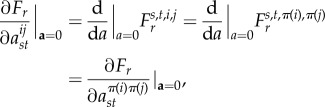

Theorem 3.12. —

Suppose that an asymmetric matrix game with homogeneous strategy mutations is played on an evolutionary graph, Γ. For each π ∈ Aut(Γ) and each i and j,

| 3.13 |

The proof of theorem 3.12 may be found in appendix B. The following corollary is an immediate consequence of theorem 3.12:

Corollary 3.13. —

If the two graphs of Γ coincide and this common graph is a symmetric graph (i.e. Γ is a symmetric evolutionary graph), then the structure coefficients are independent of i and j.

Since symmetric graphs are also regular, we have:

Corollary 3.14. —

If

and

is a symmetric graph (i.e. Γ is a symmetric evolutionary graph), then strategy r is favoured in the limit of weak selection if and only if

3.14 where

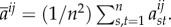

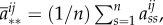

is the spatial average of the matrices Mij, i.e.

3.15 where k is the degree of the graph, Γ.

Remark 3.15. —

Equation (3.14) is just the selection condition of Tarnita et al. [34] for symmetric matrix games.

It follows from corollary 3.14 that asymmetric matrix games on arc-transitive (symmetric) graphs can be reduced to symmetric games in the limit of weak selection.

4. Discussion

Evolutionary games in finite populations may be split into two classes: those with absorbing states (‘absorbing processes’) and those without absorbing states. In absorbing processes, the notion of fixation probability has played a crucial role in quantifying evolutionary outcomes, but fixation probabilities are far from the only measure of evolutionary success. Much of the literature on evolutionary games with absorbing states has neglected other metrics such as the time to absorption or the time to fixation conditioned on fixation occurring (‘conditional fixation time’). This bias towards fixation probabilities has resulted in certain evolutionary processes appearing more symmetric than they actually are. We have illustrated this phenomenon using the frequency-independent Moran process on graphs: the Isothermal Theorem guarantees that, on regular graphs, a single mutant cannot distinguish between initial locations in the graph if the only metric under consideration is the probability of fixation. However, certain initial placements of the mutant may result in faster absorption times than others if the graph is regular but not vertex-transitive, and the Frucht graph exemplifies this claim. The same phenomenon also holds for conditional fixation times.

The Frucht graph, which is a regular structure with no non-trivial symmetries (there are no two vertices from which the graph ‘looks’ the same), also allowed us to show that the Isothermal Theorem of Lieberman et al. [3] does not extend to frequency-dependent evolutionary games. That is, on regular graphs, the probability of fixation of a single mutant may depend on the initial location of the mutant if fitness is frequency-dependent. This claim was illustrated via a death–birth process on the Frucht graph, in which the underlying evolutionary game was a Snowdrift Game. For β = 1 (strong selection), the fixation probability of a cooperator at vertex 11 was nearly 14% larger than the fixation probability of a cooperator at vertex 4. Moreover, we showed that if the fixation probabilities of two initial configurations differ for a single value of β, then they are the same for at most finitely many values of β. In particular, these fixation probabilities differ for almost every selection strength, so our observation for β = 1 was not an anomaly. Similar phenomena are observed for frequency-dependent birth–death processes on the Frucht graph, for example, and even for frequency-dependent games with the ‘equal gains from switching’ property, such as the Donation Game.

Theorem 3.7 is an analogue of the Isothermal Theorem that applies to a broader class of games and update rules. The Isothermal Theorem is remarkable since regularity of the population structure implies that the fixation probabilities are not only independent of the initial location of the mutant, they are the same as those of the classical Moran process. Our treatment of homogeneous evolutionary processes is focused on when different single-mutant states are equivalent, not when they are equivalent to the corresponding states in the classical Moran process. Even if the fixation probability of a single mutant does not depend on the mutant's location, other factors (such as birth and death rates) may affect whether or not this fixation probability is the same as the one in a well-mixed population [35,36]. Remark 3.10, which compares the fixation probabilities for the Snowdrift Game on two different vertex-transitive graphs of the same size and degree, shows that the fixation probability of a single mutant—even if independent of the mutant's location—can depend on the configuration of the graph. In light of these results, the symmetry phenomena for the Moran process guaranteed by the Isothermal Theorem do not generalize and should be thought of as properties of the frequency-independent Moran process and not of evolutionary processes in general.

Theorem 3.7, and indeed most of our discussion of homogeneity, focused on symmetries of states consisting of just a single mutant. In many cases, mutation rates are sufficiently small that a mutant type, when it appears, will either fixate or go extinct before another mutation occurs [22,23]. Thus, with small mutation rates, one need not consider symmetries of states consisting of more than one mutant. However, if mutation rates are larger, then these multi-mutant states become relevant. Our definition of evolutionary equivalence (definition 2.2) applies to these states, but, as expected, the symmetry conditions on the population structure guaranteeing any two multi-mutant states are equivalent are much stronger. In fact, as we argued in §3.2.2, the population must, in general, be well-mixed even for any pair of states consisting of two mutants to be evolutionarily equivalent. Consequently, our focus on single-mutant states allowed us to simultaneously treat biologically relevant configurations (assuming mutation rates are small) and obtain non-trivial conditions guaranteeing homogeneity of an evolutionary process.

The counterexamples presented here could be defined on sufficiently small population structures, and thus all calculations (fixation probabilities, structure coefficients, etc.) are exact. However, these quantities need not always be explicitly calculated in order to prove useful: in our study of asymmetric games, we concluded that an asymmetric game on an arc-transitive (symmetric) graph can be reduced to a symmetric game in the limit of weak selection. (The graph of figure 7a demonstrates that vertex-transitivity alone does not guarantee that an asymmetric game can be reduced to a symmetric game in this way.) This result was obtained by examining the qualitative nature of the structure coefficients in the selection condition 3.11, but it did not require explicit calculations of these coefficients. Therefore, despite the difficulty in actually calculating these coefficients, they can still be used to glean qualitative insight into the dynamics of evolutionary games.

On large random regular graphs, the dynamics of an asymmetric matrix game are equivalent to those of a certain symmetric game obtained as a ‘spatial average’ of the individual asymmetric games [21]. Corollary 3.14 is highly reminiscent of this type of reduction to a symmetric game. For large populations, this result is obtained by observing that large random regular graphs approximate a Bethe lattice [4] and then using the pair approximation method [37] to describe the dynamics. The pair approximation method is exact for a Bethe lattice [10], so, from this perspective, corollary 3.14 is not that surprising since Bethe lattices are arc-transitive. Of course, a Bethe lattice has infinitely many vertices, and corollary 3.14 is a finite-population analogue of this result.

The term ‘homogeneous’ is used in the literature to refer to several different kinds of population structures. This term has been used to describe well-mixed populations [38,39]. For graph-structured populations, ‘homogeneous graph’ sometimes refers to vertex-transitive graphs [26,40]. In algebraic graph theory, however, the term ‘homogeneous graph’ implies a much higher degree of symmetry than does vertex-transitivity [41]. ‘Homogeneous’ has also been used to describe graphs in which each vertex has the same number of neighbours, i.e. regular graphs [42–44]. In between regular and vertex-transitive graphs, ‘homogeneous graph’ has also referred to large, random regular graphs [45]. As we noted, large, random regular graphs approximate Bethe lattices (which are infinite, arc-transitive graphs), but these approximations need not themselves be even vertex-transitive.

In many of the various uses of the term ‘homogeneous’, a common aim is to study the fixation probability of a randomly placed mutant. Our definition of a homogeneous evolutionary game formally captures what it means for two single-mutant states to be equivalent, and our explorations of the Frucht graph (in conjunction with theorem 3.7) show that vertex-transitivity, and not regularity, is what the term ‘homogeneous’ in graph-structured populations should indicate. We also demonstrated the effects of pay-offs and strategy mutations on the behaviour of these single-mutant states and concluded that the term homogeneous should apply to the entire process rather than to just the population structure. The homogeneity (theorem 3.7) and symmetry (theorem 3.12) results given here do not depend on the update rule, in contrast to results such as the symmetry of conditional fixation times in the Moran process of Taylor et al. [46] or the Isothermal Theorem of Lieberman et al. [3]. We now know that games on regular graphs need not be homogeneous, and we know precisely under which conditions the ‘fixation probability of a randomly placed mutant’ is well defined. These results provide a firmer foundation for evolutionary game theory in finite populations and a basis for defining the evolutionary success of the strategies of a game.

Acknowledgements

The authors thank Wes Maciejewski for helpful conversations.

Appendix A. Fixation and absorption

Using a method inspired by a technique of Press & Dyson [47], we derive explicit expressions (in terms of the transition matrix) for fixation probabilities and absorption times. Subsequently, we prove a simple lemma that says that Markov chain symmetries preserve the set of a chain's stationary distributions.

A.1. Fixation probabilities

Suppose that we are given a discrete-time Markov chain on a finite state space that has exactly K absorbing states. Moreover, suppose that the non-absorbing states are transient [22]. The transition matrix for this chain, T, may be written as

| A 1 |

where IK is the K × K identity matrix and 0 is the matrix of zeros (in this case, it has K rows and one column for each non-absorbing state). This chain will eventually end up in one of the K absorbing states, and, for each state of the chain, we consider the probability that the ith absorbing state is reached when the chain starts off in that state. Let P be the matrix of these fixation probabilities, with one row for each state and one column for each absorbing state, i. This matrix satisfies

$$TP = P \tag{A 2}$$

which is just the matrix form of the recurrence relation satisfied by fixation probabilities (obtained from a ‘first-step’ analysis of the Markov chain). Consider the matrix,  defined by

defined by

| A 3 |

Since  for

for  we see that

we see that

| A 4 |

Moreover, the matrix  must have full rank since the non-absorbing states are transient; that is,

must have full rank since the non-absorbing states are transient; that is,

| A 5 |

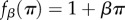

Therefore, by Cramer's rule,

| A 6 |

where the notation  means the matrix obtained by replacing the column corresponding to state

means the matrix obtained by replacing the column corresponding to state  with the ith standard basis vector, ei. Thus, equation (A 6) gives explicit formulae for the fixation probabilities.

with the ith standard basis vector, ei. Thus, equation (A 6) gives explicit formulae for the fixation probabilities.
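As an illustration of equation (A 6), the following is a minimal Python sketch (not part of the original derivation) that evaluates the Cramer's-rule formula with exact rational arithmetic. The helper names `det` and `fixation_probability`, the 0-based indexing, and the example chain (a biased random walk on positions 0 to 3 with absorbing endpoints, listed with the absorbing states first) are assumptions introduced purely for illustration.

```python
from fractions import Fraction


def det(m):
    """Determinant by Gaussian elimination over exact fractions."""
    a = [row[:] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result  # a row swap flips the sign of the determinant
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def fixation_probability(T, K, s, i):
    """Probability of ending in absorbing state i (0-based, i < K) from state s.

    T is a row-stochastic matrix whose first K states are the absorbing ones.
    """
    n = len(T)
    # M = I - T~, where T~ is T with the rows of the absorbing states zeroed.
    M = [[Fraction(int(r == c)) - (T[r][c] if r >= K else 0) for c in range(n)]
         for r in range(n)]
    # Replace the column of state s with the i-th standard basis vector.
    M_s = [row[:] for row in M]
    for r in range(n):
        M_s[r][s] = Fraction(int(r == i))
    return det(M_s) / det(M)


# Hypothetical example: step up with probability 2/3, down with probability 1/3.
# State order: position 0, position 3 (both absorbing), then positions 1 and 2.
third, two_thirds = Fraction(1, 3), Fraction(2, 3)
T = [
    [Fraction(1), Fraction(0), Fraction(0), Fraction(0)],
    [Fraction(0), Fraction(1), Fraction(0), Fraction(0)],
    [third, Fraction(0), Fraction(0), two_thirds],
    [Fraction(0), two_thirds, third, Fraction(0)],
]
print(fixation_probability(T, K=2, s=2, i=1))  # 4/7
```

The value 4/7 agrees with the classical gambler's-ruin expression (1 − 1/2)/(1 − 1/8) for this walk. For large chains a direct linear solve would be preferable numerically; the determinant form is used here only because it mirrors the formula.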

A.2. Absorption times

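Let $t$ be the $|\mathcal{S}|$-vector indexed by $\mathcal{S}$ whose entry $t_s$ is the expected time until the process fixates in one of the absorbing states when started in state $s$. This vector satisfies $t_{s_i} = 0$ for $i = 1,\dots,K$ as well as the recurrence relation $t_s = 1 + \sum_{s' \in \mathcal{S}} T_{s,s'}\, t_{s'}$ for each non-absorbing state $s$. Therefore, writing $\tilde{T}$ for the matrix obtained from $T$ by replacing the rows of the absorbing states with zeros, and $b$ for the vector whose entries are 0 at the absorbing states and 1 at the non-absorbing states,

$$\left(I - \tilde{T}\right) t = b, \tag{A 7}$$

so, by Cramer's rule,

$$t_s = \frac{\det\left(\left(I - \tilde{T}\right)(s, b)\right)}{\det\left(I - \tilde{T}\right)}, \tag{A 8}$$

where $\left(I - \tilde{T}\right)(s, b)$ denotes the matrix obtained from $I - \tilde{T}$ by replacing the column corresponding to state $s$ with $b$.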
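The absorption-time formula can be checked in the same way. The sketch below is an illustration under stated assumptions rather than the authors' code: the function names, the 0-based indexing and the example chain (a biased random walk on positions 0 to 3, with the absorbing endpoints listed first) are all hypothetical. It builds the right-hand side with zeros at the absorbing states and ones elsewhere, then applies Cramer's rule.

```python
from fractions import Fraction


def det(m):
    """Determinant by Gaussian elimination over exact fractions."""
    a = [row[:] for row in m]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def absorption_time(T, K, s):
    """Expected number of steps to absorption from state s.

    T is a row-stochastic matrix whose first K states are the absorbing ones.
    """
    n = len(T)
    # M = I - T~, where T~ is T with the rows of the absorbing states zeroed.
    M = [[Fraction(int(r == c)) - (T[r][c] if r >= K else 0) for c in range(n)]
         for r in range(n)]
    # Right-hand side: 0 at absorbing states, 1 at non-absorbing states.
    b = [Fraction(int(r >= K)) for r in range(n)]
    M_s = [row[:] for row in M]
    for r in range(n):
        M_s[r][s] = b[r]
    return det(M_s) / det(M)


# Hypothetical example: step up with probability 2/3, down with probability 1/3.
# State order: position 0, position 3 (both absorbing), then positions 1 and 2.
third, two_thirds = Fraction(1, 3), Fraction(2, 3)
T = [
    [Fraction(1), Fraction(0), Fraction(0), Fraction(0)],
    [Fraction(0), Fraction(1), Fraction(0), Fraction(0)],
    [third, Fraction(0), Fraction(0), two_thirds],
    [Fraction(0), two_thirds, third, Fraction(0)],
]
print(absorption_time(T, K=2, s=2))  # 15/7
```

The result can be confirmed against the first-step recurrence: from position 1 the expected time is 1 + (2/3)(12/7) = 15/7, where 12/7 is the expected time from position 2.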

We now turn to Markov chains defined by evolutionary games. Before proving proposition 3.2, we make two assumptions:

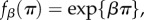

(i) The pay-off-to-fitness mapping is of the form  or  where β denotes the intensity of selection and π denotes pay-off. (Of course, fitness can be defined in one of the latter three ways only if the pay-offs are such that  .)

(ii) The update probabilities are rational functions of the fitness profile of the population.

Remark A.1. —

Assumptions (i) and (ii) are not at all restrictive in evolutionary game theory. Any process in which selection occurs with probability proportional to fitness will satisfy this rationality condition, and indeed all of the standard evolutionary processes (birth–death, death–birth, imitation, pairwise comparison, Wright–Fisher, etc.) have this property. The four pay-off-to-fitness mappings are also standard.

With assumptions (i) and (ii) in mind, we have:

Proposition (3.2). —

Each of the equalities

A 9a and

A 9b holds for either (i) every

or (ii) at most finitely many

. Thus, if one of these equalities fails to hold for even a single value of β, then it fails to hold for all sufficiently small β>0.

Proof. —

Suppose that

and that

and

are absorbing states. By equation (A 6),

A 10 Similarly, by equation (A 8),

A 11 Assuming (i) and (ii), equations (A 10) and (A 11) are equivalent to polynomial equations in either β or exp{β}. Either way, since non-zero polynomial equations have at most finitely many solutions, we see that the equalities

and

each hold for either (i) every β or (ii) finitely many values of β. Thus, if

(resp.

) for even a single selection intensity, then these fixation probabilities (resp. absorption times) differ for almost every selection intensity. In particular, they differ for all sufficiently small β. ▪

Appendix B. Symmetry and evolutionary equivalence

B.1. Symmetries of graphs

Here we recall some standard notions of symmetry for graphs. Although we treat directed, weighted graphs in general, throughout the main text we give several examples of undirected and unweighted graphs, which are defined as follows:

Definition B.1 (Undirected graph). —

A graph, Γ, is undirected if $\Gamma_{ij} = \Gamma_{ji}$ for each i and j.

Definition B.2 (Unweighted graph). —

A graph, Γ, is unweighted if $\Gamma_{ij} \in \{0, 1\}$ for each i and j.

Since our goal is to discuss symmetry in the context of evolutionary processes, we first describe several notions of symmetry for graphs. In a graph, Γ, the indegree and outdegree of vertex i are $\sum_{j=1}^{N} \Gamma_{ji}$ and $\sum_{j=1}^{N} \Gamma_{ij}$, respectively. With these definitions in mind, we recall the definition of a regular graph:

Definition B.3 (Regular graph). —

Γ is regular if and only if there exists $k$ such that

$$\sum_{j=1}^{N} \Gamma_{ij} = \sum_{j=1}^{N} \Gamma_{ji} = k \tag{B 1}$$

for each i. If Γ is regular, then k is called the degree of Γ.

Let $\mathfrak{S}_N$ denote the symmetric group on N letters; that is, $\mathfrak{S}_N$ is the set of all bijections $\pi : \{1,\dots,N\} \to \{1,\dots,N\}$. Each $\pi \in \mathfrak{S}_N$ extends to a relabelling action on the set of directed, weighted graphs defined by $(\pi\Gamma)_{\pi(i)\pi(j)} = \Gamma_{ij}$. In other words, any relabelling of the set of vertices results in a corresponding relabelling of the graph. The automorphism group of Γ, written $\mathrm{Aut}(\Gamma)$, is the set of all $\pi \in \mathfrak{S}_N$ such that $\pi\Gamma = \Gamma$. We now recall a condition slightly stronger than regularity known as vertex-transitivity:

Definition B.4 (Vertex-transitive graph). —

Γ is vertex-transitive if for each i and j, there exists $\pi \in \mathrm{Aut}(\Gamma)$ such that $\pi(i) = j$.

Informally, a graph is vertex-transitive if and only if it ‘looks the same’ from every vertex. If a graph is vertex-transitive, then it is necessarily regular. The strongest form of symmetry for graphs that we consider here is the following:

Definition B.5 (Symmetric graph). —

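Γ is symmetric (or arc-transitive) if for each i, j with $\Gamma_{ij} \neq 0$ and i′, j′ with $\Gamma_{i'j'} \neq 0$, there exists $\pi \in \mathrm{Aut}(\Gamma)$ such that $\pi(i) = i'$ and $\pi(j) = j'$.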

A graph is symmetric if it ‘looks the same’ from any two directed edges. Arc-transitivity is typically defined for unweighted graphs, i.e. graphs satisfying $\Gamma_{ij} \in \{0, 1\}$. For the more general class of weighted graphs, we require that $\mathrm{Aut}(\Gamma)$ act transitively on the set of edges of Γ, where ‘edge’ means a pair (i, j) with $\Gamma_{ij} \neq 0$. Thus, all of the edges in a symmetric, weighted graph have the same weight: otherwise, if (i, j) and (i′, j′) are edges but $\Gamma_{ij} \neq \Gamma_{i'j'}$, then there would exist no permutation, π, sending i to i′, j to j′, and preserving the weights of the graph. Therefore, since the weights of a symmetric graph take one of two values (0 or else the only non-zero weight), such a graph is essentially unweighted.
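The gap between regularity and vertex-transitivity can be checked by brute force on a small graph. The sketch below is illustrative only: the function names and the representation of a graph as a weight matrix `G` are assumptions. Because enumerating all relabellings of the 12-vertex Frucht graph is too slow for a toy script, a smaller graph is swapped in, namely the complement of a disjoint triangle and square on seven vertices, which is connected and 4-regular but not vertex-transitive.

```python
from itertools import permutations


def is_regular(G):
    """True if every vertex has the same (weighted) indegree and outdegree."""
    n = len(G)
    out_deg = [sum(G[i][j] for j in range(n)) for i in range(n)]
    in_deg = [sum(G[j][i] for j in range(n)) for i in range(n)]
    return len(set(out_deg + in_deg)) == 1


def automorphisms(G):
    """All relabellings p that preserve every weight: G[p[i]][p[j]] == G[i][j]."""
    n = len(G)
    return [p for p in permutations(range(n))
            if all(G[p[i]][p[j]] == G[i][j] for i in range(n) for j in range(n))]


def is_vertex_transitive(G):
    """True if the automorphisms carry vertex 0 to every other vertex."""
    # The automorphisms form a group, so one full orbit implies transitivity.
    return {p[0] for p in automorphisms(G)} == set(range(len(G)))


def cycle(n):
    """Adjacency matrix of the undirected, unweighted cycle on n vertices."""
    return [[int((i - j) % n in (1, n - 1)) for j in range(n)] for i in range(n)]


def triangle_plus_square_complement():
    """Complement of the disjoint union of a 3-cycle and a 4-cycle."""
    n = 7
    A = [[0] * n for _ in range(n)]
    for block, offset in ((cycle(3), 0), (cycle(4), 3)):
        for i, row in enumerate(block):
            for j, w in enumerate(row):
                A[offset + i][offset + j] = w
    return [[int(i != j and A[i][j] == 0) for j in range(n)] for i in range(n)]


H = triangle_plus_square_complement()
print(is_regular(H), is_vertex_transitive(H))                # True False
print(is_regular(cycle(5)), is_vertex_transitive(cycle(5)))  # True True
```

The exhaustive search over all N! relabellings is only practical for very small N; a dedicated graph-automorphism tool would be needed for anything larger.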

B.2. Symmetries of evolutionary processes

In §2.1, we defined two states, $s$ and $s'$, to be evolutionarily equivalent if (i) there exists an automorphism of the Markov chain, ϕ, such that $\phi(s) = s'$ and (ii) this automorphism satisfies $\phi(\mu) = \mu$ for each stationary distribution, μ, of the chain. Condition (i), which means that $s$ and $s'$ are symmetric, alone is not quite strong enough to guarantee that $s$ and $s'$ have the same long-run behaviour. To give an example of a symmetry of states that is not an evolutionary equivalence, we consider the neutral Moran process in a well-mixed population of size N = 3:

Example B.6. —

In a well-mixed population of size N = 3, consider the (frequency-independent) Moran process with two types of players: a mutant type and a wild-type. Suppose that the mutant type is neutral with respect to the wild-type; that is, the fitness of the mutant relative to the wild-type is 1. Since the population is well mixed, the state of the population is given by the number of mutants it contains, $i \in \{0, 1, 2, 3\}$. Consider the map $\phi : \{0, 1, 2, 3\} \to \{0, 1, 2, 3\}$ defined by $\phi(i) = 3 - i$. States 0 and 3 are absorbing, and, for $i \in \{1, 2\}$, the transition probabilities of this process are as follows:

B 2a and

B 2b

It follows at once that ϕ preserves these transition probabilities, so ϕ is an automorphism of the Markov chain. Let ρi be the probability that mutants fixate given an initial abundance of i mutants. The states 1 and 2 are symmetric since ϕ(1) = 2, but it is not true that ρ1 = ρ2 since ρ1 = 1/3 and ρ2 = 2/3. The reason for this difference in fixation probabilities is that states 1 and 2, although symmetric, are not evolutionarily equivalent: ϕ swaps the two absorbing states of the process, so it does not fix the stationary distributions concentrated on those states.
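The claims in this example can be verified numerically. The following sketch is an illustration under stated assumptions: it takes the individual chosen for death to be uniform over all N players (a common convention that may differ from the one intended in the text, although the neutral fixation probabilities are the same either way), and the function and variable names are hypothetical.

```python
from fractions import Fraction

N = 3  # population size


def transition(i, j):
    """Probability of moving from i to j mutants in the neutral Moran process.

    Assumption: the individual chosen for death is uniform over all N players.
    """
    if i in (0, N):  # monomorphic states are absorbing
        return Fraction(int(i == j))
    step = Fraction(i, N) * Fraction(N - i, N)  # same for a gain or a loss
    if abs(i - j) == 1:
        return step
    if i == j:
        return 1 - 2 * step
    return Fraction(0)


def phi(i):
    """The map that exchanges the roles of mutants and wild-types."""
    return N - i


states = range(N + 1)
is_automorphism = all(
    transition(phi(i), phi(j)) == transition(i, j) for i in states for j in states
)

# First-step analysis with rho_0 = 0 and rho_3 = 1, solved as a 2 x 2 system:
#    a * rho_1 - b * rho_2 = rhs1
#   -c * rho_1 + d * rho_2 = rhs2
a, b = 1 - transition(1, 1), transition(1, 2)
c, d = transition(2, 1), 1 - transition(2, 2)
rhs1, rhs2 = transition(1, 3), transition(2, 3)
D = a * d - b * c
rho_1 = (rhs1 * d + b * rhs2) / D
rho_2 = (a * rhs2 + c * rhs1) / D

print(is_automorphism, phi(1), rho_1, rho_2)  # True 2 1/3 2/3
```

So ϕ is a symmetry of the chain that maps state 1 to state 2, yet the two states have different fixation probabilities.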

In contrast to example B.6, processes with unique stationary distributions have the property that every symmetry of the Markov chain is an evolutionary equivalence (proposition 2.3). The following lemma establishes proposition (2.3):

Lemma B.7. —

If ϕ is a symmetry of a Markov chain and μ is a stationary distribution of this chain, then ϕ(μ) is also a stationary distribution. In particular, if μ is unique, then ϕ(μ) = μ.

Proof. —

If T is the transition matrix of this Markov chain, then

B 3 so

which completes the proof. ▪
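A small numerical check of the lemma is sketched below. It assumes that ϕ(μ) denotes the push-forward of μ, i.e. the distribution that gives state ϕ(s) the mass that μ gives to s; this reading, the function names and both example chains are assumptions made for illustration. The first chain is the neutral Moran process with N = 3 (death uniform over all players), which has several stationary distributions; the second is a hypothetical three-state chain with a unique one.

```python
from fractions import Fraction as F


def push_forward(phi, mu):
    """phi(mu): move the mass mu[s] from state s to state phi[s]."""
    out = [F(0)] * len(mu)
    for s, mass in enumerate(mu):
        out[phi[s]] += mass
    return out


def is_symmetry(phi, T):
    """True if phi is a bijection of the states that preserves T."""
    n = len(T)
    return sorted(phi) == list(range(n)) and all(
        T[phi[s]][phi[t]] == T[s][t] for s in range(n) for t in range(n)
    )


def is_stationary(mu, T):
    """True if mu is a probability vector with mu T = mu."""
    n = len(T)
    return sum(mu) == 1 and all(
        sum(mu[s] * T[s][t] for s in range(n)) == mu[t] for t in range(n)
    )


# Neutral Moran process, N = 3: states are the number of mutants, 0..3.
moran = [
    [F(1), F(0), F(0), F(0)],
    [F(2, 9), F(5, 9), F(2, 9), F(0)],
    [F(0), F(2, 9), F(5, 9), F(2, 9)],
    [F(0), F(0), F(0), F(1)],
]
flip = [3, 2, 1, 0]                      # i -> 3 - i
all_wild_type = [F(1), F(0), F(0), F(0)]
image = push_forward(flip, all_wild_type)
print(is_stationary(image, moran), image == all_wild_type)  # True False

# A hypothetical chain with a unique stationary distribution.
lazy_walk = [
    [F(1, 2), F(1, 2), F(0)],
    [F(1, 4), F(1, 2), F(1, 4)],
    [F(0), F(1, 2), F(1, 2)],
]
swap = [2, 1, 0]
mu = [F(1, 4), F(1, 2), F(1, 4)]
print(is_stationary(mu, lazy_walk), push_forward(swap, mu) == mu)  # True True
```

In the first case the image is stationary but differs from the original distribution; in the second, uniqueness forces the two to coincide.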

We turn now to the proofs of our main results (theorems 3.7 and 3.12):

Theorem 3.7. —

Consider an evolutionary matrix game on a graph, Γ, with symmetric pay-offs and homogeneous strategy mutations. If $\pi \in \mathrm{Aut}(\Gamma)$, then the states with a single mutant at vertex i and π(i), respectively, in an otherwise-monomorphic population, are evolutionarily equivalent. That is, in the notation of definition 2.4, the states

and

are evolutionarily equivalent for each

Proof. —

The state space of the Markov chain defined by this evolutionary game is $S^N$, where S is the strategy set and N is the population size. For $\pi \in \mathrm{Aut}(\Gamma)$, let π act on the state of the population by changing the strategy of player i to that of player $\pi^{-1}(i)$ for each i = 1, … , N. In other words, π sends $s \in S^N$ to $\pi(s) \in S^N$, which is defined by $\pi(s)_i = s_{\pi^{-1}(i)}$ for each i. Therefore, for

we have

B 4 for each i = 1, … , N. Since π is an automorphism of the evolutionary graph, Γ, we have

and

Moreover, π preserves the strategy mutations since they are homogeneous. Since the pay-offs are symmetric, π just rearranges the fitness profile of the population: the pay-off of player i becomes the pay-off of player $\pi^{-1}(i)$ (see equation (3.2)), so the same is true of the fitness values. Therefore, applying the map π to $S^N$ is equivalent to applying the map on $S^N$ obtained by simply relabelling the players. Since any such relabelling of the players results in an automorphism of the Markov chain on $S^N$ that preserves the monomorphic absorbing states, it follows that

and

are evolutionarily equivalent. ▪
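The relabelling action used in this proof, in which player i takes the strategy previously held by player π−1(i), can be sketched as follows. The names `act`, `single_mutant` and `is_automorphism`, the strategy labels "A" and "B", the 0-based vertex numbering and the choice of a 5-cycle with its rotation are all assumptions made for illustration.

```python
def act(pi, state):
    """Apply the relabelling pi to a population state.

    The strategy at vertex j moves to vertex pi[j], so that the new state
    satisfies new[i] == state[pi^{-1}(i)].
    """
    relabelled = [None] * len(state)
    for j, strategy in enumerate(state):
        relabelled[pi[j]] = strategy
    return tuple(relabelled)


def single_mutant(n, i, mutant="A", resident="B"):
    """State with one mutant at vertex i in an otherwise-monomorphic population."""
    return tuple(mutant if k == i else resident for k in range(n))


def is_automorphism(pi, G):
    """True if the relabelling pi preserves every edge weight of G."""
    n = len(G)
    return all(G[pi[i]][pi[j]] == G[i][j] for i in range(n) for j in range(n))


n = 5
cycle = [[int((i - j) % n in (1, n - 1)) for j in range(n)] for i in range(n)]
rotation = [(i + 1) % n for i in range(n)]  # vertex i -> i + 1 (mod n)

print(is_automorphism(rotation, cycle))       # True
print(act(rotation, single_mutant(n, 2)))     # ('B', 'B', 'B', 'A', 'B')
```

A single mutant at vertex i is carried to a single mutant at vertex π(i), and each monomorphic state is left unchanged, which is the structure the proof relies on.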

Theorem 3.12. —

Suppose that an asymmetric matrix game with homogeneous strategy mutations is played on an evolutionary graph,

For each

and

B 5

Proof. Let T be the transition matrix for the Markov chain defined by this process. Since there are non-zero strategy mutations, this chain has a unique stationary distribution, μ. The matrix T defines a directed, weighted graph on

vertices that has an edge from vertex

to vertex

if and only if

If there is an edge from

to

then the weight of this edge is simply

The (outdegree) Laplacian matrix of this graph,

is defined by

[48]. In terms of this Laplacian matrix, Press & Dyson [47] show that for any vector, v, the stationary distribution satisfies

B 6 for each state,

where

denotes the matrix obtained from

by replacing the column corresponding to state

by v. Thus, if ψr is the vector indexed by

with

being the frequency of strategy r in state

then the average abundance of strategy r is

B 7 Since T is a function of the pay-offs,

, we may write

(a is just an ordered tuple defined by

for each s, t, i and j.) Moreover, since the entries of T are assumed to be smooth functions of a [34], Fr is also a smooth function of a by equation (B 7) and the definition of

We will show that for each s and t,

B 8 for each i and j. The theorem will then follow from the derivations of

and

in [33] since it is shown there that each σij is a function of the elements in the set

B 9 For s, t, i and j fixed and

let

be the function of the vector with a at entry

and 0 in all other entries. Symbolically, if

is defined as 1 if x = y and 0 otherwise and

B 10 then

B 11 Let

and suppose that

that is,

and

π induces a map on the pay-offs, a, defined by

Let

denote the orbit of a under this action, and consider the enlarged state space

. Using the Markov chain on SN coming from the evolutionary process, we obtain a Markov chain on

via the transition matrix, T′, defined by

B 12 for

and

(We write T(b) to indicate the transition matrix as a function of the pay-off values of the game.) π extends to a map on

defined by

Since π preserves

and the strategy mutations (since they are homogeneous), it follows that the induced map

is an automorphism of the Markov chain on

defined by T′. If μ′ is a stationary distribution for the chain T′, then, for each

and

B 13 It then follows from the uniqueness of

that there exists

such that

If μ′ is such a stationary distribution, then, by lemma (B.7),