Abstract

Neuropsychological performance has historically been measured in laboratory settings using standardized assessments. However, these methods may be inherently limited in generalizability. This concern may be mitigated with paradigms such as ecological momentary assessment (EMA). We evaluated the initial feasibility and acceptability of administering a visual working memory (VWM) task on handheld computers across one EMA study week among adolescents/young adults (N=39). Participants also completed standardized laboratory neurocognitive measures to determine the extent to which EMA VWM performance mapped onto scores obtained in traditional testing environments. Compliance with the EMA protocol was high as participants responded to 87% of random prompts across the study week. As expected, EMA VWM performance was positively associated with laboratory measures of auditory and visual working memory, and these relationships persisted after adjusting for predicted intelligence. Further, discriminant validity tests showed that EMA VWM was not linked with laboratory scores of verbal abilities and processing speed. These data provide initial evidence on the convergent and discriminant validity of interpretations from this novel, ecologically-valid neurocognitive approach. Future studies will aim to further establish the psychometric properties of this (and similar) tasks and investigate how momentary fluctuations in VWM correspond with contextual influences (e.g., substance use, mood) and clinical outcomes.

Keywords: visual working memory, ecological momentary assessment, neurocognition

Introduction

Historically, neuropsychological research has relied heavily on stringently controlled laboratory paradigms. This offers several methodological benefits including the availability of normative data, assured compliance through continuous supervision, decreased measurement error through systematic assessments of overlapping processes, and use of test theory and sophisticated psychometric tools for the understanding of complex data. However, probing cognitive processes exclusively in the laboratory may come at the cost of ecological validity. Many studies have demonstrated only moderate effect sizes between neuropsychological tests and measures of everyday functioning, with many other studies showing weak or no relationships (Chaytor & Schmitter-Edgecombe, 2003; Sunderland, Harris, & Baddeley, 1983). This is problematic because the ability to draw inferences from test data about real-world functioning and make recommendations about functional capacity has become increasingly important as the field of neuropsychology has shifted in the past two decades (Heinrichs, 1990; Johnston & Farmer, 1997; Troster, 2000).

To enhance the generalizability of neurocognitive assessment and to continue evolving as a field, a growing emphasis has been placed on understanding the feasibility and acceptability of technology applications in neuropsychological assessment (Parsey & Schmitter-Edgecombe, 2013) as well as documenting the psychometric properties of innovative methodologies including virtual environments (Parsons, Silva, Pair, & Rizzo, 2008) and pen-computer paradigms (Cameron, Sinclair, & Tiplady, 2001). Naturalistic cognitive data allow for measurement over a long period of time and therefore may be better suited for capturing cognitive processes, which are subject to natural temporal fluctuations over a day and/or week (Schmidt, Collette, Cajochen, & Peigneux, 2007). Similarly, extended measurement intervals allow for the averaging across multiple data points over a longer period of time, which should result in greater reliability of scores than single time point assessments. Finally, less constrained technological innovations permit modeling across contexts that may influence cognition in natural environments (e.g., social engagement, mood), thereby allowing for inferences on contextual dependency and within-person variability. With this in mind, a number of recent studies have developed and validated neuropsychological tasks that can be administered in immersive, virtual reality environments and have found that these paradigms yield additional information not provided by traditional laboratory tasks (Parsons & Courtney, 2014; Parsons & Rizzo, 2008). This emphasis on in situ measurement has even been embraced within the field of neuroscience with growing attention being paid to developing imaging techniques that can be used to visualize brain dynamics during natural environmental interactions (Kasai, Fukuda, Yahata, Morita, & Fujii, 2015).

However, few studies have assessed cognition with ecological momentary assessment (EMA), which involves assessing phenomena at the moment in which they occur and in an individual's natural environment (Stone & Shiffman, 2002). This approach typically involves querying an individual randomly throughout the day and in response to certain events (e.g., mood changes, substance use) using handheld computers. The emphasis on repeated data collection in naturalistic settings may make EMA a prime candidate platform for more generalizable neurocognitive assessment. Surprisingly, this research is limited with most existing studies not specifically designed to measure cognition (Lukasiewicz, Benyamina, Reynaud, & Falissard, 2005) and restricted to single time-point assessments (Scholey, Benson, Neale, Owen, & Tiplady, 2012). To our knowledge, only two studies from one research group have specifically addressed this gap and found that a reaction time task can be modified and successfully integrated into an EMA framework (Waters & Li, 2008; Waters et al., 2014).

This study aimed to determine the feasibility and acceptability of repeated EMA measurement for visual working memory (VWM) assessment in adolescents/young adults, and to evaluate the convergent and discriminant validity of VWM scores assessed via EMA. VWM, which can broadly be defined as the ability to actively but temporarily maintain information about a visual environment to serve an immediate task (Luck & Vogel, 2013), has until now been assessed only in the laboratory. Our rationale for targeting VWM in this initial investigation is multi-factorial. First, the neural correlates of VWM are well-established (Ventre-Dominey et al., 2005). Second, VWM may serve as a proxy for overall cognitive capacity (Fukuda, Vogel, Mayr, & Awh, 2010). Third, VWM has established relationships with important clinical outcomes such as addiction (Tapert, Pulido, Paulus, Schuckit, & Burke, 2004) and liability for psychopathology (Castaneda et al., 2011; Myles-Worsley & Park, 2002). Finally, VWM as a construct has been suggested to be largely stable over time (Kyllingsbaek & Bundesen, 2009), which is an important property for future investigations that may attempt to understand how momentary fluctuations in this capacity are related to subsequent behavior changes. We hypothesized that performance on an EMA VWM task would be more strongly associated with performance on validated laboratory measures of working memory than assessments of potential confounders including intelligence, processing speed, and verbal abilities.

Methods

This project was incorporated into a large natural history study of the social-emotional contexts of adolescent smoking, which involves a sample of high-risk adolescents aging into young adulthood. Initial recruitment procedures and participant characteristics are detailed in other publications that utilized the parent project cohort from which this sample was derived (e.g., Dierker & Mermelstein, 2010; Piasecki, Trela, Hedeker, & Mermelstein, 2014). Briefly, the goal of recruitment for the parent project was to develop a cohort of adolescents that mirrored the racial and ethnic diversity of the greater Chicago metropolitan area and that were at high risk for smoking. The parent project recruited a cohort of adolescents in a multi-stage process from 16 Chicago-area high schools. The cohort over-sampled for students who had ever smoked a cigarette (83% ever smoked), and were thus at high risk for smoking escalation. All 9th and 10th graders (N = 12,970) completed a screening survey of smoking behavior and were eligible if they fell into one of four levels of smoking experience: 1) never smokers; 2) former experimenters (smoked > one cigarette in the past, have not smoked in the last 90 days, and < 100 cigarettes in their lifetime); 3) current experimenters (smoked in the past 90 days, but smoked < 100 cigarettes in their lifetime); and 4) current smokers (smoked in the past 30 days and have smoked > 100 cigarettes in their lifetime). Of the 3,654 students invited, 1,344 agreed to participate (36.8%). Of these, 1,263 (94.0%) completed the baseline measurement wave. Parental consent and student assent were obtained and procedures were approved by the University of Illinois at Chicago Institutional Review Board. Participants were compensated for their participation.

This project built onto the data collection and infrastructure of the parent EMA study, taking advantage of an established cohort of adolescent/young adult tobacco smokers, half of whom had participated in earlier waves of EMA data collection. Although the parent EMA study is longitudinal, this study focused only on measures administered five years after baseline (participants were 19-22 years old). The EMA assessment protocol comprised a 7-day monitoring period during which participants completed assessments each day and multiple times throughout the day. This strategy ensured both weekday and weekend sampling and provided an adequate sample of events. At the start of each EMA assessment week, participants were individually trained by study staff on how to record data prior to taking devices out of the laboratory. Custom interviews were installed onto palmOne Tungsten E2 handheld computers, with all other functions disabled. Entries were password protected as well as time and date stamped. Three interview types were programmed onto the handheld computers: 1) random prompts, which were device initiated (randomly “beeping” the participant 5-7 times/day); 2) tobacco smoke events, which were subject-initiated immediately after smoking a cigarette; and 3) no smoke events, which were subject-initiated each time a participant wanted to smoke a cigarette but was unable to do so due to situational constraints. Each interview type had a similar set of questions asking about mood, activity, location, companionship, use of other substances, and social-situational factors, among other questions. Each interview also concluded with an experimental VWM task, which will be further described below. On average, EMA interviews were completed within 130 seconds (approximately 90 seconds for questions and 40 seconds for the VWM task) to minimize burden and promote compliance.
Participants could “delay” random prompts (for up to 20 minutes) and “suspend” the handheld computer for up to three hours during times when responding to the device would be prohibited or impractical (e.g., during school exams or athletic events). Time to respond to a prompt, missed prompts, wake and sleep times, and “suspensions” were recorded, allowing us to monitor compliance. At the end of the measurement week, we met with participants to collect the devices, dispense compensation, and conduct an interview about the week, which included reviewing problems and compliance and providing feedback.

After the EMA study week, participants were recruited for a brief laboratory-based neurocognitive assessment. Individuals were contacted via direct invitation letter. Interested participants completed a one-time, one-hour laboratory visit during which they completed several neurocognitive measures that were examiner-led. The order of test administration was counterbalanced using a random sequence generator for each participant to minimize any systematic effects of ordering or fatigue.

EMA Visual Working Memory Task

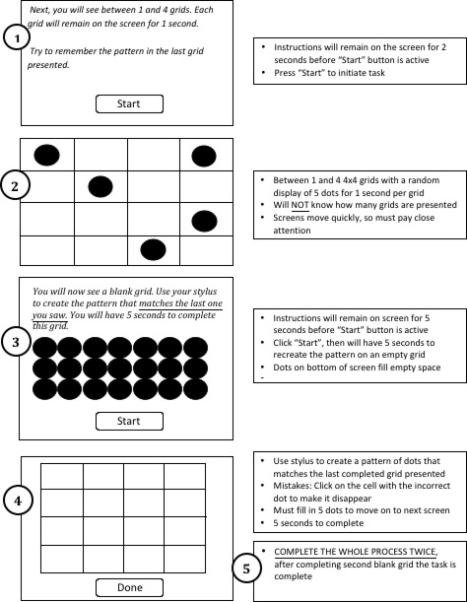

During each EMA interview, participants completed a brief VWM assessment (duration approximately 40 seconds) that was programmed onto the handheld computers and was administered in participants’ natural environments (Figure 1). Participants were thoroughly trained on the task before data collection began. This task required active processing of spatial information (i.e., dot locations) and was based on visuospatial simple span tasks such as the dot memory task (Ichikawa, 1983) and the Corsi blocks task (Milner, 1971). Similar tasks have been administered in laboratory settings (Tremblay, Saint-Aubin, & Jalbert, 2006). However, our task also incorporated an added processing requirement insofar as participants were required to continuously maintain and update spatial configurations in memory. Specifically, participants were presented with between two and four 4x4 grids in sequence. Participants were told that they might see between one and four grids to ensure they attended to the first stimulus presented. The number of grids presented (range: two to four) and the configuration of the dots within each grid were completely random. Each grid was displayed on the handheld computer screen for one second. After the final grid presentation, participants were instructed to recreate the pattern of dots from the last grid presented on a blank grid using their stylus. Participants were then presented with one additional trial consisting of a random number of grids with a random pattern of dots as well as a test grid on which they recreated the last observed pattern of dots. The software was programmed to minimize the likelihood of duplicate displays during the study week.

Figure 1.

Schematic of EMA Visual Working Memory Task
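The trial structure described above can be illustrated with a minimal sketch. It is not the software that ran on the handheld computers: the function names are hypothetical, and the number of dots per grid (`n_dots`) is an assumed parameter because the text does not report it. The sketch only shows how a trial of two to four random 4x4 grids, with the final grid serving as the target, might be generated.

```python
import random

GRID_SIZE = 4  # 4x4 grid, as described in the text


def generate_trial(rng, n_dots=3):
    """Return a list of 2-4 grids; each grid is a frozenset of (row, col) cells.

    n_dots is an assumption for illustration: the number of dots shown
    per grid is not stated in the text.
    """
    cells = [(r, c) for r in range(GRID_SIZE) for c in range(GRID_SIZE)]
    n_grids = rng.randint(2, 4)  # number of grids shown in sequence
    return [frozenset(rng.sample(cells, n_dots)) for _ in range(n_grids)]


def target_grid(trial):
    """The pattern to be recreated is the last grid presented."""
    return trial[-1]


rng = random.Random(0)  # seeded only so the sketch is reproducible
# Each EMA interview ended with two trials.
administration = [generate_trial(rng) for _ in range(2)]
```

Under these assumptions, every grid before the last one in a trial acts as interfering material, which is why the number of grids per trial is later treated as a complexity parameter.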

Participants were told that this task is difficult when there are a lot of distractions, but that they should try to concentrate because it is very brief. They were also told that they would not receive performance feedback until the debriefing session at the end of the week. Performance was measured by the proportion of correct responses across both trials. Additionally, as bottom-up information about perceptual input can influence the allocation of attentional resources (Duncan, 1984; Moore, Yantis, & Vaughan, 1998; Vecera & Farah, 1994), it was important for us to take into account possible variability in the difficulty of the dot configurations being presented. For example, Gestalt grouping principles suggest that objects in close proximity are more likely to be grouped together than those that are more distant (Wagemans, Elder, Kubovy, Palmer, Peterson, Singh, & von der Heydt, 2012) and configural grouping, in turn, influences the efficiency by which visual stimuli are stored in VWM (Jiang, Olson, & Chun, 2000; Patterson, Bly, Porcelli, & Rypma, 2007; Woodman, Vecera, & Luck, 2003). As such, we quantified four parameters of task complexity based broadly on Gestalt cues that may facilitate associative learning. The first complexity parameter was the number of grids occupied with dots (range: two to four) per trial, with higher numbers indicative of greater interference and thus greater complexity. Three additional complexity variables that accounted for the locations of the dots in the target grid were calculated: 1) dispersion of the dots on the target grid from a regression line; 2) the number of corner dots in the target grid; and 3) the cumulative distance between the dots on the target grid. Greater dispersion from a regression line, fewer corner dots, and greater distance between dots reflected greater task complexity.
The EMA interview also addressed multiple aspects of the participants’ objective and subjective context; however, these were not the focus of this study and are therefore not detailed in this manuscript. Interested readers can find information pertaining to such EMA variables in related publications (e.g., Dierker & Mermelstein, 2010; Piasecki et al., 2014).
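One way to make the three location-based complexity parameters concrete is sketched below. The exact formulas are not given in the text, so each definition here is an assumption: dispersion is taken as the root-mean-square residual from an ordinary least-squares line, corner dots are those in the four corner cells of the 4x4 grid, and cumulative distance is the sum of Euclidean distances over all pairs of dots. All function names are hypothetical.

```python
import math
from itertools import combinations


def corner_count(dots, size=4):
    """Number of dots that sit in one of the four corner cells."""
    corners = {(0, 0), (0, size - 1), (size - 1, 0), (size - 1, size - 1)}
    return sum(1 for d in dots if d in corners)


def cumulative_distance(dots):
    """Sum of Euclidean distances over all pairs of dots (assumed definition)."""
    return sum(math.dist(a, b) for a, b in combinations(dots, 2))


def dispersion_from_line(dots):
    """RMS residual of row on column from a least-squares line (assumed definition)."""
    xs = [col for _, col in dots]
    ys = [row for row, _ in dots]
    n = len(dots)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        # All dots share a column, so they lie exactly on a vertical line.
        return 0.0
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - slope * mean_x
    squared = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return math.sqrt(squared / n)


# Three dots on a diagonal: perfectly collinear, one corner dot.
diagonal = [(0, 0), (1, 1), (2, 2)]
summary = (dispersion_from_line(diagonal), corner_count(diagonal),
           cumulative_distance(diagonal))
```

Under these definitions the diagonal pattern has zero dispersion and would count as comparatively easy, whereas a pattern scattered away from any single line would score higher on dispersion and, per the text, reflect greater complexity.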

Laboratory Measures of Neurocognition

Participants completed an examiner-led, laboratory-based neurocognitive battery. Assessments were chosen based on their large and overlapping standardization samples and their established construct validity. Additionally, tasks that assessed both auditory and visual aspects of working memory were included to determine the convergent validity of EMA task scores, and tasks that assessed domains that were theoretically suspected to be less strongly associated with working memory were included to establish discriminant validity of the EMA task scores. General intellectual functioning was estimated with the Wechsler Test of Adult Reading (WTAR; Wechsler, 2001), which involves reading words out loud that have atypical grapheme to phoneme translations. Psychomotor processing speed was indexed with Coding and Symbol Search from the Wechsler Adult Intelligence Scale, Fourth Edition (WAIS-IV) Processing Speed Index (PSI; Wechsler, 2008a), both of which rely on efficient visual-motor coordination and speed. Verbal abilities were measured with the WAIS-IV Similarities subtest, which assesses abstract verbal reasoning. Auditory working memory was measured by Digit Span and Letter-Number Sequencing from the WAIS-IV Working Memory Index (WMI), both of which require repetition, reversal, and sequencing of digits and letters. Finally, VWM was quantified with the Wechsler Memory Scale, Fourth Edition (WMS-IV) Visual Working Memory Index (VWMI; Wechsler, 2008b), which includes Spatial Addition (based on n-back paradigms) and Symbol Span (a visual analogue to the WAIS-IV digit span tasks).

Participants

This was a healthy, community-residing population that was part of a longitudinal cohort of adolescents initially sampled based on smoking history. This sample has no known CNS-compromising disorders. Individuals were strategically recruited from the larger EMA participant pool (N=287) to obtain a sample that had an even distribution of EMA VWM performance. This recruitment approach was selected because it allowed for stable correlational estimates and feedback on feasibility and acceptability without imposing additional burden on the overall sample. Specifically, quintiles of task performance were determined from the random subject effect estimates that were obtained from a mixed ordinal logistic regression that included covariates for task complexity and study day. These estimates therefore index each participant's ability on the EMA VWM task, controlling for the effects of task complexity and study day. A random sample of participants from the larger EMA participant pool was recruited from each quintile to complete laboratory neurocognitive assessments. Recruitment for this study aimed to accrue a sample size of 40 with an equal number by gender per quintile of EMA VWM performance (i.e., 4 males and 4 females per quintile). The current study includes the 39 participants who were ultimately recruited (4 males, 3 females from the lowest EMA quintile).
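The recruitment logic can be sketched as follows, assuming the per-participant ability estimates (the random subject effects) have already been obtained from the mixed model. The model fitting itself is not shown, and the function names, the rank-based quintile cut, and the data layout are all illustrative assumptions rather than the procedure actually used.

```python
import random


def assign_quintiles(ability):
    """Map participant id -> quintile (1 = lowest, 5 = highest) by rank."""
    ranked = sorted(ability, key=ability.get)
    n = len(ranked)
    return {pid: (i * 5) // n + 1 for i, pid in enumerate(ranked)}


def stratified_sample(quintile, gender, per_cell=4, seed=0):
    """Randomly draw up to per_cell participants per quintile-by-gender cell."""
    rng = random.Random(seed)  # seeded only so the sketch is reproducible
    cells = {}
    for pid, q in quintile.items():
        cells.setdefault((q, gender[pid]), []).append(pid)
    chosen = []
    for key in sorted(cells):
        pool = cells[key]
        chosen.extend(rng.sample(pool, min(per_cell, len(pool))))
    return chosen


# Toy pool of 100 hypothetical participants with made-up ability estimates.
toy_ability = {pid: float(pid) for pid in range(100)}
toy_gender = {pid: "F" if pid % 2 else "M" for pid in range(100)}
toy_quintile = assign_quintiles(toy_ability)
toy_sample = stratified_sample(toy_quintile, toy_gender)
```

With 4 participants per gender in each of 5 quintiles, a fully filled design yields the target of 40; the study's final sample of 39 reflects one unfilled slot in the lowest quintile.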

The resulting sample had a mean age of 21.10 years (SD=.73) and was 48.70% female. The ethnic distribution was 48.72% Caucasian, 25.64% Black, 20.51% Hispanic, and 5.13% of other ethnic origins. Most individuals had at least some college education (66.67%). Demographic composition of this sample was comparable to that of the parent project (p's >.05).

Results

Descriptive Analyses of EMA Reporting

A total of 1,890 data points with EMA VWM performance were provided. Participants provided an average of 38 EMA random prompts and they responded to 87% of random prompts within three minutes of signaling. After the final grid of the EMA VWM task was presented, it took participants an average of 3.52 seconds (SD=.97) and 3.49 seconds (SD=.97) to complete trials one and two, respectively. None of the EMA VWM tasks were discontinued by the device because the participant took too long to respond. On average, participants saw 6.01 distracter grids (SD=1.17) across both trials per administration. Across the study week, participants provided a median of 90% correct responses per administration (IQR: 70, 100). Spearman's rho correlation between proportion of correct responses provided per administration and study day was positive (ρs=.12, p<.0001), suggesting that individuals did better on the task as the week progressed. However, performance was not associated with the actual day of the week (F (6, 1883) = 1.35, p=.23). Further, performance on the EMA VWM task did not vary by interview type (random prompt, smoke, no smoke; F (2, 1887) = 1.96, p=.14). During the debriefing interview at the end of the EMA data collection week, participants reported an overwhelmingly positive experience with the task, often commenting that it was like a game, that it enhanced the rest of the interview experience, and that it was not a burden.

Associations with Laboratory Measures of Neurocognition

Descriptive information on laboratory neurocognitive measures is presented in Table 1. Scores from the laboratory assessments were tabulated according to standardized test protocols and were adjusted based on available normative data. Spearman's rank correlations were then run between quintile of EMA VWM performance and composite scores from the standardized measures of neuropsychological functioning. As is evident in Table 2, quintile was positively correlated with predicted full scale IQ (WTAR), auditory working memory on the WAIS-IV (Digit Span scaled score, Letter-Number Sequencing scaled score, and WMI index score), and visual working memory on the WMS-IV (Spatial Addition scaled score, Symbol Span scaled score, and VWMI index score; all p's <.05). However, quintile was not significantly associated with estimates of processing speed and verbal reasoning (all p's >.09), providing evidence for the discriminant validity of interpretations of task performance. To determine whether significant relationships were best accounted for by intellectual abilities, partial correlation coefficients were estimated using nonparametric methods between quintile and indices of auditory and visual attention and working memory while controlling for predicted full scale IQ. The correlations between quintile and all indices of VWM and Letter-Number Sequencing remained significant after adjusting for predicted full scale IQ (all p's <.05), and correlations between quintile and other indices of auditory working memory trended toward significance (all p's <.09; Table 2).
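The adjustment for predicted full scale IQ can be illustrated with the standard first-order partial correlation formula applied to rank correlations. This is a sketch under the assumption that the partial Spearman coefficients were computed this way; the software and exact procedure are not stated in the text. The inputs are the rounded coefficients reported in Table 2, and the function and variable names are hypothetical.

```python
import math


def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Rounded Spearman coefficients taken from Table 2.
R_QUINTILE_FSIQ = 0.45
# measure: (correlation with quintile, correlation with predicted FSIQ)
measures = {
    "Digit Span": (0.42, 0.43),
    "Letter-Number Sequencing": (0.46, 0.44),
    "WMI": (0.44, 0.45),
    "Spatial Addition": (0.53, 0.32),
    "Symbol Span": (0.60, 0.32),
    "VWMI": (0.64, 0.32),
}

partials = {
    name: round(partial_corr(r_quintile, R_QUINTILE_FSIQ, r_fsiq), 2)
    for name, (r_quintile, r_fsiq) in measures.items()
}
```

Run on these rounded inputs, the sketch gives .28, .33, .30, .46, .54, and .59, which agree with the parenthesized values in Table 2 to within .01 (the WMI value comes out as .30 rather than .31, plausibly because the inputs are themselves rounded).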

Table 1.

Descriptives of Laboratory Neurocognitive Measures by Quintile of EMA Visual Working Memory Task Performance

| Measure | Total Sample (n=39) | Quintile 1 (n=7) | Quintile 2 (n=8) | Quintile 3 (n=8) | Quintile 4 (n=8) | Quintile 5 (n=8) |
|---|---|---|---|---|---|---|
| Auditory Working Memory (WAIS-IV WMI) | | | | | | |
| Digit Span Scaled Score | 9.56 (2.58) | 8.29 (1.70)a,c | 8.22 (2.28)a,f | 9.63 (1.69) | 10.86 (1.77)c,f | 11.00 (3.78) |
| Letter-Number Sequencing Scaled Score | 9.62 (1.95) | 8.57 (.53)b,c | 8.56 (1.33)e,f | 9.88 (1.13)b,e | 10.14 (1.35)c,f | 11.00 (3.25) |
| WMI Index Score | 97.56 (12.04) | 97.29 (5.22)b,c | 90.78 (9.52)f | 98.50 (6.87)b | 102.57 (7.91)c,f | 105.38 (19.10) |
| Visual Working Memory (WMS-IV VWMI) | | | | | | |
| Spatial Addition Scaled Score | 8.97 (2.75) | 6.86 (1.57)b,d | 8.22 (2.59)g | 9.38 (2.26)b | 8.14 (2.79)h | 12.00 (1.69)d,g,h |
| Symbol Span Scaled Score | 9.79 (2.98) | 8.00 (1.73)d | 8.33 (2.35)g | 9.25 (2.60) | 10.00 (3.11)h | 13.38 (1.77)d,g,h |
| VWMI Index Score | 96.38 (14.22) | 84.86 (3.98)b,d | 90.00 (10.66)g | 95.88 (11.89)b | 94.71 (13.09)h | 115.63 (7.39)d,g,h |
| General Intelligence (WTAR) | | | | | | |
| WTAR Standard Score | 103.97 (13.76) | 100.86 (10.81)d | 92.78 (17.17)e,g | 110.38 (11.24)e | 103.86 (9.10)h | 113.00 (9.09)d,g,h |
| Predicted FSIQ | 101.85 (11.04) | 98.71 (9.67)d | 92.56 (11.81)e,g | 106.38 (8.77)e | 101.57 (7.85) | 110.75 (7.55)d,g |
| Verbal Abilities (WAIS-IV Similarities) | | | | | | |
| Similarities Scaled Score | 11.15 (3.29) | 11.14 (2.41) | 8.67 (2.00)e,g | 12.00 (3.16)e | 11.14 (4.26) | 13.13 (3.23)g |
| Processing Speed (WAIS-IV PSI) | | | | | | |
| Symbol Search Scaled Score | 11.85 (2.92) | 10.29 (2.06) | 11.56 (2.74) | 12.63 (3.42) | 12.43 (2.99) | 12.25 (3.28) |
| Coding Scaled Score | 10.69 (2.82) | 9.71 (2.21) | 10.00 (3.00) | 11.38 (2.77) | 11.00 (3.92) | 11.38 (2.26) |
| PSI Index Score | 106.23 (13.76) | 99.86 (9.89) | 104.33 (14.64) | 107.25 (14.25) | 109.71 (17.56) | 109.88 (12.59) |

Note. Values represent means (standard deviations); WAIS-IV, Wechsler Adult Intelligence Scale-Fourth Edition; WMI, Working Memory Index; WMS-IV, Wechsler Memory Scale-Fourth Edition; VWMI, Visual Working Memory Index; WTAR, Wechsler Test of Adult Reading; FSIQ, Full Scale IQ; PSI, Processing Speed Index.

Across quintiles, mean values with shared alphabetic superscripts signify significant pairwise comparisons; all other pairwise comparisons were nonsignificant at p > .05.

Table 2.

Bivariate Correlations between Quintile of EMA Visual Working Memory Task Performance and Laboratory Measures of Neurocognition

| Variables | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Quintile | .42** (.28) | .46** (.33*) | .44** (.31) | .53*** (.46**) | .60*** (.54***) | .64*** (.59***) | .45* | .28 | .23 | .25 | .27 |
| 2. Digit Span Scaled Score | -- | .80*** | .97*** | .19 | .40** | .35* | .43** | .14 | .30 | .28 | .28 |
| 3. Letter-Number Sequencing Scaled Score | -- | -- | .91*** | .17 | .42** | .34* | .44** | .29 | .18 | .27 | .19 |
| 4. WMI Index Score | -- | -- | -- | .19 | .41** | .34* | .45** | .20 | .25 | .28 | .24 |
| 5. Spatial Addition Scaled Score | -- | -- | -- | -- | .41** | .81*** | .32* | .19 | .23 | .20 | .23 |
| 6. Symbol Span Scaled Score | -- | -- | -- | -- | -- | .84*** | .32* | .38* | .25 | .10 | .21 |
| 7. VWMI Index Score | -- | -- | -- | -- | -- | -- | .32* | .28 | .26 | .14 | .22 |
| 8. Predicted FSIQ | -- | -- | -- | -- | -- | -- | -- | .49** | .21 | .20 | .23 |
| 9. Similarities Scaled Score | -- | -- | -- | -- | -- | -- | -- | -- | .01 | .14 | .05 |
| 10. Symbol Search Scaled Score | -- | -- | -- | -- | -- | -- | -- | -- | -- | .61*** | .81*** |
| 11. Coding Scaled Score | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | .87*** |
| 12. PSI Index Score | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- |

Note. Values represent Spearman rank-order correlations; values in parentheses represent partial Spearman rank-order correlations controlling for predicted FSIQ.

*p < .05

**p < .01

***p < .001

italicized values have p-values <.09.

WMI, Working Memory Index; VWMI, Visual Working Memory Index; FSIQ, Full Scale IQ; PSI, Processing Speed Index

Discussion

Given potential limitations in generalizability of traditional neurocognitive approaches, the overall goal of this study was to investigate the acceptability and feasibility of EMA VWM assessments, and whether real-time, ambulatory measures of VWM correlated with standardized measures of neurocognition. Only two studies to date have specifically examined the translatability of cognitive tasks into EMA paradigms (Waters & Li, 2008; Waters et al., 2014); however, these investigations focused on a reaction time task (Stroop) and did not thoroughly investigate the degree to which this task correlated with a broad range of neurocognitive measures. Therefore, the convergent and discriminant validity of interpretations from such approaches remain in question. As such, this study compared the performance on a novel EMA-adapted VWM task to scores on several standardized, laboratory measures of neurocognition.

The results of this study support the feasibility of administering a VWM task in an EMA paradigm. First, participants on average provided 38 prompts over the course of the interview week and responded to 87% of random prompts within three minutes of signaling, which is consistent with the criterion set forth by Stone and Shiffman (2002) for adequate compliance. Importantly, only half of the participants in this sample had prior experience with EMA data collection from the parent project, all participants were naive to the examined EMA VWM task, and there were no differences on EMA VWM task performance based on prior EMA exposure. Therefore, high rates of compliance are likely not attributable to repeated exposure and practice to this form of intensive data collection methodology. During debriefing interviews at the end of the EMA study week, participants generally indicated that they enjoyed the task and few individuals encountered problems during its administration. Individuals were also able to complete the entire assessment in an average of seven seconds (following stimulus presentation), which is substantially less than typical neuropsychological assessments conducted in a laboratory.

Results from this study increase confidence that the properties of standard assessments of VWM transfer to an EMA paradigm and support this approach's acceptability. People who performed better on the EMA task also performed better on laboratory measures of working memory, namely VWM. Consistent with prior reports (Fukuda et al., 2010), EMA VWM was associated with overall intellectual capacity. In this study, most relationships between EMA VWM performance and laboratory-assessed working memory persisted even after adjusting for predicted IQ; however, performance on the EMA VWM task cannot be definitively disentangled from overall intellectual capacity given that the employed laboratory measure of working memory is a component of full-scale IQ on the WAIS-IV. Importantly, EMA VWM was not linked with processing speed and verbal abilities, providing preliminary evidence for the convergent and discriminant validity of task performance interpretation from this novel EMA approach. However, it should be noted that other studies have documented both theoretical and empirical relationships between working memory and cognitive speed in adult clinical populations (Brebion et al., 2014), indicating that the nature of these relationships requires further scrutiny both in the laboratory and in real-time.

The current investigation is not without limitations. First, although preliminary evidence shows that our EMA VWM task relates to standardized assessments of working memory but not other cognitive capacities, this task is novel and it is possible that it was not an accurate VWM measure. Future studies are warranted that stringently establish the psychometric properties of this task and implement redundant EMA measures of working memory to determine the sensitivity and specificity of effects observed in this study. Additionally, it is necessary to compare performance on the EMA VWM task to additional standardized and/or commonly employed measures of VWM (e.g., subtests from the WMS-III; n-back paradigms). Second, our participants were adolescents/young adults who may be technologically savvy, which may have inflated compliance and performance. Carrying handheld devices may be more of an adjustment and burden to older cohorts and therefore future studies should not only consider the replicability of our findings, but also compare performances in adolescents/young adults to older samples. However, EMA methods were used with an adult sample and similar compliance rates were achieved (Waters & Li, 2008), which argues that this method of data collection is not uniquely implementable among younger age cohorts. Regardless, as EMA transitions to smartphone applications and there is greater familiarity with these devices, any existing technological advantage of young adults may diminish. Finally, EMA task performance was negatively skewed and the same task was given multiple times a day over the course of a week. Therefore, it is possible that task learning might have influenced findings and may have resulted in a ceiling effect. 
Importantly, all participants engaged in the same protocol including an EMA training session prior to the initiation of data collection; therefore, if there was a learning effect, it would likely be uniform across participants and would not impose systematic effects on analyses. Regardless, next steps will include implementing a more challenging paradigm in EMA protocols to achieve a more normal performance distribution, and future work may compare learning curves across test modalities.

Despite these limitations, this study presents feasibility and acceptability data on the integration of traditional neurocognitive assessments into ambulatory paradigms. The task from the current study offers an important extension of the extant literature insofar as it examines the validity of interpretations from an EMA-based VWM measure that assessed functioning over an entire week and across multiple naturalistic settings. Future directions include further establishing the psychometric properties of this particular task and translating assessment of other cognitive domains into ambulatory paradigms. Finally, although one might argue that the significant inter-method correlations speak to the sufficiency of traditional “paper and pencil” neuropsychological tests, we believe these data instead suggest that ambulatory assessments may be an ideal complementary approach to laboratory protocols. Indeed, ambulatory approaches place a greater emphasis on one core aspect of ecological validity given that test administration occurs in the natural living environment of the individual as compared to an isolated testing room, which in turn may enhance generalizability of findings. Additionally, the correlations between the EMA VWM task and the laboratory measures were moderate (.46 to .64), indicating overlap but not total redundancy in the information provided. To the extent that cognition may fluctuate not only day to day but also moment to moment, EMA may allow for a more nuanced assessment of an individual's pattern of variability in cognitive capacities as well as the simultaneous consideration of the factors that trigger momentary changes. Toward this end, we have work in progress that models real-time variability in cognition as a function of between- and within-subject differences in contextual factors (e.g., substance use, proximity to peers).
A long-term aim of this body of work is to more clearly define individual patterns of cognitive fluctuations experienced in real-world settings and translate this information to targeted mobile prevention and intervention efforts.
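
One common way to separate between-subject from within-subject differences in repeated EMA observations is person-mean centering. The sketch below assumes that approach purely for illustration; it is not the modeling code from the work in progress mentioned above, and the function name `split_between_within` and the record layout are hypothetical.

```python
from collections import defaultdict


def split_between_within(records):
    """Decompose repeated observations into between- and within-subject parts.

    records: list of (subject_id, value) pairs, one per EMA observation
    (a hypothetical layout used only for this example).
    Returns a list of (subject_id, between, within) tuples where
      between = the subject's own mean minus the mean of all subject means
      within  = the observation minus that subject's own mean
    """
    by_subject = defaultdict(list)
    for subject_id, value in records:
        by_subject[subject_id].append(value)

    # Each subject's average across their own observations
    person_means = {sid: sum(vals) / len(vals) for sid, vals in by_subject.items()}
    # Average of the subject means, so each subject is weighted equally
    grand_mean = sum(person_means.values()) / len(person_means)

    return [
        (sid, person_means[sid] - grand_mean, value - person_means[sid])
        for sid, value in records
    ]
```

The between component captures how a person differs from others on average, while the within component captures how a given moment differs from that person's own typical level; both could then enter a multilevel model as separate predictors.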

Acknowledgments

This publication was made possible by the National Cancer Institute (NCI; P01CA098262, PI: Mermelstein). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of NCI or the National Institutes of Health. We thank Dr. Kathi Diviak and John O'Keefe for their work recruiting and maintaining study participants.

Footnotes

The authors declare no conflicts of interest.

References

- Brebion G, Stephan-Otto C, Huerta-Ramos E, Usall J, Perez Del Olmo M, Contel M, Ochoa S. Decreased processing speed might account for working memory span deficit in schizophrenia, and might mediate the associations between working memory span and clinical symptoms. Eur Psychiatry. 2014. doi: 10.1016/j.eurpsy.2014.02.009.

- Cameron E, Sinclair W, Tiplady B. Validity and sensitivity of a pen computer battery of performance tests. J Psychopharmacol. 2001;15:105–110. doi: 10.1177/026988110101500207.

- Castaneda AE, Suvisaari J, Marttunen M, Perala J, Saarni SI, Aalto-Setala T, Tuulio-Henriksson A. Cognitive functioning in a population-based sample of young adults with anxiety disorders. Eur Psychiatry. 2011;26:346–353. doi: 10.1016/j.eurpsy.2009.11.006.

- Chaytor N, Schmitter-Edgecombe M. The ecological validity of neuropsychological tests: A review of the literature on everyday cognitive skills. Neuropsychology Review. 2003;13:181–197. doi: 10.1023/b:nerv.0000009483.91468.fb.

- Dierker L, Mermelstein R. Early emerging nicotine-dependence symptoms: a signal of propensity for chronic smoking behavior in adolescents. J Pediatr. 2010;156:818–822. doi: 10.1016/j.jpeds.2009.11.044.

- Duncan J. Selective attention and the organization of visual information. Journal of Experimental Psychology: General. 1984;113:501–517. doi: 10.1037//0096-3445.113.4.501.

- Fukuda K, Vogel E, Mayr U, Awh E. Quantity, not quality: the relationship between fluid intelligence and working memory capacity. Psychon Bull Rev. 2010;17:673–679. doi: 10.3758/17.5.673.

- Heinrichs RW. Current and emergent applications of neuropsychological assessment: Problems of validity and utility. Professional Psychology: Research and Practice. 1990;21:171–176.

- Ichikawa SI. Verbal memory span, visual memory span, and their correlations with cognitive tasks. Japanese Psychological Research. 1983;25:173–180.

- Jiang Y, Olson IR, Chun MM. Organization of visual short-term memory. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2000;26:683–702. doi: 10.1037//0278-7393.26.3.683.

- Johnstone B, Farmer JE. Preparing neuropsychologists for the future: The need for additional training guidelines. Archives of Clinical Neuropsychology. 1997;12:523–530.

- Kasai K, Fukuda M, Yahata N, Morita K, Fujii N. The future of real-world neuroscience: Imaging techniques to assess active brains in social environments. Neurosci Res. 2015;90C:65–71. doi: 10.1016/j.neures.2014.11.007.

- Kyllingsbaek S, Bundesen C. Changing change detection: improving the reliability of measures of visual short-term memory capacity. Psychon Bull Rev. 2009;16:1000–1010. doi: 10.3758/PBR.16.6.1000.

- Luck SJ, Vogel EK. Visual working memory capacity: from psychophysics and neurobiology to individual differences. Trends Cogn Sci. 2013;17:391–400. doi: 10.1016/j.tics.2013.06.006.

- Lukasiewicz M, Benyamina A, Reynaud M, Falissard B. An in vivo study of the relationship between craving and reaction time during alcohol detoxification using the ecological momentary assessment. Alcohol Clin Exp Res. 2005;29:2135–2143. doi: 10.1097/01.alc.0000191760.42980.50.

- Milner B. Interhemispheric differences in the localization of psychological processes in man. Br Med Bull. 1971;27:272–277. doi: 10.1093/oxfordjournals.bmb.a070866.

- Moore CM, Yantis S, Vaughan B. Object-based visual selection: Evidence from perceptual completion. Psychological Science. 1998;9:104–110.

- Myles-Worsley M, Park S. Spatial working memory deficits in schizophrenia patients and their first degree relatives from Palau, Micronesia. Am J Med Genet. 2002;114:609–615. doi: 10.1002/ajmg.10644.

- Parsey CM, Schmitter-Edgecombe M. Applications of technology in neuropsychological assessment. Clinical Neuropsychologist. 2013;27:1328–1361. doi: 10.1080/13854046.2013.834971.

- Parsons TD, Courtney CG. An initial validation of the Virtual Reality Paced Auditory Serial Addition Test in a college sample. J Neurosci Methods. 2014;222:15–23. doi: 10.1016/j.jneumeth.2013.10.006.

- Parsons TD, Rizzo AA. Initial validation of a virtual environment for assessment of memory functioning: Virtual Reality Cognitive Performance Assessment Test. Cyberpsychol Behav. 2008;11:17–25. doi: 10.1089/cpb.2007.9934.

- Parsons TD, Silva TM, Pair J, Rizzo AA. Virtual environment for assessment of neurocognitive functioning: virtual reality cognitive performance assessment test. Stud Health Technol Inform. 2008;132:351–356.

- Patterson MD, Bly BM, Porcelli AJ, Rypma B. Visual working memory for global, object, and part-based information. Memory and Cognition. 2007;35:738–751. doi: 10.3758/bf03193311.

- Piasecki TM, Trela CJ, Hedeker D, Mermelstein RJ. Smoking antecedents: separating between- and within-person effects of tobacco dependence in a multiwave ecological momentary assessment investigation of adolescent smoking. Nicotine Tob Res. 2014;16(Suppl 2):S119–126. doi: 10.1093/ntr/ntt132.

- Pomerantz JR, Portillo MC. Grouping and emergent features in vision: Toward a theory of basic Gestalts. Journal of Experimental Psychology: Human Perception and Performance. 2011;37:1331–1349. doi: 10.1037/a0024330.

- Schmidt C, Collette F, Cajochen C, Peigneux P. A time to think: circadian rhythms in human cognition. Cogn Neuropsychol. 2007;24:755–789. doi: 10.1080/02643290701754158.

- Scholey AB, Benson S, Neale C, Owen L, Tiplady B. Neurocognitive and mood effects of alcohol in a naturalistic setting. Hum Psychopharmacol. 2012;27:514–516. doi: 10.1002/hup.2245.

- Stone AA, Shiffman S. Capturing momentary, self-report data: a proposal for reporting guidelines. Ann Behav Med. 2002;24:236–243. doi: 10.1207/S15324796ABM2403_09.

- Sunderland A, Harris JE, Baddeley AD. Do laboratory tests predict everyday memory? A neuropsychological study. Journal of Verbal Learning and Verbal Behavior. 1983;22:341–357.

- Tapert SF, Pulido C, Paulus MP, Schuckit MA, Burke C. Level of response to alcohol and brain response during visual working memory. J Stud Alcohol. 2004;65:692–700. doi: 10.15288/jsa.2004.65.692.

- Tremblay S, Saint-Aubin J, Jalbert A. Rehearsal in serial memory for visual-spatial information: evidence from eye movements. Psychon Bull Rev. 2006;13:452–457. doi: 10.3758/bf03193869.

- Troster AI. Clinical neuropsychology, functional neurosurgery, and restorative neurology in the next millennium: Beyond secondary outcome measures. Brain and Cognition. 2000;42:117–119. doi: 10.1006/brcg.1999.1178.

- Vecera SP, Farah MJ. Does visual attention select objects or locations? Journal of Experimental Psychology: General. 1994;123:146–160. doi: 10.1037//0096-3445.123.2.146.

- Ventre-Dominey J, Bailly A, Lavenne F, Lebars D, Mollion H, Costes N, Dominey PF. Double dissociation in neural correlates of visual working memory: a PET study. Brain Res Cogn Brain Res. 2005;25:747–759. doi: 10.1016/j.cogbrainres.2005.09.004.

- Wagemans J, Elder JH, Kubovy M, Palmer SE, Peterson MA, Singh M, von der Heydt R. A century of Gestalt psychology in visual perception: I. Perceptual grouping and figure-ground organization. Psychological Bulletin. 2012;138:1172–1217. doi: 10.1037/a0029333.

- Waters AJ, Li Y. Evaluating the utility of administering a reaction time task in an ecological momentary assessment study. Psychopharmacology (Berl). 2008;197:25–35. doi: 10.1007/s00213-007-1006-6.

- Waters AJ, Szeto EH, Wetter DW, Cinciripini PM, Robinson JD, Li Y. Cognition and craving during smoking cessation: an ecological momentary assessment study. Nicotine Tob Res. 2014;16(Suppl 2):S111–118. doi: 10.1093/ntr/ntt108.

- Wechsler D. Wechsler Test of Adult Reading. Pearson; San Antonio, TX: 2001.

- Wechsler D. Wechsler Adult Intelligence Scale. 4th Edition. Pearson; San Antonio, TX: 2008a.

- Wechsler D. Wechsler Memory Scale. 4th Edition. Pearson; San Antonio, TX: 2008b.

- Woodman GF, Vecera SP, Luck SJ. Perceptual organization influences visual working memory. Psychonomic Bulletin and Review. 2003;10:80–87. doi: 10.3758/bf03196470.