Abstract

Objectives

Speech perception in background noise is difficult for many individuals, and there is considerable performance variability across listeners. The combination of physiological and behavioral measures may help explain the sources of this variability for individuals and groups and may prove clinically useful with hard-to-test populations. The purpose of this study was threefold: (1) to determine the effects of signal-to-noise ratio (SNR) and signal level on cortical auditory evoked potentials (CAEPs) and sentence-level perception in older normal-hearing (ONH) and older hearing-impaired (OHI) individuals; (2) to determine the effects of hearing impairment and age on CAEPs and perception; and (3) to explore how well CAEPs correlate with and predict speech perception in noise.

Design

Two groups of older participants (15 ONH and 15 OHI) were tested using speech-in-noise stimuli to measure CAEPs and sentence-level perception of speech. The syllable /ba/, used to evoke CAEPs, and sentences were presented in speech-spectrum background noise at four signal levels (50, 60, 70, and 80 dB SPL) and up to seven SNRs (−10, −5, 0, 5, 15, 25, 35 dB). These data were compared between groups to reveal the hearing impairment effect and then combined with previously published data for 15 young normal-hearing individuals (Billings et al., 2013) to determine the aging effect.

Results

Robust effects of SNR were found for perception and CAEPs. Small but significant effects of signal level were found for perception, primarily at poor SNRs and high signal levels, and in some limited instances for CAEPs. Significant effects of age were seen for both CAEPs and perception, while hearing impairment effects were found only with perception measures. CAEPs correlated well with perception and predicted SNR50s to within 2 dB for ONH. However, prediction error was much larger for OHI and varied widely (from 6 to 12 dB) depending on the model used for prediction.

Conclusions

When background noise is present, SNR dominates both perception-in-noise testing and cortical electrophysiological testing, with smaller, sometimes significant, contributions from signal level. A mismatch between behavioral and electrophysiological results was found (hearing impairment effects were seen primarily in the behavioral data), illustrating the possible contributions of higher-order cognitive processes to behavior. Interestingly, the hearing impairment effect size was more than five times larger than the aging effect size for CAEPs and perception. Sentence-level perception can be predicted well in normal-hearing individuals; however, additional research is needed to explore improved prediction methods for older individuals with hearing impairment.

Keywords: Cortical auditory evoked potentials (CAEPs), Event-related potentials (ERPs), Signals in noise, Signal-to-noise ratio (SNR), Background noise, N1, perception in noise, psychometric function, prediction

INTRODUCTION

Understanding speech in the presence of background noise is a challenge that has been studied for many decades (Miller 1947; Cherry 1953); it requires the use of temporal, spectral, and spatial auditory cues as well as the integration of multisensory information. In addition, how the background noise interacts with, and resembles, the target signal is an important consideration. These variables, together with listener factors such as age and hearing status, contribute to the considerable variability in performance across individuals. A physiological measure, such as cortical auditory evoked potentials (CAEPs), may be useful in understanding how the auditory system encodes the cues that higher-order processing uses for accurate perception. Furthermore, the relationship between CAEPs and behavior may provide a neural correlate of speech understanding for populations who have particular difficulty understanding speech in noise, and may inform our understanding of the mechanisms underlying speech understanding in difficult listening environments. The clinical utility of CAEPs depends on establishing the relationship between behavior and electrophysiology; for example, one measure could be used to predict the other in hard-to-test populations. Complementary information gained from combining behavioral and physiological measures may also improve the diagnosis and treatment of perception-in-noise difficulties. However, to date, the relationship between CAEPs in noise and speech perception in noise is not well understood.

CAEPs recorded in background noise were originally studied to understand the effects of masking and bandwidth on evoked responses or to simulate hearing loss (Martin et al. 1997; Whiting et al. 1998; Martin et al. 1999). More recently, researchers have used CAEPs recorded in background noise to explore the neural processing associated with auditory perception in noise (Androulidakis & Jones 2006; Kaplan-Neeman et al. 2006; Hiraumi et al. 2008). The important factors affecting CAEPs elicited in background noise (e.g., SNR, signal level, noise level, signal type, noise type) are only beginning to be understood. SNR has been shown to be a key factor affecting CAEPs elicited in background noise (Whiting et al. 1998; Kaplan-Neeman et al. 2006; Hiraumi et al. 2008; Billings et al. 2009, 2013; Sharma et al. 2014). In addition, when background noise is present and audible, SNR has a much stronger effect on waveform morphology than signal level when both are varied (Billings et al. 2009, 2013). Signal level effects in the presence of background noise do appear in the literature (Billings et al. 2013; Sharma et al. 2014); however, they are much smaller than the robust SNR effect. Behaviorally, the effect of signal level in noise is well understood: performance improves as the signal becomes audible, and once signal levels exceed roughly 60-70 dB SPL, modest decrements in performance appear, which are thought to be due to spread of masking in the cochlea at higher levels (Studebaker et al. 1999; Summers & Molis 2004; Hornsby et al. 2005). Taken together, behavioral and CAEP data indicate that signal level effects do occur, but that CAEP sensitivity to signal level is weak or is overwhelmed by a stronger sensitivity to SNR. To date, all CAEP studies that vary both SNR and signal level have been completed in younger normal-hearing adults.
The relationship between signal level and SNR is important to determine in older hearing-impaired populations, because these effects likely differ from those in younger populations owing to changes in upward spread of masking in impaired ears (Klein et al. 1990).

While several studies have demonstrated the behavioral effects of hearing impairment and age on perception in noise, limited human electrophysiological data exist. It would be helpful to be able to differentiate between age and hearing impairment effects; indeed, separating the “central effects of peripheral pathology” from the “central effects of biological aging” has been discussed for several decades (Willott, 1991). Characterizing the effect sizes of age and hearing impairment on human electrophysiology could help explain behavioral performance variability across individuals. To our knowledge, only two CAEP studies have explored the effects of age as a function of SNR (Kim et al. 2012; McCullagh & Shinn 2013). Both used pure tones presented in continuous noise and tested younger and older normal-hearing groups. Not surprisingly, both studies found main effects of SNR on all CAEP measures used; however, main effects of age were demonstrated only by McCullagh & Shinn (2013), for amplitude measures and P2 latency, and interactions between age and SNR were seen in both studies but for different measures (amplitudes in McCullagh & Shinn; N1 latency in Kim et al.). Methodological differences likely contributed to these discrepancies. For example, McCullagh & Shinn (2013) held signal level constant and manipulated noise level, whereas Kim et al. (2012) held noise level constant and manipulated signal level. In the current study, both signal and noise levels were varied to determine how signal level and SNR modulate brain and behavioral measures.

Several studies have explored the relationship between behavior and CAEPs, particularly in the domain of speech-in-noise testing (e.g., Anderson et al. 2010; Parbery-Clark et al. 2011; Bennett et al. 2012; Billings et al. 2013). However, most of these studies limited their analysis to correlations between one behavioral measure and one aspect of the evoked response; while this is an important first step, clinical usefulness requires a more thorough understanding of the relationship between behavior and physiology. Perhaps a combination of characteristics of the evoked response would correlate better with behavior. Previously, we demonstrated that the behavioral SNR50 (the SNR at which an individual understands 50% of the target signal) could be predicted to within 1 dB by CAEPs in young normal-hearing (YNH) individuals when multiple peak latencies and amplitudes were considered (Billings et al. 2013). Such a prediction model, which accounts for multiple aspects of the CAEP waveform (i.e., latencies, amplitudes, and area measures), could be used to predict behavior in older individuals as well.

In the current study, two groups of older individuals were tested, and their data were then combined with a previously published dataset of YNH individuals (Billings et al. 2013) to extend our understanding of signal-in-noise coding and perception. The specific purposes of this study were threefold. The first objective was to determine the effects of signal level and SNR on cortical electrophysiology and sentence-level perception in noise in older normal-hearing (ONH) and older hearing-impaired (OHI) individuals. It was hypothesized that signal level would have a stronger effect on electrophysiology for older individuals than for younger individuals. The second objective was to determine the effects of hearing impairment (ONH versus OHI) and age (ONH versus YNH) on signal-in-noise neural encoding and behavior. It was hypothesized that hearing impairment and age effects would be present for both electrophysiology and behavior. The final objective was to explore the relationship between the behavioral and physiological datasets using correlation and prediction analyses. It was hypothesized that predictions for older hearing-impaired individuals would be poorer than for older normal-hearing or younger normal-hearing individuals.

MATERIALS AND METHODS

Participants

Fifteen ONH listeners (mean age = 69.4 years, age range = 60-78 years; six males and nine females) and 15 OHI individuals (mean age = 72.8 years, age range = 63-84 years; nine males and six females) were tested in this study. In addition, previously published data from 15 YNH listeners (mean age = 27.6 years, age range = 23-34 years; seven males and eight females) were included to facilitate group comparisons (Billings et al., 2013). Previous data have demonstrated that a sample size of 15 participants was sufficient to demonstrate effects of SNR, signal level, and age. Pure-tone hearing thresholds were symmetrical across ears for all three groups. Right-ear thresholds from 250 to 14,000 Hz are shown in Table 1; ultra-high frequencies were tested to better characterize the differences between ONH and OHI hearing thresholds. Thresholds for normal-hearing individuals were better than 25 dB HL from 250 to 4000 Hz (exception: one ONH individual had a 4000-Hz threshold of 25 dB HL). A two (group: ONH vs. YNH) by five (frequency: 250, 500, 1000, 2000, and 4000 Hz) repeated-measures analysis of variance on normal-hearing thresholds revealed no main effect of group (F(1,14) = 4.27, p > .05) or frequency (F(4,58) = .328, p > .05). The OHI group had mild-to-severe sloping sensorineural hearing loss. All participants had 226-Hz tympanometric peak pressure between −80 and +80 daPa, ear canal volume between 0.7 and 2.3 cm³, and static admittance between 0.2 and 2.2 mmho. All participants reported right-hand dominance. Participants were in good general health with no history of otologic disorders, and all scored above 28 on the Mini-Mental State Examination (Folstein et al. 1975). Participants provided informed consent, and the research was approved by the pertinent institutional review boards.

Table 1.

Mean audiometric thresholds (dB HL) for the right ear for the YNH, ONH, and OHI groups; values are mean (SD)

| Group | 250 Hz | 500 Hz | 1000 Hz | 2000 Hz | 3000 Hz | 4000 Hz | 6000 Hz | 8000 Hz | 10000 Hz | 12500 Hz | 14000 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YNH | 6.0 (5.7) | 8.7 (7.2) | 7.3 (7.0) | 6.3 (6.7) | 5.5 (8.0) | 3.7 (6.7) | 5.0 (8.2) | 1.7 (5.6) | 4.7 (6.9) | 1.3 (8.5) | 3.0 (10.0) |
| ONH | 10.0 (6.5) | 8.3 (5.6) | 10.7 (6.8) | 9.3 (7.0) | 10.8 (7.0) | 14.3 (5.6) | 31.4 (17.6) | 42.9 (22.8) | 61.8 (19.1) | 69.6 (9.4) | 63.6 (6.4) |
| OHI | 19.3 (7.0) | 26.3 (9.0) | 32.3 (12.2) | 44.0 (12.3) | 52.7 (8.8) | 57.3 (5.6) | 62.0 (6.5) | 65.3 (13.3) | 70.0 (6.5) | 78.9 (8.0) | 71.9 (5.9) |

Hz-Hertz, SD-standard deviation, dB-decibel, HL-hearing level, YNH-young normal hearing, ONH-old normal hearing, OHI-old hearing impaired

Stimuli

Stimuli for behavioral and electrophysiological testing included signals and noises presented through an Etymotic ER2 insert earphone to the right ear and were identical to those used previously (Billings et al. 2013). Briefly, behavioral test stimuli consisted of sentences from the Institute of Electrical and Electronics Engineers (IEEE, 1969). IEEE sentences provided a clinically relevant behavioral measure with which to explore the relationship between brain and behavior. The IEEE sentences were spoken by a female talker. The electrophysiological signal was the speech syllable /ba/ (a naturally produced female exemplar from the UCLA Nonsense Syllable Test), shortened to 450 ms by windowing off the end of the steady-state vowel to reduce test time. The syllable /ba/ provides an abrupt onset that yields a relatively clear response even at poor SNRs. Signals were presented at four levels: 50, 60, 70, and 80 dB SPL (broadband, C-weighted).

The background noise was continuous speech-spectrum noise whose spectrum was based on the long-term average spectrum of 720 IEEE sentences (see Billings et al., 2011 for noise creation details). The noise level was varied to produce SNRs ranging from −10 to 35 dB. All stimuli (signals and noise) were low-pass filtered with a Butterworth filter with a 4000-Hz cutoff and a transition band extending to 5000 Hz, above which no frequency content was passed. For electrophysiology, background noise was presented continuously throughout each test condition, whereas for behavioral sentence testing the noise was gated on 2 seconds before each sentence onset and off 2 seconds after each sentence offset.
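Varying the noise level to set the SNR can be sketched as follows: the noise is rescaled so that its overall RMS level sits a chosen number of dB below (or above) the signal's overall RMS level. This is a minimal sketch under stated assumptions: the function names are hypothetical, levels are relative dB computed from digital waveforms (absolute dB SPL would require a separate acoustic calibration), and random arrays stand in for the concatenated stimuli.

```python
import numpy as np


def rms_db(x: np.ndarray) -> float:
    """Overall RMS level of a waveform in dB (relative to a digital value of 1)."""
    return float(20.0 * np.log10(np.sqrt(np.mean(np.square(x)))))


def scale_noise_to_snr(signal: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Return `noise` rescaled so that rms_db(signal) - rms_db(result) == snr_db."""
    target_noise_db = rms_db(signal) - snr_db   # noise level implied by the SNR
    gain_db = target_noise_db - rms_db(noise)   # change needed from the current level
    return noise * 10.0 ** (gain_db / 20.0)     # dB -> linear amplitude factor


# Usage with stand-in waveforms (hypothetical 44.1-kHz sampling rate, 10 s each).
fs = 44_100
rng = np.random.default_rng(0)
signal = rng.standard_normal(10 * fs)
noise = rng.standard_normal(10 * fs)
noise_at_minus5 = scale_noise_to_snr(signal, noise, snr_db=-5.0)
print(round(rms_db(signal) - rms_db(noise_at_minus5), 6))  # -5.0
```

Because only the noise is rescaled, the signal level stays fixed while the SNR changes, which mirrors the design in which noise level was varied to produce each SNR.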

Behavioral testing included SNRs of −10, −5, 0, +5, +15, +25, and +35 dB. Because of test-time restrictions, electrophysiological testing included only −5, +5, +15, +25, and +35 dB SNRs (i.e., −10 and 0 dB were not tested). At the lowest signal levels, the most positive SNR conditions could not be tested behaviorally or electrophysiologically because the required noise level would have fallen below the noise floor of the sound booth (approximately 40 dBC SPL). Specifically, at a 50-dB signal level, +15, +25, and +35 dB SNRs were not tested; at a 60-dB signal level, +25 and +35 dB SNRs were not tested; and at a 70-dB signal level, +35 dB SNR was not tested. Therefore, a total of 22 behavioral conditions and 14 electrophysiology conditions were completed. The order of conditions and of visit type (behavioral vs. electrophysiological) was randomized to avoid order effects associated with fatigue. Signal and noise levels were calibrated using the overall RMS level of a concatenated 10-second version of the stimulus.

Electrophysiology

Stimuli were presented in a passive paradigm in which participants were asked to ignore the auditory stimuli and watch a silent, closed-captioned movie of their choice. Electrode application and testing lasted approximately 3.5 hours. Neuroscan software and hardware were used for stimulus presentation and electrophysiological acquisition (Compumedics Neuroscan Stim2/Scan 4.5; Charlotte, NC). A 64-channel tin-electrode cap (Electro-Cap International, Inc.; Eaton, Ohio) was used for data collection, with the ground electrode on the forehead and the reference electrode at Cz. The electroencephalogram was low-pass filtered online at 100 Hz (12 dB/octave roll-off), amplified with a gain of 10, and digitized at a sampling rate of 1000 Hz. The stimulus for each condition was repeated at least 150 times with an offset-to-onset interstimulus interval of 1900 ms. Epochs extended from 200 ms before to 1050 ms after stimulus onset. Data were baseline corrected (using 100 ms of prestimulus baseline), linearly detrended, and re-referenced offline to an average reference. Eye movement was monitored in the horizontal and vertical planes with electrodes located inferior to and at the outer canthi of both eyes. Eye-blink artifacts were corrected offline using Neuroscan software (Neuroscan, 2007). This blink-reduction procedure uses a spatial singular value decomposition to calculate the covariation between each evoked-potential channel and a vertical eye channel, and then removes vertical blink activity from each electrode on a point-by-point basis to the degree that the evoked potential and blink activity covaried. Following blink correction, epochs with artifacts exceeding ±70 µV were rejected from averaging. The remaining sweeps were averaged and filtered offline from 0.1 Hz (high-pass, 24 dB/octave) to 30 Hz (low-pass, 12 dB/octave).
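The epoching, baseline, re-referencing, and rejection steps can be sketched in NumPy as below. This is a simplified illustration, not the Neuroscan pipeline: the function name is hypothetical, the SVD-based blink correction, linear detrending, and offline filtering are omitted, and applying the ±70 µV criterion to every channel is our assumption. Because baseline subtraction (per channel) and average referencing (per time point) are both linear, their order does not change the result.

```python
import numpy as np

FS_HZ = 1000                 # sampling rate (Hz)
PRE_MS, POST_MS = 200, 1050  # epoch window relative to stimulus onset
BASELINE_MS = 100            # prestimulus span used for baseline correction
REJECT_UV = 70.0             # rejection threshold (+/- microvolts)


def epoch_and_average(eeg_uv: np.ndarray, onsets: list[int]) -> tuple[np.ndarray, int]:
    """Epoch, baseline-correct, average-reference, reject, and average.

    eeg_uv: continuous EEG (channels x samples) in microvolts, assumed
            to be blink-corrected already.
    onsets: stimulus-onset sample indices.
    Returns the averaged epoch (channels x 1250 samples) and the number kept.
    """
    pre = PRE_MS * FS_HZ // 1000
    post = POST_MS * FS_HZ // 1000
    base = BASELINE_MS * FS_HZ // 1000
    kept = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > eeg_uv.shape[1]:
            continue  # epoch would extend past the recording
        ep = eeg_uv[:, onset - pre:onset + post].astype(float)
        # Baseline: subtract each channel's mean over the 100 ms before onset.
        ep -= ep[:, pre - base:pre].mean(axis=1, keepdims=True)
        # Average reference: subtract the across-channel mean at every sample.
        ep -= ep.mean(axis=0, keepdims=True)
        # Reject the epoch if any channel exceeds +/-70 uV.
        if np.abs(ep).max() <= REJECT_UV:
            kept.append(ep)
    if not kept:
        raise ValueError("all epochs were rejected")
    return np.mean(kept, axis=0), len(kept)
```

With the stated timing, onsets are 450 + 1900 = 2350 ms apart, so consecutive 1250-ms epochs do not overlap.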

Behavioral Testing

In a separate session from the CAEP measurements, participants completed a behavioral sentence-in-noise identification task that took approximately one hour. The order of sessions (electrophysiology vs. behavioral) was randomized, and behavioral testing usually occurred within one month of electrophysiological testing (for 4 of the 45 participants tested, the delay between sessions was approximately six months because of scheduling conflicts). Each IEEE sentence contains five keywords, each of which was scored as correct or incorrect. Ten sentences were presented per condition, for a total of 50 possible responses per condition. Two judges scored the participants’ repetitions of the sentences. To familiarize participants with the task, approximately 10 practice sentences at a signal level of 80 dB and varying SNRs were presented before testing.
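Keyword scoring and one simple way to obtain an SNR50 from the resulting scores are sketched below. The SNR50 step uses linear interpolation between the two tested SNRs that bracket 50% correct; this is an assumption for illustration and not necessarily how the authors derived SNR50s (fitting a psychometric function is a common alternative). Function names and the keyword counts are hypothetical, not study data.

```python
def keyword_percent_correct(n_correct: int, n_sentences: int = 10,
                            keywords_per_sentence: int = 5) -> float:
    """Percent of scored keywords repeated correctly in one condition (10 x 5 = 50)."""
    total = n_sentences * keywords_per_sentence
    if not 0 <= n_correct <= total:
        raise ValueError(f"score must be between 0 and {total}")
    return 100.0 * n_correct / total


def snr50_by_interpolation(snrs, percents, criterion=50.0):
    """SNR at which performance first rises to `criterion`, by linear interpolation.

    Points are sorted by SNR; returns None if the criterion is not crossed
    between two tested SNRs.
    """
    points = sorted(zip(snrs, percents))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 < criterion <= y1:
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    return None


# Hypothetical keyword counts (out of 50) at one signal level; not study data.
snrs = [-10, -5, 0, 5, 15]
scores = [keyword_percent_correct(k) for k in (2, 10, 30, 45, 50)]
print(scores)                                # [4.0, 20.0, 60.0, 90.0, 100.0]
print(snr50_by_interpolation(snrs, scores))  # -1.25
```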

Data Analysis and Interpretation

Electrophysiological outcome measures included peak amplitudes and latencies at electrode Cz and in the global field power (GFP; Skrandies 1989) waveform. GFP characterizes the simultaneous activity across all electrode sites as a function of time; specifically, it is the standard deviation across electrodes at each time point. In addition, a rectified area measure was calculated over a time window of 50 to 550 ms. Peak amplitudes were measured relative to baseline, and peak latencies relative to stimulus onset (i.e., 0 ms). Latency and amplitude measures were initially determined by an automatic peak-picking algorithm and then verified by two judges using polarity inversion at temporal electrodes, two sub-averages of even and odd trials (to demonstrate replication), and the GFP trace for a given condition.
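The three waveform measures can be sketched in NumPy as follows: GFP as the across-electrode standard deviation at each sample, rectified area as the sum of absolute amplitudes over 50-550 ms multiplied by the sample period, and a simple window-based peak picker. The peak-search windows and function names are hypothetical; the authors' automatic algorithm and its windows are not specified here. The sketch assumes baseline-corrected averages sampled at 1000 Hz with a 200-ms prestimulus interval.

```python
import numpy as np

FS_HZ = 1000   # samples per second (1 sample per ms)
PRE_MS = 200   # each averaged epoch starts 200 ms before stimulus onset


def ms_to_index(ms: float) -> int:
    """Convert a post-stimulus time in ms to an index into the epoch."""
    return int(round((ms + PRE_MS) * FS_HZ / 1000))


def global_field_power(avg_uv: np.ndarray) -> np.ndarray:
    """Standard deviation across electrodes at each time point (channels x times)."""
    return avg_uv.std(axis=0)


def rectified_area(wave_uv: np.ndarray, start_ms: float = 50, stop_ms: float = 550) -> float:
    """Sum of |amplitude| over the window times the sample period (uV*ms)."""
    seg = wave_uv[ms_to_index(start_ms):ms_to_index(stop_ms) + 1]
    return float(np.abs(seg).sum() * 1000.0 / FS_HZ)


def pick_peak(wave_uv: np.ndarray, start_ms: float, stop_ms: float, polarity: int):
    """Return (latency_ms, amplitude_uv) of the extreme point in a search window.

    polarity=+1 for positive peaks (P1, P2), -1 for negative peaks (N1, N2).
    Amplitude is the baseline-relative value at that sample.
    """
    i0, i1 = ms_to_index(start_ms), ms_to_index(stop_ms)
    k = int(np.argmax(polarity * wave_uv[i0:i1 + 1]))
    latency_ms = (i0 + k) * 1000.0 / FS_HZ - PRE_MS
    return latency_ms, float(wave_uv[i0 + k])


# Usage: a synthetic N1-like dip at 100 ms, searched in a hypothetical 70-150 ms window.
times_ms = np.arange(1250) - PRE_MS
cz = -5.0 * np.exp(-(((times_ms - 100) / 15.0) ** 2))
print(pick_peak(cz, 70, 150, polarity=-1))  # (100.0, -5.0)
```

Because the data are average-referenced, the across-channel mean is zero at each sample, so the population standard deviation used here equals the root-mean-square across electrodes.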

In this study, we asked six questions using three statistical approaches. Dependent variables included CAEP measures (P1, N1, P2, and N2 amplitudes and latencies, along with area measures) and IEEE sentence perception (keyword accuracy for each condition, and SNR50s). Independent variables included SNR, signal level, and participant group (i.e., OHI, ONH, or YNH).

Question #1: What are the effects of signal level and SNR on cortical electrophysiology?

Question #2: What are the effects of signal level and SNR on perception in noise?

The first statistical approach focused on the effects of signal level and SNR on CAEPs and perception within each group. A separate linear mixed model was fit for each participant group (i.e., ONH and OHI). The linear mixed model generalizes the conventional repeated-measures ANOVA to allow a covariance of arbitrary complexity across measurements from the same participant. Because it is fit using maximum likelihood methods, the linear mixed model also has the advantage of using all measurements, not just those from participants who provided valid measures under all stimulus conditions. This approach addressed Questions #1 and #2 above.
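In our own notation (a sketch, not the authors' exact specification), the within-group model for participant $i$ can be written as

$$
\mathbf{y}_i = \mathbf{X}_i\boldsymbol{\beta} + \boldsymbol{\varepsilon}_i, \qquad \boldsymbol{\varepsilon}_i \sim \mathcal{N}\left(\mathbf{0}, \boldsymbol{\Sigma}_i\right),
$$

where $\mathbf{y}_i$ stacks the $n_i$ conditions actually measured for that participant, $\mathbf{X}_i$ holds the SNR, signal level, and SNR × level indicator columns for those conditions, and $\boldsymbol{\Sigma}_i$ is the $n_i \times n_i$ submatrix of a single unrestricted covariance matrix $\boldsymbol{\Sigma}$ over all conditions. Maximum likelihood estimates maximize

$$
\ell(\boldsymbol{\beta}, \boldsymbol{\Sigma}) = -\frac{1}{2}\sum_{i=1}^{n}\left[n_i\log(2\pi) + \log\lvert\boldsymbol{\Sigma}_i\rvert + (\mathbf{y}_i - \mathbf{X}_i\boldsymbol{\beta})^{\top}\boldsymbol{\Sigma}_i^{-1}(\mathbf{y}_i - \mathbf{X}_i\boldsymbol{\beta})\right].
$$

Each participant contributes only the terms for conditions with valid measures, so a missing condition shortens $\mathbf{y}_i$ rather than removing the participant. Roughly, the univariate repeated-measures ANOVA corresponds to the special case of complete data and a compound-symmetric $\boldsymbol{\Sigma}$.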

Question #3: What are the effects of age and hearing impairment on cortical electrophysiology?

Question #4: What are the effects of age and hearing impairment on perception in noise?

The second statistical approach addressed potential group differences in electrophysiology and behavior. For these analyses, YNH data from the previous paper (Billings et al., 2013) were included. This approach combines all three groups in each analysis and uses the linear mixed model methodology with a group indicator as an additional independent variable. This second approach addressed Questions #3 and #4 above.

Question #5: How well does the YNH-prediction model work for older groups?

Question #6: Does a more specific model result in better predictions for the OHI group?

The third statistical approach established how well electrophysiological measures predicted behavioral performance on the speech-in-noise test. Initially, the partial least squares (PLS) model established previously for YNH individuals (Billings et al., 2013) was used to determine the best possible prediction given the combination of latency and amplitude measures. Subsequently, an additional model specific to older individuals was created. This approach addressed Questions #5 and #6 above.
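The prediction step can be illustrated with a generic single-response PLS (PLS1) regression fit by the NIPALS algorithm in NumPy. This is not the authors' model: the predictor set (e.g., CAEP latencies, amplitudes, and area as columns of `X`, with SNR50 as `y`), predictor standardization, and the number of components are assumptions. Applying a YNH-derived model to older listeners corresponds to calling `fit` on YNH rows and `predict` on ONH or OHI rows; a group-specific model would instead be fit on that group's own data.

```python
import numpy as np


class PLS1:
    """Single-response partial least squares regression (NIPALS algorithm)."""

    def __init__(self, n_components: int):
        self.n_components = n_components

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Standardize predictors (mixed units: ms, uV, uV*ms) and center the response.
        self.x_mean, self.x_std = X.mean(axis=0), X.std(axis=0)
        self.y_mean = y.mean()
        Xk = (X - self.x_mean) / self.x_std
        yk = y - self.y_mean
        W, P, q = [], [], []
        for _ in range(self.n_components):
            w = Xk.T @ yk
            w /= np.linalg.norm(w)      # weights: direction of max covariance with y
            t = Xk @ w                  # latent scores
            tt = t @ t
            p = Xk.T @ t / tt           # X loadings
            c = (yk @ t) / tt           # y loading
            Xk = Xk - np.outer(t, p)    # deflate X and y before the next component
            yk = yk - c * t
            W.append(w)
            P.append(p)
            q.append(c)
        W, P, q = np.column_stack(W), np.column_stack(P), np.array(q)
        # Coefficients for standardized predictors: B = W (P^T W)^-1 q
        self.coef = W @ np.linalg.solve(P.T @ W, q)
        return self

    def predict(self, X):
        Xs = (np.asarray(X, dtype=float) - self.x_mean) / self.x_std
        return Xs @ self.coef + self.y_mean


def mean_absolute_error(y_true, y_pred) -> float:
    """Average absolute prediction error (in dB when y is an SNR50)."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))
```

With as many components as linearly independent predictors, PLS1 reproduces ordinary least squares; fewer components restrict the fit to a low-dimensional subspace, which is useful when CAEP measures are strongly intercorrelated.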

RESULTS

Effects of SNR and Signal Level

Electrophysiology

The first question focused on the effects of SNR and signal level on electrophysiology measures. Supplementary Appendix 1 includes mean latencies and amplitudes for ONH and OHI data in this study; in addition, YNH measures from our previous work (Billings et al., 2013) are included for comparison purposes. Table 2 shows the statistical results for the ONH and OHI groups. At an alpha of .05, both groups demonstrated significant main effects of SNR on almost all latencies and amplitudes. Main effects of signal level were limited to the OHI group and were significant for N1 latency at electrode Cz, N1 amplitude at GFP, area at Cz, and area at GFP. As level increased for the OHI group, N1 latency decreased while area and amplitude measures increased. These changes are consistent with more robust neural encoding of the auditory signal, probably due to improved audibility. Significant SNR by level interactions were limited to N1 and P2 latency at electrode Cz for ONH, and P1 latency at Cz for OHI.

Table 2.

Statistical analysis of SNR and signal level on amplitudes, latencies, and area (50-550 ms) for Cz and GFP waveforms for the ONH and OHI groups (significance at alpha 0.05 in bold).

| Group | Waveform | Metric | Peak | SNR: F (df) | SNR: p | Level: F (df) | Level: p | SNR × Level: F (df) | SNR × Level: p |
|---|---|---|---|---|---|---|---|---|---|
| OHI | Cz | Latency | P1 | 51.9 (4, 54.9) | **<0.001** | 1.4 (2, 34.0) | 0.265 | 2.7 (5, 55.0) | **0.032** |
| | | | N1 | 69.4 (4, 70.1) | **<0.001** | 4.7 (2, 46.3) | **0.014** | 0.4 (5, 72.9) | 0.861 |
| | | | P2 | 20.0 (4, 38.7) | **<0.001** | 1.2 (2, 16.9) | 0.324 | 0.9 (5, 40.3) | 0.467 |
| | | | N2 | 5.2 (4, 38.7) | **0.002** | 0.4 (2, 18.8) | 0.695 | 0.5 (5, 35.3) | 0.810 |
| | | Amplitude | P1 | 2.0 (4, 37.4) | 0.119 | 1.3 (2, 127.1) | 0.267 | 1.7 (5, 127.1) | 0.143 |
| | | | N1 | 12.8 (4, 29.5) | **<0.001** | 1.4 (2, 117.0) | 0.258 | 1.7 (5, 117.0) | 0.132 |
| | | | P2 | 6.3 (4, 38.0) | **<0.001** | 1.5 (2, 99.1) | 0.235 | 0.9 (5, 99.0) | 0.466 |
| | | | N2 | 1.3 (4, 54.1) | 0.289 | 3.0 (2, 113.4) | 0.055 | 0.5 (5, 113.7) | 0.759 |
| | | Area | 50-550 ms | 23.1 (4, 26.1) | **<0.001** | 3.6 (2, 118.3) | **0.031** | 1.0 (5, 118.3) | 0.427 |
| | GFP | Latency | N1 | 72.7 (4, 63.2) | **<0.001** | 1.5 (2, 34.9) | 0.237 | 0.5 (5, 64.4) | 0.741 |
| | | | P2 | 6.7 (4, 10.1) | **0.007** | 0.8 (2, 4.4) | 0.521 | 1.4 (5, 12.9) | 0.285 |
| | | Amplitude | N1 | 11.7 (4, 26.3) | **<0.001** | 3.1 (2, 106.3) | **0.050** | 1.1 (5, 106.2) | 0.386 |
| | | | P2 | 9.4 (4, 45.6) | **<0.001** | 0.7 (2, 64.3) | 0.489 | 1.7 (5, 66.3) | 0.138 |
| | | Area | 50-550 ms | 18.5 (4, 27.6) | **<0.001** | 5.7 (2, 122.6) | **0.004** | 1.7 (5, 122.6) | 0.147 |
| ONH | Cz | Latency | P1 | 25.6 (4, 60.4) | **<0.001** | 0.5 (3, 35.6) | 0.680 | 0.8 (6, 68.3) | 0.602 |
| | | | N1 | 45.6 (4, 72.0) | **<0.001** | 0.4 (3, 43.7) | 0.756 | 2.3 (6, 81.0) | **0.045** |
| | | | P2 | 22.8 (4, 68.2) | **<0.001** | 2.7 (3, 39.0) | 0.058 | 3.1 (6, 66.0) | **0.009** |
| | | | N2 | 6.4 (4, 56.8) | **<0.001** | 0.5 (3, 35.3) | 0.676 | 1.4 (6, 55.0) | 0.228 |
| | | Amplitude | P1 | 5.2 (4, 48.8) | **0.001** | 0.3 (3, 124.1) | 0.845 | 0.9 (6, 123.9) | 0.471 |
| | | | N1 | 14.3 (4, 29.1) | **<0.001** | 0.6 (3, 139.2) | 0.594 | 1.7 (6, 139.2) | 0.122 |
| | | | P2 | 21.9 (4, 38.5) | **<0.001** | 1.8 (3, 119.0) | 0.151 | 0.9 (6, 116.3) | 0.481 |
| | | | N2 | 5.2 (4, 37.6) | **0.002** | 0.6 (3, 111.7) | 0.590 | 0.9 (6, 108.3) | 0.467 |
| | | Area | 50-550 ms | 17.8 (4, 25.7) | **<0.001** | 0.6 (3, 143.7) | 0.641 | 1.7 (6, 143.7) | 0.120 |
| | GFP | Latency | N1 | 39.7 (4, 62.1) | **<0.001** | 0.4 (3, 36.4) | 0.731 | 1.4 (6, 74.1) | 0.217 |
| | | | P2 | 17.8 (4, 46.0) | **<0.001** | 0.3 (3, 24.5) | 0.830 | 0.2 (6, 47.3) | 0.977 |
| | | Amplitude | N1 | 12.5 (4, 26.2) | **<0.001** | 1.3 (3, 128.5) | 0.268 | 1.1 (6, 128.6) | 0.368 |
| | | | P2 | 15.0 (4, 29.2) | **<0.001** | 2.4 (3, 95.2) | 0.070 | 1.0 (6, 95.1) | 0.418 |
| | | Area | 50-550 ms | 12.6 (4, 24.9) | **<0.001** | 0.2 (3, 144.4) | 0.919 | 0.6 (6, 144.4) | 0.766 |

SNR-signal-to-noise ratio, ONH-old normal hearing, OHI-old hearing impaired, df-degrees of freedom, ms-millisecond

Perception in Noise

Mean accuracy across SNRs and mean SNR50s are shown by group in Supplementary Appendix 2. Test-time constraints sometimes prevented collection of the −10-dB condition. In addition, one YNH participant was familiar with some of the IEEE lists; data from those lists were excluded from the analysis. Similar to the electrophysiology results, statistical analysis of sentence-level perception revealed main effects of SNR for both groups (ONH: F(6,283) = 612.7, p < .001; OHI: F(6,159) = 251.5, p < .001). In addition, significant main effects of signal level (ONH: F(3,283) = 6.09, p < .001; OHI: F(1,159) = 219, p < .001) and significant SNR by level interactions (ONH: F(12,283) = 4.95, p < .001; OHI: F(4,159) = 416.7, p < .001) were found for both groups. Follow-up tests were conducted to characterize the interaction between SNR and signal level. Pairwise level comparisons at each SNR revealed significance at an alpha of .05 for the ONH group at −5-dB SNR (60 versus 80 dB signal level, p < .0001; 70 versus 80 dB signal level, p < .0001), 0-dB SNR (60 versus 80 dB signal level, p < .0001; 70 versus 80 dB signal level, p < .0001), 5-dB SNR (60 versus 80 dB signal level, p = .0001; 70 versus 80 dB signal level, p < .0001), and 15-dB SNR (60 versus 70 dB signal level, p = .0093; 60 versus 80 dB signal level, p = .0358). However, with a Bonferroni-corrected alpha of .003 (.05/16 post hoc comparisons), the two 15-dB SNR comparisons would not be considered significant. For the OHI group, only the 70- and 80-dB signal levels were compared because of potential inaudibility at lower levels. Differences at a .05 alpha level were found at −10-dB SNR (p < .0001), 0-dB SNR (p = .0336), 5-dB SNR (p = .0071), 15-dB SNR (p = .03), and 25-dB SNR (p < .0002). A Bonferroni-corrected alpha of .008 (.05/6 post hoc comparisons) would render the 0- and 15-dB SNR comparisons nonsignificant.
Notably, every post hoc comparison that remained significant after Bonferroni correction involved the 80-dB signal level, and these comparisons usually occurred at poorer SNRs. These results are generally consistent with effects seen in YNH individuals (Billings et al., 2013).
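The Bonferroni decisions reported above can be checked with a few lines of Python. The helper name is hypothetical; p-values reported as "p < x" are entered at their bound x, which does not change any decision here because each bound already falls below the corrected alpha.

```python
def bonferroni(p_values: dict[str, float], n_comparisons: int, alpha: float = 0.05):
    """Return the corrected alpha and which comparisons fall below it."""
    corrected = alpha / n_comparisons
    return corrected, {name: p < corrected for name, p in p_values.items()}


# OHI, 70- vs. 80-dB signal level, p-values as reported above.
ohi_p = {"-10 dB": 0.0001, "0 dB": 0.0336, "5 dB": 0.0071, "15 dB": 0.03, "25 dB": 0.0002}
alpha_ohi, sig_ohi = bonferroni(ohi_p, n_comparisons=6)
print(round(alpha_ohi, 4), sig_ohi)
# 0.0083 {'-10 dB': True, '0 dB': False, '5 dB': True, '15 dB': False, '25 dB': True}

# ONH, the two 15-dB SNR comparisons, out of sixteen post hoc tests.
onh_15_p = {"60 vs 70 dB": 0.0093, "60 vs 80 dB": 0.0358}
alpha_onh, sig_onh = bonferroni(onh_15_p, n_comparisons=16)
print(alpha_onh, sig_onh)
# 0.003125 {'60 vs 70 dB': False, '60 vs 80 dB': False}
```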

Effects of Group (hearing impairment and age)

Electrophysiology

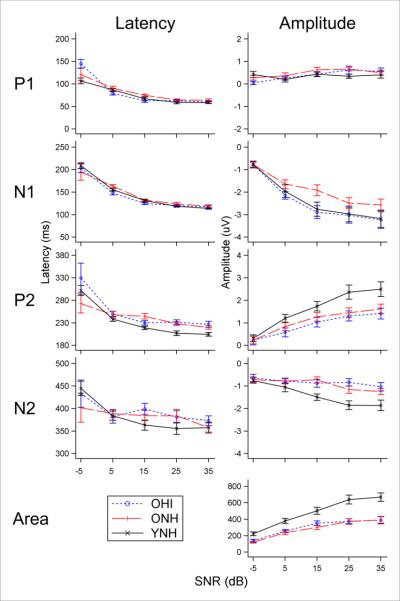

Figure 1 illustrates grand mean CAEP waveforms at Cz for all conditions tested, with the three groups overlaid: YNH (black), ONH (red dashed), and OHI (blue dotted). Generally, the YNH group demonstrates the most robust waveform morphology (i.e., large amplitudes and short latencies). The 80-dB signal level growth functions for the different components are shown in Figure 2 and reveal shorter latencies and larger amplitudes for YNH than for the older groups. To contrast SNR effects among groups, we fit the linear mixed model to the electrophysiological measures using main effects of group, SNR, and signal level, along with group × SNR and group × signal level interactions. Because the model contains no SNR × level term, it induces parallel growth functions across levels within each group, so an omnibus test of differences in SNR effects between groups rests solely on the SNR × group interaction. Results in Table 3 show that group effects were seen only for age: for P2 and N2 amplitudes at electrode Cz and for area measures at both Cz and GFP (note that P1 and N2 could not be measured in the GFP waveform). This effect can be seen in Figure 1, which shows larger and broader N2 negativities for YNH. The effect of hearing impairment was not seen statistically in these electrophysiological data.
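In our own notation (a sketch of the fixed-effects structure described, not the authors' exact specification), the group model for participant $i$ in group $g$ at SNR $s$ and signal level $l$ is

$$
y_{igsl} = \mu + \alpha_g + \beta_s + \gamma_l + (\alpha\beta)_{gs} + (\alpha\gamma)_{gl} + \varepsilon_{igsl},
$$

with errors correlated within participant and the usual identifiability constraints. Within a group, the difference between levels $l$ and $l'$ is $\gamma_l - \gamma_{l'} + (\alpha\gamma)_{gl} - (\alpha\gamma)_{gl'}$, which does not depend on $s$; this is what makes the SNR growth functions parallel across levels. The omnibus group-by-SNR test for two groups $g$ and $g'$ asks whether $(\alpha\beta)_{gs} - (\alpha\beta)_{g's}$ is constant across the five electrophysiology SNRs, a hypothesis with $(2-1)(5-1) = 4$ numerator degrees of freedom, matching the numerator df of 4.0 in Table 3.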

Figure 1.

Grand mean electrophysiology waveforms (n = 15 for each group) for all 14 conditions tested. Robust morphology effects of SNR are evident, while more subtle signal level effects are not as clear. Waveforms for each group are overlaid to show group differences in waveform morphology across SNR and signal level. Generally, waveforms are more robust for YNH (solid black line) than for ONH (red dashed line) and OHI (dotted blue line) groups. Differences between older groups vary depending on the peak, but generally show larger ONH P2 amplitudes and larger OHI N1 amplitudes.

Figure 2.

Growth functions of electrophysiology measures (P1, N1, P2, N2, and area) as a function of group at an 80-dB signal level. Peak latencies (left) are generally similar across groups, while peak amplitudes (right) for YNH (solid black) are generally larger than for ONH (dashed red) and OHI (dotted blue) groups, except for N1 amplitude, for which OHI appears to have a larger peak than ONH, similar to YNH.

Table 3.

Effects of hearing impairment and age on electrophysiological measures (– indicates a peak that could not be measured in the GFP waveform).

| Group comparison | Metric | Peak | Cz: F (df) | Cz: p | GFP: F (df) | GFP: p |
|---|---|---|---|---|---|---|
| Hearing impairment (ONH vs. OHI) | Latency | P1 | 2.04 (4.0, 205.2) | 0.090 | – | – |
| | | N1 | 2.11 (4.0, 215.0) | 0.081 | 2.49 (4.0, 211.0) | 0.045 |
| | | P2 | 1.46 (4.0, 197.4) | 0.215 | 1.54 (4.0, 185.1) | 0.178 |
| | | N2 | 1.49 (4.0, 195.0) | 0.207 | – | – |
| | Amplitude | P1 | 0.54 (4.0, 144.9) | 0.706 | – | – |
| | | N1 | 0.28 (4.0, 95.15) | 0.889 | 1.12 (4.0, 86.7) | 0.352 |
| | | P2 | 0.23 (4.0, 115.0) | 0.921 | 1.71 (4.0, 140.4) | 0.152 |
| | | N2 | 0.71 (4.0, 167.6) | 0.585 | – | – |
| | Area | 50-550 ms | 0.18 (4.0, 87.3) | 0.947 | 0.47 (4.0, 94.5) | 0.758 |
| Age (ONH vs. YNH) | Latency | P1 | 0.51 (4.0, 207.2) | 0.726 | – | – |
| | | N1 | 0.27 (4.0, 216.0) | 0.897 | 1.53 (4.0, 211.7) | 0.196 |
| | | P2 | 0.87 (4.0, 199.2) | 0.482 | 0.46 (4.0, 183.3) | 0.763 |
| | | N2 | 0.95 (4.0, 196.3) | 0.438 | – | – |
| | Amplitude | P1 | 2.38 (4.0, 131.0) | 0.060 | – | – |
| | | N1 | 1.17 (4.0, 89.8) | 0.328 | 0.48 (4.0, 82.2) | 0.749 |
| | | P2 | 2.50 (4.0, 97.5) | 0.047 | 1.54 (4.0, 98.2) | 0.198 |
| | | N2 | 4.06 (4.0, 141.3) | 0.004 | – | – |
| | Area | 50-550 ms | 3.89 (4.0, 84.3) | 0.006 | 13.50 (4.0, 90.6) | <0.001 |

ONH-old normal hearing, OHI-old hearing impaired, YNH-young normal hearing, GFP-global field power, df-degrees of freedom, ms-millisecond

Differences between groups at specific signal levels were also of interest, given the limited data on age effects available in the literature (Kim et al., 2012; McCullagh & Shinn, 2013). Estimates of the age and hearing impairment differences in electrophysiological measures at each level and SNR were derived from the fitted linear mixed model described above. Using a Bonferroni-corrected alpha level of .0035 (.05/14 conditions), no hearing impairment comparisons were significant. Age comparisons revealed universal differences (i.e., in all 14 conditions) for Cz and GFP area measures, as well as N2 amplitude differences at the 70-dB signal level for SNRs of 15 and 25 dB and at the 80-dB signal level for an SNR of 15 dB. YNH areas and amplitudes were larger than those of ONH, an effect that is apparent in Figure 1. Age effects on latency were significant only for P2 at signal levels of 70 and 80 dB when the SNR was 25 dB.
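The Bonferroni criterion divides the family-wise alpha by the number of comparisons. The Python sketch below shows that arithmetic; the function names are illustrative, and the p-values in the example are hypothetical rather than values from this study.

```python
def bonferroni_alpha(family_alpha: float, n_comparisons: int) -> float:
    """Per-comparison alpha that holds the family-wise error rate at family_alpha."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return family_alpha / n_comparisons


def bonferroni_significant(p_values: list[float], family_alpha: float = 0.05) -> list[bool]:
    """Flag p-values below the Bonferroni-corrected alpha for this family of tests."""
    alpha = bonferroni_alpha(family_alpha, len(p_values))
    return [p < alpha for p in p_values]


# One comparison per level-by-SNR condition (14 conditions)
print(round(bonferroni_alpha(0.05, 14), 5))  # 0.00357

# Hypothetical p-values for the 14 conditions (illustration only)
example_p = [0.001, 0.004, 0.02] + [0.5] * 11
print(bonferroni_significant(example_p))
```

Because .05/14 is approximately .00357, the .0035 criterion is that value rounded down, which is marginally more conservative.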

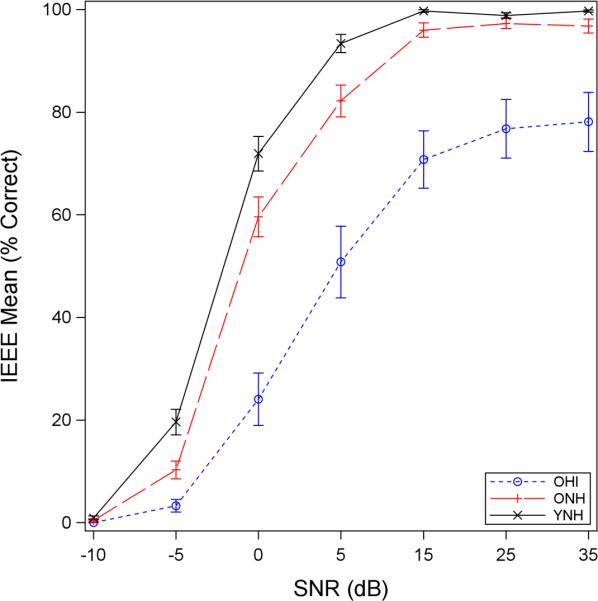

Perception in Noise

The effects of hearing impairment and age on sentence perception were estimated by separate group contrasts (i.e., hearing impairment: ONH vs. OHI; age: YNH vs. ONH) derived from the linear mixed model. Mean psychometric functions are shown in Figure 3 for all three groups at a signal level of 80 dB. Statistical results, shown in Table 4, demonstrate significant effects of hearing impairment and age across multiple levels. Hearing impairment effects on SNR50s were estimated to be about 12 dB; that is, the 50% point (i.e., SNR50) on the OHI psychometric function (see Figure 3) is 12 dB to the right of the 50% point on the ONH psychometric function. Age effects on SNR50s were approximately 2 dB; in other words, SNR50s were larger, or shifted to the right, by 2 dB for ONH relative to YNH individuals.
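The SNR50 shift described above can be illustrated by locating the 50% point on two measured psychometric functions. The sketch below uses linear interpolation between the two measured SNRs that bracket 50% correct; this is a simplified, swapped-in technique rather than the linear mixed model used in this study, and all scores are hypothetical.

```python
def snr50(snrs: list[float], scores: list[float], criterion: float = 50.0) -> float:
    """Estimate the SNR (dB) at which percent-correct first reaches `criterion`.

    Linear interpolation between adjacent measured points; assumes scores
    generally increase with SNR.
    """
    points = sorted(zip(snrs, scores))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 <= criterion <= y1 and y1 > y0:
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("criterion is not bracketed by the measured scores")


# Hypothetical percent-correct scores (not data from this study)
onh = snr50([-10, -5, 0, 5, 15], [2, 20, 60, 90, 99])  # -1.25 dB
ohi = snr50([-5, 0, 5, 15, 25], [1, 5, 30, 70, 95])    # 10.0 dB
print(ohi - onh)  # 11.25: the OHI function lies 11.25 dB to the right
```

A fitted sigmoid would use all points rather than only the two nearest the criterion, but interpolation makes the meaning of a rightward SNR50 shift easy to see.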

Figure 3.

Sentence-level psychometric functions at an 80-dB signal level. YNH (solid black) show the best performance followed by ONH (dashed red) and then OHI (dotted blue) groups. The magnitude of hearing impairment effects at 50% (ONH vs. OHI) was about five to six times greater than the magnitude of age effects (YNH vs. ONH).

Table 4.

Behavioral sentence perception as a function of signal level and group comparisons (hearing impairment and age)

| Group comparison | Signal level (dB) | Estimate (dB) | Standard error (dB) | t-statistic (df) | P-value |
|---|---|---|---|---|---|
| Hearing impairment (ONH vs. OHI) | 70 | −12.2100 | 2.1031 | −5.81 (75) | <.0001 |
| | 80 | −12.4752 | 2.1031 | −5.93 (75) | <.0001 |
| Age (ONH vs. YNH) | 60 | −2.7129 | 0.5668 | −4.79 (75) | <.0001 |
| | 70 | −1.5906 | 0.5668 | −2.81 (75) | 0.0064 |
| | 80 | −2.1954 | 0.5668 | −3.87 (75) | 0.0002 |

dB-decibel, df-degrees of freedom, ONH-older normal hearing, OHI-older hearing impaired, YNH-young normal hearing

Electrophysiology as a Predictor of Perception

Pearson product-moment correlations were computed between electrophysiological and behavioral data. Table 5 shows the top five correlations for each group and behavioral signal level. Absolute values of the correlation coefficients (r) ranged from .53 to .80, depending on the CAEP measure and the level/SNR condition used.
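Ranking candidate CAEP measures by the absolute value of their Pearson correlation with SNR50, as in Table 5, can be sketched as follows. The measure names and values are hypothetical, and the dependency-free implementation is for illustration only; a statistics library would normally be used in practice.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def top_predictors(measures: dict[str, list[float]], snr50s: list[float], k: int = 5):
    """Rank CAEP measures by |r| with behavioral SNR50s and keep the top k."""
    scored = [(name, pearson_r(values, snr50s)) for name, values in measures.items()]
    scored.sort(key=lambda item: abs(item[1]), reverse=True)
    return scored[:k]


# Hypothetical per-listener values (illustration only)
snr50s = [1.0, 2.0, 3.0, 4.0]
measures = {
    "Cz P2 amplitude": [4.0, 3.0, 2.0, 1.0],  # r = -1.0
    "GFP N1 latency": [1.0, 3.0, 2.0, 4.0],   # r = 0.8
}
for name, r in top_predictors(measures, snr50s):
    print(f"{name}: r = {r:.2f}")
```

Ranking by |r| keeps strong negative correlations, such as the amplitude measures in Table 5, alongside positive ones.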

Table 5.

Best predictors of behavioral SNR50, ranked by absolute Pearson correlation, for the OHI and ONH groups

| Group | Behavioral signal level (dB) | CAEP signal level (dB) | CAEP SNR (dB) | CAEP measure | Correlation coefficient (r) |
|---|---|---|---|---|---|
| OHI | 70 | 60 | 15 | GFP N1 latency | 0.69 |
| | | 60 | 15 | GFP P2 latency | 0.67 |
| | | 60 | 15 | Cz N1 latency | 0.66 |
| | | 70 | 5 | GFP P2 latency | 0.63 |
| | | 60 | 5 | GFP N1 latency | 0.62 |
| | 80 | 60 | 15 | GFP N1 latency | 0.76 |
| | | 70 | −5 | Cz N1 amplitude | 0.74 |
| | | 60 | 5 | Cz P1 latency | 0.73 |
| | | 60 | 15 | Cz N1 latency | 0.72 |
| | | 50 | 5 | GFP N1 latency | 0.65 |
| ONH | 50 | 70 | 15 | GFP P2 latency | 0.64 |
| | | 60 | −5 | GFP N1 amplitude | −0.62 |
| | | 60 | 5 | GFP P2 latency | −0.58 |
| | | 70 | 15 | Cz N2 amplitude | −0.54 |
| | | 80 | 15 | GFP P2 amplitude | −0.53 |
| | 60 | 80 | 15 | Cz P2 amplitude | −0.75 |
| | | 50 | −5 | GFP N1 latency | 0.71 |
| | | 60 | 15 | Cz P2 amplitude | −0.69 |
| | | 70 | 15 | Cz P2 amplitude | −0.68 |
| | | 80 | 5 | Cz N2 latency | 0.67 |
| | 70 | 70 | 25 | Cz P2 amplitude | −0.76 |
| | | 80 | 15 | Cz P2 amplitude | −0.72 |
| | | 80 | 5 | Cz P2 amplitude | −0.69 |
| | | 70 | 5 | Cz P2 amplitude | −0.69 |
| | | 70 | 15 | GFP P2 latency | 0.65 |
| | 80 | 80 | 15 | Cz P2 amplitude | −0.80 |
| | | 70 | 15 | GFP P2 latency | 0.76 |
| | | 70 | 5 | Cz P2 amplitude | −0.73 |
| | | 60 | 15 | GFP P2 latency | 0.72 |
| | | 50 | −5 | Cz N1 latency | 0.71 |

dB-decibel, OHI-older hearing impaired, ONH-older normal hearing, CAEP-cortical auditory evoked potentials, GFP-global field power

YNH-Model Predictions

The PLS model developed previously (Billings et al., 2013) was used to predict SNR50s in the ONH and OHI groups, with one exception: GFP N2 peak values were not included because N2 could not be identified in the GFP waveforms of all ONH and OHI individuals. Clinical application of the previously developed model would require all CAEP metrics to be present in order to generate a behavioral prediction; therefore, the YNH model was refit without GFP N2 and applied to all three groups. In addition, the −5-dB SNR condition was excluded because of very poor waveform morphology in older individuals. Figure 4 shows the accuracy of predictions for the three groups as a function of SNR and signal level. Table 6 shows root mean square prediction errors (RMSPEs) of PLS-model predictions of SNR50s measured at the 80-dB signal level. Notably, RMSPEs for the YNH group are within 0.25 dB of values reported previously (compare Table 6 herein with Table 3 from Billings et al., 2013). Despite excluding GFP N2 and the −5-dB SNR condition from the model, clinical application may still be difficult because other CAEP values are missing in some individuals; there are many more missing values for ONH and OHI individuals than for YNH individuals (see Supplementary Appendix 1). Therefore, a second model was developed in YNH listeners that included only area measures, which are available for all participants regardless of waveform morphology. These area-based models yielded RMSPEs that were generally only slightly larger than those of models that included multiple peak measures (Table 6).
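The RMSPE summarizes how far predicted SNR50s fall from measured SNR50s, that is, from the identity line in Figure 4. A minimal Python sketch, using hypothetical values for three listeners:

```python
import math


def rmspe(predicted: list[float], measured: list[float]) -> float:
    """Root mean square prediction error (same units as the inputs, e.g. dB SNR)."""
    if not measured or len(predicted) != len(measured):
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical predicted and measured SNR50s (dB)
predicted = [-2.0, 0.5, 1.0]
measured = [-1.0, 0.5, 3.0]
print(round(rmspe(predicted, measured), 3))  # 1.291
```

Because errors are squared before averaging, a few large misses inflate the RMSPE more than many small ones.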

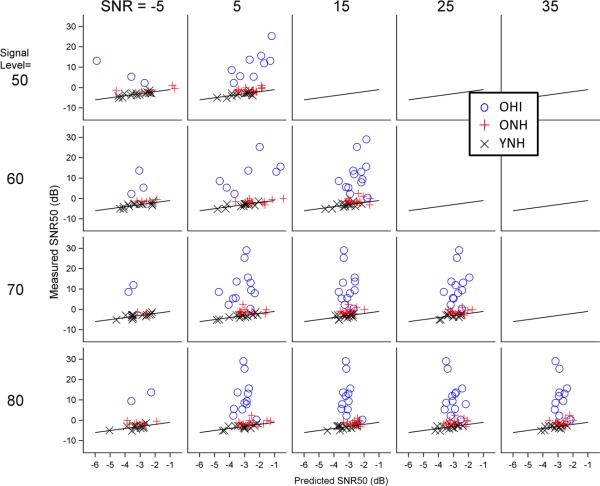

Figure 4.

Accuracy of electrophysiology-based predictions of behavior. Predicted SNR50s are plotted against measured SNR50s for YNH (black x), ONH (red +), and OHI (blue o) for all SNR and signal level conditions. Estimates are based on the model created from YNH data. The solid black line marks perfect prediction (predicted equals measured); deviations from this line were used to calculate the root mean square prediction error. Predictions are relatively good for the YNH and ONH groups and quite poor for the OHI group.

Table 6.

Root mean square prediction errors (RMSPEs) of partial least squares (PLS) regression predictions from CAEPs of behavioral SNR50s at the 80-dB signal level, for the OHI, ONH, and YNH groups

| Group | YNH model, all peaks: signal level (dB) | SNR (dB) | RMSPE (dB) | YNH model, area only: signal level (dB) | SNR (dB) | RMSPE (dB) | OHI model, all peaks: signal level (dB) | SNR (dB) | RMSPE (dB) | OHI model, area only: signal level (dB) | SNR (dB) | RMSPE (dB) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OHI | 70 | 25 | 16.268 | 70 | 5 | 16.778 | 70 | 25 | 6.786 | 70 | 5 | 6.984 |
| | 80 | 25 | 16.576 | 80 | 15 | 16.418 | 80 | 35 | 6.621 | 80 | 5 | 6.860 |
| ONH | 70 | 25 | 1.771 | 70 | 15 | 2.897 | | | | | | |
| | 80 | 15 | 1.755 | 80 | 25 | 2.527 | | | | | | |
| YNH | 70 | 5 | 0.586 | 70 | 5 | 0.754 | | | | | | |
| | 80 | 5 | 0.733 | 80 | 5 | 0.675 | | | | | | |

All peaks: Cz-P1, Cz-N1, Cz-N2, Cz-P2, Cz-Area, GFP-N1, GFP-Area

Area only: Cz-Area and GFP-Area

CAEP-cortical auditory evoked potentials, dB-decibel, OHI-older hearing impaired, ONH-older normal hearing, YNH-young normal hearing, SNR-signal-to-noise ratio, RMSPE-root mean square prediction error, GFP-global field power

OHI-Specific Model

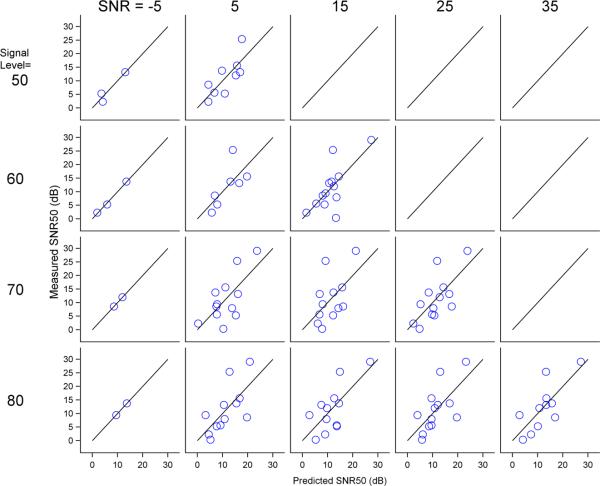

An examination of RMSPEs in Table 6 shows that SNR50 predictions are within 1 dB of behavior for YNH, within about 2 dB for ONH, and within about 16 dB for OHI. In an attempt to reduce prediction error for OHI individuals, an OHI-based model was developed using partial least squares. Prediction error within the sample was computed using the predicted residual sum of squares (PRESS) statistic, which provides a less biased estimate of prediction accuracy within a sample (Hastie et al., 2009). The right half of Table 6 shows RMSPEs for the OHI group based on the model derived from OHI individuals. The OHI-based model reduced RMSPEs by about 10 dB, to between about 6.6 and 7.0 dB. Figure 5 shows the accuracy of predictions for the OHI-specific model.
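PRESS is a leave-one-out procedure: each listener's SNR50 is predicted from a model fit without that listener, and the squared prediction errors are summed. The sketch below illustrates the idea with ordinary simple linear regression on one hypothetical CAEP predictor; it is not the multivariate PLS model used here, for which the same loop would refit the PLS model at each step. All function names and data are illustrative.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("x must vary")
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sxx
    return mean_y - slope * mean_x, slope


def residual_sum_of_squares(x: list[float], y: list[float]) -> float:
    """In-sample RSS: the model is fit to, and scored on, the same points."""
    a, b = fit_line(x, y)
    return sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))


def press(x: list[float], y: list[float]) -> float:
    """PRESS: each point is predicted from a fit that leaves that point out."""
    total = 0.0
    for i in range(len(x)):
        a, b = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        total += (y[i] - (a + b * x[i])) ** 2
    return total


# Hypothetical CAEP measure (x) and SNR50s in dB (y) for five listeners
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 1.0, 4.0, 3.0, 6.0]
print(residual_sum_of_squares(x, y), press(x, y))
print("PRESS-based RMSPE:", (press(x, y) / len(x)) ** 0.5)
```

For least-squares fits, PRESS is never smaller than the in-sample residual sum of squares, which is why it gives a less optimistic estimate of how well the model would predict new listeners.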

Figure 5.

Accuracy of electrophysiology-based predictions of behavior for the OHI group using a model specific to OHI. Predicted SNR50s are plotted against measured SNR50s. The solid black line marks perfect prediction (predicted equals measured); deviations from this line were used to calculate the root mean square prediction error. Predictions are better with the OHI-specific model than with the YNH model (Figure 4).

DISCUSSION

The purpose of this study was to determine the effects of SNR, signal level, hearing impairment, and age on electrophysiology and behavior. In addition, the experiment was designed to help determine the relationship between electrophysiological and behavioral data in older individuals.

Signal-to-Noise Ratio and Signal Level

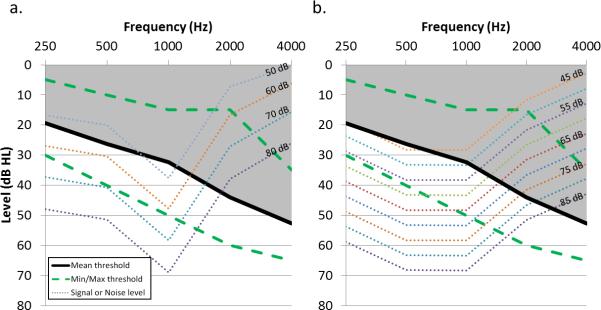

Electrophysiological and behavioral results from this study generally confirm those found previously: large effects of SNR and limited, small effects of signal level. It is worth noting that signal level effects are not universally seen in electrophysiological data, especially when both SNR and signal level are varied (Billings et al., 2009, 2013). The signal level effects in the current dataset were isolated to the OHI group. To determine whether signal audibility may have contributed to these results, signal levels at 250, 500, 1000, 2000, and 4000 Hz (measured in dB SPL for 1/3-octave bands) were converted to dB HL and compared to participant hearing thresholds. On average, for a signal level of 50 dB, signal content at 250 and 500 Hz was not audible to OHI participants. For signal levels of 60, 70, and 80 dB, stimulus content from 250 to 1000 Hz was suprathreshold on average; however, at 60 dB, some stimulus content between 250 and 1000 Hz was inaudible for a third of OHI individuals. Therefore, inaudibility of portions of the /ba/ signal at lower signal levels likely degraded waveform morphology and decreased behavioral performance, contributing to the signal level effects found in the OHI group. Figure 6 illustrates the relationship between average thresholds and signal (Figure 6a) and noise (Figure 6b) levels. Regarding the absence of signal level effects for the ONH group, participants perceived the stimuli as louder as signal level increased; therefore, it is reasonable to assume that signal level is encoded to some extent, but that its electrophysiological correlate is perhaps obscured by the stronger SNR effects.
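The audibility check described above amounts to converting each 1/3-octave band level from dB SPL to dB HL and comparing it with the listener's threshold at that frequency. A minimal sketch follows; the function name and every number in the example are hypothetical, and in particular the SPL-to-HL offsets are placeholders, because the article does not report the conversion values used.

```python
def inaudible_bands(
    band_levels_spl: dict[int, float],
    thresholds_hl: dict[int, float],
    spl_to_hl_offsets: dict[int, float],
) -> list[int]:
    """Return frequencies (Hz) whose 1/3-octave band level falls below threshold.

    Each band level (dB SPL) is converted to dB HL by subtracting a
    frequency-specific offset, then compared with the listener's threshold.
    """
    inaudible = []
    for freq, level_spl in sorted(band_levels_spl.items()):
        level_hl = level_spl - spl_to_hl_offsets[freq]
        if level_hl < thresholds_hl[freq]:
            inaudible.append(freq)
    return inaudible


# ALL VALUES BELOW ARE HYPOTHETICAL placeholders, not calibration data or
# results from this study; real SPL-to-HL offsets depend on the transducer
# and the calibration standard used.
offsets = {250: 15.0, 500: 10.0, 1000: 5.0, 2000: 5.0, 4000: 10.0}
band_levels = {250: 40.0, 500: 42.0, 1000: 45.0, 2000: 38.0, 4000: 30.0}
thresholds = {250: 30.0, 500: 35.0, 1000: 30.0, 2000: 45.0, 4000: 60.0}
print(inaudible_bands(band_levels, thresholds, offsets))
```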

Figure 6.

Signal and noise audibility for the OHI group. Mean and min/max audiometric thresholds for the OHI group are shown (solid black and dashed green lines, respectively), together with 1/3-octave band levels of the signal (a) and noise (b) (dotted lines). The shaded area represents portions of the signal and noise that were not audible on average and likely explains the signal level effect found for the OHI group.

Behavioral performance is known to decrease at higher signal levels, an effect that has been attributed to spread of masking resulting in saturation in the cochlea; several studies have found that this decrement in performance, or rollover in the psychometric function, begins as signal levels exceed 70 dB (Studebaker et al., 1999; Summers & Molis, 2004; Hornsby et al., 2005). We also found significant decrements at higher levels, particularly when the 80-dB signal level was compared with lower levels and when poorer SNRs were used. These results are consistent with the cochlear saturation hypothesis. Interestingly, the decrements found here for sentence-level stimuli were not seen in word-in-noise testing conducted previously in OHI and ONH individuals (Penman et al., 2014). These mixed effects of signal level are likely due to the smaller effect size of signal level relative to that of SNR. For example, in the present study, scores usually varied by only 30 percentage points across a 30-dB range of signal levels, but by up to about 90 percentage points across a 30-dB range of SNRs.

Hearing Impairment and Age

The conditions tested in this study provided a matrix of signal levels and SNRs with which to determine hearing impairment and aging effects. Electrophysiological age effects (ONH vs. YNH) were present for some amplitude measures and for area measures (Table 3) and may be due to poorer neural synchrony associated with central effects of biological aging (Willott, 1991). These results are consistent with age-related signal-in-noise amplitude effects found previously (McCullagh & Shinn, 2013) but contrast with another study in which no amplitude effect was found (Kim et al., 2012). Aging effects on N1 and P2 latency have also been reported at certain levels and SNRs (Kim et al., 2012; McCullagh & Shinn, 2013); additional Bonferroni-corrected post hoc analyses of the current data confirmed only P2 latency age effects, at limited SNRs and signal levels. These specific differences may result from methodological differences such as ONH sample characteristics (e.g., audiogram, age) or stimulus parameters (e.g., presentation rate, speech vs. tone stimuli, conditions tested).

Hearing impairment effects (OHI vs. ONH) were not present in the electrophysiological data (Table 3). This may be due to heterogeneity in the etiology and/or degree of hearing impairment within the OHI group. Although auditory thresholds were controlled to the extent possible, variability was still larger than for ONH or YNH individuals (see Table 1); in addition, there may be variability in hearing ability within this group that pure-tone thresholds cannot capture. There is evidence from animal studies that aging and hearing impairment are accompanied by decreases in inhibitory neural firing; this release from inhibition is thought to compensate for decreased excitatory activity resulting from the effects of aging and hearing impairment (Frisina & Walton, 2006; Caspary et al., 2008). The lack of an electrophysiological effect of hearing impairment in the current data may be a result of greater release from inhibition in OHI individuals due to greater peripheral damage relative to ONH individuals. It is also possible that a hearing impairment effect would emerge if additional participants were tested. We are not aware of other CAEP-in-noise studies that have compared ONH and OHI individuals; thus, no data were available to guide sample size decisions regarding the effect of hearing impairment. Additional testing may be necessary to determine whether a hearing impairment effect is present.

Another important consideration is that the effective SNR may differ from the acoustic SNR; that is, because of an elevated threshold at a given frequency, an OHI individual may hear the signal while the background noise is inaudible. As a result, the effective SNR (i.e., the difference between the signal level and the threshold, when the threshold exceeds the noise level) may be smaller than the intended acoustic SNR (i.e., the difference between the signal level and the background noise level). For example, an individual may be presented with an 80-dB signal and a 45-dB background noise, for an acoustic SNR of 35 dB; however, if the listener's hearing threshold is 55 dB, the effective SNR will be only 25 dB. Three considerations are important to keep in mind: (1) effective SNRs will be equal to or less than the intended acoustic SNR, never larger; (2) effective SNRs are more likely to be smaller than acoustic SNRs when the intended SNR is large, because there is a larger range over which the signal may be audible while the noise is inaudible; and (3) on average, the effective SNR of OHI participants is smaller than that of ONH and YNH participants because of poorer audiometric thresholds. Note also that the spectral content of the signal (the syllable /ba/ or IEEE sentences) differs from that of the noise (continuous speech-spectrum noise), so effective SNRs vary as a function of frequency. To complicate matters further, CAEPs have been shown to be differentially sensitive to frequency content (Picton et al., 1978; Jacobson et al., 1992; Sugg & Polich, 1995; Alain et al., 1997; Agung et al., 2006). This suggests that deviations of the effective SNR from the intended SNR will have a greater or lesser effect on CAEPs depending on where in the frequency spectrum the deviations occur.
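The worked example of effective SNR can be written as a one-line rule: the signal level minus whichever is higher, the noise level or the threshold. The sketch below is a simplified illustration under stated assumptions (all levels on a common dB scale, no masking interactions); because signal and noise spectra differ, it would in practice be applied band by band. The function name is hypothetical.

```python
def effective_snr(signal_db: float, noise_db: float, threshold_db: float) -> float:
    """Effective SNR (dB) under a simple floor model.

    Assumes the signal is limited by whichever is higher, the noise level or the
    listener's threshold, with all three values expressed on a common dB scale.
    The result can never exceed the acoustic SNR (signal_db - noise_db).
    """
    floor_db = max(noise_db, threshold_db)
    return signal_db - floor_db


# Worked example from the text: 80-dB signal, 45-dB noise, 55-dB threshold
print(effective_snr(80, 45, 55))  # 25 (acoustic SNR is 35)

# Threshold below the noise level: effective SNR equals the acoustic SNR
print(effective_snr(80, 45, 30))  # 35
```

Taking the maximum of noise level and threshold is what guarantees consideration (1): the effective SNR can equal, but never exceed, the acoustic SNR.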

Behaviorally, hearing impairment effects on SNR50 were more than five times larger than aging effects (about 5.7 times at the 80-dB signal level and 7.7 times at 70 dB; see Table 4). These sentence-level hearing impairment and age effects are similar to word- and phoneme-level effects shown previously (Penman et al., 2014). The mismatch between electrophysiological and behavioral effects of hearing impairment is noteworthy given the magnitude of the behavioral effect of hearing impairment relative to age. This mismatch is likely explained in part by higher order cognitive contributions that are present in sentence-level perception but absent from obligatory, passive cortical encoding. To some extent, a mismatch would be expected between different levels of the auditory system assessed with distinct measurement techniques. Such a difference between measures may be problematic if the clinical purpose is to substitute an electrophysiological test for a behavioral one. However, if complementary information is desired, a physiological measure may help clarify why breakdowns in the perceptual process are occurring. With regard to behavioral data, Moore and colleagues (2014) described a similar situation in which pure-tone air conduction thresholds did not match performance on a digit perception task. As their data demonstrate, mismatches can generate hypotheses about why different outcome measures yield different results.
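The relative size of the hearing impairment and age effects can be checked directly from the Table 4 estimates at the two signal levels where both were estimated (70 and 80 dB). The Python sketch below recomputes these ratios and, as a consistency check, a t statistic as the estimate divided by its standard error, which reproduces the t-statistic column of Table 4; the variable and function names are illustrative.

```python
# SNR50 group differences (dB) and standard errors from Table 4, keyed by signal level (dB)
HEARING_IMPAIRMENT = {70: (-12.2100, 2.1031), 80: (-12.4752, 2.1031)}
AGE = {60: (-2.7129, 0.5668), 70: (-1.5906, 0.5668), 80: (-2.1954, 0.5668)}


def t_statistic(estimate: float, std_error: float) -> float:
    """t statistic: estimate divided by its standard error."""
    return estimate / std_error


def effect_ratio(level: int) -> float:
    """How many times larger the hearing impairment effect is than the age effect."""
    return HEARING_IMPAIRMENT[level][0] / AGE[level][0]


for level in sorted(HEARING_IMPAIRMENT):  # levels where both effects were estimated
    print(f"{level} dB: ratio = {effect_ratio(level):.1f}")
print(f"t for hearing impairment at 80 dB = {t_statistic(*HEARING_IMPAIRMENT[80]):.2f}")
```

The ratio is about 5.7 at 80 dB (the level shown in Figure 3) and about 7.7 at 70 dB.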

Relationship between Electrophysiology and Perception

Some effects, such as level effects, were more robust behaviorally than electrophysiologically. Differences in the sensitivity of outcome measures to factors such as signal level and SNR are worth considering; the P1-N1-P2 CAEP complex is certainly not the exclusive coding mechanism for signal characteristics such as level and SNR, but instead represents only a subset of the ongoing cortical neural encoding in the central auditory system. For this reason, it may be helpful to correlate the two measures to understand their relationship. Most studies that have compared electrophysiology with behavior have correlated various peak latencies or amplitudes to determine which measures correlate most strongly with behavior. Some have also compared speech-in-noise perception with self-reported complaints (Anderson et al., 2013). In the current study, the top five metrics correlating with behavior were selected; these were predominantly N1 and P2 latencies and amplitudes.

Additional analyses were completed to explore the possibility of using physiological measures to predict behavioral results as a clinical tool, especially for hard-to-test populations. Using a YNH-based model to predict behavioral SNR50s in older groups was reasonably successful for ONH; ONH prediction errors averaged about 2 dB, about double those of the YNH group (Billings et al., 2013). In contrast, OHI prediction errors were more than 10 times those of YNH. An OHI-based model greatly improved SNR50 predictions for OHI participants (i.e., improvements of about 10 dB). While encouraging, these improvements may partly reflect overfitting, because the model was based on OHI data from this study. The PRESS statistic was used to reduce overfitting bias; however, validation in a separate sample of participants is needed to confirm the validity of the prediction model. In addition, the effects of test-retest reliability on prediction outcomes need to be analyzed. Attempts were made to make predictions more useful clinically in situations where poor waveform morphology prevents the selection of individual peaks. Results using area measures only were generally comparable to those of models that included peak-specific measures. That said, this OHI model may not be useful clinically, given prediction errors of roughly 7 dB. To be useful, an SNR50 prediction should probably be within 1-2 dB of the true value, given that clinical improvements in SNR achieved with hearing aids and other interventions may be smaller than 5 dB. Further work is needed to determine whether the current prediction model for OHI can be improved or a better model developed.

CONCLUSION

These data demonstrate that both perception and electrophysiology measures are highly sensitive to the SNR of a stimulus. In addition, signal level effects, resulting from an interaction between hearing thresholds and signal level (i.e., audibility of specific frequency content), are present for behavior and, to a limited extent, for electrophysiology. These results are similar to those found previously in YNH individuals. Age effects are reflected in both electrophysiology and behavior. Interestingly, and in contrast to behavioral results, electrophysiology was not sensitive to the effects of hearing impairment. This difference in sensitivity to hearing impairment is likely due, in part, to the passive, obligatory nature of the CAEPs used in this study. Electrophysiology was highly correlated with behavior and could be used to predict behavior reasonably well in normal-hearing individuals. However, predictions for hearing-impaired individuals yielded large errors, indicating that additional research is needed to determine whether predictions of speech perception using CAEPs can be improved for hearing-impaired groups. The analysis of combined electrophysiological and behavioral data may be helpful in the diagnosis and treatment of difficulties with perception in noise and may help clarify the underlying neural mechanisms contributing to these difficulties.

Supplementary Material

Acknowledgements

Portions of the data in this manuscript were presented at the 2012 American Auditory Society Scientific and Technology meeting and at the 2013 Association for Research in Otolaryngology Midwinter Meeting. We wish to thank Drs. Marjorie Leek, Robert Burkard, and Kelly Tremblay for helpful comments on the design of this experiment. This work was supported by a grant from the National Institute on Deafness and Other Communication Disorders (R03DC10914) and career development awards from the United States (U.S.) Department of Veterans Affairs Rehabilitation Research and Development Service (C4844C and C8006W). The contents do not represent the views of the U.S. Department of Veterans Affairs or the U.S. Government.

Abbreviations

- SPL: sound pressure level
- HL: hearing level
- dB: decibel
- SNR: signal-to-noise ratio
- CAEPs: cortical auditory evoked potentials
- ANOVA: analysis of variance
- LOOCV: leave-one-out cross-validation
- PLS: partial least squares
- RMSPE: root mean square prediction error

Footnotes

We have no conflicts of interest pertaining to this manuscript.

REFERENCES

- Agung K, Purdy SC, McMahon CM, et al. The use of cortical auditory evoked potentials to evaluate neural encoding of speech sounds in adults. J Am Acad Audiol. 2006;17(8):559–572. doi: 10.3766/jaaa.17.8.3.

- Alain C, Woods DL, Covarrubias D. Activation of duration-sensitive auditory cortical fields in humans. Electroencephalogr Clin Neurophysiol. 1997;104(6):531–539. doi: 10.1016/s0168-5597(97)00057-9.

- Anderson S, Parbery-Clark A, White-Schwoch T, et al. Auditory brainstem response to complex sounds predicts self-reported speech-in-noise performance. J Speech Lang Hear Res. 2013;56:31–43. doi: 10.1044/1092-4388(2012/12-0043).

- Anderson S, Chandrasekaran B, Yi J, et al. Neural timing is linked to speech perception in noise. J Neurosci. 2010;30:4922–4926. doi: 10.1523/JNEUROSCI.0107-10.2010.

- Androulidakis AG, Jones SJ. Detection of signals in modulated and unmodulated noise observed using auditory evoked potentials. Clin Neurophysiol. 2006;117:1783–1793. doi: 10.1016/j.clinph.2006.04.011.

- Billings CJ, Bennett KO, Molis MR, et al. Cortical encoding of signals in noise: Effects of stimulus type and recording paradigm. Ear Hear. 2011;32:53–60. doi: 10.1097/AUD.0b013e3181ec5c46.

- Billings CJ, McMillan GP, Penman TM, et al. Predicting perception in noise using cortical auditory evoked potentials. J Assoc Res Otolaryngol. 2013;14:891–903. doi: 10.1007/s10162-013-0415-y.

- Billings CJ, Tremblay KL, Stecker GC, et al. Human evoked cortical activity to signal-to-noise ratio and absolute signal level. Hear Res. 2009;254:15–24. doi: 10.1016/j.heares.2009.04.002.

- Caspary DM, Ling L, Turner JG, Hughes LF. Inhibitory neurotransmission, plasticity and aging in the mammalian central auditory system. J Exp Biol. 2008;211:1781–1791. doi: 10.1242/jeb.013581.

- Cherry EC. Some experiments on the recognition of speech, with one and with two ears. J Acoust Soc Am. 1953;25:975–979.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–198. doi: 10.1016/0022-3956(75)90026-6.

- Frisina ST, Mapes F, Kim S, Frisina DR, Frisina RD. Characterization of hearing loss in aged type II diabetics. Hear Res. 2006;211:103–113. doi: 10.1016/j.heares.2005.09.002.

- Hastie T, Tibshirani R, Friedman J. The elements of statistical learning. 2nd ed. Springer Series in Statistics. Springer; New York: 2009.

- Hiraumi H, Nagamine T, Morita T. Effect of amplitude modulation of background noise on auditory-evoked magnetic fields. Brain Res. 2008;1239:191–197. doi: 10.1016/j.brainres.2008.08.044.

- Hornsby BW, Trine TD, Ohde RN. The effects of high presentation levels on consonant feature transmission. J Acoust Soc Am. 2005;118:1719–1729. doi: 10.1121/1.1993128.

- Jacobson GP, Lombardi DM, Gibbens ND, et al. The effects of stimulus frequency and recording site on the amplitude and latency of multichannel cortical auditory evoked potential (CAEP) component N1. Ear Hear. 1992;13(5):300–306. doi: 10.1097/00003446-199210000-00007.

- Kaplan-Neeman R, Kishon-Rabin L, Henkin Y, et al. Identification of syllables in noise: Electrophysiological and behavioral correlates. J Acoust Soc Am. 2006;120:926–933. doi: 10.1121/1.2217567.

- Kim JR, Ahn SY, Jeong SW, et al. Cortical auditory evoked potential in aging: Effects of stimulus intensity and noise. Otol Neurotol. 2012;33:1105–1112. doi: 10.1097/MAO.0b013e3182659b1e.

- Klein AJ, Mills JH, Adkins WY. Upward spread of masking, hearing loss, and speech recognition in young and elderly listeners. J Acoust Soc Am. 1990;87:1266–1271. doi: 10.1121/1.398802.

- Martin BA, Kurtzberg D, Stapells DR. The effects of decreased audibility produced by high-pass noise masking on N1 and the mismatch negativity to speech sounds /ba/ and /da/. J Speech Lang Hear Res. 1999;42:271–286. doi: 10.1044/jslhr.4202.271.

- Martin BA, Sigal A, Kurtzberg D, et al. The effects of decreased audibility produced by high-pass noise masking on cortical event-related potentials to speech sounds /ba/ and /da/. J Acoust Soc Am. 1997;101:1585–1599. doi: 10.1121/1.418146.

- McCullagh J, Shinn JB. Auditory cortical processing in noise in younger and older adults. Hear Balanc Commun. 2013;11:182–190.

- Miller GA. The masking of speech. Psychol Bull. 1947;44:105–129. doi: 10.1037/h0055960.

- Moore DR, Edmondson-Jones M, Dawes P, Fortnum H, McCormack A, Pierzycki RH, Munro KJ. Relation between speech-in-noise threshold, hearing loss and cognition from 40-69 years of age. PLoS One. 2014;9(9):e107720. doi: 10.1371/journal.pone.0107720.

- Parbery-Clark A, Marmel F, Bair J. What subcortical-cortical relationships tell us about processing speech in noise. Eur J Neurosci. 2011;33:549–557. doi: 10.1111/j.1460-9568.2010.07546.x.

- Penman T, Billings CJ, Ellis E, et al. How age, hearing loss, signal-to-noise ratio, and signal level affect word/phoneme perception in noise. Submitted.

- Picton TW, Woods DL, Proulx GB. Human auditory sustained potentials. II. Stimulus relationships. Electroencephalogr Clin Neurophysiol. 1978;45(2):198–210. doi: 10.1016/0013-4694(78)90004-4.

- Sharma M, Purdy SC, Munro KJ, et al. Effects of broadband noise on cortical evoked auditory responses at different loudness levels in young adults. Neuroreport. 2014;25:312–319. doi: 10.1097/WNR.0000000000000089.

- Skrandries W. Data reduction of multichannel fields: global field power and principal component analysis. Brain Topogr. 1989;2:73–80. doi: 10.1007/BF01128845.

- Studebaker GA, Sherbecoe RL, McDaniel DM, et al. Monosyllabic word recognition at higher-than-normal speech noise levels. J Acoust Soc Am. 1999;105:2431–2444. doi: 10.1121/1.426848.

- Sugg MJ, Polich J. P300 from auditory stimuli: intensity and frequency effects. Biol Psychol. 1995;41(3):255–269. doi: 10.1016/0301-0511(95)05136-8.

- Summers V, Molis MR. Speech recognition in fluctuating and continuous maskers: Effects of hearing loss and presentation level. J Speech Lang Hear Res. 2004;47:245–256. doi: 10.1044/1092-4388(2004/020).

- Whiting KA, Martin BA, Stapells DR. The effects of broadband noise masking on cortical event-related potentials to speech sounds /ba/ and /da/. Ear Hear. 1998;19:218–231. doi: 10.1097/00003446-199806000-00005.

- Willott JF. Aging and the auditory system: Anatomy, physiology and psychophysics. Singular; San Diego, CA: 1991.
