Investigations of how the nervous system represents, transmits, and transforms sensory information provide invaluable insights for the field of neuroscience as a whole. Early sensory neuroscience typically focused on neural responses to sensory inputs of a single modality. This approach is understandable, given that characterizing responses to simple stimuli is a prerequisite for evaluating responses to more complex stimuli, especially stimuli spanning multiple sensory modalities. However, our natural sensory environment is replete with stimuli that simultaneously provide inputs to multiple sensory modalities. Successful integration of this information enhances our perception of real-world events. For example, at crowded events, many competing voices can overlap, making it difficult to attend to and understand a single voice. Visual observation of lip movements can help a listener focus on one speaker while ignoring irrelevant voices. Furthermore, mismatches in multimodal stimuli can interfere with perception, resulting in a host of interesting illusions. One is the ventriloquism effect, in which auditory and visual information coming from different spatial sources causes an illusory displacement of sounds toward the visual source (Recanzone and Sutter, 2008). Another is the McGurk effect, which arises when viewing lip movements coupled artificially with an incompatible sound, creating the illusion of perceiving a different sound (McGurk and MacDonald, 1976).

Although many sensory neuroscientists study modalities in isolation, there is an increasing body of work focused on multi- and cross-sensory information transfer (for review, see Driver and Noesselt, 2008). Despite recent advancements, myriad questions remain regarding the precise brain structures and mechanisms involved in parsing and interpreting multisensory environmental cues.

With the exception of specialized zones like the superior colliculus, multimodal sensory convergence has generally been thought to occur late in the information processing hierarchy, such as in frontal, temporal, and parietal association areas (Goldman-Rakic, 1988). However, some studies suggest that multisensory integration may occur earlier in the cortical pathways. For instance, previous studies have found that auditory stimuli can modulate activity of neurons in visual cortex (Murray et al., 2015) and vice versa (Kayser et al., 2008).

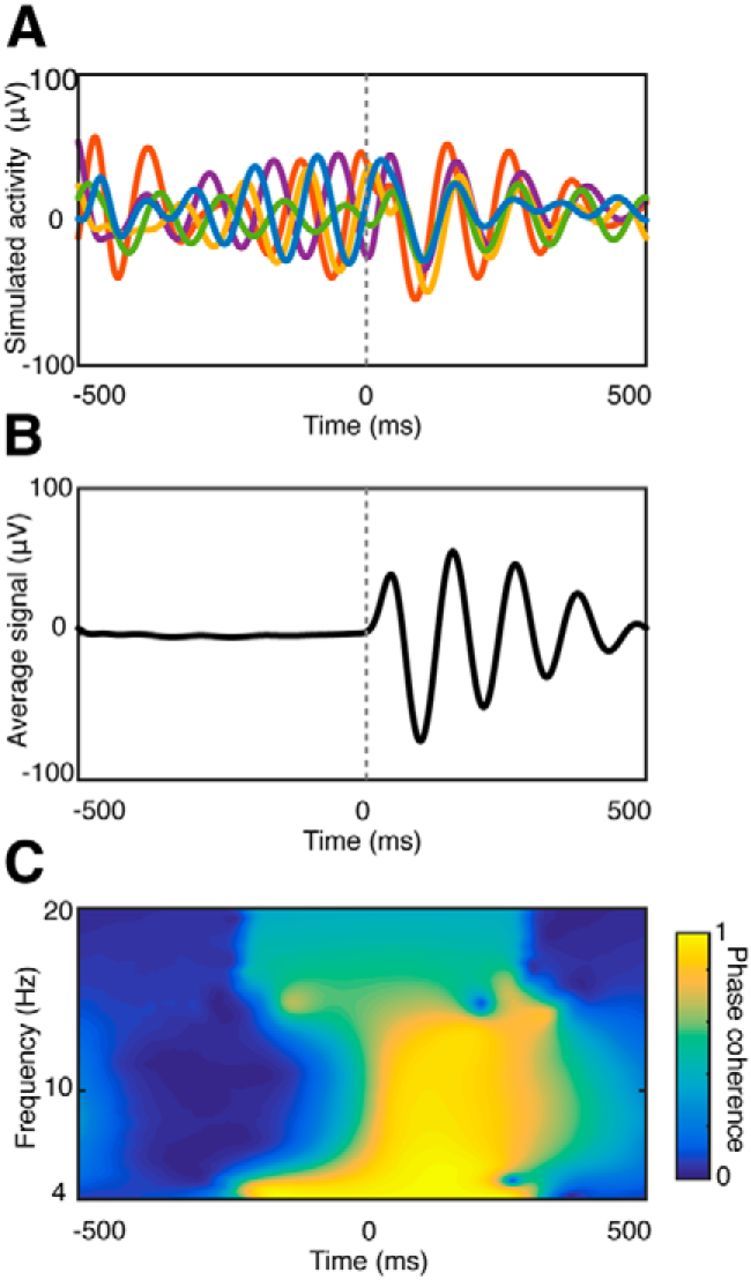

In human scalp EEG recordings, Giard and Peronnet (1999) observed that posterior visual areas respond differently to audiovisual (AV) stimulation than to visual (V) or auditory (A) stimulation alone, with differences evident as early as 40–50 ms after stimulus onset. However, the spatial ambiguity of scalp EEG makes it difficult to determine whether AV integration occurs earlier in the human visual system than previously appreciated. To circumvent this limitation, Mercier et al. (2013) recorded electrocorticographic (ECoG) data from subdural electrodes placed over the visual cortex of epilepsy patients. They found that auditory stimuli do modulate the activity of visual cortex, but rather than changing the magnitude of responses, auditory stimulation reset the phase of ongoing slow oscillations. A schematic diagram of induced phase reset and how it can improve signal detection is shown in Figure 1. Such a phase reset is consistent with the AV event-related potential (ERP) responses observed by Giard and Peronnet (1999).

Figure 1.

Schematic illustration of stimulus-induced phase reset. A, Phase reset of ongoing oscillations. The phases of five simulated oscillations (8–10 Hz) are reset at time 0. The group of simulated signals illustrates a set of hypothetical responses to stimulus presentation in a single brain region or from multiple brain regions. Note that the induced phase reset does not affect the amplitude or frequency of the ongoing oscillation. B, Average of 1000 simulated signals. The average amplitude is around zero before stimulus onset (time = 0 s), as the signals cancel out one another due to phase variability of the ongoing oscillations. Following stimulus onset and consequent phase reset, peaks and troughs in the individual signals across trials are aligned, resulting in increased average response amplitude. C, Time–frequency phase clustering plot of the 1000 simulated signals. Phase clustering was calculated by complex wavelet convolution of each signal and averaging the resulting phase angles at each time and frequency (Cohen, 2014). Phase clustering values can range from 0 to 1, with 0 (dark colors) corresponding to no phase relationship between the signals, and 1 (light colors) corresponding to perfect phase alignment. Note that phase clustering increases following the phase reset event at time = 0 s.
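The simulation described in this legend can be approximated with a short NumPy sketch. This is not the code used to produce Figure 1; the sampling rate, the wavelet width (`n_cycles`), the reset phase, and all function names are assumptions chosen for illustration. Phase clustering is computed as the length of the mean unit phase vector across trials, following the general description in Cohen (2014).

```python
import numpy as np

def simulate_phase_reset(n_trials=1000, srate=500, t_min=-1.0, t_max=1.0,
                         freq_range=(8.0, 10.0), seed=0):
    """Simulate oscillations with random phase before t = 0 and a common phase after."""
    rng = np.random.default_rng(seed)
    # Build the time axis from integer sample indices so that t = 0 is exact.
    times = np.arange(int(t_min * srate), int(t_max * srate)) / srate
    freqs = rng.uniform(*freq_range, size=(n_trials, 1))
    start_phase = rng.uniform(0.0, 2.0 * np.pi, size=(n_trials, 1))
    pre = np.cos(2.0 * np.pi * freqs * times + start_phase)  # random phase per trial
    post = np.cos(2.0 * np.pi * freqs * times)               # phase 0 at t = 0 (assumed)
    # Amplitude and frequency are unchanged; only the phase is reset.
    return times, np.where(times < 0, pre, post)

def morlet_phase(signals, srate, freq, n_cycles=5):
    """Phase angles at one frequency via FFT-based complex Morlet wavelet convolution."""
    sigma_t = n_cycles / (2.0 * np.pi * freq)
    half = int(4 * sigma_t * srate)
    wt = np.arange(-half, half + 1) / srate
    wavelet = np.exp(2j * np.pi * freq * wt) * np.exp(-wt ** 2 / (2.0 * sigma_t ** 2))
    n_times = signals.shape[1]
    n_conv = n_times + wavelet.size - 1
    spectrum = np.fft.fft(signals, n_conv, axis=1) * np.fft.fft(wavelet, n_conv)
    # Trim the wavelet half-length from the start so samples align with the input.
    analytic = np.fft.ifft(spectrum, axis=1)[:, half:half + n_times]
    return np.angle(analytic)

def phase_clustering(phases):
    """Length of the mean unit phase vector across trials (0 = none, 1 = perfect)."""
    return np.abs(np.mean(np.exp(1j * phases), axis=0))

times, signals = simulate_phase_reset()
erp = signals.mean(axis=0)  # trial average, as in panel B
itpc = phase_clustering(morlet_phase(signals, srate=500, freq=9.0))  # one row of panel C
```

Because the simulated frequencies differ slightly across trials, the alignment produced by the reset decays over the following few hundred milliseconds, so both `erp` and `itpc` are largest shortly after time 0. Repeating the last line over a range of frequencies would give a full time–frequency map.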

In a recent article published in The Journal of Neuroscience, Mercier et al. (2015), again recording from subdural electrodes in epilepsy patients, examined AV interactions in the context of a well-established behavioral paradigm known as the redundant target effect (RTE; Miller, 1982). When participants are instructed to detect and respond as quickly as possible to any stimulus presented (A, V, or simultaneous AV), response times (RTs) to AV stimuli (redundant targets) are consistently faster than those to A or V stimuli presented alone. Although the RTE is considered a classic example of multisensory integration, the neural mechanisms facilitating faster processing of multimodal percepts are still largely unknown.

Mercier and colleagues (2015) hypothesized that local and interregional phase alignment are two key mechanisms driving the multimodal RTE. The theoretical framework lies in the temporal coding hypothesis, specifically the phase reset model, which predicts that information is encoded in the precise phases at which neurons are active (Makeig et al., 2002; Thorne et al., 2011). The temporal alignment of responses to multisensory events would be evident as increased oscillatory phase coupling between unisensory brain regions. Mercier et al. (2015) further proposed that phase coupling between sensory and motor regions is linked to faster behavioral performance in RTE tasks. Specifically, they evaluated whether (1) cross-sensory phase reset occurs in human auditory cortex, (2) such a phase reset in auditory cortex is stronger for multisensory than unisensory input, (3) there is a phase relationship between auditory and motor cortices, and (4) such a relationship is related to the RTE.

To evaluate these possibilities, they tested three patients with a version of the RTE paradigm while recording ECoG from temporal and frontal subdural electrode grids covering auditory and motor areas. Along with reaction times, ERPs, power, and phase angles of oscillations in different frequency bands were extracted for each trial. Phase alignment was quantified by two measures: (1) regional phase alignment across trials was calculated as the phase concentration index (PCI; elsewhere referred to as phase clustering, phase-locking factor, or intertrial coherence; Cohen, 2014), and (2) phase alignment between auditory and motor areas was calculated for each trial [referred to as phase locking value (PLV)].
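The two measures can be illustrated with a minimal sketch. It assumes that phase angles have already been extracted (for example, by wavelet convolution) into arrays of shape trials × time points, and that the single-trial PLV is taken as the consistency of the auditory–motor phase difference across time points within a trial. That windowing choice, the function names, and the synthetic data are assumptions for illustration, not the procedure reported by Mercier et al. (2015).

```python
import numpy as np

def phase_concentration_index(phases):
    """Across-trial phase alignment at one site: one value per time point."""
    return np.abs(np.mean(np.exp(1j * phases), axis=0))

def single_trial_plv(phases_a, phases_b):
    """Consistency of the phase difference between two sites: one value per trial.

    Assumption: the difference is averaged over the time points of each trial.
    """
    return np.abs(np.mean(np.exp(1j * (phases_a - phases_b)), axis=1))

# Synthetic example: the auditory phase is random on every trial and time point,
# while the motor phase follows it at a fixed lag of 0.5 rad plus small jitter.
rng = np.random.default_rng(0)
n_trials, n_times = 200, 100
auditory = rng.uniform(-np.pi, np.pi, size=(n_trials, n_times))
motor = auditory - 0.5 + rng.normal(0.0, 0.2, size=auditory.shape)

pci = phase_concentration_index(auditory)  # low: no alignment across trials
plv = single_trial_plv(auditory, motor)    # high: stable phase difference
```

The synthetic case shows why the two measures are complementary: two regions can be tightly phase locked to each other even when neither is phase aligned to the stimulus across trials.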

As expected from previous studies, reaction times appeared to be faster in AV trials than in A or V trials, although statistical tests and quantification of the RT distributions for individual patients were not provided. Delta band PCI values in auditory cortex were higher for AV trials than for either A or V trials, indicating that multimodal stimuli were more effective than unimodal stimuli in resetting the phase of ongoing oscillations. Moreover, in the multisensory condition, auditory–motor phase alignment was positively correlated with auditory intertrial phase coherence, and this relationship was consistent across individuals. Within individuals, faster reaction times were associated with stronger PLVs between auditory and motor cortices following stimulus onset, suggesting that sensorimotor phase alignment may be a neural mechanism underlying the RTE.

These results provide clear empirical evidence that local and interregional phase alignment is stronger or more consistent in the multisensory condition than in either unimodal condition. However, not enough evidence is provided to evaluate the claim that interregional phase synchrony emerges faster in the multisensory condition. Figure 4A in Mercier et al. (2015) shows that significant changes in delta band auditory–motor cortex phase alignment for the multisensory condition occur earlier by perhaps 50 ms, but only in one of the three patients (P2). Moreover, the boundaries of significant zones in Figures 4 and 5 of Mercier et al. (2015) are difficult to discern because of the linear frequency axis. Depicting time–frequency PLVs on a logarithmic frequency axis (Morillon et al., 2012) or with the axis limited to bands of interest (e.g., delta and theta; Sauseng et al., 2007) would highlight the areas in question and facilitate the reader's interpretation of the data. Although a strong correlation between reaction time and interregional synchrony could imply faster time to synchrony, quantifying and comparing the latency to maximum interregional phase alignment is necessary to test the hypothesis that multimodal stimulation leads to faster phase locking.
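One way such a latency comparison could be set up is sketched below. The bootstrap over trials, the 0–0.5 s search window, the function names, and the synthetic data are all assumptions made for illustration; none of them is taken from Mercier et al. (2015), and other statistical approaches (e.g., jackknife or permutation tests) would be equally reasonable.

```python
import numpy as np

def plv_time_course(phases_a, phases_b):
    """Across-trial PLV at each time point; inputs are (n_trials, n_times) phase angles."""
    return np.abs(np.mean(np.exp(1j * (phases_a - phases_b)), axis=0))

def peak_latency(plv, times, window=(0.0, 0.5)):
    """Time (s) of the maximum PLV inside a poststimulus window."""
    mask = (times >= window[0]) & (times <= window[1])
    return times[mask][np.argmax(plv[mask])]

def bootstrap_latency_difference(a1, b1, a2, b2, times, n_boot=500, seed=0):
    """Bootstrap (condition 1 minus condition 2) peak latency by resampling trials."""
    rng = np.random.default_rng(seed)
    diffs = np.empty(n_boot)
    for i in range(n_boot):
        i1 = rng.integers(0, a1.shape[0], a1.shape[0])
        i2 = rng.integers(0, a2.shape[0], a2.shape[0])
        diffs[i] = (peak_latency(plv_time_course(a1[i1], b1[i1]), times)
                    - peak_latency(plv_time_course(a2[i2], b2[i2]), times))
    return diffs

def synthetic_condition(t_peak, times, n_trials=200, seed=0):
    """Hypothetical data: the phase difference is tightest around t_peak."""
    rng = np.random.default_rng(seed)
    spread = 0.3 + 3.0 * (1.0 - np.exp(-(times - t_peak) ** 2 / (2 * 0.05 ** 2)))
    phases_a = rng.uniform(-np.pi, np.pi, size=(n_trials, times.size))
    phases_b = phases_a + rng.normal(0.0, 1.0, size=phases_a.shape) * spread
    return phases_a, phases_b

times = np.arange(-100, 251) / 500.0                    # -0.2 to 0.5 s at 500 Hz
av_a, av_m = synthetic_condition(0.15, times, seed=1)   # stand-in for AV trials
a_a, a_m = synthetic_condition(0.20, times, seed=2)     # stand-in for A-alone trials

lat_av = peak_latency(plv_time_course(av_a, av_m), times)
lat_a = peak_latency(plv_time_course(a_a, a_m), times)
diffs = bootstrap_latency_difference(av_a, av_m, a_a, a_m, times, n_boot=200)
ci_low, ci_high = np.percentile(diffs, [2.5, 97.5])
```

If the resulting interval for the latency difference excluded zero within each patient, that would support the claim of earlier phase locking more directly than a correlation between PLV strength and reaction time.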

Overall, the results presented in Mercier et al. (2015) are striking in a number of important ways. First, they demonstrate that visual stimuli can reset the phase of ongoing oscillations in auditory cortex without inducing a change in their magnitude. Together, the results of Mercier et al. (2013, 2015) are consistent with the binding-by-synchrony hypothesis, which predicts that synchronous neural activity underlies the neural representation of sensory features (Gray and Singer, 1989; Buzsaki, 2006). While the binding-by-synchrony hypothesis is typically used to describe responses to unimodal visual stimuli, the present findings hint that related features present in separate sensory modalities could be bound and represented by interregional synchrony.

Second, Mercier et al. (2015) demonstrate induced phase locking between brain regions and show a linear relationship between the strength of phase locking and RTs. This latter result bridges a possible neural mechanism subserving multisensory integration with behavioral performance via the phase reset model. One idea left to explore is the possibility that communication between sensory and motor areas may have optimal and suboptimal time windows based on their ongoing phase relationship, even before a target is presented. That is, phase reset of motor cortex might be unnecessary if it is already aligned with auditory cortex at the time of stimulus presentation, resulting in faster RTs. Likewise, slower RTs may result if phase reset is necessary to align activity in the two areas. Taking into account phase relationships before AV stimulus presentation—as well as stimulus-induced sensorimotor phase locking—could help further explain reaction time differences.

The link shown in this study between local and interregional phase synchrony and behavioral facilitation by multisensory integration raises many questions. For example, is the link unbreakable? In other words, are local (auditory) phase reset and auditory–motor phase synchrony in multisensory conditions necessary and sufficient for reaction time facilitation?

In the naturalistic environments of daily life, the brain constantly receives and integrates multisensory input signals. Thus, investigations of multisensory integration can enhance the ecological validity of neural processing models. This new study by Mercier and colleagues (2015) provides novel insight into the neural substrate of multisensory integration. Specifically, the study provides evidence that local phase resetting and interregional phase locking play important roles in the representation, transmission, and transformation of information necessary for multisensory integration and behavior. Furthermore, this work suggests that optimal information transfer between human brain regions may occur in temporal windows that dynamically open and close.

Footnotes

Editor's Note: These short, critical reviews of recent papers in the Journal, written exclusively by graduate students or postdoctoral fellows, are intended to summarize the important findings of the paper and provide additional insight and commentary. For more information on the format and purpose of the Journal Club, please see http://www.jneurosci.org/misc/ifa_features.shtml.

This work was funded in part by a fellowship from the Keck Center for Quantitative Biomedical Sciences to B.D.M. (Training Program in Biomedical Informatics, National Library of Medicine T15LM007093). We thank Cihan Kadipasaoglu for insightful thoughts and comments.

The authors declare no competing financial interests.

References

- Buzsaki G. Rhythms of the brain. New York: Oxford UP; 2006.

- Cohen MX. Analyzing neural time series data. Cambridge, MA: MIT; 2014.

- Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Goldman-Rakic PS. Topography of cognition: parallel distributed networks in primate association cortex. Annu Rev Neurosci. 1988;11:137–156. doi: 10.1146/annurev.ne.11.030188.001033.

- Gray CM, Singer W. Stimulus-specific neuronal oscillations in orientation columns of cat visual cortex. Proc Natl Acad Sci U S A. 1989;86:1698–1702. doi: 10.1073/pnas.86.5.1698.

- Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cereb Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- Makeig S, Westerfield M, Jung TP, Enghoff S, Townsend J, Courchesne E, Sejnowski TJ. Dynamic brain sources of visual evoked responses. Science. 2002;295:690–694. doi: 10.1126/science.1066168.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Mercier MR, Foxe JJ, Fiebelkorn IC, Butler JS, Schwartz TH, Molholm S. Auditory-driven phase reset in visual cortex: human electrocorticography reveals mechanisms of early multisensory integration. Neuroimage. 2013;79:19–29. doi: 10.1016/j.neuroimage.2013.04.060.

- Mercier MR, Molholm S, Fiebelkorn IC, Butler JS, Schwartz TH, Foxe JJ. Neuro-oscillatory phase alignment drives speeded multisensory response times: an electro-corticographic investigation. J Neurosci. 2015;35:8546–8557. doi: 10.1523/JNEUROSCI.4527-14.2015.

- Miller J. Divided attention: evidence for coactivation with redundant signals. Cogn Psychol. 1982;14:247–279. doi: 10.1016/0010-0285(82)90010-X.

- Morillon B, Liégeois-Chauvel C, Arnal LH, Bénar CG, Giraud AL. Asymmetric function of theta and gamma activity in syllable processing: an intra-cortical study. Front Psychol. 2012;3:248. doi: 10.3389/fpsyg.2012.00248.

- Murray MM, Thelen A, Thut G, Romei V, Martuzzi R, Matusz PJ. The multisensory function of primary visual cortex in humans. Neuropsychologia. 2015. Advance online publication. doi: 10.1016/j.neuropsychologia.2015.08.011.

- Recanzone GH, Sutter ML. The biological basis of audition. Annu Rev Psychol. 2008;59:119–142. doi: 10.1146/annurev.psych.59.103006.093544.

- Sauseng P, et al. Are event-related potential components generated by phase resetting of brain oscillations? A critical discussion. Neuroscience. 2007;146:1435–1444. doi: 10.1016/j.neuroscience.2007.03.014.

- Thorne JD, De Vos M, Viola FC, Debener S. Cross-modal phase reset predicts auditory task performance in humans. J Neurosci. 2011;31:3853–3861. doi: 10.1523/JNEUROSCI.6176-10.2011.