Abstract

Objective

Nucleus Hybrid CI users hear low-frequency sounds via acoustic stimulation and high-frequency sounds via electrical stimulation. This within-subject study compares three different methods of coordinating programming of the acoustic and electrical components of the Hybrid device. Speech perception and cortical auditory evoked potentials (CAEP) were used to assess differences in outcome. The goals of this study were to determine (1) whether the evoked potential measures could predict which programming strategy either resulted in better outcome on the speech perception task or was preferred by the listener, and (2) whether CAEPs could be used to predict which subjects benefitted most from having access to the electrical signal provided by the Hybrid implant.

Design

CAEPs were recorded from 10 Nucleus Hybrid CI users. Study participants were tested using three different experimental MAPs that differed in terms of how much overlap there was between the range of frequencies processed by the acoustic component of the Hybrid device and the range of frequencies processed by the electrical component. The study design included allowing participants to acclimatize for a period of up to 4 weeks with each experimental program prior to speech perception and evoked potential testing. Performance using the experimental MAPs was assessed using both a closed-set consonant recognition task and an adaptive test that measured the signal-to-noise ratio that resulted in 50% correct identification of a set of 12 spondees presented in background noise (SNR-50). Long-duration, synthetic vowels were used to record both the cortical P1-N1-P2 “onset” response and the auditory “change” or ACC response. Correlations between the evoked potential measures and performance on the speech perception tasks are reported.

Results

Differences in performance using the three programming strategies were not large. Peak-to-peak amplitude of the ACC response was not found to be sensitive enough to accurately predict the programming strategy that resulted in the best performance on either measure of speech perception. All 10 Hybrid CI users had residual low-frequency acoustic hearing. For all 10 subjects, allowing them to use both the acoustic and electrical signals provided by the implant improved performance on the consonant recognition task. For most subjects, it also resulted in slightly larger cortical change responses. However, the impact that listening mode had on the cortical change responses was small and, again, the correlation between the evoked potential and speech perception results was not significant.

Conclusions

CAEPs can be successfully measured from Hybrid CI users. The responses that are recorded are similar to those recorded from normal hearing listeners. The goal of this study was to see if CAEPs might play a role either in identifying the experimental program that resulted in best performance on a consonant recognition task or documenting benefit from use of the electrical signal provided by the Hybrid CI. At least for the stimuli and specific methods used in this study, no such predictive relationship was found.

Keywords: Cochlear implant, Hybrid cochlear implant, Cortical auditory evoked potential, Auditory evoked potential, Acoustic change complex

INTRODUCTION

For individuals with profound bilateral sensorineural hearing loss the benefits of cochlear implantation can be (and often are) substantial (e.g., Shannon et al. 2004; Spahr & Dorman 2004; Wilson & Dorman 2007). Loss of residual acoustic hearing is not typically a major concern for these individuals. For those with good low-frequency hearing, the decision to pursue cochlear implantation is considerably more difficult. These individuals are often very concerned about losing their residual acoustic hearing. Hybrid cochlear implants (CI) are designed specifically for this population.

The Nucleus Hybrid CI has an internal electrode array that is shorter and thinner than the standard intracochlear electrode array. It also has fewer electrode contacts. It was designed specifically to maximize the chance that residual acoustic hearing in the implanted ear could be preserved. Preliminary results suggest that not only is hearing preservation possible with this device, but most Hybrid CI users are able to successfully fuse the information presented acoustically with information presented electrically. There is a growing body of literature that suggests that word recognition in the acoustic plus electric (A+E) listening mode is better than either the acoustic alone (A-alone) or electric alone (E-alone) modes (e.g., Turner et al. 2008; Lenarz et al. 2009; Gantz et al. 2009; Woodson et al. 2010). Results like this have led to an increase in the number of clinics offering the Hybrid device to their hearing-impaired clients and it seems reasonable to assume that children may also soon be considered candidates for the Hybrid CI.

Compared to a traditional CI, programming a Hybrid CI is somewhat more complicated. Hybrid CI users present with varying amounts of low-frequency hearing and most need some degree of amplification. The Nucleus Hybrid CI has both an acoustic component (i.e., a traditional hearing aid) and an electric component (i.e., the speech processor of the CI). Clinicians are required to program both the acoustic and electrical components. There are several different approaches that could be used to do this (for review, see Incerti et al. 2013). In the Hybrid Desk Reference Guide, Cochlear Corporation suggests starting by identifying the highest audiometric frequency where prescriptive targets for gain can be achieved using the acoustic component of the Hybrid device. The speech processor of the CI is then programmed so that only information in the signal above that point (often referred to as a “cross-over frequency”) is processed electrically. This can be achieved either by turning off apical electrodes or by adjusting the boundaries of the frequency allocation table. Karsten et al. (2013) referred to this programming strategy as the “Meet” strategy. We will use the same label in this report. This is also an approach to programming advocated by Wolfe and Schafer (2015) in a widely used textbook.

Karsten et al. (2013) also describe two other general approaches to coordinating programming of the acoustic and electrical components of the Hybrid device. One approach suggests that the entire acoustic spectrum be divided across all of the available intracochlear electrodes. This method creates redundancy or “overlap” between the range of frequencies processed via the acoustic component and the range of frequencies that are conveyed via electric stimulation. It could be argued that such redundancy in the input signal could prove useful for the listener. However, depending on the length of the intracochlear electrode array and the extent of the residual acoustic hearing, this method of programming could also introduce distortion. A third alternative may be to construct a program that includes a separation (or “gap”) between the highest frequency that can be successfully amplified using the acoustic component of the Hybrid device and the lowest frequency that is conveyed electrically. This approach to programming could help minimize potentially negative interactions between the information processed by the two transducers in the Hybrid implant. Karsten et al. (2013) report that speech perception in noise was better for a group of 10 Hybrid CI recipients tested using the “Meet” programming strategy as opposed to the “Overlap” strategy. This is also the strategy that was preferred by the majority of participants in the Karsten et al. (2013) report.

There is, of course, no reason to believe that any of these three experimental strategies will result in optimal levels of performance. Also, it may be that, despite group trends, a programming method that works well for one individual may not work as well for others. Creating several different programs and asking the CI user to evaluate each program is time consuming and may not be possible for pediatric implant recipients. This is an example of a situation where it may be helpful to have an objective method of determining how to approach programming the speech processor of the Hybrid device. One of the goals of this study is to explore the feasibility of using cortical auditory evoked potentials (CAEPs) for this purpose.

There are several different evoked potentials that could be used to help assess benefit from use of an HA or specific CI processing strategy. For an excellent review of the relative strengths and weaknesses of each, the interested reader is referred to Martin et al. (2008). This study was modelled after one published several years ago by Martin and Boothroyd (2000). Like Martin and Boothroyd (2000), we opted to use long duration, synthetic vowels presented in the sound field. Each vowel contained a change in the formant structure midway through the vowel that resulted in a shift in the way the vowel was perceived. That is, the 2nd and 3rd formants are shifted such that perception of the vowel changes from /u/ to /i/ or from /i/ to /u/. We elected to use vowel stimuli and a stimulation/recording paradigm similar to those described by Martin and Boothroyd because we knew vowels would be easily processed by either acoustic or electrical components of the Hybrid device and because the paradigm that these investigators used allowed us to record both an N1-P2 response at the onset of the vowel as well as a second N1-P2 response (the ACC) following the change in formant frequency. We reasoned that the low-frequency information in the vowel (e.g., F0) would be continuous and largely transmitted via the acoustic component of the Hybrid implant. However, amplitude of the ACC would most likely be related to the ability of the listener to detect a change in the second and third formant frequencies. Perception of a change in the higher frequency content in the acoustic signal was likely to depend on the ability of the listener to detect a change in the electrical signal. We wanted to test the assumption that better performance with a specific program would depend on the ability of the Hybrid CI user to integrate information processed acoustically and electrically and reasoned that the ACC might provide an objective metric with which to assess that interaction.

We opted to focus on the N1-P2 response, as opposed to another evoked response, precisely because this evoked potential can be recorded from children using a passive listening paradigm (e.g., Ponton et al. 2000; Sharma & Dorman 2006; Small & Werker 2012) and from cochlear implant users (e.g., Friesen & Tremblay 2006; Sharma & Dorman 2006; Brown et al. 2008). Additionally, the N1-P2 onset response has been well-studied and the impact of factors like maturation, attention, sleep, the choice of recording electrode montage and training are known (e.g., Hyde 1997; Tremblay & Kraus 2002; Wunderlich & Cone-Wesson 2006; Nikjeh et al. 2009). We also reasoned that since previous investigators had related amplitude of the ACC to salience of the perceived change in an ongoing stimulus, this response might prove to be an index that could help drive decisions about how to program the processor for an individual Hybrid CI user. While all three experimental programs might be expected to evoke an onset response, a programming method that resulted in improved discrimination of the two vowels or made detection of the electrical information used to code the shift in formant frequencies easier, might be expected to evoke a more robust change response than a less effective programming strategy.

Our goal was also to assess whether or not this method was sensitive enough to be useful on an individual level. The amplitude of the N1-P2 onset response can vary significantly across listeners. Therefore, we opted to use a method that provided for within-subject comparison of onset and change response amplitudes. While somewhat time consuming, this method allows for normalization of the change response amplitude and, as a result, facilitates comparison across listeners.

We were also interested in contrasting results obtained in the A+E listening mode with the A-alone listening mode. We reasoned that most individuals who consider the Hybrid device will do so because they have reasonably good low frequency acoustic hearing. The subjects included in this study did not lose that hearing after surgery. They exhibited a range of different levels of performance on measures of speech perception and we wanted to know if the response that we were able to record might predict which of the group of Hybrid users benefited most from having access to the electrical input. Others have compared performance of Hybrid CI users in the electric alone listening mode either to performance using both the acoustic and electrical input or to the performance levels observed in standard long electrode CI users (e.g., Gstoettner et al. 2004; Gantz et al. 2006; Dorman & Gifford 2010; Incerti et al. 2013). Those studies are particularly important if the goal is to characterize the impact that the potential loss of residual acoustic hearing may have on performance. That was not, however, the focus of this study. We also found that it was difficult to eliminate all acoustic cues when testing in the sound field. Thus, our choice was to focus on obtaining within-subject comparisons of CAEPs recorded using both the A-alone and A+E listening modes.

If CAEPs are to play a role in clinical practice, evidence is required that demonstrates that the evoked potential used is sensitive enough to predict either the programming option that results in the best outcome on a behavioral measure of performance or alternately, help explain variance in outcome. Toward that end, the experiments described in this report address two hypotheses:

(1) The experimental program (Meet, Overlap or Gap) that results in the most robust ACC responses will be the program that also results in the best performance on behavioral measures of consonant or word recognition.

(2) The difference in peak-to-peak amplitude of the N1-P2 change response recorded in the A+E and A-alone listening modes will be related to the amount of benefit an individual Hybrid CI user receives from having access to the electrical signal provided by the device.

MATERIALS AND METHODS

Participants

Ten hearing-impaired individuals (8 female, 2 male) who participated previously in the Karsten et al. (2013) study also participated in this study. All ten had significant, high-frequency sensorineural hearing loss bilaterally and were native speakers of American English. They used a Hybrid CI in one ear and, in most cases, a conventional hearing aid in the other ear during most of their waking hours. All ten were implanted at the University of Iowa Hospitals and Clinics at least a year prior to enrolling in this study. Seven used the Nucleus Hybrid S8 device. Three used the Nucleus Hybrid S12 device. Both implants have a 10 mm intracochlear electrode array. The S12 device has 10 intracochlear electrodes. The S8 device has 6 intracochlear electrodes. All study participants used the Nucleus Freedom Hybrid sound processor with the acoustic component (HA) and were programmed using the ACE programming strategy. Table 1 (adapted from Karsten et al. 2013) includes relevant demographic information about the subjects.

Table 1.

Relevant demographics. Adapted from Table 1 in Karsten et al. (2013).

| Participant | Age (yrs) | Duration of Hybrid CI use (yrs) | Implant type | Number of active electrodes | Upper edge of acoustic hearing (Hz) | High vs Low from Karsten et al. (2013) | Everyday MAP: rate (pps) | Everyday MAP: pulse duration (µs) | Everyday MAP: most similar to | Preferred experimental program |
|---|---|---|---|---|---|---|---|---|---|---|
| SE6 | 55 | 7 | S8 | 5 | 530 | L | 1200 | 25 | Meet | Meet |
| SE10 | 72 | 6 | S8 | 6 | 1240 | H | 900 | 25 | Overlap | Meet |
| SE16 | 65 | 5 | S8 | 6 | 770 | L | 1400 | 25 | Overlap | Meet & Overlap |
| SE21 | 54 | 4 | S8 | 6 | 530 | H | 1200 | 25 | Meet | Meet |
| SE26 | 78 | 4 | S8 | 6 | 1760 | H | 2400 | 12 | Meet | None |
| SE27 | 59 | 4 | S8 | 6 | 630 | L | 1400 | 25 | Meet | Meet |
| SE31 | 57 | 4 | S8 | 5 | 630 | L | 900 | 25 | Gap | Meet |
| SE37 | 65 | 2 | S12 | 9 | 1240 | H | 900 | 50 | Meet | None |
| SE38 | 29 | 1.5 | S12 | 10 | 1790 | H | 900 | 50 | Meet | Meet |
| SE40 | 46 | 1 | S12 | 9 | 900 | H | 900 | 25 | Meet | Overlap |

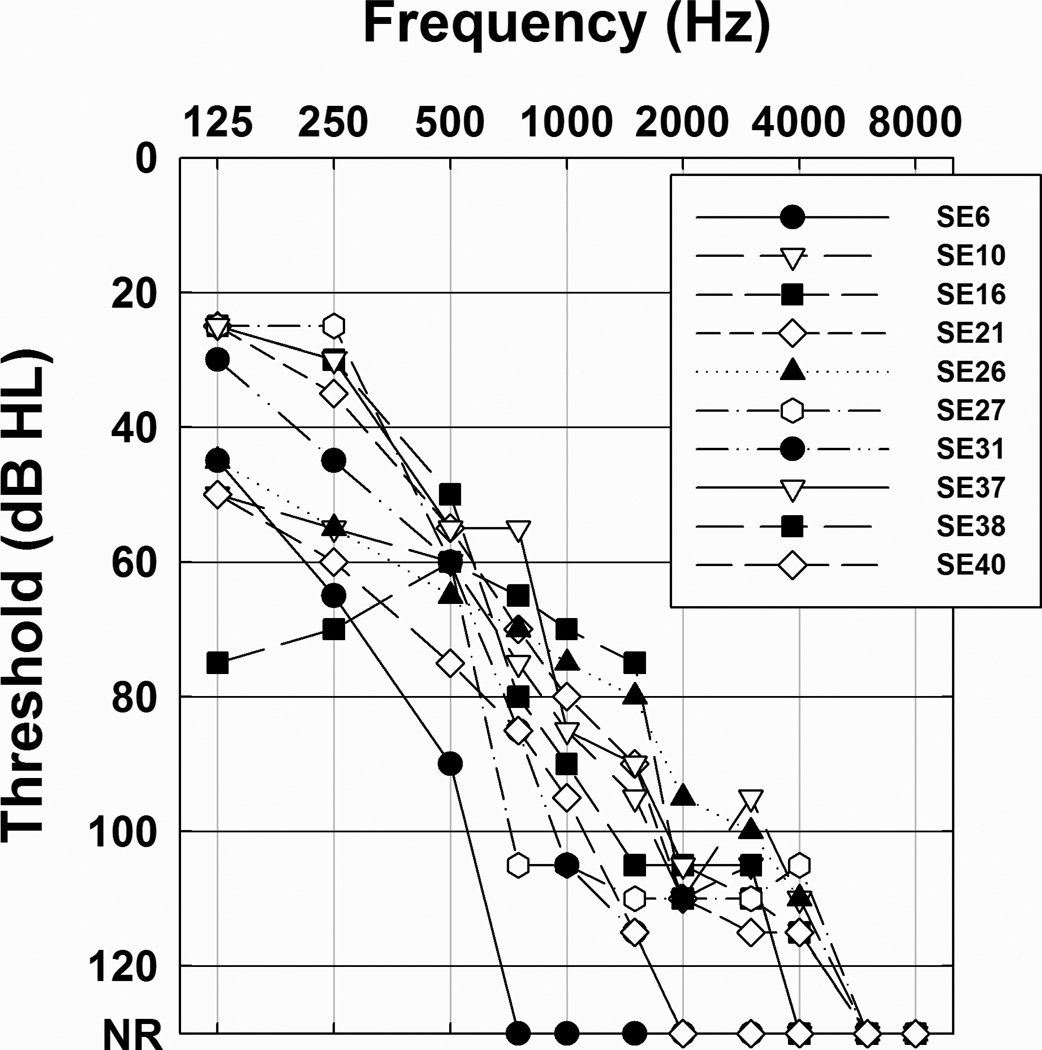

Audiograms for the implanted ear of each study participant are shown in Figure 1. Several of these individuals lost some of their acoustic hearing sensitivity during the first few months following surgery. However, audiometric thresholds had stabilized by the time the studies described in this report began and the thresholds shown in Figure 1 were collected immediately prior to their participation in this study. Karsten et al. (2013) divided the subjects into two groups (High or Low) based on the amount of high-frequency acoustic hearing that they retained. That information, as well as information about the program they used on a daily basis and the program that they preferred after completing the Karsten et al. study, is also included in Table 1.

Figure 1.

Audiometric thresholds for the implanted ear of each of the 10 study participants are shown.

For comparison, we also recruited ten NH individuals (3 male, 7 female) who ranged in age from 21 to 43 years (mean age = 29, SD = 7.53) to participate. All were native speakers of American English and had audiometric thresholds lower than 20 dB HL for octave frequencies between 250 and 4000 Hz. They were tested using the same stimuli and recording equipment used to test the Hybrid CI users. The goal of including data from this group of subjects, who were younger than the experimental group and who had normal hearing, was to provide an optimal or best case control.

The Institutional Review Board at the University of Iowa approved this research project and participants were both paid for their time and reimbursed for costs associated with travel.

Programming procedures and parameters

Three different strategies for programming the speech processor of the CI were compared (for details, see Karsten et al. 2013). Briefly, the fitting process began by making real-ear measures of the output of the acoustic component of the Hybrid CI (i.e., the HA) using an AudioScan Verifit System. Adjustments in the acoustic output were made if necessary to ensure optimal fit to targets derived using NAL-NL1 guidelines (Byrne et al. 2001). The highest frequency in the acoustic filter band where the real-ear measures came within 7 dB of NAL-NL1 targets was designated the “upper edge of acoustic hearing” and the HA was set such that no gain was provided above that frequency. The frequency response characteristics of the acoustic component of the Hybrid device were then held constant and three different experimental programs were created by systematically altering the frequency allocation table used to divide the acoustic frequency range across the available intracochlear electrodes. The three experimental programs are referred to as Meet, Overlap and Gap. In the “Meet” condition, the lowest acoustic frequency processed by the CI was selected to equal the frequency designated as the “upper edge of acoustic hearing”. In the “Overlap” condition, the lowest frequency conveyed electrically was 50% below the upper edge of acoustic hearing for each of the individual study participants. In the “Gap” condition, the lowest frequency processed by the CI was 50% higher than the frequency designated as the “upper edge of acoustic hearing”.
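As a worked illustration of the three conditions, the following Python sketch computes the lowest frequency assigned to electrical stimulation from a participant's upper edge of acoustic hearing. The function name and the reading of "50% below" and "50% higher" as multiplication by 0.5 and 1.5 on a linear Hz scale are assumptions made for illustration; in practice the boundary would also be constrained by the discrete options available in the frequency allocation table.

```python
# Hypothetical helper: the scaling factors are an interpretation of the text,
# not values taken from the clinical programming software.
STRATEGY_FACTORS = {"Meet": 1.0, "Overlap": 0.5, "Gap": 1.5}

def lowest_electric_frequency(upper_edge_hz: float, strategy: str) -> float:
    """Return the lowest frequency (Hz) conveyed electrically for a strategy."""
    if strategy not in STRATEGY_FACTORS:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return upper_edge_hz * STRATEGY_FACTORS[strategy]

# Example using the 770 Hz upper edge listed for participant SE16 in Table 1.
boundaries = {s: lowest_electric_frequency(770.0, s) for s in STRATEGY_FACTORS}
print(boundaries)  # {'Meet': 770.0, 'Overlap': 385.0, 'Gap': 1155.0}
```

Under these assumptions, the same participant would have electrical stimulation begin at 385 Hz in the Overlap program and at 1155 Hz in the Gap program.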

Once the frequency allocation table of the CI speech processor was set, electrical thresholds (T-levels) and maximum comfortable levels (C-levels) were measured using standard clinical programming methods. All ten Hybrid CI users were experienced with making these loudness judgments. T- and C-levels were modified further if a sweep across the electrode array revealed individual electrodes that were either too loud or too soft relative to the others. Finally, the overall loudness of the three experimental MAPs and the balance between the acoustic and electric stimulation modes were verified and modified as necessary using live speech. The general speech processing strategy used for all subjects was the Advanced Combination Encoder (ACE) strategy. The pulse duration and number of spectral maxima selected for use varied across listeners but were based on the settings in the MAP they used for everyday listening prior to starting this study and were kept the same for all three experimental programs.

Each subject participated in a series of four data collection sessions. During the first session, speech perception data were collected using their everyday MAP. One of the three experimental programs (Meet, Gap or Overlap) was then randomly selected and saved to the user’s personal speech processor. Subjects were instructed to use the experimental program exclusively during all of their waking hours and to switch back to their clinical program only if absolutely necessary and only for short periods of time during the 3–4 week acclimatization period. Data logging was not available, but subjects were asked to complete a program use log and estimate the number of hours per day that they used the experimental MAPs. They also were encouraged to record their impressions about sound quality. At the end of this period of acclimatization, they returned to the lab for evoked potential and speech perception testing and were asked to rank the program they had been using on a scale from 1 (very unfavorable) to 10 (very favorable). This process was repeated for each of the three experimental programs. The order of testing for the three experimental conditions was randomized across subjects. Study participants were blinded to specific program type until the completion of the study. At the end of the data collection period, subjects were asked to indicate which of the three experimental MAPs they preferred. That information is provided for reference in Table 1 and discussed in more detail in Karsten et al. (2013).

Evoked Potential Measures

Stimulation parameters

The stimuli used to record the CAEPs were pairs of synthetic vowels presented at 70 dBA SPL from a loudspeaker located approximately 4 feet from the subject at 0 degrees azimuth. One stimulus pair was presented every 2.8 to 3.8 seconds. This relatively slow stimulation rate and the addition of some jitter in the timing between consecutive stimuli helped minimize the effects of adaptation. MATLAB software (MathWorks, Inc. US R2011b) was used to control stimulus timing and trigger the averaging computer.
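The jittered timing can be illustrated with a short Python sketch. It assumes that the 2.8 to 3.8 s range refers to onset-to-onset intervals drawn from a uniform distribution; the distribution, the function name, and the seed are assumptions, since the original timing was controlled in MATLAB and these details are not reported.

```python
import random

def jittered_intervals(n_trials, low_s=2.8, high_s=3.8, seed=0):
    """Draw onset-to-onset intervals (s); uniform jitter is an assumption."""
    rng = random.Random(seed)
    return [rng.uniform(low_s, high_s) for _ in range(n_trials)]

# One 100-trial recording, matching the block size described in the text.
intervals = jittered_intervals(100)

# Expected duration of a 100-trial run under the uniform assumption:
# 100 trials x 3.3 s mean interval = 330 s (about 5.5 minutes).
expected_run_s = 100 * (2.8 + 3.8) / 2
```

Under this assumption, the 200 trials collected per stimulus pair would take roughly 11 minutes of recording time, excluding breaks and rejected trials.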

The vowel stimuli were generated using a Klatt synthesizer with Sound Designer software. Two vowel sounds were created: a synthetic version of /u/ and /i/. Each vowel sound was 400 ms in duration. The formant frequencies for the /u/ vowel sound were: 400 Hz (F1), 1300 Hz (F2) and 2400 Hz (F3). The formant frequencies used to create the /i/ stimulus were 400 Hz (F1), 2600 Hz (F2) and 3000 Hz (F3). The two vowels were RMS balanced to minimize level effects and spliced together digitally to create two 800-ms stimuli: an /u/ that changed to an /i/, and an /i/ that changed to an /u/. The transition between the two vowels was edited manually to eliminate discontinuities.
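The RMS balancing and splicing steps can be sketched in Python as follows. This is only an illustration: sine tones stand in for the Klatt-synthesized vowels, the 16 kHz sampling rate and all function names are hypothetical, and the manual editing of the transition is not reproduced.

```python
import math

FS_HZ = 16000  # hypothetical sampling rate; the text does not report one

def tone(freq_hz, dur_s, amp):
    """Stand-in for a synthetic vowel: a plain sine tone."""
    n = int(round(dur_s * FS_HZ))
    return [amp * math.sin(2 * math.pi * freq_hz * i / FS_HZ) for i in range(n)]

def rms(samples):
    """Root-mean-square level of a sample sequence."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def match_rms(samples, target_rms):
    """Scale samples so that their RMS level equals target_rms."""
    gain = target_rms / rms(samples)
    return [x * gain for x in samples]

# Two 400-ms segments with deliberately different levels.
u_like = tone(400, 0.4, 1.0)
i_like = tone(2600, 0.4, 0.3)

# Balance the second segment to the first, then splice into 800-ms stimuli.
i_balanced = match_rms(i_like, rms(u_like))
u_to_i = u_like + i_balanced
i_to_u = i_balanced + u_like
```

Balancing before splicing keeps the overall level constant across the transition, so that a change response is less likely to reflect a simple level step.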

Recording Parameters

Standard disposable disc electrodes were applied to the scalp and used to record the ongoing EEG activity. In order to minimize contamination of the recording by electrical stimulus artifact, the recording electrode sites were located on the midline or the side of the head contralateral to the CI. Six differential recordings were obtained simultaneously: vertex Cz (+) to contralateral mastoid Mc (−); contralateral temporal lobe Tc (+) to Mc (−), Fpz (+) to Mc (−), Cz (+) to Oz (−); Tc (+) to Oz (−); and Fpz (+) to Oz (−). Two additional recording electrodes placed above and lateral to the eye contralateral to the stimulated ear were used to record eye blinks. An electrode placed off center on the forehead was used as a ground for all of the recording channels. Ongoing EEG activity was amplified (gain = 10,000) and digitally filtered (1–30 Hz) using an optically isolated, Intelligent Hearing System differential amplifier (IHS 8000). A National Instruments Data Acquisition board (DAQ Card-6062E) was used to sample the ongoing EEG activity at a rate of 10,000 samples per second per channel. Custom-designed LabVIEW™ software (National Instruments Corp.) was used to compute and display the averaged waveforms. Two recordings that represented the average of 100 trials were obtained for each stimulus pair. These two recordings were combined offline creating a single evoked potential composed of 200 trials before being down-sampled to a 1 kHz sampling rate and smoothed using a 3-point moving window average. Finally, online artifact rejection was used to eliminate trials containing artifact from eye-blinks or voltage excursions greater than 0.8 mV in any of the recording channels.
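The averaging pipeline described above can be sketched in Python. The sketch is a simplified illustration rather than the LabVIEW implementation: the function names are hypothetical, down-sampling is done by block averaging (the original method is not specified), and the endpoints of the smoothed waveform are left unchanged as an assumption.

```python
def reject_artifacts(trials, limit_mv=0.8):
    """Keep only trials whose absolute voltage never exceeds the limit (mV)."""
    return [t for t in trials if max(abs(v) for v in t) <= limit_mv]

def average(trials):
    """Point-by-point mean across equal-length trials or recordings."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]

def downsample(signal, factor=10):
    """Reduce the sampling rate by block averaging (10 kHz -> 1 kHz)."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal) - factor + 1, factor)]

def smooth3(signal):
    """3-point moving average; first and last samples are left unchanged."""
    out = list(signal)
    for i in range(1, len(signal) - 1):
        out[i] = (signal[i - 1] + signal[i] + signal[i + 1]) / 3
    return out

def process(recording_a, recording_b):
    """Combine two averaged recordings, then down-sample and smooth."""
    combined = average([recording_a, recording_b])  # equal weights assumed
    return smooth3(downsample(combined))
```

Averaging the two 100-trial recordings with equal weights is equivalent to a single 200-trial average only if both recordings contain the same number of accepted trials.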

Recording Procedures

The evoked potential data collection was completed in 2–3 hour blocks. During testing, study participants were seated in a comfortable chair inside a double-wall sound booth. No active listening task was required, but subjects were asked to stay awake and were allowed to read or watch captioned videos during data collection. Subject state was monitored carefully using both audio and video recording systems, and frequent breaks were provided.

The CI users were tested twice: once using both the acoustic and electrical components of the Hybrid CI (A+E) and once using only the acoustic component of the device (A-alone). NH listeners were tested only once. Test ear was selected randomly. For both subject groups, the non-test ear was plugged and muffed during evoked potential testing. The order in which the CAEP responses were recorded was randomized across and within testing sessions. Peak latencies, as well as N1-P2 peak-to-peak amplitudes, were measured offline for each subject and test condition.

Data Analysis

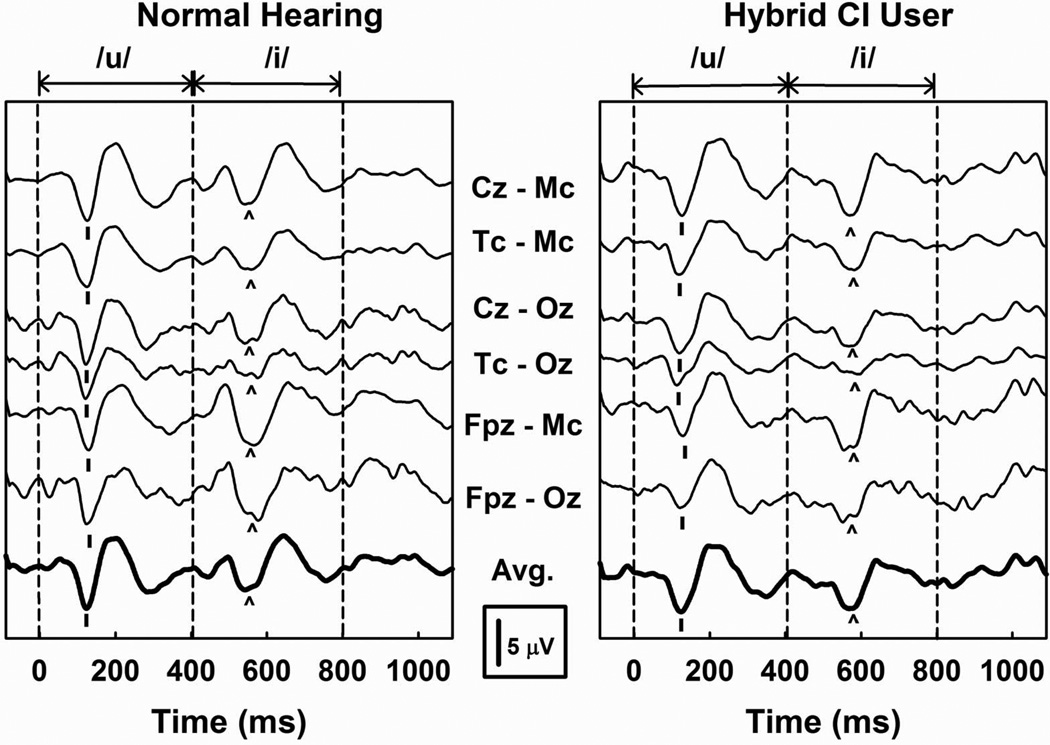

Figure 2 shows a set of evoked potential recordings obtained from an NH listener and an individual Hybrid CI user (SE27). Both subjects were tested using the same stimuli and recording equipment. Vertical dashed lines mark the onset and offset of the stimulus as well as the point where the formant frequencies in the vowel changed. The active and reference electrodes used for each recording are indicated. Typically the largest amplitude responses were recorded from either Cz-Mc or Cz-Oz. The bold waveform at the bottom of each set of recordings is the average of those two recording electrode montages. This composite recording was used to establish the presence or absence of a response, to pick peak latencies, to measure peak-to-peak amplitudes, and to compute normalized amplitude values.

Figure 2.

CAEPs recorded from a NH listener and a Hybrid CI user are shown. The stimulus is an /u/ that changes to an /i/ and is presented in the sound field. Vertical dashed lines indicate stimulus onset, the point where the vowel formant frequencies changed, and the stimulus offset. N1 of the onset response is indicated with a short vertical line. N1 of the change response is indicated with the caret. The parameter is the recording electrode montage.

Figure 2 shows a set of typical waveforms that illustrate the difference in amplitude between onset and change responses, and allows comparison of results from a single Hybrid CI user and “best case” results from a single, younger, NH control subject. Results recorded from the Hybrid CI user are typical in that there is little evidence of contamination of the responses by electrical artifact. The general morphology of the responses from the NH listener and CI users are also similar.
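The composite waveform and the peak-to-peak measurement described in this section can be illustrated with a small Python sketch. The automatic peak picking and the latency window are assumptions introduced for illustration; the text does not state how peaks were selected or which latency windows were used.

```python
def composite(cz_mc, cz_oz):
    """Point-by-point mean of the Cz-Mc and Cz-Oz recordings."""
    return [(a + b) / 2 for a, b in zip(cz_mc, cz_oz)]

def n1_p2_amplitude(waveform, fs_hz, window_ms):
    """N1-P2 peak-to-peak amplitude within a latency window.

    N1 is taken as the minimum in the window and P2 as the maximum at or
    after N1. The window limits are hypothetical analysis parameters.
    """
    start = int(window_ms[0] * fs_hz / 1000)
    stop = int(window_ms[1] * fs_hz / 1000)
    segment = waveform[start:stop]
    n1_idx = min(range(len(segment)), key=segment.__getitem__)
    p2_idx = max(range(n1_idx, len(segment)), key=segment.__getitem__)
    return segment[p2_idx] - segment[n1_idx]

# Toy waveform sampled at 1 kHz: N1 of -2 at 100 ms and P2 of +3 at 180 ms.
toy = [0.0] * 300
toy[100] = -2.0
toy[180] = 3.0
amplitude = n1_p2_amplitude(toy, fs_hz=1000, window_ms=(50, 250))
print(amplitude)  # 5.0
```

The same function could be applied once in a window following stimulus onset and once in a window following the formant change to obtain onset and change response amplitudes.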

Speech Perception Measures

Results from speech perception testing were reported previously by Karsten et al. (2013). Consonant recognition was assessed in quiet using a closed-set /a/-consonant-/a/ format with 16 consonants spoken by four talkers (two male and two female) (Turner et al. 2004). The individual speech tokens were randomized creating a total of 128 test items and presented in the sound field at a comfortable listening level for each participant. Consonant recognition was assessed in the A+E listening mode as well as in both the A-alone and E-alone listening modes.

Speech recognition in babble was also measured using a closed set of 12 randomized spondees spoken by a female talker in the presence of a competing-talker background noise (2 talkers: one female and one male). The speech and babble were presented from the same speaker. The signal-to-noise ratio required for each subject to achieve a score of 50% correct (SNR-50) was determined using an adaptive procedure in which the level of the speech stimulus was fixed at 69 dB SPL and the level of the background noise was systematically varied (Turner et al. 2004). Participants completed a practice test and then three replications of the test were recorded and averaged together. Feedback was not provided. Performance on this speech-in-noise task was assessed only in the A+E listening mode.

Statistical Analyses

A series of ANOVAs were used to evaluate the effect of programming strategy (Meet, Gap and Overlap), hearing status (NH vs CI user), listening mode (A+E vs A-alone), stimulus type (/u/ vs /i/) and evoked potential response type (Onset vs Change) on measures of N1-P2 amplitude. Repeated measures ANOVAs were used to account for within-subject correlations when appropriate. Depending on the comparison of interest, paired or unpaired t-tests were used to evaluate statistical significance and direction of the changes observed. Post hoc comparisons were computed using a Tukey-Kramer adjustment (α = 0.05) as necessary to adjust for multiple comparisons. Linear regression analysis was used to compare evoked potential peak-to-peak amplitudes with scores on the consonant recognition task and with the SNR-50 score. All of the statistical analyses were computed using SAS version 9.3.
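For readers unfamiliar with the regression step, the following Python sketch fits an ordinary least-squares line and computes the Pearson correlation coefficient. It is a minimal illustration, not the SAS procedure used in the study; the function name is hypothetical and the data values are invented solely to exercise the code.

```python
import math

def linear_fit(x, y):
    """Return (slope, intercept, r) for a least-squares line through (x, y)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r

# Invented example values (NOT study data): evoked potential amplitude
# on the x-axis and percent-correct consonant recognition on the y-axis.
amplitude_uv = [2.0, 3.0, 4.0, 5.0, 6.0]
percent_correct = [50.0, 62.0, 58.0, 70.0, 75.0]
slope, intercept, r = linear_fit(amplitude_uv, percent_correct)
```

Testing whether the correlation differs from zero additionally requires comparing the statistic t = r·sqrt((n − 2)/(1 − r²)) against a t distribution with n − 2 degrees of freedom, which is not implemented here.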

RESULTS

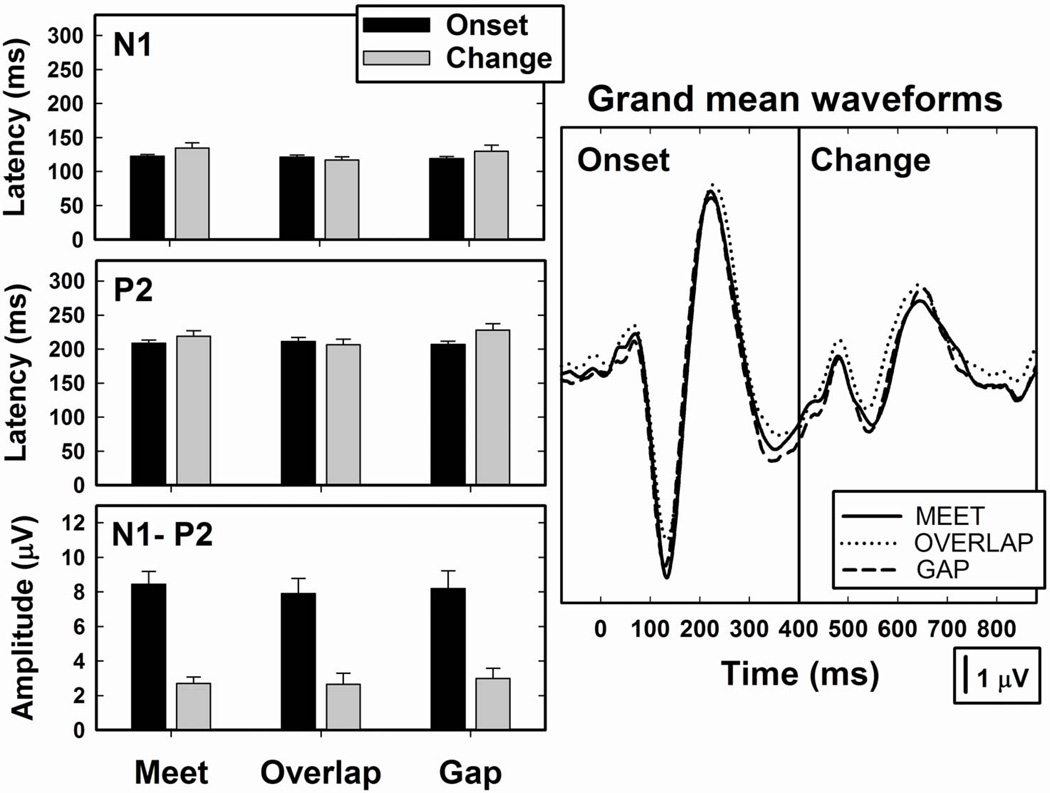

Our first goal was to assess the effect that programming strategy (Meet, Gap, Overlap) had on the evoked potential recordings. The study was designed to test the hypothesis that the experimental programming strategy that resulted in the largest amplitude change responses would be the programming strategy that also resulted in the best performance on the speech perception tasks. The panel on the right in Figure 3 shows grand mean waveforms recorded from the 10 Hybrid CI users. These grand mean recordings were obtained using the A+E listening mode. The /u-i/ and /i-u/ stimuli have been combined. Clearly, the effect of programming strategy on the grand mean waveforms was minimal.

Figure 3.

Grand mean waveforms constructed using data from all 10 Hybrid CI users are shown. The parameter is the programming strategy: Meet, Overlap, Gap. The bar graphs show mean peak latency and peak-to-peak amplitude values (+ 1 SE) for both the onset and change responses. The results reflect the average of responses recorded using both the /u-i/ and /i-u/ stimulus pairs.

The bar graphs on the left side of Figure 3 show mean latency and peak-to-peak amplitude measures for both the onset and change responses. Error bars give an indication of the variance in the individual data. The choice of programming strategy did not have a significant effect on N1-P2 amplitude for either the onset (F(2,9) = 0.23, p=0.798) or change (F(2,9) = 0.12, p=0.888) responses. Regardless of programming mode, onset responses were found to have significantly larger peak-to-peak amplitudes (F(1,9) = 51.91, p < 0.0001) and shorter peak latencies (F(1,9) = 5.93, p = 0.0377) than change responses.

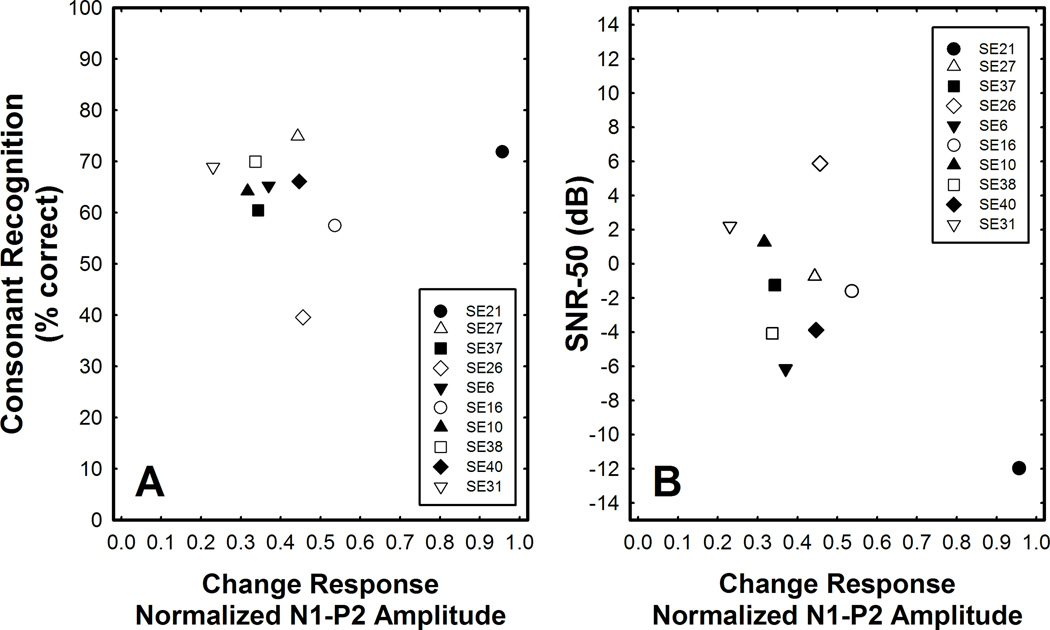

Figure 4 shows the relationship between normalized change response amplitude and performance. In both graphs, the independent variable is the change response amplitude measured from N1 to P2 divided by the peak-to-peak amplitude of the onset response. A normalized amplitude of one indicates that the peak-to-peak amplitude of the change response is the same as that of the onset response. Panel A shows the relationship between the normalized amplitude of the change response and score on the consonant recognition task. Panel B shows the relationship between the normalized evoked potential amplitude measures and SNR-50. Note that positive SNRs indicate worse performance. The speech perception scores are from Karsten et al. (2013) and in this figure results from all three programming strategies have been averaged. Similarly, the change response amplitudes that are plotted reflect the average of the results obtained using each of the experimental MAPs.

Figure 4.

The relationship between normalized amplitude of the change response and performance on the two different measures of speech perception is shown. Panel A shows CNC word recognition scores measured in quiet. Panel B shows SNR that results in 50% correct performance on a spondee recognition task. In both graphs, the speech perception and evoked potential results reflect the average of results measured using the three experimental programs (Meet, Overlap, Gap).

Linear regression analysis was used to assess the relationship between the normalized change response amplitudes and these two measures of speech perception. There is one outlier point in both graphs (SE21). If that subject’s data are excluded, the correlation between normalized change response amplitude and performance was not statistically significant for either speech perception task (i.e., p > 0.05).
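The normalization and regression described for Figure 4 can be sketched as follows. This is an illustrative example only: the function names and all numeric values are hypothetical, and the study's own analysis was run in SAS. The normalized amplitude is the change response N1-P2 amplitude divided by the onset response N1-P2 amplitude, and the regression is an ordinary least-squares fit of speech score on that ratio.

```python
import math
from statistics import mean


def normalized_amplitude(change_uv, onset_uv):
    """Change-response N1-P2 amplitude as a fraction of the onset response."""
    if onset_uv <= 0:
        raise ValueError("onset amplitude must be positive")
    return change_uv / onset_uv


def least_squares(x, y):
    """Return (slope, intercept, r) for a simple linear regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / math.sqrt(sxx * syy)  # Pearson correlation coefficient
    return slope, intercept, r


# Hypothetical per-listener amplitudes (microvolts) and percent-correct scores;
# these are NOT data from the study.
onset = [4.0, 4.0, 4.0, 4.0]
change = [1.0, 2.0, 3.0, 4.0]
scores = [40.0, 60.0, 50.0, 70.0]

norm = [normalized_amplitude(c, o) for c, o in zip(change, onset)]
slope, intercept, r = least_squares(norm, scores)
print(f"slope = {slope:.1f}, intercept = {intercept:.1f}, r = {r:.2f}")
```

A value of 1.0 in `norm` corresponds to a change response as large as the onset response, matching the definition given for Figure 4.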

Karsten et al. (2013) found significant performance differences for the “Meet” vs “Overlap” programming strategies. Paired t-tests were used to determine whether these two programming strategies also resulted in significant differences in the normalized change response amplitudes and whether responses recorded using the preferred programming strategy were significantly larger in amplitude than responses recorded using the experimental strategy that participants ranked lowest. Neither comparison revealed a significant difference in normalized change response amplitude measures (p > 0.05).

Karsten et al. (2013) also grouped the subjects based on their residual acoustic hearing (High vs Low). Table 1 lists the four study participants that were considered to have less acoustic hearing and those that had residual acoustic hearing thresholds for higher frequency stimuli. It also shows which subjects used the shorter (S8) electrode array and which used the longer (S12) array. Neither method of grouping the subjects resulted in significant differences in performance (Karsten et al. 2013) nor did t-tests reveal significant differences in mean normalized change response amplitude for either method of dividing the subject group (p > 0.05).

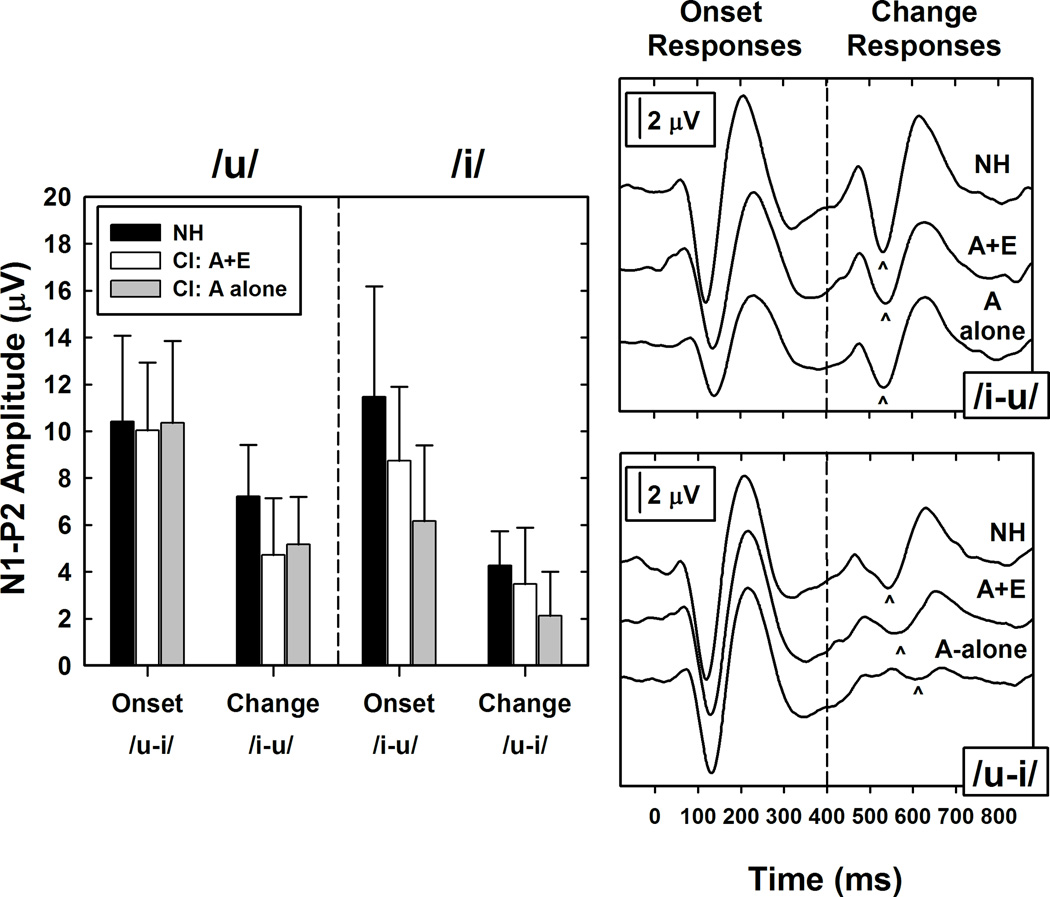

Our second goal was to determine whether the change response could be used to provide an estimate of how much benefit an individual Hybrid user received from having access to the portion of the auditory signal that was processed electrically. We hypothesized that CAEP recordings obtained using the A+E listening mode would be larger than similar measures obtained using the A-alone listening mode and that the difference in amplitude between these two listening modes would correlate with the performance difference on the speech perception measures. The grand mean waveforms on the right side of Figure 5 were recorded using the “Meet” programming strategy and show how differences in the listening mode affected the overall morphology of the onset and change responses. Recordings obtained using /u–i/ are plotted separately from recordings obtained using /i–u/. For comparison, similar measures obtained from a group of 10 younger, normal hearing listeners are also shown. The comparison data represent a best-case scenario against which to evaluate the data recorded from the CI users.

Figure 5.

Grand mean waveforms constructed using data from all 10 Hybrid CI users tested while using the “Meet” programming strategy are shown alongside similar recordings obtained from NH listeners. For CI users, results obtained in the A+E and A-alone listening modes are shown. The upper panel shows grand mean waveforms recorded using /i-u/. The lower panel shows results obtained using /u-i/. The bar graphs show mean peak-to-peak amplitude measures (+1 SE) recorded from NH listeners and from CI users tested in both listening modes (A+E and A-alone).

The grand mean waveforms obtained from the NH and Hybrid CI users share many of the same morphologic features and show no evidence of significant contamination by electrical stimulus artifact. When the /i-u/ stimulus was used, onset responses recorded from both the CI users and from the younger, NH control group are very similar. This is likely because the /u/ is dominated by low-frequency energy. When the /u-i/ stimulus was used, grand mean waveforms recorded from the Hybrid CI users are slightly smaller than similar recordings obtained from the NH control group regardless of the stimulation mode (A+E vs A-alone). Additionally, the change responses recorded from Hybrid CI users in response to the /u-i/ stimulus pair were very small. However, to the extent that the grand mean data represent relevant trends in the group data, the impact of having access to the electrical signal provided by the CI is most notable in this condition (i.e., compare change response amplitude in the A+E vs A-alone listening mode for the /u-i/ stimulus pairing in Figure 5).

The bar graph on the left side of Figure 5 shows mean N1-P2 peak-to-peak amplitude data for the NH listeners and for the CI users tested both in the A+E and A-alone listening modes. Error bars reflect variance in the individual data. These results are grouped according to the response type (onset vs change) and according to which vowel was used to evoke that response (/u/ vs /i/).

The results shown in Figure 5 were analyzed using a series of ANOVAs that confirmed the trends illustrated in the grand mean waveforms. When the dependent variable was peak-to-peak amplitude and comparisons were made between results obtained from the NH subjects and similar recordings from the CI users tested in the A+E listening mode, significant main effects were found for response type (onset vs change). That is, onset responses were found to be significantly larger than change responses for both subject groups (Hybrid CI users: F(1,9) = 64.3, p<0.0001; NH listeners: F(1,9) = 44.21, p< 0.0001) and for both vowels (/i/: F(1,19) = 45.54, p < 0.0001; /u/: F(1,19) = 68.51, p < 0.0001). Peak-to-peak onset response amplitudes recorded from the CI users (tested in the A+E listening mode) were not found to be significantly different from onset response N1-P2 amplitudes recorded from the NH subjects (F(1,18) = 1.5, p=0.2370). Change response amplitudes, however, were significantly different (F(1,18)=4.88, p=0.0403): larger change response amplitudes were obtained from NH listeners than from Hybrid CI users (t = −2.21, p = 0.0403). Further testing showed that there was no group difference in change response amplitude for the /u-i/ stimulus (p > 0.05). There was a significant difference in change response amplitude measures recorded using the /i-u/ stimulus pair (F(1,18)=6.22, p=0.0226).

For the CI users, N1-P2 amplitudes evoked using the two different listening modes were also compared using ANOVA. When the /i/ stimulus was used to elicit either the onset response (e.g., in the /i-u/ pairing) or the change response (e.g., in the /u-i/ pairing), significant differences in amplitude were found between results obtained in the A+E and A-alone listening modes (Onset /i-u/: F(1,9) =10.19, p = 0.011; Change /u-i/: F(1,9) =6.54, p = 0.0308). Post-hoc tests revealed that response amplitudes were larger for the A+E listening mode compared to the A-alone listening mode (Onset /i-u/: t = 3.19, p = 0.0110; Change /u-i/: t = 2.56, p = 0.0308).

When the /u/ stimulus was used, no significant difference was found between the peak-to-peak amplitude of either the onset or change responses when testing was conducted in the A+E listening mode compared to the A-alone listening mode (p > 0.05).
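Several of the two-level comparisons above report both an F statistic and a follow-up t statistic with the same p value. For a comparison with a single numerator degree of freedom, F is expected to equal t squared, so the reported pairs can be checked for internal consistency. The short sketch below does this using the values quoted in the text; the function name and the rounding tolerance are choices made for this illustration.

```python
import math

# (label, reported F, reported t) copied from the Results text.
reported = [
    ("NH vs CI, change response", 4.88, -2.21),
    ("A+E vs A-alone, onset /i-u/", 10.19, 3.19),
    ("A+E vs A-alone, change /u-i/", 6.54, 2.56),
]


def consistent(f_value, t_value, tol=0.01):
    """True if sqrt(F) matches |t| within a tolerance that allows for rounding."""
    return abs(math.sqrt(f_value) - abs(t_value)) <= tol


checks = {label: consistent(f, t) for label, f, t in reported}
for label, ok in checks.items():
    print(f"{label}: {'consistent' if ok else 'check values'}")
```

All three pairs agree to within rounding, which is what one would expect if each t-test is the two-level equivalent of the corresponding ANOVA.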

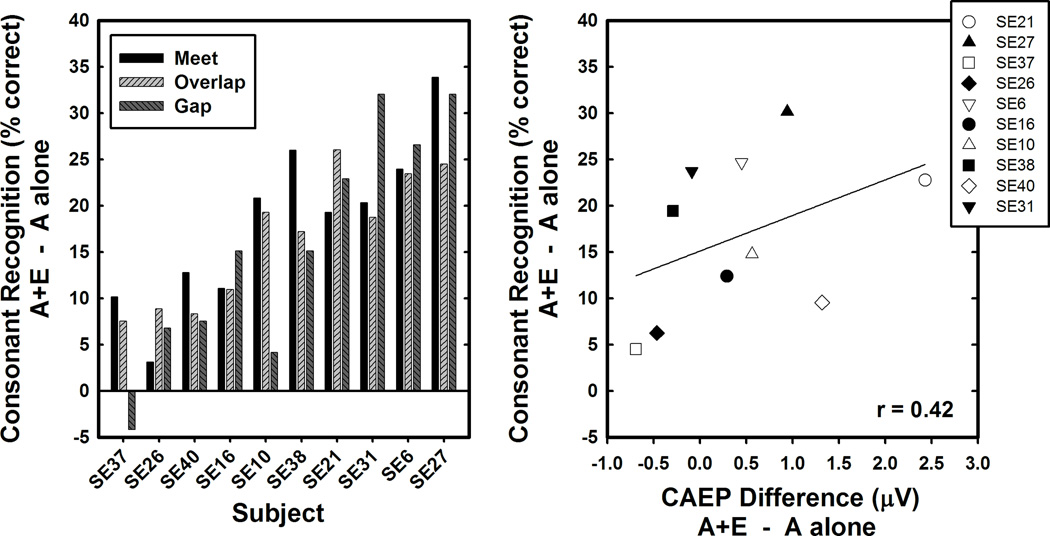

Figure 6 allows comparison of the relative benefit that Hybrid CI users received by having access to the electrical signal provided by the cochlear implant. The panel on the left side of Figure 6 shows the difference in the consonant recognition scores measured in the A+E and A-alone listening modes for all ten study participants and for each of the three experimental MAPs. Positive values indicate that the A+E mode resulted in improved performance on the consonant recognition task when compared to the A-alone listening mode. These data are derived from the speech perception data initially reported as group data by Karsten et al. (2013). Differences across subjects are apparent. For example, participants SE37 and SE26 experienced an improvement in overall performance of less than 10% regardless of the program used. For SE27, however, the addition of the electrical signal provided by the cochlear implant resulted in an improvement in performance on the same consonant recognition task of about 30%. Unfortunately, the SNR-50 data were collected only in the A+E listening mode, making it impossible to compute “benefit” based on this measure of speech recognition.

Figure 6.

The left panel shows the difference between consonant recognition scores for each Hybrid CI user tested in quiet using the A+E listening mode and the A-alone listening mode. The bars show results obtained using each of the three experimental programs. The scatterplot on the right shows the relationship between average benefit in percent correct on the consonant recognition task and mean change response amplitude that resulted from subtraction of the recordings made in the A-alone listening mode from similar measures obtained using the A+E listening mode.

The panel on the right in Figure 6 shows the difference in perception of consonants in these two listening modes, measured in percent correct, plotted on the y-axis and the difference in peak-to-peak amplitude of the N1-P2 cortical change response in the two listening modes (A+E minus A-alone) plotted on the x-axis. Karsten et al. (2013) reported that programming strategy did not have a significant effect on performance measured using the consonant recognition test. Results from the current study did not reveal a significant effect of programming strategy on the evoked potential data. Therefore, in constructing Figure 6 we opted to combine the results obtained using all three programming strategies. A positive correlation (r = 0.42) between the evoked potential and speech perception data was found. However, the results of the regression analysis were not statistically significant (p > 0.05).
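To see why a correlation of r = 0.42 falls short of significance in a sample this small, the correlation can be converted to a t statistic using t = r·sqrt(n − 2)/sqrt(1 − r²). The sketch below assumes one averaged data point per participant (n = 10, so 8 degrees of freedom); that assumption and the function names are ours, and the critical value 2.306 is the standard two-tailed table value for α = 0.05 with 8 degrees of freedom.

```python
import math


def t_from_r(r, n):
    """Convert a Pearson correlation to a t statistic with n - 2 df."""
    if n < 3 or not -1.0 < r < 1.0:
        raise ValueError("need n >= 3 and -1 < r < 1")
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def critical_r(t_crit, df):
    """Smallest |r| that reaches significance for a given critical t and df."""
    return t_crit / math.sqrt(t_crit ** 2 + df)


N_SUBJECTS = 10      # assumption: one averaged point per Hybrid CI user
R_REPORTED = 0.42    # correlation reported for Figure 6
T_CRIT_DF8 = 2.306   # two-tailed critical t, alpha = 0.05, df = 8 (table value)

t_value = t_from_r(R_REPORTED, N_SUBJECTS)
significant = abs(t_value) > T_CRIT_DF8
r_needed = critical_r(T_CRIT_DF8, N_SUBJECTS - 2)

print(f"t({N_SUBJECTS - 2}) = {t_value:.2f}, significant: {significant}")
print(f"|r| needed for p < 0.05 with n = {N_SUBJECTS}: {r_needed:.3f}")
```

Under these assumptions the t statistic is about 1.31, well below the critical value, and a correlation of roughly 0.63 or larger would have been needed to reach significance with ten listeners.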

DISCUSSION

This report describes CAEPs recorded from Nucleus Hybrid CI users. This is a growing population of individuals and while currently Hybrid CIs are primarily used for adults, it is likely that the technology will soon be available for children. The stimuli were pairs of synthetic vowels that were concatenated and presented in the sound field and the study was designed to parallel an earlier study by Martin and Boothroyd (2000). This report was also based on evoked potential data recorded from the same subjects who participated in the Karsten et al. (2013) study. Our focus was on exploring the feasibility of using an objective measure of neural response to a speech stimulus to assess the benefit from the use of a Hybrid CI. Our goals were to determine whether these evoked potentials could be used to help predict which of the three experimental methods of programming the speech processor of the Hybrid CI system would result in optimal performance, and/or whether these evoked responses might serve as a measure of the overall benefit that individual Hybrid CI users derive from the combination of acoustic and electric hearing in the same ear.

Several investigators have described recordings of electrically evoked potentials in CI users (e.g., Friesen & Tremblay 2006; Martin 2007; Brown et al. 2008). What is novel about this study is the focus on how individuals with residual acoustic hearing are able to combine acoustic and electric information presented to the same ear. We are not aware of another study that reports CAEPs recorded using a combination of acoustic and electrical stimulation. We were able to record both N1-P2 onset and change responses from this population and we report comparisons between these measures and similar measures obtained from a group of younger, normal hearing control subjects.

The results of this study generally did not support our stated hypotheses. Karsten et al. (2013) reported small but statistically significant differences in performance between the “Meet” and “Overlap” strategies but the evoked potential data obtained from listeners tested using these two programming strategies did not show significant differences. Also, correlations between normalized change response amplitudes and overall performance with the Hybrid CI were not statistically significant (see Figure 4). While disappointing, in retrospect, this is not particularly surprising given the small differences in perception among programming strategies. Perhaps programming strategies that resulted in more extremes in performance would have resulted in significant differences on the evoked potential tests. However, if the ACC is only sensitive to differences in programming strategies that result in large differences in the outcome, the clinical utility of the measure could also be called into question.

It is rare that any evoked potential accurately predicts speech perception test results. Additionally, the stimuli used to evoke the CAEP measures consisted of a single pair of synthetic vowel contrasts (e.g., /u-i/ and /i-u/) and the evoked potential recordings were obtained without a competing background noise. In retrospect, a study design that included a measure of evoked responses presented in noise and/or using a wider range of stimuli might have been preferable.

Figure 5 shows the relatively small effect that the choice of listening mode had on the evoked potential data and Figure 6 contrasts results obtained using the evoked potential methods with perceptual measures. The speech perception data illustrate the variance across subjects in terms of how much they benefit from having access to the electrical signal provided by the Hybrid device. Unfortunately, having access to that same electrical signal, while enhancing the amplitude of the cortical change responses for the majority of subjects, was not found to be predictive of the magnitude of benefit they received on the consonant recognition test when results obtained in the A+E vs A-alone listening modes were compared.

We know that evoked responses recorded using acoustic stimuli presented in the sound field and processed either by a hearing aid or a cochlear implant can be problematic. For example, Billings et al. (2011) and Tremblay et al. (2006) have demonstrated that hearing aids can introduce noise and that the amplitude of the N1-P2 response is more dependent on the signal-to-noise ratio than on signal intensity, and the differences in CAEPs recorded using stimuli that have been processed by either a hearing aid or a cochlear implant may be level dependent. Clearly, these are valid concerns that impose limitations on how we might interpret the results. For that reason we attempted to maintain similar processing for comparisons. We used a study design where the acoustic component used for all three programs was identical and the only difference in the way signals were processed electrically was related to how the frequency allocation table was configured. Also, we did not modify any of the compression characteristics or otherwise change the input or output dynamic range of either the acoustic or electrical components of the Hybrid device. Furthermore, review of the literature also provides examples of studies where comparison of measures obtained with and without use of a hearing aid or cochlear implant did seem to inform clinical practice, even if only at a fairly macro level (e.g., Sharma et al. 2002; Purdy et al. 2005; Souza & Tremblay 2006; Golding et al. 2007; Pearce et al. 2007).

CAEPs will not (nor should they) replace behavioral measures. However, that does not mean there is not a role for CAEPs in clinical practice. In this report, our focus was on exploring whether the ACC could help support the choice of programming strategy for an individual Hybrid CI user or, alternatively, help confirm benefit from use of the electrical component of the device. The results suggest that the ACC, evoked using the stimulation and recording parameters tested, is not sensitive enough to accomplish that goal. Despite generally negative findings, results of this study do provide normative data and explore a method that, at least on the surface, seems to provide a viable means of assessing more than simple detection of sound, but perhaps something closer to discrimination. Won, Clinard, Kwon, et al. (2011) used rippled noise stimuli to record N1-P2 change responses from CI users. They report significant correlations between the CAEP data and behavioral measures of spectral ripple discrimination and between spectral ripple discrimination thresholds and speech perception. While our focus has been on Hybrid CI users, the techniques we describe have been, and could further be, adapted to address a wide range of potentially clinically relevant questions. For example, Kirby and Brown (in press) report measures of the ACC evoked using rippled noise stimuli with contrasting spectra, similar to those described by Won and colleagues (2011). They showed that ACC amplitude measures were affected by the use of frequency compression in a hearing aid and that changes in the frequency compression ratio directly influenced the amplitude of the ACC recorded using rippled noise stimuli. While the current study describes essentially negative findings, our hope is that techniques similar to those used in this report and in the Won et al. (2011) and the Kirby and Brown (in press) studies will serve as a small step toward identifying a larger role for CAEPs in clinical practice.

ACKNOWLEDGMENTS

The authors are especially appreciative of the study participants who were gracious enough to participate willingly in the long and rather tedious studies outlined in this report. We are also thankful to Jake Oleson for his statistical expertise. This work was supported in part by grants from the NIH/NIDCD (RC1 DC010696 and P50 DC000242).

Contributor Information

Carolyn Brown, Email: carolyn-brown@uiowa.edu.

Eun Kyung Jeon, Dept. Communication Sciences and Disorders University of Iowa Iowa City, IA.

Li-Kuei Chiou, Dept. Communication Sciences and Disorders University of Iowa Iowa City, Iowa.

Benjamin Kirby, Dept. Communication Sciences and Disorders University of Iowa Iowa City, IA.

Sue Karsten, Dept. Communication Sciences and Disorders University of Iowa Iowa City, IA.

Christopher Turner, Dept. Communication Sciences and Disorders University of Iowa Iowa City, IA.

Paul Abbas, Dept. Communication Sciences and Disorders University of Iowa Iowa City, IA.

REFERENCES

- Billings CJ, Tremblay KL, Miller CW. Aided cortical auditory evoked potentials in response to changes in hearing aid gain. Int J Audiol. 2011;50(7):459–467. doi: 10.3109/14992027.2011.568011.

- Brown CJ, Etler C, He S, et al. The electrically evoked auditory change complex: preliminary results from nucleus cochlear implant users. Ear Hear. 2008;29(5):704–717. doi: 10.1097/AUD.0b013e31817a98af.

- Byrne D, Dillon H, Ching T, et al. NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12:37–51.

- Cochlear Hybrid Desk Reference Guide. www.Cochlear.com/US.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49:912–919. doi: 10.3109/14992027.2010.509113.

- Friesen LM, Tremblay KL. Acoustic change complexes recorded in adult cochlear implant listeners. Ear Hear. 2006;27(6):678–685. doi: 10.1097/01.aud.0000240620.63453.c3.

- Gantz BJ, Turner C, Gfeller KE. Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus hybrid implant. Audiol Neurootol. 2006;11(suppl 1):63–68. doi: 10.1159/000095616.

- Gantz BJ, Hansen MR, Turner CW, et al. Hybrid 10 clinical trial. Audiol Neurootol. 2009;14(suppl 1):32–38. doi: 10.1159/000206493.

- Golding M, Pearce W, Seymour J, et al. The relationship between obligatory cortical auditory evoked potentials (CAEPs) and functional measures in young infants. J Am Acad Audiol. 2007;18:117–125. doi: 10.3766/jaaa.18.2.4.

- Gstoettner W, Kiefer J, Baumgartner W-D, et al. Hearing preservation in cochlear implantation for electric acoustic stimulation. Acta Otolaryngol. 2004;124:348–352. doi: 10.1080/00016480410016432.

- Hyde M. The N1 response and its applications. Audiol Neurootol. 1997;2:281–307. doi: 10.1159/000259253.

- Incerti P, Ching T, Cowan R. A Systematic Review of Electric-Acoustic Stimulation: Device Fitting Ranges, Outcomes, and Clinical Fitting Practices. Trends Amplif. 2013;17(1):3–26. doi: 10.1177/1084713813480857.

- Karsten SA, Turner CW, Brown CJ, et al. Optimizing the combination of acoustic and electric hearing in the implanted ear. Ear Hear. 2013;34(2):142–150. doi: 10.1097/AUD.0b013e318269ce87.

- Kirby BJ, Brown CJ. Effects of Nonlinear Frequency Compression on ACC Amplitude and Listener Performance. Ear Hear. In press. doi: 10.1097/AUD.0000000000000177.

- Lenarz T, Stöver T, Buechner A, et al. Hearing conservation surgery using the Hybrid-L electrode. Results from the first clinical trial at the Medical University of Hannover. Audiol Neurootol. 2009;14(suppl 1):22–31. doi: 10.1159/000206492.

- Martin BA. Can the acoustic change complex be recorded in an individual with a cochlear implant? Separating neural responses from cochlear implant artifact. J Am Acad Audiol. 2007;18(2):126–140. doi: 10.3766/jaaa.18.2.5.

- Martin BA, Boothroyd A. Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J Acoust Soc Am. 2000;107(4):2155–2161. doi: 10.1121/1.428556.

- Martin BA, Tremblay KL, Korczak P. Speech evoked potentials: From the laboratory to the clinic. Ear Hear. 2008;29(3):285–313. doi: 10.1097/AUD.0b013e3181662c0e.

- Nikjeh DA, Lister JJ, Frisch SA. Preattentive cortical-evoked responses to pure tones, harmonic tones, and speech: Influence of music training. Ear Hear. 2009;30(4):432–446. doi: 10.1097/AUD.0b013e3181a61bf2.

- Pearce W, Golding M, Dillon H. Cortical auditory evoked potentials in the assessment of auditory neuropathy: two case studies. J Am Acad Audiol. 2007;18(5):380–390. doi: 10.3766/jaaa.18.5.3.

- Ponton CW, Eggermont JJ, Kwong B, et al. Maturation of human central auditory system activity: evidence from multi-channel evoked potentials. Clin Neurophysiol. 2000;111(2):220–236. doi: 10.1016/s1388-2457(99)00236-9.

- Purdy SC, Katsch R, Dillon H, et al. A sound foundation through early amplification. Chicago: Phonak AG; 2005. Aided cortical auditory evoked potentials for hearing instrument evaluation in infants; pp. 115–127.

- Shannon RV, Fu Q, Galvin J, et al. In Cochlear implants auditory prostheses and electric hearing. New York: Springer; 2004. Speech perception with cochlear implants; pp. 334–376.

- Sharma A, Dorman MF. Central auditory development in children with cochlear implants: clinical implications. Adv Otorhinolaryngol. 2006;64:66–88. doi: 10.1159/000094646.

- Sharma A, Dorman MF, Spahr AJ. Rapid development of cortical auditory evoked potentials after early cochlear implantation. Neuroreport. 2002;13:1365–1368. doi: 10.1097/00001756-200207190-00030.

- Small SA, Werker JF. Does the ACC have potential as an index of early speech discrimination ability? A preliminary study in 4-month-old infants with normal hearing. Ear Hear. 2012;33(6):e59–e69. doi: 10.1097/AUD.0b013e31825f29be.

- Souza PE, Tremblay KL. New perspectives on assessing amplification effects. Trends Amplif. 2006;10:119–143. doi: 10.1177/1084713806292648.

- Spahr AJ, Dorman MF. Performance of subjects fit with the Advanced Bionics CII and Nucleus 3G cochlear implant devices. Arch Otolaryngol Head Neck Surg. 2004;130:624–628. doi: 10.1001/archotol.130.5.624.

- Tremblay KL, Billings CJ, Friesen LM, et al. Neural representation of amplified speech sounds. Ear Hear. 2006;27(2):93–103. doi: 10.1097/01.aud.0000202288.21315.bd.

- Tremblay KL, Kraus N. Auditory training induces asymmetrical changes in cortical neural activity. J Speech Lang Hear Res. 2002;45:564–572. doi: 10.1044/1092-4388(2002/045).

- Turner CW. Integration of acoustic and electrical hearing. J Rehabil Res Dev. 2008;45(5):769–778. doi: 10.1682/jrrd.2007.05.0065.

- Turner CW, Gantz BJ, Vidal C, et al. Speech recognition in noise for cochlear implant listeners: Benefits of residual acoustic hearing. J Acoust Soc Am. 2004;115(4):1729–1735. doi: 10.1121/1.1687425.

- Wilson BS, Dorman MF. The surprising performance of present-day cochlear implants. IEEE Trans Biomed Eng. 2007;54:969–972. doi: 10.1109/TBME.2007.893505.

- Wolfe JC, Schafer EC. Programming Cochlear Implants. 2nd revised ed. San Diego, CA: Plural Publishing Inc; 2015.

- Won JH, Clinard CG, Kwon S, et al. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol. 2011;12:375–393. doi: 10.1007/s10162-011-0257-4.

- Woodson EA, Reiss LA, Turner CW, et al. The Hybrid cochlear implant: a review. Adv Otorhinolaryngol. 2010;67:125–134. doi: 10.1159/000262604.

- Wunderlich JL, Cone-Wesson BK. Maturation of CAEP in infants and children: a review. Hear Res. 2006;212(1–2):212–223. doi: 10.1016/j.heares.2005.11.008.