Abstract

Wireless synchronization of the digital signal processing (DSP) features between two hearing aids in a bilateral hearing aid fitting is a fairly new technology. This technology is expected to preserve the differences in time and intensity between the two ears by co-ordinating the bilateral DSP features such as multichannel compression, noise reduction, and adaptive directionality. The purpose of this study was to evaluate the benefits of wireless communication as implemented in two commercially available hearing aids. More specifically, this study measured speech intelligibility and sound localization abilities of normal hearing and hearing impaired listeners using bilateral hearing aids with wireless synchronization of multichannel Wide Dynamic Range Compression (WDRC). Twenty subjects participated; 8 had normal hearing and 12 had bilaterally symmetrical sensorineural hearing loss. Each individual completed the Hearing in Noise Test (HINT) and a sound localization test with two types of stimuli. No specific benefit from wireless WDRC synchronization was observed for the HINT; however, hearing impaired listeners had better localization with the wireless synchronization. Binaural wireless technology in hearing aids may improve localization abilities although the possible effect appears to be small at the initial fitting. With adaptation, the hearing aids with synchronized signal processing may lead to an improvement in localization and speech intelligibility. Further research is required to demonstrate the effect of adaptation to the hearing aids with synchronized signal processing on different aspects of auditory performance.

Key words: binaural hearing, sound localization, speech intelligibility, hearing aids, wireless technology

Introduction

Difficulty in understanding speech in noisy/reverberant backgrounds is the main complaint of individuals with sensorineural hearing loss. Amongst the benefits of binaural hearing is the ability to accurately locate different sounds and improve speech intelligibility in noisy environments.1 Binaural hearing enhances speech understanding in noise because of several factors such as head diffraction, which causes the signal-to-noise ratio (SNR) to be greater at one ear than the other when noise and target speech arrive from different directions; binaural squelch, which refers to the role of the central auditory system in taking advantage of the amplitude and timing differences [interaural level differences (ILDs), and interaural time differences (ITDs), respectively] of speech and noise arriving at each ear; and binaural redundancy, which refers to the ability of the central auditory system to combine the signals arriving at the two ears.1 There is evidence, at least in a laboratory setting, that hearing impaired listeners wearing two hearing aids (i.e. bilateral amplification) can also extract some benefit from binaural hearing.2,3 It is therefore not surprising that the rate of bilateral fitting is increasing4 and, together with advances in digital signal processing (DSP) features such as adaptive directionality and digital noise reduction, bilateral amplification continues to contribute to hearing aid (HA) fitting success.5

As mentioned before, binaural cues are important for sound localization as well.1 The ability to localize sounds in space depends on the differences in arrival time and intensity between the two ears (i.e., ITDs and ILDs, respectively).6,7 For example, for a sound source that is located at the right side of a listener, the sound waves reach the right ear earlier than the left ear, and with a higher intensity than the intensity with which the waves reach the left ear. ITDs and ILDs vary with the angle of the sound source; however, the ILD and ITD are close to 0 dB and 0 ms, respectively, at 0° and 180° azimuths. In addition, the peaks and notches introduced within the spectral shape of incoming sound by the pinna assist the listener in resolving front vs back (F/B) ambiguity, and in localizing a sound source in the vertical plane.1 It is pertinent to note here that lateralization is predominantly mediated by the ITD cues for low frequency stimuli (with content below 1500 Hz) and by ILD cues for high frequency stimuli (above 1500 Hz),8 and that the ITD cues are dominant for a wideband sound source with low frequency content.9,10 In addition, some studies10 have suggested that ILDs contribute to F/B resolution as well.
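The dependence of the ITD on source azimuth can be illustrated with a short numerical sketch. The example below uses the textbook spherical-head (Woodworth) approximation, which was not part of the present study; the head radius, the speed of sound, the sign convention (positive when the right ear leads), and the function name are all assumptions made for illustration only.

```python
import math

def woodworth_itd_ms(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in ms for a source in the horizontal plane.

    Assumptions (not from the study): rigid spherical head, 0 deg = front,
    90 deg = right, positive ITD = right ear leads.
    """
    az = math.radians(azimuth_deg)
    # Fold rear azimuths onto the front: sources at 30 and 150 deg share a lateral angle.
    lateral = math.asin(math.sin(az))
    return 1000.0 * head_radius_m / speed_of_sound * (lateral + math.sin(lateral))

for az in (0, 45, 90, 135, 180, 270):
    print(f"{az:3d} deg -> {woodworth_itd_ms(az):+.3f} ms")
```

Under these assumptions the ITD vanishes at 0° and 180° and is largest in magnitude at 90° and 270°, which also shows why the ITD alone cannot distinguish a frontal source from its rear mirror image.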

The ability to accurately localize sounds, especially in the horizontal plane, could be crucial for safety in certain everyday situations such as localizing a car horn, or any other alerting sound. Persons with sensorineural hearing loss (SNHL) often report decreased speech intelligibility rather than poor localization. However, the disturbed localization abilities caused by the hearing loss may contribute to the problem of decreased speech intelligibility, particularly in noisy backgrounds, because locating the person who is talking becomes more difficult.1,11 It is therefore important to investigate the effect of HA signal processing on sound localization cues.

Keidser et al.6 summarized the potential effect of modern HA signal processing features on sound localization cues. They stated that independently acting multi-channel Wide Dynamic Range Compression (WDRC) and digital noise reduction features may affect the ILDs and spectral shape differences, with mismatched directional microphone configurations between left and right HAs additionally impacting the ITDs. A few studies have examined sound localization in hearing impaired (HI) listeners wearing modern HAs. Van den Bogaert et al.7 studied the sound localization performance of ten HI subjects wearing HAs in omnidirectional and adaptive directional processing modes. Results showed that there were fewer sound localization errors when HI subjects were unaided (provided the stimuli were loud enough to be audible) than when wearing bilateral independent HAs in either omnidirectional or adaptive directional modes. Furthermore, the errors associated with the adaptive directional mode were higher than in the omnidirectional mode, indicating that independent bilateral HA processing in which adaptive directionality is implemented could negatively impact the sound localization abilities of HI listeners. In a more recent study, Vaillancourt et al.12 tested the sound localization performance of 57 HI participants who wore modern HAs. These researchers found that the HAs did not have a significant effect on localization in the Left/Right (L/R) dimension (i.e. lateralization), for which ITDs and ILDs are most important, but they substantially increased localization errors in the F/B dimension, for which spectral shape cues are most important, when compared to the unaided condition. A similar pattern of results was found by Best et al.13 who compared the localization performances of HI listeners wearing completely-in-the-canal (CIC) and behind-the-ear (BTE) hearing aids to that of a normal hearing (NH) control group.
There was a significant and substantial performance gap between the HI and NH groups when localization angular errors in the F/B dimension were analyzed, especially when the HI participants were wearing the BTEs. Mean lateralization errors were similar across CIC and BTE conditions and between HI and NH groups.

Keidser et al.6 conducted a systematic investigation on the impact of individual HA features on sound localization in the L/R and F/B dimensions. Results from 12 HI listeners revealed: i) a statistically insignificant degradation in either L/R or F/B localization with multichannel WDRC alone, despite a substantial reduction in ILDs measured electroacoustically; ii) a statistically significant degradation in the L/R localization with the activation of the noise reduction feature alone; and iii) a statistically significant degradation in L/R localization with non-identical directional microphone configurations on left and right HAs. The fact that independent multichannel WDRC processing did not significantly impact localization may be due to the broadband nature of the test stimulus (pink noise) and its duration of 750 ms. Although this stimulus was pulsed, it may have allowed for sufficient head movement to help localization.14-16 Thus, while independent multichannel WDRC affected the ILD cues, it did not distort the ITD cues associated with the lower frequency portion of the broadband signal, potentially aiding sound localization. A follow-up study by Keidser et al.17 expounded this further by evaluating the effect of non-synchronized compression on horizontal localization in nine HI listeners using five different stimuli with varying spectral content. The results from three broadband stimuli demonstrated fewer L/R errors than did the two narrowband noise stimuli. For one high-frequency stimulus (the one octave wide pink noise centred at 3150 Hz), the mean localization error was significantly more biased than the errors produced for the low-frequency weighted stimuli. The authors suggested that L/R discrimination was not severely affected by non-synchronized compression, as long as the stimulus contained low frequency components, thus preserving the ITDs. 
Localization errors in the F/B dimension were not reported for this study and it is not clear how non-synchronized compression affects F/B confusions in HI listeners when stimuli of varying spectral content are presented.

Preservation of binaural cues is important for speech understanding in complex noisy environments as well.18 For example, Hawley et al.19 tested the effect of spatial separation in a multi-source environment on speech intelligibility in monaural and binaural conditions with NH listeners. Speech targets were played along with one to three competing sentences, played simultaneously from various combinations of positions, defined as either close, intermediate, or far from target locations, to test the effect of spatial separation between target and noise. Results showed that binaural listening led to better word recognition rates when compared to better ear (ear with the better SNR for that particular spatial configuration) or poorer ear (ear with the poorer SNR for that particular spatial configuration) monaural conditions, with the magnitude of difference dependent on the number of competing sounds, and the proximity between the target signal and the competing sounds. Rychtáriková et al.20 conducted localization, and speech intelligibility experiments in anechoic and reverberant environments, with NH participants. Experiments were conducted with participants listening to the test stimuli in the free field and to the stimuli recorded through an artificial head or BTE HAs and presented over headphones. Spatial separation between the target and the noise sources improved speech reception threshold (SRT) because of spatial release from masking and the binaural squelch effect. There was a moderate correlation between F/B error rate and speech intelligibility scores in the anechoic environment, but no such correlation was found for the reverberant environment. Singh et al.21,22 evaluated word identification scores from a target speaker in the presence of spatially separated interferers. 
A key result from their work was the improvement in word recognition accuracy when the subjects had access to ITD and ILD information of the target speaker and when the target location was not known a priori. In other words, when there was uncertainty in the location of the target, performance improved with the availability of undistorted binaural cues. To summarize, spatial separation between the signal of interest and the interfering noise is important for better speech understanding in complex environments.19,20 Access to rich binaural cues facilitates improved speech understanding in noise because it enables accurate localization of the source of interest and the subsequent focus on the target results in the desired spatial separation between wanted and unwanted sources.

With the aim of preserving naturally occurring binaural cues, bilateral HAs that coordinate and synchronize their processing through wireless communication have been introduced to the market.23,24 Wireless synchronization between the two HAs ensures that the volume control, automatic program changes (e.g. switching between omni and directional microphone modes), and gain processing are coordinated. Smith et al.25 evaluated the ear-to-ear (e2e) synchronization feature found in Siemens’ advanced digital HAs with 30 HI listeners. The researchers compared the performance of synchronized and non-synchronized bilateral HAs using the Speech, Spatial, and Qualities of Hearing scale. Results revealed a general trend in the preference for the synchronized HA condition on many survey items in the speech and spatial domain. Sockalingam et al.24 evaluated the binaural broadband feature in Oticon Dual XW HAs (Oticon A/S, Smørum, Denmark), which optimizes the compression settings to improve spatial fidelity and resolution,24 in addition to synchronizing the noise reduction and directionality features. Sound localization tests conducted with 30 HI listeners revealed a 14% improvement in localization performance when wireless synchronization was activated.

There is limited evidence on the effect of bilateral synchronization of HA DSP features on speech intelligibility in noise. For example, Cornelis et al.26 compared the performance of a synchronized binaural speech distortion weighted multichannel Wiener filter (SDW-MWF) and bilateral fixed directional microphone configuration. Speech intelligibility experiments with 10 NH and 8 HI listeners showed that the binaural algorithm significantly outperformed the bilateral version. In a similar vein, Wiggins and Seeber27 recently presented data showing an improvement of long term SNR at the better ear with the synchronization of bilateral multichannel WDRC. Experiments conducted with 10 NH listeners revealed a significant improvement in speech understanding in noise with linked multichannel WDRC, when target speech was presented from 0° azimuth and speech-shaped noise presented from 60° azimuth simultaneously. The authors predicted a small to moderate benefit for HI listeners, although no supporting data was presented.

In summary, there is the potential for hearing aid adaptive signal processing algorithms in bilateral HAs to distort the binaural cues if they are allowed to operate independently. Hearing aid manufacturers have developed HAs that co-ordinate their signal processing through wireless communication. However, independent studies of wirelessly coordinated bilateral HAs are currently lacking: there is very little independent evidence on how well this strategy works, or on whether there are any performance differences among the wirelessly-communicating hearing aids offered by different manufacturers. The current study aimed to address this gap by examining the effect of wireless multichannel WDRC synchronization in bilateral HAs on two aspects of binaural hearing: sound localization and speech intelligibility in noise. Our working hypothesis for the sound localization was that synchronized multichannel WDRC would better preserve ILDs and enhance horizontal sound localization abilities. The signal-processing scheme under test was not expected to distort the ITDs. Furthermore, the synchronized multichannel WDRC was expected to better preserve the better ear SNR in asymmetric noise conditions, which may lead to an improvement in speech intelligibility scores.

Materials and Methods

Participants

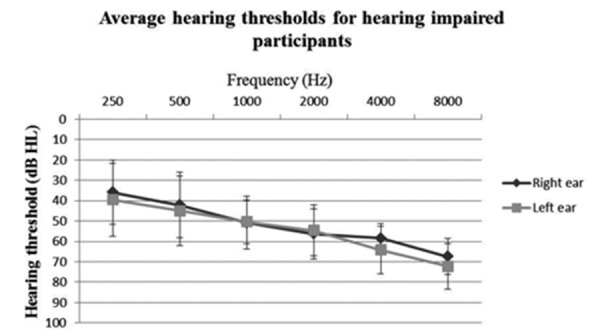

Eight NH subjects with a mean age of 26 years (sd=3 years), with hearing thresholds ≤25 dB HL at 0.25-8 kHz participated in the study as a control group. Twelve subjects, with a mean age of 69 years (sd=5 years), with bilaterally symmetrical (difference between right and left ear frequency-specific thresholds ≤10 dB) moderate-to-severe hearing loss and a minimum of one year experience with HA use participated in the HI group. Figure 1 shows the mean audiograms for the HI participants.

Figure 1.

Means and standard deviations of hearing thresholds of hearing impaired participants.

Hearing aids

Two pairs of HAs were used: Oticon Epoq XW (Oticon A/S) and Siemens Motion 700 (Siemens AG, Munich, Germany). They will be referred to in this study as HA1 and HA2 respectively. HAs were programmed to fit the targets specified by the Desired Sensation Level (DSL 5.0) formula28 for each hearing impaired participant and verified using Audioscan Verifit (Etymotic Design Inc., Dorchester, ON, Canada). For the NH subjects, the HAs were programmed to fit the DSL targets for a flat audiogram of 25 dB HL across all audiometric frequencies. The purpose of the present study was to test wireless synchronization of multichannel WDRC in isolation, without potential confounding influence from other DSP features. As such, adaptive directionality, noise reduction, and feedback management features were disabled in both HAs. It must be noted that there are substantial differences in how the compression is implemented in these two HAs. The Oticon Epoq (Oticon A/S) utilizes a parallel system that includes a fifteen channel slow-acting compressor and a four channel fast-acting compressor. The compression applied depends on the input level, with a predominantly fast-acting compression at higher SPLs and slow-acting compression at lower SPLs.29 The Siemens Motion 700 (Siemens AG), on the other hand, incorporates a sixteen channel compressor with syllabic compression time constants.30 The Oticon Epoq (Oticon A/S) incorporates the binaural compression algorithm named Spatial Sound that aims to preserve the ILDs, while the Siemens Motion only synchronizes the volume control and program changes through the e2e wireless communication. Custom full-shell ear molds were prepared for each participant with regular #13 tubing and no venting.

Methods

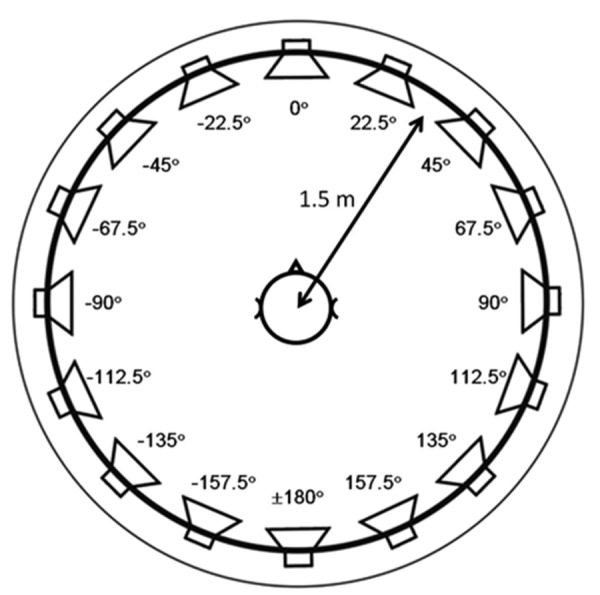

The study was divided into three test sessions. During the first session, participants provided informed consent and had their hearing assessed by otoscopy, immittance, and pure tone audiometry if their most recent assessment was more than six months old. Subsequently, ear impressions were taken to produce hard, unvented full-shell molds with regular #13 tubing. Sessions two and three were performed in a hemi-anechoic chamber, where a circular array of 16 Tannoy i5 AW speakers was used as shown in Figure 2. The floor area inside the array was covered with one or two layers of 4-inch acoustic foam to attenuate reflections.

Figure 2.

Speaker arrangement in the hemi-anechoic chamber used for intelligibility and localization testing.

The speakers received signals from a PC through an Echo AudioFire 12 sound card (Echo Digital Audio Corp., Santa Barbara, CA, USA), and/or Tucker Davis Technologies RX6 real time processor (Tucker Davis Technologies Inc., Alachua, FL, USA), for digital to analog conversion, Soundweb 9008 networked signal processor (BSSAudio, Sandy, UT, USA), for speaker equalization and level control, and QSC CX168 power amplifiers (QSC Audio Products, Costa Mesa, CA, USA), for power amplification and impedance matching. Participants stood in the middle of the speaker array on an adjustable stand. This setup was utilized for both intelligibility and localization experiments, details of which are given below.

Speech intelligibility

Speech intelligibility was assessed using the Hearing in Noise Test (HINT)31 procedure under three test conditions: i) noise presented to the right of the participant (90° azimuth), ii) noise presented to the left of the participant (270° azimuth), and iii) noise presented simultaneously from 90° and 270° azimuths. Under all test conditions, the speech was presented from directly in front of the participant (0° azimuth). Twenty sentences were presented in each condition and the participants were asked to repeat the sentences they heard. The level of the sentences was varied adaptively with a 1-down, 1-up rule to estimate the SNR yielding 50% correct performance, which defined the SRT for that particular listening condition. The HINT was administered 5 times for each subject: unaided, plus four combinations of the HA make and wireless synchrony mode (HA1 with wireless synchrony on, HA1 with wireless synchrony off, HA2 with wireless synchrony on, and HA2 with wireless synchrony off). The order of testing with these device settings was randomized for each participant. Custom software developed at the National Centre for Audiology was used to automate the HINT process, to visually monitor the test progress, and to store the test results.
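The adaptive rule described above can be sketched as a simple staircase. This is a minimal illustration and not the custom software used in the study: the starting SNR, the fixed step size, the number of discarded initial trials, and the averaging rule are assumptions and are not the published HINT parameters, and the listener model is a deterministic stand-in.

```python
def run_staircase(respond, start_snr_db=0.0, step_db=2.0, n_sentences=20):
    """1-down, 1-up track: lower the SNR after a correct response,
    raise it after an incorrect one. Returns the SNR presented on each trial."""
    snr = start_snr_db
    track = []
    for _ in range(n_sentences):
        track.append(snr)
        correct = respond(snr)  # True if the sentence was repeated correctly
        snr += -step_db if correct else step_db
    return track

def estimate_srt(track, skip=4):
    """Average the presented SNRs after discarding the first `skip` trials
    (hypothetical rule chosen for this sketch)."""
    tail = track[skip:]
    return sum(tail) / len(tail)

# Hypothetical listener who repeats the sentence correctly whenever SNR >= -3 dB.
track = run_staircase(lambda snr: snr >= -3.0)
srt = estimate_srt(track)
print(track)
print(f"Estimated SRT: {srt:.1f} dB SNR")
```

Because a 1-down, 1-up rule steps down after every correct response and up after every error, the track oscillates around the SNR at which correct and incorrect responses are equally likely, which is why it converges on the 50% point.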

Localization test

Localization abilities were tested using two different stimuli: a car horn of 450 ms duration presented in stereo traffic noise at a +13 dB SNR, and a 1/3-octave narrow band noise (NBN) centered around 3150 Hz, with 200 ms duration and 76 dB SPL. The car horn in traffic noise was chosen to simulate a common everyday situation where localization abilities play an important safety role. For this test condition, the stereo traffic noise was played from two fixed speaker locations at 90° and 270° azimuths, which were placed below the speakers used for localization testing. Figure 3 displays the 1/3-octave spectrum for the car horn stimulus,32 with spectral peaks at 400 and 2500 Hz bands. The traffic noise33 was a looped 3:06-min stereo recording of traffic noise, adjusted in level to provide a minimum intensity of 53.7 dB SPL, and a maximum intensity of 83.3 dB SPL, with an Leq of 60 dB SPL. The 1/3-octave NBN with a center frequency of 3150 Hz was chosen as a second stimulus to test the effect of activating the wireless synchrony on ILDs. The same stimulus has been used in previous studies7,17 to test sound localization that depends mainly on ILDs, because its spectral content is above 1500 Hz.

Figure 3.

1/3-octave spectrum of the car horn stimulus used in the sound localization experiment.

Each stimulus was presented 48 times (3 times from each of the 16 speakers, in a randomized order) at a presentation level roved by +/-3 dB about a mean level of 73 dB SPL for the car horn stimulus and 76 dB SPL for the NBN stimulus. Participants stood in the middle of the speaker array wearing a headtracker (Polhemus Fastrak, Polhemus, Colchester, VT, USA) helmet with an LED and a control button in hand. Upon hearing the stimulus, the participants turned their head to the perceived source speaker. Participants then registered their response with a button press. The next stimulus was presented 600 ms after a button press following return to the centre of the speaker array (0°). Localization experiments were performed under the same four combinations of the HA make and wireless synchrony mode and in an unaided condition, as in the speech intelligibility testing.
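The presentation schedule described above can be sketched as follows. This is illustrative only and is not the study's MATLAB software: it assumes the 16 speakers are equally spaced at 22.5° intervals starting at 0°, and that the level rove is drawn uniformly within ±3 dB; neither detail is stated explicitly in the text, and the function name is hypothetical.

```python
import random

def build_trials(n_speakers=16, reps=3, mean_level_db=73.0, rove_db=3.0, seed=None):
    """Return a shuffled list of (azimuth_deg, level_db_spl) trials."""
    rng = random.Random(seed)
    spacing = 360.0 / n_speakers  # assumed equal spacing around the circle
    azimuths = [i * spacing for i in range(n_speakers) for _ in range(reps)]
    rng.shuffle(azimuths)  # randomized presentation order
    # Assumed uniform rove about the mean level.
    return [(az, mean_level_db + rng.uniform(-rove_db, rove_db)) for az in azimuths]

car_horn_trials = build_trials(mean_level_db=73.0, seed=1)
nbn_trials = build_trials(mean_level_db=76.0, seed=2)
print(len(car_horn_trials), car_horn_trials[:3])
```

Roving the level from trial to trial prevents listeners from using absolute loudness at one ear as a cue to source position.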

Prior to the actual testing, the localization task started with a practice session to familiarize the participant with the task. Participants were asked to orient toward 0 degrees azimuth during trial initiation and after stimulus onset they were free to move their heads to localize. Audibility of the stimuli and the ability to understand and perform the localization task were assessed by 3 practice stimuli, each played 10 times from a randomly chosen different speaker. Practice stimuli were broadband noise bursts, gradually decreasing in duration: 3×500 ms, 5×300 ms, and finally 3×300 ms. Custom software written in MATLAB controlled the localization tests. The traffic noise was played through an Echo AudioFire 12 sound card, and the target (car horn or NBN) was played through the Tucker Davis Technologies RX6 real time processor. Participants’ head positions were measured at the onset and offset of the stimuli.

Results

The data collected for both speech intelligibility and localization were averaged and the means were compared for statistical significance. Statistical significance was assessed using the repeated measures ANOVA procedure implemented in SPSS v16.0 and post-hoc t-tests.

Speech intelligibility

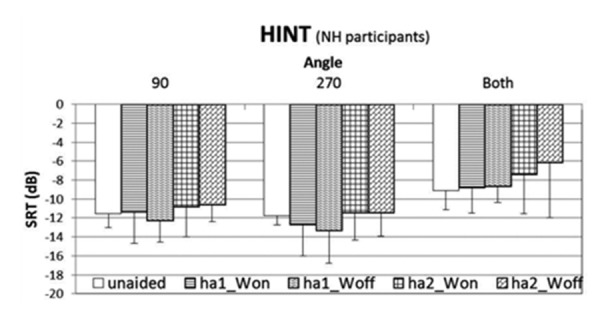

Figure 4 displays the averaged HINT data from NH participants with scores from each experimental condition (unaided and HA make+wireless condition) depicted as a separate bar; error bars denote one standard deviation. A 2×2×3 repeated measures ANOVA was performed, with 2 aided conditions (HA1-HA2), 2 wireless conditions (wireless on-off), and 3 noise presentation angles (90°-270°-both) as independent variables. The test revealed a significant main effect of the angle of presentation [F (2, 4)=13.54, P=0.017]. As expected, SRT scores were higher for the condition in which the noise was presented from both azimuths (i.e. a higher speech level was required for 50% correct performance). No other main effects or interactions were significant. When comparing the average aided performance with the unaided performance using a paired-samples t-test, the difference was not significant: t (5)=-0.538, P=0.613.

Figure 4.

Hearing in noise test results for the normal hearing subjects. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity enabled; Woff, wireless connectivity disabled.

Figure 5 depicts the averaged HINT data collected from 12 HI participants, with the data displayed in a format similar to Figure 4. The HI HINT data were analysed using a 2×2×3 repeated measures ANOVA with 2 aided conditions (HA1-HA2), 2 wireless conditions (wireless on-off), and 3 noise presentation angles (90°-270°-both) as independent variables. The test revealed a significant main effect of the angle of presentation [F (2, 9)=43.42, P<0.001], in which performance with the noise presented from both angles yielded worse results (higher SRT values) than when the noise was presented only from either 90° or 270°. There was also a significant interaction between the hearing aid and the noise presentation angle [F (2, 9)=4.81, P=0.038]; the performance with HA1 was better than the performance with HA2 at either the 90° or the 270° noise presentation angle, and the performance with HA2 was better when the noise was presented from both 90° and 270° simultaneously. A significant interaction between the hearing aid, the wireless condition, and the noise presentation angle was also noted [F (2, 9)=4.78, P=0.038]: performance with HA1 in the wireless off condition was significantly better than all the other conditions when the noise was presented from 90°. No other main effects or interactions were found to be significant. When comparing the average aided performance with the unaided performance using a paired-samples t-test, the aided performance was found to be significantly better than the unaided performance: t (10)=-2.92, P=0.015.

Figure 5.

Hearing in noise test results for the hearing impaired subjects. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity activated; Woff, wireless connectivity disabled.

When the averaged performances of NH and HI listeners were compared, the NH listeners performed significantly better than the HI listeners across all the listening conditions (the five listening conditions, unaided and four aided, at each of the three noise presentation angles): t (14)=-10.3, P<0.001.

Sound localization

Figure 6 displays a sample output from the sound localization experiment. In Figure 6A, the x-axis represents the target azimuth, which is the angle of the speaker that emitted the sound, and the y-axis represents the response azimuth, which is the angle at which the sound was perceived to originate. In this plot, data points near the main diagonal indicate responses close to the target location and data points near the anti-diagonals of the lower left and upper right quadrants indicate F/B reversals. As exemplified in Figure 6A, large errors we observed were typically of this form. To calculate the rate of F/B errors, the data set was reduced to those trials on which both target and response were within the ±67.5° (front hemisphere) and/or beyond the ±112.5° (rear hemisphere) ranges. The ratio of the number of target/response hemisphere mismatches and the total number of trials within this range was computed to produce the F/B error rate.
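The F/B error rate computation described above can be sketched as follows. This is an illustrative reconstruction and not the study's analysis code: it assumes azimuths are expressed in degrees with 0° at the front, treats the ±67.5° and ±112.5° limits as inclusive, and uses hypothetical function names and example trials.

```python
def wrap180(angle_deg):
    """Map any azimuth in degrees onto the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0

def hemisphere(angle_deg, front_limit=67.5, rear_limit=112.5):
    """Classify an azimuth as 'front', 'rear', or None (lateral region, excluded)."""
    a = abs(wrap180(angle_deg))
    if a <= front_limit:
        return "front"
    if a >= rear_limit:
        return "rear"
    return None

def front_back_error_rate(targets, responses):
    """Hemisphere mismatches divided by the number of retained trials."""
    kept = 0
    mismatches = 0
    for target, response in zip(targets, responses):
        ht, hr = hemisphere(target), hemisphere(response)
        if ht is None or hr is None:
            continue  # trial falls outside the front/rear ranges
        kept += 1
        if ht != hr:
            mismatches += 1
    return mismatches / kept if kept else float("nan")

# Hypothetical trials: two front-to-back reversals among four retained trials.
targets = [0.0, 22.5, 157.5, 90.0, 45.0]
responses = [5.0, 160.0, 150.0, 80.0, 135.0]
print(front_back_error_rate(targets, responses))
```

Excluding trials near ±90° avoids counting small lateral errors that happen to cross the interaural axis as F/B confusions.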

Figure 6.

Sample localization data from one of the participants for an experimental condition. (A) Localization data illustrating front-back confusions. (B) Lateralization data from which lateral angle gain, bias, and scatter parameters are calculated. The orange boxes at the horizontal axis as well as the red boxes at the vertical axis contain the trials that represent F/B and B/F errors, respectively.

Figure 6B displays the lateralization response of the same subject, where the F/B data within left and right hemispheres were collapsed. A linear fit to the lateralization data was used to compute three metrics: lateral angle gain, which is the slope of the linear fitting function; lateral angle bias, which is the y-intercept of the linear fitting function, and which is the mean shift in lateral response (in degrees) either towards the left or right hemisphere; and lateral angle scatter, which is the RMS deviation of the individual data points (in degrees) from the linear fitting function, and which represents the consistency of the listener’s responses.
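The three lateralization metrics can be obtained from an ordinary least-squares line fit, as sketched below. This is a minimal illustration under stated assumptions: the inputs are lateral angles in degrees with F/B already collapsed, the scatter is normalized by the number of trials (the text does not specify the normalization), and the function name and example data are hypothetical.

```python
import math

def lateral_fit(target_deg, response_deg):
    """Return (gain, bias, scatter) from a least-squares fit of response on target.

    gain    : slope of the fitted line
    bias    : y-intercept of the fitted line, in degrees
    scatter : RMS deviation of the responses from the fitted line, in degrees
    """
    n = len(target_deg)
    mean_t = sum(target_deg) / n
    mean_r = sum(response_deg) / n
    sxx = sum((t - mean_t) ** 2 for t in target_deg)
    sxy = sum((t - mean_t) * (r - mean_r) for t, r in zip(target_deg, response_deg))
    gain = sxy / sxx
    bias = mean_r - gain * mean_t
    residuals = [r - (gain * t + bias) for t, r in zip(target_deg, response_deg)]
    scatter = math.sqrt(sum(e * e for e in residuals) / n)  # assumed 1/n normalization
    return gain, bias, scatter

# Hypothetical listener who undershoots laterally and is shifted 5 deg to the right.
example_targets = [-90.0, -45.0, 0.0, 45.0, 90.0]
example_responses = [0.8 * t + 5.0 for t in example_targets]
gain, bias, scatter = lateral_fit(example_targets, example_responses)
print(f"gain={gain:.2f}, bias={bias:.1f} deg, scatter={scatter:.1f} deg")
```

In this constructed example the gain is below 1, indicating responses compressed toward the midline, while the scatter is zero because the responses lie exactly on the fitted line.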

Figure 7 displays the F/B error rate for the NH participants. A 2×2×2 repeated measures ANOVA with stimulus, HA, and the wireless condition was performed, and revealed no statistically significant main effect or interaction. Comparing the average unaided to the averaged aided performance using paired-samples t-test revealed a significantly better unaided performance: t (7)=-3.14, P=0.016.

Figure 7.

The front/back error rate for the normal hearing listeners for the two test stimuli is shown here. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity activated; Woff, wireless connectivity disabled.

Figure 8 displays the mean F/B error rate observed with the 12 HI participants. Similar to the NH data, the F/B error rates from HI listeners are lower for the car horn stimulus, although they do not reach normal performance. Owing to unavoidable circumstances, one of the HI participants could not complete the sound localization experiment for one of the conditions (HA1, NBN stimulus, and wireless connectivity disabled); this single missing data point was replaced by the mean of the remaining 11 HI participants' data for the same condition. A repeated measures 2×2×2 ANOVA (HA, the wireless condition, and stimulus) revealed a significant main effect of the wireless condition [F (1, 11)=6.33, P=0.029], indicating that activating the wireless feature allowed for better discrimination in the F/B dimension. No other significant main effects or interactions were noted.
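The treatment of the single missing data point amounts to mean imputation within a condition, which can be sketched as follows. The function name is hypothetical and the numbers are placeholders rather than study data.

```python
def impute_with_mean(scores):
    """Replace missing entries (None) with the mean of the observed entries
    for the same experimental condition."""
    observed = [s for s in scores if s is not None]
    if not observed:
        raise ValueError("no observed scores to average")
    mean = sum(observed) / len(observed)
    return [mean if s is None else s for s in scores]

# Placeholder F/B error rates (%) for one condition; None marks the missing participant.
condition_scores = [10.0, None, 20.0]
print(impute_with_mean(condition_scores))
```

Mean imputation keeps the participant in the repeated measures design but slightly understates the variance in the affected condition, which is a minor concern when only one value is missing.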

Figure 8.

Front/back error rate by hearing impaired listeners for the two test stimuli. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity activated; Woff, wireless connectivity disabled.

Although the gain, bias, and scatter metrics were computed from the lateralization data, only the statistical analyses of the lateral angle gain data are presented in detail because the other two metrics exhibited similar trends. Figure 9 shows the lateral angle gain calculated from the NH listeners’ localization data. Note that a value of 1 for the lateral gain parameter indicates no mean lateral overshoot or undershoot in response, while a value less than 1 indicates a bias towards the midline (undershoot). A 2×2×2 ANOVA revealed that the only significant result was the interaction between the wireless condition and the stimulus [F (1, 7)=7.57, P=0.028]. The wireless off condition produced a higher gain value for the high frequency NBN stimulus. There was no significant difference between the aided and unaided performances: t (7)=2.31, P=0.054.

Figure 9.

Lateral angle gain computed from the localization data obtained from normal hearing listeners for the two test stimuli. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity activated; Woff, wireless connectivity disabled.

Figure 10 displays the lateral angle gain data collected from the HI listeners. A 2×2×2 ANOVA revealed a significant main effect of the stimulus [F (1, 13)=10.81, P=0.006], with the high frequency NBN resulting in a lateral gain of significantly less than 1.

Figure 10.

Lateral angle gain computed from the localization data obtained from hearing impaired listeners for the two test stimuli. HA1, hearing aid #1; HA2, hearing aid #2; Won, wireless connectivity activated; Woff, wireless connectivity disabled.

Discussion

The focus of this study was to evaluate the performance of the wireless multichannel WDRC synchrony feature in bilateral HAs insofar as it better preserves binaural cues important for sound localization and speech perception in noise. Two HAs with the wireless synchrony feature were tested with a group of eight normally hearing and a group of 12 hearing impaired listeners. The HINT test in its standard format was used to evaluate speech intelligibility in noise. For testing localization abilities, two stimuli were used: a car horn in stereo traffic noise and a 1/3-octave narrowband noise centered around 3150 Hz. Results showed that activation of the wireless synchrony feature neither significantly improved nor degraded the HINT scores when compared to the performance with the wireless synchrony deactivated, but did lower the localization error rate for the HI listeners in the F/B dimension for a broadband stimulus. These results are discussed in detail below.

Horizontal sound localization

Results from our sound localization experiments agree with previously published data on several fronts: i) broadband stimuli were more accurately localized than high frequency narrowband stimuli;17,34,35 ii) the localization in the L/R dimension was much more accurate than localization in the F/B dimension;12,13,36 and iii) aided localization performance by HI listeners did not reach normative performance.11,35

The sound localization experiments were carried out with two different stimuli in this study: a broadband car horn in the presence of traffic noise and a narrowband high frequency stimulus. The F/B error rate was lowest (~24%) for the NH listeners when localizing the car horn stimulus in the unaided condition. These listeners had access to a full range of natural spectral cues indicating the F/B location. However, the location of the hearing aid microphone outside the pinna in aided conditions prevented access to the natural spectral cues, with a concomitant increase in errors. The high rate of F/B errors observed for the NBN stimulus for all listeners was expected because the NBN stimulus spectrum did not excite the broad range of high frequencies necessary to reveal the shape of the spectral cues produced by the pinnae.34,37

The most salient result from the sound localization experiments is the significant decrease of 4.33 percentage points (from 41.35% without the wireless synchrony to 37.02% with the activation of the wireless synchrony, a relative decrease of 10.5% in the rate of F/B confusions) within the HI group for the broadband car horn stimulus with the activation of the wireless synchrony feature.38 This result is similar to the one reported by Sockalingam et al.24 in which HI subjects exhibited lower errors in localizing a bird chirp in the presence of speech-shaped background noise when wearing synchronized bilateral HAs. It is worth noting that the bird chirp was high-frequency weighted, and as such lacked the ITD information useful for localization. In their study to reexamine the duplex theory for sound localization in normal hearing listeners, Macpherson and Middlebrooks10 found that, for wideband stimuli, biased ILD cues (created by attenuating one ear of a virtual auditory space stimulus to make an ILD bias favouring the other ear) result in more F/B confusions, perhaps due to the mismatch between ITD and ILD cues at low frequencies. Similar results were found in sound localization experiments conducted by Macpherson and Sabin.39 More recently, Wiggins and Seeber40 reported that static ILD bias, which simulates the effect of non-synchronized compression, can affect spatial perception of broadband signals. The results from the present study suggest that the synchronized WDRC reduces the bias in ILDs that would otherwise be present with independent bilateral WDRC, and this facilitates better F/B discrimination of broadband sounds. For the NH listeners, the wireless synchronization yielded fewer F/B errors for the car horn stimulus as well; however, the difference was not statistically significant. NH listeners had a 19% F/B error rate with the wireless synchrony, compared to a 21% error rate with the wireless synchrony deactivated. For the high-frequency NBN stimulus in the NH listeners, the error percentages were 22.3% and 22.2% with the wireless synchrony activated and deactivated, respectively.
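The way independent compression can bias ILDs, and how linking the two compressors can avoid this, may be illustrated with a static single-channel sketch. Everything here is an assumption made for illustration: the compression threshold, ratio, and linear gain are arbitrary, the model ignores attack and release times and multiple channels, and the linking rule (both aids apply the gain computed from the higher of the two input levels) is hypothetical and not a description of how either commercial hearing aid in the study works.

```python
def wdrc_gain_db(level_db, threshold_db=50.0, ratio=2.0, linear_gain_db=20.0):
    """Static WDRC gain: constant below the compression threshold and
    reduced above it so that output grows by 1/ratio dB per input dB."""
    if level_db <= threshold_db:
        return linear_gain_db
    return linear_gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)


def output_ild_db(left_db, right_db, linked):
    """Interaural level difference (left minus right) at the aid outputs."""
    if linked:
        # Assumed linking rule: both sides share the gain computed from
        # the higher input level, so the input ILD passes through unchanged.
        gain_left = gain_right = wdrc_gain_db(max(left_db, right_db))
    else:
        # Independent compressors: the louder ear receives less gain.
        gain_left = wdrc_gain_db(left_db)
        gain_right = wdrc_gain_db(right_db)
    return (left_db + gain_left) - (right_db + gain_right)


# Hypothetical source on the left: 70 dB at the left ear, 60 dB at the right.
independent_ild = output_ild_db(70.0, 60.0, linked=False)  # 5.0 dB
linked_ild = output_ild_db(70.0, 60.0, linked=True)        # 10.0 dB
```

With these assumed settings, the 10 dB input ILD is halved by independent 2:1 compression but preserved when the gain is shared, which is the kind of ILD bias that synchronization is intended to remove.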

Analysis of the localization performance of HI listeners in the L/R dimension revealed a significant main effect of stimulus. The lateral angle gain was around 1 for the car horn stimulus and around 0.8 for the NBN stimulus, which implies a bias towards the midline for the NBN. This result can be explained by examining the audibility of the two test stimuli. Sabin et al.41 reported that the lateral angle gain is biased toward the midline (about 0.5) when stimuli are near the threshold of sensation, and gradually increases to 1 as the sensation level increases. In the present study, the participants’ audiograms (Figure 1) show that the average hearing threshold at 500 Hz is ~45 dB HL, and at 4 kHz is ~60 dB HL. Considering that there is a spectral peak in the car horn stimulus around 400 Hz (Figure 2), and that the NBN centre frequency is 3150 Hz, it can be inferred that the car horn stimulus had a higher sensation level than the NBN stimulus. In addition, the frequency response of a typical hearing aid rolls off beyond 4000 Hz,1 impacting the audibility of high frequency sounds. Inadequate high frequency gain and the restricted HA bandwidth both contribute to the lower sensation level of high frequency sounds, leading to poorer lateralization.

Unlike the F/B data, there was no effect of wireless synchronization on localization in the L/R dimension, even for the car horn stimulus. The lack of an effect of activating the wireless synchrony on the lateral angle gain with the car horn stimulus might be due to the availability of low-frequency ITD cues, which, when available, the listeners depend on more than the ILD cues for localization.10,42 It may be reasonable to think that the effect of restoring ILDs by activating the wireless synchrony would be greater for the NBN stimulus, where ITDs are not available. However, other factors influenced performance with the NBN stimulus, such as its lower audibility compared to the car horn stimulus because of the high frequency sloping hearing loss configuration of the HI listeners, and the restricted HA bandwidth.

Finally, a few comments are warranted on head movement during the sound localization experiment. Apart from the requirement to orient toward 0° azimuth during trial initiation and to indicate the apparent target position via head orientation, the participants were not given instructions regarding head movements because we wanted to measure the natural response as it would be in real life. After stimulus onset, participants were free to move their heads and/or body in order to localize. The minimum latency of head movement in response to an auditory stimulus is approximately 200 ms;43,44 thus, the 200-ms high-frequency stimuli were likely too short to allow head movement while they were played. The 450-ms car horn targets, however, might have been long enough to permit useful head movements before offset. By comparing the head positions measured at the onset and offset of the stimuli, head movement angles were calculated for the two stimuli in the study for all the participants and all the hearing aid conditions. The percentages of head movements that were >10 degrees (and therefore large enough to assist in front/back localization)45 were: 0.7% for the high-frequency noise, 28.4% for the car horn with wireless connectivity enabled, and 22.8% for the car horn with wireless connectivity disabled. When the F/B error rates for the car horn localization trials with large head movements (>10 degrees) were compared to the error rates for the trials with small head movements (<10 degrees), large head movements resulted in significantly lower error rates: t (82)=-3.84, P<0.001. While the difference between the percentages of large head movements in the wireless on and wireless off conditions was close to significance (t (21)=2.05, P=0.052), it must be highlighted that when the errors in only the trials with small head movements were analysed, the wireless synchrony activation was still found to produce significantly fewer errors (t (21)=-2.38, P=0.027). As such, a combination of head movements and wireless synchrony may have contributed to overall statistical significance. Further research is warranted to delineate these two effects.
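The head movement analysis described above (movement angle from onset and offset head positions, a 10 degree criterion, and F/B error rates compared between the two groups of trials) can be sketched as follows. The trial representation, function names, and data are hypothetical, and the sketch covers only the descriptive step, not the t-tests reported in the text.

```python
def angular_difference_deg(onset_deg, offset_deg):
    """Signed change in head azimuth, wrapped to the range [-180, 180)."""
    return (offset_deg - onset_deg + 180.0) % 360.0 - 180.0


def summarize_head_movements(trials, threshold_deg=10.0):
    """`trials` is a list of (onset_deg, offset_deg, is_fb_error) tuples.
    Returns the percentage of trials with a large head movement and the
    F/B error rate (%) for large-movement and small-movement trials."""
    large, small = [], []
    for onset, offset, is_error in trials:
        moved = abs(angular_difference_deg(onset, offset))
        (large if moved > threshold_deg else small).append(is_error)

    def rate(flags):
        return 100.0 * sum(flags) / len(flags) if flags else float("nan")

    return {
        "pct_large": 100.0 * len(large) / len(trials),
        "fb_error_large": rate(large),
        "fb_error_small": rate(small),
    }


# Hypothetical trials: (head azimuth at onset, at offset, F/B error?).
trials = [
    (0.0, 15.0, False),    # 15 degree movement
    (358.0, 12.0, False),  # 14 degree movement across the 0/360 wrap
    (0.0, 2.0, True),      # 2 degree movement
    (0.0, -3.0, False),    # 3 degree movement
]
summary = summarize_head_movements(trials)
```

Wrapping the angular difference matters because a head turn from 358° to 12° is a 14 degree movement, not a 346 degree one; without the wrap such trials would be misclassified.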

Speech intelligibility in noise

The HINT data obtained from NH listeners are similar to the published normative data.31 As expected, there was a significant difference between the HINT scores obtained from NH and HI participants across listening conditions. Furthermore, activation of the binaural wireless synchrony feature did not produce a significantly different result for either NH or HI participants, although it is purported to better preserve the binaural cues and better ear SNR that facilitate improved speech perception in noise. This result contradicts the findings from Kreisman et al.46 in which a significant improvement in speech understanding in noise by HI listeners was reported with HAs incorporating wireless synchrony. However, Kreisman et al.46 compared the performance of two HAs in their study, the Oticon Epoq (Oticon A/S) and Oticon Syncro (Oticon A/S). Epoq was a newer generation model than Syncro; in addition to the wireless synchrony feature, Epoq also incorporated a newer DSP platform and wider bandwidth in comparison to Syncro. Also, Kreisman et al. did not deactivate the DSP features, such as the directional microphone, which could have resulted in a significant improvement when synchronized. In the present study, we evaluated the performance of the wireless synchrony feature in isolation while keeping all other HA parameters constant.

Wireless synchronization was expected to preserve the better ear SNR in 90° and 270° asymmetric noise conditions, and consequently lead to improved speech understanding in noise. This expectation was based on the evidence for fast acting multichannel compressor performance in noisy environments. As an example, for a stationary noise masker, Naylor and Johannesson47 showed that the long term SNR at the output of a fast acting compressor can be worse than the input SNR. This is due to gain reduction for speech peaks and gain increase for noise in speech gaps and silence periods.47 Thus, independent bilateral compression systems may lead to a worsened better ear SNR in situations where noise emanates from only one side of the listener. A recent presentation by Wiggins and Seeber27 contained evidence supporting this notion. Using a simulated two channel fast acting WDRC system, Wiggins and Seeber showed that the output SNR at the better ear was 2-3 dB worse when the compressors acted independently, with speech presented from 0° azimuth and noise from 60° azimuth. Behavioural experiments conducted with 10 NH listeners resulted in a significant improvement in speech intelligibility scores for the synchronized compressor condition, in the same speech and noise conditions. It is pertinent to note here that the positive role of better ear SNR in speech intelligibility scores with NH listeners is also highlighted in data presented by Hawley et al.19

Several factors may have contributed to the lack of a significant difference in intelligibility scores between the two wireless conditions in our study. First, the HI participants in our study were older adults, and age-related cognitive processing deficits may have played a role in their ability to extract benefit from better ear SNR. Perhaps more importantly, both NH and HI participants were tested without a period of acclimatization to either HA, similar to an earlier study by Van den Bogaert et al.48 While there are conflicting reports on the effect of acclimatization on speech recognition in noise,49 there is evidence that HI listeners improve over time in their speech recognition abilities when using multichannel WDRC.50 Furthermore, Neher et al.51 commented that a lack of acclimatization may impact the degree of spatial benefit experienced by HI listeners in complex listening environments. Additional research studies, which include a period of acclimatization to the new HAs in both modes of synchronization, are therefore necessary.

Finally, the different outcomes for speech in noise and localization performances in the present study are in concordance with the findings of Hawley et al.19 and Rychtarikova et al.20 that the binaural cues that are prominent for sound localization (ITDs, ILDs, and spectral cues), are different from the binaural cues that support speech intelligibility in noise (SNR, spatial separation between the target and the noise, the number and the nature of the interfering noises).

Conclusions

The present study evaluated the benefit of wireless synchronization of WDRC in bilateral hearing aids. Speech recognition in noise and localization abilities of normal hearing and hearing impaired subjects were measured with two different brands of bilateral wirelessly connected hearing aids. Speech recognition data showed no statistically significant preference for either wireless on or wireless off conditions. Localization results were analyzed for errors in the F/B and L/R dimensions. Activating the wireless synchronization significantly reduced the rate of F/B confusions by 10.5% in relative terms (4.33 percentage points) among the hearing impaired group when the sound source was broadband. Localization results in the L/R dimension were unaffected by the wireless condition. Together, these results suggest a benefit from wireless synchronization, at least in certain environments. Results are to be considered with caution, because participants were not acclimatized to the hearing aids and ear molds, and the adaptive directionality, noise reduction, and feedback cancellation were disabled. Further research is warranted to systematically investigate the effects of acclimatization, additional adaptive DSP, and complex listening environments (multiple sources in a reverberant setting) on the wirelessly connected synchronization feature in modern hearing aids.

Acknowledgements

Laya Poost-Foroosh, Paula Folkeard, Francis Richert, Shane Moodie, and Jeff Crukley provided technical and recruiting assistance. The Ontario Research Fund and the Canada Foundation for Innovation provided financial support.

References

- 1. Dillon H. Binaural and bilateral considerations in hearing aid fitting. Hearing aids. New York, NY: Thieme; 2001. pp 370-403.

- 2. Byrne D, Noble W, Lepage B. Effects of long term bilateral and unilateral fitting of different hearing aid types on the ability to locate sounds. J Am Acad Audiol 1992;3:369-82.

- 3. Boymans M, Goverts ST, Kramer SE, Festen JM, Dreschler WA. Candidacy for bilateral hearing aids: a retrospective multicenter study. J Speech Lang Hear Res 2009;52:130-40.

- 4. Kochkin S. MarkeTrak VIII: 25-year trends in the hearing health market. Hear Rev 2009;16:12-31.

- 5. Bertoli S, Bodmer D, Probst R. Survey on hearing aid outcome in Switzerland: Associations with type of fitting (bilateral/unilateral), level of hearing aid signal processing, and hearing loss. Int J Audiol 2010;49:333-46.

- 6. Keidser G, Rohrseitz K, Dillon H, Hamacher V, Carter L, Rass U, et al. The effect of multi-channel wide dynamic range compression, noise reduction, and the directional microphone on horizontal localization performance in hearing aid wearer. Int J Audiol 2006;45:563-79.

- 7. Van den Bogaert T, Klasen T, Moonen M, Van Deun L, Wouters J. Horizontal localization with bilateral hearing aids: without is better than with. J Acoust Soc Am 2006;119:515-26.

- 8. Middlebrooks JC, Green DM. Sound localization by human listeners. Ann Rev Psych 1991;42:135-59.

- 9. Wightman FL, Kistler DJ. The dominant role of low frequency interaural time differences in sound localization. J Acoust Soc Am 1992;91:1648-61.

- 10. Macpherson E, Middlebrooks J. Listener weighting of cues for lateral angle: the duplex theory of sound localization revisited. J Acoust Soc Am 2002;111:2219-36.

- 11. Byrne D, Noble W. Optimizing sound localization with hearing aids. Trends Amplif 1998;13:51-73.

- 12. Vaillancourt V, Laroche C, Giguere C, Beaulieu M-A, Legault J-P. Evaluation of auditory functions for Royal Canadian Mounted Police Officers. J Am Acad Audiol 2011;22:313-31.

- 13. Best V, Kalluri S, McLachlan S, Valentine S, Edwards B, Carlile S. A comparison of CIC and BTE hearing aids for three-dimensional localization of speech. Int J Audiol 2010;49:723-32.

- 14. Noble W, Sinclair S, Byrne D. Improvement in aided sound localization with open earmolds: observations in people with high-frequency hearing loss. J Am Acad Audiol 1998;9:25-34.

- 15. Macpherson EA. Stimulus continuity is not necessary for the salience of dynamic sound localization cues. J Acoust Soc Am 2009;125:2691(A).

- 16. Macpherson E, Cumming M, Quelch R. Accurate sound localization via head movements in listeners with precipitous high-frequency hearing loss. J Acoust Soc Am 2011;129:2486.

- 17. Keidser G, Convery E, Hamacher V. The effect of gain mismatch on horizontal localization performance. Hear J 2011;64:26-33.

- 18. Wightman FL, Kistler DJ. Factors affecting the relative salience of sound localization cues. In: Gilkey RH, Anderson TR. Binaural and spatial hearing in real and virtual environments. Hillsdale, NJ: Lawrence Erlbaum Associates; 1997. pp 1-23.

- 19. Hawley ML, Litovsky RY, Colburn HS. Speech intelligibility and localization in complex environments. J Acoust Soc Am 1999;105:3436-48.

- 20. Rychtáriková M, Bogaert T, Vermeir G, Wouters J. Perceptual validation of virtual room acoustics: Sound localisation and speech understanding. Appl Acoust 2011;72:196-204.

- 21. Singh G, Pichora-Fuller MK, Schneider BA. The effect of age on auditory spatial attention in conditions of real and simulated spatial separation. J Acoust Soc Am 2008;124:1294-305.

- 22. Singh G, Pichora-Fuller MK, Schneider BA. The effect of interaural intensity cues and expectations of target location on word identification in multi-talker scenes for younger and older adults. Auditory signal processing in hearing-impaired listeners. 1st International Symposium on Auditory and Audiological Research (ISAAR) 2007, Marienlyst, Helsingør, Denmark. Available from: http://www.audiological-library.gnresound.dk/

- 23. Powers T, Burton P. Wireless technology designed to provide true binaural amplification. Hear J 2005;58:25-34.

- 24. Sockalingam R, Holmberg M, Eneroth K, Shulte M. Binaural hearing aid communication shown to improve sound quality and localization. Hear J 2009;62:46-7.

- 25. Smith P, Davis A, Day J, Unwin S, Day G, Chalupper J. Real-world preferences for linked bilateral processing. Hear J 2008;61:33-8.

- 26. Cornelis B, Moonen M, Wouters J. Speech intelligibility improvements with hearing aids using bilateral and binaural adaptive multichannel Wiener filtering based noise reduction. J Acoust Soc Am 2012;131:4743-55.

- 27. Wiggins I, Seeber B. Speech understanding in spatially separated noise with bilaterally linked compression. International Hearing Aid Conference (IHCON), 8-12 August 2012, Lake Tahoe, CA, USA. Available from: http://www.hei.org/ihcon/

- 28. Scollie S, Seewald R, Cornelisse L, Moodie S, Bagatto M, Laurnagaray D, et al. The desired sensation level multistage input/output algorithm. Trends Amplif 2005;9:159-97.

- 29. Oticon. The audiology in Epoq, a white paper. Smørum: Oticon A/S; 2007. pp 1-36. Available from: http://www.oticon.com/~asset/cache.ashx?id=10193&type=14&format=web

- 30. Siemens. Siemens motion product guide for audiologists and hearing care providers; 2009. Available from: https://www.medical.siemens.com/siemens/en_GLOBAL/gg_au_FBAs/files/product_brochures/newproducts_fall2009/Motion_DispenserProductGuide.pdf

- 31. Nilsson M, Soli S, Sullivan J. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am 1994;95:1085-99.

- 32. Conny. Datsun180 - DATSUN_T.wav; 2005. Sound file available from: http://www.freesound.org/people/conny/sounds/2937/

- 33. Inchadney. Scottish village traffic noise.wav; 2006. Sound file available from: http://www.freesound.org/people/inchadney/sounds/21245/

- 34. Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat 2001;29:1165-88.

- 35. Middlebrooks JC. Narrow-band sound localization related to external ear acoustics. J Acoust Soc Am 1992;92:2607-24.

- 36. Keidser G, O’Brien A, Hain J, McLelland M, Yeend I. The effect of frequency-dependent microphone directionality on horizontal localization performance in hearing-aid users. Int J Audiol 2009;48:789-803.

- 37. Noble W, Byrne D, Lepage B. Effects on sound localization of configuration and type of hearing impairment. J Acoust Soc Am 1994;95:992-1005.

- 38. Blauert J. Sound localization in median plane. Acustica 1969;22:205-7.

- 39. Macpherson E, Sabin A. Binaural weighting of monaural spectral cues for sound localization. J Acoust Soc Am 2007;121:3677-88.

- 40. Wiggins I, Seeber B. Effects of dynamic-range compression on the spatial attributes of sounds in normal-hearing listeners. Ear Hear 2012;33:339-410.

- 41. Sabin A, Macpherson E, Middlebrooks J. Human sound localization at near-threshold levels. Hear Res 2005;199:124-34.

- 42. Wightman F, Kistler D. The dominant role of low-frequency interaural time differences in sound localization. J Acoust Soc Am 1992;91:1648-61.

- 43. Brimijoin WO, McShefferty D, Akeroyd MA. Auditory and visual orienting responses in listeners with and without hearing-impairment. J Acoust Soc Am 2010;127:3678-88.

- 44. Zambarbieri D, Schmid R, Versino M, Beltrami G. Eye-head coordination toward auditory and visual targets in humans. J Vestibular Res 1997;7:251-63.

- 45. Macpherson E, Cumming M, Quelch R. Accurate sound localization via head movements in listeners with precipitous high-frequency hearing loss. J Acoust Soc Am 2011;129:2486.

- 46. Kreisman B, Mazevski A, Schum D, Sockalingam R. Performance with broadband ear to ear wireless instruments. Trends Amplif 2010;14:3-11.

- 47. Naylor G, Johannesson RB. Long-term signal-to-noise ratio at the input and output of amplitude-compression systems. J Am Acad Audiol 2009;20:161-71.

- 48. Van den Bogaert T, Carette E, Wouters J. Sound source localization using hearing aids with microphones placed behind-the-ear, in-the-canal, and in-the-pinna. Int J Audiol 2011;50:164-76.

- 49. Munro K. Reorganization of the adult auditory system: Perceptual and physiological evidence from monaural fitting of hearing aids. Trends Amplif 2008;12:254-71.

- 50. Yund E, Roup C, Simon H, Bowman G. Acclimatization in wide dynamic range multichannel compression and linear amplification hearing aids. J Rehabil Res Dev 2006;43:517-35.

- 51. Neher T, Behrens T, Carlile S, Jin C, Kragelund L, Petersen A, van Schaik A. Benefit from spatial separation of multiple talkers in bilateral hearing-aid users: Effects of hearing loss, age, and cognition. Int J Audiol 2009;48:758-74.