Abstract

To participate effectively in multi-talker conversations, listeners need to do more than simply recognize and repeat speech. They have to keep track of who said what, extract the meaning of each utterance, store it in memory for future use, integrate the incoming information with what each conversational participant has said in the past, and draw on the listener’s own knowledge of the topic under consideration to extract general themes and formulate responses. In other words, to acquire and use the information contained in spoken language requires the smooth and rapid functioning of an integrated system of perceptual and cognitive processes. Here we review evidence indicating that the operation of this integrated system of perceptual and cognitive processes is more easily disrupted in older than in younger adults, especially when there are competing sounds in the auditory scene.

Key words: hearing, speech, cognition, speech understanding, speech comprehension

Introduction

Older listeners often experience communication difficulties in everyday life. For example, in noisy environments, they often find it difficult to determine who is talking and exactly what is being said. These difficulties could be due to i) hearing losses, ii) age-related changes in cognitive functioning, or iii) both of these factors. Because hearing status is highly correlated with cognitive performance in older adults (e.g., Lindenberger & Baltes, 1994), it is sometimes difficult to ascertain whether the communication difficulties they experience are a consequence of age-related changes in auditory functioning, or of cognitive declines. For example, to participate effectively in a multi-talker conversation, listeners need to do more than simply recognize and repeat speech. They have to keep track of who said what, extract the meaning of each utterance, store it in memory for future use, integrate the incoming information with what each conversational participant has said in the past, and draw on the listener’s own knowledge of the topic under consideration to extract general themes and formulate responses. In other words, to acquire and use the information contained in spoken language requires the smooth and rapid functioning of an integrated system of perceptual and cognitive processes. Recent evidence suggests that the operation of this integrated system is more easily disrupted in older than in younger adults, especially when there are competing sounds in the auditory scene (see Schneider et al., 2010; Wingfield & Tun, 2007). In turn, the increased susceptibility to disruption of language processes in older adults by competing sound sources not only leads to speech understanding difficulties, but also can negatively impact those cognitive processes that make it possible to comprehend¹ and remember what was heard.

Competing sounds disrupt speech communication on many levels. At a peripheral level, the pattern of activity they induce along the basilar membrane often overlaps that produced by the target talker, resulting in peripheral or energetic masking. Competing speech, in addition to producing energetic masking, also tends to activate phonemic, semantic, and/or linguistic processes that can interfere with speech understanding at more cognitive levels. This kind of interference is often referred to as informational masking. Age-related cochlear pathologies will reduce audibility and increase the listener’s susceptibility to energetic masking, leading to errors in speech identification. These errors, in turn, cascade upward, making it more difficult for listeners to keep track of different auditory sources and to separate streams of information for subsequent processing. At the cognitive level, age-related declines in speed of processing, working memory capacity, and the ability to suppress irrelevant information might make it more difficult for the listener to handle multiple streams of information, rapidly switch attention from one talker to another, and comprehend and store information extracted from speech for later recall.

Recent research suggests that a large part of the speech understanding difficulties encountered by healthy older adults with relatively good hearing² is due to age-related declines in sensory and perceptual processes. To compensate for these auditory declines, older adults have to engage cognitive resources more often and more fully than do younger adults to help parse the auditory scene and recover imperfectly heard material, leaving fewer resources for the higher-order tasks involved in speech comprehension.

At the cochlear level, there are a number of age-related changes that will affect the transduction of sound into neural impulses (see Schmiedt, 2010). As a result, some information in the speech signal (especially that conveyed by the high-frequency components of speech) is lost at this stage. In addition, age-related changes in retro-cochlear processes in the auditory pathways (e.g., loss of neural synchrony, disruption of binaural processing) may result in further loss of information. The net result in older adults is that the input to the cognitive processes engaged in lexical access (such as working memory, Daneman & Carpenter, 1980; Rönnberg et al., 2008) is impoverished or degraded. In the first part of this paper we will consider the consequences of this information degradation on speech understanding and speech comprehension. In the second part, we will discuss how age-related changes in the cognitive processes involved in speech understanding and comprehension can compensate to a certain degree for the impoverishment of speech signals.

Potential consequences of information degradation

Consider the sequence of events involved in repeating a spoken sentence. In cohort models of lexical access (Marslen-Wilson, 1989), it is assumed that the auditory input associated with speech activates most if not all of the cohort of words that are possible given the auditory input up until that point of time. It follows that if the speech signal is degraded, the cohort of activated words will become larger. This will place a greater processing load on those cognitive mechanisms involved in lexical access, and most likely engage compensatory, top-down processes. For example, consider how background babble could interfere with successfully identifying the last word in the following high-predictability R-SPIN sentence (Bilger et al., 1984): His plan meant taking a big risk. As a result of the babble (or of an aging auditory system) the activated cohort at any point in time is likely to be much larger than if the sentence were presented in quiet to an intact auditory system. But, because the last word is highly predictable from the sentence’s content, the information previously extracted from the speech signal, when properly comprehended, could theoretically be used to rule out many of the words activated by the acoustic input. This, in turn, would lead to more rapid and accurate lexical access for the final word in the sentence. Note, however, that the engagement of top-down attentionally-based cognitive processes potentially has two serious consequences. The first is that these mechanisms may not be available to other tasks that the individual needs or wants to engage in, and the second is that listening becomes effortful. Hence it is reasonable to ask whether aging affects the older adult’s ability to employ top-down contextual information to enhance speech recognition and comprehension.
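The way a degraded signal enlarges the activated cohort can be illustrated with a deliberately simplified toy sketch. It is not Marslen-Wilson's model: letters stand in for speech segments, a `?` marks a segment assumed to be masked, and the lexicon and the set of contextually plausible words are hypothetical.

```python
def cohort(lexicon, heard):
    """Return the words consistent with the segments heard so far.

    Letters are a crude stand-in for phonemes; '?' marks a segment
    that is assumed to have been masked and so matches anything.
    """
    return [
        word for word in lexicon
        if len(word) >= len(heard)
        and all(h == "?" or h == c for h, c in zip(heard, word))
    ]

# Hypothetical mini-lexicon (not taken from the R-SPIN materials).
lexicon = ["risk", "rust", "rest", "disk", "desk", "wrist", "list"]

clear_cohort = cohort(lexicon, "ri")      # first two segments heard clearly
degraded_cohort = cohort(lexicon, "?i")   # first segment masked

# Hypothetical set of words that fit "His plan meant taking a big ...".
plausible_in_context = {"risk"}
pruned_cohort = [w for w in degraded_cohort if w in plausible_in_context]
```

In this toy case the masked first segment lets extra candidates into the cohort, and the contextual constraint removes them again, which is the compensation described above.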

Are older adults as capable as younger adults at understanding and comprehending speech when the speech signal is impoverished either through masking (energetic or informational) or because of age-related auditory-processing declines? The answer to that question is a qualified ‘Yes’ for tasks that require both speech understanding and speech comprehension. However, in order to demonstrate that this is true, one must first ensure that younger and older adults are tested under conditions in which their auditory systems are delivering equivalently impoverished auditory signals. This is generally accomplished in two ways: i) by degrading the signal to simulate an auditory decline in younger adults roughly comparable to that experienced by older adults (Humes & Dubno, 2010); or ii) by adjusting the listening conditions to make it equally difficult for younger and older adults to recognize individual words when top-down contextually-based information is not available to assist lexical access (Schneider et al., 2000). Both approaches generally support the notion that older adults are as capable as, if not more capable than, younger adults at using contextual information to facilitate speech understanding and comprehension.

Evidence for this assertion comes from a number of studies. Consider sentences where it is difficult to use context to identify the last word, such as He wants to talk about the risk. One can individually adjust the signal-to-noise ratio (SNR) so that a listener has approximately a 20% chance of identifying the last word correctly. Typically, an older normal-hearing adult will require an SNR that is 2-4 dB higher than that needed by a young normal-hearing adult to correctly identify 20% of sentence-final words in such low-predictability sentences. If we now present the same sentence-final words to younger and older adults in high-predictability sentences (e.g., His plan meant taking a big risk), at these individually-tailored SNRs, speech recognition in older adults actually improves more than it does in younger adults (Pichora-Fuller et al., 1995). Hence, the ability to utilize top-down processes to aid speech understanding is, at the very least, preserved (if not enhanced) in older adults.
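To make the idea of an individually adjusted SNR concrete, the following minimal sketch scales a masker so that the speech-to-masker ratio reaches a chosen value. It assumes that the SNR is set by changing the masker level, which the studies cited here do not specify. The toy signals and the younger listener's SNR are hypothetical, and the 3 dB offset is simply a value within the 2-4 dB range mentioned above.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(speech, masker):
    """Speech-to-masker ratio in decibels, based on RMS amplitudes."""
    return 20.0 * math.log10(rms(speech) / rms(masker))

def masker_gain_for_snr(speech, masker, target_snr_db):
    """Linear gain for the masker that yields the target SNR."""
    # Raising the masker by (current - target) dB lowers the SNR to target.
    return 10.0 ** ((snr_db(speech, masker) - target_snr_db) / 20.0)

# Hypothetical toy signals standing in for a sentence and a babble masker.
speech = [math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
masker = [0.5 * math.sin(2 * math.pi * 13 * n / 100) for n in range(100)]

younger_snr_db = -4.0                  # hypothetical 20%-correct SNR
older_snr_db = younger_snr_db + 3.0    # assumed offset within 2-4 dB

gain = masker_gain_for_snr(speech, masker, older_snr_db)
scaled_masker = [gain * m for m in masker]
```

In practice the 20%-correct point would be estimated for each listener from measured performance rather than fixed in advance.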

A similar finding holds for tasks involving speech comprehension. Schneider et al. (2000) had younger and older adults listen to lectures in quiet and in a babble background and answer both detail and integrative questions after having listened to the lecture. Older adults scored worse than younger adults on both types of questions when they listened to these lectures under identical conditions (same SPL in quiet, same SNR in noise). When, however, the lectures were presented at the same sensation level (SL), and when the SNR (if noise was present) was individually adjusted to make it equally difficult to identify words when there was no contextual support, younger and older adults performed equivalently. Hence, under comparable levels of perceptual stress, age differences in comprehension disappeared. Murphy et al. (2006) report a similar result for younger and older adults listening to two-person plays, when the voices of the two actors, as well as the masking noise, were presented over a single loudspeaker. That is, when the SNR was adjusted to make it equally difficult for younger and older adults to recognize individual words, age differences in comprehension disappeared. However, equating for individual differences in word recognition did not eliminate all of the age differences in comprehension when the voices of the actors and the masking babble originated from spatially-separated loudspeakers. This suggests that, under some circumstances, older adults cannot benefit as much as younger adults from auditory cues such as spatial separation.

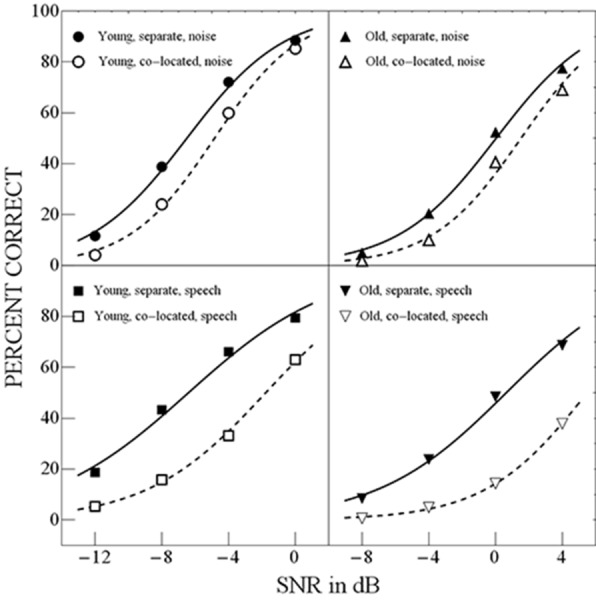

Bottom-up and top-down factors in understanding speech masked by speech

As mentioned earlier, when the masking sounds are competing speech, there may be interference (informational masking) at the cognitive level because the competing speech elicits activity in the phonemic, semantic, and/or linguistic systems that interferes with the processing of the speech signal. To function well in such situations, the listener has to be able to somehow parse the auditory stream into its component sound sources (e.g., speech target on the left, competing talkers to the centre and right), and focus his or her attention on the target speech signal (Bregman, 1990; Schneider et al., 2007). There are several bottom-up (e.g., spatial separation) and top-down (e.g., knowledge of the topic) factors that make it easier for the listener to parse the auditory scene into its component sound sources. Therefore, we could expect age-related changes in auditory processes to modulate the effectiveness of the bottom-up factors leading to a successful parsing of the auditory scene (e.g., binaural processing difficulties might mitigate the effectiveness of spatial separation), and age-related changes in cognitive processes to reduce the listener’s ability to use top-down information to achieve and/or benefit from stream segregation. However, a number of studies have shown that although older adults generally require stronger bottom-up cues to perceptually separate the target speech from competing speech, they reap approximately the same degree of benefit from stream segregation as do younger adults. For example, we know (Freyman et al., 1999) that spatially separating a target talker from a masker provided by two other talkers reduces the SNR required to identify key words in a syntactically correct but semantically anomalous sentence (e.g., A rose could paint a fish) by as much as 4-9 dB. Li et al. (2004) investigated whether older adults with good hearing would achieve the same benefit from spatial separation as do younger adults.
To control for age-related differences in the ability to use head-shadow cues (SNR differences between the two ears when sound sources are physically separated compared to when they are spatially co-located), they used the precedence effect to produce a perceived spatial separation between sound sources while maintaining the same SNR at each ear. Specifically, both the speech target and the masker were presented over two loudspeakers located to the left and right of the listener. However, to achieve the perception that the target talker was to the right of the listener, the target speech presented over the left loudspeaker always lagged that presented over the right loudspeaker by 3 ms. To achieve the perception that the masker was co-located with the speech target on the right, the masker presented over the left loudspeaker also lagged that presented over the right loudspeaker by 3 ms. Finally, to produce the perception that the target was on the right, and the masker on the left, the target played over the left loudspeaker lagged that played over the right by 3 ms, whereas the masker played on the left led that played on the right by 3 ms. Figure 1 plots the percentage of correctly identified target words for younger and older adults when the target was perceived as co-located with the masker, and when it was perceived as spatially-separated from the masker for a steady-state noise masker and for a speech masker consisting of two other people talking. Note first that the degree of release produced by perceived spatial separation (different perceived locations) was considerably less when the masker was a steady-state noise than when it was competing speech. Hence there is a much greater degree of release from a speech masker (which presumably interferes with the processing of the speech target at more central levels) than from a noise masker (whose masking effect is primarily energetic in nature).
Second, note that the degree of release from masking is approximately the same for younger and older adults, but that older adults need an SNR that is approximately 3 dB higher than that of younger adults to achieve the same level of performance at each condition in the experiment. Here again we see that, in a noisy situation, older adults with good hearing need a higher SNR than younger adults to achieve the same level of speech recognition. However, they appear to benefit as much as younger adults do from spatial separation. It is also interesting to note that evidence is accumulating that, with respect to speech-on-speech masking, older adults also can benefit as much as younger adults do from top-down cues that are known to facilitate stream segregation (Ezzatian et al., 2011; Singh et al., 2008). Hence, the major impediments to good speech understanding in older adults appear to be age-related changes in the peripheral auditory system. The consequence in most situations is that the speech understanding abilities of older adults will be equivalent to those of younger adults provided that the older adults are listening at an SNR that is 3-4 dB greater than that experienced by younger adults. However, it should be noted that in difficult listening situations, both younger and older adults most likely need to engage top-down, attention-demanding, cognitive processes to maintain a high level of speech understanding. This raises the interesting question as to whether drawing on such resources differentially affects higher-order processes involved in the comprehension of speech.
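The loudspeaker lead/lag arrangement used to create perceived locations through the precedence effect can be sketched as follows. This is only an illustration of the timing scheme described above, not the stimulus-generation code of Li et al. (2004): the function names, the sample rate in the usage line, and the choice to zero-pad and truncate the delayed copy are assumptions.

```python
def delay(signal, n_samples):
    """Delay a signal by n_samples, zero-padding the start and
    dropping the tail so the length is unchanged (an assumption)."""
    if n_samples == 0:
        return list(signal)
    return [0.0] * n_samples + list(signal[:-n_samples])

def precedence_mix(target, masker, sample_rate_hz, lag_ms=3.0, separated=True):
    """Return (left, right) loudspeaker feeds of equal-length signals.

    The target always leads on the right loudspeaker, so it is
    perceived on the right. The masker leads on the left when
    `separated` is True (perceived left) and on the right otherwise
    (perceived as co-located with the target).
    """
    lag = round(sample_rate_hz * lag_ms / 1000.0)
    target_right, target_left = list(target), delay(target, lag)
    if separated:
        masker_left, masker_right = list(masker), delay(masker, lag)
    else:
        masker_right, masker_left = list(masker), delay(masker, lag)
    left = [t + m for t, m in zip(target_left, masker_left)]
    right = [t + m for t, m in zip(target_right, masker_right)]
    return left, right

# Hypothetical usage: unit impulses at an assumed 1 kHz sample rate.
impulse = [1.0] + [0.0] * 9
silence = [0.0] * 10
left, right = precedence_mix(impulse, silence, sample_rate_hz=1000)
```

Because both loudspeakers carry both sources at the same level in every condition, only the relative timing changes between the co-located and separated cases, which is what keeps the SNR at each ear the same.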

Figure 1.

Percentage of target words correctly identified as a function of SNR for younger and older adults when the masker was speech spectrum noise (upper panels) or two-talker speech (lower panels). The maskers were either perceived to come from the same or a different location as the speech target. Adapted from Li et al. (2004) with permission of the author.

The effects of perceptual stress on higher-order cognitive processes

A prevalent theory in cognitive neuroscience is that the resources required to manipulate information gleaned from the senses are limited, and diminish with age (e.g. Craik & Byrd, 1982). This point of view is supported by a number of studies (see McDowd & Shaw, 2000) which show that adding a secondary task while engaged in a primary task (e.g., talking on your cell phone while driving a car) can have a quite deleterious effect on the primary task (e.g., an increased accident rate in cell phone users). When listening conditions are easy (single talker, clear speech, quiet background, familiar topic), the listener most likely does not need to engage top-down processes to support speech understanding. However, as listening becomes more difficult, there is a greater need to deploy such resources in order to maintain speech understanding at a reasonably high level. This, in turn, could limit the availability of some of the cognitive resources required for speech comprehension. Recall that in order to participate in a conversation, a listener not only has to be able to identify words, phrases, and sentences (speech understanding), she or he has to be able to extract the meaning of the utterances, store this information in memory for later recall, and integrate this information with the listener’s world knowledge in order to formulate an appropriate response (i.e., speech comprehension). If older listeners have fewer resources to devote to these tasks than do younger adults, or are slower in deploying them, then stressful listening situations might have a more deleterious effect on comprehension and memory in older than in younger adults. 
Although studies have shown that older adults can comprehend and recall information gleaned from lectures and plays when both younger and older adults are tested under conditions of equivalent perceptual stress, it should be noted that, in all of these cases, there was considerable contextual support for these cognitive operations (e.g., the degree of contextual support provided by a dialogue between a priest and a penitent concerning a murder committed by the penitent is substantial). The cognitive literature indicates that world knowledge is relatively unaffected by age, and that older adults depend more on context than do younger adults, and may, indeed, derive more benefit from it (Pichora-Fuller et al., 1995). Hence, provided that the contextual support for comprehension and memory is good, older adults might be able to comprehend and recall meaningful material as well as younger adults under conditions of equivalent perceptual stress, even though their cognitive resources may be more limited than those of younger adults.

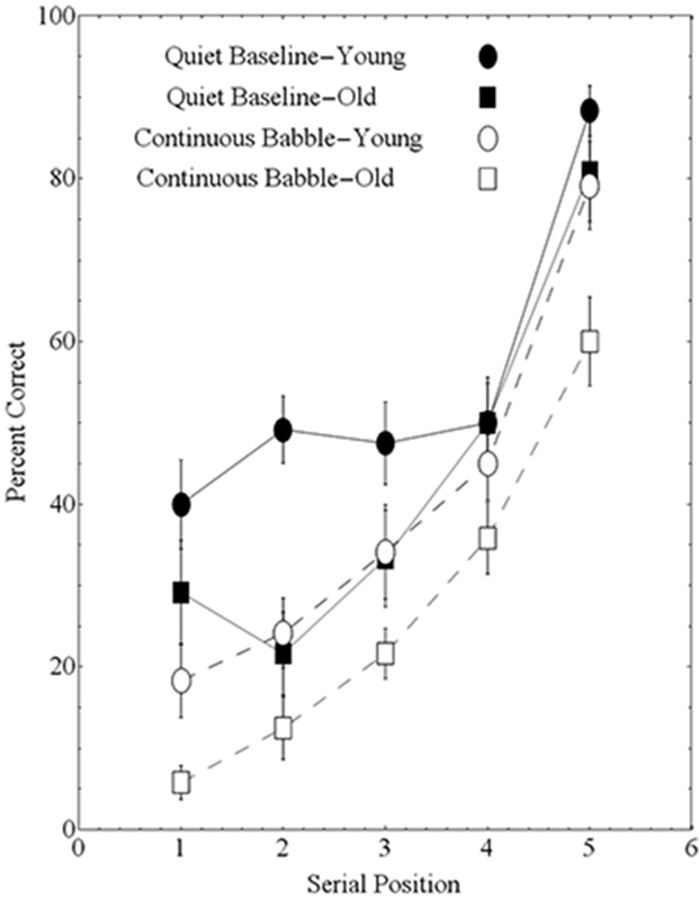

To determine whether resource limitations differentially affect the abilities of older and younger adults to remember material heard in the absence of contextual support, Murphy et al. (2000) investigated the effects of noise and aging on memory in a paired-associates paradigm. Participants were presented aurally with five word pairs (e.g., algae-rattle, helmet-clothing, jersey-luggage, slipper-music, oven-madman) separated by 4 s. Eight seconds later they were presented with the first word of one of the pairs (e.g., helmet) and asked to supply the second word of the pair. Only one randomly chosen pair was tested out of the five. The percentage of words correctly recalled as a function of their position in the series was recorded and is shown in Figure 2 when the word pairs were presented in a quiet background to both younger (filled circles) and older (filled squares) adults. Note that younger and older adults perform equivalently for the two most recently presented word pairs but that younger adults are better than the older adults at recalling words in the first three serial positions. This age difference is most often attributed to the lesser ability of older adults to encode information into long-term or episodic memory. No age difference is expected for the more recently presented words because these are presumably retained equally well by both age groups in working memory, a limited-capacity system that is hypothesized to handle the processing and storage of task-relevant information during the performance of everyday tasks such as speech comprehension (Daneman & Carpenter, 1980; Daneman & Merikle, 1996). Because the words were presented in a quiet background, it is reasonable to assume that, for both younger and older adults, the most recently presented words will be available in working memory at the time of recall.
However, if the processing and manipulation of the information required for lexical access consumes more of the resources of working memory in older than in younger adults (perhaps because of age-related declines in working memory capacity), older adults may not have the resources available to younger adults for encoding the heard words into episodic memory. Hence, a resource limitation hypothesis can explain why older adults perform more poorly than younger adults in the first three serial positions (see Heinrich et al., 2008; Heinrich & Schneider, 2011).

Figure 2.

Percentage of words correctly recalled as a function of serial position. Adapted from Murphy et al. (2000) with permission of the author.

Interestingly, if younger adults are tested in a noisy background (a 12-talker babble) their performance (unfilled circles) matches that of older (filled squares) adults in quiet (Figure 2). One interpretation of this result is that extracting the words from a background of babble increases the demand on working memory. This increase in demand reduces the pool of resources that is available for encoding items into episodic memory, resulting in memory decrements in the first three serial positions. But, provided that the increase in processing load is not so large that it interferes with the retention of recently-presented word pairs in working memory, the presence of background babble may have a negligible effect on memory for the most recently presented items. Hence, in young adults, extracting the words from noise may reduce the pool of resources available to them for encoding items into episodic memory to the same extent that age-related processing declines do for the older adults.

If noise has this effect on younger adults, how will it affect the performance of older adults? To compare younger and older adults under equivalent levels of perceptual stress, Murphy et al. (2000) adjusted SNRs so that both younger and older adults were equally likely to be able to identify the individual words involved in the test when they were presented singly. Figure 2 shows that noise tends to reduce performance in all serial positions in older adults (unfilled circles). Hence, attempting to memorize items in a continuous noise background interferes with both working memory and episodic memory in older adults whereas it appears to affect only episodic memory in younger adults.³ These studies indicate that when there is no contextual support to aid memory processes, noise has a more adverse effect on memory for words in older than in younger adults, even when we equate word identification in both age groups. How is it then that older adults can perform as well as younger adults when the task requires the listener to comprehend and recall monologues and dialogues? One possibility is that older adults may depend upon and derive a greater degree of benefit from context. A second possibility is that a larger vocabulary, and a greater store of accumulated knowledge, allows older adults to compensate for memory and other processing deficits occasioned by listening in noise.

How cognitive factors affect speech understanding and speech comprehension

I have argued that the ability of an individual to comprehend heard material will depend on both sensory and cognitive factors, and that the comprehension difficulties of healthy older adults primarily reflect clinical or sub-clinical, age-related changes in hearing.

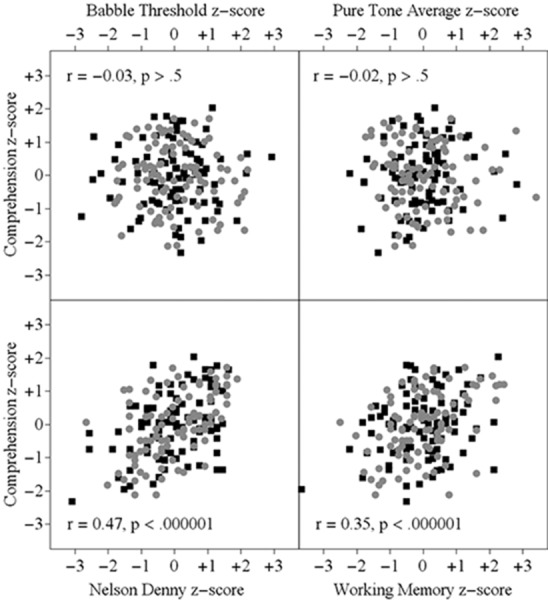

Hence, on an individual level, the cognitive abilities of older adults should modulate the degree of difficulty they experience in comprehending speech in noisy situations. This raises the question of just how much of a role is played by cognitive factors when older adults report that they are experiencing communication difficulties in everyday listening situations. To begin to answer that question we have attempted to assess the contribution of cognitive factors such as working memory and reading skill to speech comprehension. A number of researchers have argued that working memory is likely to play a critical role in speech understanding and comprehension (e.g., Rönnberg et al., 2008). In addition, it is quite likely that there are other higher-order cognitive processes, such as those involved in reading, that are likely to affect an individual’s ability to comprehend speech. To evaluate how individual differences in these abilities might affect speech comprehension, we re-examined the data from Schneider et al. (2000) and Murphy et al. (2006), where, in addition to assessing the ability of younger and older individuals to answer questions concerning monologues and dialogues, we had also collected a number of audiological and cognitive measures. A hierarchical regression analysis showed that two cognitive tests (the Nelson-Denny reading comprehension task, Brown et al., 1981, and Daneman & Carpenter’s, 1980, working memory span task) were significantly correlated with comprehension and memory on the monologue and dialogue tasks, whereas babble thresholds and average pure-tone thresholds (250-4,000 Hz) were not (Figure 3). Moreover, the degree of association between the cognitive measures and performance was essentially identical in younger and older adults. Recall that in both of these studies listening conditions were adjusted to make it equally difficult for younger and older listeners to identify individual words when these words were not supported by context. 
Hence, we might expect that neither babble thresholds nor average pure tone thresholds would be correlated with the listener’s ability to answer questions about the content of either monologues or dialogues. Figure 3 suggests that the cognitive abilities of an older adult will affect the degree to which she or he is able to comprehend speech in difficult listening situations. Older individuals with relatively high cognitive functioning will be better able to use their world knowledge to compensate, in a top-down fashion, for age-related declines in auditory processing. There is even evidence that a hard-of-hearing person’s cognitive status will affect the degree to which she or he can benefit from different types of hearing aids. For example, Lunner and Sundewall-Thorén (2007) found that a measure of working memory capacity predicted the degree to which individuals would benefit from different types of signal-processing algorithms, with those individuals who scored high on the working memory test benefitting more from a fast-acting compression algorithm than from a slow one, with the reverse being true for those with lower scores on the working memory test. Indeed a number of audiologists (e.g., Pichora-Fuller and Singh, 2006) have begun to argue that, because an individual’s cognitive status can potentially predict their ability to benefit from a range of assistive technologies, and because an individual’s cognitive status appears to determine the extent to which she or he can use top-down, knowledge-driven processes to compensate for poor hearing in difficult listening situations, audiologists should seriously consider incorporating cognitive tests into their practice.

Figure 3.

Top panels: Comprehension scores for monologues and dialogues as a function of the listener’s babble threshold (left) and pure-tone average (right). Bottom panels: The same comprehension scores as a function of the listener’s reading (Nelson-Denny, left) and working memory (right) scores. All scores have been z-transformed with respect to the experiments from which they were drawn. Younger adults (squares); older adults (circles). Data taken from Schneider et al. (2000) and Murphy et al. (2006) with permission of the authors.
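The z-transformation mentioned in the caption, applied separately within each experiment so that scores from different studies can share one axis, can be sketched as below. The experiment labels and scores are hypothetical, and the use of the sample standard deviation (rather than the population value) is an assumption, since the caption does not say which was used.

```python
from statistics import mean, stdev

def z_scores_within_experiment(scores_by_experiment):
    """Standardize each experiment's scores against that experiment's
    own mean and (sample) standard deviation."""
    standardized = {}
    for experiment, scores in scores_by_experiment.items():
        m = mean(scores)
        s = stdev(scores)  # sample SD; an assumption, see lead-in
        standardized[experiment] = [(x - m) / s for x in scores]
    return standardized

# Hypothetical comprehension scores on two differently scaled tests.
raw = {
    "monologues": [10.0, 12.0, 14.0, 16.0],
    "dialogues": [40.0, 50.0, 60.0],
}
z = z_scores_within_experiment(raw)
```

After this step each experiment's scores have a mean of zero and unit standard deviation, so a listener's position is expressed relative to the other participants in the same study.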

Conclusions

The primary reason why healthy older adults find it difficult to understand speech in difficult listening situations is that age-related changes in the auditory system make it difficult for the older adult to parse the auditory scene into its component sound sources and extract the speech signal from the background noise. In turn, the degradation of the signal impedes or slows lexical access (see Rönnberg et al., 2008, for a theory of how signal degradation might affect lexical access). It is also likely that under stressful listening conditions, signal extraction and lexical access will place a greater demand on cognitive resources such as working memory. Consequently, fewer resources will be available for many of the cognitive level operations that are required for speech comprehension (e.g., extracting meaning from the utterances, storing this information in memory), leading to poorer comprehension of speech. However, the ability of an individual to function under such conditions will depend on her or his cognitive status. Individuals with capacious and well-oiled cognitive machinery will be better able to cope with the increased demands placed on the cognitive system by a failing auditory system. They are also more likely to respond better to certain types of intervention (e.g., fast-acting compression algorithms). Finally, if we are to fully understand the hearing difficulties experienced by older adults, we need to better understand how sensory and cognitive systems interact in speech understanding and speech comprehension tasks.

Acknowledgements

This work was supported by grants from the Canadian Institutes of Health Research (MOP 15359) and the Natural Sciences and Engineering Research Council of Canada (RGPIN 9952).

Footnotes

1Following Humes & Dubno (2010) I will use the term speech understanding to refer to the ability to recognize and repeat speech. The term speech comprehension will be reserved for those processes involving the extraction, manipulation, interpretation, and storage of the information contained in the speech material. Accurately repeating spoken words or sentences demonstrates that the speech is understood. Drawing inferences from the same sentence, however, involves the comprehension of speech.

2By healthy older adults, I mean those community-living individuals older than 65 years of age, who are free of any signs of dementia, and do not have any clinically-significant hearing loss.

3Heinrich & Schneider (2011) show that the degree to which a noisy background differentially interferes with memory in younger and older adults depends upon when the noise is presented and whether it is gated on or off with the word pairs.

References

- Bilger R.B., Nuetzel M.J., Rabinowitz W.M., Rzeczkowski C., 1984. Standardization of a test of speech perception in noise. J Speech Hear Res, 27, 32-48.

- Bregman A.S., 1990. Auditory Scene Analysis: The Perceptual Organization of Sound. MIT Press, Cambridge, MA.

- Brown J.I., Bennett J.M., Hanna G., 1981. Nelson-Denny Reading Test. Riverside, Chicago.

- Craik F.I.M., Byrd M., 1982. Aging and cognitive deficits: the role of attentional resources. Craik F.I.M., Trehub S. (Eds.), Aging and Cognitive Processes. Plenum, New York, pp. 191-211.

- Daneman M., Carpenter P.A., 1980. Individual differences in working memory and reading. J Verb Learn Verb Behav, 19, 450-466.

- Daneman M., Merikle P.M., 1996. Working memory and comprehension: a meta-analysis. Psychon Bull Rev, 3, 422-433.

- Ezzatian P., Li L., Pichora-Fuller M.K., Schneider B.A., 2011. The effects of priming on release from informational masking is equivalent for younger and older adults. Ear Hear, 32(1), 84-96.

- Freyman R.L., Helfer K.S., McCall D.D., Clifton R.K., 1999. The role of perceived spatial separation in the unmasking of speech. J Acoust Soc Am, 106, 3578-3588.

- Heinrich A., Schneider B.A., Craik F.I.M., 2008. Investigating the influence of continuous babble on auditory short-term memory performance. Q J Exp Psychol, 61, 735-751.

- Heinrich A., Schneider B.A., 2011. Elucidating the effects of ageing on remembering perceptually distorted word-pairs. Q J Exp Psychol, 64, 186-205.

- Humes L.E., Dubno J.R., 2010. Factors affecting speech understanding in older adults. Gordon-Salant S., Frisina R.D., Popper A.N., Fay R.R. (Eds.), Springer Handbook of Auditory Research: The Aging Auditory System: Perceptual Characterization and Neural Bases of Presbycusis. New York, Springer, pp. 211-258.

- Li L., Daneman M., Qi J., Schneider B.A., 2004. Does the information content of an irrelevant source differentially affect spoken word recognition in younger and older adults? J Exp Psychol Hum Percept Perform, 30, 1077-1091.

- Lindenberger U., Baltes P.B., 1994. Sensory functioning and intelligence in old age: a sensory connection. Psychol Aging, 9, 339-355.

- Lunner T., Sundewall-Thorén E., 2007. Interactions between cognition, compression, and listening conditions: effects on speech-in-noise performance in a two-channel hearing aid. J Am Acad Audiol, 18, 604-617.

- Marslen-Wilson W., 1989. Access and integration: projecting sound onto meaning. Marslen-Wilson W. (Ed.), Lexical Representation and Process. MIT Press, Cambridge, MA.

- McDowd J.M., Shaw R.J., 2000. Attention and aging: a functional perspective. Craik F.I.M., Salthouse T.A. (Eds.), The Handbook of Aging and Cognition, 2nd Ed. Erlbaum, Mahwah, NJ, pp. 221-292.

- Murphy D.R., Craik F.I.M., Li K.Z.H., Schneider B.A., 2000. Comparing the effects of aging and background noise on short-term memory performance. Psychol Aging, 15, 323-334.

- Murphy D.R., Daneman M., Schneider B.A., 2006. Why do older adults have difficulty following conversations? Psychol Aging, 21, 49-61.

- Pichora-Fuller M.K., Singh G., 2006. Effects of age on auditory and cognitive processing: Implications for hearing aid fitting and audiological rehabilitation. Trends Amplif, 10, 29-59.

- Pichora-Fuller M.K., Schneider B.A., Daneman M., 1995. How young and old adults listen to and remember speech in noise. J Acoust Soc Am, 97, 593-608.

- Rönnberg J., Rudner M., Foo C., Lunner T., 2008. Cognition counts: A working memory system for ease of language understanding. Int J Audiol, 47, (1), supplement 2: S99-S105.

- Schmiedt R.A., 2010. The physiology of cochlear presbycusis. Gordon-Salant S., Frisina R.D., Popper A.N., Fay R.R. (Eds.), Springer Handbook of Auditory Research: The Aging Auditory System: Perceptual Characterization and Neural Bases of Presbycusis. New York, Springer, pp. 9-38.

- Schneider B.A., Daneman M., Murphy D.R., Kwong See S., 2000. Listening to discourse in distracting settings: the effects of aging. Psychol Aging, 15, 110-125.

- Schneider B.A., Li L., Daneman M., 2007. How competing speech interferes with speech comprehension in everyday listening situations. J Am Acad Audiol, 18, 578-591.

- Schneider B.A., Pichora-Fuller M.K., Daneman M., 2010. The effects of senescent changes in audition and cognition on spoken language comprehension. Gordon-Salant S., Frisina R.D., Popper A.N., Fay R.R. (Eds.), Springer Handbook of Auditory Research: The Aging Auditory System: Perceptual Characterization and Neural Bases of Presbycusis. New York, Springer, pp. 167-210.

- Singh G., Pichora-Fuller M.K., Schneider B.A., 2008. The effect of age on auditory spatial attention in conditions of real and simulated spatial separation. J Acoust Soc Am, 124, 1294-1305.

- Wingfield A., Tun P.A., 2007. Cognitive supports and cognitive constraints on comprehension of spoken language. J Am Acad Audiol, 18, 548-558.