Abstract

Background

Computerized reminder systems (CRS) show promise for increasing preventive services such as colorectal cancer (CRC) screening. However, prior research has not evaluated a generalizable CRS across diverse, community primary care practices. We evaluated whether a generalizable CRS, ClinfoTracker, could improve screening rates for CRC in diverse primary care practices.

Methods

The study was a prospective trial to evaluate ClinfoTracker using historical control data in 12 Great Lakes Research into Practice Network community-based, primary care practices distributed from Southeast to Upper Peninsula Michigan. Our outcome measures were pre- and post-study practice-level CRC screening rates among patients seen during the 9-month study period. Ability to maintain the CRS was measured by days of reminder printing. Field notes were used to examine each practice’s cohesion and technology capabilities.

Results

All but one practice increased their CRC screening rates, ranging from 3.3% to 16.8% improvement. Adjusted for within-practice correlation, t tests showed improvement in screening rates across all 12 practices, from 41.7% to 50.9%, P = 0.002. Technology capabilities impacted printing days (74% for high technology vs. 45% for low technology practices, P = 0.01), and cohesion demonstrated an impact trend for screening (15.3% rate change for high cohesion vs. 7.9% for low cohesion practices).

Conclusions

Implementing a generalizable CRS in diverse primary care practices yielded significant improvements in CRC screening rates. Technology capabilities are important in maintaining the system, but practice cohesion may have a greater influence on screening rates. This work has important implications for practices implementing reminder systems.

Despite high levels of awareness among primary care physicians of the recommendations for colorectal cancer (CRC) screening, rates of screening remain low nationally.1 One solution that has been proposed and studied to remedy this problem is computerized reminders. Studies that have examined the use of computerized reminders to increase cancer screening rates have generally shown favorable results. Unfortunately, the potential for gains has been outweighed in the past by the fact that computerized systems are frequently designed around specific practices’ computer infrastructure.2–4 Therefore, these systems have largely not been generalizable or sustainable. In addition, few of the reminder system studies have been performed in community-based practices, most likely because of technology barriers.

Another theme in the primary care preventive services literature explores the role of practice organization. One group has conceptualized this in terms of “tools, teamwork and tenacity.”5 In this model, technology is a tool that operates within the context of a complex, adaptive system. The specific role that the system plays is just now being explored, especially with respect to the implementation of electronic health records (EHR).6 It is clear based on this work that unless attention is devoted to the organizational context, efforts to introduce new technology into a practice are not likely to produce consistent results and may produce negative results.7

Prompting and reminding at encounters for prevention (PREP) was undertaken to examine the feasibility of integrating a computerized reminder system (CRS) into a wide variety of practice infrastructures and to examine potential differential effects of directing encounter-based reminders toward clinicians, patients, or both. The study was designed to recruit practices from nonacademic, community settings to maximize the generalizability of its findings. In addition to examining whether a generalizable reminder system could produce increased CRC screening rates, we also sought to understand the impact of practices’ organizational contexts.

METHODS

Study Setting and Practice Recruitment

PREP was conducted during 2003–2005 in 13 community practices in the Great Lakes Research into Practice Network (GRIN), a statewide practice-based research network located in Michigan. We sought to select practices that were diverse in size, organizational structure, and location. In an initial GRIN survey, 43 practices expressed interest. We excluded residency-training practices due to concerns about generalizability. We mailed a paper survey to the remaining interested practices, made follow-up phone calls to further explain the study, and finally made site visits to 16 practices that continued to be interested. Three of these practices declined after the site visit, resulting in a study group of 13 practices. One practice dropped out during the study due to an ownership change. Characteristics of the practices are summarized in Table 1. Although primarily located in rural settings (Fig. 1), the practices represented a wide range of sizes and management styles. Apart from a largely rural distribution, practice characteristics were comparable to national norms for family physicians.8

TABLE 1.

Characteristics of PREP Study Practices (n = 12)

| Characteristic | Range | PREP Mean | Overall GRIN Practice Mean |
|---|---|---|---|
| Provider numbers | | | |
| Physicians | 0–12 | 3.5 | 4.8 |
| Non-physician clinicians | 0–2 | 1.2 | 1.1 |
| Mean number of patients seen per day | 18–150 | 54.0 | 45.6 |
| Patient gender distribution | | | |
| Female (%) | 50–65 | 58.4 | Not available |
| Male (%) | 35–50 | 41.6 | |
| Patient race/ethnicity distribution | | | |
| White (%) | 50–99 | 85.3 | 59.2 |
| Latino (%) | 0–20 | 4.3 | 7.2 |
| Black (%) | 0–20 | 3.2 | 26.8 |
| Other (%) | 0–14 | 4.6 | 7.1 |
| Patient insurance status | | | |
| Medicare (%) | 1–48 | 24.4 | 23.9 |
| Medicaid (%) | 5–50 | 18.5 | 17.3 |
| Managed care (%) | 0–60 | 20.8 | 22.7 |
| Traditional fee for service (%) | 1–50 | 23.7 | 26.7 |
| Self pay (%) | 5–27 | 10.8 | 9.4 |

FIGURE 1.

Location of PREP practices.

After entry into the study, Institutional Review Board (IRB) approval was sought from University of Michigan IRBMED, Michigan State University’s Community Research IRB, Synergy Medical Education Alliance of Saginaw, Michigan, and St. Mary’s Health Care IRB of Grand Rapids, Michigan. HIPAA Business Associate agreements were executed between the participating practices and the project.

Intervention

We used ClinfoTracker as our computerized reminder system. ClinfoTracker has been described elsewhere.9 ClinfoTracker is capable of tracking complete coded problem lists for patients and delivering a wide variety of reminders for preventive and disease management services. However, for PREP, the system was limited to providing CRC screening reminders. ClinfoTracker’s CRC screening reminders are driven according to US Preventive Services Task Force guidelines by age and history of prior screening services, be these fecal occult blood test, flexible sigmoidoscopy, colonoscopy, or air contrast barium enema.10
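The age-and-history rule described above can be sketched in a few lines. This is an illustrative sketch only, not ClinfoTracker's actual logic: the function names, the dictionary keys, and the look-back intervals (1 year for fecal occult blood testing, 5 years for sigmoidoscopy and barium enema, 10 years for colonoscopy) are assumptions chosen for the example.

```python
from datetime import date
from typing import Dict, Optional

# Assumed look-back windows in years; illustrative values, not taken from the study.
SCREENING_INTERVAL_YEARS = {
    "fecal_occult_blood_test": 1,
    "flexible_sigmoidoscopy": 5,
    "air_contrast_barium_enema": 5,
    "colonoscopy": 10,
}
MIN_SCREENING_AGE = 50  # the study population was patients aged 50 or older


def years_between(earlier: date, later: date) -> int:
    """Whole years elapsed from `earlier` to `later`."""
    years = later.year - earlier.year
    if (later.month, later.day) < (earlier.month, earlier.day):
        years -= 1
    return years


def crc_reminder_due(
    birth_date: date,
    last_tests: Dict[str, Optional[date]],
    today: date,
) -> bool:
    """Return True when the patient is old enough and no prior test still covers them."""
    if years_between(birth_date, today) < MIN_SCREENING_AGE:
        return False
    for test, interval in SCREENING_INTERVAL_YEARS.items():
        performed = last_tests.get(test)
        if performed is not None and years_between(performed, today) < interval:
            return False  # a sufficiently recent test means no reminder is needed
    return True
```

A patient aged 54 with a colonoscopy 6 years earlier would not trigger a reminder under these assumed intervals, whereas the same patient with no recorded tests would.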

During 2–3 day implementation visits, ClinfoTracker was installed, staff and clinicians were trained in its use, workflow observations were made, and field notes were gathered on the practices. All practices provided an electronic data file of their patients aged 50 or older for population of their ClinfoTracker system. Whenever possible, we also obtained dates of recent fecal occult blood testing, flexible sigmoidoscopy, and colonoscopy from electronic billing data. In all but 2 practices, ClinfoTracker was integrated with electronic appointment systems so that reminders were automatically printed in advance of patient appointments. In the 2 practices without electronic scheduling, patient charts were preseeded with reminder forms.

Our study protocol randomized practices into 3 arms specifying delivery of reminders to clinicians only, patients only, or both patients and clinicians. ClinfoTracker prints reminders for clinicians on encounter forms and patient reminders on separate forms. Practices were informed that they would be randomized to one of these arms before agreeing to take part, and staff and clinicians were informed of and given training in the delivery of reminders based on their assigned arm. Five practices were randomized to the clinicians only arm, and 4 practices each were randomized to the patients only arm and to the patients and clinicians arm. However, despite our efforts to educate practices on these strategies, practices in the patients only arm found it impractical or illogical to deliver reminders solely to patients, and instead delivered the reminders to their clinicians after only a few weeks of the study. Therefore, our analysis of reminder strategy compared 9 clinician only practices to 4 patient and clinician practices.

During the 9-month study period, the study team stayed in contact with each practice through check-in calls every 2 weeks. Periodic electronic data pulls were also performed to ensure that the reminder systems were being maintained at the practices and to examine interim data. Study practices also initiated contact on an as needed basis for technical support with the reminder system. Six months into the study period one of the study practices requested permission to terminate the study due to a complete turnover in their clinician staff, leaving the study with 12 practices participating in the full 9-month study period.

At the end of the study period, we spent 2–3 days at each study site. At each site, we performed chart reviews on a random sample of 50 female patients and 50 male patients who had visited the practice during the study period. During this final site visit, a focus group was conducted with the entire office staff to debrief on their experiences of working with the ClinfoTracker system.

Data Collection/Analysis

Screening Data

Data collected by means of the ClinfoTracker software included all dates of patient encounters and the clinician’s response to prompting (whether they checked “ordered,” “discussed,” “not a candidate,” “not addressed” or “done previously”), prior CRC screening history, current screening status, and demographic information. These data were used to determine each patient’s actual CRC screening status at the beginning and at the end of the study period, which was our primary outcome. Similar data points were gathered from the chart review. We calculated the level of agreement between these 2 methods of assessing screening status with Cohen’s kappa statistic (0.43, P < 0.001), indicating a “moderate” level of agreement.11 Because of the larger patient sample size in the total ClinfoTracker data from each practice, we used the ClinfoTracker data for all analyses.
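For readers less familiar with the agreement statistic, the following is a minimal sketch of how Cohen’s kappa is computed for two binary ratings (here, "screened" according to ClinfoTracker versus according to the chart) and how the Landis and Koch bands map a value to a label. The function names are hypothetical, and any counts passed in are for illustration only; they are not the study's data.

```python
def cohens_kappa(both_yes: int, a_only: int, b_only: int, both_no: int) -> float:
    """Cohen's kappa for a 2x2 agreement table between two raters (A and B)."""
    n = both_yes + a_only + b_only + both_no
    observed = (both_yes + both_no) / n          # proportion of cases where A and B agree
    a_yes = (both_yes + a_only) / n              # marginal "yes" rate for rater A
    b_yes = (both_yes + b_only) / n              # marginal "yes" rate for rater B
    expected = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)  # agreement expected by chance
    return (observed - expected) / (1 - expected)


def landis_koch_label(kappa: float) -> str:
    """Descriptive band for a kappa value, following Landis and Koch."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"
```

Under this banding, the reported value of 0.43 falls in the "moderate" range, consistent with the description in the text.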

Our analysis of the screening data focused on a comparison of the screening rates at the beginning of the study versus the end. We excluded the practice that did not complete the full 9-month study period. We estimated screening rates primarily using data extracted from the ClinfoTracker system. Electronic screening rates were estimated using a denominator based on eligibility for CRC screening at age of 50 or greater at baseline and a numerator of those who were up to date at baseline and the end of the study. We also performed a random chart audit of 100 CRC screening eligible patients in each practice to confirm the ClinfoTracker rates. We computed the change in screening rates for each practice and analyzed the effects of percent days printing, treatment arm, technology adoption, and organizational cohesion on these changes using standard weighted linear regression methods. The weight applied to each practice was the inverse of the variance of the change in its screening rates, which required adjustment for the level of intrapractice correlation, estimated to be ρ = 0.05.
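The weighting scheme can be illustrated with a short sketch. It rests on two simplifying assumptions that are ours, not the paper's: the variance of each practice's rate is the binomial variance inflated by the usual design effect 1 + (n − 1)ρ, and the variance of the change is approximated as the sum of the baseline and end-of-study variances. The function names and any input values are hypothetical.

```python
RHO = 0.05  # intrapractice correlation reported in the text


def design_effect(n: int, rho: float = RHO) -> float:
    """Variance inflation for n correlated patients within one practice."""
    return 1.0 + (n - 1) * rho


def rate_variance(p: float, n: int, rho: float = RHO) -> float:
    """Binomial variance of a screening rate p, inflated by the design effect."""
    return p * (1.0 - p) / n * design_effect(n, rho)


def change_weight(p_base: float, p_end: float, n: int, rho: float = RHO) -> float:
    """Inverse of the (assumed) variance of the change in a practice's rate."""
    var_change = rate_variance(p_base, n, rho) + rate_variance(p_end, n, rho)
    return 1.0 / var_change


def weighted_mean_change(practices, rho: float = RHO) -> float:
    """Inverse-variance weighted mean change; `practices` holds (p_base, p_end, n) tuples."""
    weights = [change_weight(b, e, n, rho) for b, e, n in practices]
    changes = [e - b for b, e, _ in practices]
    return sum(w * d for w, d in zip(weights, changes)) / sum(weights)
```

The weighted mean shown here is what an intercept-only weighted linear regression would estimate; adding covariates such as treatment arm or technology adoption would require a regression routine using the same weights.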

Printing Data

To obtain a measure of how consistently practices were able to use the system, we referenced ClinfoTracker logs to determine the number of days during the study that each practice actually printed reminders. After adjusting for holidays and weekends during the study period, a figure was calculated for the percentage of days that printing occurred during the study.
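A minimal sketch of this calculation follows, assuming the log can be reduced to a list of dates on which reminders were printed and that the holiday list is supplied by the analyst. The function names and the log representation are hypothetical; the actual ClinfoTracker log format is not described here.

```python
from datetime import date, timedelta
from typing import Iterable, List


def working_days(start: date, end: date, holidays: Iterable[date] = ()) -> List[date]:
    """Weekdays from start to end (inclusive), excluding the supplied holidays."""
    skipped = set(holidays)
    days = []
    current = start
    while current <= end:
        if current.weekday() < 5 and current not in skipped:  # Monday=0 ... Friday=4
            days.append(current)
        current += timedelta(days=1)
    return days


def percent_days_printing(
    print_dates: Iterable[date],
    start: date,
    end: date,
    holidays: Iterable[date] = (),
) -> float:
    """Percentage of eligible working days on which at least one reminder was printed."""
    eligible = set(working_days(start, end, holidays))
    if not eligible:
        raise ValueError("no eligible working days in the study window")
    printed = eligible & set(print_dates)  # printing on weekends or holidays is ignored
    return 100.0 * len(printed) / len(eligible)
```

Printing that happens on a weekend or holiday is dropped from the numerator in this sketch so that the percentage cannot exceed 100.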

Qualitative Data

The study team took extensive field notes during each site visit, including the recruitment visit. These contained both observational information about practice dynamics and operational information regarding how ClinfoTracker was integrated into each practice. Field notes were maintained in a study folder on our secure research fileserver. All study team members kept abreast of the field notes during the study’s progress.

To develop themes and categories from our qualitative data that could be used to characterize our practices, we followed methods that included phases described by Crabtree and Miller of immersion and crystallization and connecting, combined with a templated approach.12,13 After the final site visit and before analysis of quantitative data, a daylong meeting of the study team was convened to review the field notes and summarize the team’s observations on each practice. After detailed discussion of the practices and our recorded notes, consensus was reached during the first part of the meeting on the importance of practices’ organizational cohesion and technology attributes. Briefly, we defined the technology factor as the practice’s use of technology resources or infrastructure to ensure project success. As only 2 practices had an electronic medical record at the end of the study, technology had more to do with use of other electronic systems such as electronic billing, scheduling, and internet connectivity and resources to support technology. We defined organizational cohesion as having the ability to adapt to change without significantly compromising the cohesion and productivity of team members. An observed example of this was practice staff working together to adapt and modify existing workflows when unexpected problems arose.

To further the discussion, during the second half of the meeting, the study team ranked each practice on the basis of their organizational and technical attributes. The discussion during the meeting provided a rich summary of the study team’s impressions that were built on existing field notes with additional observations and interpretations about the practices. This provided a second-order summary of the data both in general terms and in terms of organizational and technical attributes that either facilitated or hindered practices from implementing and using ClinfoTracker. The entire qualitative data meeting was audio recorded and then transcribed.

Two study team members iteratively coded the transcribed text from the qualitative data meeting using Hyper-Research software, and the coded text was subsequently reviewed by 5 study team members with resolution of disagreements about coding being achieved by a consensus process. Study team members were asked to independently rate each practice positively or negatively on the factors of technology and organizational cohesion as they impacted implementation and use of ClinfoTracker. A final study team meeting was held to resolve differences in ratings through a consensus process, with the coded text used as a reference.

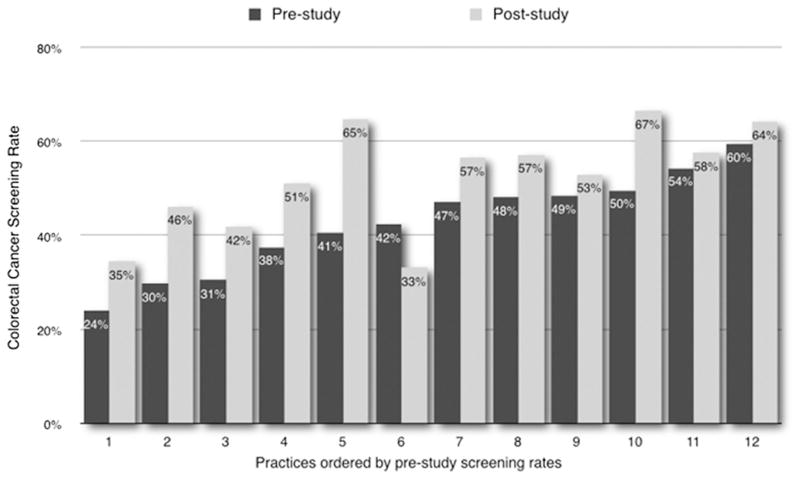

RESULTS

Excluding the 2 practices that did not have an electronic appointment system to enable full ClinfoTracker integration, practices printed reminders on average 59% of study days (range, 38–99%). Figure 2 depicts the baseline and 9-month CRC screening rates for each practice. Baseline screening rates in the 12 practices averaged 41.7% (range, 24.1–59.6%). Nine-month CRC screening rates averaged 50.9% (range, 33.2–66.5%). There was an average 9% increase in CRC screening rates (P = 0.002) (range, 9–24%). Printing days did not influence screening rate improvement. No significant differences or trends were seen in the changes in screening rates between practices in the original randomization groups or the clinician versus patient and clinician reminder groups that practices moved into.

FIGURE 2.

Pre- and post-study colorectal cancer screening rates by practice.

Analysis of the impact of technology and organizational cohesion factors demonstrated that high technology practices printed on 74% versus 45% of days for low technology practices (P = 0.01). There was no impact on printing from organizational cohesion, nor any interaction effect between technology adoption and organizational cohesion. Examining the contributions of each of these factors on CRC screening rates, trends were observed for greater average improvement in practices with higher organizational cohesion (15.3% vs. 7.9% for low cohesion practices), as well as for lower average improvement in high technology practices (13.3% for low technology vs. 8.0% for high technology practices). Taking both into account, in low technology practices the presence of higher organizational cohesion was associated with an average increase in screening rates of 2%. Among high technology practices, this trend was much stronger, showing a mean difference of 7.9% (Table 2). Because of the small numbers of practices in the study, none of these trends reached statistical significance.
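The two cohesion contrasts quoted above (2% and 7.9%) follow directly from the cell means in Table 2. The short sketch below reproduces that arithmetic; the dictionary layout and function name are ours and serve only as an illustration.

```python
# Cell means copied from Table 2 (change in CRC screening rate, in percentage points).
TABLE_2 = {
    ("low_tech", "low_cohesion"): 12.2,
    ("low_tech", "high_cohesion"): 14.2,
    ("high_tech", "low_cohesion"): 6.9,
    ("high_tech", "high_cohesion"): 14.8,
}


def cohesion_contrast(tech: str) -> float:
    """High-cohesion minus low-cohesion mean change within one technology stratum."""
    high = TABLE_2[(tech, "high_cohesion")]
    low = TABLE_2[(tech, "low_cohesion")]
    return round(high - low, 1)  # rounded to match the table's one-decimal precision
```

Calling `cohesion_contrast("low_tech")` and `cohesion_contrast("high_tech")` gives the two differences reported in the text.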

TABLE 2.

Mean CRC Screening Rate Changes In Practices Grouped By Technology Adoption and Organizational Cohesion Ratings

| Organizational cohesion | Low technology adoption (n = 6) (%) | High technology adoption (n = 6) (%) | Overall (%) |
|---|---|---|---|
| Low (n = 8) | 12.2 | 6.9 | 7.9* |
| High (n = 4) | 14.2 | 14.8 | 15.3† |
| Overall | 13.3‡ | 8.0§ | |

*Mean screening rate change for all “low cohesion” practices (P = 0.026).

†Mean screening rate change for all “high cohesion” practices.

‡Mean screening rate change for all “low technology” practices (P = 0.004).

§Mean screening rate change for all “high technology” practices (P = NS).

DISCUSSION

The PREP study set out to examine the feasibility and potential impact of introducing a generalizable CRS into a diverse set of community primary care practices. Prior studies of computerized reminders have been largely limited to practices within a single health system using standardized technology. A recent review examining information technology and cancer screening confirmed this limitation of most prior work.14 To our knowledge, our study is the first to evaluate integration of a computerized reminder system into a diverse set of community-based primary care practices. Another strength of our study is that we examined, as our primary outcome, actual screening status rather than intermediate endpoints such as screening orders. The main results from PREP demonstrate that it is possible to use a common reminder system in a diverse set of practices and achieve significant gains in CRC screening across the group.

Beyond these significant results, however, we are aware from prior work of the importance of factors other than the technology being used to deliver the reminders. Indeed, we became increasingly aware of this as our project progressed. Introduction of any new process requires a practice to adapt and change its existing ways of doing business. Introduction of a new technology may also stress a practice that has limited resources for information technology support, or support that itself is resistant to introduction of a new technology. The lack of an observed impact of organizational cohesion on printing days suggests that when technology resources exist in a practice, they are sufficient to keep a computerized system running. However, this does not guarantee an impact on outcomes. Our findings of trends toward greater increases in CRC screening in practices where we observed higher organizational cohesion echo other literature that highlights the importance of teams and change management in primary care practices.15–18 Simply printing reminders does not ensure that they are available, accepted, and used by a practice.

A full analysis of our qualitative data will be helpful in further developing the themes we report in this article. For this article, we report the preliminary, templated analysis we have performed to date. Because this analysis sheds an important and unique light on our data, we feel it is important to include it here. Reporting the effects we saw based on technology capabilities and cohesion may spur other investigators to include this type of analysis in their work on how practices implement technology solutions.

Primary care practices are complex organizations that encompass individuals of varied backgrounds, educational attainment, and skill sets. These practices evolve their various workflows in response to numerous influences, both internal and external. For example, efforts to promote CRC screening in a practice may be influenced by prior experiences with patients being diagnosed with colorectal cancer, availability of endoscopic screening, the payor mix and socioeconomic status of the patient population, and sense of “mission” around prevention, among other factors. These influences in turn exert themselves in an organizational context that may be team-oriented with shared decision-making about practice goals and mission, or a context that is hierarchical with decisions being handed down by practice leadership. Hierarchical organization can even manifest itself within solo practices, as we observed to the detriment of an otherwise beneficial technology. This view of primary care practice and the need for team approaches in concert with other strategies is embodied in a recent review.19

Others’ work suggests that where practices have the organizational resources to change and adapt, simple tracking and reminder systems may work as well or better than sophisticated EHR. Orzano et al20 recently published work showing that EHR technology by itself was not enough to improve key measures but that practices reporting use of identification and tracking systems did demonstrate improvements. This same group has also just published findings demonstrating potential negative effects of EHR use in practices.7 Finally, a recent analysis of National Ambulatory Medical Care Survey data also fails to show a demonstrable impact of EHR technology on quality of care.21

Our primary study limitations were the small number of practices involved and the relatively short time window of observation. Our assessment of impact was limited by the scope of the study, which required the use of historical controls. Additionally, the practices’ unwillingness to adhere to the patient only reminder arm was a limitation. These limitations manifested in our inability to demonstrate significant differences between reminder strategies and significant impacts of our organizational factors. We were also highly dependent on the practices’ staff to reliably ensure operation of ClinfoTracker, including entry of data into the system showing screening completion. In future reports, we hope to explore in greater depth clinicians’ recorded responses to individual reminders, as opposed to the primary outcome of screening status, as well as explore our extensive qualitative data.

A brief comment is warranted regarding our decision to use the ClinfoTracker data for our analyses rather than the chart audit data. We made this decision based on the larger number of analyzable patients in the ClinfoTracker data and the fact that our kappa showed moderate agreement between the two. There was likely missing data both in charts and in the computer system. Given that we did not find evidence of a systematic bias in over- or under-reporting of the screening data in the chart versus computer data, we feel comfortable in our decision to base our primary analysis on the ClinfoTracker data.

In summary, PREP successfully demonstrated that a generalizable CRS could improve CRC screening in diverse primary care practices. This work has important implications for practices that may be reluctant or unable to implement a full EHR in pursuit of quality improvement. We are building on this work through the further development and licensing of the software to Cielo MedSolutions, LLC. The commercial version of the software, Cielo Clinic, is being tested in further clinical trials.

Acknowledgments

Supported by National Cancer Institute/Agency for Healthcare Research and Quality “Colorectal Cancer Screening in Primary Care Practice” program grant R21 CA104484.

The authors wish to acknowledge their participating practices: Advantage Health NE Office, Grand Rapids, MI; Alcona Health Center, Lincoln, MI; Alpena Medical Arts, Alpena, MI; Charlotte Medical Group, Charlotte, MI; Louis L. Constan MD, Saginaw, MI; Gwinn Medical Center, Gwinn, MI; Ingham County Health Center, Leslie/Stockbridge, MI; Little Traverse Family Care, Harbor Springs, MI; Northern Menominee Health Center, Spalding, MI; OSF Medical Group, Escanaba and Powers, MI; Shelby Family Care Center, Shelby, MI; Wyoming Family Medicine, Wyoming, MI.

References

- 1. Seeff LC, Nadel MR, Klabunde CN, et al. Patterns and predictors of colorectal cancer test use in the adult U.S. population. Cancer. 2004;100:2093–2103. doi: 10.1002/cncr.20276.

- 2. McDonald CJ, Hui SL, Smith DM, et al. Reminders to physicians from an introspective computer medical record. A two-year randomized trial. Ann Intern Med. 1984;100:130–138. doi: 10.7326/0003-4819-100-1-130.

- 3. Garr DR, Ornstein SM, Jenkins R, et al. The effect of routine use of computer-generated preventive reminders in a clinical practice. Am J Prev Med. 1993;9:55–61.

- 4. Rossi RA, Every NR. A computerized intervention to decrease the use of calcium channel blockers in hypertension. J Gen Intern Med. 1997;12:672–678. doi: 10.1046/j.1525-1497.1997.07140.x.

- 5. Carpiano RM, Flocke SA, Frank SH, et al. Tools, teamwork, and tenacity: an examination of family practice office system influences on preventive service delivery. Prev Med. 2003;36:131–140. doi: 10.1016/s0091-7435(02)00024-5.

- 6. Crosson JC, Stroebel C, Scott JG, et al. Implementing an electronic medical record in a family medicine practice: communication, decision making, and conflict. Ann Fam Med. 2005;3:307–311. doi: 10.1370/afm.326.

- 7. Crosson J, Ohman-Strickland P, Hahn K, et al. Electronic medical records and diabetes quality of care: results from a sample of family medicine practices. Ann Fam Med. 2007;5:209–215. doi: 10.1370/afm.696.

- 8. American Academy of Family Physicians. Facts about family medicine. 2007. Available at: http://www.aafp.org/online/en/home/aboutus/specialty/facts.html. Accessed November 14, 2007.

- 9. Nease DE, Jr, Green LA. ClinfoTracker: a generalizable prompting tool for primary care. J Am Board Fam Pract. 2003;16:115–123. doi: 10.3122/jabfm.16.2.115.

- 10. U.S. Preventive Services Task Force. Screening for colorectal cancer: recommendation and rationale. Ann Intern Med. 2002;137:129–131. doi: 10.7326/0003-4819-137-2-200207160-00014.

- 11. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

- 12. Crabtree BF, Miller WL. Using codes and code manuals: a template organizing style of interpretation. In: Crabtree BF, Miller WL, editors. Doing Qualitative Research. Thousand Oaks, CA: Sage Publications; 1999. pp. 163–177.

- 13. Miller WL, Crabtree BF. The dance of interpretation. In: Crabtree BF, Miller WL, editors. Doing Qualitative Research. Thousand Oaks, CA: Sage Publications; 1999. pp. 127–143.

- 14. Jimbo M, Nease DE, Jr, Ruffin MT, IV, et al. Information technology and cancer prevention. CA Cancer J Clin. 2006;56:26–36. doi: 10.3322/canjclin.56.1.26.

- 15. Solberg L. Improving medical practice: a conceptual framework. Ann Fam Med. 2007;5:251–256. doi: 10.1370/afm.666.

- 16. Stroebel CK, McDaniel RR, Jr, Crabtree BF, et al. How complexity science can inform a reflective process for improvement in primary care practices. Jt Comm J Qual Patient Saf. 2005;31:438–446. doi: 10.1016/s1553-7250(05)31057-9.

- 17. Gask L. Powerlessness, control, and complexity: the experience of family physicians in a group model HMO. Ann Fam Med. 2004;2:150–155. doi: 10.1370/afm.58.

- 18. Cohen D, McDaniel RR, Jr, Crabtree BF, et al. A practice change model for quality improvement in primary care practice. J Healthc Manag. 2004;49:155–168; discussion 169–170.

- 19. Klabunde C, Lanier D, Breslau E, et al. Improving colorectal cancer screening in primary care practice: innovative strategies and future directions. J Gen Intern Med. 2007;22:1195–1205. doi: 10.1007/s11606-007-0231-3.

- 20. Orzano A, Strickland P, Tallia A, et al. Improving outcomes for high-risk diabetics using information systems. J Am Board Fam Med. 2007;20:245–251. doi: 10.3122/jabfm.2007.03.060185.

- 21. Linder JA, Ma J, Bates DW, et al. Electronic health record use and the quality of ambulatory care in the United States. Arch Intern Med. 2007;167:1400–1405. doi: 10.1001/archinte.167.13.1400.