Abstract

The aim of this paper is to develop a sparse projection regression modeling (SPReM) framework to perform multivariate regression modeling with a large number of responses and a multivariate covariate of interest. We propose two novel heritability ratios to simultaneously perform dimension reduction, response selection, estimation, and testing, while explicitly accounting for correlations among multivariate responses. SPReM is devised specifically to address the low statistical power of many standard approaches for high-dimensional data, such as Hotelling's T² test statistic and mass univariate analysis. We formulate the estimation problem of SPReM as a novel sparse unit rank projection (SURP) problem and propose a fast optimization algorithm for SURP. Furthermore, we extend SURP to sparse multi-rank projection (SMURP) by adopting a sequential SURP approximation. Theoretically, we systematically investigate the convergence properties of SURP and the convergence rate of SURP estimates. Our simulation results and real data analysis show that SPReM outperforms other state-of-the-art methods.

Keywords: heritability ratio, imaging genetics, multivariate regression, projection regression, sparse, wild bootstrap

1 Introduction

Multivariate regression modeling with a multivariate response $y \in \mathbb{R}^q$ and a multivariate covariate $x \in \mathbb{R}^p$ is a standard statistical tool in modern high-dimensional inference, with wide applications in large-scale studies such as genome-wide association studies (GWAS) and neuroimaging studies. For instance, in GWAS, the primary problem of interest is to identify genetic variants (x) that cause phenotypic variation (y). Specifically, in imaging genetics, multivariate imaging measures (y), such as volumes of regions of interest (ROIs), are phenotypic variables, whereas covariates (x) include single nucleotide polymorphisms (SNPs), age, and gender, among others. The joint analysis of imaging and genetic data may ultimately lead to discoveries of genes for neuropsychiatric and neurological disorders such as autism and schizophrenia (Scharinger et al., 2010; Paus, 2010; Peper et al., 2007; Chiang et al., 2011a,b). Moreover, in many neuroimaging studies, there is great interest in using imaging measures (x), such as functional imaging data and cortical and subcortical structures, to predict multiple clinical and/or behavioral variables (y) (Knickmeyer et al., 2008; Lenroot and Giedd, 2006). This motivates us to systematically investigate a multivariate linear model with a multivariate response y and a multivariate covariate x.

Throughout this paper, we consider n independent observations (yi, xi) and a Multivariate Linear Model (MLM) given by

$$Y = XB + E, \qquad (1)$$

where Y = (y1, …, yn)T, X = (x1, …, xn)T, and B = (βjl) is a p × q coefficient matrix with rank(B) = r* ≤ min(p, q). Moreover, the error term E = (e1, …, en)T has E(ei) = 0 and Cov(ei) = ΣR for all i, where ΣR is a q × q matrix. Many hypothesis testing problems of interest, such as comparison across groups, can often be formulated as

$$H_0: CB = B_0 \quad \text{versus} \quad H_1: CB \neq B_0, \qquad (2)$$

where C is an r × p matrix and B0 is an r × q matrix. Without loss of generality, we center the covariates, standardize the responses, and assume rank(C) = r.
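To make the setup concrete, here is a minimal sketch that fits model (1) by ordinary least squares and forms the estimated contrast CB̂ − B0 that appears in (2). The data are simulated, and the dimensions, effect sizes, and Σ_R = I are arbitrary illustrative choices rather than anything taken from the paper.

```python
import numpy as np

def ols_mlm(X, Y):
    """OLS estimate of B in Y = X B + E, with X (n x p) and Y (n x q)."""
    # lstsq fits all q response columns at once; it equals (X^T X)^{-1} X^T Y when X has full column rank
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return B_hat

rng = np.random.default_rng(0)
n, p, q = 200, 3, 6
X = rng.standard_normal((n, p))
X -= X.mean(axis=0)                      # center the covariates
B = np.zeros((p, q))
B[0, :2] = 1.0                           # illustrative: covariate 1 affects responses 1 and 2 only
Y = X @ B + rng.standard_normal((n, q))  # Sigma_R = I_q for simplicity

C = np.array([[1.0, 0.0, 0.0]])          # r = 1: test the first covariate
B0 = np.zeros((1, q))
contrast = C @ ols_mlm(X, Y) - B0        # r x q estimate of CB - B0
print(np.round(contrast, 2))
```

With n = 200 the first two entries of the contrast should sit near 1 and the rest near 0.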

We focus on the setting in which q is relatively large but p is relatively small. This setting is general enough to cover two-sample (or multi-sample) hypothesis testing for high-dimensional data (Chen and Qin, 2010; Lopes et al., 2011). It poses at least three major challenges: (i) a large number of regression parameters, (ii) a large covariance matrix, and (iii) correlations among multivariate responses. When the numbers of responses and covariates are even moderately high, fitting the conventional MLM requires estimating a p × q matrix of regression coefficients, whose pq entries can far outnumber n. Although accounting for complicated correlations among multiple responses is important for improving the overall prediction accuracy of multivariate analysis (Breiman and Friedman, 1997; Cook et al., 2010), it requires estimating q(q + 1)/2 unknown parameters in an unstructured covariance matrix.

There is great interest in developing efficient methods for handling MLMs with large q. Four popular traditional approaches are mass univariate analysis, Hotelling's T² test, partial least squares regression, and dimension reduction methods. As pointed out by Klei et al. (2008) and many others, testing each response variable individually in a mass univariate analysis incurs a substantial penalty for controlling multiplicity. Hotelling's T² test is not well defined when q > n, and even when q ≤ n, its power can be very low if q is nearly as large as n. Partial least squares regression (PLSR) finds a linear regression model by projecting y and x onto a smaller latent space (Chun and Keles, 2010; Krishnan et al., 2011), but it focuses on prediction and classification. Although dimension reduction techniques, such as principal component analysis (PCA), have been used to reduce the dimensions of both the responses and the covariates (Formisano et al., 2008; Kherif et al., 2002; Rowe and Hoffmann, 2006; Teipel et al., 2007), most of these methods ignore the variation of covariates and their associations with responses. Thus, such methods can be sub-optimal for our problem.

Recent developments primarily include regularization methods and envelope models (Peng et al., 2010; Tibshirani, 1996; Breiman and Friedman, 1997; Cook et al., 2010, 2013; Lin et al., 2012). Cook, Li and Chiaromonte (2010) developed a powerful envelope modeling framework for MLMs. Envelope methods use dimension reduction techniques to remove immaterial information while achieving efficient estimation of the regression coefficients by accounting for correlations among the response variables. However, existing envelope methods are limited to the n > max(p, q) scenario. Much recent attention has been given to regularization methods that enforce sparsity in B (Peng et al., 2010; Tibshirani, 1996). These regularization methods, however, do not provide standard inference tools (e.g., standard errors) for the regression coefficient matrix B. Lin et al. (2012) developed a projection regression model (PRM) and an associated estimation procedure to assess the relationship between a multivariate phenotype and a set of covariates, but without providing any theoretical justification.

This paper presents a new general framework, called the sparse projection regression model (SPReM), for simultaneously performing dimension reduction, response selection, estimation, and testing in a general high-dimensional MLM setting. We introduce two novel heritability ratios for MLM, which extend the idea of principal components of heritability from familial studies (Klei et al., 2008; Ott and Rabinowitz, 1999), and we overcome over-fitting and noise accumulation in high-dimensional data by enforcing a sparsity constraint. We develop fast algorithms for both sparse unit rank projection (SURP) and sparse multi-rank projection (SMURP). Furthermore, we propose a test procedure based on the wild bootstrap, which yields a single p-value for testing the association between all response variables and covariates of interest, such as genetic markers. Simulations show that our method controls the overall type I error rate well while achieving high statistical power.

Section 2 introduces the SPReM framework, including a novel deflation procedure to extract the most informative directions for testing hypotheses of interest. Section 3 investigates the asymptotic properties of SURP. Simulation studies and an imaging genetics example are used to examine the finite sample performance of SPReM in Section 4. We present concluding remarks in Section 5.

2 Sparse Projection Regression Model

2.1 Model Setup and Heritability Ratios

We introduce SPReM as follows. The key idea of our SPReM is to appropriately project yi in a high-dimensional space onto a low-dimensional space, while accounting for the correlation structure ΣR among the response variables and the hypothesis test in (2). Let W = [w1, …, wk] be a q × k nonrandom and unknown direction matrix, where wj are q × 1 vectors. A projection regression model (PRM) is given by

$$W^T y_i = \beta_w^T x_i + \varepsilon_i, \qquad (3)$$

where βw is a p × k regression coefficient matrix and the random vector εi has E(εi) = 0 and Cov(εi) = W^TΣRW. When k = 1, PRM reduces to the pseudo-trait model considered in (Amos et al., 1990; Amos and Laing, 1993; Klei et al., 2008; Ott and Rabinowitz, 1999). If k ≪ min(n, q) and W were known, then one could use likelihood (or estimating equation) based methods to estimate βw efficiently, and (2) would reduce approximately to

$$H_{0W}: C\beta_w = b_0 \quad \text{versus} \quad H_{1W}: C\beta_w \neq b_0, \qquad (4)$$

where Cβw = CBW and b0 = B0W. In this case, the number of null hypotheses in (4) is much smaller than that of (2). It is also expected that different W’s strongly influence the statistical power of testing the hypotheses in (2).

A fundamental question arises: how do we determine an 'optimal' W that achieves good statistical power for testing (2)? To determine W, we develop a novel deflation approach that determines the columns of W sequentially, one at a time, from w1 to wk. We focus on how to determine w1 below and then discuss how to extend it to the case k > 1.

To determine an optimal w1, we consider two principles. The first principle is to maximize the mean of the squared signal-to-noise ratio, called the heritability ratio, for model (3). For each i, the signal-to-noise ratio in model (3) is defined as the ratio of the mean to the standard deviation of the signal (or measurement) $w^T y_i$, denoted by $\mathrm{SNR}_i = w^T B^T x_i/(w^T \Sigma_R w)^{1/2}$. Thus, the heritability ratio (HR) is given by

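$$\mathrm{HR}(w) = \frac{1}{n}\sum_{i=1}^n \mathrm{SNR}_i^2 = \frac{w^T B^T S_X B w}{w^T \Sigma_R w}, \qquad (5)$$

where $S_X = n^{-1}\sum_{i=1}^n x_i x_i^T$. The HR has several important interpretations. If the $x_i$ are independently and identically distributed (i.i.d.) with $E(x_i) = 0$ and $\mathrm{Cov}(x_i) = \Sigma_X$, then as $n \to \infty$, we have

$$\mathrm{HR}(w) \to_p \frac{w^T B^T \Sigma_X B w}{w^T \Sigma_R w},$$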

where →p denotes convergence in probability. Thus, HR(w) is close to the ratio of the variance of the signal $w^T B^T x_i$ to that of the noise εi. Moreover, HR(w) is close to the heritability ratio considered in (Amos et al., 1990; Amos and Laing, 1993; Klei et al., 2008; Ott and Rabinowitz, 1999) for familial studies, but we define HR from a completely different perspective. With this perspective, one can easily define HR for more general designs, such as cross-sectional or longitudinal designs. One might directly maximize HR(w) to calculate an 'optimal' w1, but such a w1 can be sub-optimal for testing the hypotheses in (2), as discussed below.
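A quick numerical check of this convergence, written as a sketch: B, Σ_R, w, and the Gaussian design with Σ_X = I are arbitrary illustrative choices, not values from the paper. The sample HR in (5) should land close to its population limit.

```python
import numpy as np

def heritability_ratio(w, B, Sigma_R, X):
    """Sample HR(w) in (5): the average of SNR_i^2 over the rows x_i of X."""
    signal = X @ B @ w                  # i-th entry is w^T B^T x_i
    return np.mean(signal ** 2) / (w @ Sigma_R @ w)

def heritability_ratio_limit(w, B, Sigma_R, Sigma_X):
    """Population limit w^T B^T Sigma_X B w / (w^T Sigma_R w)."""
    return (w @ B.T @ Sigma_X @ B @ w) / (w @ Sigma_R @ w)

rng = np.random.default_rng(1)
B = np.array([[1.0, 0.5, 0.0],
              [0.0, 0.0, 0.0]])        # p = 2 covariates, q = 3 responses (illustrative)
Sigma_R = np.diag([1.0, 2.0, 1.0])
w = np.array([1.0, 1.0, 0.0])
X = rng.standard_normal((100_000, 2))  # i.i.d. x_i with E(x_i) = 0 and Cov(x_i) = I
hr_sample = heritability_ratio(w, B, Sigma_R, X)
hr_limit = heritability_ratio_limit(w, B, Sigma_R, np.eye(2))
print(hr_sample, hr_limit)             # hr_limit = 2.25 / 3 = 0.75
```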

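The second principle is to explicitly account for the hypotheses in (2) under model (1) and the reduced ones in (4) under model (3). We define four spaces associated with the null and alternative hypotheses of (2) and (4) as follows:

$$S_{H_0} = \{B : CB = B_0\}, \quad S_{H_1} = \{B : CB \neq B_0\}, \quad S_{H_W} = \{B : CBW = B_0W\}, \quad S_{H_1W} = \{B : CBW \neq B_0W\}.$$

Since CB = B0 implies CBW = B0W, they satisfy the following relationship:

$$S_{H_0} \subseteq S_{H_W} \quad \text{and} \quad S_{H_1W} \subseteq S_{H_1}.$$

Due to potential information loss during dimension reduction, neither $S_{H_W} - S_{H_0}$ nor $S_{H_1} - S_{H_1W}$ need be empty, but we need to choose W such that $S_{H_1} - S_{H_1W} \approx \emptyset$. The next question is how to achieve this.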
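A tiny example shows how S_{H_1} − S_{H_1W} can be nonempty: a poorly chosen W can cancel a real effect. The matrices below are hand-picked for illustration only.

```python
import numpy as np

# Hand-picked illustration: B lies in S_{H_1}, but the projection W hides the signal.
C = np.array([[1.0, 0.0]])             # r = 1, p = 2: test the first covariate
B = np.array([[1.0, -1.0, 0.0],        # p x q coefficient matrix, q = 3
              [0.0,  0.0, 0.0]])
B0 = np.zeros((1, 3))
W = np.array([[1.0], [1.0], [0.0]])    # q x k direction matrix, k = 1

in_H1 = not np.allclose(C @ B, B0)             # CB != B0
in_H1W = not np.allclose(C @ B @ W, B0 @ W)    # CBW != B0W
print(in_H1, in_H1W)                           # True False
```

Here the effects +1 and −1 on the first two responses cancel under W, so a test based on (4) has no power against this alternative; choosing W with the data and the hypothesis in mind is what the second principle is meant to address.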

We consider a data transformation procedure. Let C1 be a (p−r) × p matrix such that

| (6) |

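Let $D = (C^T, C_1^T)^T$ be a p × p matrix and $\tilde x_i = D^{-T} x_i = (\tilde x_{i1}^T, \tilde x_{i2}^T)^T$ be a p × 1 vector, where x̃i1 and x̃i2 are, respectively, the r × 1 and (p − r) × 1 subvectors of x̃i. We define $\tilde B = DB = (\tilde B_1^T, \tilde B_2^T)^T$, or $B = D^{-1}\tilde B$, where B̃1 and B̃2 are, respectively, the first r rows and the last p − r rows of B̃, so that $B^T x_i = \tilde B_1^T \tilde x_{i1} + \tilde B_2^T \tilde x_{i2}$ and $\tilde B_1 = CB$. Therefore, model (3) can be rewritten as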

$$W^T y_i = W^T \tilde B_1^T \tilde x_{i1} + W^T \tilde B_2^T \tilde x_{i2} + \varepsilon_i. \qquad (7)$$

In (7), due to (6), we only need to consider the transformed covariate vector x̃i1, which contains useful information associated with B̃1 − B0 = CB − B0.
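The change of variables can be verified numerically. The sketch below assumes one convenient (hypothetical) choice of C1, namely rows spanning the orthogonal complement of the row space of C, which makes D nonsingular; condition (6) itself is not reproduced here, so treat this choice as an illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)
p, q, r = 4, 5, 2
C = rng.standard_normal((r, p))
# Hypothetical C1: rows spanning the orthogonal complement of the row space of C,
# which makes D = [C; C1] nonsingular (condition (6) is not reproduced here).
_, _, Vt = np.linalg.svd(C)
C1 = Vt[r:, :]                           # (p - r) x p
D = np.vstack([C, C1])                   # p x p

B = rng.standard_normal((p, q))
x = rng.standard_normal(p)
x_tilde = np.linalg.solve(D.T, x)        # x~ = D^{-T} x
B_tilde = D @ B                          # B~ = D B

assert np.allclose(B_tilde[:r], C @ B)              # first r rows of B~ equal C B
assert np.allclose(B.T @ x, B_tilde.T @ x_tilde)    # B^T x = B~^T x~
```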

We define a generalized heritability ratio based on model (7). Specifically, for each i, we define a new signal-to-noise ratio as the ratio of the mean to the standard deviation of the signal $w^T (\tilde B_1 - B_0)^T \tilde x_{i1} + w^T e_i$, denoted by $\mathrm{SNR}_{i,C} = w^T (\tilde B_1 - B_0)^T \tilde x_{i1}/(w^T \Sigma_R w)^{1/2}$. The generalized heritability ratio is then defined as

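$$\mathrm{GHR}(w; C) = \frac{1}{n}\sum_{i=1}^n \mathrm{SNR}_{i,C}^2 = \frac{w^T (\tilde B_1 - B_0)^T \tilde S_{11} (\tilde B_1 - B_0) w}{w^T \Sigma_R w}, \qquad (8)$$

where $\tilde S_{11} = n^{-1}\sum_{i=1}^n \tilde x_{i1}\tilde x_{i1}^T$. If the $x_i$ are i.i.d. random vectors with $E(x_i) = 0$ and $\mathrm{Cov}(x_i) = \Sigma_X$, then we have

$$\mathrm{GHR}(w; C) \to_p \frac{w^T \Sigma_C w}{w^T \Sigma_R w}, \qquad (9)$$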

where $\Sigma_C = (\tilde B_1 - B_0)^T (D^{-T}\Sigma_X D^{-1})_{(r,r)} (\tilde B_1 - B_0)$ and $(D^{-T}\Sigma_X D^{-1})_{(r,r)}$ is the upper r × r submatrix of $D^{-T}\Sigma_X D^{-1}$. In particular, if C = [I_r 0] and D = I_p, then ΣC reduces to $(\tilde B_1 - B_0)^T (\Sigma_X)_{(1,1)} (\tilde B_1 - B_0)$, in which $(\Sigma_X)_{(1,1)}$ is the upper r × r submatrix of ΣX. Thus, GHR(w; C) can be interpreted as the ratio of the variance of $w^T (\tilde B_1 - B_0)^T \tilde x_{i1}$ to that of $w^T e_i$. We propose to calculate an optimal w* as follows:

$$w^* = \arg\max_{w \neq 0} \frac{w^T \Sigma_C w}{w^T \Sigma_R w}. \qquad (10)$$

We expect that such an optimal w* can substantially reduce the size of both SH1 − SH1W and SHW − SH0 and thus the use of such an optimal w* can enhance the power of testing the hypotheses in (2). Without loss of generality, we assume B0 = 0 from now on.
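When ΣR is positive definite, the ratio in (10) is a generalized Rayleigh quotient, so w* is the leading generalized eigenvector of the pair (ΣC, ΣR). A minimal NumPy sketch, assuming ΣC and ΣR are known (in practice they are replaced by estimates); the function name is hypothetical.

```python
import numpy as np

def optimal_direction(Sigma_C, Sigma_R):
    """Maximize w^T Sigma_C w / (w^T Sigma_R w) via Sigma_C w = lam Sigma_R w."""
    L = np.linalg.cholesky(Sigma_R)          # Sigma_R = L L^T (requires positive definiteness)
    L_inv = np.linalg.inv(L)
    M = L_inv @ Sigma_C @ L_inv.T            # symmetric; its eigenvalues are the attainable ratios
    eigvals, eigvecs = np.linalg.eigh(M)     # eigenvalues in ascending order
    w = L_inv.T @ eigvecs[:, -1]             # map the top eigenvector back: w = L^{-T} v
    return w / np.linalg.norm(w), eigvals[-1]

Sigma_C = np.outer([1.0, 1.0, 0.0], [1.0, 1.0, 0.0])   # rank one, as when r = 1
Sigma_R = np.diag([2.0, 1.0, 1.0])
w_star, ratio = optimal_direction(Sigma_C, Sigma_R)
print(w_star, ratio)
```

The whitening step turns the generalized problem into an ordinary symmetric eigenproblem; w* is defined only up to scale (and sign), so the sketch normalizes it.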

We consider a simple example to illustrate the appealing properties of GHR(w; C).

Example We consider model (1) with p = q = 5 and want to test the nonzero effect of the first covariate on all five responses. In this case, r = 1, C = (1, 0, 0, 0, 0), B0 = (0, 0, 0, 0, 0), and D = I5, which is a 5 × 5 identity matrix. Without loss of generality, it is assumed that (ΣX)(1,1) = 1.

We consider three different cases of ΣR and B. In the first case, we set and the first row of B to be (1, 0, 0, 0, 0). It follows from (8) that

where c0 is any nonzero scalar. Therefore, w* picks out the first response, which is the sole one that is associated with the first covariate.

In the second case, we set with and the first row of B to be (1, 1, 0, 0, 0). It follows from (8) that

where c0 is any nonzero scalar. Therefore, w* picks out both the first and second responses, with a larger weight on the second component. This is desirable since β11 and β12 are equal in strength of effect and the noise level of the second response is smaller than that of the first.

In the third case, we set the first row of B to be (1, 1, 0, 0, 0), and the first and second columns of ΣR are set to σ²(1, ρ, 0, 0, 0)^T and σ²(ρ, 1, 0, 0, 0)^T, respectively. It follows from (8) that

where Q(w3, w4, w5) is a non-negative quadratic form of (w3, w4, w5). Thus, the optimal w* chooses the first two responses with equal weights, since they are correlated with each other, have the same variance, and β11 = β12 = 1.
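The three cases can be checked numerically. With D = I5 and (ΣX)(1,1) = 1, ΣC = ℓℓ^T with ℓ the first row of B written as a column, and by the Cauchy-Schwarz inequality the maximizer of the GHR is proportional to ΣR^{-1}ℓ. The specific values below (ΣR = I5 in case 1; σ1² = 2 > σ2² = 1 in case 2; σ² = 1 and ρ = 0.5 in case 3; identity entries wherever ΣR is unspecified) are our own illustrative assumptions.

```python
import numpy as np

def unit_rank_direction(ell, Sigma_R):
    """For Sigma_C proportional to ell ell^T, the GHR maximizer is proportional to Sigma_R^{-1} ell."""
    w = np.linalg.solve(Sigma_R, ell)
    return w / np.max(np.abs(w))          # rescale so the largest weight is 1 (c0 is arbitrary)

ell = np.array([1.0, 1.0, 0.0, 0.0, 0.0])                   # first row of B in cases 2 and 3
case1 = unit_rank_direction(np.array([1.0, 0, 0, 0, 0]), np.eye(5))       # assumed Sigma_R = I
case2 = unit_rank_direction(ell, np.diag([2.0, 1.0, 1.0, 1.0, 1.0]))     # assumed sigma_1^2 = 2, sigma_2^2 = 1
Sigma3 = np.eye(5)
Sigma3[0, 1] = Sigma3[1, 0] = 0.5                                         # assumed sigma^2 = 1, rho = 0.5
case3 = unit_rank_direction(ell, Sigma3)
print(case1, case2, case3, sep="\n")
```

Case 1 returns (1, 0, 0, 0, 0), case 2 returns (0.5, 1, 0, 0, 0) with the larger weight on the less noisy second response, and case 3 returns (1, 1, 0, 0, 0), matching the qualitative conclusions above.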

For high-dimensional data, it is difficult to estimate w* accurately, since the sample covariance matrix Σ̂R can be ill-conditioned, and it is singular when q ≥ n. One possible solution is to focus only on a small number of important features for testing. However, a naive search for the best subset is NP-hard. We develop a penalized procedure that addresses these two problems while obtaining a relatively accurate estimate of w. Let Σ̃R and Σ̂C be, respectively, estimators of ΣR and ΣC, where Σ̃R denotes a covariance estimator other than the sample covariance matrix Σ̂R. To obtain Σ̂C, we plug B̂, an estimator of B, into ΣC; for simplicity, we use the ordinary least squares estimate of B. By imposing a sparse structure on w1, we recast the optimization problem as

| (11) |

where ‖·‖1 is the L1 norm and t > 0.
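The rank deficiency that motivates the penalized problem is easy to see numerically: after centering, n observations span at most an (n − 1)-dimensional space, so Σ̂R is singular whenever q ≥ n. A quick check with simulated standard normal errors (dimensions chosen arbitrarily):

```python
import numpy as np

rng = np.random.default_rng(2)
n, q = 50, 200                          # more responses than observations
E = rng.standard_normal((n, q))
Sigma_hat = np.cov(E, rowvar=False)     # q x q sample covariance (columns are variables)
rank = np.linalg.matrix_rank(Sigma_hat)
print(rank)                             # at most n - 1 = 49, far below q = 200
```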

2.2 Sparse Unit Rank Projection

When r = 1, we call the problem in (10) the unit rank projection problem and its sparse version in (11) the sparse unit rank projection (SURP) problem. Many statistical problems, such as two-sample tests and marginal effect tests, can be formulated as unit rank projection problems (Lopes et al., 2011). We consider two cases: ℓ = (CB)^T = 0 and ℓ = (CB)^T ≠ 0. When ℓ = (CB)^T = 0, the solution set of (8) is trivial, since any w ≠ 0 maximizes (8). As discussed later, this property is important for controlling the type I error rate.

When ℓ = (CB)T ≠ 0, (8) reduces to the following optimization problem:

| (12) |

where ℓ is the sole eigenvector of ΣC, since ΣC is a unit-rank matrix. To impose an L1 sparsity on w, we propose to solve the penalized version of (12) given by

| (13) |

Although (13) can be solved by standard convex programming methods, such methods are too slow for most large-scale applications, such as imaging genetics. We therefore reformulate the problem below. Unless stated otherwise, we focus on ℓ = (CB)^T ≠ 0.
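For intuition about the unit-rank case, a standard argument (not a reconstruction of the omitted displays) gives the unpenalized maximizer in closed form. Assume ΣC is proportional to ℓℓ^T and ΣR is positive definite, and substitute u = ΣR^{1/2} w; the Cauchy-Schwarz inequality then gives

```latex
\frac{(w^T\ell)^2}{w^T\Sigma_R w}
  = \frac{\big(u^T \Sigma_R^{-1/2}\ell\big)^2}{u^T u}
  \le \ell^T \Sigma_R^{-1}\ell,
\qquad \text{with equality iff } u \propto \Sigma_R^{-1/2}\ell,
\ \text{i.e. } w \propto \Sigma_R^{-1}\ell .
```

The same direction is the minimizer of ½w^TΣR w − ℓ^T w, whose stationarity condition is ΣR w = ℓ.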

By omitting a scaling factor, which does not affect the generalized heritability ratio, we note that (12) is equivalent to the following problem:

| (14) |

We consider a penalized version of (14) as

| (15) |

A nice property of (15) is that it does not explicitly involve the inequality constraint, which allows fast computation. We refer to (14) as the oracle problem, since wλ converges to w0 as λ → 0. It can be shown that

| (16) |

We obtain an equivalence between (15) and (13) as follows.

Theorem 2.1 Problem (15) is equivalent to problem (13) and wλ ∝ w0,λ.

We discuss some connections between our SURP problem and the optimization problem considered in Fan et al. (2012) for performing classification in high dimensional space.

Rather than recasting the problem as in (12) and then (15), Fan et al. (2012) formulate it as

which can further be reformulated as

| (17) |

Since (17) involves a linear equality constraint, they replace it by a quadratic penalty as

| (18) |

This formulation requires simultaneous tuning of λ and γ, which can be computationally intensive. However, Fan et al. (2012) noted that the solution to (18) is not sensitive to γ, since it always lies in the direction of $\Sigma_R^{-1}\ell$ when λ = 0, and they supported this with simulations. Their formulation (17) is close to our formulation (15), which sheds some light on why wλ,γ is not sensitive to γ. Finally, we can show that the solution path of (15) is piecewise linear.
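To see why the direction does not depend on γ at λ = 0, assume (our reading of Fan et al., 2012, written in this paper's notation; display (18) is not reproduced here) that the penalized objective is ½w^TΣR w + λ‖w‖₁ + (γ/2)(w^Tℓ − 1)². At λ = 0 the objective is strictly convex, and its stationarity condition gives

```latex
\Sigma_R w + \gamma\,(w^T\ell - 1)\,\ell = 0
\;\Longrightarrow\;
w = a\,\Sigma_R^{-1}\ell, \quad a = \gamma\,(1 - a\,c), \quad c = \ell^T\Sigma_R^{-1}\ell
\;\Longrightarrow\;
a = \frac{\gamma}{1 + \gamma c}.
```

So γ only rescales w; the direction ΣR^{-1}ℓ is the same for every γ > 0.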

Proposition 2.2 Let ℓ ∈ ℝq be a constant vector and ΣR be positive definite. Then, w0,λ is a continuous piecewise linear function in λ.

We derive a coordinate descent algorithm to solve (15). Without loss of generality, suppose that , w̃j for all j ≥ 2 are given, and we need to optimize (15) with respect to w̃1. In this case, the objective function (15) becomes

where and σ11, Σ12, and Σ22 are subcomponents of ΣR. Then, by taking the sub-gradient with respect to w̃1, we have

where Γ1 = sign(w̃1) if w̃1 ≠ 0, and Γ1 can be any value in [−1, 1] if w̃1 = 0. Let $S_\lambda(t) = \mathrm{sign}(t)(|t| - \lambda)_+$ be the soft-thresholding operator. By setting this subgradient to zero, we have $\tilde w_1 = S_\lambda(\tilde\ell_1 - \Sigma_{12}\tilde w_2)/\sigma_{11}$. Based on this result, we obtain the following coordinate descent algorithm.

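Algorithm 1

Initialize w at a starting point w^(0) and set m = 0.

- Repeat:

- (b.1) Increase m by 1: m ← m + 1.

- (b.2) For j = 1, …, q, compute $z_j = \ell_j - \sum_{k<j}\sigma_{jk} w_k^{(m)} - \sum_{k>j}\sigma_{jk} w_k^{(m-1)}$, where σjk is the (j, k)th entry of ΣR. If |zj| ≤ λ, set $w_j^{(m)} = 0$; otherwise, set $w_j^{(m)} = S_\lambda(z_j)/\sigma_{jj}$.

Until numerical convergence: we require |f(w^(m)) − f(w^(m−1))| to be sufficiently small, where f is the objective function in (15).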
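A compact NumPy sketch of Algorithm 1. It assumes the objective in (15) is ½w^TΣR w − ℓ^T w + λ‖w‖₁, which is the objective whose coordinate-wise subgradient condition yields the update w̃1 = Sλ(ℓ̃1 − Σ12w̃2)/σ11 derived above. The function names are hypothetical, and this is an illustration rather than the authors' implementation.

```python
import numpy as np

def soft_threshold(t, lam):
    """Soft-thresholding operator S_lam(t) = sign(t) * (|t| - lam)_+."""
    return np.sign(t) * max(abs(t) - lam, 0.0)

def surp_cd(Sigma, ell, lam, w_init=None, tol=1e-12, max_iter=10_000):
    """Cyclic coordinate descent for f(w) = 0.5 w^T Sigma w - ell^T w + lam * ||w||_1 (assumed form of (15))."""
    q = len(ell)
    w = np.zeros(q) if w_init is None else np.asarray(w_init, dtype=float).copy()

    def f(v):
        return 0.5 * v @ Sigma @ v - ell @ v + lam * np.abs(v).sum()

    f_old = f(w)
    for _ in range(max_iter):
        for j in range(q):
            # z_j = ell_j - sum_{k != j} sigma_jk w_k, using the most recent values of the other coordinates
            z = ell[j] - Sigma[j] @ w + Sigma[j, j] * w[j]
            w[j] = soft_threshold(z, lam) / Sigma[j, j]   # equals 0 whenever |z_j| <= lam
        f_new = f(w)
        if abs(f_old - f_new) < tol:                      # stopping rule of Algorithm 1
            break
        f_old = f_new
    return w

Sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
ell = np.array([1.0, 1.0])
print(surp_cd(Sigma, ell, lam=0.0))    # close to Sigma^{-1} ell
print(surp_cd(Sigma, ell, lam=1.5))    # lam >= max_j |ell_j|, so w = 0
```

Because w is updated in place, each coordinate uses the latest values of the others (Gauss-Seidel ordering), matching the sums over k < j and k > j in step (b.2).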

2.3 Extension to Multi-rank Cases

In this subsection, we extend the sparse unit rank projection procedure to handle multi-rank test problems with r > 1. We define the k-th projection direction as the solution to the following problem:

| (19) |

It can be shown that (19) is equivalent to

| (20) |

Following the reasoning in Witten and Tibshirani (2011), we recast (20) into an equivalent problem.

Proposition 2.3 Problem (20) is equivalent to the following problem:

| (21) |

where is the projection matrix onto the orthogonal space spanned by , in which Σ11 = (D−TΣX D−1)(r,r).

Based on Proposition 2.3, we consider several strategies for imposing the sparsity structure on wk. A simple strategy is to consider the following problem:

| (22) |

where . When the rank of C is greater than 1, the problem in (22) is no longer convex, since it involves maximizing an objective function that is not concave. A potential solution is to use the minorization-maximization (MM) algorithm (Lange et al., 2000). Specifically, for any fixed w(m), we take a Taylor series expansion of at w(m) and get

| (23) |

Thus, the right-hand side of (23) minorizes the objective function of (22) at w^(m) and is convex, so each MM update can be computed with standard convex optimization methods. However, in our experience, the MM algorithm is too slow for most large-scale problems, such as imaging genetics.
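The minorization step rests on a standard fact: a convex quadratic lies above its tangent plane. For illustration, take g(w) = w^T A w with A symmetric positive semidefinite (for example A = Σ̂C); whether (23) takes exactly this form depends on the omitted display.

```latex
w^T A w \;\ge\; w^{(m)T} A w^{(m)} + 2\,w^{(m)T} A\,\big(w - w^{(m)}\big)
        \;=\; 2\,w^{(m)T} A w - w^{(m)T} A w^{(m)},
\qquad \text{since}\quad
w^T A w - 2\,w^{(m)T} A w + w^{(m)T} A w^{(m)} = \big(w - w^{(m)}\big)^T A \big(w - w^{(m)}\big) \ge 0 .
```

Equality holds at w = w^(m), so the tangent plane touches g there and lies below it everywhere, which is exactly the minorization property MM requires.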

To further improve computational efficiency, we consider a surrogate of (22). Recall from the discussion of the second principle that we are only interested in extracting informative directions for testing the hypotheses of interest. Consider the spectral decomposition $(D^{-T}\Sigma_X D^{-1})_{(r,r)} = \sum_{j=1}^r \gamma_j \ell_j \ell_j^T$, where (γj, ℓj) are eigenvalue-eigenvector pairs with γ1 ≥ γ2 ≥ … ≥ γr. Then, instead of solving (22), we propose to solve r SURP problems as

| (24) |

Solving (24) yields r sparse projection directions. Because (24) extracts the direction vectors sequentially according to the input signal ΣC, it may produce less informative direction vectors than (22). However, this formulation leads to a fast computational algorithm, and our simulation results suggest that it performs reasonably well. Thus, (24) is preferred in practice.
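A sketch of the decomposition behind (24). It assumes (since the display is not reproduced here) that the j-th SURP problem takes u_j = √γj (CB)^T ℓj, the j-th rank-one piece of ΣC with B0 = 0, in place of ℓ. The code writes l_j for the eigenvectors ℓj to avoid confusion with ℓ = (CB)^T; the inputs are random stand-ins.

```python
import numpy as np

def rank_one_inputs(CB, Sigma_11):
    """Split Sigma_C = (CB)^T Sigma_11 (CB) into r rank-one pieces u_j u_j^T.

    Uses Sigma_11 = sum_j gamma_j l_j l_j^T with gamma_1 >= ... >= gamma_r,
    so that u_j = sqrt(gamma_j) (CB)^T l_j.
    """
    gammas, vecs = np.linalg.eigh(Sigma_11)      # eigenvalues in ascending order
    order = np.argsort(gammas)[::-1]             # reorder so that gamma_1 is the largest
    gammas, vecs = gammas[order], vecs[:, order]
    return [np.sqrt(g) * (CB.T @ vecs[:, j]) for j, g in enumerate(gammas)]

rng = np.random.default_rng(4)
r, q = 2, 5
CB = rng.standard_normal((r, q))                 # illustrative stand-in for C B-hat
A = rng.standard_normal((r, r))
Sigma_11 = A @ A.T + np.eye(r)                   # illustrative positive definite r x r block
inputs = rank_one_inputs(CB, Sigma_11)
Sigma_C = CB.T @ Sigma_11 @ CB
print(np.allclose(sum(np.outer(u, u) for u in inputs), Sigma_C))   # True
```

Each u_j would then play the role of ℓ in a separate unit-rank problem, which is why this surrogate costs only r SURP solves.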

2.4 Test Procedure

We consider three statistics for testing H0W against H1W in (4). Based on model (3), we calculate the ordinary least squares estimate of βw, given by $\hat\beta_w = (X^TX)^{-1}X^T Y W$.

Subsequently, we calculate a k × k matrix, denoted by Tn, as follows:

| (25) |

where ΣΩ̃ is a consistent estimate of the covariance matrix of Cβ̂w − b0. Specifically, let β̃w be the restricted least squares (RLS) estimate of βw under H0W, which is given by

Then, we can set , where and . When k > 1, we use the determinant, trace and eigenvalues of Tn as test statistics, which are given by

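$$W_n = \det(T_n), \qquad \mathrm{Tr}_n = \mathrm{trace}(T_n), \qquad \mathrm{Roy}_n = \max \mathrm{eig}(T_n), \qquad (26)$$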

where det, trace, and eig denote, respectively, the determinant, trace, and eigenvalues of a symmetric matrix. When k = 1, all three statistics in (26) reduce to the Wald-type (or Hotelling's T²) test statistic. For simplicity, we focus on Trn throughout the paper.

We propose a wild bootstrap method to improve the finite sample performance of the test statistic Trn. First, we fit model (1) under the null hypothesis (2) to calculate the estimated regression coefficient matrix, denoted by B̂0, with corresponding residuals $\hat e_i = y_i - \hat B_0^T x_i$ for i = 1, …, n. Then we generate G bootstrap samples $y_i^{(g)} = \hat B_0^T x_i + \tau_i^{(g)} \hat e_i$ for i = 1, …, n and g = 1, …, G, where the $\tau_i^{(g)}$ are independently and identically distributed according to a distribution F, chosen to take the values ±1 with equal probability. For each wild bootstrap sample, we repeat the estimation of the optimal weights and recompute the test statistic $\mathrm{Tr}_n^{(g)}$. Finally, the p-value of Trn is computed as $G^{-1}\sum_{g=1}^G 1(\mathrm{Tr}_n^{(g)} \ge \mathrm{Tr}_n)$, where 1(·) is the indicator function.
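A minimal sketch of this wild bootstrap recipe for the special case C = (1, 0, …, 0) and B0 = 0, where fitting under the null simply drops the first covariate. The statistic is a hypothetical stand-in (the squared norm of the first row of B̂), not Trn; in SPReM the projection direction would be re-estimated inside the statistic for every bootstrap sample, as described above.

```python
import numpy as np

def wild_bootstrap_pvalue(X, Y, stat_fn, G=200, seed=0):
    """Wild-bootstrap p-value for H0: the first row of B is zero (C = (1, 0, ..., 0), B0 = 0).

    stat_fn(X, Y) -> float stands in for Tr_n.
    """
    rng = np.random.default_rng(seed)
    X_null = X[:, 1:]                                   # under H0 the first covariate drops out
    B_null, *_ = np.linalg.lstsq(X_null, Y, rcond=None)
    fitted = X_null @ B_null                            # rows are B-hat_0^T x_i
    resid = Y - fitted                                  # residual vectors e-hat_i
    t_obs = stat_fn(X, Y)
    exceed = 0
    for _ in range(G):
        tau = rng.choice([-1.0, 1.0], size=(Y.shape[0], 1))  # Rademacher weight, one per subject
        Y_star = fitted + tau * resid                         # flips the whole residual vector of subject i
        exceed += stat_fn(X, Y_star) >= t_obs
    return exceed / G

def toy_stat(X, Y):
    """Hypothetical stand-in statistic: squared norm of the OLS coefficients of the first covariate."""
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return float(np.sum(B_hat[0] ** 2))

rng = np.random.default_rng(3)
n, q = 60, 10
X = rng.standard_normal((n, 2))
X -= X.mean(axis=0)
noise = rng.standard_normal((n, q))
B_alt = np.zeros((2, q))
B_alt[0] = 1.0                                                  # first covariate affects every response
p_null = wild_bootstrap_pvalue(X, noise, toy_stat)              # data generated under H0
p_alt = wild_bootstrap_pvalue(X, X @ B_alt + noise, toy_stat)   # data generated under H1
print(p_null, p_alt)
```

Using one sign per subject (rather than per entry) keeps the cross-response correlation of each residual vector intact, which is the point of resampling whole rows.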

2.5 Tuning Parameter Selection

We consider several methods for selecting the tuning parameter λ. The first is cross-validation (CV), which primarily measures the predictive performance of a statistical model; moreover, CV can be computationally expensive for large-scale problems. The second is an information criterion, which is widely used to measure the relative goodness of fit of a statistical model. However, neither method is well suited to SURP, since our primary interest is to find informative directions for appropriately testing the null and alternative hypotheses of (2). If the null hypothesis is true, CB̂ is expected to contain only noise, and the estimated direction vectors should be random. In this case, the test statistics Trn, Wn, and Royn should not be sensitive to the value of λ. This motivates us to use the rejection rate to select the tuning parameter as follows:

| (27) |

where λmax is the largest λ to make w nonzero.
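Assuming (15) is the lasso-type objective ½w^TΣR w − ℓ^T w + λ‖w‖₁ (consistent with the coordinate update in Section 2.2), λmax has a closed form. Because the objective is convex, w = 0 is a minimizer exactly when 0 lies in its subdifferential at 0:

```latex
0 \in \Sigma_R\,0 - \ell + \lambda\,\partial\|w\|_1\big|_{w=0} = -\ell + \lambda\,[-1, 1]^q
\iff \|\ell\|_\infty \le \lambda .
```

Hence the fitted direction is nonzero exactly when λ < ‖ℓ‖∞, so λmax corresponds to ‖ℓ‖∞, with ℓ replaced by its estimate in practice.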

3 Asymptotic Theory

We investigate several theoretical properties of SURP and its associated estimator. By substituting Σ̃R and ℓ̂ = (CB̂)^T into (15), we can calculate an estimate of w0 as

| (28) |

A natural question is how close ŵλ is to w0.

We address this question in Theorems 3.1 and 3.2.

We consider the scenario in which w0 has only a few nonzero components, that is, only a few response variables are associated with the covariates of interest. Such a scenario is common in many large-scale problems. Note that sparsity of w0 requires neither ΣR nor ℓ to be sparse, which makes this assumption quite flexible. Let S0 = {j : w0,j ≠ 0} be the active set of w0 = (w0,1, …, w0,q)^T, and let s0 denote the number of elements in S0. We use the banded covariance estimator of ΣR (Bickel and Levina, 2008), assuming that ΣR belongs to the well-behaved covariance class 𝒰(ε0, α, C1), which is defined as

$$\mathcal{U}(\varepsilon_0, \alpha, C_1) = \Big\{\Sigma : \max_j \sum_i \{|\sigma_{ij}| : |i - j| > k\} \le C_1 k^{-\alpha} \text{ for all } k > 0, \text{ and } 0 < \varepsilon_0 \le \lambda_{\min}(\Sigma) \le \lambda_{\max}(\Sigma) \le \varepsilon_0^{-1}\Big\}.$$

We have the following results.

Theorem 3.1 Assume that ΣR ∈ 𝒰(ε0, α, C1) and

| (29) |

where , and , in which γ(ε0, δ) and δ = δ(ε0) depend only on ε0. Then, with probability at least $1 - (q \vee n)^{-\eta_1} - (q \vee n)^{-\eta_2}$, we have

| (30) |

where C is a constant not depending on q and n. Furthermore, for ‖ℓ‖2 > δ0, we have

| (31) |

Theorem 3.1 gives an oracle inequality and the L2 convergence rate of ŵλ in the sparse case, which imply direction consistency, an important ingredient for the good performance of the test statistics. This result has several important implications. If , then ‖ŵλ − w0‖2 converges to zero in probability. Therefore, SURP should perform well in extremely sparse cases with s0 ≪ n, which are common in many large-scale problems. Although Theorem 3.1 uses the banded covariance estimator of ΣR (Bickel and Levina, 2008), the convergence rate of ŵλ can be established for other estimators of ΣR and ℓ as follows.

Theorem 3.2 Suppose that we have ‖Σ̃R − ΣR‖2 = Op(an) = op(1) and ‖ℓ̂ − ℓ‖∞ = Op(bn) = op(1), then

| (32) |

Furthermore, for ‖ℓ‖2 > δ0, we have

| (33) |

Theorem 3.2 gives the L2 convergence rate of ŵλ for any estimators of ΣR and ℓ that satisfy the stated rate conditions. A direct implication is that other estimators of ΣR can be used to estimate ΣR better under different structural assumptions. For instance, if ΣR has an approximate factor structure with sparsity, we may use the principal orthogonal complement thresholding (POET) method of Fan et al. (2013) to estimate ΣR. Moreover, if ℓ can be estimated well for large p, then model (1) can be extended to the large-p scenario. We will systematically investigate these generalizations in future work.
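For the banded estimator used in Theorem 3.1, a minimal sketch of the banding operation of Bickel and Levina (2008) follows. The bandwidth k is left as a user input here (Bickel and Levina discuss how to choose it), and the input E is a random stand-in for residuals from model (1). One caveat: a banded sample covariance need not be positive definite in finite samples.

```python
import numpy as np

def banded_covariance(E, k):
    """Banding estimator: keep sample covariances with |i - j| <= k and set the rest to zero."""
    S = np.cov(E, rowvar=False)                 # q x q sample covariance; rows of E are observations
    idx = np.arange(S.shape[0])
    in_band = np.abs(idx[:, None] - idx[None, :]) <= k
    return np.where(in_band, S, 0.0)

rng = np.random.default_rng(5)
E = rng.standard_normal((30, 8))                # stand-in residuals: n = 30, q = 8
S_band = banded_covariance(E, k=1)
print(np.round(S_band[:3, :3], 2))
```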

Remark The SPReM estimator ŵλ is closely connected with those estimators in Witten and Tibshirani (2011) and Fan et al. (2012) in the framework of penalized linear discriminant analysis. However, little is known about the theoretical properties of such estimators. To the best of our knowledge, Theorems 3.1 and 3.2 are the first results on the convergence rate of such estimators under the restricted eigen-vectors of problem (11).

Remark The SPReM estimator ŵλ does not have the oracle property because of the asymptotic bias introduced by the L1 penalty; see Fan and Li (2001) and Zou (2006) for detailed discussions. However, our estimation procedure may be modified to achieve the oracle property by using nonconcave penalties or adaptive weights. We will investigate this issue in future work.

4 Numerical Examples

4.1 Simulation 1: Two Sample Test in High Dimensions

In this subsection, we consider high-dimensional two-sample testing problems and compare SPReM with the high-dimensional two-sample test (HTS) of Chen and Qin (2010) and the random projection (RP) method of Lopes et al. (2011). Both HTS and RP are state-of-the-art methods for detecting a shift between the means of two high-dimensional normal distributions. Lopes et al. (2011) showed that the random projection method outperforms several competing methods when q/n converges to a constant or ∞.

We simulated two sets of samples {y1, …, yn1} and {yn1+1, …, yn} from N(β1, ΣR) and N(β2, ΣR), respectively, where β1 and β2 are q × 1 mean vectors and ΣR = σ²(ρjj′), in which (ρjj′) is a q × q correlation matrix. We set n = 2n1 = 100 and set the dimension q of the multivariate response to 50, 100, 200, 400, and 800. We are interested in testing the null hypothesis H0 : β1 = β2 against H1 : β1 ≠ β2. This two-sample test problem can be formulated as a special case of model (1) with n = n1 + n2, B^T = [β1, β2], and C = (1, −1). Without loss of generality, we set β1 = β2 = 0 to assess the type I error rate, and then set the first ten components of β2 to 1 to assess power. We set σ² to 1 and 3 and consider three different correlation matrices as follows.

Case 1 is the independence structure, with (ρjj′) = diag(1, …, 1).

Case 2 is a weak (equicorrelation) structure, with ρjj′ = 1(j′ = j) + 0.3 × 1(j′ ≠ j).

Case 3 is a strong (autoregressive) correlation structure, with $\rho_{jj'} = 0.8^{|j'-j|}$.
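The three correlation structures and the data-generating step described above can be sketched as follows. The function names are hypothetical; n1 = 50 matches n = 2n1 = 100, and shift = 0 gives the null setting.

```python
import numpy as np

def correlation_case(case, q):
    """Correlation matrix (rho_jj') for Cases 1-3 of Simulation 1."""
    j = np.arange(q)
    if case == 1:
        return np.eye(q)                                       # independence
    if case == 2:
        return np.where(j[:, None] == j[None, :], 1.0, 0.3)    # equicorrelation 0.3
    if case == 3:
        return 0.8 ** np.abs(j[:, None] - j[None, :])          # 0.8^{|j - j'|}
    raise ValueError("case must be 1, 2, or 3")

def simulate_two_sample(case, q, sigma2=1.0, n1=50, shift=1.0, seed=0):
    """Draw n1 rows from N(beta1, Sigma_R) and n1 rows from N(beta2, Sigma_R), Sigma_R = sigma2 * rho."""
    rng = np.random.default_rng(seed)
    Sigma_R = sigma2 * correlation_case(case, q)
    beta1 = np.zeros(q)
    beta2 = np.zeros(q)
    beta2[:10] = shift                                         # shift the first ten components
    y1 = rng.multivariate_normal(beta1, Sigma_R, size=n1)
    y2 = rng.multivariate_normal(beta2, Sigma_R, size=n1)
    return np.vstack([y1, y2])

Y = simulate_two_sample(case=3, q=50, sigma2=3.0)
print(Y.shape)                                                 # (100, 50)
```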

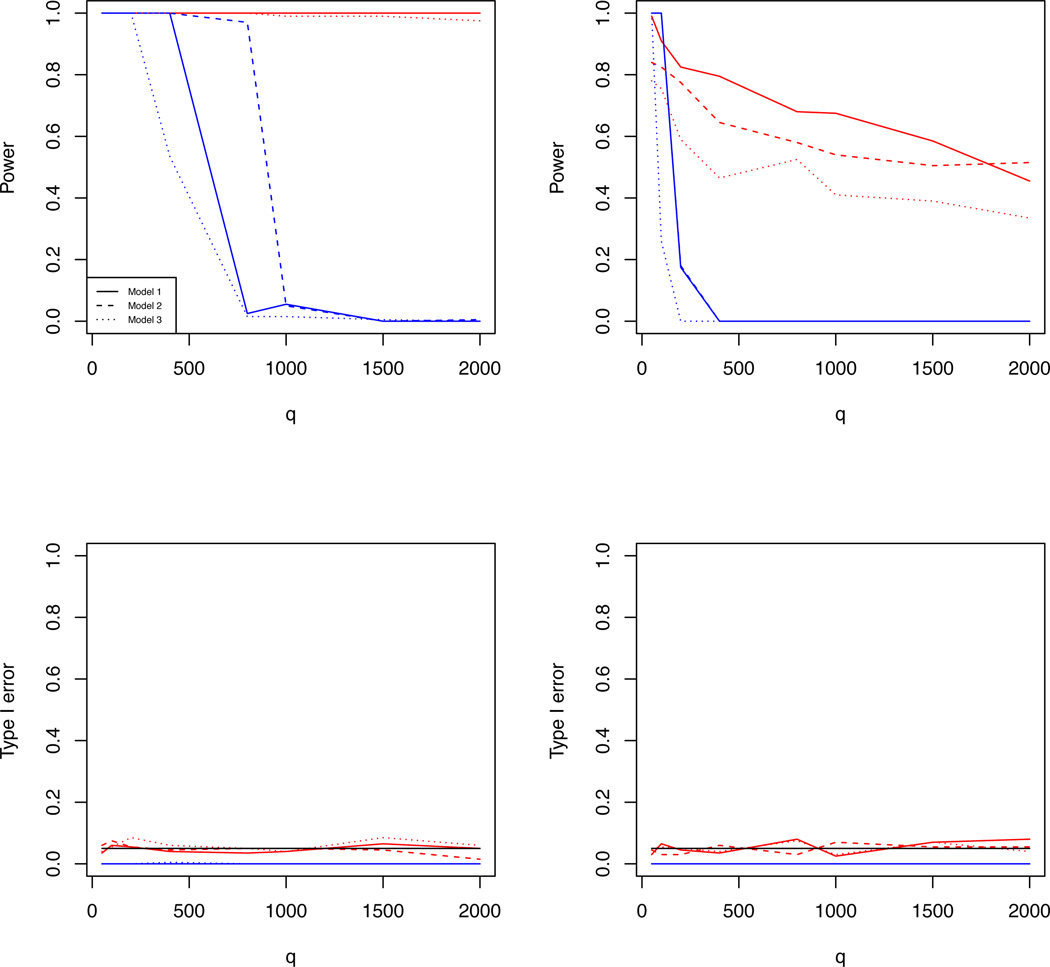

Simulation results are summarized in Tables 1 and 2. As expected, both HTS and RP perform worse as q grows, whereas SPReM performs well even for relatively large q, which is consistent with our theoretical results in Theorems 3.1 and 3.2. Moreover, HTS and RP are overly conservative in all scenarios, with empirical type I error rates at or near zero rather than the nominal 5%, whereas the type I error rates of SPReM based on the wild bootstrap remain reasonably close to 5%. To the best of our knowledge, none of the existing methods for two-sample testing in high dimensions works well in this sparse setting. For Cases 2 and 3, is not sparse, but SPReM still performs reasonably well under these correlated scenarios, which may indicate the potential of extending SPReM and its associated theory to non-sparse cases. As expected, increasing σ² decreases the power to reject the null hypothesis. Since both SPReM and RP substantially outperform HTS, we increased q to 2,000 and present additional comparisons between SPReM and RP based on 100 simulated data sets in Figure 1.

Table 1.

Simulation 1: power and type I error rates of the two-sample tests for five values of q at significance level α = 5%, with σ² = 1.

| Method | Power, q = 50 | Power, q = 100 | Power, q = 200 | Power, q = 400 | Power, q = 800 | Type I error, q = 50 | Type I error, q = 100 | Type I error, q = 200 | Type I error, q = 400 | Type I error, q = 800 |
|---|---|---|---|---|---|---|---|---|---|---|
| Case 1 | | | | | | | | | | |
| SPReM | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.035 | 0.060 | 0.055 | 0.040 | 0.035 |
| RP | 1.000 | 1.000 | 1.000 | 1.000 | 0.025 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| HTS | 0.965 | 0.320 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Case 2 | | | | | | | | | | |
| SPReM | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.060 | 0.075 | 0.055 | 0.045 | 0.050 |
| RP | 1.000 | 1.000 | 1.000 | 1.000 | 0.970 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| HTS | 1.000 | 0.245 | 0.030 | 0.005 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Case 3 | | | | | | | | | | |
| SPReM | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.040 | 0.055 | 0.085 | 0.060 | 0.050 |
| RP | 1.000 | 1.000 | 1.000 | 0.535 | 0.015 | 0.000 | 0.000 | 0.000 | 0.005 | 0.000 |
| HTS | 1.000 | 0.140 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

Table 2.

Simulation 1: power and type I error rates of the two-sample tests for five values of q at significance level α = 5%, with σ² = 3.

| Method | Power, q = 50 | Power, q = 100 | Power, q = 200 | Power, q = 400 | Power, q = 800 | Type I error, q = 50 | Type I error, q = 100 | Type I error, q = 200 | Type I error, q = 400 | Type I error, q = 800 |
|---|---|---|---|---|---|---|---|---|---|---|
| Case 1 | | | | | | | | | | |
| SPReM | 0.990 | 0.910 | 0.825 | 0.795 | 0.680 | 0.030 | 0.065 | 0.045 | 0.035 | 0.080 |
| RP | 1.000 | 1.000 | 0.175 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| HTS | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Case 2 | | | | | | | | | | |
| SPReM | 0.840 | 0.825 | 0.775 | 0.645 | 0.580 | 0.045 | 0.030 | 0.030 | 0.060 | 0.030 |
| RP | 1.000 | 1.000 | 0.180 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| HTS | 0.105 | 0.015 | 0.005 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Case 3 | | | | | | | | | | |
| SPReM | 0.780 | 0.755 | 0.590 | 0.465 | 0.525 | 0.050 | 0.055 | 0.050 | 0.040 | 0.075 |
| RP | 1.000 | 0.260 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| HTS | 0.095 | 0.005 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

Fig. 1.

Simulation 1 results: estimated rejection rates as functions of q for two values of σ². The upper and lower rows show power and type I error rates, respectively, and the left and right columns correspond to σ² = 1 and σ² = 3, respectively. In all panels, the lines for SPReM and RP are shown in red and blue, respectively, and the results for the independence, weak, and strong correlation structures are shown as thick, dashed, and dotted lines, respectively.

4.2 Simulation 2: Multiple Rank Cases

In this subsection, we evaluate the finite sample performance of SMURP. The simulation studies were designed to assess the association between a relatively high-dimensional imaging phenotype and a genetic marker (e.g., a SNP or haplotype), a common setting in imaging genetics studies, while adjusting for age and other environmental factors. We set the sample size to n = 100 and the dimension q of the multivariate phenotype to 50, 100, 200, 400, and 800, and then simulated the multivariate phenotype according to model (1). The random errors were simulated from a multivariate normal distribution with mean 0 and a covariance matrix with unit diagonal elements. For the off-diagonal elements, which characterize the correlations among the components of the multivariate phenotype, we divided the components into three categories (high, medium, and very low correlation) containing 1, 1, and q − 2 components, respectively, and then set the correlations among the components according to Table 3. The final covariance matrix is ΣR = σ²(ρjj′), where (ρjj′) is the correlation matrix. We considered σ² = 1 and 3.

Table 3.

Correlations among the high-, medium-, and low-correlation response categories used in Simulation 2

| | High | Med | Low |
|---|---|---|---|
| High | 0.9 | 0.6 | 0.3 |
| Med | 0.6 | 0.9 | 0.1 |
| Low | 0.3 | 0.1 | 0.1 |
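A minimal NumPy sketch of how ΣR for Simulation 2 could be assembled from Table 3 follows. It assumes that the components are ordered high, medium, then low, and that a diagonal entry of Table 3 is the correlation between two distinct components of the same category (so only the low-low value 0.1 is actually used, since the high and medium categories each contain a single component). The function names are illustrative, not the authors' code.

```python
import numpy as np

# Category-level correlations from Table 3, in the order (high, medium, low).
TABLE3 = np.array([[0.9, 0.6, 0.3],
                   [0.6, 0.9, 0.1],
                   [0.3, 0.1, 0.1]])


def simulation2_sigma_r(q, sigma2=1.0):
    """Sigma_R = sigma2 * (rho_jj') for Simulation 2 (sketch under stated assumptions)."""
    # 1 high-, 1 medium-, and q - 2 low-correlation components.
    group = np.array([0, 1] + [2] * (q - 2))
    rho = TABLE3[np.ix_(group, group)]  # fancy indexing returns a new q x q array
    np.fill_diagonal(rho, 1.0)          # unit diagonal, as stated in the text
    return sigma2 * rho


def simulate_errors(n, q, sigma2, rng):
    """Draw an n x q error matrix with rows i.i.d. N(0, Sigma_R) via a Cholesky factor."""
    L = np.linalg.cholesky(simulation2_sigma_r(q, sigma2))
    return rng.standard_normal((n, q)) @ L.T
```

With these values the matrix stays positive definite for the values of q used here (up to 800), although its smallest eigenvalue shrinks toward zero as q grows, so the Cholesky step may become numerically delicate for much larger q.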

For the covariates, we included two SNPs with additive effects and three additional continuous covariates. We varied the minor allele frequency (MAF) of the first SNP over six scenarios (0.05, 0.1, 0.2, 0.3, 0.4, and 0.5) and fixed the MAF of the second SNP at 0.5. We simulated the three continuous covariates from a multivariate normal distribution with mean 0, standard deviation 1, and equal pairwise correlation 0.3. We first set B = 0 to assess the type I error rate. To assess power, we set the first response to be the only component of the multivariate phenotype associated with the first SNP, and the second response to be the only component associated with the second SNP. Specifically, we set the coefficients of the two SNPs to 1 for the selected responses and all other regression coefficients to 0. We are interested in testing the joint effects of the two SNPs on phenotypic variation.
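The covariate and coefficient setup above can be sketched as follows, assuming model (1) has the form Y = XB + E with an intercept column, that genotypes are drawn as Binomial(2, MAF) minor-allele counts (i.e., under Hardy-Weinberg equilibrium), and that the columns of X are ordered as intercept, SNP1, SNP2, and the three continuous covariates. These choices and the helper names are assumptions for illustration.

```python
import numpy as np


def simulate_design(n, maf1, rng, maf2=0.5):
    """n x 6 design: intercept, SNP1, SNP2, three correlated continuous covariates."""
    snp1 = rng.binomial(2, maf1, size=n)  # additive coding: number of minor alleles
    snp2 = rng.binomial(2, maf2, size=n)
    cov = np.full((3, 3), 0.3)
    np.fill_diagonal(cov, 1.0)            # unit variance, equal correlation 0.3
    z = rng.multivariate_normal(np.zeros(3), cov, size=n)
    return np.column_stack([np.ones(n), snp1, snp2, z])


def coefficient_matrix(q, null):
    """6 x q coefficient matrix B; all zeros under the null hypothesis."""
    B = np.zeros((6, q))
    if not null:
        B[1, 0] = 1.0  # SNP1 affects only the first response
        B[2, 1] = 1.0  # SNP2 affects only the second response
    return B


def simulate_dataset(n, q, maf1, sigma_r, rng, null=False):
    """One simulated data set (X, Y) with Y = X B + E and rows of E ~ N(0, sigma_r)."""
    X = simulate_design(n, maf1, rng)
    E = rng.multivariate_normal(np.zeros(q), sigma_r, size=n)
    return X, X @ coefficient_matrix(q, null) + E
```

Under this setup, the hypothesis of interest would be that rows 1 and 2 of B (the two SNP rows) are jointly zero, which is a rank-2 restriction rather than a single contrast.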

We applied SPReM to 100 simulated data sets. To the best of our knowledge, no other method is available for this multi-rank testing problem, so we focus only on SPReM here. Table 4 presents the estimated rejection rates for different MAFs, q, and σ². SPReM works well even for relatively large q under both σ² = 1 and 3. Specifically, the wild bootstrap method controls the type I error rate well in all scenarios. In terms of power, SPReM performs reasonably well even for small MAFs and q = 800; for example, at MAF = 0.05 its power is 0.930 when σ² = 1 and 0.735 when σ² = 3. This suggests that the method may also perform well for much larger q given a larger sample size. As expected, increasing σ² decreases the power to reject the null hypothesis.

Table 4.

Simulation 2: estimated rejection rates at six MAFs, five values of q, and two values of σ² at significance level α = 5%. For each case, 100 simulated data sets were used.

| | Power | | | | | Type I error | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| MAF\q | 50 | 100 | 200 | 400 | 800 | 50 | 100 | 200 | 400 | 800 |
| σ² = 1 | | | | | | | | | | |
| 0.050 | 0.950 | 0.955 | 0.930 | 0.940 | 0.930 | 0.045 | 0.060 | 0.030 | 0.070 | 0.080 |
| 0.100 | 0.995 | 0.990 | 0.990 | 0.980 | 0.975 | 0.045 | 0.055 | 0.040 | 0.045 | 0.045 |
| 0.200 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.045 | 0.045 | 0.080 | 0.030 | 0.060 |
| 0.300 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.065 | 0.040 | 0.020 | 0.065 | 0.060 |
| 0.400 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.050 | 0.070 | 0.035 | 0.060 | 0.070 |
| 0.500 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.060 | 0.050 | 0.030 | 0.020 | 0.035 |
| σ² = 3 | | | | | | | | | | |
| 0.050 | 0.915 | 0.875 | 0.765 | 0.795 | 0.735 | 0.050 | 0.040 | 0.030 | 0.050 | 0.065 |
| 0.100 | 0.970 | 0.960 | 0.940 | 0.875 | 0.865 | 0.040 | 0.055 | 0.070 | 0.080 | 0.050 |
| 0.200 | 0.995 | 0.985 | 0.975 | 0.975 | 0.970 | 0.015 | 0.050 | 0.060 | 0.010 | 0.065 |
| 0.300 | 1.000 | 1.000 | 0.990 | 0.970 | 0.955 | 0.045 | 0.055 | 0.055 | 0.080 | 0.040 |
| 0.400 | 0.995 | 1.000 | 1.000 | 0.990 | 0.985 | 0.055 | 0.035 | 0.045 | 0.050 | 0.070 |
| 0.500 | 0.995 | 1.000 | 1.000 | 0.985 | 0.980 | 0.085 | 0.055 | 0.055 | 0.065 | 0.030 |

4.3 Alzheimer’s Disease Neuroimaging Initiative (ADNI) Data Analysis

The development of SPReM is motivated by the joint analysis of imaging, genetic, and clinical variables in the ADNI study. “Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.ucla.edu). The ADNI was launched in 2003 by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the Food and Drug Administration (FDA), private pharmaceutical companies and non-profit organizations, as a $60 million, 5-year publicprivate partnership. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). Determination of sensitive and specific markers of very early AD progression is intended to aid researchers and clinicians to develop new treatments and monitor their effectiveness, as well as lessen the time and cost of clinical trials. The Principal Investigator of this initiative is Michael W. Weiner, MD, VA Medical Center and University of California, San Francisco. ADNI is the result of efforts of many coinvestigators from a broad range of academic institutions and private corporations, and subjects have been recruited from over 50 sites across the U.S. and Canada. The initial goal of ADNI was to recruit 800 subjects but ADNI has been followed by ADNI-GO and ADNI-2. To date these three protocols have recruited over 1500 adults, ages 55 to 90, to participate in the research, consisting of cognitively normal older individuals, people with early or late MCI, and people with early AD. The follow up duration of each group is specified in the protocols for ADNI-1, ADNI-2 and ADNI-GO. Subjects originally recruited for ADNI-1 and ADNI-GO had the option to be followed in ADNI-2. 
For up-to-date information, see www.adni-info.org.”

The Human 610-Quad BeadChip (Illumina, Inc., San Diego, CA) was used to genotype 818 subjects in the ADNI-1 database, yielding a set of 620,901 SNP and copy number variation (CNV) markers. Because the Apolipoprotein E (ApoE) SNPs rs429358 and rs7412 are not on the Human 610-Quad BeadChip, they were genotyped separately and added to the data set manually. For simplicity, we considered only the 10,479 SNPs on chromosome 19, which houses the ApoE gene, a well-established genetic risk factor for Alzheimer’s disease. A complete GWAS of ADNI will be reported elsewhere. The SNP data were preprocessed by standard quality control steps: dropping any SNP with more than 5% missing data, imputing the missing values of each SNP with its mode, dropping SNPs with minor allele frequency < 0.05, and screening out SNPs that violate Hardy-Weinberg equilibrium. This left 8,983 SNPs on chromosome 19, with the ApoE allele included as the last SNP in our data set.
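The four quality-control steps listed above can be sketched as below, assuming genotypes are coded 0/1/2 (additive allele counts) with NaN for missing calls. The text does not say which Hardy-Weinberg test or cut-off was used, so the 1-df chi-square test and the threshold `hwe_alpha = 1e-6` are assumptions, as is the function name `snp_qc`.

```python
import numpy as np
from scipy.stats import chi2


def snp_qc(G, max_missing=0.05, min_maf=0.05, hwe_alpha=1e-6):
    """Return (filtered genotype matrix, original column indices kept).

    G: n x p array of genotype codes 0/1/2, with np.nan for missing calls.
    hwe_alpha is a hypothetical threshold; it is not stated in the text.
    """
    G = np.asarray(G, dtype=float)
    idx = np.arange(G.shape[1])

    # Step 1: drop SNPs with more than max_missing proportion of missing calls.
    # Boolean indexing returns a copy, so the caller's array is left untouched.
    keep = np.isnan(G).mean(axis=0) <= max_missing
    G, idx = G[:, keep], idx[keep]

    # Step 2: impute remaining missing calls with the SNP's most frequent genotype.
    for j in range(G.shape[1]):
        col = G[:, j]  # a view into G, so the assignment below edits G in place
        miss = np.isnan(col)
        if miss.any():
            vals, counts = np.unique(col[~miss], return_counts=True)
            col[miss] = vals[np.argmax(counts)]

    # Step 3: drop SNPs whose minor allele frequency is below min_maf.
    p = G.mean(axis=0) / 2.0  # frequency of the allele coded as 1
    keep = np.minimum(p, 1.0 - p) >= min_maf
    G, idx, p = G[:, keep], idx[keep], p[keep]

    # Step 4: 1-df chi-square goodness-of-fit test of Hardy-Weinberg equilibrium.
    n = G.shape[0]
    obs = np.stack([(G == g).sum(axis=0) for g in (0, 1, 2)])
    exp = n * np.stack([(1 - p) ** 2, 2 * p * (1 - p), p ** 2])
    stat = ((obs - exp) ** 2 / exp).sum(axis=0)
    keep = chi2.sf(stat, df=1) >= hwe_alpha
    return G[:, keep], idx[keep]
```

The order of the steps follows the text: imputation happens before the MAF and Hardy-Weinberg filters, so both filters see the imputed genotypes.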

Our problem of interest is to search for associations between the 10,479 SNPs on chromosome 19 and the brain volumes of 93 regions of interest (ROIs). We fitted model (1) with all 93 ROIs as responses and a covariate vector including an intercept, a specific SNP, age, gender, whole brain volume, and the top 5 principal components to account for population stratification. To further reduce population stratification effects, we used only the 761 Caucasians among the 818 subjects. Removing subjects with missing values left 747 subjects. We set λ = λmax in SPReM for computational efficiency. To test the effect of each SNP on all 93 ROIs jointly, we calculated the test statistic and its p-value for each SNP. For comparison, we also performed a standard mass univariate analysis: we fitted a linear model with the same set of covariates to each ROI and calculated a p-value for every ROI-SNP pair.
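The mass univariate analysis amounts to fitting the same linear model separately to each ROI and testing the SNP coefficient. Because all ROIs share one design matrix, the per-ROI fits can be vectorized, as in the sketch below. The function name and the use of a two-sided t-test are assumptions about details not spelled out here.

```python
import numpy as np
from scipy import stats


def mass_univariate_pvalues(Y, X, j):
    """Two-sided OLS t-test p-values for coefficient j, fitted separately to each column of Y.

    Y: n x q responses (e.g., ROI volumes); X: n x p design shared by all responses
    (intercept, SNP, and adjustment covariates); j: column index of the SNP in X.
    """
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    B_hat = XtX_inv @ X.T @ Y                     # p x q: one OLS fit per response column
    resid = Y - X @ B_hat
    sigma2 = (resid ** 2).sum(axis=0) / (n - p)   # residual variance of each response
    se = np.sqrt(sigma2 * XtX_inv[j, j])          # standard error of coefficient j
    t = B_hat[j] / se
    return 2 * stats.t.sf(np.abs(t), df=n - p)
```

Running this once per SNP (with that SNP placed in column j) gives one p-value per ROI-SNP pair, as described above.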

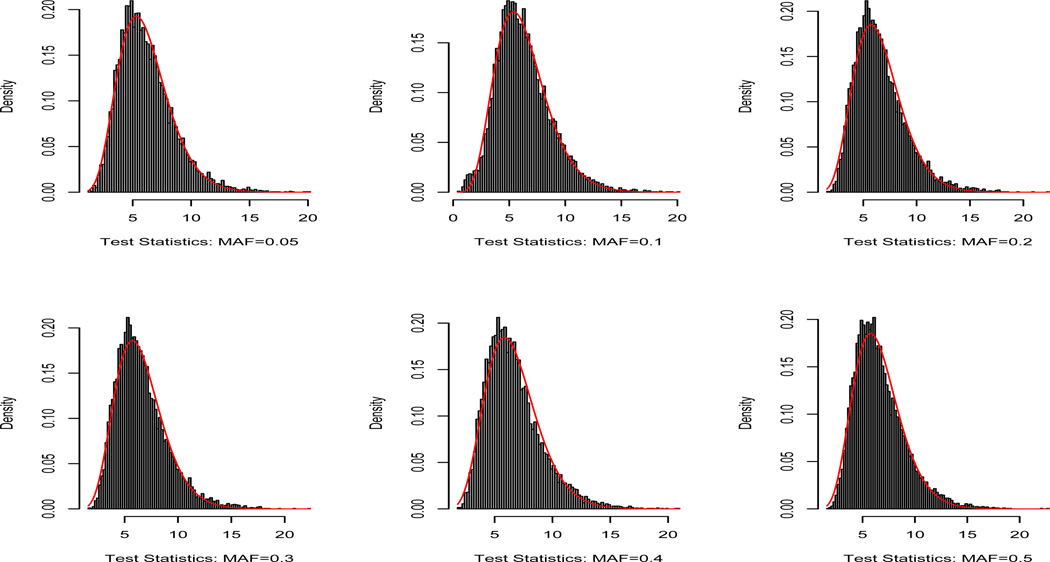

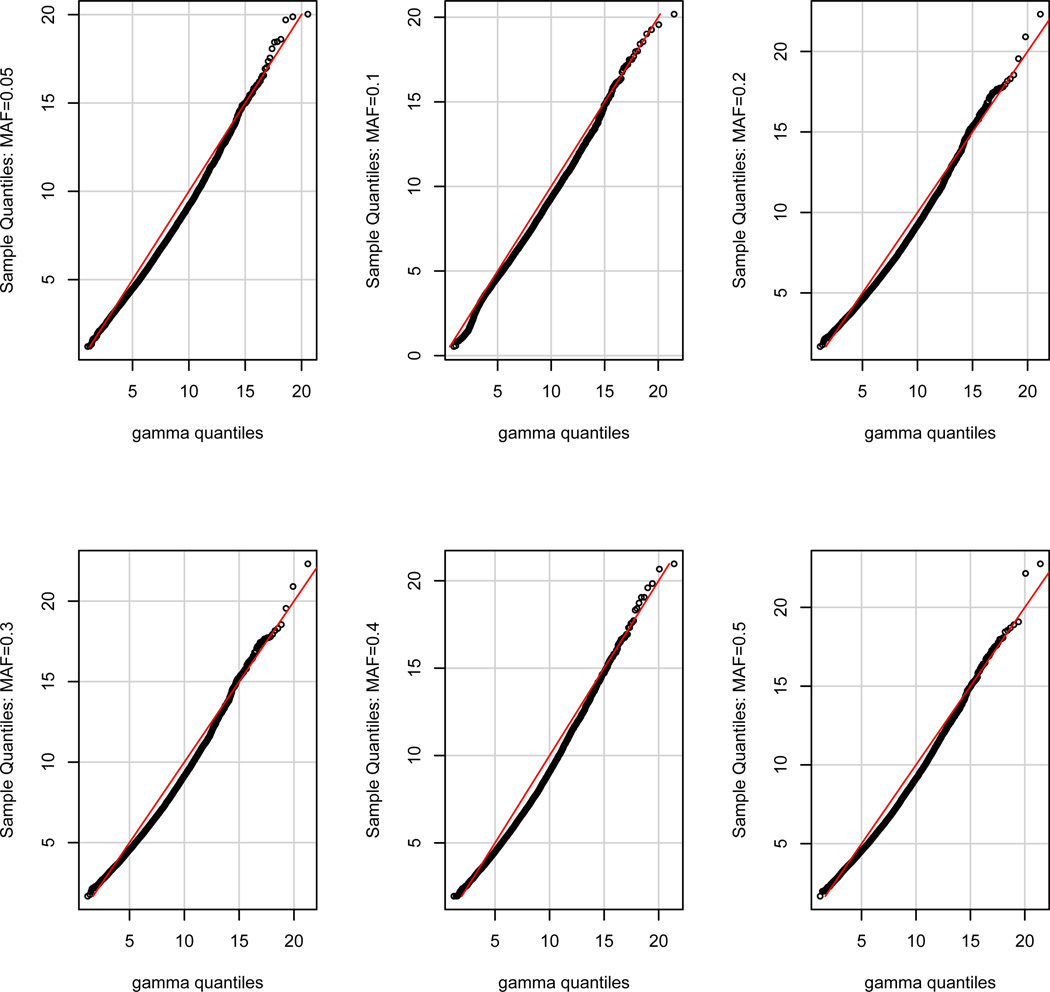

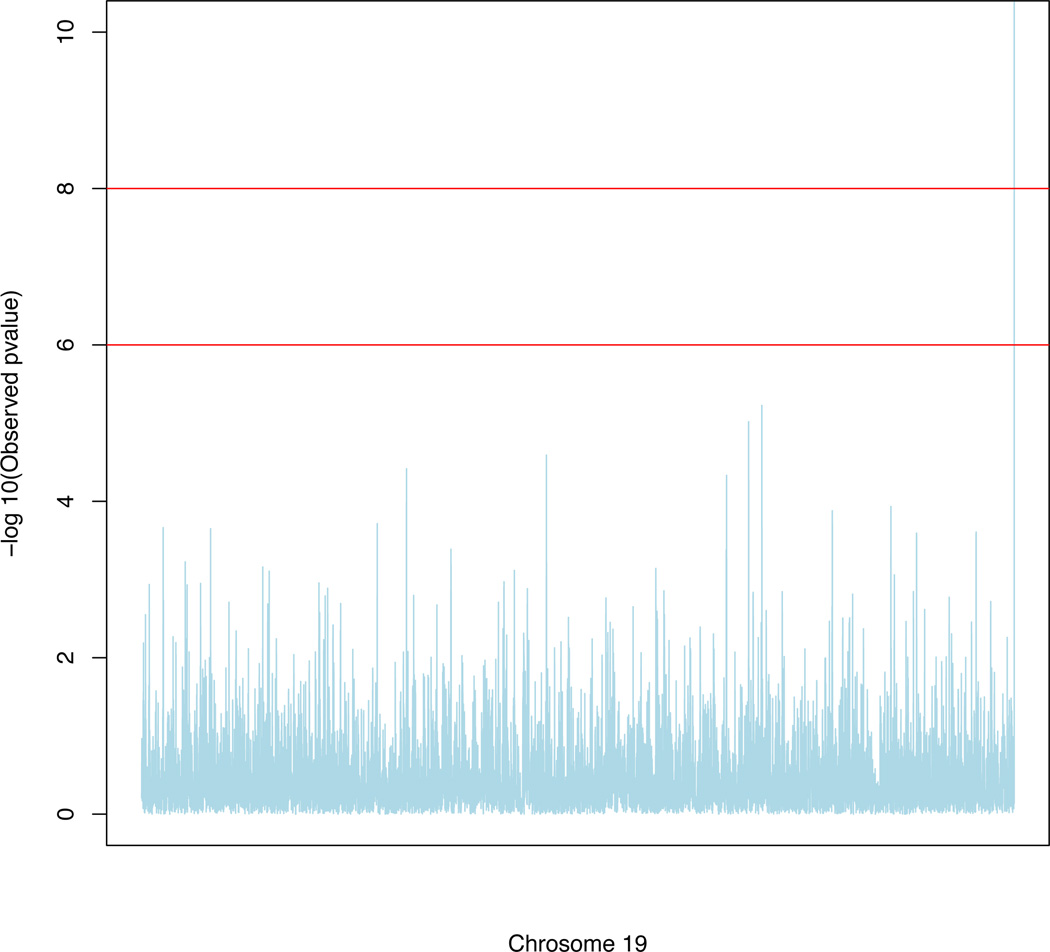

We developed a computationally efficient strategy to approximate the p-value of each SNP across different MAFs. In the real data analysis, we considered a pool of SNPs consisting of 6 MAF groups: MAF ∈ (0.05, 0.075], (0.075, 0.15], (0.15, 0.25], (0.25, 0.35], (0.35, 0.45], and (0.45, 0.50]. Each MAF group contains 40 SNPs. For each SNP, we generated 10,000 wild bootstrap samples under the null hypothesis to obtain 10,000 bootstrapped test statistics. Then, based on the 40 × 10,000 bootstrapped statistics in each MAF group, we used the Satterthwaite method to approximate the null distribution of the test statistic by a Gamma distribution with parameters (aT, bT). Specifically, we set the shape aT = ε²/ν and the scale bT = ν/ε, which match the mean (ε) and the variance (ν) of the bootstrapped test statistics with those of the Gamma distribution. The histograms and the fitted gamma distributions, along with the QQ-plots, are presented in Figures 2 and 3, respectively. Figures 2 and 3 show that the gamma approximations work reasonably well for a wide range of MAFs when λ = λmax. Since Gamma(aT, bT) is used only to approximate the p-values of large test statistics, a good approximation is needed only in the upper tail of the distribution; see Figure 3 for details. For each SNP, we matched its MAF to the closest MAF group in the pool and then calculated the p-value of its test statistic from the corresponding approximating gamma distribution. We present the Manhattan plot in Figure 4, and the top 10 SNPs with their p-values for SPReM and the mass univariate analysis in Table 5, for λ = λmax.
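The p-value approximation above can be sketched in three pieces: null draws from a wild bootstrap, a moment-matched Gamma fit, and an upper-tail p-value. The SPReM test statistic is not reproduced here, so `stat_fn` is a placeholder for any scalar statistic. Rademacher (±1) multipliers are one common wild bootstrap choice and are an assumption, as is reading "closest MAF group" as the group interval that contains a SNP's MAF. All function names are hypothetical.

```python
import numpy as np
from scipy import stats

# Edges of the six MAF groups (0.05, 0.075], (0.075, 0.15], ..., (0.45, 0.50].
MAF_EDGES = np.array([0.05, 0.075, 0.15, 0.25, 0.35, 0.45, 0.50])


def wild_bootstrap_null(Y, X0, stat_fn, n_boot, rng):
    """Null draws of stat_fn(Y*) from a Rademacher wild bootstrap (hypothetical helper).

    Y: n x q responses; X0: n x p0 design of the null model (without the SNP).
    stat_fn: maps an n x q response matrix to a scalar test statistic.
    """
    B0, *_ = np.linalg.lstsq(X0, Y, rcond=None)
    fitted = X0 @ B0
    resid = Y - fitted
    draws = np.empty(n_boot)
    for b in range(n_boot):
        # One random sign per subject, shared by all q responses, so the
        # within-subject correlation among responses is preserved.
        v = rng.choice([-1.0, 1.0], size=(Y.shape[0], 1))
        draws[b] = stat_fn(fitted + v * resid)
    return draws


def fit_gamma_moments(draws):
    """Moment matching: shape aT = eps^2 / nu and scale bT = nu / eps."""
    eps = np.mean(draws)        # epsilon: mean of the bootstrapped statistics
    nu = np.var(draws, ddof=1)  # nu: their variance
    return eps ** 2 / nu, nu / eps


def maf_group(maf):
    """Index i of the group (MAF_EDGES[i], MAF_EDGES[i + 1]] containing maf."""
    i = np.searchsorted(MAF_EDGES, maf, side="left") - 1
    return int(np.clip(i, 0, len(MAF_EDGES) - 2))


def gamma_pvalue(t_obs, shape, scale):
    """Upper-tail p-value P(T >= t_obs) under Gamma(shape, scale)."""
    return float(stats.gamma.sf(t_obs, a=shape, scale=scale))
```

With shape aT and scale bT, the Gamma mean is aT·bT = ε and the variance is aT·bT² = ν, which is exactly the moment match described in the text. In the setting above, the draws for the 40 SNPs of a MAF group would be pooled before calling `fit_gamma_moments`, and each SNP's observed statistic would be referred to the fit of the group returned by `maf_group`.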

Fig. 2.

Histograms and their gamma approximations based on the wild bootstrap samples under the null hypothesis for different MAFs for λ = λmax.

Fig. 3.

QQ-plot of the gamma approximations based on the wild bootstrap samples under the null hypothesis for different MAFs for λ = λmax.

Fig. 4.

ADNI GWAS results: Manhattan plot of −log10(p)-values on chromosome 19 by SPReM for λ = λmax.

Table 5.

Comparison between SPReM and the mass univariate analysis (MUA) for the ADNI data analysis: the top 10 SNPs and their p-values for λ = λmax.

| SNP | apoe_allele | rs11667587 | rs2075650 | rs7248284 | rs3745341 |
|---|---|---|---|---|---|
| SPReM | 5.04E-16 | 5.95E-06 | 9.58E-06 | 2.56E-05 | 3.83E-05 |
| MUA | 3.43E-11 | 4.42E-04 | 1.12E-04 | 8.75E-04 | 1.00E-03 |

| SNP | rs4803646 | rs8106200 | rs2445830 | rs8102864 | rs740436 |
|---|---|---|---|---|---|
| SPReM | 4.65E-05 | 1.16E-04 | 1.32E-04 | 1.93E-04 | 2.17E-04 |
| MUA | 7.56E-04 | 3.70E-03 | 1.33E-02 | 9.34E-04 | 1.63E-03 |

We have several important findings. The ApoE allele was identified as the most significant SNP, with −log10(p) of approximately 15.3 for SPReM and 10.5 for the mass univariate analysis, indicating a strong association between the ApoE allele and the imaging phenotype, a biomarker for Alzheimer’s disease diagnosis. This finding agrees with the previous result in Vounou et al. (2012). We also found interesting results for rs2075650 on the TOMM40 gene, which is among the top 10 significant SNPs, with −log10(p) of approximately 5.0 and 4.0, respectively. The TOMM40 gene lies in close proximity to the ApoE gene and has also been linked to AD in recent studies (Vounou et al., 2012). We also detected additional SNPs on chromosome 19, such as rs11667587 on the NOVA2 gene, which, to our knowledge, have not been identified in existing genome-wide association studies and may merit further investigation in Alzheimer’s research. The p-values for the top 10 SNPs calculated from SPReM are much smaller than those from the mass univariate analysis, suggesting that the mass univariate analysis would require considerably more samples to achieve comparable p-values. These results illustrate the gain in power from the proposed method.
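The conversion between the p-values reported in Table 5 and the −log10(p) scale used in the Manhattan plot and in the discussion is shown below for the ApoE allele and rs2075650; the p-values are copied from Table 5 and nothing else is assumed.

```python
import math

# p-values (SPReM, MUA) copied from Table 5.
table5 = {
    "apoe_allele": (5.04e-16, 3.43e-11),
    "rs2075650": (9.58e-06, 1.12e-04),
}


def neg_log10(p):
    """Transform a p-value to the -log10(p) scale."""
    return -math.log10(p)


for snp, (p_sprem, p_mua) in table5.items():
    print(f"{snp}: SPReM {neg_log10(p_sprem):.2f}, MUA {neg_log10(p_mua):.2f}")
# apoe_allele: SPReM 15.30, MUA 10.46
# rs2075650: SPReM 5.02, MUA 3.95
```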

5 Discussion

In this paper, we have developed a general SPReM framework based on two heritability ratios. The SPReM methodology has a wide range of applications, including sparse linear discriminant analysis, two-sample tests, and general hypothesis tests in MLMs, among many others. We have systematically investigated the L2 convergence rate of ŵλ in the ultrahigh-dimensional setting. We further extended the SURP problem to SMURP and proposed a sequential SURP approximation algorithm. Simulation studies and a real data analysis demonstrate that SPReM performs favorably compared with other state-of-the-art methods.

6 Assumptions and Proofs

The following assumptions are used throughout the paper to facilitate the technical derivations, although they are not necessarily the weakest possible conditions.

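Assumption A1. $C(n^{-1}X^TX)^{-1}C^T \asymp 1$; that is, there exist positive constants $c_0$ and $C_0$ such that $c_0 \le C(n^{-1}X^TX)^{-1}C^T \le C_0$.

Assumption A2. There exists a constant $\epsilon_0 > 0$ such that $\epsilon_0 \le \lambda_{\min}(\Sigma_R) \le \lambda_{\max}(\Sigma_R) \le 1/\epsilon_0$.

Assumption A3. The covariance estimator $\tilde\Sigma_R$ satisfies $\|\tilde\Sigma_R - \Sigma_R\|_2 = O_p(a_n) = o_p(1)$.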

Remark: Assumption A1 is a weak and standard assumption for regression models. Assumption A2 has been widely used in the literature. Assumption A3 requires a relatively accurate covariance estimator in terms of spectral-norm convergence; several regularized estimators of ΣR can be used for this purpose under different structural assumptions on ΣR (Bickel and Levina, 2008; Cai et al., 2010; Lam and Fan, 2009; Rothman et al., 2009; Fan et al., 2013).

Proof of Theorem 2.1 The Karush-Kuhn-Tucker (KKT) conditions for problem (13) are given by:

where Γ is a q × 1 vector equal to a subgradient of ‖w‖1 with respect to w. We consider two scenarios. First, suppose that |ℓj| > λ for some j. Then we must have γΣRw ≠ 0, which leads to γ > 0 and wᵀΣRw = 1. Thus, the KKT conditions reduce to

If we write w̃ = γw, this is equivalent to solving problem (15) for w̃ and then normalizing. Second, if |ℓj| ≤ λ for all j, then w = 0 and γ = 0, which is also the solution of (15). This finishes the proof.

Proof of Proposition 2.2 It follows from Theorem 2 of Rosset and Zhu (2007).

Proof of Proposition 2.3 The proof is similar to that of Proposition 1 of Witten and Tibshirani (2011). Letting , then problem (20) can be rewritten as

which is equivalent to

| (34) |

where . Thus, w̃k and uk that solve problem (34) are the k-th left and right singular vectors of A (Witten and Tibshirani, 2011). Therefore, we have and uk is the k-th eigenvector of AᵀA, or equivalently the k-th right singular vector of A. For problem (34), w̃k is the k-th left singular vector of A. Therefore, the solution of (21) is the k-th discriminant vector of (20).
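The step above relies on a standard linear-algebra fact: the right singular vectors of a matrix A are the eigenvectors of AᵀA, with eigenvalues equal to the squared singular values, and A v_k = d_k u_k links the k-th right and left singular vectors. The short NumPy check below illustrates this on a random matrix standing in for the A of (34); it is an illustration only, not part of the proof.

```python
import numpy as np


def check_svd_eigen_link(A):
    """Return True if the right singular vectors of A match the eigenvectors of A^T A."""
    U, d, Vt = np.linalg.svd(A, full_matrices=False)  # singular values in descending order
    evals, evecs = np.linalg.eigh(A.T @ A)            # eigh returns ascending order
    evals, evecs = evals[::-1], evecs[:, ::-1]
    ok = np.allclose(evals, d ** 2)
    for k in range(len(d)):
        # Singular vectors and eigenvectors are unique only up to sign.
        ok &= np.isclose(abs(evecs[:, k] @ Vt[k]), 1.0)
        # A v_k = d_k u_k ties the k-th right and left singular vectors together.
        ok &= np.allclose(A @ Vt[k], d[k] * U[:, k])
    return bool(ok)
```

The sign check is needed because eigenvectors and singular vectors are only determined up to sign; the comparison assumes distinct singular values, which holds almost surely for a random Gaussian matrix.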

Proof of Theorem 3.1 In this theorem, we specifically use the banded covariance estimator $\tilde\Sigma_R = B_{k_n}(\hat\Sigma_R)$, where $B_k(\Sigma) = [\sigma_{jj'} I(|j' - j| \le k)]$ and $\hat\Sigma_R$ is the sample covariance matrix of the residuals $y_i - \hat B^T x_i$.
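The banded estimator used in this proof can be sketched as follows: compute OLS residuals y_i − B̂ᵀx_i, take their sample covariance, and zero out entries more than k positions from the diagonal. The divisor of the sample covariance is not specified here; `np.cov` uses n − 1 and centers the residuals (which changes nothing when X contains an intercept). The function names are hypothetical, and the choice of the bandwidth k_n is left to the user.

```python
import numpy as np


def band(S, k):
    """Banding operator B_k(S) = [s_jj' * I(|j' - j| <= k)] (Bickel and Levina, 2008)."""
    j = np.arange(S.shape[0])
    return S * (np.abs(j[:, None] - j[None, :]) <= k)


def banded_residual_cov(Y, X, k):
    """Banded estimate of Sigma_R from OLS residuals y_i - B_hat^T x_i."""
    B_hat, *_ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ B_hat
    S_hat = np.cov(resid, rowvar=False)  # q x q sample covariance (divisor n - 1)
    return band(S_hat, k)
```

Banding keeps the estimate symmetric, but it is not guaranteed to be positive definite in finite samples.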

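First, we define $\mathcal{J} = \{\|\tilde\Sigma_R - B_{k_n}(\Sigma_R)\|_\infty \le t_1\} \cap \{\|\hat\ell - \ell\|_\infty \le t_2\}$, where $t_1$ and $t_2$ are specified as in Lemma 6.2. Then, it follows from Lemma 6.2 that $P(\mathcal{J}) \ge 1 - 3(q \vee n)^{-\eta_1} - 2(q \vee n)^{-\eta_2}$.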

On the set 𝒥, by taking and using Lemma 6.1, we have

Let $w_{0,S_0} = [w_{0,j} I(j \in S_0)]$, where $w_{0,j}$ is the $j$-th component of $w_0$. The above equation can be rewritten as

which yields

Finally, we obtain the following inequality

which finishes the proof.

Proof of Theorem 3.2 It follows from Lemma 6.1 that

Note that . Then, by taking

we have

By using Weyl’s inequality, we have

where ‖Σ̃R − ΣR‖2 = Op(an) = op(1). Finally, we have

| (35) |

which finishes the proof.

Lemma 6.1 We have the following basic inequality

| (36) |

Proof We rewrite the optimization problem (28) as

Thus, we have

which yields

in which we have used ℓ̂ = ΣRw0 + ℓ̂ − ℓ, which holds because ℓ = ΣRw0, in the last equality.

Lemma 6.2 For all and , we have

| (37) |

Proof First, it follows from Lemma A.3 of Bickel and Levina (2008) that

where .

Second, we know that is Sub(1)-distributed, where . Then, by the union bound, we have

| (38) |

By taking , we can rewrite the above inequality as

Finally, we get

which finishes the proof.

Acknowledgments

The research of Drs. Zhu and Ibrahim was supported by NIH grants RR025747-01, GM70335, CA74015, P01CA142538-01, MH086633, EB005149-01 and AG033387. The research of Dr. Liu was supported by NSF Grant DMS-07-47575 and NIH Grant NIH/NCI R01 CA-149569. Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; BioClinica, Inc.; Biogen Idec Inc.; Bristol-Myers Squibb Company; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; GE Healthcare; Innogenetics, N.V.; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Medpace, Inc.; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Synarc Inc.; and Takeda Pharmaceutical Company. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Disease Cooperative Study at the University of California, San Diego. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of California, Los Angeles. This research was also supported by NIH grants P30 AG010129 and K01 AG030514.

Footnotes

Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.ucla.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.ucla.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf. We thank the Editor, the Associate Editor, and two anonymous referees for valuable suggestions, which greatly helped to improve our presentation.

Contributor Information

Qiang Sun, Email: qsun@bios.unc.edu, Department of Biostatistics, University of North Carolina at Chapel Hill, NC 27599-7420.

Hongtu Zhu, Email: hzhu@bios.unc.edu, Department of Biostatistics, University of North Carolina at Chapel Hill, NC 27599-7420.

Yufeng Liu, Email: yiu@email.unc.edu, Department of Statistics and Operations Research, University of North Carolina at Chapel Hill, CB 3260, Chapel Hill, NC 27599.

Joseph G. Ibrahim, Email: ibrahim@bios.unc.edu, Department of Biostatistics, University of North Carolina at Chapel Hill, NC 27599-7420.

References

- Amos CI, Elston RC, Bonney GE, Keats BJB, Berenson GS. A multivariate method for detecting genetic linkage, with application to a pedigree with an adverse lipoprotein phenotype. Am. J. Hum. Genet. 1990;47:247–254.

- Amos CI, Laing AE. A comparison of univariate and multivariate tests for genetic linkage. Genetic Epidemiology. 1993;84:303–310. doi: 10.1002/gepi.1370100657.

- Bickel PJ, Levina E. Regularized estimation of large covariance matrices. The Annals of Statistics. 2008;36:199–227.

- Breiman L, Friedman J. Predicting multivariate responses in multiple linear regression. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 1997;59:3–54.

- Cai T, Zhang C, Zhou H. Optimal rates of convergence for covariance matrix estimation. The Annals of Statistics. 2010;38:2118–2144.

- Chen S, Qin Y. A two-sample test for high-dimensional data with applications to gene-set testing. The Annals of Statistics. 2010;38:808–835.

- Chiang MC, Barysheva M, Toga AW, Medland SE, Hansell NK, James MR, McMahon KL, de Zubicaray GI, Martin NG, Wright MJ, Thompson PM. BDNF gene effects on brain circuitry replicated in 455 twins. NeuroImage. 2011a;55:448–454. doi: 10.1016/j.neuroimage.2010.12.053.

- Chiang MC, McMahon KL, de Zubicaray GI, Martin NG, Hickie I, Toga AW, Wright MJ, Thompson PM. Genetics of white matter development: A DTI study of 705 twins and their siblings aged 12 to 29. NeuroImage. 2011b;54:2308–2317. doi: 10.1016/j.neuroimage.2010.10.015.

- Chun H, Keles S. Sparse partial least squares regression for simultaneous dimension reduction and variable selection. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 2010;72:3–25. doi: 10.1111/j.1467-9868.2009.00723.x.

- Cook RD, Helland IS, Su Z. Envelopes and partial least squares regression. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 2013. To appear.

- Cook RD, Li B, Chiaromonte F. Envelope models for parsimonious and efficient multivariate linear regression. Statistica Sinica. 2010;20:927–1010.

- Fan J, Feng Y, Tong X. A road to classification in high dimensional space: the regularized optimal affine discriminant. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 2012;74:745–771. doi: 10.1111/j.1467-9868.2012.01029.x.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.

- Fan J, Liao Y, Mincheva M. Large covariance estimation by thresholding principal orthogonal complements. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 2013;75:603–680. doi: 10.1111/rssb.12016.

- Formisano E, Martino FD, Valente G. Multivariate analysis of fMRI time series: classification and regression of brain responses using machine learning. Magnetic Resonance Imaging. 2008;26:921–934. doi: 10.1016/j.mri.2008.01.052.

- Kherif F, Poline JB, Flandin G, Benali H, Simon O, Dehaene S, Worsley KJ. Multivariate model specification for fMRI data. NeuroImage. 2002;16:1068–1083. doi: 10.1006/nimg.2002.1094.

- Klei L, Luca D, Devlin B, Roeder K. Pleiotropy and principle components of heritability combine to increase power for association. Genetic Epidemiology. 2008;32:9–19. doi: 10.1002/gepi.20257.

- Knickmeyer RC, Gouttard S, Kang C, Evans D, Wilber K, Smith JK, Hamer RM, Lin W, Gerig G, Gilmore JH. A structural MRI study of human brain development from birth to 2 years. J Neurosci. 2008;28:12176–12182. doi: 10.1523/JNEUROSCI.3479-08.2008.

- Krishnan A, Williams LJ, McIntosh AR, Abdi H. Partial least squares (PLS) methods for neuroimaging: a tutorial and review. NeuroImage. 2011;56:455–475. doi: 10.1016/j.neuroimage.2010.07.034.

- Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrix estimation. The Annals of Statistics. 2009;37:4254–4278. doi: 10.1214/09-AOS720.

- Lange K, Hunter DR, Yang I. Optimization transfer using surrogate objective functions. Journal of Computational and Graphical Statistics. 2000;9:1–20.

- Lenroot RK, Giedd JN. Brain development in children and adolescents: insights from anatomical magnetic resonance imaging. Neurosci Biobehav Rev. 2006;30:718–729. doi: 10.1016/j.neubiorev.2006.06.001.

- Lin J, Zhu H, Knickmeyer R, Styner M, Gilmore J, Ibrahim J. Projection regression models for multivariate imaging phenotype. Genetic Epidemiology. 2012;36:631–641. doi: 10.1002/gepi.21658.

- Lopes ME, Jacob LJ, Wainwright MJ. A more powerful two-sample test in high dimensions using random projection. arXiv preprint arXiv:1108.2401. 2011.

- Ott J, Rabinowitz D. A principle-components approach based on heritability for combining phenotype information. Human Heredity. 1999;49:106–111. doi: 10.1159/000022854.

- Paus T. Population neuroscience: Why and how. Human Brain Mapping. 2010;31:891–903. doi: 10.1002/hbm.21069.

- Peng J, Zhu J, Bergamaschi A, Han W, Noh D, Pollack JR, Wang P. Regularized multivariate regression for identifying master predictors with application to integrative genomics study of breast cancer. Annals of Applied Statistics. 2010;4:53–77. doi: 10.1214/09-AOAS271SUPP.

- Peper JS, Brouwer RM, Boomsma DI, Kahn RS, Pol HEH. Genetic influences on human brain structure: A review of brain imaging studies in twins. Human Brain Mapping. 2007;28:464–473. doi: 10.1002/hbm.20398.

- Rosset S, Zhu J. Piecewise linear regularized solution paths. The Annals of Statistics. 2007;35:1012–1030.

- Rothman AJ, Levina E, Zhu J. Generalized thresholding of large covariance matrices. Journal of the American Statistical Association. 2009;104:177–186.

- Rowe DB, Hoffmann RG. Multivariate statistical analysis in fMRI. IEEE Eng Med Biol Mag. 2006;25:60–64. doi: 10.1109/memb.2006.1607670.

- Scharinger C, Rabl U, Sitte HH, Pezawas L. Imaging genetics of mood disorders. NeuroImage. 2010;53:810–821. doi: 10.1016/j.neuroimage.2010.02.019.

- Teipel SJ, Born C, Ewers M, Bokde ALW, Reiser MF, Moller HJ, Hampel H. Multivariate deformation-based analysis of brain atrophy to predict Alzheimer's disease in mild cognitive impairment. NeuroImage. 2007;38:13–24. doi: 10.1016/j.neuroimage.2007.07.008.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 1996;58:267–288.

- Vounou M, Janousova E, Wolz R, Stein J, Thompson P, Rueckert D, Montana G, ADNI. Sparse reduced-rank regression detects genetic associations with voxel-wise longitudinal phenotypes in Alzheimer's disease. NeuroImage. 2012;60:700–716. doi: 10.1016/j.neuroimage.2011.12.029.

- Witten DM, Tibshirani R. Penalized classification using Fisher's linear discriminant. Journal of the Royal Statistical Society, Series B, Statistical Methodology. 2011;73:753–772. doi: 10.1111/j.1467-9868.2011.00783.x.

- Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101:1418–1429.