Abstract

Torsional eye movements are rotations of the eye around the line of sight. Measuring torsion is essential to understanding how the brain controls eye position and how it creates a veridical perception of object orientation in three dimensions. Torsion is also important for diagnosis of many vestibular, neurological, and ophthalmological disorders. Currently, there are multiple devices and methods that produce reliable measurements of horizontal and vertical eye movements. Measuring torsion noninvasively and reliably, however, has been a longstanding challenge, with previous methods lacking real-time capabilities or suffering from intrusive artifacts. We propose a novel method for measuring eye movements in three dimensions using modern computer vision software (OpenCV) and concepts of iris recognition. To measure torsion, we use template matching of the entire iris and automatically account for occlusion of the iris and pupil by the eyelids. The current setup operates binocularly at 100 Hz with noise <0.1° and is accurate within 20° of gaze to the left, to the right, and up, and within 10° of gaze down. This new method can be widely applicable and fill a gap in many scientific and clinical disciplines.

Keywords: eye tracking, torsional eye movements, Listing's law, vestibulo-ocular reflex (VOR)

Introduction

The recording of eye movements is a strategic tool in many fields of contemporary neuroscience for both human and animal studies. Any complete description and understanding of ocular motor control requires information about how the brain specifies the orientation of the globe around its line of sight. Clinically, eye movements are used as biomarkers for diagnosis and to monitor the course of disease or the effects of treatments in many common disorders, including degenerative neurological diseases, vestibular disorders, and strabismus (Leigh & Zee, 2015). In addition to studying control of eye movements per se, scientific inquiries by computational, cognitive, and motor control neuroscientists often measure eye movements as a critical part of their experimental paradigms. Eye movements are commonly used to probe the mechanisms underlying decision making, learning, reward, attention, memory, and perception along with other methods such as noninvasive imaging of neural activity, transcranial magnetic stimulation, and electrophysiological recording of neural activity. To interpret these studies it is essential to know how the external environment is being presented to the brain. To this end, a complete description of where we are looking and, in turn, what we are seeing requires accurate measures of the position and movements of the globes (actually the retinas) of both eyes around the three rotational axes: horizontal, vertical, and the line of sight (torsional).

Torsional eye movements are particularly important for (a) developing the perception of the orientation of our heads relative to the external environment (Wade & Curthoys, 1997); (b) detecting the orientation of objects in depth (Howard, 1993), which is essential to maintaining a veridical sense of the visual world in three dimensions; (c) analyzing the response of the vestibular system to rotations or tilts of the head (Schmid-Priscoveanu, Straumann, & Kori, 2000); (d) planning corrective surgery in patients who have strabismus (Guyton, 1983, 1995; Kekunnaya, Mendonca, & Sachdeva, 2015); and (e) understanding how the orientation of the globe during fixation is dictated by the fundamental laws of torsion put forth by Listing and Donders (Wong, 2004).

Both invasive contact techniques (e.g., scleral search coils: Collewijn, Van der Steen, Ferman, & Jansen, 1985; Robinson, 1963; contact lenses: Ratliff & Riggs, 1950) and noninvasive, noncontact techniques (e.g., corneal infrared reflection: Cornsweet & Crane, 1973; pupil video tracking: Clarke, Teiwes, & Scherer, 1991; Haslwanter & Moore, 1995; Hatamian & Anderson, 1983) are available to reliably track the globe as it traverses the horizontal and vertical extents of the orbit. But this is not the case for tracking the torsional orientation of the eye. Slippage of contact lenses or search coils is a particular problem for measuring torsion with contact techniques in human subjects (Barlow, 1963; Bergamin, Ramat, Straumann, & Zee, 2004; Straumann, Zee, Solomon, & Kramer, 1996). On the other hand, noninvasive techniques that capture images of the eyes are subject to artifacts related to the lids or the geometry of the globe (Haslwanter & Moore, 1995) and are computationally costly.

Many noncontact techniques for measuring torsion have been developed (Table 1), but none has become an accepted, widely used, noninvasive standard. The reasons include a lack of real-time measurements, lack of an objectively defined zero position, low precision and accuracy, artifacts when the eye is in an eccentric position of gaze, occlusion of the pupil by the eyelids, and unwieldy complex algorithms, some of which require manual intervention. The long list of attempts to develop new methods for measuring torsion reflects not only interest and need but also the associated challenges.

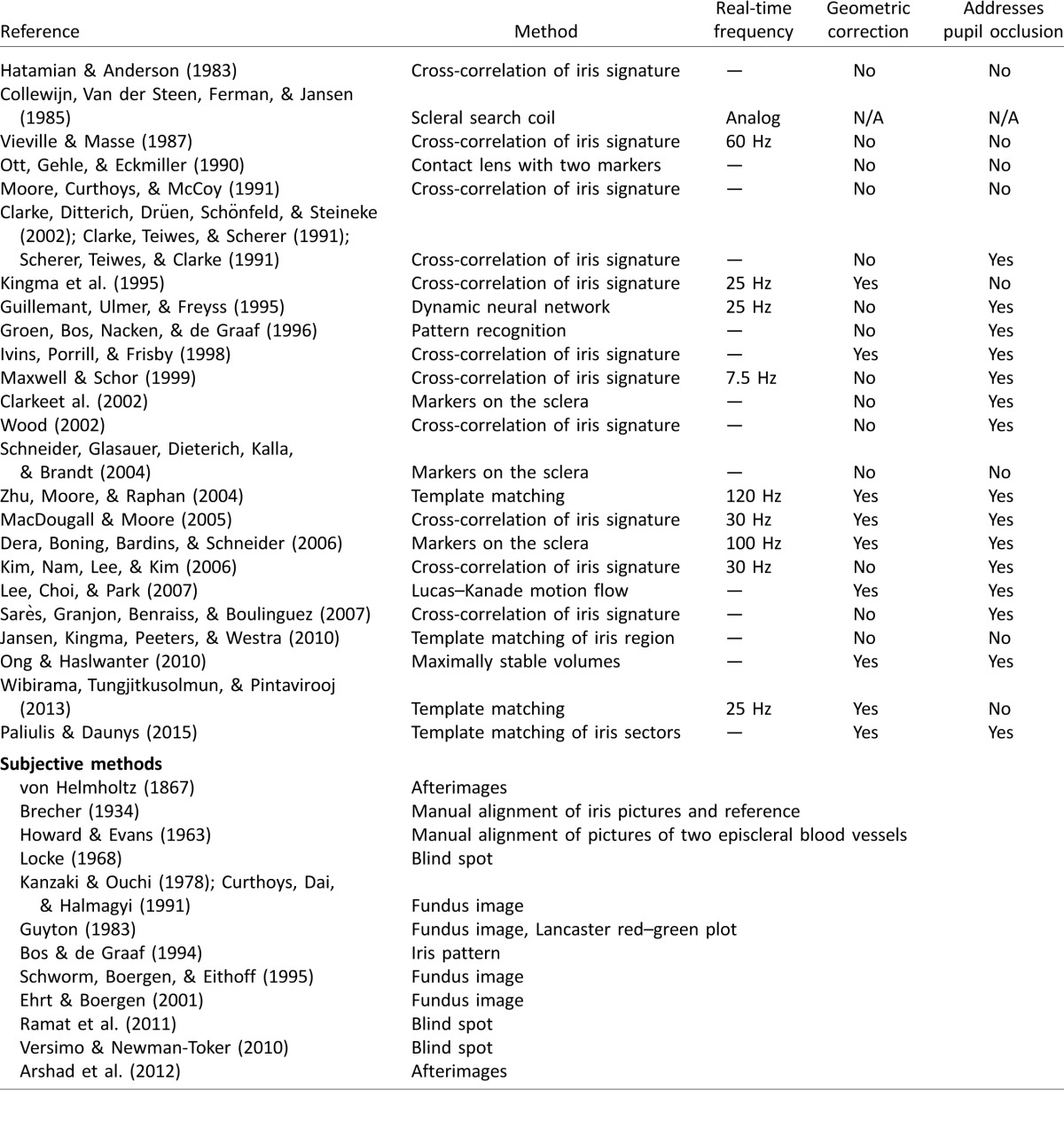

Table 1.

Previous methods for recording torsional eye movements. The first column includes references that describe each method. The second column lists the main algorithm used to calculate torsion. The last three columns specify whether the method can operate in real time and at what frame rate, whether it accounts for the geometry of the eyeball, and whether it attempts to address occlusion of the pupil by the eyelids. The lower part of the table includes methods that rely on subjective evaluation of iris images, fundus images, or afterimages. These methods typically measure only static torsion at a few time points and do not provide continuous recordings of dynamic changes in torsion. Notes: —: information not available; NA: not applicable.

Here we propose a new method for measuring eye movements in three dimensions that takes advantage of new open-source software available for image processing (OpenCV; Bradski, 2000) and advances in the field of iris recognition to improve measurement of torsional eye movements. Those interested in iris recognition have had to solve problems similar to those of recording torsional eye movements (Daugman, 2004; Masek, 2003; Yooyoung, Micheals, Filliben, & Phillips, 2013). Here we describe a method that combines ideas from these two fields and promises to bring measures of torsion to the same standards, reliability, and relative ease that we now have for vertical and horizontal eye movements. This step forward will give us a complete and reliable description of where the eyes are pointing and, most important, what the brain is seeing.

In order to evaluate the precision and accuracy of our method and to demonstrate its potential in studying multiple neurobiological questions, we used simulations, torsion recordings under different paradigms, and comparisons with the current gold standard for measuring eye movements: the search coil. Even though we have performed all the recordings using a commercial head-mounted video goggle system (RealEyes xDVR, Micromedical Technologies Inc., Chatham, IL), our method can work with any other video system that can provide images of the iris with enough resolution (about 150 pixels in diameter). Quality of the recordings may vary from system to system depending on parameters such as frame rate, orientation of the camera relative to the eye, illumination sources, and reflections.

Method

We used the RealEyes xDVR system manufactured by Micromedical Technologies Inc. This system uses two cameras (Firefly MV, PointGrey Research Inc., Richmond, BC, Canada) mounted on goggles to capture infrared images of each eye. We set up the system to capture images at a rate of 100 frames per second with a resolution of 400 by 300 pixels. All traces in figures show raw, unfiltered data.

The software was written in the C# language using Microsoft Visual Studio 2013 and ran on a laptop with an Intel i7-350M central processing unit. We used the open-source libraries Emgu CV and OpenCV and the libraries provided by PointGrey (FlyCapture) to control the cameras and to capture and process the images. The software runs seven concurrent threads: one for user interface and main control, one for image capture, three for image processing, one for video recording, and one for data recording.

Eye movements were recorded simultaneously using scleral search coil and video oculography (VOG) techniques. Subjects wore a modified version of a scleral search coil (Bergamin et al., 2004) in one eye only while also wearing the video goggles (Micromedical Technologies). The head was immobilized with a bite bar fashioned from dental impression material.

Coil movements were recorded around all three axes of rotation (horizontal, vertical, and torsional) using the magnetic field search coil method with dual-coil annulus. The annulus was placed on one eye after administration of a topical anesthetic (Alcaine, which is proparacaine hydrochloride 0.5%). The output signals were filtered with a bandwidth of 0 to 90 Hz and sampled at 1000 Hz with 12-bit resolution. System noise was limited to 0.1°. Data were stored on a disc for later offline analysis using Matlab (Mathworks Inc., Natick, MA). Further details of the calibration and recording procedures can be found in Bergamin et al. (2004). All procedures were performed according to protocols approved by the Johns Hopkins institutional review board committees.

Results

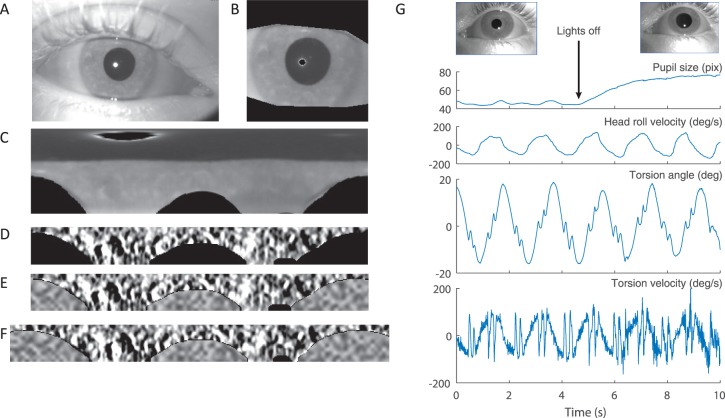

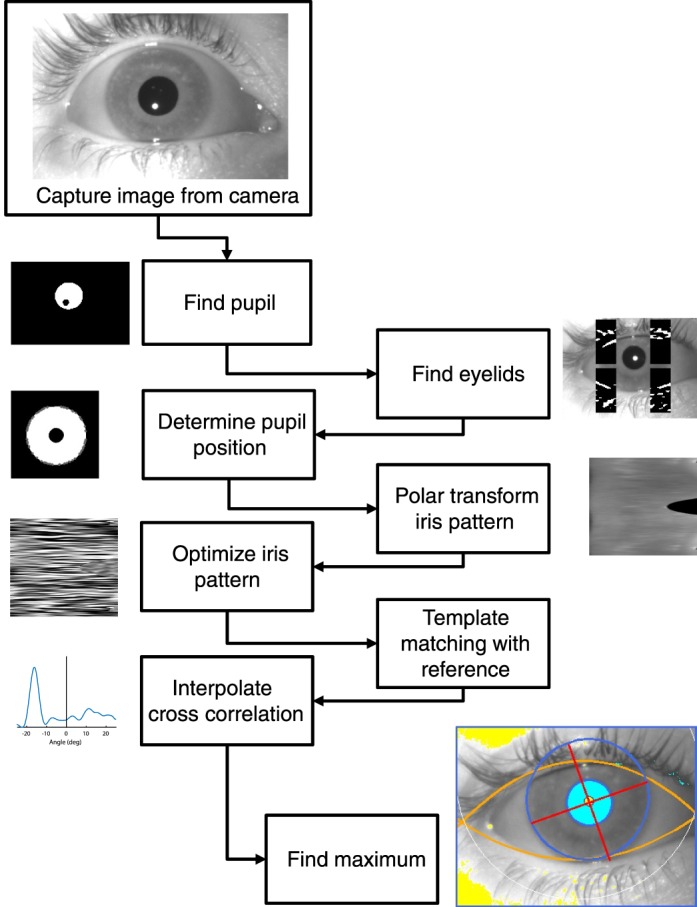

Figure 1 shows the general structure of our method. We measure horizontal and vertical eye movements by tracking the center of the pupil and torsional eye movements by quantifying the rotation of the iris pattern around the center of the pupil. First, we get a rough estimate of the location of the pupil using an image with reduced resolution. This approximation defines the region of interest where the subsequent processing steps occur. Next, we detect the eyelids and use them to identify the parts of the pupil contour and the iris not covered by the eyelids or eyelashes. Then, we fit an ellipse to the visible pupil contour to get a precise measurement of the horizontal and vertical position of the pupil center. Next, we select the iris pattern, apply a polar transformation (including geometric correction for eccentric eye positions), optimize the image to enhance the iris features, and mask the parts covered by the eyelids. To determine the torsion angle we use the template matching method implemented in OpenCV to compare the current iris pattern with a reference image. Thus, all measurements of torsion are relative to the orientation of the eye when the reference image is obtained. The method produces real-time recordings at 100 Hz of the horizontal, vertical, and torsional positions of both eyes. Before executing the automatic algorithm, the operator must specify a few parameters: the two thresholds that are used to identify the darkest (pupil) and brightest (corneal reflections) pixels in the image, and the iris radius. All parameters can be selected easily using an interactive user interface by observing them directly overlaid on the images of the eye.

Figure 1.

General structure of the method.

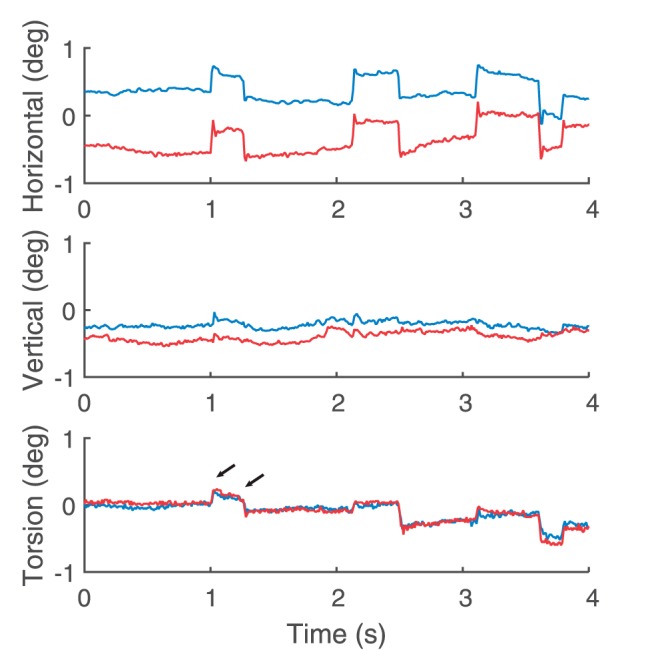

To demonstrate the precision of this method we show in Figure 2 an example of a recording during attempted fixation of a small laser target. Small saccades of less than 1° (microsaccades) are seen in the horizontal trace. The noise level in the torsion measurement is less than 0.1°, and the small torsional component of the microsaccades can be appreciated (arrows).

Figure 2.

Example of eye movements during attempted fixation of a small target. Horizontal, vertical, and torsional eye position during sustained fixation. Blue and red traces correspond to left eye and right eye, respectively. Note the microsaccades in the horizontal traces and the small torsion (arrows) associated with them.

Eyelid tracking

The eyelids or eyelashes may naturally cover parts of the iris or the pupil. These occlusions add biases and noise to recordings of eye position including torsion. To address occlusion of the iris, previous methods (Clarke et al., 1991; Zhu, Moore, & Raphan, 2004) have relied on the operator selecting a portion of the iris for analysis that they assume to be continuously unobstructed. However, any fluctuations in the position of the eyelid during the recording, which are not uncommon, can cover some of the previously selected area and contaminate the measurement of torsion. To address occlusion of the pupil by the eyelids, some methods fit an ellipse to the visible parts of the pupil. Others have applied pilocarpine nitrate to constrict the pupils (Mezey, Curthoys, Burgess, Goonetilleke, & MacDougall, 2004). In our method we automatically track the position of the eyelids and ignore the parts of the pupil contour or the iris that are covered.

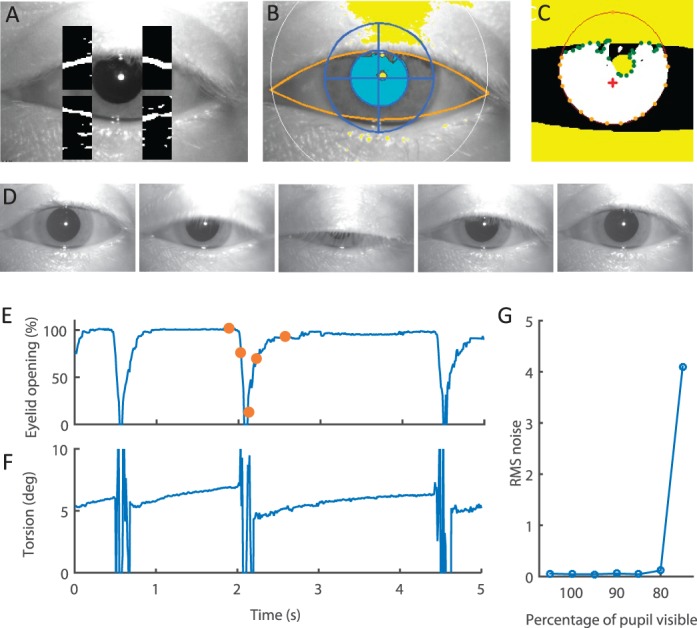

Our method is based on the Hough transform to detect portions of the eyelid edges that approximate a small straight line. First, we filter the image and enhance the lines present in the image using an edge detection filter. Following a similar method developed for iris recognition (Yooyoung et al., 2013), we define four regions of interest around the pupil (Figure 3A) and detect one line in each region that corresponds with a segment of the eyelid edge. Next, to approximate the curvature of the eyelid, we fit two parabolas (one for the lower lid and one for the upper lid) to the segments of the eyelid edges (Figure 3B). We consider the area between the two parabolas to be the visible part of the eye and mask or ignore anything falling outside of it.
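The parabola-fitting and masking step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `fit_parabola` and `is_visible` are hypothetical, the endpoints of the detected eyelid segments are assumed to be already available as (x, y) points relative to the pupil center, and image rows are assumed to grow downward.

```python
def fit_parabola(points):
    """Least-squares fit of y = a*x**2 + b*x + c to a list of (x, y) points."""
    # Sums needed for the 3x3 normal equations.
    s = [sum(x ** k for x, _ in points) for k in range(5)]      # sum of x^0 .. x^4
    t = [sum(y * x ** k for x, y in points) for k in range(3)]  # sum of y*x^0 .. y*x^2
    m = [
        [s[4], s[3], s[2], t[2]],
        [s[3], s[2], s[1], t[1]],
        [s[2], s[1], s[0], t[0]],
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(3):
            if row != col:
                f = m[row][col] / m[col][col]
                m[row] = [v - f * w for v, w in zip(m[row], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))  # (a, b, c)


def is_visible(x, y, upper, lower):
    """True if pixel (x, y) lies between the upper and lower eyelid parabolas.

    Rows grow downward, so the upper eyelid has the smaller y value.
    """
    def evaluate(p):
        return p[0] * x * x + p[1] * x + p[2]
    return evaluate(upper) <= y <= evaluate(lower)


# Hypothetical segment endpoints (pixels, relative to the pupil center).
upper_lid = fit_parabola([(-100, -40.0), (-60, -52.8), (60, -52.8), (100, -40.0)])
lower_lid = fit_parabola([(-100, 50.0), (-60, 62.8), (60, 62.8), (100, 50.0)])
```

Pixels for which `is_visible` returns `False` would be masked before pupil and iris processing.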

Figure 3.

Eyelid detection. (A) Sample image with the four image sectors containing the eyelid segments. The images within the sector have been filtered with an edge detector. The pixels that are more likely to be part of an edge are labeled as white, and the rest are labeled as black. (B) Result of fitting a parabola (shown in orange) to the eyelid segments. Only pixels within the two parabolas will be considered for tracking the pupil and the iris. (C) Representation of the pupil detection and contour analysis. Pixels in white are pixels above the set threshold. Pixels in yellow are the pixels masked by the eyelids or the reflection of the light-emitting diode on the cornea. Orange dots indicate the smoothed contour of the pupil to fit the ellipse, and green dots correspond with the parts of the contour eliminated due to eyelid or reflection interference. The red cross indicates the centroid of the contour. Finally, the red line shows the fitted ellipse. (D) Sequence of frames during a blink. (E) Example recording of eyelid movements represented by percentage eyelid opening. Eyelid opening is measured as the average distance between the top eyelid segments and the bottom eyelid segments. Orange dots mark the points corresponding with the image frames in panel D. (F) Raw torsion measurement corresponding with panel E. Large spikes correspond with the periods of time the eye is closed, making tracking impossible. Note how quickly stable torsion tracking resumes as the eye opens, even before the eyelids completely open again. (G) Root-mean-square noise in the torsional eye position recordings as a function of percentage of visible pupil.

Pupil tracking

Most video-based methods for tracking eye movements use the pupil to track horizontal and vertical eye movements. Generally, vertical eye movement recordings are less accurate and noisier than horizontal eye movement recordings. This is due to the interference of the eyelids and eyelashes that often cover parts of the upper and lower edges of the pupil. Most algorithms for pupil tracking rely on a threshold to find the darkest pixels in the image. Some methods then calculate the center of the pupil using the center of mass of the dark pixels; others fit an ellipse to the contour defined by the dark pixels. The ellipse-fitting method helps eliminate biases due to partial pupil occlusion. Here we also implemented an ellipse-fitting algorithm to determine the horizontal and vertical eye positions accurately. The most challenging problem in this method is determining which parts of the contour actually correspond with the contour of the pupil and which parts are the edges of the eyelid, eyelashes, shadows, or corneal reflections (Figure 3B). One approach is to rely on the contour of the pupil and analyze properties such as changes in the curvature to eliminate the points that do not fit into the contour of a smooth elliptical pupil (Zhu, Moore, & Raphan, 1999). Here we opted to also add information about the position of the eyelid to adjust the contour reliably. First we smooth the contour by a polynomial approximation (implemented in cvApproxPoly in OpenCV). Then we eliminate points with a high change in curvature and points covered by the eyelid or the corneal reflections. We define the curvature of a contour point as the angle formed between that point, the next contiguous point, and the centroid of the contour. We eliminate a point when the angle between that point and the next one differs by more than 20% from the angle between that point and the previous one (i.e., when the contour is not smooth at that point). Finally, to determine the center of the pupil and its size, we fit an ellipse to the remaining points of the contour using the least squares method (implemented in OpenCV as cvFitEllipse2).
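One possible reading of the curvature criterion above is sketched below. It is an interpretation rather than the authors' code: the "angle" of a contour point is taken to be the angle subtended at the contour centroid by that point and its neighbor, and the helper names (`subtended_angle`, `smooth_points`) are hypothetical. The polynomial smoothing, eyelid masking, and ellipse fit are omitted.

```python
import math


def centroid(contour):
    n = len(contour)
    return (sum(x for x, _ in contour) / n, sum(y for _, y in contour) / n)


def subtended_angle(p, q, c):
    """Unsigned angle (radians) at c between the rays c->p and c->q."""
    a1 = math.atan2(p[1] - c[1], p[0] - c[0])
    a2 = math.atan2(q[1] - c[1], q[0] - c[0])
    d = abs(a1 - a2) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def smooth_points(contour, tolerance=0.20):
    """Keep points whose angle to the next point is within `tolerance`
    (as a fraction) of the angle to the previous point."""
    c = centroid(contour)
    n = len(contour)
    kept = []
    for i in range(n):
        prev_a = subtended_angle(contour[i - 1], contour[i], c)
        next_a = subtended_angle(contour[i], contour[(i + 1) % n], c)
        if abs(next_a - prev_a) <= tolerance * prev_a:
            kept.append(contour[i])
    return kept


# Synthetic closed contour: 36 points on a 40 x 35 pixel ellipse.
clean = [(40 * math.cos(math.radians(10 * i)), 35 * math.sin(math.radians(10 * i)))
         for i in range(36)]
```

Note that with this reading a displaced point also causes its two immediate neighbors to be rejected, because their angles to the displaced point are irregular as well.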

Accurate detection of the pupil is most often hampered by the upper eyelid, which is more mobile. If the pupil had a fixed size, the bottom edge would carry all the necessary information to measure vertical eye position. However, this is not the case as the pupil can continuously change size, causing movements of the lower edge that might be taken as movements of the globe. Here we use the information from the lower edge but also account for possible changes in the pupil size. We measure the current size of the pupil using the left and right edges and assume that the relationship between its height and width does not change when the pupil dilates or constricts. To implement this constraint we added an extra point to the top part of the contour in place of the occluded points (Figure 3C) at the location that would correspond with the current width of the pupil and the current position of the lower edge. Adding this extra point reduces the level of noise when the pupil is partially occluded by making it less likely to choose ellipses with unrealistic shapes that do not pass near that point (i.e., very large, elongated ellipses). A solution that is conceptually similar but computationally more costly would be to implement a new ellipse-fitting method in which the error term depends not only on the distance between the points of the pupil contour and the ellipse but also on the aspect ratio of the pupil and its similarity to the aspect ratio of a fully visible pupil obtained during calibration. Our simpler approach seems adequate, with a 30% reduction in the level of noise in some recordings in which the pupil remained partially occluded. Finally, we measured the amount of noise (or error) introduced by our method as a function of amount of pupil occlusion. We artificially removed points of the pupil contour of an otherwise completely visible pupil to simulate controlled amounts of pupil occlusion. We show that if more than half of the pupil is visible the algorithm works well but that if less than half of the pupil is visible the measurements become noisy and unreliable (Figure 3G).
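The placement of the extra contour point can be illustrated with a small worked example. This is a sketch under assumptions: the function name and the `height_to_width` parameter are hypothetical, the ratio is assumed to come from a fully visible pupil, and image rows are assumed to grow downward so that the top of the pupil has the smaller y value.

```python
def synthetic_top_point(left_x, right_x, bottom_y, height_to_width=1.0):
    """Estimate the occluded top of the pupil from its visible edges.

    The pupil width is measured between the left and right edges, the height
    is assumed to be `height_to_width` times that width, and the top point is
    placed that far above the lower edge, centered horizontally.
    """
    width = right_x - left_x
    center_x = (left_x + right_x) / 2.0
    return (center_x, bottom_y - height_to_width * width)


# A pupil spanning x = 180..240 with its lower edge at y = 190 and a circular
# appearance (ratio 1.0) would get its extra point 60 pixels above the lower edge.
top = synthetic_top_point(180, 240, 190)
```

The returned point would be appended to the visible contour points before the ellipse fit.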

Iris pattern optimization and torsion calculation

Our method for tracking torsion is based on measuring the rotation of the iris pattern around the pupil center by comparing each video frame with a reference frame. First we select the area of the image around the center of the pupil that contains the entire iris and then mask the pixels that are covered by the eyelids and corneal reflections with zero values (black pixels in Figure 4B; see previous sections describing the estimation of the pupil center and eyelid tracking). Then we apply a polar transformation to the image to transform the circular pattern of the iris into a rectangular configuration (Figure 4C). This facilitates the torsion calculation because rotations around the pupil center become translations in one dimension. Since the relevant information about the torsion is in only one dimension, we can optimize the image by low-pass filtering the radial dimension and high-pass filtering the tangential dimension (Figure 4D) using the Sobel function in OpenCV. The low-pass filtering reduces the noise in the image without reducing the information available for calculating torsion, and the high-pass filtering eliminates the effect of changes in overall luminance in the image and enhances the small but salient iris features. This filtering is comparable to the Gabor filtering typically used in iris recognition (Daugman, 2004). Finally, we replace the masked pixels with random noise to eliminate the interference of eyelids, eyelashes, and corneal reflections (Figure 4E) when calculating the cross-correlation.
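The polar transformation, and the way it turns a rotation into a horizontal shift, can be sketched in a few lines. This is an illustration only, not the authors' code: it uses nearest-neighbor sampling on a plain list-of-lists image, it omits masking, filtering, and the geometric correction, and the names `unwrap_iris` and `make_eye` are hypothetical. The default 360 × 60 output size follows the pattern size given in the paper.

```python
import math


def unwrap_iris(image, cx, cy, pupil_r, iris_r, n_angles=360, n_radii=60):
    """Sample the iris annulus into n_radii rows by n_angles columns.

    Row 0 lies on the pupil edge and the last row on the outer iris edge;
    column a corresponds to an angle of a * 360 / n_angles degrees.
    """
    rows = []
    for j in range(n_radii):
        rad = pupil_r + (iris_r - pupil_r) * j / (n_radii - 1)
        row = []
        for a in range(n_angles):
            theta = 2 * math.pi * a / n_angles
            x = int(round(cx + rad * math.cos(theta)))
            y = int(round(cy + rad * math.sin(theta)))
            row.append(image[y][x])
        rows.append(row)
    return rows


def make_eye(rotation_deg, size=201, center=100):
    """Synthetic test image whose gray level encodes the angle around the
    center, rotated by rotation_deg."""
    img = []
    for y in range(size):
        row = []
        for x in range(size):
            ang = math.degrees(math.atan2(y - center, x - center))
            row.append(int((ang - rotation_deg) % 360))
        img.append(row)
    return img


flat = unwrap_iris(make_eye(0), 100, 100, 20, 80)
rotated = unwrap_iris(make_eye(10), 100, 100, 20, 80)
```

In this synthetic case each column of `rotated` approximately matches the column of `flat` ten positions to its left, which is the translation that the subsequent matching step looks for.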

Figure 4.

Iris pattern. (A) Example of image of the eye. (B) Region of interest containing the iris. Eyelids and reflections are masked by black. (C) Polar transformation of the image in panel B. Top corresponds with the center of the pupil, and bottom corresponds with the outer edge of the iris. (D) Iris band extracted from panel C. The iris pattern is filtered to optimize the contrast of the features. (E) Same as panel D but with noise added to replace the masked regions. (F) Reference iris pattern. Note how the reference image expands to both sides, repeating a portion of the pattern. (G) Recording of torsional eye movements during head rolling while the pupil changes size due to changes in illumination. Top images show images of the constricted and dilated pupils in the light and dark conditions.

The iris pattern contains pixels between the edge of the pupil and the outer edge of the iris. Because the pupil size can change, it is necessary to apply a transformation to compare the iris pattern obtained at different pupil sizes. We opted for simply scaling the pattern to a fixed size of 60 pixels regardless of the size of the pupil or iris. Thus, our iris pattern will always be 360 pixels long and 60 pixels wide regardless of the pupil size (Figure 4E). This resizing is also important to ensure that the processing time does not change when the properties of the image (e.g., resolution, iris size, pupil size) change so that real-time processing is always possible at 100 frames per second. Figure 4G shows how the method can record torsional eye movements even during large changes in the pupil size. Here we first started the recording and obtained the reference image while the lights were on and the pupils were small. Then we turned off the lights and continued the recording while the pupils dilated. As shown, only a small increase in noise in the velocity recording (unfiltered) can be appreciated. Similar results were obtained using a reference from dilated pupils to record torsional eye movements when the pupils were constricted.

To calculate the torsion we use a template matching technique based on the fast Fourier transform as implemented in the OpenCV library (matchTemplate function). The template matching provides a measure of similarity of two images for different overlapping positions. The template matching method is conceptually equivalent to shifting the current image one pixel at a time relative to the reference image and measuring their similarity. Because the images have a total of 360 pixels, the resolution obtained with the template matching is only 1°. To obtain subpixel resolution we interpolated the cross-correlation by an order of 50 to obtain a resolution of 0.02°. This value sets the minimum amount of torsion that can be resolved and must not be confused with the level of noise or accuracy in the recordings.
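The resolution arithmetic is straightforward: 360 columns over 360° give 1° per pixel, and refining by a factor of 50 gives 1°/50 = 0.02°. The sketch below illustrates one way to refine a correlation peak on such a grid. The text does not specify the interpolation kernel, so a parabola through the peak sample and its two neighbors is assumed here in its place; the function name `refine_peak` is hypothetical.

```python
def refine_peak(corr, shifts, factor=50):
    """Locate the maximum of a sampled correlation curve on a grid that is
    `factor` times finer than the sampling step.

    corr   -- similarity values, one per integer shift
    shifts -- the shift (in degrees) of each sample, evenly spaced
    """
    # Coarse peak, restricted to interior samples so both neighbors exist.
    i = max(range(1, len(corr) - 1), key=lambda k: corr[k])
    y0, y1, y2 = corr[i - 1], corr[i], corr[i + 1]
    # Parabola y(d) = y1 + b*d + a*d*d with y(-1) = y0 and y(+1) = y2.
    a = (y0 + y2) / 2.0 - y1
    b = (y2 - y0) / 2.0
    # Evaluate the parabola on the fine grid d = k / factor, k = -factor..factor.
    best = max(range(-factor, factor + 1),
               key=lambda k: y1 + b * (k / factor) + a * (k / factor) ** 2)
    step = shifts[1] - shifts[0]
    return shifts[i] + step * best / factor


# Synthetic correlation curve peaking at 3.26 degrees, sampled every 1 degree.
shifts = list(range(-25, 26))
corr = [1.0 - 0.01 * (s - 3.26) ** 2 for s in shifts]
torsion = refine_peak(corr, shifts)
```

The returned value is always a multiple of 0.02°, which is the resolution limit described above and is independent of the noise in the recording.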

We considered a maximum torsion value of 25° (range of 50°)—that is, the angle of rotation between the current image and the reference image must not be more than 25°. This limit is necessary to constrain the computational cost of the template matching operation, and it is large enough to easily include the natural range of torsional eye movements (Collewijn et al., 1985). To implement this limit we extended the reference iris pattern so that it contains 410° instead of 360°—that is, the reference pattern is redundant by repeating the angles between −25 and +25 (Figure 4F). Applying this circular redundancy allows the use of the entire 360° iris pattern when shifting the current image over the reference image to measure their similarity.
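The circular extension of the reference can be illustrated with a one-dimensional stand-in for the iris pattern. This is a simplified sketch, not the authors' implementation: the paper matches two-dimensional patterns with OpenCV's matchTemplate, whereas this example slides a single row of 360 values and scores each offset with a plain sum of products. The function names and the sign convention of the returned angle are assumptions.

```python
import random


def extend_reference(ref, max_torsion=25):
    """Repeat the last and first `max_torsion` columns on either side, so a
    360-column reference becomes 360 + 2 * max_torsion = 410 columns."""
    return ref[-max_torsion:] + ref + ref[:max_torsion]


def integer_torsion(current, ref, max_torsion=25):
    """Slide the full current pattern over the extended reference and return
    the whole-degree shift (within +/- max_torsion) with the highest score."""
    ext = extend_reference(ref, max_torsion)
    scores = [
        sum(c * ext[offset + a] for a, c in enumerate(current))
        for offset in range(2 * max_torsion + 1)
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return max_torsion - best


def rotate_pattern(ref, degrees):
    """Simulate torsion: the feature at column a - degrees moves to column a."""
    n = len(ref)
    return [ref[(a - degrees) % n] for a in range(n)]


rng = random.Random(0)
reference = [rng.uniform(-1.0, 1.0) for _ in range(360)]
```

Because the reference is padded rather than the current pattern being cropped, all 360 columns of the current pattern contribute to the score at each of the 51 candidate offsets.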

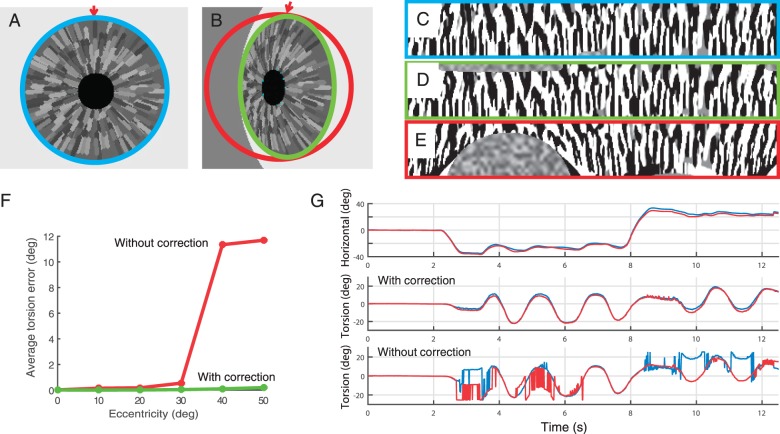

Geometric correction for eccentric gaze

The image of the eye captured by the camera results from the projection of an approximately spherical globe onto a plane. Thus, in an eccentric gaze position, the camera does not have a straight view of the pupil and the iris, so they both can appear distorted. In this case a perfectly circular iris would appear to be approximately elliptical (Figure 5A, B). The distortion due to the geometry of the eye causes two problems. First, the distorted image of the iris cannot be directly compared with the reference image to determine the relative rotation between them. This will affect mainly the precision or noise level of the recordings, making it impossible to track beyond some eccentricity. Second, the torsional rotation needs to be put in the context of horizontal and vertical movements to completely describe the rotation of the eye in three-dimensional coordinates. This problem will affect the accuracy of the recordings. Here we opted to encode the eye movements in Hess coordinates in which both the horizontal and vertical axes are head (camera) fixed, and thus our calculations intuitively reflect the coordinates of the pupil as seen by the camera. Conversion to any other coordinate system, however, would be possible.

Figure 5.

Geometric correction. (A) Simulation of the eye looking straight ahead. (B) Simulation of the eye looking 50° to the side. (C) Iris pattern corresponding with the eye looking straight ahead. (D) Iris pattern corresponding with the eye looking 50° to the side applying the geometric correction. (E) Iris pattern corresponding with the eye looking 50° to the side without applying the geometric correction (note the distortion on the pattern). (F) Average torsion error as a function of eccentricity in simulated eye movements calculated with and without geometric correction. Note that even though the degradation of the signal with eccentricity is gradual, the accuracy breaks down at about 35° when other peaks in the cross-correlation reach a similar height as the main peak. (G) Example of torsion in response to head rolling (left eye in blue and right eye in red) recorded at an eccentric horizontal gaze position with geometric correction (middle) and without geometric correction (bottom).

Many factors can introduce geometrical distortions in the image of the iris seen by the camera (Haslwanter & Moore, 1995): distortion due to the shape of the eye, translations of the eyeball, the shape of the cornea, or the angle between the optical axis and the visual axis. Simulations performed by Haslwanter and Moore (1995) showed that, among all the potential sources of error, the geometric distortion in the shape and pattern of the iris due to the spherical shape of the eye is by far the most important one. Correcting for the geometry of the eye is essential to obtaining precise and accurate measurements of torsion at eccentric positions.

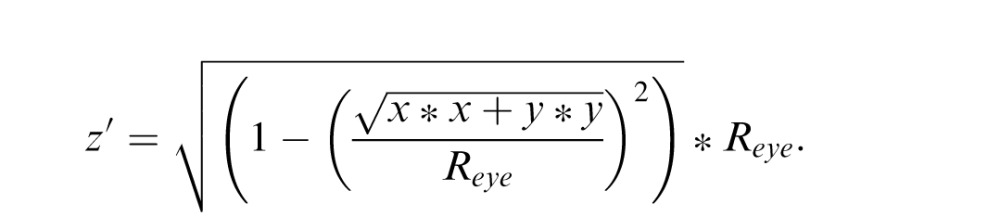

To correct for the geometrical distortion and account for the eccentric position of the pupil and the iris, we modified the polar transformation that converts the iris annulus into a rectangle (Figure 5A, B). If the eye is looking straight at the camera, the polar transformation consists of mapping pixels in a circle to a straight line, with one coordinate corresponding to the angular position of the pixel within the iris and the other coordinate corresponding to the distance from the edge of the pupil to the edge of the iris. At an eccentric position this mapping needs to change. To achieve the proper mapping between the distorted image and the reference image, we first determine the center of the pupil and use it to calculate the horizontal and vertical rotation of the eye globe.

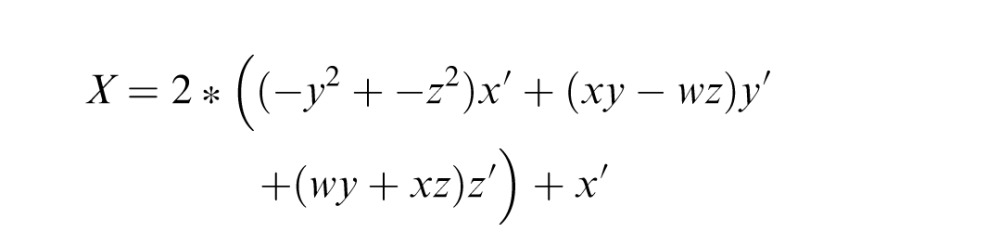

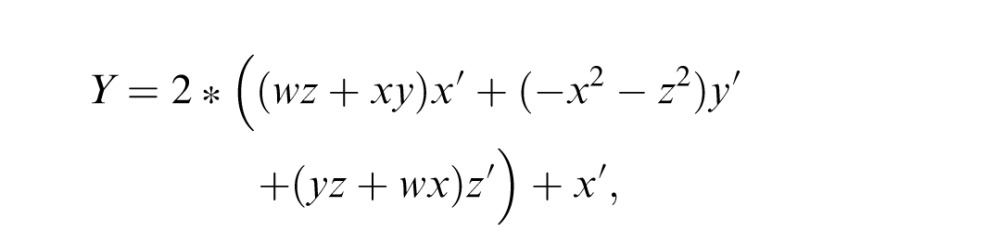

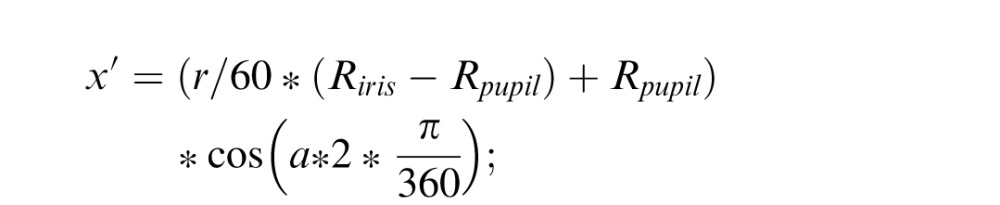

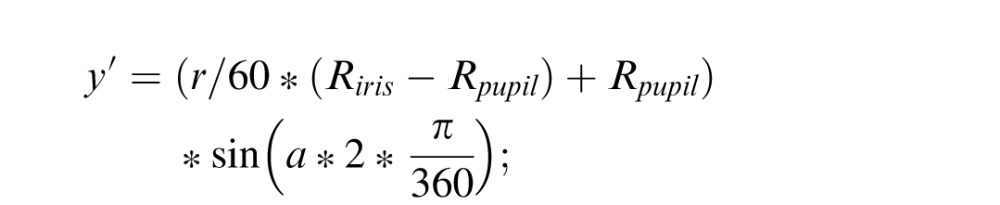

For remapping we use the OpenCV function cvRemap, which performs an arbitrary geometric transformation of an image given the correspondence of the pixel coordinates between the original image and the transformed image. Thus, we need to determine the positions of the pixels in the image with the distorted iris that correspond to the iris pattern in polar coordinates. Let a and r represent the coordinates of the pixels in the iris pattern image, which correspond with the angle (from 0 to 359) and the radial distance from the edge of the pupil to the edge of the iris (from 0 to 59). After estimating the position of the pupil, we define the quaternion that describes a rotation of the eye without torsion to the current eye position. This quaternion then gives the coordinates of the pixels on the raw image that correspond with the coordinates (a, r) of the pixels in the iris pattern image.

[Equations for this mapping and their accompanying definitions were not preserved in the extracted text.]
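To illustrate the kind of mapping described above, the following sketch rotates points of a frontal iris by a torsion-free quaternion and projects them onto the image. It is an independent illustration under simplifying assumptions, not the authors' equations: the eye is a sphere of radius `eye_radius` pixels, the projection is orthographic, "without torsion" is taken in the shortest-arc sense (which may differ from the coordinate convention used in the paper), and all function and parameter names are hypothetical.

```python
import math


def quat_mul(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def rotate(q, v):
    """Rotate the 3-D vector v by the unit quaternion q."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), (w, -x, -y, -z))
    return (rx, ry, rz)


def gaze_quaternion(gx, gy):
    """Shortest-arc rotation taking straight ahead (0, 0, 1) to the unit gaze
    direction whose image-plane components are (gx, gy)."""
    norm = math.hypot(gx, gy)
    if norm == 0.0:
        return (1.0, 0.0, 0.0, 0.0)
    half = math.asin(min(norm, 1.0)) / 2.0  # half the angle from straight ahead
    s = math.sin(half) / norm
    return (math.cos(half), -gy * s, gx * s, 0.0)  # rotation axis is (-gy, gx, 0)


def iris_to_image(a, r, q, eye_center, eye_radius, pupil_r, iris_r, n_radii=60):
    """Raw-image coordinates of iris-pattern pixel (a, r) for eye rotation q."""
    rho = pupil_r + (iris_r - pupil_r) * r / (n_radii - 1)
    theta = math.radians(a)
    point = (rho * math.cos(theta), rho * math.sin(theta),
             math.sqrt(eye_radius ** 2 - rho ** 2))
    x, y, _ = rotate(q, point)
    return (eye_center[0] + x, eye_center[1] + y)  # orthographic projection


# Gaze 30 degrees to the side: the iris outline is foreshortened horizontally.
q30 = gaze_quaternion(math.sin(math.radians(30)), 0.0)
args = (q30, (0.0, 0.0), 100.0, 20.0, 50.0)
width = iris_to_image(0, 59, *args)[0] - iris_to_image(180, 59, *args)[0]
height = iris_to_image(90, 59, *args)[1] - iris_to_image(270, 59, *args)[1]
```

Under these assumptions the outer iris edge keeps its full height of 100 pixels while its width shrinks by the cosine of the gaze angle, which is the circular-to-elliptical distortion that the remapping has to undo.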

To evaluate the geometric transformation we used an image of a simulated spherical eyeball. We compared the error in torsional eye position calculated with and without a geometric correction at different eccentric gaze positions. Figure 5A and B shows the images of the simulated iris at two different eye positions, and Figure 5C through E shows the distortion in the iris pattern caused by the geometry of the eye at an eccentric gaze position. It is important to note that not only is the overall shape of the iris distorted (i.e., from circular to elliptical), but the individual features of the iris and their relative angles are also altered (Figure 5A, B, arrows). Using the geometric correction, the torsional position was accurate up to 50° of eccentricity. Without the geometric correction, tracking breaks down at 30° of eccentricity (Figure 5F). The simulations are a simplified case, so those values might not correspond with the ranges of accuracy for a real eye, but they show the need for the correction and its effectiveness. In Figure 5G, we also show the results of tracking real eyes during head rolling while maintaining an eccentric gaze position to the left or to the right. Using the geometric correction the torsion measurement remains stable for positions of more than 20°; without the geometric correction, tracking becomes unstable at those positions.

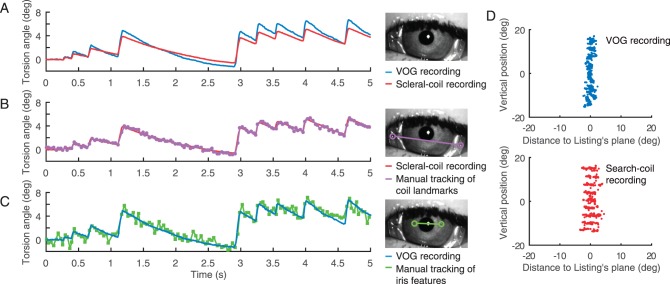

Simultaneous recordings with scleral coil and VOG

The gold standard method for recording eye movements is the scleral search coil (Collewijn et al., 1985; Robinson, 1963), which, for use in humans, is embedded in a silastic annulus that adheres to the eye. However, the annulus can slip on the eye, introducing artifactual movement or masking real movement, especially for torsion (Straumann et al., 1996). Quantitative measurements and analysis of slippage are scarce, though Van Rijn, Van Der Steen, and Collewijn (1994) pointed out the possibility of a long-term slippage of the coil (based on what seemed to be a physiologically unrealistic drift, between 2° and 4°, in the difference between the torsional positions of the two eyes). Slippage has typically been considered to occur during blinks (as the eyelids may move the exiting wire of the coil). Many studies have attempted to account for the slippage using different methods for postprocessing the data (Minken & Gisbergen, 1994; Steffen, Walker, & Zee, 2000; Straumann et al., 1996), while others have modified the coil design to try to minimize slippage (Bergamin et al., 2004). However, those methods relied on assumptions about slippage and not direct measurements.

Here we simultaneously measured torsional eye movements using the scleral annulus search coil method and VOG. Subjects wore a modified coil developed previously to reduce slippage (Bergamin et al., 2004). For a direct comparison, we recorded torsional eye movements induced by a torsional optokinetic stimulus while subjects looked straight ahead, to avoid confounding factors such as head movements or eccentric gaze positions. Figure 6A shows that the search coil recordings systematically underestimated the torsional movements compared with the VOG recording. We did not find a similar underestimation for the horizontal and vertical eye movements. Both slow phases and quick phases of the torsional nystagmus recorded with the coil show lower velocities and proportionally smaller amplitudes, only about 70% of the values from the VOG method.

Figure 6.

Simultaneous recordings of torsional eye movements with the VOG method and the scleral annulus with an embedded search coil. (A) Comparison of recordings during torsional optokinetic nystagmus (OKN) obtained simultaneously with the VOG method and the search coil. Note the coil visible in the video image. (B) Comparison of coil recordings with manual tracking of coil landmarks in the video images. (C) Comparison of the VOG automatic recording with the manual tracking of iris features. (D) Comparison of thickness of Listing's plane obtained with VOG and coil methods.

Since an error in the calibration of the coil or the VOG system could account for the difference, we directly compared the search coil recordings with the rotation seen in the video images. Looking at each video frame, we manually tracked several landmarks of the coil and the iris to provide independent measures of the torsional movement of the eye and the coil. During the manual tracking the observer did not have access to, and could not be biased by, the measurements obtained by the other techniques. Figure 6B shows the rotation of the coil as measured with the voltage induced by the magnetic field and by visually tracking the coil in the video. Both recordings match perfectly, eliminating the possibility of erroneous calibration of the coil. Next, we repeated the same analysis for the iris. Figure 6C shows the rotation of the iris as measured by the automatic VOG system and by visually tracking the iris landmarks in the video images. Both recordings also match. These recordings confirm that the coil may not be firmly attached to the eye and that it can misrepresent the movements of the eye, although the degree of adhesion might vary across subjects. Future studies can address this issue.

As a second test for comparing VOG and coil, we measured the dispersion of the fixation points around Listing's plane (Figure 6D; see Wong, 2004, for a review). The subject looked at fixed targets within ±20° horizontally and ±15° vertically. The so-called thickness of the plane, or average distance from the three-dimensional eye positions to their best-fitting plane (Listing's plane), is larger for the data recorded with the coil system, suggesting less accurate measurements. This is also likely due to the slippage of the coil on the eye.
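The thickness measure can be sketched as follows. This is an illustrative computation under stated assumptions, not the analysis code used for Figure 6D: eye positions are given as hypothetical (horizontal, vertical, torsional) triplets, the plane is fitted by ordinary least squares on the torsional component, and thickness is taken as the mean perpendicular distance to that plane. All data in the example are synthetic.

```python
import math

def solve3(A, y):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, y)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / M[i][i]
    return x

def fit_plane(points):
    """Least-squares fit of t = a + b*h + c*v to (h, v, t) samples."""
    A = [[0.0] * 3 for _ in range(3)]
    y = [0.0] * 3
    for h, v, t in points:
        row = (1.0, h, v)
        for i in range(3):
            y[i] += row[i] * t
            for j in range(3):
                A[i][j] += row[i] * row[j]
    return solve3(A, y)

def plane_thickness(points):
    """Mean perpendicular distance from the samples to the fitted plane."""
    a, b, c = fit_plane(points)
    norm = math.sqrt(1.0 + b * b + c * c)
    dists = [abs(t - (a + b * h + c * v)) / norm for h, v, t in points]
    return sum(dists) / len(dists)

# Synthetic fixations on a grid within +/-20 deg horizontally and +/-15 deg vertically.
grid = [(h, v) for h in (-20, -10, 0, 10, 20) for v in (-15, 0, 15)]
on_plane = [(h, v, 0.5 + 0.02 * h - 0.01 * v) for h, v in grid]
# Same fixations with a symmetric +/-0.3 deg torsional scatter added.
scattered = [(h, v, t + d) for h, v, t in on_plane for d in (-0.3, 0.3)]

if __name__ == "__main__":
    print(plane_thickness(on_plane))
    print(plane_thickness(scattered))
```

Under this definition, a recording method that adds torsional scatter unrelated to gaze direction, as coil slippage would, produces a thicker plane even when the fitted plane itself is unchanged.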

The first major implications of these results are that scleral search coils in an annulus might not be a valid gold standard for recording torsional eye movements and that VOG systems may be more accurate. These results also challenge the assumptions about coil slippage during torsional eye movements, suggesting that the coil does not always faithfully transduce the orientation of the eye in the orbit.

Recordings of torsional eye movements during static head tilts

To study visual perception it is essential to know exactly what is being seen by the retina. Thus, knowing the position and orientation of the eye relative to the visual world is critical in any study trying to correlate any parameter of a visual stimulus to how it is perceived by the brain and, in turn, how it relates to neural behavior. For example, to study the perception of one's orientation in the environment, subjects are often asked to report the orientation of a line in otherwise total darkness (subjective visual vertical; Mittelstaedt, 1986) while their head is tilted at different angles. Recent studies have emphasized that knowing the torsional orientation of the globe is critical for interpreting such results (Barra, Pérennou, Thilo, Gresty, & Bronstein, 2012; Bronstein, Perennou, Guerraz, Playford, & Rudge, 2003), for example when assessing the effect of interventions such as transcranial magnetic stimulation on the visual perception of upright (Kheradmand, Lasker, & Zee, 2015).

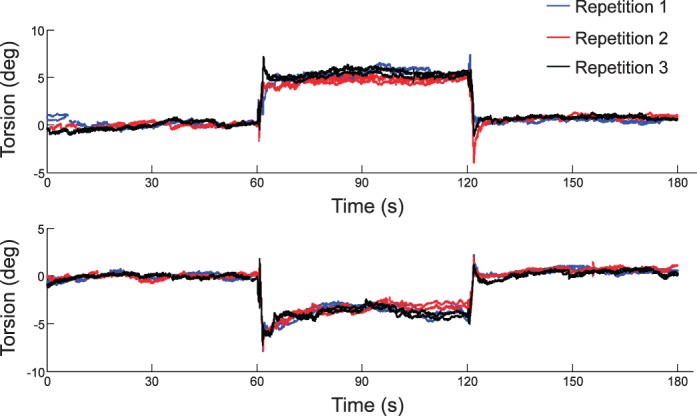

Obtaining accurate and reliable torsion data during sustained static head tilts requires precise and stable measurement techniques. A method relying on measurements from frame to frame (Zhu et al., 2004) may produce data that are less stable over long periods, whereas the method presented here uses a constant, full-reference iris image to provide accurate measurements of changes in the torsional position of the eyes over long time periods. Also, as discussed above, coil measurements are subject to slippage. Figure 7 shows an example of recordings of torsion during static head tilt of 30° to the left or to the right using our method. These measurements are precise, stable, and repeatable.

Figure 7.

Recordings of torsional eye movements during static head tilt to the left (top) and to the right (bottom). Different colors show different repetitions of binocular recordings. Subjects fixate a small spot while a bite bar stabilizes the position of the head. After 60 s with the head straight up, the head is tilted 30° using a motor that rotates the bite bar. After another 60 s, the head is rotated back to the original position. Data recorded during blinks have been removed for clarity.

Discussion

We have developed a new method for measuring eye movements in three-dimensional coordinates that can operate binocularly in real time at 100 Hz. We have taken advantage of open-source tools such as OpenCV to achieve advanced real-time image processing. Our method tracks torsional eye movements by comparing the rotation of the iris pattern in every frame with the iris pattern of a reference frame. In addition, it automatically tracks the position of the eyelids to determine what parts of the iris and the pupil are visible. We have also taken advantage of advances in the field of iris recognition, such as methods for optimizing the iris pattern and detecting eyelids. This new methodology overcomes many of the limitations of previous approaches to obtain accurate, sensitive, and consistent measures of the torsional orientation of the globe. We have shown that our method is sensitive enough to detect small changes in torsion during maintained fixation (Figure 2) and that it is more accurate than the current gold standard: the scleral search coil (Figure 6). To assess the accuracy of the method we used simulations (Figure 5) and manual subjective tracking of the iris (Figure 6).

Many methods for recording torsional eye movements using video tracking have been proposed previously (Table 1). Among those methods, the ones developed by Clarke and colleagues (Clarke et al., 1991; Clarke, Ditterich, Drüen, Schönfeld, & Steineke, 2002; Scherer, Teiwes, & Clarke, 1991), part of the Chronos system (Chronos Vision GmbH, Berlin, Germany), and the one commercially available from Senso Motoric Instruments GmbH have been cited more often than the others in the literature, but they require offline postprocessing with manual intervention. Perhaps a more promising method on the list is the one developed by Zhu et al. (2004). Their method achieves high frame rates with real-time processing and accounts for the geometry of the eye and the occlusion of the pupil by the eyelids. They achieve fast processing by measuring torsion on a frame-by-frame basis; that is, they use the torsional eye position measured in the current frame to narrow the range of possible torsional angles for the following frame. Because the range of candidate torsion values is small, the search takes less time. However, this approach may also introduce other problems, such as how to recover accuracy promptly after a blink or after a spike of noise. Once a frame yields an inaccurate torsional position, accuracy cannot be maintained in subsequent frames unless mechanisms are added to detect and correct the error. Our method always compares the current frame with a fixed common reference frame, which allows a maximum range of torsional positions of ±25°. Thus, the torsional position measured on a given frame depends only on the iris pattern in that frame and in the reference frame, avoiding unwanted biases introduced by previous erroneous measurements. Although searching the full range on every frame is computationally more expensive, we can still achieve a high frame rate and perform all the calculations in real time at 100 Hz for binocular recordings.

To compare the iris pattern between different frames, it is important to identify the parts of the iris that are visible and those that are covered by the eyelids in each frame. Previous methods have relied on the operator to select segments of the iris for analysis. Here, instead of selecting a portion of the iris to perform the analysis, we take advantage of a typical method used in iris recognition. We always match the entire area of the iris but mask the portions that are not visible. To do this, we track the eyelids automatically in every frame. As a first advantage, this approach simplifies the recording process because the operator does not need to arbitrarily set the sector of the iris for analysis, which eliminates one source of observer bias. Second, it maximizes the signal-to-noise ratio because template matching is applied to the entire 360° iris pattern and not just a portion of it; that is, the maximum amount of visible information is always available for analysis.
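The idea of matching the whole iris against a fixed reference while masking occluded sectors can be sketched in a few lines. The example below is a deliberately simplified, one-dimensional illustration and not our OpenCV implementation: the iris is reduced to a hypothetical signature of 360 intensity samples (one per degree), the search is limited to whole-degree shifts within ±25°, and the data are synthetic. The function names are assumptions made for this example.

```python
import math
import random

def rotate(pattern, shift):
    """Circularly rotate a 1-degree-per-sample signature by `shift` degrees."""
    n = len(pattern)
    return [pattern[(i - shift) % n] for i in range(n)]

def masked_correlation(a, b, valid):
    """Normalized correlation computed only over samples flagged as valid."""
    idx = [i for i, ok in enumerate(valid) if ok]
    if len(idx) < 2:
        return 0.0
    ma = sum(a[i] for i in idx) / len(idx)
    mb = sum(b[i] for i in idx) / len(idx)
    num = sum((a[i] - ma) * (b[i] - mb) for i in idx)
    den = math.sqrt(sum((a[i] - ma) ** 2 for i in idx) *
                    sum((b[i] - mb) ** 2 for i in idx))
    return num / den if den > 0 else 0.0

def estimate_torsion(reference, ref_valid, current, cur_valid, max_shift=25):
    """Return the shift (degrees) of the fixed reference that best matches the frame."""
    best_shift, best_score = 0, -2.0
    for shift in range(-max_shift, max_shift + 1):
        rotated = rotate(reference, shift)
        rot_valid = rotate(ref_valid, shift)
        # A sample counts only if it is visible in both the reference and the frame.
        valid = [a and b for a, b in zip(rot_valid, cur_valid)]
        score = masked_correlation(rotated, current, valid)
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift, best_score

# Synthetic reference signature (fully visible) and a frame rotated by 7 degrees.
rng = random.Random(0)
reference = [rng.random() for _ in range(360)]
ref_valid = [True] * 360
# Simulated eyelid: samples between 45 and 135 degrees are occluded and set to 0.
cur_valid = [not (45 <= i <= 135) for i in range(360)]
current = [v if ok else 0.0 for v, ok in zip(rotate(reference, 7), cur_valid)]

if __name__ == "__main__":
    print(estimate_torsion(reference, ref_valid, current, cur_valid))
```

Because every frame is compared with the same reference, an erroneous estimate in one frame has no influence on the next. A practical implementation would also need sub-degree interpolation of the correlation peak, which this sketch omits.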

Our method is still subject to some of the inherent limitations of all video eye-tracking systems. First, at some gaze positions, depending on the position of the cameras, the eyes might be largely occluded by the eyelids and eyelashes. This occurs mainly in downgaze in our setup. Second, during fast movements of the head, any head-mounted display may suffer from slippage of the goggles on the head, which results in an artificial shift of the image. Other limitations are subject dependent and may reduce the quality of the recordings in some cases; for instance, when changes in pupil size shift the relative position of the centers of the pupil and the iris, when pupils have very nonelliptical shapes, and when pupils are very small and the corneal reflection from the light-emitting diode illumination completely covers them. How prevalent and how intrusive these potential artifacts are remains to be shown, though in our experience with more than 20 subjects we have yet to find a subject in whom these issues precluded reliable measures of torsion.

Conclusions

We have developed a novel, relatively simple, noninvasive, and precise method for measuring torsional eye movements that solves many long-standing problems that have impeded visual and ocular motor research. We have presented examples that show the considerable potential of this method to become an easy-to-use, modern tool with widespread application in basic and clinical research or in diagnostic testing. Finally, we show that measurements of torsion using the magnetic field search coil method with scleral annuli may be subject to more artifacts than previously appreciated because of the slippage of the coil during the movement of the eye.

Acknowledgments

This work was supported by the NIH (award K23DC013552 to A. K.), the Leon Levy Foundation, the Lott Family Foundation, and Paul and Betty Cinquegrana.

Commercial relationships: none.

Corresponding author: Jorge Otero-Millan.

Email: jorge.oteromillan@gmail.com.

Address: Department of Neurology, The Johns Hopkins University, Baltimore, MD, USA.

Contributor Information

Jorge Otero-Millan, Email: jotero@jhu.edu.

Dale C. Roberts, Email: dale.roberts@jhu.edu.

Adrian Lasker, Email: alasker@jhu.edu.

David S. Zee, Email: dzee@jhu.edu.

Amir Kheradmand, Email: akherad@jhu.edu.

References

- Arshad Q., Kaski D., Buckwell D., Faldon M. E., Gresty M. A., Seemungal B. M., Bronstein A. M. (2012). A new device to quantify ocular counterroll using retinal afterimages. Audiology and Neurotology, 17 (1), 20–24, doi:http://dx.doi.org/10.1159/000324859.

- Barlow H. B. (1963). Slippage of contact lenses and other artefacts in relation to fading and regeneration of supposedly stable retinal images. Quarterly Journal of Experimental Psychology, 15 (1), 36–51, doi:http://dx.doi.org/10.1080/17470216308416550.

- Barra J., Pérennou D., Thilo K. V., Gresty M. A., Bronstein A. M. (2012). The awareness of body orientation modulates the perception of visual vertical. Neuropsychologia, 50 (10), 2492–2498, doi:http://dx.doi.org/10.1016/j.neuropsychologia.2012.06.021.

- Bergamin O., Ramat S., Straumann D., Zee D. S. (2004). Influence of orientation of exiting wire of search coil annulus on torsion after saccades. Investigative Ophthalmology & Visual Science, 45 (1), 131–137.

- Bos J. E., de Graaf B. (1994). Ocular torsion quantification with video images. IEEE Transactions on Biomedical Engineering, 41 (4), 351–357, doi:http://dx.doi.org/10.1109/10.284963.

- Bradski G. (2000). OpenCV. Dr. Dobb's Journal of Software Tools, http://code.opencv.org/projects/opencv/wiki/CiteOpenCV.

- Brecher G. A. (1934). Die optokinetische Auslösung von Augenrollung und rotatorischem Nystagmus. Pflüger's Archiv für die Gesamte Physiologie des Menschen und der Tiere, 234 (1), 13–28, doi:http://dx.doi.org/10.1007/BF01766880.

- Bronstein A. M., Perennou D. A., Guerraz M., Playford D., Rudge P. (2003). Dissociation of visual and haptic vertical in two patients with vestibular nuclear lesions. Neurology, 61 (9), 1260–1262.

- Clarke A. H., Ditterich J., Drüen K., Schönfeld U., Steineke C. (2002). Using high frame rate CMOS sensors for three-dimensional eye tracking. Behavior Research Methods, Instruments, & Computers, 34 (4), 549–560, doi:http://dx.doi.org/10.3758/BF03195484.

- Clarke A. H., Teiwes W., Scherer H. (1991). Videooculography: An alternative method for measurement of three-dimensional eye movements. In Schmid R., Zambarbieri D. (Eds.), Oculomotor control and cognitive processes (pp. 431–443). Amsterdam, the Netherlands: Elsevier.

- Collewijn H., Van der Steen J., Ferman L., Jansen T. C. (1985). Human ocular counterroll: Assessment of static and dynamic properties from electromagnetic scleral coil recordings. Experimental Brain Research, 59 (1), 185–196, doi:http://dx.doi.org/10.1007/BF00237678.

- Cornsweet T. N., Crane H. D. (1973). Accurate two-dimensional eye tracker using first and fourth Purkinje images. Journal of the Optical Society of America, 63 (8), 921–928, doi:http://dx.doi.org/10.1364/JOSA.63.000921.

- Curthoys I. S., Dai M. J., Halmagyi G. M. (1991). Human ocular torsional position before and after unilateral vestibular neurectomy. Experimental Brain Research, 85 (1), 218–225, doi:http://dx.doi.org/10.1007/BF00230003.

- Daugman J. (2004). How iris recognition works. IEEE Transactions on Circuits and Systems for Video Technology, 14 (1), 21–30, doi:http://dx.doi.org/10.1109/TCSVT.2003.818350.

- Dera T., Boning G., Bardins S., Schneider E. (2006). Low-latency video tracking of horizontal, vertical, and torsional eye movements as a basis for 3dof realtime motion control of a head-mounted camera. IEEE International Conference on Systems, Man and Cybernetics, 6, 5191–5196, doi:http://dx.doi.org/10.1109/ICSMC.2006.385132.

- Ehrt O., Boergen K.-P. (2001). Scanning laser ophthalmoscope fundus cyclometry in near-natural viewing conditions. Graefe's Archive for Clinical and Experimental Ophthalmology, 239 (9), 678–682.

- Groen E., Bos J. E., Nacken P. F. M., de Graaf B. (1996). Determination of ocular torsion by means of automatic pattern recognition. IEEE Transactions on Biomedical Engineering, 43 (5), 471–479, doi:http://dx.doi.org/10.1109/10.488795.

- Guillemant P., Ulmer E., Freyss G. (1995). 3-D eye movement measurements on four Comex's divers using video CCD cameras, during high pressure diving. Acta Oto-Laryngologica, 520 (Suppl. Pt. 2), 288–292.

- Guyton D. L. (1983). Clinical assessment of ocular torsion. American Orthoptic Journal, 33, 7–15.

- Guyton D. L. (1995). Strabismus surgery decisions based on torsion findings. Archivos Chilenos de Oftalmologia, 52, 131–137.

- Haslwanter T., Moore S. T. (1995). A theoretical analysis of three-dimensional eye position measurement using polar cross-correlation. IEEE Transactions on Biomedical Engineering, 42 (11), 1053–1061, doi:http://dx.doi.org/10.1109/10.469371.

- Hatamian M., Anderson D. J. (1983). Design considerations for a real-time ocular counterroll instrument. IEEE Transactions on Biomedical Engineering, 30 (5), 278–288, doi:http://dx.doi.org/10.1109/TBME.1983.325117.

- Howard I. P. (1993). Cycloversion, cyclovergence and perceived slant. Proceedings of the 1991 York Conference on Spatial Vision in Humans and Robots, 349–365.

- Howard I. P., Evans J. A. (1963). The measurement of eye torsion. Vision Research, 3 (9–10), 447–455, doi:http://dx.doi.org/10.1016/0042-6989(63)90095-6.

- Ivins J. P., Porrill J., Frisby J. P. (1998). Deformable model of the human iris for measuring ocular torsion from video images. IEE Proceedings: Vision, Image and Signal Processing, 145 (3), 213–220, doi:http://dx.doi.org/10.1049/ip-vis:19981914.

- Jansen S. H., Kingma H., Peeters R. M., Westra R. L. (2010). A torsional eye movement calculation algorithm for low contrast images in video-oculography. 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 5628–5631.

- Kanzaki J., Ouchi T. (1978). Measurement of ocular countertorsion reflex with fundoscopic camera in normal subjects and in patients with inner ear lesions. Archives of Oto-Rhino-Laryngology, 218 (3–4), 191–201, doi:http://dx.doi.org/10.1007/BF00455553.

- Kekunnaya R., Mendonca T., Sachdeva V. (2015). Pattern strabismus and torsion needs special surgical attention. Eye, 29 (2), 184–190, doi:http://dx.doi.org/10.1038/eye.2014.270.

- Kheradmand A., Lasker A., Zee D. S. (2015). Transcranial magnetic stimulation (TMS) of the supramarginal gyrus: A window to perception of upright. Cerebral Cortex, 25 (3), 765–771, doi:http://dx.doi.org/10.1093/cercor/bht267.

- Kim S. C., Nam K. C., Lee W. S., Kim D. W. (2006). A new method for accurate and fast measurement of 3D eye movements. Medical Engineering & Physics, 28 (1), 82–89, doi:http://dx.doi.org/10.1016/j.medengphy.2005.04.002.

- Kingma H., Gullikers H., de Jong I., Jongen R., Dolmans M., Stegeman P. (1995). Real time binocular detection of horizontal vertical and torsional eye movements by an infrared video-eye tracker. Acta Oto-Laryngologica, 115 (S520), 9–15.

- Lee I., Choi B., Park K. S. (2007). Robust measurement of ocular torsion using iterative Lucas–Kanade. Computer Methods and Programs in Biomedicine, 85 (3), 238–246, doi:http://dx.doi.org/10.1016/j.cmpb.2006.12.001.

- Leigh R. J., Zee D. S. (2015). The neurology of eye movements. Oxford, United Kingdom: Oxford University Press.

- Locke J. C. (1968). Heterotopia of the blind spot in ocular vertical muscle imbalance. American Journal of Ophthalmology, 65 (3), 362–374, doi:http://dx.doi.org/10.1016/0002-9394(68)93086-9.

- MacDougall H. G., Moore S. T. (2005). Functional assessment of head–eye coordination during vehicle operation. Optometry & Vision Science, 82 (8), 706–715.

- Masek L. (2003). Recognition of human iris patterns for biometric identification (Master's thesis). University of Western Australia, Perth. Retrieved from http://people.csse.uwa.edu.au/~pk/studentprojects/libor/LiborMasekThesis.pdf

- Maxwell J. S., Schor C. M. (1999). Adaptation of torsional eye alignment in relation to head roll. Vision Research, 39 (25), 4192–4199, doi:http://dx.doi.org/10.1016/S0042-6989(99)00139-X.

- Mezey L. E., Curthoys I. S., Burgess A. M., Goonetilleke S. C., MacDougall H. G. (2004). Changes in ocular torsion position produced by a single visual line rotating around the line of sight: Visual “entrainment” of ocular torsion. Vision Research, 44 (4), 397–406, doi:http://dx.doi.org/10.1016/j.visres.2003.09.026.

- Minken A. W. H., Gisbergen J. A. M. V. (1994). A three-dimensional analysis of vergence movements at various levels of elevation. Experimental Brain Research, 101 (2), 331–345, doi:http://dx.doi.org/10.1007/BF00228754.

- Mittelstaedt H. (1986). The subjective vertical as a function of visual and extraretinal cues. Acta Psychologica, 63 (1), 63–85, doi:http://dx.doi.org/10.1016/0001-6918(86)90043-0.

- Moore S. T., Curthoys I. S., McCoy S. G. (1991). VTM: An image-processing system for measuring ocular torsion. Computer Methods and Programs in Biomedicine, 35 (3), 219–230, doi:http://dx.doi.org/10.1016/0169-2607(91)90124-C.

- Ong J. K. Y., Haslwanter T. (2010). Measuring torsional eye movements by tracking stable iris features. Journal of Neuroscience Methods, 192 (2), 261–267, doi:http://dx.doi.org/10.1016/j.jneumeth.2010.08.004.

- Ott D., Gehle F., Eckmiller R. (1990). Video-oculographic measurement of 3-dimensional eye rotations. Journal of Neuroscience Methods, 35 (3), 229–234, doi:http://dx.doi.org/10.1016/0165-0270(90)90128-3.

- Paliulis E., Daunys G. (2015). Determination of eye torsion by videooculography including cornea optics. Elektronika Ir Elektrotechnika, 69 (5), 83–86, doi:http://dx.doi.org/10.5755/j01.eee.69.5.10755.

- Ramat S., Nesti A., Versino M., Colnaghi S., Magnaghi C., Bianchi A., Beltrami G. (2011). A new device to assess static ocular torsion. Annals of the New York Academy of Sciences, 1233 (1), 226–230, doi:http://dx.doi.org/10.1111/j.1749-6632.2011.06157.x.

- Ratliff F., Riggs L. A. (1950). Involuntary motions of the eye during monocular fixation. Journal of Experimental Psychology, 40 (6), 687–701.

- Robinson D. (1963). A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Transactions on Bio-Medical Electronics, 10 (4), 137–145, doi:http://dx.doi.org/10.1109/TBMEL.1963.4322822.

- Sarès F., Granjon L., Benraiss A., Boulinguez P. (2007). Analyzing head roll and eye torsion by means of offline image processing. Behavior Research Methods, 39 (3), 590–599, doi:http://dx.doi.org/10.3758/BF03193030.

- Scherer H., Teiwes W., Clarke A. H. (1991). Measuring three dimensions of eye movement in dynamic situations by means of videooculography. Acta Oto-Laryngologica, 111 (2), 182–187, doi:http://dx.doi.org/10.3109/00016489109137372.

- Schmid-Priscoveanu A., Straumann D., Kori A. A. (2000). Torsional vestibulo-ocular reflex during whole-body oscillation in the upright and the supine position. Experimental Brain Research, 134 (2), 212–219, doi:http://dx.doi.org/10.1007/s002210000436.

- Schneider E., Glasauer S., Dieterich M., Kalla R., Brandt T. (2004). Diagnosis of vestibular imbalance in the blink of an eye. Neurology, 63 (7), 1209–1216, doi:http://dx.doi.org/10.1212/01.WNL.0000141144.02666.8C.

- Schworm H. D., Boergen K. P., Eithoff S. (1995). Measurement of subjective and objective cyclodeviation in oblique eye muscle disorders. Strabismus, 3 (3), 115–122.

- Steffen H., Walker M. F., Zee D. S. (2000). Rotation of Listing's plane with convergence: Independence from eye position. Investigative Ophthalmology & Visual Science, 41 (3), 715–721.

- Straumann D., Zee D. S., Solomon D., Kramer P. D. (1996). Validity of Listing's law during fixations, saccades, smooth pursuit eye movements, and blinks. Experimental Brain Research, 112 (1), 135–146, doi:http://dx.doi.org/10.1007/BF00227187.

- Van Rijn L. J., Van Der Steen J., Collewijn H. (1994). Instability of ocular torsion during fixation: Cyclovergence is more stable than cycloversion. Vision Research, 34 (8), 1077–1087, doi:http://dx.doi.org/10.1016/0042-6989(94)90011-6.

- Versino M., Newman-Toker D. E. (2010). Blind spot heterotopia by automated static perimetry to assess static ocular torsion: Centro-cecal axis rotation in normals. Journal of Neurology, 257 (2), 291–293, doi:http://dx.doi.org/10.1007/s00415-009-5341-x.

- Vieville T., Masse D. (1987). Ocular counter-rolling during active head tilting in humans. Acta Oto-Laryngologica, 103 (3–4), 280–290.

- von Helmholtz H. (1867). Handbuch der physiologischen optik. Leipzig, Germany: Voss.

- Wade S. W., Curthoys I. S. (1997). The effect of ocular torsional position on perception of the roll-tilt of visual stimuli. Vision Research, 37 (8), 1071–1078, doi:http://dx.doi.org/10.1016/S0042-6989(96)00252-0.

- Wibirama S., Tungjitkusolmun S., Pintavirooj C. (2013). Dual-camera acquisition for accurate measurement of three-dimensional eye movements. IEEE Transactions on Electrical and Electronic Engineering, 8 (3), 238–246, doi:http://dx.doi.org/10.1002/tee.21845.

- Wong A. M. F. (2004). Listing's law: Clinical significance and implications for neural control. Survey of Ophthalmology, 49 (6), 563–575, doi:http://dx.doi.org/10.1016/j.survophthal.2004.08.002.

- Wood S. J. (2002). Human otolith–ocular reflexes during off-vertical axis rotation: Effect of frequency on tilt–translation ambiguity and motion sickness. Neuroscience Letters, 323 (1), 41–44, doi:http://dx.doi.org/10.1016/S0304-3940(02)00118-0.

- Yooyoung L., Micheals R. J., Filliben J. J., Phillips P. J. (2013). VASIR: An open-source research platform for advanced iris recognition technologies. Journal of Research of the National Institute of Standards & Technology, 118, 218–259.

- Zhu D., Moore S. T., Raphan T. (1999). Robust pupil center detection using a curvature algorithm. Computer Methods and Programs in Biomedicine, 59 (3), 145–157.

- Zhu D., Moore S. T., Raphan T. (2004). Robust and real-time torsional eye position calculation using a template-matching technique. Computer Methods and Programs in Biomedicine, 74 (3), 201–209, doi:http://dx.doi.org/10.1016/S0169-2607(03)00095-6.
