Abstract

Contour detection has been extensively investigated as a fundamental problem in computer vision. In this study, a biologically-inspired candidate weighting framework is proposed for the challenging task of detecting meaningful contours. In contrast to previous models that detect contours from pixels, a modified superpixel generation procedure is proposed to generate a contour candidate set, and the candidates are then weighted by extracting hierarchical visual cues. We extract low-level local visual cues to weigh the intrinsic properties of contours, and mid-level visual cues based on Gestalt principles to weigh the contour grouping constraint. Experimental results on the BSDS benchmark show that the proposed framework exhibits promising performance in capturing meaningful contours in complex scenes.

Keywords: contour detection, biologically inspired, candidate set, hierarchical visual cues, Gestalt principles

1. Introduction

Contour detection is a fundamental problem in computer vision that has been intensively studied over the past fifty years. It is a crucial step for many computer vision tasks, including object segmentation [1,2], shape-based object matching [3,4,5,6], detection and recognition [7,8,9,10,11,12,13], image restoration [14,15,16] and recovery of intrinsic scene properties [17]. Unlike edges, which refer to any abrupt change in local image features such as luminance and color, contours represent changes from object to background or from one surface to another, and detecting them requires deeper levels of image information.

A large number of methods have been proposed for edge or contour detection in recent decades. Typical methods include local linear filters [18,19,20,21], active contours [22,23,24] and learning or statistical inference methods [25,26,27,28,29,30,31]. Because of the broad diversity and ambiguity of visual patterns in natural images, automatically extracting image contours accurately and efficiently remains a challenging task, even though much progress has been made.

In contrast, the human visual system is able to extract integrated structure from images without any prior knowledge of objects. Research has shown that the brain does not directly use the images projected on the retina by external stimuli. Instead, it recognizes objects from visual information that has been aggregated and specialized [32]. Neurons in the lateral geniculate nucleus (LGN) and primary visual cortex (V1 or striate cortex) respond to oriented luminance and chromatic stimulation and, through this low-level processing, extract relevant information from natural images that have specific characteristics. The main function of the later visual cortex is to extract and compute perceptual signals in a way that dramatically reduces the data volume but retains the useful structural information of objects. In a complex natural scene, the components that have abundant and specific attributes are more prominent and are thus easier to isolate from the background. This problem of extracting and integrating structural information is called perceptual grouping. From the psychological perspective, perceptual grouping is often considered to be guided by mid-level Gestalt principles. According to these principles, the visual system tends to group consistent image features into high-level structures through rules that include brightness and contrast similarity, good continuation, local proximity and curvature constancy. Neurophysiological evidence supporting these properties has been reported in [33,34,35,36] (see [37] for a review). Inspired by these biological bases, many contour detection methods have been developed, for example [38,39,40,41,42,43,44,45,46,47,48].

Almost all existing contour detection methods, whether biologically inspired or purely computational, operate on discrete pixels. However, pixel-based methods extract the features of each pixel from its local patch, so similar neighboring pixels are computed repeatedly. They often have a large computational cost and are more likely to generate discontinuous or broken contours than methods that operate on larger primitives, especially in the presence of noise. Operating on larger primitives and a smaller search space is a recent trend in image processing and vision tasks. Consequently, superpixel-based methods and candidate-based frameworks have become popular in tasks such as object segmentation [49] and object detection [50] in recent years. However, few contour detection algorithms are based on these methods and frameworks.

In our work, we propose a biologically-inspired candidate weighting framework for contour detection. To generate contour candidates and thereby reduce the computation space, we introduce a modified superpixel generation procedure. Our basic assumption is that the contours of meaningful objects align well with superpixel boundaries. Under this assumption, the superpixel boundaries can be taken as contour candidates after the input image is segmented into superpixels. The problem of detecting contours is thus transformed into that of weighing each candidate, that is, estimating its probability of being an object contour. We then propose a biologically-inspired method that extracts hierarchical visual cues to weigh the candidates. The lower level of this hierarchical visual system responds to oriented luminance and chromatic stimulation and is thus used to weigh the intrinsic properties of contours. The mid-level of the hierarchical visual system groups perceptual signals by Gestalt principles, resulting in the contour connection constraint. Our proposed method has been tested on the BSDS 300/500 benchmarks [1,26], and the experimental results show promising performance.

The remainder of this paper is organized as follows. In Section 2, we present related work in contour detection and the candidate-based framework. Then, we describe the details of our candidate-based contour detection framework and biologically-inspired candidate weighting algorithm from hierarchical visual cues in Section 3. In Section 4, we evaluate the performance of the proposed method on the BSDS 300 and 500 datasets [1,26]. Finally, we discuss our model and draw conclusions in Section 5.

2. Related Work

Contour detection and edge detection are classical problems in computer vision. There is an enormous literature on these topics. It is hard for this paper to give a full survey on the topic, so only a small relevant subset of works will be reviewed here.

The early approaches to contour detection relied on local measurements with linear filters. Typical algorithms are the Sobel [18], Roberts [19], Prewitt [20] and Canny [21] edge detectors. All of these methods use local filters of a fixed scale and few orientations. Small and unimportant edges are treated the same as meaningful contours by these detectors, which leads to noisy contour maps that are hard to use for subsequent higher-level processing. The key challenge is to enhance the weights of stable and meaningful contours without enhancing gradients due to repeated or stochastic textures.

Therefore, over the years, many new approaches have been developed for contour detection. Notably, Malik et al. [1,26] defined gradient operators for multiple cues, such as brightness, color and texture, and used a regression classifier to predict edge strength from these features. This is the popular Pb algorithm [26], which learns to assign each pixel in the image the probability of a contour at that point. Subsequently, multiple scales [51] and multiple features [52] were incorporated into learning-based contour detection algorithms. In the last five years, other learning methods have been proposed. Ren and Bo [30] used sparse coding and oriented gradients to learn dictionaries of contour patches. Lim et al. [28] used random forest-based learning on image patches and achieved state-of-the-art results. Their key idea is to use a dictionary of human-generated contours as features for contours within a patch. Dollár and Zitnick [29] further combined random forests with structured prediction to achieve real-time edge detection. However, the performance of most learning-based methods depends strongly on the selection of training sets, which makes the methods inflexible for an individual image. Furthermore, training a method on a dataset normally incurs a high computational cost.

In addition, many non-learning-based algorithms have recently been proposed for boundary detection. This research has focused on global frameworks that minimize a global cost over all disjoint pairs of patches. For example, Felzenszwalb and McAllester [53] extracted salient contours by solving the min-cover problem. Arbelaez et al. [1] embedded the local Pb measure [26] into a spectral clustering framework. Isola et al. [54] measured rarity based on pointwise mutual information. These methods also obtained good results, although at a high computational cost.

Another line of work is biologically-inspired methods. From the neurophysiological perspective, it has been widely believed that the neurons in V1 are exquisitely sensitive to oriented bars or edges in the classical receptive field (CRF). Subsequent neurophysiological findings indicate that a peripheral region beyond the CRF, known as the non-classical receptive field (non-CRF), can modulate the spiking response of a V1 neuron to stimuli placed within the CRF. The neuronal responses are strongly inhibited when the stimuli within the CRF and non-CRF share similar features. Based on this physiological mechanism, several biologically-inspired contour detection methods have been proposed recently [38,39,40,41,42,43,44,45,46,47,55]. With rare exceptions, these methods model the orientation-selective excitation mechanism of the CRF with oriented Gabor or derivative-of-Gaussian filters. For surround inhibition, many studies in the last five years have proposed different models, such as [32,47,55,56,57], and have used other strategies of the visual system to cope with complex scenes. For instance, multiple scales [32] and multiple visual features [55] were integrated. In addition, an efficient color boundary detection framework was proposed by simulating the biological mechanisms of color information processing and color opponency along the retina-LGN-cortex visual pathway [48]. From the psychophysical perspective, psychologists formulated Gestalt rules for perceptually-significant image structure during contour grouping, such as “proximity”, “good continuity” and “closure”. Gestalt-based contour grouping or detection algorithms have also been studied recently. In [58,59], an optimal Bayesian model was employed to investigate the inferential power of three classical Gestalt cues for contour grouping: proximity, good continuation and luminance similarity. Hess et al. [37] discussed the mechanism of contour integration from psychophysical and neurophysiological perspectives and summarized some computational models. In [60], a novel higher-order conditional random field model was proposed to address the contour closure effect through local connectedness approximation. Han et al. [61] proposed a fuzzy connection facilitation model to enhance the contour response and connect discontinuous contours. These biologically-inspired methods also achieved good results.

The above methods commonly use pixel-level measurements to create contours. However, these methods often produce noisy and broken contours, which are costly to process and less likely to be useful for further processing. Superpixels provide a convenient primitive and have become increasingly popular in computer vision applications. A series of superpixel generation algorithms has been proposed. One major category is graph-based approaches, such as [62,63,64,65]. These approaches treat each pixel as a node in a graph and use the edge weight between two nodes to measure the similarity between neighboring pixels. Superpixels are created by minimizing a cost function defined over the graph. Another major category is gradient ascent-based algorithms [66,67,68,69,70,71]. These algorithms start from a rough initial clustering of pixels and then iteratively refine the clusters by gradient ascent until some convergence criterion is met. In addition, candidate-based frameworks have emerged as a new mechanism to reduce computation costs in vision tasks. For example, objectness-based bounding box candidates have been applied to object detection [50]. Object hypotheses have been represented as figure-ground segmentations in [72] and used as a basis for salient object segmentation [49]. However, to the best of our knowledge, similar algorithms or frameworks have rarely been used to detect contours.

In our work, a biologically-inspired candidate weighting (BICW) framework is proposed for contour detection. We introduce a modified superpixel generation procedure to obtain contour candidates and then weigh the candidates by multiple biological cues.

3. Method of Biologically-Inspired Candidate Weighting Framework for Contour Detection

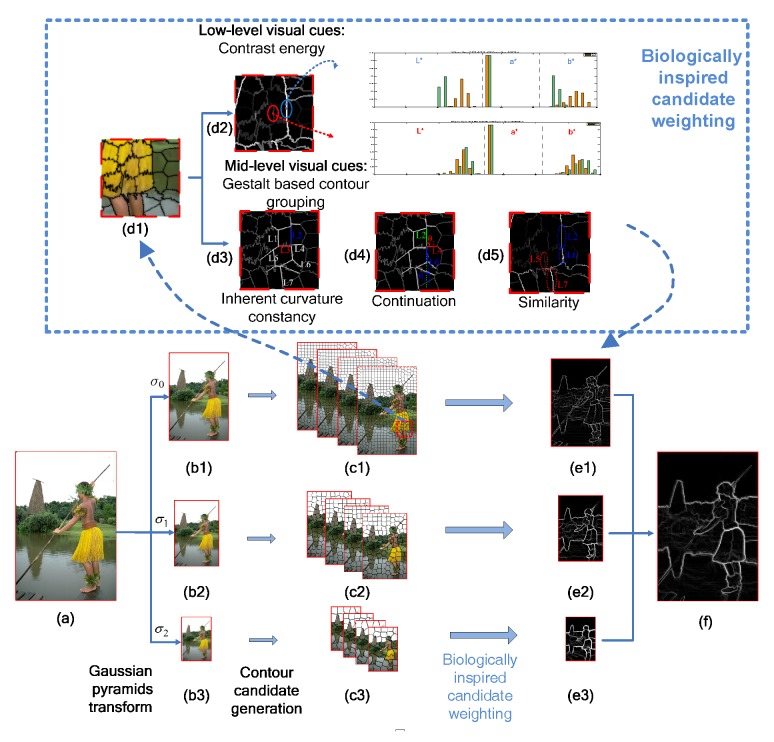

In this section, we describe our contour detection framework. A detailed flowchart is shown in Figure 1. First, in Section 3.1, a modified superpixel generation procedure with a multi-scale strategy is introduced to obtain a set of contour candidates. Then, in Section 3.2, we propose our biologically-inspired method for weighting the contour candidates from hierarchical visual cues.

Figure 1.

Flowchart of our contour detection framework. (a) Input image; (b1–b3) transformed images at three different scales; (c1–c3) contour candidates generated by our modified simple linear iterative clustering (SLIC) procedure. We perform SLIC with four different initializations at each scale. For ease of presentation, we draw the candidates on four images at each scale, which together form the candidate set; (d1) zoomed-in region for the demonstration; (d2) contrast energy extracted with low-level cues; (d3) inherent curvature constancy extracted with mid-level cues; L2, labeled by the blue line, indicates a candidate with good inherent curvature constancy, which is more likely to be a true contour, while L3, labeled by a red line, indicates poor inherent curvature constancy; (d4) continuation extracted with mid-level cues. A true contour candidate tends to connect to its co-linear neighbor, but is independent of the perpendicular one. For example, L6 is more likely to link with L2, whereas L4 is only weakly related to L2; (d5) similarity extracted with mid-level cues. The connection probability decreases as the contrast difference increases; for example, L2 and L6, labeled by blue-dotted circles, yield a higher connection probability than L5 and L7, labeled by red-dotted circles; (e) weighted candidates on an image at each scale; (f) final output.

3.1. Generation of Contour Candidates

Our first step is to generate contour candidates with a superpixel generation algorithm. A modified simple linear iterative clustering (SLIC) [66] algorithm is used to obtain the superpixels from the input image. Superpixel boundaries are subsequently obtained as contour candidates. The computations of this method are not intended as a model of biological visual processing. However, they are intended to provide closed and continuous edges, which seems more in line with the Gestalt principles than biologically-inspired edge detection with a derivative-of-Gaussian filter or classical hand-designed methods such as the Canny detector.

SLIC is an adaptation of K-means for superpixel generation, which is faster and more memory efficient than existing superpixel generation methods. SLIC also exhibits state-of-the-art boundary adherence. In this method, k cluster centers are initialized on a regular grid with an interval of S pixels, where k is defined as $k = N/S^2$ for an image of $N$ pixels. Each pixel is then iteratively associated with the nearest cluster center, measured by the 5D Euclidean distance in the $labxy$ space, with the search region limited to a $2S \times 2S$ window around the center.
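As a rough illustration of the two SLIC ingredients described above, the following Python sketch places seeds on a regular grid and computes a distance that mixes color and position. The function names, the `offset` argument and the compactness value `m` are illustrative assumptions, and the distance follows one common form from the SLIC literature rather than anything specified in this paper.

```python
import math


def grid_centers(height, width, S, offset=(0, 0)):
    """Return (row, col) seeds on a regular grid with interval S.

    `offset` shifts the whole grid. It is a hypothetical parameter, used
    here only to illustrate starting SLIC from different initial centers.
    """
    dy, dx = offset
    centers = []
    for r in range(S // 2 + dy, height, S):
        for c in range(S // 2 + dx, width, S):
            centers.append((r, c))
    return centers


def slic_distance(p, q, S, m=10.0):
    """Distance between two (L, a, b, x, y) samples.

    The color distance and the spatial distance are combined, with the
    spatial part normalised by the grid interval S and scaled by the
    compactness weight m (the default value is an assumption).
    """
    d_lab = math.sqrt(sum((p[i] - q[i]) ** 2 for i in range(3)))
    d_xy = math.sqrt(sum((p[i] - q[i]) ** 2 for i in range(3, 5)))
    return math.sqrt(d_lab ** 2 + (m / S) ** 2 * d_xy ** 2)
```

Calling `grid_centers` several times with different offsets gives the kind of shifted initializations that the modified procedure in this section relies on.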

If the assumption that the contours of meaningful objects align well with superpixel boundaries is valid, in other words, if the boundary recall is sufficiently high, superpixel boundaries can be taken as contour candidates. The boundary recall of SLIC has been discussed in [66], where the experiments showed that the boundary recall increased with the number of superpixels and that SLIC performed best. As the description of SLIC above shows, the only parameter of SLIC is the desired number of approximately equally-sized superpixels k, which determines the initial cluster centers in the initialization step and the cluster search scope in the assignment step. In our work, we modify the original SLIC procedure. Instead of changing k to obtain better performance, we run SLIC several times with different initial cluster centers: we shift the initial cluster centers by some offsets and generate a series of superpixel segmentations, one per set of initial centers. The contour candidate set at a given scale is then obtained as follows:

| (1) |

We experimentally validated the completeness of the candidate set generated from this method, which will be explained in detail later in Section 4. Therefore, contour detection can be simplified as another problem of weighting candidates with the probability of being the object contour from hierarchical visual cues.

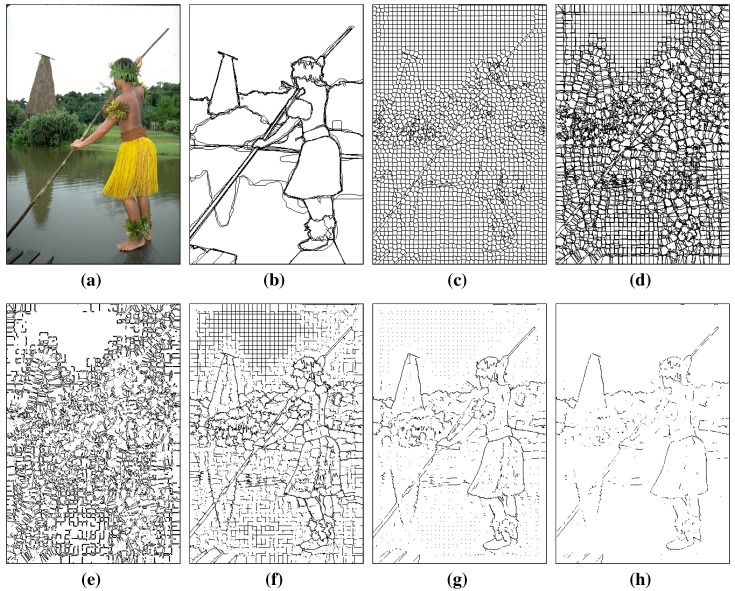

The advantage of our modified superpixel generation method is that the weight of stable edges, which are more likely to be a contour, can be increased. In Figure 2, we can see that stable edges are still the boundaries of superpixels when the initial cluster centers are different. By contrast, the unstable edges are easily altered. In our method, the pixels of stable edges occur multiple times as different candidates. When we sum the weights of each candidate to get the final probability of being a true contour, the pixels of the stable edges yield higher weights than those of the unstable edges.
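The effect described above can be sketched with a simple vote count: boundaries are extracted from several label maps (one per initialization), and each pixel accumulates one vote per map in which it lies on a boundary. This is only a minimal sketch with unit weights; in the framework itself the accumulated values are the candidate weights, and the helper names below are hypothetical.

```python
def boundary_map(labels):
    """Mark a pixel as boundary if its right or lower neighbour has a different label."""
    h, w = len(labels), len(labels[0])
    out = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            right = c + 1 < w and labels[r][c] != labels[r][c + 1]
            down = r + 1 < h and labels[r][c] != labels[r + 1][c]
            out[r][c] = right or down
    return out


def vote_boundaries(label_maps):
    """Count, per pixel, in how many segmentations it lies on a superpixel boundary."""
    h, w = len(label_maps[0]), len(label_maps[0][0])
    votes = [[0] * w for _ in range(h)]
    for labels in label_maps:
        bmap = boundary_map(labels)
        for r in range(h):
            for c in range(w):
                if bmap[r][c]:
                    votes[r][c] += 1
    return votes
```

Pixels on a stable edge collect a vote from every segmentation, while pixels on an unstable edge collect votes from only some of them.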

Figure 2.

Contour candidates generated by original SLIC and our modified SLIC. (a) Input image; (b) ground truth; (c) contour candidates generated by original SLIC; (d) contour candidates generated by our modified SLIC, superimposed on all of the contour candidates over this image; (e) Pixels appear only once in contour candidates; many of them are clutter or meaningless textures; (f)–(h) Pixels appear more than once, twice and three times; pixels of meaningful contour are preserved to be candidates with different initials.

The contour candidates are derived from superpixel boundaries, which are formed by adjacent superpixels. Thus, we only need to extract the features of each contour candidate from its adjacent superpixels. In previous work, the features of each pixel are extracted from its local patch, so similar pixels in its neighborhood are computed repeatedly. Compared with this approach, our method avoids many of these repeated calculations and weakens the influence of noise, because pixels are grouped into perceptually meaningful atomic regions by our superpixel-based candidate generation method.

Different scales carry different information, so the resulting contours also differ. At a small scale, most information, including contours and some textures, is retained. At a larger scale, the main contours of the target object are retained, but some details may be missing. Salient and meaningful contours are more likely to be retained across scales, a property called continuity across scales. To handle the scale problem and to use this continuity property to obtain more meaningful contours, we generate contour candidates and weigh them at different scales. In previous work, the convolution kernel [32,51] or measure scope [1] is changed to obtain multiple scales while the image size is unchanged. In contrast, to limit the time and space cost, we obtain transformed images at different scales through Gaussian pyramid down-sampling. The modified candidate generation operation is then performed on the transformed image at each scale. The total contour candidate set is expressed as follows:

| (2) |

In this work, we consider weights at three scales. Only integer-multiple scales are considered, to avoid interpolation during Gaussian pyramid down-sampling.

3.2. Extraction of Hierarchical Cues

The human visual system has evolved to extract relevant information hierarchically from natural images with specific characteristics. First, neurons in the primary visual cortex (V1 or striate cortex) respond to oriented luminance and chromatic stimulation, which constitutes a low-level visual process. Then, the mid-level process performs some kind of grouping operation in both V1 and later visual areas. This process provides a bridge between low-level local information and high-level concepts, such as object- and scene-level information. From the psychological perspective, the rules of contour grouping are also formulated by the Gestalt “laws” [37]. In this work, we propose a hierarchical cue extraction method that extracts low-level local visual cues to weigh contour intrinsic properties and mid-level visual cues based on Gestalt principles to weigh the contour grouping constraint, as described in this subsection.

3.2.1. Low-Level Cues

Local luminance and chromatic information are important low-level visual cues for understanding natural scenes. In this work, the contour intrinsic property is weighted with luminance and chromatic contrast. We measure the luminance and chromatic cues from the input color image in the CIELAB color space, which represents both luminance and chromaticity well. For the luminance cue of each superpixel, we compute a histogram of $L^*$ values. The chromatic cue presents additional challenges for estimation because the pixel values lie in a 2D space ($a^*$ and $b^*$). One might expect the joint color distribution, estimated with a 2D histogram, to contain more perceptual information than the marginal distributions. However, the joint approach is far more expensive computationally because of the additional histogram dimension, and the two do not differ remarkably in performance, since the $a^*$ and $b^*$ axes of the CIELAB color space are designed to mimic the green-red and blue-yellow color opponency found in the human visual cortex [73]. Following the method in [26], we therefore compute the marginal color distributions for $a^*$ and $b^*$ rather than the joint one. We build a histogram of the values of each channel for each superpixel, with a separate number of bins per channel:

| (3) |

| (4) |

| (5) |

Here, the number of bins for each channel is set according to [26].

As shown in Figure 1d2, the histogram vectors of the adjacent superpixels on the two sides of a meaningful contour (positive candidate) are quite different, while those on the two sides of a negative candidate are similar. We use these vectors to compute the contrast energy of the contour candidate between two superpixels, as well as additional mid-level cues. The contrast energy of the contour candidate formed by two adjacent superpixels is expressed as the weighted sum of the distances between the two superpixels based on luminance and chromatic cues, as follows:

| (6) |

where c indexes the feature channels (luminance $L^*$, chromatic $a^*$ and $b^*$), the per-channel weight indicates the relative contribution of each color channel, and the distance term is the distance between the histograms of the two adjacent superpixels in channel c.
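A minimal sketch of this low-level cue is given below: per-channel histograms for two adjacent superpixels, a histogram distance, and a weighted sum over channels. The chi-square distance, the bin counts and value ranges used in the tests, and the mapping of the Table 1 weights (1, 0.5, 1) onto the channels in the order $L^*$, $a^*$, $b^*$ are all assumptions made for illustration.

```python
def normalized_histogram(values, n_bins, lo, hi):
    """Histogram of `values` over [lo, hi] with n_bins bins, normalised to sum to 1."""
    hist = [0.0] * n_bins
    for v in values:
        idx = int((v - lo) / (hi - lo) * n_bins)
        idx = min(max(idx, 0), n_bins - 1)  # clamp values on or outside the range
        hist[idx] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def chi_square(h1, h2):
    """Chi-square distance between two histograms (assumed distance measure)."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def contrast_energy(hists_i, hists_j, weights):
    """Weighted sum over channels of the histogram distance between two superpixels.

    hists_i, hists_j: dicts mapping a channel name to that superpixel's histogram.
    weights: dict mapping a channel name to its relative contribution.
    """
    return sum(weights[c] * chi_square(hists_i[c], hists_j[c]) for c in weights)
```

With this form, two superpixels with identical histograms give zero energy, and the energy grows as their luminance or color distributions diverge.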

3.2.2. Mid-Level Cues

For the mid-level visual process, the visual system groups local edge information into contours guided by the Gestalt principles. In the Gestalt principle, the visual system tends to group consistent image features into high-level structures through some rules, including brightness and contrast similarity, good continuation, local proximity and curvature constancy. Thus, on the basis of these rules, we propose a model for weighting the grouping constraint of contour candidates by computing the connection probabilities with the adjacent candidates.

Although our contour candidates yield an accurate representation of the image, they do not provide an optimal basis for contour grouping, as they can be jagged and noisy to some extent. Moreover, a candidate that is jagged or sensitive to noise does not conform to the “curvature constancy” rule and is less likely to be a contour. To address these problems and to measure the inherent curvature changes of a contour candidate, we fit it with a straight line and evaluate the inherent curvature constancy of the candidate as follows:

| (7) |

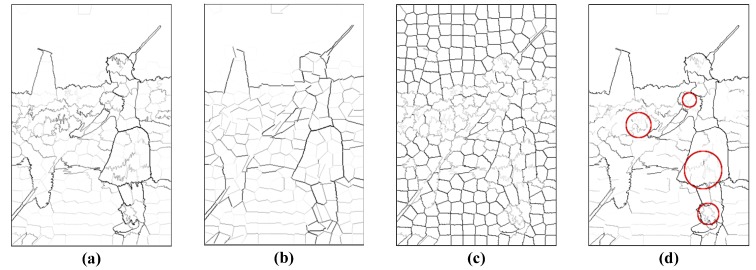

Here, the evaluation uses the length of the fitted line and the number of pixels of the contour candidate. Figure 3 presents an example of the effect of the inherent curvature constancy constraint.
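One plausible reading of this measure, given the two quantities it depends on, is the ratio of the fitted-line length to the pixel count: a straight candidate scores close to 1 and a jagged one scores lower. The sketch below assumes exactly that ratio and, as a simplification, uses the chord between the two end pixels in place of a fitted line. Both choices are assumptions, not the paper's definition.

```python
import math


def curvature_constancy(pixels):
    """Ratio of chord length to pixel count for an ordered list of (row, col) pixels.

    The chord between the first and last pixel stands in for the fitted
    straight line (an assumption). The result is clipped to 1.0.
    """
    if len(pixels) < 2:
        return 1.0
    (r0, c0), (r1, c1) = pixels[0], pixels[-1]
    chord = math.hypot(r1 - r0, c1 - c0) + 1  # +1 so both end pixels are counted
    return min(1.0, chord / len(pixels))
```

Multiplying the contrast energy of a candidate by such a score would suppress jagged texture boundaries while leaving straight candidates almost unchanged.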

Figure 3.

Inherent curvature constancy. (a) Contrast energy of the contour candidates computed from low-level cues, shown as a gray image representing the contrast energy of each candidate; (b) fitted straight line of each candidate with the contrast energy weight; (c) inherent curvature constancy evaluation of each candidate; clutter textures tend to be jagged and have small evaluation values; (d) modulated weight after inherent curvature constancy evaluation; note that the clutter textures marked by red circles are visibly suppressed compared to (a).

According to the rule of continuity, a true contour candidate tends to be connected to its neighbors if they are aligned along a linear or co-circular path. In previous work [41,58,59], the co-circularity rule is encoded with the directions of a pixel and its neighbors and the angle of the line connecting these pixels. In our work, however, the angle of the connecting line is redundant and difficult to formulate, because continuation and proximity are already taken into account to some extent by our contour candidate generation method. Thus, we encode the continuation connection probability with the difference between the directions of the fitted straight lines of two contour candidates:

| (8) |

Here, the difference is taken between the directions of the two fitted lines. Note that as this difference increases, the two candidates tend toward perpendicular and have less influence on each other; that is, the continuation connection probability is small.
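The behavior described here (high probability for co-linear candidates, low for perpendicular ones) can be illustrated with a simple function of the direction difference. The absolute cosine used below is an assumed form chosen only because it has this behavior, and the function names are hypothetical.

```python
import math


def line_direction(p_start, p_end):
    """Undirected orientation, in [0, pi), of the line through two (row, col) points."""
    dy = p_end[0] - p_start[0]
    dx = p_end[1] - p_start[1]
    return math.atan2(dy, dx) % math.pi


def continuation_probability(theta_i, theta_j):
    """Assumed form: 1 for parallel fitted lines, 0 for perpendicular ones."""
    return abs(math.cos(theta_i - theta_j))
```

Because the orientation is undirected, a candidate traced from left to right and one traced from right to left are treated as having the same direction.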

For similarity, the connection probability should decrease as the contrast difference increases. Thus, we define the similarity connection probability between two contour candidates as follows:

| (9) |

where the standard deviation δ sets the sensitivity of the connection probability to the contrast difference. The contrast difference and the low-level feature difference are calculated using the following equation:

| (10) |
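Since δ is described as a standard deviation, a Gaussian fall-off in the contrast difference is a natural reading of the similarity term. The sketch below assumes that form and uses the Table 1 value δ = 1/16 as the default. The exact expression, and taking the contrast difference as the difference of two contrast energies, are assumptions.

```python
import math


def similarity_probability(energy_i, energy_j, delta=1.0 / 16.0):
    """Gaussian fall-off of connection probability with contrast difference (assumed form)."""
    diff = energy_i - energy_j
    return math.exp(-(diff ** 2) / (2.0 * delta ** 2))
```

With a small δ the probability drops quickly, so only candidates with nearly equal contrast energy support each other.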

3.2.3. Combination of Hierarchical Cues

Combining these elements, the weight of a candidate being a contour is defined as the combination of its contrast energy and the grouping constraint from its neighboring candidates:

| (11) |

where the neighborhood set contains the contour candidates that partially or totally fall on the discs of a given radius centered at the terminal points of the candidate. The radius should be set to a small value to establish the good continuity of the neighboring candidates adjacent to the candidate. In this work, the radius is a fixed number of pixels.

Then, the final output of each pixel of the image is the combination of the weights of each candidate computed from hierarchical cues over all scales:

| (12) |

where the per-scale weight indicates the relative contribution of each scale, and the inverse Gaussian pyramid operation transforms the contour candidates at each scale back to the original scale.
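The multi-scale combination can be sketched as a weighted sum of per-scale weight maps after each map is brought back to the original resolution. In the sketch below, nearest-neighbor up-sampling stands in for the inverse pyramid operation, and the integer down-sampling factors are supplied by the caller. Both are assumptions made for illustration.

```python
def upsample_nearest(weight_map, factor):
    """Repeat every value `factor` times along both axes."""
    out = []
    for row in weight_map:
        wide = [v for v in row for _ in range(factor)]
        for _ in range(factor):
            out.append(list(wide))
    return out


def combine_scales(scaled_maps):
    """Weighted sum of weight maps given as (factor, scale_weight, map) tuples.

    Every map is assumed to reach the same size after up-sampling by its factor.
    """
    total = None
    for factor, scale_weight, wmap in scaled_maps:
        up = upsample_nearest(wmap, factor)
        if total is None:
            total = [[0.0] * len(up[0]) for _ in up]
        for r, row in enumerate(up):
            for c, v in enumerate(row):
                total[r][c] += scale_weight * v
    return total
```

A contour that survives at every scale receives a contribution from each map, which is how continuity across scales raises its final weight.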

4. Tests and Results

In this section, we evaluate the performance of our model on the popular Berkeley Segmentation Dataset (BSDS300 and BSDS500) provided by Malik et al. [1,26]. This dataset has been frequently used as a benchmark for contour detection algorithms. Each image in the dataset was labeled by 5 to 10 human subjects. We first evaluated our superpixel-based contour candidate generation method; then, we further tested the performance of our algorithm and compared it to other state-of-the-art algorithms.

To compare the algorithms conveniently, we took the sum of weights of contour candidates over each pixel as the probability of being a true contour after an operation of non-maxima suppression [1,26]. We also computed the so-called F-measure [26],

| $F = \dfrac{2PR}{P + R}$ | (13) |

where P and R represent the precision and recall, respectively, which have been widely used to evaluate the performance of edge detectors [26]. Precision reflects the probability that the detected edge is valid, and recall denotes the probability that the ground truth edge is detected.
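For concreteness, the F-measure is the harmonic mean of precision and recall, and it can be computed from match counts as in the short sketch below. The function names and the count-based interface are illustrative and are not taken from the benchmark code.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Precision and recall from counts of matched and unmatched edge pixels."""
    detected = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)
```

Because it is a harmonic mean, the F-measure is high only when precision and recall are both high, which is why it is commonly reported as a single summary score.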

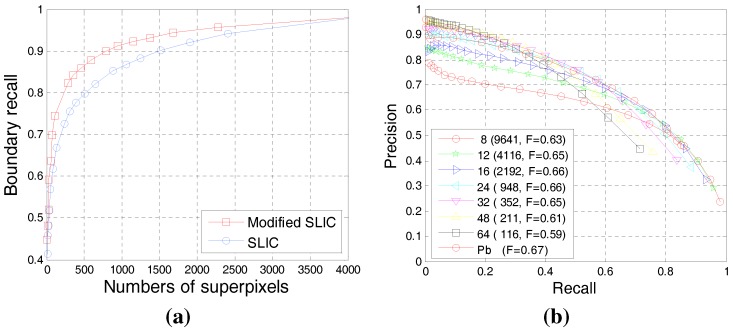

4.1. Evaluation of the Superpixel-Based Contour Candidate

A basic assumption of our method is that superpixel boundaries adhere well to the contours of meaningful objects. If this assumption is valid, the superpixel boundaries can be taken as contour candidates. Boundary recall is a standard measure of boundary adherence [66]; it measures the fraction of ground truth edges that fall within m pixels of a superpixel boundary (with m set according to [66]). A high boundary recall indicates that very few true edges are missed. The experiments in [66] examined how the boundary recall of the original SLIC changes with an increasing number of superpixels. We further evaluated our modified SLIC procedure on the BSDS dataset [1]. Figure 4a shows the boundary recall for different numbers of superpixels generated by the original SLIC and by our modified approach. The boundary recall increases with the number of superpixels, and our modified SLIC performs better than the original SLIC. When the number of superpixels reached 4000, the boundary recall was 0.98, which experimentally verifies the completeness of the candidate set produced by our generation method. Note that the boundary recall already reached 0.96 at 2000 superpixels; that is, doubling the number of superpixels brings only a 0.02 increase in boundary recall. To keep subsequent computation efficient without noticeably reducing the boundary recall, we set the number of superpixels to 2000. Correspondingly, we run SLIC with four sets of initial centers, each shifted by a different offset relative to the initial centers of the original method, and set the number of approximately equally-sized superpixels for each set accordingly (the initial grid interval S is approximately 16).
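Boundary recall as described above can be computed directly from two sets of pixel coordinates. The following sketch uses a square (Chebyshev) neighborhood of half-width `m`; the neighborhood shape and the default tolerance are assumptions made here for illustration.

```python
def boundary_recall(gt_edges, sp_boundaries, m=2):
    """Fraction of ground-truth edge pixels with a superpixel boundary pixel within m pixels.

    gt_edges, sp_boundaries: sets of (row, col) tuples.
    m: tolerance in pixels (the default is an assumed value).
    """
    if not gt_edges:
        return 1.0
    hits = 0
    for r, c in gt_edges:
        if any((r + dr, c + dc) in sp_boundaries
               for dr in range(-m, m + 1)
               for dc in range(-m, m + 1)):
            hits += 1
    return hits / len(gt_edges)
```

Taking the union of the boundaries from all four initializations as `sp_boundaries` corresponds to evaluating the whole candidate set rather than a single segmentation.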

Figure 4.

(a) Plot of the boundary recall with respect to the number of superpixels; (b) P-R curve comparison of our method under different initial grid intervals and Pb [26]; the values in parentheses in the legend are the number of superpixels corresponding to the initial grid interval and the F-score.

We further evaluated the performance of our superpixel-based candidate generation method on BSDS500. Here, we use only low-level cues (luminance and color) to weigh the candidates at the original scale. Figure 4b compares the precision-recall (P-R) curves of our method under different initial grid intervals with that of the previously proposed Pb method [26]. When the initial grid interval S was set to 16, our method yielded results close to those of Pb [26], which uses texture as well as luminance and color and requires a training process. In addition, a small initial interval performed better in the high-recall region, while a large initial interval performed better in the high-precision region. Therefore, multiscale strategies can be used to improve the effectiveness of our algorithm.

4.2. Experimental Evaluation of Our BICW Algorithm

The parameters involved in our model are summarized in Table 1. The parameters mainly come from three aspects: low-level cue computation, mid-level cue computation and multi-scale combination. We experimentally analyzed these parameters. To ensure the integrity of the evaluation, we only employed the training set (200 images) of the BSDS500 dataset [1] for parameter setting during the optimization phase and then benchmarked our method on the test set (200 images). We used the expectation maximization (EM) algorithm to tune these parameters. Our algorithm achieves an optimal performance ( on the training set of BSDS500 [1]) with the settings shown in Table 1. Figure 5 shows how the performance varies with the parameters of our model.

Table 1.

Parameters involved in our model.

| Parameter | Description | Equation | Setting |
|---|---|---|---|
| | Low-level: relative contribution of each color channel | (6) | 1, 0.5, 1 |
| δ | Mid-level: sensitivity of connection probability with contrast | (9) | 1/16 |
| | Scales: relative contribution of each scale | (12) | 1, 1, 0.5 |

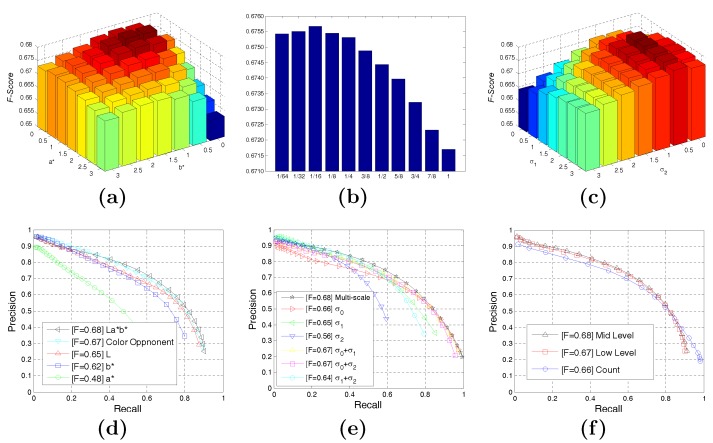

Figure 5.

Performance tests with the parameters in our model. (a) Performance with varying low-level cue parameters; (b) performance with varying mid-level cue parameters; (c) performance with varying relative contribution of each scale; (d) P-R curves of LAB-based and color opponent-based methods; (e) P-R curves of each scale; (f) P-R curves of the performance of each part in our algorithm.

For the parameters of low-level computation, as shown in Figure 5a, we set for normalization and discuss the performance with the changing of (seven values within [0 3]) and (seven values within [0 3]). The corresponding parameters of the optimal performance were and . Besides , the F-scores decrease with the increasing of at the same . The contribution of each color channel is also shown in Figure 5d. The channel exhibited the poorest performance, so that the contribution of was smaller than that of other channels. The information from channel is often missing, which leads to a sharp drop of the recall. Therefore, the relative contribution of channel is reasonably smaller than that of other channels. We also compared our LAB-based luminance and chromatic information extraction method with the color-opponent-based method proposed by [74]. We extracted the red-green (R-G), red-cyan (R-C), yellow-blue (B-Y) and white-black (Wh-Bl) color opponent channels instead of the LAB channels in the computation of low-level cues and performed the subsequent computations. The achieved performance was similar to the ones computed with LAB channels with marginal color values for and . This is mainly because CIELAB is also a kind of color-opponent space, with dimension L for lightness (luminance) and a and b for the color-opponent dimensions. In this work, we use CIELAB for simplicity.
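The sensitivity analysis above fixes one weight to 1 and sweeps the other two over seven values in [0, 3]. The sketch below illustrates such a sweep as a plain exhaustive grid search; it is not the EM tuning mentioned in Section 4.2. The even spacing of the seven values, the position of the fixed weight in the triple and the `toy_evaluate` objective are all assumptions made for illustration; in practice the objective would be the F-score of the detector on the training set.

```python
from itertools import product


def grid_search(evaluate, values):
    """Score every (w2, w3) pair with the first weight fixed to 1.

    evaluate: callable mapping a weight triple to a score (hypothetical).
    values:   candidate values for the two free weights.
    Returns (best_score, best_weights).
    """
    best = None
    for w2, w3 in product(values, repeat=2):
        weights = (1.0, w2, w3)
        score = evaluate(weights)
        if best is None or score > best[0]:
            best = (score, weights)
    return best


# Seven evenly spaced values in [0, 3]; the even spacing is an assumption.
values = [0.5 * i for i in range(7)]


def toy_evaluate(weights):
    # Stand-in objective peaking at (1, 0.5, 1); NOT the real benchmark.
    _, w2, w3 = weights
    return 0.6 - 0.05 * (w2 - 0.5) ** 2 - 0.05 * (w3 - 1.0) ** 2


score, weights = grid_search(toy_evaluate, values)
print(weights, round(score, 3))
```

Fixing one weight removes the redundant overall scale of the weighted combination, so only the ratios between the channels are searched.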

For the parameters of mid-level cues, the corresponding parameter of the optimal performance was , as shown in Figure 5b. For the parameters of scales, was set to achieve normalization, and we discuss the performance with the changes in (seven values within [0 3]) and (seven values within [0 3]). When and , we get an optimal performance. We also examined the performance of each scale and of the combination of scales, whose P-R curves are shown in Figure 5e. Most of the information was retained at the smallest scale of , and the details were mostly preserved, so the performance of is better for the measure of recall. Since only the main contours were retained at the scale (), the recall of this scale drops sharply. Scale performs better for precision, as both details and main contours are retained well. We used the weighted sum approach to aggregate the results of different scales. With this method, the main outlines that emerge at several scales receive a higher weight, leading to higher performance than that of any single scale.
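The weighted-sum aggregation of scales can be sketched as follows. The function name `combine_scales`, the nested-list map representation, the final rescaling to a maximum of 1 and the assignment of the weights (1, 1, 0.5) from Table 1 to particular scales are assumptions of this sketch rather than a reproduction of Equation (12).

```python
def combine_scales(maps, weights):
    """Weighted sum of per-scale contour maps, rescaled to a maximum of 1.

    maps:    list of equally sized 2-D lists, one contour-strength map per
             scale, already resampled to the original resolution.
    weights: one non-negative weight per scale.
    """
    if len(maps) != len(weights):
        raise ValueError("need one weight per scale")
    rows, cols = len(maps[0]), len(maps[0][0])
    combined = [[sum(w * m[r][c] for m, w in zip(maps, weights))
                 for c in range(cols)] for r in range(rows)]
    peak = max(max(row) for row in combined)
    if peak > 0:
        combined = [[v / peak for v in row] for row in combined]
    return combined


# Toy 1 x 3 maps: pixel 0 is a contour at every scale, pixels 1 and 2
# appear at one scale only.
scale_maps = [
    [[1.0, 1.0, 0.0]],
    [[1.0, 0.0, 0.0]],
    [[1.0, 0.0, 1.0]],
]
result = combine_scales(scale_maps, weights=[1.0, 1.0, 0.5])
print(result)
```

In the toy example the pixel that persists across all three scales ends up with the largest combined weight, which is the boosting effect described above.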

The performance of each part in our algorithm is shown in Figure 5f. The blue curve in Figure 5f shows the performance of our candidate-based contour detection framework without weighting by visual cues. In this case, the weights are just the occurrence of pixels in the contour candidate set, so the curve represents the performance of our modified superpixel-based contour candidate generation method alone. The red and black curves indicate the performance of our algorithm weighted by low-level and mid-level visual cues.
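The occurrence-based baseline weighting can be illustrated with a short sketch: each pixel is weighted by how often it appears as a superpixel boundary across the runs that build the candidate set. The function name, the set-of-coordinates representation and the division by the number of runs are assumptions of this sketch, and the four toy runs are hypothetical.

```python
from collections import Counter


def occurrence_weights(boundary_sets):
    """Weight each pixel by the fraction of runs in which it lies on a
    superpixel boundary."""
    counts = Counter()
    for boundary in boundary_sets:
        counts.update(set(boundary))  # each run counts a pixel at most once
    n_runs = len(boundary_sets)
    return {pixel: count / n_runs for pixel, count in counts.items()}


# Four hypothetical runs (one per initial-center offset) on a tiny image.
runs = [
    {(0, 1), (1, 1), (2, 1)},
    {(0, 1), (1, 1), (2, 2)},
    {(0, 1), (1, 2), (2, 2)},
    {(0, 1), (1, 1), (2, 1)},
]
weights = occurrence_weights(runs)
print(sorted(weights.items()))
```

Pixels that every run agrees on receive the maximum weight, while boundaries that depend on the initial grid offset are down-weighted even before any visual cue is applied.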

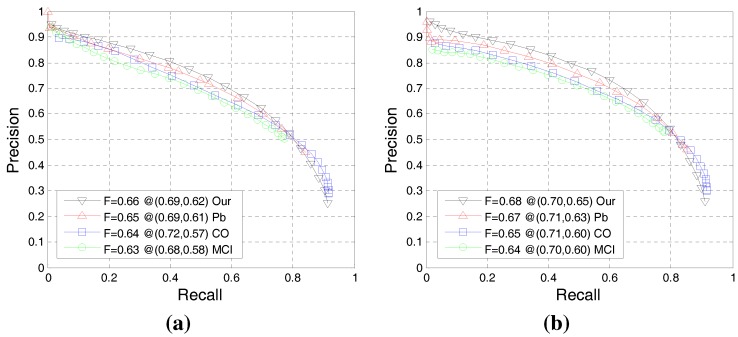

These parameter settings were then kept fixed across all scenes when the proposed method was compared with other models. Figure 6 illustrates the P-R curve-based comparison of our BICW with the typical learning method Pb [26] and two state-of-the-art biologically-inspired methods, CO [48] and MCI [55], on the datasets of BSDS300 [26] and BSDS500 [1]. Figure 6 reveals that the proposed model outperforms the Pb [26] method while employing less information (it does not use texture information). In addition, our model also outperforms CO [48] and MCI [55], which are state-of-the-art biologically-inspired models proposed within the last three years. Figure 7 presents qualitative comparisons on some images.

Figure 6.

(a) The overall performance of the P-R curve on BSDS300 [26]; (b) the overall performance of the P-R curve on BSDS500 [1].

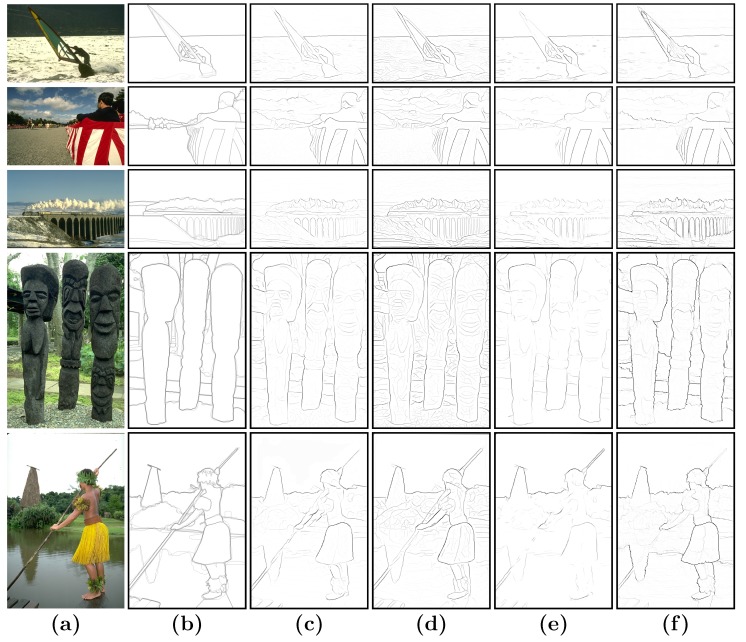

Figure 7.

(a) Image; (b) human; (c) Pb [26]; (d) CO [48]; (e) MCI [55]; (f) ours.

Table 2 lists further comparisons with other state-of-the-art biologically-inspired or non-learning methods on BSDS300 [26] and BSDS500 [1]. The state-of-the-art algorithm gPb [1] achieved a higher F-measure (F = 0.70) than our model. This method is particularly time consuming because of the complex texture calculations and globalization with spectral clustering. In the gPb [1] algorithm, the computation is conducted by placing a circular disc at each pixel, split into two half-discs by a diameter at a certain angle. The measure of the boundary strength is computed at each pixel (more than 150,000 pixels) with eight orientations. In our method, the contour candidates are reduced to 30,000. The mean time to compute one contour map with gPb [1] is 191 s, while our algorithm takes only 13 s (the computer used here is an Intel Core 2, 2.5 GHz with 8.0 GB RAM). Our method is a little slower than CO [48] and SCO [75]. Note that CO [48] and SCO [75] only operate at a single scale, because their extension into multi-scale space did not yet produce clear performance improvements [48,75]. The computation time of our method is promising for a multi-scale method. Therefore, this result indicates that our model yields a good trade-off between performance and complexity. In addition, our proposed method is very suitable for parallel computing, which is a future direction to optimize our model.

Table 2.

Quantitative comparison of various models on the BSDS300 [26] and BSDS500 [1] images with the F-score.

Another point worth noting is that the BSDS benchmark [1,26] has potential pitfalls, as pointed out in [76]. Psychophysical experiments in that work [76] showed that several “weak” boundary labels are unreliable and may contaminate the benchmark. The authors pointed out that the current benchmarking protocol encourages an algorithm to bias towards those problematic “weak” boundary labels. Although a consensus to define a new standard has yet to be obtained, new directions have been proposed to focus on the new problem of strong boundary detection. These boundaries are more likely to be meaningful contours. For example, as shown in Figure 3, cluttered edges of the ornaments on the legs were also labeled as boundaries by specific subjects. These “weak” boundaries are suppressed by our method. Figure 7 gives several typical examples, which clearly demonstrate that our algorithm is consistent with the strong boundaries of the consensus labels.

5. Conclusions

In this study, a BICW framework that uses superpixel-based candidates and hierarchical visual cues is proposed to detect meaningful contours. In contrast to previous models with pixel-based contour detection, a modified superpixel generation processing has been introduced to generate a contour candidate set. The candidates are then weighted by integrating the information from multiple biologically-inspired cues. We extract the low-level visual local cues on the basis of the contrast energy to weigh the contour intrinsic property. Gestalt principle-based mid-level visual cues, namely curvature consistency, continuation and similarity, are integrated to weigh the contour grouping constraint. The final output is generated by considering information at multiple scales.

The main contributions of this work can be summarized as follows. (1) A candidate weighting framework is designed for the task of contour detection. To our knowledge, this is the first attempt to build a contour candidate set of line segments rather than a candidate set of pixels using the superpixel-based method. Other visual features could be easily integrated into this unified framework in the future; (2) A model based on biologically-inspired cues is designed to weigh the candidates. Our model is distinguished mainly by how it uses and integrates the multiple visual cues for weighing the candidates; (3) The final output is generated by integrating the information at multiple scales. This strategy exploits the property that salient and meaningful contours are more likely to be retained at different scales. The weights of the meaningful contours are boosted when they are integrated from multiple scales; (4) The results show that our model achieves a good trade-off between performance and efficiency. The method is also very suitable for parallel computing, which is a future direction for optimization.

To summarize, we propose a biologically-inspired framework for contour detection using superpixel-based candidates and hierarchical visual cues. Experimental results based on the BSDS benchmark show that the proposed framework exhibits promising performances in terms of capturing meaningful contours in complex scenes.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (NSFC) projects under Grant No. 61273241, No. 61273279 and No. 61503288, and the China Postdoctoral Science Foundation under Grant No. 2015M570665.

Author Contributions

Xiao Sun, Ke Shang and Jiayi Ma have made substantial contributions to the algorithm design. Xiao Sun has made substantial contributions to the algorithm implementation and manuscript preparation. Ke Shang has made substantial contributions to the experimental data collection and the result analysis. Delie Ming, Jinwen Tian and Jiayi Ma revised the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Arbelaez P., Maire M., Fowlkes C., Malik J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011;33:898–916. doi: 10.1109/TPAMI.2010.161.

- 2. Malik J., Belongie S., Leung T., Shi J. Contour and texture analysis for image segmentation. Int. J. Comput. Vis. 2001;43:7–27. doi: 10.1023/A:1011174803800.

- 3. Ma J., Zhao J., Tian J., Yuille A.L., Tu Z. Robust point matching via vector field consensus. IEEE Trans. Image Process. 2014;23:1706–1721. doi: 10.1109/TIP.2014.2307478.

- 4. Shen W., Wang X., Wang Y., Bai X., Zhang Z. DeepContour: A Deep Convolutional Feature Learned by Positive-sharing Loss for Contour Detection; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Boston, MA, USA. 7–12 June 2015; pp. 3982–3991.

- 5. Bai X., Rao C., Wang X. A Robust and Efficient Shape Representation for Shape Matching. IEEE Trans. Image Process. 2014;23:3935–3949. doi: 10.1109/TIP.2014.2336542.

- 6. Ma J., Qiu W., Zhao J., Ma Y., Yuille A.L., Tu Z. Robust L2E estimation of transformation for non-rigid registration. IEEE Trans. Signal Process. 2015;63:1115–1129. doi: 10.1109/TSP.2014.2388434.

- 7. Papari G., Petkov N. Edge and line oriented contour detection: State of the art. Image Vis. Comput. 2011;29:79–103. doi: 10.1016/j.imavis.2010.08.009.

- 8. Tian Y., Guan T., Wang C. Real-time occlusion handling in augmented reality based on an object tracking approach. Sensors. 2010;10:2885–2900. doi: 10.3390/s100402885.

- 9. Opelt A., Pinz A., Zisserman A. Computer Vision–ECCV 2006. Springer; Berlin, Germany: 2006. A boundary-fragment-model for object detection; pp. 575–588.

- 10. Ma J., Zhou H., Zhao J., Gao Y., Jiang J., Tian J. Robust feature matching for remote sensing image registration via locally linear transforming. IEEE Trans. Geosci. Remote Sens. 2015;53:6469–6481. doi: 10.1109/TGRS.2015.2441954.

- 11. García-Garrido M.A., Ocana M., Llorca D.F., Arroyo E., Pozuelo J., Gavilán M. Complete vision-based traffic sign recognition supported by an I2V communication system. Sensors. 2012;12:1148–1169. doi: 10.3390/s120201148.

- 12. Borza D., Darabant A.S., Danescu R. Eyeglasses lens contour extraction from facial images using an efficient shape description. Sensors. 2013;13:13638–13658. doi: 10.3390/s131013638.

- 13. Ma J., Zhao J., Ma Y., Tian J. Non-rigid visible and infrared face registration via regularized Gaussian fields criterion. Pattern Recognit. 2015;48:772–784. doi: 10.1016/j.patcog.2014.09.005.

- 14. Jiang J., Hu R., Han Z., Lu T. Efficient single image super-resolution via graph-constrained least squares regression. Multimed. Tools Appl. 2014;72:2573–2596. doi: 10.1007/s11042-013-1567-9.

- 15. Jiang J., Hu R., Wang Z., Han Z. Noise robust face hallucination via locality-constrained representation. IEEE Trans. Multimed. 2014;16:1268–1281. doi: 10.1109/TMM.2014.2311320.

- 16. Jiang J., Hu R., Wang Z., Han Z. Face Super-Resolution via Multilayer Locality-Constrained Iterative Neighbor Embedding and Intermediate Dictionary Learning. IEEE Trans. Image Process. 2014;23:4220–4231. doi: 10.1109/TIP.2014.2347201.

- 17. Barron J., Malik J. Shape, illumination, and reflectance from shading. IEEE Trans. Pattern Anal. Mach. Intell. 2013;37:1670–1687. doi: 10.1109/TPAMI.2014.2377712.

- 18. Duda R.O., Hart P.E. Pattern Classification and Scene Analysis. Volume 3. Wiley; New York, NY, USA: 1973.

- 19. Roberts L.G. Ph.D. Thesis. Massachusetts Institute of Technology; Cambridge, MA, USA: 1963. Machine Perception of Three-Dimensional Solids.

- 20. Prewitt J.M. Object enhancement and extraction. Pict. Process. Psychopictorics. 1970;10:15–19.

- 21. Canny J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986;PAMI-8:679–698. doi: 10.1109/TPAMI.1986.4767851.

- 22. Kim J.H., Park B.Y., Akram F., Hong B.W., Choi K.N. Multipass active contours for an adaptive contour map. Sensors. 2013;13:3724–3738. doi: 10.3390/s130303724.

- 23. Kass M., Witkin A., Terzopoulos D. Snakes: Active contour models. Int. J. Comput. Vis. 1988;1:321–331. doi: 10.1007/BF00133570.

- 24. Caselles V., Kimmel R., Sapiro G. Geodesic active contours. Int. J. Comput. Vis. 1997;22:61–79. doi: 10.1023/A:1007979827043.

- 25. Lu X., Song L., Shen S., He K., Yu S., Ling N. Parallel Hough Transform-based straight line detection and its FPGA implementation in embedded vision. Sensors. 2013;13:9223–9247. doi: 10.3390/s130709223.

- 26. Martin D.R., Fowlkes C.C., Malik J. Learning to detect natural image boundaries using local brightness, color, and texture cues. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:530–549. doi: 10.1109/TPAMI.2004.1273918.

- 27. Konishi S., Yuille A.L., Coughlan J.M., Zhu S.C. Statistical edge detection: Learning and evaluating edge cues. IEEE Trans. Pattern Anal. Mach. Intell. 2003;25:57–74. doi: 10.1109/TPAMI.2003.1159946.

- 28. Lim J.J., Zitnick C.L., Dollár P. Sketch tokens: A learned mid-level representation for contour and object detection; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Portland, OR, USA. 23–28 June 2013; pp. 3158–3165.

- 29. Dollár P., Zitnick C.L. Structured forests for fast edge detection; Proceedings of the IEEE International Conference on Computer Vision (ICCV); Sydney, Australia. 1–8 December 2013; pp. 1841–1848.

- 30. Ren X., Bo L. Advances in Neural Information Processing Systems. MIT Press; Cambridge, MA, USA: 2012. Discriminatively trained sparse code gradients for contour detection; pp. 584–592.

- 31. Zhao J., Ma J., Tian J., Ma J., Zheng S. Boundary extraction using supervised edgelet classification. Opt. Eng. 2012;51. doi: 10.1117/1.OE.51.1.017002.

- 32. Wei H., Lang B., Zuo Q. Contour detection model with multi-scale integration based on non-classical receptive field. Neurocomputing. 2013;103:247–262. doi: 10.1016/j.neucom.2012.09.027.

- 33. Stettler D.D., Das A., Bennett J., Gilbert C.D. Lateral connectivity and contextual interactions in macaque primary visual cortex. Neuron. 2002;36:739–750. doi: 10.1016/S0896-6273(02)01029-2.

- 34. Bosking W.H., Zhang Y., Schofield B., Fitzpatrick D. Orientation selectivity and the arrangement of horizontal connections in tree shrew striate cortex. J. Neurosci. 1997;17:2112–2127. doi: 10.1523/JNEUROSCI.17-06-02112.1997.

- 35. Knierim J.J., van Essen D.C. Neuronal responses to static texture patterns in area V1 of the alert macaque monkey. J. Neurophysiol. 1992;67:961–980. doi: 10.1152/jn.1992.67.4.961.

- 36. Stemmler M., Usher M., Niebur E. Lateral interactions in primary visual cortex: A model bridging physiology and psychophysics. Science. 1995;269:1877–1880. doi: 10.1126/science.7569930.

- 37. Hess R.F., May K.A., Dumoulin S.O. Oxford Handbook of Perceptual Organization. Oxford University Press; Oxford, UK: 2013. Contour integration: Psychophysical, neurophysiological and computational perspectives.

- 38. Grigorescu C., Petkov N., Westenberg M. Contour detection based on nonclassical receptive field inhibition. IEEE Trans. Image Process. 2003;12:729–739. doi: 10.1109/TIP.2003.814250.

- 39. Petkov N., Westenberg M.A. Suppression of contour perception by band-limited noise and its relation to nonclassical receptive field inhibition. Biol. Cybern. 2003;88:236–246. doi: 10.1007/s00422-002-0378-2.

- 40. Papari G., Campisi P., Petkov N., Neri A. A biologically motivated multiresolution approach to contour detection. EURASIP J. Appl. Signal Process. 2007;2007. doi: 10.1155/2007/71828.

- 41. Tang Q., Sang N., Zhang T. Extraction of salient contours from cluttered scenes. Pattern Recognit. 2007;40:3100–3109. doi: 10.1016/j.patcog.2007.02.009.

- 42. Tang Q., Sang N., Zhang T. Contour detection based on contextual influences. Image Vis. Comput. 2007;25:1282–1290. doi: 10.1016/j.imavis.2006.08.007.

- 43. Long L., Li Y. Contour detection based on the property of orientation selective inhibition of non-classical receptive field; Proceedings of the IEEE Conference on Cybernetics and Intelligent Systems; Chengdu, China. 21–24 September 2008; pp. 1002–1006.

- 44. La Cara G.E., Ursino M. A model of contour extraction including multiple scales, flexible inhibition and attention. Neural Netw. 2008;21:759–773. doi: 10.1016/j.neunet.2007.11.003.

- 45. Li Z. A neural model of contour integration in the primary visual cortex. Neural Comput. 1998;10:903–940. doi: 10.1162/089976698300017557.

- 46. Ursino M., La Cara G.E. A model of contextual interactions and contour detection in primary visual cortex. Neural Netw. 2004;17:719–735. doi: 10.1016/j.neunet.2004.03.007.

- 47. Zeng C., Li Y., Li C. Center–surround interaction with adaptive inhibition: A computational model for contour detection. NeuroImage. 2011;55:49–66. doi: 10.1016/j.neuroimage.2010.11.067.

- 48. Yang K., Gao S., Li C., Li Y. Efficient color boundary detection with color-opponent mechanisms; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Portland, OR, USA. 23–28 June 2013; pp. 2810–2817.

- 49. Li Y., Hou X., Koch C., Rehg J.M., Yuille A.L. The secrets of salient object segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA. 23–28 June 2014; pp. 280–287.

- 50. Cheng M.M., Zhang Z., Lin W.Y., Torr P. BING: Binarized normed gradients for objectness estimation at 300 fps; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA. 23–28 June 2014; pp. 3286–3293.

- 51. Ren X. Computer Vision–ECCV 2008. Springer; Berlin, Germany: 2008. Multi-scale improves boundary detection in natural images; pp. 533–545.

- 52. Dollar P., Tu Z., Belongie S. Supervised learning of edges and object boundaries. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2006;2:1964–1971.

- 53. Felzenszwalb P., McAllester D. A min-cover approach for finding salient curves; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshop; New York, NY, USA. 17–22 June 2006.

- 54. Isola P., Zoran D., Krishnan D., Adelson E.H. Computer Vision–ECCV 2014. Springer; Berlin, Germany: 2014. Crisp boundary detection using pointwise mutual information; pp. 799–814.

- 55. Yang K.F., Li C.Y., Li Y.J. Multifeature-based surround inhibition improves contour detection in natural images. IEEE Trans. Image Process. 2014;23:5020–5032. doi: 10.1109/TIP.2014.2361210.

- 56. Xiao J., Cai C. Contour detection based on horizontal interactions in primary visual cortex. Electron. Lett. 2014;50:359–361. doi: 10.1049/el.2013.3657.

- 57. Papari G., Petkov N. An improved model for surround suppression by steerable filters and multilevel inhibition with application to contour detection. Pattern Recognit. 2011;44:1999–2007. doi: 10.1016/j.patcog.2010.08.013.

- 58. Elder J.H., Goldberg R.M. Ecological statistics of Gestalt laws for the perceptual organization of contours. J. Vis. 2002;2. doi: 10.1167/2.4.5.

- 59. Geisler W., Perry J., Super B., Gallogly D. Edge co-occurrence in natural images predicts contour grouping performance. Vis. Res. 2001;41:711–724. doi: 10.1016/S0042-6989(00)00277-7.

- 60. Ming Y., Li H., He X. Connected contours: A new contour completion model that respects the closure effect; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Providence, RI, USA. 16–21 June 2012; pp. 829–836.

- 61. Han J., Yue J., Zhang Y., Bai L.F. Salient contour extraction from complex natural scene in night vision image. Infrared Phys. Technol. 2014;63:165–177. doi: 10.1016/j.infrared.2013.12.021.

- 62. Shi J., Malik J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22:888–905.

- 63. Felzenszwalb P.F., Huttenlocher D.P. Efficient graph-based image segmentation. Int. J. Comput. Vis. 2004;59:167–181. doi: 10.1023/B:VISI.0000022288.19776.77.

- 64. Moore A.P., Prince J., Warrell J., Mohammed U., Jones G. Superpixel lattices; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Anchorage, AK, USA. 23–28 June 2008; pp. 1–8.

- 65. Veksler O., Boykov Y., Mehrani P. Computer Vision–ECCV 2010. Springer; Berlin, Germany: 2010. Superpixels and supervoxels in an energy optimization framework; pp. 211–224.

- 66. Achanta R., Shaji A., Smith K., Lucchi A., Fua P., Susstrunk S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012;34:2274–2282. doi: 10.1109/TPAMI.2012.120.

- 67. Avidan S., Shamir A. Seam carving for content-aware image resizing. ACM Trans. Gr. 2007;26. doi: 10.1145/1276377.1276390.

- 68. Comaniciu D., Meer P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002;24:603–619.

- 69. Vedaldi A., Soatto S. Computer Vision–ECCV 2008. Springer; Berlin, Germany: 2008. Quick shift and kernel methods for mode seeking; pp. 705–718.

- 70. Vincent L., Soille P. Watersheds in digital spaces: An efficient algorithm based on immersion simulations. IEEE Trans. Pattern Anal. Mach. Intell. 1991;6:583–598. doi: 10.1109/34.87344.

- 71. Levinshtein A., Stere A., Kutulakos K.N., Fleet D.J., Dickinson S.J., Siddiqi K. Turbopixels: Fast superpixels using geometric flows. IEEE Trans. Pattern Anal. Mach. Intell. 2009;31:2290–2297. doi: 10.1109/TPAMI.2009.96.

- 72. Carreira J., Sminchisescu C. Constrained parametric min-cuts for automatic object segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); San Francisco, CA, USA. 13–18 June 2010; pp. 3241–3248.

- 73. Palmer S.E. Vision Science: Photons to Phenomenology. Volume 1. MIT Press; Cambridge, MA, USA: 1999.

- 74. Zhang J., Barhomi Y., Serre T. Computer Vision–ECCV 2012. Springer; Berlin, Germany: 2012. A new biologically inspired color image descriptor; pp. 312–324.

- 75. Yang K.F., Gao S., Guo C., Li C., Li Y. Boundary detection using double-opponency and spatial sparseness constraint. IEEE Trans. Image Process. 2015;24:2565–2578. doi: 10.1109/TIP.2015.2425538.

- 76. Hou X., Koch C., Yuille A. Boundary detection benchmarking: Beyond f-measures; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Portland, OR, USA. 23–28 June 2013; pp. 2123–2130.