Abstract

The human brain encodes experience in an integrative fashion by binding together the various features of an event (i.e., stimuli and responses) into memory “event files.” A subsequent reoccurrence of an event feature can then cue the retrieval of the memory file to “prime” cognition and action. Intriguingly, recent behavioral studies indicate that, in addition to linking concrete stimulus and response features, event coding may also incorporate more abstract, “internal” event features such as attentional control states. In the present study, we used fMRI in healthy human volunteers to determine the neural mechanisms supporting this type of holistic event binding. Specifically, we combined fMRI with a task protocol that dissociated the expression of event feature-binding effects pertaining to concrete stimulus and response features, stimulus categories, and attentional control demands. Using multivariate neural pattern classification, we show that the hippocampus and putamen integrate event attributes across all of these levels in conjunction with other regions representing concrete-feature-selective (primarily visual cortex), category-selective (posterior frontal cortex), and control demand-selective (insula, caudate, anterior cingulate, and parietal cortex) event information. Together, these results suggest that the hippocampus and putamen are involved in binding together holistic event memories that link physical stimulus and response characteristics with internal representations of stimulus categories and attentional control states. These bindings then presumably afford shortcuts to adaptive information processing and response selection in the face of recurring events.

SIGNIFICANCE STATEMENT Memory binds together the different features of our experience, such as an observed stimulus and concurrent motor responses, into so-called event files. Recent behavioral studies suggest that the observer's internal attentional state might also become integrated into the event memory. Here, we used fMRI to determine the brain areas responsible for binding together event information pertaining to concrete stimulus and response features, stimulus categories, and internal attentional control states. We found that neural signals in the hippocampus and putamen contained information about all of these event attributes and could predict behavioral priming effects stemming from these features. Therefore, medial temporal lobe and dorsal striatum structures appear to be involved in binding internal control states to event memories.

Introduction

Human cognition is strongly conditioned by recent experience—we are better at perceiving, and faster to respond to, stimuli that resemble recent observations than those that do not (Pashler and Baylis, 1991; Cheadle et al., 2014; Fischer and Whitney, 2014). These “priming” effects likely represent adaptations to an environment of high temporal autocorrelation in which our experience at one moment strongly predicts stimulation at the next moment (Dong and Atick, 1995). Research into such short-term sequential dependencies in behavior suggests that the cognitive apparatus binds together the various features of our experience, integrating different physical attributes of stimuli (Treisman and Gelade, 1980), as well as actions performed in response to those stimuli, into memory “event files” (Hommel, 1998, 2004). A proximate reoccurrence of one or more of these event features then appears to activate (or prompt the retrieval of) the prior event memory, presumably to serve as a potential shortcut for fast, appropriate responses to recurring stimuli or events (Logan, 1988).

Interestingly, recent work suggests that this type of mnemonic event coding may extend beyond the binding of concrete, observable stimulus and response characteristics to also include more abstract features such as stimulus categories (Goschke and Bolte, 2007) and, most notably, concurrent internal states such as attentional control settings (for review, see Egner, 2014). Therefore, a physical event feature such as stimulus location or color can become associated with an internal cognitive state such as a particular task set (Waszak et al., 2003; Crump and Logan, 2010) or level of attentional selectivity (Crump et al., 2006; Spapé and Hommel, 2008; Crump and Milliken, 2009; Heinemann et al., 2009; Bugg et al., 2011; King et al., 2012) such that future presentation of the feature in question can come to prime the retrieval of the associated attentional set (Verguts and Notebaert, 2009). For example, the sight of a particular intersection on your daily commute could come to trigger the retrieval of a heightened attentional focus that you previously had to engage when navigating that junction.

However, whereas neural concomitants of basic stimulus–response event binding have been investigated previously (Keizer et al., 2008; Kühn et al., 2011), the neural mechanisms underlying the integration of concrete, observable event characteristics such as specific stimuli and responses with more abstract (generalizable), internal event features such as control states are presently not well understood. It has been speculated that this process might be supported by the hippocampus, which could rapidly integrate diverse event features through interactions with other brain regions that selectively encode concrete, categorical, and control demand aspects of events along a posterior-to-anterior anatomical gradient in which the bindings of more abstract features occur in more anterior regions (Egner, 2014). This proposal, however, remains untested.

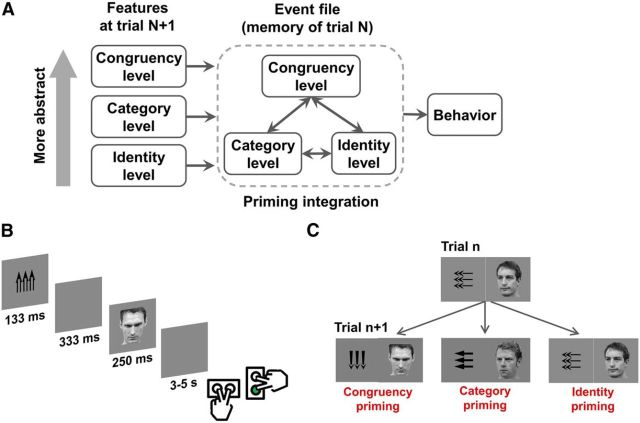

Here, we sought to elucidate how concrete and abstract event features are integrated by exploiting the well-known congruency sequence effect (CSE) (Gratton et al., 1992), which, depending on the specifics of the experimental design, can be driven by (mis-)matching stimulus and response features and/or (mis-)matching attentional states over successive trials (for reviews, see Egner, 2007; Duthoo et al., 2014). Specifically, we combined fMRI with a novel CSE protocol that could dissociate the expression of priming effects from event information pertaining to concrete stimulus and response features, stimulus categories, and attentional control demands (Fig. 1). This paradigm, combined with searchlight multivoxel pattern analysis (MVPA) (Kriegeskorte et al., 2006) and multivariate brain–behavior correlation analyses, allowed us to characterize commonalities and differences in the neural substrates of event integration as a function of event features at levels of abstraction ranging from physical stimulus characteristics to internal control states.

Figure 1.

Event-file-priming framework and task design. A, Schematic illustration of the event file memory-matching framework in the context of the current task. Physical, categorical, and internal (attentional state) event features are bound together into an event file (trial N). In trial N + 1, any features matching the previous trial event file will cue the retrieval of that file; the exact feature matching relationship (e.g., partial match vs complete match) will determine whether this process speeds or slows responses. B, Time course of an example incongruent trial and response mapping (correct response is in green). C, Example trial sequence conditions: congruency-priming sequence (left): a congruent trial is followed by another congruent trial in the absence of any concrete stimulus feature repetitions, representing a match of features at the most abstract (congruency or control state) feature level; category-priming sequence (middle): a trial with left-oriented arrows and face is followed by another trial with left-oriented arrows and face, but of differing identities, representing a repetition at the category (direction) level; identity-priming sequence (right): two physically identical target-distracter ensembles appear successively, representing a complete repetition at the most concrete, identity feature level.

Materials and Methods

Subjects.

Twenty-nine right-handed volunteers gave informed consent in accordance with institutional guidelines. All subjects had normal or corrected-to-normal vision. Four subjects were excluded from further analysis (two stopped responding during the task and two had excessive head movement during scanning). The final sample consisted of 25 subjects (14 females, 22–37 years old, mean age = 28 years).

Apparatus and stimuli.

Stimulus delivery and behavioral data collection were performed using Presentation software (http://www.neurobs.com/). Stimuli consisted of eight gray-level photographs of unique male faces and eight unique arrow ensembles. The face stimuli were adopted from Weidenbacher et al. (2007). The arrows appeared in a stack of three to cover approximately the same area as the faces (subtending ∼3° of horizontal and 4° of vertical visual angle). The faces/arrows faced/pointed up, down, left, or right (two unique face and arrow identities for each direction). The stimuli were presented against a gray background in the center of a back projection screen, which was viewed via a mirror attached to the scanner head coil.

Procedure.

A trial started with the presentation of a stationary arrow stack for 133 ms, followed by a blank screen for 333 ms and then a face for 250 ms (see Fig. 1B). The trials were separated by jittered intertrial intervals (ITIs) ranging from 3 to 5 s in uniformly distributed steps of 500 ms, during which a blank screen was shown. Subjects performed a speeded button response to the direction of the face stimulus (target) while trying to ignore the task-irrelevant arrows (distracter). The up, down, left, and right responses were made with the right middle finger, right index finger, left middle finger, and left index finger, respectively. Responses were collected using two MRI-compatible button boxes (one for each hand) placed perpendicular to each other to form an intuitive response mapping (see Fig. 1B). Subjects completed a practice run before entering the MRI scanner to ensure that they comprehended the task requirements. The task consisted of 10 runs, each of which included 65 trials presented in a pseudorandomized order.
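To make the trial timing concrete, the following Python sketch generates trial onsets for one run from the durations given above. It assumes that the ITI is counted from face offset to the next arrow onset, that ITIs are drawn independently and uniformly from the five permitted values, and that the run starts at t = 0; the function name `run_onsets` is hypothetical, and this is not the Presentation script used in the study.

```python
import random

# Stimulus durations from the Procedure (ms)
ARROW_MS, BLANK_MS, FACE_MS = 133, 333, 250
# Jittered ITIs: 3-5 s in 500-ms steps
ITI_CHOICES_S = (3.0, 3.5, 4.0, 4.5, 5.0)


def run_onsets(n_trials=65, seed=0):
    """Return trial (arrow) onset times in seconds for one hypothetical run.

    Assumes each ITI starts at face offset and is sampled uniformly from
    ITI_CHOICES_S; pseudorandomization of trial types is not modeled.
    """
    rng = random.Random(seed)
    trial_s = (ARROW_MS + BLANK_MS + FACE_MS) / 1000.0  # 0.716 s of stimulation
    onsets, t = [], 0.0
    for _ in range(n_trials):
        onsets.append(round(t, 3))
        t += trial_s + rng.choice(ITI_CHOICES_S)
    return onsets


onsets = run_onsets()
```

Fixing the seed makes a schedule reproducible; in practice, the trial-type order and the ITI sequence would be generated together so that the sequence constraints described below hold.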

Defining different levels of event feature priming in the CSE.

In our task, a trial could be either congruent or incongruent depending on whether the target face and distracter arrows pointed in the same or opposite directions. We expected responses to incongruent trials to be slower and more error prone than those to congruent trials, reflecting the classic congruency effect (e.g., MacLeod, 1991). In addition, this type of task produces a reliable CSE in which the congruency effect tends to be reduced on trials that follow an incongruent trial compared with those that follow a congruent trial (Gratton et al., 1992; for a review, see Egner, 2007) and, importantly, this effect can involve the expression of feature learning (or priming) at different levels of abstraction (Duthoo et al., 2014; for review, see Egner, 2014). Note that we use the term “priming” here as a convenient shorthand for any effects of recent experience without theoretical commitment to the implicit nature of these effects.

First, based on the idea that stimulus and response features get bound together into event files, the CSE can reflect the degree of overlap between the physical stimulus and response attributes of the current and previous trials (Mayr et al., 2003; Hommel, 2004). In the context of the present task, a complete overlap at this level corresponds to a repetition of the exact face and arrow stimuli over two consecutive trials. Complete repetitions and complete changes of stimulus and response features across consecutive trials lead to relatively fast responses, whereas “partial repetitions,” in which some trial features (e.g., the distracter) repeat while others (e.g., the target and response) change, slow responses. This slowing occurs because the repeated feature cues the retrieval of the other associated event features from the previous trial, which are, however, unhelpful as a shortcut to the correct response. By contrast, in complete repetition trials, the primed event file is an appropriate shortcut and, in complete change trials, the previous trial event is not cued in the first place, thus not incurring the “unbinding cost” of a partial memory match (Hommel, 2004).

The reason that this priming of concrete event features can produce the CSE is illustrated by the following example: consider a typical congruency task with a small stimulus set of two targets (T1, T2) and two distracters (D1, D2), with the combinations T1D1 and T2D2 representing congruent stimuli and T1D2 and T2D1 representing incongruent stimuli. As noted above, complete repetitions (e.g., from T1D1 to T1D1 or T1D2 to T1D2) and complete changes (e.g., from T1D1 to T2D2 or T1D2 to T2D1) of stimulus features across trials will result in relatively fast responses, but these trials also happen to always be associated with a repetition of congruency. In other words, when the four stimuli are equiprobable, successive congruent trials (cC trials) and successive incongruent trials (iI trials) consist of 50% complete repetitions and 50% complete changes. Conversely, slow partial repetition trials (e.g., from T1D1 to T1D2 or from T1D2 to T2D2) will always be associated with changes in congruency (cI and iC trials). Given this scenario, concrete event feature priming renders cC and iI trials faster than cI and iC trials, which results in the characteristic CSE pattern of smaller mean congruency effects after an incongruent trial than after a congruent trial (Mayr et al., 2003; Hommel, 2004).
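The counting argument above can be checked by brute force. This Python sketch uses the hypothetical labels T1, T2, D1, and D2 from the example, assumes all four stimuli are equiprobable, enumerates every trial-to-trial transition, and tallies each transition's congruency sequence against its repetition type.

```python
from collections import Counter
from itertools import product

TARGETS = ("T1", "T2")
DISTRACTERS = ("D1", "D2")
CONGRUENT = {("T1", "D1"), ("T2", "D2")}  # T1D1 and T2D2 are congruent


def label(prev, curr):
    """Return (congruency sequence, repetition type) for a trial pair."""
    seq = ("c" if prev in CONGRUENT else "i") + ("C" if curr in CONGRUENT else "I")
    n_same = (prev[0] == curr[0]) + (prev[1] == curr[1])  # repeated features
    kind = {2: "complete repetition", 0: "complete change", 1: "partial repetition"}[n_same]
    return seq, kind


stimuli = list(product(TARGETS, DISTRACTERS))
# All 16 equiprobable transitions between the four stimuli
tally = Counter(label(p, c) for p, c in product(stimuli, repeat=2))
for (seq, kind), n in sorted(tally.items()):
    print(seq, kind, n)
```

The tally shows that cC and iI transitions split evenly between complete repetitions and complete changes, whereas every cI and iC transition is a partial repetition, which is exactly the confound that lets feature binding mimic a CSE.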

Second, it has been suggested that the same mechanisms operate at the level of stimulus categories (Schmidt, 2013), in which target and distracter stimulus categories can become associated with specific responses and are therefore subject to benefits under complete repetitions (or changes) and costs under partial repetitions (Goschke and Bolte, 2007; but see Hommel and Müsseler, 2006). In the present task, this would correspond, for example, to deriving a benefit from the direction (e.g., left), but not the physical identities, of arrows and faces being repeated across trials.

Third, crucially, it has also been demonstrated that the CSE can be an expression of trial-to-trial matching of internal control states rather than particular stimulus–response features. Specifically, one can design a congruency task in a manner that avoids any concrete event feature repetitions across trials but still obtain a robust CSE (Kunde and Wühr, 2006; Schmidt and Weissman, 2014; Weissman et al., 2014). In this scenario, the behavioral benefit derived from congruency repetitions presumably occurs due to a learning process at the level of attentional or control state. Specifically, an incongruent trial requires the resolution of conflict created by the incongruent distracter and is thus thought to engage control processes such as the enhancement of attentional focus on the target stimulus (Egner and Hirsch, 2005) and/or the suppression of the response that is cued by the distracter (Ridderinkhof, 2002). Recruitment of these control states is thought to prime the same kind of control settings for the subsequent trial, thus leading to a relatively suppressed influence of distracters on performance (i.e., a smaller congruency effect) after an incongruent compared with a congruent trial (Gratton et al., 1992; Botvinick et al., 2001).

Dissociating different levels of event feature priming in the present task.

The basic premise behind our task design is that all event features—physical, categorical, and attentional—are bound together on each trial (see Fig. 1A), but their respective impact on processing during the subsequent trial (i.e., their “expression”) is determined by the degree of overlap (i.e., match/mismatch) of concrete, categorical, and control demand features across the two trials. Note that we focus on first-order trial sequences, but not because we believe that event-binding effects only occur at short latencies. On the contrary, we assume that mnemonic event feature matching effects can occur cumulatively and over much longer periods of time (Logan, 1988). However, consistent with much of the previous empirical feature integration literature (Hommel, 1998, 2004), we also assume that the most recently encoded event file (of the previous trial) will be the most accessible and influential in affecting feature matching on the current trial. Moreover, the present design isolates these short-term effects of event feature binding from possible contamination by longer-term effects by carefully controlling for any additional predictive associations between event features, as described below. The task was therefore designed to produce three types of distinct first-order priming conditions in which the expression of event feature priming varied systematically as a function of feature level. To this end, we used three first-order sequence conditions (Fig. 1C): (1) the identities and orientation of face and arrow stimuli switched to another axis (e.g., from vertical to horizontal) from the previous trial (congruency–priming trial); (2) the axis of direction remained the same as in the previous trial (i.e., the direction was either the same or reversed), but both the identities of the face target and arrow distracter changed from the previous trial (category-priming trial); and (3) the identity of the face and/or arrows may repeat from the previous trial (identity-priming trial). Note that the label attached to each sequence type indicates the most concrete (rather than the only) level of trial feature in which matching between the previous and current trial could mediate behavior. In the congruency-priming condition, the CSE can only be due to congruency-level priming because both the directions and identities of the stimuli changed. In the category-priming condition, the CSE can be modulated by congruency- and category-level priming because identities of the face and arrows changed but the category (axis) remained the same. Finally, in the identity-priming condition, all three levels of priming can mediate the CSE. Because the CSE in each sequence condition is attributable to a unique combination of the three levels of priming, these three levels can be dissociated in our design. The numbers of congruency-priming, category-priming, and identity-priming trials in each scan run were 32, 16, and 16, respectively (excluding the first trial of the run due to the lack of a previous trial). The cC and iI trials in both the category-priming and identity-priming conditions also included eight complete change trials (i.e., the directions of both the face and the arrows were reversed) to balance the proportion of congruency for the stimuli (see below).
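As an illustration of how a trial pair maps onto these feature levels, the Python sketch below reports the congruency sequence, whether the direction axis switched, and the repetition type at the category (direction) and identity levels. The face and arrow identity labels (e.g., "F1", "A1") are hypothetical. The sketch describes feature matches rather than assigning the sequence condition, because in the actual design that assignment was fixed by the trial sequence (e.g., complete-change cC and iI trials were included in both the category-priming and identity-priming conditions).

```python
from typing import NamedTuple

AXIS = {"up": "vertical", "down": "vertical", "left": "horizontal", "right": "horizontal"}


class Trial(NamedTuple):
    face_dir: str   # target direction: 'up', 'down', 'left', or 'right'
    face_id: str    # hypothetical face identity label
    arrow_dir: str  # distracter direction
    arrow_id: str   # hypothetical arrow-ensemble identity label


def is_congruent(t):
    return t.face_dir == t.arrow_dir


def repetition_type(prev, curr):
    """Classify a (target, distracter) feature pair across two trials."""
    same = [a == b for a, b in zip(prev, curr)]
    if all(same):
        return "complete repetition"
    if not any(same):
        return "complete change"
    return "partial repetition"


def describe(prev, curr):
    return {
        "sequence": ("c" if is_congruent(prev) else "i") + ("C" if is_congruent(curr) else "I"),
        "axis_switch": AXIS[prev.face_dir] != AXIS[curr.face_dir],
        "category": repetition_type((prev.face_dir, prev.arrow_dir), (curr.face_dir, curr.arrow_dir)),
        "identity": repetition_type((prev.face_id, prev.arrow_id), (curr.face_id, curr.arrow_id)),
    }


left_cong = Trial("left", "F1", "left", "A1")
vertical_incong = Trial("up", "F5", "down", "A6")  # axis switch: congruency-priming type
same_axis_incong = Trial("left", "F2", "right", "A3")  # same axis, new identities
print(describe(left_cong, vertical_incong))
print(describe(left_cong, same_axis_incong))
```

An axis switch always yields complete changes at both the category and identity levels, mirroring the statement that all congruency-priming trials were complete change trials at both levels.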

To rule out the possibility that a particular physical stimulus feature would predict congruency and thus confound congruency-level priming, each stimulus (face and arrows) appeared in four congruent and four incongruent trials in each run (excluding the first trial). This constraint also eliminated the potential confound of a particular distracter predicting responses (Schmidt, 2013) because, for each arrow, the number of responses in the same and in the opposite direction was identical. Finally, note that the stimuli involved in each of the three sequence conditions all derive from the same stimulus set such that there were no basic perceptual differences between conditions. In sum, to study the expression of event-binding effects at different levels of abstraction, we used a 3 (sequence condition: congruency priming, category priming, and identity priming) × 2 (previous congruency: congruent, incongruent) × 2 (current congruency: congruent, incongruent) factorial design. Across the 10 scanning runs, the numbers of trials for each previous congruency × current congruency combination (i.e., cC, cI, iC, iI) were 80, 40, and 40 for the congruency-priming, category-priming, and identity-priming sequence conditions, respectively. All congruency-priming trials were complete change trials at both category and identity levels. In category-priming and identity-priming conditions, the cC and iI trials consisted of 50% complete repetition trials and 50% complete change trials (at the category and identity level, respectively), whereas the iC and cI trials consisted of 100% partial repetition trials (at the category and identity level, respectively), creating the standard conditions for the CSE to emerge as an expression of stimulus and response feature integration (Hommel, 2004).

Behavioral data analyses.

Reaction times (RTs) were analyzed using a repeated-measures 3 (sequence condition) × 2 (previous congruency) × 2 (current congruency) ANOVA. Error trials, posterror trials, and outlier trials (i.e., RT values that deviated >3 SDs from an individual subject's grand mean) were excluded from further analyses. In addition, the CSE of RT (i.e., previous × current congruency interaction, or [cI + iC − cC − iI]) was computed for each subject and each sequence condition. The sequence-condition-specific CSEs were compared with 0 using a two-tailed, one-sample t test. The difference of RT in complete repetition trials between category-priming and identity-priming conditions was tested using a two-tailed, paired t test. Accuracy approached ceiling (M = 97%) and thus was not analyzed any further.
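The trial exclusion, the CSE score, and the one-sample t statistic can be sketched with the Python standard library as follows. The trial dictionary keys (`rt`, `correct`, `prev_correct`) are hypothetical, and the sketch assumes that the grand mean and SD used for the 3-SD criterion are computed after removing error and posterror trials; the source does not state the order of these steps.

```python
from math import sqrt
from statistics import mean, stdev


def clean_rts(trials):
    """Drop error and posterror trials, then RTs deviating >3 SDs from the grand mean."""
    kept = [t for t in trials if t["correct"] and t["prev_correct"]]
    rts = [t["rt"] for t in kept]
    m, sd = mean(rts), stdev(rts)
    return [t for t in kept if abs(t["rt"] - m) <= 3 * sd]


def cse(mean_rt):
    """Congruency sequence effect: (cI - cC) - (iI - iC) = cI + iC - cC - iI."""
    return mean_rt["cI"] + mean_rt["iC"] - mean_rt["cC"] - mean_rt["iI"]


def one_sample_t(values, mu=0.0):
    """Return (t, df) for a one-sample t test of the values against mu."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / sqrt(n)), n - 1


# Hypothetical subject: 20 regular trials, one RT outlier, one error, one posterror trial
trials = [{"rt": 500, "correct": True, "prev_correct": True} for _ in range(20)]
trials += [
    {"rt": 5000, "correct": True, "prev_correct": True},
    {"rt": 450, "correct": False, "prev_correct": True},
    {"rt": 480, "correct": True, "prev_correct": False},
]
clean = clean_rts(trials)
example_cse = cse({"cC": 500, "cI": 560, "iC": 520, "iI": 540})
t_stat, df = one_sample_t([1.0, 2.0, 3.0])
```

The two-tailed p value would then be obtained from the t distribution with `df` degrees of freedom (e.g., via a statistics package); it is omitted here to keep the sketch dependency-free.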

Image acquisition and preprocessing.

Images were acquired parallel to the AC–PC line on a 3 T GE scanner. Structural images were scanned using a T1-weighted SPGR axial scan sequence (146 slices, slice thickness = 1 mm, TR = 8.124 ms, FoV = 256 × 256 mm, in-plane resolution = 1 × 1 mm). Functional images were scanned using a T2*-weighted SENSE spiral sequence of 39 contiguous axial slices (slice thickness = 3 mm, TR = 2 s, TE = 28 ms, flip angle = 90°, FoV = 192 × 192 mm, in-plane resolution = 3 × 3 mm). Functional data were acquired in 10 runs of 156 images each. Preprocessing was done using SPM8 (http://www.fil.ion.ucl.ac.uk/spm/). After discarding the first five scans of each run, the remaining images were realigned to their mean image and corrected for differences in slice-time acquisition. Each subject's structural image was coregistered to the mean functional image and normalized to the Montreal Neurological Institute template brain. The transformation parameters of the structural image normalization were then applied to the functional images. Normalized functional images were kept in their native resolution and then smoothed by a Gaussian kernel of 5 mm full width at half maximum (FWHM) to increase the signal-to-noise ratio (Xue et al., 2010).
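For readers who think in terms of the Gaussian standard deviation rather than FWHM, the standard relation FWHM = σ·√(8 ln 2) converts the 5 mm kernel as in this small Python sketch; expressing σ in voxels assumes the 3 mm native voxel size stated above.

```python
from math import log, sqrt


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / sqrt(8 * log(2))


sigma_mm = fwhm_to_sigma(5.0)  # 5 mm FWHM kernel
sigma_vox = sigma_mm / 3.0     # assuming 3 mm isotropic functional voxels
print(f"sigma = {sigma_mm:.3f} mm = {sigma_vox:.3f} voxels")
```

A kernel with σ below one voxel is relatively light smoothing, which fits the later use of the data for multivoxel pattern analysis.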

Task model.

These smoothed images were then used in conjunction with a task model to estimate activation levels for each experimental condition. The task model consisted of event-based regressors representing the onsets of trials in each of the 12 conditions of the factorial design, along with four nuisance regressors representing the onsets of each type of excluded trial (error trials, posterror trials, outlier trials, and the first trial of each run). This task model was then convolved with SPM8's canonical hemodynamic response function. The convolved task model was augmented with regressors representing head motion parameters and the grand mean of the run (to remove the run-specific baseline signal) to form a design matrix against which the smoothed functional images were regressed, resulting in model estimates for each of the first 12 regressors. Finally, for each subject and each sequence condition, two contrasts (cI − cC and iI − iC) were applied to the model estimates across runs, producing 6 (3 sequence conditions × 2 previous congruency) neural congruency effects (i.e., the t values of these contrasts) at every gray matter (GM) voxel defined in the segmented SPM T1 template (dilated by 1 voxel). These neural congruency effects were normalized within each voxel to remove individual baseline variance for the intersubject multivariate analysis (see below).
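The six contrasts can be written as weight vectors over the 12 task regressors. In this Python sketch the regressor order (sequence condition × previous congruency × current congruency) and the condition names are hypothetical; nuisance, motion, and run-mean regressors would receive zero weight and are omitted.

```python
from itertools import product

SEQUENCES = ("congruency", "category", "identity")
# 12 task regressors, e.g. 'category:iI' = category-priming, incongruent after incongruent
CONDITIONS = [f"{s}:{p}{c}" for s, p, c in product(SEQUENCES, "ci", "CI")]


def congruency_contrasts():
    """Return the 6 neural congruency-effect contrasts (cI - cC and iI - iC per sequence)."""
    contrasts = {}
    for s in SEQUENCES:
        for prev in "ci":
            w = [0] * len(CONDITIONS)
            w[CONDITIONS.index(f"{s}:{prev}I")] = 1   # current incongruent
            w[CONDITIONS.index(f"{s}:{prev}C")] = -1  # minus current congruent
            contrasts[f"{s}:{prev}I-{prev}C"] = w
    return contrasts


contrasts = congruency_contrasts()
for name, w in contrasts.items():
    print(name, w)
```

Each vector sums to zero, so it tests a difference between conditions rather than overall activation.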

Multivariate classification analyses.

To locate brain regions that are either commonly or selectively involved in the expression of the different levels of event priming that contribute to the CSE, we conducted a multivariate decoding analysis (see Fig. 3B) with a searchlight strategy (Kriegeskorte et al., 2006) that scanned through spheres of GM voxels (searchlight radius = 3 voxels). Because the CSE is expressed as the modulation of previous-trial congruency on the current-trial congruency effect, this analysis aimed to distinguish fMRI activation patterns of postcongruent congruency effects (i.e., cI − cC) from activation patterns of postincongruent congruency effects (i.e., iI − iC) for each type of sequence (identity-priming, category-priming, and congruency-priming) using linear support vector machines. Note that, although another intuitive way of testing category- and identity-level priming would be to distinguish cC + iI (complete repetition and complete change trials) from cI + iC (partial repetitions), this contrast is equivalent to our classification of cI − cC vs iI − iC (by shifting cC and iC to the other class) given that linear classifiers were used in the analyses. In addition, our subtraction-based congruency effects removed activity related to nuisance processes (e.g., motor activity) and thus allowed for more accurate regression to isolate each level of priming (see below).
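A searchlight with a radius of 3 voxels contains every voxel whose Euclidean distance from the center is at most 3 voxels. The following Python sketch builds those offsets and restricts a searchlight to a GM mask, assumed here to be a set of voxel coordinate tuples; the function names are hypothetical. The full sphere contains 123 voxels, matching the maximum searchlight dimensionality mentioned in the brain–behavior analysis.

```python
def sphere_offsets(radius=3):
    """Integer (dx, dy, dz) offsets within a Euclidean radius, in voxels."""
    r2 = radius * radius
    span = range(-radius, radius + 1)
    return [(x, y, z) for x in span for y in span for z in span if x * x + y * y + z * z <= r2]


def searchlight(center, gm_mask, radius=3):
    """Voxels of the sphere around `center` that fall inside the GM mask."""
    cx, cy, cz = center
    return [
        (cx + dx, cy + dy, cz + dz)
        for dx, dy, dz in sphere_offsets(radius)
        if (cx + dx, cy + dy, cz + dz) in gm_mask
    ]


offsets = sphere_offsets(3)
print(len(offsets))
```

Near the edge of the mask a searchlight contains fewer voxels, which is why 123 is an upper bound rather than a fixed size.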

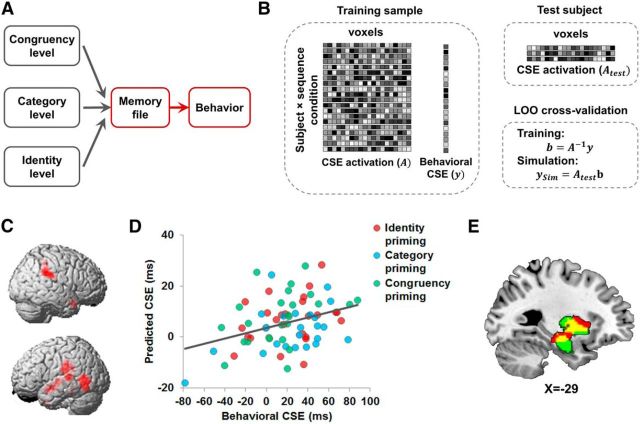

Figure 3.

Event-feature-priming MVPA results. A, Within the context of the event file model (Fig. 1A), the present analysis examines the integration of different event features in memory (highlighted in red). B, Illustration of the cross-subject classification MVPA. Given a searchlight, activation of voxels in this searchlight was extracted from individual t-maps and grouped together for cross-subject analysis. C, Schematic illustration of isolating neural congruency effects for each level of priming. Neural congruency effects from the congruency-priming condition were regressed out of those from category-priming trials, and congruency-level and category-level neural congruency effects were regressed out of those from identity-priming trials. D, Brain regions displaying feature-level-specific expression of priming effects [i.e., showing higher decoding accuracy for one feature level than the other two levels (p < 0.05, corrected)]; for full results, see Table 1. E, Overlap of searchlights in left putamen and hippocampus (in red) showing above-chance encoding of feature priming at all three levels of abstraction.

The MVPA was performed in an intersubject manner using a leave-one-out (LOO) cross-validation approach: the classifiers were trained on the data from 24 subjects and tested on the data from the remaining subject. Training and testing were iterated until each subject had served as the test subject once. As a result, for each level of priming, a classification accuracy map was computed in which each GM voxel represented the classification accuracy from the LOO cross-validation of the searchlight centered at that voxel. Compared with within-subject MVPA, this analysis imposed an additional constraint that the activation patterns that represent priming effects are similar across subjects, thus creating a more rigorous (and generalizable) test of the functional mapping between cognitive processes and specific brain regions (Jiang et al., 2013).
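The leave-one-subject-out scheme can be sketched in plain Python as follows. Because the study's SVM implementation is not specified here, the linear SVM in this sketch is trained with Pegasos (stochastic subgradient descent on the L2-regularized hinge loss), a swapped-in training method chosen to keep the example dependency-free; the regularization `lam`, the epoch count, and the synthetic 10-voxel patterns are all hypothetical.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train_pegasos(X, y, lam=0.01, epochs=50, seed=0):
    """Linear SVM via Pegasos; labels are -1/+1. A constant feature acts as a bias
    (regularized like the other weights, a simplification)."""
    rng = random.Random(seed)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    order, t = list(range(len(Xb))), 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            violated = y[i] * dot(w, Xb[i]) < 1  # hinge-loss margin at current w
            w = [(1.0 - eta * lam) * wj for wj in w]
            if violated:
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def loso_accuracy(patterns, lam=0.01, epochs=50):
    """patterns: subject -> (post-congruent effect cI - cC, post-incongruent effect iI - iC)."""
    subjects = sorted(patterns)
    correct = 0
    for held_out in subjects:
        X, y = [], []
        for s in subjects:
            if s != held_out:
                post_c, post_i = patterns[s]
                X += [post_c, post_i]
                y += [-1, 1]
        w = train_pegasos(X, y, lam, epochs)
        post_c, post_i = patterns[held_out]
        for x, label in ((post_c, -1), (post_i, 1)):
            pred = 1 if dot(w, list(x) + [1.0]) >= 0 else -1
            correct += pred == label
    return correct / (2 * len(subjects))


# Synthetic data: 25 subjects, a hypothetical 10-voxel searchlight
rng = random.Random(1)
signal = [1.0, 1.0, 1.0] + [0.0] * 7
patterns = {
    s: ([-v + rng.gauss(0, 0.5) for v in signal], [v + rng.gauss(0, 0.5) for v in signal])
    for s in range(25)
}
acc = loso_accuracy(patterns)
```

Each fold trains on 48 patterns (24 subjects × 2 classes) and tests on the held-out subject's two patterns, so the final accuracy is computed over 50 test patterns.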

To assess the decoding performance of congruency-level priming, neural congruency effects (postcongruent vs postincongruent) from the congruency-priming sequence condition were classified. For the other two sequence conditions, priming levels that are not uniquely expressed in one particular sequence type were dissociated by filtering out neural activity related to other priming levels using linear regression. Specifically, category-level priming was tested by classifying the category-priming condition's neural congruency effects (postcongruent vs postincongruent) after they had been regressed against both neural congruency effects (postcongruent and postincongruent) from the congruency-priming condition to remove possible confounds from congruency-level priming (see Fig. 3C). Similarly, neural congruency effects (postcongruent vs postincongruent) from the identity-priming condition were regressed against neural congruency effects from the other two sequence conditions and were then classified to test identity-level priming (see Fig. 3C).
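One way to implement this filtering is to replace each target pattern by its least-squares residual after regressing it on the confounding patterns. The Python sketch below does this per subject and searchlight via a modified Gram–Schmidt projection; it assumes the regression runs across the voxels of a searchlight and includes an intercept, neither of which the text specifies, and all numbers are hypothetical.

```python
from math import sqrt


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def residualize(y, predictors, intercept=True):
    """Residual of y after least-squares regression on the predictor patterns."""
    columns = ([[1.0] * len(y)] if intercept else []) + [list(p) for p in predictors]
    basis = []
    for col in columns:  # orthonormalize the predictors
        v = list(col)
        for q in basis:
            c = dot(v, q)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = sqrt(dot(v, v))
        if norm > 1e-12:  # skip linearly dependent predictors
            basis.append([vi / norm for vi in v])
    r = list(y)
    for q in basis:  # remove the projection onto the predictor span
        c = dot(r, q)
        r = [ri - c * qi for ri, qi in zip(r, q)]
    return r


# Hypothetical 6-voxel searchlight for one subject
cong_post_c = [0.5, 0.1, 0.9, -0.3, -0.6, 0.2]  # congruency-priming cI - cC
cong_post_i = [-0.4, 0.3, 0.2, 0.8, -0.1, 0.0]  # congruency-priming iI - iC
cat_post_c = [0.9, -0.2, 1.4, 0.3, -0.8, 0.5]   # category-priming cI - cC
cat_filtered = residualize(cat_post_c, [cong_post_c, cong_post_i])
```

For identity-level priming, the same function would take four predictors, namely both congruency effects from the congruency-priming and category-priming conditions.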

To locate brain regions that selectively express priming effects at a particular event feature level, the classification accuracy maps for each feature level were compared with the classification accuracy maps of each of the other two levels. For example, we would consider a brain region to selectively express congruency-level (control demand) priming effects if classification of congruency-level priming in this region was above chance and significantly better than classification of the category and identity levels. These classification comparisons were conducted using a Bayesian approach (Jiang et al., 2013): to test the null hypothesis that two classification accuracies o1 and o2 observed from the same searchlight over two different accuracy maps (classification analyses) belonged to the same underlying classification accuracy o (i.e., no significant difference between o1 and o2), we constructed a null distribution of observing any classification accuracy given o1 and the two accuracy maps over all possible o. Specifically, the probability of observing o2 based on o1 in the null distribution, or p(o2|o1), was expressed as ∫p(o2|o)p(o|o1)do, where p(o2|o) was calculated using a binomial distribution; p(o|o1) was computed using Bayes' rule: p(o|o1) ∝ p(o1|o)p(o), where p(o1|o) was again calculated using a binomial distribution and the prior p(o) was obtained by sampling the accuracy map. With this null distribution, the p value of the null hypothesis of no significant difference between o1 and o2 was defined based on a two-tailed test: (without loss of generality, here, we assume that o2 > 0.5). For example, a p value of 0.05 would indicate that, if o1 and o2 reflected the same underlying accuracy, an accuracy at least as extreme as o2 would be observed with a probability of 0.05. Similarly, a one-tailed test (e.g., null hypothesis o2 > o1) can be derived using either the first or second term of the definition of the two-tailed test, depending on the direction of the test.
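The null distribution p(o2|o1) can be sketched in Python by treating accuracies as counts of correct test patterns out of n and using the sampled map accuracies as an empirical prior with equal weights. The sketch assumes n = 50 test patterns (25 subjects × 2 classes) and hypothetical map values, and it only computes an upper-tail (one-tailed) probability; the study's exact two-tailed definition is not reproduced here.

```python
from math import comb


def binom_pmf(k, n, p):
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def predictive_pmf(k1, n, prior_accuracies):
    """p(k2 | k1) = sum_o p(k2 | o) p(o | k1), with p(o | k1) proportional to p(k1 | o) p(o).

    The prior p(o) is the empirical distribution of accuracies sampled from an
    accuracy map, each sample weighted equally (an assumption of this sketch).
    """
    likelihood = [binom_pmf(k1, n, o) for o in prior_accuracies]
    total = sum(likelihood)
    posterior = [w / total for w in likelihood]
    return [
        sum(w * binom_pmf(k2, n, o) for w, o in zip(posterior, prior_accuracies))
        for k2 in range(n + 1)
    ]


def upper_tail_p(k2, pmf):
    """One-tailed probability of at least k2 correct under the null distribution."""
    return sum(pmf[k2:])


n = 50  # assumed: 25 test subjects x 2 patterns
prior = [0.42, 0.46, 0.50, 0.50, 0.52, 0.54, 0.56, 0.60, 0.62, 0.70]  # hypothetical map samples
pmf = predictive_pmf(27, n, prior)  # o1 = 27/50 = 0.54
p_one = upper_tail_p(38, pmf)       # o2 = 38/50 = 0.76
```

Because the posterior over o is informed by both o1 and the map-wide prior, the resulting null distribution is wider than a binomial centered on o1 alone.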

By contrast, to identify brain regions involved in integrating event characteristics across all levels of abstraction, we delineated searchlights that expressed priming effects at all feature levels. This was done by performing a “logical AND” conjunction analysis (Nichols et al., 2005) across the classification accuracy maps taken from the initial searchlight MVPAs for each of the three priming levels (i.e., before computing the contrast between these maps). In other words, for each voxel, we tested whether it belonged to a searchlight cluster that passed the multiple-comparison correction in all three accuracy maps.
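The logical-AND conjunction reduces to a set intersection once each accuracy map has been thresholded. In this Python sketch, each argument is assumed to be the set of voxel coordinates belonging to searchlight clusters that survived multiple-comparison correction in one map; the coordinates are hypothetical.

```python
def conjunction(*surviving_sets):
    """Logical-AND conjunction: voxels that survive correction in every map."""
    if not surviving_sets:
        return set()
    result = set(surviving_sets[0])
    for voxels in surviving_sets[1:]:
        result &= set(voxels)
    return result


congruency_map = {(10, 12, 8), (10, 13, 8), (30, 5, 2)}
category_map = {(10, 12, 8), (10, 13, 8), (22, 7, 9)}
identity_map = {(10, 12, 8), (22, 7, 9)}
shared = conjunction(congruency_map, category_map, identity_map)
```

Because every map must pass correction on its own, the conjunction inherits the strictest of the three tests at each voxel.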

Multivariate brain–behavior analysis.

To link the putative integrated neural event file representations directly to behavioral priming effects, a searchlight-based, multivariate brain–behavior analysis was performed to predict behavioral CSEs (i.e., the RT differential [cI − cC] − [iI − iC]) using neural CSEs (i.e., the difference between the neural congruency effects, [cI − cC] − [iI − iC]). Here, data from all sequence conditions were pooled together (because we were interested in effects related to integrated event files) and then submitted to a LOO cross-validation for each searchlight. Because this analysis involved the use of linear regression and correlation (see below), both of which are susceptible to spurious results (overfitting) due to high dimensionality and noise, we first performed a dimension reduction by means of a principal component analysis (PCA). Specifically, the to-be-predicted variable in this analysis consisted of three behavioral CSEs for each of 25 subjects (i.e., 75 data points), which could easily be overfit by a searchlight consisting of up to 123 dimensions (voxels). Therefore, the neural CSEs from the training subjects first underwent a PCA, and the principal components (PCs) that explained the highest portion of variance in the data were selected until the total amount of variance explained by these selected PCs reached 50%. This selection mitigated the risk of overfitting by drastically reducing the number of predictor dimensions (maximum number of selected PCs = 4 for all searchlights analyzed) while retaining the majority of variance (i.e., information) in the data.

The selected components were fit to behavioral CSEs in the training sample using support vector regression with an ε parameter of 0.01 (Kahnt et al., 2011; Jimura and Poldrack, 2012). The resulting regression coefficients were then applied to the neural CSEs of the test subject, which had been transformed and reduced using the PCA computed on the training subjects, to produce predicted behavioral CSEs. After the LOO cross-validation, we computed correlations between predicted and observed behavioral CSEs to assess the quality of the prediction. A significant positive correlation in a searchlight implies that the behavioral CSE, which reflects the combined priming effect of an integrated event file, can be predicted from neural signal patterns in that searchlight, suggesting that this brain region is involved in the process that translates the integrated event file into behavioral output.
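The cross-validation loop can be sketched as follows. This is a simplified illustration under stated assumptions. Rows are subject × sequence-condition neural CSE patterns, and the PCA is refit within each fold on training subjects only. Ordinary least squares (NumPy `lstsq`) stands in for the support vector regression (ε = 0.01) that the study used, so the fitted coefficients will differ from an SVR. All function names and the synthetic data are hypothetical.

```python
import numpy as np

def _fit_pca(X, var_target=0.5):
    # Centre on the training mean; keep leading PCs up to var_target of variance.
    mean = X.mean(axis=0)
    _, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    k = int(np.searchsorted(np.cumsum(s**2) / np.sum(s**2), var_target) + 1)
    return mean, Vt[:k].T

def loo_brain_behavior(neural, behavior, subject_ids, var_target=0.5):
    """Leave-one-subject-out prediction of behavioral CSEs from neural CSEs.

    neural:      (n_rows, n_voxels) neural CSE patterns, one row per subject x condition
    behavior:    (n_rows,) observed behavioral CSEs
    subject_ids: (n_rows,) subject label of each row
    NOTE: least squares is a stand-in for the study's epsilon-SVR.
    """
    predicted = np.empty(len(behavior))
    for subj in np.unique(subject_ids):
        test = subject_ids == subj
        train = ~test
        mean, loadings = _fit_pca(neural[train], var_target)  # training subjects only
        Z_train = (neural[train] - mean) @ loadings
        Z_test = (neural[test] - mean) @ loadings              # reuse the training PCA
        design = np.column_stack([Z_train, np.ones(len(Z_train))])
        coef, *_ = np.linalg.lstsq(design, behavior[train], rcond=None)
        predicted[test] = np.column_stack([Z_test, np.ones(len(Z_test))]) @ coef
    r = np.corrcoef(predicted, behavior)[0, 1]  # quality of the prediction
    return predicted, r

# Synthetic data: 25 subjects x 3 sequence conditions, 123-voxel searchlight.
rng = np.random.default_rng(1)
subjects = np.repeat(np.arange(25), 3)
latent = rng.normal(size=75)
neural = np.outer(latent, rng.normal(size=123)) + 0.3 * rng.normal(size=(75, 123))
behavior = 20 * latent + 2 * rng.normal(size=75)  # hypothetical CSEs in ms
predicted, r = loo_brain_behavior(neural, behavior, subjects)
```

Swapping in an ε-insensitive SVR would change only the fitting line inside the loop. The fold structure, the training-only PCA, and the final predicted-versus-observed correlation stay the same.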

Control for false positives.

For all aforementioned statistical analyses, false positives due to multiple comparisons were controlled at p < 0.05 (corrected) by combining voxelwise thresholds on searchlight statistics with cluster-extent thresholds. For classification and brain–behavior analyses, searchlight p values were obtained using binomial tests and correlation tests, respectively. Thresholds were derived with the AFNI ClusterSim algorithm (http://afni.nimh.nih.gov/pub/dist/doc/program_help/3dClustSim.html), based on smoothness estimates from the AFNI 3dFWHMx tool (http://afni.nimh.nih.gov/pub/dist/doc/program_help/3dFWHMx.html). Ten thousand Monte Carlo simulations determined that an uncorrected voxelwise threshold of p < 0.01 (for p values derived from the discrete binomial distribution, the largest attainable p value below 0.01), combined with a minimum cluster size of 9 to 25 searchlights (depending on the specific analysis), ensured a corrected false-positive rate of p < 0.05.
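Classification accuracy is a count of correct predictions, so its p value comes from a discrete binomial distribution. This is why the voxelwise threshold is described as the largest p value below 0.01 rather than 0.01 itself. The plain-Python sketch below assumes a one-sided test against 50% chance. The number of predictions (50) and the helper names are illustrative assumptions, not parameters stated in this section. The sketch does not reproduce the 3dClustSim cluster-extent simulation.

```python
from math import comb

def binomial_p_upper(k, n, chance=0.5):
    """One-sided P(X >= k), X ~ Binomial(n, chance): the probability of k or
    more correct predictions out of n if the classifier were guessing."""
    return sum(comb(n, i) * chance**i * (1 - chance) ** (n - i)
               for i in range(k, n + 1))

def binomial_threshold(n, alpha=0.01, chance=0.5):
    """Smallest correct count whose p value falls below alpha, with that p value.

    Because P(X >= k) shrinks as k grows, this is the largest attainable p value
    below alpha; the discrete distribution generally cannot hit alpha exactly.
    """
    for k in range(n + 1):
        p = binomial_p_upper(k, n, chance)
        if p < alpha:
            return k, p
    raise ValueError("alpha is unreachable with this many predictions")

k, p = binomial_threshold(50)  # 50 predictions is an illustrative choice
print(f"need {k}/50 correct (accuracy {k / 50:.2f}), p = {p:.4f}")
```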

It has been suggested that binomial tests in LOO cross-validation analyses (i.e., the decoding analysis and the brain–behavior analysis) may be biased by the interdependence between training and test data, and that statistical tests based on random permutations can overcome this bias (Stelzer et al., 2013). However, random permutations for whole-brain searchlight analyses tend to be impractical because of the large amount of computation required. To reduce this computational burden, we instead conducted random permutation tests only on brain regions displaying statistically significant effects in binomial tests. To this end, we first defined ROIs based on the AFNI ClusterSim algorithm above (i.e., searchlight clusters that survived multiple-comparison correction) and then performed permutation tests on the resulting ROIs to ensure that the ROI-based results were free from such bias. Notably, for each ROI, the null distribution was obtained using all voxels within that ROI, such that the resulting null distribution did not suffer from undersampling. Specifically, for each analysis, the random permutation test repeated the analysis 1000 times using randomly shuffled data (e.g., the order of voxels for each data point was randomly shuffled in the classification analysis, and each data point was randomly assigned to an observed behavioral CSE in the brain–behavior analysis). The results from the shuffled data were then used to approximate the null distribution from which the p value of the random permutation test was calculated.
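A generic version of the permutation procedure can be sketched as follows. It is a minimal illustration, assuming a one-sided test and the common (count + 1) / (n_perm + 1) estimator. The section does not state which estimator was used. Here the statistic is a simple Pearson correlation on synthetic data. In the study, each permutation reran the full analysis, which corresponds to passing the whole decoding or brain–behavior pipeline as `statistic`. Function names are hypothetical.

```python
import numpy as np

def permutation_test(statistic, x, y, n_perm=1000, seed=0):
    """One-sided permutation p value for statistic(x, y).

    The null distribution is built by recomputing the statistic after randomly
    re-pairing y with x, n_perm times. The +1 terms count the observed
    statistic as one member of the null distribution, so p is never 0.
    """
    rng = np.random.default_rng(seed)
    observed = statistic(x, y)
    null = np.array([statistic(x, rng.permutation(y)) for _ in range(n_perm)])
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p

def pearson_r(x, y):
    return np.corrcoef(x, y)[0, 1]

# Synthetic predicted vs. observed CSEs with a strong true relationship.
rng = np.random.default_rng(2)
x = rng.normal(size=75)
y = x + 0.1 * rng.normal(size=75)
r, p = permutation_test(pearson_r, x, y)
```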

Results

Behavioral data

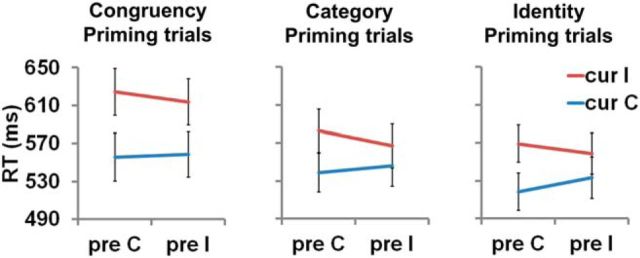

Subjects (n = 25) performed the task with very high accuracy (97 ± 1%). We analyzed RT data using a repeated-measures 3 (sequence condition) × 2 (previous-trial congruency) × 2 (current-trial congruency) ANOVA. As shown in Figure 2, we observed a main effect of sequence condition (F(2,23) = 20.7, p < 0.001). This effect reflected faster responses to identity-priming trials (545 ± 29 ms) than to category-priming trials (559 ± 35 ms; t24 = 5.7, p < 0.001) and congruency-priming trials (588 ± 27 ms; t24 = 6.1, p < 0.001), and faster responses to category-priming trials than to congruency-priming trials (t24 = 5.0, p < 0.001). The ANOVA also revealed a significant main effect of current-trial congruency (F(1,24) = 88.0, p < 0.001), driven by faster responses to congruent (541 ± 22 ms) than to incongruent trials (585 ± 22 ms). Moreover, we detected an interaction between the sequence condition and current-trial congruency factors (F(2,23) = 9.3, p = 0.001). This interaction reflected a larger congruency effect in the congruency-priming condition (62 ± 6 ms) than in the category-priming (38 ± 6 ms; t24 = 3.8, p < 0.001) and identity-priming (33 ± 7 ms; t24 = 3.9, p < 0.001) conditions. Crucially, a significant interaction between previous-trial and current-trial congruency (i.e., the CSE) was evident (F(1,24) = 11.6, p = 0.002), and CSE magnitude did not interact with sequence condition. By performing previous-trial × current-trial congruency interaction tests separately for each sequence condition, we further ascertained that the CSE was significant in each condition. These tests used two-tailed one-sample t tests of the interaction contrast [cI − cC − (iI − iC)] against zero (congruency-priming trials: 14 ± 7 ms, t24 = 2.2, p = 0.038, Cohen's d = 0.44; category-priming trials: 24 ± 7 ms, t24 = 3.4, p = 0.002, Cohen's d = 0.69; identity-priming trials: 23 ± 6 ms, t24 = 3.9, p < 0.001, Cohen's d = 0.79). No other effects reached statistical significance.
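The per-condition CSE test reduces to a one-sample t test on per-subject difference-of-differences scores. The sketch below assumes Cohen's d is computed as the mean CSE divided by the SD of the CSE scores, so that d = t / √n. This is our assumption. It agrees with the first reported value for n = 25: t24 = 2.2 gives d = 2.2 / 5 = 0.44. The function name and toy RTs are hypothetical.

```python
import numpy as np

def cse_stats(cC, cI, iC, iI):
    """Test the per-subject CSE, (cI - cC) - (iI - iC), against zero.

    Each argument holds one mean RT per subject for a previous-trial (c/i) x
    current-trial (C/I) cell. Returns the mean CSE, the one-sample t
    (df = n - 1), and Cohen's d as mean / SD of the CSE scores (= t / sqrt(n)).
    """
    cC, cI, iC, iI = (np.asarray(a, dtype=float) for a in (cC, cI, iC, iI))
    cse = (cI - cC) - (iI - iC)
    n = cse.size
    mean, sd = cse.mean(), cse.std(ddof=1)
    t = mean / (sd / np.sqrt(n))
    d = mean / sd
    return mean, t, d

# Four hypothetical subjects; per-subject CSEs are 20, 30, 25 and 35 ms.
mean_cse, t, d = cse_stats(cC=[500, 500, 500, 500],
                           cI=[540, 550, 545, 555],
                           iC=[510, 510, 510, 510],
                           iI=[530, 530, 530, 530])
```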

Figure 2.

Group-mean RT (± SEM) plotted as a function of sequence condition and previous-trial and current-trial congruency. Significant CSEs occurred at each level of priming. Cur, current trial; pre, previous trial; C, congruent; I, incongruent.

For our imaging analyses to reveal distinct and shared neural substrates of priming effects at different event feature levels, we first had to establish that, in each sequence condition, behavior was actually affected by priming at the most concrete level available in that condition. This is self-evident for the congruency-priming condition, in which congruency-level priming was the only type of priming available. By contrast, the CSE in category-priming trials could be driven by either category-level or congruency-level priming (see Materials and Methods). To verify that category-level priming was operational in this sequence condition, we examined the [iC − cC] contrast of the category-priming condition, which measures priming without the potential confound of current-trial conflict in incongruent trials. Crucially, suppose congruency-level priming were the only source of the CSE in the category-priming condition. Because congruency-level priming is independent of stimulus features, this effect (i.e., [iC − cC]) should then not vary with the repetition or change of the target category. Contrary to this prediction, we found such dependence, as documented by a significant previous congruency × target category repetition/change interaction in congruent trials of the category-priming condition (F(1,24) = 6.6, p < 0.05). This dependence on stimulus category can only be attributed to category-level priming. It indicates that category-level priming affected behavior in the category-priming condition, although it does not rule out an additional contribution from congruency-level priming in this condition.
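Each manipulation check here is a 2 × 2 within-subject interaction (previous congruency × target repetition/change) on current congruent trials. When both factors have two levels, the interaction F(1, n − 1) equals the squared one-sample t of the per-subject difference of differences. For instance, F(1,24) = 6.6 corresponds to |t24| ≈ 2.57. The following is a minimal NumPy sketch of that identity. The function and argument names are hypothetical.

```python
import numpy as np

def interaction_F(a1b1, a1b2, a2b1, a2b2):
    """F(1, n - 1) for the A x B interaction in a 2 x 2 within-subject design.

    With two levels per factor, the interaction has 1 df and its F equals the
    squared one-sample t of the per-subject difference of differences.
    Arguments are per-subject cell means, e.g. A = previous congruency
    (a1 = c, a2 = i) and B = target category repeated (b1) or changed (b2),
    measured on current congruent trials.
    """
    a1b1, a1b2, a2b1, a2b2 = (np.asarray(v, dtype=float)
                              for v in (a1b1, a1b2, a2b1, a2b2))
    dod = (a2b1 - a1b1) - (a2b2 - a1b2)  # [iC - cC]_repeat - [iC - cC]_change
    n = dod.size
    t = dod.mean() / (dod.std(ddof=1) / np.sqrt(n))
    return t**2, (1, n - 1)

# Hypothetical data whose difference-of-differences scores are 1, 2, 3, 4 ms.
F, df = interaction_F([0, 0, 0, 0], [0, 0, 0, 0], [1, 2, 3, 4], [0, 0, 0, 0])
```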

Next, we applied the same logic to identity-priming trials, in which behavior could theoretically be driven by all three levels of priming. When testing whether target identity repetition/change modulated the [iC − cC] contrast, we again found a significant previous congruency × target identity repetition/change interaction (F(1,24) = 10.4, p < 0.005). This interaction suggests the presence of category-level and/or identity-level priming. Importantly, if identity-level priming were present, it should make responses to complete repetition trials faster in the identity-priming condition than in the category-priming condition. This prediction was confirmed (identity-priming condition: 535 ± 20 ms; category-priming condition: 553 ± 22 ms; t24 = 3.2, p < 0.005). In sum, these manipulation checks confirmed that the classic CSE occurred in the context of three different levels of event features contributing to priming effects. These data set the stage for the fMRI analyses, in which we delineated the neural substrates of integrative event coding.

Imaging data

Binding of concrete and abstract event features in the hippocampus and putamen

To locate brain regions that are either selectively or commonly involved in the expression of the different levels of event priming that contribute to the CSE, we conducted a searchlight-based, whole-brain MVPA to decode priming effects at each event feature level (identity, category, congruency). We then contrasted searchlight classification performance across event feature levels to isolate brain regions selectively involved in the expression of one particular level. Finally, we applied a conjunction analysis to identify brain regions that store information about all three levels (see Materials and Methods).
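This section does not restate the searchlight radius. However, the maximum searchlight size it reports (up to 123 voxels) is exactly the number of voxels in a sphere of radius 3 voxels. Treating the radius as 3 is therefore our inference, not a stated parameter. The sketch below builds such a neighborhood and clips it to a brain mask. This clipping is why searchlights near the edge of the brain contain fewer voxels. Names are hypothetical.

```python
import numpy as np

def sphere_offsets(radius):
    """Integer voxel offsets (dx, dy, dz) within `radius` voxels of the centre."""
    r = int(np.floor(radius))
    grid = np.arange(-r, r + 1)
    dx, dy, dz = np.meshgrid(grid, grid, grid, indexing="ij")
    offsets = np.stack([dx.ravel(), dy.ravel(), dz.ravel()], axis=1)
    return offsets[(offsets**2).sum(axis=1) <= radius**2]

def searchlight_voxels(center, mask, radius=3):
    """In-mask voxel coordinates of a searchlight centred on `center`.

    Voxels outside the volume or outside the boolean brain mask are dropped,
    so edge searchlights are smaller than interior ones.
    """
    coords = np.asarray(center) + sphere_offsets(radius)
    inside = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[inside]
    return coords[mask[coords[:, 0], coords[:, 1], coords[:, 2]]]

mask = np.ones((20, 20, 20), dtype=bool)         # toy all-brain volume
interior = searchlight_voxels((10, 10, 10), mask)
edge = searchlight_voxels((0, 0, 0), mask)
```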

The comparison of searchlight decoding accuracy across event feature levels revealed a number of brain regions showing selective expression of priming effects at each of the three levels of abstraction. Figure 3D depicts the main findings, and Table 1 provides a complete list of regions. At the most concrete, identity level, where priming effects reflect the binding of specific stimulus and response features, selective decoding was observed primarily in visual cortex. Specifically, regions encoding concrete event features comprised areas of occipital and temporal cortex, including face-sensitive regions (fusiform gyrus) of the ventral visual stream (Fig. 3D), as well as one more anterior focus in the inferior frontal gyrus. At the more abstract category level, where priming effects are related to the direction of target/distracter stimuli (but not their physical identity), selective decoding was obtained in frontal regions. These included posterior lateral sites along the inferior frontal, precentral, and postcentral gyri, as well as a focus in medial frontal cortex. Finally, at the most abstract, congruency level, where priming effects reflect the match between previous and current trial control states (independent of stimulus identity or category), selective decoding was observed in anterior medial frontal cortex, including the anterior cingulate (Fig. 3D). It was also observed in bilateral dorsal striatum (particularly the caudate) and in left insula and precuneus.

Table 1.

Summary of clusters showing significantly higher decoding accuracy of priming effects at each level of abstraction compared with the two other levels

| Location | Peak MNI coordinates (x, y, z) | Peak decoding accuracy | Cluster size (no. of searchlights) |
|---|---|---|---|
| Selective congruency-level decoding | | | |
| R. putamen | (18, 8, −5) | 0.78 | 10 |
| L. calcarine and precuneus | (−21, −58, 4) | 0.78 | 56 |
| L. insula | (−39, −1, −2) | 0.76 | 11 |
| R. medial frontal gyrus | (12, 50, 16) | 0.78 | 56 |
| R. caudate | (15, 5, 16) | 0.82 | 35 |
| L. caudate | (−18, −10, 22) | 0.78 | 14 |
| Precuneus | (−12, −67, 34) | 0.88 | 48 |
| Middle cingulate | (6, −10, 49) | 0.80 | 21 |
| Selective category-level decoding | | | |
| L. medial frontal gyrus | (−15, 47, 4) | 0.82 | 13 |
| R. inferior frontal gyrus | (45, 11, 19) | 0.86 | 9 |
| L. precentral gyrus and L. postcentral gyrus | (−45, −7, 37) | 0.82 | 36 |
| Selective identity-level decoding | | | |
| L. fusiform gyrus and L. parahippocampal gyrus | (−24, −37, −14) | 0.92 | 46 |
| L. inferior frontal gyrus | (−18, 17, −20) | 0.80 | 19 |
| L. superior and middle temporal gyri | (−54, −7, −11) | 0.80 | 37 |
| R. superior and middle temporal gyri | (57, −40, −13) | 0.84 | 84 |
| R. middle occipital gyrus | (36, −85, 10) | 0.84 | 11 |
| L. middle occipital gyrus | (−39, −67, 13) | 0.88 | 21 |
| L. superior occipital gyrus and precuneus | (−24, −73, 28) | 0.80 | 9 |
| R. superior occipital gyrus and precuneus | (18, −73, 31) | 0.84 | 14 |
| Precuneus | (−9, −43, 43) | 0.76 | 14 |

Peak decoding accuracy refers to the level showing higher decoding accuracy than the other two levels.

Our main interest, however, lay in discovering brain regions involved in the integration of concrete and abstract event features, that is, brain regions that would simultaneously carry information about priming effects at all three event feature levels. A conjunction analysis of the decoding results at the congruency, category, and identity levels (p < 0.05, corrected) revealed overlapping searchlights with significantly above-chance decoding of priming effects at all three levels. These searchlights formed a cluster spanning the left anterior hippocampus and putamen (Fig. 3E). To cross-validate that this region of overlap contains information encoding all three levels of priming, we repeated the decoding analysis using all voxels within this region of overlap (Fig. 3E). We then applied an unbiased permutation test to assess decoding accuracy (Stelzer et al., 2013). We obtained significant, above-chance classification accuracy for all three levels of priming (congruency level: 0.74, p < 0.001; category level: 0.68, p < 0.01; identity level: 0.64, p < 0.05; permutation tests, see Materials and Methods). These results corroborate that the hippocampus and putamen store information about the relationship between previous and current trial event features at multiple levels of abstraction, ranging from the (mis)matching of concrete physical stimulus characteristics to that of attentional demands. These data indicate that these regions integrate concrete, categorical, and control-state event features into a holistic event memory.

The hippocampal and striatal regions identified above form part of a single cluster of searchlights spanning both structures. We next tested whether voxels within each anatomical structure would by themselves be able to decode event features across all levels of abstraction. To this end, we divided this region of overlap from the conjunction analysis (Fig. 3E) into separate hippocampus (139 voxels) and putamen (202 voxels) ROIs by masking the searchlight cluster with anatomical masks based on the AAL template (Tzourio-Mazoyer et al., 2002). Interestingly, when the voxels in each ROI were used separately, neither region by itself displayed reliable classification performance across all three levels of abstraction (Table 2). Because each ROI is at least as large as the searchlights (up to 123 voxels) used in the classification analysis, these null findings are unlikely to reflect an insufficient number of voxels in each ROI. These results suggest that the hippocampus and putamen may represent complementary information regarding event features at different levels of abstraction.

Table 2.

Accuracy of congruency effect classifiers as a function of the level of priming and ROI (each ROI defined as the intersection of the conjunction region in Fig. 3E with the respective AAL mask)

| ROI | Congruency-level classifier | Category-level classifier | Identity-level classifier |
|---|---|---|---|
| Hippocampus ROI | 0.62* | 0.64* | 0.52 |
| Putamen ROI | 0.64* | 0.56 | 0.56 |

*p < 0.05 using permutation tests.

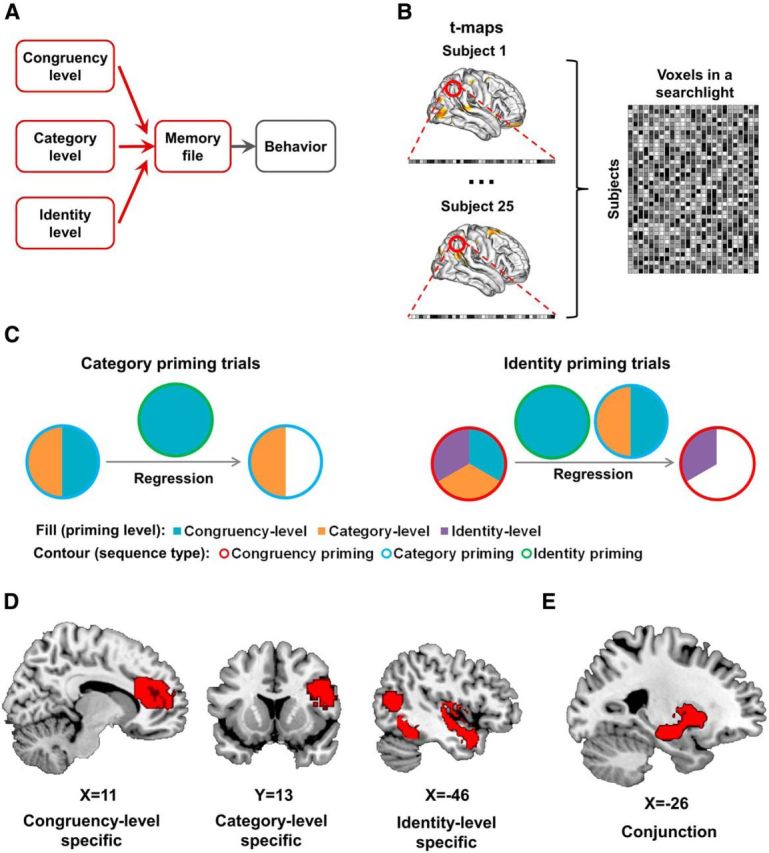

Neural signals in hippocampus and putamen predict behavioral priming effects

The preceding analyses suggest that hippocampus and putamen jointly encode event representations that integrate information over various levels of abstraction ranging from physical stimulus and response features to attentional states. However, it does not necessarily follow that these regions' representations are directly involved in driving behavioral priming effects. Our second set of analyses therefore probed how the matching of multilevel event features across trials is translated from neural into behavioral effects (as highlighted in red in Fig. 4A). We reasoned that brain regions involved in this process should harbor neural CSE signal patterns from which we can predict the size of the behavioral CSE. To pinpoint these brain regions, we conducted a regression-based brain–behavior analysis (Kahnt et al., 2011; Jimura and Poldrack, 2012) in which we tried to predict the behavioral CSE using multivariate patterns pertaining to the neural CSE (Fig. 4B; see Materials and Methods). Briefly, for each searchlight, the neural CSEs for each subject and each sequence condition (see matrix A in Fig. 4B) were fit to their corresponding behavioral CSEs (vector y in Fig. 4B) in the training sample. The resulting fitting parameters (vector b in Fig. 4B) were then applied to the test subject's neural CSEs (see matrix A_test in Fig. 4B) to produce a set of three simulated behavioral CSEs, one for each sequence condition. After cross-validation, simulated behavioral CSEs were correlated with observed behavioral CSEs to assess the quality of fit.

Figure 4.

Multivariate brain–behavior analysis results. A, Within the context of the event file model (Fig. 1A), the present analysis examines the translation of event file matching between successive trials into behavior (highlighted in red). B, Illustration of the analysis procedure (see Materials and Methods for details). C, Lateral views of brain regions (in red; p < 0.05, corrected) with neural CSEs that could predict behavioral CSEs. D, Individual predicted behavioral CSEs computed using data from the hippocampus–putamen ROI defined by the whole-brain brain–behavior analysis plotted as a function of observed behavioral CSEs. The line shows the linear trend between the predicted and observed behavioral CSEs. E, The overlap (in yellow) of regions capable of decoding the CSE at all three levels of abstraction (in red, also see Fig. 3E) and the searchlights with neural CSEs that predicted behavioral CSEs (in green).

This analysis revealed significant prediction of behavior from fMRI data (p < 0.05, corrected; Fig. 4C) in bilateral temporal regions, including the temporal poles and posterior lateral temporal cortex, in addition to the right temporoparietal junction and the left insula. Most notably, we again detected the left putamen and hippocampus in this analysis. This suggests that these regions not only integrate event features across different levels of abstraction, but are also involved in the matching and retrieval process by which integrated event representations drive behavior. We followed up on this finding with two additional analyses. These investigated whether voxels in each of these structures could predict behavioral CSEs by themselves, and whether the same hippocampus and putamen subregions identified in the event-binding analyses above would also be able to predict behavior. First, we defined ROIs using the left putamen and hippocampus clusters that survived multiple-comparison correction (see Materials and Methods) and masked these ROIs with the AAL anatomical templates of the left putamen and left hippocampus. We found significant positive correlations between simulated and observed behavioral CSEs in both regions (hippocampus: r = 0.23, p < 0.05; putamen: r = 0.30, p < 0.005; both ROIs combined: r = 0.28, p < 0.01; permutation tests; Fig. 4D). These hippocampus and putamen ROIs also largely overlapped with the left putamen and hippocampus cluster that decoded priming effects at all three levels of abstraction (Fig. 4E). Second, we tested directly whether neural signal patterns in the hippocampus and putamen region defined by the event-binding findings (Fig. 3E) could also predict the size of the behavioral CSE; this was indeed the case (r = 0.29, p < 0.01, permutation test).

In sum, our data across the two sets of analyses suggest that the putamen and hippocampus are involved both in event file integration and in a matching process of event features across trials. This matching process presumably consists of the cued retrieval and application of an integrated memory event file to facilitate response selection.

Discussion

Recent behavioral studies have demonstrated that the binding of event information in memory extends beyond the long-acknowledged linking of stimulus features (Treisman and Gelade, 1980) and responses (Hommel, 1998) to incorporate more abstract and “internal” event features, most notably attentional control states (Spapé and Hommel, 2008; Crump and Milliken, 2009; for review, see Egner, 2014). The present study is the first to assess the neural substrates of event feature binding that ranged from concrete stimulus and response properties to stimulus categories to control demands (or attentional states). Using a novel fMRI paradigm, we found that neural signal patterns in the (left) hippocampus and putamen represented event priming effects at all levels of event features and furthermore predicted the behavioral expression of trial-by-trial priming effects. Together, these results suggest that both medial temporal lobe (MTL) and dorsal striatum (DS) are crucially involved in binding together “holistic” event representations that link physical stimulus and response characteristics with internal control states, which afford shortcuts to adaptive information processing and response selection in a stable environment. We discuss key aspects of our design and results in turn.

We assessed the process of event file binding at different levels of abstraction by exploiting distinct feature priming sources of the CSE, as delineated in the recent cognitive control literature (for review, see Egner, 2007, 2014; Duthoo et al., 2014). The present design contributes to this literature by manipulating different levels of event feature learning orthogonally within the same task (for a related approach, see Weissman et al., 2015). A key validation question was therefore whether all levels of priming would in fact be observable in this design. Importantly, and consistent with the basic assumption that priming effects can occur simultaneously for different event features, we obtained robust CSEs for each of the sequence conditions (Fig. 2). Although CSEs at the level of stimulus identity and category in our design represent a mixture of priming effects from those levels and all “higher” levels, we conducted additional analyses to show that the most concrete possible level of priming did in fact modulate RTs in each condition.

The main aim of this study was to determine which brain regions underlie the mnemonic integration of “external” event characteristics with the concurrent internal, attentional state of the individual. To this end, we used a between-subjects neural pattern classifier approach to first delineate neural signal patterns associated with each level of event feature coding and then test for brain regions representing information about all three event feature levels. This analysis identified the anterior hippocampus and the putamen as harboring representations related to both external and internal aspects of the trial events (Fig. 3E), which we interpret as indicating that these MTL and DS regions are involved in the creation of “integrative” or “holistic” event files (Egner, 2014). This functional attribution was furthermore bolstered by a multivariate brain–behavior correlation analysis in which we found that neural signal patterns in the very same regions of the hippocampus and putamen predicted the magnitude of behavioral priming effects (i.e., the CSE) across subjects (Fig. 4).

The involvement of the anterior hippocampus in integrating internal states with external event features was hypothesized a priori (Egner, 2014) and fits well with this structure's proposed role in promoting fast-paced learning (Squire, 1992; Eichenbaum and Cohen, 2001) and binding processes in the context of relational memory encoding (Sperling et al., 2003; Preston et al., 2004; Prince et al., 2005; Shohamy and Wagner, 2008). In fact, previous studies highlighted the anterior (as opposed to posterior) hippocampus in particular as a key structure for supporting relational memory (Schacter and Wagner, 1999). Of particular relevance to the present study, an elegant recent experiment showed that this region is involved in the binding and retrieving of “holistic event engrams” representing different elements of a scene such as locations, people, and objects (Horner et al., 2015). Crucially, the present findings complement and significantly extend this conception by showing that hippocampal event binding incorporates not only various elements of an observed scene or event, but also the concurrent internal attentional state of the observer.

The putamen's involvement in this type of holistic event binding represents a more surprising finding, particularly when considering the traditional view that the hippocampus and DS support distinct or even competing memory systems that operate at different time scales, with the DS thought to mediate primarily slow, procedural learning (Gabrieli et al., 1995; Poldrack and Packard, 2003). However, recent work renders this categorical distinction between MTL and DS function less tenable and the type of finding we report in the present study has a number of parallels in the contemporary literature.

First, it is well established that the DS contributes to the acquisition of stimulus–response and stimulus–category associations (Packard and Knowlton, 2002; Seger and Cincotta, 2005). Importantly, however, recent work demonstrated that this binding function extends beyond slow, multitrial learning to fast, single-trial memory formation, in which the DS appears to be jointly involved with the hippocampus (Shohamy and Wagner, 2008; Albouy et al., 2008). Second, and of particular relevance to the present data, Sadeh et al. (2011) showed that the recruitment of the DS (specifically, the putamen) in cooperative encoding with the hippocampus might be triggered specifically in contexts in which distracter stimuli need to be filtered out for optimal task performance (see also McNab and Klingberg, 2008). This is precisely the kind of situation that participants encountered in our congruency protocol. Moreover, the current results corroborate the idea that the anterior hippocampus and putamen play complementary roles in this encoding process. Multilevel event learning effects could not be reliably decoded at all three levels from either the hippocampus or the putamen alone (Table 2), but were successfully decoded when signals from both regions were considered together. In addition, the present study extends our appreciation of the nature of this cooperation by showing that it appears to contribute to memory-guided cognitive control by facilitating the encoding and primed retrieval of internal control states that form part of holistic event ensembles.

In addition to these primary findings, we observed some event-feature-selective involvement of other brain regions that broadly followed a posterior-to-anterior anatomical gradient related to their level of abstraction (Fig. 3, Table 1). Priming of concrete stimulus features could unsurprisingly be decoded from large swaths of occipitotemporal cortex. Moreover, selective stimulus category binding effects were observed in posterior frontal cortex, notably including inferior frontal gyrus, which has in the past been implicated in the priming of concepts and abstract features (Schacter et al., 2007). Perhaps most interestingly, we observed selective representations of control demand priming in the insula, caudate, medial frontal cortex (including the anterior cingulate) and parietal regions. Although all of these areas have previously been implicated in imaging studies of cognitive control (Niendam et al., 2012; Jiang and Egner, 2014), the present study assessed their functional roles from a somewhat different perspective than most previous work. Specifically, our analyses gauged the degree to which brain regions harbored mnemonic information about the relationship (or match) between control demands across trials, which implicates these regions in control-learning processes. This interpretation is bolstered by the fact that the present set of regions matches up very closely with findings from a recent model-based fMRI study that specifically investigated how the brain learns to predict future control demands in a similar task (Jiang et al., 2014, 2015). That study documented an interplay between the anterior insula and caudate in predicting the level of forthcoming control demand and implicated the anterior cingulate in translating that prediction into anticipatory adjustments of attentional set (cf. Botvinick et al., 2001).

We demonstrated the integration of concrete and abstract event features by focusing on the modulation of current-trial processing by features of the previous trial. This focus on short-term effects of event binding should not be taken to imply that there are no longer-term and/or cumulative influences of event file encoding and retrieval on performance. Rather, we investigated first-order feature binding effects while controlling for higher-order effects (see Materials and Methods), primarily because of experimental expediency—these trial-by-trial effects can be easily detected and are grounded in a large prior literature (Hommel, 2004). However, studying event file effects over longer periods of time would make for an extremely interesting next step in improving our understanding of event feature binding.

In conclusion, the current study investigated how internal attentional control states might be bound together with concrete sensory and response features comprising a task trial “event.” We found that the integration of “holistic” event information ranging from the specifics of observed stimuli to the internal attentional state of the observer appears to be performed jointly by the anterior hippocampus and putamen in concert with other brain regions that selectively support the encoding of different types of event features. Specifically, the integration of attentional control settings with the event file seems to involve, beyond the hippocampus and putamen, a network of insula, caudate, anterior cingulate, and parietal cortices.

Footnotes

This work was supported in part by the National Institutes of Health–National Institute of Mental Health (Grant R01 MH087610 to T.E.). We thank Alison Adcock and Roberto Cabeza for helpful comments on a previous version of this manuscript, and Daniel Weissman and Mike X. Cohen for discussing the task design.

The authors declare no competing financial interests.

References

- Albouy G, Sterpenich V, Balteau E, Vandewalle G, Desseilles M, Dang-Vu T, Darsaud A, Ruby P, Luppi PH, Degueldre C, Peigneux P, Luxen A, Maquet P. Both the hippocampus and striatum are involved in consolidation of motor sequence memory. Neuron. 2008;58:261–272. doi: 10.1016/j.neuron.2008.02.008.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol Rev. 2001;108:624–652. doi: 10.1037/0033-295X.108.3.624.

- Bugg JM, Jacoby LL, Chanani S. Why it is too early to lose control in accounts of item-specific proportion congruency effects. J Exp Psychol Hum Percept Perform. 2011;37:844–859. doi: 10.1037/a0019957.

- Cheadle S, Wyart V, Tsetsos K, Myers N, de Gardelle V, Herce Castañón S, Summerfield C. Adaptive gain control during human perceptual choice. Neuron. 2014;81:1429–1441. doi: 10.1016/j.neuron.2014.01.020.

- Crump MJ, Logan GD. Contextual control over task-set retrieval. Atten Percept Psychophys. 2010;72:2047–2053. doi: 10.3758/BF03196681.

- Crump MJC, Milliken B. The flexibility of context-specific control: evidence for context-driven generalization of item-specific control settings. Q J Exp Psychol. 2009;62:1523–1532. doi: 10.1080/17470210902752096.

- Crump MJ, Gong Z, Milliken B. The context-specific proportion congruent Stroop effect: location as a contextual cue. Psychon Bull Rev. 2006;13:316–321. doi: 10.3758/BF03193850.

- Dong DW, Atick JJ. Statistics of natural time-varying images. Network. 1995;6:345–358.

- Duthoo W, Abrahamse EL, Braem S, Boehler CN, Notebaert W. The congruency sequence effect 3.0: a critical test of conflict adaptation. PLoS One. 2014;9:e110462. doi: 10.1371/journal.pone.0110462.

- Egner T. Congruency sequence effects and cognitive control. Cogn Affect Behav Neurosci. 2007;7:380–390. doi: 10.3758/CABN.7.4.380.

- Egner T. Creatures of habit (and control): a multi-level learning perspective on the modulation of congruency effects. Front Psychol. 2014;5:1247. doi: 10.3389/fpsyg.2014.01247.

- Egner T, Hirsch J. The neural correlates and functional integration of cognitive control in a Stroop task. Neuroimage. 2005;24:539–547. doi: 10.1016/j.neuroimage.2004.09.007.

- Eichenbaum H, Cohen NJ. From conditioning to conscious recollection: memory systems of the brain. Oxford: Oxford University Press; 2001.

- Fischer J, Whitney D. Serial dependence in visual perception. Nat Neurosci. 2014;17:738–743. doi: 10.1038/nn.3689.

- Gabrieli JDE, Fleischman DA, Keane MM, Reminger SL, Morrell F. Double dissociation between memory systems underlying explicit and implicit memory in the human brain. Psychol Sci. 1995;6:76–82. doi: 10.1111/j.1467-9280.1995.tb00310.x.

- Goschke T, Bolte A. Implicit learning of semantic category sequences: Response-independent acquisition of abstract sequential regularities. J Exp Psychol Learn Mem Cogn. 2007;33:394–406. doi: 10.1037/0278-7393.33.2.394. [DOI] [PubMed] [Google Scholar]

- Gratton G, Coles MG, Donchin E. Optimizing the use of information: strategic control of activation of responses. J Exp Psychol Gen. 1992;121:480–506. doi: 10.1037/0096-3445.121.4.480. [DOI] [PubMed] [Google Scholar]

- Heinemann A, Kunde W, Kiesel A. Context-specific prime-congruency effects: On the role of conscious stimulus representations for cognitive control. Consciousness and Cognition. 2009;18:966–976. doi: 10.1016/j.concog.2009.08.009. [DOI] [PubMed] [Google Scholar]

- Hommel B. Automatic stimulus-response translation in dual-task performance. J Exp Psychol Hum Percept Perform. 1998;24:1368–1384. doi: 10.1037/0096-1523.24.5.1368. [DOI] [PubMed] [Google Scholar]

- Hommel B. Event files: feature binding in and across perception and action. Trends Cogn Sci. 2004;8:494–500. doi: 10.1016/j.tics.2004.08.007. [DOI] [PubMed] [Google Scholar]

- Hommel B, Musseler J. Action-feature integration blinds to feature-overlapping perceptual events: evidence from manual and vocal actions. Q J Exp Psychol. 2006;59:509–523. doi: 10.1080/02724980443000836. [DOI] [PubMed] [Google Scholar]

- Horner AJ, Bisby JA, Bush D, Lin WJ, Burgess N. Evidence for holistic episodic recollection via hippocampal pattern completion. Nat Commun. 2015;6:7462. doi: 10.1038/ncomms8462. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang J, Egner T. Using neural pattern classifiers to quantify the modularity of conflict-control mechanisms in the human brain. Cereb Cortex. 2014;24:1793–1805. doi: 10.1093/cercor/bht029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang J, Summerfield C, Egner T. Attention sharpens the distinction between expected and unexpected percepts in the visual brain. J Neurosci. 2013;33:18438–18447. doi: 10.1523/JNEUROSCI.3308-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang J, Heller K, Egner T. Bayesian modeling of flexible cognitive control. Neurosci Biobehav Rev. 2014;46:30–43. doi: 10.1016/j.neubiorev.2014.06.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang J, Beck J, Heller K, Egner T. An insula-frontostriatal network mediates flexible cognitive control by adaptively predicting changing control demands. Nat Commun. 2015;6:8165. doi: 10.1038/ncomms9165. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jimura K, Poldrack RA. Analyses of regional-average activation and multivoxel pattern information tell complementary stories. Neuropsychologia. 2012;50:544–552. doi: 10.1016/j.neuropsychologia.2011.11.007. [DOI] [PubMed] [Google Scholar]

- Kahnt T, Heinzle J, Park SQ, Haynes JD. Decoding different roles for vmPFC and dlPFC in multi-attribute decision making. Neuroimage. 2011;56:709–715. doi: 10.1016/j.neuroimage.2010.05.058. [DOI] [PubMed] [Google Scholar]

- Keizer AW, Colzato LS, Hommel B. Integrating faces, houses, motion, and action: spontaneous binding across ventral and dorsal processing streams. Acta Psychol. 2008;127:177–185. doi: 10.1016/j.actpsy.2007.04.003. [DOI] [PubMed] [Google Scholar]

- King JA, Korb FM, Egner T. Priming of control: implicit contextual cuing of top-down attentional set. J Neurosci. 2012;32:8192–8200. doi: 10.1523/JNEUROSCI.0934-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kühn S, Keizer AW, Colzato LS, Rombouts SA, Hommel B. The neural underpinnings of event-file management: evidence for stimulus-induced activation of and competition among stimulus-response bindings. J Cogn Neurosci. 2011;23:896–904. doi: 10.1162/jocn.2010.21485. [DOI] [PubMed] [Google Scholar]

- Kunde W, Wühr P. Sequential modulations of correspondence effects across spatial dimensions and tasks. Mem Cognit. 2006;34:356–367. doi: 10.3758/BF03193413. [DOI] [PubMed] [Google Scholar]

- Logan GD. Toward an instance theory of automatization. Psychological Review. 1988;95:492–527. doi: 10.1037/0033-295X.95.4.492. [DOI] [Google Scholar]

- MacLeod CM. Half a century of research on the Stroop effect: an integrative review. Psychol Bull. 1991;109:163–203. doi: 10.1037/0033-2909.109.2.163. [DOI] [PubMed] [Google Scholar]

- Mayr U, Awh E, Laurey P. Conflict adaptation effects in the absence of executive control. Nat Neurosci. 2003;6:450–452. doi: 10.1038/nn1051. [DOI] [PubMed] [Google Scholar]

- McNab F, Klingberg T. Prefrontal cortex and basal ganglia control access to working memory. Nat Neurosci. 2008;11:103–107. doi: 10.1038/nn2024. [DOI] [PubMed] [Google Scholar]

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005. [DOI] [PubMed] [Google Scholar]

- Niendam TA, Laird AR, Ray KL, Dean YM, Glahn DC, Carter CS. Meta-analytic evidence for a superordinate cognitive control network subserving diverse executive functions. Cogn Affect Behav Neurosci. 2012;12:241–268. doi: 10.3758/s13415-011-0083-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Packard MG, Knowlton BJ. Learning and memory functions of the Basal Ganglia. Annu Rev Neurosci. 2002;25:563–593. doi: 10.1146/annurev.neuro.25.112701.142937. [DOI] [PubMed] [Google Scholar]

- Pashler H, Baylis G. Procedural learning.2. Intertrial repetition effects in speeded-choice tasks. J Exp Psychol Learn Mem Cogn. 1991;17:33–48. doi: 10.1037/0278-7393.17.1.33. [DOI] [Google Scholar]

- Poldrack RA, Packard MG. Competition among multiple memory systems: converging evidence from animal and human brain studies. Neuropsychologia. 2003;41:245–251. doi: 10.1016/S0028-3932(02)00157-4. [DOI] [PubMed] [Google Scholar]

- Preston AR, Shrager Y, Dudukovic NM, Gabrieli JD. Hippocampal contribution to the novel use of relational information in declarative memory. Hippocampus. 2004;14:148–152. doi: 10.1002/hipo.20009. [DOI] [PubMed] [Google Scholar]

- Prince SE, Daselaar SM, Cabeza R. Neural correlates of relational memory: successful encoding and retrieval of semantic and perceptual associations. J Neurosci. 2005;25:1203–1210. doi: 10.1523/JNEUROSCI.2540-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ridderinkhof KR. Micro- and macro-adjustments of task set: activation and suppression in conflict tasks. Psychological Research Psychologische Forschung. 2002;66:312–323. doi: 10.1007/s00426-002-0104-7. [DOI] [PubMed] [Google Scholar]

- Sadeh T, Shohamy D, Levy DR, Reggev N, Maril A. Cooperation between the hippocampus and the striatum during episodic encoding. J Cogn Neurosci. 2011;23:1597–1608. doi: 10.1162/jocn.2010.21549. [DOI] [PubMed] [Google Scholar]

- Schacter DL, Wagner AD. Medial temporal lobe activations in fMRI and PET studies of episodic encoding and retrieval. Hippocampus. 1999;9:7–24. doi: 10.1002/(SICI)1098-1063(1999)9:1%3C7::AID-HIPO2%3E3.0.CO%3B2-K. [DOI] [PubMed] [Google Scholar]

- Schacter DL, Wig GS, Stevens WD. Reductions in cortical activity during priming. Curr Opin Neurobiol. 2007;17:171–176. doi: 10.1016/j.conb.2007.02.001. [DOI] [PubMed] [Google Scholar]

- Schmidt JR. Questioning conflict adaptation: proportion congruent and Gratton effects reconsidered. Psychon Bull Rev. 2013;20:615–630. doi: 10.3758/s13423-012-0373-0. [DOI] [PubMed] [Google Scholar]

- Schmidt JR, Weissman DH. Congruency sequence effects without feature integration or contingency learning confounds. PLoS One. 2014;9:e102337. doi: 10.1371/journal.pone.0102337. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Seger CA, Cincotta CM. The roles of the caudate nucleus in human classification learning. J Neurosci. 2005;25:2941–2951. doi: 10.1523/JNEUROSCI.3401-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shohamy D, Wagner AD. Integrating memories in the human brain: hippocampal-midbrain encoding of overlapping events. Neuron. 2008;60:378–389. doi: 10.1016/j.neuron.2008.09.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Spapé MM, Hommel B. He said, she said: episodic retrieval induces conflict adaptation in an auditory Stroop task. Psychonomic Bulletin and Review. 2008;15:1117–1121. doi: 10.3758/PBR.15.6.1117. [DOI] [PubMed] [Google Scholar]

- Sperling R, Chua E, Cocchiarella A, Rand-Giovannetti E, Poldrack R, Schacter DL, Albert M. Putting names to faces: successful encoding of associative memories activates the anterior hippocampal formation. Neuroimage. 2003;20:1400–1410. doi: 10.1016/S1053-8119(03)00391-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Squire LR. Memory and the hippocampus: a synthesis from findings with rats, monkeys, and humans. Psychol Rev. 1992;99:195–231. doi: 10.1037/0033-295X.99.2.195. [DOI] [PubMed] [Google Scholar]

- Stelzer J, Chen Y, Turner R. Statistical inference and multiple testing correction in classification-based multi-voxel pattern analysis (MVPA): random permutations and cluster size control. Neuroimage. 2013;65:69–82. doi: 10.1016/j.neuroimage.2012.09.063. [DOI] [PubMed] [Google Scholar]

- Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5. [DOI] [PubMed] [Google Scholar]

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978. [DOI] [PubMed] [Google Scholar]

- Verguts T, Notebaert W. Adaptation by binding: a learning account of cognitive control. Trends Cogn Sci. 2009;13:252–257. doi: 10.1016/j.tics.2009.02.007. [DOI] [PubMed] [Google Scholar]

- Waszak F, Hommel B, Allport A. Task-switching and long-term priming: role of episodic stimulus-task bindings in task-shift costs. Cogn Psychol. 2003;46:361–413. doi: 10.1016/S0010-0285(02)00520-0. [DOI] [PubMed] [Google Scholar]

- Weidenbacher U, Layher G, Strauss P-M, Neumann H. A comprehensive head pose and gaze database. Paper presented at 3rd IET International Conference on Intelligent Environments; September; Ulm, Germany. 2007. [Google Scholar]

- Weissman DH, Jiang JF, Egner T. Determinants of congruency sequence effects without learning and memory confounds. J Exp Psychol Hum Percept Perform. 2014;40:2022–2037. doi: 10.1037/a0037454. [DOI] [PubMed] [Google Scholar]

- Weissman DH, Hawks Z, Egner T. Different levels of learning interact to shape the congruency sequence effect. J Exp Psychol Learn Mem Cogn. 2015 doi: 10.1037/xlm0000182. In press. [DOI] [PubMed] [Google Scholar]

- Xue G, Dong Q, Chen C, Lu Z, Mumford JA, Poldrack RA. Greater neural pattern similarity across repetitions is associated with better memory. Science. 2010;330:97–101. doi: 10.1126/science.1193125. [DOI] [PMC free article] [PubMed] [Google Scholar]