Summary

Adults optimize perceptual judgements by integrating different types of sensory information [1, 2]. This engages specialized neural circuits that fuse signals from the same [3, 4, 5] or different [6] modalities. Whereas young children can use sensory cues independently, adult-like precision gains from cue combination only emerge around ages 10 to 11 years [7, 8, 9]. Why does it take so long to make best use of sensory information? Existing data cannot distinguish whether this (1) reflects surprisingly late changes in sensory processing (sensory integration mechanisms in the brain are still developing) or (2) depends on post-perceptual changes (integration in sensory cortex is adult-like, but higher-level decision processes do not access the information) [10]. We tested visual depth cue integration in the developing brain to distinguish these possibilities. We presented children aged 6–12 years with displays depicting depth from binocular disparity and relative motion and made measurements using psychophysics, retinotopic mapping, and pattern classification fMRI. Older children (>10.5 years) showed clear evidence for sensory fusion in V3B, a visual area thought to integrate depth cues in the adult brain [3, 4, 5]. By contrast, in younger children (<10.5 years), there was no evidence for sensory fusion in any visual area. This significant age difference was paired with a shift in perceptual performance around ages 10 to 11 years and could not be explained by motion artifacts, visual attention, or signal quality differences. Thus, whereas many basic visual processes mature early in childhood [11, 12], the brain circuits that fuse cues take a very long time to develop.

Highlights

-

•

Children aged 6–12 years viewed displays depicting depth via two visual cues

-

•

We used MVPA fMRI to test for fusion of these cues in retinotopic cortex

-

•

Perceptual and cortical fusion of depth cues emerged only after age ∼11 years

-

•

Children’s perception is limited by immature fusion of depth cues in visual cortex

Humans combine sensory signals to improve perceptual precision, but this ability does not develop until ∼11 years of age. Dekker et al. show that the onset of this perceptual skill is linked to functional changes within the visual cortex, revealing the surprisingly long development of sensory mechanisms underlying human perception.

Results

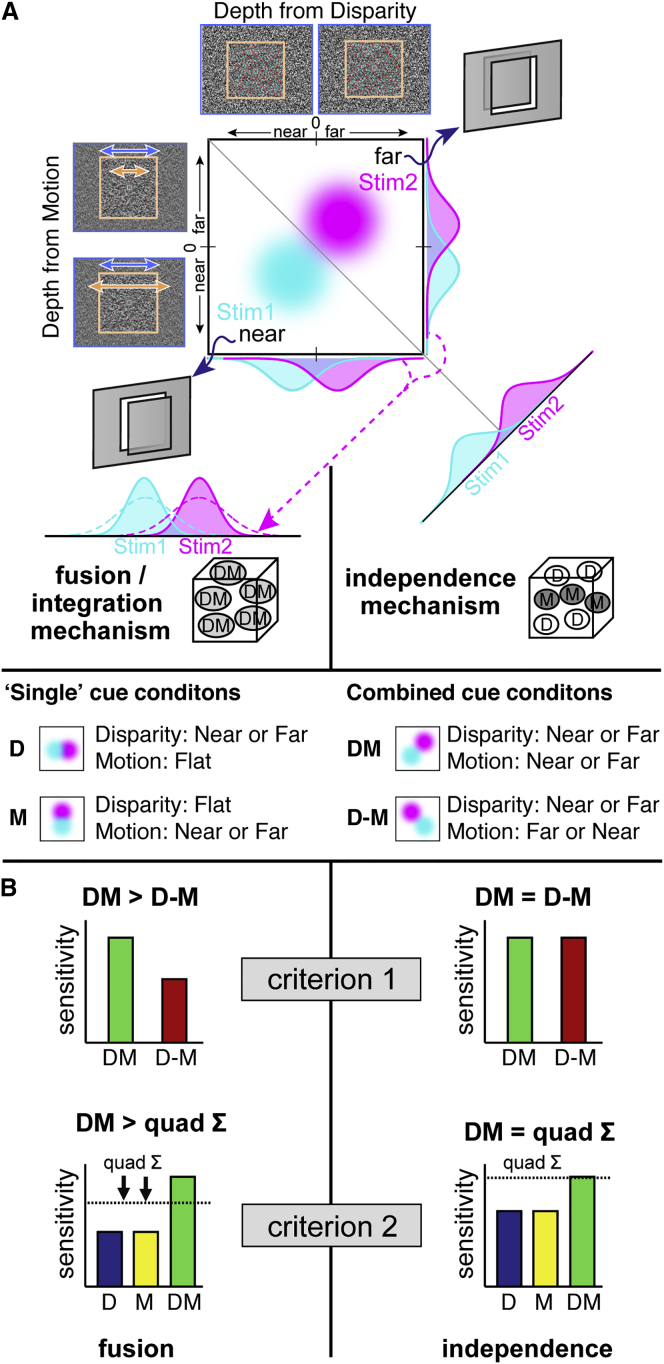

To measure how cue integration develops in childhood, we adapted methods used with adults [3]. We presented dot displays depicting a target square in front of or behind its surround (Figure 1A). The impression of depth was created using differences in dot positions between the two eyes (binocular disparity) and differences in the target’s speed relative to its surround (relative motion). Using this disparity-motion stimulus space, we created four conditions in which the two targets’ near versus far depth was defined by (1) disparity, D, where the motion cue indicated zero depth (i.e., flat); (2) relative motion, M, where the disparity cue was flat; (3) both cues conveying consistent depths, DM (e.g., disparity = “near”; motion = “near”); or (4) both cues in extreme conflict, D-M (e.g., disparity = “near”; motion = “far”).

Figure 1.

Detection Mechanisms and Integration Criteria

(A) Near versus far depths defined by motion and disparity. We illustrate depth estimators for stimulus 1 versus 2 as bivariate Gaussian distributions (magenta versus cyan “blobs”) in this motion-disparity space. A fusion mechanism (left) combines the cue dimensions to reduce variance: averaged estimator distributions become more “peaked” and are thus more discriminable. By contrast, an independence mechanism (right) increases the effective separation between the stimuli: by Pythagoras’ theorem (quadratic sum), the peak-to-peak separation between stimulus 1 and 2 is greater along the hypotenuse. Experimentally, we draw stimuli from different regions of the disparity-motion space. In “single” cue cases (D and M), stim1 and 2 differ in disparity-defined depth, but motion stays the same or vice versa. In combined cue conditions, disparity and motion can depict depth positions consistently (DM) or indicate opposite depths (D-M).

(B) Predictions for fusion (left) versus independence (right) mechanisms. In criterion 1, the fusion mechanism is compromised (lower performance) in the D-M condition, but the independence mechanism is unaffected because depth differences are detected independently. In criterion 2, the fusion mechanism is compromised by the “flat” cues in the D and M single cue conditions. In consequence, the ideal observer prediction (quadratic sum) underestimates DM empirical performance in the fusion case.

These stimuli were designed to distinguish between two possible detection mechanisms. An optimal fusion/integration mechanism averages disparity and motion depth estimates into a fused estimate with lower variance (Figure 1A, left; the fused distributions are more sharply peaked). Under this scenario, the stimuli are more discriminable because fused depth estimators are more reliable. Alternatively, we can conceive an optimal independence mechanism that exploits the outputs of separate detectors for disparity and motion. This mechanism works by finding the maximal separation between the two stimuli (i.e., the magenta and cyan “blobs” in Figure 1A are furthest apart when projected orthogonal to the negative diagonal). Performance for this mechanism corresponds to the quadratic sum of the separations along the disparity and motion dimensions, which makes intuitive geometrical sense in terms of Pythagoras’ theorem. Under this scenario, stimuli are more discriminable when defined by two cues because their effective separation is increased (DM = √[D2+M2]). Typically, the performance of these mechanisms looks very similar; however, we can distinguish them experimentally in two ways.

First, both mechanisms will be more sensitive when depth is defined by two cues in agreement (DM condition) compared to depth defined by the single cues (D or M). However, the fusion mechanism is less sensitive when cues are in conflict (D-M), because opposing depth values are averaged together. By contrast, as the independence mechanism uses detectors that only measure one aspect of the stimuli (i.e., only depth from motion or only depth from disparity), the depth sign is effectively ignored (Figure 1B, criterion 1), i.e., the Pythagorean separation still increases whether the cues agree or disagree.

Second, we can compare performance in the DM condition with an ideal observer prediction. For an independence mechanism, this is the quadratic sum of performance in the “single cue” conditions (D and M). However, the fusion mechanism is compromised in the single cue conditions: e.g., the “flat” disparity cue is averaged with the near or far motion cue, resulting in smaller differences between near versus far stimuli. In the fusion case, as the quadratic sum prediction uses (compromised) D and M performance, empirical performance in DM (where all conflict is removed) will surpass the prediction (Figure 1B, criterion 2; see [13] for more details).

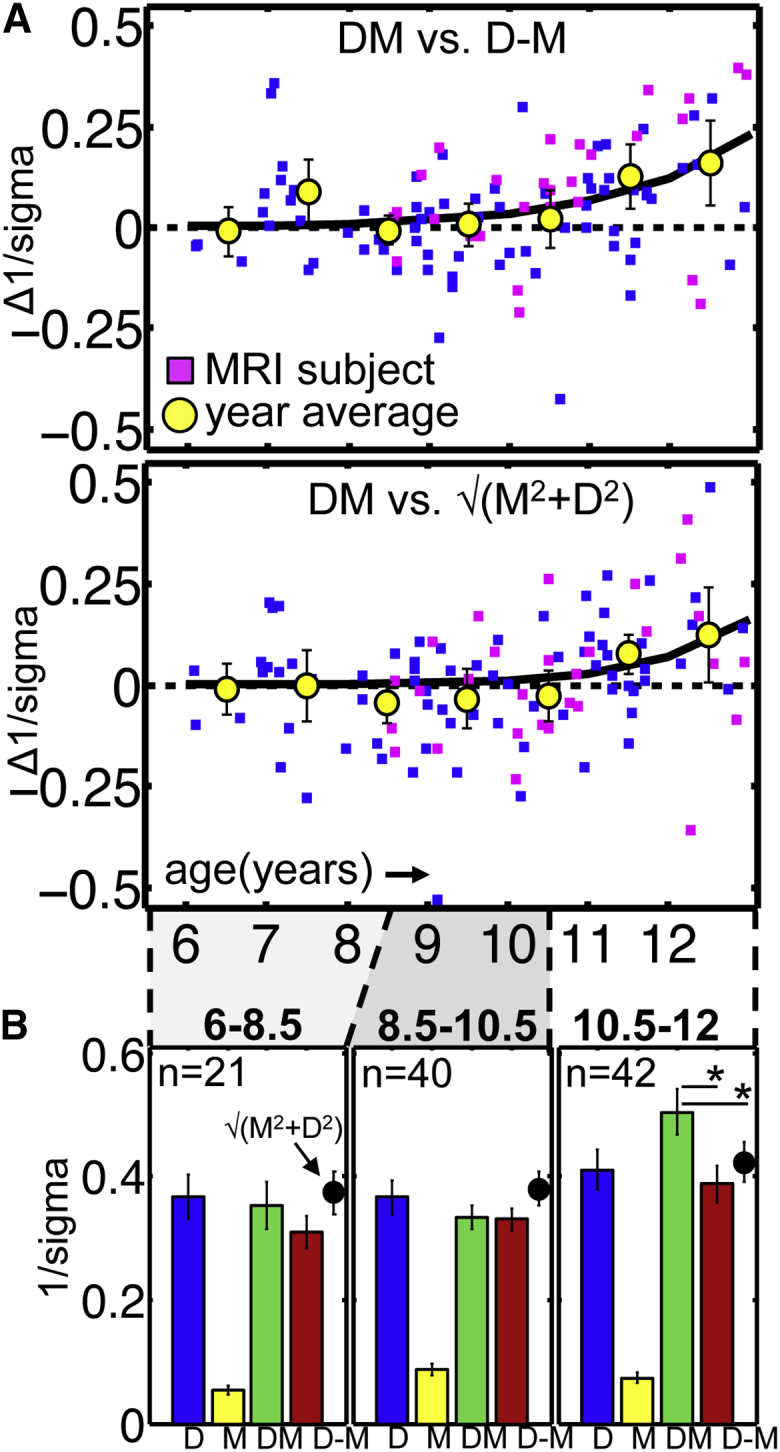

Behavioral Psychophysics Measures of Cue Integration

To assess perceptual cue integration, children (n = 103; age 6–12 years) judged which of two sequentially presented square planes appeared “furthest” behind the surround (Figure 1A). We fit their responses with a cumulative Gaussian and quantified performance using 1/sigma (larger indicates better depth sensitivity). We measured performance under the four experimental conditions (D, M, DM, and D-M) and assessed integration using our two criteria (1) DM − D-M and (2) DM − √(M2+D2) (Figure 2A; positive values suggest sensory fusion).

Figure 2.

Behavioral Psychophysics Results

(A) Integration indices by age: individual subjects and mean by year (error bars: 95% CI), and exponential function fitted to individual data points. Positive values indicate cue integration.

(B) Mean (95% CI) 1/sigma of cumulative Gaussians fitted to participants’ depth discriminations. Higher values indicate better depth sensitivity.

Both indices increased between the 6th and 12th year of life (Pearson’s linear correlation DM − D-M = 0.25; DM − √[M2+D2] = 0.28; both p < 0.05). This increase was well described by an exponential function, diverging from zero around age 10 years (Figure 2A; for details and model selection, see Table S1). As a group, however, 10 year olds did not meet the integration criteria yet whereas 11 year olds did (yellow circles and 95% CIs are above zero). We therefore identified 10.5 years as a reasonable (although necessarily approximate) cutoff age for the emergence of robust integration abilities. Indeed, children grouped into an age bin of 10.5–12 years (Figure 2B) met both criteria for perceptual cue integration (paired t tests of DM versus D-M: t41 = 3.8, p < 0.001; DM versus √[M2+D2]: t41 = 3.3, p = 0.002). By contrast, we found no evidence for integration at 6–8.5 years (DM versus D-M: t20 = 1.6, p = 0.13; DM versus √[M2+D2]: t20 = −0.71, p = 0.49) or 8.5–10.5 years (DM versus D-M: t37 = 0.15, p = 0.88); DM sensitivity is lower than √(M2+D2) prediction: (t39 = −2.3; p = 0.034). This is not because relative motion was too unreliable compared with disparity to induce perceptual benefits via fusion; M and D differed most in 10.5–12 year olds, where the motion cue nevertheless induced fusion. Together, these psychophysical data show that adult-like perceptual benefits from integrating disparity and motion cues to depth only become reliable by 10 or 11 years of age. Note, whereas our tests uncover integration, we cannot test whether integration is optimal. Previous behavioral work with children tested for optimal fusion [9] by isolating cues from each other [1], but the stimulus differences required create interpretational difficulties for fMRI, so our single cue conditions were designed to contain cue conflicts.

fMRI Measures of Cue Integration

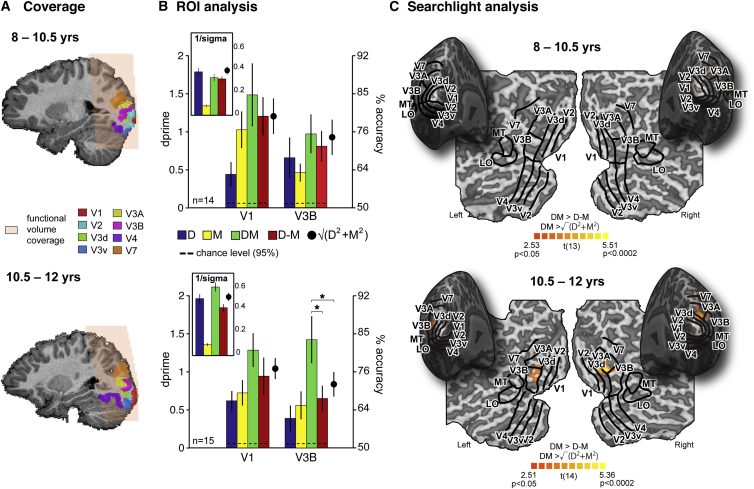

We measured fMRI BOLD responses in independently localized retinotopic regions of interest (scanned volume, Figure 3A; ROIs, Figures 3C and S1A; Supplemental Experimental Procedures) in the visual cortex of 8–12 year olds (n = 29; magenta symbols in Figure 2A). We presented near or far depth stimuli under the four experimental conditions while participants performed an orthogonal fixation task that required no depth judgements (see Control Analyses; Figure S2). We analyzed the data in each ROI by testing the performance of a linear support vector classifier trained to predict near versus far depth based on voxel activation patterns evoked when participants viewed targets in the D, M, DM, and D-M conditions. As with the psychophysical measures, we compared prediction accuracies using two criteria for cue fusion: DM > D-M (criterion 1) and DM > √ (D2+M2) (criterion 2).

Figure 3.

Pattern Classification fMRI Results

(A) Scanned area and ROIs for two representative subjects

(B) Large bar plots: accuracy (d-prime) with which near versus far stimulus depth was decoded from V3B activation patterns. V1 is shown for comparison. Performance was well above chance level; upper bounds (97.5%) were derived from permutation tests (dashed lines). Small panels show the same subjects’ perceptual performance (1/sigma).

(C) Searchlight results. Individual accuracy maps were smoothed (3 mm FWHM) to account for inter-subject variability before statistical maps were computed and projected on the inflated cortical surface. ROIs from two representative subjects are super-imposed on the group result flatmaps. Note that significant regions may therefore be slightly misaligned with respect to their labels. Areas where both integration indices were significantly above zero are colored. T values are from DM versus √(D2+M2) > 0.

Adults show evidence for integrated depth representations in cortical area V3B [3, 4, 5] (overlapping with the kinetic occipital [KO] area [13], a region of cortex also designated LO1) [14]. We therefore expected the development of sensory fusion to be expressed in this brain area. In younger children (<10.5 years), there was no evidence for integration in V3B (Figure 3; paired t tests of DM versus D-M: t13 = 0.82, p = 0.43; DM versus √[D2+M2]: t13 = 0.26, p = 0.80). By contrast, in older children (>10.5 years), V3B activation patterns met our criteria for integration: depth decoding for congruent depth stimuli exceeded performance for conflicting depth stimuli and single-cue predictions (Figure 3; DM versus D-M: t14 = 3.7, p = 0.002; DM versus √[D2+M2]: t14 = 3.3, p = 0.005). These differences in V3B response across age groups were statistically significant (independent samples t tests: DM − D-M, t27 = 2.1, p = 0.043; DM − √[D2+M2], t27 = 2.2, p = 0.036). The age-related change in integration occurred despite decoding accuracies for the D and M conditions being similar across groups (D: t27 = 1.18, p = 0.25; M: t27 = −0.64, p = 0.527) and well above chance (black dotted lines, Figure 3).

We also tested for sensory fusion in V1-4, V3A, V7, LO, and MT (Figure S1B; Table S2; V1 in Figure 3). However, none of these other areas met both criteria for cue integration in either younger or older children. Specifically, indices of integration did not differ across age in any region besides V3B (Figure S1B; Table S2; there were marginally significant effects in directly adjacent area V3A). To ensure we had not missed areas outsides our localized ROIs, we ran a group-level searchlight analysis looking for areas where DM > D-M and DM > √(D2+M2). Results (Figure 3) are mapped onto a representative cortex from each age group for visualization. No cortex met the criteria for integration in 8–10.5 year olds. In older children, only cortex around area V3B met both criteria. Thus, whereas depth defined by motion and disparity could be decoded reliably across visual cortex (including V3B) at all ages, robust evidence for fusion of these cues only emerged around ages 10 to 11 years, coinciding with marked improvements in behavioral performance (Figure 3B, small graphs). This suggests an area intricately linked to the development of cue integration but does not imply a sole locus of fusion nor exclude the involvement of other areas outside the sampled volume.

Control Analyses

We took precautions to minimize age-related confounds. Control analyses suggest that our fMRI findings cannot be explained by motion artifacts, visual attention, or signal quality. First, after our stringent movement exclusion criteria (see Experimental Procedures) head movements were small and equivalent across age groups and did not correlate with classifier performance (mean [and SD] scan-to-scan displacement < 10.5 years: 0.038 [0.014] mm; >10.5 years: 0.029 [0.014] mm, t27 = 1.61, p = 0.12; mean absolute scan-to-scan rotation < 10.5 years: 0.011° [0.0041]; >10.5 years: 0.0085° [0.0040], t27 = 1.73, p = 0.095). Second, to control eye vergence and fixation during fMRI, subjects performed left/right Vernier discriminations with small infrequent targets flashed briefly with 25% probability. Shifts in perceived position provided a subjective index of vergence [15] and revealed a slight change in fixation depth across near and far stimuli (∼2 arcmin; 15% of the stimulus depth). This shift did not correlate with prediction accuracy (Figure S2), making fixation differences an unlikely explanation of our findings. Whereas the proportion of Vernier targets responded to (correctly and incorrectly) differed slightly across condition (F7,17 = 2.4; p = 0.035; due to more responses in Dfar than Dnear), this was similar across group (F7,17 = 0.82; p = 0.58), suggesting shifts in vigilance across condition did not vary with age. Finally, there were no age differences in percent signal change and functional signal to noise (signal mean/SD; Figure S3) or in overall SVM prediction accuracy (<10.5 years: 65% [SD = 6.1]; >10.5 years 65% [SD = 5.6]; t27 = 0.57; p = 0.57), which suggests that data quality and attention to display were well matched across groups.

Discussion

Our findings reveal striking changes in depth representations within visual cortex until late childhood. This suggests that delays in sensory integration found at the behavioral level have their roots in the late maturation of neural circuits involved in sensory fusion, rather than downstream, post-perceptual decision processes.

Does this reflect the need for extensive perceptual learning about cues before they can be combined or a long postnatal time course for human brain maturation? Animal studies indicate an important role for learning: dark-reared kittens fail to develop neural markers of integration without exposure to audio-visual cue pairs [16]. When cue pairs were provided—but misaligned in space—emerging audio-visual integration responses in superior colliculus reflected this unusual relationship [17]. This suggests experience is crucial for learning when cues should be integrated. By extension, it is possible that children in our study did not integrate depth cues until 10 to 11 years because they were still learning to assign the cues to a single common cause. However, this is unlikely as disparity and relative motion both index relative distance across the retina (one across eyes; the other within eyes over time) and are typically highly correlated [18, 19]. Therefore, if the need to learn when these cues jointly signal depth were the only obstacle to developing sensory fusion in childhood, we might expect integration to develop relatively early for this cue pair. Instead, the developmental time course we found resembles those of other cues, both within and across modalities [8, 9, 20], raising the possibility of a maturational bottleneck. Accordingly, once correlated audio-visual signals are provided to mature visually deprived cats, super-additive audio-visual integration responses in superior colliculus develop much faster than in young animals [21]. This suggests that the normal time course is not only prolonged by the need to learn cue statistics but also by constraints from neural circuitry. Whereas it is not yet clear how this relates to human development, the human brain undergoes substantial changes in long- and short-range myelination and connectivity in late childhood [22, 23, 24]. It is possible that such changes play a role in the development of cue integration abilities, for example, by segregating (decorrelating) sensory pathways, thus improving the efficiency of a fusion process [25].

More generally, our findings suggest that perception in childhood is not only limited by noisier signal processing [26, 27]. Rather, the developing brain is still optimizing how it represents and combines uncertain information to make inferences about the world [28]. Current models of brain function place this process at the core of perception and cognition [29, 30]. Other perceptual skills that are still developing in late childhood such as object [31, 32, 33] and scene perception [34] may also be affected by the suboptimal detection of patterns in noise. This highlights the need to understand the extent to which perceptual development in general may be described as optimization of inference.

Experimental Procedures

Participants

We assessed depth discrimination in 142 children aged 6–12 years with no known visual or neurological problems; 27 withdrew from testing or had difficulty perceiving depth from disparity and 39 were tested but excluded from analysis because psychometric functions fitted to their data had a poor fit (R2 < 0.7), showed a large bias (>3 arcmin), or integration indices (Figure 2A) deviated >5 SDs from the mean. We report psychophysical measures from 103 children (for age distribution, see Figure 2A). Less-stringent exclusion criteria (R2 < 0.65; bias > 99; Nincluded = 112) did not change the results. Forty-one 8–12 year olds were invited back for retinotopic mapping and depth cue integration fMRI sessions. After exclusions due to excessive movement (n = 8), failure to complete both sessions (n = 2), or unclear retinotopic borders (n = 1), we report fMRI results of 29 children (magenta symbols, Figure 2A). See Supplemental Experimental Procedures for MRI selection procedures. Procedures were approved by the UCL Research Ethics Committee.

Stimuli and Task

Stimuli consisted of random dot stereograms rendered for red/cyan anaglyphs (following [3]), presented against a mid-gray background (Figure 1A). A central target square (11° × 11°) defined by relative motion and/or binocular disparity was surrounded by a “background” rectangle (20° × 16°) located in the plane of the projection screen. Both dot planes moved horizontally with a sinusoidal movement period of 1 s. The background movement amplitude was fixed at 0.5°. Condition orders were randomized. We accounted for interocular distance and calibrated displays to minimize crosstalk through the anaglyph glasses. Performance was rewarded (Supplemental Experimental Procedures).

Behavioral Psychophysics

Two depth stimuli were presented for 1 s each, separated by a 0.5-s interval fixation screen. Participants judged which of the two intervals contained the square furthest behind its background. One interval had a fixed depth (disparity: 8 arcmin and/or movement amplitude: 0.25°) whereas the other varied across six levels (3–13 arcmin disparity and/or 0.1°–0.4° of movement). In the extreme conflict condition (D-M), relative movement depicted the target in front of the plane (fixed target depth: 0.75°; variable target depths: 0.6°–0.9°). D, M, DM, and D-M stimuli were presented in blocks of 90 trials (15 trials per six depth levels). Participants practiced discriminating the largest depth difference correctly four times in a row before starting each block. Stimuli were presented on an LCD screen (1,920 × 1,080 pix) at eye height and 50 cm distance at 60 Hz.

fMRI

In the scanner, stimuli were either in front of (near; disparity −6 arcmin; motion 0.75°) or behind (far; disparity +6 arcmin; motion 0.25°) the surround. The Vernier fixation stimulus was a square (0.5° × 0.5°) with horizontal and vertical nonius lines (0.35°). The fixation marker was presented within a 1° cut out in the stimulus, at the same (zero) disparity of the projection screen. There were eight stimulus configurations: two target depths (near and far) for each experimental condition (D, M, DM, and D-M). These were presented in blocks of eight stimuli, each shown for 1 s with a 1-s fixation interval. Runs consisted of three repetitions per condition and a 16-s fixation baseline at the start and end. We collected six runs per participant. Stimuli were back projected (1,920 × 1,080 pix) onto a screen inside the bore of the magnet; viewing distance was 65 cm.

MRI Methods

Imaging

BOLD measures were obtained using single-shot EPI (TR = 2 s; volumes = 128; slices = 22; voxel size = 2.3 mm3; isotropic) with a Siemens 1.5T MRI scanner and 32-channel coil without top. Participants who moved more than 1 mm or 5° from scan to scan at any point were excluded from the analysis. In a separate session, we collected high-resolution structural scans and identified retinotopic regions using polar angle mapping (Figure S1A). Standard preprocessing was done with BrainVoyagerQX (Supplemental Experimental Procedures).

Multi-voxel Pattern Analysis

For the ROI analysis, we selected the 400 most-responsive gray matter voxels in each region, based on the t statistic resulting from contrasting all stimulus conditions with the fixation baseline. For the searchlight classification analysis [35] (Supplemental Experimental Procedures), we selected spherical ROI with 8-mm radii, moving voxelwise through the volume of cortex. Voxel time courses were converted to Z scores and shifted by two TRs (4 s) to account for the hemodynamic response. To create voxel patterns, we averaged all eight volumes within each block. Each pattern was then mean centered by subtracting the mean voxel amplitude. We used a linear support vector machine (penalty parameter C = 1) [36] to classify near versus far depth stimuli in each condition (D, M, DM, and D-M) using a leave-one-out cross-validation procedure with six folds, with 15 near and far patterns in each training and three in each test. Mean prediction accuracies were converted to d-prime using:

erfinv, inverse error function. We ran permutation tests to assess chance level prediction accuracy for these data (dotted lines, Figures 3B and S1B) by running 1,000 SVMs with shuffled near/far labels.

Acknowledgments

Supported by UK ESRC grant RES-061-25-0523, the Wellcome Trust (095183/Z/10/Z), JSPS (KAKENHI 26870911), NIH grant R01-MH-081990, a Royal Society Wolfson Research Merit Award, the Swire Trust, and the NIHR Biomedical Research Centre at Moorfields Eye Hospital and the UCL Institute of Ophthalmology.

Published: October 15, 2015

Footnotes

This is an open access article under the CC BY license (http://creativecommons.org/licenses/by/4.0/).

Supplemental Information includes Supplemental Experimental Procedures, three figures, and two tables and can be found with this article online at http://dx.doi.org/10.1016/j.cub.2015.09.043.

Supplemental Information

References

- 1.Hillis J.M., Watt S.J., Landy M.S., Banks M.S. Slant from texture and disparity cues: optimal cue combination. J. Vis. 2004;4:967–992. doi: 10.1167/4.12.1. [DOI] [PubMed] [Google Scholar]

- 2.Ernst M.O., Banks M.S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a. [DOI] [PubMed] [Google Scholar]

- 3.Ban H., Preston T.J., Meeson A., Welchman A.E. The integration of motion and disparity cues to depth in dorsal visual cortex. Nat. Neurosci. 2012;15:636–643. doi: 10.1038/nn.3046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Dövencioğlu D., Ban H., Schofield A.J., Welchman A.E. Perceptual integration for qualitatively different 3-D cues in the human brain. J. Cogn. Neurosci. 2013;25:1527–1541. doi: 10.1162/jocn_a_00417. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Murphy A.P., Ban H., Welchman A.E. Integration of texture and disparity cues to surface slant in dorsal visual cortex. J. Neurophysiol. 2013;110:190–203. doi: 10.1152/jn.01055.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Gu Y., Angelaki D.E., Deangelis G.C. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Nardini M., Jones P., Bedford R., Braddick O. Development of cue integration in human navigation. Curr. Biol. 2008;18:689–693. doi: 10.1016/j.cub.2008.04.021. [DOI] [PubMed] [Google Scholar]

- 8.Gori M., Del Viva M., Sandini G., Burr D.C. Young children do not integrate visual and haptic form information. Curr. Biol. 2008;18:694–698. doi: 10.1016/j.cub.2008.04.036. [DOI] [PubMed] [Google Scholar]

- 9.Nardini M., Bedford R., Mareschal D. Fusion of visual cues is not mandatory in children. Proc. Natl. Acad. Sci. USA. 2010;107:17041–17046. doi: 10.1073/pnas.1001699107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Kiorpes L. Visual development in primates: neural mechanisms and critical periods. Dev. Neurobiol. 2015;75:1080–1090. doi: 10.1002/dneu.22276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Norcia A.M., Tyler C.W. Spatial frequency sweep VEP: visual acuity during the first year of life. Vision Res. 1985;25:1399–1408. doi: 10.1016/0042-6989(85)90217-2. [DOI] [PubMed] [Google Scholar]

- 12.Braddick O.J., Atkinson J. Infants’ sensitivity to motion and temporal change. Optom. Vis. Sci. 2009;86:577–582. doi: 10.1097/OPX.0b013e3181a76e84. [DOI] [PubMed] [Google Scholar]

- 13.Dupont P., De Bruyn B., Vandenberghe R., Rosier A.M., Michiels J., Marchal G., Mortelmans L., Orban G.A. The kinetic occipital region in human visual cortex. Cereb. Cortex. 1997;7:283–292. doi: 10.1093/cercor/7.3.283. [DOI] [PubMed] [Google Scholar]

- 14.Larsson J., Heeger D.J. Two retinotopic visual areas in human lateral occipital cortex. J. Neurosci. 2006;26:13128–13142. doi: 10.1523/JNEUROSCI.1657-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Popple A.V., Smallman H.S., Findlay J.M. The area of spatial integration for initial horizontal disparity vergence. Vision Res. 1998;38:319–326. doi: 10.1016/s0042-6989(97)00166-1. [DOI] [PubMed] [Google Scholar]

- 16.Wallace M.T., Perrault T.J., Jr., Hairston W.D., Stein B.E. Visual experience is necessary for the development of multisensory integration. J. Neurosci. 2004;24:9580–9584. doi: 10.1523/JNEUROSCI.2535-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Xu J., Yu L., Rowland B.A., Stanford T.R., Stein B.E. Incorporating cross-modal statistics in the development and maintenance of multisensory integration. J. Neurosci. 2012;32:2287–2298. doi: 10.1523/JNEUROSCI.4304-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Rogers B., Graham M. Similarities between motion parallax and stereopsis in human depth perception. Vision Res. 1982;22:261–270. doi: 10.1016/0042-6989(82)90126-2. [DOI] [PubMed] [Google Scholar]

- 19.Howard I.P. Oxford University Press; 2012. Perceiving in Depth, Volume 3: Other Mechanisms of Depth Perception. [Google Scholar]

- 20.Petrini K., Remark A., Smith L., Nardini M. When vision is not an option: children’s integration of auditory and haptic information is suboptimal. Dev. Sci. 2014;17:376–387. doi: 10.1111/desc.12127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Yu L., Rowland B.A., Stein B.E. Initiating the development of multisensory integration by manipulating sensory experience. J. Neurosci. 2010;30:4904–4913. doi: 10.1523/JNEUROSCI.5575-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Fair D.A., Dosenbach N.U.F., Church J.A., Cohen A.L., Brahmbhatt S., Miezin F.M., Barch D.M., Raichle M.E., Petersen S.E., Schlaggar B.L. Development of distinct control networks through segregation and integration. Proc. Natl. Acad. Sci. USA. 2007;104:13507–13512. doi: 10.1073/pnas.0705843104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Fair D.A., Cohen A.L., Power J.D., Dosenbach N.U., Church J.A., Miezin F.M., Schlaggar B.L., Petersen S.E. Functional brain networks develop from a “local to distributed” organization. PLoS Comput. Biol. 2009;5:e1000381. doi: 10.1371/journal.pcbi.1000381. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Gogtay N., Giedd J.N., Lusk L., Hayashi K.M., Greenstein D., Vaituzis A.C., Nugent T.F., 3rd, Herman D.H., Clasen L.S., Toga A.W. Dynamic mapping of human cortical development during childhood through early adulthood. Proc. Natl. Acad. Sci. USA. 2004;101:8174–8179. doi: 10.1073/pnas.0402680101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Oruç I., Maloney L.T., Landy M.S. Weighted linear cue combination with possibly correlated error. Vision Res. 2003;43:2451–2468. doi: 10.1016/s0042-6989(03)00435-8. [DOI] [PubMed] [Google Scholar]

- 26.Skoczenski A.M., Norcia A.M. Neural noise limitations on infant visual sensitivity. Nature. 1998;391:697–700. doi: 10.1038/35630. [DOI] [PubMed] [Google Scholar]

- 27.Buss E., Hall J.W., 3rd, Grose J.H. Psychometric functions for pure tone intensity discrimination: slope differences in school-aged children and adults. J. Acoust. Soc. Am. 2009;125:1050–1058. doi: 10.1121/1.3050273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Beck J.M., Ma W.J., Pitkow X., Latham P.E., Pouget A. Not noisy, just wrong: the role of suboptimal inference in behavioral variability. Neuron. 2012;74:30–39. doi: 10.1016/j.neuron.2012.03.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Friston K. The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 2010;11:127–138. doi: 10.1038/nrn2787. [DOI] [PubMed] [Google Scholar]

- 30.Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 2013;36:181–204. doi: 10.1017/S0140525X12000477. [DOI] [PubMed] [Google Scholar]

- 31.Yoon, J., Winawer, J., Witthoft, N., and Markman, E. (2007). Striking deficiency in top-down perceptual reorganization of two-tone images in preschool children. In Proceedings of the IEEE 6th International Conference on Development and Learning, 181–186.

- 32.Bova S.M., Fazzi E., Giovenzana A., Montomoli C., Signorini S.G., Zoppello M., Lanzi G. The development of visual object recognition in school-age children. Dev. Neuropsychol. 2007;31:79–102. doi: 10.1207/s15326942dn3101_5. [DOI] [PubMed] [Google Scholar]

- 33.Dekker T., Mareschal D., Sereno M.I., Johnson M.H. Dorsal and ventral stream activation and object recognition performance in school-age children. Neuroimage. 2011;57:659–670. doi: 10.1016/j.neuroimage.2010.11.005. [DOI] [PubMed] [Google Scholar]

- 34.Ellemberg D., Hansen B.C., Johnson A. The developing visual system is not optimally sensitive to the spatial statistics of natural images. Vision Res. 2012;67:1–7. doi: 10.1016/j.visres.2012.06.018. [DOI] [PubMed] [Google Scholar]

- 35.Kriegeskorte N., Goebel R., Bandettini P. Information-based functional brain mapping. Proc. Natl. Acad. Sci. USA. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Chang C.-C., Lin C.-J. LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011;2:1–27. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.