Abstract

General results from statistical learning theory suggest to understand not only brain computations, but also brain plasticity as probabilistic inference. But a model for that has been missing. We propose that inherently stochastic features of synaptic plasticity and spine motility enable cortical networks of neurons to carry out probabilistic inference by sampling from a posterior distribution of network configurations. This model provides a viable alternative to existing models that propose convergence of parameters to maximum likelihood values. It explains how priors on weight distributions and connection probabilities can be merged optimally with learned experience, how cortical networks can generalize learned information so well to novel experiences, and how they can compensate continuously for unforeseen disturbances of the network. The resulting new theory of network plasticity explains from a functional perspective a number of experimental data on stochastic aspects of synaptic plasticity that previously appeared to be quite puzzling.

Author Summary

Synaptic connectivity between neurons in the brain and the efficacies (“weights”) of these synaptic connections are thought to encode the long-term memory of an organism. But a closer look at their molecular implementation, as well as imaging experiments over longer periods of time, have shown that synaptic connections are subject to numerous stochastic processes. We propose that this seeming unreliability of synaptic connections is not a bug, but an important feature. It endows networks of neurons with an important experimentally observed but theoretically not understood capability: Automatic compensation for internal and external changes. This perspective of network plasticity requires a new conceptual and mathematical framework, which is provided by this article. Stochasticity of synapses is seen here not as noise of an inherently deterministic system, but as an inherent property, similarly as Brownian motion of particles in a physical system cannot be abstracted away if one wants to understand certain properties of a physical system. In fact, we find that this underlying stochasticity of synaptic connections enables a network of neurons to continuously try out new network configurations while maintaining its functionality.

Introduction

We reexamine in this article the conceptual and mathematical framework for understanding the organization of plasticity in networks of neurons in the brain. We will focus on synaptic plasticity and network rewiring (spine motility) in this article, but our framework is also applicable to other network plasticity processes. One commonly assumes, that plasticity moves network parameters θ (such as synaptic connections between neurons and synaptic weights) to values θ* that are optimal for the current computational function of the network. In learning theory, this view is made precise for example as maximum likelihood learning, where model parameters θ are moved to values θ* that maximize the fit of the resulting internal model to the inputs x that impinge on the network from its environment (by maximizing the likelihood of these inputs x). The convergence to θ* is often assumed to be facilitated by some external regulation of learning rates, that reduces the learning rate when the network approaches an optimal solution.

This view of network plasticity has been challenged on several grounds. From the theoretical perspective it is problematic because in the absence of an intelligent external controller it is likely to lead to overfitting of the internal model to the inputs x it has received, thereby reducing its capability to generalize learned knowledge to new inputs. Furthermore, networks of neurons in the brain are apparently exposed to a multitude of internal and external changes and perturbations, to which they have to respond quickly in order to maintain stable functionality.

Other experimental data point to surprising ongoing fluctuations in dendritic spines and spine volumes, to some extent even in the adult brain [1] and in the absence of synaptic activity [2]. Also a significant portion of axonal side branches and axonal boutons were found to appear and disapper within a week in adult visual cortex, even in the absence of imposed learning and lesions [3]. Furthermore surprising random drifts of tuning curves of neurons in motor cortex were observed [4]. Apart from such continuously ongoing changes in synaptic connections and tuning curves of neurons, massive changes in synaptic connectivity were found to accompany functional reorganization of primary visual cortex after lesions, see e.g. [5].

We therefore propose to view network plasticity as a process that continuously moves high-dimensional network parameters θ within some low-dimensional manifold that represents a compromise between overriding structural rules and different ways of fitting the internal model to external inputs x. We propose that ongoing stochastic fluctuations (not unlike Brownian motion) continuously drive network parameters θ within such low-dimensional manifold. The primary conceptual innovation is the departure from the traditional view of learning as moving parameters to values θ* that represent optimal (or locally optimal) fits to network inputs x. We show that our alternative view can be turned into a precise learning model within the framework of probability theory. This new model satisfies theoretical requirements for handling priors such as structural constraints and rules in a principled manner, that have previously already been formulated and explored in the context of artificial neural networks [6, 7], as well as more recent challenges that arise from probabilistic brain models [8]. The low-dimensional manifold of parameters θ that becomes the new learning goal in our model can be characterized mathematically as the high probability regions of the posterior distribution p*(θ∣x) of network parameters θ. This posterior arises as product of a general prior p 𝒮(θ) for network parameters (that enforces structural rules) with a term that describes the quality of the current internal model (e.g. in a predictive coding or generative modeling framework: the likelihood p 𝒩(x∣θ) of inputs x for the current parameter values θ of the network 𝒩). More precisely, we propose that brain plasticity mechanisms are designed to enable brain networks to sample from this posterior distribution p*(θ∣x) through inherent stochastic features of their molecular implementation. In this way synaptic and other plasticity processes are able to carry out probabilistic (or Bayesian) inference through sampling from a posterior distribution that takes into account both structural rules and fitting to external inputs. Hence this model provides a solution to the challenge of [8] to understand how posterior distributions of weights can be represented and learned by networks of neurons in the brain.

This new model proposes to reexamine rules for synaptic plasticity. Rather than viewing trial-to-trial variability and ongoing fluctuations of synaptic parameters as the result of a suboptimal implementation of an inherently deterministic plasticity process, it proposes to model experimental data on synaptic plasticity by rules that consist of three terms: the standard (typically deterministic) activity-dependent (e.g., Hebbian or STDP) term that fits the model to external inputs, a second term that enforces structural rules (priors), and a third term that provides the stochastic driving force. This stochastic force enables network parameters to sample from the posterior, i.e., to fluctuate between different possible solutions of the learning task. The stochastic third term can be modeled by a standard formalism (stochastic Wiener process) that had been developed to model Brownian motion. The first two terms can be modeled as drift terms in a stochastic process. A key insight is that one can easily relate details of the resulting more complex rules for the dynamics of network parameters θ, which now become stochastic differential equations, to specific features of the resulting posterior distribution p*(θ∣x) of parameter vectors θ from which the network samples. Thereby, this theory provides a new framework for relating experimentally observed details of local plasticity mechanisms (including their typically stochastic implementation on the molecular scale) to functional consequences of network learning. For example, one gets a theoretically founded framework for relating experimental data on spine motility to experimentally observed network properties, such as sparse connectivity, specific distributions of synaptic weights, and the capability to compensate against perturbations [9].

We demonstrate the resulting new style of modeling network plasticity in three examples. These examples demonstrate how previously mentioned functional demands on network plasticity, such as incorporation of structural rules, automatic avoidance of overfitting, and inherent and immediate compensation for network perturbances, can be accomplished through stochastic local plasticity processes. We focus here on common models for unsupervised learning in networks of neurons: generative models. We first develop the general learning theory for this class of models, and then describe applications to common non-spiking and spiking generative network models. Both structural plasticity (see [10, 11] for reviews) and synaptic plasticity (STDP) are integrated into the resulting theory of network plasticity.

Results

We present a new theoretical framework for analyzing and understanding local plasticity mechanisms of networks of neurons in the brain as stochastic processes, that generate specific distributions p(θ) of network parameters θ over which these parameters fluctuate. This framework can be used to analyze and model many types of learning processes. We illustrate it here for the case of unsupervised learning, i.e., learning without a teacher or rewards. Obviously many learning processes in biological organisms are of this nature, especially learning processes in early sensory areas, and in other brain areas that have to provide and maintain on their own an adequate level of functionality, even in the face of internal or external perturbations.

A common framework for modeling unsupervised learning in networks of neurons are generative models, which date back to the 19th century, when Helmholtz proposed that perception could be understood as unconscious inference [12]. Since then the hypothesis of the “generative brain” has been receiving considerable attention, fueling interest in various aspects of the relation between Bayesian inference and the brain [8, 13, 14]. The basic assumption of the “Bayesian brain” theory is that the activity z of neuronal networks in the brain can be viewed as an internal model for hidden variables in the outside world that give rise to sensory experiences x (such as the response x of auditory sensory neurons to spoken words that are guessed by an internal model z). The internal model z is usually assumed to be represented by the activity of neurons in the network, e.g., in terms of the firing rates of neurons, or in terms of spatio-temporal spike patterns. A network 𝒩 of stochastically firing neuron is modeled in this framework by a probability distribution p 𝒩(x,z∣θ) that describes the probabilistic relationships between N input patterns x = x 1, …, x N and corresponding network responses z = z 1, …, z N, where θ denotes the vector of network parameters that shape this distribution, e.g., via synaptic efficacies and network connectivity. The marginal probability p 𝒩(x∣θ) = ∑z p 𝒩(x,z∣θ) of the actually occurring inputs x = x 1, …, x N under the resulting internal model of the neural network 𝒩 with parameters θ can then be viewed as a measure for the agreement between this internal model (which carries out “predictive coding” [15]) and its environment (which generates the inputs x).

The goal of network learning is usually described in this probabilistic generative framework as finding parameter values θ* that maximize this agreement, or equivalently the likelihood of the inputs x (maximum likelihood learning):

| (1) |

Locally optimal parameter solutions are usually determined by gradient ascent on the data likelihood p 𝒩(x∣θ).

Learning a posterior distribution through stochastic synaptic plasticity

In contrast, we assume here that not only a neural network 𝒩, but also a prior p 𝒮(θ) for its parameters are given. This prior p 𝒮 can encode both structural constraints (such as sparse connectivity) and structural rules (e.g., a heavy-tailed distribution of synaptic weights). Then the goal of network learning becomes:

| (2) |

The patterns x = x 1, …, x N are assumed here to be regularly reoccurring network inputs.

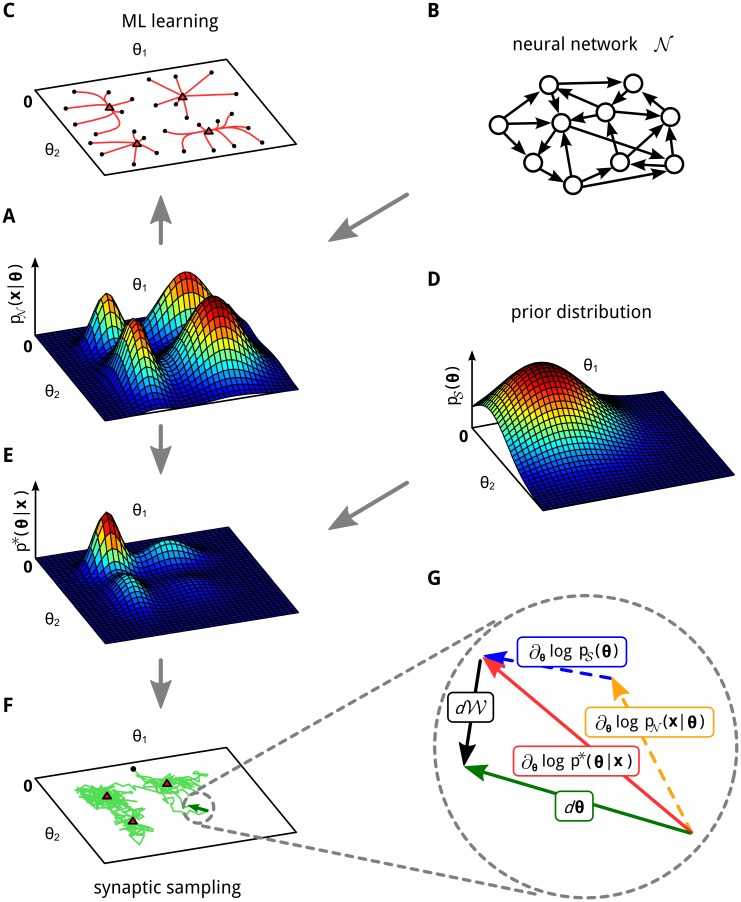

A key insight (see Fig 1 for an illustration) is that stochastic local plasticity rules for the parameters θ i enable a network to achieve the learning goal Eq (2): The distribution of network parameters θ will converge after a while to the posterior distribution p*(θ) = p*(θ∣x)—and produce samples from it—if each network parameter θ i obeys the dynamics

| (3) |

where the learning rate b > 0 controls the speed of the parameter dynamics. Eq (3) is a stochastic differential equation (see [16]), which differs from commonly considered differential equations through the stochastic term d𝒲i that describes infinitesimal stochastic increments and decrements of a Wiener process 𝒲i. A Wiener process is a standard model for Brownian motion in one dimension (more precisely: the limit of a random walk with infinitesimal step size and normally distributed increments between times t and s). Thus in an approximation of Eq (3) for discrete time steps Δt the term d𝒲i can be replaced by Gaussian noise with variance Δt (see Eq (7)). Note that Eq (3) does not have a single solution θ i(t), but a continuum of stochastic sample paths (see Fig 1F for an example) that each describe one possible time course of the parameter θ i.

Fig 1. Maximum likelihood (ML) learning vs. synaptic sampling.

A, B, C: Illustration of ML learning for two parameters θ = (θ 1,θ 2) of a neural network 𝒩. A: 3D plot of an example likelihood function. For a fixed set of inputs x it assigns a probability density (amplitude on z-axis) to each parameter setting θ. B: This likelihood function is defined by some underlying neural network 𝒩. C: Multiple trajectories along the gradient of the likelihood function in (A). The parameters are initialized at random initial values (black dots) and then follow the gradient to a local maximum (red triangles). D: Example for a prior that prefers small values for θ. E: The posterior that results as product of the prior (D) and the likelihood (A). F: A single trajectory of synaptic sampling from the posterior (E), starting at the black dot. The parameter vector θ fluctuates between different solutions, the visited values cluster near local optima (red triangles). G: Cartoon illustrating the dynamic forces (plasticity rule Eq (3)) that enable the network to sample from the posterior distribution p*(θ∣x) in (E). Magnification of one synaptic sampling step dθ of the trajectory in (F) (green). The three forces acting on θ: the deterministic drift term (red) is directed to the next local maximum (red triangle), it consists of the first two terms in Eq (3); the stochastic diffusion term d𝒲 (black) has a random direction. See S2 Text for figure details.

Rigorous mathematical results based on Fokker-Planck equations (see Methods and S1 Text for details) allow us to infer from the stochastic local dynamics of the parameters θ i given by a stochastic differential equation of the form Eq (3) the probability that the parameter vector θ can be found after a while in a particular region of the high-dimensional space in which it moves. The key result is that for the case of the stochastic dynamics according to Eq (3) this probability is equal to the posterior p*(θ∣x) given by Eq (2). Hence the stochastic dynamics Eq (3) of network parameters θ i enables a network to achieve the learning goal Eq (2): to learn the posterior distribution p*(θ∣x). This posterior distribution is not represented in the network through any explicit neural code, but through its stochastic dynamics, as the unique stationary distribution of a Markov process from which it samples continuously. In particular, if most of the mass of this posterior distribution is concentrated on some low-dimensional manifold, the network parameters θ will move most of the time within this low-dimensional manifold. Since this realization of the posterior distribution p*(θ∣x) is achieved by sampling from it, we refer to this model defined by Eq (3) (in the case where the parameters θ i represent synaptic parameters) as synaptic sampling.

The stochastic term d𝒲i in Eq (3) provides a simple integrative model for a multitude of biological and biochemical stochastic processes that effect the efficacy of a synaptic connection. The mammalian postsynaptic density comprises over 1000 different types of proteins [17]. Many of those proteins that effect the amplitude of postsynaptic potentials and synaptic plasticity, for example NMDA receptors, occur in small numbers, and are subject to Brownian motion within the membrane [18]. In addition, the turnover of important scaffolding proteins in the postsynaptic density such as PSD-95, which clusters glutamate receptors and is thought to have a substantial impact on synaptic efficacy, is relatively fast, on the time-scale of hours to days, depending on developmental state and environmental condition [19]. Also the volume of spines at dendrites, which is assumed to be directly related to synaptic efficacy [20, 21] is reported to fluctuate continuously, even in the absence of synaptic activity [2]. Furthermore the stochastically varying internal states of multiple interacting biochemical signaling pathways in the postsynaptic neuron are likely to effect synaptic transmission and plasticity [22].

The contribution of the stochastic term d𝒲i in Eq (3) can be scaled by a temperature parameter , where T can be any positive number. The resulting stationary distribution of θ is proportional to , so that the dynamics of the stochastic process can be described by the energy landscape . For high values of T this energy landscape is flattened, i.e., the main modes of p*(θ) become less pronounced. For T → 0 the dynamics of θ approaches a deterministic process and converges to the next local maximum of p*(θ). Thus the learning process approximates for low values of T maximum a posteriori (MAP) inference [7]. We propose that this temperature parameter T is regulated in biological networks of neurons dependent on the developmental state, environment, and behavior of an organism. One can also accommodate a modulation of the dynamics of each individual parameter θ i by a learning rate b(θ i) that depends on its current value (see Methods).

Online synaptic sampling

For online learning one assumes that the likelihood p 𝒩(x∣θ) = p 𝒩(x 1, …, x N∣θ) of the network inputs can be factorized:

| (4) |

i.e., each network input x n can be explained as being drawn individually from p 𝒩(x n∣θ), independently from other inputs.

The weight update rule Eq (3) depends on all inputs x = x 1, …, x N, hence synapses have to keep track of the whole set of all network inputs for the exact dynamics (batch learning). In an online scenario, we assume that only the current network input x n is available for synaptic sampling. One then arrives at the following online-approximation to Eq (3)

| (5) |

Note the additional factor N in the rule. It compensates for the N-fold summation of the first and last term in Eq (5) when one moves through all N inputs x n. Although convergence to the correct posterior distribution cannot be guaranteed theoretically for this online rule, we show in Methods that the rule is a reasonable approximation to the batch-rule Eq (3). Furthermore, all subsequent simulations are based on this online rule, which demonstrates the viability of this approximation.

Relationship to maximum likelihood learning

Typically, synaptic plasticity in generative network models is modeled as maximum likelihood learning. Time is often discretized into small discrete time steps Δt. For gradient-based approaches the parameter change is then given by the gradient of the log likelihood multiplied with some learning rate η:

| (6) |

To compare this maximum likelihood update with synaptic sampling, we consider a version of the parameter dynamics Eq (5) for discrete time (see Methods for a derivation):

| (7) |

where the learning rate η is given by η = b Δt and denotes Gaussian noise with zero mean and variance 1, drawn independently for each parameter θ i and each update time t. We see that the maximum likelihood update Eq (6) becomes one term in this online version of synaptic sampling. Eq (7) is a special case of the online Langevin sampler that was introduced in [23].

The first term in Eq (7) arises from the prior p 𝒮(θ), and has apparently not been considered in previous rules for synaptic plasticity. An additional novel component is the Gaussian noise term (see also Fig 1G). It arises because the accumulated impact of the Wiener process 𝒲i over a time interval of length Δt is distributed according to a normal distribution with variance Δt. In contrast to traditional maximum likelihood optimization based on additive noise for escaping local optima, this noise term is not scaled down when learning approaches a local optimum. This ongoing noise is essential for enabling the network to sample from the posterior distribution p*(θ) via continuously ongoing synaptic plasticity (see Fig 1F).

Synaptic sampling improves the generalization capability of a neural network

The previously described theory for learning a posterior distribution over parameters θ can be applied to all neural network models 𝒩 where the derivative in Eq (5) can be efficiently estimated. Since this term also has to be estimated for maximum likelihood learning Eq (6), synaptic sampling can basically be applied to all neuron and network models that are amenable to maximum likelihood learning. We illustrate salient new features that result from synaptic sampling (i.e., plasticity rules Eqs (5) or (7)) for some of these models. We begin with the Boltzmann machine [24], one of the oldest generative neural network models. It is currently still extensively investigated in the context of deep learning [25, 26]. We demonstrate in Fig 2D and 2F the improved generalization capability of this model for the learning approach Eq (2) (learning of the posterior), compared with maximum likelihood learning (approach Eq (1)), which had been theoretically predicted by [6] and [7]. But this model for learning the posterior (approach Eq (2)) in Boltzmann machines is now based on local plasticity rules. Note that the Boltzmann machine with synaptic sampling samples simultaneously on two different time scales: In addition to sampling for given parameters θ from likely network states in the usual manner, it now samples simultaneously on a slower time scale according to Eq (7) from the posterior of network parameters θ.

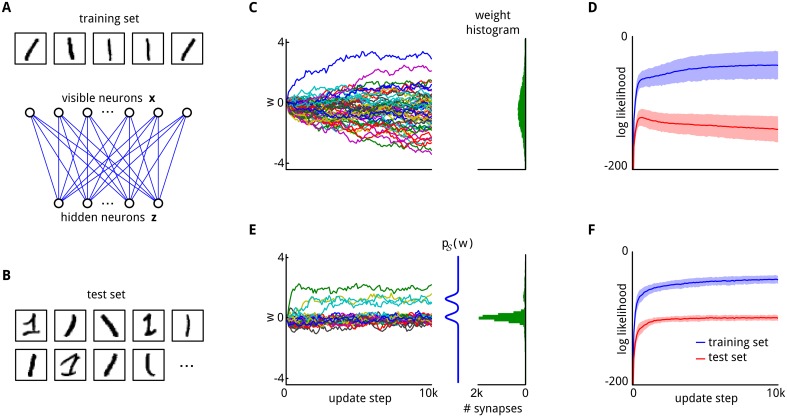

Fig 2. Priors for synaptic weights improve generalization capability.

A: The training set, consisting of five samples of a handwritten 1. Below a cartoon illustrating the network architecture of the restricted Boltzmann machine (RBM), composed of a layer of 784 visible neurons x and a layer of 9 hidden neurons z. B: Examples from the test set. It contains many different styles of writing that are not part of the training set. C: Evolution of 50 randomly selected synaptic weights throughout learning (on the training set). The weight histogram (right) shows the distribution of synaptic weights at the end of learning. 80 histogram bins were equally spaced between -4 and 4. D: Performance of the network in terms of log likelihood on the training set (blue) and on the test set (red) throughout learning. Mean values over 100 trial runs are shown, shaded area indicates std. The performance on the test set initially increases but degrades for prolonged learning. E: Evolution of 50 weights for the same network but with a bimodal prior. The prior p 𝒮(w) is indicated by the blue curve. Most synaptic weights settle in the mode around 0, but a few larger weights also emerge and stabilize in the larger mode. Weight histogram (green) as in (C). F: The log likelihood on the test set maintains a constant high value throughout the whole learning session, compare to (D).

A Boltzmann machine employs extremely simple non-spiking neuron models with binary outputs. Neuron y i outputs 1 with probability σ(∑j w ij y j + b i), else 0, where σ is the logistic sigmoid , with synaptic weights w ij and bias parameters b i. Synaptic connections in a Boltzmann machine are bidirectional, with symmetric weights (w ij = w ji). The parameters θ for the Boltzmann machine consist of all weights w ij and biases b i in the network. For the special case of a restricted Boltzmann machine (RBM), maximum likelihood learning of these parameters can be done efficiently [27], and therefore RBM’s are typically used for deep learning. An RBM has a layered structure with one layer of visible neurons x and a second layer of hidden neurons z. Synaptic connections are formed only between neurons on different layers (Fig 2A). The maximum likelihood gradients and can be efficiently approximated for this model, for example

| (8) |

where is the output of input neuron j while input x n is presented, and its output during a subsequent phase of spontaneous activity (“reconstruction phase”); analogously for the hidden neuron z j (see Methods and S3 Text).

We integrated this maximum likelihood estimate Eq (8) into the synaptic sampling rule Eq (7) in order to test whether a suitable prior p 𝒮(w) for the weights improves the generalization capability of the network. The network received as input just five samples x 1, …, x 5 of a handwritten Arabic number 1 from the MNIST dataset (the training set, shown in Fig 2A) that were repeatedly presented. Each pixel of the digit images was represented by one neuron in the visible layer (which consisted of 784 neurons). We selected a second set of 100 samples of the handwritten digit 1 from the MNIST dataset as test set (Fig 2B). These samples include completely different styles of writing that were not present in the training set. After allowing the network to learn the five input samples from Fig 2A for various numbers of update steps (horizontal axis of Fig 2D and 2F), we evaluated the learned internal model of this network 𝒩 for the digit 1 by measuring the average log-likelihood log p 𝒩(x∣θ) for the test data. The result is indicated in Fig 2D and 2F for the training samples by the blue curves, and for the new test examples, that were never shown while synaptic plasticity was active, by the red curves.

First, a uniform prior over the synaptic weights was used (Fig 2C), which corresponds to the common maximum likelihood learning paradigm Eq (8). The performance on the test set (shown on vertical axis) initially increases but degrades for prolonged exposure to the training set (length of that prior exposure shown on horizontal axis). This effect is known as overfitting [6, 7]. It can be reduced by choosing a suitable prior p 𝒮(θ) in the synaptic sampling rule Eq (7). The choice for the prior distribution is best if it matches the statistics of the training samples [6], which has in this case two modes (resulting from black and white pixels). The presence of this prior in the learning rule maintains good generalization capability for test samples even after prolonged exposure to the training set (red curve in Fig 2F).

The improved generalization capability of the network is a result of the prior distribution. It is well known that the prior in Bayesian inference allows to effectively prevent overfitting by making solutions that use fewer or smaller parameters more likely. Similar results would therefore emerge in any other implementation of Bayesian learning in neural networks. A thorough discussion on this topic which is known as Bayesian regularization can be found in [6, 7].

As a consequence, the choice of the prior distribution can have a significant impact on the learning result. In S3 Text we compared a set of different priors and demonstrate this effect more systematically. There it can also be seen that if the choice of the prior is bad, the learning performance can even get worse than in the case without a prior.

Spine motility as synaptic sampling

In the following sections we apply our synaptic sampling framework to networks of spiking neurons and biological models for network plasticity. The number and volume of spines for a synaptic connection is thought to be directly related to its synaptic weight [28]. Experimental studies have provided a wealth of information about the stochastic dynamics of dendritic spines (see e.g. [1, 28–32]). They demonstrate that the volume of a substantial fraction of dendritic spines varies continuously over time, and that all the time new spines and synaptic connections are formed and existing ones are eliminated. We show that these experimental data on spine motility can be understood as special cases of synaptic sampling. The synaptic sampling framework is however very general, and many different models for spine motility can be derived from it as special cases. We demonstrate this here for one simple model, induced by the following assumptions:

We restrict ourselves to plasticity of excitatory synapses, although the framework is general enough to apply to inhibitory synapses as well.

In accordance with experimental studies [28], we require that spine sizes have a multiplicative dynamics, i.e., that the amount of change within some given time window is proportional to the current size of the spine.

We assume here for simplicity that a synaptic connection between two neurons is realized by a single spine and that there is a single parameter θ i for each potential synaptic connection i.

The last requirement can be met by encoding the state of the synapse in an abstract form, that represents synaptic connectivity and synaptic plasticity in a single parameter θ i. We define that negative values of θ i represent a current disconnection and positive values represent a functional synaptic connection. The distance of the current value of θ i from zero indicates how likely it is that the synapse will soon reconnect (for negative values) or withdraw (for positive values), see Fig 3A. In addition the synaptic parameter θ i encodes for positive values the synaptic efficacy w i, i.e., the resulting EPSP amplitudes, by a simple mapping w i = f(θ i).

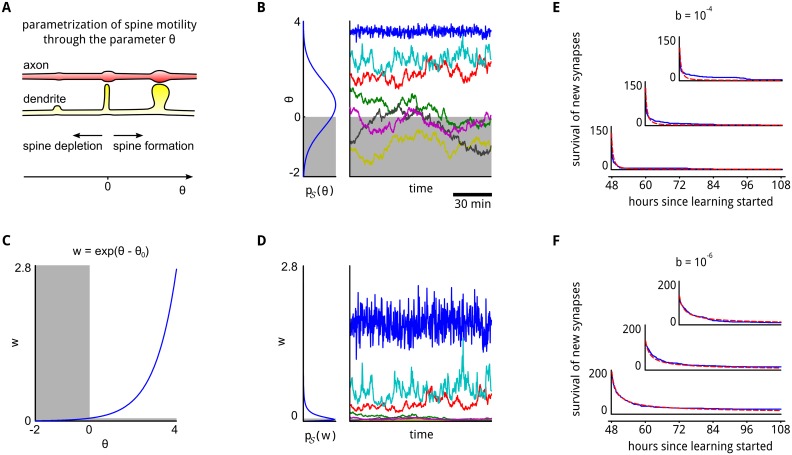

Fig 3. Integration of spine motility into the synaptic sampling model.

A: Illustration of the parametrization of spine motility. Values θ > 0 indicate a functional synaptic connection. B: A Gaussian prior p 𝒮(θ), and a few stochastic sample trajectories of θ according to the synaptic sampling rule Eq (10). Negative values of θ (gray area) are interpreted as non-functional connections. Some stable synaptic connections emerge (traces in the upper half), whereas other synaptic connections come and go (traces in lower half). All traces, as well as survival statistics shown in (E, F), are taken from the network simulation described in detail in the next section and S5 Text. C: The exponential function maps synapse parameters θ to synaptic efficacies w. Negative values of θ, corresponding to (retracted) spines are mapped to a tiny region close to zero in the w-space. D: The Gaussian prior in the θ-space translates to a log-normal distribution in the w-space. The traces from (B) are shown in the right panel transformed into the w-space. Only persistent synaptic connections contribute substantial synaptic efficacies. E, F: The emergent survival statistics of newly formed synaptic connections, (i.e., formed during the preceding 12 hours) evaluated at three different start times throughout learning (blue traces, axes are aligned with start times of the analyses). The survival statistics exhibit in our synaptic sampling model a power-law behavior (red curves, see S5 Text). The time-scale (and exponent of the power-law) depends on the learning rate b in Eq (10), and can assume any value in our quite general model (shown is b = 10−4 in (E) and b = 10−6 in (F)).

A large class of mapping functions f is supported by our theory (see S4 Text for details). The second assumption which requires multiplicative synaptic dynamics supports an exponential function f in our model, in accordance with previous models of spine motility [28]. Thus, we assume in the following that the efficacy w i of synapse i is given by

| (9) |

see Fig 3C. Note that for a large enough offset θ 0, negative parameter values θ i (which model a non-functional synaptic connection) are automatically mapped onto a tiny region close to zero in the w-space, so that retracted spines have essentially zero synaptic efficacy. The general rule for online synaptic sampling Eq (5) for the exponential mapping Eq (9) can be written as (see S4 Text)

| (10) |

In Eq (10) the multiplicative synaptic dynamics becomes explicit. The gradient , i.e., the activity-dependent contribution to synaptic plasticity, is weighted by w i. Hence, for negative values of θ i (non-functional synaptic connection), the activities of the pre- and post-synaptic neurons have negligible impact on the dynamics of the synapse. Assuming a large enough θ 0, retracted synapses therefore evolve solely according to the prior p 𝒮(θ) and the random fluctuations d𝒲i. For large values of θ i the opposite is the case. The influence of the prior and the Wiener process d𝒲i become negligible, and the dynamics is dominated by the activity-dependent likelihood term. Large synapses can therefore become quite stable if the presynaptic activity is strong and reliable (see Fig 3B). Through the use of parameters θ which determine both synaptic connectivity and synaptic efficacies, the synaptic sampling framework provides a unified model for structural and synaptic plasticity. The prior distribution can have significant impact on the spine motility, encouraging for example sparser or denser synaptic connectivity. If the activity-dependent second term in Eq (10), that tries to maximize the likelihood, is small (e.g., because θ i is small or parameters are near a mode of the likelihood) then Eq (10) implements an Ornstein Uhlenbeck process. This prediction of our model is consistent with a previous analysis which showed that an Ornstein Uhlenbeck process is a viable model for synaptic spine motility [28].

The weight dynamics that emerges through the stochastic process Eq (10) is illustrated in the right panel of Fig 3D. A Gaussian parameter prior p 𝒮(θ i) results in a log-normal prior p 𝒮(w i) in a corresponding stochastic differential equation for synaptic efficacies w i (see S4 Text for details).

The last term (noise term) in our synaptic sampling rule Eq (10) predicts that eliminated connections spontaneously regrow at irregular intervals. In this way they can test whether they can contribute to explaining the input. If they cannot contribute, they disappear again. The resulting power-law behavior of the survival of newly formed synaptic connections (Fig 3E and 3F) matches corresponding new experimental data [32] and is qualitatively similar to earlier experimental results which revealed a quick decay of transient dendritic spines [30, 31, 33]. Functional consequences of this structural plasticity are explored in the following sections.

Fast adaptation of synaptic connections and weights to a changing input statistics

We will explore in this and the next section implications of the synaptic sampling rule Eq (10) for network plasticity in simple generative spike-based neural network models.

The main types of spike-based generative neural network models that have been proposed are [34–37]. We focus here on the type of models introduced by [36–38], since these models allow an easy estimation of the likelihood gradient (the second term in Eq (10)) and can relate this likelihood term to STDP. Since these spike-based neural network models have non-symmetric synaptic connections (that model chemical synapses between pyramidal cells in the cortex), they do not allow to regenerate inputs x from internal responses z by running the network backwards (like in a Boltzmann machine). Rather they are implicit generative models, where synaptic weights from inputs to hidden neurons are interpreted as implicit models for presynaptic activity, given that the postsynaptic neuron fires.

We focus in this section on a simple model for an ubiquitous cortical microcircuit motif: an ensemble of pyramidal cells with lateral inhibition, often referred to as Winner-Take-All (WTA) circuit. It has been proposed that this microcircuit motif provides for computational analysis an important bridge between single neurons and larger brain systems [39]. We employ a simple form of divisive normalization (as proposed by [39]; see Methods) to model lateral inhibition, thereby arriving at a theoretically tractable version of this microcircuit motif that allows us to compute the maximum likelihood term (second term in Eq (10)) in the synaptic sampling rule. We assumed Gaussian prior distributions p 𝒮(θ i), with mean μ and variance σ 2 over the synaptic parameters θ i (as in Fig 3B). Then the synaptic sampling rule Eq (10) yields for this model

| (11) |

where S(t) denotes the spike train of the postsynaptic neuron and x i(t) denotes the weight-normalized value of the sum of EPSPs from presynaptic neuron i at time t (i.e., the summed EPSPs that would arise for weight w i = 1; see Methods for details). α is a parameter that scales the impact of synaptic plasticity depending on the current synaptic efficacy. The resulting activity-dependent component S(t)(xi(t) − α e wi) of the likelihood term is a simplified version of the standard STDP learning rule (Fig 4B and 4C), like in [36, 40]. Synaptic plasticity (STDP) for connections from input neurons to pyramidal cells in the WTA circuit can be understood from the generative aspect as fitting a mixture of Poisson (or other exponential family) distributions to high-dimensional spike inputs [36, 37]. The factor w i = exp(θ i − θ 0) had been discussed in [36], because it is compatible with the underlying generative model, but provides in addition a better fit to the experimental data of [41]. We examine in this section emergent properties of network plasticity in this simple spike-based neural network under the synaptic sampling rule Eq (11).

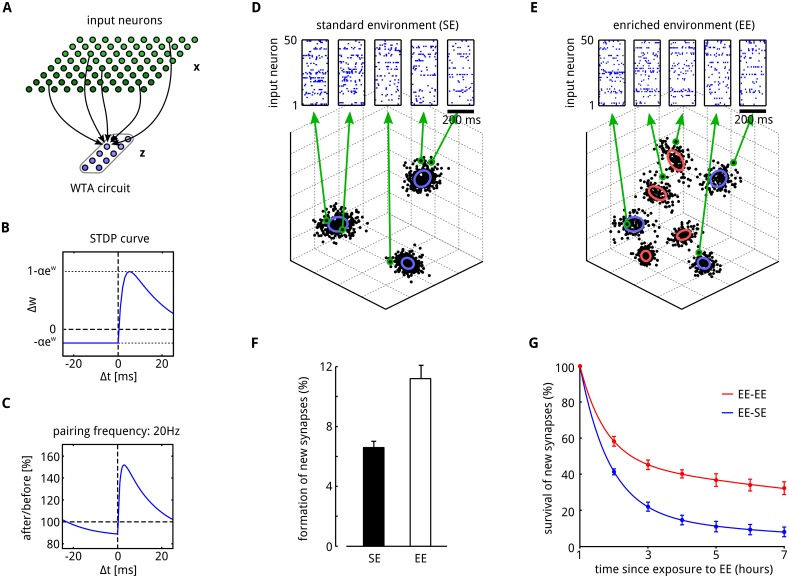

Fig 4. Adaptation of synaptic connections to changing input statistics through synaptic sampling.

A: Illustration of the network architecture. A WTA circuit consisting of ten neurons z receives afferent stimuli from input neurons x (few connections shown for a single neuron in z). B: The STDP learning curve that arises from the likelihood term in Eq (11). C: Measured STDP curve that results from a related STDP rule for a moderate pairing frequency of 20 Hz, as in [41]. (Figure adapted from [36]). D, E: Each sensory experience was modeled by 200 ms long spiking activity of 1000 input neurons, that covered some 3D data space with Gaussian tuning curves (the results do not depend on the finite dimension of the data space, we chose 3 dimension for easier visualization). Insets show the firing activity of randomly chosen 50 of the 1000 input neurons for the sample data points marked by green circles. Objects in the environment were represented by Gaussian clusters (ellipses) in this finite dimensional data space. F: During learning phase 1 (3 hours) only samples from SE were presented to the network, in phase 2 (which lasted 1 hour) samples from EE. Shortly after the transition from SE to EE the number of newly formed synaptic connections significantly increases (compare to Fig. 1h in [33]). G: Comparison of the survival of synapses for a network with persistent exposure to EE (EE-EE condition) and a network that was returned to SE (EE-SE condition). Newly formed synaptic connections are transient and quickly decay after formation. A significantly larger fraction of synapses persists if the network continuously receives EE inputs (compare to Fig. 2c in [33]). The dots show means of measurements taken every 30 minutes, the lines represent two-term exponential fits (r 2 = 1). The results in (F, G) show means over 5 trial runs. Error bars indicate STD.

It is well documented that cortical dendritic spines are transient and that spine turnover is enhanced by novel experience and training [33, 42, 43]. For example, enhanced spine formation as a consequence of sensory enrichment was found in mouse somatosensory cortex [33]. In this study the animals were exposed to a new sensory environment by adding additional objects to their home cage. This sensory enrichment resulted in a rapid increase in the formation of new spines. If the exposure to the enriched environment was only brief, the newly formed spines quickly decayed.

We wondered whether these experimentally observed effects also emerge in our synaptic sampling model. As in [33] we exposed the network to different sensory environments to study these effects. Sensory experiences typically involve several processing steps and interactions between multiple brain systems, and precise knowledge about their cortical representation is still missing. Therefore we used here a simple symbolic representation of the sensory environment. We represented each sensory experience by a point in some finite dimensional space which is covered by the tuning curves of a large number of input neurons. Their spike output was then communicated to the WTA circuit in the form of 200 ms-long spike patterns of the 1000 input neurons (see Fig 4D and 4E and Methods for details). Independently drawn sensory experiences were presented sequentially and synaptic sampling according to Eq (11) was applied continuously to all synapses from the 1000 input neurons to the ten neurons in the WTA circuit.

Each environment was represented as a mixture of Gaussians (clusters) of points in the finite-dimensional sensory space. Each cluster could represent for example different sensory experiences with some object in the environment. Consequently we modelled an enriched environment (EE) simply by adding a few new clusters to the standard environment (SE). In phase 1 the network was exposed to an environment with 3 clusters (standard environment (SE), see Fig 4D). After 3 hours the network input was enriched by adding 4 additional clusters (enriched environment (EE), see Fig 4E). We found that exposure to EE significantly increased the rate of new synapse formation as in the experimental result of [33] (Fig 4F).

Most of the newly formed synapses decayed within a few hours after return to the standard environment (EE-SE situation, see Fig 4G). In this case only about about 8% become stable. A fraction of about 30% becomes stable when the enriched environment was maintained (EE-EE situation). These results qualitatively reproduce the findings from mouse barrel cortex (compare Figures 1h and 2c in [33]). Note that we used here relatively large update rates b to keep simulation times in a feasible range, which results in spine dynamics on the time scale of hours instead of days as in biological synapses [33].

Inherent network compensation capability through synaptic sampling

Numerous experimental data show that the same function of a neural circuit is achieved in different individuals with drastically different parameters, and also that a single organism can compensate for disturbances by moving to a new parameter vector [9, 44–47]. These results suggest that there exists some low-dimensional submanifold of values for the high-dimensional parameter vector θ of a biological neural network that all provide stable network function (degeneracy). We propose that the previously discussed posterior distribution of network parameters θ provides a mathematical model for such low-dimensional submanifold. Furthermore we propose that the underlying continuous stochastic fluctuation d𝒲 provides a driving force that automatically moves network parameters (with high probability) to a functionally more attractive regime when the current solution performs worse because of perturbations, such as lesions of neurons or network connections. This compensation capability is not an add-on to the synaptic sampling model, but an inherent feature of its organization.

We demonstrate this inherent compensation capability in Fig 5 for a generative spiking neural network with synaptic parameters θ that regulate simultaneously structural plasticity and synaptic plasticity (dynamics of weights) as in Figs 3 and 4. The prior p 𝒮(θ) for these parameters is here the same as in the preceding section (see Fig 4G on the left). But in contrast to the previous section we consider here a network that allows us to study the self-organization of connections between hidden neurons. The network consists of eight WTA-circuits, but in contrast to Fig 4 we allow here arbitrary excitatory synaptic connections between neurons within the same or different ones of these WTA circuits. This network models multi-modal sensory integration and association in a simplified manner. Two populations of “auditory” and “visual” input neurons x A and x V project onto corresponding populations z A and z V of hidden neurons (each consisting of one half of the WTA circuits, see lower panel of Fig 5A). Only a fraction of the potential synaptic connections became functional (see Fig. S2A in S6 Text) through the synaptic sampling rule Eq (11) that integrates structural and synaptic plasticity. Synaptic weights and connections were not forced to be symmetric or bidirectional.

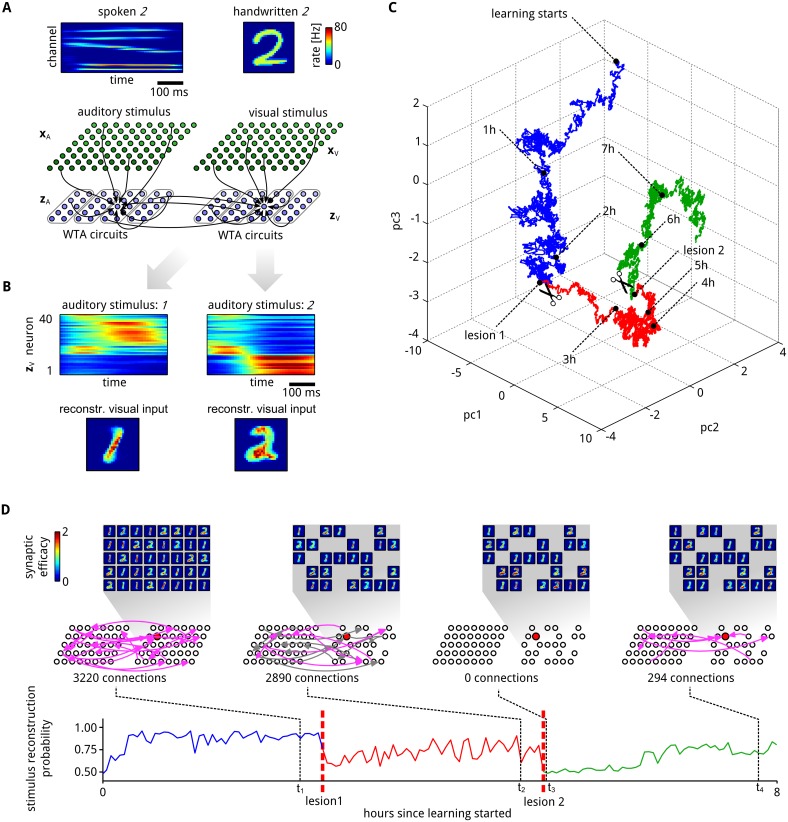

Fig 5. Inherent compensation for network perturbations.

A: A spike-based generative neural network (illustrated at the bottom) received simultaneously spoken and handwritten representations of the same digit (and for tests only spoken digits, see (B)). Stimulus examples for spoken and written digit 2 are shown at the top. These inputs are presented to the network through corresponding firing rates of “auditory” (x A) and “visual” (x V) input neurons. Two populations z A and z V of 40 neurons, each consisting of four WTA circuits like in Fig 4, receive exclusively auditory or visual inputs. In addition, arbitrary lateral excitatory connections between these “hidden” neurons are allowed. B: Assemblies of hidden neurons emerge that encode the presented digit (1 or 2). Top panel shows PETH of all neurons from z V for stimulus 1 (left) and 2 (right) after learning, when only an auditory stimulus is presented. Neurons are sorted by the time of their highest average firing. Although only auditory stimuli are presented, it is possible to reconstruct an internally generated “guessed” visual stimulus that represents the same digit (bottom). C: First three PCA components of the temporal evolution of a subset θ′ of network parameters θ (see text). Two major lesions were applied to the network. In the first lesion (transition to red) all neurons that significantly encode stimulus 2 were removed from the population z V. In the second lesion (transition to green) all currently existing synaptic connections between neuron in z A and z V were removed, and not allowed to regrow. After each lesion the network parameters θ′ migrate to a new manifold. D: The generative reconstruction performance of the “visual” neurons z V for the test case when only an auditory stimulus is presented was tracked throughout the whole learning session, including lesions 1 and 2 (bottom panel). After each lesion the performance strongly degrades, but reliably recovers. Insets show at the top the synaptic weights of neurons in z V at 4 time points t 1, …, t 4, projected back into the input space like in Fig 4E. Network diagrams in the middle show ongoing network rewiring for synaptic connections between the hidden neurons z A and z V. Each arrow indicates a functional connection between two neurons. To keep the figure uncluttered only subsets of synapses are shown (1% randomly drawn from the total set of possible lateral connections). Connections at time t 2 that were already functional at time t 1 are plotted in gray. The neuron whose parameter vector θ′ is tracked in (C) is highlighted in red. The text under the network diagrams shows the total number of functional connections between hidden neurons at the time point.

As in the previous demonstrations we do not use external rewards or teacher-inputs for guiding network plasticity. Rather, we allow the model to discover on its own regularities in its network inputs. The “auditory” hidden neurons z A on the left in Fig 5A received temporal spike patterns from the auditory input neurons x A that were generated from spoken utterings of the digit 1 and 2 (which lasted between 320 ms and 520 ms). Simultaneously we presented to the “visual” hidden neurons z V on the right for the same time period a (symbolic) visual representation of the same digit (randomly drawn from the MNIST database like in Fig. 2).

The emergent associations between the two populations z A and z V of hidden neurons were tested by presenting auditory input only and observing the activity of the “visual” hidden neurons z V. Fig 5B shows the emergent activity of the neurons z V when only the auditory stimulus was presented (visual input neurons x V remained silent). The generative aspect of the network can be demonstrated by reconstructing for this case the visual stimulus from the activity of the “visual” hidden neurons z V. Fig 5B shows reconstructed visual stimuli from a single run where only the auditory stimuli x A for digits 1 (left) and 2 (right) were presented to the network. Digit images were reconstructed by multiplying the synaptic efficacies of synapses from neurons in x V to neurons in z V (which did not receive any input from x V in this experiment) with the instantaneous firing rates of the corresponding z V-neurons.

Interestingly we found that synaptic sampling significantly outperforms the pure deterministic STDP updates introduced in [38], which do not impose a prior distribution over synaptic parameters. The structural prior that favors solutions with only a small number of large synaptic weights seems to be beneficial for this task as it allows to learn few but pronounced associations between the neurons (see S6 Text).

In order to investigate the inherent compensation capability of synaptic sampling, we applied two lesions to the network within a learning session of 8 hours. In the first lesion all neurons (16 out of 40) that became tuned for digit 2 in the preceding learning (see Fig 5D and S6 Text) were removed. The lesion significantly impaired the performance of the network in stimulus reconstruction, but it was able to recover from the lesion after about one hour of continuing network plasticity according to Eq (11) (Fig 5D). The reconstruction performance of the network was measured here continuously through the capability of a linear readout neuron from the visual ensemble to classify the current auditory stimulus (1 or 2).

In the second lesion all synaptic connections between hidden neurons that were present after recovery from the first lesion were removed and not allowed to regrow (2936 synapses in total). After about two hours of continuing synaptic sampling 294 new synaptic connections between hidden neurons emerged. These made it again possible to infer the auditory stimulus from the activity of the remaining 24 hidden neurons in the population z V (in the absence of any input from the population x V), at about 75% of the performance level before the second lesion (see bottom panel of Fig 5D).

In order to illustrate the ongoing network reconfiguration we track in Fig 5C the temporal evolution of a subset θ′ of network parameters (35 parameters θ i associated with the potential synaptic connections of the neuron marked in red in the middle of Fig 5D from or to other hidden neurons, excluding those that were removed at lesion 2 and not allowed to regrow). The first three PCA components of this 35-dimensional parameter vector are shown. The vector θ′ fluctuates first within one region of the parameter space while probing different solutions to the learning problem, e.g., high probability regions of the posterior distribution (blue trace). Each lesions induced a fast switch to a different region (red, green), accompanied by a recovery of the visual stimulus reconstruction performance (see Fig 5D).

The random fluctuations were found to be an integral part of the fast recovery form lesions. In S6 Text we analyzed the impact of the diffusion term in Eq (11) on the learning speed. We found that it acts as a temperature parameter that allows to scale the speed of exploration in the parameter space (see also the Methods for a detailed derivation).

Altogether this experiment showed that continuously ongoing synaptic sampling maintains stable network function also in a more complex network architecture. Another consequence of synaptic sampling was that the neural codes (assembly sequences) that emerged for the two digit classes within the hidden neurons z A and z V (see Fig. S2B in S6 Text) drifted over larger periods of time (also in the absence of lesions), similarly as observed for place cells in [48] and for tuning curves of motor cortex neurons in [4].

Discussion

We have shown that stochasticity may provide an important function for network plasticity. It enables networks to sample parameters from some low-dimensional manifold in a high-dimensional parameter space that represents attractive combinations of structural constraints and rules (such as sparse connectivity and heavy-tailed distributions of synaptic weights) and a good fit to empirical evidence (e.g., sensory inputs). We have developed a normative model for this new learning perspective, where the traditional gold standard of maximum likelihood optimization is replaced by theoretically optimal sampling from a posterior distribution of parameter settings, where regions of high probability provide a theoretically optimal model for the low-dimensional manifold from which parameter settings should be sampled. The postulate that networks should learn such posterior distributions of parameters, rather than maximum likelihood values, had been proposed already for quite some while for artificial neural networks [6, 7], since such organization of learning promises better generalization capability to new examples. The open problem how such posterior distributions could be learned by networks of neurons in the brain, in a way that is consistent with experimental data, has been highlighted in [8] as a key challenge for computational neuroscience. We have presented here such a model, whose primary innovation is to view experimentally found trial-to-trial variability and ongoing fluctuations of parameters such as spine volumes no longer as a nuisance, but as a functionally important component of the organization of network learning, since it enables sampling from a distribution of network configurations. The mathematical framework that we have presented provides a normative model for evaluating such empirically found stochastic dynamics of network parameters, and for relating specific properties of this “noise” to functional aspects of network learning.

Reports of trial-to-trial variability and ongoing fluctuations of parameters related to synaptic weights are ubiquitous in experimental studies of synaptic plasticity and its molecular implementation, from fluctuations of proteins such as PSD-95 [19] in the postsynaptic density that are thought to be related to synaptic strength, over intrinsic fluctuations in spine volumes and synaptic connections [1–3, 5, 28, 31, 32], to surprising shifts of neural codes on a larger time scale [4, 48]. These fluctuations may have numerous causes, from noise in the external environment over noise and fluctuations of internal states in sensory neurons and brain networks, to noise in the pre- and postsynaptic molecular machinery that implements changes in synaptic efficacies on various time scales [18]. One might even hypothesize, that it would be very hard for this molecular machinery to implement synaptic weights that remain constant in the absence of learning, and deterministic rules for synaptic plasticity, because the half-life of many key proteins that are involved is relatively short, and receptors and other membrane-bound proteins are subject to Brownian motion. In this context the finding that neural codes shift over time [4, 48] appears to be less surprising. In fact, our model predicts (see S6 Text) that also stereotypical assembly sequences that emerge in our model through learning, similarly as in the experimental data of [49], are subject to such shifts on a larger time scale. However it should be pointed out that our model is agnostic with regard to the time scale on which these changes occur, since this time scale can be defined arbitrarily through the parameter b (learning rate) in Eq (3).

The model that we have presented makes no assumptions about the actual sources of noise. It only assumes that salient network parameters are subject to stochastic processes, that are qualitatively similar to those which have been studied and modeled in the context of Brownian motion of particles as random walk on the microscale. One can scale the influence of these stochastic forces in the model by a parameter T that regulates the “temperature” of the stochastic dynamics of network parameters θ. This parameter T regulates the tradeoff between trying out different regions (or modes) of the posterior distribution of θ (exploration), and staying for longer time periods in a high probability region of the posterior (exploitation). We conjecture that this parameter T varies in the brain between different brain regions, and possibly also between different types of synaptic connections within a cortical column. For example, spine turnover is increased for large values of T, and network parameters θ can move faster to a new peak in the posterior distribution, thereby supporting faster learning (and faster forgetting). Since spine turnover is reported to be higher in the hippocampus than in the cortex [50], such higher value of T could for example be more adequate for modeling network plasticity in the hippocampus. This model would then also support the hypothesis of [50] that memories are more transient in the hippocampus. In addition T is likely to be regulated on a larger time scale by developmental processes, and on a shorter time scale by neuromodulators and attentional control. The view that synaptic plasticity is stochastic had already been explored through simulation studies in [4, 51]. Artificial neural networks were trained in [51] through supervised learning with high learning rates and high amounts of noise both on neuron outputs and synaptic weight changes. The authors explored the influence of various combinations of noise levels and learning rates on the success of learning, which can be understood as varying the temperature parameters T in the synaptic sampling framework. In order to measure this parameter T experimentally in a direct manner, one would have to apply repeatedly the same plasticity induction protocol to the same synapse, with a complete reset of the internal state of the synapse between trials, and measure the resulting trial-to-trial variability of changes of its synaptic efficacy. Since such complete reset of a synaptic state appears to be impossible at present, one can only try to approximate it by the variability that can be measured between different instances of the same type of synaptic connection.

We have shown that the Fokker-Planck equation, a standard tool in physics for analyzing the temporal evolution of the spatial probability density function for particles under Brownian motion, can be used to create bridges between details of local stochastic plasticity processes on the microscale and the probability distribution of the vector θ of all parameters on the network level. This theoretical result provides the basis for the new theory of network plasticity that we are proposing. In particular, this link allows us to derive rules for synaptic plasticity which enable the network to learn, and represent in a stochastic manner, a desirable posterior distribution of network parameters; in other words: to approximate Bayesian inference.

We find that resulting rules for synaptic plasticity contain the familiar term for maximum likelihood learning. But another new term, apart from the Brownian-motion-like stochastic term, is the term that results from a prior distributions p 𝒮(θ i), which could actually be different for each biological parameter θ i and enforce structural requirements and preferences of networks of neurons in the brain. Some systematic dependencies of changes in synaptic weights (for the same pairing of pre- and postsynaptic activity) on their current values had already been reported in [41, 52–54]. These can be modeled as impact of priors. Other potential functional benefits of priors (on emergent selectivity of neurons) have recently been demonstrated in [55] for a restricted Boltzmann machine. An interesting open question is whether the non-local learning rules of their model can be approximated through biologically more realistic local plasticity rules, e.g. through synaptic sampling. We have also demonstrated in Figs 3 and 4 that suitable priors can model experimental data from [32] and [33] on the survival statistics of dendritic spines. The transient behavior of synaptic turnover in our model fits a two-term exponential function, the long-term (stationary) behavior is well described by a power-law. Both findings are in accordance with experimental data.

The results reported in [56] suggest that learned neural representations integrate experience with a priori beliefs about the sensory environment. The model presented here could be used to further investigate this hypothesis. Also the Fokker-Planck formalism was previously applied to describe the dynamics of dendritic spines in hippocampus [57]. The methods described there to integrate experimental data into computational models could be combined with the synaptic sampling framework to further improve the fit to biology.

Finally, we have demonstrated in Figs 4 and 5 that suitable priors for network parameters θ i that model spine volumes endow a neural network with the capability to respond to changes in the input distribution and network perturbations with a network rewiring that maintains or restores the network function, while simultaneously observing structural constraints such as sparse connectivity.

Our model underlines the importance of further experimental investigation of priors for network parameters. How are they implemented on a molecular level? What role does gene regulation have in their implementation? How does the history of a synapse affect its prior? In particular, can consolidation of a synaptic weight θ i be modeled in an adequate manner as a modification of its prior? This would be attractive from a functional perspective, because according to our model it both allows long-term storage of learned information and flexible network responses to subsequent perturbations.

Besides the use of parameter priors, dropout [58] and dropconnect [59] can be used to avoid overfitting in artificial neural networks. In particular, dropconnect, which drops randomly chosen synaptic connections during training, is reminiscent of stochastic synaptic release in biological neuronal networks. In synaptic sampling, synaptic parameters are assumed to be stochastic, however, this stochastic dynamics evolves on a much slower time scale than stochastic release, which was not modeled in our simulations. An interesting open question is whether synaptic sampling combined with stochastic synaptic release would further improve generalization capabilities of spiking neural networks in a similar manner as dropconnect for artificial neural networks.

We have focused in the examples for our model on the plasticity of synaptic weights and synaptic connections. But the synaptic sampling framework can also be used for studying the plasticity of other synaptic parameters, e.g., parameters that control the short term dynamics of synapses, i.e., their individual mixture of short term facilitation and depression. The corresponding parameters U, D, F of the models from [60, 61] are known to depend in a systematic manner on the type of pre- and postsynaptic neuron [62], indicative of a corresponding prior. However also a substantial variability within the same type of synaptic connections, had been found [62]. Hence it would be interesting to investigate functional properties and experimentally testable consequences of stochastic plasticity rules of type Eq (5) for U, D, F, and to compare the results with those of previously considered deterministic plasticity rules for U, D, F (see e.g., [63]).

Early theoretical work on activity-dependent formation and elimination of synapses has been used to model ocular dominance in the visual cortex [64, 65]. Theoretical models for structural plasticity have also shown that simple plasticity models combined with mechanisms for rewiring are able to model cortical reorganization after lesions [66, 67]. In [68] a model was presented that combines structural plasticity and STDP. This model was able to reproduce the existence of transient and persistent spines in the cortex. A recently introduced probabilistic model of structural plasticity was also able to reproduced the statistics of the number of synaptic connections between pairs of neurons in the cortex [69]. Furthermore a simple model of structural synaptic plasticity has been introduced that was able to explain cognitive phenomena such as graded amnesia and catastrophic forgetting [70]. In contrast to these previous studies, the goal of the current work was to establish a model of structural plasticity that follows from a first functional principle, that is, sampling from the posterior distribution over parameters.

We have demonstrated that this framework provides a new and principled way of modeling structural plasticity [10, 11]. The challenge to find a biologically plausible way of modeling structural plasticity as Bayesian inference has been highlighted by [8]. In addition, the proposed framework does not treat rewiring and synaptic plasticity separately, but provides a unified theory for both phenomena, that can be directly related to functional aspects of the network via the resulting posterior distribution. We have shown in Figs 3 and 4 that this rule produces a population of persistent synapses that remain stable over long periods of time, and another population of transient synaptic connections which disappear and reappear randomly, thereby supporting automatic adaptation of the network structure to changes in the distribution of external inputs (Fig 4) and network perturbation (Fig 5).

On a more general level we propose that a framework for network plasticity where network parameters are sampled continuously from a posterior distribution will automatically be less brittle than previously considered maximum likelihood learning frameworks. The latter require some intelligent supervisor who recognizes that the solution given by the current parameter vector is no longer useful, and induces the network to resume plasticity. In contrast, plasticity processes remain active all the time in our sampling-based framework. Hence network compensation for external or internal perturbations is automatic and inherent in the organization of network plasticity.

The need to rethink observed parameter values and plasticity processes in biological networks of neurons in a way which takes into account their astounding variability and compensation capabilities has been emphasized by Eve Marder (see e.g. [9, 47, 71]) and others. This article has introduced a new conceptual and mathematical framework for network plasticity that promises to provide a foundation for such rethinking of network plasticity.

Methods

Details to Learning a posterior distribution through stochastic synaptic plasticity

Here we prove that p*(θ) = p(θ∣ x) is the unique stationary distribution of the parameter dynamics Eq (3) that operate on the network parameters θ = (θ 1, …,θ M). Convergence to this stationary distribution then follows for strictly positive p*(θ). In fact, we prove here a more general result for parameter dynamics given by

| (12) |

for i = 1, …, M and . This dynamics includes a temperature parameter T and a sampling-speed factor b(θ i) that can in general depend on the current value of the parameter θ i. The temperature parameter T can be used to scale the diffusion term (i.e., the noise). The sampling-speed factor controls the speed of sampling, i.e., how fast the parameter space is explored. It can be made dependent on the individual parameter value without changing the stationary distribution. For example, the sampling speed of a synaptic weight can be slowed down if it reaches very high or very low values. Note that the dynamics Eq (3) is a special case of the dynamics Eq (12) with unit temperature T = 1 and constant sampling speed b(θ i) ≡ b. We show that the stochastic dynamics Eq (12) leaves the distribution

| (13) |

invariant, where 𝒵 is a normalizing constant 𝒵 = ∫q*(θ) dθ and

| (14) |

Note that the stationary distribution p*(θ) is shaped by the temperature parameter T, in the sense that p*(θ) is a flattened version of the posterior for high temperature. The result is formalized in the following theorem, which is proven in detail in S1 Text:

Theorem 1. Let p( x,θ) be a strictly positive, continuous probability distribution over continuous or discrete states x = x 1, …, x N and continuous parameters θ = (θ1, …,θM), twice continuously differentiable with respect to θ. Let b(θ) be a strictly positive, twice continuously differentiable function. Then the set of stochastic differential Eq (12) leaves the distribution p*(θ) invariant. Furthermore, p*(θ) is the unique stationary distribution of the sampling dynamics.

Online approximation

We show here that the rule Eq (5) is a reasonable approximation to the batch-rule Eq (3). According to the dynamics Eq (12), synaptic plasticity rules that implement synaptic sampling have to compute the log likelihood derivative . We assume that every τ x time units a different input x n is presented to the network. For simplicity, assume that x 1, …, x N are visited in a fixed regular order. Under the assumption that input patterns are drawn independently, the likelihood of the generative model factorizes

| (15) |

The derivative of the log likelihood is then given by

| (16) |

Using Eq (16) in the dynamics Eq (12), one obtains

| (17) |

Hence, the parameter dynamics depends at any time on all network inputs and network responses.

This “batch” dynamics does not map readily onto a network implementation because the weight update requires at any time knowledge of all inputs x n. We provide here an online approximation for small sampling speeds. To obtain an online learning rule, we consider the parameter dynamics

| (18) |

As in the batch learning setting, we assume that each input x n is presented for a time interval of τ x. Integrating the parameter changes Eq (18) over one full presentation of the data x, i.e., starting from t = 0 with some initial parameter values θ(0) up to time t = Nτ x, we obtain for slow sampling speeds (Nτ x b(θ i) ≪ 1)

This is also what one obtains when integrating Eq (17) for Nτ x time units (for slow b(θ i)). Hence, for slow enough b(θ i), Eq (18) is a good approximation of optimal weight sampling. The update rule Eq (5) follows from Eq (18) for T = 1 and b(θ i) ≡ b.

Discrete time approximation

Here we provide the derivation for the approximate discrete time learning rule Eq (7). For a discrete time parameter update at time t with discrete time step Δt during which x n is presented, a corresponding rule can be obtained by short integration of the continuous time rule Eq (18) over the time interval from t to t + Δt:

| (19) |

where denotes Gaussian noise . The update rule Eq (7) is obtained by choosing a constant b(θ) ≡ b, T = 1, and defining η = Δt b.

Synaptic sampling with hidden states

When there is a direct relationship between network parameters θ and the distribution over input patterns x n, the parameter dynamics can directly be derived from the derivative of the data log likelihood and the derivative of the parameter prior. Typically however, generative models for brain computation assume that the network response z n to input pattern x n represents in some manner the value of hidden variables that explain the current input pattern. In the presence of hidden variables, maximum likelihood learning cannot be applied directly, since the state of the hidden variables is not known from the observed data. The expectation maximization algorithm [7] can be used to overcome this problem. We adopt this approach here. In the online setting, when pattern x n is applied to the network, it responds with network state z n according to p 𝒩(z|xn, θ), where the current network parameters are used in this inference process. The parameters are updated in parallel according to the dynamics

| (20) |

Note that in comparison with the dynamics Eq (18), the likelihood term now also contains the current network response z n. It can be shown that this dynamics leaves the stationary distribution

| (21) |

invariant, where 𝒵 is again a normalizing constant (the dynamics Eq (20) is again the online-approximation). Hence, in this setup, the network samples concurrently from circuit states (given θ) and network parameters (given the network state z n), which can be seen as a sampling-based version of online expectation maximization.

Details to Improving the generalization capability of a neural network through synaptic sampling

For learning the distribution over different writings of digit 1 with different priors in Fig 2, a restricted Boltzmann machine (RBM) with 748 visible and 9 hidden neurons was used. A detailed definition of the RBM model and additional details to the simulations are given in S3 Text.

Network inputs

Handwritten digit images were taken from the MNIST dataset [72]. In MNIST, each instance of a handwritten digit is represented by a 784-dimensional vector x n. Each entry is given by the gray-scale value of a pixel in the 28 × 28 pixel image of the handwritten digit. The pixel values were scaled to the interval [0, 1]. In the RBM, each pixel was represented by a single visible neuron. When an input was presented to the network, the output of a visible neuron was set to 1 with probability as given by the scaled gray-scale value of the corresponding pixel.

Learning procedure

In each parameter update step the contrastive divergence algorithm of [27] was used to estimate the likelihood gradients. Therefore, each update step consisted of a “wake” phase, a “reconstruction” phase, and the update of the parameters. The “wake” samples were generated by setting the outputs of the visible neurons to the values of a randomly chosen digit x n from the training set and drawing the outputs of all hidden layer neurons for the given visible output. The “reconstruction” activities and were generated by starting from this state of the hidden neurons and then drawing outputs of all visible neurons. After that, the hidden neurons were again updated and so on. In this way we performed five cycles of alternating visible and hidden neuron updates. The outputs of the network neurons after the fifth cycle were taken as the resulting “reconstruction” samples and and used for the parameter updates Eqs (22)–(24) given below. This update of parameters concluded one update step.

Log likelihood derivatives for the biases of hidden neurons are approximated in the contrastive divergence algorithm [27] as (the derivatives for visible biases are analogous). Using Eq (7), the synaptic sampling update rules for the biases are thus given by

| (22) |

| (23) |

Note that the parameter prior does not show up in these equations since no priors were used for the biases in our experiments. Contrastive divergence approximates the log likelihood derivatives for the weights w ij as . This leads to the synaptic sampling rule

| (24) |

In the simulations, we used this rule with η = 10−4 and N = 100. Learning started from random initial parameters drawn from a Gaussian distribution with standard deviation 0.25 and means at 0 and -1 for weights w ij and biases (, ), respectively.

To compare learning with and without parameter priors, we performed simulations with an uninformative (i.e., uniform) prior on weights (Fig 2C and 2D), which was implemented by setting to zero. In simulations with a parameter prior (Fig 2E and 2F), we used a local prior for each weight in order to obtain local plasticity rules. In other words, the prior p 𝒮(w) was assumed to factorize into priors for individual weights p 𝒮(w) = ∏i, j p 𝒮(w ij). For each individual weight prior, we used a bimodal distribution implemented by a mixture of two Gaussians

| (25) |

with means μ 1 = 1.0, μ 2 = 0.0, and standard deviations σ 1 = σ 2 = 0.15.

Details to Fast adaptation to changing input statistics

Spike-based Winner-Take-All network model

Network neurons were modeled as stochastic spike response neurons with a firing rate that depends exponentially on the membrane voltage [73, 74]. The membrane potential u k(t) of neuron k is given by

| (26) |

where x i(t) denotes the (unweighted) input from input neuron i, w ki denotes the efficacy of the synapse from input neuron i, and β k(t) denotes a homeostatic adaptation current (see below). The input x i(t) models the influence of additive excitatory postsynaptic potentials (EPSPs) on the membrane potential of the neuron. Let denote the spike times of input neuron i. Then, x i(t) is given by

| (27) |

where ϵ is the response kernel for synaptic input, i.e., the shape of the EPSP, that had a double-exponential form in our simulations:

| (28) |

with the rise-time constant τ r = 2 ms, the fall-time constant τ f = 20 ms. Θ(⋅) denotes the Heaviside step function. The instantaneous firing rate ρ k(t) of network neuron k depends exponentially on the membrane potential and is subject to divisive lateral inhibition I lat(t) (described below):

| (29) |