Abstract

One of the most salient social categories conveyed by human faces and voices is gender. We investigated the developmental emergence of the ability to perceive the coherence of auditory and visual attributes of gender in 6- and 9-month-old infants. Infants viewed two side-by-side video clips of a man and a woman singing a nursery rhyme and heard a synchronous male or female soundtrack. Results showed that 6-month-old infants did not match the audible and visible attributes of gender, and 9-month-old infants matched only female faces and voices. These findings indicate that the ability to perceive the multisensory coherence of gender emerges relatively late in infancy and that it reflects the greater experience that most infants have with female faces and voices.

Keywords: Multisensory perception, Gender perception, Infants, Audio-visual speech

One of the most salient and socially relevant multisensory categories specified by faces and voices is gender. Even though gender can be specified by separate facial and vocal attributes (e.g., Brown & Perrett, 1993; Coleman, 1971), adults typically expect that male faces go with male voices and that female faces go with female voices, and perceive the visual and auditory attributes of an individual’s gender as part of a unitary and coherent entity (Barenholtz, Lewkowicz, Davidson, & Mavica, 2014; Kamachi, Hill, Lander, & Vatikiotis-Bateson, 2003).

Research has determined that the ability to perceive various forms of multisensory coherence in faces and voices begins to emerge during infancy. For example, newborn infants can recognize their mother’s face following postnatal exposure to her voice and face (Sai, 2005), and newborn and 3-month-old infants can associate an unfamiliar person’s face and voice after familiarization with both (Brookes et al., 2001; Coulon, Guellai, & Streri, 2011; Guellai, Coulon, & Streri, 2011). Similarly, studies show that infants can perceive the multisensory coherence of the audible and visible attributes of speech syllables (e.g., Kuhl & Meltzoff, 1982; Patterson & Werker, 1999) by 4 months of age, and the equivalence of audible and visible fluent speech by the end of the first year of life (Lewkowicz, Minar, Tift, & Brandon, 2015).

Given that multisensory perception of various aspects of faces and voices emerges in infancy, and that gender is one of the most frequently experienced perceptual categories in early development, perception of the multisensory coherence of gender may also emerge in infancy. This possibility is all the more likely given that infants can detect the gender of voices by 2 months of age (Jusczyk, Pisoni, & Mullennix, 1992; Miller, 1983; Miller, Younger, & Morse, 1982) and begin to categorize human faces based on gender between 3 and 12 months of age, although with certain processing advantages for female over male faces (Cornell, 1974; Fagan & Singer, 1979; Leinbach & Fagot, 1993; Quinn, Yahr, Kuhn, Slater, & Pascalis, 2002; Ramsey, Langlois, & Marti, 2005).

Several previous studies have reported that infants can perceive the multisensory coherence of gender (see Ramsey et al., 2005, pp. 219–220, for a review). For example, Walker-Andrews, Bahrick, Raglioni, and Diaz (1991) found that 6-month-old infants matched faces and voices based on gender, although this was only the case in half the trials. In addition, to ensure that the faces and voices were synchronized, Walker-Andrews et al. dubbed each speaker’s voice onto the same speaker’s video. This procedure allowed for the possibility that matching was partially due to infant detection of the idiosyncratic relation between the specific faces and voices, an observation which is supported by findings that adults (Kamachi, Hill, Lander, & Vatikiotis-Bateson, 2003; Rosenblum et al., 2002) as well as 6-month-old infants (Trehub, Plantinga, & Brcic, 2009) can match dynamic auditory and visual speaker identity cues.

Subsequent studies found evidence of the ability to perceive the multisensory coherence of gender only starting at 8 months of age (Patterson & Werker, 2002) or as late as 9 to 12 months of age (Poulin-Dubois, Serbin, Kenyon, & Derbyshire, 1994). One especially interesting finding reported in several studies is that between 8 and 12 months of age, infants only exhibit perception of the multisensory coherence of female faces and voices (Noles, Kayl, & Rennels, 2012; Poulin-Dubois et al., 1994). Another noteworthy finding is that it is not until 18 months of age that infants can recognize the multisensory coherence of gender for males as well as females (Poulin-Dubois, Serbin, & Derbyshire, 1998).

The seeming discrepancy in the specific age at which the ability to perceive the multisensory coherence of gender first emerges probably reflects differences across studies in the nature of the faces and vocalizations presented. Specifically, some studies have presented animated faces (Noles et al., 2012; Patterson & Werker, 2002; Walker-Andrews et al., 1991), whereas others have presented pictures of static faces (Poulin-Dubois et al., 1998; Poulin-Dubois et al., 1994). It is possible that the earlier emergence of the ability to perceive the multisensory coherence of gender with animated faces is due to the fact that infants can more easily perceive the physiognomic aspects of such faces (Xiao et al., 2014). Similarly, some studies have presented recordings of fluent speech (Noles et al., 2012; Poulin-Dubois et al., 1998; Poulin-Dubois et al., 1994; Walker-Andrews et al., 1991), whereas other studies have presented isolated vowels (Patterson & Werker, 2002). The former may make the detection of gender per se easier because continuous utterances not only provide fundamental frequency and formant cues (as do isolated syllables), which usually distinguish between male and female voices (Gelfer & Mikos, 2005; Ladefoged & Broadbent, 1957; Peterson & Barney, 1952; Sachs et al., 1973), but also provide cues related to intonation, stress, duration, and vocal breathiness. Any of these cues could potentially signal the gender of a person (see Klatt & Klatt, 1990; Van Borsel, Janssens, & De Bodt, 2009, for examples on vocal breathiness).

The various issues raised by the specific types of stimulus materials used in past research justify further investigation. Based on previous findings, we predicted that the ability to perceive multisensory gender coherence is most likely to emerge between 6 and 9 months of age (Patterson & Werker, 2002; Poulin-Dubois et al., 1994; Walker-Andrews et al., 1991). Therefore, we investigated the ability of 6- and 9-month-old infants to match auditory and visual gender information inherent in dynamic displays of male and female faces that could be seen and heard singing. We chose singing as opposed to continuous speech for three reasons. First, songs are more prosodically varied and infants are known to be highly sensitive to prosody both in the auditory (Fernald, 1985) and visual domains (Kim & Johnson, 2014; Nakata & Trehub, 2004). Second, the sensitivity of infants to song prosody is likely to facilitate their detection of the multisensory coherence of gender. Finally, singing, unlike fluent speech, spontaneously elicits similar rhythms in different singers, which is not the case when speakers are asked to utter a monologue.

The experiment consisted of two trials where infants saw side-by-side singing faces, one a male face and the other a female face and, at the same time, heard a male or a female person singing. If the infants could detect the gender-based correspondence, we expected them to look longer at the face whose gender matched the gender of the accompanying voice than at the face that did not match it. An additional expectation was that infants might only exhibit successful perception of the multisensory coherence of female faces and voices. This prediction is based on studies that have found that 3- to 4-month-old infants reared by a female primary caregiver exhibit a spontaneous preference for female over male faces (Quinn et al., 2002) and that infants are more efficient at categorizing female as opposed to male faces (Ramsey et al., 2005; Quinn et al., 2002; Younger & Fearing, 1999). As indicated earlier, prior studies have also shown that, starting at 8 to 9 months of age, infants exhibit evidence of intersensory matching of gender for female faces and voices (Patterson & Werker, 2002; Poulin-Dubois et al., 1994). Crucial from the standpoint of the current study, however, is the fact that studies involving infants older than 6 months only presented single auditory and visual isolated syllables or static faces. Because of this methodological limitation, it is not known whether the results from these studies generalize to infant perception of the multisensory coherence of dynamic gender cues. Answering this question is important because dynamic gender cues are more representative of an infant’s typical ecological setting than isolated syllables or static faces.

Method

Participants

Forty-eight 6-month-old infants (Mage = 193 days, SD = 7 days, 182–215 days; 26 females), and 47 9-month-old infants (Mage = 285 days, SD = 7 days, 271–299 days; 21 females) were included in the final analysis. All participants were healthy full-term infants. They were recruited from the maternity ward of the Centre Hospitalier Universitaire (CHU), Grenoble, France. Eighteen additional infants were tested but eliminated from the analysis due to technical problems (6-month-olds, n = 1; 9-month-olds, n = 2), crying (6-month-olds, n = 1), or side bias (defined as greater than 95% of looking time to one side of the display; 6-month-olds, n = 10; 9-month-olds, n = 4).
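The side-bias exclusion criterion described above can be written as a simple check. This is a minimal sketch, assuming looking times in seconds for each side of the display; the function name `has_side_bias` and the decision to pool looking time over the whole session are illustrative assumptions, since the text states only the 95% threshold.

```python
def has_side_bias(left_s, right_s, threshold=0.95):
    """Return True if more than `threshold` of looking time went to one side.

    left_s, right_s: looking time (seconds) to the left and right side.
    Pooling across trials is an assumption; the source gives only the cutoff.
    """
    total = left_s + right_s
    if total <= 0:
        # No looking recorded: nothing to classify (assumed behaviour).
        return False
    return max(left_s, right_s) / total > threshold
```

For example, an infant who looked 24 s to the left and 1 s to the right (96% to one side) would be flagged, whereas 15 s versus 10 s would not.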

Stimuli

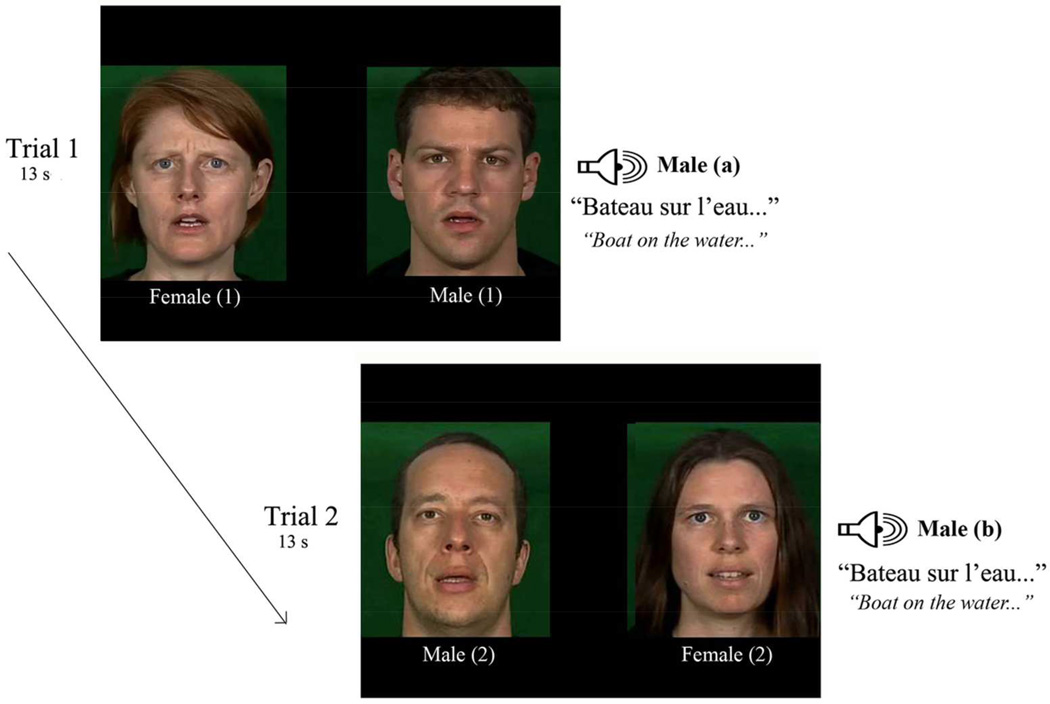

First, we video-recorded the face and voice of a female adult singing a traditional French nursery rhyme in French and used this person’s recording as a template for the way other actors should sing it. After playing this template video to each of six adult actors (3 females and 3 males) for several minutes, we video-recorded the actors while they sang the nursery rhyme in a manner as similar to the model singer as possible. During the video recording, the actors looked directly at the video camera, maintained a neutral facial expression, and sang at the same tempo. We edited the videos to make sure that they started with the same opening of the mouth. To draw the attention of the infants to the physiognomic features of the faces, only faces were presented and culture-specific visual information (i.e., make-up, jewelry) was removed. Figure 1 shows an example of the faces presented in the experiment.

Figure 1.

Schematic representation of the procedure. Infants saw a male and female face singing at the same time and heard the accompanying song during each of the two paired-preference trials. The male voice condition is shown.

The final stimulus set consisted of six different faces (20 cm in height and 15 cm in width) and six different accompanying voices. Three of the faces and voices were male and three were female. Twelve different face-voice combinations were created (six male-female face pairs, each associated with either a male or a female voice). Importantly, the voice accompanying each male-female face pair was never the voice of either actor seen in the video: we dubbed another person’s singing onto the videos to ensure that infants could not make the match based on speaker identity or on idiosyncrasies in visible and audible articulatory or respiratory patterns.
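The dubbing constraint described above (the heard voice never belongs to either visible actor) can be illustrated as follows. This is only a sketch: the actor labels `M1` to `F3` and the function `eligible_voices` are hypothetical, and the text does not say which six face pairs or which specific voices were actually used.

```python
# Hypothetical actor labels; the source gives only the counts (3 male, 3 female).
ACTORS = {
    "M1": "male", "M2": "male", "M3": "male",
    "F1": "female", "F2": "female", "F3": "female",
}

def eligible_voices(male_face, female_face, voice_gender):
    """List voices of the requested gender that belong to neither visible actor."""
    visible = (male_face, female_face)
    return sorted(
        actor for actor, gender in ACTORS.items()
        if gender == voice_gender and actor not in visible
    )
```

With three actors per gender, each face pair leaves two candidate voices of either gender, so a voice from a third person is always available.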

A perceptual test was carried out in order to measure the perceived degree of synchrony of each of the 24 individual videos (each actor-voice combination). Twenty French participants were tested (10 males, Mage = 34.3, SD = 9.2). The instruction was to watch each video carefully and evaluate the degree of synchrony between the image and the sound, on a Likert scale from 1 (very poor synchrony, long lag between image and sound) to 7 (perfect synchrony, no observable lag). Results showed that the degree of agreement between observers was high, as revealed by the intra-class correlation coefficient of 0.88 (95% confidence interval 0.78–0.94). Imperfect synchrony between the facial movements and the voices is inherent to this dubbing procedure. Crucially, however, neither face of a pair was better synchronized with the sound than its counterpart, which ensures that any matching could not be based on synchronization cues (Mmales = 5.1, SD = 0.8; Mfemales = 4.9, SD = 1.4; t(11) = 0.7, p = .50, paired two-samples t-test).

Procedure

Infants were tested individually in a quiet room. They were seated on their caregiver’s lap, 60 cm away from a 22-inch monitor that showed the videos. E-Prime software (Psychology Software Tools, Pittsburgh, PA) was used to present the videos. The experiment consisted of two 13 s trials during which infants saw the side-by-side video clips of a man and a woman singing a nursery rhyme and heard either a male or female soundtrack (Figure 1). The two faces were separated by a 15 cm gap. For each infant, the gender of the voice was the same in both trials. The male or female voices were randomly assigned to infants and differed in terms of their identity on each trial. Also, different pairs of male and female faces were presented across the two test trials. We deliberately varied the specific identity of the voices and faces across the two test trials to maximize our ability to make generalizations regarding infant ability to perceive the multisensory coherence of gender. The side of gender presentation (male or female) was counterbalanced across infants on the first trial and reversed on the following trial.
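The two-trial structure described above (same voice gender on both trials, side of each face reversed on the second trial) can be sketched as follows. The function `make_trials` and its dictionary keys are illustrative assumptions; how voices and face pairs were drawn for each infant is not modelled here.

```python
def make_trials(voice_gender, female_side_first):
    """Build the two test trials for one infant.

    voice_gender: "male" or "female", held constant across both trials.
    female_side_first: "left" or "right", side of the female face on trial 1.
    """
    if female_side_first not in ("left", "right"):
        raise ValueError("female_side_first must be 'left' or 'right'")
    opposite = {"left": "right", "right": "left"}
    trials = []
    # Trial 1 uses the assigned side; trial 2 reverses it.
    for female_side in (female_side_first, opposite[female_side_first]):
        trials.append({
            "voice_gender": voice_gender,
            "female_side": female_side,
            "male_side": opposite[female_side],
            "duration_s": 13,  # trial duration stated in the text
        })
    return trials
```

Counterbalancing across infants would then amount to assigning `female_side_first="left"` to half of the infants and `"right"` to the other half.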

All parents were instructed to fixate centrally above the screen and to remain quiet during testing. A low-light video camera, located above the stimulus-presentation monitor, was used to record infant looking behavior. This video recording was subsequently digitized and analyzed frame-by-frame by two independent observers (inter-observer agreement calculated as a Pearson r was .94). The dependent measure was the total duration of looking directed to each face.
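Inter-observer agreement expressed as a Pearson r can be computed from the two observers' looking-time codes. The following is a minimal sketch using only the standard library; the function name `pearson_r` and the assumption that each observer contributes one looking-time value per coded unit are illustrative, as the text does not specify the unit of comparison.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)
```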

Results

Infants spent an average of 95.2% of their time looking at the faces. To determine whether infants perceived multisensory gender coherence, we calculated the mean proportion-of-total-looking-time (PTLT) that each infant directed at the matching face over the two test trials. For this calculation, we divided the total amount of looking at the matching face by the total amount of looking at both faces during each trial and then averaged those two proportions over the two test trials. If infants perceived multisensory gender coherence, they should exhibit greater looking at the face that matched the heard voice (i.e., PTLT to the matching face above the 50% chance level).
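The PTLT calculation described above can be sketched in a few lines. The function name `ptlt` and the input layout (one pair of looking times per trial) are illustrative assumptions; the arithmetic follows the description in the text.

```python
def ptlt(trials):
    """Mean proportion of total looking time directed at the matching face.

    trials: sequence of (match_s, nonmatch_s) pairs, one per test trial,
    giving looking time in seconds to the matching and non-matching face.
    """
    if not trials:
        raise ValueError("at least one trial is required")
    proportions = []
    for match_s, nonmatch_s in trials:
        total = match_s + nonmatch_s
        if total <= 0:
            raise ValueError("trial has no looking time to either face")
        # Per-trial proportion of looking at the matching face.
        proportions.append(match_s / total)
    # Average the per-trial proportions (not the pooled looking times).
    return sum(proportions) / len(proportions)
```

For instance, an infant who looked 6 s at each face on the first trial and 9 s versus 3 s on the second would have per-trial proportions of .50 and .75, giving a PTLT of .625.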

An analysis of variance (ANOVA) was first conducted on the PTLT directed at the matching face to determine whether voice gender (male, female), infant gender (male, female), or age (6-month-olds, 9-month-olds) affected looking behavior. Results indicated that only the gender of the voice affected responsiveness, F(1, 87) = 5.14, p < .05, ηp² = .06. As expected, infants looked longer at the matching face in the presence of a female voice (M = 53.8%, SD = 8.5%) than in the presence of a male voice (M = 49.4%, SD = 10.3%). Neither age, F(1, 87) = 0.09, p = .77, ηp² = .001, nor infant gender, F(1, 87) = 0.02, p = .89, ηp² < .001, nor any of the interactions between factors reached significance.

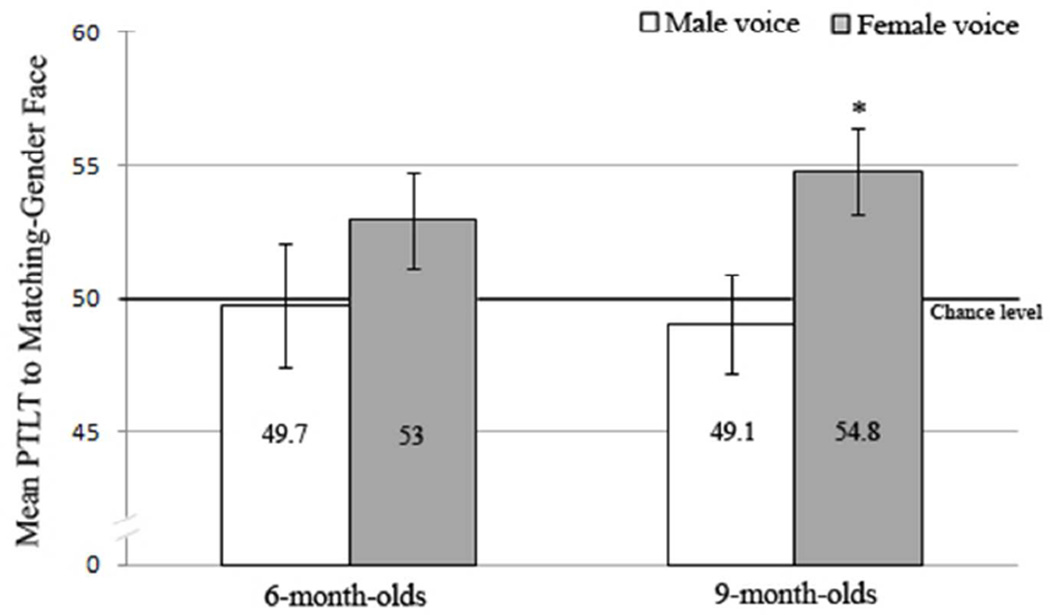

We further investigated infant ability to perform audiovisual matching by comparing mean PTLTs directed at the matching face against chance. One-sample t-tests against chance were performed at each age, separately for male and female voices, to allow a more direct comparison of our results with those of previous studies. The data for these comparisons can be seen in Figure 2. The analyses revealed differences between the two age groups in performance relative to chance: a systematic preference for the matching face emerged only in 9-month-old infants and only in the female voice condition. These differences were also reflected in analyses of individual PTLTs.
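A one-sample comparison of PTLTs against the 50% chance level can be sketched as follows. This is a minimal illustration using the standard library only: it returns the t statistic, its degrees of freedom, and Cohen's d computed as the mean difference from chance divided by the sample standard deviation. The function name `t_against_chance` is an assumption, and the p value is not computed here (it would come from a t distribution with n − 1 degrees of freedom, for example via a statistics package).

```python
import math
import statistics

def t_against_chance(ptlts, chance=0.5):
    """One-sample t statistic, degrees of freedom, and Cohen's d vs. chance.

    ptlts: one PTLT value (proportion between 0 and 1) per infant.
    """
    n = len(ptlts)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = statistics.mean(ptlts)
    sd = statistics.stdev(ptlts)  # sample SD with n - 1 in the denominator
    if sd == 0:
        raise ValueError("t is undefined when all values are identical")
    t = (mean - chance) / (sd / math.sqrt(n))
    d = (mean - chance) / sd  # equivalent to t / sqrt(n)
    return t, n - 1, d
```

Under this definition d equals t divided by the square root of n, which is consistent with the effect sizes reported in this section (for example, 1.63 / √25 ≈ .33).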

Figure 2.

Mean PTLT directed to the face that matched the heard voice in terms of gender for 6- and 9-month-old infants. Note: *p < .01.

In particular, 6-month-old infants did not prefer to look at the face that matched the gender of the voice when they heard a female voice, t(24) = 1.63, p = .12, Cohen’s d = .33, or when they heard a male voice, t(22) = 0.11, p = .92, Cohen’s d = .02. At the individual level, only 14 of the 25 infants (56%) looked longer at the female face when a female voice was presented, and just 12 of the 23 infants (52%) looked longer at the male face when a male voice was presented (binomial tests, p = .13, p = .16, respectively). The proportion of matching was not different in the female versus male voice conditions, Fisher exact test, p = .79.

The results with the 9-month-old infants indicated that they matched female faces and voices, t(22) = 2.89, p < .01, Cohen’s d = .60, but not male faces and voices, t(23) = 0.50, p = .62, Cohen’s d = .10. At the individual level, we found that 16 of the 23 infants (70%) looked longer at the female face when a female voice was presented (binomial test, p < .05), and that only 10 of 24 infants (42%) looked longer at the male face when a male voice was presented (binomial test, p = .12). The proportion of matching in the female voice condition was marginally greater than that in the male voice condition, Fisher exact test, p = .08.
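The individual-level analyses ask whether the number of infants who looked longer at the matching face exceeds what chance (p = .5) would predict. The sketch below computes an exact one-sided (upper-tail) binomial probability. The function names are illustrative, and because the text does not state how its binomial p values were computed (one-sided, two-sided, or otherwise), this sketch is not claimed to reproduce the reported values.

```python
from math import comb

def binomial_point(k, n, p=0.5):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)

def binomial_upper_tail(k, n, p=0.5):
    """Exact probability of observing k or more successes in n trials."""
    if not 0 <= k <= n:
        raise ValueError("k must lie between 0 and n")
    return sum(binomial_point(i, n, p) for i in range(k, n + 1))
```

A call such as `binomial_upper_tail(16, 23)` gives the upper-tail probability for 16 matching infants out of 23 under the chance hypothesis.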

To ensure that overall attention could not account for behavioral differences between the two age groups, we analyzed the total amount of looking at the faces with a two-way ANOVA with age (6-month-olds, 9-month-olds) as the between-subjects factor and the gender of the face (male, female) as the within-subject factor. This analysis showed that the age effect was not significant, F(1, 93) = 2.99, p = .087, ηp² = .03, suggesting that the difference in audio-visual matching performance at 6 and 9 months of age was not attributable to a difference in overall attention (6-month-olds, M = 24.5 s, SD = 2.6 s; 9-month-olds, M = 25.1 s, SD = 1.3 s). There was a significant effect of the gender of the face, F(1, 93) = 4.21, p < .05, ηp² = .04, whereas the interaction between the gender of the face and age did not reach significance, F(1, 93) = 0.55, p = .46, ηp² = .01. The infants preferred to look at the female faces (M = 12.9 s, SD = 2.6 s) over the male faces (M = 11.9 s, SD = 2.6 s). Critically, follow-up paired t-tests revealed that infants looked more at the female face (M = 13.5 s, SD = 2.4 s) than at the male face (M = 11.5 s, SD = 2.1 s) when they heard the female voice, t(47) = 3.16, p < .01, Cohen’s d = .86, but looked equally at the female face (M = 12.2 s, SD = 2.7 s) and the male face (M = 12.2 s, SD = 3 s) when they heard the male voice, t(46) = 0.04, p = .49, Cohen’s d = .001.

Discussion

The current study investigated infant perception of multisensory gender coherence. Results showed that 6-month-old infants did not match the audible and visible attributes of gender, but that 9-month-old infants did match these attributes, although only when presented with female faces and voices. These effects were observed in the comparisons of infant performance relative to chance; we did not obtain an age × voice gender interaction in the ANOVA. Nonetheless, the effects were present in both the group and individual data in the comparisons to chance. Given these results, it is possible that the absence of the interaction in the ANOVA indicates that the age-related behavioral difference is due to some quantitative (rather than qualitative) change where intersensory matching in older infants is more systematic and less variable than matching in younger infants (see Patterson & Werker, 2002, for similar results in 4.5- to 8-month-olds, and Poulin-Dubois et al., 1994, for similar results in 9- to 12-month-olds). It is also worth noting that whatever degree of developmental change is occurring in the perception of multisensory gender coherence between 6 and 9 months remains incomplete, since older infants only matched the audible and visible attributes of gender for females.

The finding that perception of multisensory gender coherence may emerge between 6 and 9 months of age takes on significance when one considers that young infants can already distinguish and categorize voices (Jusczyk et al., 1992; Miller, 1983; Miller et al., 1982) and faces (Cornell, 1974; Fagan & Singer, 1979; Leinbach & Fagot, 1993; Quinn et al., 2002) in terms of gender. The later emergence of the ability to perceive multisensory gender coherence is consistent with the idea that multisensory perceptual mechanisms develop independently from unisensory perceptual mechanisms even though the former obviously depend on the latter.

At first blush, it might seem that our findings are inconsistent with the Walker-Andrews et al. (1991) result that matching of the auditory and visual attributes of gender occurs as early as 6 months of age. A closer examination of the specific procedures used in the Walker-Andrews et al. study reveals, however, that the gender of the voice was changed between the first and the second half of the experiment. This type of change highlighted the audible gender difference and, by doing so, probably facilitated the ability of younger infants to choose the matching face. In contrast, in the present study, as well as in other previous studies where gender did not vary across trials, only older infants exhibited evidence of audio-visual matching (Patterson & Werker, 2002; Poulin-Dubois et al., 1994).

In addition, as previously mentioned, a crucial issue in the Walker-Andrews et al. study is that the voices heard by the infants were the actual voices of the two speakers whose faces were shown. This aspect of the procedure raises the possibility that idiosyncratic information might have facilitated matching because speakers differ in the way they pronounce individual segmental and suprasegmental information (Dohen, Lœvenbruck, & Hill, 2009; Johnson, Ladefoged, & Lindau, 1993; Keating, Lindblom, Lubker, & Kreiman, 1994). Because only two people were presented in Walker-Andrews et al. and because these people had been instructed to speak in synchrony during the recordings, they may each have produced idiosyncratic articulatory or respiratory patterns. Therefore, the possibility that idiosyncratic properties of the voices and faces facilitated matching in the Walker-Andrews et al. study cannot be ruled out. In contrast, in our study, for each of the face pairs presented, we used a voice that did not correspond to either of the faces. This procedure ensured that the matching could not be based on idiosyncratic properties.

As in previous studies of older infants, we found that infants only matched the gender of female faces and voices. The most likely explanation for this asymmetrical responsiveness to female versus male faces and voices is that infants typically have more experience with females as opposed to males (Rennels & Davis, 2008; Sugden et al., 2014). Also of note is that the late emergence of the ability to perceive multisensory gender coherence contrasts with the earlier emergence of the ability to perceive the multisensory coherence of other attributes. The fact is, however, that the detection of face and voice gender relations requires previous learning of arbitrary multisensory associations (e.g., low pitch and prominent eyebrows). Even though infants as young as 3 months of age already exhibit the ability to associate individual voice characteristics with particular faces (Brookes et al., 2001; Spelke & Owsley, 1979; Trehub et al., 2009), the present results suggest that infants require more extended experience with the perceptual characteristics of gender before they can recognize its multisensory coherence. The later emergence of multisensory gender categories (i.e., male vs. female) relative to multisensory identity categories (e.g., Harry vs. Sally) may reflect the fact that each gender category would need to be maintained across a range of variation of values along each of the relevant attributes (e.g., pitch for voices, eyebrow prominence for faces) for the different exemplars of the category.

Finally, it should be noted that task complexity plays a role in infant perception of multisensory gender coherence. Put differently, the detection of multisensory gender coherence is not an all-or-none phenomenon, but rather depends on what is required of the infants. This principle is illustrated by findings showing that, on the one hand, infants as young as four months of age can perceive multisensory gender coherence when it is specified by the visible and audible attributes of an isolated vowel (Patterson & Werker, 2002). When, on the other hand, infants have to extract and match audible and visible gender information from continuous streams of auditory and visual information, they appear unable to do so until several months later. The fact that task complexity must be taken into account indicates that our initial hypothesis that prosodic variation of audiovisual gender information would make it easier for infants to perceive the multisensory coherence of gender was too simplistic. Rather, it appears that infant multisensory responsiveness depends on a combination of factors, including stimulus complexity, task complexity, and the specific degree of infant perceptual expertise. This more nuanced theoretical perspective is probably a more accurate description of the mechanisms underlying the development of multisensory perception and suggests new avenues for future research. For example, future studies might contrast perception of multisensory gender coherence in infants raised primarily by female caregivers versus those raised primarily by male caregivers. Presumably, this sort of differential experience with a specific gender category would have asymmetrical effects on infant ability to perceive female versus male multisensory gender coherence.

Acknowledgments

This work was supported by grant R01 HD-46526 from the National Institute of Child Health and Human Development. We thank two anonymous reviewers for their comments.

References

- Barenholtz E, Lewkowicz DJ, Davidson M, Mavica L. Categorical congruence facilitates multisensory associative learning. Psychonomic Bulletin & Review. 2014;21:1346–1352. doi: 10.3758/s13423-014-0612-7.

- Brookes H, Slater A, Quinn PC, Lewkowicz DJ, Hayes R, Brown E. Three-month-old infants learn arbitrary auditory-visual pairings between voices and faces. Infant and Child Development. 2001;10:75–82.

- Brown E, Perrett DI. What gives a face its gender? Perception. 1993;22:829–840. doi: 10.1068/p220829.

- Coleman RO. Male and female voice quality and its relationship to vowel formant frequencies. Journal of Speech and Hearing Research. 1971;14:565–577. doi: 10.1044/jshr.1403.565.

- Cornell EH. Infants' discrimination of photographs of faces following redundant presentations. Journal of Experimental Child Psychology. 1974;18:98–106.

- Coulon M, Guellai B, Streri A. Recognition of unfamiliar talking faces at birth. International Journal of Behavioral Development. 2011;35:282–287.

- Dohen M, Lœvenbruck H, Hill H. Recognizing prosody from the lips: is it possible to extract prosodic focus from lip features? In: Liew A Wee-Chung, Wang S., editors. Visual speech recognition: Lip segmentation and mapping. Hershey, PA: Medical Information Science Reference; 2009. pp. 416–438.

- Fagan JF, Singer LT. The role of simple feature differences in infants' recognition of faces. Infant Behavior and Development. 1979;2:39–45. doi: 10.1016/S0163-6383(79)80006-5.

- Fernald A. Five-month-old infants prefer to listen to motherese. Infant Behavior and Development. 1985;8:181–195.

- Gelfer MP, Mikos VA. The relative contributions of speaking fundamental frequency and formant frequencies to gender identification based on isolated vowels. Journal of Voice. 2005;19:544–554. doi: 10.1016/j.jvoice.2004.10.006.

- Guellai B, Coulon M, Streri A. The role of motion and speech in face recognition at birth. Visual Cognition. 2011;19:1212–1233.

- Johnson K, Ladefoged P, Lindau M. Individual differences in vowel production. The Journal of the Acoustical Society of America. 1993;94:701–714. doi: 10.1121/1.406887.

- Jusczyk PW, Pisoni DB, Mullennix J. Some consequences of stimulus variability on speech processing by 2-month-old infants. Cognition. 1992;43:253–291. doi: 10.1016/0010-0277(92)90014-9.

- Kamachi M, Hill H, Lander K, Vatikiotis-Bateson E. 'Putting the face to the voice': Matching identity across modality. Current Biology. 2003;13:1709–1714. doi: 10.1016/j.cub.2003.09.005.

- Keating PA, Lindblom B, Lubker J, Kreiman J. Variability in jaw height for segments in English and Swedish VCVs. Journal of Phonetics. 1994;22:407–422.

- Kim HI, Johnson SP. Detecting 'infant-directedness' in face and voice. Developmental Science. 2014;17:621–627. doi: 10.1111/desc.12146.

- Klatt DH, Klatt LC. Analysis, synthesis, and perception of voice quality variations among female and male talkers. Journal of the Acoustical Society of America. 1990;87:820–857. doi: 10.1121/1.398894.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1141. doi: 10.1126/science.7146899.

- Ladefoged P, Broadbent D. Information conveyed by vowels. Journal of the Acoustical Society of America. 1957;29:98–104. doi: 10.1121/1.397821.

- Leinbach MD, Fagot BI. Categorical habituation to male and female faces: Gender schematic processing in infancy. Infant Behavior and Development. 1993;16:317–332.

- Lewkowicz DJ, Minar NJ, Tift AH, Brandon M. Perception of the multisensory coherence of fluent audiovisual speech in infancy: Its emergence and the role of experience. Journal of Experimental Child Psychology. 2015;130:147–162. doi: 10.1016/j.jecp.2014.10.006.

- Miller CL. Developmental changes in male/female voice classification by infants. Infant Behavior and Development. 1983;6:313–330.

- Miller CL, Younger BA, Morse PA. The categorization of male and female voices in infancy. Infant Behavior and Development. 1982;5:143–159.

- Nakata T, Trehub SE. Infants’ responsiveness to maternal speech and singing. Infant Behavior and Development. 2004;27:455–464.

- Noles EC, Kayl AJ, Rennels JL. Dynamic presentation does not augment infants' intermodal knowledge of males. Paper presented at the International Conference on Infant Studies; Minneapolis, USA. 2012.

- Patterson ML, Werker JF. Matching phonetic information in lips and voice is robust in 4.5-month-old infants. Infant Behavior and Development. 1999;22:237–247.

- Patterson ML, Werker JF. Infants' ability to match dynamic phonetic and gender information in the face and voice. Journal of Experimental Child Psychology. 2002;81:93–115. doi: 10.1006/jecp.2001.2644.

- Peterson GE, Barney HL. Control methods used in a study of the vowels. Journal of the Acoustical Society of America. 1952;24:175–185.

- Poulin-Dubois D, Serbin LA, Derbyshire A. Toddlers' intermodal and verbal knowledge about gender. Merrill-Palmer Quarterly. 1998;44:338–354.

- Poulin-Dubois D, Serbin LA, Kenyon B, Derbyshire A. Infants' intermodal knowledge about gender. Developmental Psychology. 1994;30:436–442.

- Quinn PC, Yahr J, Kuhn A, Slater AM, Pascalis O. Representation of the gender of human faces by infants: A preference for female. Perception. 2002;31:1109–1122. doi: 10.1068/p3331.

- Ramsey JL, Langlois JH, Marti NC. Infant categorization of faces: Ladies first. Developmental Review. 2005;25:212–246.

- Rennels JL, Davis RE. Facial experience during the first year. Infant Behavior and Development. 2008;31:665–678. doi: 10.1016/j.infbeh.2008.04.009.

- Rosenblum LD, Yakel DA, Baseer N, Panchal A, Nodarse BC, Niehus RP. Visual speech information for face recognition. Perception & Psychophysics. 2002;64:220–229. doi: 10.3758/bf03195788.

- Sachs J, Lieberman P, Erickson D. Anatomical and cultural determinants of male and female speech. In: Shuy RW, Fasold RW, editors. Language attitudes. Washington, DC: Georgetown University Press; 1973. pp. 74–83.

- Sai FZ. The role of the mother's voice in developing mother's face preference: Evidence for intermodal perception at birth. Infant and Child Development. 2005;14:29–50.

- Spelke ES, Owsley CJ. Intermodal exploration and knowledge in infancy. Infant Behavior and Development. 1979;2:13–27.

- Sugden NA, Mohamed-Ali MI, Moulson MC. I spy with my little eye: Typical, daily exposure to faces documented from a first-person infant perspective. Developmental Psychobiology. 2014;56:249–261. doi: 10.1002/dev.21183.

- Trehub SE, Plantinga J, Brcic J. Infants detect cross-modal cues to identity in speech and singing. Annals of the New York Academy of Sciences. 2009;1169:508–511. doi: 10.1111/j.1749-6632.2009.04851.x.

- Van Borsel J, Janssens J, De Bodt M. Breathiness as a feminine voice characteristic: a perceptual approach. Journal of Voice. 2009;23:291–294. doi: 10.1016/j.jvoice.2007.08.002.

- Walker-Andrews AS, Bahrick LE, Raglioni SS, Diaz I. Infants' bimodal perception of gender. Ecological Psychology. 1991;3:55–75.

- Xiao NG, Perrotta S, Quinn PC, Wang Z, Sun Y-HP, Lee K. On the facilitative effects of face motion on face recognition and its development. Frontiers in Psychology. 2014;5:633. doi: 10.3389/fpsyg.2014.00633.

- Younger BA, Fearing DD. Parsing items into separate categories: Developmental change in infant categorization. Child Development. 1999;70:291–303.