Abstract

PURPOSE

Mixed methods research is becoming an important methodology to investigate complex health-related topics, yet the meaningful integration of qualitative and quantitative data remains elusive and needs further development. A promising innovation to facilitate integration is the use of visual joint displays that bring data together visually to draw out new insights. The purpose of this study was to identify exemplar joint displays by analyzing the various types of joint displays being used in published articles.

METHODS

We searched for empirical articles that included joint displays in 3 journals that publish state-of-the-art mixed methods research. We analyzed each of 19 identified joint displays to extract the type of display, mixed methods design, purpose, rationale, qualitative and quantitative data sources, integration approaches, and analytic strategies. Our analysis focused on what each display communicated and its representation of mixed methods analysis.

RESULTS

The most prevalent types of joint displays were statistics-by-themes and side-by-side comparisons. Innovative joint displays connected findings to theoretical frameworks or recommendations. Researchers used joint displays for convergent, explanatory sequential, exploratory sequential, and intervention designs. We identified exemplars for each of these designs by analyzing the inferences gained through using the joint display. Exemplars represented mixed methods integration, presented integrated results, and yielded new insights.

CONCLUSIONS

Joint displays appear to provide a structure to discuss the integrated analysis and assist both researchers and readers in understanding how mixed methods provides new insights. We encourage researchers to use joint displays to integrate and represent mixed methods analysis and discuss their value.

Keywords: study design, data display, methodology, quantitative, qualitative, multimethod research, integrative analysis

INTRODUCTION

Mixed methods research increasingly is being used as a methodology in the health sciences1,2 to gain a more complete understanding of issues and hear the voices of participants. Researchers have used the mixed methods approach to examine nuanced topics, such as electronic personal health records,3 knowledge resources,4 patient-physician communication,5 and insight about intervention feasibility and implementation practices.6 Mixed methods research is the collection and analysis of both qualitative and quantitative data and its integration, drawing on the strengths of both approaches.7,8 We examined joint displays as a way to represent and facilitate integration of qualitative and quantitative data in mixed methods studies.

Integration

Increasingly, methodologists have emphasized the integration of qualitative and quantitative data as the centerpiece of mixed methods.9 Integration is an intentional process by which the researcher brings quantitative and qualitative approaches together in a study.7 Quantitative and qualitative data then become interdependent in addressing common research questions and hypotheses.10 Meaningful integration allows researchers to realize the true benefits of mixed methods to “produce a whole through integration that is greater than the sum of the individual qualitative and quantitative parts.”11 Integration, however, is not well developed or practiced. Rigorous reviews of published studies have found that often researchers collect quantitative and qualitative data but do not integrate.12–14 The work of Fetters et al15 illustrated how integration can be achieved through study design, methods, interpretation, and reporting.

Mixed Methods Designs

Basic mixed methods study designs provide an overall process to guide integration. There are 3 types of basic designs: exploratory sequential, explanatory sequential, and convergent designs.7 The exploratory design begins with a qualitative data collection and analysis phase, which builds to the subsequent quantitative phase. The explanatory design begins with a quantitative data collection and analysis phase, which informs the follow-up qualitative phase. The convergent design involves quantitative and qualitative data collection and analysis at similar times, followed by an integrated analysis. Adding features to the basic designs results in advanced designs: intervention,16,17 case study,18 multistage evaluation,19 and participatory20 approaches. Integration of the quantitative and qualitative approaches can then occur through methods in at least 4 ways: explaining quantitative results with a qualitative approach, building from qualitative results to a quantitative component (eg, instrument), merging quantitative and qualitative results, or embedding one approach within another.7,15

Integration at the Analytic and Interpretation Level

Data integration at the analytic and interpretation level has been done primarily in 2 ways: (1) by writing about the data in a discussion wherein the separate results of quantitative and qualitative analysis are discussed,21 and (2) by presenting the data in the form of a table or figure, a joint display, that simultaneously arrays the quantitative and qualitative results. A joint display is defined as a way to “integrate the data by bringing the data together through a visual means to draw out new insights beyond the information gained from the separate quantitative and qualitative results.”15 Although integrating mixed methods data in the discussion is well established,21 using joint displays in the process of analysis and interpretation has received relatively little explication, even though they are increasingly seen as an area of innovation for advancing integration. Joint displays provide a visual means to both integrate and represent mixed methods results to generate new inferences.7,8 A joint display provides a method and a cognitive framework for integration, which should be an intentional process with a clear rationale.22

Despite the evolving interest and innovation in the use of joint displays, examination of the types and applications of joint displays in health sciences research has been lacking. The purposes of this research therefore were to examine the various types of joint displays used according to the mixed methods design in the health sciences, and to identify exemplars and describe how researchers use joint displays to enhance interpretation of the integrated quantitative and qualitative data.

METHODS

Design and Study Selection

We included journals that publish high-quality, state-of-the-art mixed methods research and focused on health-related topics for the target audience of health sciences researchers. Although health sciences articles seem more likely to use joint displays, other disciplines also use joint displays that could be insightful. In the journals, we identified articles with joint displays for analysis. The first step was to search for articles published in the Annals of Family Medicine from January 2004 through September 2014. Search terms were “mixed method*,” “multimethod,” and “qualitative & quantitative.” Next, a manual review of all published articles in the Journal of Mixed Methods Research and International Journal of Multiple Research Approaches from their inception in January 2007 through September 2014 yielded additional examples. We targeted these journals because of their high impact factor and history of publishing empirical and methodologic mixed methods articles. The process consisted of scanning all 81 identified articles that addressed a health-related topic.

Eligibility Criteria

The first eligibility criterion required that articles reported an empirical health-related study, as opposed to solely being conceptual articles. The second criterion was that the study used mixed methods, defined as the collection, analysis, and integration of quantitative and qualitative data.8 Quantitative research is typically used to describe a topic statistically, generalize, make causal inferences, or test a theory. Qualitative inquiry is typically used when there is a need to explore a phenomenon, understand individuals’ experiences, or develop a theory.23 Studies reporting quantitative and qualitative components without their integration were excluded. We screened each article by title and abstract. A review of the full text was necessary to assess the third criterion, namely, the presence of a visual joint display to represent the integration of quantitative and qualitative data.

Data Extraction

For each article, we extracted the following information: (1) the design; (2) the study purpose; (3) the mixed methods rationale; (4) quantitative data sources; (5) qualitative data sources; (6) integration approaches used at the methods level: explaining, building, merging, and embedding; and (7) analytic strategies at the interpretation and reporting level: narrative, data transformation, and joint display.15 Individually, each author analyzed each joint display for what it uniquely communicated or represented (ie, mixed methods analysis) that is better captured visually than by words alone. To categorize the type of joint display used, we used a typology of joint displays24: the side-by-side, comparing results, statistics-by-themes, instrument development, adding qualitative data into an experiment, and adding a theoretical lens displays. To identify best practices and exemplars, as a group we analyzed the insights the researchers gained through using the joint display.
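The 7 extraction items listed above lend themselves to a structured record. The following is a minimal sketch in Python of how such a record might be kept; the class names, field names, and the `missing_fields` helper are illustrative assumptions and do not describe the extraction form actually used in this study.

```python
from dataclasses import dataclass
from enum import Enum


class MethodsIntegration(Enum):
    # Integration approaches at the methods level, as listed in the text.
    EXPLAINING = "explaining"
    BUILDING = "building"
    MERGING = "merging"
    EMBEDDING = "embedding"


class AnalyticStrategy(Enum):
    # Analytic strategies at the interpretation and reporting level.
    NARRATIVE = "narrative"
    DATA_TRANSFORMATION = "data transformation"
    JOINT_DISPLAY = "joint display"


@dataclass
class ExtractionRecord:
    """One row of a hypothetical extraction sheet (field names are assumptions)."""
    design: str
    purpose: str
    rationale: str
    quantitative_sources: list[str]
    qualitative_sources: list[str]
    integration: set[MethodsIntegration]
    strategies: set[AnalyticStrategy]
    display_type: str = ""  # category from the joint display typology, once coded

    def missing_fields(self) -> list[str]:
        """Return the names of extraction items that are still empty."""
        return [name for name, value in vars(self).items() if not value]
```

A record with empty items can then be flagged before group analysis, for example by calling `missing_fields()` on each record and revisiting the article for any names it returns.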

RESULTS

Included Studies and Displays

Of 81 studies identified, 19 met inclusion criteria; the remainder either lacked joint displays or were not empirical studies. From the review, we identified the distribution of joint displays organized by mixed methods design (Supplemental Tables are available at http://annfammed.org/content/13/6/554/DC1).

For explanatory sequential designs, researchers used 3 display types: side-by-side, adding a theoretical/conceptual lens, and an innovative path diagram with clinical vignettes. Displays found in exploratory sequential designs were statistics-by-themes and instrument development displays. The displays demonstrated the potential to represent mixing by linking the qualitative findings to scale items25 or to the quantitative analysis.26 The convergent design joint displays were statistics-by-themes or side-by-side comparisons. In studies using data transformation, whereby qualitative results were transformed into numeric scores, joint displays presented the statistical analysis of qualitatively derived data (eg, coded transcripts of patient visits27). Next, the intervention design displays included side-by-side displays of results to embed qualitative findings with treatment outcomes illuminating issues, such as implementation practices28 or patient experiences.29

We found innovations in the use of joint displays. Several joint displays combined types, for example, integrating a theoretical framework into a side-by-side display.30 We identified an additional type of joint display, the cross-case comparison, which fits the case study design.

Exemplar Joint Displays

As a result of this overview of joint displays, we identified 5 exemplar joint displays that researchers conducting mixed methods investigations could use to guide integration during analytic and interpretation processes. The exemplars illustrate unique characteristics of joint displays and their value for generating inferences, and are described in greater detail below.

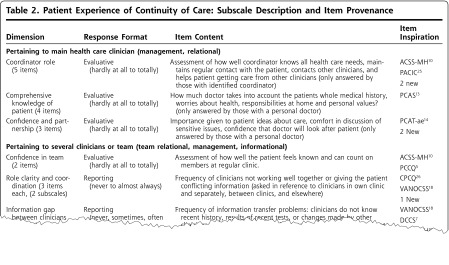

Exploratory Sequential Design Joint Display

Haggerty et al25 conducted an exploratory sequential design study to develop and validate an instrument to assess continuity of care from patients’ perspectives. They examined themes from 33 qualitative studies of patient care experiences and matched codes to existing instruments and added new items.

An instrument development joint display mapped the qualitative dimensions of care continuity to quantitative instrument items (Figure 1). Major headings of rows marked each continuity of care dimension. Columns provided the response format, a description of item content, and the source of the item (ie, existing survey or new). By presenting each qualitatively derived dimension from the patient perspective along with particular item content, the display clearly articulated how the authors systematically developed the instrument.
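The layout described above (a heading row per dimension, with columns for response format, item content, and item source) can be sketched as a small grouping routine. This is an illustration only: the rows are hypothetical placeholders that do not reproduce the published instrument, and the function names are assumptions.

```python
from collections import defaultdict

# Placeholder rows: (dimension, item content, response format, source).
# All values are hypothetical and do not reproduce the published instrument.
items = [
    ("Dimension A", "Item content 1", "5-point scale", "existing survey"),
    ("Dimension A", "Item content 2", "5-point scale", "new"),
    ("Dimension B", "Item content 3", "yes/no", "new"),
]


def group_by_dimension(rows):
    """Group item rows under their qualitative dimension, keeping input order."""
    grouped = defaultdict(list)
    for dimension, content, response_format, source in rows:
        grouped[dimension].append((response_format, content, source))
    return dict(grouped)


def render(grouped):
    """Return the display as text: a heading row per dimension, then its items."""
    lines = []
    for dimension, entries in grouped.items():
        lines.append(dimension.upper())
        for response_format, content, source in entries:
            lines.append(f"  {response_format:<14}| {content:<16}| {source}")
    return "\n".join(lines)


print(render(group_by_dimension(items)))
```

Keeping the source column makes it visible which items were matched to existing instruments and which were newly written from the qualitative codes.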

Figure 1.

A joint display from an exploratory sequential design that maps qualitatively derived codes to items.

Reprinted with permission from Ann Fam Med. 2012;10(5):443–451.25

Explanatory Sequential Design Joint Display

Finley et al31 had an explanatory sequential study aim of developing and validating the Work Relationship Scale (WRS) for primary care clinics. They used the model of Lanham et al32 containing 7 characteristics of work relationships in high-quality practices. They analyzed measurement properties of the WRS and correlations with patient ratings of care quality. They interviewed key informants regarding clinic relationships and analyzed interviews based on the 7 characteristics32 with particular attention to patterns among high vs low WRS clinics. They concluded that interview data supported the statistical analysis, providing validity evidence for the WRS and indicating the importance of relationships in the delivery of primary care.

A statistics-by-themes joint display in this study compared clinics with high and low WRS scores (Figure 2). The display had a row for low WRS clinics with a representative quote and then a row for high WRS clinics with a representative quote. Headings organized the results by the theoretical model of work relationships of Lanham et al.32 A noteworthy characteristic was that this theoretical framework threaded throughout the study. The authors discussed the insight gained by examining the characteristics of high- vs low-quality relationships (ie, quantitative results) in primary care clinics, noting “considerable differences emerged in patterns of communication and relating between low- and high-scoring clinics.”31 They were able to communicate this message in their table as well.
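A statistics-by-themes arrangement of this kind can be sketched as follows: units are split into groups by a quantitative score, and coded quotes are then filed under each theme by group. The cutoff rule, identifiers, and data below are hypothetical assumptions for illustration; the source does not state how high and low WRS clinics were defined.

```python
def statistics_by_themes(scores, quotes, cutoff):
    """Build {theme: {"low": [...], "high": [...]}} from scores and coded quotes.

    scores: {clinic_id: numeric scale score}
    quotes: list of (clinic_id, theme, quote_text)
    cutoff: scores >= cutoff count as "high" (the cutoff rule is an assumption)
    """
    display = {}
    for clinic_id, theme, text in quotes:
        group = "high" if scores[clinic_id] >= cutoff else "low"
        display.setdefault(theme, {"low": [], "high": []})[group].append(text)
    return display


# Hypothetical placeholder data, not taken from the study.
scores = {"clinic_1": 2.1, "clinic_2": 4.3}
quotes = [
    ("clinic_1", "Theme X", "placeholder quote from a low-scoring clinic"),
    ("clinic_2", "Theme X", "placeholder quote from a high-scoring clinic"),
]

for theme, groups in statistics_by_themes(scores, quotes, cutoff=3.0).items():
    print(theme)
    for group in ("low", "high"):
        for text in groups[group]:
            print(f"  {group:>4}: {text}")
```

Using the themes of a theoretical framework as the outer keys mirrors the way the display in this exemplar was organized by the model's characteristics.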

Figure 2.

A joint display from an explanatory sequential design that is organized by a theoretical framework and relates categorical scores to quotes.

Reprinted with permission from Ann Fam Med. 2013;11(6):543–549.31

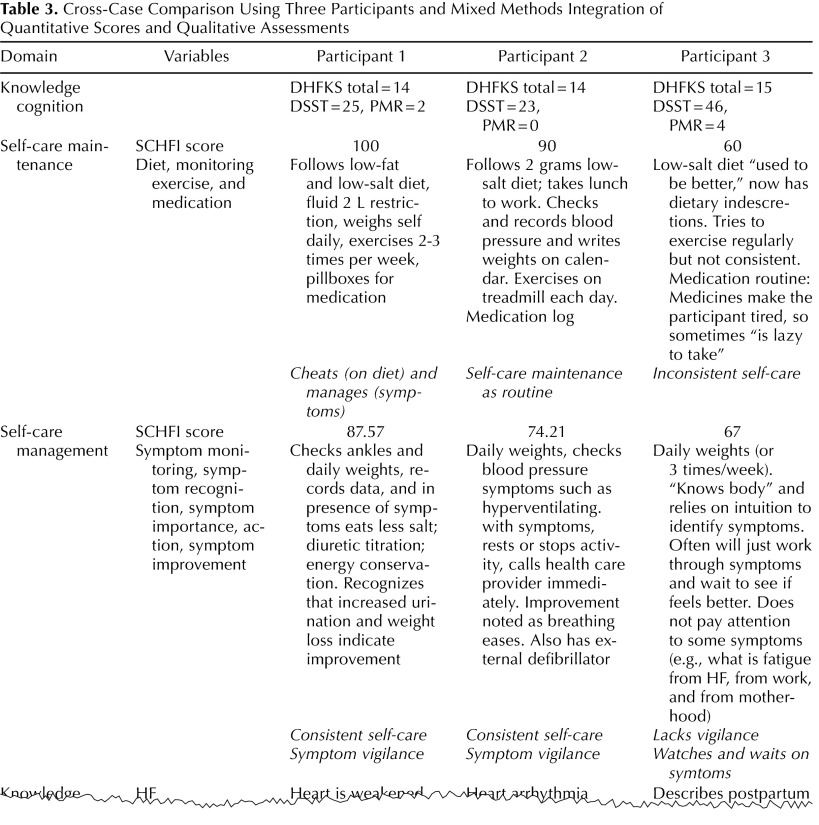

Convergent Design Joint Display

Dickson et al33 investigated how cognitive function and knowledge affected heart failure self-care using a convergent design in which they merged quantitative and qualitative results to better understand the complexity of the clinical phenomenon. At 2 outpatient heart failure specialty clinics of a large urban medical center in the United States, they administered standardized instruments to measure self-care, knowledge, and cognitive function, and they concurrently conducted patient interviews to understand their self-care practices. Integration focused on the concordance between qualitative and quantitative results.

Dickson et al33 developed a cross-case comparison joint display to compare and contrast the interview data with quantitative self-care, cognitive function, and knowledge scales (Figure 3). The display contained a row for each qualitative domain (ie, theme) and reported the corresponding quantitative variables for the domain. Participants were arrayed in columns. Within each cell were the actual quantitative scores as well as qualitative summaries and quotes for each domain-participant combination. The display allowed them “to more fully understand”33 the influences of cognitive function in order to develop effective solutions to improve heart failure self-care. They used this technique to validate the quantitative knowledge and self-care scores while also looking for instances of inconsistency. It illustrates qualitative and quantitative data for multiple participant cases.
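The cross-case structure (domains in rows, participants in columns, and a score plus a qualitative summary in each cell) can be sketched as a nested mapping, with a simple check for cells where the two strands disagree. The thresholds, the boolean qualitative coding, and all values are hypothetical assumptions and are not drawn from the study.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    score: float         # quantitative scale score for this domain
    summary: str         # qualitative summary or quote
    qual_adequate: bool  # analyst's qualitative judgment (hypothetical coding)


def discordant_cells(matrix, adequate_at):
    """Return (domain, participant) pairs where score and narrative disagree.

    matrix: {domain: {participant: Cell}}
    adequate_at: {domain: score treated as adequate} (thresholds are assumptions)
    """
    flagged = []
    for domain, row in matrix.items():
        for participant, cell in row.items():
            quant_adequate = cell.score >= adequate_at[domain]
            if quant_adequate != cell.qual_adequate:
                flagged.append((domain, participant))
    return flagged


# Hypothetical placeholder values only.
matrix = {
    "Self-care": {
        "P1": Cell(80.0, "placeholder summary", True),
        "P2": Cell(75.0, "placeholder summary", False),
    },
    "Knowledge": {
        "P1": Cell(40.0, "placeholder summary", False),
        "P2": Cell(90.0, "placeholder summary", True),
    },
}
print(discordant_cells(matrix, {"Self-care": 70.0, "Knowledge": 70.0}))
```

Flagged cells are candidates for closer reading rather than errors; in a convergent design, inconsistency between strands can itself be a finding.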

Figure 3.

A cross-case comparison joint display from a convergent design showing scored items and descriptions.

Reprinted with permission from J Mixed Methods Res. 2011;5(2):167–189.33

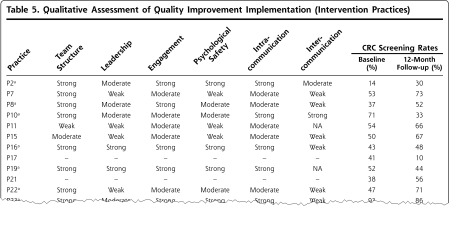

Mixed Methods Intervention Design Joint Display 1

Shaw et al28 conducted a cluster-randomized trial of a quality improvement (QI) intervention to improve colorectal cancer screening in primary care practices with an embedded qualitative evaluation. Across 23 practices, they collected colorectal cancer screening rates through medical record reviews and surveys. They used a qualitative multimethod assessment, a reflective adaptive process, and learning collaboratives via interviews, observations, and audio-recordings. The analysis of practices at baseline and the 12-month follow-up revealed no statistically significant improvements in intervention and control arms, but integrating the qualitative findings yielded insights into QI implementation and patterns in high- and low-performing practices.

Shaw et al28 created a side-by-side joint display that presented each row as a practice (Figure 4). The columns then displayed the qualitatively derived QI implementation characteristics next to the quantitative colorectal cancer screening rates at baseline and 12 months. A helpful feature was the identification of strong, moderate, and weak practices based on the patterns of implementation. Their use of the joint display as a framework to discuss their integrated analysis was unique. For each practice, they discussed the QI implementation characteristics and the colorectal cancer screening rate change from baseline. They noted the value of integrating qualitative methods “to answer recent calls to explore the implementation context of null trials.”28 The joint display addressed this call by presenting QI implementation patterns in light of the colorectal cancer screening rate.
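A side-by-side display of this kind, with one row per practice, can be sketched by pairing a qualitative implementation rating with the change in screening rate from baseline. The practices, ratings, and rates below are hypothetical placeholders, and sorting by rating is an assumption made for readability.

```python
# Hypothetical placeholder rows: (practice, implementation rating, baseline %, 12-month %).
practices = [
    ("Practice A", "strong", 40.0, 48.0),
    ("Practice B", "weak", 42.0, 41.0),
    ("Practice C", "moderate", 35.0, 37.5),
]


def side_by_side(rows):
    """Pair each practice's qualitative rating with its screening-rate change."""
    order = {"strong": 0, "moderate": 1, "weak": 2}
    table = [
        (name, rating, baseline, followup, followup - baseline)
        for name, rating, baseline, followup in rows
    ]
    # Sort so practices with similar implementation patterns sit together.
    return sorted(table, key=lambda r: order[r[1]])


print(f"{'Practice':<12}{'Implementation':<16}{'Baseline':>9}{'12 mo':>8}{'Change':>8}")
for name, rating, baseline, followup, change in side_by_side(practices):
    print(f"{name:<12}{rating:<16}{baseline:>9.1f}{followup:>8.1f}{change:>+8.1f}")
```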

Figure 4.

A joint display from a mixed methods intervention design that presents qualitatively derived implementation practices with quantitative screening rate results.

Reprinted with permission from Ann Fam Med. 2013;11(3):220–228, S221–S228.28

Mixed Methods Intervention Design Joint Display 2

Bradt et al29 conducted a mixed methods cross-over trial that exposed patients to both music therapy (MT) and music medicine (MM). Collecting data from patients with cancer in an academic hospital in the United States, they conducted semistructured interviews to understand patients’ experiences with music intervention and hear their voices about the impact of the intervention. Quantitative data sources were a visual analogue scale and numeric pain intensity. Results indicated that both were effective, although most patients preferred MT.

To represent integration, Bradt et al29 created a joint display to represent adding qualitative data into an experiment. It was a side-by-side display that integrated the experiences of patients whose quantitative data indicated greater benefit of MT vs MM or vice versa (Figure 5). After calculating z scores of treatment benefit for MT and MM, they developed a combination typology of 4 categories of treatment effect (eg, great improvement with MM–less improvement or worsening with MT). The display was organized by rows for each of the 4 types of treatment benefits. Columns represent change in pain for MT and MM and patient experiences. The reader can scan each row (ie, treatment benefit) and see the z scores of the most extreme cases side-by-side with patients’ experience. The display helped the authors to explore “if and why certain patients benefited more from MT than MM sessions or vice versa.”29 This exemplar uniquely illustrated a cross-over trial wherein patients had both exposures, MT and MM, and distributed their comments relative to their responses to both. The authors reported the table added insights into how patient characteristics affected treatment benefits and provided several examples with implications for practice.
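The typology step can be sketched as standardizing each patient's treatment benefit under the 2 conditions and assigning a category from the pair of z scores. This is a sketch under stated assumptions: the zero cut point, the category labels, and the scores are hypothetical, and the source does not specify the rule the authors used to form their 4 categories.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize values to mean 0 and sample standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def benefit_category(z_mt, z_mm):
    """Assign one of 4 quadrants; the zero cut point is an assumption."""
    mt = "greater" if z_mt > 0 else "lesser"
    mm = "greater" if z_mm > 0 else "lesser"
    return f"{mt} benefit with MT / {mm} benefit with MM"


# Hypothetical benefit scores (higher = more benefit), one pair per patient.
mt_benefit = [3.0, 1.0, 0.0, 2.0]
mm_benefit = [0.0, 2.0, 1.0, 3.0]

pairs = zip(z_scores(mt_benefit), z_scores(mm_benefit))
for patient, (z_mt, z_mm) in enumerate(pairs, start=1):
    print(patient, round(z_mt, 2), round(z_mm, 2), benefit_category(z_mt, z_mm))
```

Once each patient has a category, interview excerpts from the most extreme cases in each category can be placed beside the z scores to form the rows of the display.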

Figure 5.

A joint display from a mixed methods intervention design organized by 4 categories of patient treatment benefits.

Reprinted with permission from Support Care Cancer. 2015;23(5):1261–1271.29

DISCUSSION

In this study, patterns emerged about the use of joint displays across mixed methods designs. First, the integration and analytic strategies were relatively similar within each of the designs. Convergent design joint displays were mainly statistics-by-themes or side-by-side comparisons. Convergent designs were most prevalent in the data set and tend to be the most complex7 from an integration standpoint, so we anticipated varied uses of joint displays. The explanatory, exploratory, and intervention designs demonstrated other innovative displays, such as aligning mixed results to theory31 and policy recommendations.30 Furthermore, across designs, the most frequently used type of joint display was the statistics-by-themes type. This type typically involved reporting categorical data, such as “high,” “medium,” and “low” patient satisfaction scores,34 to organize the presentation of themes or quotes. The most insightful joint displays were consistent with both the mixed methods design and the approach to integration. For example, researchers using a convergent design and a merging approach to integration represented results with a statistics-by-themes display to array themes about patient perceptions of physician encounters against sociodemographic and health characteristics.5 The displays assist readers in understanding how quantitative and qualitative data interfaced and in considering inferences.

On the basis of the results of this study and existing literature,10,24,35 we recommend the following best practices: (1) label quantitative and qualitative results, (2) be consistent with the design, (3) be consistent with the integration approach, and (4) identify inferences or insights generated. Indeed, several articles30,33 included an integration section to describe their approach and insights gained. Identifying insights can help researchers consider their integration rationale and share it with the research community.

A limitation of this study is that our sources were predominately 3 journals, so selection bias is present. Assuredly, additional studies in health sciences have used joint displays, as nearly 700 empirical mixed methods studies have been identified from 2000 to 2008 alone in the social and health sciences.1 Although not the focus of this study, future inquiry could also focus on the use of graphical displays. We are aware of some examples29,36 but found relatively few new ones.

Our analysis of 19 joint displays in published health-related literature demonstrates the intent of a variety of joint displays for providing insights and inferences in mixed methods studies. Joint displays may provide a structure to discuss the integrated analysis. Integration is needed to reach the full potential of a mixed methods approach and gain new insights. Thus, we call for increased application of joint displays to integrate and represent mixed methods analysis. We urge researchers to discuss the “synergy”11 gained by integrating quantitative and qualitative methods.

Footnotes

Conflicts of interest: authors report none.

Supplementary materials: Available at http://www.AnnFamMed.org/content/13/6/554/suppl/DC1/.

References

- 1. Ivankova NV, Kawamura Y. Emerging trends in the utilization of integrated designs in the social, behavioral, and health sciences. In: Tashakkori A, Teddlie C, eds. Sage Handbook of Mixed Methods in Social and Behavioral Research. 2nd ed. Thousand Oaks, CA: Sage; 2010:581–611.

- 2. Plano Clark VL. The adoption and practice of mixed methods: U.S. trends in federally funded health-related research. Qual Inq. 2010;16(6):428–440.

- 3. Krist AH, Woolf SH, Bello GA, et al. Engaging primary care patients to use a patient-centered personal health record. Ann Fam Med. 2014;12(5):418–426.

- 4. Pluye P, Grad RM, Johnson-Lafleur J, et al. Number needed to benefit from information (NNBI): proposal from a mixed methods research study with practicing family physicians. Ann Fam Med. 2013;11(6):559–567.

- 5. Wittink MN, Barg FK, Gallo JJ. Unwritten rules of talking to doctors about depression: integrating qualitative and quantitative methods. Ann Fam Med. 2006;4(4):302–309.

- 6. Jaen CR, Crabtree BF, Palmer RF, et al. Methods for evaluating practice change toward a patient-centered medical home. Ann Fam Med. 2010;8 Suppl 1:S9–20; S92.

- 7. Creswell JW. A Concise Introduction to Mixed Methods Research. Thousand Oaks, CA: Sage; 2015.

- 8. Creswell JW, Plano Clark VL. Designing and Conducting Mixed Methods Research. 2nd ed. Thousand Oaks, CA: Sage; 2011.

- 9. O’Cathain A, Murphy E, Nicholl J. Integration and publications as indicators of “yield” from mixed methods studies. J Mixed Methods Res. 2007;1(2):147–163.

- 10. Bazeley P. Integrative analysis strategies for mixed data sources. Am Behav Sci. 2012;56(6):814–828.

- 11. Fetters MD, Freshwater D. The 1 + 1 = 3 integration challenge. J Mixed Methods Res. 2015;9(2):115–117.

- 12. Bryman A. Barriers to integrating quantitative and qualitative research. J Mixed Methods Res. 2007;1(1):8–22.

- 13. Bryman A. Integrating quantitative and qualitative research: how is it done? Qual Res. 2006;6(1):97–113.

- 14. O’Cathain A, Murphy E, Nicholl J. The quality of mixed methods studies in health services research. J Health Serv Res Policy. 2008;13(2):92–98.

- 15. Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs-principles and practices. Health Serv Res. 2013;48(6 Pt 2):2134–2156.

- 16. Creswell JW, Fetters MD, Plano Clark VL, Morales A. Mixed methods intervention trials. In: Andrew S, Halcomb EJ, eds. Mixed Methods Research for Nursing and the Health Sciences. Oxford, United Kingdom: John Wiley & Sons; 2009:161–180.

- 17. Sandelowski M. Focus on qualitative methods: using qualitative methods in intervention studies. Res Nurs Health. 1996;19(4):359–364.

- 18. Yin RK. Case Study Research: Design and Methods. Thousand Oaks, CA: Sage; 2014.

- 19. Nastasi BK, Hitchcock J, Sarkar S, Burkholder G, Varjas K, Jayasena A. Mixed methods in intervention research: theory to adaptation. J Mixed Methods Res. 2007;1(2):164–182.

- 20. Greysen SR, Allen R, Lucas GI, Wang EA, Rosenthal MS. Understanding transitions in care from hospital to homeless shelter: a mixed-methods, community-based participatory approach. J Gen Intern Med. 2012;27(11):1484–1491.

- 21. Stange KC, Crabtree BF, Miller WL. Publishing multimethod research. Ann Fam Med. 2006;4(4):292–294.

- 22. Greene JC. Mixed Methods in Social Inquiry. San Francisco, CA: Jossey-Bass; 2007.

- 23. Creswell JW. Qualitative Inquiry and Research Design: Choosing Among Five Approaches. 3rd ed. Thousand Oaks, CA: Sage; 2013.

- 24. Guetterman T, Creswell JW, Kuckartz U. Using joint displays and MAXQDA software to represent the results of mixed methods research. In: McCrudden M, Schraw G, Buckendahl C, eds. Use of Visual Displays in Research and Testing: Coding, Interpreting, and Reporting Data. Charlotte, NC: Information Age Publishing; 2015:145–176.

- 25. Haggerty JL, Roberge D, Freeman GK, Beaulieu C, Bréton M. Validation of a generic measure of continuity of care: when patients encounter several clinicians. Ann Fam Med. 2012;10(5):443–451.

- 26. Nutting PA, Rost K, Dickinson M, et al. Barriers to initiating depression treatment in primary care practice. J Gen Intern Med. 2002;17(2):103–111.

- 27. Tarn DM, Paterniti DA, Orosz DK, Tseng C-H, Wenger NS. Intervention to enhance communication about newly prescribed medications. Ann Fam Med. 2013;11(1):28–36.

- 28. Shaw EK, Ohman-Strickland PA, Piasecki A, et al. Effects of facilitated team meetings and learning collaboratives on colorectal cancer screening rates in primary care practices: a cluster randomized trial. Ann Fam Med. 2013;11(3):220–228, S221–S228.

- 29. Bradt J, Potvin N, Kesslick A, et al. The impact of music therapy versus music medicine on psychological outcomes and pain in cancer patients: a mixed methods study. Support Care Cancer. 2015;23(5):1261–1271.

- 30. Petros SG. Use of a mixed methods approach to investigate the support needs of older caregivers to family members affected by HIV and AIDS in South Africa. J Mixed Methods Res. 2012;6(4):275–293.

- 31. Finley EP, Pugh JA, Lanham HJ, et al. Relationship quality and patient-assessed quality of care in VA primary care clinics: development and validation of the work relationships scale. Ann Fam Med. 2013;11(6):543–549.

- 32. Lanham HJ, McDaniel RR, Jr, Crabtree BF, et al. How improving practice relationships among clinicians and nonclinicians can improve quality in primary care. In: Joint Commission Journal on Quality and Patient Safety/Joint Commission Resources. 2009;35(9):457.

- 33. Dickson VV, Lee CS, Riegel B. How do cognitive function and knowledge affect heart failure self-care? J Mixed Methods Res. 2011;5(2):167–189.

- 34. Andrew S, Salamonson Y, Everett B, Halcomb E, Davidson PM. Beyond the ceiling effect: using a mixed methods approach to measure patient satisfaction. International Journal of Multiple Research Approaches. 2011;5(1):52–63.

- 35. Dickinson WB. Visual displays for mixed methods findings. In: Tashakkori A, Teddlie C, eds. SAGE Handbook of Mixed Methods in Social & Behavioral Research. 2nd ed. Thousand Oaks, CA: Sage; 2010.

- 36. Legocki LJ, Meurer WJ, Frederiksen S, et al. Clinical trialist perspectives on the ethics of adaptive clinical trials: a mixed-methods analysis. BMC Med Ethics. 2015;16(1):27.