Abstract

The use of stepped wedge designs in cluster-randomized trials and implementation studies has increased rapidly in recent years but there remains considerable debate regarding the merits of the design. We discuss three key issues in the design and analysis of stepped wedge trials - time-on-treatment effects, treatment effect heterogeneity and cohort studies.

Keywords: stepped wedge design, cluster randomized trial, implementation research

1. Introduction

The use of stepped wedge designs in cluster-randomized trials and implementation studies has become increasingly common in recent years. In the first comprehensive literature review on this topic, Brown & Lilford (2007) found 12 references to papers incorporating stepped wedge designs. Four years later, Mdege et al. (2011) reported 25 citations. More recently, an informal PubMed search covering just the 7-month period from August 2014 to February 2015 found 18 studies reporting use of the stepped wedge design, as well as additional methodological papers. In spite of this rapid increase in use, there remains considerable debate regarding the merits of the stepped wedge design.

Figure 1 illustrates a classic stepped wedge cluster randomized design compared to a standard parallel cluster randomized trial design. In general, a stepped wedge cluster randomized trial is any design in which the clusters cross over unidirectionally (in a randomized order) from the control or standard of care condition to the intervention condition in a staggered fashion such that the intervention effect is partly, but not completely, confounded with time. Outcome data collection must be synchronized in time between clusters. See Hemming et al. (2015) figures 1–3 for some variations of this design. Note that this relatively broad definition of the stepped wedge design includes some designs that Kotz et al. (2012b) include as variations of parallel designs. Nonetheless, we choose this definition to emphasize the unique features of this design – the unidirectional crossover and the partial confounding of intervention effect and time. Note that a before-after trial does not qualify as a stepped wedge design since the intervention effect is completely confounded with time in that design.

Figure 1.

Schematic representation of parallel versus stepped wedge cluster randomized designs with 8 clusters. Each row represents a cluster. C = Control condition, T = Treatment condition

An informative series of papers and letters (Mdege et al., 2011; Kotz et al., 2012b; Mdege et al., 2012; Kotz et al., 2012a; Hemming et al., 2013; Kotz et al., 2013; Hemming et al., 2015) lays out many of the strengths and weaknesses of the stepped wedge design. Table 1 summarizes key issues raised in these papers and others. In this manuscript we provide more detailed comments on three key issues relevant to the design and analysis of stepped wedge trials - delayed treatment effects, heterogeneous treatment effects, and cohort studies.

Table 1.

Summary of key issues regarding stepped wedge designs

| Issue | Comments |
|---|---|
| Feasibility | |
| Social/Political | |
| Duration | |
| Delayed rollout/effects | |
| Size | |
| Participant burden | |
| Power | |
| Analysis | |

2. Power calculation and analysis of stepped wedge trials

In this section we introduce notation and briefly review standard approaches for analyzing data and computing power in stepped wedge trials based on repeated cross-sectional samples (see section 5 for remarks on cohort-based trials).

Any analysis of data from a stepped wedge design must account for the intentional confounding of time and treatment as well as the correlation between repeated observations in the same cluster. Model based approaches include generalized linear mixed models (glmm) (Hussey & Hughes, 2007) or generalized estimating equations (gee) (Scott et al., 2015).

Hussey & Hughes (2007) propose the following model for data from a stepped wedge trial:

$$Y_{ijk} = \mu + \alpha_i + \beta_j + X_{ij}\theta + e_{ijk} \qquad (1)$$

where Yijk denotes the response corresponding to individual k at time j from cluster i (i = 1 … I, j = 1 … T, k = 1 … mij), βj are fixed time effects corresponding to interval j (βT = 0 for identifiability), Xij is an indicator of the treatment mode in cluster i at time j, θ is the treatment effect, μ is the mean of Yijk in the control or standard of care condition at time T, αi is a random intercept for cluster i such that αi ~ N(0, τ2), and eijk ~ N(0, σ2) is the individual-level error. Typically, Xij = 1 if the intervention is provided in cluster i at time j and 0 otherwise. If mij = m for all i and j then an analysis of the cluster-level means (Ȳij) is possible; however, if the cluster sizes vary then an analysis of individual-level data provides increased efficiency.

An important assumption of model (1) is that the underlying time effect is the same for each cluster. This assumption could be relaxed somewhat to allow groups of clusters (strata) to have different underlying time trends by adding a stratum main effect and a strata by time interaction. However, it is not possible to allow each cluster to have a separate time trend; such a model would be unidentifiable (unless one assumes a parametric form for the time trend).

The approximate power for testing the hypothesis Ho : θ = 0 versus Ha : θ = θA may be determined from the formula

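$$\text{power} = \Phi\left(\frac{\theta_A}{\sqrt{\mathrm{Var}(\hat\theta)}} - Z_{1-\alpha/2}\right) \qquad (2)$$

where Φ is the standard normal cumulative distribution function, Z1−α/2 is its (1 − α/2) quantile, and α is the two-sided significance level.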
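The power calculation can be sketched in a few lines. This is a minimal illustration that assumes the usual normal-approximation form, power = Φ(|θA|/√Var(θ̂) − z), with z the (1 − α/2) standard normal quantile; the function name `stepped_wedge_power` and the default α = 0.05 are illustrative choices, not part of the original text.

```python
from math import sqrt
from statistics import NormalDist


def stepped_wedge_power(theta_a: float, var_theta: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided Wald test of H0: theta = 0.

    Assumed form: Phi(|theta_a| / sqrt(var_theta) - z_{1 - alpha/2}).
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    critical = std_normal.inv_cdf(1.0 - alpha / 2.0)
    return std_normal.cdf(abs(theta_a) / sqrt(var_theta) - critical)
```

Any value of Var(θ̂), whether from a closed-form expression or from the generalized least squares computation, can be passed as `var_theta`.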

For the special case where the Xij are all 0 or 1 and mij = m, a closed-form expression for Var(θ̂) based on model (1) is given in Hussey & Hughes (2007). For a “balanced” stepped wedge design (one with T time periods, I clusters (all of equal size m), all clusters starting in the control condition and h = I/(T − 1) (an integer) clusters crossing over from control to intervention at each time) Rhoda et al. (2011) show that

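$$\mathrm{Var}(\hat\theta) = \frac{12\,\sigma^2\,(T-1)\,[1 + \rho(mT - 1)]}{m\,I\,T\,(T-2)\,[2 + \rho(mT + m - 2)]} \qquad (3)$$

where ρ = τ2/(τ2 + σ2) is the intracluster correlation. For binary outcomes, one may set σ2 = μ(1 − μ). Woertman et al. (2013) provide a formula for direct calculation of sample size by deriving a design effect for balanced stepped wedge cluster randomized trials (see also Hemming & Girling (2013) and de Hoop et al. (2013)).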
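For a balanced design, the variance can be evaluated directly. The sketch below assumes one algebraic form of the balanced-design variance, obtained by simplifying the closed form for model (1) with equal cluster sizes: Var(θ̂) = 12σ²(T − 1)[1 + ρ(mT − 1)] / (mIT(T − 2)[2 + ρ(mT + m − 2)]), with ρ = τ²/(τ² + σ²). The function name and argument names are hypothetical, and the expression should be checked against a generalized least squares computation before being relied upon.

```python
def balanced_sw_variance(n_clusters: int, n_periods: int, m: int,
                         sigma2: float, tau2: float) -> float:
    """Var(theta-hat) for a balanced stepped wedge design under model (1).

    Assumes equal cluster-period sizes m and h = I / (T - 1) clusters
    crossing over at each step (an assumption of this sketch).
    """
    if n_periods < 3:
        raise ValueError("need T >= 3; with T = 2 treatment is confounded with time")
    if n_clusters % (n_periods - 1) != 0:
        raise ValueError("I must be a multiple of T - 1 for a balanced design")
    rho = tau2 / (tau2 + sigma2)  # intracluster correlation
    I, T = n_clusters, n_periods
    numerator = 12.0 * sigma2 * (T - 1) * (1.0 + rho * (m * T - 1))
    denominator = m * I * T * (T - 2) * (2.0 + rho * (m * T + m - 2))
    return numerator / denominator
```

With τ² = 0 the expression reduces to the ordinary least squares variance with fixed period effects, which gives a quick sanity check.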

More generally, for situations where the above do not apply (i.e. Xij not 0 or 1, or mij not all equal), Var(θ̂) may be computed using basic results from generalized least squares (Draper & Smith, 1998). Specifically, let D be the design matrix from model (1) and V be the covariance matrix of Y. Then the appropriate diagonal element of (D′V⁻¹D)⁻¹ gives the variance of θ̂ (see appendix for details).
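The generalized least squares computation can be sketched with NumPy. This is a minimal illustration at the cluster-period mean level under model (1); the helper names `balanced_schedule` and `gls_var_theta` are hypothetical, and the covariance of each cluster's period means is assumed to be diag(σ²/mij) + τ²11′.

```python
import numpy as np


def balanced_schedule(n_clusters: int, n_periods: int) -> np.ndarray:
    """X[i, j] = 1 once cluster i has crossed over; h = I/(T-1) clusters per step."""
    h, remainder = divmod(n_clusters, n_periods - 1)
    if remainder:
        raise ValueError("I must be a multiple of T - 1")
    X = np.zeros((n_clusters, n_periods))
    for i in range(n_clusters):
        first_treated = i // h + 1  # 0-based index of the first treated period
        X[i, first_treated:] = 1.0
    return X


def gls_var_theta(X: np.ndarray, m, sigma2: float, tau2: float) -> float:
    """Last diagonal element of (D' V^-1 D)^-1 for cluster-period means."""
    n_clusters, n_periods = X.shape
    m = np.broadcast_to(np.asarray(m, dtype=float), X.shape)
    time_dummies = np.eye(n_periods)[:, :-1]  # beta_T = 0 for identifiability
    D_blocks = []
    V = np.zeros((n_clusters * n_periods, n_clusters * n_periods))
    for i in range(n_clusters):
        # Columns: mu, beta_1 .. beta_{T-1}, theta
        D_blocks.append(np.column_stack([np.ones(n_periods), time_dummies, X[i]]))
        rows = slice(i * n_periods, (i + 1) * n_periods)
        # Sampling variance on the diagonal, tau2 shared by all periods in a cluster
        V[rows, rows] = np.diag(sigma2 / m[i]) + tau2
    D = np.vstack(D_blocks)
    information = D.T @ np.linalg.solve(V, D)
    return float(np.linalg.inv(information)[-1, -1])
```

Because `X` and `m` are passed as arrays, the same function accepts fractional Xij and unequal cluster-period sizes, which are the cases where closed-form expressions do not apply.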

3. Delayed treatment effects

An important consideration in designing stepped wedge studies is the potential for loss of power associated with delayed intervention effects. Specifically, if the intervention is not fully effective in the time interval in which it is introduced then substantial reductions in power are possible, even for minor delays (Hussey & Hughes, 2007). Such delays can occur due to a slower than expected intervention rollout or due to an intrinsic lag between introduction of the intervention and its effect on the outcome.

The most effective approach to avoiding this loss of power is through careful study design. For instance, in a stepped wedge design the time intervals between steps should be long enough for the intervention to be rolled out and become fully effective (and for the outcome to be measured) within the time step in which the intervention is introduced. In some cases this may require a “wash-out” period between steps that allows enrolled individuals to provide outcome data but during which no new individuals are enrolled in the trial (Hemming et al., 2015). For example, in a clinic-based trial of approaches to providing antiretroviral (ART) medications to HIV-infected women to prevent mother-to-child transmission of HIV, Killiam et al. (2010) enrolled women during pregnancy but the endpoint (ART uptake) could occur anytime until delivery. A transition period was needed between the stepped wedge time steps to allow the women enrolled in the control condition to deliver their infants before introducing the intervention in the clinic. Women initiating clinic care during the transition period were not included in the analysis.

In other cases, there may be an intrinsic delay between provision of the intervention and realization of its effect and this may lead to an unacceptably long trial duration. For example, suppose one were interested in implementing a program of male circumcision to reduce HIV incidence in a community. Previous randomized trials (Auvert et al., 2005; Gray et al., 2007; Bailey et al., 2007) have shown that male circumcision reduces the risk of acquiring HIV by approximately 60% in heterosexual men. However, the effect of implementing a large-scale male circumcision program on community-wide HIV incidence (i.e. incidence among both women and men, circumcised or not) is unknown. Since implementation of a mass circumcision campaign would take time and be extremely resource intensive, an argument might be made to use a stepped wedge design to measure the program effect during rollout. Nonetheless, a stepped wedge would be a poor design choice in such a situation. The effect of male circumcision on community-level HIV incidence depends not only on direct effects (protecting the circumcised men) but also on indirect effects (protecting partners, and partners of partners, of the circumcised men by breaking chains of infection). Modeling predicts that the full effect of a mass circumcision intervention would take years to be realized (Alsallaq et al., 2013), but a stepped wedge design with multi-year time steps is likely impractical. As an alternative, a stepped wedge design that measured a process outcome, such as number of men circumcised in each interval, would be feasible. Of course, additional modeling work and assumptions would be needed to relate this outcome to community-level HIV incidence.

In some cases, clever design can overcome a delay between provision of an intervention and its effect. The Gambia Hepatitis Intervention Study (Hall et al., 1987) used a stepped wedge design to roll out a Hepatitis B vaccination program to infants in 17 districts in The Gambia over a 4 year period. One objective for the trial was to show an increase in HBV titers due to the intervention. This endpoint is highly amenable to the stepped wedge design as there is little delay between vaccination and development of a detectable HBV titer. However, the primary objective of the trial was to show a decrease in hepatocellular cancer and other chronic liver disease due to the vaccination program. Since a reduction in hepatocellular cancer and liver disease due to infant HBV vaccination would take many years to be realized, it would appear as though this objective is incompatible with the trial design. However, during the rollout of the vaccination program a national registry to record all cases of hepatocellular cancer and other chronic liver diseases was created in The Gambia. When a case is entered in the registry, the birthdate and birthplace of the affected individual is also recorded. Over the years, as individuals born during the HBV vaccination program rollout enter the registry, the birthdate/birthplace information can be used to assign each case of disease to the appropriate cluster and time step in the stepped wedge design, thereby making an analysis of the long-term effects of infant HBV vaccination possible (Viviani et al., 2008). In contrast, if one were to simply measure incidence of liver disease over time, without the ability to explicitly link cases back to the original cluster-time steps, it is highly unlikely that changes in incidence 30 years after the (4 year) rollout of vaccination would yield interpretable information about the intervention effect due to the variable time of disease onset, migration, etc.

3.1. Estimation of time-on-treatment effects

In spite of the best efforts of investigators, however, unexpected delays in the intervention rollout or effect may occur. Although model (1) assumes complete rollout of the intervention in the time step when it is introduced and a constant intervention effect, these assumptions can be relaxed. If a delay in realizing the full effectiveness of the intervention is due to a slower than expected or incomplete rollout of the intervention, and if a measure of the completeness of the intervention rollout in a given interval is available, then this measure can be included in the analysis by setting the Xij in model (1) to fractional values between 0 and 1 (Hussey & Hughes, 2007). This is effectively an “as-treated” analysis (while the use of Xij equal to 0 or 1 is comparable to an intent-to-treat analysis). For example, in a stepped wedge trial to assess the impact of expedited partner treatment (EPT) on gonorrhea and chlamydia prevalence in Washington state (Golden et al., 2015) an estimate of the proportion of STI patients who were offered EPT was obtained through a random survey of individuals diagnosed with gonorrhea or chlamydia. This information could be used in the analysis to adjust for less than complete provision of the intervention. Note, however, that this approach assumes that the effect of the intervention on the outcome is directly proportional to the amount of the intervention provided.

A similar approach can also be used to account for delayed treatment effects (time-on-treatment effects). Suppose prior modelling or theory predicts that the intervention will only be 50% effective in the time interval in which it is introduced and 100% effective thereafter. Then, as above, the Xij may be set to fractional values to incorporate this (prior) knowledge into the analysis.
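The fractional coding described above can be illustrated as follows. This is a minimal sketch for the 50%-then-100% example; the function name `fractional_exposure` and the 3-cluster, 4-period layout are hypothetical.

```python
def fractional_exposure(first_treated_period: int, n_periods: int,
                        effect_by_lag: list) -> list:
    """Return X_i1 .. X_iT for one cluster.

    first_treated_period: 1-based period in which the intervention is introduced.
    effect_by_lag: assumed fraction of the full effect in the 1st, 2nd, ...
        treated period; the last value is carried forward.
    """
    row = []
    for j in range(1, n_periods + 1):
        lag = j - first_treated_period + 1  # 1 in the period of introduction
        if lag < 1:
            row.append(0.0)  # still in the control condition
        else:
            row.append(effect_by_lag[min(lag, len(effect_by_lag)) - 1])
    return row


# One cluster crosses over at each of periods 2, 3 and 4;
# 50% effect in the first treated period, 100% afterwards.
X = [fractional_exposure(start, 4, [0.5, 1.0]) for start in (2, 3, 4)]
```

The resulting rows replace the 0/1 indicators in the last column of the design matrix; everything else in the analysis is unchanged.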

If the completeness of the intervention rollout or the expected fractional treatment effect in each interval is unknown, then the time-on-treatment intervention effect can be estimated from the data. This can be accomplished by extending model (1) to

$$Y_{ijk} = \mu + \alpha_i + \beta_j + \sum_{\ell=1}^{T-1} L_{ij\ell}\,\theta_\ell + e_{ijk} \qquad (4)$$

where ℓ is the number of time steps since the intervention was introduced (ℓ = 0 for control intervals, ℓ = 1 in the time step in which the intervention is introduced, ℓ ≤ T − 1) (Scott et al., 2015). Setting Lijℓ = 1 if the jth time interval in the ith cluster is ℓ (≥ 1) intervals since the introduction of the intervention and 0 otherwise provides an estimate of the time-on-treatment effects, θℓ. The global hypothesis Ho : θ1 = θ2 = … = θT−1 can be used to test for a time-on-treatment effect. Parametric functional forms for the time-on-treatment effect that are linear in θℓ (e.g. Lijℓ = ℓ and θℓ = θ) may be directly incorporated in equation (4) and estimated using standard statistical methods (glmm or gee).
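The time-on-treatment covariates can be constructed mechanically from each cluster's crossover time. The sketch below is illustrative only; the function names are hypothetical and periods are numbered from 1.

```python
def time_on_treatment(first_treated_period: int, n_periods: int) -> list:
    """Lag for each period: 0 for control, 1 in the period of introduction."""
    return [max(0, j - first_treated_period + 1) for j in range(1, n_periods + 1)]


def lag_indicators(first_treated_period: int, n_periods: int) -> list:
    """Rows are periods j = 1..T, columns are lags 1..T-1; entries are L_ij(lag)."""
    lags = time_on_treatment(first_treated_period, n_periods)
    return [[1 if lag == ell else 0 for ell in range(1, n_periods)] for lag in lags]
```

The indicator columns give one θℓ per lag, while using the single column returned by `time_on_treatment` corresponds to the linear form Lijℓ = ℓ with a common θ.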

Alternatively, a nonlinear model for time-on-treatment may be of interest. For example, the following model would be appropriate for an intervention that is thought to increase to a long-term maximum effect over time following introduction

| (5) |

where d indexes the rate with which the intervention approaches its full long-term effect, θ0 (which may be positive or negative). Granston (2013) proposes using a two-stage estimation procedure to fit this model:

1. Fit model (4).

2. Use the θ̂ℓ from step 1 and nonlinear weighted least squares to fit model (5) with weights given by the covariance matrix of the θ̂ℓ from step 1.

A bootstrap procedure is used to obtain standard errors of the parameters.

3.2. Effect of delayed treatment effects on power

The generalized least squares framework (section 2 and appendix) can be used to predict the expected attenuation in the estimated treatment effect and associated loss of power due to a delay in the treatment effect or incomplete rollout of the intervention. Let Da be a design matrix that is identical to D except that the last column (corresponding to the Xij) contains fractional numbers that represent the expected fractional rollout of the intervention or the expected fractional treatment effect in each cluster at each time point. Let b be the vector (of length T + 1) of expected parameters under the alternative hypothesis, (μ, β1 … βT−1, θA). Then, if the data are analyzed without accounting for the delayed treatment effect (i.e. using D instead of Da in the analysis),

$$E(\hat{b}) = (D'V^{-1}D)^{-1} D'V^{-1} D_a b \qquad (6)$$

The (T + 1)’st element of E(b̂) is the adjusted treatment effect under the delayed effect/rollout scenario. This value can be substituted for θA in equation (2) to determine the power of an analysis that fails to account for the delayed rollout/effect.

A correct analysis would incorporate the delayed treatment effect as described in section 3.1. If the delay is expressed using fractional treatment effects then the power may be computed using equation (2) with the original value of the treatment effect, θA, and with Var(θ̂) computed from (Da′V−1Da)−1. Note that this approach is appropriate for computing power when known fractional values are used for the Xij but not when the delayed treatment effect is estimated from the data. The latter is an area of ongoing research.
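The attenuation calculation can be sketched numerically. The example below assumes the expected estimate under a misspecified analysis is (D′V⁻¹D)⁻¹D′V⁻¹Da b, works at the cluster-period mean level under model (1), and uses a hypothetical 2-cluster, 3-period design with a 50% effect in the first treated period; all numerical values and function names are illustrative.

```python
import numpy as np


def design_matrix(X: np.ndarray) -> np.ndarray:
    """Columns: mu, beta_1 .. beta_{T-1} (beta_T = 0), treatment column."""
    n_clusters, n_periods = X.shape
    time_dummies = np.eye(n_periods)[:, :-1]
    return np.vstack([
        np.column_stack([np.ones(n_periods), time_dummies, X[i]])
        for i in range(n_clusters)
    ])


def covariance(n_clusters: int, n_periods: int, m: float,
               sigma2: float, tau2: float) -> np.ndarray:
    """Block-diagonal V for cluster-period means with equal sizes m."""
    V_i = (sigma2 / m) * np.eye(n_periods) + tau2
    return np.kron(np.eye(n_clusters), V_i)


def attenuated_effect(X, X_a, b, V) -> float:
    """Treatment element of E(b-hat) when D is used but the truth follows D_a."""
    D, D_a = design_matrix(X), design_matrix(X_a)
    V_inv_D = np.linalg.solve(V, D)  # V is symmetric, so D'V^-1 = (V^-1 D)'
    expected_b_hat = np.linalg.solve(D.T @ V_inv_D, V_inv_D.T @ (D_a @ b))
    return float(expected_b_hat[-1])


X = np.array([[0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])    # analysis coding
X_a = np.array([[0.0, 0.5, 1.0], [0.0, 0.0, 0.5]])  # assumed true fractional effect
theta_a = -0.02                                      # hypothetical effect size
b = np.array([0.05, 0.0, 0.0, theta_a])              # (mu, beta_1, beta_2, theta_A)
V = covariance(2, 3, m=1, sigma2=1.0, tau2=1.0)

theta_adjusted = attenuated_effect(X, X_a, b, V)

# Variance for the correctly specified analysis, from (D_a' V^-1 D_a)^-1
D_a = design_matrix(X_a)
var_correct = float(np.linalg.inv(D_a.T @ np.linalg.solve(V, D_a))[-1, -1])
```

In this small design a one-period, 50% delay shrinks the expected estimate to one third of θA, which illustrates how quickly power can erode when the delay is ignored.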

4. Treatment Effect Heterogeneity

Hussey & Hughes (2007) argue that variations in the mean response from cluster to cluster in model (1) (as quantified by τ2 or ρ) have little effect on power when designing a stepped wedge trial. Intuitively, this is because part of the information on the intervention effect is coming from the within-cluster comparisons (adjusted for time trends) in which each cluster serves as its own control. In a within-cluster comparison the αi in model (1) effectively cancels out when taking the difference between the intervention and control time periods, making variations in this term irrelevant.

However, model (1) assumes that the intervention effect, θ, is constant across clusters. In many circumstances it is plausible that the effect of the intervention may vary from cluster to cluster due to variation in the quality of implementation or other factors. Thus, model (1) may be extended to include treatment heterogeneity:

$$Y_{ijk} = \mu + \alpha_i + \beta_j + X_{ij}(\theta + \gamma_i) + e_{ijk} \qquad (7)$$

where γi ~ N(0, η2) is a random treatment effect for cluster i. Powering a stepped wedge design trial based on model (7) can still use equation (2) but now requires specification of three variance components: τ2, η2, and σ2, to compute Var(θ̂). In this situation, no simple formula for Var(θ̂), analogous to equation (3), is possible; rather, Var(θ̂) must be obtained by computing (D′V⁻¹D)⁻¹ as described in section 3.2 and the appendix.

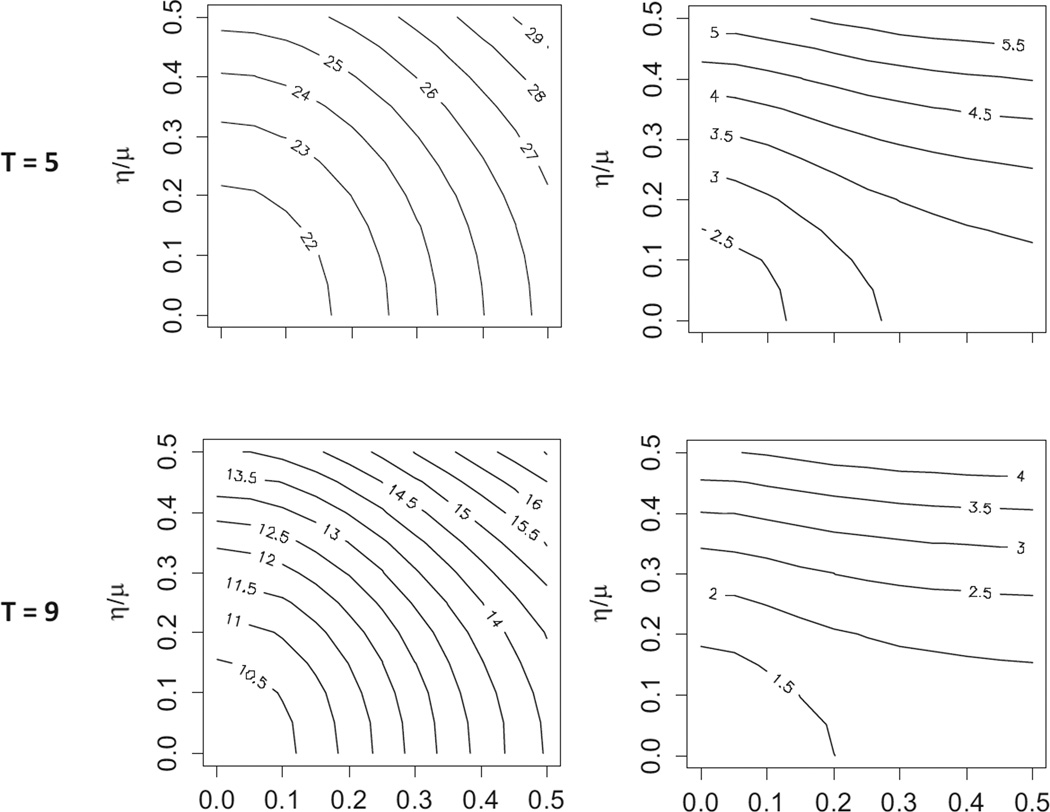

Importantly, the magnitude of η2 can have a significant effect on power. Figure 2 shows the effect of variations in the magnitude of τ2 and η2 on Var(θ̂) for various choices of T and m in a balanced stepped wedge trial (we ignore I since, in a balanced stepped wedge trial, the number of clusters affects only the magnitude, but not the shape, of the contours shown in figure 2). In these examples, variation in T has minimal effect on the shape of the contours, although, of course, Var(θ̂) declines as T increases. However, variation in m has a significant effect on the shape of the contours. Specifically, for small m, variations in both τ2 and η2 have a significant effect on Var(θ̂). However, for large m (such that ) the value of τ2 has little effect on Var(θ̂) and, hence, little effect on power but variations in η2 continue to have a significant effect on Var(θ̂), regardless of the size of m. As seen in the figure, increases in η2 result in increases in Var(θ̂) and, hence, decreases in power.

Figure 2.

Contours of Var(θ̂) × 10⁵ as a function of τ and η for selected m and T using the Washington State Expedited Treatment Trial (Golden et al., 2015) balanced stepped wedge design: μ = 0.05, σ2 = 0.05 × 0.95, 24 clusters and h = 6 (T = 5) or h = 3 (T = 9)

The preceding observations suggest that a reasonable estimate of treatment effect heterogeneity, as quantified by η, should be included in power calculations when designing a stepped wedge trial. If preliminary data are available then these can be used to estimate η (and τ). However, if no such data are available then we have found that conducting the following thought experiment with knowledgeable investigators can be useful in deriving reasonable values of η and τ. We first orient the discussion by identifying reasonable values for the overall mean of the outcome (or prevalence for a binary outcome), μ, and the average intervention effect, θ. Then we explain that the “true” mean of each cluster may vary around μ, where variations in the true mean reflect underlying differences between the clusters, not sampling variability. We ask the investigators to specify a range around μ that should include the true mean response for “almost all” the clusters. We take this range to represent an interval that includes 95% of the cluster means (i.e. ±2τ), so τ = (range in means)/4. Similarly, we ask the investigators to specify the cluster to cluster variation in intervention effects as a range of possible intervention effects, centered around θ. Then, η = (range in intervention effects)/4.
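The arithmetic of this elicitation is simple enough to capture in a helper. The sketch below treats an elicited "almost all clusters" range as mean ± 2 standard deviations; the function name and the example ranges are hypothetical.

```python
def sd_from_range(lower: float, upper: float) -> float:
    """Convert an elicited ~95% range into a standard deviation (range / 4)."""
    if upper < lower:
        raise ValueError("upper must not be smaller than lower")
    return (upper - lower) / 4.0


# Hypothetical elicitation: cluster prevalences between 3% and 7% around mu = 0.05,
# and cluster-specific intervention effects between -0.03 and -0.01 around theta = -0.02.
tau = sd_from_range(0.03, 0.07)
eta = sd_from_range(-0.03, -0.01)
```

The squares of `tau` and `eta` are the variance components τ² and η² that enter the covariance matrix V in the power calculation.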

An R package, swCRTdesign, containing functions for power computation (accommodating treatment heterogeneity) and summarizing data from a stepped wedge design, is available at http://faculty.washington.edu/jphughes. A Stata package for doing power calculations is described by Hemming & Girling (2014).

5. Cohort Studies

The discussion above has focused primarily on data collected cross-sectionally at each time point. Although there have been fewer examples and less methodological development for stepped wedge studies in which cohorts of individuals are enrolled and followed for multiple time periods, the THRio study (Durovni et al., 2013) provides one such example. The THRio trial was designed to evaluate a program for increasing provision of isoniazid prophylaxis therapy to prevent tuberculosis in HIV-infected individuals. Moulton et al. (2007) used a simulation approach to determine power and the final analysis of the trial used a Cox proportional hazard model in calendar time (so the baseline hazard incorporated any underlying time trends in incidence), a gamma-distributed random effect to control for clustering, and a time-varying covariate to capture the intervention effect (Durovni et al., 2013).

This example illustrates some of the key issues that must be considered in the design and analysis of a cohort-based stepped wedge design, including i) individuals, as well as clusters, may cross over from the control to the intervention condition, ii) repeated measures may be collected on individuals leading to two levels of correlation (cluster and individual), and iii) loss-to-followup. Additional issues, not illustrated in the THRio study, but that may be relevant to cohort trials include iv) healthy survivor bias, where occurrence of the outcome removes individuals from the risk set (i.e. death, HIV, etc), and v) interval-censoring, in which the event time for a participant may only be known to occur within a period that overlaps the transition from control to intervention. Some results on the analysis of stepped wedge studies with time-to-event data and interval-censoring are provided in Granston (2013) but additional research is needed on power calculations and analytic approaches for stepped wedge studies using repeated measures and cohort data.

6. Conclusion

In summary, the stepped wedge design is a useful tool for clinical and implementation science research, particularly in situations where a parallel design study is viewed as infeasible, unethical or otherwise unacceptable. However, those contemplating use of a stepped wedge design must be aware of the limitations of the design, particularly with respect to issues around modeling assumptions, delayed effects, and treatment effect heterogeneity.

Acknowledgements

This research was supported by the National Institute of Allergy and Infectious Diseases grant AI29168.

Appendix

In this appendix we give a detailed description of the construction of the design and covariance matrices that can be used for power calculations in non-standard designs. We use the notation introduced with equation (1).

Let M = (∑ij mij) be the total number of observations across clusters and time periods. In general, the design matrix, D, and the covariance matrix, V, have dimension M × (T + 1) and M × M, respectively, if the analysis is at the individual-level and dimension IT × (T +1) and IT × IT, respectively, if the analysis uses cluster-time summaries.

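Consider a stepped wedge design with 3 clusters and 4 time periods:

$$\begin{pmatrix} 0 & 1 & 1 & 1 \\ 0 & 0 & 1 & 1 \\ 0 & 0 & 0 & 1 \end{pmatrix}$$

where each row is a cluster, each column is a time period, 0 indicates the control condition and 1 indicates the intervention condition.

Assuming model (7) with the analysis at the cluster-time level and parameter vector (μ, β1, β2, β3, θ), then

$$D = \begin{pmatrix}
1 & 1 & 0 & 0 & 0 \\
1 & 0 & 1 & 0 & 1 \\
1 & 0 & 0 & 1 & 1 \\
1 & 0 & 0 & 0 & 1 \\
1 & 1 & 0 & 0 & 0 \\
1 & 0 & 1 & 0 & 0 \\
1 & 0 & 0 & 1 & 1 \\
1 & 0 & 0 & 0 & 1 \\
1 & 1 & 0 & 0 & 0 \\
1 & 0 & 1 & 0 & 0 \\
1 & 0 & 0 & 1 & 0 \\
1 & 0 & 0 & 0 & 1
\end{pmatrix} \qquad (8)$$

with rows ordered by cluster and, within cluster, by time. Notice that the last column of D corresponds to the Xij. V is given by

$$V = \begin{pmatrix} V_1 & 0 & 0 \\ 0 & V_2 & 0 \\ 0 & 0 & V_3 \end{pmatrix} \qquad (9)$$

where, taking mij = m and assuming αi and γi are independent,

$$V_i = \frac{\sigma^2}{m} I_4 + \tau^2 \mathbf{1}\mathbf{1}' + \eta^2 x_i x_i', \qquad x_i = (X_{i1}, X_{i2}, X_{i3}, X_{i4})'$$

so that, for example,

$$V_1 = \begin{pmatrix}
\tau^2 + \sigma^2/m & \tau^2 & \tau^2 & \tau^2 \\
\tau^2 & \tau^2 + \eta^2 + \sigma^2/m & \tau^2 + \eta^2 & \tau^2 + \eta^2 \\
\tau^2 & \tau^2 + \eta^2 & \tau^2 + \eta^2 + \sigma^2/m & \tau^2 + \eta^2 \\
\tau^2 & \tau^2 + \eta^2 & \tau^2 + \eta^2 & \tau^2 + \eta^2 + \sigma^2/m
\end{pmatrix}$$

and 0 is a 4 × 4 matrix of 0’s. Note that if η = 0 (no variation in the intervention effect among clusters) then V1 = V2 = V3 and V is the block diagonal covariance matrix used by Hussey & Hughes (2007). The variance of θ̂, which is used for power calculations, is given by the [T + 1, T + 1] element of (D′V⁻¹D)⁻¹.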
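The appendix construction can be reproduced numerically. The sketch below assumes the classic schedule in which one cluster crosses over at each of periods 2, 3 and 4, equal cluster-period sizes m, independent random intercepts and random treatment effects, and cluster blocks of the form (σ²/m)I + τ²11′ + η²xx′; the function names and variance values are hypothetical.

```python
import numpy as np

# Crossover schedule: rows = clusters, columns = time periods.
X = np.array([[0, 1, 1, 1],
              [0, 0, 1, 1],
              [0, 0, 0, 1]], dtype=float)


def build_D(X: np.ndarray) -> np.ndarray:
    """Stack [1, time dummies (beta_T = 0), X_ij] over clusters; shape IT x (T + 1)."""
    n_clusters, n_periods = X.shape
    time_dummies = np.eye(n_periods)[:, :-1]
    return np.vstack([
        np.column_stack([np.ones(n_periods), time_dummies, X[i]])
        for i in range(n_clusters)
    ])


def build_V(X: np.ndarray, m: float, sigma2: float,
            tau2: float, eta2: float) -> np.ndarray:
    """Block-diagonal V with one T x T block per cluster."""
    n_clusters, n_periods = X.shape
    V = np.zeros((n_clusters * n_periods, n_clusters * n_periods))
    for i in range(n_clusters):
        rows = slice(i * n_periods, (i + 1) * n_periods)
        V[rows, rows] = ((sigma2 / m) * np.eye(n_periods)  # sampling variance
                         + tau2                            # random intercept
                         + eta2 * np.outer(X[i], X[i]))    # random treatment effect
    return V


def var_theta(D: np.ndarray, V: np.ndarray) -> float:
    """[T + 1, T + 1] element of (D' V^-1 D)^-1."""
    return float(np.linalg.inv(D.T @ np.linalg.solve(V, D))[-1, -1])


D = build_D(X)
var_no_het = var_theta(D, build_V(X, m=1, sigma2=1.0, tau2=1.0, eta2=0.0))
var_het = var_theta(D, build_V(X, m=1, sigma2=1.0, tau2=1.0, eta2=0.5))
```

Setting `eta2=0.0` recovers the model (1) covariance, so comparing `var_no_het` with `var_het` shows directly how treatment effect heterogeneity inflates Var(θ̂).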

References

- Alsallaq R, Baeten J, Celum C, Hughes J, Abu-Raddad L, Barnabas R, Hallett T. Understanding the potential impact of a combination HIV prevention intervention in a hyper-endemic community. PLoS ONE. 2013;8:e54575. doi: 10.1371/journal.pone.0054575.

- Auvert B, Taljaard D, Lagarde E, Sobngwi-Tambekou J, Sitta S, Puren A. Randomized, controlled intervention trial of male circumcision for reduction of HIV infection risk: The ANRS 1265 trial. PLoS Medicine. 2005;2:e298. doi: 10.1371/journal.pmed.0020298.

- Bailey R, Moses S, Parker C, Agot K, Maclean I, Krieger J, Williams C, Campbell R, Ndinya-Achola J. Male circumcision for HIV prevention in young men in Kisumu, Kenya: a randomised controlled trial. Lancet. 2007;369:643–656. doi: 10.1016/S0140-6736(07)60312-2.

- Brown C, Lilford R. The stepped wedge trial design: A systematic review. BMC Medical Research Methodology. 2007;6:54. doi: 10.1186/1471-2288-6-54.

- Draper N, Smith H. Applied Regression Analysis. 3rd ed. New York: John Wiley and Sons; 1998. p. 736.

- Durovni B, Saraceni V, Moulton L, Pacheco A, Cavalcante S, King B, Cohn S, Efron A, Chaisson R, Golub J. Effect of improved tuberculosis screening and isoniazid preventive therapy on incidence of tuberculosis and death in patients with HIV in clinics in Rio de Janeiro, Brazil: a stepped wedge, cluster-randomised trial. Lancet Infectious Diseases. 2013;13:852–858. doi: 10.1016/S1473-3099(13)70187-7.

- Golden M, Kerani R, M S, Hughes J, Aubin M, Malinski C, Holmes K. Uptake and population-level impact of expedited partner therapy (EPT) on Chlamydia trachomatis and Neisseria gonorrhoeae: The Washington State community-level randomized trial of EPT. PLoS Medicine. 2015;12:e1001777. doi: 10.1371/journal.pmed.1001777.

- Granston T. Ph.D. thesis. University of Washington; 2013. Addressing Lagged Effects and Interval Censoring in the Stepped Wedge Design of Cluster-Randomized Clinical Trials.

- Gray R, Kigozi G, Serwadda D, Makumbi F, Watya S, Nalugoda F, Kiwanuka N, Moulton L, Chaudhary M, Chen M, Sewankambo N, Wabwire-Mangen F, Bacon M, Williams C, Opendi P, Reynolds S, Laeyendecker O, Quinn T, Wawer M. Male circumcision for HIV prevention in men in Rakai, Uganda: a randomised trial. Lancet. 2007;369:657–666. doi: 10.1016/S0140-6736(07)60313-4.

- Hall A, Inskip H, Loik N, Day, O’Conor G, Bosch X, Muir C, Parkin M, Munoz N, Tomatis L, Greenwood B, Whittle H, Ryder R, Oldfield F, N’jie A, Smith P, Coursaget P. The Gambia Hepatitis Intervention Study. Cancer Research. 1987;47:5782–5787.

- Hemming K, Girling A. The efficiency of stepped wedge vs. cluster randomized trials: stepped wedge studies do not always require a smaller sample size. [letter] Journal of Clinical Epidemiology. 2013;66:1428–1429. doi: 10.1016/j.jclinepi.2013.07.007.

- Hemming K, Girling A. A menu-driven facility for power and detectable-difference calculations in stepped-wedge cluster-randomized trials. Stata Journal. 2014;14:363–380.

- Hemming K, Girling A, Martin J, Bond Sa. Stepped wedge cluster randomized trials are efficient and provide a method of evaluation without which some interventions would not be evaluated. [letter] Journal of Clinical Epidemiology. 2013;66:1058–1059. doi: 10.1016/j.jclinepi.2012.12.020.

- Hemming K, Lilford R, Girling A. Stepped-wedge cluster randomised controlled trials: a generic framework including parallel and multiple level designs. Statistics in Medicine. 2015;34:181–196. doi: 10.1002/sim.6325.

- de Hoop E, Woertman W, Teerenstra S. The stepped wedge cluster randomized trial always requires fewer clusters but not always fewer measurements, that is, participants than a parallel cluster randomized trial in a cross-sectional design. [letter] Journal of Clinical Epidemiology. 2013;66:1429. doi: 10.1016/j.jclinepi.2013.07.008.

- Hussey M, Hughes J. Design and analysis of stepped wedge cluster randomized trials. Contemporary Clinical Trials. 2007;28:182–191. doi: 10.1016/j.cct.2006.05.007.

- Killiam W, Tambatamba B, Chintu N, Rouse D, Stringer E, Bweupe M, Yu Y, Stringer J. Antiretroviral therapy in antenatal care to increase treatment initiation in HIV-infected pregnant women: a stepped-wedge evaluation. AIDS. 2010;24:85–91. doi: 10.1097/QAD.0b013e32833298be.

- Kotz D, Spigt M, Arts I, Crutzen R, Viechtbauer W. Researchers should convince policy makers to perform a classic cluster randomized controlled trial instead of a stepped wedge design when an intervention is rolled out. Journal of Clinical Epidemiology. 2012a;65:1255–1256. doi: 10.1016/j.jclinepi.2012.06.016.

- Kotz D, Spigt M, Arts I, Crutzen R, Viechtbauer W. Use of the stepped wedge design cannot be recommended: A critical appraisal and comparison with the classic cluster randomized controlled trial design. Journal of Clinical Epidemiology. 2012b;65:1249–1252. doi: 10.1016/j.jclinepi.2012.06.004.

- Kotz D, Spigt M, Arts I, Crutzen R, Viechtbauer W. The stepped wedge design does not inherently have more power than a cluster randomized controlled trial. [letter] Journal of Clinical Epidemiology. 2013;66:1059–1060. doi: 10.1016/j.jclinepi.2013.05.004.

- Mdege N, Man M, Taylor C, Torgerson D. Systematic review of stepped wedge cluster randomized trials shows that design is particularly used to evaluate interventions during routine implementation. Journal of Clinical Epidemiology. 2011;64:936–948. doi: 10.1016/j.jclinepi.2010.12.003.

- Mdege N, Man M, Taylor C, Torgerson D. There are some circumstances where the stepped-wedge cluster randomized trial is preferable to the alternative: no randomized trial at all. Journal of Clinical Epidemiology. 2012;65:1253–1254. doi: 10.1016/j.jclinepi.2012.06.003.

- Moulton L, Golub J, Durovni B, Cavalcante S, Pacheco A, Saraceni V, B K, Chaisson R. Statistical design of THRio: a phased implementation clinic-randomized study of a tuberculosis preventive therapy intervention. Clinical Trials. 2007;4:190–199. doi: 10.1177/1740774507076937.

- Rhoda D, Murray D, Andridge R, Pennell M, Hade E. Studies with staggered starts: Multiple baseline designs and group-randomized trials. American Journal of Public Health. 2011;101:2164–2169. doi: 10.2105/AJPH.2011.300264.

- Scott J, deCamp A, M J, MP F, PB G. Finite-sample corrected generalized estimating equation of population average treatment effects in stepped wedge cluster randomized trials. Statistical Methods in Medical Research. 2015. doi: 10.1177/0962280214552092. In press.

- Viviani S, Carrieri P, Bah E, Hall A, GD K, Mendy M, Montesano R, Plymoth A, Sam O, Van der Sande M, Whittle H, Hainaut P. 20 years into the Gambia Hepatitis Intervention Study: Assessment of initial hypotheses and prospects for evaluation of protective effectiveness against liver cancer. Cancer Epidemiology, Biomarkers and Prevention. 2008;17:3216–3223. doi: 10.1158/1055-9965.EPI-08-0303.

- Woertman W, de Hoop E, Moerbeek M, Zuidema S, Gerritsen D, Teerenstra S. Stepped wedge designs could reduce the required sample size in cluster randomized trials. Journal of Clinical Epidemiology. 2013;66:752–758. doi: 10.1016/j.jclinepi.2013.01.009.