Abstract

Current research has shown that comprehension can vary based on text and question types, and that readers’ word recognition and background knowledge may account for these differences. Other reader characteristics, such as semantic and syntactic awareness, inferencing, and planning/organizing, have also been linked to reading comprehension, but have not been examined with regard to specific text and question types. The aim of this study was to explore the relationships between reader characteristics, text types, and question types in children aged 10–14. We sought to compare children’s performance when comprehending narrative, expository, and functional text, as well as to explore differences between children’s performance on comprehension questions that assess their literal or inferential comprehension of a passage. To examine such differences, we analyzed the degree to which distinct cognitive skills (semantic and syntactic awareness, inferencing, planning/organizing) contribute to performance on varying types of texts and questions. This study found main effects of text and question types, as well as an interaction in which relations between question types varied between text types. Analyses indicated that higher order cognitive skills, including the ability to make inferences and to plan and organize information, contribute to comprehension of more complex text (e.g., expository vs. narrative) and question types (e.g., inferential vs. literal), and therefore are important components of reading for later elementary and middle school students. These findings suggest that developing these skills in early elementary school may better equip students for comprehending the texts they will encounter in higher grades.

Reading comprehension is an essential skill for children in school settings and beyond. A broad range of tasks require children to comprehend written text, particularly as children progress in school and are expected to learn more independently. The most familiar reading task, and the one that children frequently encounter during early exposures to text, is the comprehension of a story, or narrative passage (Graesser, McNamara & Kulikowich, 2011). Starting in late elementary school, there is an increasing emphasis on independent reading as a primary means of presenting information in classes such as science and social studies. Thus, expository passages become increasingly important later in development (Graesser et al., 2011). Finally, both in and out of school, children must decipher functional texts, such as instructions for completing a school assignment or performing a task.

Various theoretical models of reading have been proposed, and a significant body of research has accumulated regarding the skills required for comprehension. Much research has focused on models that are developmental in origin, such as the Simple View of Reading (Hoover & Gough, 1990), which commonly concentrate on child characteristics such as word reading ability and listening comprehension. Other studies have built upon the Construction-Integration (C-I) model, a complex model proposing that reading comprehension comes from a process where a text base is constructed and then integrated into a “coherent whole” (Kintsch, 1988, p. 164).

Word recognition is considered essential for all types of texts and levels of development according to all reading comprehension models. Studies have consistently found that children with poor decoding/word recognition experience significant impairment in reading comprehension (e.g., Lyon, 1995; Torgesen, 2000). However, word recognition does not sufficiently account for variation in comprehension, and researchers within developmental and C-I models have explored numerous reader characteristics that may also contribute to comprehension (e.g., Cain & Oakhill, 2007; Nation, Clarke, & Snowling, 2002; Cutting, Materek, Cole, Levine, & Mahone, 2009; Cutting & Scarborough, 2006; Graesser & Bertus, 1998; McNamara, Kintsch, Songer, & Kintsch, 1996).

For developmental models of reading, which usually place central importance on word recognition, oral language has additionally proven to be a significant predictor of reading comprehension, as illustrated in the Simple View of Reading (Hoover & Gough, 1990). This model has been supported by numerous studies, although the specific amount that oral language and word recognition each contribute appears to vary depending on age (Catts, Adlof, & Weismer, 2006; Kendeou, van den Broek, White, & Lynch, 2009; Storch & Whitehurst, 2002; Tilstra, McMaster, van den Broek, Kendeou, & Rapp, 2009) and how comprehension is measured (Cutting & Scarborough, 2006; Keenan, Betjemann, & Olson, 2008). Beyond the factors that comprise the simple view, other reader characteristics such as fluency, working memory, background knowledge, and use of reading strategies have all been linked to reading comprehension, but study findings have been inconsistent (Cutting & Scarborough, 2006; Goff, Pratt, & Ong, 2005; Samuelstuen & Braten, 2005).

While C-I models acknowledge the importance of reader characteristics, they have not necessarily focused on the same factors as those that comprise the developmental models (Graesser, Singer, & Trabasso, 1994; Kintsch, 1994, 1998; van Dijk & Kintsch, 1983). Most discourse models incorporate Kintsch’s model (1998), which described two levels of understanding. The text base relates solely to information explicitly presented in the text while the deeper level of the situation model requires the integration of the various pieces of information from the text. Studies based on the C-I perspective have emphasized the importance of the background knowledge that a reader possesses prior to encountering a text and readers’ ability to generate inferences. Background knowledge and inferencing are considered particularly important for developing the situation model, where readers often need to fill in gaps and relate the text to previous knowledge (Best, Floyd, & McNamara, 2008; Brandao & Oakhill, 2005; Graesser et al., 1994).

Another contribution of studies based in the C-I perspective is the recognition that text characteristics themselves also impact comprehension (McNamara, Graesser, & Louwerse, in press; McNamara et al., 1996; Ozuru, Rowe, O’Reilly, & McNamara, 2008). Traditionally, efforts to capture text characteristics have focused on text difficulty as measured by word frequency and sentence length (Stenner, 1996). More recently, others have examined additional characteristics, including text type and cohesiveness, or the extent to which parts of a text relate to each other (see Graesser et al., 2011). Indeed, research studies have supported the effects of cohesion on comprehension (McNamara et al., in press; Ozuru et al., 2008; Sanchez & Garcia, 2009).

Given these findings from C-I research, it is possible that the variations among results from developmentally-oriented comprehension studies may relate to the different measures of comprehension used in each study, particularly the texts presented and the types of questions asked to assess comprehension. Differences in format and content of texts may draw upon unique cognitive skills, depending upon the purpose of reading. As a consequence, children’s comprehension of one text type may not be wholly indicative of their overall ability to comprehend. Thus, the present study intended to extend the simple view of reading by incorporating the text features that C-I models have often taken into consideration.

Explorations of Differences between Text Types

Reading research often categorizes passages based on the purpose and structure of the text. Narrative passages, or stories with characters and a plot, are typically structured in a temporal sequence, written in the past tense, and make use of everyday vocabulary (Medina & Pilonieta, 2006). Narratives are often fictional (e.g., fairy tales, science fiction); however, biographies are frequently written in a narrative format as well (Medina & Pilonieta, 2006). Expository texts, on the other hand, focus on providing information about a particular topic. The structure does not necessarily follow a timeline, and passages often include technical vocabulary that may not be encountered in commonplace conversations (Medina & Pilonieta, 2006). Of course, there may be exceptions to this, such as when scientific processes are described as a sequence of events, much like a narrative. Other texts may not clearly fit the characteristics of one text type or another; McNamara et al. (in press) found that while narrative passages and scientific passages had distinct structural qualities, passages related to social studies overlapped with both genres.

Many studies examining reader characteristics that contribute to comprehension have either used measures that include both (but do not differentiate between) expository and narrative texts or have not clearly indicated which passage types are included in their measures of comprehension. Recently, however, some studies of reading have acknowledged that narrative and expository texts may place demands on different skills, suggesting that text types differ in how well children comprehend them. Narrative texts have been found to generally be easier than expository texts (e.g., Best et al., 2008; Diakidoy, Stylianou, Karefillidou, & Papageorgiou, 2005; Haberlandt & Graesser, 1985).

While the findings of these studies indicate that children may struggle more with comprehending expository text, it is unclear what factors lead to differences in successfully understanding narrative and expository texts. One possibility is that in order to comprehend expository text, children must use skills that are not as crucial for comprehension of narrative passages. As mentioned earlier, much of the literature concerning text type and comprehension addresses the issue of text cohesion. Employing signaling devices (e.g., titles, summary paragraphs), using connectives to describe how sentences relate to each other (e.g., however, because), and explaining unfamiliar terms are all methods of increasing text cohesion. Less cohesive texts require readers to rely on skills such as making inferences and recalling previous knowledge in order to fill in the gaps. While some studies have suggested that expository texts may be less cohesive than narratives (Best, Rowe, Ozuru, & McNamara, 2005), more recent work (McNamara et al., in press) reveals that narrative texts may actually be less cohesive than expository texts.

Studies examining predictors of specific text types suggest that background knowledge is needed for comprehension of expository passages. Best et al. (2008) observed differential demands of narrative and expository texts, such that word recognition was the strongest predictor for comprehension of narrative text, while background knowledge contributed the largest amount of variance for comprehending expository passages. Other studies have further supported the contribution of background knowledge while also recognizing additional factors that appear to influence expository text comprehension (Samuelstuen & Braten, 2005; Sanchez & Garcia, 2009). For example, Samuelstuen and Braten (2005) found that background knowledge and the use of strategies (elaboration, organization, and monitoring) were strong predictors of tenth grade students’ comprehension of expository texts, even after accounting for word recognition ability. Additionally, Sanchez and Garcia (2009) found that understanding discourse markers such as connectives and signaling devices significantly contributed to comprehension of expository passages.

Explorations of Differences between Question Types

In addition to recognizing that text type can influence how well a child comprehends a passage, it is also well-accepted that there are several levels of comprehension and different question types are designed to gauge each of these levels. Literal questions assess a child’s recall of information explicitly presented in a passage, although determining what qualifies as a text base level of understanding is not always easy. Inferential questions, on the other hand, require the reader to develop a situation model (Graesser et al., 1994; Kintsch, 1988) and integrate pieces of information by either relating multiple pieces of information presented in the text to each other or combining previous knowledge with the information from the passage. The varying demands of such question types may lead to discrepancies in children’s performances on comprehension assessments.

Many studies of comprehension include both literal and inferential questions in their assessment measures, but do not analyze performance on each question type separately (Best et al., 2008; Diakidoy et al., 2005; Goff et al., 2005). This is due in part to the fact that, as explained by Samuelstuen and Braten (2005), comprehension is frequently assessed in terms of understanding the text’s purpose, main ideas, and core concepts. Thus, many questions rely on both recalling literal information and making inferences, and it can be challenging to distinguish between children’s levels of literal and inferential comprehension. Nevertheless, combining both types of questions into one comprehension construct poses several risks. First, specific areas of weakness may be overlooked, as it is difficult to ascertain whether a child’s comprehension deficit stems from an inability to pull information directly from the text or from an inability to integrate different parts of the text. Second, cognitive deficits that influence particular levels of comprehension may be masked if only the relationship with overall comprehension is analyzed.

Some studies have attempted to distinguish between the two levels of comprehension, and to investigate differences that may exist. Generally, findings indicate that literal questions appear to be easier to answer than inferential questions, and that children are more likely to correctly answer inferential questions that rely only on integrating different parts of the text than those that require integrating text and background knowledge (Brandao & Oakhill, 2005; Geiger & Millis, 2004; Raphael & Wonnacott, 1985). Within the body of literature comparing performances on various question types, fewer studies have sought to explain why children may perform better on questions assessing literal comprehension of a text than on questions requiring use of inferences. Clearly, children must possess background knowledge about a topic before they are able to integrate this knowledge with information being presented in a text. That being said, children must also be able to recognize when such integrations are necessary.

Previous studies have found that good readers in fourth through eighth grade are better at recognizing when text-based information is sufficient to answer a question and when it is necessary to integrate background knowledge. Furthermore, poor readers’ performances improved when students were trained to recognize different question-answer relationships (Raphael & Pearson, 1985; Raphael, Winograd, & Pearson, 1980; Raphael & Wonnacott, 1985). Brandao and Oakhill (2005) observed similar findings when younger children were asked to explain how they developed their answers to questions about a narrative text. Children were more likely to refer back to the text when responding to literal questions and to use both background knowledge and information from the text for “gap-filling” questions. This relationship was found regardless of whether children responded correctly. These observations indicate that even young children have begun the process of developing strategies for reading comprehension.

In summary, different text and question types appear to draw upon various skills; thus, some text and question types may prove to be more difficult than others. These issues of text and question difficulty are pertinent to understanding reading comprehension performance in children. The present study sought to address some of these issues by examining how text and question characteristics might be related to each other, as well as to variations in cognitive skills. To this end, our first question was whether text and/or question types differ in how difficult they are for children, and whether there are interactions between text and question types. The extant literature has established that characteristics of texts or questions influence comprehension; however, less is known regarding possible text-question interactions. Therefore, the present study included both text and question types in order to begin exploring such interactions. It was hypothesized that expository texts would be the most difficult passages, and that participants would have the highest level of accuracy on literal questions.

Our second question was, if there are differences between text and question types, is it because variations among text and question types place demands upon different cognitive skills? Many previous studies, namely those based in a C-I perspective, examining particular text or question types have primarily focused on word recognition, background knowledge, and reading strategies. Still, developmental research expanding on the simple view of reading has found that after accounting for basic skills (word recognition), other reader characteristics, such as semantic and syntactic awareness, inferencing, and planning/organizing (which may be especially crucial for readers to recognize when to draw upon background knowledge), contribute to reading comprehension. Consequently, the second aim of our study was to determine if the cognitive skills emphasized in developmental models building from the simple view are especially influential for more demanding texts. It was hypothesized that components of basic language (semantic and syntactic awareness) would predict performance for all text and question types, but that higher-level processes such as inferencing and planning/organizing would only contribute to more complex text and question types.

Method

Participants

The data presented in the current paper come from a larger study in which children between the ages of 10 and 14 were recruited to participate in a reading study investigating the cognitive processes in reading comprehension (Locascio, Mahone, Eason, & Cutting, 2010; Eason, Sabatini, Goldberg, Bruce, & Cutting, in press). Recruitment of participants was achieved through distribution of fliers inviting parents of children with and without reading disabilities to “learn more about [their] child’s reading”, as well as “word of mouth” around the community, supplying information to learning disability organizations and their websites, and advertisements in local parent magazines and resources.

Prior to entering the study, all participants underwent a telephone screening interview to ensure eligibility. Whether or not a child met exclusionary and inclusionary criteria was determined from the telephone screening evaluation, which included a review of the child’s birth, development, and medical history, as well as previous neuropsychological testing, if available. Participants were excluded from participation in the study based on: (1) previous diagnosis of Mental Retardation; (2) known uncorrectable visual impairment; (3) documented hearing impairment of 25 dB or more in either ear; (4) history of a known neurological disorder (e.g., epilepsy, spina bifida, cerebral palsy, traumatic brain injury); (5) treatment of any psychiatric disorder, other than ADHD, with psychotropic medications; (6) IQ scores below 80 or above 130; and (7) being a non-native English speaker or having learned English simultaneously with another language. Children who met criteria for ADHD, Oppositional Defiant Disorder, and/or Adjustment Disorders remained eligible.

During the initial phase of the study, participants were screened to finalize eligibility. Participants were required to have either a Verbal Comprehension Index (VCI) or Perceptual Reasoning Index (PRI) score of at least 80 on the Wechsler Intelligence Scale for Children-IV (WISC-IV; Wechsler, 2003) in order to continue participation in the study.

Our participants included 126 children with and without reading disabilities. Of the total children who participated, 72 were male (57.1%) and 54 were female (42.9%). Mean age of participants was 11.85 ± 1.32, with 39 ten-year-olds (31%), 32 eleven-year-olds (25.4%), 28 twelve-year-olds (22.2%), 16 thirteen-year-olds (12.7%), and 11 fourteen-year-olds (8.7%). The numbers in each grade were as follows: 22 in fourth (17.5%), 31 in fifth (24.6%), 30 in sixth (23.8%), 21 in seventh (16.7%), 13 in eighth (10.3%), 8 in ninth (6.3%), and 1 in tenth (0.8%). The distribution of race/ethnicity was as follows: 28 African American (22.2%); 3 Asian (2.4%); 82 Caucasian (65.1%); and 7 Multiracial (5.6%); with no response from 6 participants (4.8%). Five participants (4.0%) also identified themselves as Hispanic/Latino. The distributions of Full Scale IQ and reading skills were also assessed to determine if the participants in our sample represented a full range of reading abilities. The mean Full Scale IQ score from the WISC-IV was 104.94 ± 14.66. The mean Basic Reading Skills Composite from the Woodcock Reading Mastery Test (WRMT-R/NU) was 100.59 ± 12.08. The mean standard score on the Sight Word Efficiency subtest of the Test of Word Reading Efficiency (TOWRE) was 99.01 ± 11.88. The mean stanine on the Reading Comprehension subtest of the Stanford Diagnostic Reading Test (SDRT) was 5.29 ± 2.19. Based on our criteria, 26 children (20.6%) were identified as having a word reading deficit (below the 25th percentile on the WRMT-R/NU Basic Reading Skills Composite), and an additional 15 children (11.9%) were identified as having a reading comprehension deficit but average word reading (above the 37th percentile on the WRMT-R/NU Basic Reading Skills Composite and below the 25th percentile on reading comprehension). More detailed criteria for our definitions of word reading and comprehension deficits are outlined in a previous publication pertaining to the same study (Locascio, Mahone, Eason, & Cutting, 2010).
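The two percentile cutoffs described above can be sketched as a simple decision rule. This is an illustration only: the function name and group labels are hypothetical, and the full criteria (including how average readers were defined) are given in Locascio et al. (2010), not here.

```python
def classify_reader(word_reading_pct, comprehension_pct):
    """Apply the two cutoffs stated in the text to percentile ranks (0-100).

    word_reading_pct: percentile on the WRMT-R/NU Basic Reading Skills Composite
    comprehension_pct: percentile on the reading comprehension measure
    Labels are hypothetical; "other" covers everyone these two rules do not flag.
    """
    # Word reading deficit: below the 25th percentile on basic reading skills.
    if word_reading_pct < 25:
        return "word_reading_deficit"
    # Comprehension deficit: word reading above the 37th percentile,
    # comprehension below the 25th percentile.
    if word_reading_pct > 37 and comprehension_pct < 25:
        return "comprehension_deficit"
    # Includes scores that fall between the cutoffs.
    return "other"


print(classify_reader(20, 50))
print(classify_reader(50, 10))
print(classify_reader(30, 10))
```

Note that a child with word reading between the 25th and 37th percentiles is not assigned to either deficit group by these two rules, which matches the later remark that some scores fell in between the cutoffs.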

Measures

Reading Comprehension Measure

Comprehension was assessed by the Stanford Diagnostic Reading Test – Fourth Edition (SDRT-4; Karlsen & Gardner, 1995). It should be noted that while there is currently no agreed-upon ideal measure of reading comprehension, the SDRT-4 is of practical importance because it is comparable to reading comprehension measures that are regularly administered; we therefore chose this measure knowing that current psychometric tests, particularly high-stakes tests, often use this format. The Reading Comprehension subtest of the SDRT-4 is a timed, 54-item multiple-choice assessment with varying text and question types. There are three levels, based on grade, included in this study: the Purple level (for grades 4.5 – 6.4); the Brown level (for grades 6.5 – 8.9); and the Blue level (for grades 9.0 – 12.9). The Reading Comprehension subtest consists of reading selections of text, each followed by five to seven questions related to the selection just read. Reliability estimates for the SDRT-4 have been reported as ranging from .91 to .93.

As outlined in the Teacher’s Manual for Interpreting, all items are categorized by type of text and mode of comprehension (question type). Passages for each of the three levels (Purple, Brown, and Blue) are categorized as Recreational (narrative), Textual (informational), or Functional reading selections. Recreational texts, also known as narrative texts, are passages that are often read for pleasure and are prevalent in early grades; they are typically short stories about a fictional character. Textual passages, often referred to as expository texts, are written to inform the reader; examples include passages describing how something was invented, characteristics of animals or insects, or biographical information. In the interest of remaining consistent, we hereafter refer to Recreational text and Textual text by the more universally recognized terms Narrative and Expository. Functional text types are typically encountered in daily life, such as instructions for assembling a craft or a poster explaining how to enter a contest. Functional passages remain labeled as such. In order to confirm that the differences between narrative and expository texts were consistent with those conceptualized by cognitive psychology models (McNamara et al., in press), we analyzed passages using Coh-Metrix (see Preliminary Analyses and Appendix A). Coh-Metrix examines many different dimensions of text and is therefore distinct from other readability formulas that typically focus on sentence length and indices of word frequency/difficulty only. Note that it was not possible to analyze Functional passages with Coh-Metrix, since signs and posters are rarely structured as paragraphs.

After reading the passages, students use the selected text to answer questions that measure Initial Understanding, Interpretation, Critical Analysis, and Process Strategies; these labels are directly from the SDRT-4 manual, but it should be noted that they are also similar to those from the NAEP 1992 framework. Each of the question types in the Comprehension subtest draws on a unique set of skills that enable the reader to understand, interpret, and evaluate the passage. Questions measuring Initial Understanding examine students’ literal understanding of relationships and ideas in the passage that are directly stated (“What did the children do when they came home?”). Answers to questions regarding Initial Understanding can be found directly in the passage without the need for further interpretation or evaluation of the text. Interpretation questions focus on students’ abilities to make inferences and predictions about the selection, and draw conclusions from information that is not directly stated in the text (“After the boy brought home his report card, he probably felt…”). In order to correctly respond to this question type, the child must be able to comprehend both explicit and implicit information from the text, and build a situation model. According to the definitions provided by the SDRT-4, Critical Analysis questions gauge the student’s ability to synthesize and evaluate information from the passage (“The main idea of this passage is to…”). Finally, Process Strategies questions ask students to recognize and utilize the characteristics of the text and other reader strategies (“If you want to learn more about the animals described in this article, you should look in…”). More information about the SDRT-4 can be found at: http://www.pearsonassessments.com/HAIWEB/Cultures/en-us/Productdetail.htm?Pid=015-4887-242.

Overall Reading Comprehension scores on the SDRT-4 are converted to percentiles based on grade-based norms. For this study, subscores were computed for each text and question type as a percentage correct. Compared to the number of items for Initial Understanding and Interpretation questions, there were considerably fewer items in each of the Critical Analysis and Process Strategies categories; therefore, the items for the two latter categories were combined into one question type. This seemed justified given that other levels of the SDRT-4 for younger readers do not distinguish between Critical Analysis and Process Strategies items but instead group them together as one question type. Participants who did not respond to at least 90 percent of the items were excluded because low scores resulting from items that were skipped or not reached may underestimate reader accuracy. Of the 143 participants who took the Purple, Brown, or Blue level of the SDRT, 17 were excluded because they responded to fewer than 90 percent of the items. Only one of the participants excluded was an average reader; the others were identified as having a word reading or comprehension deficit, or did not meet criteria for any of the groups (scores were either inconsistent or in between the cutoffs). While this does open up the possibility of specific types of readers being overlooked, in order to validly analyze the data it was necessary to include only individuals who completed a sufficient number of items.
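The scoring rules in this paragraph (percentage correct per text and question type, pooling of the two sparse question categories, and the 90 percent response requirement) can be sketched as follows. The data layout, function names, and pooled label are hypothetical, and treating unanswered items as incorrect within each category is an assumption that the text does not state.

```python
from collections import defaultdict

# Hypothetical pooled label for the two categories with few items.
POOLED = {
    "Critical Analysis": "Critical Analysis/Process Strategies",
    "Process Strategies": "Critical Analysis/Process Strategies",
}


def response_rate(responses):
    """Share of items answered; None marks a skipped or not-reached item."""
    return sum(r is not None for r in responses) / len(responses)


def subscores(items, responses):
    """Percentage correct for each text type and each question type.

    items: list of dicts with 'text_type', 'question_type', and 'key'
    responses: answers aligned with items (None = no response)
    Assumption: an unanswered item counts as incorrect.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for item, resp in zip(items, responses):
        qtype = POOLED.get(item["question_type"], item["question_type"])
        for label in (("text", item["text_type"]), ("question", qtype)):
            total[label] += 1
            correct[label] += int(resp == item["key"])
    return {label: 100.0 * correct[label] / total[label] for label in total}


def score_participant(items, responses, min_rate=0.90):
    """Return subscores, or None if the participant answered too few items."""
    if response_rate(responses) < min_rate:
        return None
    return subscores(items, responses)
```

Returning `None` for excluded participants keeps the exclusion decision separate from the scoring itself, so the 90 percent threshold can be varied without touching the subscore logic.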

Word Level Measures

Two measures of word level skills were included: the Woodcock Reading Mastery Tests – Revised/Normative Update (WRMT-R/NU; Woodcock, 1998) and the Test of Word Reading Efficiency (TOWRE; Torgesen, Wagner, & Rashotte, 1999).

From the WRMT-R/NU, the Word Identification subtest, a measure of single-word sight reading, and Word Attack, which measures the ability to sound out words, were both administered to assess for basic reading skills. Standard scores, based on age, were used to screen participants. Reports indicate internal consistency reliability for the WRMT-R/NU tests ranges from .68 to .98; a split-half reliability range from .87 to .98 has been reported for the Cluster scores.

The Sight Word Efficiency (SWE) subtest from the TOWRE measures single word reading fluency as the number of real printed words that can be accurately identified within a 45-second time frame. Standard scores, based on age, were used in analyses as a measure of word level skills. The TOWRE manual reports reliability ranges of .90 to .99.

Language, Inferencing, and Planning/Organizing Measures

The following measures were selected because they have been identified in previous studies as being linked to comprehension, in addition to word level skills (Cutting et al., 2009; Locascio et al., 2010).

Peabody Picture Vocabulary Test – Third Edition (PPVT-III; Dunn & Dunn, 1997)

The PPVT-III is a test designed to assess receptive vocabulary. For each item, the participant points to one of four pictures that best represents a word said by the examiner. Alpha coefficients for the PPVT-III range from .92–.98. Age-based standard scores were used for analyses. The PPVT was used as an index of primary language performance, specifically semantics.

Test of Language Development – Intermediate: Third Edition (TOLD-I:3; Newcomer & Hammill, 1997)

A measure of receptive grammar (syntax), the Grammatic Comprehension subtest of the TOLD-I:3 requires the subject to identify orally presented sentences as either grammatically correct or incorrect. Reliability for the Grammatic Comprehension subtest has been reported as .95. Scaled scores, based on age, were used for analyses. The TOLD Grammatic Comprehension subtest was used as an index of primary language performance, specifically syntax.

Test of Language Competence – Expanded Edition (TLC-E; Wiig & Secord, 1989)

The Listening Comprehension: Making Inferences subtest from the TLC-E measures inferential language by requiring the child to listen to a scenario (simultaneously presented in print) and deduce what may have happened. The subtest’s internal consistency reliability has been reported as alpha coefficients ranging from .57 to .71; test-retest reliability was reported as .54 in the manual (Wiig & Secord, 1989). Age-based scaled scores were used for analyses. This assessment was used as the primary index of children’s inferencing abilities.

Delis-Kaplan Executive Function System (D-KEFS; Delis, Kaplan, & Kramer, 2001)

The D-KEFS Tower Test assesses planning and organizing. The participant moves five discs across three pegs to match a visual model in as few moves as possible, all while adhering to a specific set of rules. Internal consistency for the D-KEFS Tower ranges from .43 to .84 for the age range included in the present study; test-retest reliability was reported as .51 in the manual (Delis et al., 2001). Age-based scaled scores were used for analyses.

Procedures

During the two-day study, participants were assessed on word recognition, reading comprehension, and cognitive skills including language, inferencing, and planning/organizing. Total testing time per child averaged approximately seven hours across the two days. On the first day of testing, children worked in a one-on-one setting with a psychology associate at our lab in Baltimore, MD. The second day was used to complete any remaining testing. All procedures followed a protocol that had been approved by the Johns Hopkins Medical Institutional Review Board.

Data Analyses

After the preliminary Coh-Metrix analyses were conducted, a Repeated Measures Analysis of Variance (ANOVA) was run to analyze within-subjects differences by text and question types, as well as to explore a possible interaction between text and question type. In order to control for Type I error, Bonferroni post hoc, pair-wise comparisons were used to examine specific differences between text and question types. Second, hierarchical regression analyses were used to examine the contributions of age, word level skills (TOWRE SWE), semantic and syntactic awareness (PPVT-III, TOLD-I:3 Grammatic Comprehension), inferencing (TLC-E Listening Comprehension: Making Inferences), and planning/organizing skills (D-KEFS Tower Test) to each text and question type. Note that for the hierarchical regressions, we took a bottom-up approach in which we entered basic reading components first and then entered the more complex skills step-by-step: oral language, inferences, and reasoning. While several other orderings of variables could be justified, the long-standing importance of word-level abilities for reading comprehension led us to choose the bottom-up approach (Lyon, 2005). In particular, in the developmental literature, word recognition is thought to be an essential component of reading comprehension, and therefore, logically, contributions of other skills are almost always examined after word-level skills are taken into account. Illustrating that these higher-level skills contribute to deeper levels of comprehension, even after taking basic skills into account, would suggest the importance of considering these skills in developmental models of reading comprehension, particularly if differences were found amongst the different types of text.
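The block-wise entry described above can be sketched in code. The following is a minimal illustration, not the analysis script used in the study: it assumes ordinary least squares fitted with NumPy, uses simulated standardized scores in place of the real data, and the function names (`r_squared`, `hierarchical_delta_r2`) are hypothetical. It reports the change in R² as each block of predictors is added in the bottom-up order.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an OLS fit of y on X (an intercept column is added here)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot

def hierarchical_delta_r2(blocks, y):
    """Enter predictor blocks cumulatively; return (label, delta R^2) per step."""
    results, entered, prev_r2 = [], [], 0.0
    for label, cols in blocks:
        entered.append(cols)
        r2 = r_squared(np.column_stack(entered), y)
        results.append((label, r2 - prev_r2))
        prev_r2 = r2
    return results

# Simulated data only: these are NOT the study's scores.
rng = np.random.default_rng(0)
n = 118
age, towre, ppvt, told, tlc, dkefs = rng.normal(size=(6, n))
y = 0.5 * towre + 0.4 * ppvt + 0.2 * tlc + 0.2 * dkefs + rng.normal(size=n)

blocks = [
    ("1. Age + Word Level", np.column_stack([age, towre])),
    ("2. + Basic Language", np.column_stack([ppvt, told])),
    ("3. + Inferential Lang.", tlc),
    ("4. + Planning/Org.", dkefs),
]
steps = hierarchical_delta_r2(blocks, y)
total_r2 = sum(delta for _, delta in steps)
for label, delta in steps:
    print(f"{label}: delta R^2 = {delta:.3f}")
print(f"Total R^2 = {total_r2:.3f}")
```

Because each step's model nests the previous one, every ΔR² is non-negative and the increments sum to the R² of the final model. Entering the blocks in a different order would generally change the individual increments but not the total.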

A challenge to accurately comparing the effect of text type or question type was the fact that the proportion of each question type varied between text types (e.g., Interpretation questions accounted for over 60% of the items for Narrative passages, but less than 30% of the items for Expository passages; see Table 1). For the Repeated Measures analyses, this is automatically corrected, as the main effects for question and text type are based on the nine scores for each text-question combination, and each is equally weighted. However, for the Regression analyses, using the overall score obtained for a particular text or question type would be problematic, since it would be difficult to distinguish whether differences among text types are due to characteristics of the text itself or due to the weighted demands of a particular question type. To control for these discrepancies, we used the same weighted score derived in the Repeated Measures analyses.
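The difference between a pooled score and an equally weighted score can be illustrated with a short sketch. It assumes, as one reading of the description above, that the weighted score for a text type is the unweighted mean of the three question-type proportions within that text type. The item counts are the Purple-level Narrative counts from Table 1; the child's responses and the function names are hypothetical.

```python
def pooled_score(correct, total):
    """Proportion correct over all items, so larger cells dominate."""
    return sum(correct.values()) / sum(total.values())

def equal_weighted_score(correct, total):
    """Mean of per-cell proportions, so each question type counts equally."""
    cell_props = [correct[q] / total[q] for q in total]
    return sum(cell_props) / len(cell_props)

# Narrative items at the Purple level (Table 1).
narrative_items = {"IU": 5, "IN": 11, "CAPS": 2}
# Hypothetical child: perfect on IU and IN, misses both CAPS items.
child_correct = {"IU": 5, "IN": 11, "CAPS": 0}

pooled = pooled_score(child_correct, narrative_items)
weighted = equal_weighted_score(child_correct, narrative_items)
print(round(pooled, 3), round(weighted, 3))  # 0.889 0.667
```

In this example the pooled score barely registers the failure on Critical Analysis/Process Strategies items because only 2 of the 18 items are of that type, whereas the equally weighted score gives that question type the same influence as the other two.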

Table 1.

Number and Proportion of Question Types within Text Types by SDRT Level

| Question Type | Purple (n = 69) | | | Brown (n = 48) | | | Blue (n = 9) | | |
|---|---|---|---|---|---|---|---|---|---|
| | Nar | Exp | Fun | Nar | Exp | Fun | Nar | Exp | Fun |
| IU | 5 (28%) | 9 (50%) | 4 (22%) | 3 (17%) | 4 (22%) | 11 (61%) | 5 (28%) | 5 (28%) | 8 (44%) |
| IN | 11 (61%) | 5 (28%) | 9 (50%) | 13 (72%) | 9 (50%) | 3 (17%) | 10 (56%) | 9 (50%) | 6 (33%) |
| CAPS | 2 (11%) | 4 (22%) | 5 (28%) | 2 (11%) | 5 (28%) | 4 (22%) | 3 (17%) | 4 (22%) | 4 (22%) |

Note: Nar = Narrative; Exp = Expository; Fun = Functional; IU = Initial Understanding; IN = Interpretation; CAPS = Critical Analysis/Process Strategies.

Results

Preliminary Analyses

Prior to running the analyses to address our central research questions, we used Coh-Metrix (Graesser, McNamara, Louwerse, & Cai, 2004) to compare text features between the narrative and expository passages, in order to determine what text features differentiate the passages and therefore may relate to any effects of text type that we observe. Our findings were consistent with those from other studies (McNamara et al., in press), in that narrative passages showed less referential cohesion than expository passages. Additionally, narrativity indices were consistent with the SDRT’s classification of text as narrative or expository (72.33 and 44.89, respectively). Finally, while differences in text difficulty between levels were not significant, trends were in the appropriate direction, with increasing difficulty from lower to higher levels. Findings are presented in Appendix A.

Differences among Text Types and Question Types

In order to assess the differences among text types and question types, as well as interactions between text and question type, a Repeated Measures ANOVA was run. Mean scores for each text and question type are listed in Table 2. There was a significant main effect of text type [F(2, 250) = 10.77, p < .001, partial η² = .079] such that percentage of correct items for Functional passages was higher than that of Narrative (Cohen’s d = 0.34) and Expository (Cohen’s d = 0.21) passages (all p < .01); there was no significant difference between Narrative and Expository passages (Cohen’s d = 0.15). Additionally, there was a significant main effect of question type [F(2, 250) = 50.59, p < .001, partial η² = .288], such that Critical Analysis and Process Strategies questions yielded fewer accurate responses than Initial Understanding (Cohen’s d = −0.63) and Interpretation (Cohen’s d = −0.58) questions; there was no significant difference between accuracy for Initial Understanding and Interpretation questions (Cohen’s d = 0.05).
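For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom, since partial η² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies this identity to the F values reported for the main effects; the function name is hypothetical and the code is an illustration rather than part of the original analyses.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Main effect of text type: F(2, 250) = 10.77
text_effect = partial_eta_squared(10.77, 2, 250)
# Main effect of question type: F(2, 250) = 50.59
question_effect = partial_eta_squared(50.59, 2, 250)

print(round(text_effect, 3), round(question_effect, 3))  # 0.079 0.288
```

Both values match the effect sizes reported in the text.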

Table 2.

Summary of Intercorrelations, Means, and Standard Deviations for Predictor Variables, Text Types, and Question Types

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | M | SD |
|---|---|---|---|---|---|---|---|---|
| 1. Age | -- | | | | | | 11.82 | 1.32 |
| 2. TOWRE | −.14 | -- | | | | | 98.86 | 11.79 |
| 3. PPVT | −.11 | .52*** | -- | | | | 111.46 | 14.22 |
| 4. TOLD | −.28** | .36*** | .60*** | -- | | | 10.73 | 3.15 |
| 5. TLC | −.02 | .21* | .41*** | .34*** | -- | | 8.99 | 2.57 |
| 6. D-KEFS | −.16* | .04 | .26** | .07 | .22** | -- | 10.35 | 2.17 |
| Nar | −.15 | .46*** | .59*** | .46*** | .37*** | .19* | .73 | .22 |
| Exp | −.15 | .54*** | .53*** | .44*** | .40*** | .33*** | .75 | .20 |
| Fun | .07 | .43*** | .55*** | .33*** | .33*** | .23** | .79 | .20 |
| IU | −.13 | .50*** | .55*** | .43*** | .33*** | .22** | .80 | .19 |
| IN | −.07 | .50*** | .63*** | .45*** | .41*** | .25** | .79 | .19 |
| CAPS | −.04 | .45*** | .52*** | .33*** | .38*** | .28** | .67 | .23 |

Note: n = 118, because 8 participants were missing scores for at least one of the predictor measures. TOWRE: Test of Word Reading Efficiency, Sight Word Efficiency, Standard Score; PPVT: Peabody Picture Vocabulary Test – 3rd Edition – Standard Score; TOLD: Test of Language Development – Grammatic Comprehension – Scaled Score; TLC: Test of Language Competence – Listening Comprehension: Making Inferences – Scaled Score; D-KEFS: Delis-Kaplan Executive Function – Tower Test, Total Achievement – Scaled Score; Nar = Narrative, Exp = Expository, Fun = Functional Text, IU = Initial Understanding Questions, IN = Interpretation Questions, CAPS= Critical Analysis/Process Strategies Questions.

Significance levels: * p < .05, ** p < .01, *** p < .001.

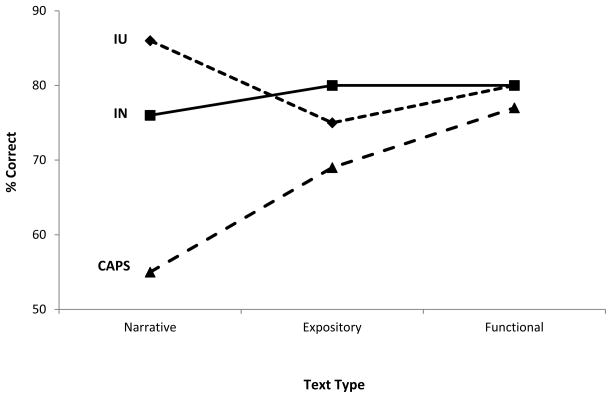

Tests of within-subjects effects also showed an interaction between text and question type [F(4, 500) = 21.99, p < .001, partial η² = .150], as illustrated in Figure 1. Follow-up analyses showed that all three question types yielded similar levels of performance for Functional passages [F(2, 250) = 1.09, p = .336, partial η² = .009]; however, the levels of accuracy for each question type significantly differed within both Narrative [F(2, 250) = 57.96, p < .001, partial η² = .317] and Expository passages [F(2, 250) = 12.03, p < .001, partial η² = .088]. While scores on Critical Analysis/Process Strategies questions were significantly lower than the other two question types for Narrative (Cohen’s d = −1.01 and −0.68 for Initial Understanding and Interpretation, respectively; both p < .001) and Expository passages (Initial Understanding, Cohen’s d = −0.24, p < .05; Interpretation, Cohen’s d = −0.47, p < .001), the relationship between Initial Understanding and Interpretation questions was different for the two text types. For Narrative passages, accuracy on Initial Understanding questions was significantly higher than accuracy on Interpretation questions (Cohen’s d = 0.43, p < .001); there was a marginal difference in the opposite direction for Expository passages (p = .06), with a slightly higher accuracy on Interpretation questions (Cohen’s d = −0.22).

Figure 1.

Percent of items answered correctly as a function of text type and question type. IU = Initial Understanding; IN = Interpretation; CAPS = Critical Analysis/Process Strategies

Predictors of Reading Comprehension by Text Type

Hierarchical regressions were conducted to examine the extent to which different cognitive skills (word level skills, semantic and syntactic awareness, inferencing, and planning/organizing) predicted reading comprehension success for particular text types (see Table 2 for correlations between and means of predictors used in regression analyses).

Overall, the regression models accounted for 38–49% of the variance for comprehension of the three text types (see Table 3). Model 1 accounted for a significant amount of variance for all three text types (Narrative, 22%; Expository, 30%; Functional, 20%), with word recognition efficiency (measured by TOWRE) being the significant contributor (p ≤ .001). Additionally, basic language (Model 2) played an influential role across all three text types (10–18% of the variance), with semantic awareness, as measured by the PPVT, contributing the most variance (all p < .01). For Expository passages, inferencing (Model 3, 4%) and planning/organizing (Model 4, 5%) were also strong predictors. Inferencing did not contribute a significant amount of variance for Narrative and Functional passages. Planning/organizing added marginally significant variance to performance on Functional passages (almost 2%), but did not contribute to Narrative passage comprehension.

Table 3.

Hierarchical Multiple Regression Analyses of Reader Characteristics on Reading Comprehension by Text Type

| Model and Variables | Narrative | | Expository | | Functional | |
|---|---|---|---|---|---|---|
| | ΔR² | β | ΔR² | β | ΔR² | β |
| 1. Age + Word Level | .218*** | | .301*** | | .202*** | |
| Age | | −.085 | | −.076 | | .136 |
| TOWRE | | .447*** | | .532*** | | .448*** |
| 2. + Basic Language | .180*** | | .097*** | | .155*** | |
| PPVT | | .401*** | | .261** | | .436*** |
| TOLD | | .144 | | .145 | | .044 |
| 3. + Inferential Lang. | .018 | | .038** | | .011 | |
| TLC | | .147 | | .215** | | .115 |
| 4. + Planning/Org. | .002 | | .050*** | | .016 | |
| D-KEFS | | .046 | | .242*** | | .136 |
| Total R² | .417*** | | .486*** | | .383*** | |

Note: TOWRE = Test of Word Reading Efficiency, Sight Word Efficiency, Standard Score; PPVT = Peabody Picture Vocabulary Test – 3rd Edition – Standard Score; TOLD = Test of Language Development – Grammatic Comprehension – Scaled Score; TLC = Test of Language Competence Listening Comprehension: Making Inferences – Scaled Score; D-KEFS = Delis-Kaplan Executive Function – Tower Test, Total Achievement – Scaled Score.

Significance levels: * p ≤ .05, ** p ≤ .01, *** p ≤ .001.

Predictors of Reading Comprehension by Question Type

Hierarchical regressions were also conducted to examine the extent to which different cognitive skills predicted reading comprehension success for particular question types. The regression models accounted for 38–48% of the variance in performance on the three types of questions (see Table 4). As with the text type regressions, Model 1 accounted for a significant amount of variance for all three question types (Initial Understanding, 25%; Interpretation, 25%; Critical Analysis/Process Strategies, 20%), with word level skills (TOWRE) being the significant contributor (all p ≤ .001). In Model 2, basic language contributed an additional 12–20% of the variance, with semantic awareness (PPVT) being the most significant factor (all p ≤ .01). For Interpretation and Critical Analysis/Process Strategies questions, inferencing (Model 3) added 2–3% of variance. Finally, planning/organizing (Model 4) contributed an additional 3% of variance to Critical Analysis/Process Strategies questions.

Table 4.

Hierarchical Regression Analyses of Reader Characteristics on Reading Comprehension by Question Type

| Model and Variables | Initial Understanding | | Interpretation | | Critical Analysis/Process Strategies | |
|---|---|---|---|---|---|---|
| | ΔR² | β | ΔR² | β | ΔR² | β |
| 1. Age + Word Level | .252*** | | .245*** | | .201*** | |
| Age | | −.059 | | .001 | | .020 |
| TOWRE | | .490*** | | .496*** | | .451*** |
| 2. + Basic Language | .125*** | | .196*** | | .118*** | |
| PPVT | | .325** | | .449*** | | .340** |
| TOLD | | .131 | | .111 | | .098 |
| 3. + Inferential Lang. | .010 | | .023* | | .030* | |
| TLC | | .110 | | .169* | | .193* |
| 4. + Planning/Org. | .010 | | .012 | | .028* | |
| D-KEFS | | .110 | | .118 | | .180* |
| Total R² | .397*** | | .477*** | | .378*** | |

Note: TOWRE = Test of Word Reading Efficiency, Sight Word Efficiency, Standard Score; PPVT = Peabody Picture Vocabulary Test – 3rd Edition – Standard Score; TOLD = Test of Language Development – Grammatic Comprehension – Scaled Score; TLC = Test of Language Competence Listening Comprehension: Making Inferences – Scaled Score; D-KEFS = Delis-Kaplan Executive Function – Tower Test, Total Achievement – Scaled Score.

Significance levels: * p ≤ .05, ** p ≤ .01, *** p ≤ .001.

Discussion

The present study aimed to explore potential differences in children’s ability to comprehend varying text and question types. By investigating interactions between text and question types, we sought to expand the current body of literature on how text and question types may influence a child’s reading performance. Furthermore, we sought to explain how these differences may relate to readers’ varying cognitive skills. When exploring influences of text and question types, reader characteristics taken into consideration in previous studies have primarily centered on word reading and background knowledge. While other studies, particularly those utilizing developmental models, have demonstrated the contributions of semantic and syntactic awareness, inferencing, and planning/organizing to reading comprehension, these skills have rarely been analyzed within the context of comprehending specific types of text or answering specific types of questions. This was the focus of the current study.

Differences among Text Types

Not surprisingly, our findings revealed significant differences among performances on the three text types. Specifically, Functional passages were found to be easier than both Narrative and Expository passages. This finding, consistent with our hypothesis, may be explained by the structure of this text type. Functional text is designed to replicate “real world” situations, such as reading signs or following directions. Since the structure of this text is commonly broken down into steps or presented in a list-like format, it may be easier to navigate, which in turn leads to better comprehension. Findings from previous studies support this conclusion. Geiger and Millis (2004) found that procedural text types presented in a step-like structure, similar to Functional text, are easier to comprehend when the goal is to answer questions following the text. When children are exposed to this text type on a regular basis, the easy-to-navigate format of this text type may aid in comprehension. Contrary to expectation, there was no difference between accuracy on Narrative and Expository passages. This could relate to the findings from the Coh-Metrix analyses of the passages. The higher levels of cohesiveness among the Expository texts may have compensated for the less-familiar topics (Graesser et al., 2011).

Analyses that examined the contributions of word-level skills as well as language, inferencing, and planning/organizing skills to each text type suggested that some of the variance in comprehension of the text types stems from varying demands on distinct cognitive skills. Word level skills and basic language, particularly semantic awareness, consistently contributed to successful reading comprehension across all text types. This supports the simple view of reading, in which word recognition and language comprehension are both essential for comprehension (Hoover & Gough, 1990). Furthermore, word level skills and semantic awareness appeared to be sufficient for comprehending narrative and functional text types.

Although readers showed relatively similar overall performance on Narrative and Expository passages, findings suggested that Expository passages require the use of higher-level cognitive skills (inferencing and planning/organizing) in addition to the word-level and basic language skills needed for comprehension of Narrative text. Together with previous studies (Best et al., 2008; Samuelstuen & Braten, 2005; Sanchez & Garcia, 2009), our findings suggest that readers need to apply more advanced skills when comprehending expository text and that deficits in these higher order cognitive skills may result in poorer expository comprehension despite adequate word-level and basic language skills. It is also possible that problems with higher order cognitive skills may go unnoticed until later childhood when the focus of reading instruction switches from narrative to expository text.

The above findings could be viewed as counterintuitive given that performance on Narrative and Expository passages was similar and that analyses of passages in the current study, and previous studies (McNamara et al., in press), indicate that expository passages may be written in a more cohesive manner, which should reduce the need for inferencing and organizing. Studies showing that inferences are generated quickly and reliably by all readers for narrative texts (see Graesser & McNamara, 2011; Haberlandt & Graesser, 1985) may explain why inferences and reasoning did not make significant contributions to the Narrative passages in our study. The differences between the cognitive skill requirements for Narrative versus Expository texts suggest the need to consider measures of genre in order to fully understand the cognitive skills required for reading comprehension.

Another possible explanation for the relationship between expository passages and inferencing ability may relate to interactions between text and question characteristics. While analyzing the characteristics of the questions was beyond the scope of the present study, the text-question type interaction indicated that Initial Understanding questions were more difficult when linked to Expository passages rather than Narrative passages. It has been observed that Expository passages are more likely to use the same words multiple times in order to relate sentences together and promote development of a situation model. Perhaps this actually makes it more difficult for a reader to identify the proper section of text needed to answer a literal question, because searching for words in the text that match words in the question may yield multiple possibilities. This may be the point at which a reader’s inferencing abilities could contribute to performance on Expository passages, and what accounts for the differences between good and poor readers that Raphael and colleagues observed (Raphael & Pearson, 1985; Raphael et al., 1980; Raphael & Wonnacott, 1985). Future studies will need to further investigate interactions between text and question types.

Differences among Question Types

Significant differences in performance on the three question types were observed. Not surprisingly, Critical Analysis and Process Strategies questions proved to be the most difficult for readers overall. This question type requires children to not only comprehend the text that they are reading, but also to analyze and evaluate the passage while using reading strategies to recognize text structures. The contributions of inferencing and planning/organizing to accuracy on Critical Analysis and Process Strategies questions indicated that, in addition to word level skills and basic language, such higher order cognitive abilities are required to answer these types of questions. Even if their word reading and basic language skills are sufficient for comprehending the text overall, children with deficits in one or more of these higher order skills may struggle with specific question types.

We observed similar rates of accuracy for Initial Understanding and Interpretation questions. Word level skills and semantic awareness were sufficient to answer the Initial Understanding items, for which children must draw information directly from the text. Interpretation questions, on the other hand, are designed to require children to “read between the lines” and utilize background knowledge and inferencing skills. Not surprisingly, the present study found that a measure of inferencing ability was a predictor of performance on such questions. Less is known regarding the reader characteristics that may relate to answering inferential questions; however, our findings seem to be consistent with observations from Brandao and Oakhill’s (2005) study, which found that young children have an understanding of when questions require the synthesis of story information and previous knowledge.

Interactions between Text and Question Types

Another aspect of our findings involved text-question interactions. Although it was beyond the scope of the study to examine the contributions of cognitive skills to each text-question pair (but as discussed below, will need to be addressed in future studies), results suggested a text-question interaction, driven by significant differences in the relationship among question types for Narrative and Expository passages. For these two text types, Critical Analysis and Process Strategies questions proved to be more difficult than questions focused on Initial Understanding and Interpretation. When comparing performance on Initial Understanding and Interpretation questions, the findings for Narrative passages were reversed from the findings for Expository passages. For Narrative passages, Initial Understanding questions were significantly easier than Interpretation, while there was a marginal difference in the opposite direction for Expository passages.

One possible explanation for these results may stem from level of familiarity with the format of Narrative versus Expository passages. For example, Narrative passages, such as short stories, typically guide the reader through a series of events in a particular timeline. This format does not require the reader to infer information from the passage, but rather more commonly asks questions that only require the reader to directly understand what is written in the passage. On the other hand, Expository passages provide information to the reader but assume that children possess a certain level of background knowledge to aid in drawing conclusions and understanding the central idea. If readers naturally make inferences more frequently when reading expository passages, they may be better prepared to answer questions that assess this ability. This may account for why Interpretation questions were the easiest within Expository passages, while Initial Understanding questions were the easiest for Narrative passages. As previously mentioned, it is also possible that there are characteristics of the questions themselves which differ between text types.

General Discussion

The present study included a comprehensive model of cognitive skills that contribute to successful reading comprehension, and overall our findings revealed that children’s comprehension is not identical across all types of text and questions. In particular, we found that higher-level cognitive skills such as inferencing and reasoning contributed to expository texts and more complex question types, while word recognition and oral language contributed to all types of texts and questions. Although the factors we included have been incorporated into models of reading comprehension built upon the simple view of reading (e.g., Cutting & Scarborough, in press), no prior study has examined this particular group of factors while distinguishing among text and question types. By looking at how these cognitive skills affect comprehension by text and question type, we were able to understand more about which skills are most necessary for different types of reading and comprehension tasks.

Our findings have several implications. First, while some have advocated a unidimensional scale of text complexity (such as the Lexile Framework for Reading; Lennon & Burdick, 2004; Stenner, 1996), our findings indicate that interactions between reader characteristics such as different cognitive skills and specific text and question categories exist. Such findings support a more multidimensional scale of text complexity (Graesser et al., 2011). Second, our findings may provide some insight as to the origin of the discrepancies among previous studies investigating predictors of comprehension. It is established that the way comprehension is measured will affect what specific cognitive processes are being assessed (Cutting & Scarborough, 2006; Keenan et al., 2008). The results from the present study suggest that performance on comprehension measures may vary not only due to test format, but also due to the specific passage and question types that are included. Finally, as the SDRT-4 is comparable to reading comprehension measures often administered as a part of school assessments, findings from this study may be applicable for design and interpretation of students’ performances on standardized tests, and yield some clues as to why certain students struggle with different aspects of reading comprehension. Such insights may be particularly pertinent given the current focus on high stakes assessments (e.g., Common Core State Standards Initiative, 2010).

While our study provides some important directions towards understanding more about reader-text interactions in reading comprehension, it does have several limitations that will need to be addressed in future studies. First, while we recognize that background knowledge is an important factor in reading comprehension, as supported by previous findings (Best et al., 2008; Samuelstuen & Braten, 2005; Sanchez & Garcia, 2009), the present study did not include a measure of background knowledge specifically assessing children’s knowledge of the topics encountered in the passages. While this limits our ability to relate our findings to previous studies, our measure of semantic knowledge (the PPVT-III) is often used as a proxy for general knowledge, and the current study found that both inferential and planning/organizing skills contributed unique variance to particular types of texts/questions, even after accounting for PPVT-III. Kintsch (1988) explains that comprehending text requires two types of knowledge: both background knowledge and knowledge of language (p. 180). Thus, our measure of semantic awareness may have accounted for some of the variance typically attributed to background knowledge. Support for this supposition is seen in our findings, which show that semantic knowledge, along with inferencing and planning/organizing, contributed to comprehension of Expository passages, which is consistent with the model developed by Samuelstuen and Braten (2005). It may be that semantic awareness is related to background knowledge, and that inferencing, planning, and organizing abilities all relate to their “use of strategies” variable which included elaboration, organization, and monitoring.

It is also important to consider the potential implications of our selection criteria and other elements of our design. Although the mean and distribution of SDRT-4 scores for the current sample were relatively average, this sample was not randomly selected. It is possible that children from families who are willing and able to participate in a two-day study outside of school are not representative of the population as a whole. Testing within the classroom may gain access to participants who otherwise would not be available and has the potential for a much larger sample, allowing for more in-depth analyses than were feasible with the current sample. For example, current regression analyses were limited to examining the contributions of distinct cognitive skills to text types and question types as a whole. Exploring the contributions of cognitive skills to specific question types within specific text types (e.g., analyzing the influence of planning/organizing on comprehension of Functional passages when assessed with Critical Analysis and Process Strategies questions) is an important direction for future studies. Identifying skills that are related to particular combinations of text and question types may help to explain the text-question interactions that we observed and, in turn, further assist us in understanding what types of intervention would be most effective in different situations.

Some participants were excluded because they failed to respond to 90% of the items on the SDRT; however, it should be mentioned that no single underlying factor appears to account for the exclusion of these readers. For example, only seven were categorized as having a word reading deficit, and more than half met our criteria for ADHD. Still, it is possible that our criteria excluded some specific reader profiles; future studies using untimed measures may allow for the inclusion of these readers and confirm whether our findings can be generalized to all types of readers.

The next step for future studies would be to include both the variables identified in the present study and background knowledge, especially specific to the topics included in readings. Doing so may further expand upon our understanding of how different cognitive processes play a role in a child’s ability to comprehend certain types of texts and questions. It is also possible that some of the factors may moderate each other. As discussed earlier, perhaps an individual’s possession of background knowledge is only useful if he/she also possesses the ability to infer when it is necessary to incorporate background knowledge. To this end, it would be worthwhile to consider different orderings of skills in regression analyses. While our analyses clearly illustrate the value of deeper levels of comprehension, which are generally not a focus of developmental models, other models that place these higher-level skills known to contribute to reading comprehension more in the forefront (i.e., from the C-I perspective) would be valuable to test.

Finally, it is important to mention developmental considerations: children’s exposure to certain types of text varies from earlier to later grades and, as children get older, the relationship between reading comprehension and different cognitive skills changes (e.g., Catts et al., 2006; Tilstra et al., 2009). Analyzing whether children’s age or reading ability influences the contributions of cognitive processes to particular text or question types may reveal relationships that are specific to a particular age group or reader profile. Such knowledge would ultimately help in understanding how to identify specific strengths and weaknesses children may have in reading comprehension, highlight the skills upon which teachers should focus for particular reading tasks, and inform the development of new assessments of reading comprehension.

Acknowledgments

This research was supported by National Institutes of Health grant 1RO1 HD044073-01A1.

Appendix A. Summary of Coh-Metrix Analyses for Narrative and Expository Passages

Table A1.

Coh-Metrix Indices as a Function of Text Type

| Index | Narrative M | Narrative SD | Expository M | Expository SD | p | Cohen’s d |
|---|---|---|---|---|---|---|
| Number of sentences | 25.90 | 11.61 | 14.50 | 2.88 | .016 | 1.35 |
| Number of words | 304.90 | 116.26 | 206.38 | 57.05 | .044 | 1.08 |
| Words per sentence | 12.73 | 4.15 | 14.14 | 2.64 | .417 | −0.41 |
| Syllables per word | 1.34 | 0.07 | 1.41 | 0.14 | .147 | −0.63 |
| Flesch Reading Ease | 80.88 | 9.65 | 72.91 | 14.28 | .177 | 0.65 |
| Celex Word Frequency (Log) | 2.23 | 0.14 | 2.21 | 0.23 | .833 | 0.11 |
| Content Word Concreteness | 420.88 | 18.09 | 435.34 | 23.85 | .162 | −0.68 |
| Connective to Verb Ratio | 0.43 | 0.22 | 0.61 | 0.23 | .117 | −0.80 |
| Connectives Incidence | 62.45 | 11.09 | 77.14 | 15.09 | .030 | −1.11 |
| Narrativity | 72.33 | 13.39 | 44.89 | 26.52 | .017 | 1.31 |
| LSA Sentence to Sentence | 0.15 | 0.07 | 0.31 | 0.07 | .000 | −2.29 |
| LSA All Sentences | 0.14 | 0.07 | 0.31 | 0.05 | .000 | −2.79 |
| LSA Paragraph to Paragraph | 0.31 | 0.11 | 0.49 | 0.13 | .005 | −1.49 |

Note: Means and SDs of indices are derived from the values across all passages classified as narrative or expository in the SDRT. Number of Sentences=mean number of sentences in the passage; Number of Words=total number of words in the passage; Words per Sentence=total number of words in the passage divided by total number of sentences in the passage; Syllables per Word=mean number of syllables per content word in the passage; Flesch Reading Ease=readability formula that measures text difficulty based on average words per sentence and average syllables per word in the passage (see Kincaid, Fishburne, Rogers, & Chissom, 1975); Celex Word Frequency=log frequency of content words in the passage (nouns, adverbs, adjectives, main verbs in the passage, see Baayen, Piepenbrock, & van Rijn, 1993); Content Word Concreteness=ratings of word concreteness based on MRC Psycholinguistics Database (Coltheart, 1981), with scores ranging from 100 (abstract) to 700 (concrete); Connective to Verb Ratio=ratio of causal particles to causal verbs in the passage, thus representing causal relationships; Connectives Incidence=incidence of connecting words such as “and, so, then, or, but”; Narrativity=the extent to which the passage conveys a story (larger value) versus informational text (smaller value); LSA Sentence to Sentence, All Sentences, and Paragraph to Paragraph=semantic overlap based on meaning (see Landauer, McNamara, Dennis, & Kintsch, 2007).
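The effect sizes in Table A1 can be illustrated with a short calculation. The sketch below is not taken from the study's analyses; it assumes that Cohen's d is the difference between the two means divided by the root mean square of the two group SDs (an unweighted pooled SD), and the function name `cohens_d` is hypothetical. Under that assumption, the tabled means and SDs reproduce the reported d values for the first rows of the table.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference (hypothetical helper).

    Assumes an unweighted pooled SD: the root mean square of the two SDs.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Means and SDs copied from Table A1 (narrative first, expository second).
d_sentences = cohens_d(25.90, 11.61, 14.50, 2.88)
d_words = cohens_d(304.90, 116.26, 206.38, 57.05)

print(round(d_sentences, 2))  # 1.35
print(round(d_words, 2))      # 1.08
```

A positive d indicates a larger mean for narrative passages; a negative d indicates a larger mean for expository passages.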

Table A2.

Coh-Metrix Indices as a Function of Test Level

| Index | Purple M | Purple SD | Brown M | Brown SD | Blue M | Blue SD | p | Cohen’s d (P v Br) | Cohen’s d (P v Bl) | Cohen’s d (Br v Bl) |
|---|---|---|---|---|---|---|---|---|---|---|
| Number of sentences | 17.67 | 6.41 | 21.67 | 9.42 | 23.17 | 14.85 | .667 | −0.50 | −0.48 | −0.12 |
| Number of words | 183.67 | 51.92 | 306.00 | 90.52 | 293.67 | 125.46 | .076 | −1.66 | −1.15 | 0.11 |
| Words per sentence | 10.79 | 2.48 | 15.04 | 3.61 | 14.23 | 3.33 | .079 | −1.37 | −1.17 | 0.23 |
| Syllables per word | 1.28 | 0.08 | 1.42 | 0.12 | 1.42 | 0.08 | .028 | −1.37 | −1.75 | 0.00 |
| Flesch Reading Ease | 87.89 | 8.86 | 71.65 | 12.65 | 72.48 | 8.37 | .023 | 1.49 | 1.79 | −0.08 |
| Celex Word Frequency | 2.26 | 0.14 | 2.22 | 0.24 | 2.18 | 0.16 | .755 | 0.20 | 0.53 | 0.20 |
| Content Word Concreteness | 439.21 | 29.13 | 421.64 | 11.60 | 421.09 | 18.25 | .265 | 0.79 | 0.75 | 0.04 |
| Connective to Verb Ratio | 0.39 | 0.13 | 0.52 | 0.30 | 0.62 | 0.23 | .258 | −0.56 | −1.23 | −0.37 |
| Connectives Incidence | 64.50 | 15.08 | 69.42 | 16.35 | 73.00 | 13.91 | .631 | −0.31 | −0.59 | −0.24 |
| LSA Sentence to Sentence | 0.22 | 0.10 | 0.21 | 0.11 | 0.24 | 0.13 | .898 | 0.10 | −0.17 | −0.25 |
| LSA All Sentences | 0.24 | 0.11 | 0.20 | 0.10 | 0.21 | 0.12 | .821 | 0.38 | 0.26 | −0.09 |
| LSA Paragraph to Paragraph | 0.37 | 0.17 | 0.41 | 0.13 | 0.38 | 0.17 | .909 | −0.26 | −0.06 | 0.20 |

Note: Means and SDs of indices reflect values across all passages classified by the SDRT as Purple (P), Brown (Br), or Blue (Bl). Number of Sentences=mean number of sentences in the passage; Number of Words=total number of words in the passage; Words per Sentence=total number of words in the passage divided by total number of sentences in the passage; Syllables per Word=mean number of syllables per content word in the passage; Flesch Reading Ease=readability formula that measures text difficulty based on average words per sentence and average syllables per word in the passage (see Kincaid, Fishburne, Rogers, & Chissom, 1975); Celex Word Frequency=log frequency of content words in the passage (nouns, adverbs, adjectives, main verbs in the passage, see Baayen, Piepenbrock, & van Rijn, 1993); Content Word Concreteness=ratings of word concreteness based on MRC Psycholinguistics Database (Coltheart, 1981), with scores ranging from 100 (abstract) to 700 (concrete); Connective to Verb Ratio=ratio of causal particles to causal verbs in the passage, thus representing causal relationships; Connectives Incidence=incidence of connecting words such as “and, so, then, or, but”; LSA Sentence to Sentence, All Sentences, and Paragraph to Paragraph=semantic overlap based on meaning (see Landauer, McNamara, Dennis, & Kintsch, 2007).
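As an illustration of the Flesch Reading Ease index described in the note, the sketch below applies the standard Flesch formula (206.835 − 1.015 × words per sentence − 84.6 × syllables per word). The index in the table was computed per passage, so applying the formula to the level means, as done here, only approximates the tabled mean scores. The function name `flesch_reading_ease` is hypothetical.

```python
def flesch_reading_ease(words_per_sentence, syllables_per_word):
    """Standard Flesch Reading Ease formula; higher scores indicate easier text."""
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Level means for words per sentence and syllables per word, copied from Table A2.
# Using means rather than per-passage values is an approximation.
purple = flesch_reading_ease(10.79, 1.28)
blue = flesch_reading_ease(14.23, 1.42)

print(purple > blue)  # True: the Purple passages score as easier to read
```

The direction of the difference agrees with the table, in which Purple passages have the highest mean Flesch Reading Ease.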

References

- Baayen RH, Piepenbrock R, Van Rijn H. The CELEX lexical database (CD-ROM). Philadelphia, PA: University of Pennsylvania, Linguistic Data Consortium; 1993.

- Best RM, Floyd RG, McNamara DS. Differential competencies contributing to children’s comprehension of narrative and expository texts. Reading Psychology. 2008;29:137–164.

- Best RM, Rowe M, Ozuru Y, McNamara DS. Deep-level comprehension of science texts: The role of the reader and the text. Topics in Language Disorders. 2005;25(1):65–83.

- Brandao ACP, Oakhill J. “How do you know this answer?” – Children’s use of text data and general knowledge in story comprehension. Reading and Writing. 2005;18:687–713.

- Cain K, Oakhill J. Reading comprehension difficulties: Correlates, causes, and consequences. In: Cain K, Oakhill J, editors. Children’s comprehension problems in oral and written language: A cognitive perspective. New York, NY: Guilford Press; 2007.

- Catts HW, Adlof SM, Weismer SE. Language deficits in poor comprehenders: A case for the simple view of reading. Journal of Speech, Language, and Hearing Research. 2006;49:278–293. doi: 10.1044/1092-4388(2006/023).

- Coltheart M. The MRC psycholinguistic database. The Quarterly Journal of Experimental Psychology. 1981;33:497–505.

- Cutting LE, Materek A, Cole CAS, Levine TM, Mahone EM. Effects of fluency, oral language, and executive function on reading comprehension performance. Annals of Dyslexia. 2009;59(1):34–54. doi: 10.1007/s11881-009-0022-0.

- Cutting LE, Scarborough HS. Prediction of reading comprehension: Relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading. 2006;10(3):277–299.

- Delis DC, Kaplan E, Kramer JH. The Delis-Kaplan Executive Function System. San Antonio, TX: The Psychological Corporation; 2001.

- Diakidoy IN, Stylianou P, Karefillidou C, Papageorgiou P. The relationship between listening and reading comprehension of different types of text at increasing grade levels. Reading Psychology. 2005;26:55–80.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.

- Eason SH, Sabatini JP, Goldberg LF, Bruce KM, Cutting LE. Examining the relationship between word reading efficiency and oral reading rate in predicting comprehension among different types of readers. Scientific Studies of Reading. In press. doi: 10.1080/10888438.2011.652722.

- Geiger JF, Millis KK. Assessing the impact of reading goals and text structures on comprehension. Reading Psychology. 2004;25:93–110.

- Goff DA, Pratt C, Ong B. The relations between children’s reading comprehension, working memory, language skills and components of reading decoding in a normal sample. Reading and Writing. 2005;18:583–616.

- Graesser AC, Bertus EL. The construction of causal inferences while reading expository texts on science and technology. Scientific Studies of Reading. 1998;2:247–269.

- Graesser AC, McNamara DS. Computational analyses of multilevel discourse comprehension. Topics in Cognitive Science. 2011;3:371–398. doi: 10.1111/j.1756-8765.2010.01081.x.

- Graesser AC, McNamara DS, Kulikowich JM. Coh-Metrix: Providing multilevel analyses of text characteristics. Educational Researcher. 2011;40:223–234.

- Graesser AC, McNamara DS, Louwerse MM, Cai Z. Coh-Metrix: Analysis of text on cohesion and language. Behavior Research Methods, Instruments, and Computers. 2004;36:193–202. doi: 10.3758/bf03195564.

- Graesser AC, Singer M, Trabasso T. Constructing inferences during narrative text comprehension. Psychological Review. 1994;101:371–395. doi: 10.1037/0033-295x.101.3.371.

- Haberlandt KF, Graesser AC. Component processes in text comprehension and some of their interactions. Journal of Experimental Psychology: General. 1985;114:357–374.

- Hoover WA, Gough PB. The simple view of reading. Reading and Writing. 1990;2(2):127–160.

- Jenkins JR, Fuchs LS, van den Broek P, Espin C, Deno SL. Sources of individual differences in reading comprehension and reading fluency. Journal of Educational Psychology. 2003;95(4):719–729.

- Karlsen B, Gardner EF. Stanford Diagnostic Reading Test. 4th ed. San Antonio, TX: The Psychological Corporation; 1995.

- Keenan JM, Betjemann RS, Olson RK. Reading comprehension tests vary in the skills they assess: Differential dependence on decoding and oral comprehension. Scientific Studies of Reading. 2008;12(3):281–300.

- Kendeou P, van den Broek P, White MJ, Lynch JS. Predicting reading comprehension in early elementary school: The independent contributions of oral language and decoding skills. Journal of Educational Psychology. 2009;101(4):765–778.

- Kincaid JP, Fishburne RP, Rogers RL, Chissom BS. Research Branch Report. Millington, TN: Naval Technical Training, U. S. Naval Air Station, Memphis, TN; 1975. Derivation of new readability formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy enlisted personnel; pp. 8–75.

- Kintsch W. The role of knowledge in discourse comprehension: A construction-integration model. Psychological Review. 1988;95:163–182. doi: 10.1037/0033-295x.95.2.163.

- Kintsch W. Text comprehension, memory, and learning. American Psychologist. 1994;49:294–303. doi: 10.1037//0003-066x.49.4.294.

- Kintsch W. Comprehension: A paradigm for cognition. Cambridge, UK: Cambridge University Press; 1998.

- Landauer TK, McNamara DS, Dennis S, Kintsch W. Handbook of Latent Semantic Analysis. Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers; 2007.

- Lennon C, Burdick H. The Lexile Framework as an approach for reading measurement and success. MetaMetrics, Inc; 2004. Retrieved from http://www.lexile.com/research/1/

- Locascio G, Mahone EM, Eason SE, Cutting LE. Executive dysfunction among children with reading comprehension deficits. Journal of Learning Disabilities OnlineFirst. 2010;0:1–14. doi: 10.1177/0022219409355476.

- Lyon GR. Toward a definition of dyslexia. Annals of Dyslexia. 1995;45:3–27. doi: 10.1007/BF02648210.

- McNamara DS, Graesser AC, Louwerse MM. Sources of text difficulty: Across the ages and genres. In: Sabatini JP, Albo E, editors. Assessing reading in the 21st century: Aligning and applying advances in the reading and measurement sciences. Lanham, MD: R&L Education; in press.

- McNamara DS, Kintsch E, Songer NB, Kintsch W. Are good texts always better? Interactions of text coherence, background knowledge, and levels of understanding in learning from text. Cognition and Instruction. 1996;14:1–43.

- Medina AL, Pilonieta P. Once upon a time: Comprehending narrative text. In: Schumm JS, editor. Reading assessment and instruction for all learners. New York, NY: The Guilford Press; 2006. pp. 222–261.

- Nation K, Clarke P, Snowling MJ. General cognitive ability in children with reading comprehension difficulties. British Journal of Educational Psychology. 2002;72:549–560. doi: 10.1348/00070990260377604.

- Newcomer PL, Hammill DD. Test of Language Development – Intermediate. 3rd ed. San Antonio, TX: Pro-Ed; 1997.

- Ozuru Y, Rowe M, O’Reilly T, McNamara DS. Where’s the difficulty in standardized reading tests: The passage or the question? Behavior Research Methods. 2008;40:1001–1015. doi: 10.3758/BRM.40.4.1001.

- Raphael TE, Pearson PD. Increasing students’ awareness of sources of information for answering questions. American Educational Research Journal. 1985;22:217–236.