Summary

We propose a method of effective dimension reduction for functional data, emphasizing the sparse design where one observes only a few noisy and irregular measurements for some or all of the subjects. The proposed method borrows strength across the entire sample and provides a way to characterize the effective dimension reduction space, via functional cumulative slicing. Our theoretical study reveals a bias-variance trade-off associated with the regularizing truncation and decaying structures of the predictor process and the effective dimension reduction space. A simulation study and an application illustrate the superior finite-sample performance of the method.

Keywords: Cumulative slicing, Effective dimension reduction, Inverse regression, Sparse functional data

1. Introduction

In functional data analysis, one is often interested in how a scalar response Y varies with a smooth trajectory X(t), where t is an index variable defined on a closed interval τ; see Ramsay & Silverman (2005). To be specific, one seeks to model the relationship Y = M(X; ϵ), where M is a smooth functional and the error process ϵ has zero mean and finite variance σ2 and is independent of X. Although modelling M parametrically can be restrictive in many applications, modelling M nonparametrically is infeasible in practice due to slow convergence rates associated with the curse of dimensionality. Therefore a class of semiparametric index models has been proposed to approximate M(X; ϵ) with an unknown link function g; that is,

$$Y = g(\langle \beta_1, X\rangle, \ldots, \langle \beta_K, X\rangle; \epsilon), \tag{1}$$

where K is the reduced dimension of the model, β1, … , βK are linearly independent index functions, and ⟨u, v⟩ = ∫ u(t)v(t) dt is the usual L2 inner product. The functional linear model Y = β0 + ∫ β1(t)X(t) dt + ϵ is a special case and has been studied extensively (Cardot et al., 1999; Müller & Stadtmüller, 2005; Yao et al., 2005b; Cai & Hall, 2006; Hall & Horowitz, 2007; Yuan & Cai, 2010).

In this article, we tackle the index model (1) from the perspective of effective dimension reduction, in the sense that the K linear projections ⟨β1, X⟩, … , ⟨βK , X⟩ form a sufficient statistic. This is particularly useful when the process X is infinite-dimensional. Our primary goal is to discuss dimension reduction for functional data, especially when the trajectories are corrupted by noise and are sparsely observed with only a few observations for some, or even all, of the subjects. Pioneered by Li (1991) for multivariate data, effective dimension reduction methods are typically link-free, requiring neither specification nor estimation of the link function (Duan & Li, 1991), and aim to characterize the K-dimensional effective dimension reduction space SY∣X = span(β1, … , βK ) onto which X is projected. Such index functions βk are called effective dimension reduction directions, K is the structural dimension, and SY∣X is also known as the central subspace (Cook, 1998). Li (1991) characterized SY∣X via the inverse mean E(X ∣ Y) by sliced inverse regression, which has motivated much work for multivariate data. For instance, Cook & Weisberg (1991) estimated var(X ∣ Y), Li (1992) dealt with the Hessian matrix of the regression curve, Xia et al. (2002) proposed minimum average variance estimation as an adaptive approach based on kernel methods, Chiaromonte et al. (2002) modified sliced inverse regression for categorical predictors, Li & Wang (2007) worked with empirical directions, and Zhu et al. (2010) proposed cumulative slicing estimation to improve on sliced inverse regression.

The literature on effective dimension reduction for functional data is relatively sparse. Ferré & Yao (2003) proposed functional sliced inverse regression for completely observed functional data, and Li & Hsing (2010) developed sequential χ2 testing procedures to decide the structural dimension of functional sliced inverse regression. Apart from effective dimension reduction approaches, James & Silverman (2005) estimated the index and link functions jointly for an additive form , assuming that the trajectories are densely or completely observed and that the index and link functions are elements of a finite-dimensional spline space. Chen et al. (2011) estimated the index and additive link functions nonparametrically and relaxed the finite-dimensional assumption for theoretical analysis but retained the dense design.

Jiang et al. (2014) proposed an inverse regression method for sparse functional data by estimating the conditional mean E{X(t) ∣ Y = y} with a two-dimensional smoother applied to pooled observed values of X in a local neighbourhood of (t, y). The computation associated with a two-dimensional smoother is considerable and is further increased by the need to select two different bandwidths. In contrast, we aim to estimate the effective dimension reduction space by drawing inspiration from cumulative slicing for multivariate data (Zhu et al., 2010). When adapted to the functional setting, cumulative slicing offers a novel and computationally simple way of borrowing strength across subjects to handle sparsely observed trajectories. This advantage has not been exploited elsewhere. As we will demonstrate later, although extending cumulative slicing to completely observed functional data is straightforward, our method adopts a different strategy for the sparse design via a one-dimensional smoother, with potentially more effective usage of the data.

2. Methodology

2·1. Dimension reduction for functional data

Let τ be a compact interval, and let X be a random variable defined on the real separable Hilbert space H = L2(τ) endowed with inner product ⟨f, g⟩ = ∫τ f(t)g(t) dt and norm ‖f‖ = ⟨f, f⟩1/2. We assume that:

Assumption 1

X is centred and has a finite fourth moment, ∫τ E{X4(t)} dt < ∞.

Under Assumption 1, the covariance surface of X is ∑(s, t) = E{X(s)X(t)}, which generates a Hilbert–Schmidt operator ∑ on H that maps f to (∑f)(t) = ∫τ ∑(s, t) f (s) ds. This operator can be written succinctly as ∑ = E(X ⊗ X), where the tensor product u ⊗ v denotes the rank-one operator on H that maps w to (u ⊗ v)w = ⟨u, w⟩v. By Mercer’s theorem, ∑ admits a spectral decomposition $\Sigma = \sum_{j=1}^{\infty} \alpha_j \phi_j \otimes \phi_j$, where the eigenfunctions {ϕj}j=1,2,… form a complete orthonormal system in H and the eigenvalues {αj}j=1,2,… are strictly decreasing and positive such that $\sum_{j=1}^{\infty} \alpha_j < \infty$. Finally, recall that the effective dimension reduction directions β1, … , βK in model (1) are linearly independent functions in H, and the response is assumed to be conditionally independent of X given the K projections ⟨β1, X⟩, … , ⟨βK , X⟩.
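As a numerical illustration of this spectral decomposition, the following minimal sketch discretizes the covariance operator on a uniform grid and extracts its eigenvalues and L2-normalized eigenfunctions. It assumes completely observed, centred curves on a common grid and a simple Riemann-sum quadrature; the function name `covariance_eigen` and its arguments are hypothetical and serve only as illustration.

```python
import numpy as np

def covariance_eigen(X, grid):
    """Eigen-decompose the sample covariance of curves on a uniform grid.

    X    : (n, p) array of centred curves evaluated at the p points of `grid`
    grid : (p,) uniform grid (assumption: equal spacing)
    Returns eigenvalues in descending order and eigenfunctions as columns,
    scaled so that the Riemann sum of phi_j(t)^2 dt equals one.
    """
    n, p = X.shape
    dt = grid[1] - grid[0]                    # quadrature weight
    sigma = X.T @ X / n                       # Sigma(s, t) = E{X(s) X(t)} on the grid
    vals, vecs = np.linalg.eigh(sigma * dt)   # discretized integral operator
    order = np.argsort(vals)[::-1]
    alphas = vals[order]
    phis = vecs[:, order] / np.sqrt(dt)       # rescale to unit L2 norm
    return alphas, phis
```

With this scaling, `(phis * alphas) @ phis.T` reproduces the discretized covariance surface, mirroring the expansion of ∑ in terms of αj and ϕj.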

Zhu et al. (2010) observed that for a fixed ỹ, using the two slices (−∞, ỹ] and (ỹ, +∞) would maximize the use of data and minimize the variability in each slice. The kernel of the sliced inverse regression operator var{E(X ∣ Y)} is estimated by the two-slice version Λ0(s, t; ỹ) ∝ m(s, ỹ)m(t, ỹ), where m(t, ỹ) = E{X(t)1(Y ≤ ỹ)} is an unconditional expectation, in contrast to the conditional expectation E{X(t) ∣ Y} of functional sliced inverse regression. Since Λ0 with a fixed ỹ spans at most one direction of SY∣X, it is necessary to combine all possible estimates of m(t, ỹ) by letting ỹ run across the support of Ỹ, an independent copy of Y. Therefore, the kernel of the proposed functional cumulative slicing is

$$\Lambda(s, t) = E\{m(s, \tilde{Y})\, m(t, \tilde{Y})\, w(\tilde{Y})\}, \tag{2}$$

where w(ỹ) is a known nonnegative weight function. Denote the corresponding integral operator of Λ(s, t) by Λ also. The following theorem establishes the validity of our proposal. Analogous to the multivariate case, a linearity assumption is needed.

Assumption 2

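For any function b ∈ H, there exist constants c0, … , cK ∈ ℝ such that

$$E(\langle b, X\rangle \mid \langle \beta_1, X\rangle, \ldots, \langle \beta_K, X\rangle) = c_0 + \sum_{k=1}^{K} c_k \langle \beta_k, X\rangle.$$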

This assumption is satisfied when X has an elliptically contoured distribution, which is more general than, but has a close connection to, a Gaussian process (Cambanis et al., 1981; Li & Hsing, 2010).

Theorem 1

If Assumptions 1 and 2 hold for model (1), then the linear space spanned by {m(·, ỹ) : ỹ ∈ ℝ}, where m(t, ỹ) = E{X(t)1(Y ≤ ỹ)}, is contained in the linear space spanned by {∑β1, … , ∑βK}, i.e., span{m(·, ỹ) : ỹ ∈ ℝ} ⊆ span(∑β1, … , ∑βK).

An important observation from Theorem 1 is that for any b ∈ H orthogonal to the space spanned by {∑β1, … , ∑βK} and for any x ∈ H, we have ⟨b, Λx⟩ = 0, implying that range(Λ) ⊆ span(∑β1, … , ∑βK). If Λ has K nonzero eigenvalues, the space spanned by its eigenfunctions is precisely span(∑β1, … , ∑βK). Recall that our goal is to estimate the central subspace SY∣X, even though the effective dimension reduction directions themselves are not identifiable. For specificity, we regard the eigenfunctions of ∑−1Λ associated with the K largest nonzero eigenvalues as the index functions β1, … , βK, unless stated otherwise.

As the covariance operator ∑ is Hilbert–Schmidt, it is not invertible when defined from H to H. Similarly to Ferré & Yao (2005), let R∑ denote the range of ∑, and let . Then ∑ is a one-to-one mapping from onto R∑, with inverse . This is reminiscent of finding a generalized inverse of a matrix. Let ξj = ⟨X, ϕj⟩ denote the jth principal component, or generalized Fourier coefficient, of X, and assume that:

Assumption 3

.

Proposition 1

Under Assumptions 1–3, the eigenspace associated with the K nonnull eigenvalues of ∑−1 Λ is well-defined in H.

This is a direct analogue of Theorem 4.8 in He et al. (2003) and Theorem 2.1 in Ferré & Yao (2005).

2·2. Functional cumulative slicing for sparse functional data

For the data {(Xi, Yi) : i = 1, … , n}, independent and identically distributed as (X, Y), the predictor trajectories Xi are observed intermittently, contaminated with noise, and collected in the form of repeated measurements {(Tij, Uij) : i = 1, … , n; j = 1, … , Ni}, where Uij = Xi (Tij) + εij with measurement error εij that is independent and identically distributed as ε with zero mean and constant variance , and independent of all other random variables. When only a few observations are available for some or even all subjects, individual smoothing to recover Xi is infeasible and one must pool data across subjects for consistent estimation.

To estimate the functional cumulative slicing kernel Λ in (2), the key quantity is the unconditional mean m(t, ỹ) = E{X(t)1(Y ≤ ỹ)}. For sparsely and irregularly observed Xi, cross-sectional estimation as used in multivariate cumulative slicing is inapplicable. To maximize the use of available data, we propose to pool the repeated measurements across subjects via a scatterplot smoother, which works in conjunction with the strategy of cumulative slicing. We use a local linear estimator (Fan & Gijbels, 1996), solving

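$$\hat{m}(t, \tilde{y}) = \hat{a}_0, \qquad (\hat{a}_0, \hat{a}_1) = \arg\min_{(a_0, a_1)} \sum_{i=1}^{n} \sum_{j=1}^{N_i} \{U_{ij} 1(Y_i \le \tilde{y}) - a_0 - a_1 (T_{ij} - t)\}^2 K_1\{(T_{ij} - t)/h_1\}, \tag{3}$$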

where K1 is a nonnegative and symmetric univariate kernel density and h1 = h1(n) is the bandwidth to control the amount of smoothing. We ignore the dependence among data from the same individual (Lin & Carroll, 2000) and use leave-one-curve-out crossvalidation to select h1 (Rice & Silverman, 1991). Then an estimator of the kernel function Λ(s, t) is its sample moment

$$\hat{\Lambda}(s, t) = \frac{1}{n} \sum_{i=1}^{n} \hat{m}(s, Y_i)\, \hat{m}(t, Y_i)\, w(Y_i). \tag{4}$$
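The two estimation steps, the pooled local linear smoother of (3) and the sample-moment kernel estimate of (4), can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the Epanechnikov kernel, the weight w ≡ 1, and the names `local_linear_m` and `fcs_kernel` are choices made here for concreteness, and no bandwidth selection is performed.

```python
import numpy as np

def epanechnikov(u):
    # Nonnegative, symmetric, compactly supported kernel (an assumed choice for K1)
    return 0.75 * np.clip(1.0 - u**2, 0.0, None)

def local_linear_m(t_obs, u_obs, y_obs, t_grid, y0, h1):
    """Local linear estimate of m(t, y0) = E{X(t) 1(Y <= y0)} from pooled data.

    t_obs, u_obs : pooled observation times T_ij and noisy measurements U_ij
    y_obs        : response Y_i repeated for every measurement of subject i
    Each window (t - h1, t + h1) must contain at least two distinct times.
    """
    z = u_obs * (y_obs <= y0)                  # sliced responses U_ij 1(Y_i <= y0)
    m_hat = np.empty(len(t_grid))
    for g, t in enumerate(t_grid):
        d = t_obs - t
        w = epanechnikov(d / h1)
        s0, s1, s2 = w.sum(), (w * d).sum(), (w * d**2).sum()
        r0, r1 = (w * z).sum(), (w * d * z).sum()
        # Intercept of the weighted least-squares line through (d, z)
        m_hat[g] = (s2 * r0 - s1 * r1) / (s0 * s2 - s1**2)
    return m_hat

def fcs_kernel(t_obs, u_obs, subject, y, t_grid, h1):
    """Sample-moment estimate of Lambda(s, t) on t_grid with weight w = 1."""
    y_obs = y[subject]                         # broadcast Y_i to each measurement
    M = np.array([local_linear_m(t_obs, u_obs, y_obs, t_grid, yi, h1) for yi in y])
    return M.T @ M / len(y)
```

Here `subject` holds, for each pooled measurement, the integer index i of the subject it belongs to. Only a one-dimensional smoother in t is evaluated for each slice, which is the source of the computational saving discussed next.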

The distinction between our method and that of Jiang et al. (2014) lies in the inverse function m(t, y) which forms the effective dimension reduction space. It is notable that (3) is a univariate smoother that includes the effective data satisfying {Tij ∈ (t − h1, t + h1), Yi ≤ y}, roughly at an order of $(nh_1)^{1/2}$, for estimating m(t, y) = E{X(t)1(Y ≤ y)} for a sparse design with E(Nn) < ∞, where Nn denotes the number of repeated observations per subject. By contrast, equation (2·4) in Jiang et al. (2014) uses the data satisfying {Tij ∈ (t − ht, t + ht), Yi ∈ (y − hy, y + hy)} for estimating m(t, y) = E{X(t) ∣ Y = y}, roughly at an order of $(nh_th_y)^{1/2}$. This is reflected in the faster convergence of the estimated operator Λ̂ compared with its counterpart in Jiang et al. (2014), indicating potentially more effective usage of the data based on univariate smoothing. The computation associated with a two-dimensional smoother is considerable and is further exacerbated by the need to select different bandwidths ht and hy.

For the covariance operator ∑, following Yao et al. (2005a), denote the observed raw covariances by Gi(Tij, Til) = UijUil. Since E{Gi(Tij, Til) ∣ Tij, Til} = cov{X(Tij), X(Til)} + σ2δjl, where δjl is 1 if j = l and 0 otherwise, the diagonal of the raw covariances should be removed. Solving

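$$\min_{(b_0, b_1, b_2)} \sum_{i=1}^{n} \sum_{1 \le j \ne l \le N_i} \{G_i(T_{ij}, T_{il}) - b_0 - b_1 (T_{ij} - s) - b_2 (T_{il} - t)\}^2 K_2\{(T_{ij} - s)/h_2, (T_{il} - t)/h_2\} \tag{5}$$

yields $\hat{\Sigma}(s, t) = \hat{b}_0$, where K2 is a nonnegative bivariate kernel density and h2 = h2(n) is the bandwidth chosen by leave-one-curve-out crossvalidation; see Yao et al. (2005a) for details on the implementation. Since the inverse operator ∑−1 is unbounded, we regularize by projection onto a truncated subspace. To be precise, let sn be a possibly divergent sequence and let $\pi_{s_n}$ and $\hat{\pi}_{s_n}$ denote the orthogonal projectors onto the eigensubspaces associated with the sn largest eigenvalues of ∑ and $\hat{\Sigma}$, respectively. Then ∑sn = πsn∑πsn and $\hat{\Sigma}_{s_n} = \hat{\pi}_{s_n} \hat{\Sigma} \hat{\pi}_{s_n}$ are two sequences of finite-rank operators converging to ∑ and $\hat{\Sigma}$ as n → ∞, with bounded inverses $\Sigma_{s_n}^{-1} = \sum_{j=1}^{s_n} \alpha_j^{-1} \phi_j \otimes \phi_j$ and $\hat{\Sigma}_{s_n}^{-1} = \sum_{j=1}^{s_n} \hat{\alpha}_j^{-1} \hat{\phi}_j \otimes \hat{\phi}_j$, respectively. Finally, we obtain the eigenfunctions associated with the K largest nonzero eigenvalues of $\hat{\Sigma}_{s_n}^{-1} \hat{\Lambda}$ as the estimates of the effective dimension reduction directions β1, … , βK.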
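The truncation step can be made concrete with a short sketch that takes discretized estimates of ∑(s, t) and Λ(s, t) on a uniform grid and returns the leading eigenfunctions of the regularized operator. It is an illustration under assumptions, not the authors' code: the grid is taken to be uniform, the sn retained eigenvalues are assumed strictly positive, and the function name `edr_directions` is hypothetical. The eigenproblem is symmetrized so that a standard symmetric solver can be used.

```python
import numpy as np

def edr_directions(sigma, lam, grid, s_n, K):
    """Leading eigenfunctions of Sigma_{s_n}^{-1} Lambda on a uniform grid.

    sigma, lam : (p, p) symmetric arrays with estimates of Sigma(s, t), Lambda(s, t)
    s_n        : number of leading eigen-components of Sigma kept in the inverse
    K          : number of directions returned (K <= s_n)
    """
    dt = grid[1] - grid[0]
    vals, vecs = np.linalg.eigh(sigma * dt)
    top = np.argsort(vals)[::-1][:s_n]
    alphas = vals[top]                        # must be positive for the inverse
    phis = vecs[:, top] / np.sqrt(dt)         # L2-normalized eigenfunctions
    # Coordinates of Lambda in the truncated basis: <phi_j, Lambda phi_l>
    lam_coef = phis.T @ lam @ phis * dt**2
    # D^{-1} L c = rho c is solved through the symmetric D^{-1/2} L D^{-1/2}
    d = 1.0 / np.sqrt(alphas)
    sym = d[:, None] * lam_coef * d[None, :]
    evals, evecs = np.linalg.eigh(sym)
    lead = np.argsort(evals)[::-1][:K]
    coef = d[:, None] * evecs[:, lead]        # back-transform to c = D^{-1/2} v
    betas = phis @ coef
    betas /= np.sqrt((betas**2).sum(axis=0) * dt)   # unit L2 norm
    return betas
```

Because the range of the truncated inverse lies in the span of the first sn eigenfunctions, the estimated directions are always linear combinations of those eigenfunctions, which is where the approximation bias for larger-index components of βk arises.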

The situation for completely observed Xi is similar to the multivariate case and considerably simpler. The quantities m(t, ỹ) and ∑(s, t) are easily estimated by their respective sample moments $\hat{m}(t, \tilde{y}) = n^{-1} \sum_{i=1}^{n} X_i(t) 1(Y_i \le \tilde{y})$ and $\hat{\Sigma}(s, t) = n^{-1} \sum_{i=1}^{n} X_i(s) X_i(t)$, while the estimate of Λ remains the same as (4). For densely observed Xi, individual smoothing can be used as a pre-processing step to recover smooth trajectories, and the estimation error introduced in this step can be shown to be asymptotically negligible under certain design conditions, i.e., it is equivalent to the ideal situation of the completely observed Xi (Hall et al., 2006).
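For completely observed curves the sample-moment estimates reduce to a few matrix products. The sketch below assumes the curves are stored row-wise on a common grid and have already been centred, takes w ≡ 1, and uses the hypothetical name `fcs_kernel_complete`.

```python
import numpy as np

def fcs_kernel_complete(X, y):
    """Lambda-hat for fully observed, centred curves X (n, p) with w = 1."""
    n = len(y)
    # ind[i, k] = 1(Y_i <= Y_k): membership of subject i in the slice cut at Y_k
    ind = (y[:, None] <= y[None, :]).astype(float)
    # Row k of M is m_hat(., Y_k) = n^{-1} sum_i X_i(.) 1(Y_i <= Y_k)
    M = ind.T @ X / n
    # Average the rank-one kernels m_hat(s, Y_k) m_hat(t, Y_k) over k
    return M.T @ M / n
```

For instance, with `X = np.array([[1., 0.], [0., 1.]])` and `y = np.array([0., 1.])` the function returns `[[0.25, 0.125], [0.125, 0.125]]`.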

For small values of ỹ, the estimate m̂(t, ỹ) obtained by (3) may be unstable due to the smaller number of pooled observations in the slice. A suitable weight function w may be used to refine the estimator Λ̂(s, t). In our numerical studies, the naive choice of w ≡ 1 performed fairly well compared to other methods. Analogous to the multivariate case, choosing an optimal w remains an open question.

Ferré & Yao (2005) avoided inverting ∑ with the claim that for a finite-rank operator Λ, range(Λ−1∑) = range(∑−1 Λ); however, Cook et al. (2010) showed that this requires more stringent conditions that are not easily fulfilled.

The selection of Kn and sn deserves further study. For selecting the structural dimension K, the only relevant work to date is Li & Hsing (2010), where sequential χ2 tests are used to determine K for the method of Ferré & Yao (2003). How to extend such tests to sparse functional data, if feasible at all, is worthy of further exploration. It is also important to tune the truncation parameter sn that contributes to the variance-bias trade-off of the resulting estimator, although analytical guidance for this is not yet available.

3. Asymptotic properties

In this section we present asymptotic properties of the functional cumulative slicing kernel operator and the effective dimension reduction directions for sparse functional data. The numbers of measurements Ni and the observation times Tij are considered to be random, to reflect a sparse and irregular design. Specifically, we make the following assumption.

Assumption 4

The Ni are independent and identically distributed as a positive discrete random variable Nn, where E(Nn) < ∞, pr(Nn ≥ 2) > 0 and pr(Nn ≤ Mn) = 1 for some constant sequence Mn that is allowed to diverge, i.e., Mn → ∞ as n → ∞. Moreover, ({Tij, j ∈ Ji}, {Uij, j ∈ Ji}) are independent of Ni for Ji ⊆ {1, … , Ni}.

Writing Ti = (Ti1, … , TiNi)T and Ui = (Ui1, … , UiNi)T, the data quadruplets Zi = {Ti, Ui, Yi, Ni} are thus independent and identically distributed. Extremely sparse designs are also covered, with only a few measurements for each subject. Other regularity conditions are standard and listed in the Appendix, including assumptions on the smoothness of the mean and covariance functions of X, the distributions of the observation times, and the bandwidths and kernel functions used in the smoothing steps. Write for .

Theorem 2

Under Assumptions 1, 4 and A1–A4 in the Appendix, we have

The key result here is the L2 convergence of the estimated operator , in which we exploit the projections of nonparametric U-statistics together with a decomposition of to overcome the difficulty caused by the dependence among irregularly spaced measurements. The estimator is obtained by averaging the smoothers over Yi, which is crucial in order to achieve the univariate convergence rate for this bivariate estimator. The convergence of the covariance operator ∑ is presented for completeness, given in Theorem 2 of Yao & Müller (2010).

We are now ready to characterize the estimation of the central subspace SY∣X = span(β1, … , βK). Unlike the multivariate or finite-dimensional case, where the convergence of follows immediately from the convergence of and given a bounded ∑−1, we have to approximate ∑−1 with a sequence of truncated estimates , which introduces additional variability and bias inherent in a functional inverse problem. Since we specifically regarded the index functions {β1, … , βK} as the eigenfunctions associated with the K largest eigenvalues of ∑−1Λ, their estimates are thus equivalent to . For some constant C > 0, we require the eigenvalues of ∑ to satisfy the following condition:

Assumption 5

αj > αj+1 > 0, , and αj − αj+1 ≥ C−1 j−a−1 for j ≥ 1.

This condition on the decaying speed of the eigenvalues αj prevents the spacings between consecutive eigenvalues from being too small, and also implies that αj ≥ Cj−a with a > 1 given the boundedness of ∑. Expressing the index functions as $\beta_k = \sum_{j=1}^{\infty} b_{kj} \phi_j$ (k = 1, … , K), we impose a decaying structure on the generalized Fourier coefficients bkj = ⟨βk, ϕj⟩:

Assumption 6

∣bkj∣ ≤ Cj−b for j ≥ 1 and k = 1, … , K, where b > 1/2.

In order to accurately estimate the eigenfunctions ϕj from , one requires , i.e., that the distance to αj from the nearest eigenvalue does not fall below (Hall & Hosseini-Nasab, 2006); this implicitly places an upper bound on the truncation parameter sn. Given Assumption 5 and Theorem 2, we provide a sufficient condition on sn. Here we write c1n ≍ c2n when c1n = O(c2n) and c2n = O(c1n).

Assumption 7

As n → ∞, ; moreover, if h2 ≍ n−1/6, sn = o{n1/(3a+3)}.

Theorem 3

Under Assumptions 1–7 and A1–A4 in the Appendix, for all k = 1, … , K,

| (6) |

This result associates the convergence of with the truncation parameter sn and the decay rates of αj and bkj, indicating a bias-variance trade-off with respect to sn. One can view sn as a tuning parameter that is allowed to diverge slowly and which controls the resolution of the covariance estimation. Specifically, the first two terms on the right-hand side of (6) are attributed to the variability of estimating with , and the last term corresponds to the approximation bias of . The first term of the variance is due to and becomes increasingly unstable with a larger truncation. The second part of the variance is due to , and the approximation bias is determined by the smoothness of βk; for instance, a smoother βk with a larger b leads to a smaller bias.

4. Simulations

In this section we illustrate the performance of the proposed functional cumulative slicing method in terms of estimation and prediction. Although our proposal is link-free for estimating index functions βk, a general index model (1) may lead to model predictions with high variability, especially given the relatively small sample sizes frequently encountered in functional data analysis. Thus we follow Chen et al. (2011) in assuming an additive structure for the link function g in (1), i.e., . In each Monte Carlo run, a sample of n = 200 functional trajectories is generated from the process , where ϕj(t) = sin(πtj/5)/ √5 for j even and ϕj(t)=cos(πtj/5)/ √5 for j odd, the functional principal component scores ξij are independent and identically distributed as N(0, j−1·5), and . For the setting of sparsely observed functional data, the number of observations per subject, Ni, is chosen uniformly from {5, … , 10}, the observational times Tij are independent and identically distributed as Un[0, 10], and the measurement error εij is independent and identically distributed as N(0, 0·1). The effective dimension reduction directions are generated by , where bj = 1 for j = 1, 2, 3 and bj = 4(j − 2)−3 for j = 4, … , 50, and β2(t)=0·31/2(t/5 − 1), which cannot be represented with finite Fourier terms. The following single- and multiple-index models are considered:

where the regression error ϵ is independent and identically distributed as N(0, 1) for all models.
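The sparse predictor design described above can be generated along the following lines. This is a sketch under explicit assumptions: the trajectories are taken to be zero-mean finite expansions in the stated basis with J = 50 terms (the truncation level and the mean function are assumptions made here, since they are not recoverable from the text above), and responses are not generated because the model equations are not reproduced. The name `simulate_sparse` is hypothetical.

```python
import numpy as np

def simulate_sparse(n=200, J=50, seed=0):
    """Generate sparse, noisy measurements (T_ij, U_ij) on [0, 10]."""
    rng = np.random.default_rng(seed)
    j = np.arange(1, J + 1, dtype=float)

    def phi(t):
        # (len(t), J) matrix: sin(pi t j / 5) / sqrt(5) for j even, cos for j odd
        arg = np.pi * np.outer(t, j) / 5.0
        return np.where(j % 2 == 0, np.sin(arg), np.cos(arg)) / np.sqrt(5.0)

    # Principal component scores with var(xi_ij) = j^{-1.5}
    xi = rng.normal(size=(n, J)) * np.sqrt(j ** -1.5)
    subject, times, values = [], [], []
    for i in range(n):
        n_i = rng.integers(5, 11)                  # uniform on {5, ..., 10}
        t_i = rng.uniform(0.0, 10.0, size=n_i)     # observation times
        noise = rng.normal(scale=np.sqrt(0.1), size=n_i)   # error variance 0.1
        subject.append(np.full(n_i, i))
        times.append(t_i)
        values.append(phi(t_i) @ xi[i] + noise)
    return (np.concatenate(subject), np.concatenate(times),
            np.concatenate(values), xi)
```

The returned arrays are in pooled form, one entry per measurement, which is the layout a scatterplot smoother across subjects would consume.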

We compare our method with that of Jiang et al. (2014) for sparse functional data in terms of estimation and prediction. Denote the true structural dimension by K0. Due to the nonidentifiability of the βk, we examine the projection operator of the effective dimension space, i.e., and . To assess the estimation of the effective dimension reduction space, we calculate as the estimation error. To assess model prediction, we estimate the link functions gk nonparametrically by fitting a generalized additive model (Hastie & Tibshirani, 1990), where with being the best linear unbiased predictor of Xi (Yao et al., 2005a). We generate a validation sample of size 500 in each Monte Carlo run and calculate the average of the relative prediction errors, 500−1 , over different values of (K, sn), where σ2 = 1 and with , the being the underlying trajectories in the testing sample. We report in Table 1 the average estimation and prediction errors, minimized over (K, sn), along with their standard errors over 100 Monte Carlo repetitions. For estimation and prediction, both methods selected (K, sn) = (1, 3) for the single-index models I and II, and selected (K, sn) = (2, 2) for the multiple-index models III and IV. The two approaches perform comparably in this sparse setting, which could be due to the inverse covariance estimation that dominates the overall performance. Our method takes one-third of the computation time of the method of Jiang et al. (2014) for this sparse design.
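Because the directions are identifiable only up to their span, a comparison of subspaces has to go through projection operators. The sketch below computes a distance between the projectors onto two spans represented on a common uniform grid. The use of the Hilbert–Schmidt norm is an assumption made for illustration, since the exact error formula is not reproduced above, and `projection_error` is a hypothetical name.

```python
import numpy as np

def projection_error(B_hat, B):
    """Hilbert-Schmidt distance between projectors onto span(B_hat) and span(B).

    B_hat, B : (p, K) arrays whose columns are functions on a common uniform grid.
    On a uniform grid the quadrature weights cancel, so the operator norm
    equals the Frobenius norm of the difference of the matrix projectors.
    """
    def projector(M):
        Q, _ = np.linalg.qr(M)     # orthonormal basis of the column span
        return Q @ Q.T
    return np.linalg.norm(projector(B_hat) - projector(B), ord="fro")
```

Under this choice the error is zero when the two spans coincide, regardless of scaling or sign, and equals √2 for two orthogonal one-dimensional spans.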

Table 1.

Estimation error and relative prediction error, multiplied by 100, obtained from 100 Monte Carlo repetitions (with standard errors in parentheses) for sparse functional data

| Model | Estimation error: FCS | Estimation error: IRLD | Prediction error: FCS | Prediction error: IRLD |
|---|---|---|---|---|
| I | 61·1 (1·1) | 61·3 (1·1) | 17·7 (0·6) | 17·9 (0·5) |
| II | 59·3 (1·0) | 59·5 (1·0) | 19·6 (0·6) | 19·4 (0·5) |
| III | 63·7 (0·8) | 63·9 (0·9) | 18·8 (0·5) | 19·5 (0·4) |
| IV | 63·8 (0·8) | 63·9 (0·9) | 45·2 (1·1) | 45·4 (1·1) |

FCS, functional cumulative slicing; IRLD, the method of Jiang et al. (2014), where (K, sn) is selected by minimizing the estimation and prediction errors.

We also present simulation results for dense functional data, where Ni = 50 and the Tij are sampled independently and identically from Un[0, 10]. With (K, sn) selected so as to minimize the estimation and prediction errors, we compare our proposal with the method of Jiang et al. (2014), functional sliced inverse regression (Ferré & Yao, 2003) using five or ten slices, and the functional index model of Chen et al. (2011). Table 2 indicates that our method slightly outperforms the method of Jiang et al. (2014), followed by the method of Chen et al. (2011), while functional sliced inverse regression (Ferré & Yao, 2003) is seen to be suboptimal. Our method takes only one-sixth of the time required by Jiang et al. (2014) for this setting.

Table 2.

Estimation error and relative prediction error, multiplied by 100, obtained from 100 Monte Carlo repetitions (with standard errors in parentheses) for dense functional data

| Metric | Model | FCS | IRLD | FSIR5 | FSIR10 | FIND |
|---|---|---|---|---|---|---|
| Estimation error | I | 39·2 (1·6) | 45·5 (1·5) | 59·4 (2·1) | 61·7 (2·2) | 47·1 (1·6) |
| Estimation error | II | 35·5 (1·4) | 38·1 (1·3) | 56·1 (1·8) | 57·8 (1·9) | 44·5 (1·5) |
| Estimation error | III | 59·6 (0·8) | 63·1 (0·8) | 72·6 (1·1) | 74·1 (1·3) | 63·6 (0·9) |
| Estimation error | IV | 57·2 (0·6) | 59·0 (0·6) | 69·3 (1·0) | 68·9 (0·9) | 61·0 (0·8) |
| Prediction error | I | 11·1 (0·6) | 12·7 (0·5) | 17·1 (0·7) | 16·7 (0·6) | 16·1 (1·1) |
| Prediction error | II | 9·8 (0·5) | 10·5 (0·4) | 15·5 (0·7) | 16·9 (1·0) | 14·9 (0·8) |
| Prediction error | III | 13·5 (0·5) | 15·2 (0·5) | 15·8 (0·6) | 16·6 (0·5) | 14·7 (0·6) |
| Prediction error | IV | 19·9 (0·7) | 21·9 (0·7) | 31·1 (1·4) | 32·2 (1·4) | 24·2 (1·2) |

FCS, functional cumulative slicing; IRLD, inverse regression for longitudinal data (Jiang et al., 2014); FSIR5, functional sliced inverse regression (Ferré & Yao, 2003) with five slices; FSIR10, functional sliced inverse regression (Ferré & Yao, 2003) with ten slices; FIND, functional index model (Chen et al., 2011).

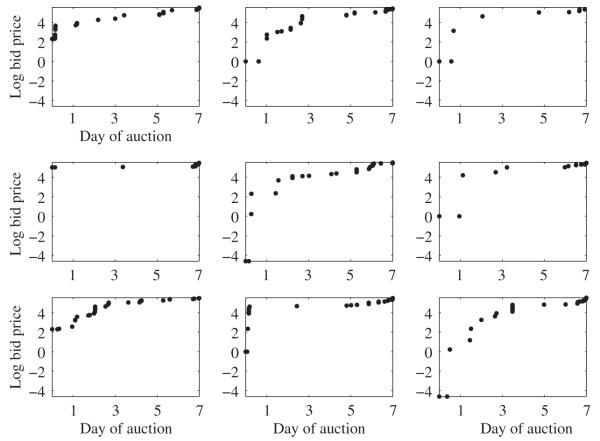

5. Data application

In this application, we study the relationship between the winning bid price of 156 Palm M515 PDA devices auctioned on eBay between March and May of 2003 and the bidding history over the seven-day period of each auction. Each observation from a bidding history represents a live bid, the actual price a winning bidder would pay for the device, known as the willingness-to-pay price. Further details on the bidding mechanism can be found in Liu & Müller (2009). We adopt the view that the bidding histories are independent and identically distributed realizations of a smooth underlying price process. Due to the nature of online auctions, the jth bid of the ith auction usually arrives irregularly at time Tij, and the number of bids Ni can vary widely, from nine to 52 for this dataset. As is usual in modelling prices, we take the log-transform of the bid prices. Figure 1 shows a sample of nine randomly selected bid histories over the seven-day period of the respective auction. Typically, the bid histories are sparse until the final hours of each auction, when bid sniping occurs. At this point, snipers place their bids at the last possible moment to try to deny competing bidders the chance of placing a higher bid.

Fig. 1.

Observed bid prices over the seven-day auction period of nine randomly selected auctions, after log-transform.

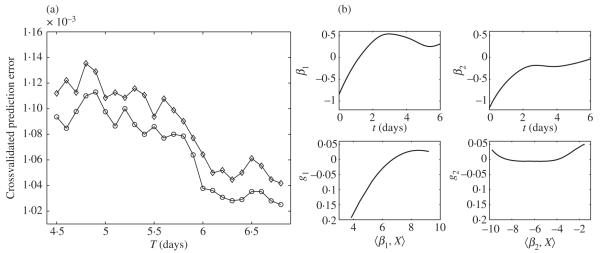

Since our main interest is in the predictive power of price histories up to time T for the winning bid prices, we consider the regression of the winning price on the history trajectory X(t) (t ∈ [0, T]), and set T = 4·5, 4·6, 4·7, … , 6·8 days. For each analysis on the domain [0, T], we select the optimal structural dimension K and the truncation parameter sn by minimizing the average five-fold crossvalidated prediction error over 20 random partitions. Figure 2(a) shows the minimized average crossvalidated prediction errors, compared with those obtained using the method of Jiang et al. (2014). With the increasing prediction power as the bidding histories encompass more data, the proposed method appears to yield more favourable prediction across different time domains.
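The tuning procedure described above, five-fold crossvalidation averaged over 20 random partitions, can be organized as in the following sketch. The callable `fit_predict` is a hypothetical placeholder for whatever routine fits the model on the training subjects for a given pair (K, sn) and returns predictions for the test subjects; squared-error loss is an assumption made here.

```python
import numpy as np

def select_by_repeated_cv(fit_predict, y, params, n_folds=5, n_repeats=20, seed=0):
    """Pick the parameter setting with the smallest average CV prediction error.

    fit_predict : callable (train_idx, test_idx, p) -> predictions for test_idx
    y           : (n,) array of responses
    params      : list of hashable settings, e.g. tuples (K, s_n)
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    scores = {p: 0.0 for p in params}
    for _ in range(n_repeats):
        folds = np.array_split(rng.permutation(n), n_folds)   # one random partition
        for test_idx in folds:
            train_idx = np.setdiff1d(np.arange(n), test_idx)
            for p in params:
                pred = fit_predict(train_idx, test_idx, p)
                scores[p] += np.mean((y[test_idx] - pred) ** 2)
    scores = {p: s / (n_repeats * n_folds) for p, s in scores.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

Repeating the partition reduces the dependence of the selected (K, sn) on any single random split, at the cost of refitting the model `n_folds * n_repeats` times per candidate setting.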

Fig. 2.

(a) Average five-fold crossvalidated prediction errors for functional cumulative slicing (circles) and the method of Jiang et al. (2014) (diamonds) over 20 random partitions across different time domains [0, T], for sparse eBay auction data. (b) Estimated model components for eBay auction data using functional cumulative slicing with K = 2 and sn = 2; the upper panels show the estimated index functions, i.e., the effective dimension reduction directions, and the lower panels show the additive link functions.

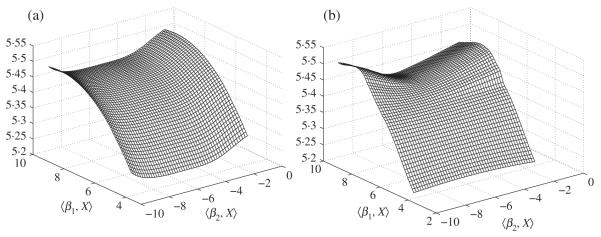

As an illustration, we present the analysis for T = 6. The estimated model components using the proposed method are shown in Fig. 2(b), with the parameters chosen as K = 2 and sn = 2. The first index function assigns contrasting weights to bids made before and after the first day, indicating that some bidders tend to underbid at the beginning only to quickly overbid relative to the mean. The second index represents a cautious type of bidding behaviour, entering at a lower price and slowly increasing towards the average level. These features contribute most towards the prediction of the winning bid prices. Also seen are the nonlinear patterns in the estimated additive link functions. Using these estimated model components, we display in Fig. 3(a) the additive surface . We also fit an unstructured index model g(⟨β1, X⟩, ⟨β2, X⟩), where g is nonparametrically estimated using a bivariate local linear smoother; this is shown in Fig. 3(b), and is seen to agree reasonably well with the additive regression surface.

Fig. 3.

Fitted regression surfaces for the eBay data: (a) additive; (b) unstructured.

Acknowledgement

We thank two reviewers, an associate editor, and the editor for their helpful comments. This research was partially supported by the U.S. National Institutes of Health and National Science Foundation, and the Natural Sciences and Engineering Research Council of Canada.

Appendix

Regularity conditions and auxiliary lemmas

Without loss of generality, we assume that the known weight function is w(·) = 1. Write and for some δ > 0; denote a single observation time by T and a pair of observation times by (T1, T2)T, with densities f(t) and f2(s, t), respectively. Recall the unconditional mean function m(t, y) = E{X(t)1(Y ≤ y)}. The regularity conditions for the underlying moment functions and design densities are as follows, where ℓ1 and ℓ2 are nonnegative integers. We assume that:

Assumption A1

∂2∑/(∂sℓ1∂tℓ2) is continuous on for ℓ1 + ℓ2 = 2, and ∂2m/∂t2 is bounded and continuous with respect to for all .

Assumption A2

is continuous on with f1(t) > 0, and ∂f2/(∂sℓ1∂tℓ2) f2 is continuous on for ℓ1 + ℓ2 = 1 with f2(s, t) > 0.

Assumption A1 can be guaranteed by a twice-differentiable process, and Assumption A2 is standard and implies the boundedness and Lipschitz continuity of f. Recall the bandwidths h1 and h2 used in the smoothing steps for in (3) and in (5), respectively; we assume that:

Assumption A3

h1 → 0, h2 → 0, , and .

We say that a bivariate kernel function K2 is of order (ν, ℓ), where ν is a multi-index ν = (ν1, ν2)T, if

where ∣ν∣ = ν1 + ν2 < ℓ. The univariate kernel K is said to be of order (ν, ℓ) for a univariate ν = ν1 if this definition holds with ℓ2 = 0 on the right-hand side, integrating only over the argument u on the left-hand side. The following standard conditions on the kernel densities are required.

Assumption A4

The kernel functions K1 and K2 are nonnegative with compact supports, bounded, and of order (0, 2) and {(0, 0)T, 2}, respectively.

Lemma A1 is a mean-squared version of Theorem 1 in Martins-Filho & Yao (2006), which asserts the asymptotic equivalence of a nonparametric V-statistic to the projection of the corresponding U-statistic. Lemma A2 is a restatement of Lemma 1(b) of Martins-Filho & Yao (2007) adapted to sparse functional data.

Lemma A1

Let be a sequence of independent and identically distributed random variables, and let un and vn be U- and V-statistics with kernel function ψn(Z1, … , Zk). In addition, let , where ψ1n(Zi)= E{ψn(Zi1, … , Zik) ∣ Zi} for i ∈ {i1, … , ik} and ϕn = E{ψn(Z1, … , Zk)}. If , then .

Lemma A2

Given Assumptions 1–4 and A1–A4, let

Then for k = 0, 1, 2.

Proofs of the theorems

Proof of Theorem 1

This theorem is an analogue of Theorem 1 in Zhu et al. (2010); thus its proof is omitted.

Proof of Theorem 2

For brevity, we write Mn and Nn as M and N, respectively. Let

where . The local linear estimator of with kernel K1 is

Let . Then

Denote a point between Tij and t by ; by Taylor expansion, . Finally, let . Then

where

This allows us to write , where

which implies, by the Cauchy–Schwarz inequality, that . In the rest of the proofs, we drop the subscript H and the dummy variable in integrals for brevity. Recall that we defined Zi as the underlying data quadruplet (Ti, Ui, Yi, Ni). Further, let ∑(p) hi1,…,ip denote the sum of hi1,…,ip over the permutations of i1, … , ip. Finally, by Assumptions A1, A2 and A4, write for the lower and upper bounds of the density function of T, ∣K1(x)∣ ≤ BK < ∞ for the bound on the kernel function K1, and ∣∂2m/∂t2∣ ≤ B2m < ∞ for the bound on the second partial derivative of with respect to t.

(a) We further decompose I1n(s, t) into I1n(s, t) = I11n(s, t) + I12n(s, t) + I13n(s, t), where

which we analyse individually below.

We first show that . We write I11n(s, t) as

where vn(s, t) is a V-statistic with symmetric kernel ψn(Zi, Zk; s, t) and

Since E{eij(Yk) ∣ Tij, Yk} = 0, it is easy to show that E{hik(s, t)} = E{hik(t, s)} = E{hki(s, t)} = E{hki(t, s)} = 0. Thus θn(s, t) = E{ψn(Zi, Zk; s, t)} = 0. Additionally,

If , Lemma A1 gives , where is the projection of the corresponding U-statistic. Since the projection of a U-statistic is a sum of independent and identically distributed random variables ψ1n(Zi; s, t), E‖I11n‖2 ≤ 2n−1 ∫∫ var[E{hik(s, t) ∣ Zi}] + 2n−1 ∫∫ var[E{hik(t, s) ∣ Zi}] + o(n−1), where

where the first line follows from the Cauchy–Schwarz inequality, the second line is obtained by letting and observing that Tij is independent of Xi, Yi and εi, and the third line follows from a variant of the dominated convergence theorem (Prakasa Rao, 1983, p. 35) that allows us to derive rates of convergence for nonparametric regression estimators. Thus , provided that for all i and k, which we will show below. For i ≠ k,

Observe that

For j = l, applying the dominated convergence theorem to the expectation on the right-hand side gives , and hence by Assumption A3. For j ≠ l, a similar argument gives n−1 . The next two terms, and E{hik(s, t)hik(t, s)}, can be handled similarly, as well as E{hik(s, t)hki(s, t)} = o(n) and the case of i = k. Thus .

Using similar derivations, one can show that .

We next show that . Following Lemma 2 of Martins-Filho & Yao (2007),

Lemma A2 gives . Next, Rn(t, Yk) ≤ ∣Rn1(t, Yk)∣ + ∣Rn2(t, Yk)∣ + ∣Rn3(t, Yk)∣ + ∣Rn4(t, Yk)∣, where

Thus n−1 ∑k m(s, Yk) Rn1(t, Yk) = h1fT(t)I11n(s, t) leads to ‖h1 fT I11n‖2 = Op(n−1h1), and n−1 ∑k m(s, Yk)Rn2(t, Yk) = h1 fT(t)I12n(s, t) leads to . It follows similarly that the third and fourth terms are Op(n−1h1) and , respectively. Hence, . Combining the previous results gives .

(b) These terms are of higher order and are omitted for brevity.

(c) By the law of large numbers, .

Combining the above results leads to .

Proof of Theorem 3

To facilitate the theoretical derivation, for each k = 1, … , K let ηk = ∑1/2 βk and be, respectively, the normalized eigenvectors of the equations ∑−1 Λ ∑−1/2ηk = λkβk and . Then

using the fact that . Applying standard theory for self-adjoint compact operators (Bosq, 2000) gives

where C > 0 is a generic positive constant. Thus , where

It suffices to show that . The calculations for I2n are similar and yield that I2n = op(I1n).

Observe that I1n ≤ 3I11n + 3I12n + 3I13n, where and . Recall that πsn is the orthogonal projector onto the eigenspace associated with the sn largest eigenvalues of ∑. Let I denote the identity operator and the operator perpendicular to πsn, i.e., is the orthogonal projector onto the eigenspace associated with eigenvalues of ∑ that are less than αsn. Thus allows us to write . Since ∑−1Λ ∑−1/2ηk = λkβk,

similarly, .
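A finite-dimensional analogue may help to fix ideas about the projector onto the leading sn eigenfunctions and its orthogonal complement. The sketch below replaces the covariance operator by a symmetric positive semidefinite matrix; the function name `top_eigenspace_projector` and the matrix setting are assumptions for illustration only.

```python
import numpy as np

def top_eigenspace_projector(sigma, s):
    """Orthogonal projector onto the span of the eigenvectors of the
    symmetric matrix `sigma` associated with its s largest eigenvalues."""
    eigvals, eigvecs = np.linalg.eigh(sigma)  # eigenvalues in ascending order
    leading = eigvecs[:, ::-1][:, :s]         # columns for the s largest eigenvalues
    return leading @ leading.T

rng = np.random.default_rng(0)
a = rng.standard_normal((6, 6))
sigma = a @ a.T                                   # symmetric positive semidefinite
proj = top_eigenspace_projector(sigma, 2)
proj_perp = np.eye(6) - proj                      # projector onto the remaining eigenspace
```

Here `proj` and `proj_perp` are idempotent, annihilate each other and sum to the identity, so any quantity can be split into a part in the leading eigenspace and a remainder, which is the kind of splitting used in the decomposition above.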

We decompose I12n as I12n ≤ 3I121n + 3I122n + 3I123n, where and . Note that I121n ≤ 6‖Λ∑−1/2πsn‖2(I1211n + I1212n), where

Under Assumption 7, for all 1 ≤ j ≤ sn, implies that , i.e., for some C > 0. Thus

For I1212n, using the fact that (Bosq, 2000), where δ1 = α1 − α2 and δj = min2≤ℓ≤j (αℓ−1 − αℓ, αℓ − αℓ+1) for j > 1, we have that and

Using Λ∑−1/2ηk = λk∑βk, we obtain . Thus . Using decompositions similar to the one for I121n, both I122n and I123n can be shown to be . This leads to .
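The eigenvalue spacings δj used in bounding I1212n can be computed directly from their definition, δ1 = α1 − α2 and δj = min over 2 ≤ ℓ ≤ j of (αℓ−1 − αℓ, αℓ − αℓ+1). The sketch below does so for a hypothetical eigenvalue sequence αℓ = ℓ^(−2); the function name `eigen_gaps` and this decay rate are illustrative assumptions rather than part of the paper.

```python
import numpy as np

def eigen_gaps(alpha, j_max):
    """Return (delta_1, ..., delta_{j_max}) for strictly decreasing eigenvalues.

    `alpha` holds alpha_1, alpha_2, ... and must contain at least
    j_max + 1 entries, since delta_j involves alpha_{j+1}.
    """
    alpha = np.asarray(alpha, dtype=float)
    if len(alpha) < j_max + 1:
        raise ValueError("need at least j_max + 1 eigenvalues")
    spacings = -np.diff(alpha)  # spacings[l - 1] = alpha_l - alpha_{l+1}
    deltas = [spacings[0]]      # delta_1 = alpha_1 - alpha_2
    for j in range(2, j_max + 1):
        # The minimum over l = 2, ..., j of both neighbouring spacings
        # equals the minimum of the first j consecutive spacings.
        deltas.append(spacings[:j].min())
    return np.array(deltas)

alpha = np.array([1.0 / l**2 for l in range(1, 6)])  # hypothetical polynomial decay
deltas = eigen_gaps(alpha, 4)
```

For a convex decreasing sequence such as this one, δj reduces to αj − αj+1, so the spacings shrink as j grows; this is why the truncation level sn enters the bounds.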

Note that , where and, similarly, . From Theorem 2, we have . Combining the above results leads to (6).

REFERENCES

- Bosq D. Linear Processes in Function Spaces: Theory and Applications. Springer; New York: 2000.

- Cai TT, Hall P. Prediction in functional linear regression. Ann. Statist. 2006;34:2159–79.

- Cambanis S, Huang S, Simons G. On the theory of elliptically contoured distributions. J. Mult. Anal. 1981;11:368–85.

- Cardot H, Ferraty F, Sarda P. Functional linear model. Statist. Prob. Lett. 1999;45:11–22.

- Chen D, Hall P, Müller H-G. Single and multiple index functional regression models with nonparametric link. Ann. Statist. 2011;39:1720–47.

- Chiaromonte F, Cook DR, Li B. Sufficient dimension reduction in regressions with categorical predictors. Ann. Statist. 2002;30:475–97.

- Cook DR. Regression Graphics: Ideas for Studying Regressions through Graphics. Wiley; New York: 1998.

- Cook DR, Weisberg S. Comment on “Sliced inverse regression for dimension reduction”. J. Am. Statist. Assoc. 1991;86:328–32.

- Cook DR, Forzani L, Yao A-F. Necessary and sufficient conditions for consistency of a method for smoothed functional inverse regression. Statist. Sinica. 2010;20:235–8.

- Duan N, Li K-C. Slicing regression: A link-free regression method. Ann. Statist. 1991;19:505–30.

- Fan J, Gijbels I. Local Polynomial Modelling and Its Applications. Chapman & Hall; London: 1996.

- Ferré L, Yao A-F. Functional sliced inverse regression analysis. Statistics. 2003;37:475–88.

- Ferré L, Yao A-F. Smoothed functional inverse regression. Statist. Sinica. 2005;15:665–83.

- Hall P, Horowitz JL. Methodology and convergence rates for functional linear regression. Ann. Statist. 2007;35:70–91.

- Hall P, Hosseini-Nasab M. On properties of functional principal components analysis. J. R. Statist. Soc. B. 2006;68:109–26.

- Hall P, Müller H-G, Wang J-L. Properties of principal component methods for functional and longitudinal data analysis. Ann. Statist. 2006;34:1493–517.

- Hastie TJ, Tibshirani RJ. Generalized Additive Models. Chapman & Hall; London: 1990.

- He G, Müller H-G, Wang J-L. Functional canonical analysis for square integrable stochastic processes. J. Mult. Anal. 2003;85:54–77.

- James GM, Silverman BW. Functional adaptive model estimation. J. Am. Statist. Assoc. 2005;100:565–76.

- Jiang C-R, Yu W, Wang J-L. Inverse regression for longitudinal data. Ann. Statist. 2014;42:563–91.

- Li B, Wang S. On directional regression for dimension reduction. J. Am. Statist. Assoc. 2007;102:997–1008.

- Li K-C. Sliced inverse regression for dimension reduction. J. Am. Statist. Assoc. 1991;86:316–27.

- Li K-C. On principal Hessian directions for data visualization and dimension reduction: Another application of Stein’s lemma. J. Am. Statist. Assoc. 1992;87:1025–39.

- Li Y, Hsing T. Deciding the dimension of effective dimension reduction space for functional and high-dimensional data. Ann. Statist. 2010;38:3028–62.

- Lin X, Carroll RJ. Nonparametric function estimation for clustered data when the predictor is measured without/with error. J. Am. Statist. Assoc. 2000;95:520–34.

- Liu B, Müller H-G. Estimating derivatives for samples of sparsely observed functions, with application to on-line auction dynamics. J. Am. Statist. Assoc. 2009;104:704–14.

- Martins-Filho C, Yao F. A note on the use of V and U statistics in nonparametric models of regression. Ann. Inst. Statist. Math. 2006;58:389–406.

- Martins-Filho C, Yao F. Nonparametric frontier estimation via local linear regression. J. Economet. 2007;141:283–319.

- Müller H-G, Stadtmüller U. Generalized functional linear models. Ann. Statist. 2005;33:774–805.

- Prakasa Rao BLS. Nonparametric Functional Estimation. Academic Press; Orlando, Florida: 1983.

- Ramsay JO, Silverman BW. Functional Data Analysis. 2nd ed. Springer; New York: 2005.

- Rice JA, Silverman BW. Estimating the mean and covariance structure nonparametrically when the data are curves. J. R. Statist. Soc. B. 1991;53:233–43.

- Xia Y, Tong H, Li W, Zhu L-X. An adaptive estimation of dimension reduction space. J. R. Statist. Soc. B. 2002;64:363–410.

- Yao F, Müller H-G. Empirical dynamics for longitudinal data. Ann. Statist. 2010;38:3458–86.

- Yao F, Müller H-G, Wang J-L. Functional data analysis for sparse longitudinal data. J. Am. Statist. Assoc. 2005a;100:577–90.

- Yao F, Müller H-G, Wang J-L. Functional linear regression analysis for longitudinal data. Ann. Statist. 2005b;33:2873–903.

- Yuan M, Cai TT. A reproducing kernel Hilbert space approach to functional linear regression. Ann. Statist. 2010;38:3412–44.

- Zhu L-P, Zhu L-X, Feng Z-H. Dimension reduction in regressions through cumulative slicing estimation. J. Am. Statist. Assoc. 2010;105:1455–66.