Abstract

Cortical networks, in-vitro as well as in-vivo, can spontaneously generate a variety of collective dynamical events such as network spikes, UP and DOWN states, global oscillations, and avalanches. Though each of them has been variously recognized in previous works as expression of the excitability of the cortical tissue and the associated nonlinear dynamics, a unified picture of the determinant factors (dynamical and architectural) is desirable and not yet available. Progress has also been partially hindered by the use of a variety of statistical measures to define the network events of interest. We propose here a common probabilistic definition of network events that, applied to the firing activity of cultured neural networks, highlights the co-occurrence of network spikes, power-law distributed avalanches, and exponentially distributed ‘quasi-orbits’, which offer a third type of collective behavior. A rate model, including synaptic excitation and inhibition with no imposed topology, synaptic short-term depression, and finite-size noise, accounts for all these different, coexisting phenomena. We find that their emergence is largely regulated by the proximity to an oscillatory instability of the dynamics, where the non-linear excitable behavior leads to a self-amplification of activity fluctuations over a wide range of scales in space and time. In this sense, the cultured network dynamics is compatible with an excitation-inhibition balance corresponding to a slightly sub-critical regime. Finally, we propose and test a method to infer the characteristic time of the fatigue process, from the observed time course of the network’s firing rate. Unlike the model, possessing a single fatigue mechanism, the cultured network appears to show multiple time scales, signalling the possible coexistence of different fatigue mechanisms.

Author Summary

The spontaneous neural activity is the dynamic floor on which the cortex builds its response to incoming stimuli and organizes its information processing, thereby the importance of understanding its dynamical underpinnings. In-vitro preparations, as well as the intact cortex in deep sleep or anesthesia, display a variety of spontaneous collective events, including quasi-synchronous ‘network spikes’ and a complex spectrum of ‘avalanches’, which has been considered suggestive of a ‘typically critical’ state. Light has been shed on selected aspects of such events; still, a unified picture stays elusive, also due to varying statistical definitions of network events. Our work aims to take a step in this direction. We first introduce a probabilistic definition of population events that naturally adapts to different scales of analysis; it reveals, in the activity of cultured networks, as well as in a simple rate model, the co-occurrence of network spikes, ‘quasi-orbits’ and avalanches. Model’s analysis suggests that their emergence is governed by a single parameter measuring the proximity to an oscillatory instability, where the network can amplify fluctuations on a wide range of scales in space and time. We also propose a procedure to infer from neural activity the slow underlying time-scales of the dynamics.

Introduction

The spontaneous activity of excitable neuronal networks exhibits a spectrum of dynamic regimes ranging from quasi-linear, small fluctuations close to stationary activity, to dramatic events such as abrupt and transient synchronization. Understanding the underpinnings of such dynamic versatility is important, as different spontaneous modes may imply in general different state-dependent response properties to incoming stimuli and different information processing routes.

Cultured neuronal networks offer a controllable experimental setting to open a window into the network excitability and its dynamics, and have been used intensively for the purpose.

Neuronal cultures in early development phases naturally show alternating quasi-quiescent states and ‘network spikes’ (NS) of brief outbreaks of network activity [1–6].

In addition, recent observations in-vitro (and later even in-vivo) revealed a rich structure of network events (‘avalanches’) that attracted much attention because their spatial and temporal structure exhibited features (power-law distributions) reminiscent of what is observed in a ‘critical state’ of a physical system (see e.g. [7, 8], and [9, 10] and references therein). Generically, an avalanche is a cascade of neural activities clustered in time; while there persist ongoing debate on the relation between observed avalanches and whatever ‘criticality’ may mean for brain dynamics [11], understanding their dynamical origin remains on the agenda.

Quasi-synchronous NS, avalanches and small activity fluctuations are frequently coexisting elements of the network dynamics. Besides, as we will describe in the following, in certain conditions one can recognize network events which are clearly distinct from the mentioned network events, which we name here as ‘quasi-orbits’.

The abundant modeling literature on the above dynamical phenomena has been frequently focused on specific aspects of one of them [12, 13]; on the other hand, getting a unified picture is made often difficult by different assumptions on the network’s structure and constitutive elements and, importantly, by different methods used to detect the dynamic events of interest.

In the present paper we define a common probabilistic criterion to detect various coexisting dynamic events (NS, avalanches and quasi-orbits) and adopt it to analyze the spontaneous activity recorded from both cultured networks, and a computational rate model.

Most theoretical models accounting for NS are based on an interplay between network self-excitation on one side, and on the other side some fatigue mechanism provoking the extinction of the network spike [12, 13]. For such a mechanism two main options, up to details, have been considered: neural ‘spike-frequency adaptation’ [3, 14] and synaptic ‘short-term depression’ (STD) [4, 5, 15–18]. Despite their differences, both mechanisms share the basic property of generating an activity-dependent self-inhibition in response to the upsurge of activity upon the generation of a NS, the more vigorously, the stronger the NS (i.e. the higher the average firing rate). In this paper, we will mainly focus on STD, stressing the similarities of the two mechanisms, yet not denying their possibly different dynamic implications.

While STD acts as an activity-dependent self-inhibition, the self-excitability of the network depends on the balance between synaptic excitation and inhibition; investigating how such balance, experimentally modifiable through pharmacology, influences the dynamics of spontaneous NSs is interesting and relevant as a step towards the identification of the ‘excitability working point’ in the experimental preparation.

To study the factors governing the co-occurrence of different network events and their properties we adopt a rate model for the dynamics of the global network activity, that takes into accounts finite-size fluctuations and the synaptic interplay between one excitatory and one inhibitory population, with excitatory synapses being subject to STD.

On purpose we implicitly exclude any spatial topology in the model, which is meant to describe the dynamics of a randomly connected, sparse network, since we intend to expose the exquisite implications of the balance between synaptic excitation and inhibition, and the activity-dependent self-inhibition due to STD. In doing this, we purposely leave out not only the known relevance of a topological organization [9, 19, 20], but also the role of cliques of neurons which have been proposed to play a pivotal role in the the generation of NS as functional hubs [21], as well as the putative role of ‘leader neurons’.

We perform a systematic numerical and analytical study of NSs for varying excitation/inhibition balance. The distance from an oscillatory instability of the mean-field dynamics (in terms of the dominant eigenvalue of the linearized dynamics) largely appears to be the sole variable governing the statistics of the inter-NS intervals, ranging from a very sparse, irregular bursting (coefficient of variation CV ∼ 1) to a sustained, periodic one (CV ∼ 0). The intermediate, weakly synchronized regime (CV ∼ 0.5), in which the experimental cultures are often observed to operate, is found in a neighborhood of the instability that shrinks as the endogenous fluctuations in the network activity become smaller with increasing network size.

Moreover, the model robustly shows the co-presence of avalanches with NS and quasi-orbits. The avalanche sizes are distributed according to a power-law over a wide region of the excitation-inhibition plane, although the crossing of the instability line is signaled by a bump in the large-size tail of the distribution; we compare such distributions and their modulation (as well as the distributions of NS) across the instability line with the experimental results from cortical neuronal cultures; again the results appear to confirm that neuronal cultures operate in close proximity of an instability line.

Taking advantage of the fact that the sizes of both NS and quasi-orbits are found to be significantly correlated with the dynamic variable associated with STD (available synaptic resources) just before the onset of the event, we developed a simple optimization method to infer, from the recorded activity, the characteristic time-scales of the putative fatigue mechanism at work. We first tested the method on the model, and then applied it to in-vitro recordings; we could identify in several cases one or two long time-scales, ranging from few hundreds milliseconds to few seconds.

Weak or no correlations were found instead between avalanche sizes and the STD dynamics; this suggests that avalanches originate from synaptic interaction which amplifies a wide spectrum of small fluctuations, and are mostly ineffective in eliciting a strong self-inhibition.

Models

Experimental data

As originally described in [2], cortical neurons were obtained from newborn rats within 24 hours after birth, following standard procedures. Briefly, the neurons were plated directly onto a substrate-integrated multielectrode array (MEA). The cells were bathed in MEM supplemented with heat-inactivated horse serum (5%), glutamine (0.5 mM), glucose (20 mM), and gentamycin (10 μg/ml) and were maintained in an atmosphere of 37°C, 5% CO2/95% air in a tissue culture incubator as well as during the recording phases. The data analyzed here was collected during the third week after plating, thus allowing functional and structural maturation of the neurons. MEAs of 60 Ti/Au/TiN electrodes, 30 μm in diameter, and spaced 200 μm from each other (Multi Channel Systems, Reutlingen, Germany) were used. The insulation layer (silicon nitride) was pretreated with poly-D-lysine. All experiments were conducted under a slow perfusion system with perfusion rates of ∼100 μl/h. A commercial 60-channel amplifier (B-MEA-1060; Multi Channel Systems) with frequency limits of 1–5000 Hz and a gain of 1024× was used. The B-MEA-1060 was connected to MCPPlus variable gain filter amplifiers (Alpha Omega, Nazareth, Israel) for additional amplification. Data was digitized using two parallel 5200a/526 analog-to-digital boards (Microstar Laboratories, Bellevue, WA). Each channel was sampled at a frequency of 24000 Hz and prepared for analysis using the AlphaMap interface (Alpha Omega). Thresholds (8× root mean square units; typically in the range of 10–20 μV) were defined separately for each of the recording channels before the beginning of the experiment. The electrophysiological data is freely accessible for download at marom.net.technion.ac.il/neural-activity-data/.

Network rate dynamics

A set of Wilson-Cowan-like equations [22] for the spike-rate of the excitatory (ν E) and the inhibitory (ν I) neuronal populations lies at the core of our dynamic mean-field model:

| (1) |

where τ E and τ I represent two characteristic times (of the order of few to few tens of ms), and Φ is the gain function of the input currents, I E and I I, that in turn depend on ν E, ν I, and the synaptic efficacies. We chose Φ to be the transfer function of the leaky integrate-and-fire neuron under the assumptions of Gaussian, uncorrelated input of mean μ and infinitesimal variance σ 2[23]:

| (2) |

where τ V is the membrane time constant, τ refract is a refractory period, and V rest, V reset, and V thresh are respectively the rest, the post-firing reset, and the firing-threshold membrane potential of the neuron (we assume the membrane resistance R = 1).

The model incorporates the non-instantaneous nature of synaptic transmission in its simplest form, by letting the νs being low-pass filtered by a single synaptic time-scale :

| (3) |

One can regard the variables s as the instantaneous firing rates as seen by post-synaptic neurons, after synaptic filtering. The form of Eq 3 and our choice of values (see Table 1) implicitly neglects slow NMDA contributions and is restricted to AMPA and GABA synaptic currents. Thus, the input currents I E and I I in Eq 1 will be functions of the rates νs through these filtered rates; with reference to Eq 2, the model assumes the following form for the mean and the variance of the current I E (the expressions for I I are similarly defined):

| (4) |

where the n E and n I are the number of neurons in the excitatory and inhibitory population respectively; c is the probability of two neurons being synaptically connected; J EE (J EI) is the average synaptic efficacy from an excitatory (inhibitory) pre-synaptic neuron to an excitatory one, is the variance of the J-distribution; wexc and winh are dimensionless parameters that we will use in the following to independently rescale excitatory and inhibitory synapses respectively. Finally, an external current is assumed in the form of a Poisson train of spikes of rate ν ext driving the neurons in the network with average synaptic efficacy J ext. In Eq 4 r E(t) (0 < r E < 1) is the fraction of synaptic resources available at time t for the response of an excitatory synapse to a pre-synaptic spike; the evolution of r E evolves according to the following dynamics, which implements the effects of short-term depression (STD) [24, 25] into the network dynamics:

| (5) |

where 0 < u STD < 1 represents the (constant) fraction of the available synaptic resources consumed by an excitatory postsynaptic potential, and τ STD is the recovery time for the synaptic resources.

Table 1. Network parameters.

| Parameter | Value |

|---|---|

| n E / n I | 160 / 40 |

| τ V / τ refract | 20 / 2 ms |

| V rest = V reset / V thresh | −70 / −55 mV |

| c | 0.25 |

| J EE ± σ JEE | 0.809 ± 0.202 mV |

| J IE ± σ JIE | 1.23 ± 0.307 mV |

| J EI ± σ JEI | −0.340 ± 0.0850 mV |

| J II ± σ JII | −0.358 ± 0.0894 mV |

| J ext ± σ Jext | 0.416 ± 0.104 mV |

| νext | 1.25 kHz |

| / | 10 / 2 ms |

| τ r | 800 ms |

| u STD | 0.2 |

| τ E = τ I | 20 ms |

Finally, for a network of n neurons, we introduce finite-size noise by assuming that the signal the synapses integrate in Eq 3 is a random process ν n of mean ν; in a time-bin dt, we expect the number of action potentials fired to be a Poisson variable of mean n ν(t) dt; Eq 3 will thus become:

| (6) |

Putting all together, the noisy dynamic mean-field model is described by the following set of (stochastic) differential equations:

| (7) |

complemented by Eqs 2, 4 and 6. The values of all the fixed network parameters are shown in Table 1. Since we will compare the dynamics of networks of different sizes, we scale the connectivity with network size in order to keep invariant the mean field equations: we hold the number of synaptic connection per neuron constant by rescaling, with reference to Eq 4, the probability of connection c so that c n E and c n I are kept constant to the reference values that can be deduced from Table 1.

Spike-frequency adaptation (SFA) (not present in simulations unless explicitly stated) is introduced by subtracting a term to the instantaneous mean value of the I E current:

| (8) |

proportional to the instantaneous value of the variable c E, that simply integrates ν nE:

| (9) |

with a characteristic time τ SFA. This additional term aims to model an after-hyperpolarization, Ca2+-dependent K+ current [26, 27]. In this sense, c E can be interpreted as the cytoplasmic calcium concentration [Ca2+]), whose effects on the network dynamics are controlled by the value of the “conductance” g SFA.

Simulations are performed by integrating the stochastic dynamics with a fixed time step dt = 0.25 ms.

In the following, by “spike count” we will mean the quantity ν(t) n dt.

Network events detection

For the detection of network events (NSs, quasi-orbits, and avalanches) we developed a unified approach based on Hidden Markov Models (HMM) [28]. Despite HMM have been widely used for temporal pattern recognition in many different fields, to our knowledge few attempts have been made to use them in the context of interest here [29, 30]. For the purpose of the present description, we just remind that a HMM is a stochastic system that evolves according to Markov transitions between “hidden”, i.e. unobservable, states; at each step of the dynamics the visible output depends probabilistically on the current hidden state. Such models can be naturally adapted to the detection of network events, the observations being the number of detected spikes per time bin, and the underlying hidden states, between which the system spontaneously alternates, being associated with high or low network activity (‘network event—no network event’). A standard optimization procedure adapts then the HMM to the recorded activity sample by determining the most probable sequence of hidden states given the observations.

The two-step method we propose is based on HMM, has no user-defined parameters, and automatically adapts to different conditions.

In the first step, the algorithm finds the parameters of the two-state HMM (one low-activity state, representing the quasi-quiescent periods, and one high-activity state, associated with network events) that best accounts for a given sequence of spike counts—the visible states in the HMM; such fitting is performed through the Baum-Welch algorithm [28]. In the second step, the most probable sequence for the two alternating hidden levels, given the sequence of spike counts and the fitted parameters, is found through the Viterbi algorithm. Network events are identified as the periods of dominance of the high activity hidden state.

In order to retain only the most significant events a minimum event duration is imposed; such threshold is self-consistently determined as follows. The Viterbi algorithm is also applied to a “surrogate” time-series obtained by randomly shuffling the original one, thereby generating a set of “surrogate” events. The purpose is to determine the desired minimum event duration from the high duration tail of surrogate events (which, by construction, come from a time-series with no real temporal structure). Since the high duration distribution tail is found to be roughly exponential, we fit such tail by considering only the surrogate events of duration larger than the 75th percentile. Then, from the fitted exponential, we compute the duration value such that the probability of durations greater than this value is P(surrogate) = 10−3. In other words, we set the threshold on minimum duration of detected events to the duration of exceptionally long (P < 10−3) surrogate events.

As already remarked, we used essentially the same algorithm for detecting NS/quasi-orbits and avalanches. The only significant difference is that, in the case of avalanches, the emission probability of the low-activity hidden state is kept fixed during the Baum-Welch algorithm to p(n) ≃ δ n0 (δ ij is the Kronecker delta; p(n) is the probability of emitting n spikes in a time-bin). Thus the lower state is constrained to a negligible probability of outputting non-zero spike-counts, conforming to the intuition that in between avalanches the network is (almost) completely silent. More precisely, we set p(1) = 10−6 〈n〉, where 〈n〉 is the average number of spikes that the network emits during a time-bin dt. After the modified Baum-Welch first step, avalanches are determined, as above, by applying the Viterbi algorithm; no threshold is applied in this case, neither to the avalanche duration nor to its size.

The proposed procedures introduce three arbitrary parameters: the time bin dt, the probability P(surrogate) for network spikes and quasi-orbits, and the probability p(1). To test the robustness of the algorithms, we varied these parameters over ample ranges: dt between 0.25 and 8 ms; P(surrogate) between 10−2 and 10−4; p(1) between 10−8 and 10−4. We found that avalanche size distributions are virtually unaffected under variations of p(1), and only mildly affected for the largest dt explored; higher values of P(surrogate) lead, as expected, to detect a larger number of small quasi-orbits, yet these additional events do not alter the overall shape of the size distribution predicted by the theory (see next section); on the other hand, a large number of very small quasi-orbits does have a detrimental effect on the correlation results reported in Section “Inferring the time-scales”.

Simulations and data analysis have been performed using custom-written mixed C++/ MATLAB (version R2013a, Mathworks, Natick, MA) functions and scripts.

Size distribution for quasi-orbits and network spikes

The non-linear rate model described above can show a wide repertoire of dynamical patterns, as for example multiple stable fixed points and large, quasi-periodic oscillations. As we will show, for sufficiently excitable networks, a stable state of asynchronous activity (fixed point) is destabilized, in favor of stable global oscillations. Finite size noise probes differently network’s excitability at different distances from such instability. Before global oscillations become stable (in the infinite network limit), the network’s highly non-linear reaction to its own fluctuations can ignite large, relatively stereotyped, “network spikes”. Also, in the proximity of the oscillatory (Hopf) instability, noise can promote “quasi orbits”, i.e., transient departures from the fixed point which develop on time-scales dictated by the upcoming oscillatory instability, of which they are precursors. Under a linear approximation, the probability distribution of the amplitude l of these quasi-orbits can be explicitly derived as explained in the following.

Consider a generic planar linear dynamics with noise:

| (10) |

where is 2 × 2 real matrix, and ξ = (ξ(t), 0) is a white noise with 〈ξ(t)ξ(t′)〉 = δ(t − t′). We here assume that the system is close to a Hopf bifurcation; in other words that the matrix has complex-conjugated eigenvalues λ ± = ℜλ + i ℑλ, with ℜλ < 0 and ∣ℜλ∣ ≪ ℑλ.

By means of a linear transformation, the system can be rewritten as:

| (11) |

with σ x and σ y constants determined by the coordinate transformation. Making use of Itō’s lemma to write:

and summing the previous two equations, we find for the square radius l 2 ≡ x 2 + y 2 the dynamics:

| (12) |

with .

As long as ℑλ ≫ ∣ℜλ∣, it is physically sound to make the approximation:

| (13) |

for 0 ≤ t ≤ T = 2 π/ℑλ and then to average the variance of the noise over such period to get:

in order to rewrite Eq (12) as:

| (14) |

Such stochastic differential equation is associated with the Fokker-Planck equation:

| (15) |

that admits an exponential distribution as stationary solution:

| (16) |

that is, a Rayleigh distribution for l:

| (17) |

On the other hand, we found a correlation between l (the maximal departure from the low-activity fixed point) and the duration of the quasi-orbit. Therefore the size of the quasi-orbit (the ‘area’ below the firing rate time profile during the excursion from the fixed point) is expected to scale as l 2, so that it should be exponentially distributed.

For network spikes we do not have a theoretical argument to predict the shape of the size distribution, however empirically a (left-truncated) Gaussian distribution proved to be roughly adequate. Since we expect that quasi-orbits and NS contribute with different weights for varying excitatory/inhibitory balance, we adopted the following form for the overall distribution of network event size to fit experimental data:

| (18) |

The parameters of the two distributions and their relative weight 0 ≤ p 0 ≤ 1 are estimated by minimizing the log-likelihood on the data. A threshold for the event size is determined as the value having equal probability of being generated by either the exponential or the normal distribution. In the following, NSs are defined as events having size larger than this threshold. In those cases in which a threshold smaller than the peak of the normal distribution could not be determined, no threshold was set.

Results

In the following, we will study a stochastic firing-rate model and make extensive comparison of its dynamical behavior with the activity of ex-vivo networks of cortical neurons recorded through a 60-channel multielectrode array.

The first question we want to answer is how the excitation-inhibition balance affects network dynamics. Starting from the statistics of network spikes (NS) we show that it is well described by a single variable measuring the distance from an oscillatory instability of the dynamics. We then study in the model the effects of finite-size fluctuations on the statistics of NS.

Then, taking advantage of new detection algorithm we introduce (see Models and Analysis), we recognize the presence of a spectrum of network events, including three families: NS, “quasi-orbits”, and avalanches. The predicted size distribution of quasi-orbits, exponential component in Eq 18, is confirmed by simulations and recovered in experimental data analysis. We investigate how the different network events characterize in various proportions the network dynamics depending on the excitatory-inhibitory balance; experimental data offer an interesting match with model findings, compatible with ex-vivo network being typically slightly below the oscillatory instability.

Finally we introduce a simple procedure to infer the time-scales of putative slow self-inhibitory mechanisms underlying the occurrence of network events. The inference is obtained based on knowledge of the firing activity alone; this makes the method interesting for analysis of experimental data, as we show through exemplary results.

The stochastic firing-rate model consists of two populations of neurons, one excitatory and one inhibitory, interacting through effective synaptic couplings; excitatory synaptic couplings follow a dynamics mimicking short-term depression (described after the Tsodyks-Markram model, [24]). We adopted the transfer function of the leaky integrate-and-fire neuron subject to white-noise current with drift [23] as the single population input-output function; moreover the activity of each population is made stochastic by adding realistic finite-size noise. Working with a noisy mean field model allows in principle to easily sweep through widely different network sizes and, more importantly, allows us to perform the stability analysis.

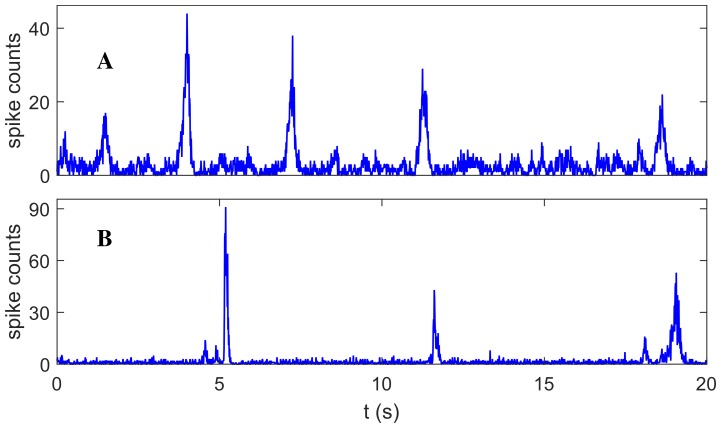

To start the exploration that follows, we chose a reference working point where the model’s dynamics has a low-rate fixed point (2 − 4 Hz) just on the brink of an oscillatory instability or, in other words, where the dominant eigenvalue λ of the dynamics, linearized around the fixed point, is complex with null real part. The model network (Fig 1, panel A) shows in proximity of this point a dynamical behavior qualitatively similar, in terms of population spikes, to what is observed in ex-vivo neuronal networks (Fig 1, panel B).

Fig 1. Time course of the network firing rate.

Panel A: noisy mean-field simulations; panel B: ex-vivo data. Random large excursions of the firing rate (network spikes and quasi-orbits) are clearly visible in both cases.

Excitation-inhibition balance and network spike statistics

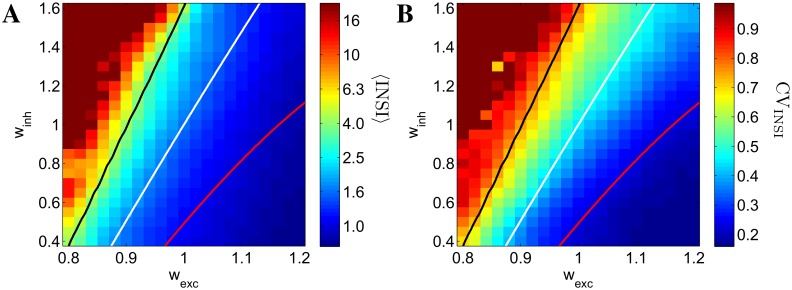

As the relative balance of excitation and inhibition is expected to be a major determinant of NS statistics we investigated first, for spontaneous NSs, how the inter-NS intervals (INSI) and their regularity (as measured by the coefficient of variation, CVINSI) depend on such balance. In Fig 2 we report the average INSI (left panel) and CVINSI (right panel) in the plane (wexc, winh) of the excitatory and inhibitory synaptic efficacies (J EE → w E J EE, J IE → w E J IE, J EI → w I J EI, J II → w I J II, see Eq 4). Starting from the center of this plane (wexc = 1, winh = 1) and moving along the horizontal axis, all the excitatory synapses of the network are multiplied by a factor wexc: moving right, the total excitation of the network increases (wexc > 1), toward left it decreases (wexc < 1). Along the vertical line, instead, all the inhibitory synapses are damped (moving downward, winh < 1) or strengthened (going upward, winh > 1).

Fig 2. Inter-network-spike interval (INSI) statistics in the noisy mean-field model, for varying levels of excitation (wexc) and inhibition (winh).

Panel A: 〈INSI〉 (the scale is in seconds); panel B: coefficient of variation of INSI (CVINSI). For high net excitation (bottom-right quadrant) short-term depression plays a determinant role in generating frequent and regular (low CVINSI) NSs; for weak excitability (upper-left quadrant) random fluctuations are essential for the generation of rare, quasi-Poissonian NSs (CVINSI ≃ 1). The solid lines are isolines of the real part ℜλ of the dominant eigenvalue of the mean-field dynamics’ Jacobian; white line: ℜλ = 0 Hz; red line: ℜλ = 3.5 Hz; black line: ℜλ = −3.5 Hz. Note how such lines roughly follow isolines of 〈INSI〉 and CVINSI.

It is clearly seen that both 〈INSI〉 and CVINSI are approximately distributed in the plane along almost straight lines of equal values: for a chosen 〈INSI〉 or CVINSI one can trade more excitation for less inhibition keeping the value constant, suggesting that, at this level of approximation, a measure of net synaptic excitation governs the NS statistics. Besides, not surprisingly, for high net excitation NSs are more frequent (∼ 1 Hz) and quasi-periodic (low CVINSI), due to the fact that the STD recovery time determines quasi-deterministically when the network is again in the condition of generating a new NS. Weak excitability, on the other hand, leads to rare NSs, approaching a Poisson statistics (CVINSI ≃ 1), since excitability is so low that fluctuations are essential for recruiting enough activation to elicit a NS, with STD playing little or no role at the ignition time; below an “excitation threshold”, NSs disappear.

The solid lines in Fig 2 are derived from the linearization of the 5-dimensional dynamical system (see Eq 7), and are curves of iso-ℜλ, where λ is the dominant eigenvalue of the Jacobian: ℜλ = 0 Hz (white line, signaling a Hopf bifurcation in the corresponding deterministic system), ℜλ = 3.5 Hz (red line), and ℜλ = −3.5 Hz (black line). Values of CV found in typical cultured networks are close to model results near the bifurcation line ℜλ = 0 Hz. We observe, furthermore, that such lines roughly follow iso-〈INSI〉 and iso-CVINSI curves, suggesting that a quasi one-dimensional representation might be extracted.

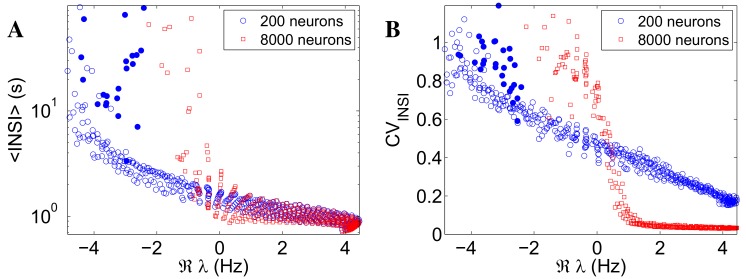

We show in Fig 3 〈INSI〉 (panel A) and CVINSI (panel B) against ℜλ for the same networks (circles) of Fig 2, and for a set of larger networks (N = 8000 neurons, squares) that are otherwise identical to the first ones, pointwise in the excitation-inhibition plane (the average number of synaptic connections per neuron for the larger networks is kept constant to the value used in the original, smaller ones, as explained in Models and Analysis) The difference in size amounts, for the new, larger networks, to weaker endogenous noise entering the stochastic dynamics of the populations’ firing rates (see Eq 6, second line). The points are seen to approximately collapse onto lines for both sets of networks, thus confirming ℜλ as the relevant control quantity for 〈INSI〉 and CVINSI. It is seen that, for the smaller networks, 〈INSI〉 and CVINSI depend smoothly on ℜλ, due to finite-size effects smearing the bifurcation. Also note the branch of points (filled circles) for which ℑλ = 0 and then no oscillatory component is present, corresponding to points in the extreme top-left region of the planes in Fig 2. For the set of larger networks, the dependence of 〈INSI〉 and CVINSI on the ℜλ is much sharper, as expected given the much smaller finite-size effects; this shrinks the available region, around the instability line, allowing for intermediate, more biologically plausible values of CVINSI.

Fig 3. Stability analysis of the linearized dynamics captures most of the variability in the inter-network-spike interval (INSI) statistics.

〈INSI〉 (panel A) and CVINSI (panel B) vs the real part ℜλ of the dominant eigenvalue of the Jacobian of the linearized dynamics, for two networks that are pointwise identical in the excitation-inhibition plane, except for their size (circles: 200 neurons, as in Fig 2; squares: 8000 neurons). The data points almost collapse on 1-D curves when plotted as functions of ℜλ, leading effectively to a “quasi one-dimensional” representation of the INSI statistics in the (wexc, winh)-plane. The region in which the INSIs are neither regular (CVINSI ∼ 0) nor completely random (CVINSI ≃ 1), as typically observed in experimental data, shrinks for larger networks. The filled circles mark a null imaginary part ℑλ.

We remark that NSs are highly non-linear and relatively stereotyped events, typical of an excitable non-linear system. The good predictive power of the linear analysis for the statistics of INSI signals that relatively small fluctuations around the system’s fixed point, described well by a linear analysis, can ignite a NS.

A spectrum of network events

Our mean-field, finite-size network is a non-linear excitable system which, to the left of the Hopf bifurcation line, and close to it, can express different types of excursions from the otherwise stable fixed point. Large (almost stereotyped for high excitation) NSs are exquisite manifestations of the non-linear excitable nature of the system, ignited by noise; the distribution of NS size (number of spikes generated during the event) is relatively narrow and approximately symmetric (the Gaussian component of Eq 18).

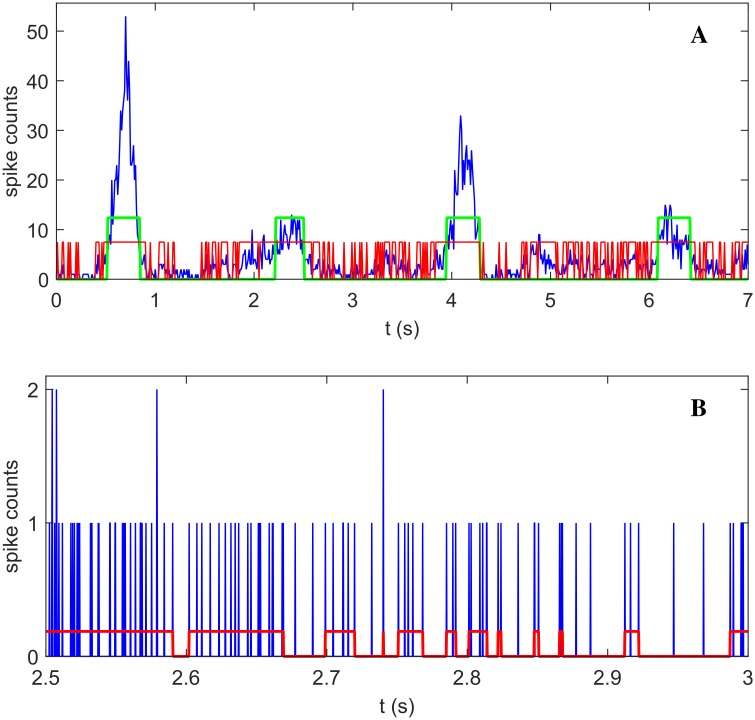

Noise can also induce smaller, transient excursions from the fixed point which can be adequately described as quasi-orbits in a linear approximation. In fact, noise induces a probability distribution on the size of such events, which can be computed as explained in Methods and Analysis (the exponential part in Eq 18). Fig 4, panel A, shows the activity of a simulated network (blue line) alongside with detected network events. We remark that the the different event types may not in general be easily distinguished on a single-event basis, while we argue that they are probabilistically distinguishable. From the best fit for the expected size distribution a threshold for the event size can be determined to separate events that are (a-posteriori) more probably quasi-orbits from the ones that are more probably NSs (for details, see Models and Analysis). Following such classification, the green line in Fig 4, panel A, marks the detection of two NSs (first and third event) and two quasi-orbits (second and fourth event).

Fig 4. Algorithms for network events detection.

Panel A: total network activity from simulation (blue line) with detected NS/quasi-orbits (green line) and avalanches (red line). Four large events (green line) are visible; the first and third are statistically classified as network spikes; the other smaller two as quasi-orbits. Note how network spikes and quasi-orbits are typically included inside a single avalanche. Panel B: a zoom over 0.5 seconds of activity, with discretization time-step 0.25 ms, illustrates avalanches structure (red line).

We also emphasize that the existence of quasi-orbits is a specific consequence of the fact that in the whole excitation-inhibition plane explored for the model, the low-activity fixed point becomes unstable via a Hopf bifurcation. It is indeed known that for nonlinear systems in the proximity of a Hopf bifurcation, noise promotes precursors of the bifurcation, which appear as transient synchronization events (see, e.g., [31]).

As one moves around the excitation-inhibition plane, to the left of the bifurcation line, the two types of events contribute differently to the overall distribution of network event sizes. Qualitatively, the farther from the bifurcation line, the higher the contribution of the small, “quasi-linear” events. This fact can be understood by noting that the average size of such events is expected to scale as 1/∣ℜλ∣, where ℜλ is the real part of the dominant eigenvalue of the (stable) linearized dynamics (see Models and Analysis, Eq 16). The average size is furthermore expected to scale with the amount of noise affecting the dynamics, thus the contribution of quasi-linear events is also expected to vanish for larger networks.

It has been previously reported that activity dynamics may be different from one network to the other, reflecting idiosyncrasies of composition and history-dependent processes ([32]). Moreover, the dynamics of a given network, as well as its individual neurons, may shift over time (minutes and hours) between different modes of activity ([32–34]). We therefore chose to demonstrate the efficacy of our analytical approach on two data sets of large-scale random cortical networks.

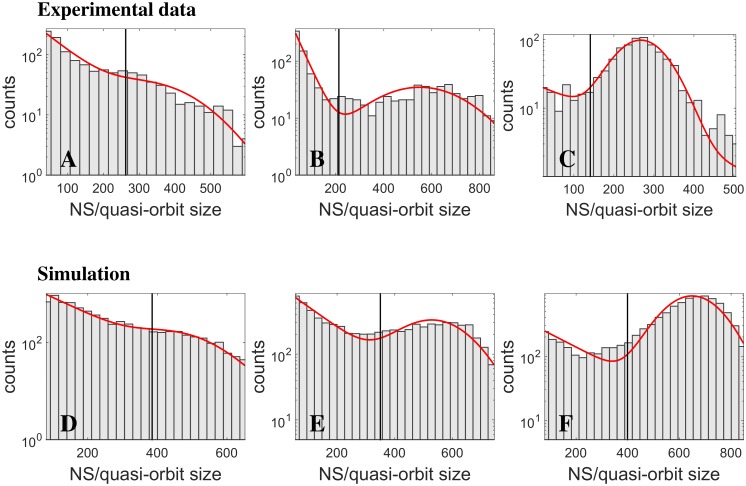

In panels A-C of Fig 5, we show the experimental distributions of event sizes for two cultured networks: panels A and B are ∼40-minute recordings taken from a very long recording for the same network; panel C is ∼1-hour recording from a different cultured network. By visual inspection, the distributions appear to be consistent with two components contributing with various weights, both for different periods of the same network, and for different networks. In the light of the above theoretical considerations, one is led to generate the hypothesis that the two components contributing to the overall distribution were associated with quasi-orbits and network spikes respectively; to test this hypothesis, we fitted (solid lines in Fig 5) the experimental distributions with the sum of an exponential and a Gaussian distribution (see Models and Analysis, Eq 18), prepared to interpret a predominance of the exponential (Gaussian) component as a lesser (greater) excitability of the network. We remark that (see panels A and B) the relative weights of the two components appear to change over time for the same network, as if the excitability level would dynamically change; more on this at the end of this section.

Fig 5. A broad spectrum of synchronous network events: simulations vs ex-vivo data.

Panels A-C: experimental distributions of network events. Panels A and B: ∼40-minute recordings from a very long recording, for the same network; panel C: ∼1-hour recording from another cultured network. Panels D-F: distributions from simulations of networks corresponding to the points in Fig 2 ((wexc, winh) = (0.82, 0.7), (wexc, winh) = (0.82, 0.55), (wexc, winh) = (0.88, 0.55)). The three networks of panels D-F have increasing levels of subcritical excitability. Note the logarithmic scale on the y-axis. The solid lines are fits of the theoretical distribution of event sizes, a sum of an exponential (for quasi-orbits) and a Gaussian (for NS) distribution (see Models and Analysis, Eq 18). The vertical lines mark the probabilistic threshold separating NS and quasi-orbits.

To substantiate the above interpretation of experimental results, we turned to long simulations (about 5.5 hours) of networks in different points in the excitation-inhibition plane (Fig 2), from which we extracted the distribution of network events and fitted them with Eq 18 as for experimental data (see panels D-F in Fig 5). Again, to the eye, the fits appear to be consistent with the two components variously contributing to the overall distribution, depending on the excitability of the network.

If, however, the fits are subject to a Kolmogorov-Smirnov test, the test fails (p < 0.01) for panels D and F. By inspecting the maximum distance between the cumulative distributions for simulation data and the fit, we found it at the lowest size bin for panel D, while the “Gaussian” part gives the greater mismatch for panel F. As for panel D, while the theoretical argument for the quasi-orbits clearly captures the shape of the size distributions, the way the test fails in the exponential part is interesting.

In fact, network events cannot be detected with arbitrarily small size: in a way, the detection procedure imposes a soft threshold on the event size, below which the exponential distribution is not applicable.

We can provide a rough estimate of such soft threshold as follows. A quasi-orbit duration is, to a first approximation, proportional to 1/ℑλ, which is of the order of few hundreds milliseconds not too far from the bifurcation line in the excitation-inhibition plane. Taking, for instance, 150 ms, an event will be detected if network activity within this time-span is larger than average (typically few spikes per second per neuron; we take 3 for the present example): this leads to a soft threshold of about 100 spikes. This would be the lower limit of applicability of the exponential part of the distribution; this also explains the trough observed for very small sizes.

As for the failure of the Kolmogorov-Smirnov test for the right part of the distribution in panel F, it should be remarked that the assumption of a Gaussian distribution for the size of network spikes, although generically plausible, is not grounded in a theoretical argument, and it’s not surprising that, on the order of 104 detected events, even a moderate skewness, as the one observed, can make the test fail.

The fit for experimental data of panels A-C passed the Kolmogorov-Smirnov test (p > 0.01).

As mentioned in the introduction, avalanches are cascades of neural activities clustered in time (see Models and Analysis for our operational definition; examples of different methods used in the literature to detect avalanches can be found in [7, 35–37]). Fig 4, panel A and panel B, shows an example of the structure of the detected avalanches (red lines) in the model network.

We extracted avalanches from simulated data, as well as from experimental data. For simulations, we choose data corresponding to three points in the (wexc, winh) plane of Fig 2, with constant winh = 1 and increasing wexc, with the rightmost falling exactly over the instability line (white solid line in Fig 2). Three experimental data sets were extracted from different periods of a very long recording of spontaneous activity from a neural culture; each data set is a 40-minute recording.

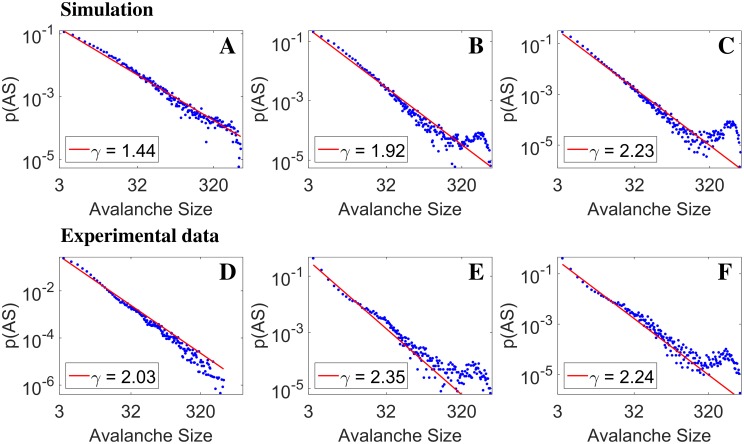

In Fig 6 we show (in log-log scale) the distribution of avalanche sizes for the three simulated networks (top row) and the three experimental (bottom row) data sets (blue dots); red lines are power-law fits [38].

Fig 6. Avalanche size distribution: simulations vs ex-vivo data.

Panels A-C: mean-field simulations, with fixed inhibition winh = 1. and increasing excitation (wexc = 0.9, 0.94, 1). The distributions are well fitted by power-laws; panel B and C clearly show the buildup of ‘bumps’ in the high-size tails, reflecting the increasing contribution from network spikes and quasi-orbits in that region of the distribution. Panels D-F from ex-vivo data, different ∼40-minute segments from one long recording; power-laws are again observed, although fitted exponents cover a smaller range; in panels E and F, bumps are visible, similar to model findings. The similarity between the theoretical and experimental distributions could reflect changes of excitatory/inhibitory balance in time in the experimental preparation. Since all the three simulations lay on the left of or just on the bifurcation line (white line in Fig 2), the shown results are compatible with the experimental network operating in a slightly sub-critical regime.

From the panels in the top row we see that the distributions are well fitted, over a range of two orders of magnitude, by power-laws with exponents ranging from about 1.5 to about 2.2, consistent with the results found in [7]. Note that in the cited paper the algorithm used for avalanche detection is quite different from ours, and the wide range of power-law exponents is related to their dependence on the time-window used to discretize data. In [39] (adopting yet another algorithm for avalanche detection), both the shape of the avalanche distribution and the exponent vary depending on using pharmacology to manipulate synaptic transmission, over a range compatible with our model findings; notably, they find the slope of the power-law to be increasing with the excitability of the network, which is consistent with our modeling results.

Panels B and C of Fig 6 clearly show the buildup of ‘bumps’ in the high-size tails, increasing with the self-excitation of the network; this is understood as reflecting the predominance of a contribution from NS and possibly quasi-orbits in that region of the distribution, on top of a persisting wide spectrum of avalanches. This feature also is consistent with the experimental findings of [39], and has been previously shown in a theoretical model [40] for non-leaky integrate-and-fire neurons endowed with STD and synaptic facilitation.

Turning to the plots in the bottom row of Fig 6, we observe the following features: power-laws are again observed over two decades and more; in panels E and F, bumps are visible, similar to model findings; power-law exponents cover a smaller range just above 2.

While the sequence of plots in two rows (modeling and experiment) clearly shows similar features, we emphasize that experimental data were extracted from a unique long recording, with no intervening pharmacological manipulations affecting synaptic transmission; on the other hand, it has been suggested [41] that a dynamic modulation of the excitatory/inhibitory balance can indeed be observed in long recordings; although our model would be inherently unable to capture such effects, it is tempting to interpret the suggestive similarity between the theoretical and experimental distributions in Fig 6 as a manifestation of such changes of excitatory/inhibitory balance in time, of which the theoretical distributions would be a ‘static’ analog. To rule out the possibility that different behaviors in time could be due to intrinsic and global modifications in the experimental preparation, we checked (see S1 Fig) the waveforms of the recorded spikes across all MEA electrodes, comparing the earliest and latest used recordings (about 40 minutes each, separated by about 34 hours). In most cases the waveforms for the two recordings are remarkably similar, and when they are not, no systematic trend in the differences is observed.

If our interpretation is correct, the experimental preparation operates below, and close, to an oscillatory instability; on the other hand, contrary to NS, the appearance of avalanches does not seem to be exquisitely related to a Hopf bifurcation, rather they seem to generically reflect the non-linear amplification of spontaneous fluctuations around an almost unstable fixed point—a related point will be mentioned in the next section. We also remark that we obtain power-law distributed avalanches in a (noisy) mean-field rate model, by definition lacking any spatial structure; while the latter could well determine specific (possibly repeating) patterns of activations (as observed in [19]), it is here suggested to be not necessary for power-law distributed avalanches.

The avalanche size distribution for the same network as in Fig 5, panel C, is sparser but qualitatively compatible with the distribution in Fig 6, panel F (see S2 Fig); in particular, the distribution shows a prominent peak for high-size avalanches, consistently with the interpretation, given in connection with Fig 5, of high excitability.

We do not provide examples of avalanche and NS-quasi orbits size distributions in the super-critical region on the right of the Hopf bifurcation line in Fig 2; this is because the phenomenology in that region is relatively stereotyped and easy to guess/understand: the high excitability of the network generates, moving on the right of the bifurcation line, increasingly stereotyped network spikes, which dominate the size distribution of the network events (see S3 Fig, panel A); even though finite-size fluctuations blur the bifurcation line, quasi-orbits are expected to contribute very little in the supercritical region; the distribution of avalanche sizes is increasingly dominated by the high-size bump associated with network spikes (see S3 Fig, panel B).

Inferring the time-scales

The fatigue mechanism at work (STD in our case) is a key element of the transient network events, in its interplay with the excitability of the system. While the latter can be manipulated through pharmacology, STD itself (or spike frequency adaptation, another neural fatigue mechanism) cannot be directly modulated. It is therefore interesting to explore ways to infer relevant properties of such fatigue mechanisms from the experimentally accessible information, i.e. the firing activity of the network. We focus in the following on deriving the effective (activity-dependent) time scale of STD from the sampled firing history.

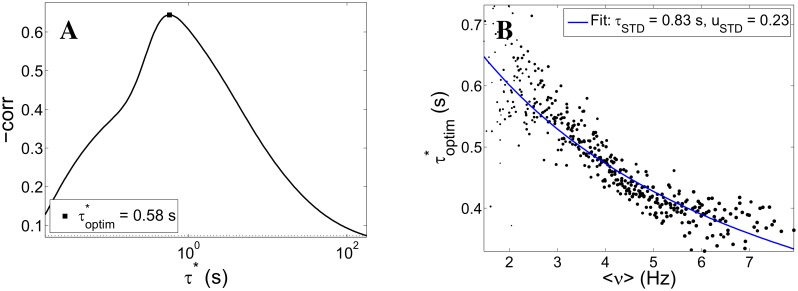

The starting point is the expectation that the fatigue level just before a NS should affect the strength of the subsequent NS. We therefore measured the correlation between r (fraction of available synaptic resources) and the total number of spikes emitted during the NS (NS size) from simulations. We found that the average value of r just before a NS is an effective predictor of the NS size, the more so as the excitability of the network grows.

Based on the r-NS size correlation, we took the above “experimental” point of view, that only the firing activity ν is directly observable, while r is not experimentally accessible. Furthermore, the success of the linear analysis for the inter-NS interval statistics (due to the NS being a low-threshold very non-linear phenomenon), suggests that without assuming a specific form for the dynamics of the fatigue variable f, we may tentatively adopt for it a generic linear integrator form, of which we want to infer the characteristic time-scale τ*:

| (19) |

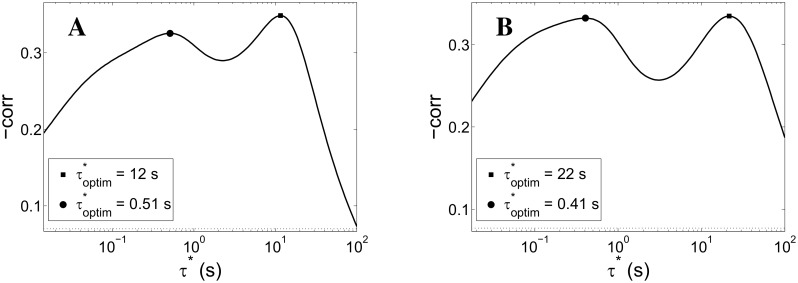

To do this, first we reconstruct f(t) from ν(t) for a given τ* then we set up an optimization procedure to estimate , based on the maximization of the (negative) f-NS size correlation (a strategy inspired by a similar principle was adopted in [11]). Fig 7, panel A, shows an illustrative example of how the correlation peaks around the optimal value. As a reference, the dotted line marks the value below which 95% of the correlations computed from surrogate data fall; surrogate data are obtained by shuffling the values of f at the beginning of each NS.

Fig 7. Slow time-scales inference procedure: test on simulation data.

Panel A: correlation between low-pass filtered network activity f (see Eq 19) and the size of the immediately subsequent network spike plotted against the time-scale τ* of the low-pass integrator (continuous line). The correlation presents a clear (negative) peak for an ‘optimal’ value s of the low-pass integrator; such value is interpreted as the effective time-scale of the putative slow self-inhibitory mechanism underlying the statistics of network events—in this case, short-term synaptic depression (STD); as a reference, the dotted line marks the value computed for surrogate data (see text). Panel B: for each point in the (wexc, winh)-plane (see Fig 2), vs average network activity; the continuous line is the best fit of the theoretical expectation for STD’s effective time-scale (Eq 20); the fitted values for the STD parameters τ STD and u STD are consistent with the actual values used in simulation (τ STD = 0.8 s, u STD = 0.2).

We remark that in this analysis we use both NS and quasi-orbit events (which are both related to the proximity to a Hopf bifurcation). This is reasonable since we expect to gain more information about the anti-correlation between f and NS size by including both types of large network events.

Although the procedure successfully recovers a maximum in the correlation, the value of (0.58 s) reported in Fig 7, panel A, is not close to the value of τ STD (0.8 s). Yet this is expected, since in Eq 19, τ* will in general depend on τ STD and other parameters of the dynamics, but also on the point around which the dynamics is being linearized, more precisely on the average activity 〈ν〉. Specifically, when the fatigue variable follows the Tsodyks-Markram model of STD (which of course was actually the case in the simulations), linearizing the dynamics of r around a fixed point 〈r〉 (〈r〉 = 1/(1 + u STD 〈ν〉 τ STD)), r behaves as a simple linear integrator with a time-constant:

| (20) |

that depends on τ STD, u STD, and 〈ν〉.

To test this relationship, we performed the optimization procedure for each point of the excitation-inhibition plane. The optimal τ* values across the excitation-inhibition plane against 〈ν〉 are plotted in Fig 7, panel B (dots). The solid line is the best fit of τ STD and u STD from Eq 20, which are consistent with the actual values used in simulations.

This result is suggestive of the possibility of estimating from experiments the time-scale of an otherwise inaccessible fatigue variable, by modeling it as a generic linear integrator, with a “state dependent” time-constant.

Fig 8 shows the outcome of the same inference procedure for two segments of experimental recordings. The plot in panel A is qualitatively similar to panel A in Fig 7: although the peak is broader and the maximum correlation (in absolute value) is smaller, the τ* peak is clearly identified and statistically significant (with respect to surrogates, dotted line), thus suggesting a dominant time scale for the putative underlying, unobserved fatigue process. However, Fig 8, panel B, clearly shows two significant peaks in the correlation plot; it would be natural to interpret this as two fatigue processes, with time scales differing by an order of magnitude, simultaneously active in the considered recording segment.

Fig 8. Slow time-scales inference procedure on ex-vivo data.

Correlation between low-pass filtered network activity f (see Eq 19) and the size of the immediately subsequent network spike plotted against the time-scale τ* of the low-pass integrator for two experimental datasets (different periods—about 40 minutes each—in a long recording). The plot in panel A is qualitatively similar to the simulation result shown in panel A of Fig 7: a peak, although broader and of smaller maximum (absolute) value, is clearly identified and statistically significant (with respect to surrogate data, dotted line). Panel B shows two significant peaks in the correlation plot, a possible signature of two concurrently active fatigue processes, with time scales differing by roughly an order of magnitude. Panel A: same data as Fig 5, panel B.

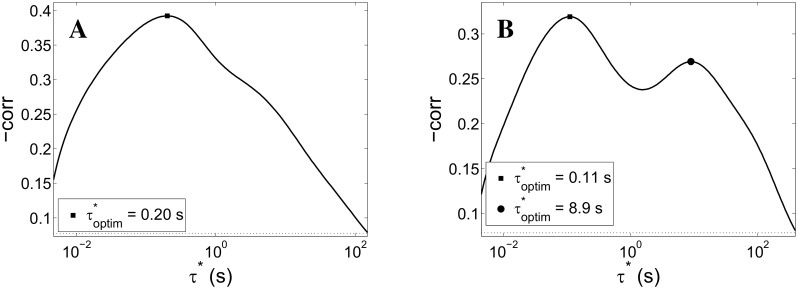

To test the plausibility of this interpretation, we simulated networks with simultaneously active STD and spike-frequency adaptation (SFA, see Models and Analysis). Fig 9 shows the results of time scale inference for two cases sharing the same time scale for STD (800 ms) and time scale of SFA differing by a factor of 2 (τ SFA = 15 and 30 s respectively). In both cases the negative correlation peaks at around τ* ≃ 500 ms; this peak is plausibly related to the characteristic time of STD, consistently with Fig 7. The peaks at higher τ*s, found respectively at 12 and 22 s, roughly preserve the ratio of the corresponding τ SFA values.

Fig 9. Slow time-scales inference procedure on simulation data with STD and spike-frequency adaptation.

Correlation between low-pass filtered network activity f (see Eq 19) and the size of the immediately subsequent network spike plotted against the time-scale τ* of the low-pass integrator. In this case, the mean-field model includes, besides short-term depression (STD), a mechanism mimicking spike-frequency adaptation. Panel A: spike-frequency adaptation with characteristic time τ SFA = 15 s. Panel B: τ SFA = 30 s. In both cases the correlation presents a STD-related peak at around τ* ≃ 500 ms (τ STD = 800 ms), consistently with Fig 7. The peaks at higher τ*s, found respectively at 12 and 22 s, in accordance with what is reported in the plot legends and in the main text, roughly preserve the ratio of the corresponding τ SFA values.

This analysis provides preliminary support to the above interpretation of the double peak in Fig 8, right panel, in terms of two coexisting fatigue processes with different time scales.

We also checked to what extent the avalanche sizes were influenced by the immediately preceding amount of available synaptic resources r, and we found weak or no correlations; this further supports the interpretation offered at the end on the previous section, that avalanches are a genuine manifestation of the network excitability which amplifies a wide spectrum of small fluctuations.

Discussion

Several works recently advocated a key role of specific network connectivity topologies in generating ‘critical’ neural dynamics as manifested in power-law distributions of avalanches size and duration (see [20, 42]). Also, it has been suggested that ‘leader neurons’, or selected coalitions of neurons, play a pivotal role in the onset of network events (see e.g. [21, 43–45]). While a role of network topology, or heterogeneity in neurons’ excitability, is all to be expected, we set out to investigate what repertoire of network events is accessible to a network with the simplest, randomly sparse, connectivity, over a wide range of excitation-inhibition balance, in the presence of STD as an activity-dependent self-inhibition. In the present work we showed that network spikes, avalanches and also large fluctuations we termed ‘quasi-orbits’ coexist in such networks, with various relative weights and statistical features depending on the excitation-inhibition balance, which we explored extensively, including the role of finite-size noise (irregular synchronous regimes in balanced excitatory-inhibitory networks has been studied in [35]). We remark in passing that the occurrence of quasi-orbits is primarily related to the proximity to a Hopf bifurcation for the firing rate dynamics; on the other hand, the occurrence of NS and, presumably, avalanches, does not necessarily require this condition: for instance, NS can occur in the proximity of a saddle-node bifurcation, where the low-high-low activity transitions derive from the existence of two fixed points, the upper one getting destabilized by the fatigue mechanism (see e.g. [46, 47]); notably, in [12] the authors find that, in a network of leaky integrate-and-fire neurons endowed with STD, when a saddle-node separates an up- and a down-state, the dynamics develops avalanches during up-state intervals only. We also remark that, with respect to the power-law distribution of avalanches, it is now widely recognized that while criticality implies power-law distributions, the converse is not true, which leaves open the problem of understanding what is actually in operation in the neural systems observed experimentally (for a general discussion on the issues involved, see [48]). In the present work, we do not commit ourselves to the issue of whether avalanches could be considered as evidence of Self-Organized Criticality.

In summary, the main contributions of the present work can be listed as follows.

We present a low-dimensional network model, derived from the mean field theory for interacting leaky integrate-and-fire neurons with short-term depression, in which we include the effect of finite-size (multiplicative) noise.

At the methodological level we introduce a probabilistic model for events detection, and a method for inferring the time-scale(s) of putative fatigue mechanisms. At the phenomenological level we recognize the existence of quasi-orbits as an additional type of network event, we show the coexistence of quasi-orbits, network spikes, and avalanches, and study their different mixing depending on the excitability of the network. We also offer a theoretical interpretation of the phenomenology, through a bifurcation analysis of the mean-field model, and a prediction on the effect of noise in the proximity of a Hopf bifurcation.

Supporting Information

Waveforms of the recorded spikes across all MEA electrodes, comparing the earliest and latest used recordings (about 40 minutes each, separated by about 34 hours). In most cases the waveforms for the two recordings are remarkably similar, and when they are not, no systematic trend in the differences is observed. Panel A: average wave-form for each electrode; blue lines refer to the earliest recording, green line to the latest. For each electrode, we averaged only the first spike detected (if any) for that electrode in each network spike, to best isolate local excitability properties from global network effects during the development of the network spike. The shaded colored strips are ±standard deviation. Panel B: waveforms averaged under a stricter selection: only the very first spike of the entire network in each network spike is considered, which clearly selects fewer leading electrodes (only electrodes with 5 or more recorded first spikes are shown).

(TIF)

The distribution is sparser but qualitatively compatible with the distribution in Fig 6, panel F; in particular, the distribution shows a prominent peak for high-size avalanches, consistently with the interpretation, given in connection with Fig 5, of high excitability.

(TIF)

Data from a simulation of the network corresponding to the point (wexc, winh) = (1.2, 0.4), on the right of the Hopf bifurcation of Fig 2. Panel A: NS-quasi orbits size distribution; the high excitability of the network generates increasingly stereotyped network spikes, whilst the contribution from noise-induced quasi-orbits vanishes. Panel B: the distribution of avalanche sizes, while preserving the small-size power-law tail, is increasingly dominated by the high-size bump associated with network spikes. See also Figs 5 and 6.

(TIF)

Acknowledgments

We thank Neta Haroush and Maurizio Mattia for several stimulating discussions.

Data Availability

All data files are freely available from: marom.net.technion.ac.il/neural-activity-data/

Funding Statement

We aknowledge the support of EU-Project CORONET (FP7-ICT-269459). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Baltz T, Herzog A, Voigt T (2011) Slow oscillating population activity in developing cortical networks: models and experimental results. J Neurophysiol 106: 1500–1514. 10.1152/jn.00889.2010 [DOI] [PubMed] [Google Scholar]

- 2. Eytan D, Marom S (2006) Dynamics and effective topology underlying synchronization in networks of cortical neurons. The Journal of Neuroscience 26: 8465–8476. 10.1523/JNEUROSCI.1627-06.2006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Giugliano M, Darbon P, Arsiero M, Lüscher HR, Streit J (2004) Single-neuron discharge properties and network activity in dissociated cultures of neocortex. Journal of Neurophysiology 92: 977–996. 10.1152/jn.00067.2004 [DOI] [PubMed] [Google Scholar]

- 4. Gritsun T, le Feber J, Stegenga J, Rutten WL (2011) Experimental analysis and computational modeling of interburst intervals in spontaneous activity of cortical neuronal culture. Biological Cybernetics 105: 197–210. 10.1007/s00422-011-0457-3 [DOI] [PubMed] [Google Scholar]

- 5. Park IPI, Xu DXD, DeMarse TB, Principe JC (2006). Modeling of Synchronized Burst in Dissociated Cortical Tissue: An Exploration of Parameter Space. 10.1109/IJCNN.2006.246734 [DOI] [Google Scholar]

- 6. Wagenaar DA, Pine J, Potter SM (2006) An extremely rich repertoire of bursting patterns during the development of cortical cultures. BMC Neurosci 7: 11 10.1186/1471-2202-7-11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Beggs JM, Plenz D (2003) Neuronal avalanches in neocortical circuits. The Journal of Neuroscience 23: 11167–11177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Petermann T, Thiagarajan TC, Lebedev MA, Nicolelis MA, Chialvo DR, et al. (2009) Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proceedings of the National Academy of Sciences 106: 15921–15926. 10.1073/pnas.0904089106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Plenz D, Thiagarajan TC (2007) The organizing principles of neuronal avalanches: cell assemblies in the cortex? Trends in neurosciences 30: 101–110. 10.1016/j.tins.2007.01.005 [DOI] [PubMed] [Google Scholar]

- 10. Plenz D, Schuster HG (2014) Criticality in neural systems. Wiley-VCH; New York, NY. [Google Scholar]

- 11. Linaro D, Storace M, Mattia M (2011) Inferring network dynamics and neuron properties from population recordings. Frontiers in Computational Neuroscience 5 10.3389/fncom.2011.00043 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Millman D, Mihalas S, Kirkwood A, Niebur E (2010) Self-organized criticality occurs in non-conservative neuronal networks during up ’states. Nature Physics 6: 801–805. 10.1038/nphys1757 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Levina A, Herrmann JM, Geisel T (2007) Dynamical synapses causing self-organized criticality in neural networks. Nature Physics 3: 857–860. 10.1038/nphys758 [DOI] [Google Scholar]

- 14. Thivierge JP, Cisek P (2008) Nonperiodic synchronization in heterogeneous networks of spiking neurons. Journal of Neuroscience 28: 7968–7978. 10.1523/JNEUROSCI.0870-08.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Tsodyks M, Uziel A, Markram H (2000) Synchrony generation in recurrent networks with frequency-dependent synapses. Journal of Neuroscience 20: RC50 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Vladimirski BB, Tabak J, O’Donovan MJ, Rinzel J (2008) Episodic activity in a heterogeneous excitatory network, from spiking neurons to mean field. Journal of Computational Neuroscience 25: 39–63. 10.1007/s10827-007-0064-4 [DOI] [PubMed] [Google Scholar]

- 17. Benita JM, Guillamon A, Deco G, Sanchez-Vives MV (2012) Synaptic depression and slow oscillatory activity in a biophysical network model of the cerebral cortex. Frontiers in Computational Neuroscience 6 10.3389/fncom.2012.00064 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Reig R, Gallego R, Nowak LG, Sanchez-Vives MV (2006) Impact of cortical network activity on short-term synaptic depression. Cerebral Cortex 16: 688–695. 10.1093/cercor/bhj014 [DOI] [PubMed] [Google Scholar]

- 19. Beggs JM, Plenz D (2004) Neuronal avalanches are diverse and precise activity patterns that are stable for many hours in cortical slice cultures. The Journal of Neuroscience 24: 5216–5229. 10.1523/JNEUROSCI.0540-04.2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Massobrio P, Pasquale V, Martinoia S (2014) Criticality in cortical ensembles is supported by complex functional networks. BMC Neuroscience 15: O15 10.1186/1471-2202-15-S1-O15 [DOI] [Google Scholar]

- 21. Luccioli S, Ben-Jacob E, Barzilai A, Bonifazi P, Torcini A (2014) Clique of functional hubs orchestrates population bursts in developmentally regulated neural networks. PLoS Computational Biology 10: e1003823 10.1371/journal.pcbi.1003823 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Wilson HR, Cowan JD (1972) Excitatory and inhibitory interactions in localized populations of model neurons. Biophysical Journal 12: 1–24. 10.1016/S0006-3495(72)86068-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Ricciardi LM (1977) Diffusion processes and related topics in biology. 10.1007/978-3-642-93059-1 [DOI] [Google Scholar]

- 24. Tsodyks MV, Markram H (1997) The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proceedings of the National Academy of Sciences 94: 719–723. 10.1073/pnas.94.2.719 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Tsodyks M, Pawelzik K, Markram H (1998) Neural networks with dynamic synapses. Neural Computation 10: 821–835. 10.1162/089976698300017502 [DOI] [PubMed] [Google Scholar]

- 26. Wang XJ (1998) Calcium coding and adaptive temporal computation in cortical pyramidal neurons. Journal of Neurophysiology 79: 1549–1566. [DOI] [PubMed] [Google Scholar]

- 27. Ermentrout B (1998) Linearization of fi curves by adaptation. Neural Computation 10: 1721–1729. 10.1162/089976698300017106 [DOI] [PubMed] [Google Scholar]

- 28. Baum LE, Petrie T (1966) Statistical inference for probabilistic functions of finite state markov chains. The Annals of Mathematical Statistics: 1554–1563. 10.1214/aoms/1177699147 [DOI] [Google Scholar]

- 29. Chen Z, Vijayan S, Barbieri R, Wilson MA, Brown EN (2009) Discrete-and continuous-time probabilistic models and algorithms for inferring neuronal up and down states. Neural Computation 21: 1797–1862. 10.1162/neco.2009.06-08-799 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Tokdar S, Xi P, Kelly RC, Kass RE (2010) Detection of bursts in extracellular spike trains using hidden semi-markov point process models. Journal of Computational Neuroscience 29: 203–212. 10.1007/s10827-009-0182-2 [DOI] [PubMed] [Google Scholar]

- 31. Khovanov I, Schimansky-Geier L, Zaks M (2006) Spectral analysis of noisy oscillators near hopf bifurcations. Acta Physica Polonica Series B 37: 1551. [Google Scholar]

- 32. van Pelt J, Vajda I, Wolters PS, Corner MA, Ramakers GJ (2005) Dynamics and plasticity in developing neuronal networks in vitro. Progress in Brain Research 147: 171–188. 10.1016/S0079-6123(04)47013-7 [DOI] [PubMed] [Google Scholar]

- 33. Wagenaar DA, Nadasdy Z, Potter SM (2006) Persistent dynamic attractors in activity patterns of cultured neuronal networks. Physical Review E 73: 051907 10.1103/PhysRevE.73.051907 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34. Gal A, Eytan D, Wallach A, Sandler M, Schiller J, et al. (2010) Dynamics of excitability over extended timescales in cultured cortical neurons. The Journal of Neuroscience 30: 16332–16342. 10.1523/JNEUROSCI.4859-10.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Benayoun M, Cowan JD, van Drongelen W, Wallace E (2010) Avalanches in a stochastic model of spiking neurons. PLoS Computational Biology 6: e1000846 10.1371/journal.pcbi.1000846 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Poil SS, Hardstone R, Mansvelder HD, Linkenkaer-Hansen K (2012) Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks. The Journal of Neuroscience 32: 9817–9823. 10.1523/JNEUROSCI.5990-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Priesemann V, Wibral M, Valderrama M, Pröpper R, Le Van Quyen M, et al. (2014) Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Frontiers in Systems Neuroscience 8: 108 10.3389/fnsys.2014.00108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Clauset A, Shalizi CR, Newman ME (2009) Power-law distributions in empirical data. SIAM Review 51: 661–703. 10.1137/070710111 [DOI] [Google Scholar]

- 39. Mazzoni A, Broccard FD, Garcia-Perez E, Bonifazi P, Ruaro ME, et al. (2007) On the dynamics of the spontaneous activity in neuronal networks. PLoS One 2: e439 10.1371/journal.pone.0000439 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Levina A, Herrmann JM, Geisel T (2009) Phase transitions towards criticality in a neural system with adaptive interactions. Physical Review Letters 102: 118110 10.1103/PhysRevLett.102.118110 [DOI] [PubMed] [Google Scholar]

- 41. Haroush N, Marom S (2015) Slow dynamics in features of synchronized neural network responses. Frontiers in Computational Neuroscience 9: 40 10.3389/fncom.2015.00040 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Friedman EJ, Landsberg AS (2013) Hierarchical networks, power laws, and neuronal avalanches. Chaos: An Interdisciplinary Journal of Nonlinear Science 23: 013135 10.1063/1.4793782 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Eckmann JP, Jacobi S, Marom S, Moses E, Zbinden C (2008) Leader neurons in population bursts of 2d living neural networks. New Journal of Physics 10: 015011 10.1088/1367-2630/10/1/015011 [DOI] [Google Scholar]

- 44. Shein M, Volman V, Raichman N, Hanein Y, Ben-Jacob E (2008) Management of synchronized network activity by highly active neurons. Physical Biology 5: 036008 10.1088/1478-3975/5/3/036008 [DOI] [PubMed] [Google Scholar]

- 45. Pirino V, Riccomagno E, Martinoia S, Massobrio P (2014) A topological study of repetitive co-activation networks in in vitro cortical assemblies. Physical Biology 12: 016007–016007. 10.1088/1478-3975/12/1/016007 [DOI] [PubMed] [Google Scholar]

- 46. Gigante G, Mattia M, Del Giudice P (2007) Diverse population-bursting modes of adapting spiking neurons. Physical Review Letters 98: 148101 10.1103/PhysRevLett.98.148101 [DOI] [PubMed] [Google Scholar]

- 47. Mejias JF, Kappen HJ, Torres JJ (2010) Irregular dynamics in up and down cortical states. PLoS One 5: e13651 10.1371/journal.pone.0013651 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Beggs JM, Timme N (2012) Being critical of criticality in the brain. Frontiers in Physiology 3 10.3389/fphys.2012.00163 [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Waveforms of the recorded spikes across all MEA electrodes, comparing the earliest and latest used recordings (about 40 minutes each, separated by about 34 hours). In most cases the waveforms for the two recordings are remarkably similar, and when they are not, no systematic trend in the differences is observed. Panel A: average wave-form for each electrode; blue lines refer to the earliest recording, green line to the latest. For each electrode, we averaged only the first spike detected (if any) for that electrode in each network spike, to best isolate local excitability properties from global network effects during the development of the network spike. The shaded colored strips are ±standard deviation. Panel B: waveforms averaged under a stricter selection: only the very first spike of the entire network in each network spike is considered, which clearly selects fewer leading electrodes (only electrodes with 5 or more recorded first spikes are shown).

(TIF)

The distribution is sparser but qualitatively compatible with the distribution in Fig 6, panel F; in particular, the distribution shows a prominent peak for high-size avalanches, consistently with the interpretation, given in connection with Fig 5, of high excitability.

(TIF)

Data from a simulation of the network corresponding to the point (wexc, winh) = (1.2, 0.4), on the right of the Hopf bifurcation of Fig 2. Panel A: NS-quasi orbits size distribution; the high excitability of the network generates increasingly stereotyped network spikes, whilst the contribution from noise-induced quasi-orbits vanishes. Panel B: the distribution of avalanche sizes, while preserving the small-size power-law tail, is increasingly dominated by the high-size bump associated with network spikes. See also Figs 5 and 6.

(TIF)

Data Availability Statement

All data files are freely available from: marom.net.technion.ac.il/neural-activity-data/