Abstract

Functional connectivity (FC) patterns obtained from resting-state functional magnetic resonance imaging data are commonly employed to study neuropsychiatric conditions by using pattern classifiers such as the support vector machine (SVM). Meanwhile, a deep neural network (DNN) with multiple hidden layers has shown its ability to systematically extract lower-to-higher level information of image and speech data from lower-to-higher hidden layers, markedly enhancing classification accuracy. The objective of this study was to adopt the DNN for whole-brain resting-state FC pattern classification of schizophrenia (SZ) patients vs. healthy controls (HCs) and identification of aberrant FC patterns associated with SZ. We hypothesized that the lower-to-higher level features learned via the DNN would significantly enhance the classification accuracy, and proposed an adaptive learning algorithm to explicitly control the weight sparsity in each hidden layer via L1-norm regularization. Furthermore, the weights were initialized via stacked autoencoder based pre-training to further improve the classification performance. Classification accuracy was systematically evaluated as a function of (1) the number of hidden layers/nodes, (2) the use of L1-norm regularization, (3) the use of the pre-training, (4) the use of framewise displacement (FD) removal, and (5) the use of anatomical/functional parcellation. Using FC patterns from anatomically parcellated regions without FD removal, an error rate of 14.2% was achieved by employing three hidden layers and 50 hidden nodes with both L1-norm regularization and pre-training, which was substantially lower than the error rate from the SVM (22.3%). Moreover, the trained DNN weights (i.e., the learned features) were found to represent the hierarchical organization of aberrant FC patterns in SZ compared with HC. Specifically, pairs of nodes extracted from the lower hidden layer represented sparse FC patterns implicated in SZ, which was quantified by using kurtosis/modularity measures and features from the higher hidden layer showed holistic/global FC patterns differentiating SZ from HC. Our proposed schemes and reported findings attained by using the DNN classifier and whole-brain FC data suggest that such approaches show improved ability to learn hidden patterns in brain imaging data, which may be useful for developing diagnostic tools for SZ and other neuropsychiatric disorders and identifying associated aberrant FC patterns.

Keywords: Deep learning, functional connectivity, resting-state functional magnetic resonance imaging, schizophrenia, sparsity, stacked autoencoder

Introduction

Resting-state functional MRI (rsfMRI) without a task paradigm has been successfully employed to exploit neuronal underpinnings implicated in neuropsychiatric disorders (Anand et al., 2005; Castellanos et al., 2008; Li et al., 2002), including schizophrenia (SZ) (Greicius, 2008; Jafri et al., 2008; Liang et al., 2006; Liu et al., 2008; Mingoia et al., 2012; Yu et al., 2012; Zhou et al., 2007). For example, Liu and colleagues (2008) presented evidence of significantly altered functional connectivity (FC) pairs (i.e., locally connected networks), or disrupted “small-world FC networks,” in the prefrontal, parietal, and temporal areas of the brain in patients with SZ. In that study, the hypothesis on dysfunctional network integration in SZ was supported by the lower strength of the FC in the pairs of nodes and decreased synchronization of functionally connected brain regions as well as the longer absolute path to reach global functional networks (Bullmore et al., 1997; Bullmore et al., 1998; Calhoun et al., 2009; Friston and Frith, 1995; Liu et al., 2008). In addition, Liang et al. (2006) reported that aberrant SZ-associated FC patterns were widely distributed throughout the entire brain (i.e., the FC levels of approximately 89% of the observed pairs of nodes were decreased), as opposed to showing a restricted pattern within only a few specific brain regions.

Machine-learning algorithms have been successfully deployed in the automated classification of altered FC patterns related to SZ (Arbabshirani et al., 2013; Du et al., 2012; Shen et al., 2010; Tang et al., 2012; Watanabe et al., 2014). In this regard, Du and colleagues (2012) developed a method combining kernel principal component analysis (PCA) and group independent component analysis (ICA) aimed at the computer-aided diagnosis of SZ, achieving 98% accuracy by using fMRI data acquired from an auditory oddball task paradigm. In addition, Shen et al. (2010) introduced an unsupervised learning-based classifier to discriminate SZ patients from HC subjects by applying a combination of nonlinear dimensionality reduction and self-organized clustering algorithms to rsfMRI data. The results of this analysis demonstrated the highest discriminating power for FC patterns between the cerebellum and the frontal cortex, with a classification accuracy of 92.3%. In addition, the altered resting-state functional network connectivity (FNC) among auditory, frontal-parietal, default-mode, visual, and motor networks were gainfully adopted for classification of SZ patients and 96% accuracy was achieved using k-nearest neighbors classifier (Arbabshirani et al., 2013). A recent schizophrenia classification challenge demonstrated clearly, across a broad range of classification approaches, the value of rsfMRI data in capturing useful information about this disease (Silva et al., 2014).

Of late, a strategy applying sparsity constraint to spatial patterns has favorably been deployed in various scenarios of fMRI data analysis directed toward extracting information from whole-brain FC patterns (Grosenick et al., 2013; Kim et al., 2012; Lee et al., 2008b; Watanabe et al., 2014). This explicit control of sparsity to analyze fMRI data also includes certain widely used ICA algorithms, such as the popular default algorithms of Infomax and FastICA, which jointly maximize sparsity and independence (Calhoun et al., 2013). This sparsity control has also been beneficial for brain decoding via fMRI data classification (Ng and Abugharbieh, 2011). The sparsity constraint strategy is particularly well-suited to fMRI data given the inherent high dimensionality and intra-subject variability. Moreover, sparsity constraint using total variation penalization (Michel et al., 2012) or anatomically-informed spatiotemporally smooth sparse constraint (Ng et al., 2012) for decoding of fMRI data can explicitly model intra/inter-subject variability, thus resulting in superior performance compared with the least absolute shrinkage and selection operator (LASSO)-based classifier (Michel et al., 2012; Ng and Abugharbieh, 2011; Ng et al., 2012).

The sparsity constraint strategy was recently put into play with rsfMRI data acquired from SZ patients and other neuropsychiatric patients, facilitating the identification of aberrant FC-based attributes, the extraction of distinct and sparse SZ-associated FC networks, and the subsequent application of these attributes and networks to automated classification and diagnosis (Cao et al., 2014; Watanabe et al., 2014). For instance, Watanabe et al. (2014) discovered clinically informative feature sets by using the same data set employed in the current study (see Methods section) via a sparsity constraint with a fused LASSO scheme for the conventional support vector machine (SVM) classifier. Altered FC patterns were prominent in the fronto-parietal networks, the default-mode networks (DMNs), and the cerebellar areas, and the corresponding accuracy was 71.9% (Watanabe et al., 2014).

A deep neural network (DNN) with multiple hidden layers has achieved unprecedented classification performance relative to the SVM and other conventional models (e.g., the hidden Markov model) in various data sets such as image and speech data (Graves et al., 2013; Krizhevsky et al., 2012). This technical breakthrough was accomplished by overcoming the limitations of traditional multilayer neural networks that are based on standard back-propagation algorithms and prone to over-fitting to the training data (Schmidhuber, 2014). More specifically, the distinct characteristics of DNN training encompass (1) unsupervised layer-wise pre-training followed by fine-tuning (Bengio et al., 2007), and (2) stochastic corruption of the input pattern or weight parameters via random zeroing, for example, a denoising autoencoder (Hinton et al., 2012; Vincent et al., 2010). Despite accumulating evidence showing the superiority of the DNN, previous applications of DNN to neuroimaging data are limited to only a few studies (Brosch and Tam, 2013; Hjelm et al., 2014; Plis et al., 2014; Suk et al., 2013). Among the limited attempts to apply the DNN to neuroimaging data, the restricted Boltzmann machine as a building block for the DNN network model has demonstrated its improved capacity to extract spatial and temporal information of fMRI data compared with conventional matrix factorization schemes, such as ICA and PCA algorithms (Hjelm et al., 2014). In addition, Suk et al. (2013) investigated the DNN training strategy by employing a stacked autoencoder (SAE) to discriminate Alzheimer’s disease patients from mild cognitive impairment patients. This was done by using volumetric information derived from structural MRI data combined with cerebral glucose metabolism data obtained by positron emission tomography. More recently, Plis et al. (2014) provided a validation study of DNN applied to several types of neuroimaging data, providing evidence that DNN can learn important features such as disease severity (Plis et al., 2014).

Whole-brain FC patterns from fMRI data have not yet been utilized as input patterns to demonstrate the efficacy of the DNN for classification of SZ or other neuropsychiatric disorders. Therefore, the objective of the present investigation was to enhance the classification accuracy of SZ patients vs. HC subjects by using the DNN classifier and whole-brain FC patterns estimated from rsfMRI data. The DNN has been applied to various data sets, such as image and speech data as well as neuroimaging data, with less than 1,000 input dimensions (i.e., number of nodes in the input layer) (Graves et al., 2013; Krizhevsky et al., 2012). Compared with these data sets, a dimension of the whole-brain FC patterns can easily reach approximately 5,000 when the whole brain is divided into 100 sub-regions. This high dimensionality would be confounded by a lack of straightforward interpretations of whole-brain FC patterns compared with those of speech, image data, and other neuroimaging modalities such as raw fMRI volumes and structural MRI data. Thus, training the DNN using complex and high-dimensional whole-brain FC patterns is inherently challenging. To this end, we evaluated our supposition that classification accuracy can be enhanced by (1) deploying sparsity control of DNN weight parameters and (2) systematically initializing the weight parameters via a pre-training scheme.

We defined a sparsity level of DNN weights as the ratio between a number of non-zero values of DNN weights and a total number of DNN weights (i.e., non-zero ratio). Then, to explicitly control the sparsity of the DNN weights, we developed an adaptive scheme to control the non-zero ratios of the weights between two connected layers to target levels. We then hypothesized that the DNN using the proposed scheme would improve the classification accuracy of SZ patients and HC subjects relative to the DNN without the proposed scheme as well as conventional approaches such as the SVM classifier. This is because hierarchical feature representations (i.e., a transition from lower-to-higher level information) of whole-brain FC patterns derived from rsfMRI data can be obtained from DNN weights with sparsity control and pre-training. Such hierarchical feature representations would not be similarly available from the DNN without the weight sparsity control and pre-training. In addition to evaluation of the classification performance, we assessed the validity of the learned lower-to-higher level features of the DNN classifier by using kurtosis and graph-theoretical modularity measures, as well as spatial correlation coefficients (CCs) between the learned features and the input FC patterns.

Materials and Methods

Overview

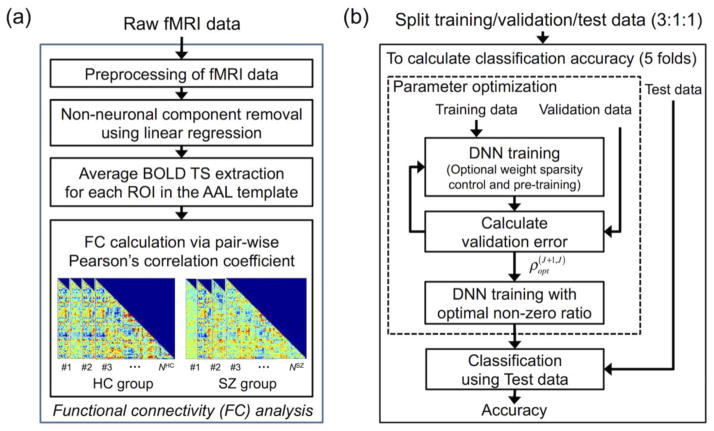

Figure 1 presents an overall flow diagram of the analysis. First, the raw fMRI data were preprocessed, and the whole-brain FC patterns were calculated by using Pearson’s CCs (Fig. 1a). Second, the FC patterns were used as input patterns to a DNN classifier, and the DNN classifier was trained and parameters were optimized by using training and validation data from subjects split from the cross validation (CV) framework during the training phase (Fig. 1b). Finally, a classification of SZ patient or HC subject was performed for each individual in the test data during the test phase (Fig. 1b). In addition, the weights of the DNN classifier were interpreted via qualitative visual inspection and quantitative evaluation.

Figure 1.

Overall flow diagram of the functional connectivity (FC) analysis. (a) Raw functional magnetic resonance imaging (fMRI) data were preprocessed, and the input patterns (i.e., whole-brain FC patterns) were extracted. (b) The FC patterns were used as input for the deep neural network (DNN) classifier. In the nested cross-validation scheme, the DNN classifier was trained by using the training (three out of five folds) and validation (one fold) data for parameter optimization employing optional sparsity control of weights and stacked autoencoder (SAE)-based pre-training. The trained DNN classifier was used to estimate classification accuracy using the test data in the remaining fold. BOLD, blood-oxygenation-level-dependent; ROI, region-of-interest; TS, time series; AAL, automated anatomical labeling; HC, healthy control; SZ, schizophrenia, NHC, number of subjects in the HC group; NSZ, number of subjects in the SZ group; , optimal target non-zero ratio of weights between the Jth and (J+1)th hidden layers of the DNN obtained from the inner loop of nested cross validation using the training and validation data.

Data description

The rsfMRI data from SZ patients and HC subjects were obtained from the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC) website, contributed by the Centers of Biomedical Research Excellence (COBRE; fcon_1000.projects.nitrc.org/indi/retro/cobre.html) and also available on the collaborative informatics and neuroimaging suite (COINS) data exchange (coins.mrn.org/dx) (Calhoun et al., 2011; Scott et al., 2011). A diagnosis of SZ was made by using the Structured Clinical Interview for DSM Disorders (SCID; Diagnostic and Statistical Manual of Mental Disorders, DSM-IV) (First et al., 2012). Exclusion criteria comprised any history of neurological disorders, a history of mental retardation, a history of severe head trauma with a > 5 min loss of consciousness, and/or a history of substance abuse/dependence within the last 12 months. All participants completed a battery of neuropsychological tests, including the Wechsler Test of Adult Reading and the Wechsler Abbreviated Scale of Intelligence (Venegas and Clark, 2011; Wechsler, 1999). The SZ patients were also rated according to the Positive and Negative Syndrome Scale (PANSS) as a severity measure of their SZ symptoms (Kay et al., 1987). The SZ patients (n=72; with the exception of one subject) were all receiving various antipsychotic medications at the time of the study, corresponding to both traditional agents (e.g., haloperidol and perphenazine) and newly developed agents (e.g., olanzapine and risperidone).

A 3-Tesla Siemens Tim Trio scanner with a 12-channel head coil, and a single-shot full k-space echo-planar imaging (EPI) system and ramp sampling correction using the inter-commissural line as a reference were employed to acquire rsfMRI data while their eyes open, where time-of-echo (TE) = 29 ms, time-of-repetition (TR) = 2000 ms, voxel size = 3 × 3 × 4 mm3, in-plane voxel = 64 × 64, 33 slices, and number of volumes = 150. The first five volumes were removed to allow equilibration of the T1-related signal. The remaining EPI volumes were preprocessed using statistical parametric mapping (SPM) software (SPM8; www.fil.ion.ucl.ac.uk/spm) in the order of slice-timing correction, realignment, and spatial normalization to the Montreal Neurological Institute (MNI) template with 3 mm isotropic voxel size, followed by spatial smoothing using an 8 mm isotropic full-width at half-maximum Gaussian kernel. The automated anatomical labeling (AAL) template (Tzourio-Mazoyer et al., 2002) available in the MNI space was re-sliced from a 2 mm to 3 mm isotropic voxel size using SPM8. Thus, each of the 116 AAL regions was readily available in each voxel of the normalized EPI volumes. Additionally, abrupt movements were minimized by utilizing the ArtRepair software toolbox (Raiko et al., 2012) which implements a “volume scrubbing” (Power et al., 2012). Thus, potential adverse effects of head-motion artifacts were minimized during the classification test, as well as during the qualitative/quantitative evaluation of the learned features.

Subjects (n=147 before screening) were excluded from the study if (1) the average displacement due to head motion during fMRI scanning, as estimated from the realignment parameters, exceeded 0.45 mm (Power et al., 2012); (2) the diagnostic results from the DSM-IV criteria were unrelated to SZ (n=4 subjects, as exemplified by late-onset dementia of the Alzheimer’s type as classified by the DSM-IV); and (3) the data acquisition process was incomplete (n=1 subject). From the remaining subjects, equal numbers (n=50) of individuals were assigned to the SZ and HC groups via a pseudo-randomized pick. Table 1 summarizes the sociodemographic information, neuropsychological test results, and clinical characteristics for each of the two study groups.

Table 1.

Summary of sociodemographics, neuropsychological test, and clinical characteristics for each of the HC and SZ groups.

| HC (Mean ± SD, N = 50) | SZ (Mean ± SD, N = 50) | p-value | |

|---|---|---|---|

| Demographics | |||

| Age (years) | 35.50 ± 11.88 | 35.94 ± 13.59 | 0.86 |

| Gender (male/%) | 34/68% | 43/86% | |

| Handedness (right/%) | 48/96% | 42/84% | |

| Ethnicity (Caucasian/%)* | 23/46% | 25/50% | |

|

| |||

| Neuropsychological performance* | |||

| WTAR standard score | 109.93 ± 12.73 | 103.04 ± 13.07 | 1×10−2 |

| WASI verbal IQ | 108.17 ± 9.02 | 100.74 ± 16.63 | 9×10−3 |

| WASI performance IQ | 113.86 ± 12.58 | 104.83 ± 16.18 | 3×10−3 |

|

| |||

| Clinical characteristics | |||

| Age of onset (years) | 20.94 ± 6.95 | ||

| Illness duration (years) | 15.00 ± 11.85 | ||

| PANSS positive | 14.36 ± 4.78 | ||

| PANSS negative | 15.00 ± 5.36 | ||

| PANSS general | 29.42 ± 8.55 | ||

| PANSS total | 58.78 ± 14.35 | ||

| Olanzapine equivalent dose (mg) | 11.00 ± 6.32 | ||

HC: healthy control; SZ: schizophrenia patients; SD: standard deviation; WTAR: Wechsler test of adult reading; WASI: Wechsler abbreviated scale of intelligence; IQ: intelligence quotient; PANSS: positive and negative syndrome scale.

missing values from some subjects were removed in calculating the mean and SD)

FC analysis

The average blood-oxygenation-level-dependent (BOLD) time series (TS) across the voxels in each of the 116 regions of the AAL atlas were linearly detrended and bandpass filtered at 0.004–0.08 Hz. The average TS from each white matter (WM) and cerebrospinal fluid (CSF) area was also extracted and used to exclude non-neuronal components in the BOLD TS. The WM and CSF areas were defined from the voxels with a probability of > 99th percentile in the apriori maps available in the SPM8 software package (Kim et al., 2013; Kim and Lee, 2013). In addition, six motion parameters (three rotations and three translations) obtained from the realignment step were employed as nuisance variables. These non-neuronal confounding factors were regressed out from the average BOLD TS for each AAL region via the least-squares error minimization scheme (Chai et al., 2012; Kim et al., 2015a; Kim et al., 2015b; Song et al., 2011). The Pearson’s CCs were then calculated using the resulting BOLD signals from all possible pairs (n=6,670) of the 116 AAL regions, a triangular portion of the CC matrix (Fig. 1a). The CCs were Fisher’s r-to-z transformed (Rosner, 2010). The z-scored FC levels for each subject were normalized to yield a zero mean and unit variance via pseudo z-scoring. The pseudo z-scored FC levels across all 6,670 pairs (116C2) of the AAL regions were used as input patterns for the DNN classifier.

DNN training with sparsity control of weights

The DNN layers consisted of multiple hidden layers and a softmax layer as an output layer. The target values of the two output nodes in the softmax layer were assigned as [1, 0]T and [0, 1]T for the input pattern from the HC group and the SZ group, respectively. The cost function, J(W) of the DNN for the supervised fine-tuning step was defined using the mean squared error (MSE), L1-norm, and L2-norm terms as follows:

| (1) |

where y(L),{n}(W) is a vector with elements of the output values at the Lth layer for the subject n in the training set, t{n} is the target output values of the subject n (i.e., class information; [1 0]T for HC and [0 1]T for SZ), β(J+1,J)(t) and γ(J+1,J) are the L1-norm and L2-norm regularization parameters, respectively, between the Jth and (J+1)th layer, N is the total number of subjects in the training set, and (L+1) is the number of the layers, including the input layer (i.e., the 0th layer) and the output layer (i.e., the Lth layer).

A learning algorithm of DNN weights was derived from a stochastic gradient descent scheme to this cost function (also termed the fine-tuning step) as follows:

| (2) |

where ΔMSE W(J+1,J)(t) is the first-order derivative of the cost function with respect to the W(J+1,J)(t) weight parameters, or the weights between the Jth and (J+1)th layer, and was previously used in a standard back-propagation algorithm (Bishop, 1995); t is the epoch number (i.e., one epoch was defined as the DNN weight updates derived from using all of the training data); and α(t) is the learning rate at the tth epoch. The learning rate α(t) was initially set to 0.002 (i.e., α(0)=0.002) and then gradually reduced after the first 250 epochs (Bengio, 2013; Darken and Moody, 1992). Note that β(J+1,J)(t) was adaptively controlled to reach a target sparsity level of weights between the Jth and (J+1)th layers, and γ(J+1,J) was fixed to 10−5 to prevent over-fitting of weights (Moody et al., 1995). A total of 500 epochs were used, and the number of hidden nodes was set to 50 for each of the hidden layers. To accelerate the learning procedure, a previous weight update term (or momentum) was added to the current weight update term (Bishop, 1995) as follows:

| (3) |

where t is the epoch number, and the learning rate m of this momentum (a fraction of the previous weight update term) was fixed to 0.1. This momentum term of the weight update accelerates the gradient descent learning to find an optimal point when the gradient of the MSE consistently points to the same direction (Bishop, 1995). The classification results using the DNNs with one to five hidden layers were obtained to test whether more hidden layers lead to better classification performance or saturate/degrade at a certain number of the hidden layers. A semi-batch learning process with a batch size of ten input vectors from ten subjects was utilized. The DNN training algorithm implemented in the publicly available DNN software toolbox was used with the above parameters in the MATLAB environment (github.com/rasmusbergpalm/DeepLearnToolbox).

Proposed scheme for sparsity control of DNN weights

Training of DNN weights is inherently challenging due to multiple hidden layers. This complication can be aggravated when whole-brain rsfMRI FC patterns are employed as input patterns. To overcome this issue, an approach was undertaken to explicitly control the degree of sparsity of the weights, or the weight sparsity, for each of the hidden layers of the DNN. The L1-norm regularization parameter β(J+1,J)(t) was then adaptively changed to achieve the target sparsity level in terms of the ratio of non-zero weights (hence, the lower the ratio of the non-zero weights, the higher the level of the weight sparsity, and vice versa). This approach therefore permits the systematic evaluation of associations between (1) the degrees of weight sparsity in each of the hidden layers during the training phase, and (2) the consequent classification accuracies during the test phase. In our proposed approach, an L1-norm regularization parameter, β(J+1,J)(t), was adaptively changed in each epoch, as follows:

| (4) |

where μ is the learning rate, fixed to a value of 10−5; ρ(J+1, J) is the target non-zero ratio of W(J+1, J); and nzr(·) is a function to account for the non-zero ratio of a vector/matrix. β(J+1, J)(t) was initially set to 10−3 and was bounded to a minimum value of 0 and maximum value of 10−2 during the update. Various target non-zero ratios (ρ(J+1, J) = 0.3, 0.5, 0.7, or 1.0) were tested for each hidden layer to reflect the potentially different optimal sparsity level in each layer. The optimal non-zero ratios for each of the hidden layers were determined among all combinatorial sets of non-zero ratios across the hidden layers when the validation accuracy was maximal. In addition, the reproducibility of the optimal non-zero ratios across the permuted CV sets was evaluated using the intra-class correlation coefficient (ICC) implemented in the MATLAB code available from MATLAB Central (www.mathworks.com/matlabcentral; “ICC.m” by A. Salarian) (Koch, 1982). Classification accuracies were evaluated using the test sets for these non-zero ratios. Note that the weights between the last hidden layer and the softmax layer were trained by using only L2-norm regularization to fully minimize the MSE-based cost function.

Pre-training of DNN weights for initialization

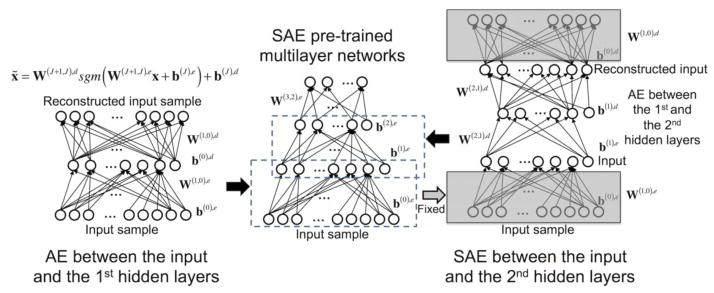

Pre-training of DNN weights as opposed to random initialization has proven its utility to circumvent a local minimum and thus enhances the classification performance (Hinton et al., 2006; Larochelle et al., 2009). Similarly, using whole-brain FC patterns as input sample, we evaluated whether the pre-training of DNN weights would also improve the classification performance. To this end, an autoencoder (AE) algorithm was applied to minimize the reconstruction error of the input sample in the reconstructed input layer (left of Fig. 2), the output of the first hidden layer was used as input to the second hidden layer in the SAE scheme (right of Fig. 2), and the multilayer networks consisted of SAE pre-trained layers (middle of Fig. 2). More specifically, the weights, W(1,0),e, and the bias, b(0),e, for encoding the input sample were trained from the AE between the input layer (i.e., layer 0) and the first hidden layer (i.e., layer 1) to minimize a cost function defined from a MSE between the input and the reconstructed input sample. The weights, W(2,1),e, and the bias, b(1),e, were trained from the AE between the first hidden layer and the second hidden layer, whereby the output of the first hidden layer was used as the input to this AE. Then, the trained weights and bias terms from these AEs were stacked and used as initial weights of the DNN in the subsequent fine-tuning phase using the target output and actual output of the input sample. A random zeroing scheme was adopted for the AE, in which randomly selected elements (approximately 30% of the input pattern) were set to zero (Vincent et al., 2010). The learning rate α(t) in Eq. (2) was initially set to 0.01 and then gradually reduced after 500 epochs (Bengio, 2013). The total number of epochs was set to 1,000 to allow for convergence of the weights. The learning rate of the momentum factor was fixed to 0.1. The L2-norm regularization parameter γ in Eq. (2) was set to 10−5 as in the fine-tuning step. In the DNN without pre-training and with sparsity control, the L1-norm regularization parameter was also adaptively changed using Eq. (4). In a condition without pre-training, uniformly distributed random numbers within the range of were assigned as initial weights for random initialization, where nin and nout corresponded to the numbers of nodes in the input and output layers (Bengio, 2013). Table 2 summarizes four combinatorial scenarios for the training of DNN weights, depending on the use of sparsity control and/or pre-training. To evaluate the efficacy of the pre-training scheme in the DNN, the average learning curves of error rates across all permuted training/validation/test sets with the pre-training scheme were compared with the learning curves obtained without pre-training in the sparsity control-based L1-norm regularization framework. The learning curves of the average non-zero ratio and adjusted L1-norm regularization parameter during the training phase were also compared across the two weight initialization schemes.

Figure 2.

Pre-training-based initialization of deep neural network (DNN) weights. Left: The autoencoder (AE) architecture for the layer-wise pre-training; Right: the stacked AE (SAE) architecture between the input and the second hidden layers using the output of the first hidden layer as an input to the AE; Middle: SAE pre-training-based multilayer networks. SAE-based pre-training is performed using the weights and bias terms trained from the AE of each layer (please refer to the detailed description in the Methods section).

Table 2.

Pseudo codes of the DNN training depending on the use of (a) L1-norm regularization for weight sparsity control and (b) SAE-based pre-training. The DNN training without L1-norm regularization and without pre-training corresponds to a standard back-propagation algorithm.

| Initialization of weights | |||

|---|---|---|---|

| With pre-training | Without Pre-training (i.e., random initialization) | ||

|

| |||

| Weight sparsity control | With L1-norm regularization | ||

|

| |||

| Without L1-norm regularization (i.e. β(J+1,J)(t)= 0) |

|

|

|

DNN, deep neural network; SAE, stacked autoencoder

Classification test

Classification performance was evaluated by using a nested CV framework during a training phase and the consequent test phase (Fig. 1b). In detail, a total of 50 subjects for each of the SZ and HC groups were split into five folds, with ten subjects in each fold for each group. During the training phase using the training data (three out of five folds) and the validation data (one fold), a grid search was conducted for several target non-zero ratios and optimal non-zero ratio parameters were obtained. Once the DNN classifier was trained, the classification performance was estimated by using the test data in the remaining fold. Using this scheme, a so-called “circular analysis” or “double dipping” issue could be prevented (Kriegeskorte et al., 2009). For instance, by using the DNN architecture with one hidden layer for each of the four target non-zero ratios, the DNN training and classification tests were both conducted 20 times, due to the numbers of scenarios required to select the training data (n=5C3) and the validation data (n=2C1). The classification tests were repeated ten times with a randomized split into five data folds, giving a total of 50 available error rates for each of the four target non-zero ratios. The reproducibility of these error rates for each of the conditions (i.e., with/without L1-norm regularization and with/without pre-training) was evaluated using an ICC (Koch, 1982). Potentially significant main effects and/or interactions of these error rates were evaluated for all data via a three-way repeated measures analysis of variance (ANOVA). In the three-way ANOVA, one factor pertained to the use of sparsity control, one factor pertained to the use of pre-training, and one factor pertained to the number of hidden layers of the DNN. The resulting p-value was Bonferroni-corrected for multiple comparisons (i.e., d.f. = 999 due to 50 permuted training/validation/test data sets for each option of the sparsity-control/pre-training/five hidden layers).

SVM-based classification

For comparison with the DNN classifier, a SVM classifier with a linear kernel or a Gaussian radial basis function (RBF) kernel was used as implemented in the LIBSVM software package (www.csie.ntu.edu.tw/~cjlin/libsvm) (Chang and Lin, 2011). To train the SVM classifier, the soft margin parameter, C, and the γSVM parameter to control for RBF kernel size were optimized using the training data (three out of five folds) and the validation data (one fold) via a grid search (i.e., C = 2−5, 2−3, …, and 215, and γSVM = 2−15, 2−13, …, and 23) (Cristianini and Shawe-Taylor, 2000; Lee et al., 2009). The parameters of the SVM classifier were determined optimal when the validation accuracy was maximal, and the optimally chosen parameters across the CV sets were reported. Once the SVM classifier was trained, the classification performance was estimated using the test data in the remaining fold.

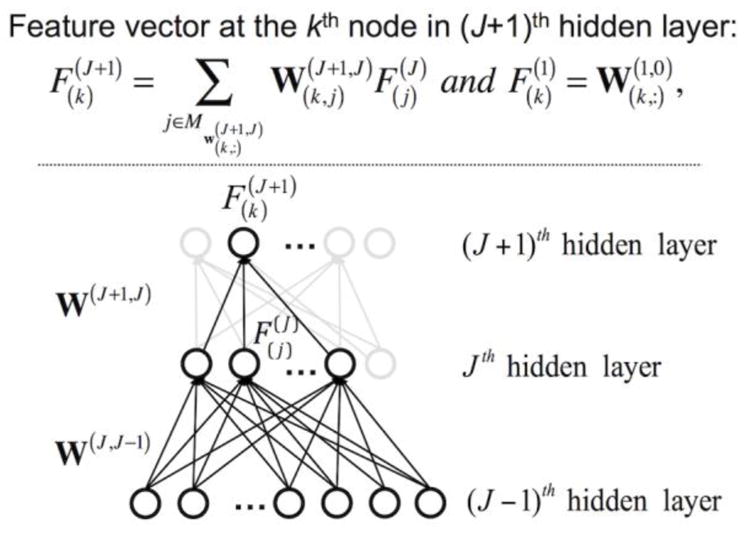

Learned features from the trained DNN and its qualitative interpretation via visualization

To test our hypothesis regarding the lower-to-higher level FC features of the DNN, the DNN weights were trained by taking into account the data from all of the subjects. This scheme avoids the complication of merging DNN weights obtained from various sets of training, validation, and test data, although classification accuracy is not simultaneously available. In this type of DNN training, the average non-zero ratio level (i.e., the weight sparsity) presenting the highest classification performance during the classification test is used to set the target non-zero ratio for each of the layers.

A linear combination of the weights across layers for feature representation from the trained DNN assumes that a hidden node can be characterized by the filters/weights of the previous layer that it is most strongly connected to (Lee et al., 2008a). For example, using the Mixed National Institute of Standards and Technology (MNIST) database of handwritten digits and natural images as inputs to the DNN, simple cell response (i.e., edge filters) in the early visual cortex was extracted from the weights between the input and first hidden layers, and the linear combination of these weights to the second layer resembled corner filters (Lee et al., 2008a). Similarly, the trained weights in each hidden layer of our DNN were visualized by linear projection from the input layer to the corresponding hidden layer (Fig. 3) (Denil et al., 2013; Lee et al., 2008a; Suk et al., 2014). Specifically, a feature vector at the kth node in the (J+1)th hidden layer was defined using the trained DNN weights as follows:

| (5) |

where is a set of the node indices at the Jth hidden layer that presents large magnitude values among the elements of the weight vector from the Jth hidden layer to the kth node at the (J+1)th hidden layer, . The choice of the number of hidden nodes for which weights were linearly combined might alter the characteristics of the DNN features; thus the DNN features were obtained for several numbers of linearly combined weights (i.e., 10, 15, and 30). Then, the reproducibility of the modularity and kurtosis values of the DNN features across these several numbers of the linear combination was evaluated via the ICC. The feature vectors were interpreted as the learned features of the whole-brain FC patterns in the corresponding hidden layer and then visualized using two options including (a) the BrainNet Viewer software (www.nitrc.org/projects/bnv) toolbox (Xia et al., 2013) and (b) the “circularGraph” toolbox available at MATLAB Central (www.mathworks.com/matlabcentral) to clearly illustrate the connectivity across the regions of interest (ROIs).

Figure 3.

Feature vector representation from the trained DNN weights. The learned feature vector, , at the kth hidden node in the (J+1)th hidden layer is defined from a linear combination of the feature vectors in the Jth hidden layer (please refer to the detailed description in the Methods section). Input layer is defined as the 0th layer.

Quantitative interpretation of learned features from trained DNN via spatial correlation and Fisher’s scores

Absolute values of spatial CCs between the learned features of the DNN and either the average FC patterns for the HC/SZ groups or the t-scored group-difference FC patterns (obtained from a two-sample t-test) were calculated. The capability of each hidden layer to discriminate between the two groups was also assessed using Fisher’s scores (Bishop, 1995), defined as follows:

| (6) |

where the mean value of the jth node input at the Jth hidden layer for the HC group ; sgm is the sigmoid function; the variance of the hidden node input for the HC group ; N is the number of hidden nodes; and NHC and NSZ are the number of subjects in the HC and SZ groups, respectively (a superscript indicates a layer index or a group label, and a subscript denotes a node index). The average Fisher’s score across 50 nodes in each hidden layer was taken as the score of the corresponding hidden layer. Hidden layers with higher Fisher’s scores represented layers with a greater capacity to discriminate between the two groups. To assess statistical significance of the hidden node input within each group and between the HC and SZ groups, a one-sample t-test and a two-sample t-test were administered using the hidden node input prior to application of sigmoid function of each hidden node.

The modularity of the learned features in each hidden layer was further analyzed via a graph-theoretical measurement (Girvan and Newman, 2002; Newman and Girvan, 2004) implemented in a graph-theoretical analysis toolbox (Hosseini et al., 2012). A modularity analysis incorporating the learned features of the DNN can reveal how whole-brain FC patterns are decomposed in each of the hidden layers to better discriminate SZ patients from HCs. The kurtosis values of the learned features in each layer were also calculated to evaluate the corresponding sparsity levels via non-Gaussianity and were compared between the layers using two-sample t-test.

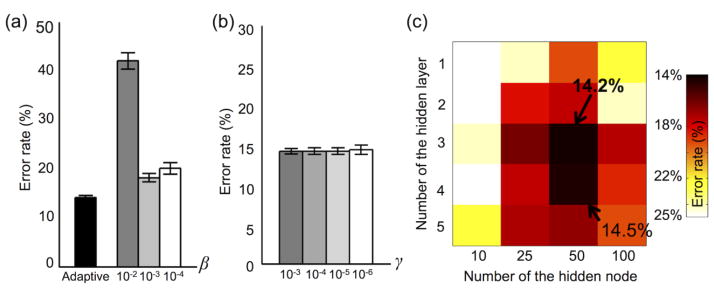

Performance evaluation for several parameter sets

Our proposed continuous update of the L1-norm regularization parameter β(t) based on the targeted non-zero ratio was compared with an approach that fixed the L1-norm regularization parameter to one of several values (i.e., β = 10−2, 10−3, and 10−4), in which the weight parameters were initialized with either pre-training or random initialization. The maximum number of epochs was set to 1,000 to train the DNN with the fixed L1-norm regularization parameter because of the potentially slow convergence. The resulting learning curves of both the classification accuracy and non-zero ratios were collated across all of the permuted 5-fold CV sets. The L2-norm regularization parameter γ was chosen from several additional values (i.e., 10−3, 10−4, and 10−6), and the classification performance was evaluated. The number of hidden layers and the number of nodes in each hidden layer can also be optimized using the training/validation data. Thus, we adopted a grid search to select the optimal number of hidden layers (i.e., among one to five hidden layers) and nodes (i.e., among 10, 25, 50, and 100) in each hidden layer.

Potential bias on classification performance due to head motion

The head motion of SZ patients is generally greater than that of HC subjects (Kong et al., 2014). Despite the volume scrubbing and removal of the motion-related component in the BOLD signal when the FC patterns were calculated in our present study, the classification performance could be positively biased due to head motions because the SZ patients potentially have less volume than the HC subjects. To evaluate this potential bias, the average framewise displacement (FD) for each subject was calculated, and averaged FD values for all subjects in the HC and SZ groups in the training sets were adopted as a regressor in the least-squares estimation. Then, the FC patterns with the confounding factor removed from the average FD values were calculated as follows and used as input samples for the DNN.

| (7) |

where Y and Ŷ (N×P; N is the number of subjects in the training set, and P is the number of elements [i.e., 6,670] in the FC pattern for each subject) are the FC patterns for all subjects in the HC and SZ groups in the training set with and without the FD components, respectively; xFD (N×1) is the FD values for all subjects; 1 is a vector with an element of 1 to adjust a bias of the FC level; and ϕFD and ϕbias are the regression coefficients of the average FD values and the bias obtained from the least-squares estimation, respectively. The estimated coefficient of the FD (i.e., ϕFD) was used to remove the FD component of the FC patterns for the subjects in the test set. The FD-removed FC patterns were used as inputs of (1) the DNN classifier (with three hidden layers and 50 nodes in each hidden layer) by applying both the pre-training and weight sparsity control strategies and (2) the SVM classifiers with linear and RBF kernels. As a result, classification performance obtained from 50 randomly permuted training/validation/test data was reported along with the kurtosis and skewness values of the FC patterns with and without FD components.

Classification test employing FC patterns of functionally parcellated regions from group ICA

The average BOLD signal could potentially smooth out useful subtle patterns between BOLD signals. These hundreds or even thousands of BOLD signals could be very heterogeneous within each AAL region since the BOLD signal in each voxel may come from many different brain networks. To alleviate this potential limitation, an alternative method to functionally parcellate the brain networks was considered using a group ICA (GICA) (Calhoun and Adali, 2012; Calhoun et al., 2001). Subsequently, the time-courses (TCs) of the brain networks (i.e., spatial patterns) of independent components (ICs) were used to calculate FC patterns across these brain networks (Arbabshirani et al., 2013).

This functional parcellation was conducted in the CV framework. In detail, the preprocessed rsfMRI data from the training/validation sets (i.e., subjects) were analyzed using a spatial GICA as implemented in the GIFT toolbox (mialab.mrn.org/software/gift). Two-step dimension reduction was applied via a PCA, and the reduced dimensions in subject and group levels were 100 and 145, respectively. Then, 145 ICs were estimated from the Infomax algorithm (Bell and Sejnowski, 1995). Once the group spatial patterns (SPs) of the 145 ICs were estimated from GICA using the training/validation data to parcellate the whole brain into 145 regions, the SPs and TCs corresponding to the 145 brain regions were estimated using the spatio-temporal dual-regression applied to preprocessed rsfMRI data for each subject in the training/validation/test sets (Silva et al., 2014). The dual-regression estimates were (1) individual TCs using a group SPs as regressors and (2) individual SPs using the individual TCs as regressors (Beckmann and Smith, 2005; Calhoun and Adali, 2012; Du et al., 2012; Kim et al., 2012).

Each voxel was assigned to one of the SPs (i.e., brain regions) of 145 ICs if the t-score of the voxel (available from one-sample t-test using SPs across the training/validation data) of the assigned IC was greater than that of the remaining ICs. The probabilities that each voxel belongs to gray matter (GM), WM, and CSF are readily available in a priori maps of these three brain structures in SPM8. Thus, the proportions of the GM, WM, or CSF areas in each of the brain regions (i.e., SPs of ICs) were calculated by counting the number of voxels of the corresponding structure. Then, the top 116 ICs with greater proportions of GM than WM or CSF (i.e., 116 functionally parcellated brain regions) were selected to match the number of brain regions defined in the AAL template.

The TCs of these 116 ICs were used to calculate the FC patterns of the functionally parcellated brain regions. The six motion parameters (three rotations and three translations) obtained from the realignment step were regressed out from the TCs to remove any potential confounding factors due to head motion. Subsequently, the Pearson’s CC values were calculated and Fisher’s r-to-z transformed. An optimal non-zero ratio between two subsequent layers ρ(J+1,J) was identified via a grid search from 0.3 to 1.0 with an interval of 0.1 (excluding 0.9) to include the grid search range of the non-zero ratios applied to the FC patterns using the AAL template. The classification test was performed using the DNNs with one to five hidden layers and with both pre-training and the proposed weight sparsity control schemes.

Results

Group-level FC patterns

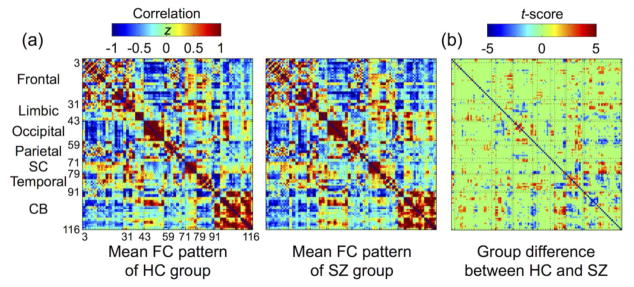

The number of removed and interpolated volumes by volume scrubbing (mean ± the standard deviation, SD: 2.9 ± 3.5 and 5.7 ± 6.6 from the HC and SZ group, respectively) was not significantly different between the two groups (uncorrected p > 0.05) from the Mann-Whitney U test (Mann and Whitney, 1947). Figure 4 illustrates the average FC patterns for each group and the group differences between the HC and SZ groups obtained by using a two-sample t-test. The average FC patterns showed prominent intra-network patterns involving the frontal, visual, subcortical, and cerebellar areas, as well as inter-network patterns involving DMNs and the fronto-temporal, cortical-thalamus, cortical-cerebellar, and subcortical-cerebellar networks (Liu et al., 2008; Salvador et al., 2005). The FC patterns were statistically different (uncorrected p < 0.05) between the two groups in the fronto-temporal, cortical-thalamus, and cortical-cerebellar networks.

Figure 4.

Group-level functional connectivity (FC) patterns. (a) Average FC patterns in the healthy control (HC) and schizophrenia (SZ) groups and (b) group differences in the FC patterns (evaluated via a two-sample t-test with a threshold of uncorrected p-values of < 0.05) are shown. Positive t-scores indicate greater FC level from HC group than SZ group. SC, subcortical area; CB, cerebellum.

Classification performance depending on weight sparsity levels

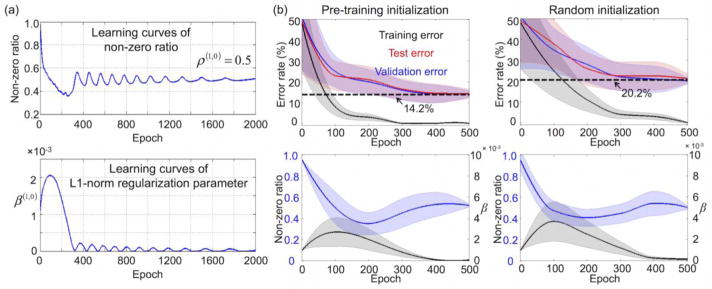

Figure 5a exemplifies the learning curves of (1) the target non-zero ratio ρ(J+1,J) (i.e., 0.5) of the DNN weights and (2) the L1-norm regularization parameter, β(J+1,J)(t), for the DNN with three hidden layers. The non-zero ratio was rapidly converged to the target value after several hundreds of epochs by adjusting β(J+1,J)(t). Figure 5b shows the average learning curves of the DNNs with and without pre-training in the weight sparsity control framework. The faster convergence and the lower minimum error rate that were achieved from pre-training (i.e., 14.2%) compared with that from random initialization (i.e., 20.2%) may indicate that pre-training facilitates the initialization of DNN weights to render the optimization process of the initial weights more effectively as opposed to the random initialization (Erhan et al., 2010).

Figure 5.

(a) Learning curves are exemplified for (i) the non-zero ratio of weights between the input and first hidden layers controlled during the training phase (target value = 0.5), and (ii) the L1-norm regularization parameter adaptation for the sparsity control of weights between the input and first hidden layers using the DNN with three hidden layers. (b) Averaged learning curves of error rates from the training, validation, and test data (top) and the learning curves of the non-zero ratio and L1-norm regularization parameter (bottom). DNN, deep neural network.

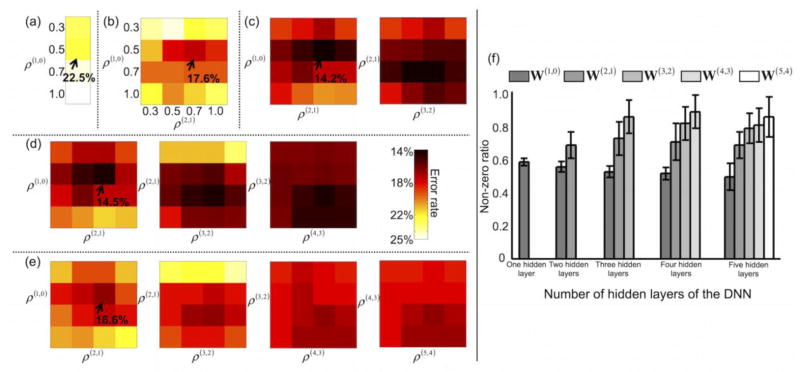

Figure 6 depicts the average error rates obtained from 50 randomly permuted training/validation/test data for each of the target non-zero ratios and for the DNNs with several numbers of hidden layers. The average error rate of the DNN with one hidden layer (22.5%) was lowest when the target non-zero ratio was 0.5 (Fig. 6a). For the DNN with three hidden layers, the average error rate was 14.2% when the target non-zero ratios were 0.5, 0.7, and 0.7 from the first, second, and third hidden layers, respectively (Fig. 6c). Figure 6f shows the optimal (i.e., when the error rate was at its minimum) non-zero ratio for each of the hidden layers obtained via explicit control of the weight sparsity for each of the DNNs with several numbers of hidden layers. Overall, the optimal non-zero ratio of the first hidden layer was consistently smaller than that of the higher hidden layers (Bonferroni-corrected p < 10−6, one-way ANOVA). For example, the average non-zero ratios for the DNN with three hidden layers were 0.52 for the first hidden layer, 0.72 for the second hidden layer, and 0.85 for the third hidden layer. Figure 7 shows the line plots of the non-zero ratio parameters optimally chosen for the DNNs with several numbers of hidden layers. The ICC value for the DNN with three hidden layers was maximal (i.e., 0.82); thus the optimally determined non-zero ratios were most reproducible from the DNN with three hidden layers among those tested.

Figure 6.

Error rates for several target non-zero ratios. Error rates are shown for deep neural networks (DNNs) with (a) one hidden layer, (b) two hidden layers, (c) three hidden layers, (d) four hidden layers, and (e) five hidden layers. (f) The resulting non-zero ratios for each layer (mean ± SD) are presented for the DNNs with one, two, three, four, or five hidden layers. ρ(J+1, J) is the target non-zero ratio of weights W(J+1,J) between the Jth and (J+1)th layer (e.g., the 0 layer is the input layer, and the 1st layer is the first hidden layer). The optimal non-zero ratio in each layer is determined using the validation data after the DNN has been trained using the training data for all combinatorial sets of non-zero ratios across the layers. The error rates are obtained using the test data.

Figure 7.

The non-zero ratio parameters optimally chosen for each of the hidden layers from the DNN with one to five hidden layers. The optimal non-zero ratios were found when the validation accuracy was maximal among the sets of non-zero ratios across hidden layers. The line in each subplot indicates each of the randomly permuted CV sets with training/validation/test data. ICC, intra-class correlation coefficient; CV, cross validation.

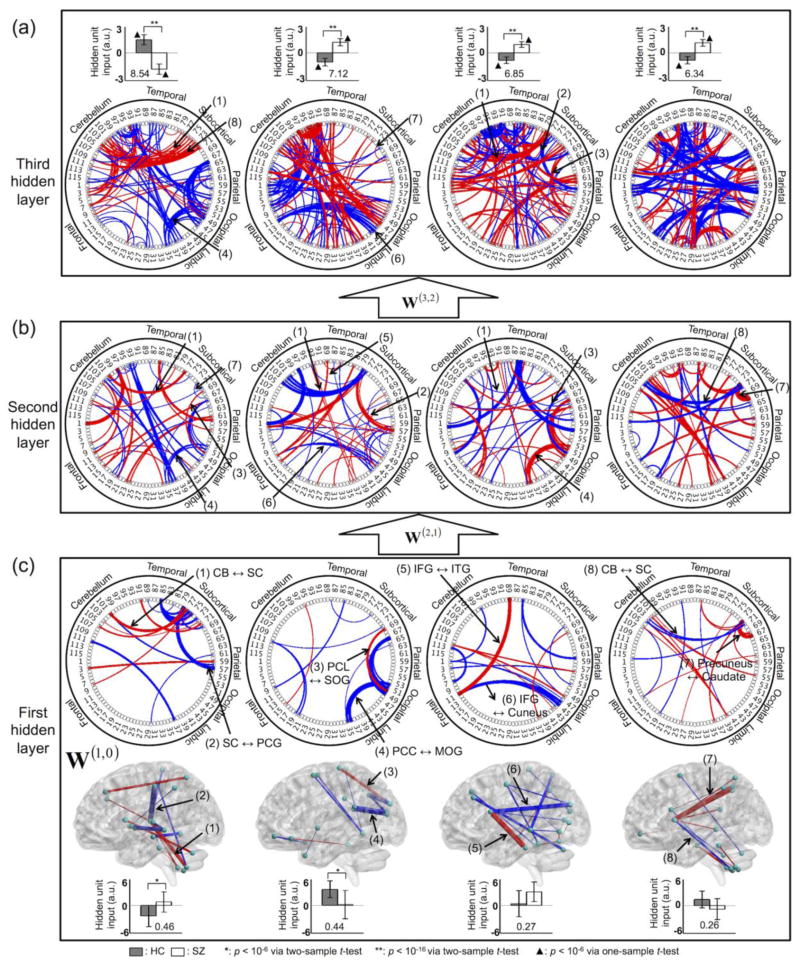

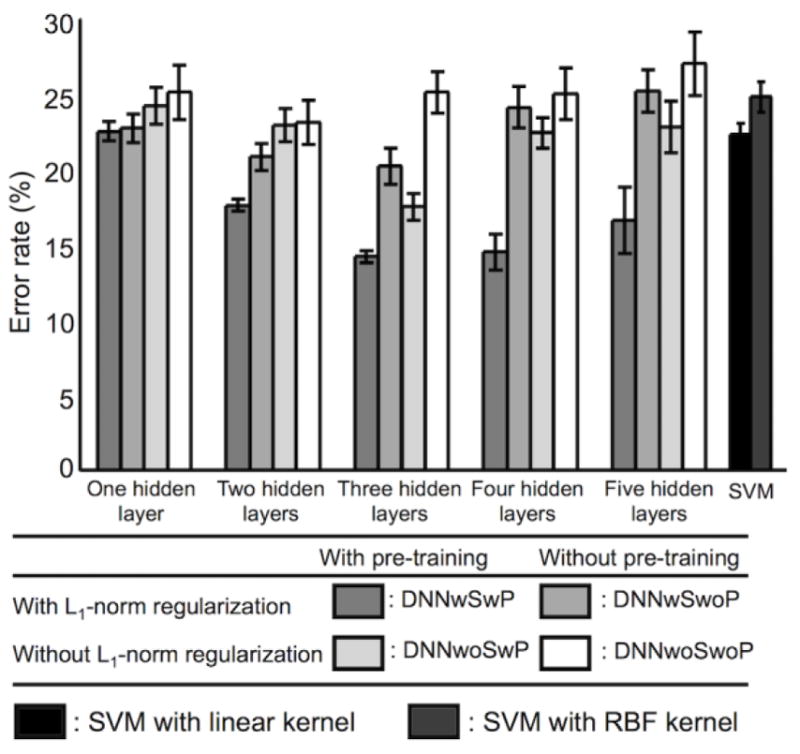

Classification performance depending on pre-training

Figure 8 summarizes the error rates of the DNNs with several numbers of hidden layers and (a) sparsity control and SAE-based pre-training (DNNwSwP), (b) sparsity control but no pre-training (DNNwSwoP), (c) SAE-based pre-training but no sparsity control (DNNwoSwP), and (d) neither sparsity control nor pre-training (DNNwoSwoP). Overall, SAE-based pre-training further reduced the error rates of the DNN compared with random initialization. This trend was particularly evident for DNNs with more than two hidden layers. Average error rates (± the SD) from the DNN with sparsity control and SAE-based pre-training were 22.5 (± 0.7), 17.6 (± 0.4), 14.2 (± 0.4), 14.5 (± 1.2), and 16.6 (± 2.2)% for one, two, three, four, and five hidden layers, respectively. Furthermore, the average error rates (± the SD) from the DNN with sparsity control but no pre-training were 22.7 (± 1.0), 20.8 (± 0.9), 20.2 (± 1.2), 24.1 (± 1.4), and 25.2 (± 1.4)% for one, two, three, four, and five hidden layers, respectively. Error rates from the DNN with a standard back-propagation algorithm (i.e., without sparsity control or pre-training) were 25.1 (± 1.8), 23.1 (± 1.5), 25.1 (± 1.4), 25.0 (± 1.7), and 27.0 (± 2.1)%, respectively. The classification results from the five hidden layers were substantially degraded compared with the results from the three hidden layers with pre-training and sparsity control (Bonferroni-corrected p < 10−2 from a paired t-test; d.f. = 98). Table 3 summarizes the error rates, sensitivities, and specificities for all combinatorial scenarios of the training strategies. The ICC values using the error rates across the pre-training and random initialization methods were greater from the DNN with three (0.49) and four (0.59) hidden layers (i.e., more reproducible) than from the DNNs with one (0.00), two (0.30), and five (0.29) hidden layers. When the pre-training initialization was used, the error rates from the DNN training with/without the L1-norm regularization schemes were reproducible when the DNNs with two (0.48), three (0.32), and four (0.51) hidden layers were deployed rather than the DNNs with one (0.04) and five (0.15) hidden layers. A three-way repeated measures ANOVA revealed that the main effects of sparsity control, pre-training, and the number of hidden layers to the error rates were all statistically significant (Bonferroni-corrected p < 10−7; d.f. = 999). Moreover, significant interactions were observed between (1) the number of hidden layers and the use of the sparsity control (Bonferroni-corrected p < 0.05; d.f. = 499), and (2) the number of hidden layers and the use of the pre-training (Bonferroni-corrected p < 10−7; d.f. = 499).

Figure 8.

Comparison of error rates. Error rates are shown for deep neural networks (DNNs) with (a) L1-norm regularization of weights (i.e., weight sparsity control) and stacked autoencoder (SAE)-based pre-training (DNNwSwP), (b) weight sparsity control but no pre-training (i.e., random initialization) (DNNwSwoP), (c) pre-training but no weight sparsity control (DNNwoSwP), and (d) neither weight sparsity control nor pre-training (DNNwoSwoP) for one, two, three, four, or five hidden layers, as well as for (e) the support vector machine (SVM) with a linear kernel or a Gaussian radial basis function (RBF) kernel. The DNN training neither weight sparsity control nor pre-training corresponds to a standard back-propagation algorithm.

Table 3.

Classification performance in terms of error rate (mean ± SD), sensitivity, and specificity. Sensitivity was defined as the ratio between (i) the number of true positives (i.e., correctly classified SZ patients) and (ii) the sum of the true positives and false negatives (i.e., incorrectly classified SZ patients). Specificity was defined as the ratio between (i) the number of true negatives (i.e., correctly classified HC subjects) and (ii) the sum of the true negatives and false positives (i.e., incorrectly classified HC subjects). The DNN training without L1-norm regularization and without pre-training (i.e., random initialization) corresponds to a standard back-propagation algorithm. The ICC values were obtained using the error rates from the with/without pre-training approaches across all the permuted sets, or using the error rates from the with/without L1-norm regularization schemes across all the permuted sets.

| Number of hidden layer | Weight sparsity control | Initialization of weights | ICC | |

|---|---|---|---|---|

| With pre-training | Without Pre-training | |||

|

| ||||

| Error rate (Sensitivity; Specificity) | Error rate (Sensitivity; Specificity) | |||

|

| ||||

| 1 | With L1-norm regularization

|

22.5 ± 0.7 (77.1; 77.9) | 22.7 ± 1.2 (75.8; 78.8) | 0.00 |

| Without L1-norm regularization | 24.2 ± 1.2 (77.0; 74.6) | 25.1 ± 1.8 (74.4; 75.4) | 0.00 | |

|

|

||||

| ICC | 0.04 | 0.03 | ||

|

| ||||

| 2 | With L1-norm regularization

|

17.6 ± 0.4 (82.6; 82.2) | 20.8 ± 0.9 (79.9; 78.6) | 0.30 |

| Without L1-norm regularization | 22.9 ± 1.1 (76.8; 77.4) | 23.1 ± 1.5 (77.1; 76.7) | 0.00 | |

|

|

||||

| ICC | 0.48 | 0.06 | ||

|

| ||||

| 3 | With L1-norm regularization

|

14.2 ± 0.4 (86.3; 85.3) | 20.2 ± 1.2 (79.8; 79.8) | 0.49 |

| Without L1-norm regularization | 17.5 ± 0.9 (83.0; 82.0) | 25.1 ± 1.4 (74.9; 74.9) | 0.44 | |

|

|

||||

| ICC | 0.32 | 0.22 | ||

|

| ||||

| 4 | With L1-norm regularization

|

14.5 ± 1.2 (85.3; 87.5) | 24.1 ± 1.4 (75.4; 76.4) | 0.59 |

| Without L1-norm regularization | 22.4 ± 1.0 (77.0; 78.2) | 25.0 ± 1.7 (75.1; 74.9) | 0.05 | |

|

|

||||

| ICC | 0.51 | 0.00 | ||

|

| ||||

| 5 | With L1-norm regularization

|

16.6 ± 2.2 (83.3; 83.5) | 25.2 ± 1.4 (75.4; 74.2) | 0.29 |

| Without L1-norm regularization | 22.8 ± 1.7 (77.5; 76.9) | 27.0 ± 2.1 (73.0; 74.9) | 0.06 | |

|

|

||||

| ICC | 0.15 | 0.00 | ||

ICC, intra-class correlation coefficient

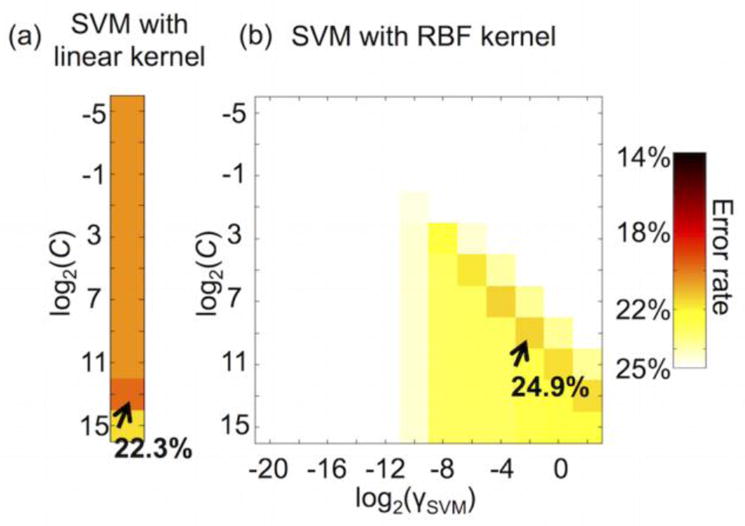

Classification performance from SVM

Error rates for the SVM classifier with a linear kernel (22.3 ± 0.8%) or a RBF kernel (24.9 ± 1.0%) were generally inferior compared with error rates obtained for DNNs with sparsity control and pre-training (Fig. 8). Figure 9 illustrates that the optimal parameters of the SVM from the grid search were 213 for the soft margin parameter C (using a linear kernel), 29 for the soft margin parameter C, and 2−2 for the RBF kernel size γSVM (using an RBF kernel).

Figure 9.

Optimally chosen SVM parameters: (a) the soft margin parameter C from the SVM with a linear kernel and (b) C and the RBF kernel size γSVM from the SVM with an RBF kernel.

Hierarchical features interpreted from DNN weights

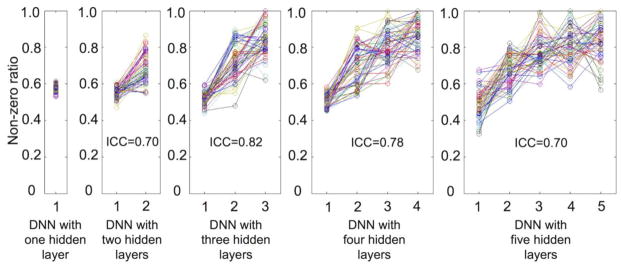

The learned features of the first hidden layer of the DNN with three hidden layers are visualized in Figures 10a–c and Figure S1 (see Tables S1–S4 for details). These learned features represent (1) the reduced FC level from SZ group between the cerebellum and the subcortical areas, and (2) both the reduced and increased FC levels from SZ group between the thalamus and the cortical regions (the first column in Fig. 10c, Table S1) (Çetin et al., 2014). The posterior cingulate cortex (PCC), angular gyrus, paracentral lobule, and occipital gyrus showed aberrant FC patterns (the second column in Fig. 10c, Table S2). The frontal areas, temporal lobe, and occipital gyrus exhibited reduced FC level from SZ group (the third column in Fig. 10c, Table S3), in addition to altered striatal FC patterns (the fourth column in Fig. 10c, Table S4). The learned features of the second and the third hidden layer showed densely populated FC patterns across multiple brain regions (Figs. 10a–b). All of the learned features for each hidden node in each hidden layer are visualized in Figures S1–S3. Overall, lower-level/localized features from the first hidden layer (Fig. S1) and higher-level/global features from the second (Fig. S2) and third (Fig. S3) hidden layers were observed. The first and second hidden node input from the first hidden layer were statistically different between the HC and SZ groups (Fig. 10c; Bonferroni-corrected p < 10−4 from a two-sample t-test; d.f. = 99), whereas all four hidden node input from the third hidden layer were statistically different between the two groups (Fig. 10a; Bonferroni-corrected p < 10−14 from a two-sample t-test; d.f. = 99). Moreover, all four hidden node input from the third hidden layer was statistically different from zero within each group presenting opposite signs across the two groups (Fig. 10a; Bonferroni-corrected p < 10−5 from a one-sample t-test; d.f. = 49), and thus this indicates that the hidden node output with sigmoid node function is readily separable from 0.5.

Figure 10.

Learned features of the top four hidden nodes (sorted by Fisher’s scores) in the (a) third, (b) second, and (c) first hidden layers. In the learned features, all weight values above 0.2 or below −0.2 are shown. Each number in the circular graphs indicates the automated anatomical labeling (AAL) region (as defined in the AAL toolbox for SPM8; odd indices are shown for brevity). The boxplots represent the mean and standard deviation of the corresponding hidden node input values prior to application of the sigmoid node function. The number in each boxplot is the Fisher’s score of the corresponding hidden node input. The thickness of each red or blue line represents the normalized magnitude of the weight parameters (i.e., the thicker the line, the greater the magnitude). HC, healthy control; SZ, schizophrenia; CB, cerebellum; SC, subcortical region; PCG, postcentral gyrus; PCL, paracentral lobule; SOG, superior occipital gyrus; PCC, posterior cingulate cortex; MOG, middle occipital gyrus; IFG, inferior frontal gyrus; ITG, inferior temporal gyrus; a.u., arbitrary unit.

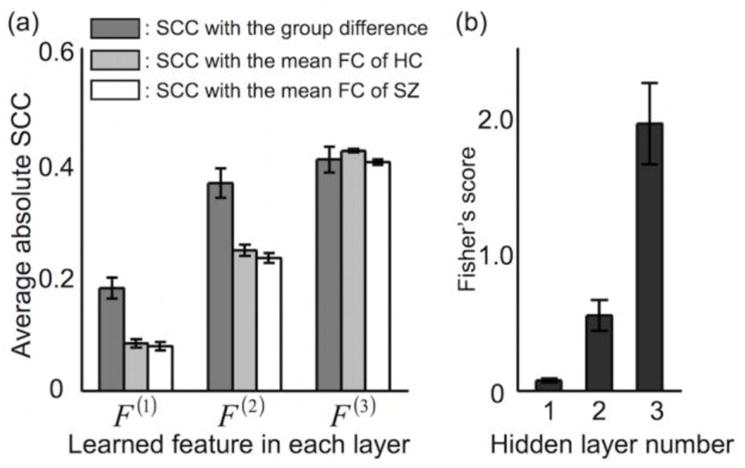

Figure 11a shows the increased average absolute values of the spatial CCs between the learned features and (1) the mean FC patterns from the SZ or HC group, as well as (2) the group differences in the FC patterns between the SZ and HC groups in the higher layer (see Fig. 4b). Meanwhile, Figure 11b shows the average Fisher’s score of each hidden layer for the DNN with three hidden layers. Note that the Fisher’s score of the third hidden layer was significantly larger than that of the first hidden layer (1.94 ± 0.30 and 0.07 ± 0.01 from the third and first hidden layers, respectively; Bonferroni-corrected p < 10−4 from a two-sample t-test; d.f. = 98). Overall, these results support the idea that learned features in the higher layers represent holistic information of the FC patterns associated with each group and/or group-level differences in FC patterns between the two groups. On the other hand, learned features in the lower layers characterize a portion of the FC patterns.

Figure 11.

Quantitative evaluation of the learned hierarchical features across the hidden layers. (a) Absolute values of the spatial correlation coefficients (SCCs) using the learned features in each of the hidden layers and (b) Fisher’s scores across 50 hidden nodes in each of the hidden layers (both data sets, means ± the SD). F(J) indicates the learned features in the Jth hidden layer. All measures were calculated from the trained deep neural network (DNN) with three hidden layers. FC, functional connectivity; SZ, schizophrenia; HC, healthy control.

Table S5 shows the kurtosis and modularity values of the DNN weights obtained for varying numbers of linearly combined weights. The estimated kurtosis values of the learned features for the first hidden layer (7.1 ± 0.9) were significantly greater than the kurtosis values of the second hidden layer (3.9 ± 0.4; Bonferroni-corrected p <10−4 from a two-sample t-test; d.f. = 98) and the third hidden layer (3.4 ± 0.2; Bonferroni-corrected p <10−4 from a two-sample t-test; d.f. = 98) when 15 DNN features in the lower layer were averaged to estimate the DNN feature in the higher layer. The average modularity values of the DNN features decreased from the lower to the higher layers. Across the hidden layers, the kurtosis values were more reproducible than the modularity values (ICC of 0.92 for kurtosis values vs. ICC of 0.44 for modularity values when the number of linearly combined weights/features was 15).

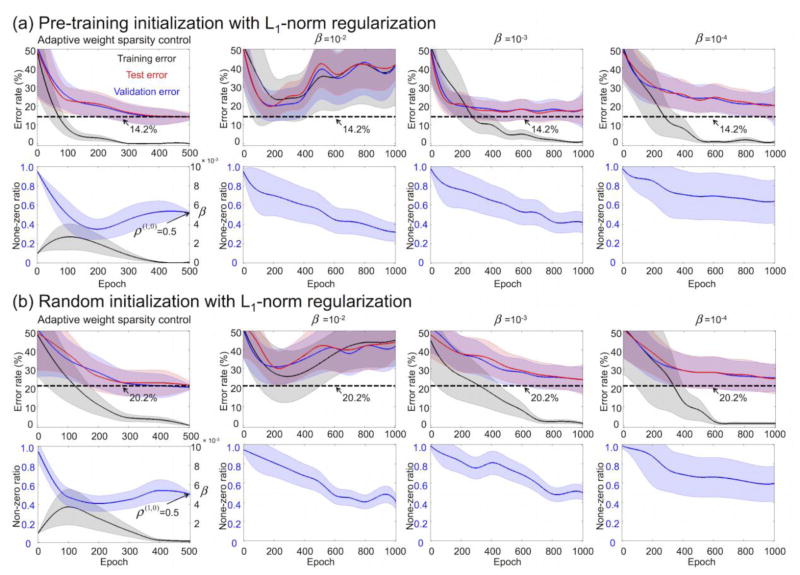

Classification performance for various parameter sets

Figure 12a illustrates the average learning curves, in which the proposed adaptive L1-norm regularization β(t) along with pre-training initialization was superior in terms of final error rate and convergence speed compared with the fixed β. When β was fixed to 10−2, the DNN weights during the DNN training diverged (i.e., the intensity of the DNN weights keeps increasing/decreasing). The adaptive β(t) method enabled a convergence (a) to a minimum error rate (compared with error rates from the fixed β of 10−3 and 10−4) and (b) to the target non-zero ratio (compared with the gradually decreasing non-zero ratio from the fixed β). A similar trend for the learning curves obtained from the adaptive β(t) and fixed β can be observed in the results using the random initialization of DNN weights, but with much higher error rates than the pre-training initialization of DNN weights (Fig. 12b). The average learning curves without pre-training (i.e., random initialization) suggested that the proposed adaptive β(t) was consistently better than the approach using a fixed β in terms of error rate (e.g., 20.2% with an adaptive β(t) vs. 24.3% with a fixed β of 10−3) and convergence speed (e.g., less than 500 epochs to converge with an adaptive β(t) vs. approximately 750 epochs to converge with a fixed β of 10−3) (Fig. 12b). Overall, the performance of the DNN without pre-training was inferior to that of the DNN with pre-training as evidenced by the greater error rate (20.2% vs. 14.2%) and slower convergence speed.

Figure 12.

Average learning curves (with SD) of error rates (first row) and the non-zero ratios (second row) from the first hidden layer (a) with pre-training and (b) without pre-training. The first column denotes the results from the proposed weight sparsity control via adaptation of the L1-norm regularization parameter. The second, third, and fourth columns are the results from the fixed L1-norm regularization parameter (10−2, 10−3, and 10−4, respectively). SD, standard deviation.

Figure 13a shows that error rate obtained from an adaptive control of the L1-norm regularization parameter (i.e., β(t)) to train the DNN with three hidden layers was 14.2 ± 0.4%, which is superior to the error rates (42.1 ± 1.7%, 18.2 ± 0.8%, and 20.1 ± 1.2% using 10−2, 10−3, and 10−4, respectively) from the fixed L1-norm regularization parameter (i.e., β). The L2-norm regularization parameter γ had virtually no impact on classification performance as shown in Figure 13b. Figure 13c shows the classification performance depending on the numbers of hidden layers/nodes via a grid search, and the error rate was minimal (14.2%) when three hidden layers and 50 hidden nodes were used.

Figure 13.

Classification performance obtained from varying sets of parameters: (a) the proposed adaptive L1-norm regularization parameter vs. several fixed L1-norm regularization parameters, (b) the L2-norm regularization parameters, and (c) a grid of the numbers of hidden layers and hidden nodes.

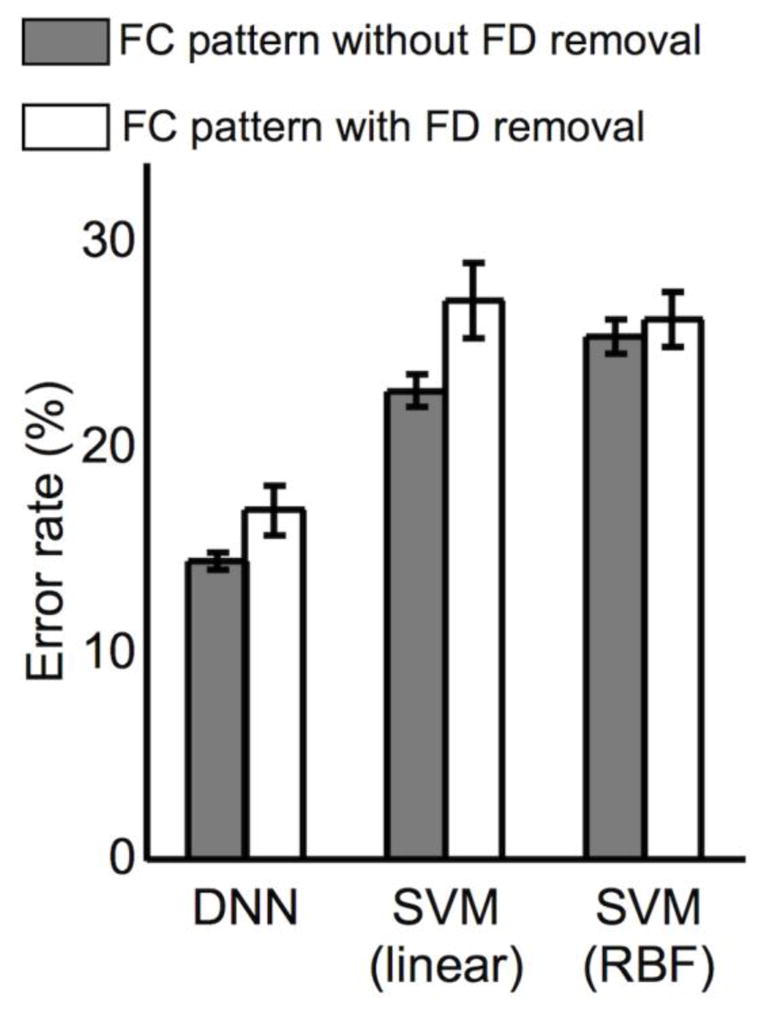

Classification performance bias due to framewise displacement

The average FD values from the HC (0.22 ± 0.09 mm) and SZ (0.28 ± 0.10 mm) groups were statistically different (Bonferroni-corrected p < 0.01 via a two-sample t-test; d.f. = 99). The higher-order momentum values (i.e., skewness and kurtosis) of the FC pattern before and after the FD removal are summarized in Table S6. Overall, the skewness and kurtosis values were significantly reduced after the FD removal, which indicated that the FC patterns were Gaussianized after the FD correction. There was no significant difference in these values between the two groups as measured using a two-sample t-test, and no significant interaction (group × FD) was detected using a two-way ANOVA (uncorrected p = 0.12). Figure 14 illustrates that the classification performance was slightly degraded by removing the FD component in the FC patterns, in which the error rates from the DNN classifier were 14.2 ± 0.4% and 16.6 ± 1.2% using the FC patterns with and without the FD removal, respectively. A similar trend was observed using SVM classifiers with the linear kernel (22.3 ± 0.8% and 26.6 ± 1.8%) and the RBF kernel (24.7 ± 1.3% and 24.9 ± 0.8%).

Figure 14.

Classification performance obtained from the adopted classifiers (i.e., the DNN with three hidden layers and 50 nodes in each hidden layer; the SVM with linear or RBF kernels) using FC patterns with and without framewise displacement (FD) removal. DNN, deep neural network; SVM, support vector machine; RBF, radial basis function.

Classification performance using FC patterns of functionally parcellated brain regions from GICA

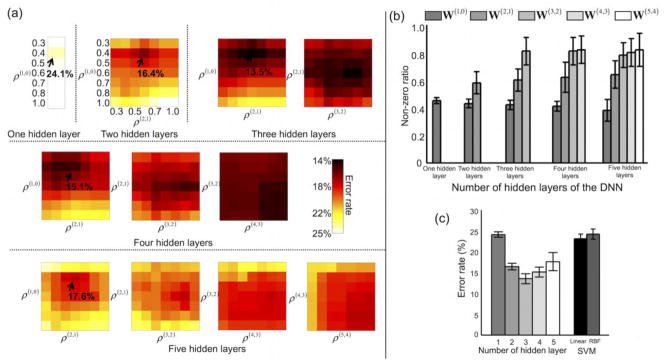

The 145 brain regions were functionally defined using the 145 ICs from the GICA (Fig. S4). The 126 ICs were predominantly located in the GM. Among these ICs, the TCs of the 116 ICs with greater proportions of voxels defined in the GM than those in the ten remaining ICs were further used to calculate the FC patterns. Figure 15a depicts the average error rates obtained from 50 randomly permuted training/validation/test data for each of the target non-zero ratios and for the DNNs with several numbers of hidden layers. The average error rate of the DNN with one hidden layer (24.1%) was lowest, and the optimal non-zero ratio was 0.4. For the DNN with three hidden layers, the average error rate was 13.5%, and the optimal non-zero ratios were 0.4, 0.6, and 0.7 from the first, second, and third hidden layers, respectively. Figure 15b shows the optimal (i.e., when the error rate was at its minimum) non-zero ratio for each of the hidden layers obtained via explicit control of the weight sparsity for each of the DNNs. Figure 15c shows that the error rates of 24.1 ± 0.7%, 16.4 ± 0.8%, 13.5 ± 1.2%, 15.1 ± 1.3%, and 17.6 ± 2.2% were achieved from the DNNs with one, two, three, four, and five hidden layers, respectively, whereas the error rates from the SVM with linear and RBF kernels were 23.1 ± 1.1% and 24.2 ± 1.2%, respectively.

Figure 15.

(a) Error rates for several target non-zero ratios using input FC patterns obtained from the GICA-based functional parcellation approach and using DNNs with several hidden layers. (b) The optimal (i.e., when average error rate evaluated across validation data was at the minimum) non-zero ratios for each of the DNNs (mean ± SD). (c) Minimum error rates from each of the DNNs with several numbers of hidden layers and from SVM classifiers with linear or RBF kernels. GICA, group independent component analysis; DNN, deep neural network; SD, standard deviation; RBF, radial basis function; ρ(J+1,J), target non-zero ratio of W(J+1,J); W(J+1, J), the DNN weights between the Jth and (J+1)th layers.

Discussion

Study summary

In the present study, a DNN classifier trained with pre-training and explicit control of weight sparsity demonstrated significantly enhanced performance for the rsfMRI-facilitated automated diagnosis of SZ patients from HC subjects relative to the SVM classifier. The classification performance was systematically evaluated under various conditions, including (1) differing numbers of hidden layers and hidden nodes, (2) the presence or absence of adaptive L1-norm regularization for weight sparsity control, (3) the presence or absence of SAE-based pre-training for weight initialization, (4) the presence or absence of FD regression to the FC patterns, and (5) anatomically or functionally defined ROIs to calculate the FC patterns.

The key findings of this investigation are summarized as follows: (1) the L1-norm regularization of the DNN weights via explicit sparsity control improved the classification performance; (2) the SAE-based pre-training of DNN weights further enhanced classification performance; (3) the lower-to-higher level features of the whole-brain FC patterns were learned in the lower-to-higher layers of the DNN; and (4) lower-level/local features in the lower layer reflected aberrant FC pairs associated with SZ, whereas higher-level/global features in the higher layer represented FC alterations of SZ patients across the entire brain. The minimum error rate (14.2%) obtained from the DNN with three hidden layers employing both L1-norm regularization and SAE-based pre-training was markedly decreased compared with the minimum error rate (28.1%) obtained from an earlier study using the same data set (albeit with slightly different included subjects) and the SVM-based classifier (Watanabe et al., 2014), as well as that obtained from the SVM classifier in the current study (22.3%).

Classification performance improvement from adaptive L1-norm regularization and pre-training

Both L1-norm regularization of the DNN weights via explicit sparsity control and SAE-based pre-training of the DNN weights contribute to the improved classification performance. Based on the results of a three-way ANOVA with three factors, including the use of sparsity control, use of pre-training, and the number of hidden layers of the DNN, the statistical significance of the interaction between the number of hidden layers and the use of pre-training (Bonferroni-corrected p < 10−7; d.f. = 499) was greater than that between the number of hidden layers and the use of sparsity control (Bonferroni-corrected p < 0.05; d.f. = 499). Pre-training benefitted DNNs with a greater number of hidden layers more than DNNs with a fewer number of hidden layers. L1-norm regularization of the DNN weights can work as an efficient feature selection strategy that yields a sparse solution, particularly in the lower layer, to deal with high dimensionality of the input and intra-subject variability (Michel et al., 2012).

Efficacy of sparsity control of weights to DNN training

It is important to note that our proposed explicit control of the weight sparsity via application of the adaptive L1-norm regularization parameter, β(t), improved the classification performance of the DNN by optimizing the sparsity level in each hidden layer through the use of non-zero ratios of the DNN weights during a training phase. On the other hand, the L1-norm regularization parameter was fixed in previous studies (Kim et al., 2012; Watanabe et al., 2014). Thus, the scheme employed herein allowed a systematic evaluation of DNN classifier performance depending on the sparsity level of the weights for each hidden layer. Consequent results indicated that the error rate was more sensitive to the target non-zero ratios for the first hidden layer than to the ratios for the higher hidden layers, and that the error rate deviated less across the target non-zero ratios in the higher hidden layers (Figs. 6b–e).

The optimization criterion of the proposed DNN that incorporate both L2-norm regularization and L1-norm regularization were similar to those of the elastic net (Zou and Hastie, 2005) in the context of sparse feature extraction and dealing with highly correlated variables. However, there are distinctions between the elastic net and the proposed DNN, in which (1) our proposed regularization scheme is to adaptively update the L1-norm regularization parameter explicitly to reach the target non-zero ratio, as opposed to the use of a constant regularization parameter in the elastic net, (2) optimization criteria with both L1-norm and L2-norm terms were extended to the multilayer networks of the DNN, and (3) the weight initialization was performed using an AE (stacked) in our DNN and using random initialization in an elastic net.

Importantly, an approach enforcing a sparsity level of the weights with L1-norm regularization would not guarantee that the obtained weights (i.e., features) were correct and would, thus, potentially generate multiple false positives. Even for the simpler LASSO scheme, there is no statistical control over false positives since ground truth may not be available. In our study, the maximum validation accuracy in the CV phase was used as a ground truth to identify the optimal weight sparsity levels (i.e., assuming lower false positives at the optimal sparsity level) across the layers among several pre-defined candidates. The fine-tuning of the optimal sparsity level with increased number of candidate sparsity levels in each layer can be implemented at the expense of computational complexity. Future study is warranted to investigate the optimal sparsity level in each of the hidden layers in a more systematic manner than that presented, such as by controlling a smoothing parameter of the adaptive LASSO to reach a desired local false discovery rate (Sampson et al., 2013).

Spatial regularization, in addition to sparsity control, is necessary to include variable patterns across subjects. Thus, consideration of both sparsity control to deal with the intra-subject variability and spatial regularization to deal with the inter-subject variability may further improve the classification performance (Michel et al., 2012; Ng and Abugharbieh, 2011; Ng et al., 2012). As an example, Michel and colleagues (2012) reported that the sparsity in an individual subject enhances prediction accuracy because of the capability of finding sparse and fine-grained patterns.

Efficacy of SAE-based pre-training for DNNs

The adopted cost function of our DNN with multiple hidden layers may be highly non-convex in the parameter space, and multiple distinct local minima or plateaus would exist in the parameter space. The major challenge is that not all of these local minima provide equivalent classification errors, and a gradient descent learning method with random initialization may be trapped into severe local minima or plateaus (Bengio and LeCun, 2007). Initialization of the weights with SAE-based pre-training can be seen as a constraint, that. the weights represent the distribution of the input p(XFC) modeled by an AE, where XFC is an input FC pattern (Bengio et al., 2007). This initialization can work as a good starting point to maximize the conditional probability of the target vector of the class for a given input, p(tclass|XFC) (Bengio et al., 2007). Since the supervised fine-tuning step of Eq. (2) was exactly the same for cases with and without pre-training, the performance degradation of random initialization compared with that of pre-training in our findings may provide empirical evidence in this context. The minimum error rates and lower variance in the error rates from the DNN with pre-training (14.2 ± 0.4%) compared with those using random initialization (20.2 ± 1.2%) in the adaptive L1-norm regularization framework would support the assertion that the DNN with pre-training is more robust to the variability of samples than the DNN training with random initialization (Table 3). The results from the report showing that the unsupervised pre-training scheme is particularly well-suited when the supervised training data are limited (Deng and Yu, 2014) may well explain our findings. Accordingly, SAE-based pre-training can maximize classification performance using the whole-brain rsfMRI FC patterns, particularly for DNNs with multiple hidden layers.

Optimal parameters of adopted classifiers

The optimal non-zero ratio parameters for the DNNs with several numbers of hidden layers were reproducible across the permuted CV sets. The DNN with three hidden layers presented the maximum ICC value as well as the highest classification performance among the DNNs with several different numbers of hidden layers, indicating that this DNN is well-suited to our input data. Compared with the optimally selected parameter of the linear kernel SVM, the SVM with the RBF kernel represented a trade-off between the soft margin and RBF kernel size parameters.

Hierarchical feature representation of whole-brain FC patterns obtained from DNNs with sparsity control and pre-training