Abstract

Active contour models are of great importance for image segmentation and can extract smooth and closed boundary contours of the desired objects with promising results. However, they cannot work well in the presence of intensity inhomogeneity. Hence, a novel region-based active contour model is proposed by taking image intensities and ‘vesselness values’ from local phase-based vesselness enhancement into account simultaneously to define a novel multi-feature Gaussian distribution fitting energy in this paper. This energy is then incorporated into a level set formulation with a regularization term for accurate segmentations. Experimental results based on publicly available STructured Analysis of the Retina (STARE) demonstrate our model is more accurate than some existing typical methods and can successfully segment most small vessels with varying width.

Introduction

Active contour models [1–4] have become very popular in the past few decades, and widely used in a wide range of problems including image segmentation and computer vision, which dynamically deforms object contours based on a predefined energy functional from image information and can, by minimizing this functional, yield smooth and closed boundary contours of the desired objects with sub-pixel accuracy [5–7]. These models can be coarsely categorized into two kinds: edge- [8–11] and region-based models [12–14].

Edge-based active contour models [15, 16] typically utilize image gradients as a driving force to identify object boundaries and attract the contour toward object boundaries. These models can be capable of extracting the boundaries of the desired objects in high-contrast images with clear and strong contour information. However, they may suffer from boundary leakage problem in the presence of image noises, weak contrast and intensity inhomogeneity, which in general occurs in a variety of medical images with varying contrast. In addition, they also cannot correctly find the boundaries of the desired objects if initial curve placement is far away from object boundaries due to the local nature of image gradients. These limitations of edge-based models have restricted their application range and in turn promoted the development of region-based active contour models, which are first introduced by Chan and Vase [13] (called the CV model), and have been subjected to more and more attentions.

Region-based models [17–20] tend to model the foreground and background regions of a given image to guide the motion of contour curve based on the assumption that image intensities are statistically homogeneous in each region, which means that the whole image can be divided into multiple image regions with different statistical properties of image intensities. Therefore, these models are robust against initial curve placement and insensitivity to image noises without using image gradients. However, they may produce erroneous segmentation results, especially in some cases where the desired objects cannot be easily distinguished using statistical information of image intensities, or have an expensive computational cost [16, 21]. These drawbacks, together with the complex problem of keeping a tradeoff among all of weighting parameters, have made these models barely useful for certain small objects, like blood vessels in retinal images.

Recently, a variety of region-based active contour models has been proposed ceaselessly by using local intensity statistical information [22–24] for more accurate image segmentation, especially in the presence of intensity inhomogeneity. Li et al. [25] improved the CV model using regularized distance and a local binary fitting (LBF) model to alleviate these problems caused by intensity inhomogeneity. This method is, to some extent, able to deal with intensity inhomogeneity using local intensity information, but sensitive to initialization and fails to extract the boundaries of object with low contrast. Wang et al. [26] proposed an active contour model driven by local Gaussian distribution fitting (LGDF) energy, which described the local image intensities by Gaussian distributions with different means and variances. The means and variances of local intensities are considered as spatially varying functions to identify the differences between the foreground and background regions. Hence, it has exhibited certain capability of handling intensity inhomogeneity and image noises, and of distinguishing regions with different intensity variances. However, it, using solely local intensity information, may fail to extract completely the desired objects.

In this paper, a novel region-based model is proposed by taking multiple image features rather than single intensity information into account simultaneously to construct a Gaussian distribution fitting energy for accurate image segmentation, called Multi-feature Gaussian distribution fitting (MGDF) model. Specifically, image intensities and their corresponding 'vesselness values' from local phase-based vesselness enhancement [27] are used to construct this fitting energy in the neighborhood of each pixel, and this energy is incorporated into a level set formulation with a regularization term for image segmentations, and evaluated based on publicly available retinal images [28] for the extraction of blood vessels.

The remainder of this paper is organized as follows: Section Background reviews several existing typical active contour models, and then a novel model is introduced in section Methods where local phase-based enhancement and a novel energy functional are presented in detail. Finally, this novel model is evaluated completely in Section Experimental results and analysis, followed by the discussions and conclusions.

Background

There is a variety of region-based active contour models proposed in last few years, but only a few typical models are widely used and improved in image segmentations with promising accuracy. In this section, we will review several typical region-based active contour models for the benefit of the reader.

CV Model

The CV model is first proposed by Chan et al. [13] base on the assumption that images to be segmented are simplified into multiple regions where image intensities are statistically homogeneous. Let Ω ⊂ ℜ2 be the 2D image domain, and I: Ω ⊂ ℜ be a given gray image, for each pixel point x in image domain Ω, the CV model can be expressed by:

| (1) |

where C is the needed contour curve, who can be represented by the zeros level set of Lipschitz function ϕ(x): Ω ⊂ ℜ. c 1 and c 2 are the average intensities of the image I(x) inside and outside C. ∇H(·) is the gradient of Heaviside function H(·), which is usually approximated by with a small positive constant ε to roughly specify the internal and external of ϕ. For simplification purposes, we define H 1(ϕ) = H(ϕ) and H 2(ϕ) = 1-H(ϕ) for the internal and external of ϕ. λ 1, λ 2 and υ are weighting parameters for intensity-based and length penalty terms, respectively. According to the assumption mentioned above, c 1 and c 2 should be quite different due to the statistical homogeneity of image intensities from different regions specified by C. When image intensities are severe inhomogeneous, c 1 may be approximately equal to c 2, causing the model to lose of the capability of identifying the foreground and background regions. In addition, this model solely utilizes the global information of image to drive the motion of curve contour and ignores the local information [29] around a neighborhood of each pixel point.

LBF Model

To overcome the disadvantages of the CV model, the LBF model [25] is proposed by replacing the global information with the local information of image, which can be given by:

| (2) |

| (3) |

where K σ(·) is a Gaussian kernel with standard deviation σ; R(·) is a regularization term to penalize the length of contour curve and the deviation from a signed distance function [10, 17], whose weighting parameters are υ and μ, respectively. f 1(x) and f 2(x) are spatially varying functions to locally approximate the intensities inside and outside the contour curve, which are quite different from the two constants c 1 and c 2 in the CV model due to the localization properties introduced by K σ(·). It plays a key role in highlighting the differences between the foreground and background regions with intensity inhomogeneity. Therefore, the model can alleviate these problems caused by intensity inhomogeneity and achieve satisfactory results. However, this model typically relies on the initial curve placement so as to avoid the local minimums of the energy functional. Furthermore, it is not sufficient enough to use solely f 1(x) and f 2(x) in energy functional for accurate image segmentation, especially in the presence of image noises and intensity inhomogeneity.

LGDF Model

To accurately segment the desired objects, the LGDF model [26] is proposed by using more complex statistical characteristics of local intensities, which characterizes local intensity information via partition of neighborhood defined in a circular window, resulting in the local fitting energy being expressed as:

| (4) |

where the pixel point x is used to define the local circle region, which is divided into N disjoint sub-regions . p i,x(I(y)) denotes a posteriori probability of intensity I(y) specified by a pixel point y in the ith sub-region, whose spatial weighting is given by ω(x-y) relying on the distance between x and y, and they can be given respectively by:

| (5) |

| (6) |

where u i(x) and σ i(x) are local intensity means and standard deviation respectively. a is a constant such that ∫ω(·) = 1 in the local neighborhood of the point x, τ>0 is a scale parameter.

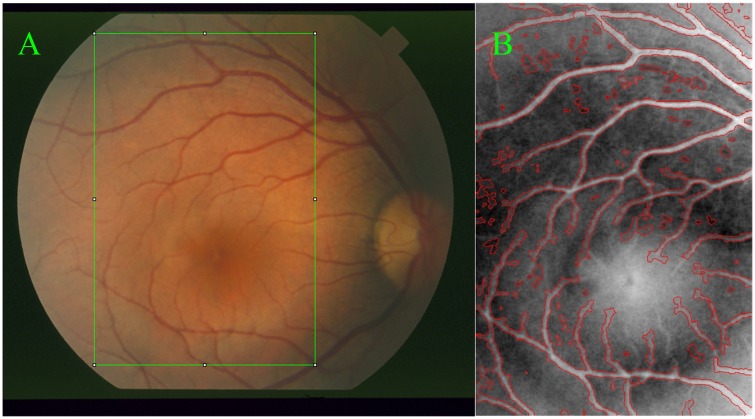

Using local intensity means and variances, this model can achieve a relatively accurate segmentation results in the presence of image noises and intensity inhomogeneity. Besides, it is insensitive to the initial curve placement. However, it may not be adequate in certain cases where more image information rather than single intensity information needs to be considered effectively for image segmentations, especially for small vessels in retinal images. For example, this model is used to extract the vessels in the region specified by a green rectangle shown as in Fig 1(A), and its corresponding segmentation result with red color is given in Fig 1(B), where several small vessels cannot be segmented adequately, but the background pixels have been already segmented incorrectly, confirmed by black circle regions. This means that the LGDF model is not adequate for the extraction of these small vessels where the intensity inhomogeneity is quite severe. To segment accurately these blood vessels, the other image information besides image intensities has to be taken into account simultaneously.

Fig 1. Retinal image and blood vessel extraction by active contour [26].

Image (A) is a randomly chosen region in a retinal image and (B) is the segmentation results of the LGDF model without ideal segmentations pointed by black circles.

Methods

To identify correctly and extract completely small vessels, active contour models have to alleviate these problems caused by intensity inhomogeneity by using more image information, which is generally obtained by different feature descriptors [27, 30] and characterizes the natures of certain objects. In this section, local phase-based vesselness enhancement filter [27] is chosen to extract vesselness features and viewed as a probability-like estimate of vesselness features, called 'vesselness value' in this paper. Larger vesselness value indicates the underlying object is more likely to be a vessel structure. Therefore, these vesselness values together with image intensities can be used to define a novel fitting energy for the extraction of vessels, which will be introduced respectively in the following.

Local phase-based vesselness enhancement

Local phase [27] is, as an important local feature, derived from quadrature filters under the concept of monogenic signals, which can be viewed as a complex filter pair in the spatial domain, and the real and imaginary parts correspond to line and edge filters, respectively. Hence, the angle θ between the real and imaginary parts can act as an important indicator of local features for line and edge information of an image, and has been extensively used for edge detection, symmetry analysis, and vesselness enhancements with promising results.

In the specific implementation of local phase, quadrature filters usually utilize a pair of even and odd filters with phase difference of π/2, denoted respectively by and at scale m and orientation j. For each pixel point x of an image I, the filter response is given by with and , where * denotes a convolution operation and is an imaginary unit. To obtain an orientation invariant phase map, it is necessary to replace the imaginary part with its absolute value so that , and combine all responses of different directions to yield a single response at each scale, defined as with J directions. By combining the responses from all of scales, the overall response P is given below:

| (7) |

where M is the number of scales and β≥1 is a weighting parameter. To make the phase map more regular, the response P needs to be normalized with a small positive number α to produce a final vesselness map, which can be given by:

| (8) |

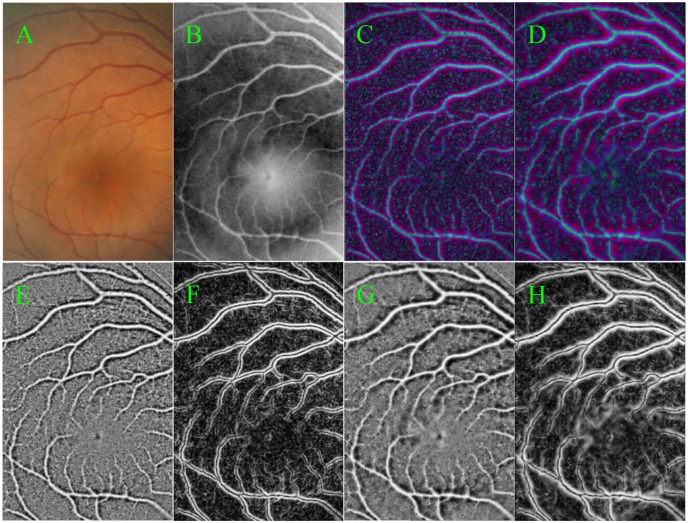

The effects of local phase-based enhancement on retinal images are shown in Fig 2, where a variety of small vessels is highlighted clearly regardless of intensity inhomogeneity. Among of Fig 2, (A) is a given retinal image region for vesselness enhancements, the green channel of (A) is shown in (B). The normalized enhancement results of (B) are shown in Fig 2(C) and 2(D) with scales of 1 and 2, respectively. From these enhanced images, we can find that the positions of blood vessels are highlighted from the background and the enhancement effects are not greatly different at different scales, which also can be seen in their corresponding real and imaginary parts shown in Fig 2(E)–2(H), respectively. Moreover, images (E) and (G) stress the positions of vessels, which are used as a vesselness map; while images (F) and (H) stress the edges of vessels. This vesselness map has a positive value inside the vessels but a negative value in the background, and has a zero value at the edge of the line structures, which can provide an important guide for the extraction of blood vessels.

Fig 2. The results of the local phase-based vesselness enhancement.

(A) is a randomly chosen retinal image region; (B) is the green channel of (A); (C) and (D) are the normalized results at scales 2 and 3, respectively, whose real and imaginary parts are given by (E) ~ (H)

Multi-feature Gaussian Distribute Fitting (MGDF)

Inspired by the LGDF model, a novel local fitting energy is defined by using image intensities and their corresponding 'vesselness values' from the vesselness map, which are viewed as two independent random variables to extend the LGDF model in the hope of improving its performances of image segmentation. This energy can be calculated around the neighborhood of point x by:

| (9) |

| (10) |

| (11) |

where p i,x(I(y)) and p i,x(V(y)) denote the posteriori probability of I(y) and V(y) in the intensity image and the vesselness map, located at the pixel point Y in the ith sub-region. and are weighting parameters for intensity- and vesselness-based terms, respectively. and are local means and standard deviations in the neighborhood of the point x for intensity image and vesselness map, respectively. For the whole image region, this energy can be given by:

| (12) |

To obtain smoother contour curves and more accurate image segmentations, this energy functional needs to be regularized by penalizing its length and deviation from a signed distance function. Furthermore, to keep fine details of the boundaries of the desired objects, we introduce the ϒ-neighborhood term to penalize the presence of isolated connected components in the segmented images according to the previous studies [31], which can be given by:

| (13) |

where γ and κ≥1 are scale parameters for a local neighborhood and the sensitivity of the local neighborhood, respectively. To combining all of these penalty terms, the complete regularization term can be rewritten as:

| (14) |

where η is the weighting parameter for the ϒ-neighborhood term. Adding the new regularization term and setting N = 2, the entire energy of the MGDF model can be given by:

| (15) |

| (16) |

By calculus of variations, it can be shown that the parameters , , and that minimize E MLGDF satisfy the following Euler—Lagrange equations [32–34]:

| (17) |

| (18) |

| (19) |

| (20) |

From Eq (17) to Eq (20), we can obtain

| (21) |

| (22) |

| (23) |

| (24) |

Minimization of the energy E MLGDF in Equation. Eq (15) with respect to ϕ can be achieved by solving the gradient descent flow equation:

| (25) |

| (26) |

| (27) |

where is the derivative of H(ϕ).

Experimental Results and Analysis

Experimental Data

To objectively evaluate the segmentation performances of our model, the public and available image datasets (STructured Analysis of the Retina, STARE) [28] are used for segmentation experiments (S1 File), which can be available at http://www.ces.clemson.edu/~ahoover/stare/. This dataset contain 20 color photographic images of the fundus, 10 of which show evidence of pathology, acquired by a Topcon TRV-50 fundus camera (Topcon, Tokyo, Japan). They have the same size of 605×700, along with two different manual segmentations generated by clinical experts working in the field of retinal image, and the first manual segmentations are used as ground truth for quantitative analysis.

Implementation details

In the course of vesselness enhancement, local phase-based filter was implemented by setting the center frequency to 5π/7, the bandwidth to 2 octaves, the size of filter to 15×15, and scales to 3 respectively, which were recommended by the previous studies [27] according to the nature of retinal images. After obtaining the desired enhancement images, our model is implemented based on image intensities and their corresponding 'vesselness values' from vesselness map with a level set framework, where the partial and temporal derivatives in Eq (25) are discretized as central and forward differences, respectively. And then the level set function ϕ is initialized as a binary step function which takes a negative constant value -c 0 inside a region Ω1 and a positive constant value c 0 outside, which we set to 2 in this paper. Spatial weighting ω(·) can be truncated as a (2τ+1)×(2τ+1) mask for the computational efficiency of our model, where τ is the smallest odd number no less than 2σ. Unless otherwise specified, other parameters in our experiments are set as follows:σ = 3, ,, time step Δt = 0.1, υ = 0.00065×255×255 and μ = η = 1 by default.

Evaluation Criteria

For purposes of quantitative evaluations, segmentation results are compared with their corresponding standard segmentation results and the results by other methods [25, 26] in terms of sensitivity (Se), specificity (Sp), accuracy (Acc) and the area under a receiver operating characteristic curve (Auc) [35], which can be given respectively by:

| (28) |

| (29) |

| (30) |

| (31) |

where tp,fn,tn and fp denote the true positive (correctly identified vessel pixels), false positive (incorrectly identified vessel pixels), true negative (correctly identified background pixels) and false negative (incorrectly identified background pixels), respectively. Among of the four measures, Se and Sp demonstrate the effectiveness of segmentation algorithms: the former for the desired pixels with positive values while the latter for the undesired pixels with negative values. Acc indicates the overall segmentation performance, and Auc reflect the tradeoffs between the Se and Sp according to [35]. In addition, vessel segmentation can be in essence referred as to an imbalanced data classification problem, where vessel pixels are much less than the background pixels. In such a case, the final performances of a method are mainly reflected by Acc and Auc. Paired t-test on these evaluation measures is implemented using the SPSS version 21.0 (SPSS Inc., Chicago, IL, USA). A p value of 0.05 is considered statistically significant, according to these papers [36], in order to evaluate the segmentation performances of different methods.

Experimental Results

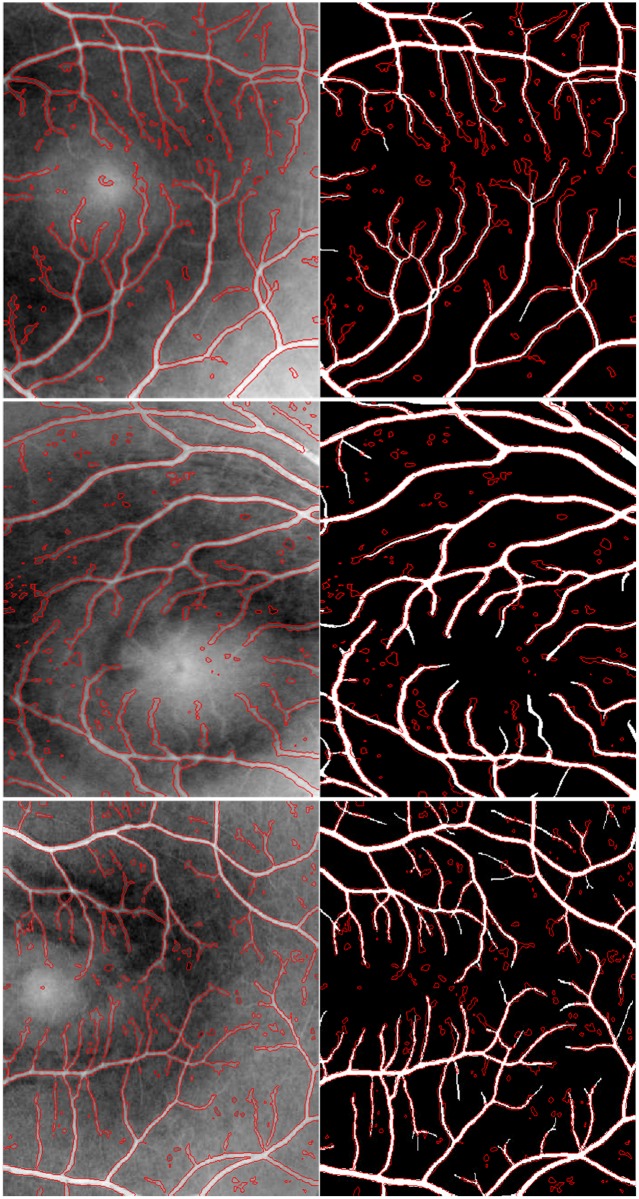

According to the implementation details above, the segmentation results of the MGDF model for three randomly chosen retinal image regions are shown in Fig 3, where the first column corresponds to the segmentation results highlighted by red contour curves, and the second column to the differences between these segmentation results and their corresponding manual segmentations. From Fig 3, it is easy to find that our proposed model is able to accurately extract most of the desired contours of blood vessels, including relatively small parts, some of which cannot be identified visually in original images due to the presence of severe intensity inhomogeneity. Moreover, these obtained contour curves can successfully overlap their corresponding manual segmentations, as shown in the second column in Fig 3. This indicates clearly that our proposed model can be at least comparable to manual segmentation in performance when ignoring the subtle parts of blood vessels. On the other hand, Fig 3 also shows that the segmentation results of our model contain lots of isolated components caused probably by image noises, and they are slightly different from the manual segmentations which present more details for small vessels. However, these components account for a relatively small portion of the whole segmentation results. Therefore, we can roughly reach a conclusion that our proposed model is competent for the extraction of vessels with varying width.

Fig 3. The segmentation results by the proposed model in contrast to the manual segmentations.

The first row corresponds to the segmentation results by the proposed model based on three randomly chosen retinal image regions; and the second row shows the differences between these segmentation results and their corresponding manual segmentations.

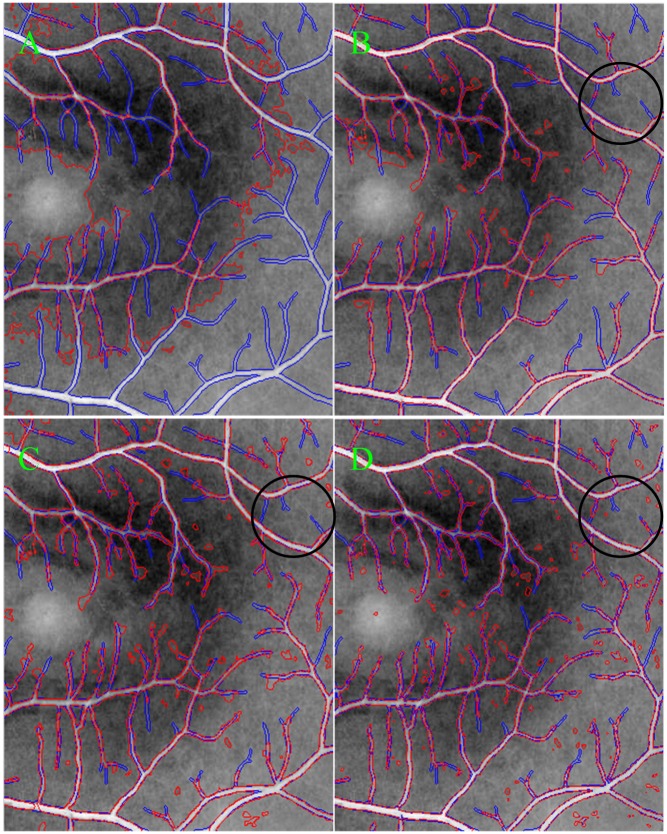

Fig 4 also illustrates the performance differences when comparing our proposed model with several existing typical active contour models (i.e., the CV, LBF and LGDF models), where blue and red colors correspond to the contours of blood vessels obtained by manual and different models (i.e., the CV, LBF, LGDF and our models), respectively. These obtained contour curves by different models can be compared among one another, relative to manual segmentation curve. And it is easy to find that our proposed model can extract more vessels, with varying width, than the other models, which can be seen in the region specified by black circles. As for three other active contour models, their segmentation results are greatly different as shown in Fig 4(A)–4(C), respectively. Specifically, the segmentation result of the CV model, as shown in Fig 4(A), is rough and incorrect because it just involves intensity information in image global region, without considering local image information in the neighborhood of each pixel. When the local information of each pixel is considered during image segmentations, the segmentation results can be greatly improved as shown in Fig 4(B) and 4(C) obtained by the LBF and LGDF models. Both of them are able to extract easily blood vessels with strong contrast with high accuracy. However, they cannot accurately segment a variety of small vessels due to the presence of intensity inhomogeneity and image noises. In addition, the LBF model tends to extract some background information in the neighborhood of vessels with small width, while the LGDF model tends to segment several isolated components.

Fig 4. The segmentation results of different models with red color are compared with the manual segmentation with blue color.

(A) ~ (D) correspond to the CV, LBF, LGDF and MGDF models, respectively.

To compare our model with the most recent methods, the quantitative segmentation results are given in Table 1 in terms of Se, Sp, Acc and Auc. This table shows that the MGDF model has the best performances among these four active contour models with the biggest values of Acc and Auc; while the CV model has the worst performances with the lowest values of Se, Sp, Acc and Auc. As for the LBF and LGDF models, the latter is more accurate than the former, with greater by 0.023, 0.016 and 0.010 for Se, Acc and Auc, respectively.

Table 1. Segmentation performance of different models in terms of Se, Sp, Acc and Auc.

| Method | Se | Sp | Acc | Auc |

|---|---|---|---|---|

| CV | 0.617 | 0.652 | 0.589 | 0.635 |

| LBF | 0.704 | 0.958 | 0.921 | 0.831 |

| LGDF | 0.727 | 0.952 | 0.937 | 0.840 |

| MGDF | 0.758 | 0.965 | 0.952 | 0.862 |

To evaluate their statistical differences, paired t-test on the final performance measures (i.e., Acc and Auc) is implemented based on all of segmentation results. The mean and standard deviation of Acc is 0.589±0.087, 0.917±0.012, 0.933±0.010 and 0.954±0.009 for the CV, LBF, LGDF and MGDF models, respectively. The difference between them is statistically significant with p < 0.002. There are also statistically significant differences in terms of Auc according to the mean and standard deviation of these models for the same p value. This means that phase-based vessel enhancement contributes significantly to the final performance results. This further confirms that in image segmentation, other texture information besides image intensities need also be considered in both global and local image regions, because they in general play an important role in identifying small vessels from image regions where intensity inhomogeneity is severe.

Although our proposed model outperforms these typical active contour models in performance, it is necessary to compare with other state-of-the art vessel segmentation methods. For this propose, several widely used vessel segmentation methods [37–41] are chosen, together with their publicly available results based on STARE database. The segmentation results are seen in Table 2, which clearly shows that our proposed method is has the first highest values for Se and Auc, and third for Acc. This demonstrates that our method can compete with these methods according to the final measures (i.e., Acc and Auc).

Table 2. The performance of different methods in terms of in terms of Se, Sp, Acc and Auc.

Discussion

Experimental results demonstrate that our model is competent for the task of vessel segmentation, and outperforms some existing typical vessel segmentation methods, but it is necessary to discuss the influences of the whole weighting parameters in our model, most of which have been deeply analyzed in previous studies [26, 42]. Hence, our focus is placed on the analyses of the robustness of our model for initial placement and image noises, the parameters for intensity- and vesselness-based terms, and η for the ϒ-neighborhood regularization term respectively.

Robustness to Initialization and Image noises

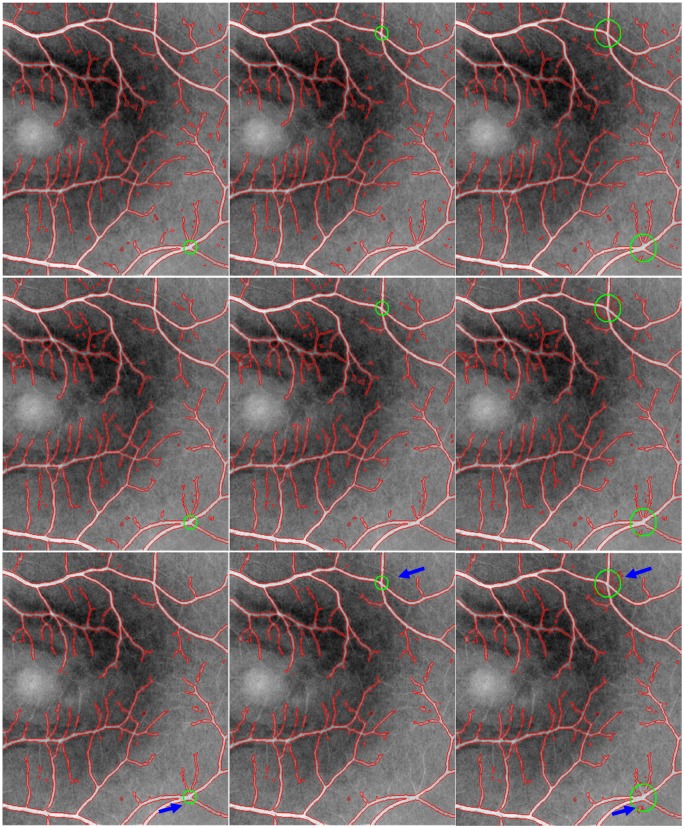

In order to further analyze the robustness of our model to different initial placements and image noises, our model is evaluated based on one randomly chosen image. This image is corrupted by commonly used additive Gaussian noise with different standard deviations presented in different columns. In this section, these deviations are set to 1, 3 and 5, respectively, which are large enough relative to the width of blood vessels. Based on these noise-corrupted images, the segmentation results of our model are obtained using different initial curve placements underlined by green circles, as shown in Fig 5. According to these segmentation results, we can find that the segmentation performances of our model reduces as standard deviations increase, but our model can still extract most of vessels regardless of different initializations, as in the first two rows in Fig 5. Besides, the two rows also show that there exist segmentation differences in the neighborhood of small vessels which are severely corrupted by image noises. However, these differences are small because these positions play a relatively small role in clinical applications. This means that our model is competent for clinical applications in presence of intensity inhomogeneity, image noises and the initial curve placements. Hence, our model is to some extent insensitive to image noises and the initial curve placements.

Fig 5. The segmentation results of our model on a randomly chosen image with different initializations highlighted by green circles.

The image is corrupted by additive Gaussian noise with standard deviations 1, 3 and 5, showed in different rows.

Although the influences of the initial curve placements on our model is small, the size of initial curves can influence the segmentation performances of our model in the neighborhood of initial curves, as shown in the last row in Fig 5, where the bigger initial green circlers are, the more the isolated connected components are, ignoring the impact of image noises. This may be caused partly by the size of the desired objects. Therefore, it is desirable to initialize curves in accordance with the size of desired objects.

The parameters and

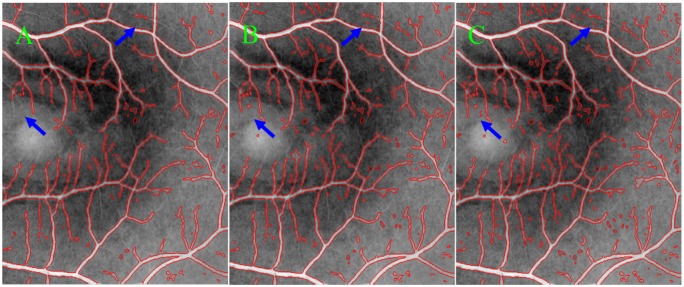

The parameters and are the weights of image regions inside C; while and for image regions outside. They are in general recommended about 1.0 according to the previous studies [42], but adjusted to yield better results according to the natures of intensity images and vesselness maps. In segmentation experiments, these four parameters are set , , which means that image intensities and the vesselness values of vesselness map have the same effects on the motions of curve contours. When the intensity inhomogeneity is severe, the intensity-based terms play a weak role in attracting the contour toward object boundaries. In such case, we should choose relatively large to stress the roles of vesselness maps for the extraction of small vessels in the regions with severe intensity inhomogeneity. However, too large may lead to the extraction of isolated connected components due to the natures of retinal images and vesselness maps, as shown in Fig 6, where blue arrows specified the differences between segmentation results with different values of , i.e., 1.0, 1.05 and 1.1 for Fig 6(A) and 6(B), respectively. Hence, it is a good tradeoff between small vessels and isolated components by setting and .

Fig 6. The Comparison of the segmentation results for different values in the MGDF model.

This value is set to 1.0, 1.05 and 1.1 for (A), (B) and (C), respectively.

The parameter η

The parameter η is used to control the influences of isolated connected components in segmented images so as to keep fine details of the desired boundaries. When setting a large value to η, it, to some degree, can prevent the isolated components, but also reduce the ability of identifying small vessels due to the presence of intensity inhomogeneity and image noises. In addition, this ability is also influenced greatly by the vesselness-based weighting parameters according to the analysis above, which suggests that it is complex to work out the optimal value for η for the correct extraction of desired objects, along with minimal amount of the undesired isolated components. In this paper, the parameter η is chosen to 1.0 as a tradeoff between them.

Conclusions

In this paper, a novel region-based active contour model is proposed and employed to segment vessels in retinal images, which takes image intensities and ‘vesselness values’ as two independent random variables with different means and variances, and then uses the two variables to construct a multi-feature Gaussian distribution fitting energy so as to improve the segmentation performances of the LGDF model. The novel model is evaluated and compared with the existing typical active contours (i.e., the CV, LBF and LGDF models) based on publicly available retinal datasets, the experimental results demonstrate that our model outperforms these typical region-based models in terms of sensitivity, specificity, accuracy and the area under a receiver operating characteristic curve.

Supporting Information

It permits us to use any materials posted at the cited web site http://www.ces.clemson.edu/~ahoover/stare/ for this paper.

(DOC)

Data Availability

All relevant data are within the paper.

Funding Statement

This work was funded by Chinese Academy of Sciences Research and Development Equipment Project (Grant No. YZ201313, YZ201445) (http://www.cas.cn/), and Jiangsu Natural Science Foundation (Grant No.BK20131171) (http://www.jstd.gov.cn/). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Paragios N, and Deriche R. Geodesic active contours and level sets for the detection and tracking of moving objects. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2000; 22: 415–415. 10.1109/34.841758 [DOI] [Google Scholar]

- 2. Mikic I, Krucinski S, Thomas J. D. Segmentation and tracking in echocardiographic sequences: Active contours guided by optical flow estimates. IEEE transactions on medical imaging, 1998; 17: 274–284. 10.1109/42.700739 [DOI] [PubMed] [Google Scholar]

- 3. Guidry D. L, Farag A. A. Active Contours: An overview with applications to motion artifact cancellation in MRI. Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 1998; 20: 644–647. [Google Scholar]

- 4. Mylona E. A, Savelonas M. A, and Maroulis D. Automated Adjustment of Region-Based Active Contour Parameters Using Local Image Geometry. IEEE Transactions on Cybernetics, 2014; 44: 2757–2770. 10.1109/TCYB.2014.2315293 [DOI] [PubMed] [Google Scholar]

- 5. Sethian J. A. A review of level set and fast marching methods for image processing. Modern Methods in Scientific Computing and Applications, 2002; 75: 365–396. [Google Scholar]

- 6. Estellers V, Zosso D, Lai R. J, Osher S, Thiran J. P, and Bresson X. Efficient Algorithm for Level Set Method Preserving Distance Function. IEEE Transactions on Image Processing, 2012; 21: 4722–4734. 10.1109/TIP.2012.2202674 [DOI] [PubMed] [Google Scholar]

- 7. Dong B, Chien A, Mao Y, Ye J, and Osher S. Level Set Based Surface Capturing in 3D Medical Images. Medical Image Computing and Computer-Assisted Intervention—Miccai 2008, Pt I, Proceedings, vol. 5241, pp. 162–169, 2008. [DOI] [PubMed] [Google Scholar]

- 8. Kass M, Witkin A, and Terzopoulos D. Snakes—Active Contour Models. International Journal of Computer Vision, 1987; 1: 321–331. [Google Scholar]

- 9. Caselles V, Catte F, Coll T, and Dibos F. A Geometric Model for Active Contours in Image-Processing. Numerische Mathematik, 1993; 66: 1–31. 10.1007/BF01385685 [DOI] [Google Scholar]

- 10. Li C. M, Xu C. Y, Gui C and Fox M. D. Level set evolution without re-initialization: A new variational formulation. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005; 1: 430–436. [Google Scholar]

- 11. Li C. M, Liu J. D, and Fox M. D. Segmentation of edge preserving gradient vector flow: An approach toward automatically initializing and splitting of snakes. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005; 1: 162–167. [Google Scholar]

- 12. Vese L. A, Chan T. F. A multiphase level set framework for image segmentation using the Mumford and Shah model. International Journal of Computer Vision, 2002; 50: 271–293. [Google Scholar]

- 13. Chan T. F, Vese L. A. Active contours without edges. IEEE Transactions on Image Processing, 2001; 10: 266–277. 10.1109/83.902291 [DOI] [PubMed] [Google Scholar]

- 14. Rousson M, Paragios N, and Deriche R. Implicit active shape models for 3D segmentation in MR imaging. Medical Image Computing and Computer-Assisted Intervention—Miccai 2004, 2004; 3216: 209–216. 10.1007/978-3-540-30135-6_26 [DOI] [Google Scholar]

- 15. Caselles V, Kimmel R, and Sapiro G. Geodesic active contours. International Journal of Computer Vision, 1997; 22: 61–79. 10.1023/A:1007979827043 [DOI] [Google Scholar]

- 16. Li C. M, Kao C. Y, Gore J. C, and Ding Z. H. Implicit active contours driven by local binary fitting energy. 2007 IEEE Conference on Computer Vision and Pattern Recognition, 2007; 18: 339–345. 10.1109/CVPR.2007.383014 [DOI] [Google Scholar]

- 17. Yezzi A, Tsai A, and Willsky A. Medical image segmentation via coupled curve evolution equations with global constraints. IEEE Workshop on Mathematical Methods in Biomedical Image Analysis, 2000: 12–19. 10.1109/MMBIA.2000.852355 [DOI] [Google Scholar]

- 18. Kim J, Fischer J. W, Cetin M, Yezzi A, Willsky A. S. Incorporating complex statistical information in active contour-based image segmentation. 2003 International Conference on Image Processing, 2003; 2: 655–658. 10.1109/ICIP.2003.1246765 [DOI] [Google Scholar]

- 19. Wu H, Appia V, Yezzi A. Numerical Conditioning Problems and Solutions for Nonparametric i.i.d. Statistical Active Contours. IEEE Transactions on Pattern Analysis and Machine Intelligence,2013; 35: 1298–1311. 10.1109/TPAMI.2012.207 [DOI] [PubMed] [Google Scholar]

- 20. Piovano J, Papadopoulo T. Local Statistic Based Region Segmentation with Automatic Scale Selection. 10th European Conference on Computer Vision, 2008; 5303: 486–499. 10.1007/978-3-540-88688-4_36 [DOI] [Google Scholar]

- 21. Piovano J, Rousson M, Papadopoulo T. Efficient segmentation of piecewise smooth images. Conference: Scale Space and Variational Methods in Computer Vision, 2007; 4485: 709–720. 10.1007/978-3-540-72823-8_61 [DOI] [Google Scholar]

- 22. An J, Rousson M, Xu C. Gamma-Convergence approximation to piecewise smooth medical image segmentation. Medical Image Computing and Computer-Assisted Intervention- MICCAI 2007, 2007; 4792: 495–502. 10.1007/978-3-540-75759-7_60 [DOI] [PubMed] [Google Scholar]

- 23. Lankton S, Nain D, Yezzi A, Tannenbaum A. Hybrid geodesic region-based curve evolutions for image segmentation. Medical Imaging 2007: Physics of Medical Imaging, 2007; 6510: U1883–U1892. 10.1117/12.709700 [DOI] [Google Scholar]

- 24. Lankton S. Tannenbaum A. Localizing Region-Based Active Contours. IEEE Transactions on Image Processing, 2008; 17: 2029–2039. 10.1109/TIP.2008.2004611 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Li C. M, Kao C. Y, Gore J. C, Ding Z. H. Minimization of region-scalable fitting energy for image segmentation. IEEE Transactions on Image Processing, 2008; 17: 1940–1949. 10.1109/TIP.2008.2002304 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Wang L, He L, Mishra A, Li C. M. Active contours driven by local Gaussian distribution fitting energy. Signal Processing, 2009; 89: 2435–2447. 10.1016/j.sigpro.2009.03.014 [DOI] [Google Scholar]

- 27. Lathen G, Jonasson J, Borga M. Blood vessel segmentation using multi-scale quadrature filtering. Pattern Recognition Letters,2010; 31: 762–767. 10.1016/j.patrec.2009.09.020 [DOI] [Google Scholar]

- 28. Staal J, Abramoff M. D, Niemeijer M, Viergever M. A, van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE transactions on medical imaging, 2004; 23: 501–509. 10.1109/TMI.2004.825627 [DOI] [PubMed] [Google Scholar]

- 29. Wang H. J, Liu M. Active Contours Driven by Local Gaussian Distribution Fitting Energy Based on Local Entropy. International Journal of Pattern Recognition and Artificial Intelligence, 2013; 27 10.1142/S0218001413550082 [DOI] [Google Scholar]

- 30. Frangi A. F, Niessen W. J, Vincken K. L, Viergever M. A. Multiscale vessel enhancement filtering. Medical Image Computing and Computer-Assisted Intervention, 1998; 1496: 130–137. 10.1007/BFb0056195 [DOI] [Google Scholar]

- 31. Barchiesi M, Kang S. H, Le T. M, Morini M, Ponsiglione M. A Variational Model for Infinite Perimeter Segmentations Based on Lipschitz Level Set Functions: Denoising While Keeping Finely Oscillatory Boundaries. Multiscale Modeling & Simulation, 2010; 8: 1715–1741. [Google Scholar]

- 32. Rosenhahn B. Three-dimensional shape knowledge for joint image segmentation and pose tracking. International Journal of Computer Vision, 2007; 73: 243–262. 10.1007/s11263-006-9965-3 [DOI] [Google Scholar]

- 33. Brox T, Rosenhahn B, Weickert J. Three-dimensional shape knowledge for joint image segmentation and pose estimation. Pattern Recognition, Proceedings, 2005; 3663: 109–116. 10.1007/11550518_14 [DOI] [Google Scholar]

- 34. Brox T, Rousson M, Deriche R, Weickert J. Unsupervised segmentation incorporating colour, texture, and motion. Computer Analysis of Images and Patterns, Proceedings, 2003; 2756: 353–360. 10.1007/978-3-540-45179-2_44 [DOI] [Google Scholar]

- 35. Hong X, Chen S, Harris C. J. A kernel-based two-class classifier for imbalanced data sets. IEEE Transactions on Neural Networks, 2007; 18: 28–41. 10.1109/TNN.2006.882812 [DOI] [PubMed] [Google Scholar]

- 36. Zhao Y, Liu Y, Wu X, Harding SP, Zheng Y. Retinal Vessel Segmentation: An Efficient Graph Cut Approach with Retinex and Local Phase. PLoS ONE, 2015; 10(4). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. You X, Peng Q, Yuan Y, Cheung Y, Lei J. Segmentation of retinal blood vessels using the radial projection and semi-supervised approach. Pattern Recogn. 2011; 44: 2314–2324. 10.1016/j.patcog.2011.01.007 [DOI] [Google Scholar]

- 38. Marin D, Aquino A, Gegundez-Arias M, Bravo J. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imag. 2011; 30: 146–158. 10.1109/TMI.2010.2064333 [DOI] [PubMed] [Google Scholar]

- 39. Mendonça A, Campilho AC. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imag. 2007; 25: 1200–1213. [DOI] [PubMed] [Google Scholar]

- 40. Martinez-Perez M, Hughes A, Thom S, Bharath A, Parker K. Segmentation of blood vessels from redfree and fluorescein retinal images. Med Image Anal. 2007; 11: 47–61. 10.1016/j.media.2006.11.004 [DOI] [PubMed] [Google Scholar]

- 41. Bankhead P, McGeown J, Curtis T. Fast retinal vessel detection and measurement using wavelets and edge location refinement. PLoS ONE. 2009; 7: e32435 10.1371/journal.pone.0032435 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Wang L, Li C. M, Sun Q. S, Xia D. S, Kao C. Y. Active contours driven by local and global intensity fitting energy with application to brain MR image segmentation. Computerized Medical Imaging and Graphics, 2009; 33: 520–531. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

It permits us to use any materials posted at the cited web site http://www.ces.clemson.edu/~ahoover/stare/ for this paper.

(DOC)

Data Availability Statement

All relevant data are within the paper.