Abstract

England and Wales are moving toward an ‘opt out’ model for the use of personal confidential data in health research. Existing research does not make clear how acceptable this move is to the public. While people are typically supportive of health research, when asked to describe the ideal level of control they show a marked lack of consensus over the preferred model of consent (e.g. explicit consent, opt out). This study sought to investigate a relatively unexplored difference between the consent model that people prefer and the model that they are willing to accept. It also sought to explore the reasons for any such acceptance.

A mixed methods approach was used to gather data, incorporating a structured questionnaire and in-depth focus group discussions led by an external facilitator. The sampling strategy was designed to recruit people with different kinds of involvement in the NHS, typically with experience of NHS services. Three separate focus groups were carried out over three consecutive days.

The central finding is that people are typically willing to accept models of consent other than the one they would prefer. Such acceptance is typically conditional upon a number of factors, including: security and confidentiality, no inappropriate commercialisation or detrimental use, transparency, independent overview, and the ability to object to any processing considered to be inappropriate or particularly sensitive.

This study suggests that most people would find research use without any possibility of objection to be unacceptable. However, it also suggests that people who would prefer to be asked explicitly before their data are used for purposes beyond direct care may be willing to accept an opt out model of consent, provided that the reasons for not seeking explicit consent are accessible to them and that they trust their data will only be used under conditions, and with safeguards, that they would consider acceptable even if not preferable.

Keywords: Personal confidential data, Health research, Opt out, Explicit consent, Patient preference, Public attitude

Background

The governance of research access to information in health records is changing in England and Wales. The Health and Social Care Act 2012 has created new powers to mandate the disclosure of personal confidential dataa (PCD) from health care professionals to support indirect care purposes, including health research.b The law allows for the mandated disclosure of confidential patient information from health care professionals, with no requirement for patient consent or opportunity for patient objection. However, notwithstanding the formal legal position, the Secretary of State for Health has undertaken to ensure that patient objection is respected in practice.c This effectively amounts to a move toward a national ‘opt out’ model for much health research using PCD extracted from individual health records and processed within the Health and Social Care Information Centre.

Qualitative and quantitative research conducted on patient attitudes has consistently found individuals to be broadly supportive of health records being used for research purposes. At the same time, people also frequently express a preference for being asked before PCD is disclosed by health professionals for purposes beyond direct care.d When pressed to choose between alternative consent models, for example between opt in and opt out, the picture reported has been fragmented. Opinion has been broadly divided across a range of possible consent models. However, within that broad spectrum of views there has been consistent support by some for disclosure only with explicit consent. A study by the Department of Health found that “about half of the general public (53%) and patients (46%) thought that identifiable data should never be used without consent”e and a meta-study of different published papers concluded that

“there was no consensus on a preferred model either within or across studies, although participants often considered the balance of obtaining consent against the public benefit incurred by unrestricted research. Despite this recognition, many participants maintained that informed consent should always be sought, out of respect for the individual”.f

This might lead one to question the extent to which the increasing adoption of an ‘opt out’ model of consent in England and Wales is acceptable to the public in general. The authors of the meta-study, Hill et al., noted that,

“[a]lthough researchers may wish for easier access to medical records to reduce potential bias and the cost of the consent process, public opinion may not be so permissive”.g

This most recent meta-study also confirmed the findings of studies reporting consistently low levels of public awareness of the research that takes place using health records, and the difficulties presented to certain kinds of research if explicit consent is a requirement.h Hill et al.’s response was to investigate whether providing individuals with information about the research process, and about the problems of selection bias that can be associated with seeking explicit consent to the use of PCD, altered people’s views about the necessity of consent. They found that

“following discussion about selection bias, participant’s views about research without consent became more favourable, with some men changing their opinion and no longer stating the need for informed consent. However, a small minority remained adamant that they always want an opportunity to consent or at least to have an opt out consent option”.i

The result is encouraging for those who favour ‘opt out’ as a model of consent. It also underlines the importance of people being informed about the reasons for adopting ‘opt out’ rather than ‘opt in’ as a model. It suggests that, amongst those who have expressed a preference for explicit consent, there are a number who would be willing to switch their preference if they were clearer on the benefits of doing so for health research. It is also possible that some people would continue to want explicit consent, and if asked would still indicate opt in to be their preferred model, but would nevertheless accept that there is good reason for ‘opt out’, at least in certain circumstances.

The idea that people might recognise a distinction between what they would ideally prefer to happen and what they would be willing to accept, given the costs associated with real-world alternatives, is relatively unexplored in the literature. Although studies to date have found a fragmented picture when investigating which models of consent members of the public want, broader levels of agreement might be found if investigations were to separate the question of acceptability explicitly from the question of preference.

Moreover, understanding what informs acceptance (of a lower level of individual control than would ideally be preferred) by members of the public will – assuming there is a desire for such access to be as widely acceptable to the public as possible – inform development of the criteria of access (without explicit consent). It is also relevant to which conditions and safeguards are highlighted within any campaign to raise public awareness, and presumably acceptance, of research use of PCD without explicit patient consent.j

Objectives

The research had two key objectives. The first was to establish whether there is in fact a difference between two levels of individual control over access to confidential patient data for health research purposes: the level that people are willing to accept, given the implications of different levels of control for what health research is possible, and the level that they would ideally prefer and express as their first choice. The second, if there is a difference, was to understand why people might be willing to accept a different level of control from the one they prefer. Associated with this second objective is understanding what conditions, if any, influence the acceptability of different trade-offs between individual control and health research access to PCD contained within individual health records. This was a pilot study and it was not intended that the results be generalizable. The objective was to test the idea that there might be a difference between preference and acceptance and to begin to explore the potential reasons for any such difference.

Methods

This was a pilot study to explore the reasons that people might give for preferring, and for accepting, different trade-offs between individual control and access for health research purposes: the higher the level of individual control over research access, the more difficult it can be to conduct certain kinds of health research. A mixed methods approach was used to gather data, incorporating a structured questionnaire and in-depth focus group discussions led by an external facilitator. A focus group approach was adopted because it suited the aims of the research; at this pilot stage we were simply seeking to explore individual attitudes and to unpack the concepts of acceptability and preference regarding any unavoidable ‘trade-off’ between the public good of a confidential health service and the public good of health research.

The study was designed and delivered at the University of Sheffield. It involved focus groups with members drawn from active patient or PPI (public and patient involvement) communitiesk and also from the general university population. The intention was to establish, at an early stage, whether or not there is a difference between the level of individual control that people would prefer and the level that they would be willing to accept if it facilitated (conditional) access to personal confidential data for health research purposes. A further aim was to consider, if there was a difference, what reasons people might offer for accepting different trade-offs between access and privacy.

Sample

The sampling strategy was designed to include people with different levels and kinds of involvement in the National Health Service (NHS) and/or health research. Three separate focus groups were planned over three consecutive days; this was deemed an appropriate number given that it was a pilot study and exploratory in nature. We were also operating within time and funding constraints.

Groups 1 and 2 were recruited via a PPI Facilitator for the South Yorkshire Comprehensive Local Research Network (SYCLRN). The PPI Facilitator sent email invitations, using email addresses already held or publicly available, to leaders of established patient groups, with a request that the invitations be passed on to their members. Where contact details of members were publicly available, the Facilitator also contacted group members directly and invited them to take part. All groups contacted were local to the area, to keep travel costs low. The Facilitator was asked to continue recruiting until there were at least 7 members in each of these two groups (in fact, 10 were recruited).

Individuals for Group 3 were recruited by a generic internal email to students and staff of The University of Sheffield. The invitation was electronically signed by the Principal Investigator (PI), who is employed by the university, although it was sent via central university administration. The invitation received a high response, and 10 people (5 staff and 5 students) were invited to participate on a ‘first-come’ basis.

It was expected that members of Groups 1 and 2 would have experience of the NHS that would give those groups collectively ‘above average’ involvement. It was hoped that members of Group 3 would respond to the invitation purely out of interest in the issues to be discussed, and would have what might be described as ‘average’ levels of prior involvement with the NHS. This would have offered an interesting point of comparison between the three groups. However, numbers were always going to be too low to make any claims about views being representative of particular sections of the public or patient populations. In the event, members of Group 3 reported levels of prior involvement that might also be described collectively as ‘above average’. The results of this work are not presented as generalizable either to the public in general or to any particular section of the public or patient community. This needs to be kept in mind when interpreting the results and their significance.

Although a person’s level of involvement was not treated as a variable for the purposes of analysis, it is relevant to know what people’s prior involvement was, as it helps to contextualise their discussion. Each member of a focus group was invited to briefly explain, in a non-identifiable way, the nature and kind of involvement that they had previously had with the NHS. These descriptions were transcribed and are reported within the results section.

Focus groups

Each of the different groups was scheduled from 10.30 am to 2.00 pm with a break for lunch. When people arrived on the day, they were asked to sign a written consent form. Participants were informed that discussion would be audio-recorded and transcribed. It was made clear that no identifiable information would be reported.

From 10.30 am to 12.00 noon, participants were given background information about the legality of using confidential patient information for research purposes without patient consent, and about the significance of the concept of public interest for decisions about such use. They were introduced to different concepts of public interest, including the idea that one conception of public interest would insist that any trade-off between common interests in confidentiality and health improvement be made for reasons that the public would find accessible and acceptable. Participants were then given a simple questionnaire, in the form of a table, to complete, and we broke for lunch.

After lunch, participants were invited to explain and discuss their preferred idea of public interest and their ranking of alternative trade-offs between individual control and access for health research purposes. An exploratory qualitative approach was adopted to elicit the concerns or issues raised by people in relation to alternative trade-offs. The facilitator asked questions to prompt discussion of preferences and to probe the relationship between the preferred level of control and the level(s) of control considered to be acceptable. The discussion was lively in each group, and conversation was generally allowed to flow, with intervention only to prompt participants to clarify responses, to challenge an individual to provide reasons for a position, or to offer an alternative view for the sake of stimulating discussion. At the end of the discussion, participants were asked to complete the questionnaire again so that any shift in opinion could be identified. The questionnaires, which contained separate columns for morning and afternoon responses, were returned anonymously at the end of the day, marked only with the group number for the purposes of comparative analysis.

Questionnaire

The questionnaire asked participants to rank in order of preference (1 = most preferred, 4 = least preferred) four consent models controlling access to personal confidential data (PCD) for health research purposes, each representing a different trade-off between individual control over access to PCD and the facilitation of health research. For the purposes of the discussion, PCD was described as an individual’s identifiable health information. The four models are summarised in Table 1.

Table 1.

Four models of personal confidential data disclosure for health research

| Model | Description |
|---|---|
| Model 1 | Information should be disclosed without explicit consent and with no opt out |
| Model 2 | Information should be disclosed without explicit consent but with opt out permitted for sufficient reason l |
| Model 3 | Information should be disclosed without explicit consent but with opt out permitted for any reason |
| Model 4 | Information should be disclosed only with explicit consent |

In addition to ranking these four options in order of preference, participants were also asked to indicate in a separate column whether they considered each of the alternatives to represent either an acceptable or unacceptable trade-off between individual control and access for health research purposes. They were asked to put a “Y” next to each alternative that they considered to be acceptable (regardless of how they had ranked it in order of preference) and “N” next to each alternative that they considered to be unacceptable (again regardless of any order of preference).
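The ranking and acceptability columns can be pictured as one small record per respondent per session. The sketch below is a hypothetical Python representation, not the instrument the study used (participants completed a paper table, and the data were analysed in SPSS); the class name, field names and validation rules are assumptions chosen for illustration. It enforces the two instructions given to participants: each model receives a distinct rank from 1 to 4, and each model receives its own Y/N acceptability judgement regardless of its rank.

```python
from dataclasses import dataclass

MODELS = (1, 2, 3, 4)  # Models 1-4 as summarised in Table 1


@dataclass
class QuestionnaireResponse:
    """One respondent's answers for one session (hypothetical structure)."""

    group: int                   # focus group number, the only marker on each form
    session: str                 # "morning" or "afternoon" column of the table
    ranks: dict[int, int]        # model -> rank (1 = most preferred, 4 = least preferred)
    acceptable: dict[int, bool]  # model -> True for "Y", False for "N"

    def __post_init__(self) -> None:
        if self.session not in ("morning", "afternoon"):
            raise ValueError("session must be 'morning' or 'afternoon'")
        # Every model must be ranked, using each of ranks 1-4 exactly once.
        if set(self.ranks) != set(MODELS) or sorted(self.ranks.values()) != [1, 2, 3, 4]:
            raise ValueError("ranks must assign each rank 1-4 to exactly one model")
        # Acceptability is judged separately for every model, whatever its rank.
        if set(self.acceptable) != set(MODELS):
            raise ValueError("an acceptability judgement is needed for every model")

    def first_choice(self) -> int:
        """The model ranked 1 (most preferred)."""
        return min(self.ranks, key=self.ranks.get)

    def accepts_only_first_choice(self) -> bool:
        """True if the most preferred model is the only one marked acceptable."""
        return [m for m in MODELS if self.acceptable[m]] == [self.first_choice()]


# A hypothetical respondent who ranks Model 1 first and accepts no other model.
example = QuestionnaireResponse(
    group=2,
    session="afternoon",
    ranks={1: 1, 2: 2, 3: 3, 4: 4},
    acceptable={1: True, 2: False, 3: False, 4: False},
)
print(example.accepts_only_first_choice())  # True
```

Keeping preference and acceptability as separate fields mirrors the design choice of the questionnaire itself: a low rank does not imply an "N".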

Analysis

The quantitative data from the questionnaires were recorded and analysed using SPSS.m One-way frequency tables were used for descriptive analysis of the key concepts, and bivariate analysis was conducted using 2×2 contingency tables. All variables were nominal or ordinal, so appropriate non-parametric statistical tests were selected. For the qualitative data, the PI entered the de-identified transcripts into the Nvivon software package immediately after the focus group sessions. The transcripts were coded and a process of thematic analysis was used to identify emergent themes and patterns and to extract any particularly cogently expressed opinions on different issues (Crow and Semmens 2008). The coding was carried out independently by two coders (the authors), and the emergent themes were then compared and refined in discussion. In total, 40 coded themes were finally agreed upon.
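The paper does not say which non-parametric test was applied to the 2×2 tables. As one illustration only, the sketch below assumes a table that cross-tabulates high preference (first or second) against low preference (third or least) for a single model with the Y/N acceptability judgement, and applies Fisher's exact test, a common choice when cell counts are as small as they are in a pilot with 28 participants. The function names and the choice of test are assumptions, and the example pairs are invented, not the study's data.

```python
from math import comb


def cross_tabulate(pairs):
    """Build a 2x2 table for one model from (rank, accepted) pairs.

    Rows: high preference (rank 1 or 2), low preference (rank 3 or 4).
    Columns: acceptable ("Y"), not acceptable ("N").
    """
    table = [[0, 0], [0, 0]]
    for rank, accepted in pairs:
        row = 0 if rank <= 2 else 1
        col = 0 if accepted else 1
        table[row][col] += 1
    return table


def _table_probability(a, row1, col1, n):
    """Hypergeometric probability of top-left count `a` with all margins fixed."""
    return comb(col1, a) * comb(n - col1, row1 - a) / comb(n, row1)


def fisher_exact_two_sided(table):
    """Two-sided Fisher's exact test p-value for a 2x2 table of counts."""
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d
    p_observed = _table_probability(a, row1, col1, n)
    # Every table with the same margins that is no more probable than the
    # observed one counts towards the p-value; the small tolerance guards ties.
    lowest, highest = max(0, row1 + col1 - n), min(row1, col1)
    probabilities = [_table_probability(x, row1, col1, n) for x in range(lowest, highest + 1)]
    p_value = sum(p for p in probabilities if p <= p_observed * (1 + 1e-7))
    return min(1.0, p_value)


# Invented (rank, accepted) pairs for one model, for illustration only.
example_pairs = [(1, True), (1, True), (2, True), (2, False),
                 (3, True), (3, False), (4, False), (4, False)]
table = cross_tabulate(example_pairs)
print(table)                          # [[3, 1], [1, 3]]
print(fisher_exact_two_sided(table))  # about 0.486
```

An exact test avoids the large-sample approximation behind a chi-square test, which is unreliable when expected cell counts fall below about five.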

Results

In total, 28 of the 30 invited participants turned up and took part in the focus groups. One person gave their apologies in advance and one person did not contact the research team to offer explanation for non-attendance.

Each of the three groups differed in terms of its members’ experience with the NHS. Gender, but neither age nor ethnicity, was recorded for each participant. Prior experience of the NHS, or of research involving NHS records, was described by participants in their own words, in a non-identifiable way, at the beginning of the session.

Group 1 (Tuesday, n = 9) was a mixed group consisting of 8 women and 1 man. It included a member of a local consumer research panel for cancer; 2 carers (one with extensive experience of mental health caring); 2 former members of Community Health Councils (one of whom was a former health researcher and the other a member of a PPI group); a member of a Health Panel; a Patient Advocate on Research Groups who was also a REC member; a researcher with experience of using patient data; and one other who self-described as ‘involved with cancer research’.

Group 2 (Wednesday, n = 10) consisted of people with primary care involvement. It included 8 people who sat on GP practice or local area patient participation groups (3 of whom were now involved with a local CCG, 1 of whom indicated previous research experience, and 2 of whom were also involved in local quality assurance). In addition, one member reported involvement in the local quality assurance of out-of-hours GP services, and one member reported involvement with both a local CCG and a health charity. All were mature adults and there was an equal gender split (5 male, 5 female).

Group 3 (Thursday, n = 9), recruited through the University, had the broadest range of ages (from young adult upwards) and the broadest mix of previous NHS involvement. Four people had experience only as patients, although one of those described their previous experience of NHS care as ‘extensive’. One was a medic, one was an Allied Health Professional, one was a mathematician engaged in research involving patient data, one had responsibility for considering research protocols involving collaboration with the NHS, and one described previous NHS involvement as “limited” but reported an active interest in the ethics of using data for secondary purposes. Of the 9 participants, 4 were women and 5 were men.

Stage 1: Quantitative analysis

The quantitative data were used to address the first research question: Is there a difference between what people prefer and what they are willing to accept? The analysis was conducted in three parts and these are reported in this section:

i) Identification of the most/least preferred models

ii) Identification of the acceptable/non-acceptable models

iii) To what extent do respondents who express a low preference for a model display a willingness to accept it?

Participants completed the questionnaire both before and after the round-table discussion. Although assessing change was not a central objective of this study, we felt it important to analyse the morning and afternoon data separately in order to assess any aggregate changes in preference for, and acceptability of, the four models. Previous studies using a similar focus group/dialogue approach have shown that changes in opinion about consent in research can be expected at the individual level, although not necessarily at the aggregate level.o

We found that a large proportion of respondents changed their views: only 8 people (out of 28) did not change their answers at all. However, when the Wilcoxon signed-rank test (a non-parametric alternative to the paired t-test, which does not require normally distributed variables and is suitable for these variable types) was used to assess whether there was a significant change between morning and afternoon scores, none of the eight variables (preference and acceptability for each of Models 1–4) had a statistically significant z score. The afternoon discussion therefore did not produce a statistically significant change in the aggregate attitudes of respondents, even though there were clearly some shifts in perspective at the individual level.
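The morning-versus-afternoon comparison pairs each respondent's two answers. As a sketch of the calculation behind the reported z scores, the function below computes the normal-approximation z statistic for the Wilcoxon signed-rank test in plain Python: zero differences are dropped, absolute differences are ranked with average ranks for ties, and the variance includes the usual tie correction, with no continuity correction. This is an illustrative reimplementation, not the SPSS procedure the authors used; statistical packages differ in details such as the sign of z and whether a continuity correction is applied, the normal approximation is itself rough for very small samples, and the example scores are invented.

```python
from math import sqrt


def wilcoxon_signed_rank_z(morning, afternoon):
    """Normal-approximation z for the Wilcoxon signed-rank test on paired scores.

    Positive z means afternoon scores tend to be higher than morning scores.
    """
    # Paired differences; zero differences carry no sign and are dropped.
    diffs = [a - m for m, a in zip(morning, afternoon) if a != m]
    n = len(diffs)
    if n == 0:
        return 0.0

    # Rank |differences| from 1 to n, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_correction = 0.0
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        tied = end - start + 1
        tie_correction += (tied ** 3 - tied) / 48
        start = end + 1

    # W+ is the sum of the ranks attached to positive differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_correction
    return (w_plus - mean) / sqrt(variance)  # no continuity correction


# Invented morning and afternoon ranks for one model (not the study's data).
morning_ranks = [1, 2, 3, 3]
afternoon_ranks = [2, 3, 4, 4]
z = wilcoxon_signed_rank_z(morning_ranks, afternoon_ranks)
print(z)  # 2.0; |z| >= 1.96 would be significant at the 5% level (two-sided)
```

With 28 participants, many of whom shifted by only one rank, heavy ties are expected, which is why the tie correction matters here.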

Our discussion did not focus specifically on selection bias and, as Hill et al. speculate, the more specific nature of the information provided within their study might account for its greater influence upon aggregate attitudes.p This might prove relevant to the content of any information provided more generally about the adoption of opt out as a model of consent. Although not statistically significant, the changes in preference and acceptance for the four models between the morning and afternoon sessions are discussed in the sections that follow, since they may inform future research.

i) What were the most/least preferred models?

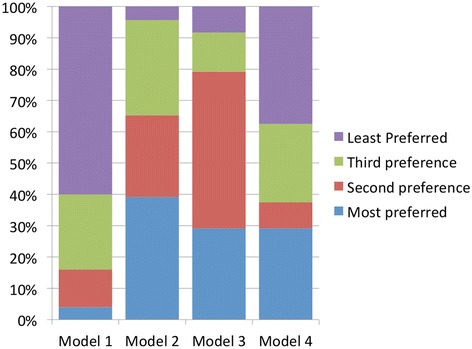

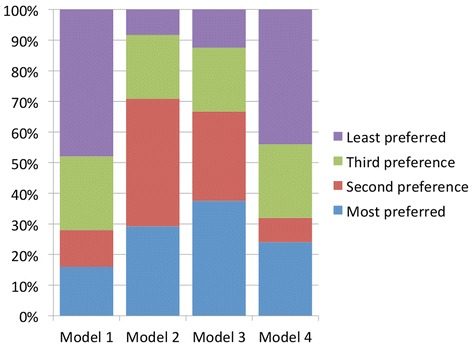

Figures 1 and 2 show the preference levels for each of the four models in the morning and afternoon sessions. Broadly speaking, Models 2 and 3 emerged as most preferred; they were the models most likely to receive a high preference (first and second preference combined) in both the morning and afternoon sessions. Minor fluctuations in preference levels can be observed between sessions. Model 2 was the model most likely to receive first preference in the morning; it lost a few first preference allocations in the afternoon but gained more second preference allocations, resulting in a consistently strong position overall. In contrast, Model 3 received an overwhelming majority of the second preference allocations in the morning, but these dropped noticeably in the afternoon, when Model 3 emerged as the model most likely to be ranked first preference.

Figure 1.

Levels in preference for Models 1–4 in the morning.

Figure 2.

Levels in preference for Models 1–4 in the afternoon.

Model 1 was the least preferred model, with the majority of respondents ranking it as a low preference (third or least). However, there was a marked increase in first preference allocations in the afternoon session, suggesting that for a few people (n = 3) the discussion had a positive impact on their attitudes towards this model.

In line with the findings of some other studies,q there was limited preference for Model 4. The majority of respondents ranked it as a low preference (third or least), with most of that majority selecting the lowest possible level of preference.r However, it was selected as the most preferred option by more than a quarter of respondents. Of all the models, Model 4 showed the least fluctuation in preference ranking between the morning and afternoon sessions.

ii) What were the most/least acceptable models?

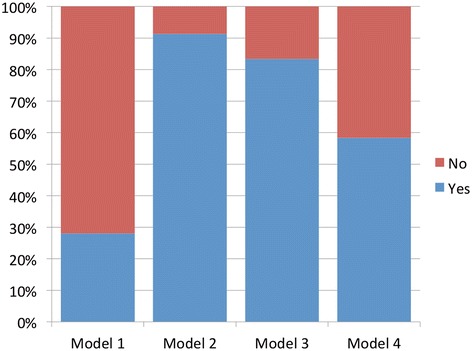

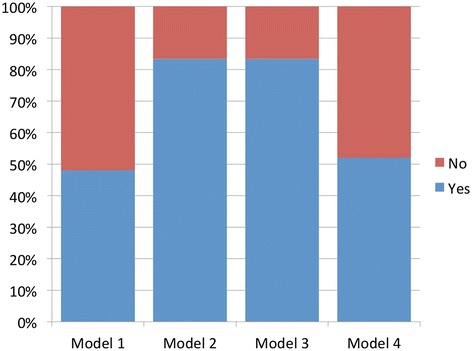

Looking at Figures 3 and 4, we see that Models 2 and 3 emerged as the most acceptable models overall. In the morning, Model 2 was rated acceptable slightly more often than Model 3 (91% compared with 83%), but at the end of the afternoon Models 2 and 3 were each deemed acceptable by 83% of participants. In the morning session, Model 1 was the least acceptable model, with just over a quarter of respondents (28%) rating it as acceptable; in the afternoon, that figure increased to just over half (52%). Model 4 was moderately acceptable in the morning (56%), dropping to 52% in the afternoon. Four participants considered all four models to be acceptable.

Figure 3.

Acceptability for Models 1–4 in the morning.

Figure 4.

Acceptability for Models 1–4 in the afternoon.

So, as was the case for levels of preference, it is the two ‘middle position’ models that were most acceptable. Again, Model 1 was viewed most negatively, with Model 4 receiving some support; interestingly, Model 1 showed the biggest increase in acceptability after the afternoon discussion.

iii) What proportion of those who expressed a low preference for each model would nevertheless accept it?

The next stage of the analysis was to assess whether there was a difference between what people prefer and what they are willing to accept. Dealing first with Model 2, which was ranked highest in terms of both preference and acceptability by the largest proportion of people, three quarters (n = 6 of 8) of those who gave this model a low preference score (i.e. third preference or least preferred in the afternoon session) said that they would be willing to accept it. Model 3 results were similar, with 60% (n = 3 out of 5) of those who had given the model a low preference score saying that it would be acceptable. Towards the two extreme ends of the model spectrum, there is less willingness to concede acceptability: only 14% (n = 3 out of 21) of low preference rankers were willing to label Model 1 acceptable, and only 33% (n = 5 out of 15) were willing to do so for Model 4.s
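The proportions reported above can be reproduced from the counts given in the text. The sketch below assumes, as the text states for Model 2, that all four counts come from the afternoon questionnaire. The helper that derives such counts from individual (rank, accepted) pairs is hypothetical and only shows how ‘low preference’ (third preference or least preferred) is operationalised.

```python
def low_preference_acceptance(pairs):
    """Count acceptances among respondents who ranked a model 3rd or 4th.

    pairs: (rank, accepted) tuples for one model, one per respondent.
    Returns (number who accepted it, number who gave it a low preference).
    """
    low = [accepted for rank, accepted in pairs if rank >= 3]
    return sum(low), len(low)


def percent(accepted, total):
    """Whole-number percentage, as reported in the text."""
    return round(100 * accepted / total)


# Afternoon counts as reported above: (accepted, low-preference rankers).
reported = {
    "Model 1": (3, 21),
    "Model 2": (6, 8),
    "Model 3": (3, 5),
    "Model 4": (5, 15),
}

for model, (accepted, total) in reported.items():
    print(f"{model}: {accepted} of {total} = {percent(accepted, total)}%")
```

The denominators differ sharply between models (5 for Model 3 against 21 for Model 1), so a single respondent moves the Model 3 figure by 20 percentage points; this is one reason the pilot's percentages are read descriptively rather than as estimates.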

There was a small group of people (n = 5) who were unwilling to accept any model other than the one they ranked as their first preference. This was observed across all four models in the morning sessions. However, at the end of the afternoon session, two of these people revised their responses to accept more than one model. This left three people who were willing to accept only their most preferred model, and in two of these cases it was Model 1. Both of these people were in Group 2 (consisting of people with primary care involvement).

Summary

The key findings of Stage 1 of the analysis may be summarised as follows:

i) Model 1 is the least preferred model and it is the least acceptable model.

ii) Models 2 and 3 are the most preferred and the most acceptable models.

iii) Very few people (n = 3) were only willing to accept their most preferred model at the conclusion of the session.

iv) Low preference for a model does not necessarily entail unwillingness to accept it.

Importantly, as in other studies, there was no consistently preferred model of consent. For the vast majority of people, the range of consent models that each individual considered potentially acceptable extended beyond the model that they considered most preferable. Only three people at the end of the afternoon considered their first choice to be the only acceptable alternative, and two of these preferred Model 1. This underlines both the potential for broader agreement regarding the acceptability of a consent model than has previously been reported and the importance of taking into account the conditions expressed as relevant to acceptability when designing any regulatory regime. The conditions raised by participants as relevant are discussed as part of the qualitative analysis below.

Stage 2: Qualitative analysis

Having addressed the first research question, let us move on to consider what might influence whether people are willing to accept a different level of control from the one they prefer. Associated with this second question is understanding what conditions, if any, influence the acceptability of different trade-offs between privacy and access to confidential patient data for health research purposes. From the discussion of these conditions in the focus groups, three main themes were identified, which are broken down into sub-themes as shown in Table 2.

Table 2.

Conditions influencing the acceptability of different trade-offs between privacy and access to confidential patient data for health research purposes

| Theme | Sub-theme |
|---|---|
| 1. Appropriateness of access | 1a. Security and confidentiality |
| | 1b. Detrimental Use |
| | 1c. Commercialisation |
| 2. Safeguards | 2a. Opt-out |
| | 2b. Transparency |
| | 2c. Independent Overview |
| 3. Sensitivity of data | |

1) Appropriateness of access

Several people had concerns relating to the appropriateness of access that might be listed under three discrete headings. It is important to underline that concerns relating to the appropriateness of access appeared to be general concerns irrespective of the model of consent adopted. Nevertheless, if an individual’s data are to be used without explicit consent, then it seemed to be considered particularly important that these concerns were addressed through appropriate safeguards. The concerns spontaneously expressed by members of the focus groups could be said to relate to:

a) Security and Confidentiality

b) Detrimental Use

c) Commercialisation

1a. Security and confidentiality

Some people expressed the concern that the disclosure of confidential patient data for research purposes may pose a risk to the security and confidentiality of that data. For at least one person that concern was associated with the disclosure of identifiers (including name and address) and other demographic data rather than clinical data:

“my identity theft and attacking bank accounts and that sort of thing, that is my concern, not my health data”. [TH2, Group 3]

Another pointed to the poor record of data security in the recent past as a cause for concern if more identifiable data were to be used for health research purposes:

“Government departments, including the NHS, have got a pretty poor record for securing personally identifiable information”. [TH7, Group 3]

Concerns about security and confidentiality were not, however, restricted to technical questions around the prevention of unintentional loss or disclosure. There was also a concern that people who were authorised to have access to data might find out things about particular individuals. It was considered important that research was carried out by somebody owing a duty of confidentiality:

“We are talking about data that is only going to researchers. These researchers inherit a duty of confidentiality by dealing with this. If it was being passed on to a third party who did not have a duty of confidentiality under the NHS, then that would be very different”. [TH7, Group 3]

However, notwithstanding this duty of confidence, the possibility that researchers might know the data subjects personally was considered significant. It led one individual to remark that

“I think that people employed by an organisation that do that sort of work should have the option to opt out, because they have the potential for their colleagues to be handling very personal information about them”. [TU3, Group 1]

In response, another member of the group remarked that they might also be handling “information about their neighbour [or] their family” [TU6, Group 1], and that a similar opportunity to opt out should be afforded to anyone who personally knows somebody who might be handling such data. This exchange calls into question the extent to which even perfect security could fully address concerns about confidentiality when it is the knowing itself, rather than the onward disclosure or loss of data, that is the concern. This concern related in particular to data held in an identifiable form.

1b. Detrimental use: stigmatisation, discrimination and privacy intrusion

A number of concerns were expressed about purposes that might be considered detrimental to the individual’s interests. Although discussion was specifically about health research access to PCD, participants nonetheless felt compelled to express concerns about data being used for other purposes:

“I personally don’t have any problems with my health care data being used for the good of healthcare research or the benefit of healthcare but, like I said earlier, I do worry about it straying off into commercial areas, insurance … [Interrupted by loud and general agreement] …. [someone] mentioned military [research] earlier but I think a much bigger risk of the abuse of data is by people in the insurance industry.” [TU3, Group 1]

Similarly, there were concerns expressed over potential access by employers:

“If the results or the records on mental health are say passed on to a prospective employer, you know there may be things that you don’t want to be known in there that can mar your progress really if you get stigmatised” [W9, Group 2]

While it was recognised that access by an employer was unlikely to be granted for the purposes of health research, it was noted that insurance companies employ researchers and that the definition of ‘health research’ is ambiguous. Concerns in this area were not restricted to the disclosure of identifiable data, and reservations about the disclosure of even anonymised data have also been reported in similar studies.t There was a concern that research results, even if reported at the level of the group, might have consequences for individuals:

“Even if it is identifiable of a group, it could be, one could see particular dangers, a religious group for example, or an ethnic group, that could present problems and certainly we’ve had this in this past and, again, it is not just a question of what governments might do towards part of its population, like in Nazi Germany they used their doctors and health service there as part of the persecution of minorities” [W7, Group 2]

Although some concerns related to the use of data in anonymised form, the risks to individuals if identifiable data were disclosed were mentioned most frequently. These risks related not only to the possibility of discrimination or stigmatisation but also to the possibility of intrusive direct marketing:

“[T]he main concern that I have is that eventually it is going to be used for marketing purposes, and I think a lot of people think that as well you know that, it goes along the line that your private information, I mean, with the NHS having that vast amount of information, that eventually it will be used for marketing purposes” [W2, Group 2]

These comments resonated strongly with the finding of another study that “any linking resulting in the individual being targeted with specific messages prompts discomfort and resistance.”u For some, the concerns about marketing and other commercial use of the data were linked to the privatisation of the health service:

“A lot of people would say, it is not necessarily my view, but a lot of people would say that the whole of the health service is well on its way to being privatised and put into private hands. It gives you a lot less confidence that any kind of data is going to be kept sacred if you like and not released to private companies” [W1, Group 2]

The concerns associated with commercialisation were not related only to the economic risks to which an individual might be exposed through the stratification of groups and direct marketing. Concerns were also expressed about the commercialisation and commodification of data more generally. People were generally happy for data to be used to improve the health of others, but not to provide individuals or groups with a financial advantage.

1c. Commercialisation

Opposition to the commodification of data provided for ‘the common interest’ was strongly expressed. This is consistent with the findings of other studies. Following their meta-study, Hill et al. reported that “there was apprehension in many studies that data would be sold for commercial profit, and this was generally seen as less acceptable, commanding a higher requirement for informed consent”.v Discussion in these focus groups strongly supported that conclusion:

“Facilitator: Are there any burning things that people think we’ve missed on the first part of the discussion?

TU1: Selling data

TU8: Yes

TU1: Well we don’t want it.

TU8: No

Facilitator: What, under no circumstances should anybody be allowed to sell data?

TU1: No.” [Group 1]

While most starkly expressed here, the sentiment was widely shared and, for some, extended to financial benefit for the NHS itself. There was a concern about the ‘corrupting’ effect that the sale of data might have upon NHS values:

“absolutely, I think we have to be aware of the fact that the NHS is not an infallible system. It is not a deity, it is nothing other than an organisation of human beings who make mistakes and who are subject to corruption and who .. I mean I think it is enough that we are talking about personally identifiable information, that is essentially private and important to the people that it concerns, and if we then add the thing on top of it that there is the possibility that they could make money out of us, I mean, granted we all use lots of money that is the NHS’s money but I think that that would add just a whole new spectrum of grey”. [TH4, Group 3]

Although some did seem more accepting of the idea that the NHS might profit (and use the money to support health care), the monetisation of the data was still resisted by many:

“TH8: I think most people would be reticent at the idea of the NHS selling the data-set

TH3: absolutely

TH2: but, I would actually be happier with the NHS having the money than the individuals, I think the NHS is heading for bankruptcy, you know, maybe not in our lifetime, but it can’t continue at the rate it is and so actually this could be a little bit of a sweetener, so you know, they still provide a free access health service for everybody, regardless of any discriminations or anything, and if they pocket a little bit of money to continue that and to continue to fund new treatment, then that is where I can see....

TH8: an interesting idea and I can see that you could produce a justifying argument, but I think more people would be tempted to opt out if you went down that line…

[general agreement]

TH8: … I mean, even me with my sort of “yeh, take the data, as long as it is acceptable, get on with it”, I would be tempted to opt out on that basis” [Group 3]

The discussion in Group 3 did, however, also draw out the difficulty of clearly distinguishing between ‘commercial’ and ‘non-commercial’ research in the health sector. It was recognised that “even therapeutic research are of commercial interest” [TH8, Group 3], and participants acknowledged some of the advantages of being able to draw upon commercial funds to support health research:

“TH2: I think there is distinction as well between the commercial uses that you are talking about there. So, I would whole heartedly agree with that, I wouldn’t want anybody outside of the health sector looking at any of my identifiable data, so insurance companies, banks, I don’t know, car loan people, but when you talk about the commercial side of healthcare, I think there is a distinct difference between SmithKline Beecham and whatever, that although they are a commercial run company, I would imagine an awful lot of the treatments that are provided on the NHS wouldn’t be there if they hadn’t been supported in some form, so actually I think to separate commercial and non commercial research for health purposes I don’t see how that is ever going to be clear because it is ‘You scratch my back, I’ll scratch yours’

TH8: it is not black and white is it, it is a whole spectrum of greys in there too” [Group 3]

This demonstrates the subtlety of view held by members of the public and an appreciation of the complexity of the situation: participants were aware of the value that commercial partnerships might bring, but nonetheless remained extremely wary of PCD being “sold” to such partners. The strength of feeling expressed suggests that, while people recognise that bright-line distinctions cannot always be drawn, this is an area in which public trust is extremely fragile.

2. Safeguards to protect people from risks?

In response to the concerns expressed about inappropriate use of data, participants suggested a number of safeguards. Trust that effective safeguards can prevent inappropriate uses of data would seem to be crucial to the acceptability of lower levels of individual control than people would prefer. A key safeguard appeared to be the option of individual opt out, and opinions on this issue were expressed in particularly strong terms.

2a. Opt out

One participant said:

“I put [Model One: No Explicit Consent and No Opt Out] as number four because the thought of not being able to opt out under any circumstances horrified me” [TH6, Group 3]

This view did not appear to be significantly influenced by the possibility of review by an independent body such as an ethics committee:

“Facilitator: How comfortable are you in devolving responsibility for the decisions about your data to the ethics committee? Would it sway your opinion if they were, in your mind, properly constituted?

TU3: If they are properly constituted, then yes it would sway my opinion but I still would never ever be able to go for, or to feel comfortable with, a system in which I had no control whatsoever. I would still want the right to protest and object and say “no”.

TU6: That’s my feeling exactly

TU3: I mean 99 times out of 100 I would probably not feel strongly enough

TU6: Yes, to object

TU3: but I still want to have that security that if there was something which I felt really strongly about, there was a due legal process which I could go through to say ‘no you are not having my data’” [Group 1]

People wanted to be able to opt out if their data was to be used to support particular types of research activity that they did not accept. In contrast to the findings of Hill et al.,w reasons cited for possible opt out did include religious, moral and ethical views:

“You could you have it written in your notes, your record, that you would not allow it for religious reasons, moral reasons, etc. etc. and that is written in to your notes. And anything else that you want to” [TU9, Group 1]

Also,

“Facilitator: I don’t think we have example yet of a person being able to opt out for any reason that they consider to be reasonable?

TU1: military research

TU8: and selling

TU9: so moral grounds

TU1: yes, moral grounds. We all have different morals and ideas about what is ethical and unethical” [Group 1]

Although it was recognised that unacceptable uses of data were not likely to be the primary intent of any move to improve research access, there was concern about “mission creep” [TU1, Group 1]. This concern remained acute even if opt out were permitted:

“the problem is that you don’t know what is going to happen in the future. So you don’t know if you want to object” [TU3, Group 1].

An important response to such concern was seen to be transparency.

2b. Transparency

Other studies have similarly found that, alongside common courtesy, being told what is done with one’s information is recognised to be an important prerequisite for the effective exercise of any right to opt out.x One participant suggested that how people are to be informed about the purposes for which their data are used was the “fundamental question”:

“[H]ow research is being conducted, what care is being taken to protect their data and how that data is being used maybe for their own clinical treatment but also for future clinical treatment for 20, 50,100 years. I think that is an important point, if we can solve that question easily, then subsequent questions become quite easy to achieve. It just so happens that this first one is incredibly hard”. [TH3, Group 3]

The size of the challenge was consistently recognised:

“Again you have the problem that there is so much information, so many hundreds of thousands of different studies, how can you really make that a viable option other than saying, be aware, you’re an NHS patient, your data may be used for research, the only even viable way you could do that I think would be electronically and any more detail than that via an internet link - something like that - but even then I would want to know how realistic that could be for all studies to be out there.” [TU3, Group 1]

Given the size of the challenge, and the fact that it was particularly acute in relation to specific groups, the suggestion was that generic information should be made clear to all so that people can be signposted to more detailed information to consider at their convenience. Exactly how that generic information should be provided was considered to vary between groups, the overriding concern being effective communication:

“If you are putting up written information, then what about people who can’t read? What about people like drug users who may not interact with services? Again, the homeless? Accessibility [of information] is a huge issue, especially for these groups, I think it should be explained at the first instance, to people face to face, so you can judge their understanding of what the opt out is and the threshold of any acceptable opt out should be explained as well” [TH7, Group 3]

Particular efforts will be needed for specific groups to ensure they are not left out if an opt out system is increasingly adopted:

“So therefore, that’s the group, the marginalised group in this kind of system, the opt out system, the marginalised group are the people who, for whatever reason don’t have the opportunity to learn about what is happening to their data and therefore don’t have the opportunity to give an opinion on it even if their opinion is that they do not want to be involved” [TH4, Group 3]

It was recognised that, even with the very best of intentions and a flexible communication strategy capable of accommodating a diverse range of needs, there would be limits to the extent to which all individuals could be effectively informed about all the very many (research) purposes to which their data might be put. Indeed, the same participant quoted immediately above suggested that, if the goals of transparency were realistically to be achieved, the practical difficulties associated with an opt out system might be as great as those of a system based on “explicit consent”:

“But is that not as difficult as getting explicit consent from every individual? I mean I raised the point really to illustrate that opt out is very rarely actually informed, I consider it not to be a form a consent and that is fine as long as accept that it is not a form of consent. What would you do? Police that every single person visited the website, or had access to a leaflet? I mean, even if they had access to a leaflet, you couldn’t ensure that they had read it. I mean if every person in Sheffield received a leaflet about how their data was being used, you have no way of knowing whether that went straight in the recycling the second it came in the door. And, I think practically speaking, informed opt out is as difficult and unrealistic as explicit opt in.” [TH4, Group 3]

Recognising the limitations of transparency in practice, and the associated limits on the extent to which individuals could use opt out (if available) to protect their own interests, strengthened calls for an effective independent ‘watchdog’: a review group capable of providing effective regulatory oversight and preventing inappropriate uses of the information.

2c. ‘Watchdog’ or independent review group

There was general agreement with the suggestion that there should be an effective independent ‘watchdog’ or ‘independent review group’ capable of protecting public interests. Interestingly, in two groups the significance of lay review to such independence was clearly articulated:

“Facilitator: Who should be the guardians? Who should make the decisions about what, who, and for what?

TU1: A non-governmental body

TU9: and non-NHS

TU1: This will be difficult group: a group that has no connection at all with industry, commissioning groups, NHS, universities, anybody with a vested interest in using data. How that would be achieved goodness knows” [Group 1]

In both of these groups participants spontaneously drew a parallel with the jury system when considering the appropriate constitution of a review group: “12 lay people, like you do on a jury”. One person went so far as to suggest that membership of such a review group should be recognised to be a civic duty, as with jury service. However constituted, it was recognised that:

“there would have to be some sort of body in charge of understanding, of delineating at what point it is acceptable to not do these things, to not request explicit consent from patients for identifiable information” [TH4, Group 3]

Interestingly, it was suggested that the value of the group might go beyond exercising a quasi-delegated function to protect the interests of those not in a position to protect their own interests by opting out: the watchdog might provide more rigorous control of data than an individual could achieve through opt out. Indeed, some suggested that the case for such control might be particularly powerful if individuals were neither asked for explicit consent nor even allowed to opt out of data collection:

“What would I think also convince me is the question that I raised earlier about the use of the material and what would really be the pressure on the authorities, both the medical authorities and the political authorities, to make sure that this material was used only for health research purposes and only for the common good, and that would I think be a much greater pressure than if you had a system whereby, either explicit consent [or some kind of opt out system] where I can imagine the authorities sort of saying ‘you had your chance to opt out – what are you complaining about?’ OK – we had to sell it to insurance companies to cut the cost of research, you had your chance to get out of it, don’t complain now”. [W7, Group 2]

“And I think if it wasn’t opt out, then the NHS the IC the watchdog, whoever, they would have more responsibility to get that information out there so it is accessible and it is understandable. It is not in a scientific paper, it is in a lay summary, so, ah! they did this bit of research, that could have been my data, it might not have been but it could have been” [TH5, Group 3]

3. Sensitivity of data

One set of concerns, not easily aligned with either inappropriate uses of data or specific kinds of safeguard, related to the use of particularly sensitive data. Several people talked about data that they felt were particularly sensitive, either to themselves or to others. Sometimes this was because the data related to a particular type of medical condition, for example in the area of sexual or mental health:

“Isn’t that separate for different diseases. Like has already been said, you’ve got different sensitivities about sexually transmitted diseases to those you do to the common cold”. [TU3, Group 1]

“For myself there is a big difference between physical and mental health certainly my physical health records, I have no problem with anybody doing any research on them at all but I think that mental health particularly is a completely different ball game because the repercussions of that coming back to haunt you later in life, and it doesn’t matter how far back it goes into your childhood into your teens into your twenties, these things do have a nasty habit, if they are brought back up, of becoming quite real again”. [W6, Group 2]

Others noted instead the particularly sensitive nature of data that was intensely personal in another way. For some this distinction fell along the boundary between primary and secondary care settings:

“TU3: I sometimes look at it like this, I don’t have much worry about my secondary care, my records about what has happened to me in hospital being shared with people but when it goes into my primary care data I actually get a lot more sensitive and I am much more tetchy about it, because there are things that I discuss with my GP, that I really would not want anyone else to know about.

TU6: Absolutely

TU8: Yes” [Group 1]

For others, there were moments or events in their life that were so significant to them, and the act of confiding the details in another had been such an acute act of trust, that the thought that those details might be revealed – even in an anonymised form – was intensely distressing:

“I certainly do have things in my medical records, from my very young years, which if I thought anybody, ever, had access to, then I would be devastated. And that is whether they have my name or not. I have still – 45 years later – I have problems when it is brought to mind. The thought of somebody else going through that – whether they know my name or not – I find it incredibly distressing”. [W6, Group 2]

Discussion and conclusions

This is, to our knowledge, the first study to focus specifically on the relationship between preference and acceptability in the use of personal confidential data for health research. It was designed as a small-scale pilot study and we must therefore be cautious before extrapolating from the findings. The sample was small, and the participants had above-average involvement with the NHS, above-average educational qualifications, or both. Furthermore, many of the participants drawn from the university community were themselves researchers. Future work should be designed to address these sources of selection bias and ideally include participants with low or no involvement in the NHS for comparative purposes.

The questionnaire was first completed after two presentations on the regulation of PCD in the NHS and a consideration of the merits of different models of public interest (involving different trade-offs between individual control and research access). Previous studies have suggested that a better understanding of the impediments posed to research if explicit consent is insisted upon would improve the acceptability of access without consent.y One might speculate that this explains why this group, with a relatively high level of understanding of research in the NHS and of the problems that researchers would face, found ‘opt out’ to be a broadly acceptable solution even if it was not their first preference. The possibility that this group might have been more supportive of opt out models generally than others does not undermine the central claim: people typically considered models of consent other than their preferred model to be acceptable. This broad acceptance of alternatives to the first preference was accompanied by concerns that adequate safeguards be put in place to sustain trust that identifiable (and, for some, also anonymised) data would not be used in ways that people did not consider acceptable. Other studies have suggested that people are more likely to accept alternatives to explicit consent if they are confident that alternative mechanisms of control will ensure that data are used by persons, and for purposes, that they trust and accept as reasonable.z

Our key finding is that people are typically willing to accept models of consent other than the one they would prefer. This should be taken into account when designing future studies to test the acceptability of different consent models. Asking people to choose between alternative models might yield information about which models people would prefer (and past evidence suggests that such preferences are quite fragmented), but this does not directly provide a reliable indicator of acceptability. If acceptability is the concern, then we should pay attention to the factors that people have identified as relevant to their acceptance of different models of consent, including models that provide lower levels of individual control than they would ideally prefer. These include security and confidentiality, controls over detrimental use and commercialisation, adequate transparency, the existence of an independent ‘watchdog’, and the ability to object, particularly to any processing considered to be inappropriate or particularly sensitive.

This is important because England and Wales appear to be moving toward the use of PCD for health research purposes without explicit patient consent. This study suggests that the political commitmentaa to respect opt out in relation to health research access to PCD is consistent not only with what people prefer but also with what they would consider to be acceptable. We would suggest that continuing to respect opt out may be an important part of the public acceptability of any operative consent model.

Given that other studies have consistently reported a fragmented picture of public preferences for particular models of consent (e.g. broad opt in, specific opt in, opt out, etc.), it is significant that we have found a difference between what people would indicate to be their preferred model (if given only one choice) and what they would be willing to accept, particularly if they recognise and accept certain reasons for adopting an alternative to their first choice. The results suggest that it might be possible to build a much broader alliance of support around particular models by focusing on acceptability rather than preference.

Reasonable acceptability does, however, depend upon people having access to the reasons for the alternatives. We must get better at explaining to people why they might have reason to accept models other than their preferred model in relevant circumstances. Whether these reasons are sufficient to lead somebody to accept a lower level of control over their data than they would ideally prefer depends in part upon trust that adequate alternative safeguards exist to address their concerns and to protect their interests.

The willingness to accept any particular trade-off is informed by an understanding of the controls that exist around the access and use of the information. Concerns were expressed about the security and confidentiality of the data; about ensuring that data are not used to an individual’s detriment, whether in identifiable form or as a result of research conducted at the level of the group; and about the commercialisation of data.

The safeguards identified as necessary to address such concerns included adequate transparency; a strong independent watchdog capable of protecting an individual’s interests and ensuring that data are only used ‘in the public interest’; and the opportunity to opt out of particular processing if the purposes, or the data accessed, are considered by an individual to be particularly sensitive. The possibility of overriding an opt out was considered, and it was recognised that this might be necessary in some circumstances, but participants were of the opinion that it should be rare, given that people have different sensitivities around particular types and uses of data.

Endnotes

aThe term is being used here as defined by The Information Governance Review, Information: To Share or Not to Share (March 2013), p130: “This term describes personal information about identified or identifiable individuals, which should be kept private or secret. For the purposes of this review ‘Personal’ includes the DPA definition of personal data, but it is adapted to include dead as well as living people and ‘confidential’ includes both information ‘given in confidence’ and ‘that which is owed a duty of confidence’ and is adapted to include ‘sensitive’ as defined in the Data Protection Act”.

bSee s259 Health and Social Care Act 2012. For additional comment on these changes see Grace and Taylor (2012).

cMadlen Davies, ‘Patients to be given “veto” over their data being shared from GP records’ pulsetoday (online), 26 April 2013. It has recently been announced that legal Directions are to give legal force to this right to opt out. See statement of Dr Dan Poulter, Under Secretary of State for Health, to the House of Commons, Hansard HC Deb 10 March 2014, col 134.

dResearch Capability Programme Team ‘Summary of Responses to the Consultation on the Additional Uses of Patient Data’ (Department of Health, 27th November 2009); Stone et al. (2005); Robling et al. (2004). For similar conclusions in New Zealand, see Whiddett et al. (2006); for the USA, see Damschroder et al. (2007).

eResearch Capability Programme Team, Department of Health ‘Summary of Responses to the Consultation on the Additional Uses of Patient Data’ (Department of Health, 27th November 2009), p.6.

fHill et al. (2013).

gHill et al. (2013), p2.

hibid.

iHill et al. (2013), p7.

jSee the care.data programme for an example of a significant national programme to extract PCD from health records without explicit patient consent. http://www.england.nhs.uk/ourwork/tsd/care-data/. For reporting of an upcoming publicity campaign see http://www.ehi.co.uk/news/EHI/8961/%C2%A31m-national-leaflet-drop-on-care.data.

k‘Public and Patient Involvement’ (PPI) is a term that is used variously to describe engagement with members of patient and public communities in the decisions about health care and research. See description of PPI in House of Commons Health Committee, Patient and Public Involvement in the NHS, (Vol.1) (HC278-1), Ch.2. In this case, participants were approached through membership of specific patient or PPI groups and membership of relevant groups was described by participants in their own words at the beginning of the workshop.

lParticipants were told that, for the purposes of completing the questionnaire, they should assume ‘sufficiency’ was to be assessed in a way that they agreed was appropriate.

mIBM Statistical Package for the Social Sciences (SPSS) version 17 for Windows.

nNVivo 10 for Windows, QSR International.

oWillison et al. (2008). This contrasts with the findings of Hill et al. (2013) where some shift in aggregate opinion was found.

pHill et al. (2013), p7.

qe.g. Research Capability Programme Team, Department of Health ‘Summary of Responses to the Consultation on the Additional Uses of Patient Data’ (Department of Health, 27th November 2009), p.6; Willison et al. (2008) where “Four percent of respondents thought information from their paper medical record should not be used at all for research, 32% thought permission should be obtained for each use, 29% supported broad consent, 24% supported notification and opt out, and 11% felt no need for notification or consent” (p706).

rIn light of the difference in views on use of identifiable data without consent by public, patients, and researchers reported in the summary of responses to the consultation on the additional uses of patient data (ibid) this finding may reflect the particular composition of the focus groups and their relatively high levels of prior involvement with the NHS. This could usefully be the subject of further research.

sThe Fisher’s Exact test was used to test whether there were any statistically significant relationships between preference and acceptability variables (this is appropriate when sample sizes are small and cells commonly contain fewer than 5 cases); Cramér’s V was deemed appropriate to measure the strength of association. Of the 8 cross-tabulations (one for each of the four models in both the morning and afternoon), only 4 statistically significant relationships were found (p < 0.005), all of which related to Models 1 and 4 (AM and PM). We can tentatively conclude that there is a significant, moderate association between preference and acceptability for Models 1 and 4: people who ranked these as non-preferable models were also significantly more likely to find them unacceptable (and vice versa). However, the lack of a statistically significant relationship between preference and acceptability for Models 2 and 3 shows that there is a much more complex picture to be understood; this is where the most interesting trade-offs between privacy and access to data come into play.
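Note s relies on Cramér’s V as its measure of the strength of association. Purely as an illustrative aid, the following is a minimal Python sketch of the standard definition, V = √(χ² / (n · min(r − 1, c − 1))), computed from an r × c contingency table. The function name `cramers_v` and the counts in the usage lines are hypothetical and are not the study’s data; the sketch assumes that no row or column of the table sums to zero, and it covers only the effect-size measure, not the Fisher’s Exact test itself.

```python
import math


def cramers_v(table):
    """Return Cramér's V for an r x c contingency table (hypothetical helper).

    `table` is a list of rows of observed counts. Assumes every row and
    column total is non-zero; otherwise an expected count would be zero.
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]

    # Pearson chi-squared statistic: sum over cells of (O - E)^2 / E,
    # where E = row total * column total / n under independence.
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    # Normalise by n and by the smaller table dimension minus one,
    # so V runs from 0 (no association) to 1 (perfect association).
    k = min(len(table), len(table[0])) - 1
    return math.sqrt(chi2 / (n * k))


# Hypothetical 2 x 2 cross-tabulation, illustrative counts only:
# rows = ranked as preferred / not preferred,
# columns = judged acceptable / unacceptable.
example = [[6, 1], [2, 5]]
print(round(cramers_v(example), 3))  # prints 0.577
```

In this made-up table every expected count (4, 3, 4 and 3) falls below 5, which is exactly the situation in which, as note s explains, Fisher’s Exact test rather than a chi-squared test is the appropriate test of significance; V is then used only to describe how strong any association is.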

t Research conducted in New Zealand found that “60% of respondents expressed some reservations about sharing even anonymous information with people other than health professionals. This result is similar to findings in the UK and Australia which identified that many people desire some control over their data even if it is anonymous” (Whiddett et al., 2006). However, compare Wellcome Trust, Summary Report of Qualitative Research into Public Attitudes to Personal Data and Linking Personal Data (2013), page 13 [3.7].

u Wellcome Trust, Summary Report of Qualitative Research into Public Attitudes to Personal Data and Linking Personal Data (2013), page 13 [3.6].

v Hill et al. (2013), p. 5.

w Hill et al. (2013), p. 6.

x Robling et al. (2004), p. 106.

y Hill et al. (2013).

z Willison et al. (2008) reported that support for research use of PCD “is dependent on the intended uses and users of the data and on the safeguards applied” (p. 710). See also Damschroder et al. (2007).

aa See n.3 above.

Acknowledgements

We would like to thank the participants in the focus groups, the facilitator, Mr David Ardron, and the colleagues who kindly commented on drafts of this paper, most notably Dr Liz Hill, Professor Don Willison and Dr Susan Wallace. We would also like to thank the two anonymous reviewers who provided excellent comments on the original submission.

This research was carried out, and the manuscript drafted, while Mark Taylor was on study leave funded by a British Academy Mid-Career Fellowship. The support of the British Academy is gratefully acknowledged.

Abbreviations

- PCD: Personal confidential data

Footnotes

Competing interests

Mark Taylor is currently the Establishing Chair of the Confidentiality Advisory Group for the Health Research Authority. His authorship of this manuscript is in the capacity of a university academic. The content should not, in any way, be understood to represent the views of, or be attributed to, the Confidentiality Advisory Group, the Health Research Authority or any other group of which he is a member.

Author’s contributions

MT organised the focus groups, delivered presentations prior to the focus group discussions, sat in on and subsequently transcribed the discussions, thematically coded them using NVivo software, and drafted the introduction, conclusion and section on qualitative analysis. NT carried out the quantitative analysis and drafted the section on quantitative analysis. She also thematically coded the focus group transcripts and contributed to the redrafting of the entire piece following comments. Both authors read and approved the final manuscript.

Authors’ information

Mark Taylor is a Senior Lecturer in Law at the University of Sheffield and Deputy Director of the Sheffield Institute of Biotechnology Law and Ethics.

Natasha Taylor is a Senior Research Fellow at the University of Huddersfield and has a particular interest and expertise in research methods.

Contributor Information

Mark J Taylor, Email: m.j.taylor@sheffield.ac.uk.

Natasha Taylor, Email: n.c.taylor@hud.ac.uk.

References

- Crow I, Semmens N. Researching Criminology. McGraw-Hill: Maidenhead; 2008.

- Damschroder LJ, Pritts JL, Neblo MA, Kalrickal RJ, Creswell JW, Hayward RA. Patients, privacy and trust: patients’ willingness to allow researchers to access their medical records. Soc Sci Med. 2007;64:223–235. doi: 10.1016/j.socscimed.2006.08.045.

- Grace J, Taylor MJ. Disclosure of confidential patient information and the duty to consult: the role of the Health and Social Care Information Centre. Med Law Rev. 2013 (first published online 29 March 2013).

- Hill EM, Turner EL, Martin R, Donovan JL. “Let’s get the best quality research we can”: public awareness and acceptance of consent to use existing data in health research: a systematic review and qualitative study. BMC Med Res Methodol. 2013;13:72. doi: 10.1186/1471-2288-13-72.

- House of Commons Health Committee. Patient and Public Involvement in the NHS. HC 2006–2007, 278-I.

- Robling MR, Hood K, Houston H, Pill R, Fay J, Evans HM. Public attitudes towards the use of primary care patient record data in medical research without consent: a qualitative study. J Med Ethics. 2004;30:104–109. doi: 10.1136/jme.2003.005157.

- Stone MA, Redsell AS, Ling JT, Hay AD. Sharing patient data: competing demands of privacy, trust and research in primary care. Br J Gen Pract. 2005:783–789.

- Whiddett R, Hunter I, Englebrecht J, Handy J. Patients’ attitudes towards sharing their health information. Int J Med Informatics. 2006;75:530–541. doi: 10.1016/j.ijmedinf.2005.08.009.

- Willison DJ, Swinton M, Schwartz L, Abelson J, Charles C, Northrup D, Cheng J, Thabane L. Alternatives to project-specific consent for access to personal information for health research: insights from a public dialogue. BMC Med Ethics. 2008;9:18. doi: 10.1186/1472-6939-9-18.