Abstract

Humans shape their hands to grasp and manipulate objects and to communicate. From nonhuman primate studies, we know that visual and motor properties for grasps can be derived from cells in the posterior parietal cortex (PPC). Are non-grasp-related hand shapes in humans represented similarly? Here we show for the first time how single neurons in the PPC of humans are selective for particular imagined hand shapes independent of graspable objects. We find that motor imagery to shape the hand can be successfully decoded from the PPC by implementing a version of the popular Rock-Paper-Scissors game and its extension Rock-Paper-Scissors-Lizard-Spock. By simultaneous presentation of visual and auditory cues, we can discriminate motor imagery from visual information and show differences in auditory and visual information processing in the PPC. These results also demonstrate that neural signals from human PPC can be used to drive a dexterous cortical neuroprosthesis.

SIGNIFICANCE STATEMENT This study shows for the first time hand-shape decoding from human PPC. Unlike nonhuman primate studies in which the visual stimuli are the objects to be grasped, the visually cued hand shapes that we use are independent of the stimuli. Furthermore, we can show that distinct neuronal populations are activated for the visual cue and the imagined hand shape. Additionally, we found that auditory and visual stimuli that cue the same hand shape are processed differently in PPC. Early on in a trial, only the visual stimuli and not the auditory stimuli can be decoded. During the later stages of a trial, the motor imagery for a particular hand shape can be decoded for both modalities.

Keywords: audio processing, brain–machine interface, grasping, hand shaping, motor imagery, posterior parietal cortex

Introduction

The cognitive processes which result in grasping and hand shaping can be investigated on many levels. On the level of single neurons, we can ask how spiking activity is correlated with specific features of a hand shape. It has been shown that specific grasp shapes can be decoded from motor signals of motor cortex neurons in monkeys and humans (Vargas-Irwin et al., 2010; Wodlinger et al., 2015). Before the details of muscle activation are defined in the motor cortex, an intention to shape the hand has to be formed in high-level areas of the brain. Grasp intentions have been decoded from neurons in the premotor cortex of monkeys (Carpaneto et al., 2011). In the posterior parietal cortex (PPC), such intentional signals can be derived as well (Andersen and Buneo, 2002; Culham et al., 2006). Several monkey studies have identified the anterior intraparietal (AIP) area to be one such high-level area involved in grasp actions (Sakata et al., 1995, 1997; Baumann et al., 2009; Townsend et al., 2011). Interestingly, neurons in this area have also been reported to be tuned to visual properties of graspable objects (Murata et al., 1997, 2000; Schaffelhofer et al., 2015). In all mentioned monkey studies, hand shaping was studied in the context of grasping or interacting with a physical or sometimes virtual object. However, it remains unclear whether these results translate to humans, who have an even larger repertoire of grasps and who also use hand shapes unrelated to grasping and independent of objects (eg, in sign language). Can we use high-level signals from PPC for decoding complex human hand shapes similarly, and can these signals still be extracted in paralyzed subjects who have not used their hands in many years?
In the field of neuroprosthetics, motor cortex decoding of simple grasp actions has been demonstrated using various robotic limbs (Hochberg et al., 2012; Collinger et al., 2013) but the human hand with its 20 degrees of freedom (DOF) allows for far more complex operations. Improving neuroprostheses and allowing tetraplegic patients—who rate hand and arm function to be of highest importance (Anderson, 2004; Snoek et al., 2004)—to have better control over their environment could be achieved using control signals from the PPC. For decoding reaches, we have shown that PPC provides a strong representation of the 3D goal of an imagined reach (Aflalo et al., 2015). In this study, we now examine the representation of imagined hand shapes.

A tetraplegic subject, E.G.S., was implanted with two microelectrode arrays in the left hemisphere of the PPC. One array was placed in a reach-related area on the surface of the superior parietal lobule (putative human Brodmann's area 5; BA5). The other one was placed in a grasp-related area at the junction of the intraparietal sulcus and postcentral sulcus (putative human AIP). We used the neuronal activity from recorded units to control the physical robotic limb as well as a virtual reality version of the limb. For training purposes and to investigate how these areas represent hand shapes we implemented a task based on the popular Rock-Paper-Scissors game and its extension Rock-Paper-Scissors-Lizard-Spock. We used variations of this task to further analyze how visual information and motor imagery signals interact in the posterior parietal cortex. Our results show that complex hand shapes can be decoded from human PPC and area AIP in particular. We also find visual information of objects to be encoded by neurons of this area. In contrast, we do not find early information of objects cued by auditory stimuli. Furthermore, we can show that visual object information and motor-imagery information are encoded in largely separate populations of cells. This finding of two populations is important for separating attentional-visual components of neuronal response from motor-related components, which can be used to drive neuroprostheses in an intuitive manner.

Materials and Methods

Approvals.

This study was approved by the institutional review boards at the California Institute of Technology, Rancho Los Amigos, and the University of Southern California (USC), Los Angeles. We obtained informed consent from the patient before participation in the study. We also obtained an investigative device exemption from the FDA (IDE no. G120096) to use the implanted devices throughout the study period. This study is registered with ClinicalTrials.gov (NCT01849822).

Implantation.

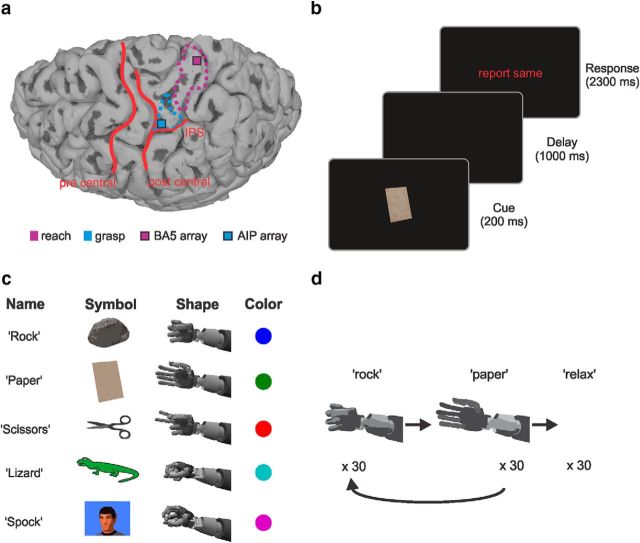

The subject in this study, E.G.S., is a male tetraplegic patient who was 32 years old at the time of implantation. His spinal cord lesion was complete at cervical level C3-4 with a paralysis of all limbs. At the time of implantation, he was 10 years postlesion. We implanted two 96-channel microelectrode arrays (Blackrock Microsystems) arranged in a 10 × 10 grid in two areas of the posterior parietal cortex (Fig. 1a). One was implanted on the surface of the superior parietal lobule (putative human BA5) and one at the junction of the intraparietal sulcus with the postcentral sulcus (putative human AIP). The electrodes were 1.5 mm long and putatively record from cortical layer 5. The array electrodes had platinum-coated tips and were spaced 400 μm apart. The exact placement of the arrays was based on an fMRI task, which E.G.S. performed before implantation. Recordings for this study were made over 12 months during which other experiments also took place. More information about the implantation method can be found in a previous study in which the same patient participated (Aflalo et al., 2015).

Figure 1.

Implantation site and schematic overview of tasks. a, MRI of the implantation site for the BA5 and the AIP array. Some characteristic sulci are overlaid in red for better orientation. The most active regions from the fMRI task (see “fMRI task and array locations”) are outlined for reaching (purple) and grasping (light blue). b, Sketch of task progression showing the three distinct phases of cue presentation, delay, and response with their respective lengths. c, Table listing the names of the hand shapes (which also correspond to the auditory cues given in the CC task), their symbolic representations on the screen, corresponding hand shapes (as performed by the robotic arm), and the color code used for cue-based analyses. d, Schematic sketch of the GT task. The robotic hand would alternate between the two hand shapes rock (closed hand) and paper (open hand) for 60 trials total, and after that 30 relaxation trials would follow (see “Online control and grasp training task”).

fMRI task and array locations.

To determine the placement for the two implanted arrays, we had the patient perform an imagined hand reaching and grasping task during an fMRI scan.

A GE 3T scanner at the USC Keck Medical Center was used for scanning. Parameters for the functional scan were as follows: T2*-weighted single-shot echoplanar acquisition sequence (TR = 2000 ms; slice thickness = 3 mm; in-plane resolution = 3 × 3 mm; TE = 30 ms; flip angle = 80°; FOV = 192 × 192 mm; matrix size = 64 × 64; 33 slices (no-gap) oriented 20° relative to anterior and posterior commissure line). Parameters for the anatomical scan were as follows: GE T1 Bravo sequence (TR = 1590 ms; TE = 2.7 ms; FOV = 176 × 256 × 256 mm; 1 mm isotropic voxels). Surface reconstruction of the cortex was done using Freesurfer software (http://surfer.nmr.mgh.harvard.edu/).

The imagined reach and grasp task started with a 3 s fixation period in which E.G.S. had to fixate a dot in the center of the screen. In the following cue phase, he was cued to the type of imagined action to perform, which could be a precision grip, power grip, or a reach without hand shaping. Next, a cylindrical object was presented in the stimulus phase. If the object was “whole” (“go” condition) E.G.S. had to imagine performing the previously cued action on the object and report back the color of the part of the object which was closest to his thumb. The object could be presented in one of six possible orientations and E.G.S. could freely choose how to align his imagined hand with regard to the object. The reported color allowed us to determine whether the imagined action was performed with an overhand or underhand posture. Analysis of the orientation of the object and the reported color suggested that E.G.S. was imagining biomechanically plausible, naturalistic arm movements. If the object presented in the stimulus phase was “broken” (“no go” condition) E.G.S. had to withhold the cued action. This was used as a control condition.

The BOLD response from this task was then used to determine possible implantation sites for surgery (Fig. 1a). Based on the highest activation for grasping and reaching, and intraoperative constraints (blood vessel locations, cable and pedestal placement, etc), two implantation sites were picked. Statistical analysis was restricted to the superior parietal lobule to increase statistical power. More details about the fMRI task and its implementation were previously described (Aflalo et al., 2015).

Behavioral setup.

During all tasks of this study, E.G.S. was sitting in his wheelchair 2 m in front of an LCD screen (1190 mm screen diagonal). Stimulus presentation was controlled using a combination of Unity (Unity Technologies) and MATLAB (MathWorks). For the grasp training (GT) task (see “Online control and grasp training task,” below) a 17 DOF anthropomorphic robotic arm (modular prosthetic limb; MPL) was used. The MPL was designed by the Johns Hopkins University Applied Physics Laboratory and approximates the functions of a complete human limb. For on-line control, the GT task only relied on the function of the robotic hand to replicate the different hand shapes we were using. A virtual version of the MPL (vMPL) was also available in conjunction with the Unity environment and was used in on-line control as well. The vMPL was designed so that it resembles the MPL in form and function within the Unity 3D environment.

Data collection.

The data for the current work were collected over a period of 12 months (including pauses) in 2-4 study sessions per week. No device-related adverse events occurred throughout the study. Two neural signal processors (Blackrock Microsystems) were used to record neuronal signals. The raw data were amplified and then digitized using a 30 kHz sampling rate. The threshold for waveform detection was set to −4.5 times the root-mean-square of the high-pass filtered full-bandwidth signal. Off-line spike sorting was done semiautomatically using a Gaussian mixture model based on the first two principal components of the detected waveforms. Cluster centroids were selected manually by the researchers and the results of the clustering algorithm were visually inspected and adjusted if necessary. In on-line experiments, only unsorted action potential threshold crossings were used (using −4.5 times the root-mean-square as threshold). Overall, the unit yield on the BA5 array was substantially lower than on the AIP array. On average, we could record only ∼¼ the number of units on the BA5 array compared with the AIP array. In this study, we therefore focus mainly on data recorded from the AIP array. Only for a neuron dropping comparison did we compare the two arrays.
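The threshold rule described above can be illustrated with a minimal sketch. It assumes an already high-pass filtered trace held in a plain Python list; the function name `detect_threshold_crossings` and the toy trace are hypothetical and are not taken from the recording software used in the study.

```python
import math

def detect_threshold_crossings(signal, multiplier=-4.5):
    """Return (crossing indices, threshold) for a filtered voltage trace.

    The threshold is multiplier * RMS of the trace, so it is negative for the
    default multiplier of -4.5.
    """
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    threshold = multiplier * rms
    crossings = []
    for i in range(1, len(signal)):
        # Count only the first sample that falls below the threshold after a
        # sample at or above it, so one deflection yields one event.
        if signal[i] < threshold <= signal[i - 1]:
            crossings.append(i)
    return crossings, threshold

# Hypothetical trace: small alternating values plus two large negative deflections.
trace = [0.1, -0.1] * 50
trace[20] = -5.0
trace[70] = -6.0
events, thr = detect_threshold_crossings(trace)
print(events, round(thr, 3))
```

In this sketch the RMS is computed over the whole trace; how the RMS estimate was windowed or updated in the actual recording system is not stated in the text.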

Rock-Paper-Scissors task.

Selectivity for hand shapes was evaluated using a task based on the popular Rock-Paper-Scissors (RPS) game (Fig. 1b). In the RPS task, E.G.S. was shown a symbolic representation of rock, paper, or scissors (Fig. 1c) on a display screen for 200 ms. The symbols on the screen occupied a space of 200 × 150 mm (2.9° × 2.2° of visual angle). The cue phase was followed by a delay phase in which a blank screen was shown for 1000 ms. At the end of the task, E.G.S. was instructed to imagine making the corresponding hand shape with his right hand and to verbally report the cued symbol (response phase). The onset of a text saying, “report same” would indicate the start of the response phase. The verbal reports were used to keep E.G.S. engaged in the task and check whether the correct cue was remembered. The symbol reported by E.G.S. was recorded by the experimenter. Trials in which he would not answer within 4 s were marked as “no answer” trials.

All symbols were interleaved and at least 10 repetitions were recorded for each condition. For data analysis, all trials in which E.G.S. reported an incorrect cue during the response phase and “no answer” trials (see above) were excluded. The analyses from this task are based on 20 recording sessions.

Rock-Paper-Scissors-Lizard-Spock task.

This task was an extension of the RPS task, which included two additional hand shapes to increase the repertoire of possible hand shapes available to E.G.S. The general task flow corresponds to that of the RPS task. Two additional symbols, lizard and Spock, and their associated hand shapes, a pinch grasp and a spherical grasp, respectively, were added (Fig. 1c). The symbol for lizard resembled a cartoon lizard and the symbol for Spock was a photo of the face of Leonard Nimoy dressed as Mr. Spock from the TV series (Fig. 1c). Importantly, unlike the symbols in the RPS task, which E.G.S. could arguably imagine to grasp, these symbols were chosen not to be intuitively graspable. The corresponding grasps, a pinch and a spherical grasp (Fig. 1c), do not correspond to those of the original Rock-Paper-Scissors-Lizard-Spock (RPSLS) game but instead were chosen to widen the repertoire of usable grasps.

The dataset for this task is composed of 12 recording sessions which were taken mostly after the completion of the RPS recordings (only 3 recording days at the end of the RPS recording period overlap).

Cue conflict task.

Task progression and timing of this task correspond to the RPS task in every aspect except that two cues were presented to E.G.S. during the cue phase instead of one. One of the cues was the visual representation of the object (as in the RPS task) and the other cue consisted of a corresponding auditory representation (a synthetic voice saying “rock,” “paper,” or “scissors”). All combinations of the visual and auditory cues were used, which means that in one-third of the trials the cues were congruent and in two-thirds of the trials the cues were incongruent. Fifteen repetitions were recorded for each symbol (5 congruent trials and 10 incongruent trials). At the beginning of each block of this task, the experimenter would instruct E.G.S. to respond either to the visual cue (“attend object” condition) or to the auditory cue (“attend audio” condition). One “attend object” block and one “attend audio” block were recorded in direct succession on each recording day, and the order of block presentation was varied randomly. Except for the instruction at the beginning of a block, the blocks were identical. As in the previous tasks, E.G.S. had to imagine the hand shape and verbalize what he was imagining, and we only used trials in which he responded correctly for analysis.
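The trial counts given above follow directly from crossing the three visual cues with the three auditory cues. The short sketch below enumerates the pairs; it assumes that each of the nine pairs was repeated five times per block, which is inferred from the stated counts rather than given explicitly, and the names `SYMBOLS` and `cue_conflict_trials` are hypothetical.

```python
from itertools import product

SYMBOLS = ["rock", "paper", "scissors"]

def cue_conflict_trials(repeats_per_pair=5):
    """Return every (visual, auditory) cue pair, each repeated a fixed number of times."""
    return [pair for pair in product(SYMBOLS, SYMBOLS) for _ in range(repeats_per_pair)]

trials = cue_conflict_trials()
congruent = [t for t in trials if t[0] == t[1]]
rock_visual = [t for t in trials if t[0] == "rock"]
rock_congruent = [t for t in rock_visual if t[1] == "rock"]

# 45 trials in total, one-third of them congruent; each visual symbol appears in
# 15 trials, of which 5 are congruent and 10 are incongruent.
print(len(trials), len(congruent), len(rock_visual), len(rock_congruent))
```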

The cue conflict (CC) task dataset consists of eight recordings for the “attend object” condition and eight recordings for the “attend audio” condition. One block of each was recorded on the same recording day. All CC recordings were made after the RPS and RPSLS sessions.

Online control and grasp training task.

In various on-line decode experiments E.G.S. directly brain-controlled either the MPL or the vMPL to open, close, and hold objects or play Rock-Paper-Scissors with the experimenters. The on-line experiments did not follow a strict task structure, and other than E.G.S.'s subjective verbal feedback, no recorded performance data are available for them. To train the decoder for on-line control we implemented a grasp training (GT) task (Fig. 1d). During the GT task, E.G.S. was not controlling the robot arm but had to imagine hand shapes, which were cued. The data from this training were then used as input for a decoder for on-line control. Off-line analyses of these training data are shown in the current work. To make the analysis as similar as possible to the on-line decoding we use only threshold crossings and no additional spike sorting for this off-line decoding analysis.

In the GT task, E.G.S. had to imagine making hand shapes that corresponded to the ones the MPL was performing in front of him. An open hand (“paper” gesture) and a power grasp (“rock” gesture) were used as the two grasp types in addition to a relaxation state. Opening and closing of the robotic hand was accompanied by verbal instructions, which corresponded to the hand movements (ie, paper for open hand, rock for power grasp). The relaxation state was verbally instructed (“release”). The robot hand in front of E.G.S. was still visible during the relaxation state, as in the open hand or power grasp state, and remained in the last position presented to E.G.S.; ie, either in the open hand or power-grasp state. In the relaxation state, there was no explicit behavioral instruction for E.G.S. other than to relax. Typically, he would look at the screen during this state but his gaze was neither restricted nor controlled. Unlike the previous tasks, there was no verbal confirmation or control of E.G.S.'s attention in this task.

Data for the GT task consist of 41 recordings, which were taken over a period of 3 months during which the data for the CC task were also recorded.

Off-line discrete decoding.

To measure decoding performance we used the same off-line decoding procedure for the RPS, RPSLS, and GT tasks. In the RPS and RPSLS tasks, only correct trials (see above) were used for decoding. In the GT task, there was no verbal feedback and therefore no trial exclusion. Units with a low mean firing rate (<1.5 Hz) during the task were excluded. The time window for decoding started 600 ms before the response phase and lasted 1500 ms. The time window was chosen to include the buildup of activity just before the response phase onset, which we attribute to anticipation by the patient and a large fraction of the response phase itself during which we assumed the highest specificity for hand shapes. We used a linear discriminant classifier with a uniform prior for classification. The decoder was trained and performance was evaluated using leave-one-out cross-validation. One trial is used for validation and all other trials are used as the training set for a given recording session. Then another trial is used for validation and the remainder as the training set. This is repeated until every trial has been validated. The average performance of all validations is then used as the performance for that recording session.
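The leave-one-out procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code of the study: the classifier is a nearest-class-mean rule, which is what a linear discriminant with a uniform prior reduces to if the shared covariance is taken to be the identity, and the firing rates and function names are hypothetical.

```python
def nearest_mean_predict(train_x, train_y, x):
    """Classify x by the closest class mean (squared Euclidean distance).

    Stand-in for a linear discriminant classifier with a uniform prior; a full
    LDA would additionally whiten the features with the pooled covariance.
    """
    sums, counts = {}, {}
    for features, label in zip(train_x, train_y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, value in enumerate(features):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    best_label, best_dist = None, float("inf")
    for label in sorted(sums):
        mean = [s / counts[label] for s in sums[label]]
        dist = sum((a - b) ** 2 for a, b in zip(x, mean))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label

def leave_one_out_accuracy(x, y, predict=nearest_mean_predict):
    """Hold out each trial once, train on all others, and average the hits."""
    hits = 0
    for i in range(len(x)):
        train_x = x[:i] + x[i + 1:]
        train_y = y[:i] + y[i + 1:]
        hits += predict(train_x, train_y, x[i]) == y[i]
    return hits / len(x)

# Hypothetical mean firing rates (Hz) of two units for six trials of one session.
rates = [[10, 2], [11, 3], [2, 10], [3, 11], [6, 6], [7, 7]]
labels = ["rock", "rock", "paper", "paper", "scissors", "scissors"]
accuracy = leave_one_out_accuracy(rates, labels)
print(accuracy)
```

The per-session value returned by `leave_one_out_accuracy` corresponds to the average over all validations described in the text.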

CC continuous decoding.

For the CC continuous decoding analysis we used a k-nearest neighbor (KNN) classifier (k = 4). All units recorded in the CC task that had a mean firing rate of 1.5 Hz and higher were included. Recorded units in the attend object and attend audio conditions were treated separately. For both groups we used the KNN classifier to decode the visual cue and the audio cue independently for each 50 ms time step. To ensure that all units of a dataset would have identical number of trials for each condition, we only used the lowest number of trials per condition that occurred in the entire dataset (12 trials per condition in this case). The features for the decoder were binned and smoothed firing rates (spike timestamps binned in non-overlapping 50 ms windows smoothed using a 500 ms Gaussian filter) of all included units. We then performed a principal component analysis for dimensionality reduction and used the first five components for decoding. The decoder was trained and performance was evaluated using a 10-fold cross-validation.
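Two steps of this pipeline, binning spike timestamps into 50 ms windows and classifying with the four nearest neighbors, can be sketched in a few lines. The sketch omits the Gaussian smoothing, the principal component step, and the 10-fold cross-validation; the function names, the Euclidean distance metric, the tie-breaking rule, and the example values are assumptions made for illustration.

```python
from collections import Counter

def bin_spikes(spike_times_ms, start_ms, stop_ms, bin_ms=50):
    """Count spikes in non-overlapping bins and convert the counts to rates in Hz."""
    n_bins = (stop_ms - start_ms) // bin_ms
    counts = [0] * n_bins
    for t in spike_times_ms:
        index = int((t - start_ms) // bin_ms)
        if 0 <= index < n_bins:
            counts[index] += 1
    return [c * 1000.0 / bin_ms for c in counts]

def knn_predict(train_x, train_y, x, k=4):
    """Majority vote among the k nearest training trials (Euclidean distance)."""
    order = sorted(range(len(train_x)),
                   key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], x)))
    votes = Counter(train_y[i] for i in order[:k])
    # On a tied vote, the label encountered first among the nearest neighbors wins.
    return votes.most_common(1)[0][0]

# Hypothetical spike times (ms) of one unit in one trial.
rates = bin_spikes([5, 20, 60, 140], start_ms=0, stop_ms=200)

# Hypothetical features of eight training trials at a single time step.
train_x = [[40, 0], [38, 2], [42, 1], [39, 3], [5, 30], [4, 28], [6, 31], [3, 29]]
train_y = ["rock"] * 4 + ["paper"] * 4
print(rates, knn_predict(train_x, train_y, [37, 4]))
```

In the analysis described above, a classifier of this kind would be fit and evaluated separately at every 50 ms time step, once for the visual cue labels and once for the auditory cue labels.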

Neuron-dropping analysis.

Neuron-dropping analysis provides a way to assess the decoding potential of the implantation site even when units are not stable over time. Instead of analyzing each recording session separately, the total recorded population of units is used as though it were recorded in one session. For this analysis, an artificial feature set was created using firing rates from all sorted units in the dataset of a task that had a minimum firing rate of 1.5 Hz. Units recorded on different days (sessions) were treated as independent units. Analysis of waveform features (trough-to-peak width and half-point width) and other features (mean firing rate and interspike interval) of successive days indicated that most units did not remain the same between sessions (data not shown). Performance is then calculated with subpopulations of the entire feature set by systematically removing single units. We used the same time window as the discrete decoding analysis (see above) starting at 600 ms before response phase onset and ending 900 ms after response phase onset. Units for the subpopulations were randomly drawn from the total population. Two additional analyses for the RPS task were performed using a window for the cue and a window for the response phase. Both time windows were 400 ms long and started 100 ms after the start of the respective phase. This was done to compare decoding differences of BA5 and AIP depending on the task phase. For each data point, 100 subpopulations were created. Two-thirds of trials were used for training and one-third for testing. Trial assignment was done randomly with 10 repetitions for each subpopulation. After feature selection, we used principal component analysis for dimensionality reduction. The first five principal components were used for classification with the linear discriminant method (see Off-line discrete decoding).
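The resampling scheme of the neuron-dropping analysis can be sketched as below. This is a simplified illustration: the principal component step is omitted, a nearest-class-mean rule stands in for the linear discriminant classifier, the train/test split is not stratified by class, and the synthetic population and all function names are hypothetical.

```python
import random

def class_means(x, y):
    """Mean feature vector per class label."""
    groups = {}
    for row, label in zip(x, y):
        groups.setdefault(label, []).append(row)
    return {label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in groups.items()}

def split_accuracy(train_x, train_y, test_x, test_y):
    """Nearest-class-mean accuracy (stand-in for the linear discriminant step)."""
    means = class_means(train_x, train_y)
    hits = 0
    for row, label in zip(test_x, test_y):
        guess = min(sorted(means),
                    key=lambda m: sum((a - b) ** 2 for a, b in zip(row, means[m])))
        hits += guess == label
    return hits / len(test_x)

def select(x, trials, units):
    """Keep only the given trials (rows) and units (columns)."""
    return [[x[t][u] for u in units] for t in trials]

def neuron_dropping_curve(x, y, sizes, n_subpops=100, n_splits=10, seed=0):
    """Mean accuracy per population size over random subpopulations and splits."""
    rng = random.Random(seed)
    n_trials, n_units = len(x), len(x[0])
    n_train = (2 * n_trials) // 3  # two-thirds for training, the rest for testing
    curve = {}
    for size in sizes:
        scores = []
        for _ in range(n_subpops):
            units = rng.sample(range(n_units), size)
            for _ in range(n_splits):
                order = rng.sample(range(n_trials), n_trials)  # random permutation
                train, test = order[:n_train], order[n_train:]
                scores.append(split_accuracy(
                    select(x, train, units), [y[t] for t in train],
                    select(x, test, units), [y[t] for t in test]))
        curve[size] = sum(scores) / len(scores)
    return curve

# Hypothetical pooled population: 6 units, 10 trials per symbol; unit u fires at
# 20 Hz for symbol number u % 3 and at 5 Hz otherwise.
symbols = ["rock", "paper", "scissors"]
y = [s for s in symbols for _ in range(10)]
x = [[20.0 if u % 3 == symbols.index(s) else 5.0 for u in range(6)] for s in y]
curve = neuron_dropping_curve(x, y, sizes=[1, 6], n_subpops=20, n_splits=5)
print(curve)
```

With this toy population a single unit can separate only its preferred symbol from the other two, so accuracy rises as more units are included, which is the qualitative relationship a neuron-dropping curve is meant to expose.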

Results

Representations of hand shapes

To study the relationship between imagined hand shapes and neuronal activity we implemented a task which was modeled after the popular RPS game and its extension RPSLS. The task consists of three phases (Fig. 1b). In the cue phase an object which corresponds to one of the hand shapes (Fig. 1c) was shown to E.G.S. on a screen. In the following delay phase, the screen turned blank. Finally, text would appear instructing E.G.S. to imagine the cued grasp shape and verbalize which shape he imagined.

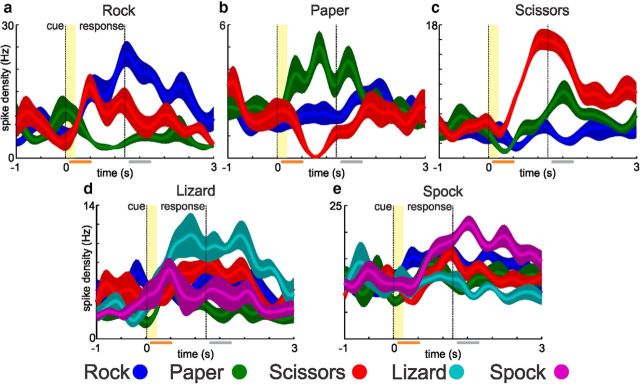

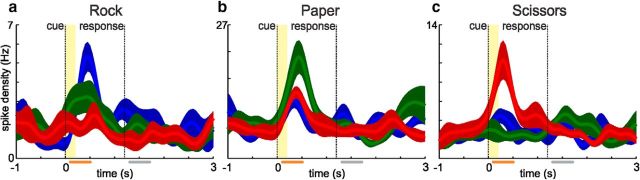

We found single units which had a preferred tuning for each of the different hand shapes that we used in our task. Figure 2 shows example neurons tuned to each of the five hand shapes used in the RPS (top row) and RPSLS (bottom row) tasks. The depicted units were especially active during the delay and response phase which indicates that they did not (or only slightly as in case of the paper example unit shown in Fig. 2b) respond to the visual cue. In addition to units that were preferentially active during the delay or response phases we also found units that were more active during or shortly after stimulus presentation and not in the response phase (Fig. 3). Those units were not significantly tuned in the response phase and are assumed to reflect the visual cue.

Figure 2.

Example motor-imagery neurons. Top row (a–c), example neurons from the RPS task; bottom row (d, e), example neurons from the RPSLS task. Each plot shows the average firing rates (solid line; shaded area = SD) for 10 trials of each cued symbol during the task. Vertical lines indicate the onset of the cue (yellow shading = time period during which the cue symbol was visible) and response phase. Selection criterion for neurons was significant tuning for one of the cue symbols during the response time window (gray bar; see Materials and Methods). The orange bar shows the cue time window, in which only the paper neuron is also tuned.

Figure 3.

Example visual neurons from the RPS task. Plots have been prepared in the same way as described for Figure 2. Selection criterion for neurons was significant tuning for one of the cue symbols during the cue time window (orange bar). From left to right neurons are selective for rock (a), paper (b), and scissors (c).

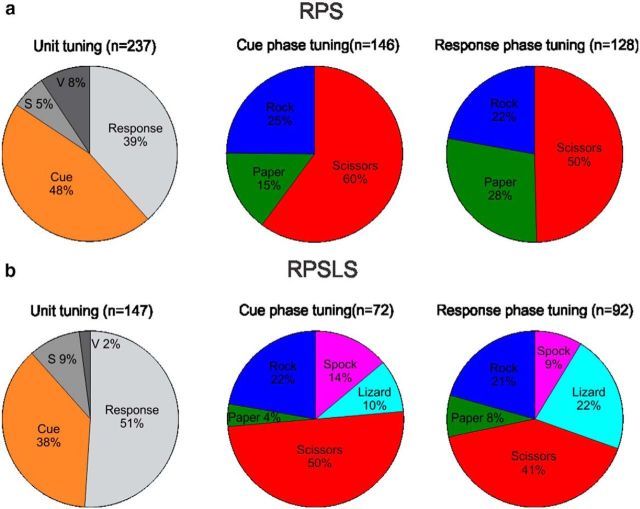

Of a total of 803 sorted units recorded from AIP in the RPS task, 237 units (30%) were tuned (Fig. 4). Based on these findings we separated the tuned units into those tuned during the cue phase (“visual units”) and those tuned during the response phase (“motor-imagery units”). To be considered in one of the two groups a unit had to be significantly tuned (one-way ANOVA; significance level 0.05) in a 400 ms window that started 100 ms after the occurrence of either the cue or response event (Figs. 2, 3, orange and gray bars). The start of the time window was chosen to take processing latencies into account, and its length was chosen to keep the overlap between the cue and delay phases to a minimum. Our analysis shows that each of the two categories accounts for approximately one-half of the tuned cells (Fig. 4, left column of pie diagrams). This was true for the RPS and the RPSLS task even though the RPSLS task showed slightly more motor-imagery units. In both of these tasks we also found that approximately one-half of the units were tuned for the scissors symbol, which makes this symbol largely overrepresented (cue phase: p = 2.6 × 10⁻⁶; response phase: p = 7.5 × 10⁻³; χ² test) in the recorded population assuming an equal representation of the three symbol types (Fig. 4a, middle and right pie diagrams). E.G.S. also said that the scissors grasp shape was the most difficult to imagine.
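The overrepresentation test mentioned above can be illustrated with a χ² goodness-of-fit sketch against equal shares for the three symbols. The counts below are hypothetical and are not the counts from the recorded population; the function name is also hypothetical. For three categories the test has 2 degrees of freedom, in which case the p value has the closed form exp(−χ²/2), so no statistics library is needed.

```python
import math

def chi_square_equal_shares(counts):
    """Chi-square goodness-of-fit test of three counts against equal expected shares.

    Returns (statistic, p_value). The closed-form p value exp(-statistic / 2)
    holds only for 2 degrees of freedom, hence the restriction to three categories.
    """
    if len(counts) != 3:
        raise ValueError("closed-form p value requires exactly three categories")
    expected = sum(counts) / len(counts)
    statistic = sum((c - expected) ** 2 / expected for c in counts)
    p_value = math.exp(-statistic / 2.0)
    return statistic, p_value

# Hypothetical numbers of units preferring rock, paper, and scissors.
statistic, p_value = chi_square_equal_shares([30, 30, 60])
print(statistic, p_value)
```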

Figure 4.

Statistics of tuned units. Units were recorded during the RPS (a) and RPSLS (b) tasks. Percentage of units tuned in either the cue or response time window (left). Units were tuned either exclusively in the cue (orange) or response (light gray) time window or in both (the two dark gray sectors). If a unit was tuned in both time windows it could either be tuned for the same symbol (“v” for visuomotor; dark gray) or for different symbols in each time window (“s” for switching; darkest gray). Percentage of all tuned units that were tuned during the cue (middle) and response (right) time window sorted by their preferred symbol. Note that the total for the cue and response phase pie charts (middle and right) does not add up to the total from the unit tuning pie chart (left) because visuomotor and switching units are included in both charts.

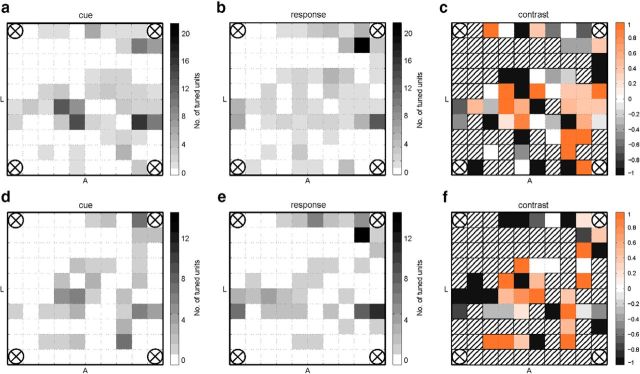

In the RPSLS task, 147 (37%) of 397 units recorded from AIP were tuned. Only ∼¼ of the units were tuned for the two new symbols introduced by the RPSLS task (Fig. 4b, middle and right pie diagrams). Furthermore, we found that visual and motor-imagery units were almost entirely separate populations of neurons with only ∼11%-13% overlap in both RPS and RPSLS tasks (Fig. 4, left column of pie diagrams). Visuomotor units (Fig. 4, labeled “v”) were units that were tuned during both the cue and the response phase of the task and had the same preferred tuning in both phases. Switching units (Fig. 4, labeled “s”) were also tuned during both the cue and the response phase but did not have the same preferred tuning in both phases. The spatial distribution of tuned units on the AIP array is shown in Figure 5 for the cue and response phases of the RPS (top row) and RPSLS (bottom row) tasks. Although there are some hot spots where most of the units recorded on a channel are only tuned during the cue or response phase, as can be seen from the contrast plots (Fig. 5c,f), on most channels a mix of cue- and response-tuned cells could be found. When comparing the distribution of units for the RPS and the RPSLS tasks some changes can be observed, but the general areas where tuned units could be found stayed approximately the same.

Figure 5.

Spatial distribution of tuned units on the AIP array. Total number of tuned units per channel (electrode) on the array (top down view onto the pad) in the RPS (a, b) and the RPSLS (d, e) tasks. Tuned units are shown for the cue (a, d) and response (b, e) phases. The electrodes in the four corners of the array were used as references. Orientation of the array on the cortex is indicated by the letters A (anterior) and L (lateral). The contrast plots show how many units were tuned for the cue phase relative to the response phase for the RPS (c) and RPSLS (f) tasks. A contrast ratio of 1 means that all units found on the channel were only tuned for the cue phase and a value of −1 means that all units were only tuned in the response phase. Striped channels in the contrast plots mean that no tuned units were recorded on the channel. Contrast was calculated as $c = \frac{t_c - t_r}{t_c + t_r}$, where $t_c$ is the number of tuned cells during the cue phase and $t_r$ is the number of tuned cells during the response phase.
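As a worked example of the per-channel contrast, the sketch below assumes the ratio (t_c − t_r)/(t_c + t_r), which is the form consistent with the stated endpoints of 1 (cue only) and −1 (response only). The function name `tuning_contrast` is hypothetical.

```python
def tuning_contrast(n_cue, n_response):
    """Return (t_c - t_r) / (t_c + t_r) for one channel.

    Returns None when no tuned units were recorded on the channel, which
    corresponds to a striped channel in the contrast plots.
    """
    total = n_cue + n_response
    if total == 0:
        return None
    return (n_cue - n_response) / total

# Hypothetical channels: cue only, response only, balanced, and no tuned units.
examples = [tuning_contrast(3, 0), tuning_contrast(0, 2),
            tuning_contrast(2, 2), tuning_contrast(0, 0)]
print(examples)
```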

Context dependency

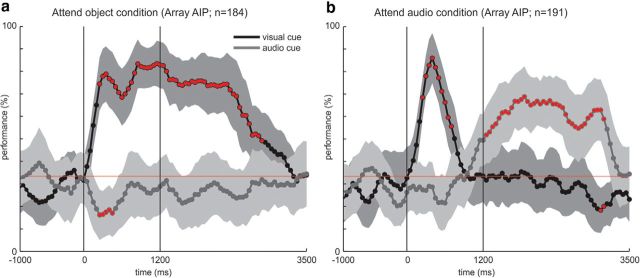

To further investigate visual and intention properties of neurons, we implemented the CC task, which uses two cues, a visual and an auditory cue, that are presented simultaneously (see Materials and Methods). The task progression is identical to the RPS task with the exception that during the cue phase one visual object is presented and simultaneously an auditory cue is played. Importantly, the two cues were congruent in only one-third of trials. The auditory cue is a verbal representation of one of the shapes; ie, rock, paper, or scissors. At the beginning of each session the experimenter would instruct E.G.S. to attend either to the object (attend object condition) or to the auditory cue (attend audio condition). Of 395 total sorted units, 184 (47%) met the 1.5 Hz minimum firing rate criterion and were used in the attend object condition. In the attend audio condition, 191 (49%) of 393 total units met the minimum firing rate criterion and were used. Apart from the 1.5 Hz minimum firing rate criterion, no further selection criteria were applied for this analysis. The decoding analysis shows that in the attend object condition the visual cue can be decoded soon after it is presented and decoding accuracy stays high during the response period (Fig. 6a). It starts declining ∼1000 ms after the start of the response phase and reaches chance level at ∼3200 ms after cue onset. The audio cue, on the other hand, cannot be decoded at any point during the trial. In the attend audio condition the decoding curve looks very different (Fig. 6b). The visual cue can be decoded shortly after it has been presented but decoding accuracy reaches chance level quickly thereafter, ∼300 ms before the start of the response phase, and stays at chance for the remainder of the trial. The audio cue cannot be decoded during cue presentation.
However, ∼100 ms before the start of the response phase, decoding accuracy for the audio cue starts to rise, reaches a peak 1000 ms after response phase onset, and then slowly declines, reaching chance level ∼3200 ms after cue presentation. These results are consistent with the finding of two different populations of which one is selective during the (visual) cue phase and the other during the response phase (see above). By using two different modalities, we can now further differentiate the functional properties of these populations. The cue phase-tuned units are selectively active for the visual modality. On the other hand, the response phase-tuned neurons encode the required hand shape independent of the sensory modality.

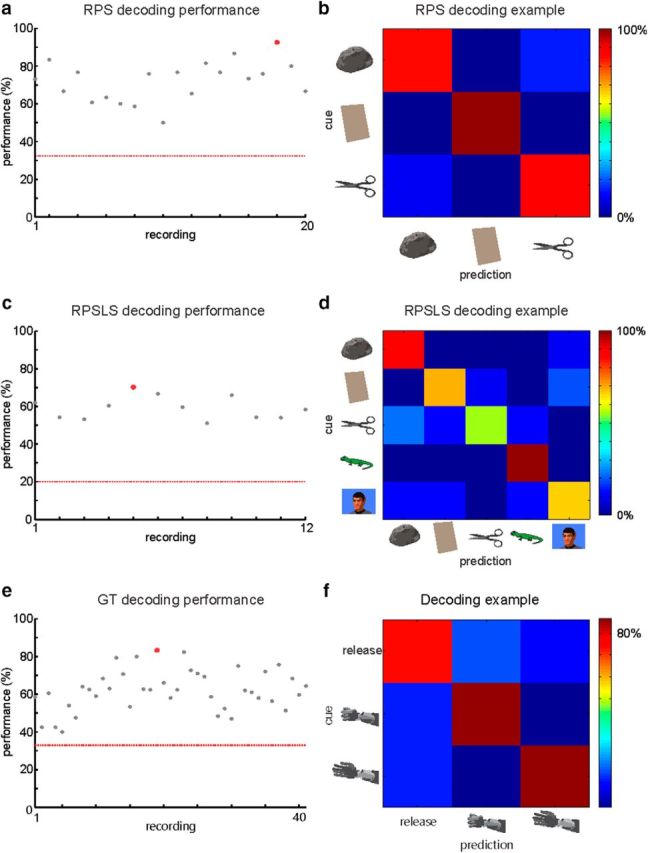

Off-line discrete decoding in RPS and RPSLS tasks

To assess the potential of the recorded population to be used in a neuroprosthetic scenario, we calculated the decoding performance for each recording session of the RPS and RPSLS tasks (Fig. 7). The decoder used 19.6 ± 7.3 units (mean ± SD) per session in the RPS task and 18.3 ± 4.7 units (mean ± SD) per session in the RPSLS task. In both tasks, the decoding performance was above chance level throughout the whole recording period. Performance quickly reached a stable plateau and stayed constant with day-to-day variability. The example confusion matrices (Fig. 7b,d) show that all hand shapes can be decoded, although the probabilities for each of the shapes can vary in the RPSLS task.
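To make the relation between a confusion matrix, overall accuracy, and chance level concrete, here is a minimal sketch. It assumes integer class labels and balanced classes, so that chance is 1/n (33% for three classes, 20% for five); the function names and the toy labels are hypothetical and do not reproduce the analysis code or data of the study.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, n_classes):
    """Row-normalized confusion matrix.

    Entry (i, j) is the fraction of trials of true class i that were
    decoded as class j. Rows for classes with no trials stay at zero.
    """
    true_labels = np.asarray(true_labels, dtype=int)
    predicted_labels = np.asarray(predicted_labels, dtype=int)
    counts = np.zeros((n_classes, n_classes))
    # np.add.at accumulates correctly when index pairs repeat.
    np.add.at(counts, (true_labels, predicted_labels), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, row_sums, out=np.zeros_like(counts),
                     where=row_sums > 0)


def accuracy_and_chance(true_labels, predicted_labels, n_classes):
    """Overall fraction correct and chance level for balanced classes."""
    correct = np.asarray(true_labels) == np.asarray(predicted_labels)
    return float(np.mean(correct)), 1.0 / n_classes


# Toy example with three classes (0 = rock, 1 = paper, 2 = scissors).
true = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
print(confusion_matrix(true, pred, n_classes=3))
print(accuracy_and_chance(true, pred, n_classes=3))
```

Row normalization makes the diagonal directly comparable across hand shapes even when the number of trials per shape differs.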

Figure 7.

Off-line decoding analysis of the RPS, RPSLS, and GT tasks. Decoding performance for each recording for the RPS (a), RPSLS (c), and GT (e) tasks. Red line indicates chance level for each task. Example confusion matrices for recordings from the RPS (b), RPSLS (d), and GT (f) tasks. Examples are marked as red dots in the performance plots.

GT task and on-line brain control

The GT task was used to train a decoder for on-line brain control as described in Materials and Methods (Fig. 1d). Training was done using the physical robotic limb, which would perform one of two states, a power grasp or an open hand, in front of E.G.S. A third relaxation state in which the robotic limb did not move was used as an intermediary state. The decoder used 37 ± 14 units (mean ± SD; units were defined only by threshold crossings, see Materials and Methods) per session in the GT task. The decoding analysis (Fig. 7e,f) shows performance above chance level for all sessions. This corresponds well with E.G.S.'s ability to control the robotic limb on-line. It was difficult initially for him to maintain a grasp shape. With subsequent training and using a relaxation state (see Materials and Methods), E.G.S. was able to maintain a grasp for longer periods. The off-line decoding performance for all recording sessions in the GT task is shown in Figure 7e. After an initial phase of relatively low performance, a plateau is reached and mean performance stays stable over the whole recording period. Throughout the recording period, performance varied considerably but was always above chance level (33%; Fig. 7e, red line). The example confusion matrices in Figure 7f illustrate that all states in the GT task could be decoded with approximately equal probability.

Neuron-dropping analysis

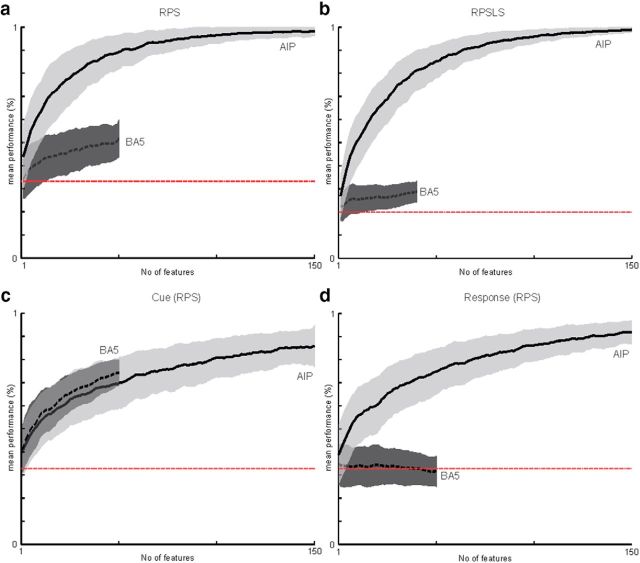

For this analysis, a total pool of 321 sorted units in the RPS task (222 sorted units in RPSLS) was available from the AIP array and 61 sorted units (45 sorted units in RPSLS) were available from the BA5 array. Only units with a minimum mean firing rate of 1.5 Hz were used for this analysis. To make comparison of the curves easier, the maximum number of pooled units shown was cut off at 150 units for the AIP array and at 50 units for the BA5 array (no cutoff for RPSLS). Note that to create the neuron-dropping curves, the units used and the trials assigned for training and cross-validation were randomly chosen (see Materials and Methods). The neuron-dropping curves show that the correct hand shape in the RPS (Fig. 8a) and RPSLS (Fig. 8b) tasks can be decoded with high accuracy if a sufficient number of units is available. Interpolating from the neuron-dropping curves, an average performance of 90% could be achieved with ∼52 randomly selected units in the RPS task and ∼63 in the RPSLS task.
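The logic of a neuron-dropping analysis can be sketched as follows: for each population size, repeatedly draw a random subset of units and a random split of trials, train a decoder on one part, and score it on the other. The sketch below runs on synthetic firing rates and uses a simple nearest-centroid classifier as a stand-in; the classifier, the 80/20 split, the number of repeats, and all function names are assumptions made for illustration and are not the decoder or parameters used in the study.

```python
import numpy as np


def nearest_centroid_accuracy(x_train, y_train, x_test, y_test):
    """Fraction of test trials assigned to the class with the closest mean."""
    classes = np.unique(y_train)
    centroids = np.stack([x_train[y_train == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance of every test trial to every class mean.
    dist = ((x_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    predicted = classes[dist.argmin(axis=1)]
    return float(np.mean(predicted == y_test))


def neuron_dropping_curve(rates, labels, unit_counts, n_repeats=50,
                          test_fraction=0.2, seed=0):
    """Mean and SD of decoding accuracy for each number of pooled units.

    rates:  (n_trials, n_units) firing rates in the analysis window
    labels: (n_trials,) integer class label per trial
    """
    rng = np.random.default_rng(seed)
    n_trials, n_units = rates.shape
    n_test = max(1, int(round(test_fraction * n_trials)))
    means, sds = [], []
    for k in unit_counts:
        accuracies = []
        for _ in range(n_repeats):
            # Random subset of units and random train/test split of trials.
            units = rng.choice(n_units, size=k, replace=False)
            order = rng.permutation(n_trials)
            test, train = order[:n_test], order[n_test:]
            accuracies.append(nearest_centroid_accuracy(
                rates[np.ix_(train, units)], labels[train],
                rates[np.ix_(test, units)], labels[test]))
        means.append(np.mean(accuracies))
        sds.append(np.std(accuracies))
    return np.array(means), np.array(sds)


def simulate_population(n_classes=3, trials_per_class=40, n_units=60, seed=1):
    """Synthetic data: each unit has a random mean rate offset per class."""
    rng = np.random.default_rng(seed)
    tuning = rng.normal(0.0, 1.0, size=(n_classes, n_units))
    labels = np.repeat(np.arange(n_classes), trials_per_class)
    rates = tuning[labels] + rng.normal(0.0, 2.0, size=(labels.size, n_units))
    return rates, labels


rates, labels = simulate_population()
unit_counts = [1, 5, 20, 50]
means, sds = neuron_dropping_curve(rates, labels, unit_counts)
for k, m, s in zip(unit_counts, means, sds):
    print(f"{k:3d} units: accuracy {m:.2f} +/- {s:.2f} (chance {1 / 3:.2f})")
```

On the synthetic data, accuracy rises with the number of pooled units, which is the qualitative shape a neuron-dropping curve is meant to expose. The number of units needed for a target accuracy can then be read off by interpolating between neighboring points of the curve.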

Figure 8.

Neuron-dropping analysis. Neuron-dropping curves are shown for the RPS (a) and RPSLS (b) tasks using the 1500 ms time window (see Materials and Methods). Neuron-dropping curves are shown for the AIP (solid line) and BA5 (dashed line) arrays separately. Red line shows chance level for the task. Shaded areas show SD. Each data point was created using a 1000-fold cross-validation (see text). Separate neuron-dropping analyses for the cue (c) and response (d) phases used 400 ms time windows aligned to the corresponding phase (see “Neuron-dropping analysis”).

Comparing the neuron-dropping curves from AIP and BA5 using the 1500 ms time window that overlaps the delay and response phases shows generally worse performance for the BA5 array (Fig. 8a,b). However, when we compare the curves for particular phases of the task, it becomes apparent that this difference is specific to the task phase and not a consequence of differences in the sampled neuron populations, which were of lower yield for the BA5 array (see Materials and Methods). This can easily be seen when comparing neuron-dropping curves for the cue phase (Fig. 8c) and the response phase (Fig. 8d). In the case of AIP, decoding is good in both phases, whereas the BA5 array performed well only for the cue phase and decoding was not possible during the response phase. This result suggests a specialization of AIP for intended hand shaping.

Discussion

Our results show for the first time encoding of visual and motor-imagery aspects of hand shaping by single neurons recorded from human PPC. These neurons are selectively active for specific complex hand shapes during a Rock-Paper-Scissors inspired task. We found two mostly separate populations of neurons that either show visual or motor-imagery-related activity during a task, which requires identifying a cue and imagining performing a corresponding hand shape. In addition, simultaneous presentation of visual and auditory cues distinguished visual processing from motor-imagery and showed differences in encoding of the two sensory modalities.

Finding two mostly different populations of neurons, which are tuned either during the cue presentation or during the motor imagery, could have different interpretations. It is possible, but not very likely, that the AIP array recorded from different layers of cortex, which had different functional properties. Such a layer difference could arise if the array was not evenly inserted or if it followed a bend in the cortex, and it should produce a gradual shift from visual to motor-imagery tuning across the array. In the spatial distribution plots (Fig. 5), we could not find such a clear gradual shift. Another explanation could be that the different populations were indeed functionally separated and distributed in small patches. From monkey experiments we know that “visuomotor” cells, which would be active during cue presentation and execution of a movement, are rather rare in PPC and only comprise ∼7% of recorded units (Gail and Andersen, 2006), which corresponds to our findings.

Cells that are selectively active for specific grasps have been reported in area AIP of nonhuman primates (Murata et al., 2000; Baumann et al., 2009; Schaffelhofer et al., 2015). In these studies, healthy animals would grasp objects that were visually presented either throughout the trial or at the beginning of a trial. The neuronal responses of motor intent could therefore be mixed with visual responses and/or (anticipated) proprioceptive feedback. In our case, the hand shapes were purely imagined and, in the CC condition, the relevant hand shape was audibly cued. For these reasons, we assume that imagined proprioceptive feedback and visual memory are unlikely to be responsible for the tuned activity of the motor-imagery units. Additionally, in the RPSLS task the two new visual cues, lizard and Spock, were chosen to resemble icons rather than graspable objects.

The dynamics of the continuous decoding analysis in the CC task show further interesting properties. Even though the task is identical except for the initial instruction, the continuous decoding curves differ markedly between the two conditions (Fig. 6). The visual cue can be decoded early in both the attend object and the attend audio condition, whereas the auditory cue can only be decoded later and only in the condition in which it is relevant for task performance. These observations clearly show the importance of visual stimuli within this cortical area and the absence of tuning for auditory stimuli. At the same time, this task reveals that many cells in this area are selectively active for the imagined action.

Figure 6.

Continuous decoding performance during the CC task. E.G.S. was either instructed to attend and respond to the visual cue (a) or the auditory cue (b). Feature n is the number of units recorded over eight sessions that were used by the decoder. Solid black lines show the decoder mean performance when decoding the visual cue and dark gray lines show the decoder mean performance when decoding the audio cue. Shaded areas show SE for decoding. Red circles indicate significant (p < 0.01) deviation of decoding performance compared with chance level (red line). Vertical lines indicate the onset of cue and response phases.

As mentioned above, the CC task results argue against a sustained visual or attentional interpretation of the data. Interestingly, the decoding performance after the visual cue response is lower in the attend audio condition for the delay and response phases (Fig. 6, compare a and b) and there seems to be a “decoding gap” between the peak for the visual cue decoding and the onset of audio decoding (Fig. 6b, solid vs dashed curve). The dynamic changes in decoding (Fig. 6) are compatible with the idea of a “default plan” (Rosenbaum et al., 2001), which would be activated by visual but not auditory cues. Competing motor plans that are modulated by a top-down contextual signal from the dorsolateral prefrontal cortex have been shown (Cisek and Kalaska, 2005; Klaes et al., 2011) and several computational studies use models that are based on this mechanism (Erlhagen and Schöner, 2002; Cisek, 2006; Klaes et al., 2012; Christopoulos et al., 2015). The graded responses for each hand shape that we observed (Fig. 2), rather than a categorical response for one specific shape (and no response for any other), are also compatible with this idea. If this theory were true, dissimilar plans would be actively suppressing each other, whereas more similar plans would suppress each other less. As a result, tuned neurons would have a graded response, which would depend on the similarity between competing hand shapes and the preferred hand shape of the neuron.

Tuning representations

It is interesting to note that the scissors symbol is highly overrepresented in the recorded neuronal population. This could be a sampling artifact or related to the additional effort that E.G.S. had to make to imagine the grasp shape, which he reported to be the most difficult on several occasions. If effort was involved, the question would remain why the overrepresentation can already be observed in the cue phase. Another possibility is that the scissors symbol represents a tool that requires complicated hand motions to interact with it. If a default plan was formed to interact with the scissors object rather than to perform the scissors gesture, this could explain a difficulty-based overrepresentation. When the new symbols lizard and Spock were introduced with the RPSLS task, they were represented by a relatively low number of neurons. There are several possible explanations for this. First, the other three symbols had already been trained for a long time before the new symbols were introduced. Second, the new symbols were represented by icons rather than graspable objects, which might have made a difference for neurons in this area. Third, for the neurons that were tuned during the response phase, it might be that their actual preferred hand gesture lies in between the gestures that we used during those tasks. Therefore, the new gestures may not have fit well into the hand shape space represented by the recorded neuron pool. The spherical and pinch grasps might have been too similar to be well distinguished, or E.G.S. might have had difficulty imagining the two separately. A similar argument could be made for visual object categories, which might have been more similar between the two non-graspable images than between the previously trained objects. More data with different hand shapes and/or more subjects are needed to confirm any of these hypotheses.

AIP and BA5 differences

As mentioned previously, we focus mainly on the data that we collected from the AIP array because the array implanted in BA5 had a much lower neural yield. Nevertheless, comparing decoding from both areas showed interesting results (Fig. 8). The neuron-dropping analysis shows that in BA5 tuning seems to be present during the cue phase but absent during the response phase. AIP, on the other hand, shows tuning in both the cue and response phases. An explanation for this difference could be that AIP is specialized for intending grasp movements whereas BA5 is not. Such a specialization of PPC areas has been shown in nonhuman primates for the lateral intraparietal area for saccades (Andersen et al., 1990; Snyder et al., 1997), AIP for grasping (Murata et al., 2000; Baumann et al., 2009), and the parietal reach region and area 5d for reaches (Snyder et al., 1997; Cui and Andersen, 2011; Chang and Snyder, 2012). According to this idea of a map of intentions, human BA5 would not be specialized for intending grasping, whereas AIP would be.

Neuroprosthetics

The grasp-decoding results indicate that human PPC is well suited for neuroprosthetic applications that require grasping and hand shaping with an anthropomorphic robot hand. Using motor imagery signals from higher cortical areas, such as AIP, in which single-neuron tuning reflects complex effector configurations, could be advantageous in real-time applications. A strategy could be to decode complete hand configurations from well-tuned single cells instead of decoding individual joint angles. This information could complement motor cortex signals, which can additionally provide information about individual joint angles. It is remarkable that after >10 years E.G.S. was still able to imagine performing complex hand shapes. The neuron-dropping analysis shows that a stable daily population of 50–75 units from AIP would be sufficient to achieve 90% performance in grasping tasks that involve 3–5 distinct hand shapes.

Footnotes

This work was supported by the NIH (grants EY013337, EY015545, and P50 MH942581A), the Boswell Foundation, the USC Neurorestoration Center, and DoD contract N66001-10-C-4056. We thank Viktor Shcherbatyuk for computer assistance; Tessa Yao, Alicia Berumen, and Sandra Oviedo for administrative support; Kirsten Durkin for nursing assistance; and our colleagues from the Applied Physics Laboratory at Johns Hopkins University for technical support with the robotic limb.

The authors declare no competing financial interests.

References

- Aflalo T, Kellis S, Klaes C, Lee B, Shi Y, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C, Andersen RA. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science. 2015;348:906–910. doi: 10.1126/science.aaa5417.

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- Andersen RA, Bracewell RM, Barash S, Gnadt JW, Fogassi L. Eye position effects on visual, memory, and saccade-related activity in areas LIP and 7a of macaque. J Neurosci. 1990;10:1176–1196. doi: 10.1523/JNEUROSCI.10-04-01176.1990.

- Anderson KD. Targeting recovery: priorities of the spinal cord-injured population. J Neurotrauma. 2004;21:1371–1383. doi: 10.1089/neu.2004.21.1371.

- Baumann MA, Fluet MC, Scherberger H. Context-specific grasp movement representation in the macaque anterior intraparietal area. J Neurosci. 2009;29:6436–6448. doi: 10.1523/JNEUROSCI.5479-08.2009.

- Carpaneto J, Umiltà MA, Fogassi L, Murata A, Gallese V, Micera S, Raos V. Decoding the activity of grasping neurons recorded from the ventral premotor area F5 of the macaque monkey. Neuroscience. 2011;188:80–94. doi: 10.1016/j.neuroscience.2011.04.062.

- Chang SW, Snyder LH. The representations of reach endpoints in posterior parietal cortex depend on which hand does the reaching. J Neurophysiol. 2012;107:2352–2365. doi: 10.1152/jn.00852.2011.

- Christopoulos V, Bonaiuto J, Andersen RA. A biologically plausible computational theory for value integration and action selection in decisions with competing alternatives. PLoS Comput Biol. 2015;11:e1004104. doi: 10.1371/journal.pcbi.1004104.

- Cisek P. Integrated neural processes for defining potential actions and deciding between them: a computational model. J Neurosci. 2006;26:9761–9770. doi: 10.1523/JNEUROSCI.5605-05.2006.

- Cisek P, Kalaska JF. Neural correlates of reaching decisions in dorsal premotor cortex: specification of multiple direction choices and final selection of action. Neuron. 2005;45:801–814. doi: 10.1016/j.neuron.2005.01.027.

- Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJ, Velliste M, Boninger ML, Schwartz AB. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9.

- Cui H, Andersen RA. Different representations of potential and selected motor plans by distinct parietal areas. J Neurosci. 2011;31:18130–18136. doi: 10.1523/JNEUROSCI.6247-10.2011.

- Culham JC, Cavina-Pratesi C, Singhal A. The role of parietal cortex in visuomotor control: what have we learned from neuroimaging? Neuropsychologia. 2006;44:2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003.

- Erlhagen W, Schöner G. Dynamic field theory of movement preparation. Psychol Rev. 2002;109:545–572. doi: 10.1037/0033-295X.109.3.545.

- Gail A, Andersen RA. Neural dynamics in monkey parietal reach region reflect context-specific sensorimotor transformations. J Neurosci. 2006;26:9376–9384. doi: 10.1523/JNEUROSCI.1570-06.2006.

- Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P, Donoghue JP. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076.

- Klaes C, Westendorff S, Chakrabarti S, Gail A. Choosing goals, not rules: deciding among rule-based action plans. Neuron. 2011;70:536–548. doi: 10.1016/j.neuron.2011.02.053.

- Klaes C, Schneegans S, Schöner G, Gail A. Sensorimotor learning biases choice behavior: a learning neural field model for decision making. PLoS Comput Biol. 2012;8:e1002774. doi: 10.1371/journal.pcbi.1002774.

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G. Object representation in the ventral premotor cortex (area F5) of the monkey. J Neurophysiol. 1997;78:2226–2230. doi: 10.1152/jn.1997.78.4.2226.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol. 2000;83:2580–2601. doi: 10.1152/jn.2000.83.5.2580.

- Rosenbaum DA, Meulenbroek RG, Vaughan J. Planning reaching and grasping movements: theoretical premises and practical implications. Motor Control. 2001;5:99–115. doi: 10.1123/mcj.5.2.99.

- Sakata H, Taira M, Murata A, Mine S. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cereb Cortex. 1995;5:429–438. doi: 10.1093/cercor/5.5.429.

- Sakata H, Taira M, Kusunoki M, Murata A, Tanaka Y. The TINS lecture: the parietal association cortex in depth perception and visual control of hand action. Trends Neurosci. 1997;20:350–357. doi: 10.1016/S0166-2236(97)01067-9.

- Schaffelhofer S, Agudelo-Toro A, Scherberger H. Decoding a wide range of hand configurations from macaque motor, premotor, and parietal cortices. J Neurosci. 2015;35:1068–1081. doi: 10.1523/JNEUROSCI.3594-14.2015.

- Snoek GJ, IJzerman MJ, Hermens HJ, Maxwell D, Biering-Sorensen F. Survey of the needs of patients with spinal cord injury: impact and priority for improvement in hand function in tetraplegics. Spinal Cord. 2004;42:526–532. doi: 10.1038/sj.sc.3101638.

- Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- Townsend BR, Subasi E, Scherberger H. Grasp movement decoding from premotor and parietal cortex. J Neurosci. 2011;31:14386–14398. doi: 10.1523/JNEUROSCI.2451-11.2011.

- Vargas-Irwin CE, Shakhnarovich G, Yadollahpour P, Mislow JM, Black MJ, Donoghue JP. Decoding complete reach and grasp actions from local primary motor cortex populations. J Neurosci. 2010;30:9659–9669. doi: 10.1523/JNEUROSCI.5443-09.2010.

- Wodlinger B, Downey JE, Tyler-Kabara EC, Schwartz AB, Boninger ML, Collinger JL. Ten-dimensional anthropomorphic arm control in a human brain-machine interface: difficulties, solutions, and limitations. J Neural Eng. 2015;12:016011. doi: 10.1088/1741-2560/12/1/016011.