Significance

We demonstrate that cortical oscillatory activity in both low (<8 Hz) and high (15–30 Hz) frequencies is tightly coupled to behavioral performance in musical listening, in a bidirectional manner. In light of previous work on speech, we propose a framework in which the brain exploits the temporal regularities in music in two ways: lower frequencies (entrainment) accurately parse individual notes from the sound stream, and higher frequencies generate temporal and content-based predictions of subsequent note events associated with predictive models.

Keywords: musical expertise, MEG, entrainment, theta, beta

Abstract

Recent studies establish that cortical oscillations track naturalistic speech in a remarkably faithful way. Here, we test whether such neural activity, particularly low-frequency (<8 Hz; delta–theta) oscillations, similarly entrains to music and whether experience modifies such a cortical phenomenon. Music of varying tempi was used to test entrainment at different rates. In three magnetoencephalography experiments, we recorded from nonmusicians, as well as musicians with varying years of experience. Recordings from nonmusicians demonstrate cortical entrainment that tracks musical stimuli over a typical range of tempi, but not at tempi below 1 note per second. Importantly, the observed entrainment correlates with performance on a concurrent pitch-related behavioral task. In contrast, the data from musicians show that entrainment is enhanced by years of musical training, at all presented tempi. This suggests a bidirectional relationship between behavior and cortical entrainment, a phenomenon that has not previously been reported. Additional analyses focus on responses in the beta range (∼15–30 Hz), often linked to delta activity in the context of temporal predictions. Our findings provide evidence that the role of beta in temporal predictions scales to the complex hierarchical rhythms in natural music and enhances processing of musical content. This study builds on important findings on brainstem plasticity and represents a compelling demonstration that cortical neural entrainment is tightly coupled to both musical training and task performance, further supporting a role for cortical oscillatory activity in music perception and cognition.

Cortical oscillations in specific frequency ranges are implicated in many aspects of auditory perception. Principal among these links are “delta–theta phase entrainment” to sounds, hypothesized to parse signals into chunks (<8 Hz) (1–6), “alpha suppression,” correlated with intelligible speech (∼10 Hz) (7, 8), and “beta oscillatory modulation,” argued to reflect the prediction of rhythmic inputs (∼20 Hz) (9–13).

In particular, delta–theta tracking is driven by both stimulus acoustics and speech intelligibility (14, 15). Such putative cortical entrainment is, however, not limited to speech but is elicited by stimuli such as FM narrowband noise (16, 17) or click trains (18). Overall, the data suggest that, although cortical entrainment is necessary to support intelligibility of continuous speech—perhaps by parsing the input stream into chunks for subsequent decoding—the reverse does not hold: “intelligibility” is not required to drive entrainment. The larger question of the function of entrainment in general auditory processing remains unsettled. Therefore, we investigate here whether this mechanism extends to a different, salient, ecological stimulus: music.

In addition, higher-frequency activity merits scrutiny. Rhythmic stimuli evince beta band activity (15–30 Hz) (10, 19), associated with synchronization across sensorimotor cortical networks (20, 21). Beta power is modulated at the rate of isochronous tones, and its variability reduces with musical training (19). These effects have been noted in behavioral (tapping to the beat) tasks: musicians show less variability, greater accuracy, and improved detection of temporal deviations (22, 23). Furthermore, prestimulus beta plays a role in generating accurate temporal predictions even in pure listening tasks (9). Here, we test whether this mechanism scales to naturalistic and complex rhythmic stimuli such as music.

Musical training has wide-ranging effects on the brain, e.g., modulating auditory processing of speech and music (24–27) and inducing structural changes in several regions (28–30). Recent work (31) has shown that musical training induces changes in the amplitude and latency of brainstem responses. Building on effects such as these, we expect that increased entrainment and reliability in musicians’ cortical responses may reflect expertise. We explore three hypotheses: (i) like speech, music will trigger cortical neural entrainment processes at frequencies corresponding to the dominant note rate; (ii) musical training enhances cortical entrainment; and (iii) beta rhythms coupled with entrained delta–theta oscillations underpin accuracy in musical processing.

This study focuses on a low-level stimulus feature: note rate. This methodological choice allows us to study the rate of the music without delving into the complexities of beat and meter, which can shift nonmonotonically with increasing overall rate (i.e., faster notes are often arranged into larger groupings). As the entrainment mechanism occurs for a wide range of acoustic stimuli, we find it unlikely to be following beat or meter in music: such clear groupings do not exist in other stimulus types. It should, however, be noted that influential recent research has addressed cortical beat or meter entrainment (11, 12, 32–37). In particular, using EEG, selective increases in power at beat and meter frequencies have been observed, even when those frequencies are not dominant in the acoustic spectrum (35, 36). Similarly, Large and Snyder (12) have proposed a framework in which beta and gamma support auditory–motor interactions and encoding of musical beat. Such beat induction has been linked with more frontal regions such as supplementary motor area, premotor cortex, and striatum (33), suggesting higher-order processing of the stimulus that is linked to motor output.

In relation to these interesting findings, our goal is to identify a distinct entrainment process and, by hypothesis, a necessary precursor to the higher-order beat entrainment mechanism: the parsing of individual notes within a stream of music. We suggest further that beta activity may reflect such low-level temporal regularity in the generation of temporal predictions for upcoming notes. As such, the metric, notes per second (Materials and Methods), is meant to be associated with this more general process of event chunking—which must occur in all forms of sound stream processing—and should therefore not be confused with beat, meter, and the complex cognitive processes associated with these operations.

Results

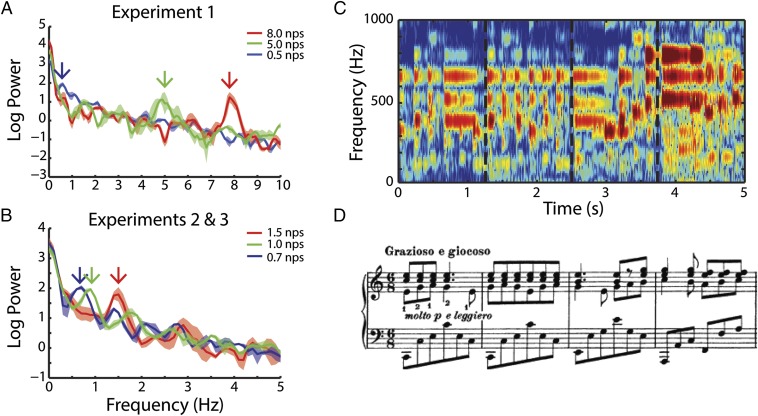

In all experiments, participants, while undergoing magnetoencephalography (MEG) recording, listened to (∼13-s duration) clips of classical piano music (Fig. 1) and were asked to detect a short pitch distortion. Fig. 1 A and B shows the modulation spectrum of the stimuli and identifies the dominant note rates of each. Fig. 1 C and D shows the spectrogram and musical score of the first 5 s of one example clip. See Materials and Methods for more information on stimulus selection and analysis.

Fig. 1.

Stimulus attributes. A shows average modulation spectra of stimuli in experiment 1 and highlights the peak note rates at 0.5, 5, and 8 nps. B shows modulation spectra of stimuli in experiments 2 and 3 and highlights peak note rates at 0.7, 1, and 1.5 nps. Arrows point to relevant peak note rates for each stimulus that will be used for analyses of neural data throughout the study. The colors red, green, and blue indicate high, medium, and low note rates within experiment. This scheme is used throughout the paper. C shows a spectrogram of the first 5 s of an example clip from experiment 1: Brahms Intermezzo in C (5 nps). Dashed lines mark bar lines that coincide with D. D shows the sheet music of the same clip; notes are vertically aligned with their corresponding sounds in C.

Behavioral Measures.

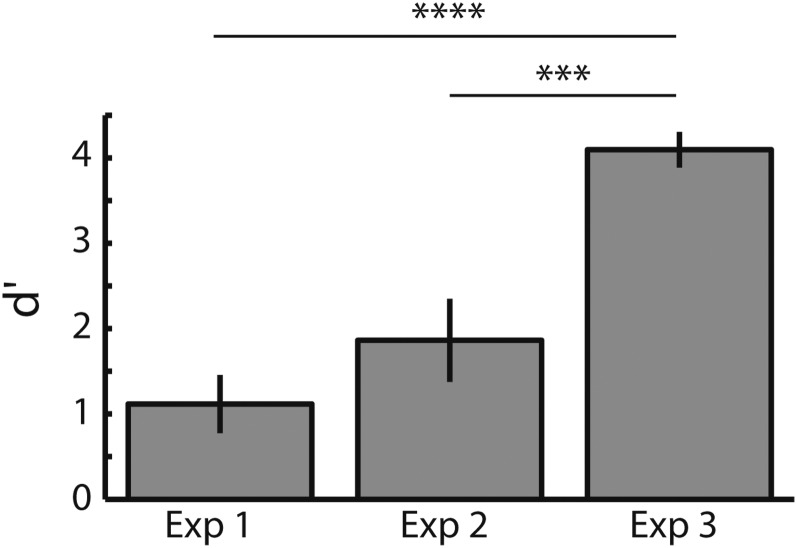

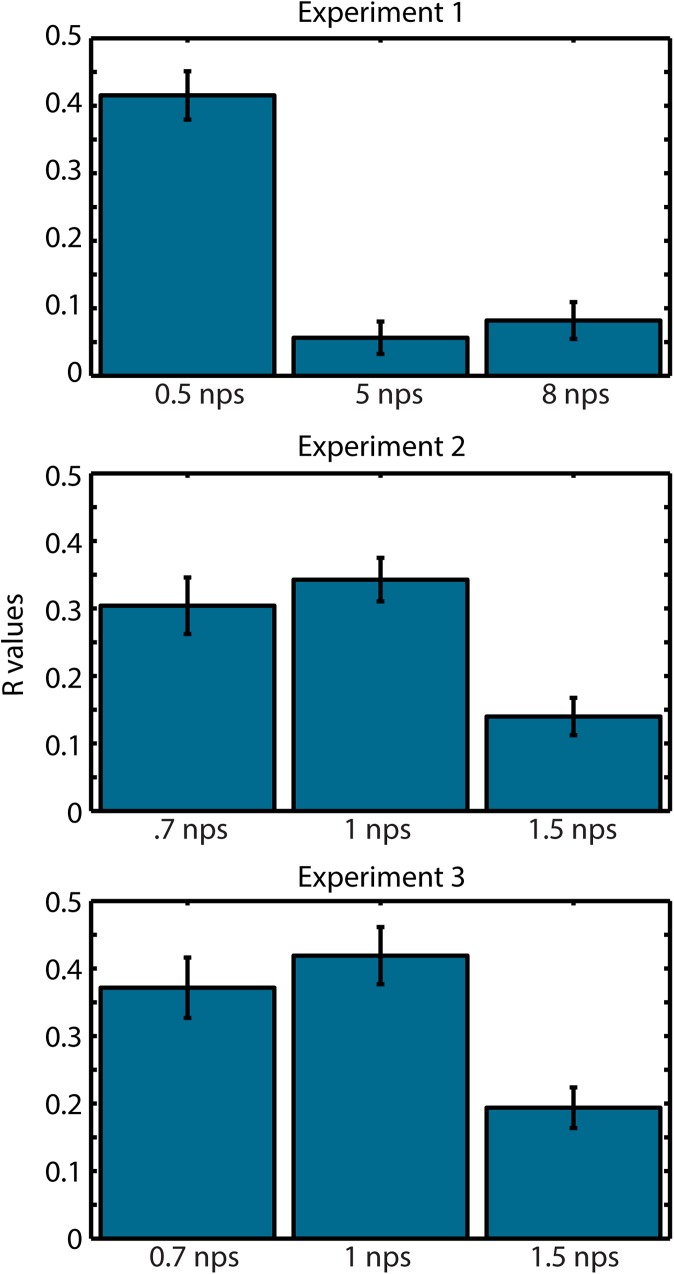

We used d′ to measure participant behavior. d′ measures the sensitivity of participant responses by taking into account not only the hit rate but also the false alarm rate. Fig. 2 shows the average d′ in each of the three experiments. Experiments 1 and 2 had only nonmusicians (NM), and experiment 3 was run only with musicians (M). This difference is clearly reflected in the d′ values. A one-way ANOVA by experiment shows a group-level difference [F(2,36) = 18.16, P < 0.00001]. Post hoc planned comparisons (Tukey–Kramer) showed significant differences between experiment 3 and experiment 2 (P < 0.001) and between experiment 3 and experiment 1 (P < 0.00001). No differences were found between experiments 1 and 2, in which musical training was held constant.
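As an illustration of the sensitivity measure described above, the following minimal sketch computes d′ as the difference between the z-transformed hit rate and false alarm rate. The function name `d_prime`, the trial counts, and the log-linear correction for extreme rates are assumptions made for this example; the correction actually used in the study is not stated in this section.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate)."""
    # Log-linear correction (an assumption, not taken from the paper):
    # adding 0.5 to each count keeps rates away from exactly 0 or 1,
    # where the inverse normal CDF would be infinite.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for a single participant.
print(round(d_prime(hits=18, misses=2, false_alarms=3, correct_rejections=17), 2))
```

Because the false alarm rate enters with a negative sign, a participant who responds "distorted" on every trial obtains a high hit rate but a d′ near zero.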

Fig. 2.

d′ in target detection for each experiment. Experiments 1 and 2 were conducted using only nonmusicians, and experiment 3 had only musician participants.

Entrainment to Music Tracks at the Note Rate.

We selected MEG channels reflecting activity in auditory cortex on the basis of a separate auditory functional localizer (Materials and Methods). To see the overlap between the selected channels and the entrainment topography, see Fig. S1. Intertrial coherence (ITC) analysis (of the nontarget trials) in nonmusicians shows significant entrainment to the stimulus at the dominant note rate for each stimulus (Fig. 3 A and B). Significance was determined via permutation test of the note rate labels (Materials and Methods). Each note rate condition was compared against the average of the other rates. Multiple comparisons were corrected for using false discovery rate (FDR) at the P = 0.05 level. Only positive effects are shown. Nonmusicians show, for all stimuli above 1 note per second (nps), a band of phase coherence at the note rate of the stimulus.
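For readers unfamiliar with the measure, the sketch below shows the standard definition of intertrial coherence at a single time–frequency point: the length of the mean unit phase vector across trials. It is assumed here that the study follows this common definition (Materials and Methods are not reproduced in this section); the function name and phase values are hypothetical.

```python
import cmath
import math


def intertrial_coherence(phases):
    """ITC at one time-frequency point: length of the mean unit phase vector.

    `phases` holds one phase angle (radians) per trial. The result lies
    between 0 (phases cancel out) and 1 (identical phase on every trial).
    """
    unit_vectors = [cmath.exp(1j * phi) for phi in phases]
    return abs(sum(unit_vectors) / len(unit_vectors))


# Hypothetical phases across five trials.
aligned = [0.1, -0.05, 0.2, 0.0, -0.1]               # tightly clustered
scattered = [2 * math.pi * k / 5 for k in range(5)]  # evenly spread on the circle
print(intertrial_coherence(aligned), intertrial_coherence(scattered))
```

Because only phase enters the calculation, ITC is insensitive to trial-to-trial differences in response amplitude.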

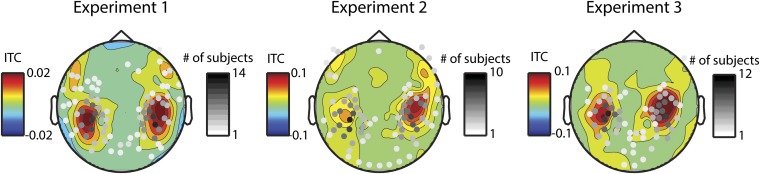

Fig. S1.

Auditory localizer selects channels that overlap with cortical entrainment. In color, the topography of ITC for the neural frequency corresponding to the dominant note rate in frequencies above 1 Hz (where effects were significant) for nonmusicians (experiments 1 and 2) and musicians (experiment 3). The channels taken from the functional auditory localizer are plotted over in grayscale. The scale is indicative of the number of subjects for which that channel was picked.

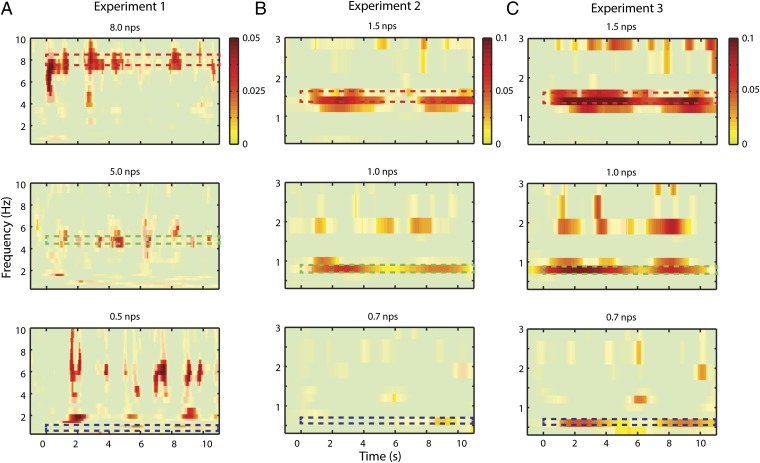

Fig. 3.

Intertrial phase coherence. (A) ITC of nonmusicians while listening to stimuli used in experiment 1: 8, 5, and 0.5 nps. Each plot shows a contrast of the note rate specified in the title minus an average of the other two. Only positive effects are shown. Note that, in the first two cases, entrainment is significant at the corresponding neural frequencies indicated by the dashed, colored boxes (color scheme refers to Fig. 1 A and B): 8 and 5 Hz. The slow 0.5 nps stimulus shows a profile of onset responses for each note. (B) ITC of nonmusicians while listening to stimuli used in experiment 2: 1.5, 1, and 0.7 nps. Again, only positive effects are shown. A similar pattern is seen as in experiment 1. (C) ITC of musicians while listening to the same stimuli as in experiment 2. In this case, all three note rates show significant cortical tracking at the relevant neural frequency.

In nonmusicians, no entrainment was observed at the note rate (illustrated by the dashed box) for the 0.5 nps (experiment 1) and 0.7 nps (experiment 2) conditions. Both conditions show a series of transient “spikes” in ITC from 4 to 10 Hz, consistent with responses to the onsets of individual notes (e.g., Fig. 3A, Bottom; frequency range not shown for 0.7 nps). Fig. S2 demonstrates this analytically, showing that the transient spikes correlate with the stimulus envelope onsets only for note rates at and below 1 nps. Above this range, there is a sharp drop-off of such evoked activity. This pattern suggests a qualitative difference in phase coherence to note rates below 1 nps compared with less extreme tempi. We assessed this effect quantitatively by testing the frequency specificity of the response at each note rate. Frequency specificity was assessed by normalizing the maximum ITC at any frequency under 10 Hz by the mean response in that range (see Materials and Methods for a more detailed description). All note rates at 1 nps and above were significantly frequency selective (8 nps: 4.11, P < 0.005; 5 nps: 3.32, P < 0.05; 1.5 nps: 5.89, P < 0.001; 1.0 nps: 6.95, P < 0.0001), whereas slower stimuli were not (0.5 nps: 2.23, P = 0.33; 0.7 nps: 3.68, P = 0.11). The peak frequency for each stimulus type corresponded to the note rate of the stimulus except for the 0.5 nps condition, which had a peak frequency of 2 Hz.
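The frequency-specificity index described above can be sketched as the peak ITC divided by the mean ITC over all bands under 10 Hz. The function name, the strict "under 10 Hz" cutoff, and the example spectrum are assumptions for illustration; the significance testing reported in the text is not reproduced here.

```python
def frequency_specificity(freqs_hz, itc, max_freq_hz=10.0):
    """Return (peak ITC / mean ITC, peak frequency) over bands below max_freq_hz."""
    # Keep only bands strictly under the cutoff (strictness is an assumption).
    pairs = [(f, v) for f, v in zip(freqs_hz, itc) if f < max_freq_hz]
    mean_itc = sum(v for _, v in pairs) / len(pairs)
    peak_freq, peak_itc = max(pairs, key=lambda p: p[1])
    return peak_itc / mean_itc, peak_freq


# Hypothetical time-averaged ITC spectrum with a clear peak at 5 Hz.
freqs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
itc = [0.1, 0.1, 0.1, 0.1, 0.5, 0.1, 0.1, 0.1, 0.1, 0.9]
ratio, peak = frequency_specificity(freqs, itc)
print(round(ratio, 2), peak)
```

A flat spectrum yields an index of 1, so larger values indicate that coherence is concentrated in a narrow band rather than spread across frequencies, as would be expected for broadband onset responses.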

Fig. S2.

ITC driven by onset responses for note rates at and below 1 nps. We averaged ITC from 0.5 to 10 Hz and correlated the time-series with the stimulus envelope. We do this for each subject, Fisher-transform the R values, and average them. We observe a strong temporal correlation with all note rates at 1 nps and below, reflecting onset responses. Above that rate, the correlation is drastically reduced as the auditory cortex moves to a cortical entrainment mechanism. This analysis reinforces the notion that 1 Hz represents a transition point from stream entrainment to sequential onset responses.

We further tested the difference above and below 1 nps by comparing the relevant neural frequencies (averaging 8 and 5 nps conditions for experiment 1; 1.5 and 1 nps for experiment 2) using a paired t test. We found that neural tracking was stronger above 1 nps than below [experiment 1, t(14) = 2.15, P < 0.05; experiment 2, t(11) = 4.2, P < 0.005]. These findings could suggest a lower limit of the oscillatory mechanism, in which the cortical response is constrained by the inherent temporal structure within the neural architecture.
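The paired comparison above can be sketched as follows: each participant contributes one ITC value above 1 nps and one below, and the t statistic is computed on the within-participant differences. The function name and the values are hypothetical, and the P value lookup is omitted.

```python
import math
from statistics import mean, stdev


def paired_t(above, below):
    """Paired t statistic and degrees of freedom for per-subject value pairs."""
    diffs = [a - b for a, b in zip(above, below)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical ITC values for four participants.
itc_above = [0.30, 0.25, 0.40, 0.35]
itc_below = [0.20, 0.22, 0.25, 0.30]
print(paired_t(itc_above, itc_below))
```

The degrees of freedom equal the number of pairs minus one, so a reported t(14) corresponds to 15 pairs.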

In experiment 3, we repeated experiment 2 with musicians. Fig. 3C shows the entrainment of the musicians to the same stimuli used in experiment 2. Significance was determined via a permutation test comparing across conditions (as in Fig. 3). The musicians did show significant entrainment at 0.7 Hz for the corresponding stimuli. This marks a stark contrast with nonmusicians and may suggest that musical training extends the frequency limit of cortical tracking. However, comparing above and below 1 nps (as in experiments 1 and 2) still showed a significant increase above 1 nps (experiment 3, T = 2.44, P < 0.05), suggesting musicians were better at entraining to the higher frequencies.

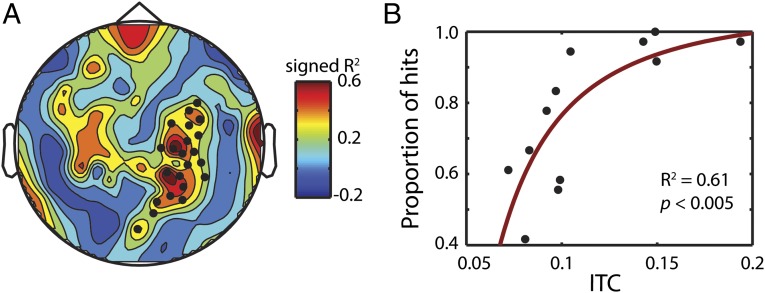

To test the behavioral relevance of cortical entrainment, we investigated how ITC affected perceptual accuracy in nonmusicians (note that the musicians’ behavioral performance is at ceiling). Fig. 4 shows the transformed correlation (Materials and Methods) between entrainment at the dominant note rate and hit rate, at the subject level. The black dots in Fig. 4A mark significant channels (P < 0.05, cluster permutation test). The effect is clearly right lateralized and the topography is consistent with right auditory cortex. A second cluster in the left hemisphere did not reach significance (P = 0.14). When averaging the significant channels together, the scatter plot indicates a strong correlation (R2 = 0.56, P < 0.005; Fig. 4B). This relationship demonstrates that faithful tracking enhances processing of the auditory stimulus, even when the task is not temporal but pitch-based.

Fig. 4.

Entrainment correlates with pitch-shift detection. (A) Topography of the correlation between entrainment at the dominant rate and proportion of hits (see Materials and Methods for details of the correlation analysis). Black dots mark significant channels tested using a cluster permutation test (P < 0.05). (B) Scatter plot of ITC and hit percentage for the significant channels marked in A.

Musical Training Enhances Entrainment.

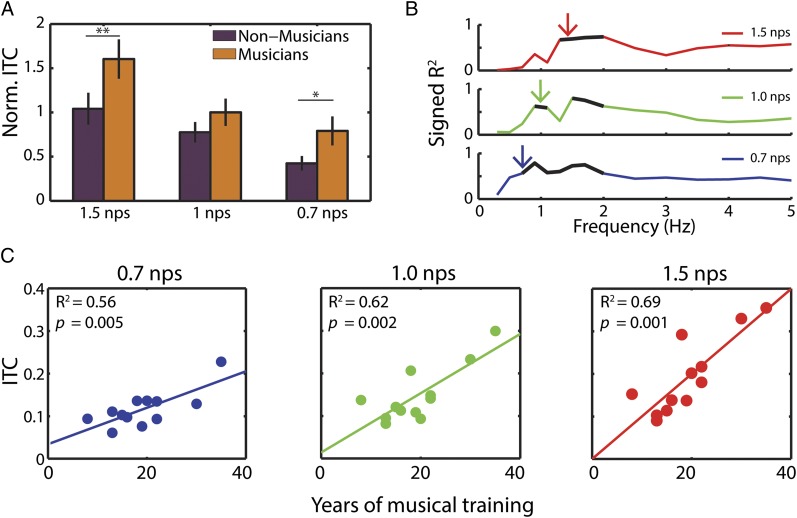

Having demonstrated a relationship between cortical entrainment and behavioral accuracy, we then focused on testing the reciprocal relationship: does behavioral training enhance entrainment? We compared musicians and nonmusicians for each stimulus rate at the corresponding frequency (i.e., 1.5 Hz for 1.5 nps). Having chosen these frequencies a priori (and confirmed statistically in Fig. 3), we ran a two-way ANOVA on ITC values normalized within participant comparing frequency (1.5, 1, and 0.7 nps) by musical expertise (NM vs. M). As expected, we found (Fig. 5A) a main effect of frequency [F(2,66) = 15.51, P < 0.001]. Importantly, we also found a main effect of musical expertise [F(1,66) = 9.12, P < 0.005]. Post hoc planned comparisons showed significant differences of musical expertise for 1.5 nps (P < 0.05) and at 0.7 nps (P < 0.05). The 1 nps condition did not show a significant difference of musical expertise.

Fig. 5.

Musical expertise enhances entrainment. (A) Comparison of ITC normalized by subject shows musicians have stronger entrainment at the note rate than nonmusicians. Asterisks indicate significant post hoc tests. (B) ITC increases with years of musical training. R2 values of linear correlation between years of musical training and ITC. Black lines mark significant values (FDR corrected at P < 0.05). Colored arrows show the corresponding dominant note rate for each stimulus. (C) Scatter plots of years of musical training by average ITC at the relevant neural frequency for each note rate.

Although we did not predict it, musicians also showed greater tracking at harmonic rates (i.e., 3 Hz for 1.5 nps and 2 Hz for 1 nps) for all conditions but 0.7 nps. An ANOVA using the harmonic frequencies (3, 2, and 1.5 Hz) showed a main effect of musical experience [F(1,66) = 12.46, P < 0.001], whereas frequency showed no significant modulation. Multiple comparisons show significant effects in 1.5 nps (P < 0.05) and 1.0 nps (P < 0.05) but not 0.7 nps (P = 0.16).

Further analysis confirms the robust relationship between musical expertise and entrainment: a linear correlation is tested between ITC, averaged across time in each condition, and the number of years of musical training of each musician. Fig. 5B shows the R2 values demonstrating that phase coherence at the note rate and neighboring frequencies correlate with years of musical training. The corresponding scatter plots (Fig. 5C) at the relevant neural frequencies (indicated by arrows in Fig. 5B) indicate a linear relationship. It should be noted that musical training is highly correlated with the age of the participants (R2 = 0.56, P < 0.005). Crucially, age does not correlate with ITC in the nonmusicians (for all note rates, R2 ≤ 0.02, P > 0.2), suggesting that age in itself is unlikely to drive the relationship between ITC and training in musicians.

Musical Training and Accuracy Enhance Beta Power.

Finally, motivated by recent findings in the literature (9–11, 13, 38), we examined effects in the beta band, in particular over central channels overlaying sensorimotor cortex. Functional MRI studies have shown that musical training increases brain responses in an auditory–motor synchronization network (39, 40). If beta power is a physiological marker of such a sensorimotor network, we might expect that beta activity recorded by MEG should be enhanced by musical expertise as well.

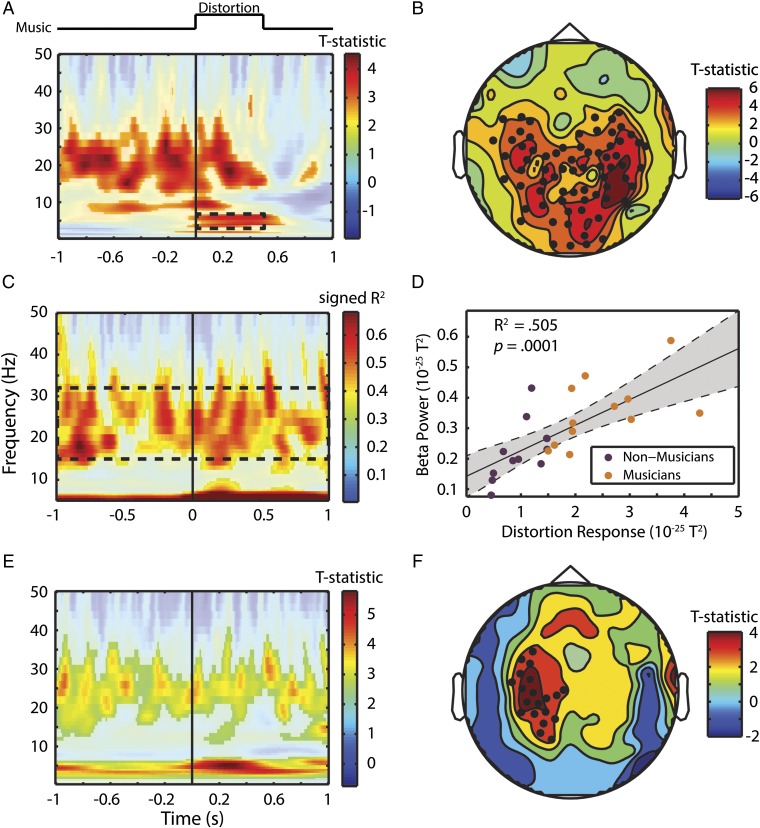

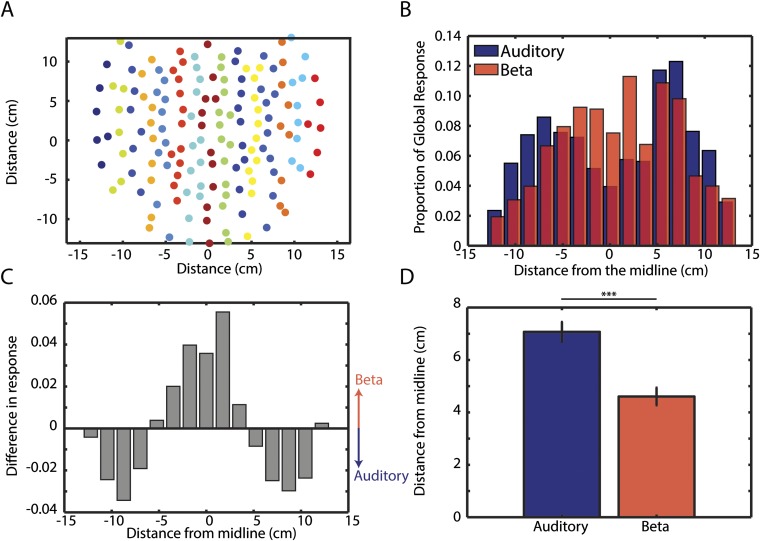

Fig. 6 shows the relationship between neural beta activity and behavioral accuracy (detect distortion task) as well as musical expertise. In nonmusicians, we investigated accuracy by comparing hits and misses in auditory channels. The result of this contrast is shown in Fig. 6A. Multiple comparisons are corrected for using FDR at the 0.05 level. There are three distinct response features: (i) an onset response to the distortion in the theta range (3–6 Hz), which starts and ends with the target (we will refer to the raw activity in this frequency and time range as the “distortion response”); (ii) a narrow pretarget alpha band (∼9 Hz) activity [likely reflecting inhibition of irrelevant information (41)]; (iii) pretarget beta activity (∼15–30 Hz), which persists through to the offset of the target. Fig. 6B shows a similar analysis (comparing hits against misses) in channel space. Significant channels are shown by black dots. These channels are more central than those of the auditory localizer (Fig. S1). We compared the absolute distance from the midline of channels selected by the auditory localizer and the significant channels in Fig. 6B and find the auditory channels to be more peripheral than the beta channels [t(142) = 4.90, P < 0.0001]. For a detailed quantitative analysis, see SI Materials and Methods and Fig. S3. The fact that the beta effect is more centrally located than the auditory channels supports the hypothesis that the source of the beta oscillation is a part of a sensorimotor synchronization network (e.g., refs. 10 and 42). (It should be noted that we are unable to confirm this without source localization, which we are unable to perform due to a lack of anatomical MRI scans for the participants of this study.)

Fig. 6.

Sensorimotor beta power facilitates musical content processing. (A) Contrast of hits and misses in auditory channel power analysis. The solid line marks the onset of the pitch distortion. Dashed box marks distortion response that was used for correlations in C and D. (B) Topography of the accuracy contrast (shown in A) in beta power from 1 s before to 1 s after target onset. Black dots mark significant channels based on cluster permutation test. (C) Correlation of power at each time point and frequency with the distortion response marked by the dashed box in A. (D) Scatter plot of the beta power (dashed box in C) by the distortion response (dashed box in A) for each subject. Purple and orange dots are NM and M, respectively. (E) Comparison of M vs. NM around the target stimulus. Missed target trials were thrown out, and trial numbers were matched in each group for this analysis. (F) Topography of the effect of musical expertise. Black dots mark significant channels tested via cluster permutation test.

Fig. S3.

Channels showing beta effect of accuracy are more central than the auditory localizer. (A) Each channel is plotted in 2D location. Colors indicate groupings for subsequent analysis in B and C. (B) In each group, the activity was summed across channels and divided by the summed response of all 157 channels to generate the proportional contribution from each group. If the effect was uniformly distributed, each group’s proportion would be evenly spread at 1/15 = 0.067. The beta effect (topography from Fig. 6B) peaks more centrally, whereas the auditory topography (generated by the functional auditory localizer; Fig. S1) is more lateral and bimodal. (C) The difference of the bars in B is shown. The positive direction represents greater beta response than auditory. The difference in distribution at its peaks is more than half the 0.067 uniform proportion. (D) Compares the absolute distance from the midline of all channels selected by the localizer and all channels with significant differences in beta between hits and misses. Auditory channels are more peripheral than beta channels.

Although we sought to test whether beta power was similarly relevant for perceptual accuracy in musicians, we were unable to compare musicians’ neural responses to their behavior because (as shown in Fig. 2) they performed at ceiling in the task. We reasoned that, although the behavioral response is saturated, the distortion response might still exhibit some variation. We correlated the musicians’ neural response at each time point and frequency with the distortion response (the mean raw neural activity in the region marked in the dashed box of Fig. 6A: 3–6 Hz, 0–0.5 s). Significance was determined using FDR correction at P < 0.05. Apart from the expected near-perfect correlations in the theta range, the signed R2 values (Fig. 6C) show beta power is highly correlated with the distortion response (mean R2 = ∼0.6). This suggests that the sensorimotor beta facilitates a response to the pitch distortion. No correlation is found with the alpha band (as was found in the nonmusicians’ accuracy contrast; Fig. 6A). This correlation between beta power (mean of values within dashed box in Fig. 6C) and the distortion response (mean of values within dashed box in Fig. 6A) is evident when examining the combined musician and nonmusician group (R2 = 0.505, P < 0.0001; Fig. 6D).

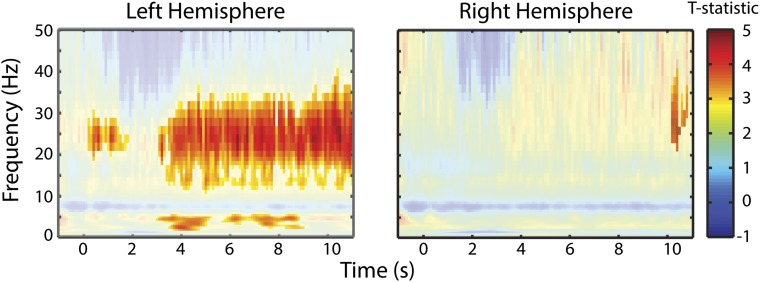

To investigate the effect of musical expertise, we compared musicians from experiment 3 with nonmusicians from experiment 2 in channels selected from the functional auditory localizer. In this analysis, only hit trials were considered and both groups were matched for number of trials. Significance was determined via a cluster permutation test of the T statistic comparing M and NM groups at P < 0.05. We observed a similar beta-power increase that persisted beyond the target only in the left auditory channels (Fig. 6E). This effect also persists throughout nontarget trials (Fig. S4). Musicians also exhibited a stronger “distortion response” in the theta range compared with nonmusicians in the left hemisphere. However, no alpha effect was seen. Left lateralization was confirmed in a topographical analysis of the difference between M and NM in the beta range (15–30 Hz; Fig. 6F). These findings, taken together, show that beta oscillations play an important role in musical processing and are increased with musical training. In Discussion, we propose several hypotheses for its functional role.

Fig. S4.

Enhanced beta power in musicians in nontarget trials. Comparison of power between musicians and nonmusicians, collapsed across clean trials in all conditions. Nonsignificant values are masked. The two panels show the effect averaged across auditory channels in the left hemisphere (Left) and the right hemisphere (Right). This shows a clearly left lateralized effect. Statistics are performed in exactly the same manner as in Fig. 6C.

SI Materials and Methods

Stimuli.

The pieces chosen for experiment 1 were composed by Bach, Brahms, and Beethoven: Goldberg Variations, BWV 988: Variation 17 a 2 Clav (Bach, J. S. Bach: Goldberg Variations, Murray Perahia; Sony, 2000), 8 nps; Four Piano Pieces: Intermezzo in C (Brahms, J. Brahms: Händel Variations; Murray Perahia; Sony, 2010), 5 nps (shown in Fig. 1 C and D); and Piano Sonata No. 4 in E-flat major, Op. 7. II. Largo, con gran espressione (Beethoven, L.; Murray Perahia: Beethoven Piano Sonatas Nos. 4 and 11; Murray Perahia; Sony, 1983), 0.5 nps.

Experiments 2 and 3 both used the selection of pieces specified below but differed in the type of participant: NM in experiment 2 and M in experiment 3. The pieces were chosen for note rates that were slow but just above the 0.5 nps of the Beethoven piece from experiment 1. All pieces were chosen from Beethoven piano sonatas: Piano Sonata No. 11 in B-flat major, Op. 22: II. Adagio con molto espressione (Beethoven, L.; Murray Perahia: Beethoven Piano Sonatas Nos. 4 and 11, Murray Perahia; Sony, 1983), 1.5 nps; Piano Sonata No. 7 in D Major, Op. 10, No. 3: II. Largo e mesto (Beethoven, L.; Beethoven: Piano Sonatas Nos. 7 and 23, “Appassionata,” Murray Perahia; Sony, 1985), 1.0 nps; and Piano Sonata No. 23 in F Minor, Op. 57, “Appassionata”: II. Andante con moto (Beethoven, L.; Murray Perahia: Beethoven Piano Sonatas Nos. 4 and 11, Murray Perahia; Sony, 1983), 0.7 nps.

MEG Recording.

Five electromagnetic coils were attached to a participant’s head to monitor head position during MEG recording. The locations of the coils were determined with respect to three anatomical landmarks (nasion, left and right preauricular points) on the scalp using 3D digitizer software (Source Signal Imaging) and digitizing hardware (Polhemus). The coils were localized to the MEG sensors, at both the beginning and the end of the experiment. Although the MEG’s initial sampling rate was 1,000 Hz, after acquisition, the data were downsampled to 100 Hz.

Time–Frequency Analysis.

As we were investigating relatively slow oscillations, we performed the time–frequency (TF) analysis on the whole (contiguous) session before epoching the TF representation into trials, to avoid large edge effects at the beginning and end of trials at low frequencies. The center frequencies (CFs) of each band were spaced unevenly, so that one band would fall directly on the frequency corresponding to the note rate of the stimuli: [0.3–1.7 (0.2-Hz spacing), 2.5–10 (0.5-Hz spacing), 11–20 (1-Hz spacing), 22–50 (2-Hz spacing)]. It is worth noting that, although we spaced the CFs in this manner, the spectral resolution of the wavelet analysis decreases with higher frequencies. The bandwidth of each band can be calculated as 2*CF/m.
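As a sketch of the band layout described above, the following builds the list of center frequencies from a literal reading of the bracketed ranges (endpoints assumed inclusive) and evaluates the stated bandwidth expression 2*CF/m. The function names are hypothetical, and because m is not defined in this section, the value used below is purely illustrative.

```python
def center_frequencies():
    """Center frequencies (Hz) for the four ranges listed in the text."""
    def band(start, stop, step):
        # Number of points in the range, endpoints inclusive (an assumption).
        n = round((stop - start) / step) + 1
        return [round(start + i * step, 1) for i in range(n)]

    return (band(0.3, 1.7, 0.2) + band(2.5, 10.0, 0.5)
            + band(11, 20, 1) + band(22, 50, 2))


def bandwidth(cf_hz, m):
    """Bandwidth 2*CF/m as given in the text; m is left as a parameter."""
    return 2.0 * cf_hz / m


cfs = center_frequencies()
print(len(cfs), cfs[:8])

HYPOTHETICAL_M = 7  # illustrative only; the value of m is not stated in this section
print([round(bandwidth(cf, HYPOTHETICAL_M), 3) for cf in (0.5, 5.0, 20)])
```

Since the bandwidth grows in proportion to the center frequency, denser spacing at low frequencies matches the finer resolution available there.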

ITC-Envelope Correlation.

ITC shows transient broadband spikes to note rates below 1 Hz (Fig. 3). We hypothesized that such activity was the result of onset responses to individual notes rather than an entrainment to the continuous stream. If this is the case, then the timing of the spikes should coincide with large increases in the sound envelope. This should result in a high correlation between ITC and the stimulus envelope. We ran a Pearson's correlation between the average ITC from 0.5 to 10 Hz and the stimulus envelope for each clip. We then Fisher-transformed the R values and averaged clips across subjects within note rate. While note rates at and below 1 nps result in a high correlation, note rates above that limit show a steep drop-off suggesting that this tracking is much less susceptible to individual note onsets (Fig. S2).
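The correlation procedure described above can be sketched in two steps: a Pearson correlation between the frequency-averaged ITC time series and the stimulus envelope, followed by averaging in Fisher z space. The function names and the short time series are hypothetical; real inputs would be sampled at the rate of the TF representation.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_fisher_z(r_values):
    """Average correlations after the Fisher transform z = atanh(r)."""
    return sum(math.atanh(r) for r in r_values) / len(r_values)


# Hypothetical ITC (averaged 0.5-10 Hz) and envelope for two clips.
itc_clip1, env_clip1 = [0.1, 0.4, 0.2, 0.5, 0.1], [0.0, 1.0, 0.3, 0.9, 0.1]
itc_clip2, env_clip2 = [0.2, 0.3, 0.1, 0.4, 0.2], [0.1, 0.6, 0.4, 0.8, 0.0]
r_values = [pearson_r(itc_clip1, env_clip1), pearson_r(itc_clip2, env_clip2)]
print(mean_fisher_z(r_values))
```

The Fisher transform is applied before averaging because raw correlation coefficients are bounded and their sampling distribution is skewed near ±1.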

Topographical Analysis.

Previous studies of beta power and temporal prediction have found effects in central motor and premotor regions. Therefore, we tested whether the channels that show the beta effect for accuracy were more central than the auditory channels (as defined by the functional localizer). We performed this analysis in two ways. First, we grouped channels by their location on the horizontal axis (15 groups coded by color in Fig. S3A) and summed activity within each group, normalized by the summed activity of all groups. This allowed us to compare directly the peak event-related potentials (of the functional localizer) with the beta power, using each group’s proportion of the global response. Fig. S3B shows the beta effect to have a near-normal distribution with a more central peak. The auditory response is more bimodal and peripheral. The difference between the two distributions is confirmed by a Kolmogorov–Smirnov test (D = 0.202, P < 0.05). The difference between these distributions is shown in Fig. S3C, confirming our previous observation and showing a large effect size (compared with an average proportion of 1/15 = 0.067). Second, we conducted a simpler analysis by comparing the absolute distance from the midline of all channels selected by the functional localizer (Fig. S1) and all channels with a significant difference in beta power between hits and misses (Fig. 6B). A two-sample t test showed that auditory channels are significantly more peripheral than beta channels [t(142) = 4.90, P < 0.0001].
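The grouping step of the topographical analysis can be sketched as follows: channel values are summed within each of the 15 horizontal groups and divided by the grand total, giving each group's proportion of the global response. The function name, the channel-to-group assignment, and the activity values are hypothetical; the actual grouping follows sensor positions (Fig. S3A).

```python
def group_proportions(values, group_ids, n_groups=15):
    """Proportion of the summed response contributed by each channel group."""
    totals = [0.0] * n_groups
    for value, group in zip(values, group_ids):
        totals[group] += value
    grand_total = sum(totals)
    return [t / grand_total for t in totals]


# Hypothetical example: 157 channels assigned to 15 groups from left to right,
# with activity that peaks toward the middle of the array.
n_channels = 157
groups = [i * 15 // n_channels for i in range(n_channels)]
activity = [1.0 + min(i, n_channels - 1 - i) / n_channels for i in range(n_channels)]
proportions = group_proportions(activity, groups)
print([round(p, 3) for p in proportions])
```

Under a uniform topography with equally sized groups, every proportion equals 1/15 ≈ 0.067, which is the reference value used in the text.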

Discussion

Cortical Entrainment to Music.

To our knowledge, these data represent the first demonstration of cortical phase entrainment of delta–theta oscillations to natural music. Slow oscillations entrain to the dominant note rate of musical stimuli. Such entrainment correlates with behavior in a distortion detection task. These observations, coupled with previous work showing that tracking at the syllabic rate of speech improves intelligibility (e.g., refs. 2 and 15), suggest a generalization: cortical low-frequency oscillations track auditory inputs, entraining to incoming acoustic events, thereby chunking the auditory signal into elementary units for further decoding. We focus here on two aspects: the effect of expertise and the role of sensorimotor activity as reflected in beta frequency effects.

In nonmusicians, we observed no tracking below 1 Hz (Fig. 3 A and B, experiments 1 and 2; no tracking at the dominant note rate in the 0.5 or 0.7 nps conditions). This would appear to indicate a lower limit of 1 Hz below which entrainment is not likely without training. However, an alternative explanation is that such a cutoff reflects a limit of the signal-to-noise ratio (SNR) of the MEG recordings, such that our analysis is unable to show entrainment below 1 Hz. Because noise in the signal scales as 1/f and therefore grows toward lower frequencies, there must be a limit below which it becomes impossible to recover any significant effects. However, the fact that musicians show a clear effect of entrainment (Fig. 3C) at 0.7 Hz points toward the interpretation that the MEG recordings had adequate SNR at these frequencies to detect significant phase coherence, and therefore that in nonmusicians we have reached a true limit of neurocognitive processing in this participant group. If this is the case, then musical expertise affects not only the quality of entrainment (see below) but also the frequency range, extending it below the 1-Hz limit, i.e., to slower, longer groupings of events. This interpretation, although not definitive, illustrates the temporal structure that an oscillatory mechanism might impose onto the processing of an auditory signal. New data will be required to dissociate between these interpretations.

We show that cortical entrainment is enhanced with years of musical training. We cannot, however, conclude that musical expertise specifically modulates entrainment as opposed to other types of training. It may well be that any acquired skill could enhance entrainment through the fine-tuning of motor skills. Familiarity with the clips is another potential factor. Musicians are more likely to have heard these pieces before, and this could affect entrainment. However, as each clip is repeated 20 times, all participants become familiar with the clips at an early stage in the experiment, rendering this explanation less likely. For the purposes of our discussion, we have focused on musical training, in particular, as the most likely driving force of this effect of musical expertise.

The data demonstrate that, over increased years of musical training, entrainment of slow oscillations to musical rhythms is enhanced (Fig. 5C). The predictions of the behavioral effects of this relationship are unclear, as our task did not include a temporal component. Our assumption (as stated in the Introduction) is that this mechanism reflects not rhythm perception per se but rather a precursor to it: the identification of individual notes in the sound stream. This ability to generate more precise representations for not only the timing but also the content of each note would likely facilitate a number of higher-order processes such as rhythm, melody, and harmony. Furthermore, it could facilitate synchronization between musicians. Whether one of these aspects of musical training drives this relationship, in particular, remains unclear and will be studied in future experiments.

A natural question raised by this study is whether increased entrainment is limited to musical stimuli or is transferred to other auditory signals such as speech. Given our conjecture that this entrainment mechanism is shared between speech and music, we suppose that it would be. Previous work has suggested that shared networks are likely the source of transfer between music and speech (43). Nevertheless, the speech signal is not as periodic as music, which may present difficulties in the transfer of such putative functionality. Still, as both types of complex sounds require the same function, broadly construed (the identification of meaningful units within a continuous sound stream), it is plausible that they perform this function using the same or a similar mechanism. If musical training enhances cortical entrainment to speech, for example, as has been reported for auditory brainstem responses (24, 27, 31, 44), then it may provide an explanation for findings related to music and dyslexia.

Studies of dyslexia (45–51) suggest that one underlying source of this complex disorder can be a disruption of the cortical entrainment process. This effect, when applied to speech learning, would result in degraded representations of phonological information and thus make it more difficult to map letters to sounds. The disorder has also been linked to poor rhythmic processing in both auditory and motor domains (52). An intervention study (53) demonstrated that rhythmic musical training improved the reading skills of poor readers by an amount similar to that of a well-established letter-based intervention. Our study hints at a mechanism for these results: providing musical and rhythmic training enhances cortical entrainment such that children with initially weak entrainment can improve, thereby alleviating one key hypothesized cause of dyslexia.

A large body of work (e.g., refs. 24–31, 53, and 54) shows that musical training elicits a range of changes to brain structure and function. The entrainment effects observed here are presumably the result of one (or many) of these changes. The question remains: which changes affect entrainment and how are they manifested through musical practice?

These questions cannot be answered within the scope of this study; however, we present several hypotheses to inspire future research. These hypotheses are not mutually exclusive, and it is likely that the reality lies closer to a combination of factors. One possibility is a bottom-up explanation. Musacchia et al. (31) demonstrated that musical training can increase amplitude and decrease latencies of responses in the brainstem to both speech and music. Thus, the entrainment effects we show could be the feedforward result of effects in auditory brainstem responses. An alternative (or additional) mechanism builds on a top-down perspective. The action of playing music could fine-tune the sensorimotor synchronization network (indexed here as central beta oscillations). This higher-level network could generate more regular and precise temporal predictions and—through feedback connections—modulate stimulus entrainment to be more accurate (cf. ref. 55). As musical training requires synchronous processing of somatosensory, motor, and visual areas, these areas will likely provide modulatory rhythmic input into auditory cortex, further enhancing entrainment to the musical stimulus. Last, the training effect we show could be due to recruitment of more brain regions for processing music. Peelle et al. (14) found that cortical entrainment was selectively increased in posterior middle temporal gyrus, associated with lexical access (56–60), when sentences were intelligible. This suggests a communication-through-coherence mechanism (61) in which relevant brain regions gain access to the stimulus by entraining to the parsing mechanism. A similar effect could be occurring in musicians where training manifests structural and functional changes, presumably, to optimize processing and production of musical stimuli. Further experiments will be required to dissociate between these hypotheses.

Sensorimotor Beta Oscillations.

Both accuracy and expertise are related to beta power. In nonmusicians, the power of beta reveals a direct relationship with behavioral performance (detecting pitch distortions). Furthermore, musicians show stronger beta power than nonmusicians before, during, and after target appearance, as well as throughout trials on which no target is present (Fig. S4). This suggests that the primary function reflected in this neural pattern is not specific to the task. A growing body of research points to a key role of beta oscillations in sensorimotor synchronization and in processing or predicting new rhythms (9, 10, 19, 23). Plausibly, then, music—with its inherent rhythmicity—also drives this system.

What we consider intriguing is that this sensorimotor network, with a primary function in rhythmic processing, affects performance on a nonrhythmic task. Why should beta oscillatory activity, whose main role in audition appears to be predicting “when,” facilitate processing of “what”? We suggest two possible explanations: first, beta oscillations predict only timing, as previously suggested (10, 13), and, in making accurate predictions, facilitate other mechanisms to process pitch content; or second, more provocatively, beta oscillations contain specific predictions not only of when but also of what. The data shown here are unable to resolve these two possibilities. Indeed, Arnal and Giraud (38) articulated a framework in which beta and gamma interact in the processing of predictions. Crucially, in their framework, beta oscillations are used to convey top-down predictions of “content” to lower-level regions.

A recent study (62) showed that rhythmicity (i.e., high temporal predictability) enhances detection, confirming a link between temporal and content processing. Similar effects occur in vision: reaction time in a detection task is modulated by the phase of delta oscillations (63). These studies support a dynamic attention theory (or varieties thereof) wherein attentional rhythms align to optimize processing of predicted inputs (64, 65). Our data go beyond this for one key reason: the target pitch distortion had no temporally valid prediction. It was uniformly distributed throughout the clips and not tied to a particular note in the stimulus. Therefore, the beta activity must be predicting the timing of the notes, not of the distortion. The surprising result then is that this process—the divining of temporal structure within music—facilitates pitch processing and distortion detection when such detection would seemingly have a minimal relationship to the music’s temporal structure. Accurately predicting when the beginning of the next note will come actually helps listeners to detect deviations in pitch at any point in the stimulus.

Unexpectedly, we did not find a direct relationship (by way of phase-amplitude nesting or power correlations) between delta entrainment and beta power. It does not follow that no direct relationship exists. This may be an issue with the task. Had the task been to detect a rhythmic distortion or to tap to the beat, one might expect more nesting between delta and beta, as has been recently shown using isochronous tones (9). Another alternative is that the added complexity of music engages a range of other, more complex brain functions, making it difficult to detect this specific relationship using the tools available in this study.

Conclusions.

We show that musical training strengthens oscillatory mechanisms designed for processing temporal structure in music. The size of these effects correlates not only with musical training but also with performance accuracy on a pitch-related task in nonmusicians. Such effects suggest an inherent link between temporal predictions and content-based processing, which should be considered in future research. These findings support theories in which oscillations play an important role in the processing of music, speech, and other sounds. Furthermore, they demonstrate the utility of music as an alternative to speech to probe complex functions of the auditory system.

Materials and Methods

Participants.

In three experiments, 39 right-handed participants (15 nonmusicians, 10 females, mean age, 25.9 y, range, 19–45; 12 nonmusicians, 7 females, mean age, 24.9 y, range, 18–44; 12 musicians, 5 females, mean age 26.2 y, range, 22–42, mean years of musical training, 19.25, range, 8–35) underwent MEG recording after providing informed consent. Participants received either payment or course credit. Handedness was determined using the Edinburgh Handedness Inventory (66). Nonmusicians (NM) were defined as having no more than 3 years of musical training and engaging in no current musical activity. Musicians (M) were defined as having at least 6 years of musical training, which was ongoing at the time of the experiment. Participants reported normal hearing and no neurological deficits. The study was approved by the local institutional review board (New York University’s Committee on Activities Involving Human Subjects).

Stimuli.

Notes per second.

We categorized the musical stimuli using a relatively coarse metric: notes per second. Note rates were determined analytically by calculating the peak of the modulation spectrum of each clip. The envelope of each clip was extracted using a bank of cochlear filters using tools from Yang et al. (67). The narrowband envelopes were then summed to yield a broadband envelope. The power spectrum of the envelope was estimated using Welch’s method as implemented in MATLAB (version 8.0; MathWorks). From each piece, three clips were chosen (∼13 s per clip).
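
As a rough illustration of the note-rate estimate described above, the sketch below locates the peak of an envelope's power spectrum. It is a simplified stand-in under stated assumptions: it uses a plain periodogram on a synthetic envelope rather than the cochlear filterbank and Welch estimate used in the study, and the function name, search range, and frequency step are hypothetical choices made for the example.

```python
import math

def envelope_peak_rate(envelope, fs, f_min=0.5, f_max=10.0, step=0.1):
    """Return the frequency (Hz) with the largest periodogram power.

    Assumption: a direct Fourier sum on a fixed frequency grid stands in
    for the Welch estimate described in the text.
    """
    n = len(envelope)
    mean = sum(envelope) / n
    centered = [x - mean for x in envelope]  # remove the DC component
    best_f, best_power = None, -1.0
    n_steps = int(round((f_max - f_min) / step))
    for k in range(n_steps + 1):
        f = f_min + k * step
        re = sum(x * math.cos(2 * math.pi * f * i / fs)
                 for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * i / fs)
                 for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f

# Synthetic 13-s envelope fluctuating at 5 notes per second (toy data).
fs = 50.0
toy_envelope = [1.0 + math.cos(2 * math.pi * 5.0 * i / fs)
                for i in range(int(13 * fs))]
note_rate = envelope_peak_rate(toy_envelope, fs)
```

For a real clip, the input would be the broadband envelope obtained by summing the narrowband envelopes, and a Welch estimate would reduce the variance of the spectrum before the peak is read off.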

Music.

Participants listened to long clips (∼13 s each) of music recorded from studio albums. The clips varied in their note rate from 0.5 to 8 nps. In particular, in each experiment, participants listened to natural piano pieces recorded by the pianist Murray Perahia. Pieces played by a single interpreter were chosen to limit, as best as possible, stylistic idiosyncrasies in the performance style. For each experiment, three pieces were chosen for their note rates and three clips were selected from each piece. Each of the nine clips was presented to each participant 20 times, in pseudorandomized order, leading to 180 presentations in total. Fig. 1 A and B illustrates the modulation spectra for the pieces. Fig. 1 C and D shows 5 s of an example clip from experiment 1: Brahms, Intermezzo in C (5.0 nps). Fig. 1C shows the spectrogram of the stimulus below 1 kHz, whereas Fig. 1D shows the music notation for the same duration.

Task.

In each experiment, four repetitions per clip (a total of 36 per participant) were randomly selected to contain a pitch distortion. These trials are referred to as target trials. The placement of the distortion was selected randomly from a uniform distribution throughout the clip (with the exception of a buffer of 1 s at the beginning and end of the clip). The distortion lasted 500 ms and changed the pitch of the spectrum approximately one quarter-step (1/24 of an octave). To create the distortion, we resampled the to-be-distorted segment by a factor of 2^(0.5/12), approximating a quarter-step pitch shift (either up or down) without a noticeable change in speed.
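
The size of the shift can be checked with a few lines of arithmetic. A quarter-step is 1/24 of an octave, which corresponds to a frequency ratio of 2^(1/24), or 50 cents. The sketch below is illustrative only: the function names are hypothetical, and whether the ratio or its inverse produces an upward shift depends on the resampling convention, which is an assumption here.

```python
import math

def quarter_step_ratio(direction=1):
    """Frequency ratio for a quarter-step shift (half a semitone).

    direction=+1 gives the upward ratio and direction=-1 the downward one
    (assumed convention for this example).
    """
    return 2.0 ** (direction * 0.5 / 12.0)

def ratio_in_cents(ratio):
    """Express a frequency ratio in cents (1200 cents per octave)."""
    return 1200.0 * math.log2(ratio)

up = quarter_step_ratio(+1)
down = quarter_step_ratio(-1)
shift_up_cents = ratio_in_cents(up)      # about +50 cents
shift_down_cents = ratio_in_cents(down)  # about -50 cents
```

Because the ratio differs from 1 by only about 3%, resampling a 500-ms segment changes its duration by a correspondingly small amount.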

At the end of each trial, participants were asked whether they heard a distortion in the previous clip, and if so, in which direction. They were given three possible responses (through button press): (i) the pitch shifted downward, (ii) there was no pitch distortion, and (iii) the pitch shifted upward. All participants were given feedback after each trial: correct, incorrect, or “close” (i.e., correctly detected a distortion but picked the wrong direction). Although NM participants were able to detect the distortion over the course of the experiment, most struggled throughout in determining the direction of this distortion (mean performance, 41.2%). As such, all behavioral analyses pooled responses (i) and (iii) as an indication that a distortion was detected regardless of the pitch shift direction (mean performance, 74.1%).

MEG Recording.

Neuromagnetic signals were measured using a 157-channel whole-head axial gradiometer system (Kanazawa Institute of Technology, Kanazawa, Japan). The MEG data were acquired with a sampling rate of 1,000 Hz and filtered on-line with a low-pass filter of 200 Hz and a notch filter at 60 Hz. The data were high-pass filtered postacquisition at 0.1 Hz using a sixth-order Butterworth filter.

Behavior Analysis.

d′ was calculated using a correction for perfect hits or zero false alarms (which occurred often with M participants). In such cases, the tally of hits (or false alarms) was corrected by a half-count such that hits would total 1 − 1/(2N) and false alarms would equal 1/(2N), where N is the total number of targets. The correction is one often used by psychophysicists, initially presented by Murdock and Ogilvie (68) and formalized by Macmillan and Kaplan (69) as the 1/(2N) rule. The correction follows the assumption that participants’ true hit rates and false alarms (given infinite trials) are not perfect and that the true values in such cases lie somewhere between 1 − 1/N and 1 (hits) or 0 and 1/N (false alarms).
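
A minimal sketch of the corrected d′ computation described above, using only the Python standard library. The function names are hypothetical, and the use of a separate denominator for false alarms (the number of nontarget trials) is an assumption made for the example; the 36 target and 144 nontarget trials follow from the 180 presentations per participant.

```python
from statistics import NormalDist

def corrected_rate(count, n):
    """Proportion with the 1/(2N) rule applied to rates of exactly 0 or 1."""
    rate = count / n
    if rate == 0.0:
        return 1.0 / (2 * n)
    if rate == 1.0:
        return 1.0 - 1.0 / (2 * n)
    return rate

def d_prime(hits, n_targets, false_alarms, n_nontargets):
    """d' = z(hit rate) - z(false-alarm rate), with corrected extremes."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = corrected_rate(hits, n_targets)
    fa_rate = corrected_rate(false_alarms, n_nontargets)
    return z(hit_rate) - z(fa_rate)

# A participant with perfect hits and no false alarms.
perfect = d_prime(36, 36, 0, 144)
# A participant responding at chance (equal hit and false-alarm rates).
chance = d_prime(18, 36, 72, 144)
```

Without the correction, the perfect-performance case would require the inverse normal of 0 or 1, which is undefined; the half-count adjustment keeps d′ finite.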

MEG Analysis.

The MEG analysis was performed the same way for each experiment. All recorded responses were noise-reduced off-line using a combination of the CALM algorithm (70) followed by a time-shifted principal component analysis (71). All further preprocessing and analysis was performed using the FieldTrip toolbox (www.fieldtriptoolbox.org/) (72) in MATLAB. Channel data were visually inspected, and each channel with obvious artifacts such as channel jumps or resets was repaired using a weighted average of its neighbors. An independent component analysis as implemented in FieldTrip was used to correct for eye blink-, eye movement-, and heartbeat-related artifacts.

Time–frequency (TF) information from 0.3–50 Hz was extracted using a Morlet wavelet (m = 7) analysis in 50-ms steps for whole-trial analyses and 10-ms steps for analysis of target detection. See SI Materials and Methods for further details. Although we show effects only in specific bands, all tests were run on the entire frequency range (0.3–50 Hz) unless otherwise stated. Power effects refer to total (induced and evoked) rather than just evoked power.

Intertrial phase coherence.

Cortical entrainment was calculated as it was first analyzed by Luo and Poeppel (2), where a detailed explanation of the analysis can be found. The analysis compares the phase of TF representations across multiple repetitions of the same stimulus and yields a larger number (closer to 1) when the phase at each time point across trials is consistent. The equation is as follows:

$$\mathrm{ITC}_{ij} = \left(\frac{1}{N}\sum_{n=1}^{N}\cos\theta_{ijn}\right)^{2} + \left(\frac{1}{N}\sum_{n=1}^{N}\sin\theta_{ijn}\right)^{2}$$

where θ represents the phase at each time point i, frequency j, and trial n, and N is the total number of trials of one stimulus.
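
A minimal sketch of the phase-consistency measure described above, for a single time-frequency point. It assumes the squared mean resultant length form (squared mean cosine plus squared mean sine across trials); the function name and the toy phase values are hypothetical.

```python
import math

def intertrial_coherence(phases):
    """Phase consistency across trials at one time-frequency point.

    phases: phase angles in radians, one per trial.
    Returns a value between 0 (no consistency) and 1 (identical phases).
    """
    n = len(phases)
    mean_cos = sum(math.cos(p) for p in phases) / n
    mean_sin = sum(math.sin(p) for p in phases) / n
    return mean_cos ** 2 + mean_sin ** 2

# Identical phase on all 20 repetitions: coherence close to 1.
locked = intertrial_coherence([0.3] * 20)
# Phases spread evenly around the circle: coherence close to 0.
spread = intertrial_coherence([2 * math.pi * k / 20 for k in range(20)])
```

In a full analysis, this computation would be repeated for every time point and frequency of the wavelet decomposition, using the repetitions of one clip as trials.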

ITC frequency specificity.

To assess quantitatively the frequency specificity reported in the ITC analysis, we averaged the ITC across time and then calculated the peak response from 0.3 to 10 Hz, normalized by the mean in the same range. To test for significance, we compared this value against a null distribution of the same metric calculated over each iteration of randomized labels used to calculate significance in ITC (Statistics).
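
The specificity metric described above reduces to a peak-to-mean ratio over the time-averaged ITC spectrum. The sketch below illustrates it on made-up values; the function name and the toy spectrum are assumptions, and the comparison against the label-shuffled null distribution is omitted.

```python
def peak_to_mean(itc_spectrum):
    """Peak of a time-averaged ITC spectrum divided by its mean.

    itc_spectrum: ITC values at the frequencies of interest
    (0.3 to 10 Hz in the text).
    """
    mean_value = sum(itc_spectrum) / len(itc_spectrum)
    return max(itc_spectrum) / mean_value

# Toy spectrum with a single clear peak (hypothetical values).
toy_spectrum = [0.1, 0.1, 0.4, 0.1, 0.1]
specificity = peak_to_mean(toy_spectrum)
```

A flat spectrum gives a ratio of 1, so larger values indicate that coherence is concentrated at a particular frequency.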

ITC correlation with proportion of hits.

To test the relationship between cortical entrainment and listening behavior, we performed a Pearson correlation between ITC and the proportion of hits in the distortion detection task. ITC at the relevant neural frequency for each note rate was averaged across time and across clip to get a single value for each subject. These values were correlated with the hit rate for each subject. However, even among the nonmusicians, a few subjects reached ceiling performance, suggesting that a linear analysis is inappropriate for these data. As such, we transformed the ITC values by 1/x², where x corresponds to the original ITC values. Doing so should account for the asymptotic nature of the behavioral values. We then performed the Pearson correlation between the behavior and this ITC transformation to obtain the results shown in Fig. 4B. It is worth noting, however, that similar (although slightly weaker) results were found using a typical Pearson correlation on the original values (R² = 0.56, P = 0.0054).
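
The transformation and correlation can be sketched as follows. The per-subject values are invented for illustration and are not taken from the study; the function names are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def inverse_square(values):
    """Apply the 1/x^2 transformation to each value."""
    return [1.0 / (v * v) for v in values]

# Hypothetical per-subject ITC values and hit rates (toy data).
itc_values = [0.10, 0.12, 0.15, 0.20, 0.25]
hit_rates = [0.55, 0.70, 0.80, 0.90, 0.95]

r_raw = pearson_r(itc_values, hit_rates)
r_transformed = pearson_r(inverse_square(itc_values), hit_rates)
```

Because 1/x² decreases as x increases, a positive relationship between ITC and hit rate appears as a negative coefficient after the transformation; the squared coefficient is unaffected by this sign change.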

Power contrast: hits vs. misses.

In the analysis shown in Fig. 6A, we grouped the target trials for all subjects (36 targets * 12 subjects = 432 trials). We then contrasted the mean hit response (333 trials) and the mean miss response (99 trials) using a t test. For significance testing, we compared the result against a permutation test (10,000 iterations) in which we randomly split the trials into two groups of 333 and 99 trials and conducted t tests. We also conducted the analysis by randomly selecting 99 of the hit trials for comparison over 10,000 iterations and calculated the mean T statistic overall. This had no effect on the overall result of the analysis.

Because of the limited number of target trials per subject, this analysis groups trials across subjects. It must be noted that, although we are comparing power and behavior in grouped trials, the effect shown mixes within-subject and across-subject differences. This point disallows any dissociation of which difference drives this effect. It should, however, be noted that the majority of the variation in this dataset is due to intrasubject rather than intersubject variation (76.6%). This suggests data ranges are highly overlapping across subjects and thus that intersubject variability is unlikely to be the driving force of this effect.

Channel selection.

As we focused on auditory cortical responses, we used a functional auditory localizer to select channels for each subject. Channels were selected for further analysis on the basis of the magnitude of their recorded M100 evoked response elicited by a 400-ms, 1,000-Hz sinusoidal tone recorded in a pretest and averaged over 200 trials. In each hemisphere, the 10 channels with the largest M100 response were selected for analysis. This method of channel selection allowed us to select channels recording signals generated in auditory cortex and surrounding areas (73, 74) while avoiding “double-dipping” bias (i.e., selecting only the channels that best show an effect and then testing for that effect in the same channels). After finding an effect using these channels, we investigated the topography of the response by running the analysis in the significant frequency band on every channel to see whether we could glean more regarding the source of the significant activity. Fig. S1 shows the selected channels (shaded by the number of subjects for which that channel was selected) overlaid on the topography of cortical entrainment in each experiment. The figure shows a high amount of overlap.

Statistics.

Unless otherwise stated, statistics were performed using a permutation test in which group labels (e.g., M vs. NM, or stimulus note rate) were randomized and differences of the new groups were calculated over 10,000 iterations to create a control distribution. P values were determined from the proportion of the control distribution that was equal to or greater than the actual test statistic (using the original group labels). Multiple comparisons were controlled for using FDR (75) correction at P < 0.05. In the case of tests over MEG sensors, a cluster permutation was used, as first presented by Maris and Oostenveld (76).
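
The label-permutation procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the test statistic is a simple difference of group means, the FDR step assumes the Benjamini-Hochberg procedure, and all function names and data are hypothetical.

```python
import random

def permutation_p_value(group_a, group_b, n_iter=10000, seed=0):
    """One-sided permutation test on the difference of group means.

    Returns the proportion of shuffled differences that are equal to or
    greater than the observed difference.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    count = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)  # randomize the group labels
        shuffled_a, shuffled_b = pooled[:n_a], pooled[n_a:]
        diff = sum(shuffled_a) / n_a - sum(shuffled_b) / len(shuffled_b)
        if diff >= observed:
            count += 1
    return count / n_iter

def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking the tests rejected at FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            cutoff_rank = rank  # largest rank meeting the criterion
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            rejected[idx] = True
    return rejected

# Toy example: two clearly separated groups.
p = permutation_p_value([5, 6, 7, 8], [1, 2, 3, 4], n_iter=2000)
decisions = benjamini_hochberg([0.001, 0.04, 0.03, 0.5])
```

The cluster-based permutation used for tests over MEG sensors adds a spatial clustering step that is not shown here.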

Acknowledgments

We thank Jeff Walker for his excellent assistance in running the magnetoencephalography (MEG) system and Luc Arnal, Greg Cogan, Nai Ding, and Jess Rowland for comments on the manuscript. This research was supported by the National Institutes of Health under Grant R01 DC05660 (to D.P.) and by the National Science Foundation Graduate Research Fellowship Program under Grant DGE 1342536 (to K.B.D.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1508431112/-/DCSupplemental.

References

- 1. Cogan GB, Poeppel D. A mutual information analysis of neural coding of speech by low-frequency MEG phase information. J Neurophysiol. 2011;106(2):554–563. doi: 10.1152/jn.00075.2011.

- 2. Luo H, Poeppel D. Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron. 2007;54(6):1001–1010. doi: 10.1016/j.neuron.2007.06.004.

- 3. Kerlin JR, Shahin AJ, Miller LM. Attentional gain control of ongoing cortical speech representations in a “cocktail party.” J Neurosci. 2010;30(2):620–628. doi: 10.1523/JNEUROSCI.3631-09.2010.

- 4. Schroeder CE, Lakatos P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 2009;32(1):9–18. doi: 10.1016/j.tins.2008.09.012.

- 5. Gross J, et al. Speech rhythms and multiplexed oscillatory sensory coding in the human brain. PLoS Biol. 2013;11(12):e1001752. doi: 10.1371/journal.pbio.1001752.

- 6. Ghitza O. On the role of theta-driven syllabic parsing in decoding speech: Intelligibility of speech with a manipulated modulation spectrum. Front Psychol. 2012;3:238. doi: 10.3389/fpsyg.2012.00238.

- 7. Obleser J, Weisz N. Suppressed alpha oscillations predict intelligibility of speech and its acoustic details. Cereb Cortex. 2012;22(11):2466–2477. doi: 10.1093/cercor/bhr325.

- 8. Weisz N, Hartmann T, Müller N, Lorenz I, Obleser J. Alpha rhythms in audition: Cognitive and clinical perspectives. Front Psychol. 2011;2:73. doi: 10.3389/fpsyg.2011.00073.

- 9. Arnal LH, Doelling KB, Poeppel D. Delta-beta coupled oscillations underlie temporal prediction accuracy. Cereb Cortex. 2015;25(9):3077–3085. doi: 10.1093/cercor/bhu103.

- 10. Fujioka T, Trainor LJ, Large EW, Ross B. Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. J Neurosci. 2012;32(5):1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012.

- 11. Fujioka T, Trainor LJ, Large EW, Ross B. Beta and gamma rhythms in human auditory cortex during musical beat processing. Ann N Y Acad Sci. 2009;1169(1):89–92. doi: 10.1111/j.1749-6632.2009.04779.x.

- 12. Large EW, Snyder JS. Pulse and meter as neural resonance. Ann N Y Acad Sci. 2009;1169(1):46–57. doi: 10.1111/j.1749-6632.2009.04550.x.

- 13. Arnal LH. Predicting “when” using the motor system’s beta-band oscillations. Front Hum Neurosci. 2012;6:225. doi: 10.3389/fnhum.2012.00225.

- 14. Peelle JE, Gross J, Davis MH. Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cereb Cortex. 2013;23(6):1378–1387. doi: 10.1093/cercor/bhs118.

- 15. Doelling KB, Arnal LH, Ghitza O, Poeppel D. Acoustic landmarks drive delta-theta oscillations to enable speech comprehension by facilitating perceptual parsing. Neuroimage. 2014;85(Pt 2):761–768. doi: 10.1016/j.neuroimage.2013.06.035.

- 16. Henry MJ, Obleser J. Frequency modulation entrains slow neural oscillations and optimizes human listening behavior. Proc Natl Acad Sci USA. 2012;109(49):20095–20100. doi: 10.1073/pnas.1213390109.

- 17. Luo H, Poeppel D. Cortical oscillations in auditory perception and speech: Evidence for two temporal windows in human auditory cortex. Front Psychol. 2012;3:170. doi: 10.3389/fpsyg.2012.00170.

- 18. Lakatos P, et al. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94(3):1904–1911. doi: 10.1152/jn.00263.2005.

- 19. Krause V, Schnitzler A, Pollok B. Functional network interactions during sensorimotor synchronization in musicians and non-musicians. Neuroimage. 2010;52(1):245–251. doi: 10.1016/j.neuroimage.2010.03.081.

- 20. Hari R, Salmelin R. Human cortical oscillations: A neuromagnetic view through the skull. Trends Neurosci. 1997;20(1):44–49. doi: 10.1016/S0166-2236(96)10065-5.

- 21. Farmer SF. Rhythmicity, synchronization and binding in human and primate motor systems. J Physiol. 1998;509(Pt 1):3–14. doi: 10.1111/j.1469-7793.1998.003bo.x.

- 22. Repp BH. Sensorimotor synchronization and perception of timing: Effects of music training and task experience. Hum Mov Sci. 2010;29(2):200–213. doi: 10.1016/j.humov.2009.08.002.

- 23. Krause V, Pollok B, Schnitzler A. Perception in action: The impact of sensory information on sensorimotor synchronization in musicians and non-musicians. Acta Psychol (Amst). 2010;133(1):28–37. doi: 10.1016/j.actpsy.2009.08.003.

- 24. Wong PC, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat Neurosci. 2007;10(4):420–422. doi: 10.1038/nn1872.

- 25. Pantev C, et al. Increased auditory cortical representation in musicians. Nature. 1998;392(6678):811–814. doi: 10.1038/33918.

- 26. Zatorre RJ. Functional specialization of human auditory cortex for musical processing. Brain. 1998;121(Pt 10):1817–1818. doi: 10.1093/brain/121.10.1817.

- 27. Kraus N, et al. Music enrichment programs improve the neural encoding of speech in at-risk children. J Neurosci. 2014;34(36):11913–11918. doi: 10.1523/JNEUROSCI.1881-14.2014.

- 28. Gaser C, Schlaug G. Brain structures differ between musicians and non-musicians. J Neurosci. 2003;23(27):9240–9245. doi: 10.1523/JNEUROSCI.23-27-09240.2003.

- 29. Ohnishi T, et al. Functional anatomy of musical perception in musicians. Cereb Cortex. 2001;11(8):754–760. doi: 10.1093/cercor/11.8.754.

- 30. Schlaug G, Jäncke L, Huang Y, Staiger JF, Steinmetz H. Increased corpus callosum size in musicians. Neuropsychologia. 1995;33(8):1047–1055. doi: 10.1016/0028-3932(95)00045-5.

- 31. Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc Natl Acad Sci USA. 2007;104(40):15894–15898. doi: 10.1073/pnas.0701498104.

- 32. Grahn JA, Rowe JB. Finding and feeling the musical beat: Striatal dissociations between detection and prediction of regularity. Cereb Cortex. 2013;23(4):913–921. doi: 10.1093/cercor/bhs083.

- 33. Grahn JA, Rowe JB. Feeling the beat: Premotor and striatal interactions in musicians and nonmusicians during beat perception. J Neurosci. 2009;29(23):7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009.

- 34. Grahn JA, McAuley JD. Neural bases of individual differences in beat perception. Neuroimage. 2009;47(4):1894–1903. doi: 10.1016/j.neuroimage.2009.04.039.

- 35. Nozaradan S, Peretz I, Mouraux A. Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J Neurosci. 2012;32(49):17572–17581. doi: 10.1523/JNEUROSCI.3203-12.2012.

- 36. Nozaradan S, Peretz I, Missal M, Mouraux A. Tagging the neuronal entrainment to beat and meter. J Neurosci. 2011;31(28):10234–10240. doi: 10.1523/JNEUROSCI.0411-11.2011.

- 37.Nozaradan S. Exploring how musical rhythm entrains brain activity with electroencephalogram frequency-tagging. Philos Trans R Soc Lond B Biol Sci. 2014;369(1658):20130393. doi: 10.1098/rstb.2013.0393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Arnal LH, Giraud AL. Cortical oscillations and sensory predictions. Trends Cogn Sci. 2012;16(7):390–398. doi: 10.1016/j.tics.2012.05.003. [DOI] [PubMed] [Google Scholar]

- 39. Chen JL, Penhune VB, Zatorre RJ. Moving on time: Brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J Cogn Neurosci. 2008;20(2):226–239. doi: 10.1162/jocn.2008.20018.

- 40. Chen JL, Penhune VB, Zatorre RJ. Listening to musical rhythms recruits motor regions of the brain. Cereb Cortex. 2008;18(12):2844–2854. doi: 10.1093/cercor/bhn042.

- 41. Händel BF, Haarmeier T, Jensen O. Alpha oscillations correlate with the successful inhibition of unattended stimuli. J Cogn Neurosci. 2011;23(9):2494–2502. doi: 10.1162/jocn.2010.21557.

- 42. Jensen O, et al. On the human sensorimotor-cortex beta rhythm: Sources and modeling. Neuroimage. 2005;26(2):347–355. doi: 10.1016/j.neuroimage.2005.02.008.

- 43. Patel AD. Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Front Psychol. 2011;2:142. doi: 10.3389/fpsyg.2011.00142.

- 44. Tierney A, Krizman J, Skoe E, Johnston K, Kraus N. High school music classes enhance the neural processing of speech. Front Psychol. 2013;4:855. doi: 10.3389/fpsyg.2013.00855.

- 45. Hämäläinen JA, Rupp A, Soltész F, Szücs D, Goswami U. Reduced phase locking to slow amplitude modulation in adults with dyslexia: An MEG study. Neuroimage. 2012;59(3):2952–2961. doi: 10.1016/j.neuroimage.2011.09.075.

- 46. Goswami U, et al. Language-universal sensory deficits in developmental dyslexia: English, Spanish, and Chinese. J Cogn Neurosci. 2011;23(2):325–337. doi: 10.1162/jocn.2010.21453.

- 47. Goswami U. A temporal sampling framework for developmental dyslexia. Trends Cogn Sci. 2011;15(1):3–10. doi: 10.1016/j.tics.2010.10.001.

- 48. Leong V, Goswami U. Impaired extraction of speech rhythm from temporal modulation patterns in speech in developmental dyslexia. Front Hum Neurosci. 2014;8:96. doi: 10.3389/fnhum.2014.00096.

- 49. Leong V, Goswami U. Assessment of rhythmic entrainment at multiple timescales in dyslexia: Evidence for disruption to syllable timing. Hear Res. 2014;308:141–161. doi: 10.1016/j.heares.2013.07.015.

- 50. Lehongre K, Morillon B, Giraud AL, Ramus F. Impaired auditory sampling in dyslexia: Further evidence from combined fMRI and EEG. Front Hum Neurosci. 2013;7:454. doi: 10.3389/fnhum.2013.00454.

- 51. Lehongre K, Ramus F, Villiermet N, Schwartz D, Giraud AL. Altered low-gamma sampling in auditory cortex accounts for the three main facets of dyslexia. Neuron. 2011;72(6):1080–1090. doi: 10.1016/j.neuron.2011.11.002.

- 52. Thomson JM, Goswami U. Rhythmic processing in children with developmental dyslexia: Auditory and motor rhythms link to reading and spelling. J Physiol Paris. 2008;102(1–3):120–129. doi: 10.1016/j.jphysparis.2008.03.007.

- 53. Bhide A, Power A, Goswami U. A rhythmic musical intervention for poor readers: A comparison of efficacy with a letter-based intervention. Mind Brain Educ. 2013;7(2):113–123.

- 54. Schlaug G, Jäncke L, Huang Y, Steinmetz H. In vivo evidence of structural brain asymmetry in musicians. Science. 1995;267(5198):699–701. doi: 10.1126/science.7839149.

- 55. Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: Auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8(7):547–558. doi: 10.1038/nrn2152.

- 56. Binder JR, et al. Human brain language areas identified by functional magnetic resonance imaging. J Neurosci. 1997;17(1):353–362. doi: 10.1523/JNEUROSCI.17-01-00353.1997.

- 57. Rissman J, Eliassen JC, Blumstein SE. An event-related fMRI investigation of implicit semantic priming. J Cogn Neurosci. 2003;15(8):1160–1175. doi: 10.1162/089892903322598120.

- 58. Rodd JM, Davis MH, Johnsrude IS. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cereb Cortex. 2005;15(8):1261–1269. doi: 10.1093/cercor/bhi009.

- 59. Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- 60. Lewis G, Poeppel D. The role of visual representations during the lexical access of spoken words. Brain Lang. 2014;134:1–10. doi: 10.1016/j.bandl.2014.03.008.

- 61. Fries P. A mechanism for cognitive dynamics: Neuronal communication through neuronal coherence. Trends Cogn Sci. 2005;9(10):474–480. doi: 10.1016/j.tics.2005.08.011.

- 62. ten Oever S, Schroeder CE, Poeppel D, van Atteveldt N, Zion-Golumbic E. Rhythmicity and cross-modal temporal cues facilitate detection. Neuropsychologia. 2014;63:43–50. doi: 10.1016/j.neuropsychologia.2014.08.008.

- 63. Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320(5872):110–113. doi: 10.1126/science.1154735.

- 64. Large EW, Jones MR. The dynamics of attending: How people track time-varying events. Psychol Rev. 1999;106(1):119–159.

- 65. Song K, Meng M, Chen L, Zhou K, Luo H. Behavioral oscillations in attention: Rhythmic α pulses mediated through θ band. J Neurosci. 2014;34(14):4837–4844. doi: 10.1523/JNEUROSCI.4856-13.2014.

- 66. Oldfield RC. The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- 67. Yang X, Wang K, Shamma SA. Auditory representations of acoustic signals. IEEE Trans Inf Theory. 1992;38(2):824–839.

- 68. Murdock BB, Jr, Ogilvie JC. Binomial variability in short-term memory. Psychol Bull. 1968;70(4):256–260. doi: 10.1037/h0026259.

- 69. Macmillan NA, Kaplan HL. Detection theory analysis of group data: Estimating sensitivity from average hit and false-alarm rates. Psychol Bull. 1985;98(1):185–199.

- 70. Adachi Y, Shimogawara M, Higuchi M, Haruta Y, Ochiai M. Reduction of nonperiodic environmental magnetic noise in MEG measurement by continuously adjusted least square method. IEEE Trans Appl Supercond. 2001;11:669–672.

- 71. de Cheveigné A, Simon JZ. Denoising based on time-shift PCA. J Neurosci Methods. 2007;165(2):297–305. doi: 10.1016/j.jneumeth.2007.06.003.

- 72. Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- 73. Reite M, et al. Auditory M100 component 1: Relationship to Heschl’s gyri. Brain Res Cogn Brain Res. 1994;2(1):13–20. doi: 10.1016/0926-6410(94)90016-7.

- 74. Lütkenhöner B, Steinsträter O. High-precision neuromagnetic study of the functional organization of the human auditory cortex. Audiol Neurootol. 1998;3(2–3):191–213. doi: 10.1159/000013790.

- 75. Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol. 1995;57(1):289–300.

- 76. Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.