Abstract

The human connectome represents a network map of the brain's wiring diagram and the pattern into which its connections are organized is thought to play an important role in cognitive function. The generative rules that shape the topology of the human connectome remain incompletely understood. Earlier work in model organisms has suggested that wiring rules based on geometric relationships (distance) can account for many but likely not all topological features. Here we systematically explore a family of generative models of the human connectome that yield synthetic networks designed according to different wiring rules combining geometric and a broad range of topological factors. We find that a combination of geometric constraints with a homophilic attachment mechanism can create synthetic networks that closely match many topological characteristics of individual human connectomes, including features that were not included in the optimization of the generative model itself. We use these models to investigate a lifespan dataset and show that, with age, the model parameters undergo progressive changes, suggesting a rebalancing of the generative factors underlying the connectome across the lifespan.

Keywords: Graph theory, Generative models, Connectome

Highlights

-

•

We systematically compare thirteen generative models for the human connectome.

-

•

The best-fitting model combines a distance penalty with homophily.

-

•

Our results are consistent across three datasets comprising 380 total participants.

-

•

The distance penalty weakens monotonically across the human lifespan.

Introduction

The human connectome represents a network map of the brain in which regions and inter-regional connections are rendered into the nodes and edges of a graph. In this format, the connectome can be analyzed using tools from network science and graph theory (Bullmore and Sporns, 2009, Sporns, 2014). Network analyses of the connectome have revealed a host of attributes that are likely essential for healthy brain function, including hierarchical and multi-scale modules (Bassett et al., 2010, Betzel et al., 2013), highly connected, highly central hubs (Hagmann et al., 2008, van den Heuvel and Sporns, 2013), and a rich club of mutually connected, high-degree regions (van den Heuvel and Sporns, 2011). Additionally, the connectome's topology (the pattern in which its connections are configured) is thought to play an important role in shaping task-evoked and spontaneous brain activity (Hermundstad et al., 2013, Goñi et al., 2014, Mišić et al., 2015).

The connectome is an example of a physical network whose nodes and edges are embedded in Euclidean space (Barthélemy, 2011). Consequently, the formation of connections carries a material and metabolic cost that increases with connection length (Bullmore and Sporns, 2012). To remain within the limits of viability, the human connectome maintains disproportionally many short-range (i.e. low cost) connections. Despite the importance of conserving connection cost, previous work in model organisms has shown that wiring minimization alone cannot account for all the connectome's topological features (Kaiser and Hilgetag, 2006, Costa et al., 2007a). Rather, connectome networks strike a balance wherein the formation of costly features like hubs and rich clubs trades off with a drive to reduce the total cost of wiring.

The conditions that allow this tradeoff to emerge are the central topic of this paper, and one that we explore using generative models applied to human connectome data obtained from individual participants. In the context of complex networks, generative modeling refers to a set of approaches for creating synthetic networks with properties similar to those of real-world networks. One example among many (Watts and Strogatz, 1998, Kumar et al., 2000, Solé et al., 2002, Vázquez et al., 2003, Dall and Christensen, 2002, Middendorf et al., 2005) is the preferential attachment model (Barabási and Albert, 1999), which generates synthetic networks with heavy-tailed degree distributions similar to those observed in many real-world socio-technical networks.

In this report we build upon and expand the tradition of generative models for brain networks by fitting many different generative models to single-subject human connectome data and comparing models in terms of their overall performance. The models we investigate combine two distinct mechanisms for network growth: 1) geometric wiring rules which influence connection formation by favoring either shorter or longer connections and 2) non-geometric rules that ignore the distance between two regions and, instead, form connections on the basis of some shared topological relationship. Some of the models we consider implement rules that mimic well-established growth mechanisms like geometric random graphs, preferential attachment, degree assortativity, and homophilic attraction. In all cases, our aim is to discover wiring rules that produce synthetic networks with properties similar to those of observed connectomes.

To this end, we tuned our models' parameters to generate realistic synthetic networks. We found that the best-fitting model was one that penalized the formation of longer connections while increasing the likelihood of forming connections between brain regions with similar connectivity profiles (homophily). We cross-validated this result, comparing synthetic and observed connectomes along measures other than those used in the optimization process and using three different datasets. Finally, we fit the optimal generative model to data from a lifespan study (with ages ranging from 7 to 85 years) and found that the penalty on long-distance connections weakened monotonically with age. Older subjects' connectomes were fit poorly compared to those of younger individuals, a result driven primarily by an inability to match edge length and clustering coefficient distributions. This suggests that the human connectome undergoes a characteristic reorganization across the lifespan.

Methods

Data acquisition and processing

A total of N = 40 healthy participants underwent an MRI session on a 3-T Siemens Trio scanner with a 32-channel head-coil. The magnetization-prepared rapid graident-echo (MPRAGE) sequence was 1 mm in-plane resolution and 1.2 mm slice thickness. The DSI sequence included 128 diffusion-weighted volumes plus one reference b0 volume, maximum b-value of 8000 s · mm− 2 and 2.2 × 2.2 × 3.0 mm voxel size. The echo planar imaging (EPI) sequence was 3.3 mm in-plane resolution and 0.3 mm slice thickness with TR of 1920 ms. DSI and MPRAGE data were processing using the Connectome Mapping Toolkit (Daducci et al., 2012). Segmentation of gray and white matter was based on MPRAGE volumes. The cerebral cortex was parcellated into n = 219 ROIs (Cammoun et al., 2012), of which we retained the 108 comprising the right hemisphere. We enforced an average connectome density of ρ ≈ 10%, resulting in a streamline threshold of 27 streamlines (i.e. a minimum of 27 streamlines must have connected two regions for us to consider the presence of an anatomical connection). These same data have been analyzed elsewhere (Avena-Koenigsberger et al., 2014, Goñi et al., 2014, Betzel et al., 2013).

Generative algorithm

In this report we construct synthetic networks using a generative model. The algorithm for producing synthetic networks is simple. Starting with a sparse seed network (62 bi-directional edges that were common across all 40 participants), edges were added one at a time over a series of steps until M total connections were placed (where M = 576 ± 57 connections across subjects). At each step we allow for the possibility that any pair of unconnected nodes, u and v, will be connected. Connections are formed probabilistically, where the relative probability of connection formation is given by:

| (1) |

In this expression E(u, v) denotes the Euclidean distance between brain regions u and v. The exponent η controls the characteristic connection length. When η < 0, short-range connections are favored, while η > 0 increases the probability of forming longer connections. The other term, K(u, v), represents an arbitrary non-geometric relationship between nodes u and v and the value of γ scales its relative importance. The precise definition of K(u, v) is flexible and can be varied to realize different wiring rules. For instance, setting K(u, v) = kukv and γ > 0 implements a variant of preferential attachment, wherein higher degree nodes are more likely to become connected. Alternative definitions can be used to implement rules such as degree assortativity (e.g. K(u, v) = |ku − kv|, where nodes with similar/dissimilar numbers of connections preferentially connect to one another) or homophily (e.g. K(u, v) = ∑wauwawv where connections form between nodes with more or fewer common neighbors). In Table 1 we show a complete list of all non-geometric wiring rules. We limit our analysis to generative models whose wiring rules include only two components, though we could accommodate more components, in principle. We impose this limit in an effort to focus on highly simple, idealized models of network growth.

Table 1.

Complete list of generative models. The first two columns show each model's name and the non-geometric wiring rule. The remaining columns indicate sample mean ± standard error energy (E), and the four KS statistics, KSk, KSb, KSe, and KSc.

| Name | K(u, v) | E | η | γ | KSk | KSb | KSe | KSc |

|---|---|---|---|---|---|---|---|---|

| Clu. avg. | 0.19 ± 0:02 | − 3.06 ± 0.48 | − 5.75 ± 1.62 | 0.14 ± 0.03 | 0.18 ± 0.03 | 0.17 ± 0.03 | 0.15 ± 0.03 | |

| Clu. diff. | |cu − cv| | 0.19 ± 0.02 | − 3.13 ± 0.49 | − 5.85 ± 2.07 | 0.15 ± 0.03 | 0.18 ± 0.03 | 0.17 ± 0.03 | 0.16 ± 0.03 |

| Clu. max. | max[cu, cv] | 0.19 ± 0.02 | − 3.14 ± 0.49 | − 5.75 ± 1.97 | 0.15 ± 0.03 | 0.18 ± 0.03 | 0.17 ± 0.03 | 0.15 ± 0.03 |

| Clu. min | min[cu, cv] | 0.24 ± 0.03 | − 3.60 ± 0.41 | − 4.04 ± 1.09 | 0.12 ± 0.03 | 0.19 ± 0.05 | 0.23 ± 0.03 | 0.17 ± 0.05 |

| Clu. prod. | cucv | 0.20 ± 0.02 | − 3.14 ± 0.39 | − 3.44 ± 1.00 | 0.11 ± 0.03 | 0.18 ± 0.03 | 0.19 ± 0.03 | 0.15 ± 0.04 |

| Deg. avg. | 0.24 ± 0.02 | − 3.76 ± 0.57 | 2.47 ± 0.38 | 0.18 ± 0.05 | 0.18 ± 0.05 | 0.23 ± 0.03 | 0.19 ± 0.04 | |

| Deg. diff. | |ku − kv| | 0.25 ± 0.02 | − 4.55 ± 0.76 | − 1.03 ± 2.61 | 0.13 ± 0.04 | 0.19 ± 0.04 | 0.24 ± 0.03 | 0.21 ± 0.05 |

| Deg. max. | max[ku, kv] | 0.25 ± 0.02 | − 4.02 ± 0.60 | 2.20 ± 0.43 | 0.13 ± 0.04 | 0.18 ± 0.05 | 0.24 ± 0.03 | 0.18 ± 0.05 |

| Deg. min. | min[ku, kv] | 0.25 ± 0.02 | − 3.99 ± 0.64 | 2.03 ± 0.47 | 0.20 ± 0.05 | 0.16 ± 0.04 | 0.24 ± 0.03 | 0.21 ± 0.04 |

| Deg. prod. | kukv | 0.26 ± 0.02 | − 3.83 ± 0.70 | 1.14 ± 0.32 | 0.19 ± 0.07 | 0.16 ± 0.06 | 0.24 ± 0.04 | 0.22 ± 0.04 |

| Matching | 0.12 ± 0.02 | − 0.98 ± 0.37 | 0.42 ± 0.04 | 0.10 ± 0.03 | 0.10 ± 0.02 | 0.10 ± 0.03 | 0.11 ± 0.02 | |

| Nghbrs. | ∑wauwawv | 0.14 ± 0.02 | − 1.18 ± 0.43 | 0.35 ± 0.04 | 0.11 ± 0.03 | 0.11 ± 0.03 | 0.11 ± 0.03 | 0.11 ± 0.03 |

| Geom. | 1 | 0.29 ± 0.02 | − 4.01 ± 0.31 | N/A | 0.15 ± 0.03 | 0.18 ± 0.04 | 0.29 ± 0.02 | 0.27 ± 0.03 |

To prevent cases where P(u, v) is undefined (e.g. if K(u, v) = 0 and γ < 0 then P(u, v) = ∞, we add ∈ = 10− 6 to each K(u, v) before raising it to the power, γ). Over the course of the generative process new edges are added to the synthetic network which necessarily changes the value of K(u, v). Accordingly, at each step we update K(u, v) and the corresponding changes to P(u, v). If, at any step, the edge {u, v} is added to the synthetic network, then P(u, v) = 0 for all subsequent steps. See Fig. S14 for an illustration of the model using a toy network model.

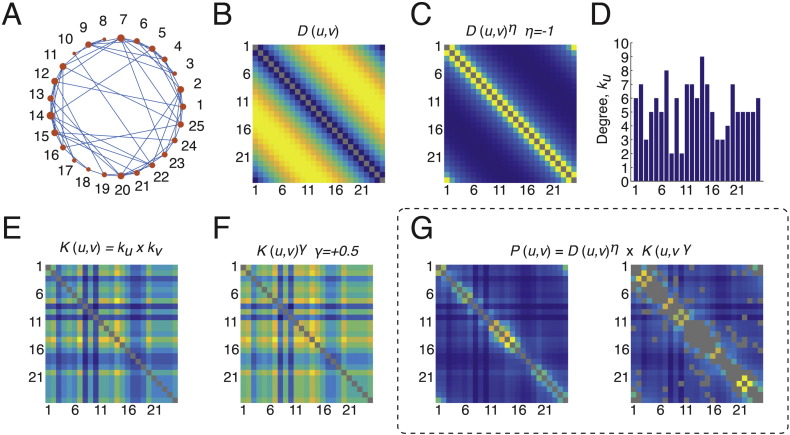

Fig. S14.

Detailed explanation of how the generative model works. Panel A shows a toy network, with nodes embedded along the perimeter of the unit circle. Given this network and our generative models, we can ask the following question: If we wish to add a new edge to the network according to the wiring rule: P(u, v) = K(u, v)γ × D(u, v)η, where will that edge most likely go? To answer this question, we need to first calculate the distance between all pairs of nodes (panel B), whose elements we raise to the power η = − 1 (panel C). The other component we need is the matrix, K(u, v), which represents the non-geometric component. One possible definition of K(u, v) is the product of node degrees (the deg. prod model). Given this particular definition, we set K(u, v) = ku × kv. To generate this matrix, we first calculate ku for all u (panel D). Then we multiply ku × kv for all pairs of nodes, {u, v} (panel E). We next raise K(u, v) to the power γ = 1, which we show in panel F. We perform the element-wise multiplication of K(u, v) by D(u, v)η (panel G, left). Finally, we have to remove the pairs, {u, v}, for which a connection already exists (the gray cells in panel G, right). The nonzero elements of this matrix give us the relative probabilities of where an edge would get placed given this wiring rule. After placing the edge according to these probabilities, the model would return to panel A and the process would repeat itself.

In our model we use Euclidean distance as a proxy for the cost of the connection between brain regions u and v. It is worth noting that there are alternative measures for quantifying the cost or spatial relatedness of node pairs, including measures derived from the network's spatial embedding (Friedman et al., 2015). Another candidate measure of, perhaps, greater neurobiological interest is fiber length, which measures the actual curved trajectories of white-matter tracts rather than the straight-line (Euclidean) distance between brain region centroids. While Euclidean distance and fiber length are correlated with one another, there are many instances where the fiber length of a connection is much longer than what would be expected given Euclidean distance. A more detailed discussion of this topic can be found in the Appendix (Fig. S10, Fig. S11).

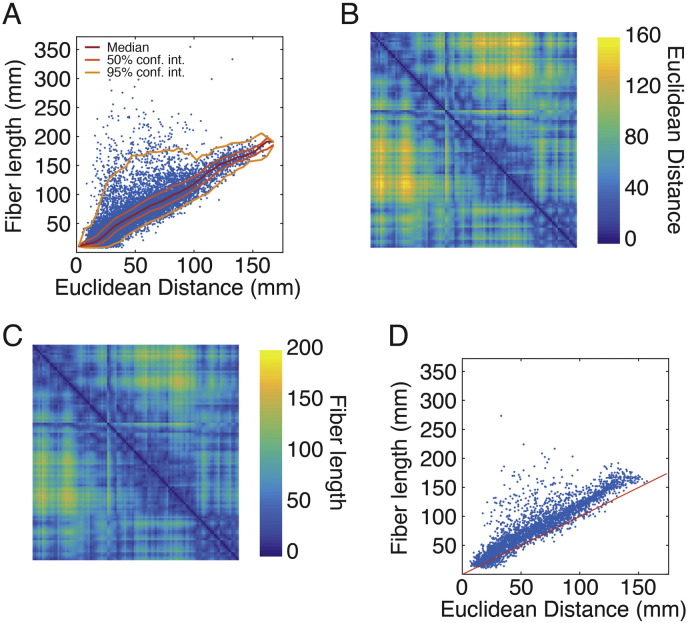

Fig. S10.

Relationship between Euclidean distance and fiber length. (A) Scatter plot of Euclidean distance and fiber length for connections across all participants in the CHUV dataset. (B) The group-average Euclidean distance matrix obtained as the average distance between centroids across all participants. (C) Interpolated fiber length matrix, generated by the procedure described in the Appendix. (D) Scatter plot of group-average Euclidean distance versus interpolated fiber length. The red line in panel D is the identity line. Note that most connections have longer fiber length than Euclidean distance. However, a small number of connections have shorter fiber lengths. This occurs for connections that originate and terminate near the boundary of two parcels. In this case, the fiber length can be very short, while the Euclidean distance between the parcels can be long.

Fig. S11.

Model energies for CHUV composite connectivity matrix using the interpolated fiber length matrix in place of Euclidean distance.

Evaluating synthetic network fitness

To assess the fitness of a synthetic network we calculated its energy, which measures how dissimilar a synthetic network is to the observed connectome. Intuitively, if the two networks have many properties in common, then the synthetic network's energy is small. Specifically, a synthetic network's energy was defined as:

| (2) |

where the arguments are Kolmogorov–Smirnov statistics which quantify the discrepancy between the synthetic and observed connectomes in terms of their degree (k), clustering (c), betweenness centrality (b), and edge length (e) distributions. Here, edge length refers to the Euclidean distance between the centroids of two connected brain regions. By taking the maximum of the four statistics we consider a synthetic network to be only as fit as its greatest discrepancy.

Model optimization

Given the generative rule and the energy measure for evaluating a model network's goodness of fit, it was important to find the parameters {η, γ} that produced networks with the lowest possible energy values. To solve this optimization problem, we developed a simple procedure based on classical Monte Carlo methods. The procedure consisted of three stages that were repeated:

-

1.

A sampling stage in which points in parameter space are selected

-

2.

An evaluation stage, where synthetic networks are generated with the previously-selected parameter values and their energies calculated.

-

3.

A partitioning stage, in which the entire parameter space is partitioned according to a Voronoi tessellation.

The procedure is initialized in stage 1 by randomly sampling Nsamp = 2000 points from parameter space. After evaluating the energy at each point and partitioning the entire parameter space into Voronoi cells, the algorithm returns to stage 1. Rather than sample points randomly, points are now sampled from within the boundaries of Voronoi cells, where the probability of drawing a point from within any given cell is inversely proportional to that cell's energy (P(C) ∝ EC− α, where EC is the energy of Voronoi cell, C, and P(C) is the relative probability of sampling from within that cell). This procedure ensures that points are sampled preferentially from low-energy regions of parameter space. We repeated stages 1, 2, and 3 a total of five times and varied α with each repetition, going from α = {0.0, 0.5, 1.0, 1.5, 2.0}. Early on, the low values of α meant that we searched the parameter space randomly, while the larger values at later repetitions allowed us to focus in the low energy regions. We emphasize that alternative optimization schemes could be used to minimize E (e.g. simulated annealing); the approach used here was chosen because it allowed us to not only converge to good solutions, but also to explore the energy landscape.

Results

We fit generative models to the connectomes of individual participants. In the main text, we focus on 40 adults (ages 18–40 years) scanned at the Department of Radiology, University Hospital Center and University of Lausanne (CHUV), Lausanne, Switzerland. The Appendix contains results from replication cohorts of 214 and 126 participants from the Human Connectome Project (HCP) (Van Essen et al., 2012, Glasser et al., 2013) and the Nathan Kline Institute, Rockland, New York (NKI) cohort (Nooner et al., 2012), respectively. In the same Appendix we also investigate the sensitivity of our results to alternative processing streams.

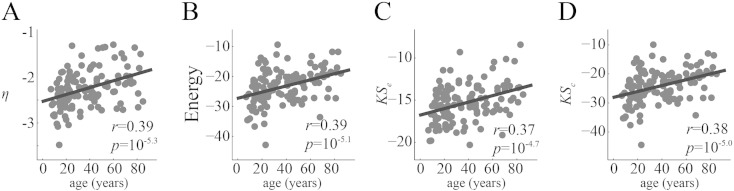

Geometric model

It is well known that the connectome's physical embedding shapes its topology by promoting the formation of low-cost connections (Bullmore and Sporns, 2012). On the other hand, forming only the shortest connections produces a skewed edge length distribution lacking long-distance connections (Kaiser and Hilgetag, 2006), resulting in increased characteristic path length, thereby reducing the efficiency with which information can flow between distant brain regions. We first sought to test the extent to which cost conservation shapes the topology of the human connectome by implementing a pure geometric model (i.e. K(u, v) = 1).

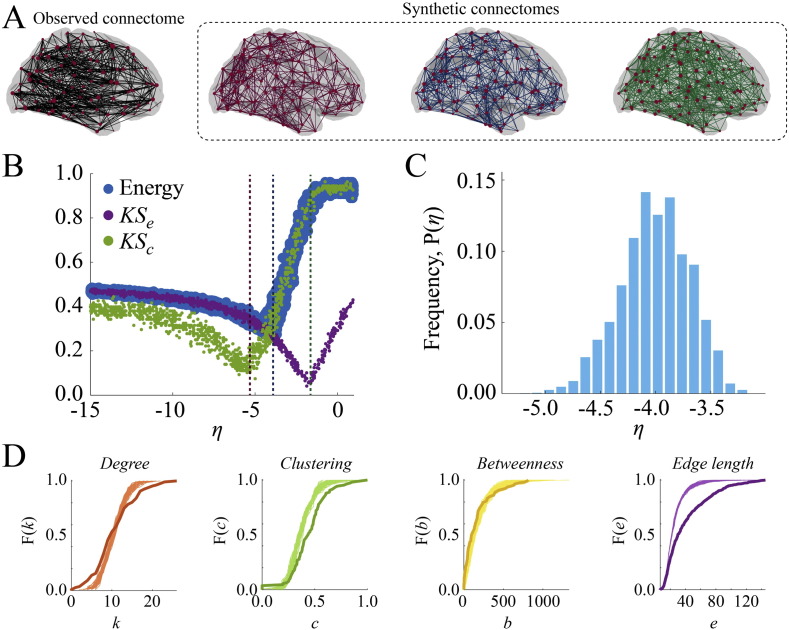

For each participant we tuned the free parameter, η, to a range where the geometric model consistently produced synthetic networks with near-minimal energies (Fig. 1B) and analyzed the top 1% lowest-energy synthetic networks (100 networks/participant). At this point in parameter space (η = − 4.01 ± 0.31; sample mean ± standard error; see Fig. 1C), synthetic networks achieved an average energy of E = 0.29 ± 0.02 with KS statistics KSk = 0.15 ± 0.03, KSb = 0.18 ± 0.04, KSe = 0.27 ± 0.03, and KSc = 0.29 ± 0.02 (Fig. 1B). To contextualize these scores, we compared them to KS statistics obtained from a null generative model where connections were formed with uniform probability. We found that, with the exception of KSe (p ≈ 0.4; Wilcoxon signed-rank test Wilcoxon, 1945), the geometric model produced significantly lower energy and smaller KS statistics (maximum p ≈ 10− 5).

Fig. 1.

Summary of the geometric model: (A) observed (black) and synthetic networks generated at different points in parameter space. (B) Energy landscape showing the behavior of KSe, KSc, and energy as a function of η. The dashed vertical lines indicate the parameter values at which the example synthetic networks were generated. (C) Distribution of η parameter of top 1% lowest-energy synthetic networks aggregated across all participants. (D) Cumulative distributions of degree (orange), clustering coefficient (green), betweenness centrality (yellow), and edge length (purple) for observed connectome (darker line) and best-fitting synthetic networks (lighter lines) for a representative participant.

Interestingly, the point at which energy is minimized deviates from the respective minima of KSe and KSc, demonstrating that even the-best fitting synthetic networks generated by the geometric model cannot simultaneously match observed connectomes in terms of clustering and edge length distributions. The reason for this is intuitive: A strong distance penalty is required to generate highly clustered networks, which inadvertently penalizes the formation of long-distance connections. Conversely, realistic edge length distributions arise when the distance penalty is relatively weak, at which point synthetic networks become vastly under-clustered. The energy minimum occurs at a point situated between these two extremes, trading off accuracy along one dimension with the other though never simultaneously minimizing both (Fig. 1D).

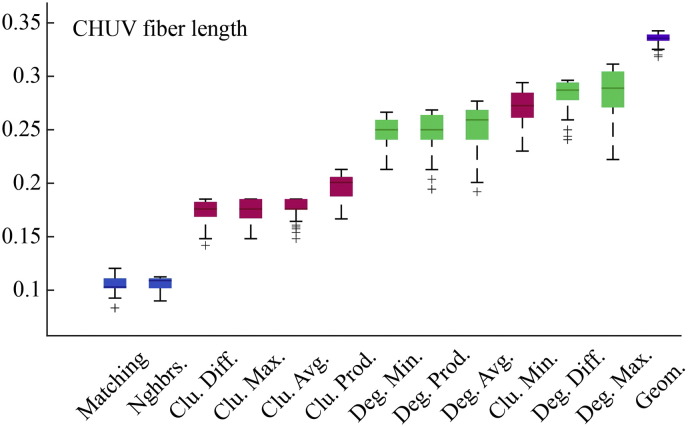

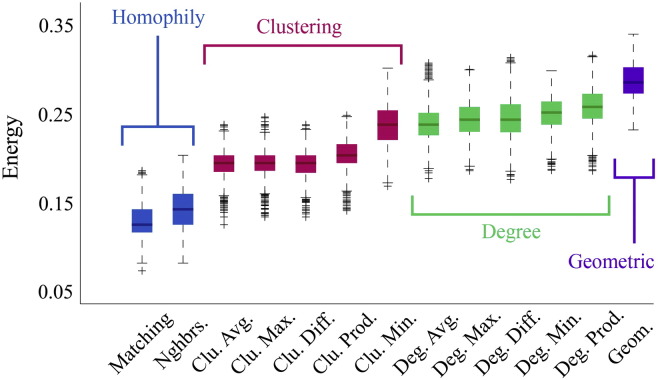

Models driven by geometry and topology outperform pure geometric models

The failure of the pure geometric model to generate synthetic networks that were as clustered and contained as many long-distance connections as observed connectomes suggests that additional factors are needed as part of a realistic generative mechanism. To determine which factors were most capable in this regard we compared twelve different generative models where topological features such as degree, clustering, and homophily influenced the connection formation probabilities. As expected, due to the additional free parameter, γ, we find that all dual-factor models outperformed the pure geometric model, generating synthetic networks with significantly lower energies (p ≈ 0, see Fig. 2). Importantly, dual-factor models were stratified, with clustering-based models outperforming degree-based models, which in turn were outperformed by homophily-based models. The absolute best model incorporated a homophilic attraction mechanism in the form of the matching index (MI), which is a normalized measure of overlap in two nodes' neighborhoods. If Γu = {v : auv = 1} represents the set of node u's neighbors, then the matching index is equal to:

| (3) |

where Γu\v is simply Γu but with v excluded from the set. In the event that u and v have perfect overlap in their neighborhoods, then Muv = 1. If the neighborhoods contain no common elements then Muv = 0.

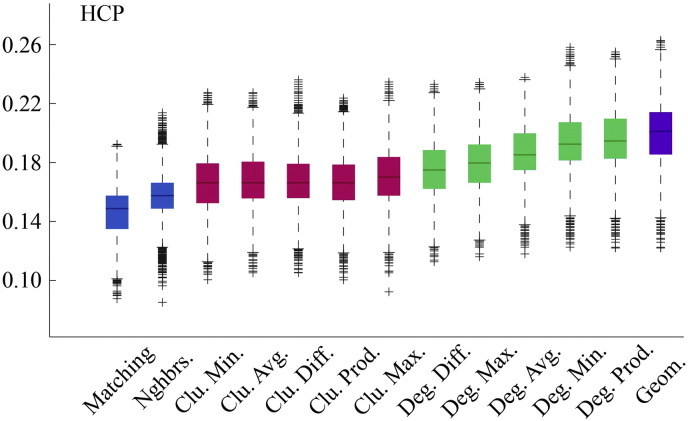

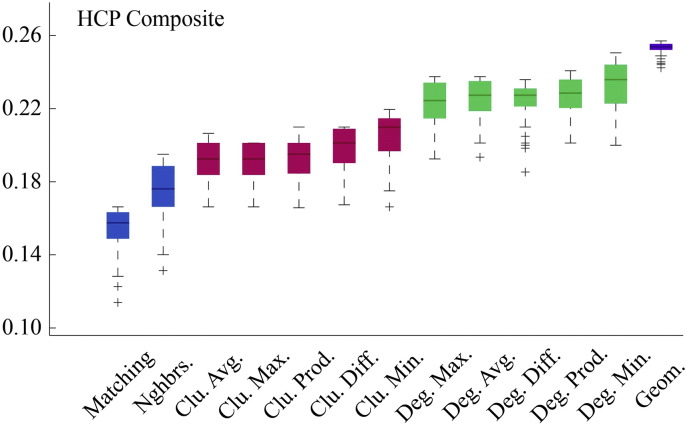

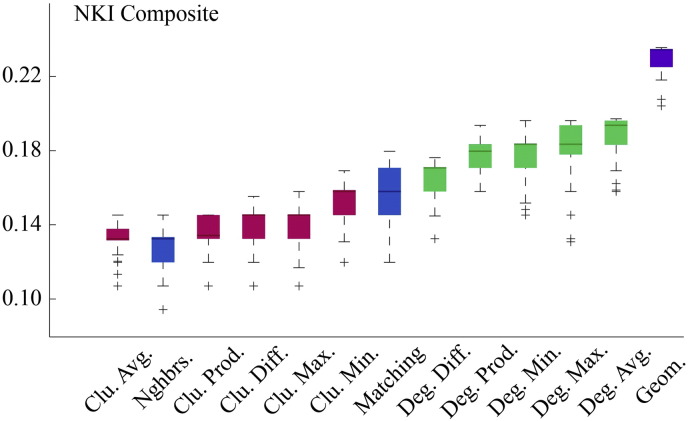

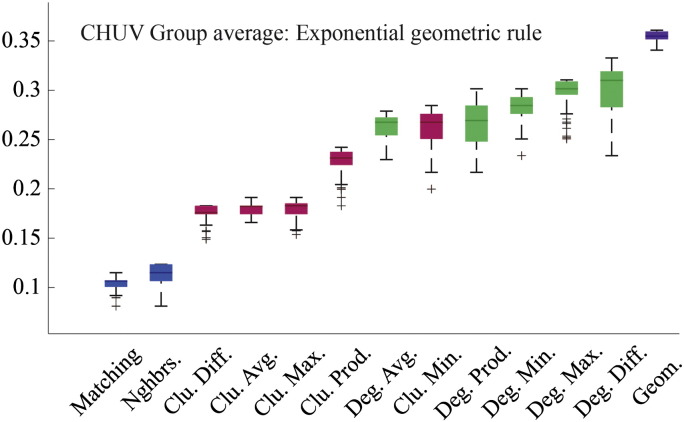

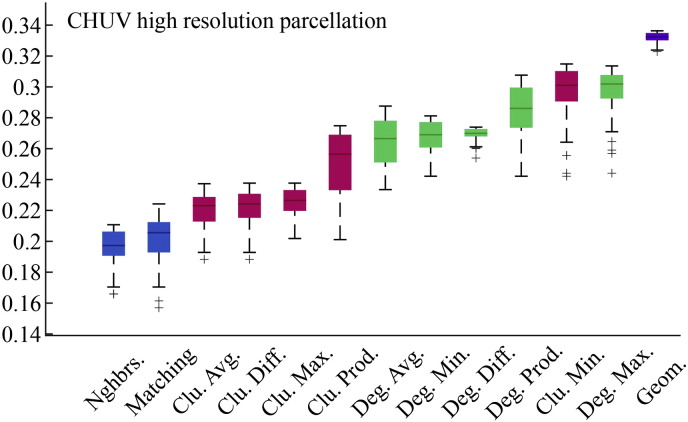

Fig. 2.

Energy distributions across all models. Each box plot represents the top 1% lowest energy synthetic networks generated by each model and aggregated across all participants. The color of each plot indicates the general class of the model: homophily is shown in blue, clustering in pink, degree in green, and geometric in purple. The specific wiring rule names are shown along the x-axis.

Applied to the CHUV dataset, the MI model achieved an average energy of E = 0.12 ± 0.02 with parameters η = − 0.98 ± 0.37 and γ = 0.42 ± 0.04 (Fig. 3C). Together, these parameter values indicated that, like the pure geometric model, the MI model exercised a penalty against long distance connections (albeit markedly weaker than the geometric model), while increasing the probability that nodes with similar connectivity profiles would connect to one another. Interestingly, the parameters η and γ appear to trade off with one another (Fig. 3D), suggesting that the more an individual's connectome is shaped by geometry (large amplitude of η), the less it is shaped by non-geometric constraints and vice versa. On average, the MI model outperformed the geometric model in reducing discrepancies along all four components of the energy function: KSk = 0.10 ± 0.03, KSb = 0.10 ± 0.02, KSe = 0.10 ± 0.03 and KSc = 0.11 ± 0.02 (maximum p-value for all KS statistics and energy was p ≈ 10− 7, Wilcoxon signed-rank test). Whereas the geometric model's performance was limited primarily by mismatches in clustering and edge length, the MI model's performance was more evenly limited. The best-fitting synthetic networks had energies equal to KSk, KSb, KSc, and KSe around 21%, 25%, 29%, and 25% of the time, respectively.

Fig. 3.

Matching index model: (A) observed (black) and synthetic networks generated at different points in parameter space. (B) Energy landscape showing the points at which the example synthetic networks were generated. (C) Distribution of η and γ parameters of best-fitting synthetic networks aggregated across all participants. (D) Tradeoff between η and γ. Each point represents the mean parameter values for an individual participant. Participants with larger values of η tend to have the smallest magnitude γ and vice versa. (E) KS statistic landscapes for degree (orange), clustering (green), betweenness (yellow), and edge length (purple) for observed connectome and best-fitting synthetic networks for a single participant. (F) Cumulative distributions of degree (orange), clustering (green), betweenness (yellow), and edge length (purple) for observed connectome (darker line) and best-fitting synthetic networks (lighter lines) for a representative participant.

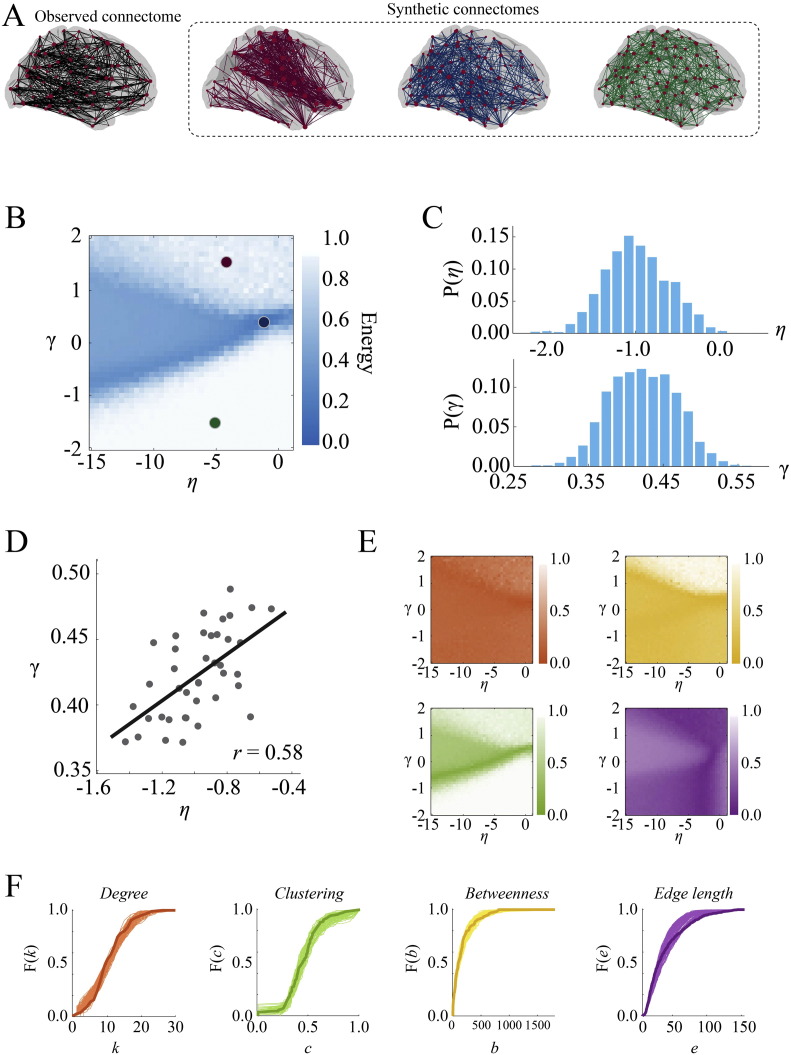

Evaluating synthetic networks using additional measures

Our analyses to this point consisted of tuning the parameters of generative models to ranges where the synthetic networks achieved low energy, which identified the MI model as the best fitting model. The form of the energy function, however, may be considered ad hoc; it represents only one of many alternative ways to evaluate a synthetic network's fitness. For this reason it was important to establish that the best-fitting synthetic networks generated by the MI model matched observed connectomes across additional dimensions that were not part of the energy function used for optimization. To that end, we subjected the lowest-energy synthetic networks to a series of additional tests to determine whether they could also reproduce other properties of the human connectome.

Graph theoretic measures

The first test involved evaluating the best-fitting synthetic networks in terms of how well they matched graph-theoretical properties of observed connectomes, focusing on the measures: mean clustering coefficient (C), global efficiency (E), degree assortativity (Rk), modularity (Q), characteristic path length (L), and network diameter (max[D]) (see Appendix for descriptions of these measures). We estimated the magnitude of correlation between graph measures made on synthetic networks generated by the MI model and the same measures made on empirical networks. We found that the MI model did an excellent job reproducing the rank order of individual participants' mean clustering coefficient (r = 0.93, p ~ 0), modularity (r = 0.73, p ~ 10− 7), characteristic path length (r = 0.88, p ~ 10− 13), and efficiency (r = 0.64, p ~ 10− 5). Network diameter (r = 0.25, p ~ 10− 0.91) and degree assortativity (r = 0.05, p ~ 10−0.12) were not well matched (Fig. 4A). It should be noted that, in general, most graph measures are not completely orthogonal to one another (Costa et al., 2007b).

Fig. 4.

Cross validation of the matching index model: (A) comparison of matching index model and observed connectomes in terms of the graph-theoretic measures mean clustering coefficient, modularity, global efficiency, and characteristic path length. (B) Comparison of all models in terms of reproducing the distance-dependent degree assortativity (i.e. the propensity for high degree nodes to be linked by long-distance connections). (C) Discrepancies in degree and clustering coefficient sequences of synthetic networks generated by the matching index model and pure geometric model.

While the MI model generally reproduced the rank order of participant-level graph measures, it nonetheless systematically over-/under-estimated the values of certain measures. For instance, efficiency was, on average, smaller for synthetic networks than for empirical networks (points falling above the diagonal in Fig. 4A, third panel). The same is true for characteristic path length (over-estimated). Despite these biases, the discrepancy between empirical and synthetic networks for any of these measures was, on average, small — across participants, the mean clustering coefficient, modularity, path length, and efficiency scores of synthetic networks were always within 5.5% of the same measure made on the corresponding observed network.

Distance-dependent degree assortativity

The human connectome features hub regions linked by long distance connections, forming rich clubs and cores. This propensity for higher-degree nodes to be linked by longer connections should be reproducible by a good generative model. To assess whether this were the case, we extracted and pooled across participants the list of all connections, the degrees of their stubs (ku and kv), and length (E(u, v)). From these data, we estimated the three-dimensional cumulative distribution function, F(kα, kβ, E(α, β)). At any point {kα, kβ, E(α, β)}, the value of F corresponded to the fraction of all connections satisfying ku ≤ kα, kv ≤ kβ, and E(u, v) ≤ E(α, β) (ku and kv were ordered so that ku ≤ kv). We constructed similar distributions for the best-fitting synthetic networks generated by each model and quantified the discrepancy between distributions with a KS statistic. In general, the rank order of models scored by this KS statistic was similar to the rank order of their energies (Fig. 4B). The MI model achieved the smallest KS statistic (KS = 0.12 ± 0.01) while the pure geometric model, on the other hand, performed the worst (KS = 0.37 ± 0.01).

Local statistics

Finally, we tested whether the best-fitting synthetic networks generated by the MI model were capable of predicting the degree and clustering coefficient sequences of the connectome. We expressed each node's empirical degree, ku, and clustering coefficient, cu, as z-scores by standardizing the empirical values against the distributions obtained from the best-fitting synthetic networks. Z-scores were averaged across subjects and used to quantify the discrepancy in those measures (larger scores indicated poorer fit). We compared these z-scores against scores obtained from the best-fitting synthetic networks generated by the pure geometric model in order to ascertain whether they represented an improvement in fitting local network measures (Fig. 4C). We found that, on average, the MI model produced smaller discrepancies (points below the diagonal) compared to the geometric model. Typically, the largest improvements were for nodes whose degree or clustering coefficient was mismatched the greatest by the geometric model. For some nodes, however, the geometric model actually outperformed the MI model, though the standardized scores for these nodes were, generally, rather small for both models.

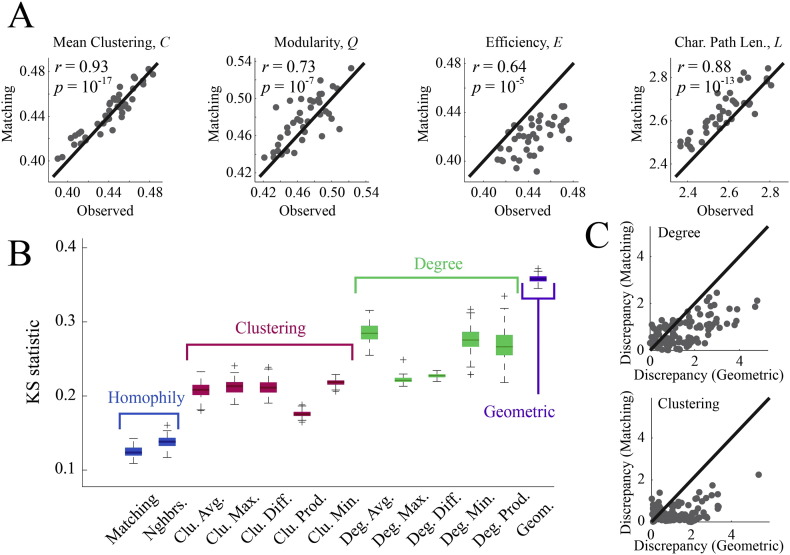

Application to human lifespan data

In addition to quantifying models' performances, we asked whether the parameters of the generative models captured meaningful information about individual differences in network organization. To demonstrate the utility of the network modeling approach for characterizing individual variation, we extended our analysis to the NKI dataset's N = 126 participants, spanning a range of ages from 7 to 85 years. With an average network density of 10%, a number of individual's connectomes were fractured into multiple disconnected components (71 of the 126 participants). However, the largest connected component, across all participants, included 98.5 ± 0.03% of all nodes, indicating that in the majority of cases the network is divided into two components: one singleton node and a component containing all other nodes. We hypothesized that age-related changes in network organization may be captured by the parameters of the generative models, η and γ. We tested this hypothesis by first regressing out participants' intracranial volumes and mean framewise displacement from parameter values obtained from the best-fitting MI models and correlating the residuals with participant age. We also expressed energies and KS statistics as z-scores relative to a generative model in which connections were formed randomly to correct for variations in network density with age (Betzel et al., 2014, Lim et al., 2015). This null model preserved only the density of connections and not degree sequence. The results of these analyses indicated that the value of η decreased in magnitude with age , while γ did not exhibit any significant age-related changes , which implied that the penalty on long-distance connections weakened with age. We also found that E, KSe, and KSc all increased with age (max p ≈ 10− 4.7) (Fig. 5), indicating that the MI model does an increasingly poor job capturing the organization of older connectomes compared to younger connectomes.

Fig. 5.

Changes in model parameters and energy components across the lifespan: (A) the geometric parameter, η weakens with age. (B) The average energy of each participant's best-fitting synthetic networks (z-scored against an ensemble of synthetic networks generated using a uniform wiring rule) also increases with age. (C, D) KSe and KSc increase with age, and these increases collectively drive the increase in energy.

Discussion

In this report, we tested different classes of generative models for the human connectome. Our study makes several novel contributions, by quantitatively comparing different sets of generative models, by applying these models to human connectome data, and by fitting models to networks of individual participants. We confirmed that pure geometric models of the form considered in this report cannot create synthetic networks that were both as clustered and also contained the same proportion of long-distance connections as the observed human connectome. To identify which additional factors were most capable of creating realistic networks we incorporated non-geometric information into our generative models' wiring rules. With this additional degree of freedom, the synthetic networks generated by these more complex models more accurately reproduced the connectome's clustering and edge length distributions. The best-fitting model formed connections on the basis of homophilic attraction (matching index) combined with geometric constraints. Importantly, synthetic networks generated by this model not only reproduced degree, betweenness centrality, clustering coefficient, and edge length distributions (all measures that contributed to the energy function used for optimization), but they also reproduced additional graph theoretic properties such as characteristic path length, mean clustering coefficient, global efficiency, modularity, the propensity for high-degree nodes to be connected via long-distance edges, and local node statistics such as degree and clustering coefficient sequences. We also demonstrated robustness of the matching index model, comparing it across three separate datasets totaling N = 380 participants and finding consistent results in all cases (See Appendix). As a final demonstration of the utility of generative models, we fit the MI model to connectomes of individuals whose ages ranged from 7 to 85 years, showing that the distance penalty weakened with age while energy increased, an effect driven by growing discrepancies in clustering and edge length distributions.

Generative models for brain networks have been investigated before, serving as proofs of concept (Kaiser and Hilgetag, 2004b, Kaiser et al., 2009, Henderson and Robinson, 2013, Lim and Kaiser, 2015, Roberts et al., 2016) or as investigative tools for non-human connectome data (Kaiser and Hilgetag, 2007, Costa et al., 2007a, Nicosia et al., 2013, Langen et al., 2015). One limitation of earlier studies was the use of composite connectivity matrices as empirical benchmarks. For example, Ercsey-Ravasz et al. (2013) and Song et al. (2014) proposed geometric models of an incomplete macaque connectome, where connections were based on composite tract-tracing data compiled across multiple subjects and only a subset of cortical areas. Another limitation of earlier work was the lack of model comparison. In many cases proposed generative models were only compared against random generative models (Ercsey-Ravasz et al., 2013, Song et al., 2014) where connections were formed with uniform probability, as opposed to models incorporating more plausible generative mechanisms.

The first model we examined was the pure geometric model, which was the simplest but also, in accordance with earlier studies, performed the worst. The observation that geometry partly explains the topology of brain networks is in line with in a large literature on wiring minimization (Mitchison, 1991, Laughlin and Sejnowski, 2003, Cherniak et al., 2004, Samu et al., 2014), and has been appreciated in modeling studies of both human brain networks (Henderson and Robinson, 2013, Kaiser and Hilgetag, 2004a, Vértes et al., 2012, Klimm et al., 2014) and those of non-human primates (Kaiser and Hilgetag, 2004a, Costa et al., 2007a) and other mammals (Henderson and Robinson, 2011, Rubinov et al., 2015). While recent work has suggested that regional variation in certain topological properties of connectomes such as degree, clustering coefficient, and betweenness centrality, can be accounted for based on the geometry of the brain (Henderson and Robinson, 2014), our findings support the view that strong spatial constraints alone are insufficient for explaining all topological aspects of brain networks (Kaiser and Hilgetag, 2006, Bullmore and Sporns, 2012). This conclusion stands in contrast to other reports (Ercsey-Ravasz et al., 2013, Song et al., 2014) suggesting that geometric models are the sole generative mechanism underlying the connectome's formation and evolution. Instead, we find that in order to accurately reproduce the connectome's topology our models required information about node's pairwise similarity (homophily), which agrees with earlier modeling studies of the primate connectome (Costa et al., 2007a) and human functional brain networks (Vértes et al., 2012).

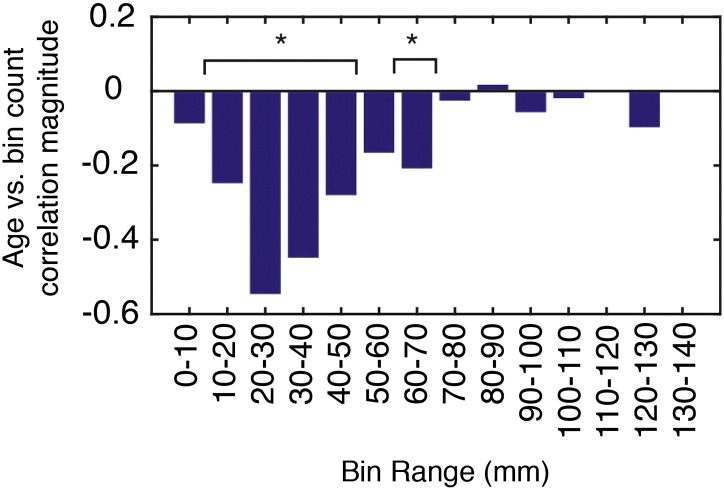

The final component of this report was an application of network modeling to human lifespan data, which revealed that geometric constraints weakened while energy and the mismatch of clustering and edge length distributions all increased with age. Collectively, these results indicate that the MI model is becoming an increasingly poor model of the connectome as participants become older. One possible explanation is that connectome patterns become increasingly random with age, making it impossible for any wiring rule to model the connectome precisely. Alternatively, it could also be the case that there are proportionally more long-range connections later in life (Lim et al., 2015), and therefore, with advancing age, connectomes cannot be reproduced as accurately with a wiring rule that shows preference for short-range connections. Indeed, this appears to be case; placing each participant's connections into bins (10 mm width) according to connection length and correlating bin counts with age we found that bin count was negatively correlated with age up to around 70 mm (Fig. S19). For longer connections there was no clear relationship. Future work should investigate, in greater detail, the underpinnings of the decrease in geometric constraints.

Fig. S19.

Correlation of age with number of connections of particular lengths. The height of each bar represents the magnitude of the Pearson's correlation coefficient. The bins with an asterisk exhibit statistically significant change (p < 0.05, uncorrected).

The aim of this study was not to model the growth and development of the human connectome. Doing so would have required a more complicated model that included more system-specific detail. Instead, our models were designed to reduce a network's description length. Naively, we can reconstruct a network exactly from a list of its nodes and edges. However, such a precise reconstruction may not be necessary or even desirable. Oftentimes we are more interested in a network's high-level properties (e.g. modularity, degree distribution), than the exact configuration of its connections. In such a case, a mechanism that generates synthetic networks with the approximately the same set of properties represents a much more economical (compressed) description of the network. Our models are in line with this approach, seeking a parsimonious description of the human connectome, wherein its overt complexity gets compressed into a model's wiring rule and parameters. This type of compressed description can be used toward any number of ends, including investigation of differences in individual participants. For instance, we found that some participants' connectomes were compressible (low energy) while others were not (high energy). An important question, moving forward, is whether these differences become meaningful when examining individual differences or comparing clinical and control populations, or whether they can be related to some behavioral measures across both individual and group levels.

There are a number of methodological considerations that should be discussed. First, the class of dual-term models left the definition of K(u, v) up to the user. For practical reasons, we explored only twelve such rules. Even with this limited exploration, we found a great deal of stratification in terms of model performance. This leaves open the possibility that wiring rules not explored in this report could produce superior results. For example, geometric models of forms not considered here (e.g. “connect all nodes separated by a distance less than dthreshold”, models with regional inhomogeneities see Rubinov et al., 2015), or more accurate modeling of interregional white-matter fiber lengths in place of Euclidean distance could possibly lead to improved fits. In addition, models of altogether different forms could be implemented. For example, in our report we make the assumption that the formation of connections depends equally on both geometric and topological constraints. An alternative approach might be to form one subset of connections on the basis of geometry and another set of connections on the basis of topology. While enumerating of all possible wiring rules or model variations is impractical, a number of methods have been proposed that aim to discover wiring rules by evolving models themselves (Bailey et al., 2012, Menezes and Roth, 2014), as opposed to proposing a model and fitting its parameters, as we did here. These approaches, we believe, warrant further attention. In any case, consideration of a broader class (or classes) of models represents an important avenue for future work.

Another methodological consideration concerns the evaluation of a synthetic network's fitness. The synthetic networks are mapped into a morphospace (Goñi et al., 2013) according to their geometric and topological properties and compared to the observed connectome along the same dimensions. Whether these properties are the most appropriate measures for network comparison is unclear. In principle, one could define alternative energy functions whose minima may not coincide with those reported here, and for which the MI model is not the best performer. Though the exploration of alternative energy functions is beyond the scope of this report, we attempted to mitigate the concern that our choice of energy function biased our results by performing a series of additional tests, the results of which indicated that the MI model consistently outperformed other models.

Another consideration relates to the combination of diffusion imaging and tractography for inferring the connectome's organization. Though diffusion imaging/tractography represents the state of the art for in vivo reconstruction of the brain's anatomical connections, these technologies are nonetheless prone to false positives and negatives (Thomas et al., 2014), which could potentially affect our results. While the use of multiple atlases, independent datasets, and alternative processing streams help reduce the bias of any single processing strategy they do not completely address the issue. The shortcomings of diffusion imaging and tractography, while presently limiting, also serve to highlight the need to develop new non-invasive methods for mapping the human brain.

A final consideration is related to the size of networks, the definition of nodes, and the scalability of our models. In general, how one defines a network's nodes has implications for the network properties of the resulting graph (Zalesky et al., 2010). It is likely that the size and number of nodes factor into the performance of the models studied here. The networks analyzed in this report consisted of either n = 74 or n = 108 nodes, representing two different parcellations of the cortex. However, it is becoming increasingly common to model brain networks with up to thousands of nodes. Because the number of possible positions to place an edge grows as O(n2), the space of all networks that the model could generate becomes much larger as n increases. Models with n ≫ 102 may require stronger parametric constraints (e.g. larger magnitudes for η or γ) or incorporating additional topological information (and an additional parameter) into a model's wiring rule. In general, the choice of how to define a network's nodes and at what scale the human connectome is best described is unclear, though future work on data-driven parcellations will surely help address this issue.

The following are the supplementary data related to this article.

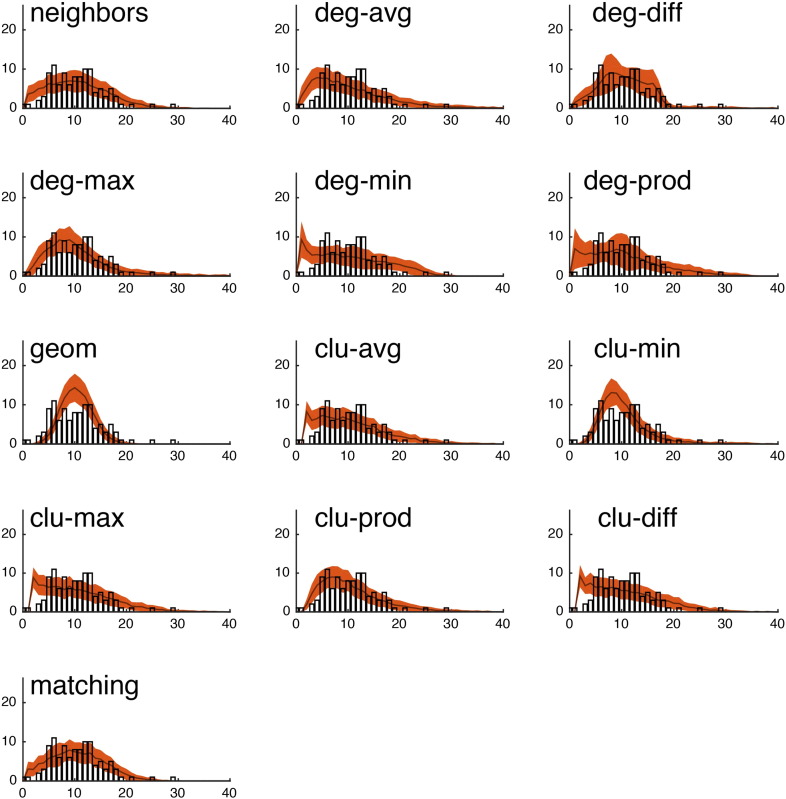

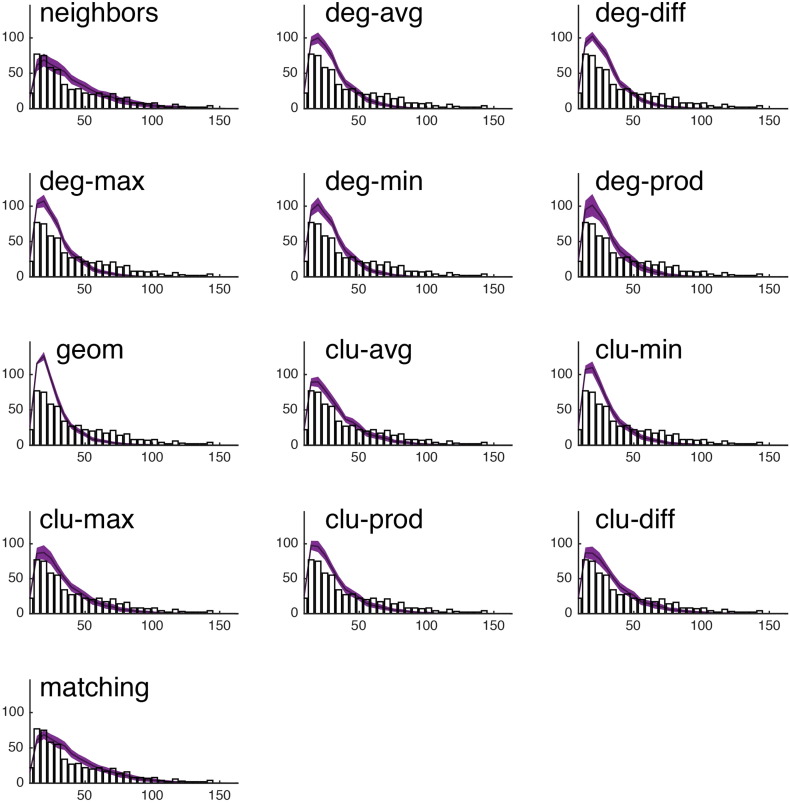

Fig. S15.

We show the observed degree distribution (black bar plot) against the degree distributions of the best-fitting synthetic networks generated with each model. In all panels, the x-axis indicates degree (k) and the y-axis indicates frequency. This figure shows data from a single representative subject in the CHUV cohort.

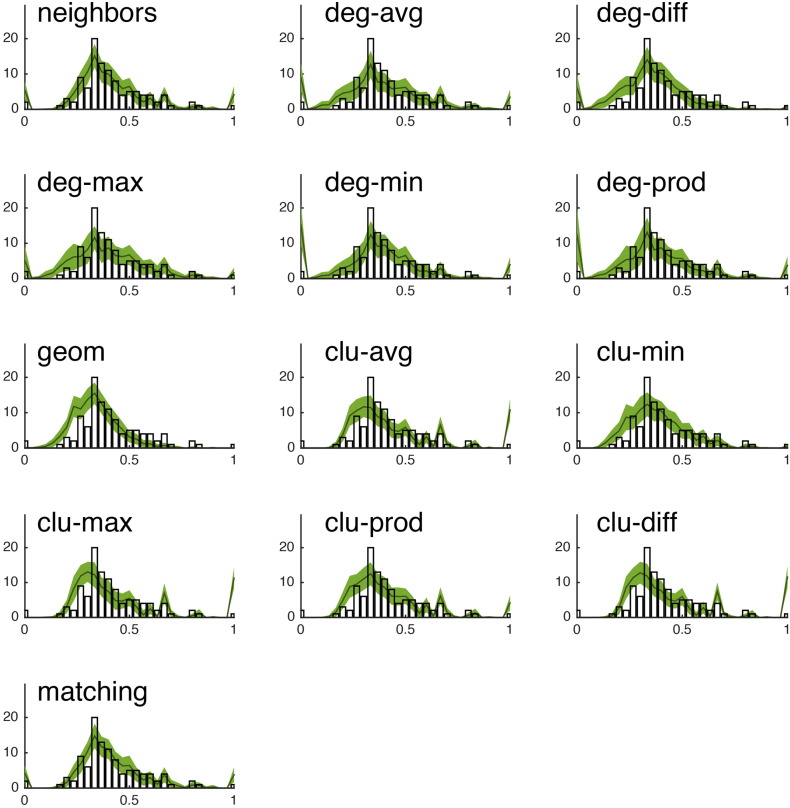

Fig. S16.

We show the observed clustering coefficient distribution (black bar plot) against the clustering coefficient distributions of the best-fitting synthetic networks generated with each model. In all panels, the x-axis indicates clustering coefficient (c) and the y-axis indicates frequency. This figure shows data from a single representative subject in the CHUV cohort.

Fig. S17.

We show the observed betweenness centrality distribution (black bar plot) against the betweenness centrality distributions of the best-fitting synthetic networks generated with each model. In all panels, the x-axis indicates betweenness centrality (b) and the y-axis indicates frequency. It should be noted, that due the orders of magnitude difference between the most central and least central nodes, the x-axis has been log-transformed. This figure shows data from a single representative subject in the CHUV cohort.

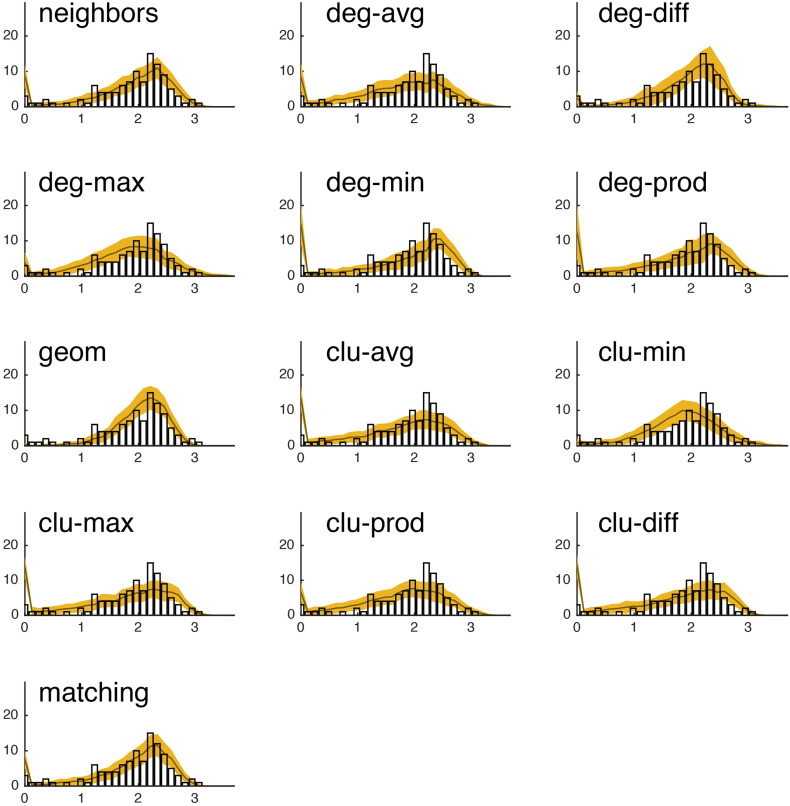

Fig. S18.

We show the observed edge length distribution (black bar plot) against the edge length distributions of the best-fitting synthetic networks generated with each model. In all panels, the x-axis indicates edge length (e) and the y-axis indicates frequency. This figure shows data from a single representative subject in the CHUV cohort. Note that of the models shown here, “matching” and “neighbors” do a reasonable job generating networks with a broad range of connection lengths. This is in stark contrast with the geometric model “geom,” which over-represents short range connections.

Funding information

R.F.B. was supported by the National Science Foundation/Integrative Graduate Education and Research Traineeship Training Program (0903495) in the Dynamics of Brain–Body–Environment Systems at Indiana University. J.G. was supported by the Indiana University Network Science Institute and the Indiana Alzheimer Disease Center (NIH/NIA P30 AG10133). A.G. was supported by the Swiss National Science Foundation (320030 130090). P.E.V. was supported by a Bioinformatics Research Fellowship from the Medical Research Council (UK) (MR/K020706/1). B.M. was supported by a Natural Sciences and Engineering Research Council of Canada Postdoctoral Fellowship. P.H. was supported by the Leenaards Foundation. M.H. was supported by VENI (451-12-001) grant of the Netherlands Organization for Scientific Research (NWO; http://www.nwo.nl) and by a Fellowship of the Brain Center Rudolf Magnus. X.Z. was supported by the National Basic Research Program (973) of China (http://www.most.gov.cn/eng/), grant number 2015CB35170 and the Major Joint Fund for International Cooperation and Exchange of the National Natural Science Foundation (http://www.nsfc.gov.cn/publish/portal1/), grant number 81220108014. E.T.B. was supported by a grant from the Behavioural & Clinical Neuroscience Institute (University of Cambridge) and by the Wellcome Trust and the Medical Research Council. O.S. was supported by the J.S. McDonnell Foundation (220020387) and the National Science Foundation (1212778).

Author contributions statement

R.F.B., P.E.V., E.T.B., O.S. conceived the experiment(s), R.F.B., A.A.-K., B.M., J.G., and O.S. conducted the experiment(s), R.F.B., A.A.-K., B.M., J.G., and O.S. analyzed the results, Y.H., M.A.R., A.G., J-P.T., P.H., M.H., and X-N.Z. contributed data. All authors reviewed the manuscript.

Additional information

ETB is employed half-time by the University of Cambridge and half-time by GlaxoSmithKline (GSK); he holds stock in GSK. The authors declare no other competing financial interests.

Acknowledgments

Data were provided [in part] by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

Appendix A.

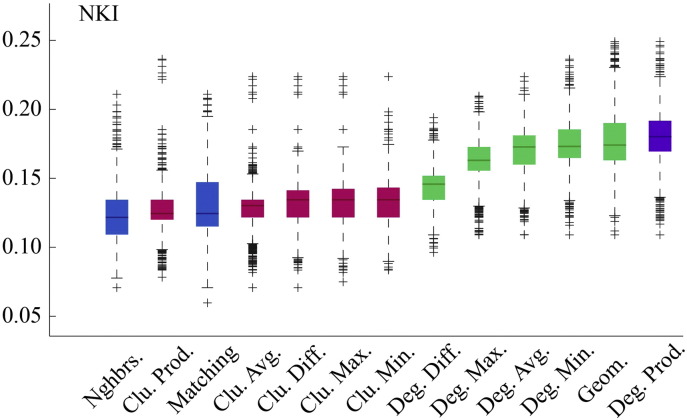

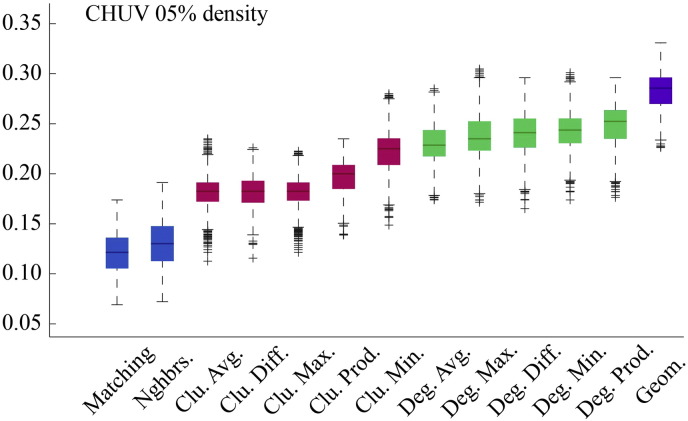

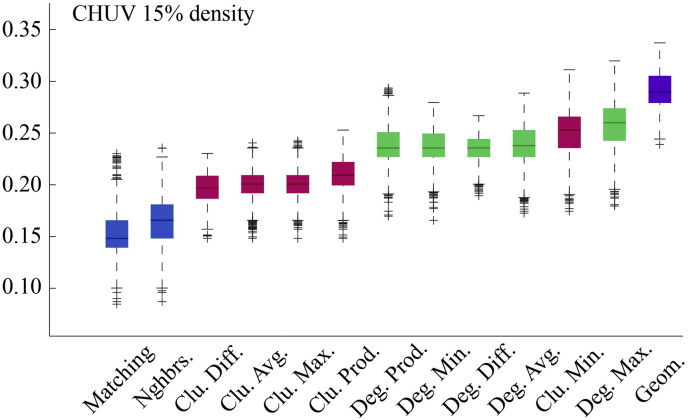

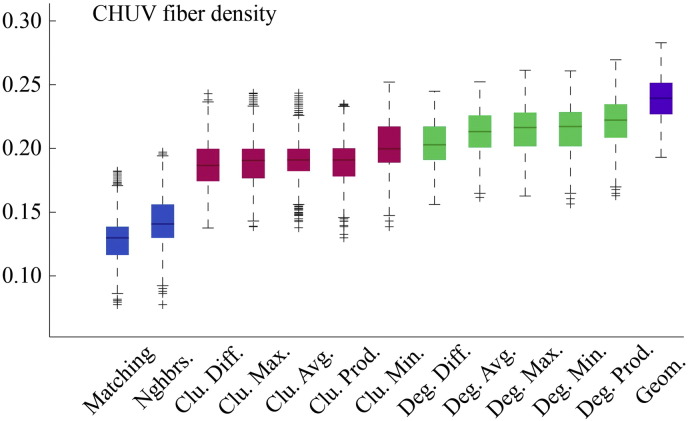

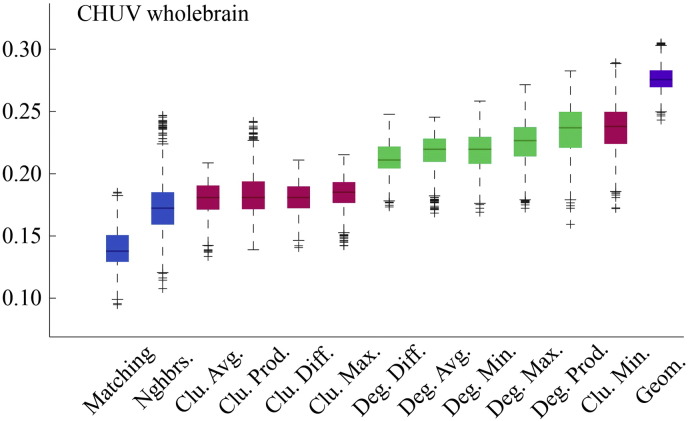

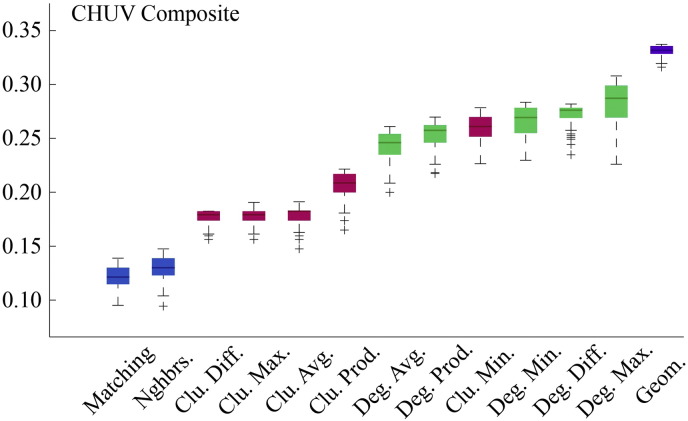

The main text describes the results of generative models applied to a dataset of 40 participants scanned at CHUV. In this appendix we demonstrate the robustness of those results by reproducing the principal findings using alternative datasets. The additional datasets are described, briefly, below and in more detail later in this appendix. Fig. S1, Fig. S2, Fig. S3, Fig. S4, Fig. S5, Fig. S6, Fig. S7, Fig. S8, Fig. S9 shows model energies for each of the additional datasets, reproducing Fig. 2 from the main text.

-

1.

Two replication datasets (HCP and NKI) of N = 214 and N = 126 participants, respectively.

-

2.

The same CHUV dataset with different levels of network density (5% and 15%) and defined using an alternative weighting.

-

3.

CHUV dataset including both left/right cerebral hemispheres.

-

4.

Composite (i.e. group averaged) CHUV, HCP, and NKI connectomes.

-

5.

Composite CHUV dataset using fiber length in place of Euclidean distance.

-

6.

Composite CHUV dataset using an exponential function to model geometric constraints in place of the power-law function.

-

7.

Composite CHUV dataset using a finer cortical parcellation (n = 223 nodes.)

Fig. S1.

Model energies for HCP dataset.

Fig. S2.

Model energies for NKI dataset.

Fig. S3.

Model energies for CHUV dataset with ρ ≈ 5%.

Fig. S4.

Model energies for CHUV dataset with ρ ≈ 15%.

Fig. S5.

Model energies for CHUV dataset with ρ ≈ 10% and edge presence/absence determined by fiber density weights rather than streamline/fiber tract counts.

Fig. S6.

Model energies for CHUV dataset with ρ ≈ 10% but for entire cerebral cortex.

Fig. S7.

Model energies for CHUV composite connectivity matrix.

Fig. S8.

Model energies for HCP composite connectivity matrix.

Fig. S9.

Model energies for NKI composite connectivity matrix.

Each additional dataset is accompanied by a figure showing the energy distribution for the 100 best-fitting synthetic networks for each model type. At the end of this appendix we have also included a glossary of graph theoretic terms that appear throughout the main text.

Additional datasets

Human connectome project (HCP) — see Fig. S1

The HCP data were drawn from the 215 participants made available as part of the Q3 release of the human connectome project (Van Essen et al., 2012, Glasser et al., 2013). From each participant's diffusion-weighted MR images (diffusion tensor imaging; DTI), white matter fibers were reconstructed from generalized q-sampling (Yeh et al., 2010) (GQI: allowing for the reconstruction of crossing fibers) and streamline tractography and the cortex was parcellated into 219 parcels based on a subdivision of FreeSurfers's Desikan–Killiany atlas (Cammoun et al., 2012). More details on the processing of these data can be found elsewhere (de Reus and van den Heuvel, 2014). We focused on the right hemisphere only, which consisted of n = 108 regions. We imposed a threshold on streamline counts of 5 (i.e. a minimum of five streamlines must be present for us to consider two regions linked by a binary connection) in order to maintain an average connectome density of ρ ≈ 10% across subjects. We excluded a single subject on the grounds that their total streamline count was greater than two standard deviations from the group mean, leading to a final dataset of N = 214 participants.

Nathan Kline Institute, Rockland, NY (NKI) — see Fig. S2

The NKI dataset consists of N = 126 participants whose ages ranged from 7 to 85 years (Nooner et al., 2012). Tractography was performed using the Connectome Computation System (CCS: http://lfcd.psych.ac.cn/ccs.html). A more detailed description of the processing pipeline was included in other reports (Betzel et al., 2014, Cao et al., 2014, Yang et al., 2014). Unlike the HCP and CHUV datasets, the cortex was parcellated into 148 regions according to the Destrieux atlas (Destrieux et al., 2010). We analyzed a single hemisphere (n = 74 regions), but instead of focusing on either the right or left, we formed a composite matrix by combining the streamline counts between homotopic pairs of regions. We, again, enforced a mean density of ρ ≈ 10% by selecting a streamline threshold of 30 streamlines.

Alternative CHUV datasets — see Fig. S3, Fig. S4, Fig. S5, Fig. S6

We investigated four variants of the CHUV dataset. In the main text we analyzed binary connectivity matrices (average density of ρ ≈ 10%) by applying a threshold to streamline counts. The first two variants were constructed in the same manner but with the threshold level chosen to maintain average densities of ρ ≈ 5% and ρ ≈ 15%. The third variant retained a threshold of ρ ≈ 10% but instead of thresholding streamline counts we thresholded “fiber density” matrices. The fiber density between nodes u and v is common choice for edge weights in weighted anatomical brain networks, and is defined as the number of streamlines divided by the sum of u and v's surface areas (Hagmann et al., 2008, Betzel et al., 2013, Goñi et al., 2014). The fourth variant was constructed by thresholding streamline counts to ρ ≈ 10% but included both left and right cerebral hemispheres.

Group-average matrices — see Fig. S7, Fig. S8, Fig. S9

In addition to single-participant modeling, we analyzed group-average connectivity matrices for all three datasets (CHUV, HCP, and NKI). Group-average matrices boost the ratio of signal to noise by emphasizing connections that are consistently expressed across subjects, thereby rendering the human connectome more clearly. The de facto method for generating group-average matrices is to retain the supra-threshold elements of the [n × n] consistency matrix, C, whose element cuv indicates the fraction of all participants in which a connection was present between nodes u and v. The resulting matrix, however, over-expresses short-range connections, as short-range connections are more easily reconstructed and are hence the most consistent connections across subjects whereas long-range connections are more prone to error. Also, this method forces a user to choose, somewhat ad hoc, the threshold for including a connection in the group-average matrix. Instead, we use an alternative method for generating a group-average connectomes whose edge-length distribution matches that of the typical single-participant distribution (Mišić et al., 2015). Briefly, this method begins by first estimating the average number of connections of a given length in a typical participant's connectome. Next, all pairs of nodes separated by a comparable distance are identified and, from among this subset, the most consistent connections are added to the group-average connectivity matrix. Repeating this process for all distances yields a representative connectome that matches, almost exactly, the typical edge length distribution, but features only the most consistently expressed edges at each connection length.

CHUV group-average matrix with fiber length — see Fig. S10, Fig. S11

In this report, we test the hypothesis that the human connectome emerges as a consequence of both topological and spatial constraints, which we model as power-law functions. In doing so, we assume that the material/metabolic cost of fiber tracts can be equated to Euclidean distance separating its endpoints, rather than the actual integrated length of the curved tract. The argument for doing so is threefold. First, estimates of fiber length can only be obtained for completed streamlines, meaning that no estimates exist for connections that were absent in the observed tractography data. In order to fill in the missing fiber lengths, one can resort to fiber interpolation (i.e. using the distance/fiber length relationship of existing connections to estimate the fiber length of missing connections), which necessarily introduces an additional source of uncertainty. Second, the relationship of fiber length and Euclidean distance is rather strong across our datasets: the amount of variance in fiber length accounted for by Euclidean distance was 66%, 32%, and 79% for the CHUV, NKI, and HCP datasets, respectively. Lastly, a recent study used both Euclidean distance and the measured length of axons in a geometric generative model of the mouse connectome (Rubinov et al., 2015), finding no change in their results. For these reasons, we assert that Euclidean distance, though imperfect, is a reasonable proxy for the cost of forming a connection.

Nonetheless, we felt it prudent to test the effect of using fiber length in place of Euclidean distance in our models. To do so we first constructed an interpolated fiber length matrix. The elements of this matrix contain fiber length estimates for the hypothetical tracts linking the pair of nodes, u and v. To obtain such estimates for nodes u and v, we calculate the Euclidean distance, E(u, v) between their respective centroids. We then consider all geometric neighbors of u and all geometric neighbors of v, where a geometric neighbor of u is any other node whose centroid is less than K(u, v) × τ away from that of u. Here, we use a fixed value τ = 0.2. The fiber length of the hypothetical connection between nodes u and v is set equal to the mean fiber length of connections between u and any of u's geometric neighbors and v and any of v's geometric neighbors. If no connections exist between these subsets of nodes, then we used the β coefficients from the Euclidean distance versus fiber length linear regression model to estimate a fiber length.

CHUV group-average matrix with exponential rule — see Fig. S12

Fig. S12.

Model energies for CHUV composite connectivity matrix using an exponential function in place of the power-law function for the geometric wiring rule.

In the main text we modeled the geometric wiring rule as a power-law function. However, recent work has suggested that an exponential function better captures the relationship between edge length and connection probability (Henderson and Robinson, 2014). To this end, we replaced the geometric power-law function in our geometric models with an exponential function and re-ran our analyses.

CHUV group-average matrix with finer cortical parcellation — see Fig. S13

Fig. S13.

Model energies for CHUV composite connectivity matrix with a higher-resolution partition (n = 455 cortical nodes; n = 223 cortical nodes in the right hemisphere).

The pipeline described in Cammoun et al. (2012) makes it possible to partition the cerebral cortex into parcels at several different scales or resolutions. In our main analysis, we used an intermediate scale (n = 219 cortical parcels, with n = 108 parcels in the right hemisphere). In this section, we repeat our analysis for a composite group-average CHUV matrix generated at the next finest scale, which has n = 223 cortical parcels in the right hemisphere. The group-average matrix was generated using the same procedure as described earlier.

Graph theory

In the main text we characterize networks using a number of different graph-theoretic measures. Here we describe those measures in some detail (Rubinov and Sporns, 2010).

-

•

Adjacency matrix: A topology of an n-node network can be described by the matrix A = [auv]. The elements auv are equal to 1 if nodes u and v are connected and are otherwise equal to 0.

-

•

Node degree: A node's degree counts the total number of connections that node makes. In an undirected network (i.e. auv = avu) a node's incoming and outgoing degrees are equivalent, and can be calculated as the sum across rows or columns of A, i.e. ku = ∑vauv.

-

•

Network density: A network's density, ρ, is equal to the fraction of existing connections out of the total number of possible connections. If the total number of connections is equal to 2m = ∑uku, then network density is equal to .

-

•

Degree assortativity: Degree assortativity measures the extent to which nodes of similar degree connect to one another. It is usually operationalized as a correlation measure, Rk, which measures the Pearson correlation of the stub degrees of all edges (Newman, 2002).

-

•

Clustering coefficient: A node's clustering coefficient measures the density of a node's neighborhood. Phrased differently, clustering coefficient it measures the fraction of a nodes' neighbors that are connected to one another. If is the number of triangles (connected neighbors) surrounding node u, then u's clustering coefficient is equal to . The mean clustering coefficient of a network is simply the average of cu over all possible u.

-

•

Characteristic path length: The shortest path matrix, D = [duv], measures the length of the shortest paths between all pairs of u and v. The characteristic path length is the average length of all shortest paths and is calculated as .

-

•

Network diameter: A network's diameter is the longest shortest path between any two nodes, and is calculated as max(duv).

-

•

Global efficiency: A network's efficiency is closely related to characteristic path length. Rather than calculating the average length of a shortest path, efficiency is calculated as the average length of . Specifically, a network's efficiency is calculated as .

-

•Modularity: Many network measures describe a network's organization at the level of individual nodes or with a global summary statistic. Alternatively, it is possible to describe a network's “large-scale structure” (Newman, 2012) — i.e. its organization at an intermediate scale. Perhaps the most common type of large-scale structure is known as a network's community structure or a division of a network into internally dense and externally sparse modules (Fortunato, 2010, Sporns and Betzel, 2016). The most popular method for identifying a network's communities and evaluating their fitness is to maximize a “modularity” function (Newman and Girvan, 2004):

(4)

In this expression, gu ∈ {1, …, K} is the community to which node u is assigned, δ(gu, gv), is the Kronecker delta function and is equal to unity when gu = gv, and puv indicates the expected number of connections between u and v under a particular null model (typically , which is the expected weight under the null model where each node's degree is preserved but connections are otherwise made randomly). In general, high quality modules produce large values of Q. To maximize Q, then, one needs to ensure that connections satisfying (auv − puv) > 0 fall within communities. The process of modularity maximization is computationally intractable for all but the most trivial cases, though many heuristics are available for identifying near-optimal modules. Here, we use the Louvain algorithm (Blondel et al., 2008) to produce 100 near-optimal estimates of modules.

References

- Avena-Koenigsberger A., Goñi J., Betzel R.F., van den Heuvel M.P., Griffa A., Hagmann P., Thiran J.-P., Sporns O. Using Pareto optimality to explore the topology and dynamics of the human connectome. Philos. Trans. R. Soc. B-Biol. Sci. 2014;369(1653) doi: 10.1098/rstb.2013.0530. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bailey A., Ventresca M., Ombuki-Berman B. Proceedings of the 14th Annual Conference on Genetic and Evolutionary Computation. ACM; 2012. Automatic generation of graph models for complex networks by genetic programming; pp. 711–718. [Google Scholar]

- Barabási A.-L., Albert R. Emergence of scaling in random networks. Science. 1999;286(5439):509–512. doi: 10.1126/science.286.5439.509. [DOI] [PubMed] [Google Scholar]

- Barthélemy M. Spatial networks. Phys. Rep. 2011;499(1):1–101. [Google Scholar]

- Bassett D.S., Greenfield D.L., Meyer-Lindenberg A., Weinberger D.R., Moore S.W., Bullmore E.T. Efficient physical embedding of topologically complex information processing networks in brains and computer circuits. PLoS Comput. Biol. 2010;6(4):e1000748. doi: 10.1371/journal.pcbi.1000748. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Betzel R.F., Griffa A., Avena-Koenigsberger A., Goñi J., Hagmann P., Thiran J.-P., Sporns O. Multi-scale community organization of the human structural connectome and its relationship with resting-state functional connectivity. Netw. Sci. 2013;1(3):353–373. [Google Scholar]

- Betzel R.F., Byrge L., He Y., Goñi J., Zuo X.-N., Sporns O. Changes in structural and functional connectivity among resting-state networks across the human lifespan. NeuroImage. 2014;102:345–357. doi: 10.1016/j.neuroimage.2014.07.067. [DOI] [PubMed] [Google Scholar]

- Blondel V.D., Guillaume J.-L., Lambiotte R., Lefebvre E. Fast unfolding of communities in large networks. J. Stat. Mech: Theory Exp. 2008;2008(10):P10008. [Google Scholar]

- Bullmore E., Sporns O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009;10(3):186–198. doi: 10.1038/nrn2575. [DOI] [PubMed] [Google Scholar]

- Bullmore E., Sporns O. The economy of brain network organization. Nat. Rev. Neurosci. 2012;13(5):336–349. doi: 10.1038/nrn3214. [DOI] [PubMed] [Google Scholar]

- Cammoun L., Gigandet X., Meskaldji D., Thiran J.P., Sporns O., Do K.Q., Maeder P., Meuli R., Hagmann P. Mapping the human connectome at multiple scales with diffusion spectrum MRI. J. Neurosci. Methods. 2012;203(2):386–397. doi: 10.1016/j.jneumeth.2011.09.031. [DOI] [PubMed] [Google Scholar]

- Cao M., Wang J.-H., Dai Z.-J., Cao X.-Y., Jiang L.-L., Fan F.-M., Song X.-W., Xia M.-R., Shu N., Dong Q. Topological organization of the human brain functional connectome across the lifespan. Dev. Cogn. Neurosci. 2014;7:76–93. doi: 10.1016/j.dcn.2013.11.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cherniak C., Mokhtarzada Z., Rodriguez-Esteban R., Changizi K. Global optimization of cerebral cortex layout. Proc. Natl. Acad. Sci. U. S. A. 2004;101(4):1081–1086. doi: 10.1073/pnas.0305212101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Costa L.d.F., Kaiser M., Hilgetag C.C. Predicting the connectivity of primate cortical networks from topological and spatial node properties. BMC Syst. Biol. 2007;1(1):16. doi: 10.1186/1752-0509-1-16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Costa L.d.F., Rodrigues F.A., Travieso G., Villas Boas P.R. Characterization of complex networks: a survey of measurements. Adv. Phys. 2007;56(1):167–242. [Google Scholar]

- Daducci A., Gerhard S., Griffa A., Lemkaddem A., Cammoun L., Gigandet X., Meuli R., Hagmann P., Thiran J.-P. The connectome mapper: an open-source processing pipeline to map connectomes with mri. PLoS ONE. 2012;7(12):e48121. doi: 10.1371/journal.pone.0048121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dall J., Christensen M. Random geometric graphs. Phys. Rev. E. 2002;66(1):016121. doi: 10.1103/PhysRevE.66.016121. [DOI] [PubMed] [Google Scholar]

- de Reus M.A., van den Heuvel M.P. Simulated rich club lesioning in brain networks: a scaffold for communication and integration? Front. Hum. Neurosci. 2014;8:647. doi: 10.3389/fnhum.2014.00647. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Destrieux C., Fischl B., Dale A., Halgren E. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. NeuroImage. 2010;53(1):1–15. doi: 10.1016/j.neuroimage.2010.06.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ercsey-Ravasz M., Markov N.T., Lamy C., Van Essen D.C., Knoblauch K., Toroczkai Z., Kennedy H. A predictive network model of cerebral cortical connectivity based on a distance rule. Neuron. 2013;80(1):184–197. doi: 10.1016/j.neuron.2013.07.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fortunato S. Community detection in graphs. Phys. Rep. 2010;486(3):75–174. [Google Scholar]

- Friedman E.J., Landsberg A.S., Owen J., Hsieh W., Kam L., Mukherjee P. Edge correlations in spatial networks. J. Complex Netw. 2015:cnv015. [Google Scholar]

- Glasser M.F., Sotiropoulos S.N., Wilson J.A., Coalson T.S., Fischl B., Andersson J.L., Xu J., Jbabdi S., Webster M., Polimeni J.R. The minimal preprocessing pipelines for the human connectome project. NeuroImage. 2013;80:105–124. doi: 10.1016/j.neuroimage.2013.04.127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goñi J., Avena-Koenigsberger A., de Mendizabal N.V., van den Heuvel M., Betzel R., Sporns O. Exploring the morphospace of communication efficiency in complex networks. PLoS ONE. 2013;8(3):e58070. doi: 10.1371/journal.pone.0058070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goñi J., van den Heuvel M.P., Avena-Koenigsberger A., de Mendizabal N.V., Betzel R.F., Griffa A., Hagmann P., Corominas-Murtra B., Thiran J.-P., Sporns O. Resting-brain functional connectivity predicted by analytic measures of network communication. Proc. Natl. Acad. Sci. U. S. A. 2014;111(2):833–838. doi: 10.1073/pnas.1315529111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hagmann P., Cammoun L., Gigandet X., Meuli R., Honey C.J., Wedeen V.J., Sporns O. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008;6(7):e159. doi: 10.1371/journal.pbio.0060159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henderson J., Robinson P. Geometric effects on complex network structure in the cortex. Phys. Rev. Lett. 2011;107(1):018102. doi: 10.1103/PhysRevLett.107.018102. [DOI] [PubMed] [Google Scholar]

- Henderson J.A., Robinson P.A. Using geometry to uncover relationships between isotropy, homogeneity, and modularity in cortical connectivity. Brain Connect. 2013;3(4):423–437. doi: 10.1089/brain.2013.0151. [DOI] [PubMed] [Google Scholar]

- Henderson J.A., Robinson P.A. Relations between the geometry of cortical gyrification and white-matter network architecture. Brain Connect. 2014;4(2):112–130. doi: 10.1089/brain.2013.0183. [DOI] [PubMed] [Google Scholar]

- Hermundstad A.M., Bassett D.S., Brown K.S., Aminoff E.M., Clewett D., Freeman S., Frithsen A., Johnson A., Tipper C.M., Miller M.B. Structural foundations of resting-state and task-based functional connectivity in the human brain. Proc. Natl. Acad. Sci. U. S. A. 2013;110(15):6169–6174. doi: 10.1073/pnas.1219562110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaiser M., Hilgetag C.C. Modelling the development of cortical systems networks. Neurocomputing. 2004;58:297–302. [Google Scholar]

- Kaiser M., Hilgetag C.C. Spatial growth of real-world networks. Phys. Rev. E. 2004;69(3):036103. doi: 10.1103/PhysRevE.69.036103. [DOI] [PubMed] [Google Scholar]

- Kaiser M., Hilgetag C.C. Nonoptimal component placement, but short processing paths, due to long-distance projections in neural systems. PLoS Comput. Biol. 2006;2(7):e95. doi: 10.1371/journal.pcbi.0020095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaiser M., Hilgetag C.C. Development of multi-cluster cortical networks by time windows for spatial growth. Neurocomputing. 2007;70(10–12):1829–1832. [Google Scholar]

- Kaiser M., Hilgetag C.C., Van Ooyen A. A simple rule for axon outgrowth and synaptic competition generates realistic connection lengths and filling fractions. Cereb. Cortex. 2009;19(12):3001–3010. doi: 10.1093/cercor/bhp071. [DOI] [PubMed] [Google Scholar]

- Klimm F., Bassett D.S., Carlson J.M., Mucha P.J. Resolving structural variability in network models and the brain. PLoS Comput. Biol. 2014;10(3):e1003491. doi: 10.1371/journal.pcbi.1003491. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kumar R., Raghavan P., Rajagopalan S., Sivakumar D., Tomkins A., Upfal E. Proceedings of the 41st Annual Symposium on the Foundations of Computer Science. IEEE; 2000. Stochastic models for the web graph; pp. 57–65. [Google Scholar]

- Langen M., Agi E., Altschuler D.J., Wu L.F., Altschuler S.J., Hiesinger P.R. The developmental rules of neural superposition in drosophila. Cell. 2015;162(1):120–133. doi: 10.1016/j.cell.2015.05.055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laughlin S.B., Sejnowski T.J. Communication in neuronal networks. Science. 2003;301(5641):1870–1874. doi: 10.1126/science.1089662. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lim S., Kaiser M. Developmental time windows for axon growth influence neuronal network topology. Biol. Cybern. 2015;109(2):275–286. doi: 10.1007/s00422-014-0641-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lim S., Han C.E., Uhlhaas P.J., Kaiser M. Preferential detachment during human brain development: age-and sex-specific structural connectivity in diffusion tensor imaging (DTI) data. Cereb. Cortex. 2015;25(6):1477–1489. doi: 10.1093/cercor/bht333. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Menezes T., Roth C. Symbolic regression of generative network models. Sci. Rep. 2014;4:6284. doi: 10.1038/srep06284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Middendorf M., Ziv E., Wiggins C.H. Inferring network mechanisms: the Drosophila melanogaster protein interaction network. Proc. Natl. Acad. Sci. U. S. A. 2005;102(9):3192–3197. doi: 10.1073/pnas.0409515102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mišić B., Betzel R.F., Nematzadeh A., Goñi J., Griffa A., Hagmann P., Flammini A., Ahn Y.-Y., Sporns O. Cooperative and competitive spreading dynamics on the human connectome. Neuron. 2015;86:1518–1529. doi: 10.1016/j.neuron.2015.05.035. [DOI] [PubMed] [Google Scholar]

- Mitchison G. Neuronal branching patterns and the economy of cortical wiring. Proc. R. Soc. Lond. B Biol. Sci. 1991;245(1313):151–158. doi: 10.1098/rspb.1991.0102. [DOI] [PubMed] [Google Scholar]

- Newman M.E. Assortative mixing in networks. Phys. Rev. Lett. 2002;89(20):208701. doi: 10.1103/PhysRevLett.89.208701. [DOI] [PubMed] [Google Scholar]

- Newman M.E. Communities, modules and large-scale structure in networks. Nat. Phys. 2012;8(1):25–31. [Google Scholar]

- Newman M.E., Girvan M. Finding and evaluating community structure in networks. Phys. Rev. E. 2004;69(2):026113. doi: 10.1103/PhysRevE.69.026113. [DOI] [PubMed] [Google Scholar]

- Nicosia V., Vértes P.E., Schafer W.R., Latora V., Bullmore E.T. Phase transition in the economically modeled growth of a cellular nervous system. Proc. Natl. Acad. Sci. U. S. A. 2013;110(19):7880–7885. doi: 10.1073/pnas.1300753110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nooner K.B., Colcombe S.J., Tobe R.H., Mennes M., Benedict M.M., Moreno A.L., Panek L.J., Brown S., Zavitz S.T., Li Q. The nki-rockland sample: a model for accelerating the pace of discovery science in psychiatry. Front. Neurosci. 2012;6:152. doi: 10.3389/fnins.2012.00152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roberts J.A., Perry A., Lord A.R., Roberts G., Mitchell P.B., Smith R.E., Calamante F., Breakspear M. The contribution of geometry to the human connectome. NeuroImage. 2016;124:379–393. doi: 10.1016/j.neuroimage.2015.09.009. [DOI] [PubMed] [Google Scholar]

- Rubinov M., Sporns O. Complex network measures of brain connectivity: uses and interpretations. NeuroImage. 2010;52(3):1059–1069. doi: 10.1016/j.neuroimage.2009.10.003. [DOI] [PubMed] [Google Scholar]

- Rubinov M., Ypma R., Watson C., Bullmore E. Wiring cost and topological participation of the mouse brain connectome. Proc. Natl. Acad. Sci. U. S. A. 2015 doi: 10.1073/pnas.1420315112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Samu D., Seth A.K., Nowotny T. Influence of wiring cost on the large-scale architecture of human cortical connectivity. PLoS Comput. Biol. 2014;10:e1003557. doi: 10.1371/journal.pcbi.1003557. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Solé R., Satorras R.P., Smith E., Kepler T. A model of large-scale proteome evolution. Adv. Complex Syst. 2002;5(01):43–54. [Google Scholar]

- Song H.F., Kennedy H., Wang X.-J. Spatial embedding of structural similarity in the cerebral cortex. Proc. Natl. Acad. Sci. U. S. A. 2014;111(46):16580–16585. doi: 10.1073/pnas.1414153111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sporns O. Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 2014;17(5):652–660. doi: 10.1038/nn.3690. [DOI] [PubMed] [Google Scholar]