Abstract

Although the distinction between positive and negative facial expressions is assumed to be clear and robust, recent research with intense real-life faces has shown that viewers are unable to reliably differentiate the valence of such expressions (Aviezer, Trope, & Todorov, 2012). Yet the fact that viewers fail to distinguish these expressions does not in itself show that the faces are physically identical. In experiment 1, the muscular activity of victorious and defeated faces was analyzed. Individually coded facial actions, particularly smiling and mouth opening, were more common among winners than losers, indicating an objective difference in facial activity. In experiment 2, we asked whether supplying participants with valid or invalid information about objective facial activity and valence would alter their ratings. Notwithstanding these manipulations, valence ratings were virtually identical in all groups, and participants failed to differentiate between positive and negative faces. Thus, while objective differences between intense positive and negative faces are detectable, human viewers do not utilize these differences in determining valence. These results suggest a surprising dissociation between information present in expressions and information used by perceivers.

Keywords: facial expressions, intense emotions

Although facial expressions play a central role in everyday social interactions, the specific information conveyed by them is disputed. According to discrete emotion theories, prototypical facial expressions are assumed to convey emotions by means of diagnostic facial movements (Smith, Cottrell, Gosselin, & Schyns, 2005). This approach posits that each basic emotion is signaled with a unique facial signature which is perceptually distinct from the signatures of other emotions (Ekman, 1993; Etcoff & Magee, 1992; Schyns, Petro, & Smith, 2007).

In contrast, according to dimensional theories of emotion, facial expressions are inherently ambiguous and a given facial configuration may reflect multiple neighboring emotional categories (Carroll & Russell, 1996; Hassin, Aviezer, & Bentin, 2013; Russell, 1997; Russell & Bullock, 1986). Consequently, contextual information is critical for disambiguating the face and for perceiving specific emotions in facial expressions (Aviezer, Hassin, Bentin, & Trope, 2008a; Aviezer et al., 2008b; Barrett & Kensinger, 2010; Barrett, Lindquist, & Gendron, 2007).

Despite their differences, both theoretical approaches agree that facial valence, i.e., the affective positivity or negativity of the face, is clearly and unambiguously signaled (Carroll & Russell, 1996). Further, both approaches would agree that intense expressions should be better recognized than weak and subtle expressions. This would be the case because intense expressions maximize the activation of diagnostic action units in the face (Hess, Blairy, & Kleck, 1997) or because they maximize distances in the bipolar space of valence and arousal (Russell, 1997).

One limitation of prior research, however, is its overreliance on posed facial expressions (Ekman & Friesen, 1976; Matsumoto & Ekman, 1988). While highly recognizable (Young, Perrett, Calder, Sprengelmeyer, & Ekman, 2002), such expressions may differ from those portrayed in real life.

In an attempt to examine stimuli with greater ecological validity, Aviezer, Trope, and Todorov (2012) tested the recognition of valence from real-life intense facial expressions. Emotional expressions of professional tennis players reacting to winning or losing a point in tennis tournaments were rated for valence using a bipolar scale. The results indicated that when rating faces with bodies, or bodies alone, raters accurately differentiated the valence of winners and losers. By contrast, when rating faces alone, raters could not reliably differentiate the faces from the positive and negative situations, and both groups of faces were rated as equally negative (Aviezer et al., 2012).

While the Aviezer et al. findings relate to the ability of perceivers to extract differential valence information from the face, they do not speak to what the face itself expresses. Specifically, winners and losers may display different facial movements, but perceivers may be unaware of these cues. Several facial movements are noteworthy in this context and serve as the focus of our analysis. These include brow lowering (AU4) and smiling (AU12), which may be direct indices of valence, as well as eye constriction (AU6) and mouth opening (AU27), which may index both positive and negative affect.

Smiles (AU12) are thought to index the stereotypical expression of positive emotion after social goal attainment, though they may also serve to conceal disappointment (Cole, 1986; Ekman et al., 1980; Shiota et al., 2003; Tobin & Graziano, 2011). Brow lowering (AU4), a component of the stereotypical expression of anger, is likely associated with negative valence or, more specifically, with states in which goals have not been achieved (Ekman et al., 2002; Kohler et al., 2004; Wiggers, 1982).

Additionally, a different set of facial actions may convey information about facial expression valence in both positive and negative contexts. Previous research indicates that eye constriction (AU6; the Duchenne marker) and mouth opening (AU27) each index the intensity of both positive and negative infant facial expressions (Fogel et al., 2000; Fox & Davidson, 1988; Mattson et al., 2013; Messinger, 2002; Messinger et al., 2008; Messinger & Fogel, 2007; Messinger et al., 2001; Messinger et al., 2012). In adults, eye constriction (AU6) and mouth opening (AU27) are characteristic, although not requisite, of facial expressions of joyful positive affect (Ambadar et al., 2009), affectively negative pain expressions (Williams, 2002), and other extreme expressions such as those associated with orgasm (Fernández-Dols et al., 2011).

Based on these findings, we asked whether the aforementioned facial actions distinguished the expressions of winners and losers, and whether they were associated with the expressive intensity and affective valence observers perceived in these expressions.

Experiment 1

Facial Action Units indexing eye constriction (AU6), mouth opening (AU27), brow lowering (AU4) and smiling (AU12) were measured across winning and losing tennis players using anatomically-based facial coding. If indeed the intense faces do not differ systematically, then no consistent differences should emerge in the expressions produced by winners and losers. Conversely, intense positive and negative faces may be objectively distinguishable, even if non-expert raters are unaware of such cues.

Methods

Stimuli

We used the same database of tennis images used in Aviezer et al. (2012). In that database, 176 images (88 winning point, 88 losing point) were obtained through a Google image search using the query “reacting to winning a point” or “reacting to losing a point”, crossed with “tennis”. Gender distribution in the images was near equal: 45 of the losing images and 41 of the winning images showed males. Of the 88 images in each category, 71 losers and 75 winners were unique identities. The face was cropped from the image and enlarged. The length of the presented faces subtended an average visual angle of 5.73°. The orientation of the facial images (forward 22%, right 41%, and left 37%) was not associated with winning or losing a point, χ²(2) = 2.36, p = .31.

Face coding

A certified FACS expert coded faces using the anatomically-based Facial Action Coding System (FACS; Ekman, Friesen, & Hager, 2002), and a random subset of faces (80 for brow lowering [AU4] and smiling [AU12]; 26 for eye constriction [AU6] and mouth opening [AU27]) was coded by a second FACS-certified coder. Both coders were blind to condition and faces appeared without bodies. Thus, no external cues were available to discern the situation in which the expressions were evoked.

Facial coding

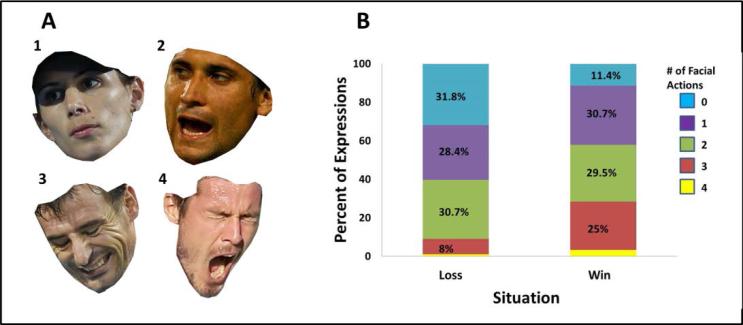

Faces were reliably coded for the presence of smiling (AU12; K = .67, 94% agreement) and brow lowering (AU4; K = .67, 84% agreement). Faces were also reliably coded for the presence of eye constriction (produced by the orbicularis oculi, pars orbitalis, AU6, cheek raiser; K = .78, 88% agreement) and mouth opening (produced by the pterygoids, AU27, mouth stretch; K = .63, 77% agreement). We calculated the number of coded facial actions present in each expression to create a facial action composite variable that ranged from 0 to 4. Both the composite and individual facial actions were used in analyses (see Figure 1A).
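As a rough illustration of the reliability statistics and the composite described above, the following sketch computes percent agreement and Cohen's kappa for two coders' binary codes of one action unit, and sums the four coded action units into the 0 to 4 composite. The function names and the example codes are hypothetical and are not taken from the study data.

```python
from typing import Mapping, Sequence

# Action units coded in experiment 1: brow lowering, eye constriction,
# smiling, and mouth opening.
ACTION_UNITS = ("AU4", "AU6", "AU12", "AU27")


def percent_agreement(coder_a: Sequence[int], coder_b: Sequence[int]) -> float:
    """Proportion of faces on which two coders gave the same binary code."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("coders must rate the same non-empty set of faces")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohen_kappa(coder_a: Sequence[int], coder_b: Sequence[int]) -> float:
    """Cohen's kappa for binary (0/1) codes: agreement corrected for chance."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    p_a = sum(coder_a) / n  # proportion coded "present" by coder A
    p_b = sum(coder_b) / n  # proportion coded "present" by coder B
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def facial_action_composite(codes: Mapping[str, int]) -> int:
    """Number of coded action units present in one face (0 to 4)."""
    return sum(codes[au] for au in ACTION_UNITS)


# Hypothetical codes for ten faces on a single action unit (1 = present).
coder_a = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
coder_b = [1, 0, 0, 0, 1, 0, 1, 0, 1, 0]
agreement = percent_agreement(coder_a, coder_b)
kappa = cohen_kappa(coder_a, coder_b)

# Hypothetical face showing brow lowering, eye constriction, and mouth opening.
example_face = {"AU4": 1, "AU6": 1, "AU12": 0, "AU27": 1}
composite = facial_action_composite(example_face)

print(f"agreement = {agreement:.2f}, kappa = {kappa:.2f}, composite = {composite}")
```

Because kappa discounts the agreement expected by chance, it is lower than raw percent agreement whenever the two coders share similar base rates, which is why both statistics are reported.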

Fig. 1.

(A) Examples of images with 1) no facial actions coded, 2) mouth opening, 3) smiling and eye constriction, and 4) mouth opening, eye constriction, and brow lowering. (B) Percent of expressions from negative and positive situations involving 0, 1, 2, 3, or 4 coded facial actions. For example, the face shown in A1 would correspond to a coding of “0” because none of the action units are activated. By contrast, the face shown in A4 would correspond to a coding of “3” because three of the action units are activated. Images A1-3 reproduced with permission from Getty Images - Image Bank Israel. Image A4 reproduced with permission from Reuters – A|S|A|P creative, Israel.

Results

The facial action composite and situational context

All facial actions coded tended to occur more frequently in winning than losing contexts, a pattern that was significant for smiling (AU12) and mouth opening (AU27). Specifically, brow lowering (AU4) occurred in the context of both wins (45.5%) and losses (36.4%), a non-significant difference, χ²(1) = 1.50, p = .22. Eye constriction (AU6) also occurred in the context of both wins (52.3%) and losses (39.8%), a non-significant difference, χ²(1) = 2.77, p = .10. Smiles (AU12) were more likely during wins (27.3%) than losses (10.2%), χ²(1) = 8.39, p = .004. Likewise, mouth opening (AU27) was more likely during wins (53.4%) than losses (31.8%), χ²(1) = 8.39, p = .004.
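The comparisons above can be sketched as Pearson chi-square tests on 2 × 2 tables (situation by presence of the action unit). The counts below are not reported in the text; they are an assumption, reconstructed from the reported percentages and the 88 images per category. The test is computed without a continuity correction, which is also an assumption about the original analysis.

```python
from math import erfc, sqrt

N_PER_GROUP = 88  # 88 winning and 88 losing images

# ASSUMPTION: counts of faces showing each action unit (wins, losses),
# reconstructed from the reported percentages, e.g. 24 / 88 = 27.3%.
COUNTS = {
    "AU4": (40, 32),   # brow lowering: 45.5% vs 36.4%
    "AU6": (46, 35),   # eye constriction: 52.3% vs 39.8%
    "AU12": (24, 9),   # smiling: 27.3% vs 10.2%
    "AU27": (47, 28),  # mouth opening: 53.4% vs 31.8%
}


def chi_square_2x2(wins_present, losses_present, n_per_group=N_PER_GROUP):
    """Pearson chi-square (1 df, no continuity correction) and its p value."""
    a, b = wins_present, n_per_group - wins_present
    c, d = losses_present, n_per_group - losses_present
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 df, the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


RESULTS = {au: chi_square_2x2(won, lost) for au, (won, lost) in COUNTS.items()}

for au, (chi2, p_value) in RESULTS.items():
    print(f"{au}: chi2(1) = {chi2:.2f}, p = {p_value:.3f}")
```

Under these reconstructed counts, the statistics round to the values reported above, including the identical value of 8.39 for smiling and for mouth opening.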

On the facial action composite variable, winning faces had a higher number of facial actions (M = 1.78, SD = 1.06) than losing faces (M = 1.18, SD = 1.01), t(174) = 3.86, p < .0001 (see Figure 1B). A logistic regression indicated that mouth opening (AU27), B = .98, SE = .32, Wald's χ²(1) = 9.16, p = .002, and smiling (AU12), B = 1.29, SE = .44, Wald's χ²(1) = 8.72, p = .003, were significant predictors of whether expressions occurred in negative or positive situations (65.3% correct classification).

Association between the facial action composite, perceived expressive intensity, and situation

In their original report, Aviezer et al. (2012) obtained ratings of facial intensity, defined as the intensity of muscular activity in the face irrespective of valence. They found that, in contrast to valence judgments which were not indicative of situation, participants perceived higher levels of facial expressive intensity in images elicited in positive than in negative situations. In fact, we found that observers’ ratings of expressive intensity predicted whether expressions occurred in negative or positive situations [logistic regression: B = .37, SE = .09, Wald's χ²(1) = 15.95, p < .001, correct classification of the dichotomous outcome = 63.1%]. Moreover, current analyses indicated that the composite of objectively coded facial actions (0 to 4) was positively associated with ratings of expressive intensity, r = .78, p < .001. Thus, facial action was associated with raters’ perceptions of expressive intensity which, in turn, reflected the affective situations in which the expressions were produced. Raters, however, did not use their perceptions of expressive intensity to correctly classify facial expression valence.

Facial actions as valence intensifiers

In the original study, valence was measured on a single negative to positive scale that ran from 1 through 9 with a neutral midpoint at 5 (Aviezer et al., 2012). Facial actions might make expressions appear more positive or more negative (Messinger et al., 2012). To determine whether facial actions intensified perceived valence in either direction in the expressions of winners and losers, we calculated the absolute value of affective valence ratings. We first subtracted 5 from all ratings so that neutral became 0. We then took the absolute value of the centered ratings so that excursions in either a negative or positive direction were assigned the same value. For example, original ratings of 4 and 6 would each be assigned a 1.
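The transformation described above can be sketched in a few lines. The function name is hypothetical; the scale bounds and the neutral midpoint follow the description in the text.

```python
SCALE_MIN = 1
SCALE_MAX = 9
NEUTRAL_MIDPOINT = 5


def absolute_valence(rating: float) -> float:
    """Distance of a 1-9 valence rating from the neutral midpoint.

    Centering at the midpoint maps neutral to 0; the absolute value then
    treats negative and positive excursions of equal size identically.
    """
    if not SCALE_MIN <= rating <= SCALE_MAX:
        raise ValueError("rating must lie on the 1-9 valence scale")
    return abs(rating - NEUTRAL_MIDPOINT)


# Ratings of 4 and 6 are both one step from neutral.
transformed = [absolute_valence(r) for r in (1, 4, 5, 6, 9)]
print(transformed)
```

The resulting score ranges from 0 (neutral) to 4 (either endpoint of the scale), so it reflects how far a face departs from neutral regardless of direction.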

Comparisons of the means indicated that each facial action was associated with higher absolute affective valence: mouth opening (AU27), p = .02; eye constriction (AU6), p < .001; brow lowering (AU4), p < .001; and smiling (AU12), p = .02. Overall, the facial action composite was positively associated with the absolute value of affective valence, r = .45, p < .001.

Discussion

The results of experiment 1 show that facial expressions from positive and negative situations contained different numbers of facial actions, that these facial actions predicted perceived intensity, and that perceived intensity was associated with situational valence. This evidence suggests that the faces contained information useful for distinguishing whether expressions were produced during positive or negative situations, but perceivers were not able to use that information for successful differentiation of the faces.

It is important to note that the current analysis examined only a small subset of facial actions from the full range of over 40 action units; however, full coding was not practical given the variance in facial view quality in our ecological image set. Notwithstanding this limitation, the AUs we did code are key players in the expression of facial valence. If positive and negative faces contain objective differences, why do participants fail to differentiate them when rating their valence? One possibility is that participants are unaware of the association between facial intensity and facial valence. If so, informing participants about this association may induce a dramatic improvement in their ability to differentiate facial expressions produced in positive and negative contexts. This hypothesis was tested in experiment 2.

Experiment 2

Previous work with tennis players reacting to winning or losing a point has shown that viewers cannot differentiate the valence of the faces (Aviezer et al., 2012). We therefore predicted that viewers would be strongly influenced by information pertaining to the relation between valence and face activity. Further, because participants are presumably unaware of actual links between facial movements and valence, we predicted that giving valid and invalid information would strongly sway viewers in their ratings, enhancing or diminishing accuracy, respectively.

We provided participants with general information about the association between facial expressivity and situation, without explicitly listing specific facial actions. Specifically, in the valid information condition, participants were told that the more emotionally expressive the faces were, the more likely they were to reflect reactions to positive situations, whereas participants in the invalid information condition were told the opposite. A control group was given neither valid nor invalid information.

Methods

Participants

Seventy-five participants took part in the experiment in exchange for course credit or payment. Participants (n = 25 per group: group 1 = 14 females, mean age = 24.6; group 2 = 15 females, mean age = 24.1; group 3 = 13 females, mean age = 24.7) were randomly assigned to one of three experimental groups.

Stimuli

The same 176 face images of winners and losers used in experiment 1 were used here.

Procedure

Participants in all groups viewed the faces individually and rated their valence using a bipolar 1-9 scale ranging from negative to positive valence with “5” serving as a neutral midpoint. However, the groups differed in the information provided to them before the rating task. Group 1 served as a baseline and received no special information. These participants were given the same instructions as in Aviezer et al. (2012) and asked to rate the valence of the facial expressions. Group 2 participants were given valid information. They were told that “faces that are more emotionally expressive are more likely to reflect reactions to positive situations”. Group 3 participants were given invalid information. They were told that “faces that are more emotionally expressive are more likely to reflect reactions to negative situations”. Participants were purposely not told the specific movements revealed in experiment 1 because we were concerned that such instructions would have rendered the task a simple feature detection assignment. For example, instead of naturally viewing the expressive valence of the faces, participants could simply scan the faces for open mouths while ignoring the overall expression. Such a strategy would lack the natural holistic characteristics of everyday emotion perception (Calder, Young, Keane, & Dean, 2000).

Results

Main results

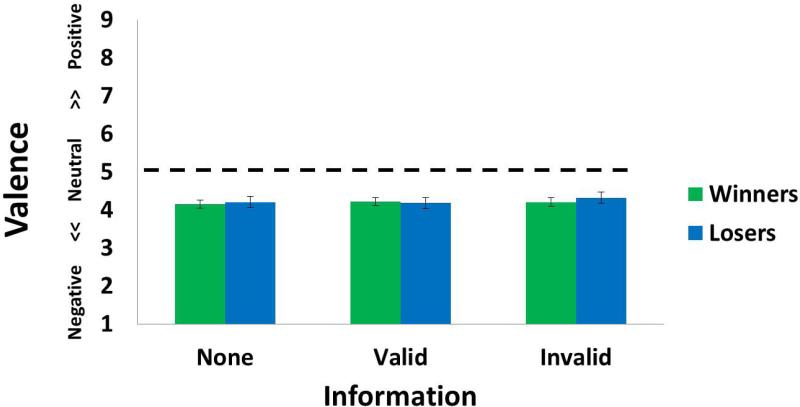

The mean valence ratings were subjected to a 2 (stimuli: winners, losers) × 3 (group instructions: none, valid, invalid) mixed ANOVA. None of the effects were significant: F(1,72) = .7, p > .3, η² = .01 for the main effect of stimuli (winners vs. losers); F(2,72) = .12, p > .8, η² = .004 for the main effect of the group instructions (none, valid, invalid); and F(2,72) = .7, p > .4, η² = .02 for the interaction. As seen in Figure 2, ratings across groups were somewhat negative. More importantly, group instruction condition had virtually no effect: subjects failed to differentiate the winners from losers in all three groups.
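As a rough plausibility check on the effect sizes, the sketch below converts an F ratio and its degrees of freedom into partial eta squared. This rests on an assumption: the text does not state whether the reported effect sizes are partial, and the F values are reported to limited precision. The function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Uses the identity eta_p^2 = (F * df_effect) / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and df values positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F ratios as reported in the text (ASSUMPTION: effect sizes are partial).
eta_stimuli = partial_eta_squared(0.7, 1, 72)       # main effect of stimuli
eta_group = partial_eta_squared(0.12, 2, 72)        # main effect of group
eta_interaction = partial_eta_squared(0.7, 2, 72)   # stimuli x group

print(f"{eta_stimuli:.3f} {eta_group:.3f} {eta_interaction:.3f}")
```

Under this assumption, the values for the stimuli effect and the interaction round to .01 and .02, and the group effect comes out near .003, close to the reported .004 given the rounding of F. All three indicate very small effects.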

Fig. 2.

Mean valence ratings of winners and losers in experiment 2. The three groups differed in the type of information they were given (none, valid, or invalid). The dotted line marks the neutral midline. All ratings across groups were somewhat negative and, more importantly, participants failed to differentiate the valence of winners and losers irrespective of information condition.

Further, we calculated the mean rating of each image (88 winners and 88 losers) for each of the groups and examined whether valence ratings were consistent across groups that received different information. For the winner images, mean image ratings were highly correlated for the groups receiving valid and invalid information, r(86) = .95, p < .0001. Similarly, for the losing images, mean image ratings were highly correlated for the groups receiving valid and invalid information, r(86) = .93, p < .0001. Thus, the valence ratings of the images were highly similar across groups receiving different information.
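The image-level consistency analysis can be sketched as follows: ratings are averaged over the participants in each group to give one mean per image, and the two vectors of image means are then correlated. The helper names and the tiny data set are hypothetical; with 88 images per category the correlation has 88 − 2 = 86 degrees of freedom, as in the reported r(86).

```python
from math import sqrt
from statistics import mean
from typing import List, Sequence


def image_means(ratings: Sequence[Sequence[float]]) -> List[float]:
    """Mean rating per image; `ratings` holds one row per participant."""
    return [mean(column) for column in zip(*ratings)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length (at least 3)")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical ratings: two participants per group, three images each.
valid_group = [[2, 4, 6], [4, 4, 8]]
invalid_group = [[3, 5, 6], [3, 5, 8]]

valid_means = image_means(valid_group)      # one mean per image
invalid_means = image_means(invalid_group)
r = pearson_r(valid_means, invalid_means)
df = len(valid_means) - 2                   # degrees of freedom for r

print(f"r({df}) = {r:.2f}")
```

Correlating image means rather than individual ratings asks whether the two groups ordered the images similarly, which is a separate question from whether their overall mean ratings differed.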

Validation of the information manipulation

As noted, participants were purposely not explicitly told the specific movements revealed in experiment 1 as that would have rendered the task a feature detection assignment. While it may have improved differentiation of positive and negative images, the task would lose the natural holistic characteristics of everyday emotion perception (Calder, Young, Keane, & Dean, 2000). Although the current instructions were designed to retain an ecological holistic emotion-perception quality, two concerns arise. First, it is possible that the term used in the instructions, “emotional expressiveness,” was interpreted in a subjective fashion such that it was uncorrelated with facial activity (e.g., participants might tend to view neutral faces with little activity as conveying strong emotion). Second, because we did not list specific facial actions, it is possible that emotional expressiveness was not associated with informative facial cues. Thus, it might be that perceptions of emotional expressiveness were not associated with the AUs we investigated.

To address these concerns, two judges (1 female) blind to the current hypothesis viewed the entire set of images in two separate blocks and used a 1-9 scale to rate a) the degree of emotional expressiveness (block 1) and b) the degree of muscular activity intensity (block 2). Ratings for each image were averaged across judges, and the correlation between the ratings of emotional expressiveness and muscular activity was high, r(174) = .84, p < .0001. Thus, we can safely assume that emotional expressiveness was not treated as a subjective term associated with faces expressing minimal emotional activity.

We next assessed the association between emotional expressiveness judgments and the FACS facial action composite reported in experiment 1. The number of facial actions and the mean rating of expressiveness were significantly correlated, rs = .59, p < .001. Thus, it is likely that identified facial actions played an important role in the judgments of expressiveness. Moreover, in line with the findings reported in experiment 1, mean ratings of emotional expressiveness were higher for winners (M = 6.7, SE = .19) than losers (M = 5.9, SE = .2), t(174) = 2.6, p < .009, validating the information we provided to participants.

To summarize, emotional expressiveness appeared to be a valid proxy for objectively coded facial activity, although participants failed to utilize this information in their judgments of valence.

Discussion

Replicating previous research (Aviezer et al., 2012), when given no additional information, participants failed to differentiate winners from losers and rated both face categories as conveying negative valence. Our main hypothesis was that information given before the rating task would alter ratings of valence, by improving accuracy in the case of valid information and by decreasing accuracy in the case of invalid information. Contrary to our predictions, participants proved immune to both valid and invalid information. Mean valence ratings were remarkably similar and consistent across all groups irrespective of the information received.

These results are intriguing precisely because of the potential importance of the information provided to the participants. Facial expressiveness was associated with objectively coded facial actions which, indeed, tended to occur more in positive situations. Moreover, ratings of emotional expressiveness and facial activity were highly associated. Nevertheless, participants failed to utilize these informative cues.

General Discussion

Previous work has shown that the valence ratings of intense facial expressions of victory and defeat are similar (Aviezer et al., 2012). However, although viewers fail to differentiate the valence of the winning and losing faces, the current analysis of facial movements demonstrates that reliable differences in the patterns of facial muscular activity exist. Specifically, winners displayed more facial activity than losers, at least for facial activity involving brow lowering, smiling, eye constriction, and mouth opening.

Our results demonstrate that merely informing participants about the relationship between facial activity and valence is insufficient for altering their behavior. Contrary to our predictions, valid and invalid information had virtually no effect on the performance of human raters. Hence, although the faces displayed differentiating facial actions and although participants in the valid information condition were given a reliable rule of thumb, they failed to implement this rule in a fashion that increased their accuracy.

While our results suggest that emotional expressiveness was a valid proxy for objectively coded facial activity, it is important to recall that participants were not told the specific action units that differentiated the winners from the losers. In future work it would be important to address this limitation and apply a stronger test of our hypothesis.

Nevertheless, results from research on expression differentiation suggest that even explicit training may not improve performance much. For example, recent work has demonstrated that human perceivers are poor at differentiating facial expressions of real from fake pain despite objective cues that differentiate the two (Bartlett, Littlewort, Frank, & Lee, 2014). Even with training, perceivers never exceeded a 60% accuracy level. By contrast, a machine learning algorithm using objectively measured facial action dynamics such as mouth opening reached an 85% accuracy level (Bartlett et al., 2014). It is possible that when human perceivers possess erroneous beliefs about the form of facial expressions, they fail to harness observable facial cues to make accurate judgments. In future work it will be interesting to examine if faces of winners and losers are better differentiated by machine learning algorithms.

One may wonder why perceivers fail to accurately recognize these emotions and whether such faces convey adaptive communicative signals (Fridlund, 1994). First, we note that the facial expressions in the current study differ from the typical expressions used in most studies because they were not posed. Our expressions, like many (if not most) everyday emotional expressions, diverged from the limited set of “basic” expressions (Ortony & Turner, 1990; Rozin, Lowery, & Ebert, 1994). Real life facial expressions include a great degree of variance and they do not necessarily follow the rules of prototypical facial expression sets. While one may argue that our faces are the exception because they were expressed in extreme situations, isolated spontaneous faces are difficult to decipher even when emotions are more subtle (Motley & Camden, 1988).

While the current study focused on valence discrimination, portrayals of emotions while winning or losing a high-stakes game may resemble those displayed during physical conflict. Two candidate emotions come to mind in this context: pride (Tracy & Matsumoto, 2008; Tracy & Robins, 2004) and triumph (Matsumoto & Hwang, 2012). Both emotional expressions have been hypothesized as vehicles for drawing attention to the successful individual and alerting others to accept the dominant status of the expresser. While these interpretations appear reasonable, our sample of faces shows a high degree of variance within both winners and losers. For example, smiles are considered an essential component of the pride display, yet only a minority of our faces portrayed smiles.

In fact, in both displays of pride and triumph, the isolated face is highly ambiguous and successful recognition of these emotions relies heavily on contextual cues. In a recent comprehensive review, Wieser and Brosch (2012) outline several types of contextual cues that play a role in facial expression recognition. Specifically they differentiate between within-sender features such as body language and external features such as scene information. Both these cues seem critical in the case of pride (Tracy & Prehn, 2011) and triumph (Matsumoto & Hwang, 2012). Pride and triumph both rely heavily on body language and both are influenced by contextual scene information such as the clothing of the expresser (Matsumoto & Hwang, 2012; Shariff, Tracy, & Markusoff, 2012). In fact, an isolated facial expression of triumph can easily be mistaken for a negative pain expression when planted on the body of a person undergoing a painful piercing procedure (Aviezer, Trope, & Todorov, 2012).

Rather than view these findings as a limitation of the face, it is important to recall that in real life conditions, reliance on contextual information while reading affective information into facial expressions is the rule, not the exception (Hassin, Aviezer, & Bentin, 2013; Barrett & Kensinger, 2010; Carroll & Russell, 1996; Lindquist, Barrett, Bliss-Moreau, & Russell, 2006). Relevant to the case of triumph, contextual cues in the body language and gestures of winners and losers are accurately recognized by perceivers and help shape the emotions recognized from the face (Aviezer et al., 2012). Indeed, previous work has shown that even prototypical, basic-emotion face expressions may be strongly influenced by affective body cues, and this effect is likely accentuated when the faces are highly ambiguous (Aviezer et al., 2008a; Aviezer et al., 2008b).

Finally, it is important to remember that while this study turned to real-life stimuli, these represent a very specific situation, namely that of winning and losing in professional tennis matches. One intriguing possibility is that the production of facial expressions is itself highly dependent on context. That is, the facial movements of positive and negative valence may depend on the specific circumstances of the situation. For example, tennis matches may have implicit rules and social norms governing how expressive winners and losers should be. Future work may consider examining the differences between winners and losers in other situations (e.g., reality TV singing contests, beauty contests etc.).

To summarize, an objective analysis of intense faces indicates differences in muscle activity as a function of situational valence. Nevertheless, perceivers are unable to utilize these cues to accurately perceive valence. They remain consistent in their indiscriminate ratings despite information provided to them. Hence, everyday emotion recognition appears to rely on contextual cues that enable accurate judgments of facial expressions.

Acknowledgements

This work was supported by an Israel Science Foundation [ISF#1140/13] grant to Hillel Aviezer and by NIH (1R01GM105004) and NSF (1052736) grants to Daniel Messinger.

References

- Ambadar Z, Cohn JF, Reed LI. All Smiles are Not Created Equal: Morphology and Timing of Smiles Perceived as Amused, Polite, and Embarrassed/Nervous. Journal of Nonverbal Behavior. 2009;33(1):17–34. doi: 10.1007/s10919-008-0059-5. doi: 10.1007/s10919-008-0059-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aviezer H, Hassin RR, Bentin S, Trope Y. Putting facial expressions into context. In: Ambady N, Skowronski J, editors. First Impressions. Guilford Press; New York: 2008a. pp. 255–286. [Google Scholar]

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008b;19(7):724–732. doi: 10.1111/j.1467-9280.2008.02148.x. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012;338(6111):1225–1229. doi: 10.1126/science.1224313. [DOI] [PubMed] [Google Scholar]

- Barrett LF, Kensinger EA. Context is routinely encoded during emotion perception. Psychological Science. 2010;21(4):595–599. doi: 10.1177/0956797610363547. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11(8):327–332. doi: 10.1016/j.tics.2007.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bartlett MS, Littlewort GC, Frank MG, Lee K. Automatic Decoding of Facial Movements Reveals Deceptive Pain Expressions. Current Biology. 2014;24(7):738–743. doi: 10.1016/j.cub.2014.02.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26(2):527–551. doi: 10.1037//0096-1523.26.2.527. [DOI] [PubMed] [Google Scholar]

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70(2):205–218. doi: 10.1037//0022-3514.70.2.205. doi: 10.1037/0022-3514.70.2.205. [DOI] [PubMed] [Google Scholar]

- Cole PM. Children's spontaneous control of facial expression. Child Development. 1986;57(6):1309–1321. [Google Scholar]

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48(4):384–392. doi: 10.1037//0003-066x.48.4.384. [DOI] [PubMed] [Google Scholar]

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto, CA: 1976.

- Ekman P, Friesen WV, Ancoli S. Facial signs of emotional experience. Journal of Personality and Social Psychology. 1980;39(6):1125.

- Ekman P, Friesen WV, Hager JC. Facial Action Coding System Investigator's Guide. A Human Face; Salt Lake City, UT: 2002.

- Etcoff NL, Magee JJ. Categorical perception of facial expressions. Cognition. 1992;44(3):227–240. doi: 10.1016/0010-0277(92)90002-y.

- Fernández-Dols J-M, Carrera P, Crivelli C. Facial behavior while experiencing sexual excitement. Journal of Nonverbal Behavior. 2011;35(1):63–71.

- Fridlund AJ. Human facial expression: An evolutionary view. Academic Press; San Diego, CA: 1994.

- Fogel A, Nelson-Goens GC, Hsu H-C, Shapiro AF. Do different infant smiles reflect different positive emotions? Social Development. 2000;9(4):497–520.

- Fox N, Davidson RJ. Patterns of brain electrical activity during facial signs of emotion in 10 month old infants. Developmental Psychology. 1988;24(2):230–236.

- Gross JJ. Emotion regulation in adulthood: Timing is everything. Current Directions in Psychological Science. 2001;10(6):214–219.

- Gross JJ, Levenson RW. Emotional suppression: physiology, self-report, and expressive behavior. Journal of Personality and Social Psychology. 1993;64(6):970. doi: 10.1037//0022-3514.64.6.970.

- Hassin RR, Aviezer H, Bentin S. Inherently ambiguous: Facial expressions of emotions, in context. Emotion Review. 2013;5(1):60–65.

- Hess U, Blairy S, Kleck RE. The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior. 1997;21(4):241–257. doi: 10.1023/a:1024952730333.

- Kohler CG, Turner T, Stolar NM, Bilker WB, Brensinger CM, Gur RE, Gur RC. Differences in facial expressions of four universal emotions. Psychiatry Research. 2004;128(3):235–244. doi: 10.1016/j.psychres.2004.07.003.

- Lindquist KA, Barrett LF, Bliss-Moreau E, Russell JA. Language and the perception of emotion. Emotion. 2006;6(1):125–138. doi: 10.1037/1528-3542.6.1.125.

- Matsumoto D, Ekman P. Japanese and Caucasian facial expressions of emotion (JACFEE). Intercultural and Emotion Research Laboratory, Department of Psychology, San Francisco State University; San Francisco, CA: 1988.

- Matsumoto D, Hwang HS. Evidence for a nonverbal expression of triumph. Evolution and Human Behavior. 2012;33(5):520–529.

- Mattson WI, Cohn JF, Mahoor MH, Gangi DN, Messinger DS. Darwin’s Duchenne: Eye Constriction during Infant Joy and Distress. PLoS ONE. 2013;8(11):e80161. doi: 10.1371/journal.pone.0080161.

- Messinger D. Positive and negative: Infant facial expressions and emotions. Current Directions in Psychological Science. 2002;11(1):1–6.

- Messinger D, Cassel T, Acosta S, Ambadar Z, Cohn J. Infant Smiling Dynamics and Perceived Positive Emotion. Journal of Nonverbal Behavior. 2008;32:133–155. doi: 10.1007/s10919-008-0048-8.

- Messinger D, Fogel A, Dickson K. All smiles are positive, but some smiles are more positive than others. Developmental Psychology. 2001;37(5):642–653.

- Messinger D, Fogel A. The interactive development of social smiling. Advances in Child Development and Behavior. 2007;35:328–366. doi: 10.1016/b978-0-12-009735-7.50014-1.

- Messinger DS, Mattson WI, Mahoor MH, Cohn JF. The eyes have it: Making positive expressions more positive and negative expressions more negative. Emotion. 2012;12(3):430. doi: 10.1037/a0026498.

- Motley MT, Camden CT. Facial expression of emotion: A comparison of posed expressions versus spontaneous expressions in an interpersonal communication setting. Western Journal of Communication. 1988;52(1):1–22.

- Ortony A, Turner TJ. What's basic about basic emotions? Psychological Review. 1990;97(3):315. doi: 10.1037/0033-295x.97.3.315.

- Rozin P, Lowery L, Ebert R. Varieties of disgust faces and the structure of disgust. Journal of Personality and Social Psychology. 1994;66(5):870. doi: 10.1037//0022-3514.66.5.870.

- Russell JA. Reading emotions from and into faces: Resurrecting a dimensional contextual perspective. In: Russell JA, Fernandez-Dols JM, editors. The psychology of facial expressions. Cambridge University Press; New York: 1997. pp. 295–320.

- Russell JA, Bullock M. Fuzzy concepts and the perception of emotion in facial expressions. Social Cognition. 1986;4(3):309–341.

- Schyns PG, Petro LS, Smith ML. Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology. 2007;17:1580–1585. doi: 10.1016/j.cub.2007.08.048.

- Shariff AF, Tracy JL, Markusoff JL. (Implicitly) Judging a Book by Its Cover: The Power of Pride and Shame Expressions in Shaping Judgments of Social Status. Personality and Social Psychology Bulletin. 2012;38(9):1178–1193. doi: 10.1177/0146167212446834.

- Shiota MN, Campos B, Keltner D. The faces of positive emotion. Annals of the New York Academy of Sciences. 2003;1000(1):296–299. doi: 10.1196/annals.1280.029.

- Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychological Science. 2005;16(3):184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Tobin RM, Graziano WG. The disappointing gift: Dispositional and situational moderators of emotional expressions. Journal of Experimental Child Psychology. 2011;110(2):227–240. doi: 10.1016/j.jecp.2011.02.010.

- Wieser MJ, Brosch T. Faces in context: a review and systematization of contextual influences on affective face processing. Frontiers in Psychology. 2012;3. doi: 10.3389/fpsyg.2012.00471.

- Wiggers M. Judgments of facial expressions of emotion predicted from facial behavior. Journal of Nonverbal Behavior. 1982;7(2):101–116. doi: 10.1007/BF00986872.

- Williams AC de C. Facial expression of pain: an evolutionary account. Behavioral and Brain Sciences. 2002;25(4):439–455. doi: 10.1017/s0140525x02000080.

- Young A, Perrett D, Calder A, Sprengelmeyer R, Ekman P. Facial expressions of emotion: Stimuli and tests (FEEST). Harcourt Assessment; San Antonio, TX: 2002.