Abstract

Context

The context for this study was the Adaptive Designs Advancing Promising Treatments Into Trials (ADAPT-IT) project, which aimed to incorporate flexible adaptive designs into pivotal clinical trials and to conduct an assessment of the trial development process. Little research provides guidance to academic institutions in planning adaptive trials.

Objectives

The purpose of this qualitative study was to explore the perspectives and experiences of stakeholders as they reflected on the interactive ADAPT-IT adaptive design development process, and to understand their perspectives on lessons learned about the design of the trials and the trial development process.

Materials and methods

We conducted semi-structured interviews with ten key stakeholders and made observations of the process. We used qualitative thematic text data analysis to reduce the data into themes about the ADAPT-IT project and adaptive clinical trials.

Results

The qualitative analysis revealed four themes: education of the project participants, how the process evolved with participant feedback, procedures that could enhance the development of other trials, and education of the broader research community.

Discussion and conclusions

While participants became more likely to consider flexible adaptive designs, additional education is needed to both understand the adaptive methodology and articulate it when planning trials.

Keywords: adaptive clinical trials, qualitative research, emergency medicine, neurological emergencies

1 Introduction

Randomized clinical trials are the gold-standard procedure for comparing the efficacy of medical treatments. Regulatory agencies, including the US Food and Drug Administration, have rigorous guidelines for the design and conduct of clinical trials. Clinical trials require a great deal of time and expense to complete, and their findings frequently do not help inform science or improve health because the results are negative or equivocal (1, 2). For example, in certain disease areas, particularly neurology, the pivotal trials that would lead to a new indication for a treatment (or a new medication approval) are neutral (i.e., show no significant treatment effect) over two thirds of the time, despite promising preliminary data from preclinical and early phase human trials (1). Despite these recurrently neutral results, the design of clinical trials has remained relatively unchanged. A fixed clinical trial is often set up to answer a very discrete question: does drug X work when given at dose Y to patient population Z (3, 4)? The precision with which X, Y, and Z are chosen for the definitive trials is often low, informed by small animal experiments or very preliminary human studies (5). Particularly for dose determination, the research community may have an opportunity to make better use of clinical trials to optimize the treatment regimen for a population (3). In certain clinical areas, particularly oncology, adaptive clinical trials (ACTs) have become increasingly common (6). ACTs use data that accumulate from patients within the trial to improve the performance of the study over time; for example, a preset algorithm can be used to assess dose response and more precisely select the treatment regimen most likely to succeed in a pivotal trial (7). Adaptive designs have primarily been used in earlier phase (Phase II) clinical trials, whereas ADAPT-IT focused on incorporating adaptive designs into pivotal, confirmatory trials.
ACTs have been increasingly adopted by private industry. Their use in academic, government-funded trials has been less common because few clinical trialists have access to the resources needed to plan and simulate more complicated designs before receiving a grant award for a trial (8).

There has been surprisingly little research providing guidance to academic institutions on designing adaptive trials. Previous work largely focuses on very large industry trials networks (e.g., the Duke Clinical Research Institute) (9) or pharmaceutical companies (10). One such resource is the Clinical Trials Transformation Initiative (CTTI), a public-private partnership founded by the US Food and Drug Administration (FDA) and Duke University to improve the quality and efficiency of clinical trials. CTTI examines and advances recommendations on topics such as quality, risk management in trials, large simple trials, and central IRBs, among many others (11). The existing literature contains reviews of adaptive designs (12), discussions of their potential benefits and drawbacks (13–15), and a description of adaptive sample size calculations (16). However, a gap remains in general resources and literature addressing the planning of adaptive trials in small to midsized academic groups that lack extensive resources. This article seeks to address this gap by discussing the process evaluation of the ADAPT-IT adaptive trial development project.

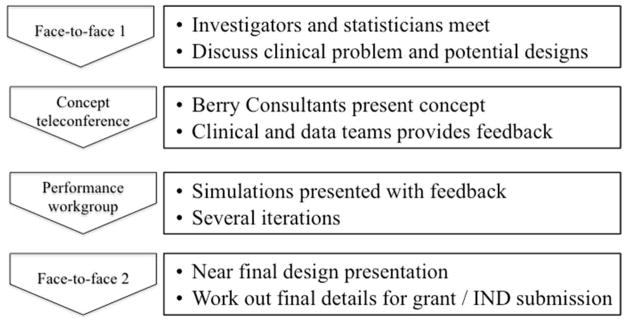

In 2010, the FDA and the Office of the Director of the National Institutes of Health (NIH) recognized the need for innovation in regulatory science and issued a joint call for proposals that could speed the discovery and approval of new treatments. At the same time, the investigators of an NIH-funded research network, the Neurological Emergencies Treatment Trials (NETT) network, which focuses on conducting pivotal trials in neurological emergencies such as stroke and traumatic brain injury, believed that ACTs might provide a mechanism to improve trial design in this field. The Adaptive Designs Advancing Promising Treatments Into Trials (ADAPT-IT) project was funded through a cooperative award from the FDA and NIH (17). The first aim of this project was to incorporate flexible adaptive designs into confirmatory or pivotal clinical trials, because these designs had not been commonly used or well understood in confirmatory (Phase III) trial design. The other major aim of ADAPT-IT was to conduct a mixed methods assessment of the trial development process, including interactions among the academic researchers, FDA, NIH, and a private statistical consulting group specializing in the design of industry-funded drug and device trials. Through this second aim, the investigators sought to understand which processes best facilitated the design of clinical trials and the decisions about which adaptations to include in NETT network trials. Prior to this project, very little observational data existed on the planning processes for adaptive designs within academic clinical trials networks, although barriers to their use in academia have been described (10). For each trial, the ADAPT-IT process involved four steps (see Figure 1). The first was an initial face-to-face meeting among investigators and statisticians to discuss clinical problems and potential designs.
The second was a concept teleconference in which the consulting statisticians presented a concept and the clinician researchers and academic biostatisticians provided feedback. The third was a performance workgroup in which the consulting statisticians presented several iterations of simulations and received feedback. The fourth was a face-to-face meeting involving a near-final presentation of the adaptive trial design and discussion of the final details for submission.

Figure 1.

The Four-Step ADAPT-IT Process

As part of Aim 2, we examined the literature on decision making in organizations for two reasons. First, a major aim of the study focused on a process involving a group of individuals. Second, observations at early meetings revealed considerable dissension regarding proposals for designing trials. Previous work on organizational decisions suggests that dissent within groups can improve the quality of decisions (18). Dissent is defined as interactions within a group in which one or more individuals explicitly disagree with the status quo, particularly when that perspective is contrary to the expectations of the organization (19). The existing literature indicates that openness to different ways of thinking and the free expression of differing opinions promote more open discussion. Such discussion, including working through conflicts, may generate better ideas (20, 21). We incorporated the organizational dissent conceptual framework into our interpretation of the ADAPT-IT project to understand the contentious debates that emerged about the utility of flexible adaptive designs versus fixed clinical trials. Organizational dissent is, specifically, the response to disagreements or contradictory opinions about policies and practices that arise in an organization (22).

During the ADAPT-IT project (Aim 1), the investigators designed and simulated five designs for multi-center trials in stroke (glycemic control), status epilepticus, spinal cord injury, hypoxic encephalopathy following cardiac arrest, and a separate stroke project (neuroprotection). We have previously summarized the trial development process (17). One aspect of the process evaluation for Aim 2 was systematically interviewing key participants in ADAPT-IT at the completion of the project. The interviewees included principal investigators of the developed trials, project statisticians, and representatives of the NIH. The interviews were designed to collect information about which processes and end products from ADAPT-IT participants deemed helpful, and to learn how best to extend these lessons into future trial planning in academic research. Therefore, the purpose of this qualitative study was to explore the perspectives and experiences of stakeholders as they reflected on the interactive ADAPT-IT adaptive design development process for the five trials, and to understand their perspectives on lessons learned about the design of the trials and the trial development process.

2 Methods

2.1 Study Design

This qualitative study was a component of a larger convergent mixed methods study of ACT designs (23). A qualitative approach conducted at the completion of trial development was best suited to explore nuanced topics, such as participants’ experiences with the ADAPT-IT project, their reflections about the process, and their views concerning the ACT design development process. Data sources included interviews with stakeholders in the ADAPT-IT project and observational notes taken during the project. The University of Michigan Institutional Review Board determined that this project was exempt from Institutional Review Board oversight.

2.2 Selection of Participants

We used a maximum variation, purposeful sampling procedure (24) to select the principal investigators (PIs) and participants in leadership roles, and we invited everyone who was eligible. Specifically, to ensure different perspectives, the recruitment pool consisted of a balanced combination of statisticians, clinician researchers, and representatives from the FDA and NIH. These key stakeholders were involved in the design and evaluation of the five clinical trials from the NETT network that participated in the ADAPT-IT project. They were asked to participate in individual interviews once the trial proposal development process was completed. Of the 12 stakeholders invited, ten agreed to participate: one PI from each of the five trials associated with the study, two consultant statisticians, two academic statisticians, and one regulator from the NIH. Individuals were sampled based on their unique position to provide rich information. We selected the PIs because they ultimately decided which trial design would be used. An early-career and a senior member of each statistical team were chosen. Because the statistical aspects of ACTs are a very important component, it was imperative to obtain the perspectives of both junior and senior statisticians to fully understand their views of the design process. Members of the FDA and the NIH were asked to participate because they play a key role in the funding and approval of clinical trials; these participants also played a key role in the ADAPT-IT study. Individuals did not receive compensation for participating in the interview. Table 1 describes the key stakeholders discussed throughout the paper, along with key terms.

Table 1.

Definitions of stakeholders and trial terms

| Term | Definition |
|---|---|
| People | |
| Consultant statistician | A group of statisticians with expertise in the design and implementation of adaptive clinical trials, who primarily work for private industry, drug and device manufacturers |
| Academic biostatistician | Statisticians with a primary appointment with a university or academic health center, with most clinical trial experience drawn from government funded research and less experience with adaptive trials |
| Regulator | Representatives from government (NIH or FDA), who are involved in the planning or approval of clinical trials |
| Clinician researcher | A clinically trained researcher who conducts trials on humans with disease; includes clinical trialists, academic clinicians, and clinical trial experts |
| Principal investigator | The subset of clinical trialists who were the leaders of individual trial initiatives |
| Project participants | Collectively, all of the above groups who participated in ADAPT-IT with the goal of developing clinical trials |
| Process evaluation team | Researchers with a background in mixed methods research who were focused on collecting, analyzing and synthesizing interview, survey, and process evaluation data |
| Research community | The broader group of scientists who are engaged in clinical trials conceived by academic institutions and funded by the government |
| Trial terminology | |
| Adaptive clinical trial (ACT) | A clinical trial that uses data that accumulates from patients within the trial to help improve the performance of the study over time |
| Flexible adaptive design | A clinical trial that has a number of potential, pre-specified adjustments that are triggered by the ongoing outcomes of accruing patients in the trial |
| Fixed clinical trial | A clinical trial with few or no potential pre-specified adjustments, usually limited to an interim analysis for efficacy/futility or a blinded sample size re-estimation |
| Industry trials | A clinical trial of large scale, typically conducted through the drug development enterprise |

2.3 Data Collection

Between June and August 2013, an investigator (L.L.) trained in qualitative interviewing conducted telephone interviews using a semi-structured interview protocol. The protocol consisted of open-ended questions about the ADAPT-IT development process (see Appendix). Open-ended questions allow participants to respond freely within a defined topical area. All interviews were recorded and transcribed by a professional transcriptionist. We imported all transcripts into MAXQDA (Version 11, Verbi GmbH, 2014), a computer-assisted qualitative data analysis software package. In addition to the interviews, the process evaluation team (W.M., M.F., and L.L.) recorded observations during face-to-face ADAPT-IT meetings. The purpose of observing was to gather data on participant interactions and behaviors during the ACT development process.

2.4 Data Analysis

We used a qualitative thematic text data analysis approach (25), whereby the data are reduced into overall themes that describe a phenomenon. Themes were not set a priori; rather, they emerged through the analysis. After reading through the entire qualitative database, we began a lean coding approach (26) by identifying segments of text and assigning each a code label. This step led to an initial codebook. After coding all transcripts, we refined the codebook by clarifying each code and combining redundant codes. Next, we examined the relationships among codes in order to group them into four overarching themes about the ADAPT-IT project and ACTs generally. In the analysis, we examined the relative frequency of codes to guide our interpretation, because frequencies reflect more or less common opinions among project participants. In this paper, we use the following reporting conventions for consistency: “few” refers to two or three participants, “multiple” refers to four or five, and “many” refers to six or more.
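The reporting convention amounts to a simple mapping from the number of participants who expressed a code to a frequency term. The following is a minimal sketch of that mapping, assuming a sample of ten interviewees; the function name `frequency_label` is hypothetical, the counts in the example are illustrative rather than the study's tallies, and the label returned for a single participant is an assumption because the convention does not define one.

```python
def frequency_label(n: int, sample_size: int = 10) -> str:
    """Return the reporting term for a code expressed by n participants.

    Sketch of the stated convention: "few" = 2-3, "multiple" = 4-5,
    "many" = 6 or more (out of a sample of ten interviewees).
    """
    if not 1 <= n <= sample_size:
        raise ValueError(f"n must be between 1 and {sample_size}, got {n}")
    if n >= 6:
        return "many"
    if n >= 4:
        return "multiple"
    if n >= 2:
        return "few"
    # Assumption: the convention does not name a term for one participant.
    return "a participant"


# Illustrative counts only (hypothetical codes, not study data).
counts = {"code A": 7, "code B": 3, "code C": 5}
for code, n in counts.items():
    print(f"{code}: {frequency_label(n)}")
```

Raising an error outside the range 1 to 10 is a design choice for the sketch: a count larger than the sample signals a tallying mistake rather than a very common opinion.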

Two primary strategies supported validation of the qualitative findings. First, we used multiple data sources (27); specifically, we triangulated the interview data with observations of the ADAPT-IT project. Second, we employed member checking, which consisted of sharing a summary of the themes with participants and soliciting feedback about whether the account was accurate, what was missing, and what the participants disagreed with (26). The individuals who responded to the member checking reported that the findings were accurate and agreed with the concepts represented. One participant felt that one concept was not emphasized in the results sent out; we incorporated it after corroborating it with the observational data.

We applied the concept of saturation to assess the adequacy and appropriateness of our sample. Saturation is the point at which the interviews provide overlapping evidence within each theme. Saturated data do not have to be identical but must reflect a common essence (28). Saturation was evident before the ten interviews were completed.

3 Results

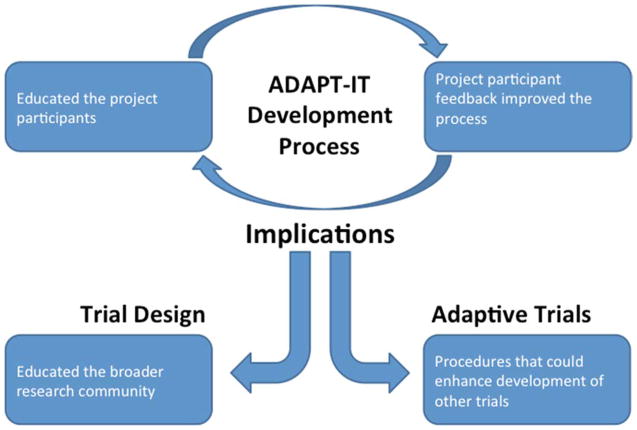

The qualitative analysis revealed four themes regarding the ADAPT-IT design process: educating the project participants, improving the process, procedures that could enhance the development of future trials, and educating the broader research community (see Figure 2). The following section describes the themes in detail supported with illustrative comments from the qualitative data.

Figure 2.

Concept map of ADAPT-IT PI Interview themes

3.1 ADAPT-IT Educated Project Participants about Adaptive Designs

The ADAPT-IT design development process served an important role by educating project participants who were unfamiliar with adaptive designs (see Table 2). Many participants explained that meetings in the ADAPT-IT process provided a beneficial, collegial, albeit at times contentious, “open forum” to discuss ideas and gain feedback about designs. Several features of the ADAPT-IT process emerged. First, it brought together clinician researchers, study section members, biostatisticians, and NIH and FDA representatives. Many participants felt that the NIH and FDA presence played a critical role: their inclusion signaled endorsement of adaptive designs and brought “trust and understanding.” Second, many participants reported that they learned by seeing the results of simulations, which further shifted their views on the importance of simulations. Although simulations were new to a few participants, they provided a mechanism to explain adaptive elements to clinician researchers and a language for communicating with academic statisticians. Third, the process provided a forum for productive interaction between statisticians and clinician researchers. Many participants cited the value of statisticians engaging in the design of simulations and understanding the research questions, particularly when the statistician is experienced at working with clinicians. As one participant noted, “a statistician can bring more than just being good at math once you’re conversing in the clinical area.” A few participants, however, raised concerns about the extent to which most biostatisticians will be comfortable with adaptive designs. A final feature of ADAPT-IT was the efficiency gained by working “offline” on designs between meetings.
A few participants mentioned the productivity of the design development process, explaining that their study group would reach consensus during calls and meetings, divide tasks for the actual grant writing, and then reconvene every few weeks. Contentious points, such as disagreements among statisticians, could be, and were, addressed offline between meetings.

Table 2.

The ADAPT-IT adaptive design development process helped educate the project participants

| Code | Description | Sample Excerpts from Interview |
|---|---|---|
| FDA/NIH representative presence | Project participants valued having the FDA and NIH representative, giving an endorsement of adaptive design. | “It just felt like we were very embedded.” (Consultant Statistician) “…the huge one [influence] was because the FDA and the NIH were a part of this. I think it brought very huge amounts of trust, understanding, teaching with regulators and funders. I thought that was probably the biggest impact this had at the end of the day.” (Consultant Statistician) |
| Discussing ideas | Project participants described the benefits of meetings as an open forum to explore ideas. | “…an open forum for discussing ideas” (Progesterone Clinician) “…great opportunities for the investigators to get together and talk about their trial and move forward, so especially for this meeting because it definitely led to quite a bit of detailed thinking about that trial design” (ESETT Clinician) |
| Interact with the statistician | Interfacing with the statistician, particularly one who interacts well with clinicians, is useful. | “…statisticians should be part of the team…a statistician can bring more than just being good at math once you’re conversing in the clinical area.” “we are going to have much more success if the statistical teams and the clinical research teams are speaking some of the same language” (Consultant Statistician) |
| Responsive and collegial | ADAPT-IT meetings were responsive, collegial, and welcomed ideas. | “I was amidst and amongst the experts in the field and at no time did I ever feel like my ideas, thoughts, questions were treated with anything other than respect, and I felt like there was great responsiveness, and so I thought that was really some of the best aspects of it.” (Progesterone Clinician) |
| Simulation results | Seeing the results of a simulation was helpful. | “It made me more knowledgeable about the – mostly about the simulation. That was really the critical element I can tell you that was new to us, because we’re familiar in general with Adaptive Design, but the actual execution of it…lies with…simulation.” (ARCTIC Clinician) “I always had this inclination and thought that simulations were important. It raised my view on the importance of simulation.” (Progesterone Clinician) |
| Worked offline | Individuals noted working "offline" between study group meetings to do grant writing and work on the project. | “I thought it was very efficient, every two or three weeks to get on the phone and do that. We didn’t do a lot of online work.” (ARCTIC Clinician) |

3.2 Project Participant Feedback Improved the ADAPT-IT Process

The project participants provided valuable feedback that refined the adaptive design development process of ADAPT-IT (see Table 3). Overall, the process evolved over time as the process evaluation team incorporated ideas based on observations of the process and brought suggestions back into the ADAPT-IT development meetings. These suggestions included the need for more background information about the overall protocol, statistical analysis plans, and clinical conditions (e.g., a review paper on status epilepticus) when entering trial development meetings. In addition, a few participants reported that adaptive methodology is complex to learn and recommended specific strategies such as developing “analogies, models, or visual depictions.” During the meetings, face-to-face group dynamics influenced the sessions. Many participants commented on the challenge of navigating difficult group dynamics, comments we corroborated through observational data. At times, working in a large group was a polarized and contentious process as disagreements arose. For example, academic biostatisticians did not always agree with the adaptive design consultants about preferred designs. Over time, the dynamics evolved as trust emerged. Both the participants’ comments and the investigators’ observational notes confirm that subsequent meetings were “more open” and that the process provided a forum to discuss ideas. The process also evolved in response to the need to maintain momentum between face-to-face meetings and to keep members of the primary trial teams involved. This was deemed particularly important when individuals were unable to attend all of the ADAPT-IT development meetings they wanted to attend.

Table 3.

Project participant feedback improved the ADAPT-IT adaptive design development process

| Code | Description | Sample excerpt |
|---|---|---|
| Background | Participants wanted more background information about the project discussed. | “Sometimes it would have been nice to have like more background, either like getting MUSC slides, the stats, plans or a little more background to the extent it’s available clinically like a review paper on status epileptic because I’d never heard of that before the ESETT trial” (Consultant Statistician) |
| Group dynamics | The face-to-face meetings involved a large group. Some suggested beginning with a smaller group to progress more quickly. | “…probably some technique that has people recognize, acknowledge their positions ahead of time, and – I don’t know. I think there’s probably some means of facilitating those conflicts that would do it better than the way we just sort of stumbled through them.” (ADAPT-IT Clinician) “I think that we probably could have made more headway, more quickly, with a smaller group then taken it back to a larger group to sort of confirm.” (ARCTIC Clinician) |
| Translate to clinicians | Participants talked about the need to translate adaptive designs to clinicians. Suggestions included analogies, models, and visual depictions. | “There needs to be a better way to translate the methodology into a greater understanding.” (Progesterone Clinician) “There needs to be simplified analogies, or models, or something visual depictions somehow that needs to be brought to the mainstream so that people will be more accepting.” (Progesterone Clinician) |
| Maintain momentum | Momentum is needed to continue to include members of the primary trial between the ADAPT-IT meetings. Some gaps were noted. | “I think if there was a mechanism if things were moving forward to continue to include the members of the primary trial, but that would have been more helpful.” (SHINE Clinician) “…the last one I think had a lot of trouble with forward momentum. I think it was also the hardest scientific challenge for the adaptive science.” (ADAPT-IT Clinician) |

3.3 ADAPT-IT Procedures Could Enhance the Development of Other Trials

A major implication of the ADAPT-IT adaptive design development process is that it illustrates procedures that could enhance trial development in general (see Table 4). Participants provided examples of what they learned and how they anticipate approaching clinical trials in the future. Many participants described how the ADAPT-IT process met their needs, including networking with other investigators and gaining a “better understanding of federally funded academic trials,” in addition to learning perspectives on adaptive designs and procedures. It also “moved the field forward” by educating key individuals about adaptive designs. Following the design development process, a majority of participants noted that they had added adaptive components to their study designs; ADAPT-IT provided planning resources to add these components while writing grants. The design changes were substantial. For instance, participants discussed redesigning a trial of a cooling treatment for traumatic spinal cord injuries that had previously been submitted to the NIH for peer review but was not funded. Using the ADAPT-IT development process, the investigators changed from a two-arm trial to a four-arm trial with adaptive randomization. They anticipate that the changes will lead to a more precise understanding because they will compare three different durations of cooling to a control arm. That trial also includes an adaptive component in which the researchers could reduce the time-since-injury window for trial entry if initial data appear promising. Thus, the research question evolved from whether a cooling treatment works to whether its effect is differential as a function of time since injury, a question the original design could not have addressed.
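The redesigned trial's adaptive randomization is described here only at a high level. As a generic illustration, and not the algorithm the ADAPT-IT consultants actually used, the sketch below shows one common form of Bayesian response-adaptive randomization for a four-arm trial with a binary outcome. Each arm's response rate gets a uniform Beta(1, 1) prior, the posterior probability that each active arm is best is estimated by Monte Carlo, and the allocation for the next patients is split among active arms in proportion to those probabilities while the control arm keeps a fixed share. The arm names, the 25% control share, and the interim counts are all hypothetical.

```python
import random


def prob_best(successes, failures, draws=10_000, seed=0):
    """Monte Carlo estimate of P(arm has the highest response rate).

    Each arm's response rate has a Beta(1 + successes, 1 + failures)
    posterior, i.e. a uniform Beta(1, 1) prior updated with binary outcomes.
    """
    rng = random.Random(seed)
    wins = dict.fromkeys(successes, 0)
    for _ in range(draws):
        sampled = {arm: rng.betavariate(1 + successes[arm], 1 + failures[arm])
                   for arm in successes}
        wins[max(sampled, key=sampled.get)] += 1
    return {arm: count / draws for arm, count in wins.items()}


def allocation_probs(successes, failures, control="control", control_share=0.25):
    """Randomization probabilities for the next block of patients.

    The control arm keeps a fixed (hypothetical) share; active arms split the
    rest in proportion to their posterior probability of being the best active arm.
    """
    active = [arm for arm in successes if arm != control]
    p_best = prob_best({a: successes[a] for a in active},
                       {a: failures[a] for a in active})
    probs = {control: control_share}
    for arm in active:
        probs[arm] = (1 - control_share) * p_best[arm]
    return probs


# Hypothetical interim data: good-outcome counts per arm (not study data).
successes = {"control": 10, "short": 10, "medium": 20, "long": 12}
failures = {"control": 30, "short": 30, "medium": 20, "long": 28}
for arm, p in allocation_probs(successes, failures).items():
    print(f"{arm}: {p:.2f}")
```

Designs of this kind often add a burn-in period of fixed randomization and a minimum allocation per arm before adapting; those refinements are omitted to keep the sketch short.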

Table 4.

The ADAPT-IT Adaptive Design Development Process Illustrates Procedures that Could Enhance the Development of Other Trials

| Code | Description | Sample excerpt |
|---|---|---|
| Needs met | The ADAPT-IT process met needs and worked well with grants. | “…well-organized, thoughtful attempt at introducing a new concept to people that were unfamiliar to it.” (Progesterone Clinician) “It’s enabled me to think about how to design the clinical trial– our goal is very specific and very pragmatic.” (Progesterone Clinician) |
| Value of ADAPT-IT | Participants reported the value of ADAPT-IT in incorporating adaptive elements into trials. | “We had some adaptive components in our design to start with, which I loved and then we - - again, we added slightly more after we met with the adaptive team.” (SHINE Clinician) “So adaptive was the integral part of this because the concepts were there, but without the tools I think that it would have been much harder to try to accomplish these goals.” (ARCTIC Clinician) |
| Design/Planning | Individuals noted the design was strengthened in their grant. | “Because we don’t have any planning money so this actually served as a planning one for us in terms of design, otherwise we had a different design to begin with which had some issues with it so we could use that, especially the first meeting in July.” (ESETT Clinician) |
| Understand research problem | Participants described many ways the ADAPT-IT development process allowed them to understand the research problem and address research questions better. | “It has the chance to bridge the gap in dealing with heterogeneity of disease and the fact that human beings respond differently than lab animals in terms of dose, duration, and effect.” (Progesterone Clinician) “So in a way the research question went from does cooling work to is it differential as a function of time since injury.” [Referring to ICECAP] (Consultant Statistician) |
| Adaptive trials future | Individuals reported they were more open to adaptive trials and likely to use them again. | “I became more interested and intrigued by the idea for the adaptive design.” (ESETT Clinician) “I make more of a point of talking about adaptive design again, on the national stage and things when I am talking about SHINE.” (SHINE Clinician) |
| General approach to clinical trials | Participants described how the ADAPT-IT project affected their general approach to clinical trials. | “Hopefully, it will show that is does have the advantages that I’m hoping it does have and if it does I think it will change the practice of clinical trial design especially when there is competitive efficacy issues.” (ESETT Clinician) “I don’t know if it did that much to tell you the truth. I don’t know if it’s changed the way I approach trials and maybe…it’s because I also teach clinical trials so I’m always combing the literature to make sure my class is getting the most up to date information and things like that.” (NETT Statistician) |
| Opened opportunities | The ADAPT-IT development process opened up opportunities to explore the research question. | “I think that if we had not started long this road with the seamless design to begin with we might just have dropped the project all together, or launched a phase one study or something like that. So, yeah, it opened up our opportunities to explore the fundamental research question.” (ARCTIC Clinician) |
| Efficiency | Comments related to the efficiency of an adaptive approach. | “If you do a lot of clinical trials you kind of have an idea of the allocation of efforts, but when you start doing adaptive design do those efforts have to increase? Probably, but to what amount, I don’t know if anyone has a good handle on that.” (NETT Statistician) “The futile things can be identified quickly and be stopped and that we can do more efficient approaches to figure out which treatments or which doses or which timings or whatever should go forward and which ones shouldn’t” (SHINE Clinician) |

In addition, many participants reported increased interest in planning adaptive trials in future clinical studies (e.g., “I am much more inclined to be open to [adaptive trials]”). A few participants mentioned extending the design development process into other clinical areas of neurology, such as Parkinson’s disease. Many participants endorsed the perspective that ADAPT-IT opened opportunities to employ alternative methodologies. Beyond considering adaptive designs more often, one participant reported mentoring junior scholars about adaptive designs, because these scholars have had little exposure to the approach. This participant’s mentoring focuses on the advantages of adaptive designs (e.g., enhanced specificity and sensitivity to signals of whether a drug is biologically active) and encourages mentees to learn adaptive approaches as part of their career development.

Although not all were convinced, most participants thought that adaptive designs could increase the efficiency of trials. A perspective shared by multiple participants was that adaptive trials allow researchers to answer secondary questions within a single study. For example, one participant emphasized that although a fixed clinical trial could be effective, an adaptive design can reduce the overall cost and resources required to thoroughly address a clinical research question. Another participant noted that adaptive designs give researchers a tool both to identify successful treatments that should proceed and to quickly identify futile treatments that should be stopped. As one participant remarked, a single study can then address multiple dimensions, including treatment, dose, and timing. A few participants questioned this assumption, suggesting that modifying a trial to use an adaptive design could add time and cost. In counterpoint, one participant stressed the need for more pragmatic information about how to improve efficiency when conducting adaptive trials (e.g., “fewer numbers of patients, less expensive, and less time”). This perspective reflects the need for advocates of adaptive trials to be clear about costs of all types and to continue educating the clinical research community about adaptive designs, with a focus on practical implementation strategies. These examples illustrate the ongoing debate about the applications and utility of adaptive versus fixed trial designs.

3.4 ADAPT-IT Identified the Need to Educate the Broader Research Community About Adaptive Designs

The ADAPT-IT adaptive design development process revealed implications for educating the broader research community about adaptive designs. Both the participant interviews and the investigators’ observations indicate a need to improve understanding of adaptive designs and of when they can add value (see Table 5). In addition, multiple participants pondered, “Are review committees ready for adaptive designs?” These comments concerned the challenge of convincing review committees of the value of adaptive designs. One participant worried that cautious reviewers would prefer to “invest in something small” before supporting a large adaptive trial.

Table 5.

The implications for educating the broader research community

| Code | Description | Sample excerpt |
|---|---|---|
| Difficult to articulate | Participants noted it is difficult to “articulate what adaptive design represents.” | “Too many people out there, including our study section don’t really understand adaptive design.” (SHINE Clinician) “I’m going to struggle with a twelve-page limit to a protocol that is reviewed by the NIH that can only have maybe two or three pages about the design…need to be able to explain a number of things including the Adaptive Design, and which we do less than a page, maybe half a page to describe the Adaptive Design.” (Progesterone Clinician) |
| Are review committees ready? | Participants wondered whether review committees at NIH are ready for adaptive designs. | “I do worry that review committees at the NIH are still not ready for this.” (Progesterone Clinician) [Regarding ICECAP] “It’s hard to know from what we’ve heard of the reviews so far, but I actually think that despite it being less fundamentally different I still think that review didn’t get it.” (ADAPT-IT Clinician) |
| Challenges in applying adaptive designs | Participants reported it was important to acknowledge and discuss the challenges of applying adaptive methodology appropriately. | “I think it’s an option for some disease areas, some more so than others but I’m not quite sure I’m on board yet.” (NETT Statistician) “The advantage of the traditional design is when there are more questions to be answered and something has to be changed rudimentarily, the control group or the site, that isn’t answered by the adaptive process.” (NIH Representative) “There are benefits to adaptive design but I think there are some drawbacks, and let’s discuss those drawbacks rather than just push through based on only the good things.” (NETT Statistician 2) |
| Practical implementation | Participants discussed the need for information about the practical, day-to-day aspects of operating an adaptive trial. | “There’s some complexity in the implementation of this type of trial at the local level because of the varying doses, durations, and the way the Adaptive Design requires – it will change and how that affects the clinicians who are enrolling patients, the coordinators, and the pharmacy at the local level.” (NIH Representative) “Often the modification sounded efficient but in reality it would take longer and more money.” (Progesterone Clinician) |
| Ongoing training resources needed | Additional training resources are needed to understand adaptive designs and the use of Bayesian techniques in clinical trial design. | “In terms of the goal of getting all of the parties and the stakeholders in the same room and hearing one another speak about it, that part was great. As far as addressing adaptive design, I don’t know if that would totally meet the adaptive project and maybe that wasn’t the primary goal of the adaptive project.” (NETT Statistician) “…says well the model learns from it, but I’m not quite sure how that happens, so that’s the part that’s still a little mystery to me.” (NETT Statistician) “There are lots of places you can send your trainees to get a semester of medical epidemiology but there is no place to send them to get a semester of introduction to adaptive design in clinical trials.” (SHINE Clinician) |

Many participants discussed the importance of acknowledging and openly discussing the challenges of applying adaptive methodology appropriately. While participants consistently saw strong value in the methodology, they raised counter-concerns about how to design the study and operate within resource limits. For example, one participant questioned whether the group was “asking too much of the adaptive design.” Multiple participants reported the need to learn more about the practical implementation of future adaptive designs. As one statistician cautioned, developing a strong design also requires addressing implementation. Future adaptive trials will require attention to logistical issues, such as communicating protocol changes to all parties involved.

The educational challenges are surmountable with ongoing training resources. ADAPT-IT provided a starting point for adaptive design conversations and for launching projects. However, nearly all participants referenced the need for training resources, both for themselves to continue learning and for others who are new to the adaptive approach. One statistician noted that, as a result of participating in ADAPT-IT, their unit will be using Bayesian adaptive designs, and suggested sharing experiences through publications on the implementation of adaptive designs. Thus, the ADAPT-IT design development process not only educated the participating researchers but also transformed their thinking about the need to educate the wider research community about adaptive designs and to extend these designs to other clinical areas.

4 Discussion

Prior to this research, it was unclear what a funded process for developing adaptive clinical trials would entail and what lessons could be learned from it. The ADAPT-IT qualitative study offered a unique opportunity to understand how the design process unfolds. The findings of this study present the perspectives of principal investigators, consultant statisticians, academic biostatisticians, and NIH regulators who participated in the ADAPT-IT design development process. A key finding is the extent to which social interactions and behavioral factors affect adaptive trial design. As expected from an anthropological perspective, stakeholders bring their own “lenses” and preconceptions about adaptive trials, may enter the design process with only general or limited knowledge of specific adaptive trial designs, and can allow those lenses to shape adaptive clinical trial designs more broadly. An important reality is that many individuals are firm in their convictions about optimal approaches. This finding is consistent with previously published work from this project that examined ethical perspectives on adaptive trials collected during the ADAPT-IT trial development process (29). That investigation found that various stakeholders (i.e., academic biostatisticians, consultant biostatisticians, clinician researchers, regulators) differed in how they interpreted the ethical implications of adaptive trials and how they valued various aspects of clinical trials (29). The current findings reiterate the complexity of the development process: participants bring different lenses owing to differences in values (29) or, as described in this paper, different perceptions of scientific rigor. Reflecting this complexity and these differing perspectives on scientific rigor, the ADAPT-IT design development process itself evolved over time.
The process evaluation team made adjustments based on feedback from project participants. The findings suggest that future adaptive design planning teams should implement feedback mechanisms and iteratively change the planning process as necessary.

Regarding dissent about preferred design choices, there is good reason to expect it among a diverse group of key stakeholders. Participating individuals have already excelled in their fields using specific approaches and procedures and can be expected to hold strong opinions about the best parameters and procedures for conducting innovative research. This contention proved valuable, leading to better adaptive designs. Previous research in the social sciences illustrates that dissent, and openness to dissent among leaders, can be productive and lead to better outcomes (19). This body of research suggests that expressing disagreement leads to more effective and creative solutions to problems. The current study extends previous research showing that dissent is a healthy process (20) to the arena of developing adaptive trials. Without dissent, trial development teams may risk “groupthink,” a state of extreme concurrence seeking that prevents more effective decisions (30). Through group interaction and education from individuals familiar with adaptive designs, the key stakeholders in this study were able to achieve a more effective planning process.

The findings of this process evaluation indicate that much work remains to educate the research community about the potential of adaptive trials. First, investigators will need ongoing support in designing adaptive trials. Their education may consist of learning more about adaptive designs and Bayesian principles, but it may also involve mediating discussions with, or educating, others about the value of adaptive designs. While it will be important to educate principal investigators, it is also important to educate other groups in the community, such as the clinicians and staff involved in daily implementation. Second, NIH reviewers and study sections may need further education to create a pathway for the appropriate evaluation of adaptive trials for funding. Third, the complexity of implementing adaptive designs necessitates attention to the clinicians and personnel carrying out the studies in clinical settings. Clinicians, for instance, will need to understand adaptive designs and be able to explain the procedures to patients as part of informed consent.

A limitation of the study is that it focused on a single effort, the funded ADAPT-IT project conducted in the NETT network. The NETT is US-based, and no international participants were involved. Furthermore, the statistical consultants involved were from a single firm, and ADAPT-IT focused on Bayesian adaptive designs; frequentist adaptive designs may be received differently. We are also unsure how the process would transfer to clinical trial development in small to midsize academic groups that lack planning resources. Still, ADAPT-IT offered a unique opportunity, with dedicated resources, to understand the process. Although many of the findings are likely transferable to academic clinical trials in general, the planning procedures for adaptive designs may differ in other disease areas. Future research might examine adaptive design procedures in other disease areas and fields. Another limitation is that the project participants are at the forefront of methodology and advanced trial design. Despite their expertise, disagreements still arose and resolving them was a process. Developing adaptive designs could be even more challenging for groups with less familiarity with advanced trial designs than the stakeholders in this study.

5 Conclusion

This study explored the experiences of stakeholders participating in ADAPT-IT, a grant-funded project to understand the adaptive design development process. These findings illustrate how even stakeholders invested in adaptive design development bring different lenses to the process. A series of opportunities to interact as a team and to assimilate the implications of different designs was needed. The findings provide insight into how the process educated project participants and the broader research community. Project participants described how their thinking evolved through the process, such that they are now more likely to consider flexible adaptive designs over fixed clinical trials. This experience also suggests that because adaptive designs are complex, additional education is needed both to understand the methodology and to articulate it to others when planning trials. Given this complexity, these data suggest that funding for future projects such as ADAPT-IT is needed for the design development process to succeed.

Appendix Stakeholder Interview Protocol

Introduction

Your opinions are VERY valuable as we try to learn about this. I would like to tape record our conversation so we can be certain not to lose any information you tell me. After the recording is reviewed to check my notes, the recording will be deleted.

-

“What are your overall thoughts about the ADAPT-IT development process?”

Probe:

“What went well?”

“What could be improved?”

“How was the bang for the buck (e.g., personal time, overall manpower costs)?”

-

“What impact did the ADAPT-IT development process have on your understanding of the research problem?” (PI question)

Probes:

“What you do and don’t know?”

“How did changes in the design affect your thinking about the research problem?”

“What are your thoughts about the proposed design now?”

-

“How did the ADAPT-IT development process affect your approach to clinical trials in general?”

Probes:

“Trial development?”

“Trial design?”

-

“How did the ADAPT-IT development process affect your view of adaptive clinical trials?”

Probes:

“Statistical modeling approaches/concepts?”

“Your role in ACTs?”

“Trial efficiency/optimization?”

“Niche of adaptive designs?”

“Doing research on rare diseases?”

-

“How did the process affect you professionally?”

Probes:

“Development as a researcher?”

“Ability to consider in other clinical/therapeutic areas?”

-

“Are there any other issues regarding ADAPT-IT in general that you would like to share?”

Probes:

Anything else?

* Interviewer will ask related questions necessary for clarification.

Interviewer comments and observations:

Observations about Context:

Key content/major points?

Any conceptual ideas?

Footnotes

Declaration of Interest

This work was supported jointly by the National Institutes of Health Common Fund and the Food and Drug Administration, with funding administered by the National Institute of Neurological Disorders and Stroke (NINDS) U01NS073476. The authors gratefully acknowledge the participants who provided interviews as well as all participants in the study as a whole.

References

1. Arrowsmith J. Trial watch: Phase III and submission failures: 2007–2010. Nat Rev Drug Discov. 2011;10(2):87. doi: 10.1038/nrd3375.

2. Arrowsmith J. Trial watch: Phase II failures: 2008–2010. Nat Rev Drug Discov. 2011;10(5):328–9. doi: 10.1038/nrd3439.

3. Lalonde RL, Kowalski KG, Hutmacher MM, Ewy W, Nichols DJ, Milligan PA, et al. Model-based Drug Development. Clinical Pharmacology & Therapeutics. 2007;82(1):21–32. doi: 10.1038/sj.clpt.6100235.

4. Sheiner LB. Learning versus confirming in clinical drug development. Clinical Pharmacology & Therapeutics. 1997;61(3):275–91. doi: 10.1016/S0009-9236(97)90160-0.

5. Sacks LV, Shamsuddin HH, Yasinskaya YI, Bouri K, Lanthier ML, Sherman RE. Scientific and regulatory reasons for delay and denial of FDA approval of initial applications for new drugs, 2000–2012. JAMA. 2014;311(4):378–84. doi: 10.1001/jama.2013.282542.

6. Jack Lee J, Chu CT. Bayesian clinical trials in action. Statistics in Medicine. 2012;31(25):2955–72. doi: 10.1002/sim.5404.

7. Antonijevic Z, Pinheiro J, Fardipour P, Lewis RJ. Impact of Dose Selection Strategies Used in Phase II on the Probability of Success in Phase III. Statistics in Biopharmaceutical Research. 2010;2(4):469–86.

8. Morgan CC, Huyck S, Jenkins M, Chen L, Bedding A, Coffey CS, et al. Adaptive Design: Results of 2012 Survey on Perception and Use. Therapeutic Innovation & Regulatory Science. 2014 Jul 1;48(4):473–81. doi: 10.1177/2168479014522468.

9. Duke Clinical Research Institute. Duke Clinical Research Institute 2015. Available from: dcri.org.

10. Kairalla JA, Coffey CS, Thomann MA, Muller KE. Adaptive trial designs: a review of barriers and opportunities. Trials. 2012;13(1):145. doi: 10.1186/1745-6215-13-145.

11. Tenaerts P, Madre L, Archdeacon P, Califf RM. The Clinical Trials Transformation Initiative: innovation through collaboration. Nat Rev Drug Discov. 2014;13(11):797–8. doi: 10.1038/nrd4442.

12. Chow SC, Chang M. Adaptive design methods in clinical trials - a review. Orphanet J Rare Dis. 2008;3:11. doi: 10.1186/1750-1172-3-11.

13. Berry DA. Adaptive clinical trials in oncology. Nat Rev Clin Oncol. 2012 Apr;9(4):199–207. doi: 10.1038/nrclinonc.2011.165.

14. Berry DA. Adaptive clinical trials: the promise and the caution. J Clin Oncol. 2011 Feb;29(6):606–9. doi: 10.1200/JCO.2010.32.2685.

15. Jaki T. Uptake of novel statistical methods for early-phase clinical studies in the UK public sector. Clinical Trials. 2013 Apr 1;10(2):344–6. doi: 10.1177/1740774512474375.

16. Lehmacher W, Wassmer G. Adaptive Sample Size Calculations in Group Sequential Trials. Biometrics. 1999;55(4):1286–90. doi: 10.1111/j.0006-341x.1999.01286.x.

17. Meurer WJ, Lewis RJ, Tagle D, Fetters MD, Legocki L, Berry S, et al. An overview of the adaptive designs accelerating promising trials into treatments (ADAPT-IT) project. Ann Emerg Med. 2012 Oct;60(4):451–7. doi: 10.1016/j.annemergmed.2012.01.020.

18. Schulz-Hardt S, Brodbeck FC, Mojzisch A, Kerschreiter R, Frey D. Group decision making in hidden profile situations: Dissent as a facilitator for decision quality. Journal of Personality and Social Psychology. 2006;91(6):1080–93. doi: 10.1037/0022-3514.91.6.1080.

19. Garner JT. Different Ways to Disagree: A Study of Organizational Dissent to Explore Connections Between Mixed Methods Research and Engaged Scholarship. Journal of Mixed Methods Research. 2015 Apr 1;9(2):178–95.

20. Mitchell R, Nicholas S, Boyle B. The Role of Openness to Cognitive Diversity and Group Processes in Knowledge Creation. Small Group Research. 2009;40(5):535–54.

21. De Dreu CKW, West MA. Minority dissent and team innovation: The importance of participation in decision making. Journal of Applied Psychology. 2001;86(6):1191–201. doi: 10.1037/0021-9010.86.6.1191.

22. Kassing JW. Speaking Up: Identifying Employees’ Upward Dissent Strategies. Management Communication Quarterly. 2002 Nov 1;16(2):187–209.

23. Fetters MD, Curry LA, Creswell JW. Achieving Integration in Mixed Methods Designs—Principles and Practices. Health Services Research. 2013;48(6pt2):2134–56. doi: 10.1111/1475-6773.12117.

24. Patton MQ. Qualitative research and evaluation methods. 3rd ed. Thousand Oaks, CA: Sage; 2002.

25. Kuckartz U. Qualitative text analysis: A guide to methods, practice and using software. London: Sage; 2014.

26. Creswell JW. Qualitative inquiry and research design: Choosing among five approaches. 3rd ed. Thousand Oaks, CA: Sage; 2013.

27. Maxwell JA. Qualitative research design: An interactive approach. 3rd ed. Thousand Oaks, CA: Sage; 2013.

28. Morse JM. Data were saturated. Qualitative Health Research. 2015 May 1;25:587–8. doi: 10.1177/1049732315576699.

29. Legocki L, Meurer WJ, Frederiksen S, Lewis RJ, Durkalski VL, Berry DA, et al. Clinical trialist perspectives on the ethics of adaptive clinical trials: A mixed-methods analysis. BMC Medical Ethics. 2015;16:27. doi: 10.1186/s12910-015-0022-z.

30. Pratkanis AR, Turner ME. Methods for Counteracting Groupthink Risk: A Critical Appraisal. International Journal of Risk and Contingency Management. 2013;2(4):18–38.