Abstract

Objectives

To analyse a medical protection organisation's database to identify hazards related to general practice systems for ordering laboratory tests, managing test results and communicating test result outcomes to patients. To integrate these data with other published evidence sources to inform design of a systems-based conceptual model of related hazards.

Design

A retrospective database analysis.

Setting

General practices in the UK and Ireland.

Participants

778 UK and Ireland general practices participating in a medical protection organisation's clinical risk self-assessment (CRSA) programme from January 2008 to December 2014.

Main outcome measures

Proportion of practices with system risks; categorisation of identified hazards; most frequently occurring hazards; development of a conceptual model of hazards; and potential impacts on health, well-being and organisational performance.

Results

CRSA visits were undertaken to 778 UK and Ireland general practices. A range of systems hazards was recorded across the laboratory test ordering and results management systems in 647 of these practices (83.2%). A total of 45 discrete hazard categories were identified with a mean of 3.6 per practice (SD=1.94). The most frequently occurring hazard was an inadequate process for matching test requests and results received (n=350, 54.1%). Of the 1604 instances where hazards were recorded, the largest share occurred at the ‘postanalytical test stage’ (n=702, 43.8%), followed closely by ‘communication outcomes issues’ (n=628, 39.1%).

Conclusions

Based on arguably the largest data set currently available on the subject matter, our study findings shed new light on the scale and nature of hazards related to test results handling systems, which can inform future efforts to research and improve the design and reliability of these systems.

Keywords: PRIMARY CARE

Strengths and limitations of this study.

The study reports findings from the analysis of the Medical Protection Society's (MPS) organisational database, which contains arguably the largest available data set on the hazards associated with systems for ordering laboratory tests, managing results and communicating outcomes to patients in primary care.

The findings are strengthened because data collected on hazards involved both general practice teams and external, independent review visits by trained MPS clinical risk assessment facilitators.

A conceptual model of the hazards associated with the test ordering and results management system and their potential impacts on health, well-being and performance was developed based on empirical data from this study and others. This may be useful for informing future patient safety research, quality improvement and evaluation efforts.

A failure to collect and cross-tabulate practice demographic data with risk data was a study limitation and a missed opportunity in learning more about the impact of diverse practice demographic characteristics on the scale and nature of identified hazards.

Although data from a large number of general practices across the UK and Ireland were analysed and will be of wide interest, the findings may still be open to bias as the vast majority were contracted members of a single medical indemnity organisation and may not, therefore, be representative of general practice organisations.

Introduction

The ordering of laboratory tests by clinicians for the purpose of screening, diagnosing and monitoring patients is a vital and increasing part of routine primary care worldwide.1 However, growing international evidence points to the clinical risks and patient harms associated with complex test results systems.2–7 Multiple, interacting process steps and personnel are involved, including ordering tests; tracking and reconciling results with tests ordered; reviewing and ‘actioning’ the results; and communicating the findings to patients.8 At every stage, there is a risk of system failure and the subsequent possibility of patients being unintentionally harmed.9

The design of the test ordering and results handling process in a single general practice is characterised by its heterogeneous nature and functioning, which is reflective of a non-deterministic, complex sociotechnical system.10 The ‘complexity’ is characterised and amplified by the interactions and interdependencies between different people (eg, patient, clinicians and administrative staff communications) and technologies (eg, computer hardware and software or venipuncture equipment) in the system.11 12 Although there is clearly a linear workflow aspect between taking a blood sample, ordering a test, managing the test result and then communicating the findings to the patient,8 it is not always possible to accurately anticipate and predict the behaviour of this type of system even when its functions and properties are fully known.13

Patient safety research in primary care indicates that between 15% and 54% of all detected incidents are directly related to the systems management of testing results.14–16 The evidence also demonstrates that existing support systems are complex, problematic, error prone and many vary in terms of their reliability and design quality.17 The consequences include poor follow-up of test results, missed results of clinical significance, failing to act on results and delays or errors in communicating the outcomes of results leading to avoidable patient harms.18–20 The impacts for patients include missed or delayed diagnosis and treatment causing unnecessary distress and continued ill health, as well as dissatisfaction with healthcare and the inconvenience of returning for appointments and to repeat blood tests.2 14–17 21 22 For clinicians and the wider practice, there is the possibility of patient complaints, medicolegal action, breakdowns in patient relationships, and increases in workload and time commitments caused by repeating work tasks and problem solving-related issues due to unreliable and inefficient systems.17 23 24

Despite the management of the testing and result communication process being a known clinical risk, published empirical evidence of the nature and scale of what goes wrong and why is very limited, particularly in the UK and wider European general practice settings where there is much less research compared with North America.17 Although much of the patient safety literature acknowledges many of the threats posed,3 there is limited detail of those interacting factors that contribute to suboptimal performance across the system mainly because topic-specific research that needs to be undertaken over time is lacking.17

As part of the LINNEAUS Euro-PC collaboration25 on patient safety in primary care (box 1), preliminary guidance on the safe management of laboratory-based diagnostic tests ordering and results systems was developed based on the limited research published, programme-related studies and recent independent research outcomes.17 A key contributor to the safe guidance development was Medical Protection Society's (MPS) risk management programme in the UK and Ireland. In addition to its core function as an international medical protection and indemnity organisation for clinical professionals, MPS also undertakes a clinical risk self-assessment (CRSA) for individual general practices as part of membership arrangements,26 or for a fee for non-members (box 2). The CRSA is an educationally supportive initiative which involves a visit from a specifically trained MPS clinical risk assessment facilitator who reviews, documents and provides feedback on a whole range of clinical system risks, patient safety issues and professional requirements across the general practitioner (GP) workplace, including those related to systems for the safe management and communication of laboratory test results. Most related research thus far has explored the safety perspectives of patients and GP team members, observed practice systems, and reported literature findings or a method to measure system safety.1 17 27 From this perspective, the service offered by the MPS is unique and (given the limited published evidence) potentially provides access to arguably the most extensive data set available for this important, safety-critical area of clinical practice.

Box 1. About the LINNEAUS Euro-PC collaboration.

The LINNEAUS Euro-PC collaboration is a coordination action funded by the European Union Framework 7 Programme. The main focus of the coordination action is to build a network of researchers and practitioners working on patient safety in primary care in the European Union. Through building a network of researchers into a pan European network, this coordination action will extend the current knowledge and experience from countries where the importance of patient safety is nationally recognised, to countries where it is less developed, ensure that there is an appropriate focus on primary care and encourage cooperation and collaboration for future interventions through large-scale trials (http://www.linneaus-pc.eu/index.html)

Box 2. Summary of the clinical risk self-assessment (CRSA) process.

CRSA purpose:

To offer an opportunity for all members of the team—general practitioners, managers, nurses, receptionists and therapists—to work together, talk openly and develop practical solutions that promote safer general practice.

CRSA aims to:

Identify potential areas of risk and encourage all members of the team to develop safer practices

Reduce the likelihood of complaints and claims

Support the practice development plan

Provide useful evidence for appraisal and revalidation

Identify non-compliance with national standards

Improve communication within the team.

CRSA involves:

A previsit questionnaire to ascertain roles and responsibilities and services provided

A full-day visit by a trained Medical Protection Society (MPS) risk assessment facilitator, incorporating a half-day multidisciplinary workshop

Confidential exploratory discussion with key members of staff

Completion of a staff patient safety culture survey, which helps identify the importance of patient safety within the organisation.

Against this background, we aimed to identify, analyse and learn from the MPS organisational database of hazards specifically related to practice systems for the ordering of laboratory test investigations and subsequent results management and communication processes. We further aimed to integrate these data with other evidence sources to inform design of a systems-wide conceptual model of related hazards and the potential impacts on the well-being of people and the practice as an organisation.

Methods

Definition and scope of system hazards, risks and laboratory tests

A ‘hazard’ can be defined as a potential source of harm or adverse health effect on a person or persons.10 28 Hazards are present in every workplace but more so in complex, safety-critical industries such as primary healthcare organisations. A hazard can be viewed as a latent system condition that will not normally lead to an incident unless it interacts with other system elements. For example, a general practice hazard may be a total reliance on patients to contact the surgery for test results. This would become a contributory factor in a patient safety incident if the following interacting system issues occurred: (1) The patient's test result is clinically abnormal and indicative of an early stage of serious illness. (2) The patient fails to contact the practice as requested at the previous consultation. (3) There is a significant delay in the practice reviewing and ‘actioning’ the test results received. The goal of this safe system paradigm is to minimise risks and avoid unwanted but preventable harm events.

Hazard is closely related to, and is often used interchangeably with, the term ‘risk’, although they are different concepts. ‘Risk’ can be defined as the likelihood that a person may be harmed or suffer adverse health effects if exposed to a hazard.10 28 In this study, the identification of hazards embedded in test results systems extended beyond the risk consequences for patients to include potential impacts on the ‘well-being’ of relatives and carers as well as the GP team and the performance of the practice surgery. ‘Well-being’ is defined from a person perspective as health, safety, comfort, convenience, satisfaction, interest and enjoyment; and in practice organisational terms as performance with regard to productivity, quality, flexibility and effectiveness.29

Given the scope, range and complexity of clinical investigations that can be ordered by primary care clinicians, the LINNEAUS Euro-PC collaboration decided to narrow the study focus to include only common, high volume biochemistry and haematology blood test requests (ie, those with short turnaround times like urea and electrolytes, liver function test, full blood count)—although it was recognised that the study findings and implications would likely apply more widely to include other investigation processes.

CRSA data collection

The CRSA is a supportive, voluntary improvement method developed by MPS for its UK and Ireland practice membership. The purpose is to bring the GP team together to learn about clinical and organisational risks, and guide teams to adopt a systems approach to mitigating or minimising related threats. The process involves a 1-day external visit and review by an experienced and trained MPS clinical risk assessment facilitator—all facilitators have a clinical background and have worked or currently work in primary care. The facilitator employs a combination of small group work exercises, and informal and structured confidential discussions with team members to identify actual or potential hazards across the GP work system that may impact on patient safety or the health and well-being of staff members. It employs a core standardised approach but takes account of the differing legislative and primary care structures in each country's health system.

The CRSA facilitator undertakes informal interviews, guided by a standardised proforma, with key members of the practice team (eg, GP partners, practice manager, practice nurses and administrative staff). Internal systems are discussed and potential hazards are identified from these discussions and from observations made as part of a ‘think-aloud’ process,30 with the facilitator taking contemporaneous notes. Additional hazards are identified by the team themselves during a multidisciplinary afternoon workshop. Based on all hazards collated and actions agreed on, the facilitator uses an online system to generate a report containing the hazards, recommendations for each hazard category and a comments/guidance section (that is, the legislation or guidance relevant to the particular hazard or action). A rating system is also employed for all hazards, which gives a combined score out of 400 across four domains: patient safety, clinical risk, legislation and financial risk. Recommended interventions or actions for each identified hazard are prioritised for implementation in the short, medium or long term. All reports and scoring are independently peer reviewed by another risk facilitator, with differences resolved by consensus.
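The combined rating described above can be illustrated with a short Python sketch. The source states only that the score is out of 400 across four domains; how the points are divided is not reported. The sketch therefore assumes, purely for illustration, that each domain is rated from 0 to 100 and that the four ratings are summed. The function name `combined_score` and the example ratings are hypothetical.

```python
# Hypothetical sketch of a combined hazard rating out of 400.
# ASSUMPTION (not stated in the source): each of the four domains
# is rated 0-100 and the ratings are summed with equal weight.
DOMAINS = ("patient safety", "clinical risk", "legislation", "financial risk")
MAX_PER_DOMAIN = 100  # assumed, so that 4 domains x 100 = 400


def combined_score(ratings):
    """Sum the four domain ratings into a single score out of 400."""
    missing = [d for d in DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"missing domain ratings: {missing}")
    for domain in DOMAINS:
        if not 0 <= ratings[domain] <= MAX_PER_DOMAIN:
            raise ValueError(f"{domain} rating out of range: {ratings[domain]}")
    return sum(ratings[d] for d in DOMAINS)


# Illustrative ratings only; these are not study data.
example = {
    "patient safety": 80,
    "clinical risk": 60,
    "legislation": 40,
    "financial risk": 20,
}
example_score = combined_score(example)
print(f"{example_score} / {len(DOMAINS) * MAX_PER_DOMAIN}")
```

Any weighting actually used by MPS facilitators may differ from this equal-weight assumption.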

Categorisation of MPS data

A list of 722 documented ‘free-text’ hazards related to the test results handling systems, previously recorded by CRSA risk assessment facilitators during visits, was generated from the MPS organisational database. The complete list was read and re-read and coded iteratively, and from this a basic categorisation framework was developed and refined by NH based on content analysis principles.31 This involved identifying identical hazards, merging those strongly related to each other, deleting duplicates and ultimately reducing the total number until a preliminary list of 45 discrete hazard categories was identified. One author (JP) then independently checked the validity of the categories against the original ‘free-text’ list and coding data, with any disagreements queried and resolved with NH.

Thematic analysis of MPS hazards categories and published evidence

The discrete hazard categories were then jointly reviewed and analysed by three authors (PB, JP and JM) to generate agreed ‘high-level’ themes for each functional dimension of the results handling management system (preanalytical, specimen processing stage (formerly ‘analytical’), postanalytical and communication outcome issues). The system hazards reported in the published evidence base1 17 that informed the development of the aforementioned LINNEAUS Euro-PC safe guidance were also reviewed. Although this did not add anything novel to the MPS data set, it provided information on risks at the practice organisation and cultural levels, together with overall impacts on the well-being of people and the organisation related to poor or inadequate system design. This review, analysis and agreement process was achieved through three 4 h, face-to-face meetings with all of the authors and follow-up electronic mail discussion until consensus was reached.

Conceptual modelling of system hazards identified

The conceptual model evolved by merging the core components of two existing theoretical frameworks of high relevance to this work. The first was Hickner et al's8 generic model of the preanalytic, analytic and postanalytic stages and functions of a diagnostic test ordering and results management system. The second was Carayon et al's32 Systems Engineering Initiative for Patient Safety (SEIPS) model of human factors interactions (people, tasks, technology and tools, environment and organisation) and related outcomes (eg, the well-being and performance of people and organisations) for healthcare systems. Key elements of the SEIPS approach were merged with the generic test results framework to form the basis of a ‘new’ conceptual model. The aforementioned themes generated by the authors were then mapped onto this ‘new’ model to describe potential hazards and outcomes associated with results handling systems. However, we subdivided the ‘postanalytical test stage’ of the system to create a new end-stage process of ‘communication outcome issues’, which litigation data and recent research have highlighted as an important safety-critical system element where failures may occur for patients and practices.

Results

CRSA practice visits and proportion with system hazards identified

A total of 778 CRSA visits to UK and Ireland general practices were undertaken over the period from January 2008 to December 2014. In 647 practices (83.2%), a range of hazards were observed and recorded by clinical risk assessment facilitators that were related to the safe operation of the test ordering and results management system. A breakdown of the year of CRSA visit, number of practices and the proportion with system hazards identified is outlined in table 1.

Table 1.

Proportion of clinical risk self-assessment (CRSA) practice visits conducted in the UK and Ireland by year and proportion with test results system risks highlighted by clinical assessors

| Year | CRSA general practice visits (n) | Practices with identified test results system issues (n) | Practices with identified test results system issues (%) |

|---|---|---|---|

| 2008 | 41 | 34 | 82.9 |

| 2009 | 136 | 107 | 78.7 |

| 2010 | 121 | 108 | 88.5 |

| 2011 | 162 | 138 | 85.1 |

| 2012 | 58 | 48 | 82.7 |

| 2013 | 153 | 135 | 88.2 |

| 2014 | 107 | 77 | 72.0 |

| Totals | 778 | 647 | 83.2 |
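The totals row of table 1 can be checked from the yearly counts. The Python sketch below is illustrative only: the counts are transcribed from table 1, while the variable and function names are hypothetical and not part of the study. Per-year percentages are recomputed from the counts rather than copied, so they may differ from the published column.

```python
# Counts transcribed from table 1:
# year -> (practices visited, practices with hazards identified)
visits = {
    2008: (41, 34),
    2009: (136, 107),
    2010: (121, 108),
    2011: (162, 138),
    2012: (58, 48),
    2013: (153, 135),
    2014: (107, 77),
}


def proportion(numerator, denominator):
    """Return a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


total_visited = sum(visited for visited, _ in visits.values())
total_with_hazards = sum(with_hazards for _, with_hazards in visits.values())
overall = proportion(total_with_hazards, total_visited)

# Print one row per year, then the totals row.
for year, (visited, with_hazards) in visits.items():
    print(year, visited, with_hazards, proportion(with_hazards, visited))
print("Totals", total_visited, total_with_hazards, overall)
```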

Number of hazard categories, mean number and most frequently occurring

A total of 45 discrete hazard categories were identified which cover the breadth of the laboratory test ordering and results management system (see online supplementary appendix 1). The mean number of hazards per practice was 3.6 (SD=1.94). The most frequently occurring hazard was inadequate processes for matching test requests and results received (n=350, 54.1%). Other hazards which occurred in many practices include informing patients of results but failing to communicate that the results data set is incomplete (n=195, 30.1%), and system reliance on patients contacting the practice for test results (n=166, 25.7%). The frequency of occurrence of the 15 most common hazard categories identified by CRSA facilitators is described in table 2.

Table 2.

The top 15 most frequently occurring hazards identified during CRSA visits to general practices by MPS (n=647)

| No | Hazard category | n | Per cent |

|---|---|---|---|

| 1 | Inadequate process for matching test requests and results received | 350 | 54.1 |

| 2 | Inadequate tracking process to check patients attend on request following abnormal results being received | 340 | 52.5 |

| 3 | Informing patients of some test results before all results are received | 195 | 30.1 |

| 4 | System reliance on patients contacting practice for test results | 166 | 25.7 |

| 5 | Test results not being forwarded to covering GPs in a timely manner (inadequate ‘buddy system’, ie, a clinical colleague covers the work of a colleague on annual leave or sick leave, etc) | 94 | 14.5 |

| 6 | Family members and ‘Third Party’ requests for test results | 91 | 14.1 |

| 7 | Communicating incorrect results | 80 | 12.3 |

| 8 | Ambiguous and/or unclear instructions given to frontline administrators by GPs to communicate to patients | 78 | 12.1 |

| 9 | Front-line administrators asked by patients for test results and to provide additional information/interpretation | 75 | 11.6 |

| 10 | Failing to ‘action’ clinically abnormal results received | 69 | 10.7 |

| 11 | Lack of system standardisation—variation and inconsistency in how GPs review and action test results | 61 | 9.4 |

| 12 | Lack of a formal protocol describing the overall system | 58 | 8.9 |

| 13 | No documented record of tests requested to ensure that all tests and results have been reported on | 56 | 8.7 |

| 14 | Test results not forwarded to the requesting GP/GPs reporting on test results ordered by a colleague | 54 | 8.3 |

| 15 | Desired action not carried out, that is, due to difficulty contacting the patient or task not being completed | 49 | 7.6 |

CRSA, clinical risk self-assessment; GP, general practitioner; MPS, Medical Protection Society.

In those practices with identified systems risks, the 45 known hazard categories were recorded on a total of 1604 occasions. A breakdown of the proportion of these hazard occurrences (subdivided into each of the four high-level system dimensions) is described in table 3. Hazards occurred most frequently at the ‘postanalytical test stage’ (n=702, 43.8%), followed closely by ‘communication outcomes issues’ (n=628, 39.1%). Therefore, in the vast majority of cases, system hazards occur after the test result arrives back in the practice.

Table 3.

The number and proportion of hazards (n=1604) identified at each of the four high-level system dimensions in the UK and Ireland general practices undergoing a clinical risk self-assessment visit between 2008 and 2014

| System dimensions | N | Per cent |

|---|---|---|

| Preanalytical stage (eg, inadequate specimen handling and storage) | 209 | 13.0 |

| Specimen process stage (eg, broken specimen container) | 65 | 4.0 |

| Postanalytical stage (eg, not acting on results that require action) | 702 | 43.8 |

| Communication outcome issues (eg, failure to inform patient) | 628 | 39.1 |

| Total | 1604 | 100.0 |
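The statement that most hazards arise after the test result arrives back in the practice can be checked by adding the postanalytical and communication counts in table 3. The Python sketch below is illustrative only: the counts are transcribed from table 3, and the variable names are hypothetical.

```python
# Hazard occurrences per system dimension, transcribed from table 3.
stage_counts = {
    "preanalytical": 209,
    "specimen processing": 65,
    "postanalytical": 702,
    "communication outcome issues": 628,
}

total = sum(stage_counts.values())

# Hazards arising once the result is back in the practice:
# the postanalytical stage plus communication outcome issues.
after_result = (
    stage_counts["postanalytical"]
    + stage_counts["communication outcome issues"]
)
after_result_share = round(100 * after_result / total, 1)

print(f"{after_result} of {total} hazard occurrences ({after_result_share}%)")
```

Under these counts, the two post-result dimensions together account for 1330 of the 1604 recorded occurrences.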

Practice organisation and cultural issues

A number of commonly occurring high-level organisational and culture risks were identified mainly from published evidence17 but also from the MPS study data. These were defined as those risks that relate to the organisation of the whole system. Examples include limited practice leadership commitment to safety; limited opportunities for necessary staff training; an over-reliance on patients to contact the practice for test results; and lack of a formal written system protocol that is shared and understood by the GP team.

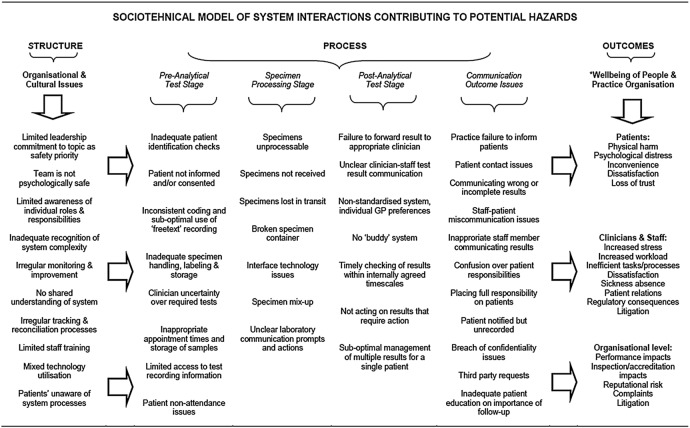

Conceptual model of system hazards

The developed conceptual model (figure 1) is representative of the test ordering and results handling process as a complex sociotechnical system.10–12 It describes (and potentially predicts) how the hazards at the organisational and cultural levels and across the specific generic stages of the test results system may interact to impact on the well-being of people and on practice performance. The model has the potential to be utilised or adapted by GP teams to prompt reflection and discussion around specific hazards related to different aspects of the results handling system as a means to facilitate risk assessment, potential learning and improvement opportunities as part of the patient safety agenda.

Figure 1.

A conceptual model of test ordering and results handling system hazards from a primary care perspective (GP, general practitioner).

Discussion

In this study, we analysed data on test results management systems held in a large medical protection organisational database collected as part of clinical risk assessments undertaken in general practices throughout the UK and Ireland. The findings illustrated the presence of a significant number of system-wide hazards that may impact on the health, safety and well-being of patients, but also on the GP team and the practice organisation. By integrating these data with existing evidence,1 17 we were able to design a conceptual model of hazards and impacts related to test results systems in primary care. The model may be useful from a health services research or implementation science perspective in studying facilitators, inhibitors and interventions to improve the safety of test results processes based on systems thinking.12 33

Arguably, the findings provide a first indication of the extent to which general practice systems for managing test results may put the safety, health and well-being of patients, the GP team and the practice as an organisation at risk: hazards were recorded in more than 80% of practices in this study. Although the high figure may not be surprising given what we know about patient safety in general practice,5 it does reinforce the complexity of such systems and highlights the potential difficulties faced by extremely busy GP teams in coping with everyday work related to the management of test results. Although it has previously been reported that errors can occur in any of the multiple steps of the test management process, it is unclear which stage(s) is the most hazardous from a general practice perspective.34 Our findings suggest that just over 80% of all hazards identified related directly to functions that follow receipt of the result, that is, the postanalytical test stage (43.8%) and communication outcome issues (39.1%) combined. Arguably, therefore, this area may harbour the greatest risks because of the requirement for high-level clinical decision-making and more complex communication tasks which may be difficult to capture in standard protocols, particularly in terms of the timely follow-up of abnormal results and communicating test results outcomes to patients.

Previous research using anonymous reporting by clinicians found types of system ‘errors’35 that similarly align with the potential risks identified in this study, such as in the workflow of results to the clinician, test ordering processes and notifying results to patients. However, whereas Hickner et al found that <10% of errors reported related to communication outcome issues, just under 40% of all hazards uncovered in this study were identified in this part of the test results system. This finding is also supported by a recent UK study by Litchfield et al36 who found that patients frequently experienced dissatisfaction with the test results process, particularly around communication delays and inconsistencies in how information was imparted to them. A clear and accessible protocol for the communication of results was suggested by patients as a practice improvement method to this problem. Similarly, recent research has also identified risks posed around clinician-to-administrator and administrator-to-patient communications related to the testing process and the need to enhance current communication skills for these groups as another method of making care safer.22 23

Traditional approaches to learning from inadequate results handling systems by GP teams are highly likely to focus on methods such as clinical audit and significant event analysis. Both are reactive approaches which, while useful, offer a limited and fragmented perspective of the whole test results management system in that they tend to focus on ‘end point outcomes’ (eg, number of test results successfully communicated to patients within five working days, or investigating and learning from why a single test result was lost). Arguably what is also necessary is to understand the underlying system as a whole. One retrospective method which attempts this systems-wide approach is the implementation of a ‘care bundle’ to measure and monitor basic safe performance at each high-level stage of the system and direct improvement efforts where necessary.27 It is also argued that prospective hazard analysis methods are necessary in order to develop a deeper understanding of the intricate functioning of the system and the identification of likely error producing conditions.37 However, there is very limited evidence that these types of improvement approaches are being applied to test results management systems, probably because of a lack of capacity and capability on the part of GP teams.

A general criticism of current approaches to patient safety in all healthcare settings is that they may be inadequate for understanding system complexity and are overly focused on issues of reliability, quantifying incidents and performing incident investigations as the main means of learning about systems of work ‘failures’.38 The ‘holy grail’ of this safety management approach is perceived as the absence of incidents (and therefore harm) or at least their reduction to an ‘acceptable’ number. In resilience engineering terms, this is known as a safety-I approach and is perceived as being limited in gaining insights into the everyday functioning of complex sociotechnical systems such as those found in healthcare.39

In this regard, resilience engineering offers a system perspective of potential interest to safe test results management. It is defined as the ability of a system to modify but sustain its functioning before, during and after any changes whether they were expected or otherwise. It is interested not only in what goes wrong (failures) but importantly what goes right (successes). If a system is resilient, then it is safe—based on the “simple fact that it is impossible for something to go right and wrong…at the same time”.13 This is the cornerstone of what is known as a safety-II approach. From a research and improvement perspective, an interesting next step might be to explore this concept of systems resilience and how taking this type of proactive approach may benefit the safety management of test result systems.

However, putting aside the philosophical debate over how best to understand and improve patient safety, if we wish to gain insights into why things go wrong with test results management systems, then there is a need to recognise that the great majority of harm incidents arise not from the actions or inactions of individual team members (or patients), but from the complex sociotechnical interactions that take place within the inadequate, incomplete and often conflicted systems of which people (clinicians and patients) are an integral, interdependent element.10–12 This level of understanding forms a key safety principle in the discipline of Human Factors and Ergonomics,10–12 knowledge of which is limited in healthcare although policy planning in this area at a national level is now underway.40 Realistically, a deeper understanding of the basic principles underpinning this discipline is arguably necessary if GP teams are to acquire the necessary knowledge and skills to design safe and efficient (and resilient) care systems to optimise performance and eliminate hazards.

Study limitations

A number of limitations are evident with this study. Although data from a large number of UK and Ireland practices are included, this is highly likely to be a biased and potentially unrepresentative group given their self-selecting membership of MPS, or preparedness to pay a fee for the CRSA. The lack of practice demographic data collected meant we could not provide a more insightful background context to the risks identified across different types, sizes and locations of practices, or make interpractice and intercountry comparisons. This would have been useful to inform future quality improvement and research activity. Although CRSA facilitators are trained and accredited, there will still be variation in how they interview staff and observe and rate aspects of practice performance, meaning that they may overestimate or underestimate actual or perceived practice risks around systems for managing laboratory test results. The categorisation of study data and design of the conceptual model were based on evidence from the perspective of general practice rather than clinical laboratory-based research, where the types of patient safety concerns (particularly in the analytical phase) can be markedly different.41 Similarly, there may be debate over how we have categorised some of the identified hazards (eg, see online supplementary appendix 1: hazard numbers 13, 19, 31 and 33 were pragmatically classified as ‘preanalytical’ for convenience because all related to some extent to this first stage of the process).

It is difficult to determine the significance of the risk posed to patients and practice teams by many of the hazards identified in this study. The reality is that raising awareness of the high frequency of a specific hazard may not necessarily lead to it being categorised as a priority risk by GP teams. For example, the lack of an adequate tracking system to reconcile tests ordered with results received may well be recognised as a safety hazard by the practice leadership, but not be accorded priority status because of a combination of the effort and resource involved in resolving the issue and the perceived risk of harm to patients; that is, the practice is willing to trade off the perceived safety risk to patients in favour of the perceived efficiency of its current system.

Conclusion

Based on arguably the largest data set currently available on the subject matter, our study of the MPS's CRSA programme sheds new light on the scale and nature of hazards related to test results handling systems in primary care. We need to acknowledge that interventions to reduce patient harm are currently limited due to lack of research and improvement attention given to this high-risk area. However, the study outcomes will be of interest internationally to primary care providers, researchers, patient safety leaders and policymakers with an interest in studying the topic with a view to minimising risks and improving the underlying safety and reliability of such systems.

Acknowledgments

The authors wish to sincerely thank MPS for providing their hazard data on this important patient safety topic and making a pivotal contribution to advancing knowledge in this area. Similarly, they wish to thank the CRSA risk assessment facilitators involved and the UK and Ireland practice teams who participated in the CRSA visits.

Footnotes

Contributors: PB was involved in concept, study design, data analysis, co-development and critical review of the manuscript. JP was involved in study design, data analysis and co-development and critical review of the manuscript. NH was involved in data analysis and critical review of the manuscript. MD and JM were involved in data interpretation, co-development and critical review of the manuscript.

Funding: The research leading to these results has received funding from the European Community's Seventh Framework Programme FP7/2008-2012 under grant agreement number 223424. Additional funding was provided by NHS Education for Scotland and the Medical Protection Society.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Callen JL, Westbrook JI, Georgiou A et al. Failure to follow-up test results for ambulatory patients: a systematic review. J Gen Intern Med 2012;27:1334–48. 10.1007/s11606-011-1949-5

- 2. McKay J, Bradley N, Lough M et al. A review of significant events analysed in general medical practice: implications for the quality and safety of patient care. BMC Fam Pract 2009;10:61. 10.1186/1471-2296-10-61

- 3. Elder NC, Dovey SM. Classification of medical errors and preventable adverse events in primary care: a synthesis of the literature. J Fam Pract 2002;51:927–32.

- 4. Jacobs S, O'Beirne M, Derfiingher LP et al. Errors and adverse events in family medicine: developing and validating a Canadian taxonomy of errors. Can Fam Physician 2007;53:271–6, 270.

- 5. The Health Foundation. Evidence scan: levels of harm in primary care. London, 2011. http://www.health.org.uk/publications/levels-of-harm-in-primary-care/ (accessed 10 May 2013).

- 6. Makeham MA, Kidd MR, Salman DC et al. The Threats to Patient Safety (TAPs) study: incidence of reported errors in general practice. Med J Aust 2006;185:95–8.

- 7. Elder NC, Graham D, Brandt E et al. The testing process in family medicine: problems, solutions and barriers as seen by physicians and their staff. J Patient Saf 2006;2:25–32.

- 8. Hickner JM, Fernald DH, Harris DM et al. Issues and initiatives in the testing process in primary care physician offices. Jt Comm J Qual Patient Saf 2005;31:81–9.

- 9. Elder NC, McEwen TR, Flach JM et al. Management of test results in family medicine offices. Ann Fam Med 2009;7:343–51. 10.1370/afm.961

- 10. Karsh BT, Holden RJ, Alper SJ et al. A human factors engineering paradigm for patient safety: designing to support the performance of the healthcare professional. Qual Saf Health Care 2006;15(Suppl 1):i59–65. 10.1136/qshc.2005.015974

- 11. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010;19(Suppl 3):i68–74. 10.1136/qshc.2010.042085

- 12. Braithwaite J, Runciman WB, Merry AF. Towards safer, better healthcare: harnessing the natural properties of complex sociotechnical systems. Qual Saf Health Care 2009;18:37–41. 10.1136/qshc.2007.023317

- 13. Wears RL, Hollnagel E, Braithwaite J, eds. The resilience of everyday clinical work. Farnham, UK: Ashgate, 2014.

- 14. Dovey SM, Meyers DS, Phillips RL Jr et al. A preliminary taxonomy of medical errors in family practice. Qual Saf Health Care 2002;11:233–8. 10.1136/qhc.11.3.233

- 15. Fernald D, Pace W, Harris D et al. Event reporting to a primary care patient safety reporting system: a report from the ASIPS Collaborative. Ann Fam Med 2004;2:327–32. 10.1370/afm.221

- 16. Makeham MA, Dovey SM, County M et al. An international taxonomy for errors in general practice: a pilot study. Med J Aust 2002;177:68–72.

- 17. Bowie P, Forrest E, Price J et al. Good practice statements on safe laboratory testing: A mixed methods study by the LINNEAUS collaboration on patient safety in primary care. Eur J Gen Pract 2015;21(Suppl):19–25. 10.3109/13814788.2015.1043724

- 18. Bird S. Missing test results and failure to diagnose. Aust Fam Phys 2004;33:360–1.

- 19. Poon EG, Gandhi TK, Sequist TD et al. “I wish I had seen this test result earlier!” Dissatisfaction with test result management systems in primary care. Arch Intern Med 2004;164:2223–8. 10.1001/archinte.164.20.2223

- 20. Mold JW. Management of laboratory test results in family practice: an OKPRN study. J Fam Pract 2000;49:709–15.

- 21. Bird S. A GP's duty to follow-up test results. Aust Fam Phys 2003;32:45–6.

- 22. Bowie P, Halley L, McKay J. Laboratory test ordering and results management systems: a qualitative study of safety risks identified by administrators in general practice. BMJ Open 2014;4:e004245. 10.1136/bmjopen-2013-004245

- 23. Cunningham DE, McNab D, Bowie P. Quality and safety issues highlighted by patients in the handling of laboratory test results by general practices – a qualitative study. BMC Health Serv Res 2014;14:206. 10.1186/1472-6963-14-206

- 24. Kljakovic M. Patients and tests: a study into understanding of blood tests ordered by their doctor. Aust Fam Phys 2012;40:241–3.

- 25. LINNEAUS Euro-PC. Learning from international networks about errors and understanding safety in primary care. http://www.linneaus-pc.eu/ (accessed 20 May 2015).

- 26. Medical Protection Society. http://www.medicalprotection.org/uk/home (accessed 20 May 2015).

- 27. Bowie P, Ferguson J, Price J et al. Measuring system safety for laboratory test ordering and results management in primary care: international pilot study. Qual Prim Care 2014;22:238–7.

- 28. Health and Safety Authority. What is a hazard? http://www.hsa.ie/eng/Topics/Hazards/ (accessed 10 May 2015).

- 29. Wilson JR. Methods in the understanding of human factors. In: Wilson JR, Corlett N, eds. Evaluation of human work. 3rd edn. Boca Raton: CRC Press, 2005:1–31.

- 30. Think-Aloud Protocol. https://www.niar.wichita.edu/humanfactors/toolbox/T_A%20Protocol.htm (accessed 2 June 2015).

- 31. Golafshani N. Understanding reliability and validity in qualitative research. Qual Rep 2003;8:597–607.

- 32. Carayon P, Schoofs Hundt A, Karsh BT et al. Work system design for patient safety: the SEIPS model. Qual Saf Health Care 2006;15(Suppl 1):i50–8. 10.1136/qshc.2005.015842

- 33. de Savigny D, Adam T, eds. Systems thinking for health systems strengthening. Alliance for Health Policy and Systems Research, WHO, 2009.

- 34. Singh R, Hickner J, Mold J et al. “Chance favors only the prepared mind”: preparing minds to systematically reduce hazards in the testing process in primary care. J Patient Saf 2014;10:20–8. 10.1097/PTS.0b013e3182a5f81a

- 35. Hickner J, Graham DG, Elder NC et al. Testing process errors and their harms and consequences reported from family medicine practices: a study of the American Academy of Family Physicians National Research Network. Qual Saf Health Care 2007;17:194–200. 10.1136/qshc.2006.021915

- 36. Litchfield IJ, Bentham LM, Lilford RJ et al. Patient perspectives on test result communication in primary care: a qualitative study. Br J Gen Pract 2015;65:e133–40. 10.3399/bjgp15X683929

- 37. Dean Franklin B, Shebl NA, Barber N. “Failure mode and effects analysis: too little for too much?” BMJ Qual Saf 2012;21:607–11. 10.1136/bmjqs-2011-000723

- 38. Hollnagel E, Braithwaite J, Wears R, eds. Resilient health care. London: Ashgate, 2013.

- 39. Hollnagel E. Safety-I and safety-II: the past and future of safety management. Farnham, UK: Ashgate, 2014.

- 40. National Quality Board. Human factors in healthcare: a concordat from the National Quality Board. 2013. http://www.england.nhs.uk/wp-content/uploads/2013/11/nqb-hum-fact-concord.pdf (accessed 25 Aug 2014).

- 41. Plebani M, Laposata M, Lundberg GD. The brain-to-brain loop concept for laboratory testing 40 years after its introduction. Am J Clin Pathol 2011;136:829–33. 10.1309/AJCPR28HWHSSDNON