Significance

Emotions function to optimize adaptive responses to biologically significant events. In the auditory channel, humans are highly attuned to emotional signals in speech and music that arise from shifts in the frequency spectrum, intensity, and rate of acoustic information. We found that changes in acoustic attributes that evoke emotional responses in speech and music also trigger emotions when perceived in environmental sounds, including sounds arising from human actions, animal calls, machinery, or natural phenomena, such as wind and rain. The findings align with Darwin’s hypothesis that speech and music originated from a common emotional signal system based on the imitation and modification of sounds in the environment.

Keywords: emotion, speech, music, environmental sounds, musical protolanguage

Abstract

Emotional responses to biologically significant events are essential for human survival. Do human emotions lawfully track changes in the acoustic environment? Here we report that changes in acoustic attributes that are well known to interact with human emotions in speech and music also trigger systematic emotional responses when they occur in environmental sounds, including sounds of human actions, animal calls, machinery, or natural phenomena, such as wind and rain. Three changes in acoustic attributes known to signal emotional states in speech and music were imposed upon 24 environmental sounds. Evaluations of stimuli indicated that human emotions track such changes in environmental sounds just as they do for speech and music. Such changes influenced not only evaluations of the sounds themselves but also the emotional interpretation of accompanying facial expressions. The findings illustrate that human emotions are highly attuned to changes in the acoustic environment, and reignite a discussion of Charles Darwin’s hypothesis that speech and music originated from a common emotional signal system based on the imitation and modification of environmental sounds.

Emotional responses to environmental events are essential for human survival. In contexts that have implications for survival and reproduction, the amygdala transmits signals to the hypothalamus, which releases hormones that activate the autonomic nervous system and cause physiological changes, such as increased heart rate, respiration, and blood pressure (1). These bodily changes contribute to the experience of emotion (2), and function to prepare an organism to respond effectively to biologically significant events in the environment (3).

Throughout the arts and media, environmental conditions have been used to connote an emotional character. For example, the acoustic soundscape of film and television can powerfully affect a viewer’s perspectives on the narrative (4). Thus, human emotions appear to track changes in the acoustic environment, but it is unclear how they do this. One possibility is that the acoustic attributes that convey emotional states in speech and music also trigger emotional responses in environmental sounds. This possibility is implied within Charles Darwin’s theory that speech and music originated from a common precursor that developed from “the imitation and modification of various natural sounds, the voices of other animals, and man’s own instinctive cries” (5). Darwin also argued that this primitive system would have been especially useful in the expression of emotion. Modern day music, he reasoned, was a behavioral remnant of this early system of communication (5, 6).

This hypothesis has been elaborated and restated by modern researchers as the “musical protolanguage hypothesis”: speech and music share a common ancestral precursor, a songlike communication system (or musical protolanguage) that was used in courtship, territoriality, and the expression of emotion, and that was based on the imitation and modification of environmental sounds (6–10). Environmental sounds carry biologically significant information reflected in our emotional responses to such sounds. To express an emotional state, early hominins might have selectively imitated and manipulated abstract attributes of environmental sounds that have broad biological significance, vocally modulating pitch, intensity, and rate while disregarding the attributes of sound that are specific to individual sources. Extracting and transposing biologically significant cues in the environment to contexts beyond their original source allowed a new channel of emotional communication to emerge (11–14).

The musical protolanguage hypothesis is supported by recent evidence that speech and music share underlying cognitive and neural resources (15–22), and draw on a common code of acoustic attributes when used to communicate emotional states (23–31). In their review of emotional expression in speech and music, Juslin and Laukka found that higher pitch, increased intensity, and faster rate were associated with more excited and positive emotions in both speech and music (23). More recently, it has been demonstrated that the spectra associated with certain major and minor intervals are similar to the spectra of excited and subdued speech, respectively (26, 27), a finding corroborated in acoustic analyses of South Indian music and speech (28). Furthermore, deficits in music processing are associated with reduced sensitivity to emotional speech prosody (32), whereas enhancements of the capacity to process music are correlated with improved sensitivity to emotional speech prosody (33, 34). For example, a study on individuals with congenital amusia, a neurodevelopmental disorder characterized by deficits in processing acoustic and structural attributes of music, showed that amusic individuals were worse than matched controls at decoding emotional prosody in speech, supporting speculations that music and language share mechanisms that trigger emotional responses to acoustic attributes (32).

Changes in three acoustic attributes are especially important for communicating emotion in speech and music: frequency spectrum, intensity, and rate (23–25). Darwin’s hypothesis implies that these attributes are tracked by human emotions because they reflect biologically significant information about sound sources, such as their size, proximity, and speed. More specifically, the musical protolanguage hypothesis predicts that acoustic attributes that influence the emotional character of speech and music should also have emotional significance when arising from environmental sounds (5).

The present study tested the hypothesis that changes in the frequency spectrum, intensity, and rate of environmental sounds are associated with changes in the perceived valence and arousal of those sounds (23–25). Because the sources and nature of environmental sounds vary considerably according to geographic location, environmental sounds are defined as any acoustic stimuli that can be heard in daily life that are neither musical nor linguistic. Thus, four types of environmental sounds were considered (human actions, animal sounds, machine noise, sounds in nature), each containing six exemplars. For each of these 24 environmental sounds, we manipulated the frequency spectrum, intensity, and rate. In accordance with the circumplex model of emotion, we obtained ratings of the perceived difference in valence (negative to positive) and arousal (calm to energetic) for stimulus pairs that differed in just one of the three manipulated attributes (35, 36). Although not all environmental sounds have a clearly perceptible fundamental frequency, research on pitch sensations for nonperiodic sounds confirms that individuals are sensitive to salient spectral regions and can detect when such regions are shifted (37, 38).
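The stimulus design can be made concrete by enumerating the manipulated versions. The sketch below follows our reading of the design: frequency spectrum and intensity each yield two versions per sound, while rate yields four because it is crossed with the long and short base versions described in Exp. 1c and in Materials and Methods. The dictionary names and level labels are illustrative, and the total of 192 is derived from that reading rather than reported by the study.

```python
from itertools import product

# Sound-types and exemplars as listed under Auditory Stimuli (Materials and Methods).
SOUND_TYPES = {
    "human actions": ["breathing", "chatting", "chewing", "clapping", "stepping", "typing"],
    "animals": ["bird", "cat", "cricket", "horse", "mosquito", "rooster"],
    "machinery": ["car engine", "electrical drill", "helicopter", "jet plane",
                  "screeching tires", "train"],
    "natural phenomena": ["dripping water", "rain", "river", "thunder", "waves", "wind"],
}

# Versions per manipulation (illustrative labels). Rate is crossed with the
# long/short base versions, so it contributes four versions per sound.
MANIPULATIONS = {
    "frequency spectrum": [("high",), ("low",)],
    "intensity": [("loud",), ("soft",)],
    "rate": list(product(("long", "short"), ("fast", "slow"))),
}


def build_stimulus_list():
    """Return one (sound_type, exemplar, manipulation, levels) record per version."""
    return [
        (sound_type, exemplar, manipulation, levels)
        for sound_type, exemplars in SOUND_TYPES.items()
        for exemplar in exemplars
        for manipulation, versions in MANIPULATIONS.items()
        for levels in versions
    ]


stimuli = build_stimulus_list()
print(len(stimuli))  # 24 sounds x (2 + 2 + 4) versions = 192
```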

Results

Two preliminary stimulus verification tests confirmed that all manipulation-types resulted in highly discriminable stimuli, and none of the manipulations had a significant effect on the perceived naturalness of stimuli (Materials and Methods). Next, the three types of manipulation (frequency spectrum, intensity, rate) were examined separately in Exps. 1a–1c, respectively, to minimize fatigue effects and to increase response reliability. Exp. 1a focused on the frequency spectrum of stimuli. For each stimulus, listeners rated the difference in valence and arousal between the high- and low-frequency versions. The same procedure was adopted for manipulations of intensity (Exp. 1b) and rate (Exp. 1c).

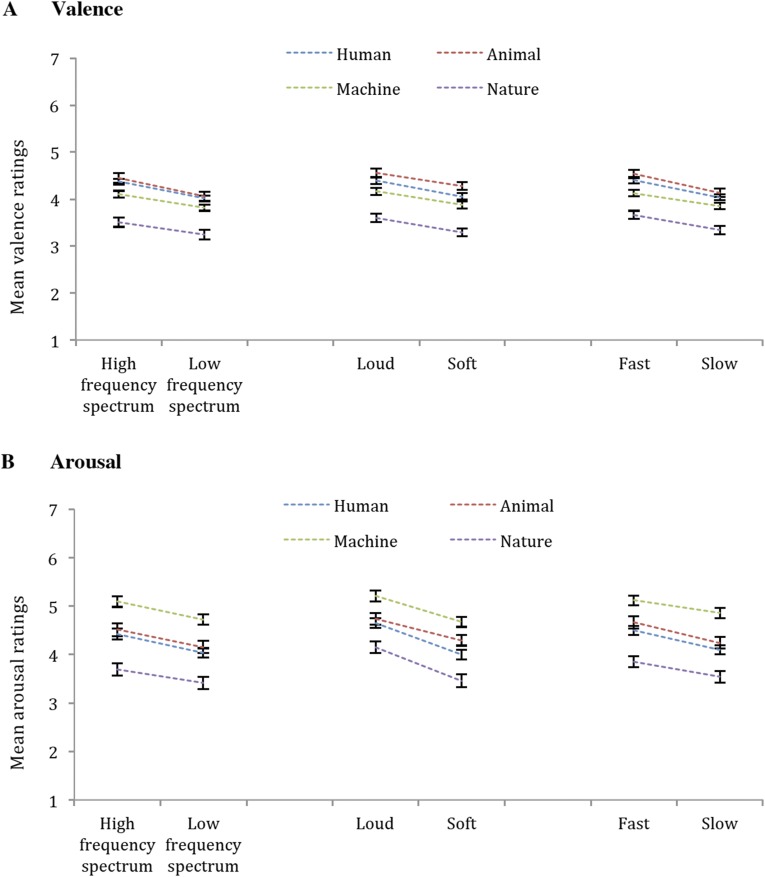

Experiment 1a (Frequency Spectrum).

Valence and arousal ratings were subjected to separate two-by-four ANOVAs with repeated measures on frequency spectrum height (high, low) and sound-type (human actions, animals, machinery, natural phenomena). We observed a main effect of frequency spectrum height for ratings of valence [F(1, 49) = 88.37, P < 0.001, ηp² = 0.64] and arousal [F(1, 49) = 129.76, P < 0.001, ηp² = 0.73], with a higher frequency spectrum associated with more positive valence and greater arousal (Fig. 1). For valence ratings, we also observed a significant main effect of sound-type [F(3, 147) = 5.29, P = 0.002, ηp² = 0.10], with higher ratings associated with human actions and lower ratings associated with animals, machinery, and natural phenomena. The main effect of sound-type was not significant for arousal ratings (P = 0.28).
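The reported effect sizes can be checked against the F ratios, because partial eta squared is recoverable from an F statistic and its degrees of freedom as ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below recomputes the three Exp. 1a values as a consistency check; it is not the authors' analysis code. The same relation also reproduces the ηp² values reported for Exps. 1b, 1c, and 3.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics reported for Exp. 1a: (F, df_effect, df_error)
exp1a = {
    "frequency spectrum, valence": (88.37, 1, 49),
    "frequency spectrum, arousal": (129.76, 1, 49),
    "sound-type, valence": (5.29, 3, 147),
}

for label, (f, df1, df2) in exp1a.items():
    print(f"{label}: {partial_eta_squared(f, df1, df2):.2f}")
# frequency spectrum, valence: 0.64
# frequency spectrum, arousal: 0.73
# sound-type, valence: 0.10
```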

Fig. 1.

Mean valence (A) and arousal (B) ratings for frequency spectrum, intensity, and rate manipulations. For each manipulation-type, the mean ratings (and SEs) for the increased and decreased stimuli for each sound-type are displayed relative to mean ratings for control stimuli. The ratings of rate manipulations are averaged across long and short versions. Separate paired-samples t tests showed that, within each type of manipulation, both valence and arousal ratings decreased significantly from increased stimuli to controls and from controls to decreased stimuli.

Experiment 1b (Intensity).

As in Exp. 1a, valence and arousal ratings were subjected to separate two-by-four ANOVAs with repeated measures on intensity (loud, soft) and sound-type. We found a main effect of intensity for ratings of valence [F(1, 49) = 82.90, P < 0.001, ηp² = 0.63] and arousal [F(1, 49) = 125.90, P < 0.001, ηp² = 0.72], with louder sounds associated with more positive valence and greater energy relative to softer sounds (Fig. 1). There were no main effects of sound-type for either valence (P = 0.07) or arousal (P = 0.37) ratings.

Experiment 1c (Rate).

Because rate manipulations alter the duration of stimuli as well, the pairs of rate-manipulated stimuli always differed in both rate and duration. To address this confound, we created long (7–7.5 s) and short versions (5 s) of each original stimulus before manipulating its rate. This procedure allowed us to separate the effects of duration and rate on the emotional character of sounds. Long and short versions of the original stimuli were subjected to rate manipulations. Separate two-by-two-by-four ANOVAs were conducted with repeated measures on duration (long, short), rate (fast, slow), and sound-type. There were no reliable effects of duration on ratings of valence (P = 0.89) or arousal (P = 0.09). However, there was a main effect of rate for both valence [F(1, 49) = 136.39, P < 0.001, ηp² = 0.74] and arousal [F(1, 49) = 88.43, P < 0.001, ηp² = 0.64], with the fast rate associated with more positive valence and greater arousal, relative to the slow rate (Fig. 1). We also found a main effect of sound-type for ratings of valence [F(3, 147) = 11.13, P < 0.001, ηp² = 0.19] and arousal [F(3, 147) = 6.72, P < 0.001, ηp² = 0.12], with the highest ratings associated with human actions and lower ratings associated with other sounds.
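The logic of crossing rate with two base durations can be illustrated numerically. The sketch below assumes, as our reading of Materials and Methods, that the slow and fast factors (1.3 and 0.7) multiply the duration of the base version; the constant names are hypothetical. Under that assumption it lists the duration range of each rate-manipulated version.

```python
# Assumption: the rate factors given in Materials and Methods (slow = 1.3,
# fast = 0.7) are read as multipliers on the duration of the base version.
RATE_FACTORS = {"fast": 0.7, "slow": 1.3}
BASE_DURATIONS_S = {"short": (5.0, 5.0), "long": (7.0, 7.5)}  # (min, max) in seconds


def output_duration_range(version: str, rate: str) -> tuple[float, float]:
    """Duration range (s) of a rate-manipulated stimulus under the assumption above."""
    lo, hi = BASE_DURATIONS_S[version]
    factor = RATE_FACTORS[rate]
    return (round(lo * factor, 3), round(hi * factor, 3))


for version in ("short", "long"):
    for rate in ("fast", "slow"):
        lo, hi = output_duration_range(version, rate)
        print(f"{version:>5} {rate:>4}: {lo:.2f}-{hi:.2f} s")
```

Under this reading, rate and overall duration are only partly confounded: a slow short stimulus (about 6.5 s) lasts longer than a fast long one (about 4.9 to 5.25 s).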

These findings confirm a close correspondence between attributes that carry emotional information in speech and music and the attributes that carry emotional information in environmental sounds. The acoustic attributes (higher frequency spectrum, increased intensity, and faster rate), which have been associated with valence and arousal in speech and music (23–25), are also tracked by human emotions when they occur in environmental sounds. Frequency, intensity, and rate may interact with human emotions because they carry biologically significant information about the size, proximity, and degree of energy of a sound source. For example, larger species tend to produce lower-pitched calls than smaller species (39); intensity is inversely related to the distance (40) and positively related to the body size and muscle power (41) of the sound source; rate is inherently determined by the speed of motion.

Exp. 2 was designed to validate this result by asking participants to rate the emotional connotation of sounds presented in isolation rather than in pairs. This design addressed the possibility that our acoustic manipulations produced differences in emotional connotation only because contrasting sounds were presented in succession, in which case ratings of isolated sounds would not show reliable effects of the manipulations.

The three types of manipulations (frequency spectrum, intensity, rate) were re-examined in Exps. 2a–2c, respectively, with a new group of participants. On each trial, listeners heard a single sound and rated its valence and arousal. Consistent with Exp. 1, Exp. 2 showed that sounds with a higher frequency spectrum, greater intensity, and faster rate were rated as more positive and energetic than sounds with a lower frequency spectrum, lower intensity, and slower rate (SI Materials and Methods and Fig. S1).

Fig. S1.

Valence (A) and arousal (B) ratings for the three types of manipulation. Means (and SEs) of the ratings for increased and decreased stimuli for the four sound-types are displayed. The ratings for rate manipulations are averaged across long and short versions. Separate paired-samples t tests showed that, within each type of manipulation, both valence and arousal ratings were significantly lower for decreased stimuli than for increased stimuli.

In the first two experiments, participants directly judged the emotional connotations of changes in environmental sounds. Exp. 3 tested the emotional consequences of changes in environmental sounds without asking participants to evaluate the sounds themselves. If human emotions automatically track the acoustic environment, then the presence of environmental sounds should affect emotional judgments in other domains (e.g., vision).

A subset (n = 8) of environmental sounds was used. On each trial, participants were presented with a change in an environmental sound (e.g., an increased sound followed by a decreased sound) along with a change in visual stimuli (e.g., a happy face followed by a neutral face) and were instructed to judge as quickly as possible the emotional change implied by the visual stimuli. Thus, the acoustic channel was irrelevant to the task, allowing us to evaluate any interference that it might have on judgments of the visual channel. We predicted that if the acoustic stimuli changed in a manner that was congruent with the visual change, then classification of the visual change should be rapid; if it was incongruent, however, then classification of the visual change should be slower.
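The congruity coding can be summarized as a comparison of change directions. The sketch below assumes, as our reading of the design, that a trial is congruent when the task-irrelevant sound changes in the same direction as the face on the dimension being judged (valence in valence blocks, arousal in arousal blocks). The `Direction` enum and `classify_trial` function are hypothetical names for illustration.

```python
from enum import Enum


class Direction(Enum):
    """Direction of change across the two successive stimuli on a trial."""
    UP = 1     # e.g., decreased sound -> increased sound; neutral face -> happy face
    DOWN = -1  # e.g., increased sound -> decreased sound; happy face -> neutral face


def classify_trial(auditory: Direction, visual: Direction) -> str:
    """Label a trial by whether the task-irrelevant sound changes in the same
    direction as the task-relevant face."""
    return "congruent" if auditory is visual else "incongruent"


# The two example changes described in the text both point downward.
print(classify_trial(Direction.DOWN, Direction.DOWN))  # congruent
print(classify_trial(Direction.UP, Direction.DOWN))    # incongruent
```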

Average reaction times were calculated for the congruent and incongruent trials for frequency spectrum, intensity, and rate manipulations within separate valence and arousal blocks. Reaction times were subjected to two-by-three ANOVAs with repeated measures on congruity (congruent, incongruent) and manipulation-type (frequency spectrum, intensity, rate) within each block. We observed main effects of congruity in both valence [F(1, 39) = 10.40, P = 0.003, ηp² = 0.21] and arousal [F(1, 39) = 7.98, P = 0.007, ηp² = 0.17] blocks, with congruent trials associated with faster reaction times (Fig. S2). Thus, the mere presence of changes in (irrelevant) background sounds affected participants’ emotional decoding of facial expressions, suggesting that human emotions continuously and automatically track changes in the acoustic environment. The results also suggest that the acoustic environment can shape our visual perception of emotion: our interpretation of what we see is affected by what we hear.
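In a fully within-subject design, the F for the main effect of a two-level factor such as congruity equals the squared paired-samples t computed on each participant's means averaged over the other factor (here, manipulation-type). The sketch below illustrates that equivalence with hypothetical reaction times, not data from this study. With the reported F(1, 39) = 10.40, the same formula gives ηp² = 10.40 / (10.40 + 39) ≈ 0.21.

```python
import math
from statistics import mean, stdev


def congruity_main_effect(rt_congruent, rt_incongruent):
    """Main effect of a two-level within-subject factor.

    Each list holds one mean RT per participant, already averaged over the
    other within-subject factor (here, manipulation-type). Returns
    (F, df_error, partial eta squared).
    """
    diffs = [inc - con for con, inc in zip(rt_congruent, rt_incongruent)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))  # paired-samples t
    f = t ** 2                                        # F(1, n - 1) = t squared
    df_error = n - 1
    return f, df_error, f / (f + df_error)


# Hypothetical per-participant mean RTs (ms); not data from this study.
congruent = [612.0, 655.0, 701.0, 590.0, 640.0, 668.0]
incongruent = [640.0, 662.0, 735.0, 601.0, 668.0, 671.0]
f, df_error, eta_p2 = congruity_main_effect(congruent, incongruent)
print(f"F(1, {df_error}) = {f:.2f}, partial eta squared = {eta_p2:.2f}")
```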

Fig. S2.

Average reaction times for congruent and incongruent trials in the valence and arousal blocks of Exp. 3. In both blocks, reaction times were significantly faster for congruent than for incongruent trials.

SI Materials and Methods

Stimuli.

Twenty students of the University of Electronic Science and Technology of China (UESTC) (M = 23.50 y, SD = 3.33; 10 males) provided perceptual naturalness ratings of the manipulated sounds.

For each participant, average ratings of enhanced and diminished stimuli were calculated within each type of manipulation to confirm that they sounded equally natural, as any differences in naturalness would confound interpretation of our primary experiment on the emotional consequences of the manipulations. Separate two- (manipulation: enhanced, diminished) by-four (sound-type: human actions, animals, machinery, natural phenomena) repeated-measures ANOVAs were conducted to assess the effect of each type of manipulation on naturalness. The main effect of manipulation was not significant for frequency spectrum (P = 0.20), intensity (P = 0.32), or rate (long version: P = 0.79; short version: P = 0.23) manipulations, suggesting that enhanced and diminished stimuli did not differ in perceptual naturalness.
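The naturalness analyses, like the rating analyses in the main text, are two-way fully within-subjects ANOVAs. The sketch below is a minimal pure-Python implementation, assuming complete data and no sphericity correction (the text does not state whether one was applied); each effect is tested against its own interaction with participants. The ratings are randomly generated placeholders. With 20 raters and four sound-types, the sound-type effect has df (3, 57), matching the degrees of freedom reported above.

```python
import math
import random
from statistics import mean, stdev


def rm_anova_two_way(data):
    """Two-way fully within-subjects ANOVA without sphericity correction.

    data[s][i][j] is the score of subject s at level i of factor A (e.g.,
    manipulation: enhanced, diminished) and level j of factor B (e.g.,
    sound-type). Returns {effect: (F, df_effect, df_error)}.
    """
    n, a, b = len(data), len(data[0]), len(data[0][0])
    g = mean(x for subj in data for row in subj for x in row)
    s_m = [mean(x for row in subj for x in row) for subj in data]
    a_m = [mean(data[s][i][j] for s in range(n) for j in range(b)) for i in range(a)]
    b_m = [mean(data[s][i][j] for s in range(n) for i in range(a)) for j in range(b)]
    cell = [[mean(data[s][i][j] for s in range(n)) for j in range(b)] for i in range(a)]
    sa = [[mean(data[s][i]) for i in range(a)] for s in range(n)]
    sb = [[mean(data[s][i][j] for i in range(a)) for j in range(b)] for s in range(n)]

    ss = {
        "A": n * b * sum((m - g) ** 2 for m in a_m),
        "B": n * a * sum((m - g) ** 2 for m in b_m),
        "AxB": n * sum((cell[i][j] - a_m[i] - b_m[j] + g) ** 2
                       for i in range(a) for j in range(b)),
        # Each effect is tested against its own interaction with subjects.
        "AxS": b * sum((sa[s][i] - s_m[s] - a_m[i] + g) ** 2
                       for s in range(n) for i in range(a)),
        "BxS": a * sum((sb[s][j] - s_m[s] - b_m[j] + g) ** 2
                       for s in range(n) for j in range(b)),
        "AxBxS": sum((data[s][i][j] - sa[s][i] - sb[s][j] - cell[i][j]
                      + s_m[s] + a_m[i] + b_m[j] - g) ** 2
                     for s in range(n) for i in range(a) for j in range(b)),
    }
    df = {"A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1)}
    results = {}
    for effect, error in (("A", "AxS"), ("B", "BxS"), ("AxB", "AxBxS")):
        df_error = df[effect] * (n - 1)
        f = (ss[effect] / df[effect]) / (ss[error] / df_error)
        results[effect] = (f, df[effect], df_error)
    return results


# Hypothetical ratings for 20 raters x 2 manipulation levels x 4 sound-types.
random.seed(0)
ratings = [[[random.gauss(4.0, 0.8) for _ in range(4)] for _ in range(2)] for _ in range(20)]
results = rm_anova_two_way(ratings)
for effect, (f, df1, df2) in results.items():
    print(f"{effect}: F({df1}, {df2}) = {f:.2f}")

# Cross-check: for a two-level factor, F equals the squared paired t on
# each rater's means averaged over the other factor.
d = [mean(r[1]) - mean(r[0]) for r in ratings]
t = mean(d) / (stdev(d) / math.sqrt(len(d)))
assert math.isclose(results["A"][0], t ** 2, rel_tol=1e-9)
```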

As expected, sounds in nature (e.g., rain) received higher ratings of naturalness than other sound-types, resulting in a main effect of sound-type for frequency spectrum [F(3, 57) = 31.71, P < 0.001], intensity [F(3, 57) = 20.17, P < 0.001], and rate [long version: F(3, 57) = 21.64, P < 0.001; short version: F(3, 57) = 33.49, P < 0.001].

Follow-up analyses suggested that naturalness did not differ among the remaining three sound-types. For each type of manipulation, two- (manipulation) by-three (sound-type with natural sounds excluded) repeated-measures ANOVAs were conducted. There was no significant effect of sound-type for any manipulation—frequency spectrum (P = 0.28), intensity (P = 0.88), or rate (long version: P = 0.46; short version: P = 0.07)—suggesting that the significant main effect of sound-type revealed in the previous analysis resulted from higher ratings assigned to natural sounds (Table S3).

Table S3.

The means and SDs of the perceptual naturalness ratings for the manipulated stimuli

| Stimuli | Frequency spectrum: High | Frequency spectrum: Low | Intensity: Loud | Intensity: Soft | Rate: Fast | Rate: Slow |
|---|---|---|---|---|---|---|
| Human | 3.90 (0.51) | 3.93 (0.62) | 3.96 (0.70) | 3.83 (0.81) | 3.99 (0.36) | 4.12 (0.78) |
| Animal | 3.60 (0.46) | 3.78 (0.88) | 4.12 (1.11) | 3.78 (0.74) | 3.82 (0.78) | 4.02 (0.85) |
| Machine | 3.70 (0.61) | 3.84 (0.60) | 3.90 (0.80) | 4.05 (0.84) | 3.62 (0.42) | 4.01 (0.79) |
| Nature | 4.88 (0.95) | 5.20 (0.84) | 5.39 (1.55) | 4.72 (0.90) | 5.27 (0.55) | 5.04 (1.00) |

Note: The ratings for rate manipulations were the average ratings across long and short versions. The naturalness ratings did not differ between the increased and decreased stimuli in any types of manipulation.

Experiment 2.

Experiment 2a (frequency spectrum).

Valence and arousal ratings were subjected to separate two-by-four ANOVAs with repeated measures on frequency spectrum height (high, low) and sound-type (human actions, animals, machinery, natural phenomena). We observed a main effect of frequency spectrum height for ratings of valence [F(1, 49) = 62.34, P < 0.001] and arousal [F(1, 49) = 59.45, P < 0.001], indicating that a high-frequency spectrum was associated with more positive valence and greater arousal. The main effect of sound-type was significant for ratings of valence [F(3, 147) = 34.61, P < 0.001] and arousal [F(3, 147) = 40.16, P < 0.001], indicating that participants gave higher ratings of valence and arousal to certain sound-types. The frequency spectrum height × sound-type interaction was not significant for ratings of either valence (P = 0.14) or arousal (P = 0.60).

Valence ratings decreased from animals to human actions to machinery and then to natural phenomena. To examine the reliability of this trend, three two-by-two ANOVAs were conducted with repeated measures on frequency spectrum height and sound-type. Valence ratings did not differ between animals and human actions (P = 0.54). However, human actions received higher valence ratings than machinery [F(1, 49) = 10.03, P = 0.003], which in turn received higher valence ratings than natural phenomena [F(1, 49) = 36.12, P < 0.001] (Fig. S1).

The average arousal ratings decreased from machinery to animals to human actions and to natural phenomena. Three two-by-two ANOVAs were again conducted with repeated measures on frequency spectrum height and sound-type. Results showed that arousal ratings differed between machinery and animals [F(1, 49) = 17.34, P < 0.001] and between human actions and natural phenomena [F(1, 49) = 33.99, P < 0.001], but did not differ between animals and human actions (P = 0.35).

Experiment 2b (intensity).

Valence and arousal ratings were subjected to separate two-by-four ANOVAs with repeated measures on intensity (loud, soft) and sound-type. We observed a main effect of intensity for ratings of valence [F(1, 49) = 50.71, P < 0.001] and arousal [F(1, 49) = 136.27, P < 0.001], suggesting that increased intensity was associated with more positive valence and greater arousal. The main effect of sound-type was also significant for ratings of valence [F(3, 147) = 46.29, P < 0.001] and arousal [F(3, 147) = 27.95, P < 0.001]. However, the intensity × sound-type interaction was not significant for ratings of valence (P = 0.81) or arousal (P = 0.06).

Valence ratings decreased from animals to human actions to machinery then to natural phenomena. Three ANOVAs with repeated measures on intensity and sound-type were conducted to explore this pattern of ratings. Results showed that valence ratings differed between animals and human actions [F(1, 49) = 6.03, P = 0.02], between human actions and machinery [F(1, 49) = 16.81, P < 0.001], and between machinery and natural phenomena [F(1, 49) = 47.15, P < 0.001].

Arousal ratings decreased from machinery to animals to human actions then to natural phenomena. Three ANOVAs with repeated measures on intensity and sound-type were again conducted to explore these ratings. Results showed that arousal ratings differed between machinery and animals [F(1, 49) = 11.10, P = 0.002], and between human actions and natural phenomena [F(1, 49) = 11.77, P < 0.001], but did not differ between animals and human actions (P = 0.08).

Experiment 2c (rate).

Two versions of original stimuli (long, short) were used for rate manipulations. Valence and arousal ratings were subjected to separate two-by-two-by-four ANOVAs with repeated measures on duration (long, short), rate (fast, slow), and sound-type. Results revealed no main effects of duration for either valence (P = 0.52) or arousal ratings (P = 0.78). However, we observed a main effect of rate for ratings of valence [F(1, 49) = 105.01, P < 0.001] and arousal [F(1, 49) = 69.94, P < 0.001], suggesting that fast rate was associated with more positive valence and greater arousal. The main effect of sound-type was also significant for ratings of valence [F(3, 147) = 33.98, P < 0.001] and arousal [F(3, 147) = 38.33, P < 0.001]. However, the rate × sound-type interaction did not approach significance for ratings of valence (P = 0.67) or arousal (P = 0.68).

Valence ratings decreased from animals to human actions to machinery and then to natural phenomena. Three ANOVAs with repeated measures on rate and sound-type were conducted to explore this trend. Valence ratings did not differ between animals and human actions (P = 0.16). However, human actions received higher valence ratings than machinery [F(1, 49) = 6.82, P = 0.01], which in turn received higher valence ratings than natural phenomena [F(1, 49) = 25.95, P < 0.001].

The average arousal ratings decreased from machinery to animals to human actions and then to natural phenomena. Repeated-measures ANOVAs indicated that arousal ratings differed between machinery and animals [F(1, 49) = 14.67, P < 0.001] and between human actions and natural phenomena [F(1, 49) = 26.18, P < 0.001], but did not differ between animals and human actions (P = 0.17).

The main effect of sound-type suggests that there are inherent differences in valence and arousal between the sound-types used in this study. Such effects were not unexpected, but were not the focus of this investigation. Nonetheless, they raise important questions that could be addressed in future research. At present, however, it is unclear whether the effects observed in this study can be generalized to a larger sample of sounds from each category, or whether they are specific to the sample of sounds used in this study.

Experiment 3.

Reaction times were subjected to two-by-three ANOVAs with repeated measures on congruity (congruent, incongruent) and manipulation-type (frequency spectrum, intensity, rate) within the valence and arousal blocks, respectively. In addition to the main effect of congruity reported in the main article, we also examined the effect of manipulation-type. In the arousal test block, the main effect of manipulation-type was not significant (P = 0.26). In the valence test block, however, the main effect of manipulation-type was significant [F(2, 78) = 5.22, P = 0.007]. This effect suggests that congruency in valence was determined more rapidly for some manipulation-types than others. Three two-by-two ANOVAs with repeated measures on manipulation-type and congruity were conducted to explore this effect. Results showed that reaction times were slower for frequency spectrum manipulations than for rate [F(1, 39) = 4.45, P = 0.04] and intensity [F(1, 39) = 8.31, P = 0.006] manipulations. However, reaction times did not differ between intensity and rate manipulations (P = 0.17).

Discussion

This investigation demonstrates that human emotions systematically track changes in the acoustic environment, affecting not only how we experience those sounds but also how we perceive facial expressions in other people. Such effects are consistent with Darwin’s musical protolanguage hypothesis, which posits a transition from emotional communication based on the imitation of environmental sounds to the evolution of speech and music. In contemporary theories that draw on Darwin’s insights, the capacity to imitate and modify sensory input is thought to have been crucial for the evolution of human cognition (8, 42). These theories of cognitive evolution posit a sequence of critical transitions, including one in which a concrete, time-bound representation of the environment evolved into an abstract representation by extracting key features from the environment. An abstract representation provided individuals with an understanding that sensory attributes were not tied to specific environments but had significance independently of circumstances. This transition would have made it possible for individuals to communicate the meaning of stimulus attributes in novel contexts and channels of communication, including vocalizations. This evolutionary stage has been referred to as mimetic cognition (42), and is thought to have mediated the transition that led to human cognitive capacities.

According to this account, mimesis represents a generative and intentional form of communication that can occur in facial expressions, gestures, and vocalizations based on the abstract features of communicative meanings (42). By using vocalizations to imitate acoustic changes in the environment that had biological significance, early hominins could convey environmental conditions to other individuals, communicating second-hand knowledge that could be acted upon adaptively. It is possible that emotive vocalizations gradually came to stand for internal states, including the emotional states of conspecifics. Thus, emotional mimesis may have allowed early hominins to share biologically significant information efficiently. Human memory eventually became inadequate for storing and processing our accumulating collective knowledge, creating the need for a more efficient and effective communication system. This led to the next transition: the invention of language, a revolutionary step in the evolution of human cognition (7, 8, 12, 43). Humans started to construct elaborate symbolic systems, ranging from cuneiforms, hieroglyphics, and ideograms to alphabetic languages and mathematics (44).

At the same time, it has been suggested that protolanguage was insufficient to convey the range of complex emotions associated with large social groups (8). This furthered the separation of speech and music, eventually leading to a fully developed musical system for expressing emotions efficiently and effectively, facilitating group coordination, signaling fitness and creativity, and nurturing social bonds (6, 8). Of course, hypotheses on the precise sequence of evolutionary transitions leading to speech and music are necessarily speculative, given that the early vocalizations that preceded speech and music left no “bones” (45), and there is scant paleontological evidence with which to trace their emergence and subsequent development. What is important for the purposes of this investigation is that the present results are consistent with Darwin’s hypothesis that vestiges of a musical protolanguage (5) should be evident in the emotional parallels between speech, music, and environmental sounds.

Darwin’s musical protolanguage hypothesis can account for the present results, but it should be acknowledged that there are other possible interpretations. For example, it is plausible that emotional responses to a primitive vocalization system are primary and that emotional responses to music and environmental sounds derive from this emotional code. Although it is not possible to adjudicate between competing interpretations based on the evidence to date, it is relevant to note that evolution favors traits that confer a selective advantage. Thus, it is reasonable to suggest that the capacity to track changes in the acoustic environment evolved before the development of a vocalization system for emotional communication (e.g., protolanguage).

Regardless of the evolutionary implications of the effect, the findings illustrate the emotional power of environmental sounds on both our experience of sounds and our evaluations of accompanying visual stimuli. This evidence provides an empirical basis for the pathetic fallacy in the arts, a device in which human emotions are attributed to environmental events (e.g., an angry storm; a bitter winter).

The current study confirmed that human emotions track changes in three core acoustic attributes. However, it should be noted that speech and music have other acoustic attributes with emotional significance (23, 26–28) and share important structural parallels, including syntactic and rhythmic structure (22). As evidence accumulates on the emotional character of environmental sounds, it should be possible to develop models of emotional acoustics that include other attributes (26–28, 34, 37, 46, 47), and that predict how our perceptions of the environment shape our interpretations of people and events around us.

Materials and Methods

Participants.

Participants were recruited at the University of Electronic Science and Technology of China (UESTC). They provided informed consent and testing was approved by the Research Ethics Committee of UESTC. All participants reported having normal hearing and normal or corrected-to-normal vision, had no neurological or psychiatric disorder, were right-handed, and showed no evidence of clinical depression based on the Zung Self-rating Depression Inventory (48), which was completed after the experiment to avoid its potential influences on the emotional rating task.

Auditory Stimuli.

Four types of environmental sounds were used, each containing six exemplars: human actions (breathing, chatting, chewing, clapping, stepping, typing), animal sounds (bird, cat, cricket, horse, mosquito, rooster), machine noise (car engine, electrical drill, helicopter, jet plane, screeching tires, train), and sounds in nature (dripping water, rain, river, thunder, waves, wind). The original stimuli used for the acoustic manipulations were selected from an online database (www.sounddogs.com) (see Audio Files S1–S3 for the original audio files). All sounds had a bit depth of 16 bits and sampling rates between 11,025 and 48,000 Hz (see Table S1 for details). Acoustic manipulations were performed using Audacity 2.1.0.

The same set of original stimuli was used for the frequency spectrum and intensity manipulations. For each of the 24 environmental sounds, we manipulated the frequency spectrum (high/low = ±4 semitones) and intensity (loud/soft = ±5 dB). Frequency spectrum manipulations used a standard pitch-shifting function (Effect – Change Pitch), which shifts frequency content without altering tempo.

For the rate manipulation, we first created long and short versions of each original stimulus. The short version was truncated from the long version and had the same spectral distribution, intensity, and rate as the long version. We then manipulated the rate of the long and short versions separately (slow/fast = 1.3/0.7 times the duration of the original sample) using a function (Effect – Change Tempo) that changes rate without altering pitch.
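The manipulation magnitudes above correspond to simple multiplicative factors. The Python sketch below is illustrative only and is not the authors' Audacity workflow; it assumes equal-tempered semitones (frequency ratio 2^(n/12)), decibels expressed as amplitude ratios (10^(dB/20)), and reads the rate values as factors applied to the duration of the original sample. The function names and the 10-s example duration are hypothetical.

```python
def semitone_ratio(semitones: float) -> float:
    """Frequency ratio for a shift of `semitones` equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)


def db_to_amplitude_ratio(db: float) -> float:
    """Amplitude (not power) ratio for a level change of `db` decibels."""
    return 10.0 ** (db / 20.0)


def scaled_duration(original_s: float, factor: float) -> float:
    """Duration after a tempo change, assuming `factor` multiplies duration."""
    return original_s * factor


# High/low frequency-spectrum versions: +/-4 semitones.
high, low = semitone_ratio(4), semitone_ratio(-4)
# Loud/soft versions: +/-5 dB.
loud, soft = db_to_amplitude_ratio(5), db_to_amplitude_ratio(-5)
# Slow/fast versions of a hypothetical 10-s sample: 1.3x / 0.7x duration.
slow, fast = scaled_duration(10.0, 1.3), scaled_duration(10.0, 0.7)

print(f"+4 st: x{high:.3f}   -4 st: x{low:.3f}")
print(f"+5 dB: x{loud:.3f}   -5 dB: x{soft:.3f}")
print(f"slow: {slow:.1f} s   fast: {fast:.1f} s")
```

Under these assumptions, the high and low versions scale every frequency component by a factor of about 1.26 or 0.79, and a 5-dB change scales amplitude by about 1.78 (about 3.16 in power).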

Table S1.

The sample rate of the auditory stimuli used in this study

| Sample rate (Hz) | Sounds |
|---|---|
| 11,025 | Breathing, typing, bird, rooster, horse, cricket, screeching tire, electrical drill, waves, dripping water, river, thunder |
| 16,000 | Cat |
| 44,100 | Clapping, chewing, mosquito, wind |
| 48,000 | Stepping, chatting, car engine, train, helicopter, jet plane, rain |

Note: The long and short versions of original stimuli used for rate manipulations have the same sample rate as listed in the table.

Stimulus Verification.

We first verified that our manipulations produced pairs of stimuli that were discriminable and sounded natural. Twenty participants completed an auditory discrimination task in which they indicated whether two consecutive stimuli (separated by a 2-s pause) were the same or different (0 = no difference, 1 = subtle difference, 2 = obvious difference). The task consisted of four blocks, each testing one manipulation-type (frequency spectrum, intensity, rate-long, rate-short). Each block had 30 trials: 24 test trials, in which the increased and decreased versions of a stimulus were presented (six per sound-type), and six control trials, in which two identical stimuli were presented. The presentation order of the four blocks was counterbalanced across participants, and the trial order within a block was randomized. The task lasted ∼30 min. All acoustic manipulations resulted in highly discriminable stimuli (Table S2).
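One discrimination block can be sketched as a trial list. This is a minimal Python illustration under stated assumptions, not the authors' presentation script: the order of the two versions within a test pair is randomized, and control pairs reuse randomly chosen unmanipulated sounds, since the text specifies neither detail. `discrimination_block` and the stimulus labels are hypothetical.

```python
import random

SOUNDS = {
    "human": ["breathing", "chatting", "chewing", "clapping", "stepping", "typing"],
    "animal": ["bird", "cat", "cricket", "horse", "mosquito", "rooster"],
    "machine": ["car engine", "electrical drill", "helicopter",
                "jet plane", "screeching tires", "train"],
    "nature": ["dripping water", "rain", "river", "thunder", "waves", "wind"],
}


def discrimination_block(manipulation, n_controls=6, rng=None):
    """Hypothetical block: 24 test pairs plus n_controls identical pairs."""
    rng = rng or random.Random()
    trials = []
    for sound_type, exemplars in SOUNDS.items():
        for ex in exemplars:
            pair = [f"{ex}:increased", f"{ex}:decreased"]
            rng.shuffle(pair)  # assumed: within-pair order is random
            trials.append({"kind": "test", "type": sound_type,
                           "manipulation": manipulation, "pair": tuple(pair)})
    all_exemplars = [ex for exemplars in SOUNDS.values() for ex in exemplars]
    for ex in rng.sample(all_exemplars, n_controls):  # assumed control source
        trials.append({"kind": "control", "type": None,
                       "manipulation": manipulation,
                       "pair": (f"{ex}:original",) * 2})
    rng.shuffle(trials)  # trial order randomized within the block
    return trials


block = discrimination_block("intensity", rng=random.Random(1))
print(len(block), sum(t["kind"] == "control" for t in block))  # 30 6
```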

Table S2.

The means and SDs of the perceptual discriminability ratings for the manipulated stimuli and the paired-sample t tests between experimental trials (manipulated stimuli) and controls

| Sound type | Frequency spectrum: mean (SD) | Frequency spectrum: t vs. controls | Intensity: mean (SD) | Intensity: t vs. controls | Rate: mean (SD) | Rate: t vs. controls |
|---|---|---|---|---|---|---|
| Human | 1.62 (0.32) | 16.83 | 1.59 (0.36) | 16.87 | 1.62 (0.32) | 16.83 |
| Animal | 1.85 (0.22) | 29.98 | 1.32 (0.36) | 13.16 | 1.85 (0.22) | 29.98 |
| Machine | 1.72 (0.30) | 25.30 | 1.59 (0.36) | 16.81 | 1.72 (0.30) | 25.30 |
| Nature | 1.30 (0.44) | 11.10 | 1.42 (0.43) | 13.46 | 1.30 (0.44) | 11.10 |
| Control | 0.17 (0.15) | | | | | |

Note: The rating for the control was the average rating across all manipulation-types. The ratings for rate manipulations were the average ratings across long and short versions. All Ps < 0.001.
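The t values in Table S2 compare each participant's discriminability rating for manipulated pairs with the same participant's rating for controls. A standard-library Python sketch of a paired-sample t statistic follows; `paired_t` is a hypothetical helper, and the five listeners' ratings are invented for illustration and are not the study's data.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-sample t statistic and df for matched per-participant values."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two matched samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of mean difference
    return mean(diffs) / se, len(diffs) - 1


# Invented per-participant mean ratings (0-2 scale), not the study's data.
manipulated = [1.6, 1.8, 1.5, 1.7, 1.9]
control = [0.2, 0.1, 0.3, 0.0, 0.2]
t, df = paired_t(manipulated, control)
print(f"t({df}) = {t:.2f}")
```

With the 20 listeners of the verification task, the degrees of freedom would be 19.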

Another group of 20 participants rated the naturalness of stimuli presented individually (1 = unnatural, 4 = moderately natural, 7 = completely natural). The task consisted of four blocks, each testing one manipulation-type (frequency spectrum, intensity, rate-long, rate-short). Each block had 48 trials (six increased and six decreased trials for each sound-type). The presentation order of the four blocks was counterbalanced across participants and the trial order within a block was randomized. The task lasted ∼40 min.

For each participant, average ratings of increased and decreased stimuli were calculated within each type of manipulation to confirm that they sounded equally natural, because any difference in naturalness would confound interpretation of our primary experiment on the emotional consequences of the manipulations. Separate 2 (manipulation: increased, decreased) × 4 (sound-type) repeated-measures ANOVAs were conducted to assess the effect of each type of manipulation on naturalness. The main effect of manipulation was not significant for the frequency spectrum (P = 0.20), intensity (P = 0.32), or rate (long version: P = 0.79; short version: P = 0.23) manipulations, indicating no detectable difference in perceived naturalness between increased and decreased stimuli (SI Materials and Methods and Table S3).
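For a two-level within-subject factor in a balanced design, the main effect in a repeated-measures ANOVA can be obtained from a paired t test on each participant's marginal means (averaged over the other factor), because F(1, n - 1) = t². The Python sketch below uses this identity for the 2 (manipulation) × 4 (sound-type) design; it is an illustration, not the authors' analysis, and `main_effect_two_level`, the data layout, and the ratings are invented.

```python
import math
from statistics import mean, stdev


def main_effect_two_level(ratings, levels=("increased", "decreased")):
    """F(1, n - 1) for a two-level within-subject factor in a balanced design.

    ratings: one dict per participant mapping each level to that participant's
    mean ratings for the four sound-types (the 2 x 4 cells).
    Uses the identity F = t**2, where t is a paired t on marginal means.
    """
    a = [mean(p[levels[0]]) for p in ratings]  # average over sound-types
    b = [mean(p[levels[1]]) for p in ratings]
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)


# Invented naturalness ratings (1-7) for three participants x four sound-types.
data = [
    {"increased": [5, 4, 6, 5], "decreased": [4, 4, 5, 3]},
    {"increased": [4, 4, 5, 6], "decreased": [4, 3, 5, 5]},
    {"increased": [6, 5, 5, 4], "decreased": [5, 5, 4, 4]},
]
F, df = main_effect_two_level(data)
print(f"F{df} = {F:.2f}")
```

The P value would then come from the F(1, n - 1) distribution, for example with `scipy.stats.f.sf(F, 1, n - 1)`; it is omitted here to keep the sketch dependency-free.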

Experiment 1.

Participants.

Fifty participants (M = 23.32 y, SD = 2.29; 27 males) completed an emotional rating task.

Procedure.

The effects of the three acoustic manipulations (frequency spectrum, intensity, rate) were examined in Exps. 1a–1c, respectively. In Exp. 1a (frequency spectrum), there were 24 experimental trials (six for each type of sound: human actions, animals, machinery, natural phenomena) and six control trials (two identical sounds, selected randomly from the set of unmanipulated sounds). On each trial, participants heard two versions of an exemplar presented consecutively and separated by a 2-s pause, and then rated the difference in emotional character between them. In the experimental trials, the high- and low-frequency versions of a sound were presented: in half of the trials (three per sound-type) the high-frequency version was presented first, and in the other half the low-frequency version was presented first. Twenty-five participants rated the first sound in each pair relative to the second; the other 25 rated the second sound relative to the first. The order of the three part-experiments was counterbalanced across participants, and the order of trials within each part-experiment was randomized independently for each participant. The same procedure was used for the intensity (Exp. 1b) and rate (Exp. 1c) manipulations.
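The counterbalancing in Exp. 1 can be sketched as follows. In this hypothetical Python illustration, `exp1_trials` builds one part-experiment (three increased-first and three decreased-first exemplars per sound-type, plus six controls), and `recode` shows one way, assumed here rather than taken from the text, to place ratings from both rating-direction groups on a common "increased relative to decreased" scale by mirroring the 7-point scale around its midpoint.

```python
import random


def exp1_trials(exemplars_by_type, n_controls=6, rng=None):
    """Hypothetical trial list for one part-experiment of Exp. 1.

    For each sound-type, half of the exemplars play the increased version
    (e.g., high-frequency) first and half play the decreased version first.
    """
    rng = rng or random.Random()
    trials = []
    for sound_type, exemplars in exemplars_by_type.items():
        increased_first = set(rng.sample(exemplars, len(exemplars) // 2))
        for ex in exemplars:
            first = "increased" if ex in increased_first else "decreased"
            trials.append({"type": sound_type, "exemplar": ex, "first": first})
    originals = [ex for exs in exemplars_by_type.values() for ex in exs]
    for ex in rng.sample(originals, n_controls):  # identical unmanipulated pair
        trials.append({"type": "control", "exemplar": ex, "first": None})
    rng.shuffle(trials)  # randomized independently for each participant
    return trials


def recode(rating, first, rated_position):
    """Assumed recoding of a 1-7 rating to 'increased relative to decreased'.

    rated_position: "first" or "second", the sound this participant group
    rated relative to the other one. If the rated sound was the decreased
    version, the scale is mirrored around its midpoint 4 (r -> 8 - r).
    """
    other = {"increased": "decreased", "decreased": "increased"}
    rated = first if rated_position == "first" else other[first]
    return rating if rated == "increased" else 8 - rating


demo = {t: [f"{t}-{i}" for i in range(6)]
        for t in ("human", "animal", "machine", "nature")}
trials = exp1_trials(demo, rng=random.Random(3))
print(len(trials), recode(6, "increased", "second"))  # 30 2
```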

Participants were seated in a sound-attenuated booth and given a demonstration of the task based on instructions written in Chinese: “Sometimes our environment suggests a mood or emotion. This connection is often seen in films and storytelling, which frequently describe weather conditions and details of the environment. In this study, we are examining the possibility that sounds we hear in our environment can sometimes suggest an emotional tone or mood. Your task is to compare the two sounds and rate the emotional connotation of the second sound in relation to the first by using two scales: one ranging from more negative to more positive (1 = more negative, 4 = no change, 7 = more positive), and the other ranging from more calm to more energetic (1 = more calm, 4 = no change, 7 = more energetic). To help you do the task, you might imagine that you are a film director, and your sound editor has introduced a series of environmental sounds to create an overall mood for the film. Imagine that you are giving your sound editor feedback about her choice of sounds and the moods that they evoke.” The auditory stimuli were presented binaurally via headphones at a sound pressure level that was held constant across participants. The task lasted ∼40 min.

Experiment 2.

Participants.

Fifty UESTC students (M = 23.10 y, SD = 1.58; 39 males) completed an emotional rating task.

Procedure.

The procedure was similar to Exp. 1, except that participants heard only one stimulus per trial and the midpoints of the valence and arousal scales were relabeled “neutral.” Exps. 2a and 2b each contained 48 trials (six increased and six decreased trials for each of the four types of sounds); Exp. 2c contained 96 trials (short and long exemplars of the fast and slow versions of each sound). On each trial, listeners heard a single sound and rated it on each dimension of the two-dimensional model of emotion: valence (1 = more negative, 4 = neutral, 7 = more positive) and arousal (1 = more calm, 4 = neutral, 7 = more energetic). The order of the three part-experiments was counterbalanced across participants, and the order of trials within each part-experiment was randomized independently for each participant. The experiment lasted ∼45 min. For each participant, we calculated the average ratings of the increased and decreased stimuli for each sound-type and manipulation.
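The per-participant averaging in Exp. 2 amounts to grouping trials into cells. A minimal Python sketch, assuming a hypothetical trial record with `manipulation`, `sound_type`, `version`, `valence`, and `arousal` fields; for Exp. 2c it pools short and long exemplars within a cell, which is an assumption rather than a detail stated in the text.

```python
from collections import defaultdict
from statistics import mean


def cell_means(trials):
    """Mean valence and arousal per (manipulation, sound_type, version) cell.

    Each trial is a dict with keys "manipulation", "sound_type", "version"
    ("increased" or "decreased"), "valence", and "arousal" (1-7 ratings).
    Any other keys (e.g., a hypothetical "duration" for Exp. 2c) are ignored,
    so short and long exemplars are pooled within a cell.
    """
    cells = defaultdict(lambda: {"valence": [], "arousal": []})
    for t in trials:
        key = (t["manipulation"], t["sound_type"], t["version"])
        cells[key]["valence"].append(t["valence"])
        cells[key]["arousal"].append(t["arousal"])
    return {key: {dim: mean(vals) for dim, vals in d.items()}
            for key, d in cells.items()}


demo = [
    {"manipulation": "rate", "sound_type": "animal", "version": "increased",
     "duration": "long", "valence": 5, "arousal": 6},
    {"manipulation": "rate", "sound_type": "animal", "version": "increased",
     "duration": "short", "valence": 3, "arousal": 5},
    {"manipulation": "rate", "sound_type": "animal", "version": "decreased",
     "duration": "long", "valence": 4, "arousal": 2},
]
print(cell_means(demo))
```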

Experiment 3.

Participants.

Forty UESTC students (M = 23.70 y, SD = 1.37; 27 males) completed a visual emotional judgment task while task-irrelevant environmental sounds were presented simultaneously.

Stimuli preparation.

Visual stimuli.

Facial expressions of 58 actors/actresses were selected from the Radboud Faces Database (49). For each actor/actress, three facial expressions (happy, neutral, surprised) recorded from the frontal angle were used (Fig. S3).

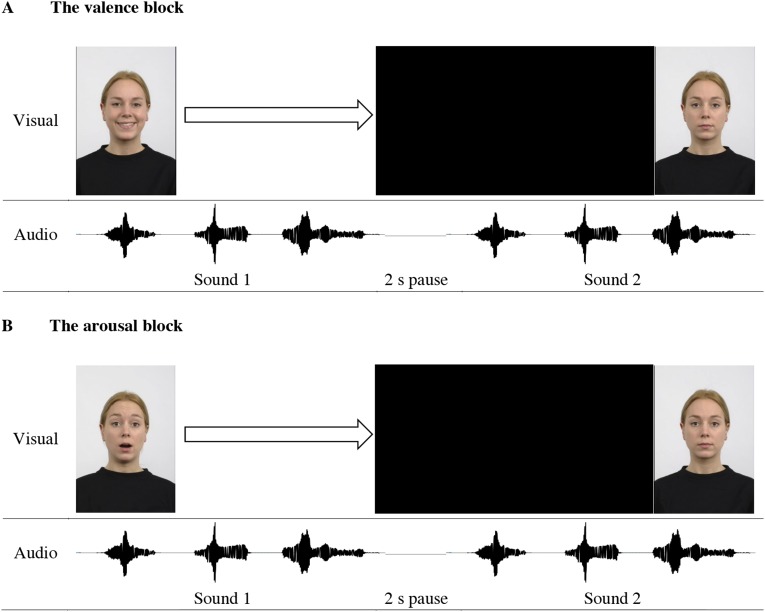

Fig. S3.

An illustration of the design of Exp. 3: (A) the valence block and (B) the arousal block. In each trial, the first visual stimulus was presented synchronously with the first auditory stimulus; a black screen was then shown until the last second of the trial, during which the second facial expression was presented. The auditory stimuli and the timeline in the figure are shown for illustration only.

Auditory stimuli.

Eight environmental sounds, two from each sound-type (clapping, typing, cat, horse, car engine, train, thunder, waves), were used. Because Exps. 1 and 2 showed that duration did not confound emotional perception for rate manipulations, only the long version was used for rate manipulations. Thus, each sound had six versions, manipulated in either frequency spectrum (high, low), or intensity (loud, soft), or rate (fast, slow).

Procedure.

The experiment consisted of a valence block and an arousal block, each containing 48 experimental trials and 10 control trials. On each experimental trial of the valence block, participants saw two facial expressions (happy, neutral) of one actor/actress presented sequentially on a computer monitor while hearing the increased and decreased versions of a sound, presented as in Exp. 1. The length of a trial was determined by the auditory stimulus. The first facial expression was presented synchronously with the first auditory stimulus; a black screen was then shown until the last second of the trial, during which the second facial expression was presented. Participants were asked to indicate as rapidly as possible whether the second facial expression was happier than, as happy as, or less happy than the first. This design allowed adequate processing of the auditory stimulus while encouraging a rapid response to the visual stimulus (Fig. S3).
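The trial timing can be written as an event schedule. The Python sketch below assumes that a trial consists of the first sound, the 2-s pause used in Exp. 1, and the second sound; that the first face stays on screen for exactly the duration of the first sound; and that the second face occupies the final 1 s. `exp3_timeline` and `Event` are hypothetical names, and the 3-s sound durations are invented.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str
    onset_s: float
    offset_s: float


def exp3_timeline(first_sound_s, second_sound_s, gap_s=2.0, final_face_s=1.0):
    """Hypothetical schedule (seconds from trial onset) for one Exp. 3 trial."""
    if final_face_s > gap_s + second_sound_s:
        raise ValueError("second face would overlap the first face")
    trial_end = first_sound_s + gap_s + second_sound_s  # trial length set by audio
    face2_on = trial_end - final_face_s
    return [
        Event("sound 1", 0.0, first_sound_s),
        Event("face 1", 0.0, first_sound_s),          # synchronous with sound 1
        Event("black screen", first_sound_s, face2_on),
        Event("sound 2", first_sound_s + gap_s, trial_end),
        Event("face 2", face2_on, trial_end),         # last second of the trial
    ]


for e in exp3_timeline(3.0, 3.0):
    print(f"{e.label:<13}{e.onset_s:5.1f}{e.offset_s:6.1f}")
```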

In the auditory channel, each sound was presented in two trials within a manipulation-type (frequency spectrum, intensity, rate): once with the high-valence version presented first and once with the low-valence version presented first. In the visual channel, each trial used a single actor/actress: in half of the trials the high-valence (happy) face was presented first, and in the other half the low-valence (neutral) face was presented first. Based on the direction of valence change in the two channels, there were 24 congruent and 24 incongruent trials. Half of the congruent trials involved an increase in both channels and half involved a decrease in both channels. The congruity assignment and the direction of change in the two channels were counterbalanced across participants. A control trial followed every four or five experimental trials; control trials consisted of two identical facial expressions accompanied by environmental sounds that were not used in the experimental trials.
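The congruity assignment and the placement of control trials can be sketched in Python as below. This is a hypothetical illustration, not the authors' script: it assumes that exactly half of the "up" and half of the "down" auditory trials are made congruent (which yields 12 trials with an increase in both channels and 12 with a decrease in both), and that every control trial follows a run of four or five experimental trials, which for 48 experimental and 10 control trials forces eight runs of five and two runs of four.

```python
import random


def assign_face_changes(sound_changes, rng):
    """Make half of the 'up' and half of the 'down' auditory trials congruent.

    Congruent trials get the same face change as the sound; the others get
    the opposite change.
    """
    flip = {"up": "down", "down": "up"}
    congruent = set()
    for direction in ("up", "down"):
        idx = [i for i, c in enumerate(sound_changes) if c == direction]
        congruent.update(rng.sample(idx, len(idx) // 2))
    return [c if i in congruent else flip[c] for i, c in enumerate(sound_changes)]


def interleave_controls(experimental, n_controls=10, rng=None):
    """Insert one control trial after each run of 4 or 5 experimental trials."""
    rng = rng or random.Random()
    n_five = len(experimental) - 4 * n_controls  # runs of five needed
    if not 0 <= n_five <= n_controls:
        raise ValueError("trials cannot be split into runs of 4 or 5")
    runs = [5] * n_five + [4] * (n_controls - n_five)
    rng.shuffle(runs)
    sequence, pos = [], 0
    for k, run in enumerate(runs):
        sequence.extend(experimental[pos:pos + run])
        sequence.append(f"control-{k}")
        pos += run
    return sequence


rng = random.Random(7)
sounds = ["up", "down"] * 24  # 8 sounds x 3 manipulations x 2 orders
faces = assign_face_changes(sounds, rng)
experimental = [f"sound-{s}/face-{f}" for s, f in zip(sounds, faces)]
rng.shuffle(experimental)
block = interleave_controls(experimental, rng=rng)
print(len(block))  # 58
```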

Participants were first given a demonstration of the task based on instructions written in Chinese: “In each trial, you will see two facial expressions presented sequentially. The second facial expression will appear at the end of the trial. Your task is to determine whether the second facial expression is happier than, as happy as, or less happy than the first one. Press the ‘F’ key for an increase, the ‘J’ key for a decrease, and the ‘Space’ key for no change. Please respond as quickly as possible while remaining accurate. In about 20% of the trials, the two faces you see will be identical, so please pay close attention to the task.” The key assignment for the increase and decrease responses was counterbalanced across participants. There was a 2-min break between blocks.

The two blocks used the same auditory stimuli and experimental procedure. However, the arousal block used surprised and neutral faces, and participants judged whether the second facial expression showed more, less, or the same amount of energy as the first. The order of blocks was counterbalanced across participants, and the order of trials within each block was randomized independently for each participant. The experiment contained 116 trials and lasted ∼40 min. The same set of actors/actresses was used in the two blocks.

To ensure reliable reaction time data, responses slower than 4 s were excluded from the analysis. A 4-s limit was chosen because it allowed ample time to judge the appearance of a visual stimulus reliably (50). This criterion excluded 4.8% of responses across participants.
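The exclusion rule is a simple filter. A minimal Python sketch with made-up reaction times, assuming RTs are stored in seconds per participant and that the reported proportion is pooled over all responses from all participants; `exclude_slow` is a hypothetical helper.

```python
def exclude_slow(rts_by_participant, limit_s=4.0):
    """Drop RTs longer than limit_s; return kept RTs and pooled excluded share."""
    kept = {pid: [rt for rt in rts if rt <= limit_s]
            for pid, rts in rts_by_participant.items()}
    total = sum(len(rts) for rts in rts_by_participant.values())
    n_kept = sum(len(rts) for rts in kept.values())
    excluded = (total - n_kept) / total if total else 0.0
    return kept, excluded


# Made-up reaction times in seconds for two hypothetical participants.
rts = {"p01": [0.82, 1.40, 4.60, 2.10], "p02": [0.95, 3.99, 5.20, 1.10]}
kept, share = exclude_slow(rts)
print(f"excluded {share:.1%} of responses")  # excluded 25.0% of responses
```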


Acknowledgments

We thank Diankun Gong for assistance in data collection at the University of Electronic Science and Technology of China. The Australian Research Council supported this research through a research fellowship (to W.M.) in the Australian Research Council Centre of Excellence in Cognition and its Disorders, and through Discovery Grant DP130101084 (to W.F.T.). W.M. is also supported by Chinese Ministry of Education Social Science Funding (11YJC880079) and the Chinese National Educational Research Key Project (GPA115005).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1515087112/-/DCSupplemental.

References

1. Phelps EA, LeDoux JE. Contributions of the amygdala to emotion processing: From animal models to human behavior. Neuron. 2005;48(2):175–187. doi: 10.1016/j.neuron.2005.09.025.

2. Mauss IB, Robinson MD. Measures of emotion: A review. Cogn Emotion. 2009;23(2):209–237. doi: 10.1080/02699930802204677.

3. Gosselin N, Peretz I, Johnsen E, Adolphs R. Amygdala damage impairs emotion recognition from music. Neuropsychologia. 2007;45(2):236–244. doi: 10.1016/j.neuropsychologia.2006.07.012.

4. Smith GM. Film Structure and the Emotion System. Cambridge Univ Press; NY: 2004.

5. Darwin C. The Descent of Man and Selection in Relation to Sex. 1st Ed John Murray; London: 1871.

6. Fitch WT. The biology and evolution of music: A comparative perspective. Cognition. 2006;100(1):173–215. doi: 10.1016/j.cognition.2005.11.009.

7. Brown S. The “musilanguage” model of music evolution. In: Brown S, Merker B, Wallin C, Wallin NL, editors. The Origins of Music. MIT Press; Cambridge, MA: 2000. pp. 271–300.

8. Mithen SJ. The Singing Neanderthals: The Origins of Music, Language, Mind and Body. Harvard Univ Press; Cambridge, MA: 2006.

9. Fitch WT. The evolution of language. In: Gazzaniga M, editor. The Cognitive Neurosciences III. MIT Press; Cambridge, MA: 2004.

10. Fitch WT. The Evolution of Language. Cambridge Univ Press; Cambridge, UK: 2010.

11. Richman B. On the evolution of speech: Singing as the middle term. Curr Anthropol. 1993;34(5):721–722.

12. Dissanayake E. Antecedents of the temporal arts in early mother-infant interaction. In: Brown S, Merker B, Wallin C, Wallin NL, editors. The Origins of Music. MIT Press; Cambridge, MA: 2000. pp. 389–410.

13. Trehub SE. The developmental origins of musicality. Nat Neurosci. 2003;6(7):669–673. doi: 10.1038/nn1084.

14. Levitin DJ. The World in Six Songs: How the Musical Brain Created Human Nature. Dutton Penguin; NY: 2008.

15. Ross D, Choi J, Purves D. Musical intervals in speech. Proc Natl Acad Sci USA. 2007;104(23):9852–9857. doi: 10.1073/pnas.0703140104.

16. Musso M, et al. A single dual-stream framework for syntactic computations in music and language. Neuroimage. 2015;117:267–283. doi: 10.1016/j.neuroimage.2015.05.020.

17. Maess B, Koelsch S, Gunter TC, Friederici AD. Musical syntax is processed in Broca’s area: An MEG study. Nat Neurosci. 2001;4(5):540–545. doi: 10.1038/87502.

18. Levitin DJ, Menon V. Musical structure is processed in “language” areas of the brain: A possible role for Brodmann Area 47 in temporal coherence. Neuroimage. 2003;20(4):2142–2152. doi: 10.1016/j.neuroimage.2003.08.016.

19. Fedorenko E, Patel A, Casasanto D, Winawer J, Gibson E. Structural integration in language and music: Evidence for a shared system. Mem Cognit. 2009;37(1):1–9. doi: 10.3758/MC.37.1.1.

20. Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc Natl Acad Sci USA. 2007;104(40):15894–15898. doi: 10.1073/pnas.0701498104.

21. Besson M, Chobert J, Marie C. Transfer of training between music and speech: Common processing, attention, and memory. Front Psychol. 2011;2:94. doi: 10.3389/fpsyg.2011.00094.

22. Patel AD. Language, music, syntax and the brain. Nat Neurosci. 2003;6(7):674–681. doi: 10.1038/nn1082.

23. Juslin PN, Laukka P. Communication of emotions in vocal expression and music performance: Different channels, same code? Psychol Bull. 2003;129(5):770–814. doi: 10.1037/0033-2909.129.5.770.

24. Ilie G, Thompson WF. A comparison of acoustic cues in music and speech for three dimensions of affect. Music Percept. 2006;23(4):319–329.

25. Ilie G, Thompson WF. Experiential and cognitive changes following seven minutes exposure to music and speech. Music Percept. 2011;28(3):247–264.

26. Bowling DL, Gill K, Choi JD, Prinz J, Purves D. Major and minor music compared to excited and subdued speech. J Acoust Soc Am. 2010;127(1):491–503. doi: 10.1121/1.3268504.

27. Curtis ME, Bharucha JJ. The minor third communicates sadness in speech, mirroring its use in music. Emotion. 2010;10(3):335–348. doi: 10.1037/a0017928.

28. Bowling DL, Sundararajan J, Han S, Purves D. Expression of emotion in Eastern and Western music mirrors vocalization. PLoS One. 2012;7(3):e31942. doi: 10.1371/journal.pone.0031942.

29. Quinto L, Thompson WF, Keating FL. Emotional communication in speech and music: The role of melodic and rhythmic contrasts. Front Psychol. 2013;4:184. doi: 10.3389/fpsyg.2013.00184.

30. Huron D. Science & music: Lost in music. Nature. 2008;453(7194):456–457. doi: 10.1038/453456a.

31. Hauser MD, McDermott J. The evolution of the music faculty: A comparative perspective. Nat Neurosci. 2003;6(7):663–668. doi: 10.1038/nn1080.

32. Thompson WF, Marin MM, Stewart L. Reduced sensitivity to emotional prosody in congenital amusia rekindles the musical protolanguage hypothesis. Proc Natl Acad Sci USA. 2012;109(46):19027–19032. doi: 10.1073/pnas.1210344109.

33. Thompson WF, Schellenberg EG, Husain G. Decoding speech prosody: Do music lessons help? Emotion. 2004;4(1):46–64. doi: 10.1037/1528-3542.4.1.46.

34. Lima CF, Castro SL. Speaking to the trained ear: Musical expertise enhances the recognition of emotions in speech prosody. Emotion. 2011;11(5):1021–1031. doi: 10.1037/a0024521.

35. Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39(6):1161–1178.

36. Posner J, Russell JA, Peterson BS. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev Psychopathol. 2005;17(3):715–734. doi: 10.1017/S0954579405050340.

37. Howard DM. Pitch, models of. In: Thompson WF, editor. Music in the Social and Behavioral Sciences: An Encyclopedia. SAGE Publications; 2014. pp. 867–868.

38. Schnupp J, Nelken I, King A. Periodicity and pitch perception: Physics, psychophysics, and neural mechanisms. In: Schnupp J, Nelken I, King A, editors. Auditory Neuroscience: Making Sense of Sound. MIT Press; Cambridge, MA: 2010. pp. 93–138.

39. Hauser MD. The evolution of nonhuman primate vocalizations: Effects of phylogeny, body weight, and social context. Am Nat. 1993;142(3):528–542. doi: 10.1086/285553.

40. Marten K, Marler P. Sound transmission and its significance for animal vocalization. Behav Ecol Sociobiol. 1977;2(3):271–290.

41. Bennet-Clark HC. Size and scale effects as constraints in insect sound communication. Philos Trans R Soc Lond B Biol Sci. 1998;353(1367):407–419.

42. Donald M. Origins of the Modern Mind: Three Stages in the Evolution of Culture and Cognition. Harvard Univ Press; Cambridge, MA: 1991.

43. Christiansen MH, Kirby S. Language evolution: The hardest problem in science? In: Christiansen M, Kirby S, editors. Language Evolution. Oxford Univ Press; NY: 2003. pp. 77–93.

44. Davies S. The Artful Species: Aesthetics, Art, and Evolution. Oxford Univ Press; Oxford: 2012.

45. Thompson WF. Music Thought & Feeling: Understanding the Psychology of Music. 2nd Ed Oxford Univ Press; NY: 2014.

46. Weninger F, Eyben F, Schuller BW, Mortillaro M, Scherer KR. On the acoustics of emotion in audio: What speech, music and sound have in common. Front Psychol. 2013;4:292. doi: 10.3389/fpsyg.2013.00292.

47. Trainor L. Science & music: The neural roots of music. Nature. 2008;453(7195):598–599. doi: 10.1038/453598a.

48. Zung WWK. A self-rating depression scale. Arch Gen Psychiatry. 1965;12(1):63–70. doi: 10.1001/archpsyc.1965.01720310065008.

49. Langner O, et al. Presentation and validation of the Radboud Faces Database. Cogn Emotion. 2010;24(8):1377–1388.

50. Barbalat G, Bazargani N, Blakemore SJ. The influence of prior expectations on emotional face perception in adolescence. Cereb Cortex. 2013;23(7):1542–1551. doi: 10.1093/cercor/bhs140.
