Abstract

We investigated the dynamics of head movement in mothers and infants during an age-appropriate, well-validated emotion induction, the Still Face paradigm. In this paradigm, mothers and infants play normally for 2 minutes (Play), followed by 2 minutes in which the mothers remain unresponsive (Still Face), and then 2 minutes in which they resume normal behavior (Reunion). Participants were 42 ethnically diverse 4-month-old infants and their mothers. Mother and infant angular displacement and angular velocity were measured using the CSIRO head tracker. In male but not female infants, angular displacement increased from Play to Still Face and decreased from Still Face to Reunion. Infant angular velocity was higher during Still Face than Reunion, with no differences between male and female infants. Windowed cross-correlation suggested that the association between infant and mother head movements changes over time, with dramatic changes in the direction of association. Coordination between mother and infant head movement velocity was greater during Play than during Reunion. Together, these findings suggest that angular displacement, angular velocity, and their coordination between mothers and infants are strongly related to an age-appropriate emotion challenge. Attention to head movement can deepen our understanding of emotion communication.

Index Terms: Still-Face paradigm, Mother-infant interaction, Head movements, Social interaction

1 Introduction

In everyday language and experience, we encounter associations between head movement and emotional expression. For instance, we lower our head in sorrow and embarrassment and raise it in pride. Yet, with few exceptions [32], [20], [3], the relation between head movement and emotion is relatively neglected in the psychology of emotion. Descriptions of prototypic emotions emphasize non-rigid deformations of facial features (e.g., laterally stretched lip corners in fear) rather than the context of rigid head movement in which they may occur (e.g., pulling the head back and away from a perceived threat).

Existing evidence suggests that head movement, facial expression of emotion, and attention may be closely coordinated. When infants visually track an upwardly moving target, their brows raise; when they track the very same target moving from below their line of sight, their brows are lowered and pulled together. These co-occurrences appear to result from coordinated motor patterns [42].

In addition to communicating emotion, head movement serves to regulate social interaction. Head nods and turns serve turn-taking [25] and back-channeling [34] functions, vary with gender and the expressiveness of interactive partners [9], [10], and communicate messages such as agreement or disagreement in interpersonal interaction between intimate partners [30].

Despite its importance in social interaction, head movement has mostly been treated in automatic emotional expression analysis as a “nuisance” variable to be controlled when extracting features for the detection of Action Units (AUs) or facial expressions [23]. Few investigators in this area have considered the relation between head movement and emotion communication in adults. Gunes and Pantic [29] found that head movements of participants interacting with intelligent virtual agents were related to the participants' observed valence and intensity, among other dimensions of emotion. Busso and colleagues [12], [13] found associations between head movement and emotional speech in avatars. Sidner and colleagues [47] pursued related work in human-robot conversation. In emotion portrayals, De Silva and Bianchi-Berthouze [24] found that head movement, in comparison with other body movements, was at best weakly related to intended or perceived emotions. In portrayals of mental states, El Kaliouby and Robinson [26] found stronger linkages between head movement and intended states (e.g., interest and confusion). In spontaneous social interactions in intimate couples, Hammal and colleagues [30] found that head movement varied with interpersonal conflict.

To build on the work reviewed above and to further analyze the characteristics of head movement in the context of social interaction, we studied head movement in face-to-face interaction between mothers and their infants. Mother-infant dyads are important to study because they are among the most influential and defining relationships we establish in our lives. To analyze head movement in mother-infant interaction, we used a well-validated, age-appropriate emotion induction, the Still Face paradigm [50], [1]. The Still Face paradigm consists of three contiguous episodes: mother and infant play normally for 2 minutes (Play), followed by 2 minutes in which the mother is unresponsive (Still Face), and then 2 minutes in which she resumes responsiveness (Reunion). Shared positive affect and co-regulation of social interaction are key developmental tasks in the first half-year, and infants respond powerfully to perturbations of typical mother behavior. During the Play episode, mothers and infants show bouts of shared positive affect and sustained mutual gaze. During the Still Face, infants respond with increased gaze aversion, less smiling, and increased negative affect relative to the Play episode. Much anecdotal evidence suggests that mothers experience considerable discomfort during the Still Face as well. The still-face effect in infants typically extends into the ensuing Reunion episode: for the first half minute or so of the Reunion, infant affect remains more negative and less positive than in the Play episode [21], [39]. Moderator effects are few, but sex differences exist: male infants, relative to female infants, are less regulated during all episodes of the Still Face paradigm [51], [14], [53]. Maternal depression also influences mother and infant affect in the paradigm [15].
Overall, these patterns observed in the paradigm are robust and have been well replicated in many studies [39]. Individual differences in the still-face effect are strongly related to maternal sensitivity during the Play and Reunion episodes, to attachment security at 12 months, and to behavior problems at 18 months or older [21], [39], [43], [19].

Qualitative descriptions of the Still Face effect have often referred to head movement, such as when the infant looks away following an attempt to elicit positive affect from the mother. Tronick [50], for example, described a Still Face episode in which the infant initially orients toward the mother and greets her. When the mother fails to respond, the infant “alternates brief glances toward her with glances away from her.” Finally, “as these attempts fail, the infant eventually withdraws, orients his face and body away from his mother, and stays turned from her.” Beyond such qualitative observations, little is known about the contribution of head movement in this paradigm. The few reported investigations relied on manual coding of short (1-s) intervals into a small set of discrete categories, such as “up” and “down,” using “ordinalized behavior scales” [5], [6]. However, mother-infant social interaction is continuous rather than categorical [40], and manually coding head movement into a few categories discards temporal information. Quantitative measurement and analysis can capture the continuous spatiotemporal dynamics of social interaction between mothers and infants. Efforts in this direction have begun [40], [41]. Messinger and colleagues [40], for example, used automatic measurements to investigate the coordination of mothers' and infants' facial expressions over time. However, no previous work has automatically measured and analyzed the spatiotemporal dynamics of head movement in mother-infant social interaction. Thus, little is known about the perturbation and recovery of head movement in the coordination of emotion exchanges between mothers and infants.

We used the Still Face paradigm to investigate this issue. We hypothesized that head movement would reveal the effects of this age-appropriate emotion challenge. Specifically, we hypothesized that head movement would differ significantly between Play and Still Face and between Still Face and Reunion, with no difference between Play and Reunion [39]. Consistent with previous findings for facial expression [51], [14], [53], we also hypothesized that head movement across all episodes would differ between boys and girls.

Infants were 4 months of age, which is a prime age for face-to-face play. Individual differences in how infants respond in the Still Face paradigm at this age are predictive of a range of developmental outcomes [31], [43], including attachment security and behavior problems.

2 Experimental Set-Up and Head Tracking

2.1 Participants

Participants were 42 ethnically diverse 4-month-old infants (M = 4.07 months, SD = 0.3) and their mothers. Twenty-seven had Hispanic ethnic backgrounds, 14 were Non-Hispanic, and one was unknown. Eight were African-American, 29 were Euro-American, 4 were both, and one was unknown. Twenty-three were boys. All mothers gave their informed consent to the procedures.

2.2 Observational Procedures

Infants were positioned in a seat facing their mothers, who were seated in front of them (see Fig. 1). Following previous research [1], the paradigm consisted of three contiguous episodes: 2 minutes of play interaction (Play), in which the mothers were asked to play with their infants without toys; 2 minutes in which the mothers stopped playing and remained oriented toward their infants but were unresponsive (Still Face); and 2 minutes in which the mothers resumed play (Reunion). A 2-second tone signaled the mother to begin and end each episode. If the infant became significantly distressed during the Still Face (defined as crying for more than 30 seconds), the episode was terminated early. The interaction was recorded using Sony DXC190 compact cameras at 30 frames/s, a Countryman E6 Directional Earset microphone, and a B6 Omnidirectional lavalier microphone. Face orientation to the cameras was approximately 20° from frontal for the infants and close to frontal for the mothers, with considerable head movement (up to about 40° from frontal) for each (see Fig. 2).

Figure 1.

Face-to-Face interaction.

Figure 2.

Example of tracking results of a mother and her infant. Each column corresponds to the same interaction moment between the mother and her infant. Red boxes correspond to the face area and green pyramids to the 3D representation of the detected head pose.

2.3 Automatic Head Tracking

The CSIRO cylinder-based 3D head tracker was used to model the 6 degrees of freedom of rigid head movement [22]. This tracker has high concurrent validity with alternative methods and can reveal the dynamics of head motion in face-to-face interaction. In a recent application, the tracker significantly differentiated between low- and high-conflict episodes in adult intimate and distressed couples [30]. For each participant (mother and infant), the tracker was initialized on a manually selected near-frontal image prior to tracking. Figure 2 shows examples of tracking results for a mother and her infant.

3 Data Reduction

In the following we first describe the results of the automatic head tracking. We then describe how the obtained head tracking results were reduced into angular displacement and angular velocity.

3.1 Head Tracking Results

For each video frame, the tracker output the 6 degrees of freedom of rigid head movement, or a failure message when the frame could not be tracked. To evaluate tracking quality, we visually reviewed the tracking results overlaid on the video (see the red boxes and green pyramids in Fig. 2). Table 1 reports the proportion and mean number of frames whose tracking passed this visual review. Tracking performance was better for mothers than for infants. For infants, no significant difference in tracking results was found between Play, Still Face, and Reunion. Reasons for tracking failure in infants included hand in mouth, extreme head turns (out of frame), and lack of a clear boundary between the lower jaw and chest.
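As an illustration of how the P and N values in Table 1 could be obtained, the sketch below assumes that the visual review yields one boolean flag per frame for each partner; this input format is hypothetical and is not described above. The "both" row counts frames that are valid for mother and infant simultaneously, and averaging the per-participant values across dyads would give episode means of the kind reported in the table.

```python
from typing import Dict, Sequence

def tracking_summary(mother_ok: Sequence[bool],
                     infant_ok: Sequence[bool]) -> Dict[str, Dict[str, float]]:
    """Percentage (P) and number (N) of validly tracked frames in one episode.

    mother_ok / infant_ok are hypothetical per-frame flags: True if the
    frame passed visual review of the tracking overlay.
    """
    if len(mother_ok) != len(infant_ok) or not mother_ok:
        raise ValueError("need non-empty, frame-aligned flag sequences")
    n_frames = len(mother_ok)
    # A frame counts for the dyad only if both partners are tracked in it.
    both_ok = [m and i for m, i in zip(mother_ok, infant_ok)]
    summary = {}
    for label, flags in (("mother", mother_ok), ("infant", infant_ok), ("both", both_ok)):
        n_valid = sum(1 for f in flags if f)
        summary[label] = {"P": 100.0 * n_valid / n_frames, "N": n_valid}
    return summary

# Toy 4-frame episode
print(tracking_summary([True, True, False, True], [True, False, False, True]))
```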

Table 1.

Proportion (P) and Mean Number (N) of Frames with Valid Tracking.

| | Play P (%) | Play N | Still Face P (%) | Still Face N | Reunion P (%) | Reunion N |
|---|---|---|---|---|---|---|
| Mothers | 64.69 | 2409.5 | 89.23 | 3190.6 | 78.16 | 2895.2 |
| Infants | 54.53 | 2025.6 | 47.51 | 1716.0 | 52.56 | 1946.0 |
| Both mother and infant | 28.45 | 1059.6 | 42.89 | 1559.2 | 33.80 | 1252.1 |

3.2 Head Movement Measurement

Head angles in pitch, yaw, and roll were selected to measure head movement. These correspond to head nods (pitch), head turns (yaw), and lateral head inclinations (roll), respectively. For each participant and each episode (Play, Still Face, and Reunion), head angles were converted into angular displacement and angular velocity. To keep missing data from introducing error, angular displacement and angular velocity were computed separately for each consecutive valid segment (i.e., each run of consecutive valid frames) within an episode. For pitch, yaw, and roll, angular displacement was measured by subtracting the mean head angle within each valid segment from the head angle in each frame of that segment. The mean head angle provides an estimate of each participant's overall head position in each segment. For pitch, yaw, and roll, angular velocity was computed as the derivative of angular displacement, thus measuring the speed of change of head orientation from one frame to the next.
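The per-segment computation described above can be sketched as follows. The sketch assumes that a frame the tracker failed on is encoded as None (or NaN) in a per-frame angle list, and it approximates the derivative by the frame-to-frame difference, so velocity comes out in degrees per frame; both choices are illustrative assumptions. Because the segment mean is constant within a segment, differencing the displacement gives the same result as differencing the raw angles.

```python
import math
from typing import List, Optional, Sequence

def valid_segments(angles: Sequence[Optional[float]]) -> List[List[float]]:
    """Split a per-frame angle series into runs of consecutive valid frames."""
    segments: List[List[float]] = []
    current: List[float] = []
    for a in angles:
        if a is None or math.isnan(a):  # a tracking failure ends the current run
            if current:
                segments.append(current)
            current = []
        else:
            current.append(float(a))
    if current:
        segments.append(current)
    return segments

def displacement(segment: Sequence[float]) -> List[float]:
    """Angular displacement: each frame's angle minus the segment's mean angle."""
    mean = sum(segment) / len(segment)
    return [a - mean for a in segment]

def velocity(segment: Sequence[float]) -> List[float]:
    """Angular velocity: frame-to-frame difference of displacement (deg/frame)."""
    disp = displacement(segment)
    return [b - a for a, b in zip(disp, disp[1:])]

# Toy pitch series in degrees; None marks a frame the tracker failed on.
pitch = [1.0, 2.0, 3.0, None, 5.0, 5.0, 8.0]
for seg in valid_segments(pitch):
    print(displacement(seg), velocity(seg))
```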

The root mean square (RMS) was then used to measure the magnitude of variation of the angular displacement and of the angular velocity. The RMS of a set of values is the square root of the arithmetic mean of the squares of those values (Equation 1), in our case the angular displacements and the angular velocities:

$$x_{\mathrm{RMS}} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} x_i^{2}} \qquad (1)$$

where $x_1, \dots, x_n$ are the $n$ values in a valid segment. The RMS of the angular displacement and of the angular velocity was computed separately for each consecutive valid segment in each episode, for each mother and each infant. RMSs as used below refer to the mean of the RMSs of consecutive valid segments (see Section 5, Table 2 and Table 3). In all analyses, RMS angular displacement and RMS angular velocity were used to measure head movement characteristics.
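A minimal sketch of the RMS step, assuming each segment is given as a list of per-frame displacement (or velocity) values for one participant, episode, and angle. Following the text, the episode value is the plain, unweighted mean of the per-segment RMS values; weighting segments by their length would be an alternative design, not the one described here.

```python
import math
from typing import Sequence

def rms(values: Sequence[float]) -> float:
    """Root mean square: square root of the mean of the squared values."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def episode_rms(segments: Sequence[Sequence[float]]) -> float:
    """Unweighted mean of per-segment RMS values (one participant, episode, angle)."""
    per_segment = [rms(seg) for seg in segments if len(seg) > 0]
    if not per_segment:
        raise ValueError("no non-empty valid segments")
    return sum(per_segment) / len(per_segment)

# Two displacement segments with RMS 2.0 and 1.0, so the episode value is 1.5
print(episode_rms([[2.0, -2.0], [1.0, -1.0]]))
```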

Table 2.

Post-hoc paired t-tests for pitch, yaw, and roll RMS angular displacements for mothers and infants.

| Angle | Group | Play M (SD) | Still Face M (SD) | Reunion M (SD) | Play-Still Face t | Play-Reunion t | Still Face-Reunion t |
|---|---|---|---|---|---|---|---|
| Pitch | Mothers | 1.92 (0.83) | 1.28 (0.82) | 1.96 (0.71) | 3.87** | −0.53 | −4.59** |
| | Infants (all) | 1.88 (0.71) | 2.37 (0.88) | 1.91 (0.74) | −2.85** | −0.06 | 3.12** |
| | Boys | 1.80 (0.69) | 2.59 (0.96) | 1.87 (0.70) | −2.97** | −0.24 | 3.51** |
| | Girls | 1.98 (0.75) | 2.10 (0.69) | 1.95 (0.80) | −0.74 | 0.12 | 0.74 |
| Yaw | Mothers | 2.03 (0.88) | 1.28 (0.92) | 2.06 (0.70) | 3.96** | −0.63 | −4.63** |
| | Infants (all) | 1.91 (0.65) | 2.26 (0.83) | 1.90 (0.73) | −2.21* | 0.14 | 2.54** |
| | Boys | 1.83 (0.65) | 2.56 (0.88) | 1.96 (0.77) | −3.54** | −0.74 | 2.95** |
| | Girls | 2.01 (0.66) | 1.89 (0.61) | 1.84 (0.69) | 0.75 | 0.86 | 0.30 |
| Roll | Mothers | 0.98 (0.52) | 0.74 (0.69) | 0.89 (0.36) | 1.84 | 0.44 | −1.25 |
| | Infants (all) | 1.36 (0.53) | 1.64 (0.70) | 1.40 (0.48) | −2.29* | −0.38 | 2.40* |
| | Boys | 1.21 (0.37) | 1.77 (0.82) | 1.36 (0.45) | −3.22* | −1.64 | 2.70* |
| | Girls | 1.54 (0.64) | 1.49 (0.49) | 1.44 (0.53) | 0.44 | 0.76 | 0.39 |

Note: t: t-ratio; df: degrees of freedom (df = 40 for Play-Still Face and Play-Reunion; df = 41 for Still Face-Reunion); p: probability. ** p ≤ 0.01, * p ≤ 0.05.

Table 3.

Post-hoc paired t-tests for pitch, yaw, and roll RMS angular velocities for mothers and infants.

| Angle | Group | Play M (SD) | Still Face M (SD) | Reunion M (SD) | Play-Still Face t | Play-Reunion t | Still Face-Reunion t |
|---|---|---|---|---|---|---|---|
| Pitch | Mothers | 0.75 (0.49) | 0.26 (0.35) | 0.79 (0.72) | 5.44** | −0.48 | −5.59** |
| | Infants | 0.78 (0.39) | 0.94 (0.44) | 0.69 (0.31) | −1.70 | 1.33 | 3.15** |
| Yaw | Mothers | 0.80 (0.51) | 0.27 (0.36) | 0.84 (0.82) | 5.51** | −0.51 | −5.15** |
| | Infants | 0.79 (0.37) | 0.91 (0.43) | 0.68 (0.29) | −1.50 | 1.70 | 3.65** |
| Roll | Mothers | 0.39 (0.30) | 0.14 (0.17) | 0.32 (0.23) | 5.01** | 1.10 | −4.38** |
| | Infants | 0.62 (0.39) | 0.69 (0.36) | 0.59 (0.32) | −0.89 | 0.44 | 1.66 |

Note: t: t-ratio; df: degrees of freedom (df = 40 for Play-Still Face and Play-Reunion; df = 41 for Still Face-Reunion); p: probability. ** p ≤ 0.01, * p ≤ 0.05.


4 Data Analysis

We analyzed the mean differences of the RMS of head angular displacement and RMS of head angular velocity between Play, Still Face, and Reunion. We then used time series analysis to measure the pattern of synchrony and asynchrony in angular displacement and angular velocity between mothers and infants and whether this pattern changes in Reunion compared with Play interaction.

4.1 Comparison of Infants' and Mothers' Head Movement During Play, Still Face, and Reunion

Because of the repeated-measures nature of the data, mixed ANOVAs were used to evaluate mean differences in head angular displacement and angular velocity. The main effects of infant sex and episode (Play, Still Face, and Reunion) and the sex-by-episode interaction¹ were evaluated.

Student's paired t-tests were used for post hoc analyses following significant ANOVAs. The RMSs of the angular displacement and angular velocity for pitch, yaw, and roll were analyzed in separate ANOVAs.
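The post hoc comparison can be sketched as a Student's paired t statistic computed on per-participant RMS values from two episodes. Passing the arguments as (Play, Still Face) gives a positive t when head movement is larger in Play, which matches the sign pattern of the mothers' rows in Tables 2 and 3. The p-value step is omitted here; in practice `scipy.stats.ttest_rel` returns both t and p. The input values below are hypothetical.

```python
import math
from typing import Sequence, Tuple

def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Student's paired t statistic and degrees of freedom for matched samples.

    t = mean(d) / (sd(d) / sqrt(n)), with d = x - y and df = n - 1.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two matched samples with at least 2 pairs")
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    mean_d = sum(d) / n
    var_d = sum((v - mean_d) ** 2 for v in d) / (n - 1)  # sample variance
    if var_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_d / math.sqrt(var_d / n), n - 1

# Hypothetical RMS values for four participants in Play and Still Face
t, df = paired_t([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 1.0])
print(round(t, 2), df)
```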

4.2 Coordination of Head Movement Between Mothers and Infants During Play and Reunion

4.2.1 Zero-Order Correlation

As an initial analysis, we computed the zero-order correlations between mothers' and infants' time series of angular displacement and angular velocity during Play and Reunion. The Still Face episode was excluded because mothers were instructed to remain still and unresponsive, so their head movement was minimal.
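A zero-order correlation is an ordinary Pearson correlation at lag 0. The sketch below assumes that the mother and infant series are frame-aligned and keeps only frames in which both partners are validly tracked, with missing frames encoded as None; how missing frames were handled at this step is not stated, so this pairing rule is an assumption.

```python
import math
from typing import Optional, Sequence

def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson (zero-order) correlation of two equal-length series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined when either series is constant
    return sxy / math.sqrt(sxx * syy)

def zero_order_r(mother: Sequence[Optional[float]],
                 infant: Sequence[Optional[float]]) -> float:
    """Correlate frame-aligned series, keeping frames valid for both partners."""
    pairs = [(m, i) for m, i in zip(mother, infant)
             if m is not None and i is not None]
    return pearson_r([m for m, _ in pairs], [i for _, i in pairs])

print(zero_order_r([1.0, None, 2.0, 3.0], [1.0, 5.0, 2.0, 3.0]))
```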

4.2.2 Windowed Cross-Correlation

Windowed cross-correlation estimates time varying correlations between signals [35], [36] and produces positive and negative correlation values for each (time, lag) pair of values. In [40], for example, the windowed cross-correlation was used to measure local correlation between infant and mother during smiling activity over time. In [30], the windowed cross-correlation was used to compare the correlation of head movements between intimate partners in conflict and non-conflict interaction. Guided by these previous studies, the windowed cross-correlation was used to measure the lead-lag relation between mothers' and infants' head movement.

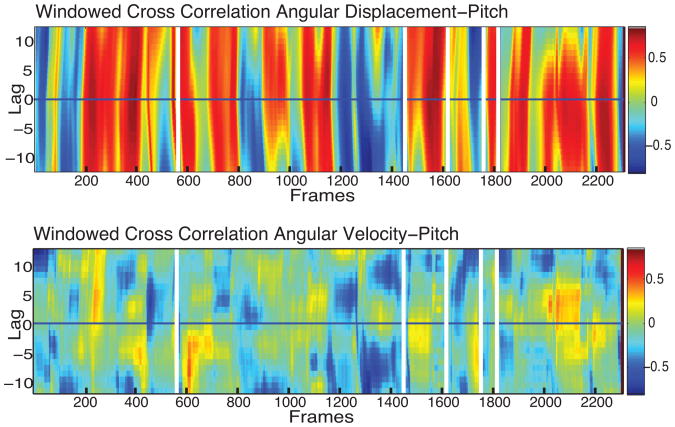

The windowed cross-correlation uses a temporally defined window to calculate successive local zero-order and lagged correlations over the course of an interaction. It requires several parameters: the sliding window size (Wmax), the window increment (Winc), the maximum lag (tmax), and the lag increment (tinc). Previous work found that ±2 s is meaningful for the production and perception of head nods (i.e., pitch) and turns (i.e., yaw) [7], [46], and that 3 s is meaningful for measuring local correlation between mothers and infants during smiling activity over time [40]. Guided by these studies and by inspection of our data, we set the window size to Wmax = 3 s. The maximum lag is the maximum interval of time separating the beginnings of the two windows; it should capture the possible lags of interest while excluding lags that are not of interest. After exploring different lag values, we chose a maximum lag of tmax = 12 samples.

Using the criterion of a minimum duration of 3 s (the size of Wmax) of overlapping good tracking for mothers and infants in both Play and Reunion, 29 dyads had complete data; we report their data for the analysis of the dynamic correlation between mothers' and infants' head movement. The windowed cross-correlation was used to measure the correlations between the mothers' and infants' angular displacement, and between their angular velocity, for pitch, yaw, and roll during Play and Reunion. Fig. 3 shows an example of windowed cross-correlation for pitch angular displacement and angular velocity for one mother and her infant. As for the RMSs (see Section 3.2), so that missing data would not introduce error, the windowed cross-correlation was applied to each consecutive valid segment separately.
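A minimal pure-Python sketch of the windowed cross-correlation for one valid segment follows. Wmax = 90 samples corresponds to 3 s at 30 frames/s, and tmax = 12 samples follows the text; the window and lag increments (Winc, tinc) are not reported, so the defaults of 1 sample are assumptions. The lag convention matches the reading of Fig. 3: at a positive lag the mother's window starts earlier than the infant's, so a high correlation there means the mother's movement predicts the infant's. This sketch shifts only the later partner's window for each lag; other window layouts are possible.

```python
import math
from typing import List, Sequence, Tuple

def _pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined for a flat window
    return sxy / math.sqrt(sxx * syy)

def windowed_xcorr(mother: Sequence[float], infant: Sequence[float],
                   w_max: int = 90, w_inc: int = 1,
                   tau_max: int = 12, tau_inc: int = 1
                   ) -> Tuple[List[int], List[List[float]]]:
    """Windowed cross-correlation of one valid segment.

    Returns (lags, rows): rows[k][j] is the correlation for the k-th window
    position (elapsed time) at lag lags[j]. Positive lag: mother leads.
    """
    if len(mother) != len(infant):
        raise ValueError("series must be frame-aligned")
    n = len(mother)
    lags = list(range(-tau_max, tau_max + 1, tau_inc))
    rows: List[List[float]] = []
    start = 0
    # Every window, at every lag, must fit inside the segment.
    while start + tau_max + w_max <= n:
        row = []
        for tau in lags:
            if tau >= 0:  # mother window at start, infant window tau samples later
                wx = mother[start:start + w_max]
                wy = infant[start + tau:start + tau + w_max]
            else:         # infant window at start, mother window |tau| samples later
                wx = mother[start - tau:start - tau + w_max]
                wy = infant[start:start + w_max]
            row.append(_pearson(wx, wy))
        rows.append(row)
        start += w_inc
    return lags, rows
```

A segment shorter than Wmax + tmax samples yields no rows, which is consistent with requiring a minimum duration of good tracking before a dyad can be analyzed.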

Figure 3.

Example of windowed cross correlation result for pitch angular displacement (top) and pitch angular velocity (bottom).

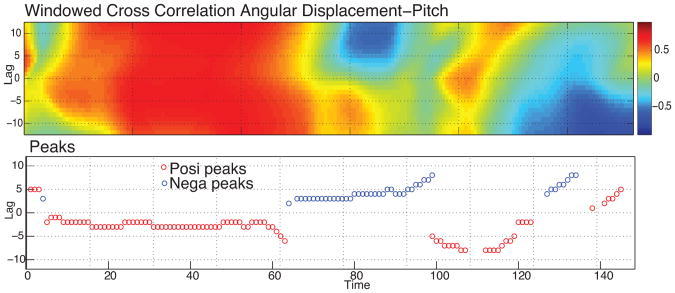

To analyze patterns of change in the resulting cross-correlation matrix, Boker and colleagues [7] proposed a peak-picking algorithm that selects the peak correlation at each elapsed time according to flexible criteria. Each selected peak is the maximum cross-correlation value centered in a local region in which the other values decrease monotonically on each side of the peak [7] (the source code of the peak-picking algorithm reported by Boker et al. is available in [8]). The lower panel of Fig. 4 shows an example of the correlation peaks (red for positive peaks, blue for negative peaks) obtained by applying the peak-picking algorithm to the correlation matrix shown in the upper panel.
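The following is a simplified peak-finding sketch, not a port of the Boker et al. code in [8]. For one row of the matrix (one elapsed time), it reports positive correlations that rise strictly over the `span` lags before them and fall strictly over the `span` lags after them, and negative correlations that mirror this pattern. The `span` parameter is a hypothetical stand-in for the algorithm's flexible local-region criteria, and peaks within `span` lags of ±tmax are not detected.

```python
from typing import List, Sequence, Tuple

def row_peaks(row: Sequence[float], lags: Sequence[int],
              span: int = 2) -> List[Tuple[int, float]]:
    """Simplified peak finding for one row of a windowed cross-correlation.

    A positive peak rises strictly over the `span` lags before it and falls
    strictly over the `span` lags after it; a negative peak is the mirror
    image (a trough). NaN values never satisfy the comparisons.
    """
    peaks: List[Tuple[int, float]] = []
    for k in range(span, len(row) - span):
        left = row[k - span:k + 1]    # span + 1 values ending at lag k
        right = row[k:k + span + 1]   # span + 1 values starting at lag k
        rises = all(a < b for a, b in zip(left, left[1:]))
        falls = all(a > b for a, b in zip(right, right[1:]))
        sinks = all(a > b for a, b in zip(left, left[1:]))
        climbs = all(a < b for a, b in zip(right, right[1:]))
        if rises and falls and row[k] > 0:
            peaks.append((lags[k], row[k]))   # positive peak (red)
        elif sinks and climbs and row[k] < 0:
            peaks.append((lags[k], row[k]))   # negative peak (blue)
    return peaks

row = [0.1, 0.3, 0.6, 0.4, 0.2, -0.1, -0.5, -0.2, 0.0]
print(row_peaks(row, list(range(-4, 5))))
```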

Figure 4.

For the windowed cross-correlation plot in the upper panel, we computed correlation peaks using the peak-picking algorithm. The peaks are shown in the lower panel. Red indicates positive correlation; blue indicates negative correlation.

5 Results

We first report results for tests of mean differences in RMS of angular displacement and RMS of angular velocity. Then we report findings for zero-order correlation and windowed cross-correlation analyses.

5.1 Mothers' Head Movement

5.1.1 Mothers' Angular Displacement

For pitch and yaw RMS angular displacement, there were significant effects for episode (F(2, 116) = 8.96, p < 0.001 and F(2, 116) = 10.62, p < 0.001, respectively)². For roll RMS angular displacement, there was no main effect for episode (F(2, 116) = 1.95, p = 0.14). There were no effects for sex or sex-by-episode interaction for pitch, yaw, or roll RMS angular displacement. Pitch and yaw RMS angular displacement decreased from Play to Still Face and increased from Still Face to Reunion, with no change in roll (Table 2).

5.1.2 Mothers' Angular Velocity

For RMS angular velocity of pitch, yaw, and roll, there were significant effects for episode (F(2, 116) = 11.47, p < 0.001; F(2, 116) = 10.94, p < 0.001; and F(2, 116) = 11.05, p < 0.001, respectively). For pitch and yaw RMS angular velocity, there were significant effects for sex (F(2, 116) = 4.27, p = 0.04 and F(2, 116) = 6.14, p = 0.01, respectively) but no sex effect for roll (F(2, 116) = 0.16, p = 0.68). There was no sex-by-episode interaction for pitch, yaw, or roll RMS angular velocity. Pitch, yaw, and roll RMS angular velocity decreased from Play to Still Face and increased from Still Face to Reunion (Table 3).

Because mothers were instructed to remain unresponsive during the Still Face episode, their angular displacement and angular velocity were, as expected, lower during the Still Face than during Play or Reunion.

5.2 Infants' Head Movement

5.2.1 Infants' Angular Displacement

For pitch RMS angular displacement, there was a significant effect for episode (F(2, 119) = 4.52, p = 0.01) and no effect for sex or sex-by-episode interaction (F(2, 119) = 0.32, p = 0.57 and F(2, 119) = 2.30, p = 0.10, respectively). Pitch RMS angular displacement increased from Play to Still Face and decreased from Still Face to Reunion (Table 2). For yaw and roll RMS angular displacement, there was a marginal effect for episode (F(2, 119) = 2.61, p = 0.07 and F(2, 119) = 2.49, p = 0.08, respectively), no effect for sex (F(2, 119) = 2.48, p = 0.11 and F(2, 119) = 0.16, p = 0.69, respectively), and a significant sex-by-episode interaction (F(2, 119) = 3.71, p = 0.02 and F(2, 119) = 3.01, p = 0.05, respectively). For boys only, RMS head angular displacement increased significantly from Play to Still Face and decreased from Still Face to Reunion (Table 2).

5.2.2 Infants' Angular Velocity

For RMS angular velocity of pitch, yaw, and roll, there were no main effects for sex and no sex-by-episode interactions. There were significant effects for episode for pitch and yaw (F(2, 119) = 4.18, p = 0.01 and F(2, 119) = 3.55, p = 0.03, respectively). These effects were carried by significant decreases from Still Face to Reunion (Table 3); from Play to Still Face, there was no significant change. For roll, there was no effect for episode (F(2, 119) = 0.89, p = 0.41).

In summary, for boys only, RMS angular displacement for pitch, yaw, and roll increased from Play to Still Face and decreased from Still Face to Reunion; for girls, there was no change from one episode to the next. RMS angular velocity, by contrast, showed the same pattern for boys and girls: there was no change from Play to Still Face, and from Still Face to Reunion RMS angular velocity decreased for pitch and yaw, with no change for roll. Thus, for RMS angular displacement, the Still Face effect was found for boys but was absent for girls. For RMS angular velocity, the Still Face effect emerged for pitch and yaw only in the transition to Reunion, for both boys and girls.

5.3 Coordination of Head Movement between Mothers and Infants

5.3.1 Zero-Order Correlation

We first report the zero-order correlations between mothers' and infants' time series of pitch, yaw, and roll angular displacement and angular velocity during Play and Reunion. One-sample t-tests indicated that all mean zero-order correlations differed significantly from 0 (p ≤ 0.01; Table 4). Infants and mothers thus showed significant zero-order correlations in pitch, yaw, and roll in both the Play and Reunion episodes, for both angular displacement and angular velocity. Head movement, however, is dynamic. We reasoned that associations between infant and mother head angular displacement and angular velocity might change over time (see [40] for an illustration of this phenomenon with respect to facial expressions). To examine this possibility, we conducted windowed cross-correlations of the head movement variables.

Table 4.

Mean zero-order correlations of pitch, yaw and roll angular displacement and angular velocity between mothers and infants.

| | Pitch Play | Pitch Reunion | Yaw Play | Yaw Reunion | Roll Play | Roll Reunion |
|---|---|---|---|---|---|---|
| Angular displacement | 0.21 | 0.22 | 0.16 | 0.27 | 0.27 | 0.28 |
| Angular velocity | 0.28 | 0.22 | 0.20 | 0.14 | 0.29 | 0.22 |

Note: All correlations differed significantly from 0, p ≤ 0.01

5.3.2 Windowed Cross-Correlation

Fig. 3 shows an example of a windowed cross-correlation for a mother and infant. In each plot, the area above the midline (Lag > 0) represents the relative magnitude of correlations for which the angular displacement of the mother predicts the angular displacement of the infant; the corresponding area below the midline (Lag < 0) represents the opposite. The midline (Lag = 0) indicates that mother and infant are changing angular displacement at the same time. The plots highlight local periods of positive (red) and negative (blue) correlation between the mother's and infant's head angular displacement (top) and angular velocity (bottom). Positive correlation (red) conveys that the angular displacements of mother and infant are changing in the same way (i.e., increasing together or decreasing together). Negative correlation (blue) conveys that they are changing in opposite ways (e.g., one increases while the other decreases). Note that the direction of the correlations changes dynamically over time. Visual examination of Fig. 3 suggests that angular displacements are strongly correlated between mother and infant, with periods of instability during which the correlation is attenuated or reverses direction.

The lower panel of Fig. 4 shows an example of peak-picking results. Visual inspection of the obtained peaks highlights the pattern described above: the direction of the correlation peaks appears to change dynamically over time, with gradual changes in which partner is leading the other. This suggests that the pattern of influence between mothers and infants is non-stationary. To evaluate whether the overall amount of coordination of mothers' and infants' head movement varied between Play and Reunion as a carryover effect of the Still Face, the mean of the obtained correlation peak values was calculated for each dyad within each episode. So that low correlations would not bias the findings, only correlation peaks greater than or equal to 0.4 were included in the peak correlation analyses. Correlations at or above this threshold represent medium to large effect sizes [18]. The obtained mean correlations were first Fisher r-to-z transformed and then used as the primary dependent measures.
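The thresholding and transformation step can be sketched as follows. The text does not say whether the 0.4 threshold applies to signed or absolute peak values; this sketch reads it literally (r ≥ 0.4, so negative peaks are excluded), which is an assumption. Fisher's r-to-z transform is z = atanh(r) = ½ ln((1 + r)/(1 − r)).

```python
import math
from typing import Iterable

def mean_peak_z(peak_values: Iterable[float], threshold: float = 0.4) -> float:
    """Fisher z of the mean of peak correlations at or above `threshold`.

    Returns NaN when no peak reaches the threshold. NaN peaks (from flat
    windows) are dropped automatically because NaN >= threshold is False.
    """
    kept = [r for r in peak_values if r >= threshold]
    if not kept:
        return float("nan")
    mean_r = sum(kept) / len(kept)
    # atanh is undefined at r = 1; clip just below it to guard against rounding.
    mean_r = min(mean_r, 1.0 - 1e-12)
    return math.atanh(mean_r)

print(mean_peak_z([0.5, 0.3, 0.7, -0.6]))  # mean of the kept peaks 0.5 and 0.7 is 0.6
```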

Mixed ANOVAs (sex by episode) were used to evaluate mean differences in the correlations of head angular displacement and angular velocity between mothers and infants. Student's paired t-tests were used for post hoc analyses following significant ANOVAs. So that missing data would not bias measurements, the mean of the cross-correlation peaks in each episode was computed for each valid segment separately and normalized by the duration of that segment. The mean of these normalized values was then computed and used as the primary dependent measure. Because mothers were asked to stop interacting with their infants in the Still Face episode, only differences between Play and Reunion were considered. Twenty-nine mother-infant pairs had complete data for Play and Reunion. In a mixed ANOVA (sex by episode), no sex or sex-by-episode interaction effects were found for angular displacement or angular velocity. Sex of infant therefore was omitted from further analysis of peaks.

a. Angular displacement

No differences between the Play and Reunion episodes were found in the mean of peak correlations of the angular displacements for pitch, yaw, or roll (F(1, 54) = 0.11, p = 0.74; F(1, 54) = 1.28, p = 0.26; and F(1, 54) = 3.12, p = 0.08, respectively).

b. Angular velocity

Differences between the Play and Reunion episodes were found for the angular velocities of pitch and yaw but not of roll (F(1, 54) = 5.27, p = 0.02; F(1, 54) = 4.51, p = 0.03; and F(1, 54) = 1.24, p = 0.26, respectively). In a second analysis, Student's paired t-tests, with the dyad as the pairing unit, were used as pairwise follow-up analyses to compare the correlations of angular velocity in Reunion with those in Play. Consistent with the ANOVA, within-dyad effects were found in the mean of peak correlations of the angular velocities for pitch and yaw (see Table 5). Thus, mothers' and infants' angular velocities were more highly correlated during Play than during Reunion. This finding is consistent with a carryover effect from the Still Face to Reunion.

Table 5.

Within-dyad effects for normalized mean of peaks of correlation values for angular velocity of pitch, yaw, and roll during Play and Reunion.

Note: t: t-ratio; df: degrees of freedom (df = 28); p: probability; p < 0.05.

6 Discussion

As noted above, the Still-Face paradigm is one of the best-validated procedures for inducing positive and negative affect in mothers and infants [39], [38]. During the Play episode, mother and infant positive affect and joint attention are frequent; during the Still Face episode, infant positive affect and attention to the mother decrease and infant negative affect increases relative to Play. This pattern, referred to as the still-face effect, carries over into the subsequent Reunion. While mother and infant affect have been well studied in the Still Face paradigm, head movement has received only anecdotal attention. Using quantitative measures, we present the first evidence that head movement varies systematically across the three episodes of the Still Face paradigm, in a pattern consistent with previous reports of sex differences in the Still Face. These findings suggest the hypothesis that head movement and facial affect are coupled. Further work will be needed to test this hypothesis.

First, as a manipulation check, we examined mothers' head movement across the three episodes. The angular displacement and angular velocity of mothers' pitch and yaw declined from the Play to the Still Face episode and rebounded in the Reunion. For roll, this pattern was significant for mothers' angular velocity but not for their angular displacement. These results suggest impressive, though not perfect, compliance with the instructions given to the mothers to maintain a still face. To our knowledge, this is the only quantitative examination of such basic experimental compliance.

Second, the findings for head movement mirrored what has been reported previously for positive and negative affect, including the occurrence of sex differences. For boys but not girls, the angular displacement of head pitch, yaw, and roll increased in the transition to the Still Face and then decreased in the recovery from the Still Face to the Reunion. For both boys and girls, pitch and yaw angular velocities were not significantly dampened in the transition to the Still Face but did decrease significantly in the transition to the Reunion.

Previous research indicates that male infants are more inclined than female infants to extremes of both positive and negative emotion [2], and somewhat less regulated in their responses to the mother in the Still Face paradigm [51], [14], [53], [52]. We found that this pattern is also evident in objective measurements of head displacement. Boys but not girls exhibited sensitivity to the still face in measures of pitch, yaw, and roll displacement. Sex differences were not evident in measures of velocity. If replicated, these results might point to important sex differences in infant response to interaction with the mother and to its perturbation. Changes in infant head movement across the Still Face paradigm suggest that infant head movement is responsive to mother head movement (and vice versa); that is, influence is bidirectional. We investigated this possibility with a set of zero-order and windowed cross-correlations.

Third, zero-order correlations indicated significant in-time associations between mother and infant pitch, yaw, and roll, for both angular displacement and angular velocity. These correlations indicate synchrony between infant and mother in a largely unexplored aspect of interaction. Beebe and colleagues [5], [6] have described such associations during play interactions, using manual measurements of a small subset of head movement categories coded on “ordinalized behavior scales”. We instead used continuous measurements of physical motion on a precise quantitative scale to quantify the displacement and velocity of both mother and infant head movement, and their correlation, throughout the Still Face paradigm. For the zero-order correlations, few differences between Play and Reunion emerged. When longer lags and windowed correlations were examined, more differences emerged.
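A zero-order correlation in this sense is the Pearson correlation between the mother's and the infant's series at lag 0, that is, frame by frame with no time shift. The sketch below is an illustration under stated assumptions: both series are assumed to be sampled on the same frame clock, angular velocity is approximated by first differences of angular displacement multiplied by the frame rate, and the 30 fps default, the function names, and the example angles are hypothetical rather than values taken from this study.

```python
import math


def angular_velocity(angles_deg, fps=30.0):
    """First-difference estimate of angular velocity in deg/s.

    fps=30.0 is an assumed frame rate, not a value from the study.
    The output is one sample shorter than the input.
    """
    return [(b - a) * fps for a, b in zip(angles_deg, angles_deg[1:])]


def pearson_r(x, y):
    """Zero-order (lag-0) Pearson correlation of two equal-length series.

    Assumes neither series is constant (nonzero variance).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must have equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical pitch angles (degrees) for a mother and an infant.
mother = [0.0, 1.0, 3.0, 4.0, 4.5, 6.0]
infant = [0.0, 0.5, 2.0, 2.5, 3.0, 4.0]
print(pearson_r(angular_velocity(mother), angular_velocity(infant)))
```

Because the correlation is computed only at lag 0, it cannot detect associations in which one partner follows the other after a delay; that is what the lagged and windowed analyses add.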

Windowed cross-correlation revealed that mother and infant head angular displacement and angular velocity were each tightly linked, with exceptions. The bouts of intermittent high and low correlation recall what Tronick and Cohn [51] described as mismatch-repair cycles: periods of high correlation, or reciprocity, followed by disruption in which one or the other partner deviated, followed by a return to high correlation (i.e., “repair”). Each mismatch presented the dyad with a challenge to resolve. Tronick and Cohn's discovery of mismatch and repair was limited to the Play episode and to manual annotation of holistic measures of emotion; neither head movement nor the Reunion episode was explored. Our findings suggest that mismatches in head movement dynamics are common in both episodes and are more difficult to repair during Reunion for pitch and yaw angular velocities. We interpret these findings as a carryover effect of the Still Face episode. However, we cannot rule out two alternative interpretations. First, coherence could decrease over time and thus could have declined in any case; ruling out this alternative would require a comparison group that was not exposed to the Still Face. Second, missing data due to tracking error may have played a role; further improvements in tracking infant head and facial movements, especially during distress, would be needed to rule out this alternative.
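Windowed cross-correlation, in the sense of Boker and colleagues [7], slides a short window along both series, correlates the mother's window with the infant's window shifted by each lag in a range, and then picks a peak correlation for each window position. The sketch below is a simplified illustration, not the procedure or settings used in this study: the window size, window increment, and maximum lag are hypothetical, and peak picking is reduced to taking the maximum correlation across lags rather than the local-peak search described in [7].

```python
import math


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 when either window is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def windowed_xcorr(mother, infant, win=8, step=4, max_lag=2):
    """Return one (start, best_lag, peak_r) tuple per window position.

    A positive lag means the infant window is taken `lag` samples later
    than the mother window (mother leads). win, step and max_lag are
    hypothetical settings chosen only for illustration.
    """
    rows = []
    n = min(len(mother), len(infant))
    # Start at max_lag and stop early so every shifted window stays in range.
    for start in range(max_lag, n - win - max_lag + 1, step):
        m_win = mother[start:start + win]
        best = None
        for lag in range(-max_lag, max_lag + 1):
            i_win = infant[start + lag:start + lag + win]
            r = pearson_r(m_win, i_win)
            if best is None or r > best[1]:
                best = (lag, r)
        rows.append((start, best[0], best[1]))
    return rows


# Hypothetical series in which the infant copies the mother one sample later.
mother = [math.sin(0.7 * k) for k in range(30)]
infant = [0.0] + mother[:-1]
for start, lag, r in windowed_xcorr(mother, infant):
    print(start, lag, round(r, 3))
```

With this synthetic input every window should report a best lag of 1 (mother leading) with a peak correlation of about 1. In real interaction data, runs of windows with low peak correlation, or with a changing best lag, are the kind of intermittent disruption discussed above.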

Previous work in emotion has emphasized facial expression, and for good reason: the face is a rich source of information about emotion and intention. Yet head movement can also convey much about emotion experience and communication. Infants' head movement changed markedly when mothers became unresponsive. This effect was not only dramatic but also had consequences for the subsequent Reunion. The temporal coordination of head movement between mothers and infants strongly suggests a deep intersubjectivity between them. Body language such as this is well worth attention in automatic affect recognition, and multimodal approaches to emotion detection and coordination are needed. The potential contribution of head movement to facial action unit (AU) detection is also relatively unexplored. Head movement varies systematically with a number of actions, such as smiles (AU 12) of embarrassment [3]. Tong and colleagues [49] have shown the potential value of exploiting anatomical constraints and AU covariation for AU detection. Similar benefit might accrue from including head movement in probabilistic models of facial expression. Head movement is not simply a source of error (as in registration error) but a potential contributor to improved action unit detection. We aim to test this hypothesis.

Within the past decade, there has been increased interest in social robots and intelligent virtual agents. Much of the effort in social robotics has emphasized motor control and facial and vocal expression (e.g., [11], [48]). In virtual agents, there has been more attention to head movement, although mostly in relation to back-channeling (e.g., [16]). The current findings suggest that coordination of head movement between humans and social robots or intelligent virtual agents is an important additional dimension of emotion communication. Head movement figures in behavioral mirroring and mimicry among people and qualifies the communicative function of facial actions (e.g., head pitch in embarrassment [3]). Social robots and intelligent virtual human agents that can interact naturally with humans will need the ability to use this important channel of communication contingently and appropriately, and their design would benefit from further research into human-human interaction with respect to head movement and other body language. Work in this area is just beginning. For example, developmental psychologists and computer scientists using a “baby” robot known as Diego-San have begun to consider the coordination of facial expressions (see [45]). Breazeal's work cited above [11] is another example. To achieve greater reciprocity between people and social robots, designers could broaden their attention to the dynamics of head movement and expression.

7 Conclusion

In summary, we found strong evidence that mother and infant head movement, and its coordination between partners, varied with age-appropriate emotion challenge and interactive context. For male infants, angular head displacement increased from Play to Still Face and then decreased in Reunion. For both male and female infants, angular head velocity decreased from Still Face to Reunion, and mother-infant coordination of angular head velocity was higher during Play than during Reunion. Head movement proved sensitive to interactive context. Attention to head movement can deepen our understanding of emotion and interpersonal coordination, with implications for social robotics and social agents.

Acknowledgments

Research was supported in part by the National Institute of General Medical Sciences (R01GM105004) and the National Science Foundation (1052603, 1052736, and 1052781). The content is solely the responsibility of the authors and does not necessarily represent the official views of the sponsors.

Biographies

Zakia Hammal

Zakia Hammal is a Research Associate at the Robotics Institute at Carnegie Mellon University. Her research interests include computer vision and signal and image processing applied to human-machine interaction, affective computing, and social interaction. She has authored numerous papers in these areas in prestigious journals and conferences. She has served as organizer of several workshops, including CBAR 2012, CBAR 2013, and CBAR 2015. She is a Review Editor in the Human-Media Interaction section of Frontiers in ICT, served as Publication Chair of the ACM International Conference on Multimodal Interfaces in 2014 (ICMI 2014), and serves as a reviewer for numerous journals and conferences in her field. Her honors include an outstanding paper award at ACM ICMI 2012 and an outstanding reviewer award at IEEE FG 2015.

Jeffrey F. Cohn

Jeffrey Cohn is professor of psychology and psychiatry at the University of Pittsburgh and an adjunct professor at the Robotics Institute, Carnegie Mellon University. He has led interdisciplinary and inter-institutional efforts to develop advanced methods of automatic analysis and synthesis of facial and vocal expression and applied them to research in human emotion, interpersonal processes, social development, and psychopathology. He co-chaired the IEEE International Conference on Automatic Face and Gesture Recognition (FG2015 and FG2008), the International Conference on Multimodal Interfaces in 2014 (ICMI2014) and the International Conference on Affective Computing and Intelligent Interaction (ACII2009). His research is supported in part by the U.S. National Institutes of Health and the U.S. National Science Foundation.

Daniel S. Messinger

Daniel Messinger is Professor in the Department of Psychology at the University of Miami, with secondary appointments in Pediatrics, Electrical and Computer Engineering, and Music Engineering. He investigates the social and emotional development of typically developing and at-risk infants and children using computer vision and pattern recognition tools. His current collaborative work involves measurement of emotional dynamics, communicative development among infants at risk for autism, and the application of machine learning approaches to infant-parent interaction. Messinger is a member of the Baby Sibs [Autism] Research Consortium and an Associate Editor of Developmental Science.

Footnotes

An interaction effect is a change in the simple main effect of one variable over levels of the second. Thus, a sex-by-episode interaction would indicate a change in the simple main effect of sex over levels of episode or a change in the simple main effect of episode over levels of sex.

F2,116 denotes an F statistic with its associated degrees of freedom: the first number is the degrees of freedom for the specific effect, and the second is the error degrees of freedom.

Contributor Information

Zakia Hammal, Email: zakia_hammal@yahoo.fr, The Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, USA.

Jeffrey F Cohn, Email: jeffcohn@cs.cmu.edu, the Robotics Institute, Carnegie Mellon University and the Department of Psychology, University of Pittsburgh, Pittsburgh, PA, USA.

Daniel S Messinger, Email: dmessinger@miami.edu, the Department of Psychology at the University of Miami with secondary appointment in Pediatrics, Electrical and Computer Engineering, and Music Engineering, University of Miami, FL, USA.

References

- 1. Adamson LB, Frick JE. The still-face: A history of a shared experimental paradigm. Infancy. 2003;4:451–474.

- 2. Alexander GM, Wilcox T. Sex Differences in Early Infancy. Child Development Perspectives. 2012;6(4):400–406.

- 3. Ambadar Z, Cohn JF, Reed LI. All smiles are not created equal: Morphology and timing of smiles perceived as amused, polite, and embarrassed/nervous. J of Nonver Behav. 2009;33:17–34. doi: 10.1007/s10919-008-0059-5.

- 4. Ashenfelter KT, Boker SM, Waddell JR, Vitanov N. Spatiotemporal symmetry and multifractal structure of head movements during dyadic conversation. J of Experimental Psychology: Human Perception and Performance. 2009;35(4):1072–1091. doi: 10.1037/a0015017.

- 5. Beebe B, Jaffe J, Markese S, Buck K, Chen H, Cohen P, et al. The origins of 12-month attachment: A microanalysis of 4-month mother-infant interaction. Attachment and Human Development. 2010;12:3–141. doi: 10.1080/14616730903338985.

- 6. Beebe B, Steele M, Jaffe J, Buck KA, Chen H, Cohen P, Kaitz M, Markese S, Andrews S, Margolis A, Feldstein S. Maternal anxiety symptoms and mother-infant self- and interactive contingency. Infant Mental Health J. 2011;32(2):174–206. doi: 10.1002/imhj.20274.

- 7. Boker SM, Rotondo JL, Xu M, King K. Windowed cross correlation and peak picking for the analysis of variability in the association between behavioral time series. Psych Methods. 2002;7:338–355. doi: 10.1037/1082-989x.7.3.338.

- 8. Boker SM, Xu M. Windowed cross-correlation and peak picking. 2002. doi: 10.1037/1082-989x.7.3.338. Available: http://people.virginia.edu/smb3u/

- 9. Boker SM, Cohn JF, Theobald BJ, Matthews I, Spies J, Brick T. Effects of damping head movement and facial expression in dyadic conversation using real-time facial expression tracking and synthesized avatars. Philosophical Trans B of the Royal Society. 2009;364:3485–3495. doi: 10.1098/rstb.2009.0152.

- 10. Boker SM, Cohn JF, Theobald BJ, Matthews I, Mangini M, Spies JR, et al. Something in the way we move: Motion, not perceived sex, influences nods in conversation. J of Experim Psycho: Human Perception and Performance. 2011;37:874–891. doi: 10.1037/a0021928.

- 11. Breazeal C. Designing Sociable Robots. The MIT Press; 2002.

- 12. Busso C, Deng Z, Grimm M, Neumann U, Narayanan S. Rigid Head Motion in Expressive Speech Animation: Analysis and Synthesis. IEEE Trans on Audio, Speech, and Language Processing. 2007 Mar;15(3):1075–1086.

- 13. Busso C, Deng Z, Grimm M, Neumann U, Narayanan S. Learning expressive human-like head motion sequences from speech. In: Deng Z, Neumann U, editors. Data-Driven 3D Facial Animations. Surrey, United Kingdom: Springer-Verlag London Ltd; 2008. pp. 113–131.

- 14. Carter AS, Mayes LC, Pajer KA. The role of dyadic affect in play and infant sex in predicting infant response to the still-face situation. Child Development. 1990;61(3):764–773.

- 15. Campbell SB, Cohn JF. Prevalence and correlates of postpartum depression in first-time mothers. J of Abnormal Psychology. 1991;100:594–599. doi: 10.1037//0021-843x.100.4.594.

- 16. Cassell J, Bickmore T, Campbell L, Vilhjálmsson H, Yan H. Human Conversation as a System Framework: Designing Embodied Conversational Agents. In: Cassell J, et al., editors. Embodied Conversational Agents. Cambridge, MA: MIT Press; 2000. pp. 29–63.

- 17. Chow S, Haltigan JD, Messinger DS. Dynamic Infant-Parent Affect Coupling during the Face-to-Face/Still-Face. Emotion. 2010;10:101–114. doi: 10.1037/a0017824.

- 18. Cohen J. Statistical power analysis for the behavioral sciences. 2nd. New Jersey: Lawrence Erlbaum; 1988.

- 19. Cohn JF, Campbell SB, Ross S. Infant response in the still face paradigm at 6 months predicts avoidant and secure attachment at 12 months. Development and Psychopathology. 1991;3:367–376.

- 20. Cohn JF, Reed LI, Moriyama T, Xiao J, Schmidt KL, Ambadar Z. Multimodal coordination of facial action, head rotation, and eye motion. 6th IEEE Inter Conf on AFGR; Seoul, Korea. 2004.

- 21. Cohn JF. Additional components of the still-face effect: Commentary on Adamson and Frick. Infancy. 2003;4:493–497.

- 22. Cox M, Nuevo-Chiquero J, Saragih JM, Lucey S. CSIRO Face Analysis SDK. Brisbane, Australia: 2013.

- 23. De la Torre F, Cohn JF. Visual analysis of humans: Facial expression analysis. In: Moeslund TB, Hilton A, Krüger V, Sigal L, editors. Visual analysis of humans: Looking at people. Springer; 2011. pp. 377–410.

- 24. De Silva R, Bianchi-Berthouze N. Modeling human affective postures: an information theoretic characterization of posture features. J of Comp Animation and Virtual Worlds. 2004;15(3-4):269–276.

- 25. Duncan S. Some signals and rules for taking speaking turns in conversations. J of Personality and Social Psychology. 1972;23:283–292.

- 26. El Kaliouby R, Robinson P. Real-time inference of complex mental states from facial expressions and head gestures. Real-time vision for HCI. 2005:181–200.

- 27. Fleiss JL. Statistical methods for rates and proportions. New York: Wiley; 1981.

- 28. Gratch J, Wang N, Okhmatovskaia A, Lamothe F, Morales M, van der Werf RJ, et al. Can virtual humans be more engaging than real ones? Inter Conf on HCI; Beijing, China. 2007.

- 29. Gunes H, Pantic M. Dimensional emotion prediction from spontaneous head gestures for interaction with sensitive artificial listeners. In: J A, et al., editors. Intelligent virtual agents. Vol. 6356. Heidelberg: Springer-Verlag Berlin; 2010. pp. 371–377.

- 30. Hammal Z, Cohn JF, George DT. Temporal Coordination of Head Motion in Distressed Couples. IEEE Trans Affec Comp. 2014. doi: 10.1109/TAFFC.2014.2326408.

- 31. Jaffe J, Beebe B, Feldstein S, Crown CL, Jasnow M. Rhythms of dialogue in early infancy. Monographs of the Society for Research in Child Development. 2001;66; Jang JS, Kanade T. Robust 3D head tracking by online feature registration. IEEE Inter Conf on AFGR; Amsterdam, The Netherlands. 2008.

- 32. Keltner D. Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement and shame. J of Personality and Social Psychology. 1995;68:441–454.

- 33. Klier EM, Wang H, Crawford JD. Three-dimensional eye-head coordination is implemented downstream from the superior colliculus. J of Neurophysiology. 2003;89:2839–2853. doi: 10.1152/jn.00763.2002.

- 34. Knapp ML, Hall JA. Nonverbal behavior in human communication. 7th. Boston: Wadsworth/Cengage; 2010.

- 35. Laurent G, Davidowitz H. Encoding of olfactory information with oscillating neural assemblies. Science. 1994;265:1872–1875. doi: 10.1126/science.265.5180.1872.

- 36. Laurent G, Wehr M, Davidowitz H. Temporal representations of odors in an olfactory network. J Neurosci. 1996;16(12):3837–47. doi: 10.1523/JNEUROSCI.16-12-03837.1996.

- 37. Li R, Huang TS, Danielsen M. Real-time multimodal human-avatar interaction. IEEE Trans on Circuits and Syst for Video Technology. 2008;18:467–477.

- 38. Mattson WI, Cohn JF, Mahoor MH, Gangi DN, Messinger DS. Darwin's Duchenne: Eye constriction during infant joy and distress. PLoS ONE. 2013;8(11). doi: 10.1371/journal.pone.0080161.

- 39. Mesman J, van IJzendoorn MH, Bakermans-Kranenburg MJ. The many faces of the Still-Face Paradigm: A review and meta-analysis. Developmental Review. 2009;29(2):120–162.

- 40. Messinger DS, Mahoor MH, Chow SM, Cohn JF. Automated measurement of facial expression in infant-mother interaction: A pilot study. Infancy. 2009;14(3):285–305. doi: 10.1080/15250000902839963.

- 41. Messinger DS, Mattson WI, Mahoor MH, Cohn JF. The eyes have it: Making positive expressions more positive and negative expressions more negative. Emotion. 2012;12:430–436. doi: 10.1037/a0026498.

- 42. Michel GF, Camras L, Sullivan J. Infant interest expressions as coordinative motor structures. Infant Behavior and Development. 1992;15:347–358.

- 43. Moore GA, Cohn JF, Campbell SB. Infant affective responses to mother's still-face at 6 months differentially predict externalizing and internalizing behaviors at 18 months. Developmental Psychology. 2001;37:706–714.

- 44. Morency LP, Sidner C, Lee C, Darrell T. Head Gestures for Perceptual Interfaces: The Role of Context in Improving Recognition. Artificial Intelligence. 2007 Jun;171(8-9):568–585.

- 45. Movellan et al. 2013. http://ucsdnews.ucsd.edu/pressrelease/machine_perception_lab_shows_robotic_one_year_old_on_video

- 46. Rotondo JL, Boker SM. Behavioral synchronization in human conversational interaction. In: Stamenov M, Gallese V, editors. Mirror neurons and the evolution of brain and language. Amsterdam: John Benjamins; 2002.

- 47. Sidner C, Lee C, Morency LP, Forlines C. The Effect of Head-Nod Recognition in Human-Robot Conversation. Trans of the 1st Annual Conf on Human-Robot Interaction; Salt Lake City, Utah, USA. March 2006.

- 48. Schröder M, Bevacqua E, Cowie R, Eyben F, Gunes H, Heylen D, et al. Building Autonomous Sensitive Artificial Listeners. IEEE Trans on Affective Computing. 2012.

- 49. Tong Y, Chen J, Ji Q. A Unified Probabilistic Framework for Spontaneous Facial Action Modeling and Understanding. IEEE Trans on PAMI. 2010 Feb;32(2):258–273. doi: 10.1109/TPAMI.2008.293.

- 50. Tronick EZ, Als H, Adamson L, Wise S, Brazelton TB. The infant's response to entrapment between contradictory messages in face-to-face interaction. J of the American Academy of Child Adolescent Psychiatry. 1978;17:1–13. doi: 10.1016/s0002-7138(09)62273-1.

- 51. Tronick EZ, Cohn JF. Infant-mother face-to-face interaction: Age and gender differences in coordination and the occurrence of miscoordination. Child Development. 1989;60:85–92.

- 52. Tronick EZ, Weinberg MK. Gender differences and their relation to maternal depression. In: Johnson SL, Hayes AM, Field TM, Schneiderman N, McCabe PM, editors. Stress, coping, and depression. Mahwah, NJ: Erlbaum; 2000. pp. 23–34.

- 53. Weinberg MK, Tronick EZ, Cohn JF, Olson KL. Gender differences in emotional expressivity and self-regulation during early infancy. Developmental Psychology. 1999;35(1):175–188. doi: 10.1037//0012-1649.35.1.175.

- 54. Xiao J, Baker S, Matthews I, Kanade T. Real-time combined 2D+3D active appearance models. IEEE Inter Conf CVPR. 2004:535–542.