Abstract

Previous studies have reported that concurrent manual tracking enhances smooth pursuit eye movements only when tracking a self-driven or a predictable moving target. Here, we used a control-theoretic approach to examine whether concurrent manual tracking enhances smooth pursuit of an unpredictable moving target. In the eye-hand tracking condition, participants used their eyes to track a Gaussian target that moved randomly along a horizontal axis. At the same time, they used their dominant hand to move a mouse to control the horizontal movement of a Gaussian cursor to vertically align it with the target. In the eye-alone tracking condition, the target and cursor positions recorded in the eye-hand tracking condition were replayed, and participants only performed eye tracking of the target. Catch-up saccades were identified and removed from the recorded eye movements, allowing for a frequency-response analysis of the smooth pursuit response to unpredictable target motion. We found that the overall smooth pursuit gain was higher and the number of catch-up saccades was lower when eye tracking was accompanied by manual tracking than when it was not. We conclude that concurrent manual tracking enhances smooth pursuit. This enhancement is a fundamental property of eye-hand coordination that occurs regardless of the predictability of the target motion.

Keywords: manual tracking, smooth pursuit, catch-up saccades, eye movements, eye-hand coordination

Introduction

Successful completion of many motor tasks in our daily life, such as handwriting or catching a fast-approaching baseball, requires seamless coordination between our eyes and hands. Previous research that studied eye-hand coordination found that concurrent hand tracking enhances eye tracking of a moving target. For instance, in both humans and monkeys, smooth pursuit eye movements, a continuous type of eye movement that maintains foveal vision of a moving object of interest, are enhanced when a visual target is tracked with eyes and hand concurrently compared to when the target is tracked with the eyes only. Specifically, during concurrent eye-hand tracking of a target, the peak smooth pursuit velocity is higher (Gauthier, Vercher, Mussa Ivaldi, & Marchetti, 1988; Koken & Erkelens, 1992), the eye follows the target closer and with shorter latency (Angel & Garland, 1972; Gauthier & Hofferer, 1976; Gauthier et al., 1988; Mather & Lackner, 1980; Steinbach, 1969), and fewer catch-up saccades are made (Angel & Garland, 1972; Jordan, 1970; Mather & Lackner, 1980; Steinbach & Held, 1968).

All the studies discussed previously used a visual target that was either self-driven or followed a predictable (sinusoidal, sawtooth, or square wave) trajectory. As such, in these studies, a predictive mechanism (Bahill & McDonald, 1983; Barnes & Asselman, 1991; Stark, Vossius, & Young, 1962) that forms an internal model of the target motion and anticipates the position and speed of the target played an important role in regulating smooth pursuit of the target to ensure that pursuit errors were small and few catch-up saccades were needed to track the target. However, real-world targets, such as a mosquito buzzing around the bedroom, generally do not follow predictable trajectories. Due to their unpredictable movement, no internal model of the target motion can be formed and the predictive mechanism cannot regulate pursuit eye movements to such targets (Miall, Weir, & Stein, 1986; Stark et al., 1962; Xia & Barnes, 1999). Instead, in this case pursuit eye movements are primarily regulated online by a feedback mechanism relying on the retinal slip of the target (Lisberger, 2010), and frequent catch-up saccades are needed to correct tracking errors (e.g., Ke, Lam, Pai, & Spering, 2013; Puckett & Steinman, 1969).

Two previous studies (Koken & Erkelens, 1992; Xia & Barnes, 1999) have examined eye-hand tracking of unpredictable moving targets and found that concurrent manual tracking did not enhance smooth pursuit eye movements or reduce the number of catch-up saccades. These findings suggest that concurrent manual tracking enhances smooth pursuit eye movements only when the target moves predictably and smooth pursuit is thus regulated by the predictive mechanism. Nevertheless, at least two factors might account for the absence of an enhancement effect in these two studies. First, neither study matched the displays in its two experimental conditions. Specifically, while the display contained only a moving target when the eye tracked the target alone, the display contained both a moving target and a moving cursor yoked to the hand position when both the eye and hand tracked the moving target. As tracking the cursor showing the hand position also required attention, it could have drawn attention away from the moving target and thus have had a detrimental effect on the eye tracking of the target. Indeed, Xia and Barnes (1999) reported that the precision of eye tracking was worse when both the eye and the hand tracked the unpredictable target than when only the eye tracked the target. Thus, the lack of an enhancement effect of concurrent hand tracking on smooth pursuit eye movements in this study could be due to the less precise eye tracking of the moving target when both the eye and hand tracked the target. Second, Koken and Erkelens (1992) collected data from only two participants. Their study thus lacked sufficient power to observe the enhancement effect.

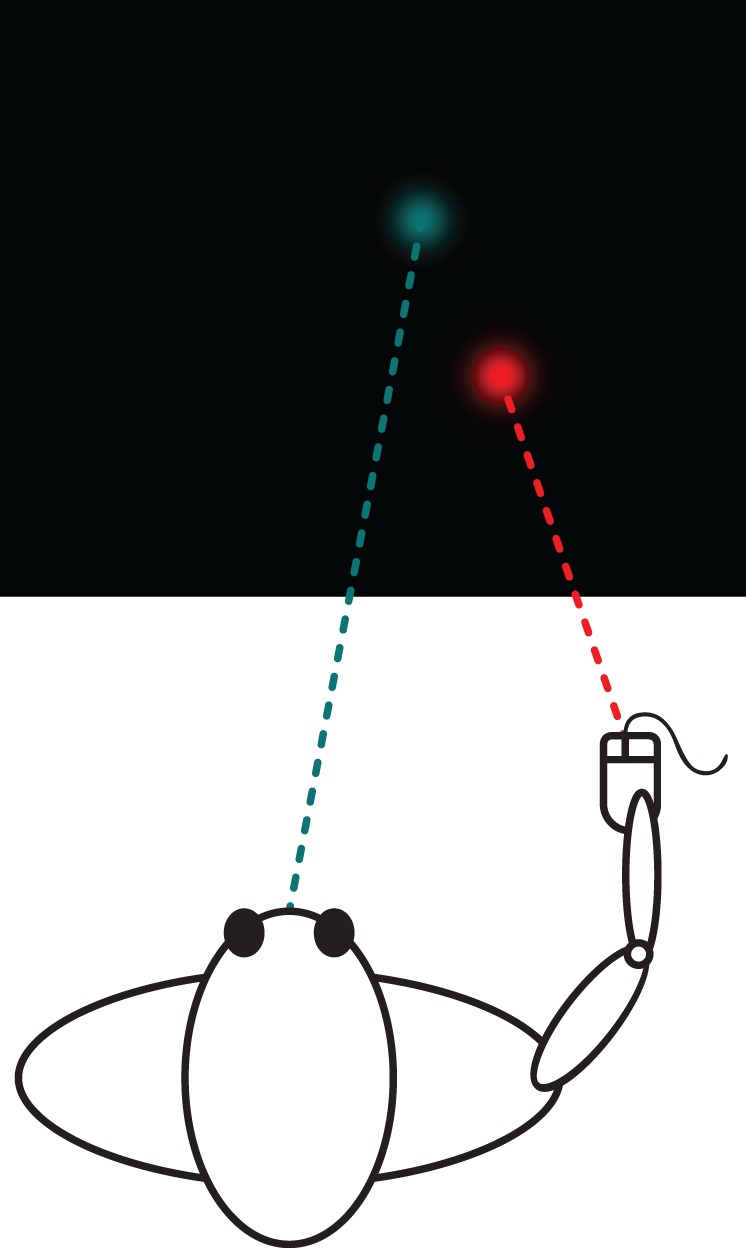

In summary, it remains an open question whether concurrent manual tracking enhances smooth pursuit eye tracking of an unpredictable moving target. In the current study, by testing a wider range of target motion frequencies and a larger number of participants than previous studies, we aimed to address this question and examine whether and how concurrent manual tracking enhances smooth pursuit eye movements. Specifically, participants viewed the same display in two experimental conditions. In the eye-hand tracking condition, participants were instructed to use their eyes to track an unpredictable target that moved horizontally on a computer screen while at the same time using a mouse with their dominant hand to move a cursor and keep it directly underneath the target (Figure 1). In the eye-alone tracking condition, the target and cursor positions previously recorded in the eye-hand tracking condition were played back, and participants were instructed to use only their eyes to track the movement of the target. Replaying both the target and cursor positions of the eye-hand tracking condition ensured that the displays were identical across the two experimental conditions.

Figure 1.

Schematic illustration of the display (drawn to scale) and task used in the study.

The logic of this study is as follows. If concurrent manual tracking enhances smooth pursuit eye tracking of an unpredictable moving target, we expect higher smooth pursuit gain and less phase lag in the eye-hand compared to the eye-alone tracking condition. Furthermore, the number of catch-up saccades made should also be lower in the eye-hand than in the eye-alone tracking condition.

Methods

Participants

Sixteen adults (nine males, seven females; aged 18 to 36 years) participated in the experiment. All were staff or students at The University of Hong Kong and were naïve to the goals of the experiment. All participants were right-hand dominant and had normal vision, i.e., they did not wear glasses or contact lenses during the experiment. One female participant was not included in the analyses due to the poor quality of her eye tracking data. This left 15 total participants in the study. The study was approved by the Human Research Ethics Committee for Non-Clinical Faculties at The University of Hong Kong. Written informed consent was obtained from all participants.

Visual stimuli and apparatus

A cyan round Gaussian target and a red round Gaussian cursor (both σ = 0.6°, peak luminance: 9.4 cd/m²) were placed on a uniform black background (luminance: 0.32 cd/m²) and displayed on a 21-in. CRT monitor (1280 × 1024 pixels; Mitsubishi Diamond Pro 2070 SB, Tokyo, Japan) at a 100 Hz refresh rate (Figure 1). The vertical center-to-center distance between the target and the cursor was 8° to ensure that the movement of the cursor did not affect eye tracking of the target. Participants were seated in a dark room with their head stabilized by a chin and head rest at a viewing distance of 58 cm where the display subtended a visual angle of 40° (H) × 30° (V).

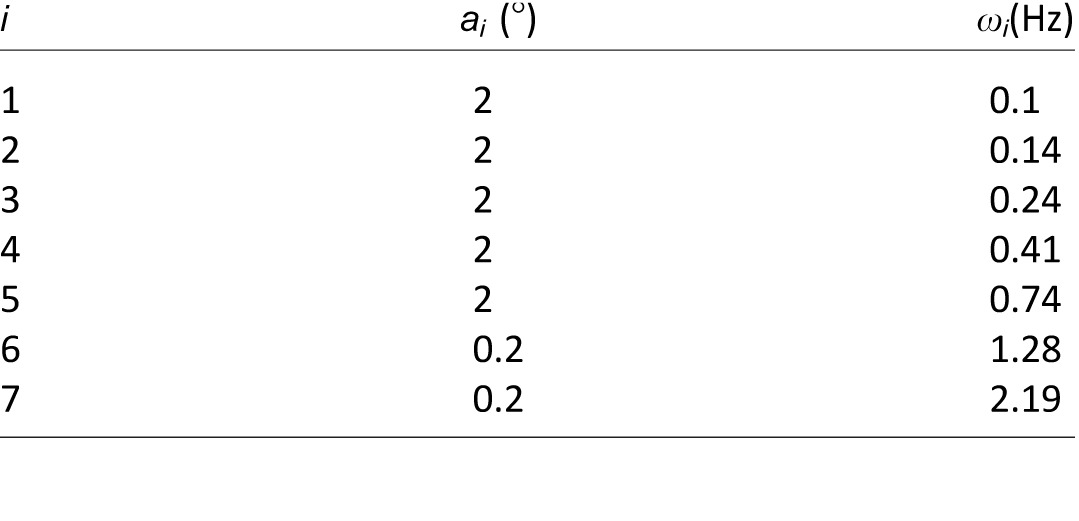

The azimuthal position of the target was determined by a perturbation function, which consisted of the sum of seven harmonically-unrelated sinusoids. The target's position θ as a function of time t was given by

$$\theta(t) = G \sum_{i=1}^{7} a_i \sin(2\pi\omega_i t + \rho_i)$$

where ai and ωi respectively represent the magnitude and frequency of the ith sinusoid component (Table 1), and ρi is a random phase offset drawn from the range −π to π in each trial. The input position gain G was set at a value of 1.8°, leading to a maximum azimuthal excursion of the target from the center of the screen of 17.1°. The average velocity of the target was 12°/s (peak: 43°/s). Although the target never moved out of the screen and its movement was thus not completely unpredictable, the sum-of-sinusoids position series made the target movement appear random (McRuer & Krendel, 1974; Stark, Iida, & Willis, 1961) and allowed for a frequency-based analysis of the participant's tracking response.

Table 1.

Magnitudes (ai) and frequencies (ωi) of the seven harmonically-unrelated sinusoids determining the azimuthal position of the target.
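As an illustration only, a gain-scaled sum of seven sinusoids with random phase offsets could be generated as sketched below. The frequencies are those quoted in the text, and the sketch assumes they are expressed in Hz (hence the factor 2π). The amplitudes are hypothetical placeholders rather than the Table 1 values, chosen solely so that G multiplied by their sum reproduces the stated 17.1° maximum excursion. The function name `make_target_trace` is likewise an assumption.

```python
import math
import random

# Frequencies (Hz) as quoted in the text. The amplitudes are HYPOTHETICAL
# placeholders (not the Table 1 values), chosen only so that
# G_DEG * sum(AMPLITUDES) equals the stated 17.1 deg maximum excursion.
FREQS_HZ = [0.10, 0.14, 0.24, 0.41, 0.74, 1.28, 2.19]
AMPLITUDES = [2.0, 2.0, 2.0, 2.0, 0.5, 0.5, 0.5]  # assumed for illustration
G_DEG = 1.8          # input position gain (deg), from the text
FRAME_RATE_HZ = 100  # display refresh rate, from the text


def make_target_trace(duration_s, rng):
    """Return the target azimuth (deg) for every display frame of one trial."""
    # One random phase offset per sinusoid, drawn from [-pi, pi].
    phases = [rng.uniform(-math.pi, math.pi) for _ in FREQS_HZ]
    n_frames = int(round(duration_s * FRAME_RATE_HZ))
    trace = []
    for k in range(n_frames):
        t = k / FRAME_RATE_HZ
        theta = G_DEG * sum(
            a * math.sin(2.0 * math.pi * f * t + p)
            for a, f, p in zip(AMPLITUDES, FREQS_HZ, phases)
        )
        trace.append(theta)
    return trace


trace = make_target_trace(95.0, random.Random(0))
max_excursion = G_DEG * sum(AMPLITUDES)  # upper bound on |theta|
```

Because each sinusoid is bounded by its amplitude, the trace can never exceed G times the summed amplitudes, which is why the target stays on the screen.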

Each trial was 95 s long to ensure that a sufficient number of cycles was available at all target motion frequencies for data collection (see Li, Stone, & Chen, 2011; Li, Sweet, & Stone, 2005, 2006; Niehorster, Peli, Haun, & Li, 2013). Two experimental conditions were run. In the eye-hand tracking condition, at the beginning of each trial, the target and cursor appeared on the vertical midline of the CRT monitor. The trial was then initiated with a mouse click, after which the target began to move horizontally according to the sum-of-sinusoids position series. Participants were instructed to use their eyes to track the movement of the target and at the same time use their dominant right hand to control a high-precision gaming mouse (M60, Corsair, Fremont, CA) to keep the cursor directly underneath the target as precisely as possible (i.e., to minimize the azimuthal distance between the target and the cursor). The physical mouse position was recorded at 100 Hz and linearly transformed to the cursor position on the screen. To ensure a one-to-one mapping of physical mouse position and displacement to displayed cursor position and movement, the mouse acceleration setting of the Microsoft Windows computer system was disabled. In the eye-alone tracking condition, the target and cursor motion recorded in the eye-hand tracking condition were replayed and participants were asked to use only their eyes to track the target motion as precisely as possible. Eye movements were recorded at 500 Hz by a video-based eye tracking system using the dark pupil and corneal reflection method (tower-mounted Eyelink 1000, SR Research, Ottawa, Canada). Custom C++ code and OpenGL were used to program the visual stimuli and run the experiment.

Procedure

The eye-hand and the eye-alone tracking conditions were run in separate sessions on separate days. As the target and cursor positions displayed in the eye-alone tracking condition were a replay of those recorded in the eye-hand tracking condition, the eye-hand tracking condition was run in the first session and the eye-alone tracking condition in the second. Accordingly, if concurrent hand tracking enhances smooth pursuit eye movements and leads to better eye tracking performance in the eye-hand than the eye-alone condition, this effect would not be contaminated by any practice effect. Each session consisted of six trials and the participant could request breaks at any time between the trials. At the start of the first session, participants were provided with six practice trials to familiarize themselves with the task and to ensure that their performance was stabilized.

After the completion of each session, the eye movement data were inspected for missing data caused by blinks. If the data for more than 4% (3.8 s) of a trial were missing, the trial was discarded and another trial was run for the participant. Despite this stringent criterion, across all participants, only 5% of trials were recollected. The average amount of data missing due to blinks was not different between the eye-hand (mean ± SE: 1.16 ± 0.19%) and eye-alone conditions (1.40 ± 0.25%, t(14) = −1.53, p = 0.15).

Data analysis

The time series of the target, cursor, and eye positions were recorded. Data analysis was performed offline using custom Matlab (Natick, MA) code. Following previous research, we analyzed the data beginning 5 s after the start of each 95-s trial to skip the initial transient response (e.g., Li et al., 2005, 2006; Li et al., 2011; Niehorster et al., 2013). Total eye tracking performance error was measured as the mean and the root mean square (RMS) of the time series of the azimuthal distance between the target and eye positions in visual angle. Total hand tracking performance error was measured as the mean and the RMS of the time series of the azimuthal distance between the target and the cursor positions in visual angle. The RMS tracking error indicates the precision of the tracking performance.
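The two error measures can be sketched as follows. This is a minimal illustration, not the authors' Matlab code; the sign convention (response minus target) and the helper names are assumptions.

```python
import math


def skip_transient(samples, rate_hz, skip_s=5.0):
    """Drop the first `skip_s` seconds of a trial (initial transient)."""
    return samples[int(round(skip_s * rate_hz)):]


def mean_and_rms_error(target_deg, response_deg):
    """Mean (signed) and RMS of response-minus-target azimuth, in deg."""
    errors = [r - t for t, r in zip(target_deg, response_deg)]
    mean_err = sum(errors) / len(errors)
    rms_err = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mean_err, rms_err


# Toy data: the response alternates 1 deg ahead of and behind the target,
# so the signed mean cancels while the RMS does not.
toy_target = [0.0, 1.0, 2.0, 3.0]
toy_eye = [1.0, 0.0, 3.0, 2.0]
toy_mean, toy_rms = mean_and_rms_error(toy_target, toy_eye)
```

The toy data show why both measures are reported: a mean near zero indicates no systematic bias, whereas the RMS captures the spread of the error around the target.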

The mean and RMS tracking position errors measure the total performance error, which includes both the tracking error driven by the input visual signals as well as noise in motor control not related to the input visual signals. The tracking response at the seven perturbation frequencies provides a better measure of the visually driven tracking performance and also allows separate examination of the tracking response amplitude and delay. To evaluate the tracking performance specific to the perturbation frequencies, we performed a frequency response (Bode) analysis. Specifically, we performed Fourier analysis of the recorded time series of the azimuthal target, cursor, and eye positions in each trial. We took the ratios of the Fourier-transformed eye and target positions to obtain the response gain and phase describing eye tracking performance, and the ratios of the Fourier-transformed cursor and target positions to obtain the response gain and phase describing manual tracking performance at each perturbation frequency. The response gain and phase measure the amplitude and the delay of the tracking response, respectively.
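A minimal sketch of this ratio-of-Fourier-coefficients computation at a single perturbation frequency is given below. It evaluates the discrete Fourier sum directly at the frequency of interest rather than computing a full FFT; the function names and the synthetic test signal are assumptions made for illustration.

```python
import cmath
import math


def fourier_coefficient(samples, freq_hz, rate_hz):
    """Complex Fourier coefficient of `samples` at one frequency."""
    return sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / rate_hz)
        for n, x in enumerate(samples)
    )


def gain_and_phase(target, response, freq_hz, rate_hz):
    """Gain and phase (deg) of the response relative to the target.

    The phase is returned in (-180, 180]; larger lags in real data
    would need unwrapping across frequencies.
    """
    ratio = (fourier_coefficient(response, freq_hz, rate_hz)
             / fourier_coefficient(target, freq_hz, rate_hz))
    return abs(ratio), math.degrees(cmath.phase(ratio))


# Synthetic check: a response with 0.8 amplitude that trails a 0.5 Hz
# target by 100 ms. The 10 s window holds a whole number of cycles.
RATE_HZ, FREQ_HZ, DELAY_S = 100, 0.5, 0.1
times = [n / RATE_HZ for n in range(1000)]
target = [math.sin(2 * math.pi * FREQ_HZ * t) for t in times]
response = [0.8 * math.sin(2 * math.pi * FREQ_HZ * (t - DELAY_S)) for t in times]
gain, phase_deg = gain_and_phase(target, response, FREQ_HZ, RATE_HZ)
```

For this synthetic input the gain is 0.8 and the phase is −360° × 0.5 Hz × 0.1 s = −18°, which illustrates how a fixed time delay produces a phase lag that grows with frequency.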

In the Bode analysis of the eye tracking performance, the eye position data contained both saccades and smooth pursuit eye movement. To separately analyze these two types of eye movements, we first constructed an eye velocity trace and then used the algorithm described in Appendix A to detect saccades. Next, we removed the detected saccades from the eye velocity trace and replaced them with interpolated line segments to recover the smooth pursuit velocity trace (see Appendix A for details). We then performed a Bode analysis of the time series of smooth pursuit velocity and target velocity using the same procedure as previously described to compute the smooth pursuit gain and phase at each of the seven perturbation frequencies.
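The removal-and-interpolation step can be illustrated as follows. The detection algorithm itself is described in Appendix A and is not reproduced here; this sketch substitutes a simple fixed velocity threshold (a hypothetical 100°/s) purely to produce saccade flags, and the function names are assumptions.

```python
def flag_saccades(eye_velocity, threshold_deg_s):
    """Stand-in detector (NOT the Appendix A algorithm): flag fast samples."""
    return [abs(v) > threshold_deg_s for v in eye_velocity]


def interpolate_over_saccades(eye_velocity, is_saccade):
    """Replace each flagged run with a line joining its flanking samples."""
    pursuit = list(eye_velocity)
    n = len(pursuit)
    i = 0
    while i < n:
        if not is_saccade[i]:
            i += 1
            continue
        start = i
        while i < n and is_saccade[i]:
            i += 1
        end = i  # first unflagged index after the run, or n at trace end
        left = pursuit[start - 1] if start > 0 else None
        right = pursuit[end] if end < n else None
        # At the trace edges, hold the single available neighbour.
        if left is None and right is None:
            left = right = 0.0
        elif left is None:
            left = right
        elif right is None:
            right = left
        span = end - start + 1
        for k in range(start, end):
            frac = (k - start + 1) / span
            pursuit[k] = left + frac * (right - left)
    return pursuit


velocity = [10.0, 12.0, 300.0, 400.0, 18.0, 20.0]  # deg/s, toy data
flags = flag_saccades(velocity, threshold_deg_s=100.0)
pursuit_velocity = interpolate_over_saccades(velocity, flags)
```

In the toy trace the two fast samples are replaced by values on the line from 12°/s to 18°/s, leaving a continuous pursuit velocity trace suitable for the frequency-response analysis.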

Last, to separately analyze the catch-up saccades, we constructed a cumulative saccadic position trace by replacing all intervals in the eye velocity trace that were not flagged as saccades with zero-velocity elements and then numerically integrating the result. Because most participants tended to make more saccades in a certain direction, the cumulative saccadic position trace showed a slope, which was removed trial by trial by fitting a line using a least-squares procedure and subtracting it from the trace (see also Collewijn & Tamminga, 1984). We then performed a Bode analysis of the cumulative saccadic position and target position time series to compute the saccadic tracking gain and phase at each of the seven perturbation frequencies. We also computed the duration and amplitude of each saccade, as well as the tracking position error at the start and the end of each saccade. Specifically, for each saccade, the eye position error in the five samples preceding its onset was averaged to determine the position tracking error at the onset of the saccade. The same was done for the five samples following saccade offset to determine the position tracking error at saccade offset. For each participant in each condition, we then computed the RMS position tracking onset and offset errors.
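The construction of the cumulative saccadic position trace and the removal of its slope can be sketched as below. The saccade flags are taken as given, a rectangular integration rule is assumed, and the function names are hypothetical.

```python
def cumulative_saccadic_position(eye_velocity, is_saccade, rate_hz):
    """Zero the non-saccadic velocity, then integrate to position (deg)."""
    dt = 1.0 / rate_hz
    position, trace = 0.0, []
    for v, flagged in zip(eye_velocity, is_saccade):
        position += (v if flagged else 0.0) * dt
        trace.append(position)
    return trace


def remove_linear_trend(trace):
    """Subtract the least-squares line fitted to the trace."""
    n = len(trace)
    mean_x = (n - 1) / 2.0
    mean_y = sum(trace) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(trace))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [y - (slope * i + intercept) for i, y in enumerate(trace)]


# Toy data at the 500 Hz eye-tracker rate: two saccadic samples at 500 deg/s
# each contribute 1 deg; the pursuit samples contribute nothing.
toy_velocity = [20.0, 500.0, 500.0, 20.0]
toy_flags = [False, True, True, False]
saccadic_trace = cumulative_saccadic_position(toy_velocity, toy_flags, 500)

# A purely linear drift is removed completely by the detrending step.
drift_only = [3.0 + 0.5 * i for i in range(10)]
detrended = remove_linear_trend(drift_only)
```

Detrending matters here because a constant directional bias in saccades would otherwise appear as low-frequency power unrelated to the target motion.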

Results

Figure 2a plots a representative section of the eye and hand (cursor) positions along with the target position from part of a trial in the eye-hand tracking condition. While both the eye and hand tracked the target with some delay, the eye and hand positions were very close to the target. Figure 2b plots the corresponding cumulative saccadic position trace after pursuit removal along with the target position trace. The trace shows that saccades tracked the target with a delay and the low amplitude indicates that saccades accounted for only part of the overall tracking response. Finally, Figure 2c plots the corresponding smooth pursuit velocity trace after saccade removal (see Appendix A for the details of saccade removal) along with the hand and target velocity traces. Both smooth pursuit and hand velocity traces matched the target velocity trace closely, although with a delay.

Figure 2.

Representative sample of oculomanual tracking responses in the eye-hand tracking condition. (a) Azimuthal position of the target, eye, and hand (cursor) in visual angle. (b) Azimuthal position of target and azimuthal cumulative saccadic eye position. (c) Azimuthal velocity of target and hand (cursor) motion and smooth pursuit eye movements.

Overall tracking performance

Figure 3 plots the mean and RMS tracking position error for eye tracking in both the eye-hand and eye-alone conditions and for hand tracking in the eye-hand condition for all 15 participants. The mean eye position tracking error was not significantly different from 0° for both the eye-hand (mean ± SE: −0.05 ± 0.13°, t(14) = −0.41, p = 0.69) and eye-alone conditions (0.08 ± 0.15°, t(14) = 0.54, p = 0.59), and there was no significant difference in the RMS eye position error between the eye-hand (2.04 ± 0.06°) and eye-alone tracking conditions (2.00 ± 0.07°, t(14) = 1.17, p = 0.26). This indicates that the eye accurately tracked the target and that overall tracking performance was similar for these two experimental conditions.

Figure 3.

Mean and RMS eye and hand tracking position errors. Mean (a) and RMS (b) eye-tracking position error in the eye-hand and eye-alone tracking conditions, as well as mean and RMS hand-tracking position error in the eye-hand tracking condition for each participant along with the mean averaged across participants. Error bars are SEs across 15 participants.

For hand tracking, while the mean hand position tracking error (−0.37 ± 0.07°) was significantly different from 0° (t(14) = −5.33, p = 0.0001), it was small. Furthermore, although the mean RMS hand tracking position error (2.78 ± 0.11°) was larger than the mean RMS eye tracking position error in both experimental conditions (t(14) > 7.19, p < 0.0001), the increase was less than 1°. Together, this indicates that participants' hand tracking of the target was accurate and precise.

Position tracking gain and phase

Figure 4 plots the closed-loop Bode plots of the mean position tracking gain and phase averaged across 15 participants against perturbation frequency for the eye tracking response in both the eye-hand and eye-alone tracking conditions and for the hand tracking response in the eye-hand tracking condition. The gains of hand tracking and eye tracking in both experimental conditions were below unity at the lower four frequencies (t < −7.36, p ≪ 0.0001) and above unity at the higher two frequencies (t > 5.68, p < 0.0001). A 3 (tracking response type) × 7 (perturbation frequency) repeated-measures ANOVA on the gains revealed that eye tracking gains were similar in both experimental conditions and were higher than the hand tracking gains at the lower four frequencies, F(12, 168) = 6.41, p ≪ 0.0001. The peak in the gains indicates the damped natural frequency of the tracking response, which was 1.28 Hz for both eye and hand tracking. The fact that the peak occurred at the same frequency for eye and hand tracking despite their different biomechanical characteristics shows that eye and hand movements were tightly synchronized. The peak frequency probably reflects the natural frequency of the sensory-motor system that serves both eye and hand movements.

Figure 4.

Frequency-response (Bode) plots of eye and hand tracking performance. Mean closed-loop position tracking gain (top panel) and phase (bottom panel) averaged across participants as a function of perturbation frequency for the eye tracking response in the eye-hand and the eye-alone tracking conditions and for the hand tracking response in the eye-hand tracking condition. Error bars are SEs across 15 participants.

The phase for both eye and hand tracking rolled off with increasing frequency, as is typically observed in previous eye movement and manual control studies using unpredictable target motion (Jagacinski & Flach, 2003; Stark et al., 1961; Stark et al., 1962; Xia & Barnes, 1999). The phases for both eye and hand tracking were significantly lower than 0° at all perturbation frequencies (t < −10.1, p ≪ 0.0001), indicating that eye and hand tracking always lagged the target movement. A 3 (tracking response type) × 7 (perturbation frequency) repeated-measures ANOVA on the phases revealed that the phase lag started increasing significantly as early as at 0.14 Hz for hand tracking and at 0.24 Hz for eye tracking, F(6, 84) = 1414, p ≪ 0.0001. While the phase lags for eye tracking were similar in both experimental conditions, the phase lags for hand tracking were significantly larger than those for eye tracking at all perturbation frequencies except at the lowest frequency of 0.10 Hz, F(6, 84) = 66.1, p ≪ 0.0001. The larger phase lag together with the steeper phase roll-off for hand tracking compared with eye tracking indicates that hand tracking lagged behind eye tracking. In the next two sections, we analyze the two components that together make up the overall eye tracking response, smooth pursuit eye movements and catch-up saccades.

Smooth pursuit gain and phase

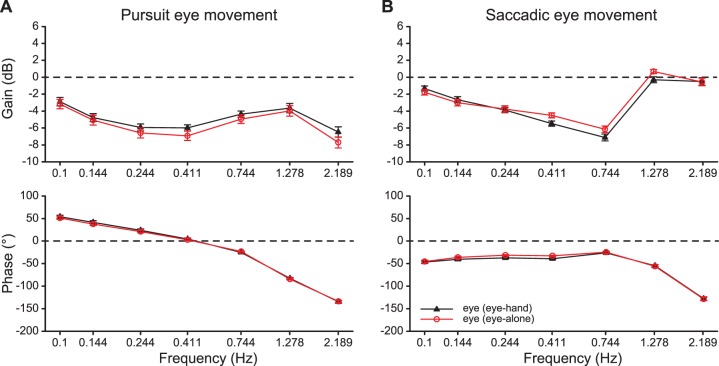

Figure 5a plots the mean smooth pursuit gain and phase averaged across 15 participants against perturbation frequency for eye tracking in both the eye-hand and eye-alone tracking conditions. For both experimental conditions, the smooth pursuit gain was significantly lower than the position tracking gain presented in the above section where saccades were included in the Bode analysis (F(1, 14) = 176, p ≪ 0.0001 and F(1, 14) = 137, p ≪ 0.0001 for the eye-hand and eye-alone conditions, respectively). This suggests that catch-up saccades played a significant role in tracking the moving target.

Figure 5.

Frequency-response (Bode) plots of smooth pursuit and saccadic eye tracking performance. (a) Mean smooth pursuit gain (top panel) and phase (bottom panel) and (b) mean saccadic tracking gain (top panel) and phase (bottom panel) averaged across 15 participants as a function of perturbation frequency for the eye-hand and the eye-alone tracking conditions. Error bars are SEs across 15 participants.

We then examined whether concurrent manual tracking enhances smooth pursuit eye movements. A 2 (experimental condition) × 7 (perturbation frequency) repeated-measures ANOVA showed that the smooth pursuit gain was significantly higher in the eye-hand than in the eye-alone tracking condition at four of the seven perturbation frequencies, F(6, 84) = 3.40, p = 0.0048; p = 0.0068 at 0.24 Hz, p = 0.0002 at 0.41 Hz, p = 0.0094 at 0.74 Hz, and p = 0.0001 at 2.19 Hz. For the phases, the phase lead was significantly larger in the eye-hand than in the eye-alone tracking condition at the lowest three perturbation frequencies, F(6, 72) = 2.54, p = 0.027; p = 0.032 at 0.10 Hz, p = 0.0071 at 0.14 Hz, and p = 0.017 at 0.24 Hz. The positive phase at the lowest three frequencies together with the dip pattern in the smooth pursuit gains at these frequencies are characteristics of a lead-lag compensator in the smooth pursuit system, which reduces tracking overshoot and improves the stability of the tracking response.

Figure 6a plots the mean smooth pursuit gain averaged across all perturbation frequencies for each participant for both the eye-hand and eye-alone tracking conditions. Twelve out of the 15 participants showed a higher mean smooth pursuit gain in the eye-hand than in the eye-alone tracking condition. The increase of the mean smooth pursuit gain from the eye-alone to the eye-hand tracking condition (mean percent increase ± SE: 8.2 ± 3.6%) was statistically significant and of a medium effect size (Cohen's d = 0.58, t(14) = 2.24, p = 0.04). A 2 (experimental condition) × 6 (trial) repeated-measures ANOVA revealed that the mean smooth pursuit gain was consistently higher in the eye-hand than in the eye-alone tracking condition across all trials, F(1, 14) = 4.91, p = 0.0438. A correlation analysis revealed no systematic relationship between the mean pursuit gain in the eye-alone condition and the size of the enhancement effect (p > 0.16).
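As a consistency check on the reported effect size, the sketch below assumes that Cohen's d was computed in its paired-samples form, d = t/√n. The text does not state which formula was used, so this is an assumption, as is the function name.

```python
import math


def cohens_d_from_paired_t(t_value, n_pairs):
    """Paired-samples effect size, assuming d = t / sqrt(n)."""
    return t_value / math.sqrt(n_pairs)


# Reported values: t(14) = 2.24 with 15 participants.
d_check = cohens_d_from_paired_t(2.24, 15)
```

Under this assumption the result rounds to 0.58, in agreement with the reported value.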

Figure 6.

Mean smooth pursuit gain and phase. (a) Mean smooth pursuit gain averaged across seven perturbation frequencies for the eye-hand and the eye-alone tracking conditions for each participant along with the mean averaged across participants. (b) Mean smooth pursuit phase averaged across seven perturbation frequencies for the eye-hand and the eye-alone tracking conditions for each participant along with the mean averaged across participants. Error bars are SEs across 15 participants. *: p < 0.05.

Figure 6b plots the mean smooth pursuit phase averaged across all perturbation frequencies for each participant for both the eye-hand and eye-alone tracking conditions. Although twelve out of 15 participants showed a smaller mean smooth pursuit phase lag in the eye-hand than in the eye-alone tracking condition, there was no significant difference in the mean phase lag between the two experimental conditions, t(14) = 1.67, p = 0.12.

Catch-up saccades

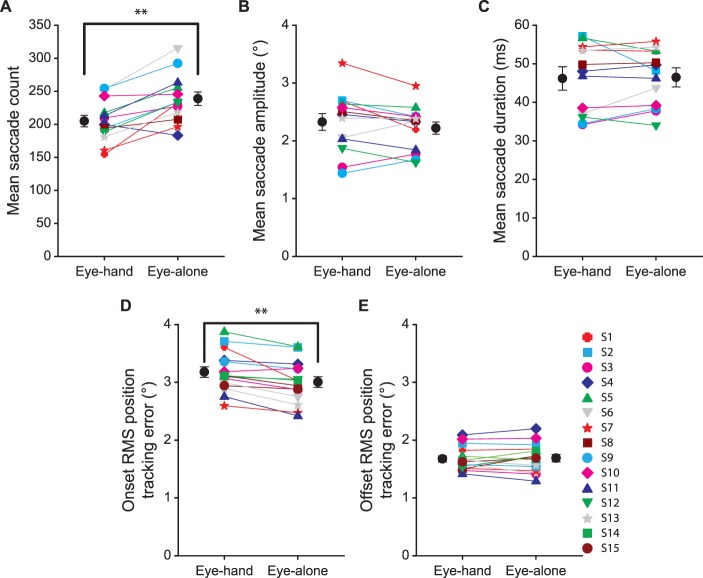

During each trial, participants made a large number of saccades. All but one of the saccades made across all participants and trials were horizontal and at the height of the target (i.e., they landed within 2° vertically from the center of the target). Figure 7a plots the mean number of saccades made during a trial, averaged across six trials for each participant for the eye-hand and eye-alone tracking conditions. Fourteen out of the 15 participants made fewer saccades in the eye-hand than in the eye-alone condition. The number of saccades made in the eye-hand condition (mean ± SE: 206 ± 7.7, corresponding to 2.29 ± 0.086 saccades/s) was also significantly lower than that in the eye-alone tracking condition (238 ± 10.1, corresponding to 2.65 ± 0.113 saccades/s, t(14) = −4.69, p = 0.0003). In line with this, the mean percentage of eye position data consisting of saccades in each trial was significantly lower in the eye-hand (13.8 ± 0.40%) than in the eye-alone condition (15.9 ± 0.59%, t(14) = −4.32, p = 0.0007). Conversely, the mean percentage of eye velocity data consisting of smooth pursuit in each trial was significantly higher in the eye-hand (85.0 ± 0.47%) than in the eye-alone condition (82.7 ± 0.68%, t(14) = 5.12, p = 0.0002). This reinforces the finding of higher smooth pursuit gains in the eye-hand than in the eye-alone tracking condition reported in the previous section, as better smooth pursuit eye movements naturally lead to fewer catch-up saccades to maintain accurate eye tracking (see, e.g., Collewijn & Tamminga, 1984; Ke et al., 2013; Puckett & Steinman, 1969). Note that neither condition shows a systematic relationship between mean smooth pursuit gain and saccade count (p > 0.53), possibly due to individual differences in the catch-up saccade threshold (see Figure 7d).
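The conversion from saccade counts to rates can be reproduced with the short sketch below. It assumes that the rates were computed over the 90 s analyzed in each trial (the 95-s trial minus the initial 5 s), which is not stated explicitly but is consistent with the reported numbers.

```python
ANALYSIS_WINDOW_S = 95.0 - 5.0  # assumed: trial length minus skipped transient


def saccade_rate(count, window_s=ANALYSIS_WINDOW_S):
    """Saccades per second over the analyzed part of a trial."""
    return count / window_s


rate_eye_hand = saccade_rate(206)    # reported mean count, eye-hand
rate_eye_alone = saccade_rate(238)   # reported mean count, eye-alone
percent_fewer = 100.0 * (238 - 206) / 238
```

The rounded mean counts give approximately 2.29 and 2.64 saccades/s; the small difference from the reported 2.65 presumably reflects rounding of the mean count. The relative reduction of about 13% corresponds to the figure cited in the Discussion.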

Figure 7.

Catch-up saccade characteristics. (a) Mean saccade count. (b) Mean saccade amplitude. (c) Mean saccade duration. (d) RMS position tracking error at saccade onset. (e) RMS position tracking error at saccade offset for the eye-hand and eye-alone tracking conditions for each participant along with the means averaged across participants. Error bars are SEs across 15 participants. **: p < 0.001.

Next, we evaluated whether the characteristics of the catch-up saccades differed between the two tracking conditions. Although ten out of 15 participants made saccades with a larger amplitude and of a shorter duration in the eye-hand than in the eye-alone condition, the mean saccade amplitude (Figure 7b) and duration (Figure 7c) did not differ between the two experimental conditions (t(14) = 1.48, p = 0.16 and t(14) = 0.17, p = 0.86, respectively). This indicates that the smaller number of saccades made in the eye-hand than in the eye-alone tracking condition was not due to any difference in basic saccade characteristics between these two conditions.

We then computed the RMS position tracking error at saccade onset (Figure 7d) to evaluate how concurrent manual tracking influenced the tolerance to position error in tracking the target. We also computed the RMS position tracking error at saccade offset (Figure 7e) to assess the level of residual position error upon catch-up saccade landing. Although the RMS onset position tracking error was significantly larger in the eye-hand (3.18 ± 0.09°) than in the eye-alone condition (3.00 ± 0.09°, t(14) = 4.36, p = 0.0006), the corresponding RMS offset position error was similar in the two conditions (1.68 ± 0.05° and 1.69 ± 0.06° for the eye-hand and eye-alone conditions, respectively, t(14) = −0.45, p = 0.66). This indicates that while concurrent manual tracking led to a higher tolerance for position error, catch-up saccades realized similar residual position tracking error at saccade landing in the two conditions.

Finally, to examine the contribution that the catch-up saccades made to the overall tracking position gain and phase and their interplay with smooth pursuit tracking of the target, we performed a closed-loop Bode analysis of the cumulative saccadic position trace. We plotted the mean saccadic tracking gain and phase averaged across 15 participants against perturbation frequency for both the eye-hand and eye-alone tracking conditions in Figure 5b. We then examined how concurrent manual tracking affected the saccadic component of the eye tracking response. A 2 (experimental condition) × 7 (perturbation frequency) repeated-measures ANOVA revealed that the saccadic tracking gain was significantly lower in the eye-hand than in the eye-alone tracking condition at the higher middle three perturbation frequencies (F(6, 84) = 10.7, p ≪ 0.0001; p = 0.0001 at 0.41 Hz, 0.74 Hz, and 1.28 Hz), and the saccadic tracking gain was significantly higher in the eye-hand than in the eye-alone tracking condition at the lowest frequency (p = 0.028 at 0.10 Hz). For phases, unlike smooth pursuit eye movements, which showed phase lead at the lowest three perturbation frequencies, saccadic tracking showed a phase lag at all frequencies in both conditions (t < −11.2, p ≪ 0.0001). The saccadic tracking phase lag was significantly larger in the eye-hand than in the eye-alone tracking condition at the lower middle three perturbation frequencies (F(6, 84) = 5.71, p < 0.0001; p = 0.009 at 0.14 Hz, p = 0.0002 at 0.24 Hz, and p = 0.0002 at 0.41 Hz).

In summary, the previously described catch-up saccade results show a tight coupling with the smooth pursuit results reported in the previous section. Specifically, the lead generated by the smooth pursuit system at low frequencies compensates for the lag in the response of the saccadic system, revealing a synergy that enables more accurate tracking of a target than either system could achieve independently (see also Krauzlis, 2004, 2005; Orban de Xivry & Lefèvre, 2007).

Discussion

Previous studies have reported that concurrent manual tracking enhances smooth pursuit of a predictable moving target (e.g., Angel & Garland, 1972; Gauthier et al., 1988; Mather & Lackner, 1980; Steinbach & Held, 1968). In this study, we examined whether concurrent manual tracking enhances smooth pursuit of an unpredictable moving target. Using an unpredictable moving target ensured that smooth pursuit was mainly driven by an online feedback control process relying on the retinal slip of the target (Lisberger, 2010) instead of by a predictive process. We compared the eye tracking response with concurrent manual tracking to that in a condition with identical visual display but in which participants only performed eye tracking of the target. While the overall eye tracking performance (measured as the position tracking accuracy, precision, gain, and phase) was similar between these two conditions, participants had an 8% higher smooth pursuit gain and made 13% fewer catch-up saccades when both the eye and hand tracked the moving target than when the eye tracked the target alone. Furthermore, the smooth pursuit phase lead at the lowest three perturbation frequencies was larger in the eye-hand than in the eye-alone condition. The overall improvement in smooth pursuit gain, the reduced number of saccades, and the increased response lead at low frequencies all indicate that concurrent manual tracking enhances smooth pursuit of an unpredictable moving target.

There are three reasons why we believe that eye tracking of the target in our experiment is indeed driven online and not by a predictive process. First, significant phase lag is observed at all frequencies in the Bode plots of the overall tracking position data for both the eye-hand and eye-alone tracking conditions, indicating that no prediction or anticipation of target position occurred. Second, the phase lag at all perturbation frequencies in the Bode plots of the catch-up saccades indicates that these saccades were generated online without prediction of the upcoming tracking position error, and therefore they never led the visual input. Both these observations show that the unpredictable target motion used in our study did not allow for the formation of an internal model of the target position to predict upcoming target position errors and preemptively program corrective saccades. Third, the phase lead at the lowest three frequencies observed in the Bode plots of the smooth pursuit eye movements is consistent with previously reported observations that smooth pursuit generates lead at low target motion frequencies (e.g., Collewijn & Tamminga, 1984; Dodge, Travis, & Fox, 1930; Drischel, 1958; Stark et al., 1962; Westheimer, 1954; Xia & Barnes, 1999). To verify that smooth pursuit eye movements were not driven by a predictive mechanism in the current study, we assessed the time lag of smooth pursuit by cross-correlating the eye with the target velocity traces in each trial. The mean smooth pursuit latency, as determined by the lag at which the maximum unbiased cross-correlation value occurred, averaged across all trials and participants, is similar for the eye-hand (113.6 ± 4.0 ms) and eye-alone conditions (108.6 ± 4.1 ms), and corresponds to the range of previously reported latencies for smooth pursuit without any predictive character (e.g., Gauthier et al., 1988; Joiner & Shelhamer, 2006; Krauzlis & Lisberger, 1994; Lisberger, 2010; Mulligan, Stevenson, & Cormack, 2013).
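As an illustration of this latency estimate, the sketch below finds the lag that maximizes an unbiased cross-correlation between a target velocity trace and an eye velocity trace. Here "unbiased" is taken to mean that each lagged sum is divided by the number of overlapping samples. The function name, the 500 Hz sampling rate, the ±300 ms search range, and the synthetic traces (white noise with a known 110 ms delay) are assumptions made for the example only.

```python
import random


def unbiased_xcorr_lag(target_vel, eye_vel, max_lag):
    """Lag in samples (positive = eye trails target) with the largest
    unbiased cross-correlation; traces are assumed to be roughly zero mean."""
    n = len(target_vel)
    best_lag, best_value = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        start = max(0, -lag)              # pair target[i] with eye[i + lag]
        stop = min(n, n - lag)
        overlap = stop - start
        value = sum(target_vel[i] * eye_vel[i + lag]
                    for i in range(start, stop)) / overlap
        if value > best_value:
            best_lag, best_value = lag, value
    return best_lag


# Synthetic check (hypothetical values): the eye copies the target 55 samples later.
sample_rate_hz = 500.0
delay_samples = 55                        # 110 ms at 500 Hz
rng = random.Random(0)
target_vel = [rng.gauss(0.0, 1.0) for _ in range(2000)]
eye_vel = [0.0] * delay_samples + target_vel[:-delay_samples]

lag = unbiased_xcorr_lag(target_vel, eye_vel, max_lag=150)
latency_ms = lag * 1000.0 / sample_rate_hz
print(f"estimated latency = {latency_ms:.1f} ms")
```

A positive latency of around 100 ms or more is what one would expect from visually driven pursuit, whereas a lag near zero or negative would suggest prediction.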

The fact that we found evidence for the enhancement of smooth pursuit eye movements by concurrent manual tracking using an unpredictable target shows that the enhancement effect is not restricted to the case of tracking predictable or self-driven target motion when an internal model of the target motion is formed and the predictive mechanism plays an important role in driving eye movements. Instead, our findings support the claim that smooth pursuit enhancement by concurrent manual tracking is a fundamental property of eye-hand coordination that occurs regardless of the predictability of target motion.

While our findings show that concurrent manual tracking enhances smooth pursuit of a moving target, the underlying neural mechanism responsible for such an enhancement effect of eye-hand coordination remains under debate. Previous studies have proposed that the oculomotor system has access to efferent and/or afferent signals from hand movements, which are used to facilitate pursuit eye movements (e.g., Gauthier & Mussa Ivaldi, 1988; Steinbach, 1969; Vercher, Gauthier, Cole, & Blouin, 1997). The findings from the current study suggest that these signals are used even when pursuit eye movements are not driven by a predictive mechanism.

A potential caveat in our experimental design is that while in the eye-hand condition the hand cursor was self-driven and thus moved predictably, in the eye-alone condition its motion was a replay and thus no longer predictable. The motion of the hand cursor could therefore have been more distracting in the eye-alone than in the eye-hand tracking condition, leading to poorer smooth pursuit performance. However, we believe that this is unlikely. First, the target and the hand cursor were separated by 8° to minimize any effect of the hand cursor's motion on eye tracking of the target above it. Indeed, our analysis of the catch-up saccades showed that all but one of the saccades made across all participants and trials landed within 2° vertically of the center of the target, indicating that the hand cursor moving underneath the target did not affect eye tracking of the target above. Second, in contrast to the study by Xia and Barnes (1999), which found less precise eye tracking performance in the eye-hand than in the eye-alone condition, we observed equal eye tracking performance in terms of the position tracking accuracy, precision, gain, and phase in the eye-hand and eye-alone tracking conditions in the current study. This indicates that the hand cursor did not take attention away from eye tracking of the target. This is also an essential precondition for comparing smooth pursuit and saccadic tracking between the two experimental conditions.

As to the neural mechanism underlying eye-hand coordination, some researchers propose that eye-hand coordination is regulated by shared early neural signals that drive both the eye and hand motor systems (Engel, Anderson, & Soechting, 2000; Stone & Krauzlis, 2003), while others propose that eye-hand coordination is enabled by a mutual exchange of efferent and/or afferent motor signals between the two systems (Gauthier et al., 1988; Scarchilli & Vercher, 1999; Vercher & Gauthier, 1992). The supporting evidence for the former comes from studies that reported that the eye and hand make similar tracking errors and exhibit highly synchronized timing (e.g., Engel et al., 2000; Xia & Barnes, 1999). The supporting evidence for the latter comes from studies that reported that for predictable target motion, passive hand movements lead to a similar degree of smooth pursuit enhancement as active hand movements (e.g., Jordan, 1970; Mather & Lackner, 1980). The finding of a common natural frequency for eye and hand tracking in the current study supports the theory of shared early neural signals that drive both eye and hand motor systems. Furthermore, in another study from our group (Li, Niehorster, Liston, Siu, & Stone, 2013), we examined the correlation between the errors made by eye tracking and the errors made by hand tracking of an unpredictable moving target and found that these errors were significantly correlated. This further suggests that common neural signals drive eye and hand motor systems and limit eye-hand coordination.

Acknowledgments

We thank Leland Stone, Dorion Liston, and Ignace Hooge for helpful discussions, and Ignace Hooge and Marcus Nyström for providing the code on which our eye movement analysis software was based. We also thank an anonymous reviewer, Jeff Mulligan, and Jing Chen for their helpful comments on a previous draft of this manuscript. This study was supported by grants from the Research Grants Council of Hong Kong (HKU7482/12H and 7460/13H) to L. Li, and a PhD Fellowship (PF09-03850) to D. C. Niehorster.

Commercial relationships: none.

Corresponding author: Li Li.

Email: ll114@nyu.edu.

Address: Neural Science Program, New York University Shanghai, Shanghai, People's Republic of China.

Appendix A: Eye movement analysis

Compound eye movements are composed of smooth pursuit with superimposed saccades (e.g., Drischel, 1958). As such, our eye movement analysis was designed to remove the saccades and recover the underlying pursuit. It combined the initial stage of the algorithm proposed by Liston, Krukowski, and Stone (2013) with the saccade identification procedure of Nyström and Holmqvist (2010). We describe our eye movement analysis in detail in the material that follows.

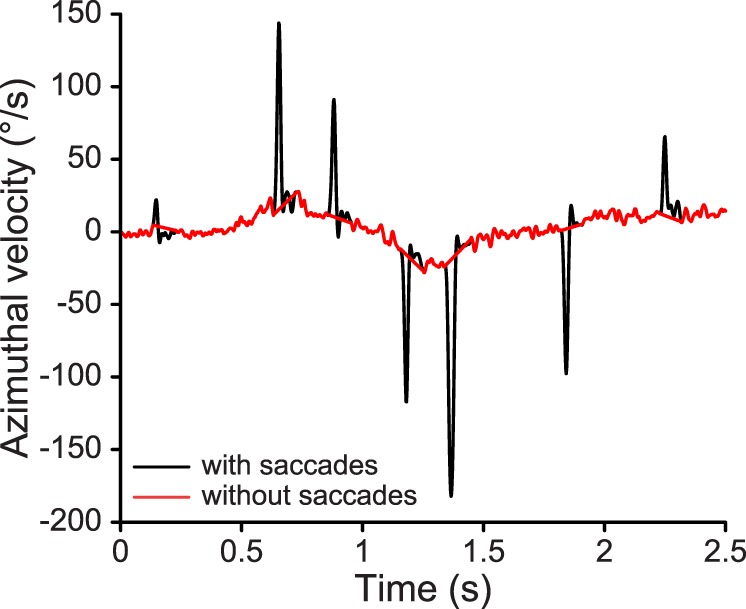

Figure A1.

Sample compound azimuthal eye velocity trace with saccades (black) and the corresponding smooth pursuit velocity trace without saccades (red) in the eye-hand tracking condition.

Step One: Smoothed compound azimuthal and elevational eye velocity traces were computed using a Savitzky-Golay differentiation filter (Savitzky & Golay, 1964), which in essence fitted a second-order polynomial to the eye position data in a 22 ms sliding window and then analytically differentiated the polynomial to derive a smoothed estimate of the compound eye velocity. The compound angular eye velocity trace ($\dot{\omega}$) was then computed from the azimuthal ($\dot{\theta}$) and elevational ($\dot{\varphi}$) velocity traces by $\dot{\omega} = \sqrt{\dot{\theta}^{2}\cos^{2}\varphi + \dot{\varphi}^{2}}$, where the $\cos^{2}\varphi$ scale factor adjusts for the fact that 1° of azimuthal change near φ = 0° is a much larger distance than 1° of azimuthal change near φ = 90°.
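The velocity computation in Step One can be sketched as follows. This is a minimal illustration rather than the software used in the study. It relies on the fact that, for a symmetric window and a first- or second-order polynomial, the Savitzky-Golay derivative at the window centre reduces to a weighted sum with weights proportional to the sample offset. The angular speed is assumed to take the usual spherical-coordinate form, with the azimuthal velocity scaled by the cosine of the elevation, which is consistent with the cos² φ scale factor described above. The function names, the 500 Hz sampling rate, and the 11-sample window (22 ms at that rate) are hypothetical.

```python
import math


def savgol_velocity(position_deg, half_window, sample_rate_hz):
    """Quadratic Savitzky-Golay first derivative (deg/s).

    The slope at the window centre is sum(k * y[k]) / sum(k**2) per sample.
    Samples closer than half_window to either end are returned as None.
    """
    offsets = range(-half_window, half_window + 1)
    norm = sum(k * k for k in offsets)
    n = len(position_deg)
    velocity = [None] * n
    for i in range(half_window, n - half_window):
        slope_per_sample = sum(k * position_deg[i + k] for k in offsets) / norm
        velocity[i] = slope_per_sample * sample_rate_hz
    return velocity


def angular_speed(azimuth_vel, elevation_vel, elevation_deg):
    """Compound angular speed from azimuthal and elevational velocities (deg/s)."""
    scaled_azimuth = azimuth_vel * math.cos(math.radians(elevation_deg))
    return math.sqrt(scaled_azimuth ** 2 + elevation_vel ** 2)


# Hypothetical example: a quadratic position trace sampled at 500 Hz.
sample_rate_hz = 500.0
azimuth_deg = [2.0 * (k / sample_rate_hz) + 3.0 * (k / sample_rate_hz) ** 2
               for k in range(50)]
azimuth_vel = savgol_velocity(azimuth_deg, half_window=5,
                              sample_rate_hz=sample_rate_hz)
print(azimuth_vel[20])                    # close to 2 + 6 * t at t = 20 / 500 s
print(angular_speed(3.0, 4.0, 0.0))       # close to 5 deg/s on the horizon
```

Because the fit is quadratic, the derivative of a quadratic position trace is recovered exactly up to floating-point error, which makes the example easy to check by hand.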

Step Two: Following Liston et al. (2013), an estimate of the low-frequency smooth pursuit component in the compound angular eye velocity trace computed in Step One was obtained using a median filter of 40-ms width. This estimate was subtracted from the compound angular eye velocity trace to create a high-frequency velocity trace that contains primarily saccadic signals.
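A minimal sketch of this median-filter separation is given below. It assumes a hypothetical 500 Hz sampling rate, at which the 40-ms width corresponds to roughly 21 samples (rounded up to an odd number so that the window is centred), and it shortens the window at the trace edges. These choices and the function names are ours, for illustration only.

```python
from statistics import median


def median_filter(trace, width):
    """Running median with a centred window that is truncated at the edges."""
    half = width // 2
    n = len(trace)
    return [median(trace[max(0, i - half):min(n, i + half + 1)])
            for i in range(n)]


def high_frequency_residual(trace, width):
    """Subtract the running-median (pursuit) estimate to leave brief events."""
    low_frequency = median_filter(trace, width)
    return [v - low for v, low in zip(trace, low_frequency)]


# Hypothetical example: steady 10 deg/s pursuit with a 3-sample saccadic spike.
speed = [10.0] * 100
for i in (50, 51, 52):
    speed[i] = 300.0

pursuit_estimate = median_filter(speed, width=21)
saccadic_trace = high_frequency_residual(speed, width=21)
print(pursuit_estimate[51], saccadic_trace[51])
```

Because the spike occupies fewer than half of the samples in any window, the running median ignores it and the residual isolates it.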

Step Three: This saccadic signal velocity trace was low-pass filtered by cross-correlation with the minimum snap profile of a 30-ms saccade (García-Pérez & Peli, 2001) to enhance saccade-like features in the trace while excluding high-frequency noise.
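The template matching in this step can be sketched as follows. We assume here that the minimum snap velocity profile has the textbook bell shape proportional to τ³(1 − τ)³ over normalized time τ, which is the derivative of the seventh-order minimum-snap position polynomial. Whether this matches the exact profile of García-Pérez and Peli (2001) is an assumption, as are the function names, the 500 Hz sampling rate, and the resulting 15-sample (30 ms) template.

```python
def min_snap_velocity_template(n_samples):
    """Unit-sum bell-shaped template proportional to tau**3 * (1 - tau)**3."""
    taus = [(k + 0.5) / n_samples for k in range(n_samples)]
    profile = [140.0 * tau ** 3 * (1.0 - tau) ** 3 for tau in taus]
    total = sum(profile)
    return [p / total for p in profile]


def cross_correlate_same(trace, template):
    """Cross-correlation aligned to the template centre; zero padding at edges."""
    half = len(template) // 2
    n = len(trace)
    output = []
    for i in range(n):
        acc = 0.0
        for j, weight in enumerate(template):
            idx = i + j - half
            if 0 <= idx < n:
                acc += trace[idx] * weight
        output.append(acc)
    return output


# Hypothetical example: a saccade-shaped pulse starting at sample 40.
template = min_snap_velocity_template(15)
trace = [0.0] * 100
for j, weight in enumerate(template):
    trace[40 + j] = 400.0 * weight

filtered = cross_correlate_same(trace, template)
peak_index = max(range(len(filtered)), key=lambda i: filtered[i])
print(peak_index)   # centre of the pulse: 40 + 15 // 2
```

Because the template is smooth, sample-to-sample alternating noise largely cancels in the weighted sum, whereas a pulse shaped like the template produces a clear peak at its centre.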

Step Four: Candidate saccades were identified from the cross-correlation output of Step Three using the iterative threshold estimation procedure from Nyström and Holmqvist (2010).
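For readers unfamiliar with this kind of data-driven threshold, the sketch below shows one common formulation: starting from an initial guess, the threshold is repeatedly reset to the mean plus a multiple of the standard deviation of all samples currently below it, until it stops changing. The multiplier of 6 SD, the 1 unit stopping tolerance, the use of the population standard deviation, and the function name are assumptions based on our reading of Nyström and Holmqvist (2010). They are not parameters reported in this appendix.

```python
from statistics import mean, pstdev


def iterative_peak_threshold(trace, initial_threshold, n_sd=6.0,
                             tolerance=1.0, max_iterations=100):
    """Iteratively re-estimate a peak threshold from the sub-threshold samples."""
    threshold = initial_threshold
    for _ in range(max_iterations):
        below = [v for v in trace if v < threshold]
        if len(below) < 2:
            break                         # too few samples to estimate spread
        new_threshold = mean(below) + n_sd * pstdev(below)
        if abs(new_threshold - threshold) < tolerance:
            return new_threshold          # converged
        threshold = new_threshold
    return threshold


# Hypothetical example: low-level background with three large peaks.
trace = [8.0, 10.0, 12.0] * 100 + [300.0, 300.0, 300.0]
threshold = iterative_peak_threshold(trace, initial_threshold=200.0)
candidate_peaks = [i for i, v in enumerate(trace) if v > threshold]
print(round(threshold, 3), candidate_peaks)
```

The appeal of this scheme is that the threshold adapts to the noise level of each recording instead of relying on one fixed value for all participants.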

Step Five: Once candidate saccades were identified, Nyström and Holmqvist's (2010) saccade identification algorithm (their step three) was used to determine saccade onsets and offsets from the compound angular eye velocity trace generated in Step One. Specifically, for each saccadic peak, a backward and forward search was executed that terminated at the first local minimum in the trace that was ≤ 3 SD below the mean velocity. Candidate saccades shorter than 10 ms were flagged as false alarms and discarded, and saccades separated by less than 30 ms were merged together. All saccade analyses in the text were performed on the saccade onsets and offsets detected in this way.
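The two clean-up rules at the end of this step (discarding very short candidates and merging closely spaced ones) can be sketched as below. The order of operations (discard first, then merge) is our assumption, since the text does not specify it. The function name and the example intervals are hypothetical.

```python
def clean_saccades(intervals_ms, min_duration_ms=10.0, min_gap_ms=30.0):
    """Drop candidates shorter than min_duration_ms, then merge neighbours
    whose gap (next onset minus previous offset) is below min_gap_ms.

    intervals_ms is a list of (onset_ms, offset_ms) tuples.
    """
    kept = sorted((on, off) for on, off in intervals_ms
                  if off - on >= min_duration_ms)
    merged = []
    for on, off in kept:
        if merged and on - merged[-1][1] < min_gap_ms:
            previous_on, previous_off = merged[-1]
            merged[-1] = (previous_on, max(previous_off, off))
        else:
            merged.append((on, off))
    return merged


# Hypothetical candidates: two close saccades, one 6 ms blip, one isolated saccade.
candidates = [(100, 140), (150, 190), (300, 306), (400, 450)]
print(clean_saccades(candidates))   # [(100, 190), (400, 450)]
```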

Step Six: To recover the smooth pursuit velocity trace, detected saccades were removed from the compound azimuthal eye velocity trace generated in Step One. The intervals in this trace ranging from the saccade onsets to 50 ms past the corresponding saccade offsets were replaced with straight lines obtained by linear interpolation. We removed 50 ms of data following each saccade offset to ensure that glissadic eye movements (Nyström & Holmqvist, 2010) and postsaccadic oscillations (Hooge, Nyström, Cornelissen, & Holmqvist, 2015; Nyström, Hooge, & Holmqvist, 2013) were also removed from the compound azimuthal eye velocity trace. Straight lines also replaced data missing due to eye blinks, which were detected based on the pupil size returned by the eye tracker.
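The saccade removal described in this step amounts to bridging each excluded interval with a straight line between the last sample before saccade onset and the first sample after the padded offset. A minimal sketch follows, assuming inclusive sample indices for onsets and offsets and a hypothetical 500 Hz sampling rate, so that 50 ms corresponds to 25 samples. Blink handling and saccades that touch the ends of the trace are not treated here.

```python
def remove_saccades(velocity, saccades, sample_rate_hz, post_offset_ms=50.0):
    """Replace each saccade, plus post_offset_ms after its offset, by a straight
    line between the neighbouring retained samples.

    saccades is a list of (onset_index, offset_index) pairs, both inclusive.
    """
    cleaned = list(velocity)
    n = len(cleaned)
    pad = int(round(post_offset_ms * sample_rate_hz / 1000.0))
    for onset, offset in saccades:
        left = max(onset - 1, 0)                # last sample kept before onset
        right = min(offset + pad + 1, n - 1)    # first sample kept after padding
        span = right - left
        for i in range(left + 1, right):
            fraction = (i - left) / span
            cleaned[i] = cleaned[left] + fraction * (cleaned[right] - cleaned[left])
    return cleaned


# Hypothetical example: a slowly rising pursuit velocity with one saccade.
sample_rate_hz = 500.0
velocity = [0.5 * i for i in range(200)]
for i in range(40, 45):
    velocity[i] = 300.0                         # saccade samples
for i in range(45, 56):
    velocity[i] += 15.0                         # post-saccadic disturbance

pursuit = remove_saccades(velocity, [(40, 44)], sample_rate_hz)
print(pursuit[40], pursuit[69])
```

In this example the interpolated segment spans samples 40 to 69 and recovers the underlying ramp, because the pursuit velocity is linear across the gap.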

Figure A1 plots a representative sample of the compound azimuthal eye velocity trace produced by Step One and the corresponding smooth pursuit velocity trace with saccades removed after Step Six.

Contributor Information

Diederick C. Niehorster, Email: dcnieho@gmail.com.

Li Li, Email: ll114@nyu.edu, https://shanghai.nyu.edu/research/brain/faculty/li-li.

References

- Angel R. W., Garland H. (1972). Transfer of information from manual to oculomotor control system. Journal of Experimental Psychology, 96 (1), 92–96, doi:http://dx.doi.org/10.1037/h0033457.

- Bahill A. T., McDonald J. D. (1983). Model emulates human smooth pursuit system producing zero-latency target tracking. Biological Cybernetics, 48 (3), 213–222, doi:http://dx.doi.org/10.1007/BF00318089.

- Barnes G. R., Asselman P. T. (1991). The mechanism of prediction in human smooth pursuit eye movements. The Journal of Physiology, 439 (1), 439–461.

- Collewijn H., Tamminga E. P. (1984). Human smooth and saccadic eye movements during voluntary pursuit of different target motions on different backgrounds. The Journal of Physiology, 351 (1), 217–250.

- Dodge R., Travis R. C., Fox J. J. (1930). Optic nystagmus, III. Characteristics of the slow phase. Archives of Neurology & Psychiatry, 24 (1), 21–34, doi:http://dx.doi.org/10.1001/archneurpsyc.1930.02220130024002.

- Drischel H. (1958). Über den Frequenzgang der horizontalen Folgebewegungen des menschlichen Auges [Translation: On the frequency response of horizontal pursuit movements of the human eye]. Pflüger's Archiv für die gesamte Physiologie des Menschen und der Tiere, 268 (1), 34–34, doi:http://dx.doi.org/10.1007/BF02291023.

- Engel K. C., Anderson J. H., Soechting J. F. (2000). Similarity in the response of smooth pursuit and manual tracking to a change in the direction of target motion. Journal of Neurophysiology, 84 (3), 1149–1156.

- García-Pérez M. A., Peli E. (2001). Intrasaccadic perception. The Journal of Neuroscience, 21 (18), 7313–7322.

- Gauthier G. M., Hofferer J. M. (1976). Eye tracking of self-moved targets in the absence of vision. Experimental Brain Research, 26 (2), 121–139, doi:http://dx.doi.org/10.1007/BF00238277.

- Gauthier G. M., Mussa Ivaldi F. (1988). Oculo-manual tracking of visual targets in monkey: Role of the arm afferent information in the control of arm and eye movements. Experimental Brain Research, 73 (1), 138–154, doi:http://dx.doi.org/10.1007/BF00279668.

- Gauthier G. M., Vercher J. L., Mussa Ivaldi F., Marchetti E. (1988). Oculo-manual tracking of visual targets: Control learning, coordination control and coordination model. Experimental Brain Research, 73 (1), 127–137, doi:http://dx.doi.org/10.1007/BF00279667.

- Hooge I. T. C., Nyström M., Cornelissen T., Holmqvist K. (2015). The art of braking: Post saccadic oscillations in the eye tracker signal decrease with increasing saccade size. Vision Research, 112, 55–67, doi:http://dx.doi.org/10.1016/j.visres.2015.03.015.

- Jagacinski R. J., Flach J. M. (2003). Control theory for humans: Quantitative approaches to modeling performance. Mahwah, NJ: Lawrence Erlbaum Associates.

- Joiner W. M., Shelhamer M. (2006). Pursuit and saccadic tracking exhibit a similar dependence on movement preparation time. Experimental Brain Research, 173 (4), 572–586, doi:http://dx.doi.org/10.1007/s00221-006-0400-3.

- Jordan S. (1970). Ocular pursuit movement as a function of visual and proprioceptive stimulation. Vision Research, 10 (8), 775–780, doi:http://dx.doi.org/10.1016/0042-6989(70)90021-0.

- Ke S. R., Lam J., Pai D. K., Spering M. (2013). Directional asymmetries in human smooth pursuit eye movements. Investigative Ophthalmology & Visual Science, 54 (6), 4409–4421, doi:10.1167/iovs.12-11369.

- Koken P. W., Erkelens C. J. (1992). Influences of hand movements on eye movements in tracking tasks in man. Experimental Brain Research, 88 (3), 657–664, doi:http://dx.doi.org/10.1007/BF00228195.

- Krauzlis R. J. (2004). Recasting the smooth pursuit eye movement system. Journal of Neurophysiology, 91 (2), 591–603.

- Krauzlis R. J. (2005). The control of voluntary eye movements: New perspectives. The Neuroscientist, 11 (2), 124–137.

- Krauzlis R. J., Lisberger S. G. (1994). Temporal properties of visual motion signals for the initiation of smooth pursuit eye movements in monkeys. Journal of Neurophysiology, 72 (1), 150–162.

- Li L., Niehorster D. C., Liston D., Siu W. W. F., Stone L. (2013). Shared neural sensory signals for eye-hand coordination in humans. Journal of Vision, 13 (9): 1205, doi:10.1167/13.9.1205.

- Li L., Stone L. S., Chen J. (2011). Influence of optic-flow information beyond the velocity field on the active control of heading. Journal of Vision, 11 (4): 9, 1–16, doi:10.1167/11.4.9.

- Li L., Sweet B. T., Stone L. S. (2005). Effect of contrast on the active control of a moving line. Journal of Neurophysiology, 93 (5), 2873–2886, doi:http://dx.doi.org/10.1152/jn.00200.2004.

- Li L., Sweet B. T., Stone L. S. (2006). Active control with an isoluminant display. IEEE Transactions on Systems, Man and Cybernetics A: Systems and Humans, 36 (6), 1124–1134, doi:http://dx.doi.org/10.1109/TSMCA.2006.878951.

- Lisberger S. G. (2010). Visual guidance of smooth-pursuit eye movements: Sensation, action, and what happens in between. Neuron, 66 (4), 477–491, doi:http://dx.doi.org/10.1016/j.neuron.2010.03.027.

- Liston D. B., Krukowski A. E., Stone L. S. (2013). Saccade detection during smooth tracking. Displays, 34 (2), 171–176, doi:http://dx.doi.org/10.1016/j.displa.2012.10.002.

- Mather J. A., Lackner J. R. (1980). Visual tracking of active and passive movements of the hand. Quarterly Journal of Experimental Psychology, 32 (2), 307–315, doi:http://dx.doi.org/10.1080/14640748008401166.

- McRuer D. T., Krendel E. S. (1974). Mathematical models of human pilot behavior. AGARD-AG-188, Advisory Group for Aerospace Research and Development. London: Technical Editing and Reproduction.

- Miall R. C., Weir D. J., Stein J. F. (1986). Manual tracking of visual targets by trained monkeys. Behavioural Brain Research, 20 (2), 185–201, doi:http://dx.doi.org/10.1016/0166-4328(86)90003-3.

- Mulligan J. B., Stevenson S. B., Cormack L. K. (2013). Reflexive and voluntary control of smooth eye movements. Proceedings SPIE 8651, Human Vision and Electronic Imaging, XVIII, 86510Z, doi:http://dx.doi.org/10.1117/12.2010333.

- Niehorster D. C., Peli E., Haun A., Li L. (2013). Influence of hemianopic visual field loss on visual motor control. PLoS One, 8 (2), e56615: 1–9, doi:10.1371/journal.pone.0056615.

- Nyström M., Holmqvist K. (2010). An adaptive algorithm for fixation, saccade, and glissade detection in eyetracking data. Behavior Research Methods, 42 (1), 188–204, doi:http://dx.doi.org/10.3758/BRM.42.1.188.

- Nyström M., Hooge I. T. C., Holmqvist K. (2013). Post-saccadic oscillations in eye movement data recorded with pupil-based eye trackers reflect motion of the pupil inside the iris. Vision Research, 92, 59–66, doi:http://dx.doi.org/10.1016/j.visres.2013.09.009.

- Orban de Xivry J. J., Lefèvre P. (2007). Saccades and pursuit: Two outcomes of a single sensorimotor process. Journal of Physiology, 584 (1), 11–23.

- Puckett J. D. W., Steinman R. M. (1969). Tracking eye movements with and without saccadic correction. Vision Research, 9 (6), 695–703, doi:http://dx.doi.org/10.1016/0042-6989(69)90126-6.

- Savitzky A., Golay M. J. E. (1964). Smoothing and differentiation of data by simplified least squares procedures. Analytical Chemistry, 36 (8), 1627–1639, doi:http://dx.doi.org/10.1021/ac60214a047.

- Scarchilli K., Vercher J. L. (1999). The oculomanual coordination control center takes into account the mechanical properties of the arm. Experimental Brain Research, 124 (1), 42–52, doi:http://dx.doi.org/10.1007/s002210050598.

- Stark L., Iida M., Willis P. A. (1961). Dynamic characteristics of the motor coordination system in man. Biophysical Journal, 1 (4), 279–300, doi:http://dx.doi.org/10.1016/S0006-3495(61)86889-6.

- Stark L., Vossius G., Young L. R. (1962). Predictive control of eye tracking movements. IRE Transactions on Human Factors in Electronics, 3 (2), 52–57, doi:http://dx.doi.org/10.1109/THFE2.1962.4503342.

- Steinbach M. J. (1969). Eye tracking of self-moved targets: The role of efference. Journal of Experimental Psychology, 82 (2), 366–376, doi:http://dx.doi.org/10.1037/h0028115.

- Steinbach M. J., Held R. (1968). Eye tracking of observer-generated target movements. Science, 161 (3837), 187–188, doi:http://dx.doi.org/10.1126/science.161.3837.187.

- Stone L. S., Krauzlis R. J. (2003). Shared motion signals for human perceptual decisions and oculomotor actions. Journal of Vision, 3 (11): 7, 725–736, doi:10.1167/3.11.7.

- Vercher J. L., Gauthier G. M. (1992). Oculo-manual coordination control: Ocular and manual tracking of visual targets with delayed visual feedback of the hand motion. Experimental Brain Research, 90 (3), 599–609, doi:http://dx.doi.org/10.1007/BF00230944.

- Vercher J. L., Gauthier G. M., Cole J., Blouin J. (1997). Role of arm proprioception in calibrating the arm-eye temporal coordination. Neuroscience Letters, 237 (2–3), 109–112, doi:http://dx.doi.org/10.1016/S0304-3940(97)00816-1.

- Westheimer G. (1954). Eye movement responses to a horizontally moving visual stimulus. AMA Archives of Ophthalmology, 52 (6), 932–941, doi:http://dx.doi.org/10.1001/archopht.1954.00920050938013.

- Xia R., Barnes G. (1999). Oculomanual coordination in tracking of pseudorandom target motion stimuli. Journal of Motor Behavior, 31 (1), 21–38, doi:http://dx.doi.org/10.1080/00222899909601889.
