Abstract

Student populations are diverse such that different types of learners struggle with traditional didactic instruction. Problem-based learning has existed for several decades, but there is still controversy regarding the optimal mode of instruction to ensure success at all levels of students' past achievement. The present study addressed this problem by dividing students into the following three instructional groups for an upper-level course in animal physiology: traditional lecture-style instruction (LI), guided problem-based instruction (GPBI), and open problem-based instruction (OPBI). Student performance was measured by three summative assessments consisting of 50% multiple-choice questions and 50% short-answer questions as well as a final overall course assessment. The present study also examined how students of different academic achievement histories performed under each instructional method. When student achievement levels were not considered, the effects of instructional methods on student outcomes were modest; OPBI students performed moderately better on short-answer exam questions than both LI and GPBI groups. High-achieving students showed no difference in performance for any of the instructional methods on any metric examined. In students with low-achieving academic histories, OPBI students largely outperformed LI students on all metrics (short-answer exam: P < 0.05, d = 1.865; multiple-choice question exam: P < 0.05, d = 1.166; and final score: P < 0.05, d = 1.265). They also outperformed GPBI students on short-answer exam questions (P < 0.05, d = 1.109) but not multiple-choice exam questions (P = 0.071, d = 0.716) or final course outcome (P = 0.328, d = 0.513). These findings strongly suggest that typically low-achieving students perform at a higher level under OPBI as long as the proper support systems (formative assessment and scaffolding) are provided to encourage student success.

Keywords: inquiry, low-achieving students, problem-based learning

Several decades ago, problem-based learning (PBL) was introduced at McMaster University Medical School, and it challenged the fundamental pedagogical principles of science education and the teaching of physiology (4). The success and popularity of PBL in medical education were noticed in the community of undergraduate education, where its methods were adapted to this student population (47, 48). This movement of teaching through problem solving resurrected the closely related method of guided inquiry-based learning (GIL), which conveys information to students through the process of scientific inquiry under more direct instructor supervision and guidance (13, 23). Both PBL and GIL are rooted in the social constructivist theories of Dewey (10), and both follow a cyclical process that reinforces and builds on information throughout the semester. Students are presented with a problem or question and a set of “what is known” data from which they generate a hypothesis. At this point, the students are given new data and asked to use critical reasoning skills to rethink their original hypothesis and discuss it with the class. This process is doubly beneficial for the students; they gain knowledge of the concepts discussed and develop skills in hypothesis generation and critical reasoning that strengthen throughout the semester and can be used throughout their careers (Fig. 1). These methods are successful because many learners do not maximally benefit from traditional didactic instructional methods and instead prefer a more active learning style (42). Recently, controversy has arisen regarding the effectiveness of PBL and GIL, with critics labeling them as minimal guidance instructional methods (21). These arguments possess two potentially fatal flaws. First, while these methods may vary in their level of instruction, there is always some degree of direct instruction coupled with significant scaffolding for student instructional support (5, 18, 37). Second, the study in question selectively identified literature that supports the authors' claims while ignoring the wealth of evidence to the contrary (18, 21). There exists a significant archive of literature that strongly supports and advocates for the efficacy of PBL and GIL in medical school, university, and secondary-school student populations (11, 19, 22, 31, 32, 40, 44). There is a paucity of data, however, that addresses the specific level or degree of guidance best suited for these types of constructivist, discovery-based learning environments.

Fig. 1.

Problem-based learning is a cyclical process where practices and concepts learned in one exercise must be reused and reinforced in subsequent activities.

To address this, a required undergraduate core physiology course was designed with three different levels of instruction, and predictions were made about potential student outcomes for each mode of instruction (Table 1). Traditional lecture-style instruction has been shown to effectively transmit both knowledge and understanding to students but falls short in equipping students with sufficient problem-solving skills (11, 41). Constructivist learning models have been shown to be equally effective at conveying knowledge and understanding while also improving critical thinking skills and self-directed learning (19, 20, 22, 25, 39). These previous studies, however, have not addressed how these methods work on different populations of students (i.e., low- and high-achieving students) and whether different levels of guidance, within a constructivist model, will affect these populations differently.

Table 1.

Characterization and potential outcomes of each teaching method

| | LI | GPBI | OPBI |
|---|---|---|---|
| Instruction | | | |
| Assumptions about learning | | | |
| Motivations for learning | | | |
| Assessment | | | |
| Potential outcomes | | | |

LI, lecture instruction; GPBI, guided problem-based instruction; OPBI, open problem-based instruction; MC, multiple choice; SA, short answer.

The present study investigated how undergraduate students with different levels of overall academic history responded to different modes of instruction in a required upper-level core animal physiology course. Both declarative knowledge and critical thinking skills were assessed by multiple-choice (MC) exam questions and short-answer (SA) exam questions, respectively.

MATERIALS AND METHODS

Instructional Groups

Three instructional groups and methods were developed and labeled as follows: “lecture instruction” (LI), “guided problem-based instruction” (GPBI), and “open problem-based instruction” (OPBI). All students randomly selected their instructional group based on course scheduling and their own availability and were unaware of the type of instruction offered before course enrollment. Wet laboratory instruction was also provided for all students, and the style of laboratory instruction did not differ among groups. To control for classroom instructional ability, the same professor designed each instructional session and conducted the teaching for all sessions. Student cumulative grade point averages (GPAs) were not different between instructional groups at the onset of the course (P > 0.05). All course materials, such as recommended texts, access to PowerPoint materials, supplemental reading, website, and laboratory materials, were identical for all groups of students in the study.

LI group.

The LI group consisted of 47 biology majors with a cumulative GPA of 3.29 ± 0.53. These students were presented with information through traditional lecture-style classroom instruction by a full professor in the University of Kentucky's Department of Biology during 75-min instructional sessions held on Tuesday and Thursday over the 13-wk semester.

GPBI group.

The GPBI group consisted of 38 biology majors with a cumulative GPA of 3.25 ± 0.54. Instructional times for these students consisted of 75-min sessions that met 2 times/wk (Tuesday and Thursday) over the 13-wk semester. During class periods, students worked in small, randomly formed groups of three to four on guided activities and short timeframe tutorials (8–10 min) designed to encompass the same concepts covered in the LI group. The instructor of these sessions had a significant role as a facilitator, providing selected content to students at the onset of the lesson and providing unsolicited guidance during their problem-solving activities.

OPBI group.

This group contained 35 biology majors with a cumulative GPA of 3.40 ± 0.53. The instructional design of this group was somewhat similar to the GPBI group but with marked and significant differences. Students randomly chose to work jointly in small groups of three to four on similar and sometimes the same activities provided to the GPBI instructional group. The major instructional difference of the OPBI group from the GPBI group was that no formal dissemination of information and guidance was provided during the initiation of OPBI problem-solving activities. Students were provided with a core question or series of core questions to begin the instruction. Information then was provided when solicited by students in working groups and was provided in a manner that directed students to explore and seek the type of information required for problem-solving activities and core questions.

Randomization of Subjects and Grading

All students enrolling in BIO 350: Animal Physiology at the University of Kentucky chose one of seven sections. Each section was assigned one of the three instructional treatment groups (LI group: 3 sections; GPBI and OPBI groups: 2 sections each). During the enrollment process, students were unaware that different teaching methods would be used in different sections. At the beginning of the course, all students were informed of the study and provided informed consent. Once informed, students were given the opportunity to switch sections if they preferred an alternate teaching methodology, although no students exercised this option.

All exams and assignments were graded blindly. MC questions were graded by Scantron scanners and entered into a database by a graduate teaching assistant. SA questions were all graded by the professor in charge of the course. Student names and section numbers were replaced with an unidentifiable eight-digit code on all exam forms, and exams were shuffled so that they were in a random order and unidentifiable by instructional group. Answer rubrics were generated for each SA question, and questions were graded in numeric order, with each question graded across all exams before the next question was started. Upon completion of grading, a graduate teaching assistant re-sorted the exams by section number, decoded student names, and entered scores into a database.
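The coding and shuffling steps described above can be sketched as follows. This is only an illustration of the idea, not the procedure the authors actually used: the function name `blind_exams` and the dictionary keys `name`, `section`, and `answers` are hypothetical, and the use of a seeded pseudorandom generator is an assumption.

```python
import random


def blind_exams(exams, seed=None):
    """Replace identifying fields with unique eight-digit codes and shuffle.

    `exams` is a list of dicts with the hypothetical keys "name",
    "section", and "answers". Returns (blinded, key): `blinded` is the
    shuffled list of coded exams, and `key` maps each code back to
    (name, section) so scores can be decoded after grading.
    """
    rng = random.Random(seed)
    # Sampling without replacement guarantees that no two exams share a code.
    codes = rng.sample(range(10_000_000, 100_000_000), len(exams))
    key = {}
    blinded = []
    for exam, code in zip(exams, codes):
        code_str = str(code)
        key[code_str] = (exam["name"], exam["section"])
        # Only the code and the answers travel with the exam to the grader.
        blinded.append({"code": code_str, "answers": exam["answers"]})
    # Shuffle so that exam order carries no information about section.
    rng.shuffle(blinded)
    return blinded, key
```

Keeping the decoding key separate from the graded material is what allows a second person to re-sort and decode the exams once grading is finished.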

Assessment

Summative assessment.

Student performance was summatively assessed by three exams that were 50% MC and 50% SA questions. Students from all three instructional groups (LI, GPBI, and OPBI groups) took the same exams on the same days. On exams 1 and 2, there were 60 possible points for MC questions and 65 possible points for SA questions. Exam 3 had 80 possible points each for MC and SA questions. The concepts covered on each exam are shown in Table 2. Exam 1 focused primarily on cell physiology, membranes and membrane potentials, and neuronal function. Exam 2 covered the largest unit in terms of overall physiological content and concepts: skeletal muscle contraction, cardiovascular and renal physiology, and blood pressure control. In exam 3, the major focus was on respiration, acid-base balance, and endocrinology and reproductive physiology. All exams consisted of 1/3 Bloom's taxonomy level 1/2 questions, 1/3 Bloom's level 4/5 questions, and 1/3 Bloom's level 8 or greater questions. The week before final exams consisted of a short discussion of digestion and a more complete component of nutrient and energy balance. It is important to note that all exams were cumulative and included conceptual aspects from previous units. This method of assessment was used to support the different instructional modalities, thereby forcing students to continually incorporate conceptual understandings of physiology in a holistic manner.

Table 2.

Physiological concepts covered by each exam

| Exam 1 | Exam 2 | Exam 3 |
|---|---|---|

Formative assessment.

The LI group had no ongoing or specific formative assessment conducted between exams. In the GPBI and OPBI groups, students engaged in daily, nongraded activities and class discussions. These group activities and class discussions gave the instructor clear indications of conceptual areas requiring reinforcement or, in some cases, further instruction.

Identification of Academic Performance Groups

To investigate the influence of these different instructional methods on physiology students with different overall academic proficiencies, students were divided into three subgroups within each instructional group (Table 3). High-achieving students were defined as students with a cumulative GPA of 3.6 or higher at the onset of the course, average-level students began the course with a cumulative GPA between 3.0 and 3.6, and low-achieving students were defined as students with a cumulative GPA of 3.0 or less at the course onset. Students of all achievement levels were integrated into each instructional group and received the same instruction.
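The GPA cutoffs above amount to a simple three-way classification, sketched below. The function name `achievement_level` and the string labels are hypothetical, introduced only for illustration; the boundary handling (3.6 counted as high, exactly 3.0 counted as low) follows the wording of the definitions.

```python
def achievement_level(gpa):
    """Classify a student by cumulative GPA at the onset of the course.

    Cutoffs follow the definitions in the text: 3.6 or higher is high
    achieving, 3.0 or less is low achieving, and anything in between
    is average level.
    """
    if gpa >= 3.6:
        return "high"
    if gpa > 3.0:
        return "average"
    return "low"


# Hypothetical GPAs, used only to show the boundary behavior.
for gpa in (3.9, 3.6, 3.25, 3.0, 2.4):
    print(gpa, achievement_level(gpa))
```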

Table 3.

Sample sizes for the instructional groups and achievement levels

| | High-Achieving Students | Average-Achieving Students | Low-Achieving Students |
|---|---|---|---|
| LI group | 14 | 25 | 8 |
| GPBI group | 10 | 14 | 14 |
| OPBI group | 18 | 8 | 9 |

Statistical Analysis

Exam and final course scores are presented as means ± SE of total points earned. When LI, GPBI, and OPBI groups or high-, average-, and low-achieving students were compared, we used either one-way ANOVA with Student-Newman-Keuls post hoc analysis (for normally distributed data) or Kruskal-Wallis ANOVA on ranks with Dunn's test for post hoc analysis (for data not normally distributed). Effect sizes between groups were calculated with Cohen's d. All data are presented as means ± SE unless otherwise noted. The 0.05 level of probability was used as the criterion for significance in all data sets.
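Because results are reported as means ± SE, an effect size can be recomputed from the summary statistics and the sample sizes in Table 3. The article does not state which variant of Cohen's d was used, so the sketch below rests on an assumption: it divides the mean difference by the root mean square of the two group standard deviations, with each SD recovered as SE × √n. Under that assumption, the low-achieving OPBI versus LI comparisons come out close to the reported values. The function names are hypothetical.

```python
import math


def sd_from_se(se, n):
    """Recover a standard deviation from a standard error and sample size."""
    return se * math.sqrt(n)


def cohens_d(mean1, se1, n1, mean2, se2, n2):
    """Cohen's d using the root mean square of the two group SDs.

    Assumption: this unweighted form is one common variant of Cohen's d;
    the source does not say which variant the authors used.
    """
    sd1 = sd_from_se(se1, n1)
    sd2 = sd_from_se(se2, n2)
    rms_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (mean1 - mean2) / rms_sd


# Low-achieving students, OPBI (n = 9) versus LI (n = 8), values from the text.
d_sa = cohens_d(54.63, 2.05, 9, 38.04, 3.88, 8)        # short-answer questions
d_mc = cohens_d(53.33, 1.83, 9, 45.04, 2.98, 8)        # multiple-choice questions
d_final = cohens_d(556.81, 20.23, 9, 450.56, 36.08, 8)  # final course score
print(round(d_sa, 3), round(d_mc, 3), round(d_final, 3))
```

A pooled SD weighted by degrees of freedom would be an equally defensible choice and gives slightly different values when group sizes are unequal.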

RESULTS

General Student Population Exam Performance by Instructional Group

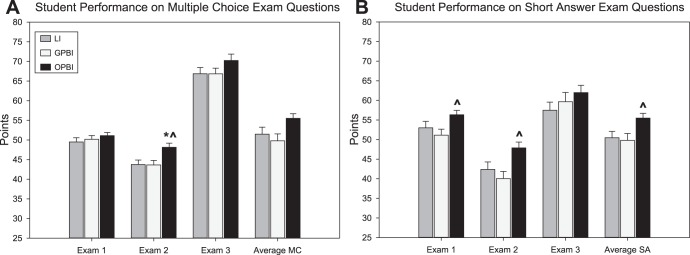

Student performance on MC questions for all three exams and averaged MC scores are shown in Fig. 2A. OPBI students scored significantly higher on the MC portion of exam 2 (48.18 ± 1.08 points) compared with GPBI (43.64 ± 1.14 points, P < 0.05, d = 0.667) or LI (43.75 ± 1.14 points, P < 0.05, d = 0.617) students. OPBI students tended to perform better on MC portions of other exams, but no other statistical significance was found (Fig. 2A). On the SA portion of exams, OPBI students scored better on average (55.50 ± 1.20 points) compared with GPBI (49.79 ± 1.75 points, P < 0.05, d = 0.619) but not LI (50.46 ± 1.62 points, P = 0.070, d = 0.540) students (Fig. 2B). Although there were no statistical differences between LI students and OPBI students on SA exam questions, OPBI students overall did score higher on every exam (Fig. 2B).

Fig. 2.

Open problem-based instruction (OPBI) had little effect on overall student exam performance compared to either traditional lecture instruction (LI) or guided problem-based instruction (GPBI) groups. SA, short answer. *P < 0.05 compared with the LI group; ^P < 0.05 compared with the GPBI group.

Effect of PBL on High- and Low-Achieving Student Exam Performance

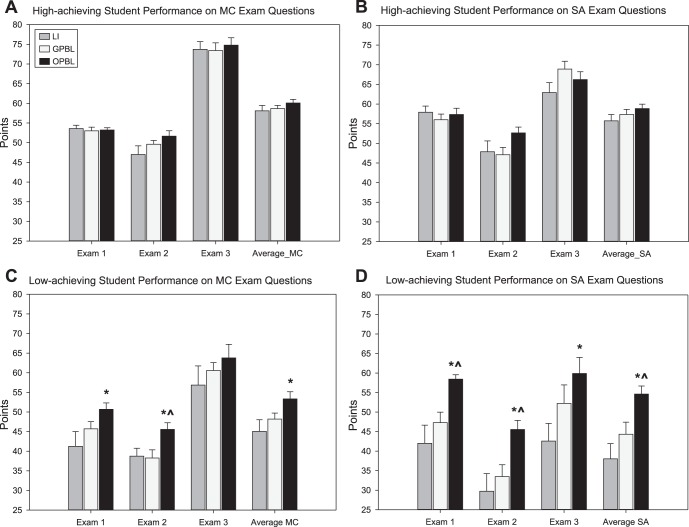

Low-achieving students benefited greatly from the open problem-based style of instruction (Fig. 3, C and D). They scored better on MC exam questions when instructed by OPBI (53.33 ± 1.83 points) compared with LI (45.04 ± 2.98 points, P < 0.05, d = 1.166) but not GPBI (48.19 ± 1.52 points, P = 0.071, d = 0.716), although there was a trend toward significance. On exam 2, MC scores were higher for OPBI students (45.56 ± 1.69 points) than for both GPBI (38.29 ± 2.08 points, P < 0.05, d = 1.267) and LI (38.75 ± 2.00 points, P < 0.05, d = 1.267) students (Fig. 3C). OPBI proved to be of an even greater benefit for low-achieving students on SA exam questions, with OPBI students scoring much higher on average (54.63 ± 2.05 points) compared with both LI (38.04 ± 3.88 points, P < 0.05, d = 1.865) and GPBI (44.33 ± 3.10 points, P < 0.05, d = 1.109) students (Fig. 3D).

Fig. 3.

High-achieving students performed just as well on both multiple-choice (MC; A) and SA (B) exam questions regardless of instructional group. Low-achieving students in the OPBI group performed better on MC (C) and SA (D) exam questions compared with both LI and GPBI groups. *P < 0.05 compared with the LI group; ^P < 0.05 compared with the GPBI group.

Focusing on high-achieving students, there were no observable differences on MC exam questions between LI (58.10 ± 1.37 points), GPBI (58.67 ± 0.81 points), or OPBI (60.08 ± 0.95 points) students (Fig. 3A). These students also exhibited no difference on responses to SA exam questions between LI (55.74 ± 1.60 points), GPBI (57.33 ± 1.32 points), or OPBI (58.83 ± 1.14 points) students (Fig. 3B).

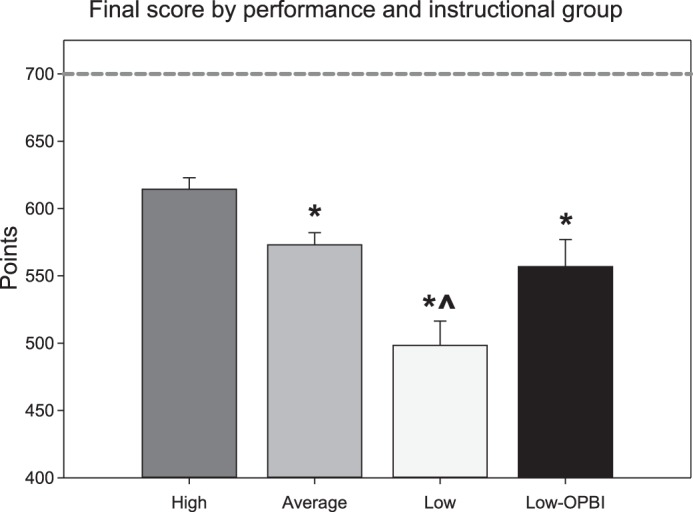

Overall Course Performance

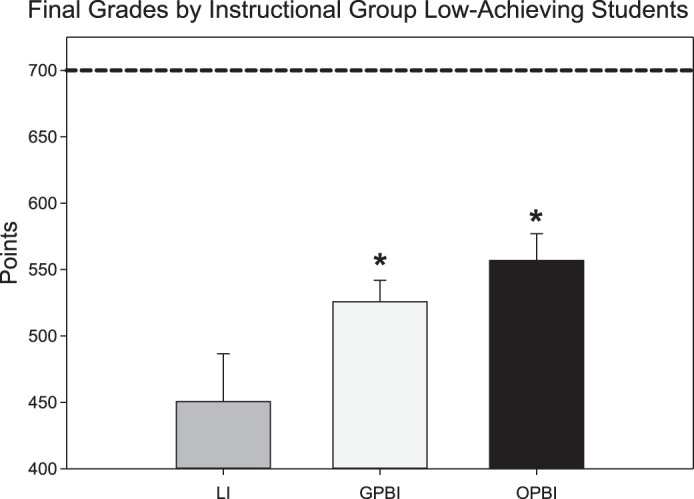

Overall course outcome was improved for low-achieving students instructed by the OPBI method (556.81 ± 20.23 points) compared with the LI (450.56 ± 36.08 points, P < 0.05, d = 1.265) method (Fig. 4). In fact, while GPA was a good indicator of overall course performance, with low-achieving students scoring lower (498.38 ± 17.97 points) than both average-level (573.07 ± 9.07 points, P < 0.05, d = 0.979) and high-achieving (614.30 ± 8.66 points, P < 0.05, d = 1.547) students, low-achieving students instructed by the OPBI method (556.81 ± 20.23 points) exhibited an overall course outcome not different from that of average-level (P = 0.315, d = 0.283) students (Fig. 5).

Fig. 4.

Low-achieving students performed better in the course when instructed by either OPBI or GPBI. The maximum possible points are represented by the dashed line. *P < 0.05 compared with the LI group; ^P < 0.05 compared with the GPBI group.

Fig. 5.

Low-achieving students had lower final course scores compared with both high- and average-achieving students. When low-achieving students were instructed by the OPBI method, they performed as well as average-achieving students. The maximum possible points are represented by the dashed line. *P < 0.05 compared with high-achieving students; ^P < 0.05 compared with average-achieving students.

DISCUSSION

The present study set out to achieve two primary goals. The first goal was to characterize and describe two distinctive varieties of PBL with differing degrees of direct instruction (Table 1). To be clear, neither of these methods, OPBI or GPBI, should be considered minimal guidance methods as there is significant scaffolding in place to ensure student success. The second goal was to evaluate the efficacy of these methods on student populations of diverse academic background compared with traditional lecture-style instruction. Many studies have found that PBL is a more effective method than LI, particularly when considering critical reasoning skills and long-term knowledge retention (2, 30, 44, 46). The results of the present study were not different, as students in the PBL groups outperformed LI students on assessment directed at critical reasoning skills (SA exam questions).

There has been some controversy regarding the effectiveness of constructivist methods and the level of guidance that should accompany them (18, 21). Leppink and colleagues (24) found that the added guidance of a GPBI course was moderately beneficial to novice, entry-level students with little or no prior knowledge of the course content. The present study found the opposite to be true in non-novice students, as the OPBI group performed moderately better than the LI group on assessments of critical reasoning skills, although GPBI group performance was generally not different. That is to say, students who have been exposed to the core concepts in previous introductory courses and have a knowledge base from which to draw will benefit more from the open-inquiry environment created by OPBI as opposed to LI or GPBI. This effect was magnified in low-achieving students. This group of students, when instructed by the OPBI method, exhibited improved content acquisition and understanding (MC exam questions) and vastly improved critical reasoning skills compared with both GPBI and LI groups (Fig. 3C). These results indicate that typically low-achieving students are stimulated by the self-directed and collaborative nature of OPBI and subsequently are able to achieve a greater level of conceptual understanding of complex physiological subject matter.

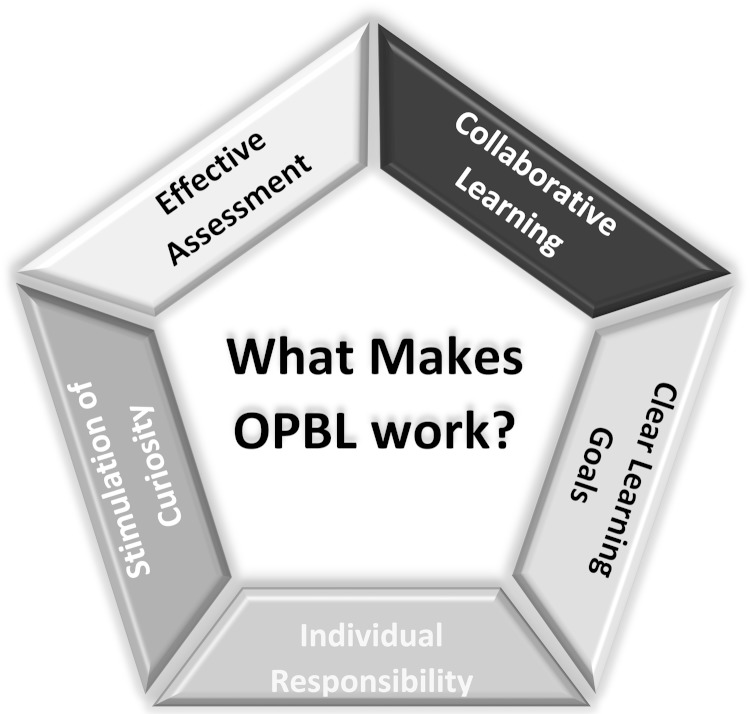

There are five main characteristics of OPBI that may have facilitated enhanced performance in typically low-achieving students (Fig. 6). The first is a collaborative, peer learning environment that encourages students from all academic backgrounds to work together and has been shown to increase student success in secondary and undergraduate science, technology, engineering, and mathematics education (27, 43). Second, this method of learning includes an inherent sense of individual responsibility and self-directed learning that not only garners improved course performance but also establishes student confidence for success when they enter the professional environment. Third, one of the biggest obstacles with these learners is motivating them in the classroom. OPBI provides an environment that motivates these students both in the traditional way (grades) and by creating a demand for knowledge (need to know) and holding them responsible to their peers. Fourth, low-achieving students benefit from the clearly defined learning goals that are intrinsic to well-designed constructivist methods (6). Finally, cooperative formative assessments and instructional scaffolding allow for early identification of problem areas in low-achieving students and provide a preexisting toolset to address these areas.

Fig. 6.

Key characteristics that make OPBI such an effective tool.

The collaborative environment created by OPBI is essential for elevating the learning strength of low-achieving students. When students work together, the individual intellectual burden of learning can be diminished by exploiting the unique skill and knowledge sets of individual group members (17, 33, 36). This is especially true when low- and high-achieving students work together (15). The relationship between the student and instructor is a critical aspect of collaboration within groups, and each has a specific role to play to best use the group dynamic. The instructor's role is to ensure that all students are making active contributions to their group and to intervene with leading questions when needed (28, 29). Students have many responsibilities to the group. They must contribute meaningfully to the group discussion, not only by presenting their own ideas but also by listening to the ideas of other group members and adjusting their opinions and process of thinking when necessary. Students' duties extend beyond the classroom; to effectively contribute to the team, students must come to class prepared to discuss the day's topics (12).

The concept of extended learning beyond the classroom strongly promotes individual responsibility and the development of self-directed and self-regulated learning skills. As educators, we have some control over how students develop learning strategies and habits while they are in the classroom, but outside of the classroom, they must be responsible for their own learning. Several studies have investigated the ability of students of various achievement levels to develop self-directed learning skills and found that low-achieving undergraduate students struggle to develop these skills (1, 35). The development of external study and learning skills could be one of the key disadvantages separating low-achieving students from their average- and high-achieving counterparts. There is substantial evidence, both qualitative and quantitative, that supports the effectiveness of PBL at developing self-directed learning skills (8, 20, 25, 45). The results of this study suggest that the performance of low-achieving students instructed by the OPBI method is elevated to that of average-achieving students, who outperform low-achieving students instructed by LI methods (Figs. 4 and 5). This may be due in part to enriched self-directed learning skills in low-achieving students in the OPBI group. Interactions with traditionally higher-achieving students likely contributed significantly to helping the lower-achieving students improve their learning skills. These aspects of how OPBI improves learning in this student group are currently being explored.

To achieve these first two objectives, the course must create a motivating learning environment that garners interest in subject matter. It has been shown that low-achieving student performance is enhanced when interest in the subject matter is high (7). In addition to traditional motivations, such as passing examinations and earning high course grades, OPBI approaches this obstacle from several angles. First, the problems and tasks required of students during class periods are intrinsically motivating because they create a need to know and a curiosity in the topics that persist over time (9, 16, 34). Second, the group dynamic motivates the individuals to come to class prepared to discuss the day's material so that they can make a meaningful contribution, thereby stimulating interest, self-directed learning, and a more productive classroom environment. Finally, clearly defined learning goals (i.e., hypothesis generation, data analysis, and critical thinking skills) help to keep low-achieving students on task, interested, and motivated to learn (14, 15).

Formative assessment and instructional scaffolding are critical to ensure student success in PBL environments, and this is especially true in low-achieving students (3). The effectiveness and benefits of scaffolding in constructivist methods have been eloquently reviewed (18). Formative assessment is an essential tool for instructors to identify conceptual areas of need, and in OPBI this is incorporated into daily classroom activities of small groups and formal class discussions. To maximize the effect of these tools, it is imperative to design them to work together in a dynamic way, so that scaffolding is responsive to formative assessment. In conclusion, these data demonstrate that low-achieving students benefit from the motivating, stimulating, and supportive nature of OPBI. This instructional method promotes success in this population of students. Future research on this instructional method is designed to help elucidate the potential mechanisms by which lower-achieving students improve their performance under OPBI.

GRANTS

This work was partially supported by National Science Foundation Grant 0431552 (to J. L. Osborn, Principal Investigator).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: B.M.F., L.X., J.A.C., and J.L.O. conception and design of research; B.M.F., L.X., J.A.C., M.K.R., and J.L.O. performed experiments; B.M.F. and L.X. analyzed data; B.M.F., L.X., J.A.C., and J.L.O. interpreted results of experiments; B.M.F. prepared figures; B.M.F. drafted manuscript; B.M.F., L.X., J.A.C., M.K.R., and J.L.O. edited and revised manuscript; B.M.F. and J.L.O. approved final version of manuscript.

ACKNOWLEDGMENTS

The authors thank Jacqueline Burke for work and support in the production of the classroom instructional materials.

REFERENCES

- 1.Albaili MA. Differences among low-, average- and high-achieving college students on learning and study strategies. Educ Psychol 17: 171–177, 1997. [Google Scholar]

- 2.Antepohl W, Herzig S. Problem-based learning versus lecture-based learning in a course of basic pharmacology: a controlled, randomized study. Med Educ 33: 106–113, 1999. [DOI] [PubMed] [Google Scholar]

- 3.Barron BJ, Schwartz DL, Vye NJ, Moore A, Petrosino A, Zech L, Bransford JD. Doing with understanding: lessons from research on problem- and project-based learning. J Learn Sci 7: 271–311, 1998. [Google Scholar]

- 4.Barrows HS. Problem-Based learning: an Approach to Medical Education. New York: Springer, 1980. [Google Scholar]

- 5.Barrows HS. A taxonomy of problem-based learning methods. Med Educ 20: 481–486, 1986. [DOI] [PubMed] [Google Scholar]

- 6.Barrows HS. Problem-based learning in medicine and beyond: a brief overview. New Direct Teach Learn 1996: 3–12, 1996. [Google Scholar]

- 7.Belloni LF, Jongsma EA. The effects of interest on reading comprehension of low-achieving students. J Read 22: 106–109, 1978. [Google Scholar]

- 8.Blumberg P. Evaluating the evidence that problem-based learners are self-directed learners: a review of the literature. In: Problem-Based Learning: a Research Perspective on Learning Interactions, edited by Evensen D, Hmelo CE. Mahwah, NJ: Erlbaum, 2000, p. 199–226. [Google Scholar]

- 9.De Volder ML, Schmidt HG, Moust JH, De Grave WS. Problem-based learning and intrinsic motivation. In: Achievement and Task Motivation, edited by van derBerchen JH, Bergen TC, deBruyn EE. Berwyn, PA: Swets North America, 1986, p. 128–34. [Google Scholar]

- 10.Dewey J. Experience and Education. New York: Simon and Schuster, 2007. [Google Scholar]

- 11.Dochy F, Segers M, Van den Bossche P, Gijbels D. Effects of problem-based learning: a meta-analysis. Learn Instruct 13: 533–568, 2003. [Google Scholar]

- 12.Donnelly R, Fitzmaurice M. Collaborative project-based learning and problem-based learning in higher education: a consideration of tutor and student role in learner-focused strategies. In: Emerging Issues in the Practice of University Learning and Teaching, edited by O’Neill G, Moor S, McMulling B. Dublin: All Ireland Society for Higher Education, 2005, p. 87–98. [Google Scholar]

- 13.Farrell JJ, Moog RS, Spencer JN. A guided-inquiry general chemistry course. J Chem Educ 76: 570, 1999. [Google Scholar]

- 14.Fuchs LS, Fuchs D, Karns K, Hamlett CL, Katzaroff M, Dutka S. Effects of task-focused goals on low-achieving students with and without learning disabilities. Am Educ Res J 34: 513–543, 1997. [Google Scholar]

- 15.Gabriele AJ, Montecinos C. Collaborating with a skilled peer: the influence of achievement goals and perceptions of partners' competence on the participation and learning of low-achieving students. J Exp Educ 69: 152–178, 2001. [Google Scholar]

- 16.Hidi S, Renninger KA. The four-phase model of interest development. Educ Psychol 41: 111–127, 2006. [Google Scholar]

- 17.Hmelo-Silver CE. Problem-based learning: what and how do students learn? Educ Psychol Rev 16: 235–266, 2004. [Google Scholar]

- 18.Hmelo-Silver CE, Duncan RG, Chinn CA. Scaffolding and achievement in problem-based and inquiry learning: a response to Kirschner, Sweller, and Clark. Educ Psychol 42: 99–107, 2007. [Google Scholar]

- 19.Hmelo CE. Problem-based learning: effects on the early acquisition of cognitive skill in medicine. J Learn Sci 7: 173–208, 1998. [Google Scholar]

- 19a.Hmelo CE, Evensen DH. Introduction to problem-based learning: gaining insights on learning interactions through multiple methods of inquiry. In: Problem-Based Learning: a Research Perspective on Learning Interactions, edited by Evensen DH, Hmelo CE. Mahwah, NJ: Erlbaum, 2000, p. 1–16. [Google Scholar]

- 20.Hmelo CE, Lin X. Becoming self-directed learners: strategy development in problem-based learning. 2000.

- 21.Kirschner PA, Sweller J, Clark RE. Why minimal guidance during instruction does not work: an analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ Psychol 41: 75–86, 2006.

- 22.Koh GC, Khoo HE, Wong ML, Koh D. The effects of problem-based learning during medical school on physician competency: a systematic review. Can Med Assoc J 178: 34–41, 2008.

- 23.Kuhn D, Black J, Keselman A, Kaplan D. The development of cognitive skills to support inquiry learning. Cognit Instruct 18: 495–523, 2000.

- 24.Leppink J, Broers NJ, Imbos T, van der Vleuten CP, Berger MP. The effect of guidance in problem-based learning of statistics. J Exp Educ 82: 391–407, 2014.

- 25.Loyens SM, Magda J, Rikers RJ. Self-directed learning in problem-based learning and its relationships with self-regulated learning. Educ Psychol Rev 20: 411–427, 2008.

- 27.Lumpe AT, Staver JR. Peer collaboration and concept development: learning about photosynthesis. J Res Sci Teach 32: 71–98, 1995.

- 28.Maudsley G. Roles and responsibilities of the problem based learning tutor in the undergraduate medical curriculum. Br Med J 318: 657–661, 1999.

- 29.Mayo WP, Donnelly MB, Schwartz RW. Characteristics of the ideal problem-based learning tutor in clinical medicine. Eval Health Profess 18: 124–136, 1995.

- 30.McParland M, Noble LM, Livingston G. The effectiveness of problem-based learning compared to traditional teaching in undergraduate psychiatry. Med Educ 38: 859–867, 2004.

- 31.Mergendoller JR, Maxwell NL, Bellisimo Y. The effectiveness of problem-based instruction: a comparative study of instructional methods and student characteristics. Interdiscipl J Problem Based Learn 1: 5, 2006.

- 32.Minner DD, Levy AJ, Century J. Inquiry-based science instruction–what is it and does it matter? Results from a research synthesis years 1984 to 2002. J Res Sci Teach 47: 474–496, 2010.

- 33.Pea RD. Practices of distributed intelligence and designs for education. In: Distributed Cognitions: Psychological and Educational Considerations. New York: Cambridge Univ. Press, 1993, p. 47–87.

- 34.Rotgans JI, Schmidt HG. Situational interest and academic achievement in the active-learning classroom. Learn Instruct 21: 58–67, 2011.

- 35.Ruban L, Reis SM. Patterns of self-regulatory strategy use among low-achieving and high-achieving university students. Roeper Rev 28: 148–156, 2006.

- 36.Salomon G. No distribution without individuals' cognition: a dynamic interactional view. In: Distributed Cognitions: Psychological and Educational Considerations, edited by Salomon G, Perkins D. New York: Cambridge Univ. Press, 1993, p. 111–138.

- 37.Schmidt HG. Problem-based learning: rationale and description. Med Educ 17: 11–16, 1983.

- 38.Schmidt HG, Loyens SM, Van Gog T, Paas F. Problem-based learning is compatible with human cognitive architecture: commentary on Kirschner, Sweller, and Clark. Educ Psychol 42: 91–97, 2007.

- 39.Schmidt HG, Rotgans JI, Yew EH. The process of problem-based learning: what works and why. Med Educ 45: 792–806, 2011.

- 40.Schmidt HG, Van der Molen HT, Te Winkel WW, Wijnen WH. Constructivist, problem-based learning does work: a meta-analysis of curricular comparisons involving a single medical school. Educ Psychol 44: 227–249, 2009.

- 41.Şendağ S, Odabaşı HF. Effects of an online problem based learning course on content knowledge acquisition and critical thinking skills. Comput Educ 53: 132–141, 2009.

- 42.Spencer JN. New directions in teaching chemistry: a philosophical and pedagogical basis. J Chem Educ 76: 566, 1999.

- 43.Springer L, Stanne ME, Donovan SS. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: a meta-analysis. Rev Educ Res 69: 21–51, 1999.

- 44.Strobel J, van Barneveld A. When is PBL more effective? A meta-synthesis of meta-analyses comparing PBL to conventional classrooms. Interdiscipl J Problem Based Learn 3: 4, 2009.

- 45.Sungur S, Tekkaya C. Effects of problem-based learning and traditional instruction on self-regulated learning. J Educ Res 99: 307–320, 2006.

- 46.Vernon DT, Blake RL. Does problem-based learning work? A meta-analysis of evaluative research. Acad Med 68: 550–563, 1993.

- 47.Wilkerson L, Gijselaers WH. Bringing Problem-Based Learning to Higher Education: Theory and Practice. San Francisco, CA: Jossey-Bass, 1996.

- 48.Woods DR. Problem-Based Learning: How to Gain the Most From PBL. Waterdown, ON, Canada: DR Woods, 1994.