Abstract

The promise of compressive sensing, exploitation of compressibility to achieve high quality image reconstructions with less data, has attracted a great deal of attention in the medical imaging community. At the Compressed Sensing Incubator meeting held in April 2014 at OSA Headquarters in Washington, DC, presentations were given summarizing some of the research efforts ongoing in compressive sensing for x-ray computed tomography and magnetic resonance imaging systems. This article provides an expanded version of these presentations. Sparsity-exploiting reconstruction algorithms that have gained popularity in the medical imaging community are studied, and examples of clinical applications that could benefit from compressive sensing ideas are provided. The current and potential future impact of compressive sensing on the medical imaging field is discussed.

1. Introduction

Imaging is vital to many aspects of medicine, including detection and diagnosis of disease, treatment planning, and monitoring of response to therapy. Given the complexity of the human body and diversity of possible disease states, numerous radiological imaging modalities and contrast mechanisms have come into clinical use. Two of the most widely used modalities are x-ray computed tomography (CT) and magnetic resonance (MR) imaging. The engineering and physics of the image formation process vary greatly between these modalities, but they share several often conflicting system design goals:

• Image Quality—The purpose of medical imaging is to inform clinical decisions. To achieve this purpose, imaging systems are designed to maximize image quality. The quality of a medical imaging system is in fact best defined by how well clinical tasks are performed using the imaging system. Thus, image quality metrics should reflect task performance, the purpose of medical imaging.

• Patient Safety—Clinical imaging is performed to aid the patient; however, no modality is completely without risk. X-ray CT employs ionizing radiation, exposure to which may lead to increased risk for development of certain types of cancer [1]; therefore, it is desirable to minimize the radiation dose to patients. Even the nonionizing radiation used in MRI generates heat within the body which could cause thermal injury in some situations [2]. Minimizing the risk to patients is important, though reduction in radiation dose may result in unacceptable reduction in image quality.

• Speed—Imaging faster has several advantages. Patients are not static objects and motion during the image formation process can create artifacts which reduce image quality. Increasing imaging speed inherently reduces the severity of these artifacts by reducing the opportunity for patient motion. Faster imaging can increase patient comfort and lead to greater patient throughput, making more efficient use of often expensive imaging hardware. Speed is also important for dynamic imaging, where multiple images must be acquired in rapid succession to characterize a physiological process or the dynamics of a time-varying contrast agent. The ability to image fast is limited however by the need to acquire sufficient data to achieve adequate image quality.

Compressive sensing (CS) has attracted much interest in the imaging community because of the potential to obtain high quality images from sparse data sampling acquisitions. This can potentially help achieve the aforementioned design goals. If the prior knowledge that medical images are compressible, if not truly sparse, is properly exploited, it may lead to faster, safer image acquisitions that do not sacrifice image quality; however, due to the excitement and rapid growth in publications on what is termed “compressive sensing,” the usual painstaking, careful approach to scientific research has to some extent been short-circuited. In particular, much relevant work in the literature prior to the CS big bang has largely been overlooked or forgotten. This is partly due to the fact that CS can apply to any area of image science, which has a vast body of literature, and many of the ideas recycled in recent years under the CS banner have previously appeared in application-specific journals. When reading the literature or attending conferences it becomes clear that the term CS is ill-defined, with different authors taking it to mean different things. Even the term CS itself is complicated due to the issue of prior work.

Accordingly, we start off by defining terms for this review:

• Iterative image reconstruction (IIR)—a generic term for image reconstruction that involves multiple updates of an image estimate guided by data discrepancy between available and estimated data and possibly some form of prior knowledge of the underlying object function.

• Sparsity-exploiting image reconstruction (SEIR)—IIR where exploiting some form of object sparsity is an important component.

• Compressive sensing (CS)—SEIR specifically intended for improved image quality with a scan design involving reduced sampling.

• Compressive sensing guarantee (CSG)—CS together with either a mathematical proof or empirical study yielding evidence for accurate image recovery under ideal conditions. CSGs also include investigations of robustness of image quality against inconsistency of the imaging model or data noise.

It is the last term, CSG, which we use to refer to what is novel in the body of work stemming from the seminal papers by Candès, Donoho, Tao, and Romberg [3-5]. While there may be work on CSGs in the applied mathematics and statistics literature that predates these papers, it is really these articles that communicated CSG concepts to the medical imaging community [6]. We emphasize that even though CS is a new term, using the definitions provided here, investigation of SEIR and CS has been occurring for decades. Nevertheless, the volume of recent work is a clear indication that the research community believes there is indeed something new and important provided by CSGs. Whether CSGs will create a fundamental paradigm shift across imaging technologies is, we believe, still an open question.

In this review we address the issue of work in SEIR/CS prior to CSGs, and the relevance of CSGs to medical imaging technology, specifically x-ray CT and MRI. We begin with motivations for CS in medical imaging in Section 2. This is followed by a brief summary of MRI and x-ray CT and image reconstruction development prior to CSGs in Section 3. Sections 4 and 5 contain overviews of CS in CT and MRI, respectively. Section 6 summarizes the literature on related real data studies in CT. Section 7 provides an illustrative example of how CS can be utilized clinically in MRI. Finally, in Section 8 we discuss in detail our views on what CS is and what its contribution to medical imaging has been, and possibly will be in the future.

2. Why is CS of Interest for Medical Imaging?

Medical imaging data, like most imaging data, have an associated cost. The desire to minimize this cost naturally generates interest in CS. Medical imaging also has several inherent advantages that promote use of CS. The radiation source is under user control and thus can be modified to create a system more amenable to CS reconstruction. Additionally, medical imaging raw data are indirect in nature. As described below, x-ray CT and MRI systems have complex physical models and data acquisitions that are naturally described as projection operators, similar to those envisioned in CS theory. Contrast this situation to standard optical imaging with ambient light, where hardware such as the single-pixel camera [7] needed to be developed to fit CS concepts. Here we describe practical aspects of MRI and CT that motivate adoption of CS.

A. MRI

A primary motivation for development of CS in MRI has been the desire to reduce the amount of data required to create an image. This is important because the time cost of advanced MR image acquisition techniques has been a barrier to their use in routine clinical practice. The time required to acquire an MR data set g is roughly proportional to its dimensionality, the number of spatial frequencies measured; if less data are required, there will be a corresponding reduction in scan time.

Two of the most important classes of MR imaging techniques that benefit from a reduction in imaging time are dynamic and quantitative imaging. Dynamic imaging refers to the acquisition of a series of data sets at multiple time points, $\{g_{t=t_0}, g_{t=t_1}, \ldots\}$, to observe a dynamic physiological process. In many applications, for example imaging the cardiac cycle, the required interval between time points is a fraction of a second. This constrains the amount of data that can be acquired per time point and limits image quality, necessitating either low spatial resolution imaging or acquisition of data across multiple cardiac cycles, which can introduce image artifacts due to patient motion or inaccurate cardiac triggering. Quantitative MRI is defined as the measurement of MR tissue properties. Standard MR images have contrast that reflects these parameter values, but obtaining estimates of the parameters themselves requires multiple standard MR images. This leads to time constraints analogous to those of dynamic imaging and motivates the development of methods to reduce scan time such as CS.

B. CT

For x-ray tomographic imaging, CS is of interest due to the possibilities of x-ray dose reduction, motion artifact mitigation, and novel scan designs. The typical diagnostic CT scan subjects a patient to an x-ray dose roughly one hundred times greater than that of a single projection x-ray. Reduction of sampling requirements is one obvious way to reduce the dose burden of CT imaging. Acquiring fewer views than current practice may also allow for faster acquisitions, particularly for C-arm imagers with flat-panel x-ray detectors. Faster acquisition times can alleviate imaging problems related to motion due to, e.g., breathing. One of the fast-growing applications of x-ray tomographic imaging is guidance in radiation therapy or surgery. In such applications, the standard tomographic scan, where the x-ray source executes a complete data arc greater than 180 degrees, may not be possible. Limiting the angular range of the x-ray source scanning arc is thus another important form of data undersampling.

C. CS: Disruptive Technology?

In adapting CS to medical imaging it is important to realize that MRI and CT are fairly mature technologies. As such, it is unrealistic to expect immediate disruptive technological change in MRI or CT, even when CS is augmented by guarantees of accurate image recovery. The reason for this is simple: CSGs relevant to CT or MRI consider exact image reconstruction of sparsely sampled data generated by idealized imaging systems, but mathematically exact reconstruction does not necessarily translate to utility of a real-world medical imaging device. For example, x-ray projection imaging in clinical practice is far more commonly used than x-ray CT and has a high utility, but from a tomographic perspective the standard planar x-ray represents the ultimate in projection view angle under-sampling—one sample! Because utility is ultimately the important image quality measure, many innovations in limited data sampling have already occurred without advanced image reconstruction techniques; the image artifacts due to approximate or nonoptimal image reconstruction may not be severe enough to interfere with the clinical task of interest.

Nevertheless CS, or CSG investigations, can lead to important advances in medical imaging. Incremental improvements in image quality or device robustness can have a large impact because the clinical application is vital to patient health and management. For example, in cancer screening a small increase in sensitivity of an imaging device without increasing false detections can possibly save hundreds of lives.

The distinction of CS as a possible disruptive technology for medical imaging versus the more realistic view that it can provide incremental, yet important, technological advancement is important to keep in mind. Proposed radical alterations in imaging system hardware design based on recent CSG results give the appearance of a paradigm shift, though these changes in design may not necessarily result in significant improvements in clinical task performance. This utility-based viewpoint places a great emphasis on the need for task-based evaluation of CS image reconstruction algorithms.

3. MRI and CT Background

In order to understand possible roles for CS concepts in medical imaging it is important to have exposure to current clinical devices and advancements to such devices, which are currently in the research domain.

A. Clinical MRI

The physical phenomenon underlying MR imaging is the magnetic resonance effect. Atomic nuclei possessing nonzero spin exhibit resonance in an applied magnetic field, absorbing and emitting electromagnetic radiation at a resonant frequency linearly related to the magnetic field strength. This resonant Larmor frequency is given by

$$ f = \gamma B \tag{1} $$

where f is the Larmor frequency, γ is the nuclei-specific gyromagnetic ratio, and B is the magnetic field strength. Energy emitted by resonating hydrogen nuclei in water and more complex molecular structures within the body is typically what is detected by an MR medical imaging system. Water hydrogen nuclei resonate in the radio frequency range, at f ≈ 128 MHz at a typical magnetic field strength of 3 Tesla, and thus do not produce ionizing radiation.
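As a quick numerical check of the quoted 128 MHz figure, the sketch below evaluates the linear Larmor relation at 3 Tesla. It assumes the relation takes the form f = γB with γ expressed in MHz per Tesla (that is, already divided by 2π). The function name and the constant are illustrative: the numerical value of the hydrogen gyromagnetic ratio is a standard physical constant and is not taken from this article.

```python
# Hydrogen (proton) gyromagnetic ratio divided by 2*pi, in MHz per tesla.
# Standard physical constant; the value is not quoted in the article itself.
GAMMA_H_MHZ_PER_T = 42.577


def larmor_frequency_mhz(b_tesla, gamma_mhz_per_t=GAMMA_H_MHZ_PER_T):
    """Larmor frequency in MHz, assuming the linear relation f = gamma * B."""
    return gamma_mhz_per_t * b_tesla


f_3t = larmor_frequency_mhz(3.0)
print(f"Larmor frequency at 3 T: {f_3t:.1f} MHz")
```

The result, about 127.7 MHz, agrees with the rounded value given in the text and lies well within the radio frequency range.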

To create an image of the hydrogen nuclei, RF energy is applied at the resonance frequency. Spatial variation in the magnetic field is introduced through magnetic field gradients which encode location via the resulting Larmor frequency variation and phase variation of the resonant spins. In most MR imaging the phase variation is designed to mimic a Fourier basis function. The hydrogen spins subsequently emit location-encoded RF radiation which is detected by the MR device. During resonance, spins are subject to multiple effects including diffusion and signal relaxation related to the local chemical environment. These effects are taken advantage of to produce clinical images of varying contrast. Some of the most common classes of images include the following:

Anatomical imaging

This is the most common clinical scan. Two-dimensional or volumetric 3D images of the patient are produced for a variety of diagnostic tasks. MR typically provides excellent soft tissue contrast which can be optimized for a particular clinical task through choice of timing parameters and the way in which magnetic field gradients and RF pulses are applied. Image contrast is due to differences in proton density or relaxation times T1, which characterizes the rate at which excited spins return to thermodynamic equilibrium, and T2, which characterizes the rate of spin dephasing due to magnetic field variations generated by the local chemical environment. These scans are relatively routine and not time consuming, taking up to a minute or two, which is slower than a typical x-ray CT exam but not slow enough to cause significant problems due to patient motion in most situations. This lessens the need for reduced sampling methods such as CS for anatomical imaging; however, increasing imaging speed without paying a penalty in reduced image quality is always of some benefit.

Dynamic imaging

Often it is important to image a time-varying biological process with MRI. A prime example of this is the cardiac cycle. Cine MR provides valuable diagnostic information about heart function. To achieve high resolution images at each time point within the cardiac cycle, data from multiple heart beats are combined to create a moving image of the heart. For this dynamic technique imaging speed is vital to reduce the need for combining data across cardiac cycles and to mitigate artifacts due to respiratory and bulk patient motion. This suggests a potential benefit from exploiting CS. Other applications such as 4D angiography, and dynamic contrast enhanced imaging to assess potentially cancerous lesions based on contrast agent uptake have similar time constraints and suffer from the classical spatial/temporal resolution trade-off. It is reasonable to assume these applications would also derive some benefit from CS and associated CSGs.

Perfusion

Blood flow into tissue is an important physiological indicator of tissue function. Blood perfusion can be quantified using MRI, either through use of an injected contrast agent or endogenous contrast created via arterial spin labeling techniques. Quantifying perfusion requires multiple image acquisitions, which can result in motion artifacts. Myocardial perfusion studies are the most susceptible to motion-related problems and benefit from accelerated imaging techniques.

Diffusion

Several techniques exist for characterizing the rate and direction of water diffusion within the body, which is useful for diagnosing neurological disorders such as stroke. These images characterize the overall diffusion out of each voxel and do not require extensive scan time. This suggests current clinical practice would not be greatly improved via adoption of CS because there is time to acquire all the necessary data. More advanced techniques that measure the preferential direction of diffusion have, however, attracted a great deal of attention. By analyzing the direction of diffusion at each point within the brain, white matter fiber tracts can be traced to discover connections within the brain. This is known as diffusion tractography and is an important tool for neuroscience. A limitation is that multiple diffusion directions must be analyzed, which can lead to very long scan times. Long scans are challenging for healthy subjects and are a particular problem for patients with disease. If data requirements can be reduced, perhaps through CS, this important technique could see broader use.

Quantitative MRI

Standard anatomical MR images have contrast that reflects differences in MR parameters such as proton density or relaxation times T1 and T2. It is also possible to directly measure these parameters. Examples include quantitative estimates of fat content, which are useful in diagnosis of fatty liver disease and certain heart diseases [8] and pharmaco-kinetic modeling, which is useful for assessment of tumor vascularization and response to therapy [9]. These types of quantitative measurements typically require multiple images over a period of time. This again leads to the problem of spatial/temporal resolution trade-off and motion artifacts. CSGs could have a valuable role to play in these sorts of applications.

Functional MRI

This technique measures brain activity and has become ubiquitous in neuroscience research. The primary mechanism for activity detection is the blood oxygen level dependent (BOLD) response. When neurons activate, they require an additional store of energy which is supplied by an increase in oxygenated blood flow. This is detectable as a localized change in contrast (related to the aforementioned T2). To measure the BOLD response and its connection to a thought or external stimulus, a series of images is required. As in other methods, CS could possibly permit more frequent image acquisitions or higher spatial resolution with the same time resolution, improving the ability to localize and track brain activity.

B. MR Image Reconstruction

All of the MR techniques discussed above require an image reconstruction step to recover interpretable images from raw MR data. The discrete inverse Fourier transform is the traditional way MR data are reconstructed. A typical example of this is a spin-echo image acquisition which produces anatomical images with contrast primarily determined by the spin-spin relaxation time T2. A raw spin-echo data vector g has elements that can be modeled as an integral over position r across the field of view,

$$ g_j = \int f(\mathbf{r})\, e^{-2\pi i\, \mathbf{k}_j \cdot \mathbf{r}}\, d\mathbf{r} + n_j \tag{2} $$

where

$$ f(\mathbf{r}) = C(\mathbf{r})\, \rho(\mathbf{r})\, e^{-TE/T_2(\mathbf{r})} \tag{3} $$

is the hydrogen proton density ρ modulated by C, a complex-valued RF receive coil sensitivity function, TE is the time of spin-echo formation relative to the initial RF excitation, $T_2$ is the tissue-dependent spin-spin relaxation time, $\mathbf{k}_j$ is a particular Fourier frequency, and n is Gaussian-distributed system noise. Fourier frequencies are measured on a Cartesian grid, after which the data g are passed through an inverse discrete Fourier transform $F^{-1}$ to produce the final image h,

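$$ h = F^{-1} g \tag{4} $$

which implies the image pixel values are roughly governed by the form of the signal equation,

$$ h(\mathbf{r}) \approx C(\mathbf{r})\, \rho(\mathbf{r})\, e^{-TE/T_2(\mathbf{r})} \tag{5} $$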

ignoring the fact that the quantity on the right is continuously defined, while the image on the left is discrete. The TE time is chosen to obtain the desired T2 contrast. If the goal is to estimate T2, then images for multiple TE times would be required.
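The reconstruction chain described above, followed by T2 estimation from several echo times, can be illustrated with a minimal sketch. It assumes noise-free, fully sampled Cartesian data, a coil sensitivity of C = 1, and a mono-exponential T2 decay. The phantom, the echo times, and all function names are hypothetical and chosen only for illustration; the log-linear fit is one simple estimator among many and is not claimed to be the method used in the studies reviewed here.

```python
import numpy as np


def simulate_spin_echo_kspace(rho, t2, te):
    """Noise-free Cartesian k-space data for one echo time (coil sensitivity C = 1)."""
    weighted_density = rho * np.exp(-te / t2)   # proton density weighted by T2 decay
    return np.fft.fft2(weighted_density)        # fully sampled Fourier data g


def reconstruct(g):
    """Image h obtained by applying the inverse discrete Fourier transform to g."""
    return np.fft.ifft2(g)


def estimate_t2(images, echo_times):
    """Pixel-wise log-linear fit of |h| = A * exp(-TE / T2) over several TE values."""
    te = np.asarray(echo_times, dtype=float)
    log_signal = np.log(np.abs(np.stack(images))).reshape(len(te), -1)
    design = np.column_stack([np.ones_like(te), -te])   # unknowns: log(A) and 1/T2
    coef, *_ = np.linalg.lstsq(design, log_signal, rcond=None)
    return (1.0 / coef[1]).reshape(images[0].shape)


# Hypothetical 2D phantom with two tissues of different T2 (milliseconds).
rho = np.ones((16, 16))
t2_true = np.full((16, 16), 80.0)
t2_true[4:12, 4:12] = 40.0
echo_times = [10.0, 30.0, 60.0]

images = [reconstruct(simulate_spin_echo_kspace(rho, t2_true, te)) for te in echo_times]
t2_est = estimate_t2(images, echo_times)
```

Each additional echo time requires another fully sampled acquisition in this sketch, which is exactly the scan-time burden that motivates reduced sampling for quantitative MRI.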

All of the applications described above existed before the introduction of CSGs, and there is a long history of reconstruction techniques in MR that attempt to alleviate the need for a fully-sampled Cartesian grid of data. In dynamic imaging, keyhole techniques, which collect low spatial frequencies with high temporal resolution and mix data from multiple times in the high spatial frequencies, have been proposed [10]. A variation on the keyhole technique is compared with a CS approach for quantitative imaging in a later section (Section 7.A). Hermitian symmetry of Fourier domain representations of real-valued functions has been exploited to reduce data requirements [11]. Constrained IIR also has a history in MR before the recent interest in ℓ1 regularization [12]. The greatest effort in terms of non-CS data reduction has gone into parallel imaging. Data are measured from multiple receive coils in parallel, and through proper design of their RF sensitivity profiles, unmeasured Fourier frequencies can be synthesized either in the image domain [13] or Fourier domain [14]. Parallel imaging in particular is complementary to CS as demonstrated by reconstruction methods such as ℓ1-SPIRiT [15].
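The Hermitian symmetry idea mentioned above can be sketched in a few lines. The example assumes an exactly real-valued object and noise-free data, so that each unmeasured Fourier sample equals the complex conjugate of its mirrored partner. Practical MR images carry phase from the coil sensitivity and other sources, so real partial Fourier methods need additional phase correction that is not shown here. The function names and the random test object are hypothetical.

```python
import numpy as np


def conjugate_mirror(g):
    """Return m with m[k1, k2] = conj(g[-k1 mod N1, -k2 mod N2])."""
    return np.conj(np.roll(np.flip(g, axis=(0, 1)), shift=1, axis=(0, 1)))


def fill_by_hermitian_symmetry(g_partial, measured):
    """Synthesize unmeasured frequencies from their measured conjugate partners."""
    return np.where(measured, g_partial, conjugate_mirror(g_partial))


rng = np.random.default_rng(0)
image = rng.random((8, 8))                  # hypothetical real-valued object
g_full = np.fft.fft2(image)

measured = np.zeros((8, 8), dtype=bool)
measured[:5, :] = True                      # acquire rows 0..4 of 8 (just over half)
g_partial = np.where(measured, g_full, 0.0)

g_filled = fill_by_hermitian_symmetry(g_partial, measured)
recon = np.fft.ifft2(g_filled).real
```

In this idealized setting, 40 of the 64 Fourier samples are sufficient to reproduce the object, with no sparsity assumption involved.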

C. State-of-the-Art for X-Ray Tomographic Imaging in the Clinic

Diagnostic CT

The diagnostic CT scanner [16], see Fig. 1, is nothing short of a marvel of modern technology, and a positive example of free market forces driving innovation. All of the main CT scanner manufacturers have strong research and development teams, who follow and contribute to imaging science research in addition to their in-house duties. CT devices are realizations of a gigapixel camera in routine clinical use. While the typical modern diagnostic CT scanner provides volumes consisting of hundreds of 512 × 512 slice images with submillimeter resolution, micro CT scanners can yield volumes as large as 2000³ voxels. For cardiac CT imaging, an application that has driven much of the technological advancement, the volume images can be obtained with a temporal resolution down to 100 milliseconds. The speed and resolution of CT imaging make it an indispensable tool for cardiac imaging and assessing stroke. It is used routinely for diagnosing diverse medical conditions of all internal organs, and it is even being considered for use as a screening tool for lung cancer. Further research aims broadly to improve clinical utility without increasing the dose of the scan, or to maintain clinical utility while reducing radiation exposure to the patient.

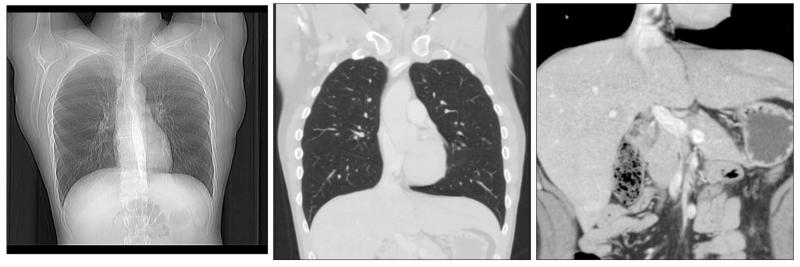

Fig. 1.

(Left) An x-ray projection image of the chest. (Middle) a CT slice image of the same patient, cutting vertically through the heart and lungs; the gray scale window is [−1200 HU, 400 HU]. (Right) a zoom and pan of the same CT slice image showing the patient’s lower abdomen [−150 HU, 200 HU]. The gray scale units are in the Hounsfield (HU) scale, where −1000 HU and 0 HU correspond to air and water, respectively. The CT lung image, displayed in a wide gray scale window, faithfully represents the structures in this slice without interference from overlapping lung tissue or ribs, seen in the x-ray projection. Such a representation is needed to view subtle lesions in the lung. Note that the image of the heart is sharp—an indication of the high temporal resolution of diagnostic CT. The CT abdomen image, displayed in a narrow gray scale window, shows the ability of CT to resolve low-contrast structures with high spatial resolution. Images courtesy of Alexandra Cunliffe, Ph.D.

C-arm CT

A fast-growing area of x-ray tomography is the use of C-arm devices [17-19], where an x-ray source and flat-panel imager are mounted on two ends of a C-shaped gantry. For tomography, the C-arm devices can be manipulated to obtain x-ray projections from a variety of scanning trajectories. Such devices do not have the speed, resolution, and precision of diagnostic CT, but they are quite flexible and can be incorporated with radiation therapy gantries or brought into surgical environments to provide tomographic guidance for positioning or treatment targeting. For these devices there are many opportunities for developing novel image reconstruction algorithms, because the applications are more varied than diagnostic CT and the scanning conditions are not as well controlled. The trajectory of the C-arm scan can be perturbed by vibration and sag due to gravity. Scanning arcs may be limited by mechanical constraints. Scanning times are also much longer than those of diagnostic CT, and consequently projection data focused on regions of interest near the lungs are subject to inconsistency due to motion. As with diagnostic CT, there is also effort to reduce the dose of C-arm CT scanning protocols.

Tomosynthesis

Another interesting area of x-ray tomography uses very limited sampling to gain partial tomographic data. In tomosynthesis [20], the scanning arc is typically 50 degrees or less and with such a limited arc the x-ray detector can be stationary. Over this arc, 10–50 projections are taken, thus even within the limited scanning arc the view-angle sampling can be quite sparse. The projection data is far too limited to provide accurate tomographic image reconstruction, and the resolution is quite anisotropic as a result. An approximate volume can be generated by some modification of standard image reconstruction techniques. The “in-plane” resolution, i.e., planes parallel to the detector, is approximately a factor of 10 better than the depth resolution, the direction perpendicular to the detector. The in-plane slices are the ones presented to the observer, and compared with a radiograph of similar orientation the tomosynthesis slices have some ability to separate overlapping tissue structures. The primary clinical applications of tomosynthesis are for imaging the chest and the breast. Digital breast tomosynthesis (DBT) recently transitioned from a research device to one approved for clinical use by the FDA. It is now rapidly gaining use for breast cancer screening as the dose of DBT is on the order of that of full-field digital mammography. DBT has the potential to improve sensitivity by removing overlapping structures that may hide a malignant mass and to increase specificity by reducing tissue superpositions that may mimic a suspicious mass.

This device is one good example to demonstrate why CSGs may not lead to disruptive technology for x-ray based medical imaging. Tomosynthesis acquires fewer data than even what currently known CSGs require for accurate tomographic image reconstruction. Yet, with standard image reconstruction theory and the limited tomosynthesis data sets, useful tomographic volumes are routinely generated and used in the clinic. Again, mathematical exactness is not as relevant as device utility when it comes to applications; the artifacts due to the limited sampling do not hinder performance of the imaging task. Nevertheless, preliminary research suggests that SEIR algorithms may overcome some of the DBT artifacts due to the sparse view-angle sampling along the limited scanning arc.

D. X-Ray Tomographic Imaging Research

Despite its widespread use, research in x-ray tomography continues to increase as this technology continues to find more applications. To appreciate the role CSGs may play in this research effort we provide a short summary of past research in image reconstruction pertaining to CS and of current research efforts in x-ray CT hardware where CS may have impact.

Imaging model

A brief background on x-ray imaging is needed to appreciate the research efforts in this area (see Refs. [16,21] for more information). The physical quantity being imaged is the spatially varying x-ray linear attenuation coefficient, μ(r), where the vector r indicates a location in the subject. This quantity is interrogated by illuminating the subject with x-rays of intensity I0 and measuring the transmitted intensity I. Under many simplifying assumptions—monochromatic x-ray source, no x-ray scatter, point-like source and detector, no noise—the transmitted intensity follows the Beer–Lambert law

$$ I = I_0 \exp\left( -\int_{\ell} \mu[\mathbf{r}(t)]\, dt \right) \tag{6} $$

where the integration variable t indicates a point along the ray ℓ. To form the image, the measured intensity is processed to find an estimate of the line integral over μ(r),

$$ g[\mathbf{r}_0(\lambda), \hat{\theta}] = -\ln\left( \frac{I}{I_0} \right) = \int_{\ell} \mu[\mathbf{r}(t)]\, dt \tag{7} $$

where g is the projection data, also known as the sinogram, as a function of x-ray source location $\mathbf{r}_0(\lambda)$ and ray direction $\hat{\theta}$. The x-ray source during a CT scan moves along a one-dimensional curve such as a circle or a helix, and the parameter λ indicates location along this curve. The quantities ℓ, $I_0$, and I all depend on $\mathbf{r}_0$, λ, and $\hat{\theta}$, but these dependencies are suppressed in Eq. (7) for clarity.
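The conversion from measured intensity to a line integral can be checked numerically with a short sketch. It assumes the idealized Beer-Lambert conditions listed above (monochromatic source, no scatter, no noise) and approximates the integral along one ray by a Riemann sum. The attenuation value, path length, incident intensity, and function names are illustrative assumptions, not values from this article.

```python
import numpy as np


def transmitted_intensity(mu_along_ray, dt, i0):
    """Beer-Lambert law: I = I0 * exp(-integral of mu along the ray)."""
    line_integral = np.sum(mu_along_ray) * dt   # Riemann-sum approximation
    return i0 * np.exp(-line_integral)


def projection_value(i, i0):
    """Log-processing of measured intensity into a line-integral estimate g."""
    return -np.log(i / i0)


# Hypothetical ray through 200 mm of uniform material with mu = 0.02 per mm.
mu_along_ray = np.full(200, 0.02)
dt = 1.0                                        # sample spacing along the ray, in mm
i0 = 1.0e5                                      # incident intensity (arbitrary units)

i = transmitted_intensity(mu_along_ray, dt, i0)
g = projection_value(i, i0)
print(f"transmitted fraction = {i / i0:.4f}, line integral g = {g:.3f}")
```

In this example only about 1.8% of the incident intensity reaches the detector, which illustrates why lowering I0 to reduce dose quickly makes the log-processed data noisy in real systems.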

The goal of image reconstruction is to recover μ(r) from g. Mathematically, g is referred to as the divergent ray or x-ray transform. In 2D and 3D it is also referred to as the fan-beam and cone-beam transforms, respectively. In 2D, the most common acquisition involves a full circular orbit of the x-ray source acquiring untruncated fan-beam projections of a slice of the subject. From such data the image can be reconstructed using fan-beam filtered back projection (FBP). In 3D, image reconstruction theory is more complicated. In practice, circular and helical scans are most common. For the circular scan the projection data are not sufficient for accurate inversion of Eq. (7), but there is an approximate algorithm developed by Feldkamp, Davis, and Kress (FDK) [22], which generalizes fan-beam FBP to a cone-beam image reconstruction algorithm. The helical scan provides sufficient data for inversion of Eq. (7), and in the 1990s and early 2000s much research was devoted to developing analytic algorithms for this scanning configuration. In actual clinical scanners, the image reconstruction algorithms use the approximations involved in the FDK algorithm.

Of particular interest for CS, g is sampled at a finite number of projection views using x-ray detectors with a finite number of pixels. For analytic image reconstruction algorithms the data are interpolated, but the interpolation can be avoided using iterative image reconstruction.

Software research

In recent years, research on new image reconstruction algorithms for x-ray CT has shifted focus from analytic methods to iterative image reconstruction [23], which aims to solve the imaging model implicitly. The latter type of algorithm has a much higher computational load, and one of the factors that has led to the shift in research is the steady rapid increase in computational power. Most notably, the advent of graphics processing units (GPUs) [24] has provided a huge boost to research on iterative image reconstruction because computational times for the most time-consuming operations of projection and back-projection can be reduced down to the order of seconds for 3D systems of realistic size.

IIR is a large topic even when confining attention only to x-ray CT [23,25]. Accordingly, we present this topic narrowly highlighting methods related to CS. The most basic IIR algorithms are derived by discretizing the model in Eq. (7), yielding the linear system

| $g = X h$ | (8) |

where h is a vector of n image expansion coefficients [modeling μ(r) from Eq. (7)], such as pixel values, and X is the system matrix that directly yields the projection data g when applied to h. When modeling CT, this linear system is extremely large; as a result, all approaches to solving it involve some form of iterative algorithm, and these algorithms employ operations no more computationally intensive than matrix-vector products. Consequently, algorithms are restricted to first-order or approximate second-order methods.

Initial efforts for IIR in CT took an algebraic approach. The linear system in Eq. (8) is dealt with by sequentially projecting onto the hyperplanes defined by each row of the system matrix and the corresponding measurement g_j. This approach, termed the Algebraic Reconstruction Technique (ART), is a reinvention of the Kaczmarz algorithm [26-28]. One of the interesting aspects of ART, concerning CS, is that there is some advantage to randomizing the access order of the data index j [29], a topic that has received renewed attention and interesting analysis in recent years [30]. We point out that the use of randomness in the data access pattern is different from the random sampling proposed as a result of CSGs [31].
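The sequential hyperplane projection described above can be sketched in a few lines. This is a minimal illustration, assuming a small dense, consistent toy system rather than a CT system matrix; the function name `kaczmarz` and its parameters are hypothetical and are not taken from Refs. [26-30].

```python
import numpy as np

def kaczmarz(X, g, n_sweeps=200, randomize=True, seed=0):
    """ART / Kaczmarz: project h onto the hyperplanes X[j] . h = g[j] one row at a time."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    h = np.zeros(n)
    row_norms = np.einsum("ij,ij->i", X, X)  # squared Euclidean norm of each row
    for _ in range(n_sweeps):
        # One sweep visits every measurement once, in random or sequential order.
        order = rng.permutation(m) if randomize else np.arange(m)
        for j in order:
            if row_norms[j] == 0.0:
                continue  # a zero row defines no hyperplane
            residual = g[j] - X[j] @ h
            h = h + (residual / row_norms[j]) * X[j]
    return h

# Toy consistent system (an assumption for illustration, not a CT model).
rng = np.random.default_rng(1)
X = rng.standard_normal((30, 10))
h_true = rng.standard_normal(10)
g = X @ h_true

h_est = kaczmarz(X, g)
print("reconstruction error:", np.linalg.norm(h_est - h_true))
```

Setting `randomize=False` gives the sequential access order, so the two orderings can be compared on the same data.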

The most common form of IIR derives from optimization, where an objective function is formed by combining data fidelity with some form of image regularity

| $h^\star = \arg\min_h \{ D(g, Xh) + \gamma R(h) \}$ | (9) |

where the function D(·,·) represents a distance (or divergence, i.e., not necessarily symmetric) between the left and right sides of Eq. (8); R(·) is some measure of image roughness; and γ is a parameter controlling the strength of the image regularization. Of particular relevance to CS methods exploiting sparsity in the gradient magnitude image (GMI), much work in IIR has been devoted to edge-preserving regularization [32-34], where R(·) is the total variation (TV) semi-norm [35] or the related Huber penalty. The common feature of these penalties is that they involve the ℓ1 norm instead of the ℓ2 norm of a numerical image gradient, thereby placing less of a penalty on discontinuities, i.e., edges, in the image. The majority of work on edge-preserving regularization focuses on reducing image noise while preserving edges in the image, but one notable early use of TV suggested this penalty as a means to reduce artifacts due to limited angular scanning [36].

Finally, we would like to highlight work [37,38] specifically designed around the concept of exploiting sparsity for CT image reconstruction. An important application of CT is the visualization of blood vessels with the use of contrast agents. For this protocol, the blood vessels are the main x-ray attenuators, and spatially they form a volumetrically sparse structure. This sparsity has been recognized and has motivated the development of an IIR algorithm that potentially allows for great reduction in view-angle sampling while still achieving accurate reconstruction of the vessel tree. This work broke from the traditional optimization approach of combining data fidelity and image regularity into a single objective function. It proposed instead a constrained minimization problem designed for under-determined linear systems. The model used an equality constraint forcing the image to agree with the available data and considered ℓp minimization of the voxel representation. To avoid the nonsmoothness of the p = 1 case, this work used p = 1.1. Example reconstructions were shown for four to 15 projections, which is substantially fewer than in standard CT scanning protocols.
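In the notation of Eq. (8), the constrained problem described in words above can be written schematically as follows. This is a sketch inferred from the description; the exact formulation in Refs. [37,38] may differ in detail, for example in additional constraints or in the normalization of the objective.

$$
h^\star = \arg\min_h \sum_{i=1}^{n} \lvert h_i \rvert^{p} \quad \text{such that} \quad X h = g, \qquad p = 1.1 .
$$

For p > 1 the objective is differentiable everywhere, which is the stated reason for avoiding p = 1, while p close to 1 still favors solutions with few large voxel values.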

Hardware research

Much current hardware development in x-ray CT focuses on the x-ray detector. Of particular interest in recent years is the development of photon-counting detectors with the capability of resolving the energy of incoming photons [39-42]. Even though standard diagnostic CT yields high-resolution images of anatomy, this imaging modality is not quantitative in that the displayed image is not actually the attenuation map of the subject. The reason for this is that the imaging model ignores important physical processes, particularly those relating to x-ray energy: the x-ray source emits a broad spectrum of energies, and the attenuation coefficients are energy dependent. Dual-energy CT [43] can counteract some of the effects due to the assumption of x-ray monochromaticity. The new photon-counting detectors may, however, lead to true quantitative CT, allowing for better means of tissue differentiation and images with improved noise properties. Advanced IIR methods may be essential in dealing with the associated energy-resolved imaging model.

Nonstandard CT configurations with novel sampling schemes and the use of coded apertures are being considered. The so-called inverse geometry setup is somewhat similar to the idea of the single pixel camera [7]. Inverse geometry CT calls for a small detector, and sufficient transmission ray sampling is provided by sweeping the x-ray source [44]. Advanced forms of beam modulation or beam blocking are being considered for different sampling schemes, which may provide information for accurate image reconstruction with reduced x-ray exposure [45-48]. Such methods may also be useful for estimating and compensating for x-ray scatter. The aims of this research are closely aligned with the goals of CS.

While traditional CT operates on the attenuation of the primary beam, there are efforts to utilize other physical processes for x-ray imaging. Much recent attention has been directed to exploiting the wave-like properties of x-rays for tomographic diffraction imaging [49]. Detection of coherently scattered x-rays is also being investigated [50,51], because this form of subject interrogation may give a handle on specific tissue type identification. These alternate forms of x-ray imaging could possibly benefit from SEIR, and they may offer different sparsity mechanisms than transmission-based CT.

Image quality evaluation

Because x-ray tomography is a mature technology, systems development must be task-based. There is a large body of knowledge in, and strong research effort directed toward, assessment of image quality [52]. One of the major issues is that the image quality of a particular medical imaging device can only be meaningfully assessed by expert observers (radiologists), but their time is expensive and limited. Thus, an interesting area of medical imaging research is devoted to developing model observers: computer algorithms whose performance correlates with that of expert observers. Aside from the obvious benefits of reducing evaluation study cost and being more widely available, such observers add a level of objectivity. Many of these methods apply to assessing imaging devices as a whole, and there is some question as to how to evaluate the performance of components of the imaging device, such as the image reconstruction algorithm, in a task-based manner. Development of such image evaluation methods for advanced IIR is even more complicated, because the algorithms depend nonlinearly on the projection data, and they are also object dependent. In Section 5.C some initial work on task-based image quality evaluation for MRI SEIRs is outlined.

4. Compressive Sensing Techniques for X-Ray CT

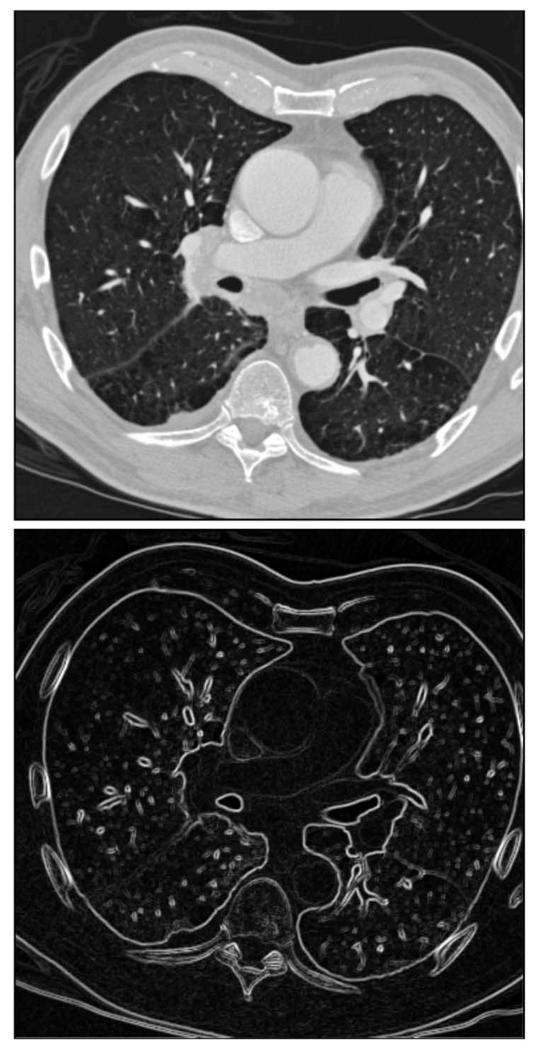

With this brief introduction to x-ray CT, we are now in a position to discuss CS and its role in x-ray CT IIR algorithm development. While there have been initial forays into many forms of sparsity, the overwhelming majority of work exploits GMI sparsity utilizing various optimization models with the TV semi-norm. The reason for this is that tomographic images are fairly uniform within organs with abrupt variation at organ boundaries. In Fig. 2, a CT slice image is shown alongside its GMI. The sparsity of the GMI is clearly a potentially useful object prior. This prior is particularly suited to x-ray CT because, even considering all of the disturbing physical factors not accounted for in the imaging model, the approximation of a sparse GMI seems to be robust. In fact, the primary example from Candès et al. [4] is directly aimed at CT; the sparse DFT sampling with radial spokes can be interpreted as parallel-beam CT data with sparse view-angle sampling through the central slice theorem.

Fig. 2.

(Top) a transaxial CT slice image through the lungs of a patient; the gray scale window is [−1200 HU, 600 HU]. (Bottom) the GMI corresponding to the same image [0 HU, 800 HU]. The gray scale units are in the Hounsfield (HU) scale, where −1000 HU and 0 HU correspond to air and water, respectively. Despite the complex structure in the CT slice image, the GMI is clearly more sparse than the original image. Note again the sharpness of the heart. In this image the vessels through the heart are clearly visible due to the use of CT contrast agent. The image, courtesy of Alexandra Cunliffe, Ph.D., is one of many from the study in Ref. [53].
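To make the notion of GMI sparsity concrete, the following sketch computes a gradient magnitude image and the corresponding TV for a synthetic piecewise-constant phantom. The phantom, the forward-difference discretization, and the function names are assumptions chosen for illustration; they are not the data or the discretization used for Fig. 2.

```python
import numpy as np

def gradient_magnitude_image(img):
    """Forward-difference gradient magnitude; the last row/column is replicated."""
    dx = np.diff(img, axis=1, append=img[:, -1:])
    dy = np.diff(img, axis=0, append=img[-1:, :])
    return np.sqrt(dx ** 2 + dy ** 2)

def total_variation(img):
    """Isotropic TV: the l1 norm of the gradient magnitude image."""
    return gradient_magnitude_image(img).sum()

# Hypothetical phantom: a uniform disk containing a square insert.
n = 64
yy, xx = np.mgrid[:n, :n]
phantom = np.zeros((n, n))
phantom[(xx - 32) ** 2 + (yy - 32) ** 2 < 24 ** 2] = 1.0
phantom[24:36, 24:36] = 2.0

gmi = gradient_magnitude_image(phantom)
print("nonzero pixels in image:", np.count_nonzero(phantom))
print("nonzero pixels in GMI:  ", np.count_nonzero(gmi))
print("image TV:", total_variation(phantom))
```

The GMI is nonzero only along the disk and insert boundaries, so its nonzero count scales with the boundary length rather than the object area.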

The proposed optimization from Ref. [4] for obtaining a CSG was to minimize the image TV constrained by forcing the image estimate to agree with the available projection data

| $h^\star = \arg\min_h \lVert h \rVert_{TV}$ such that $X h = g$ | (10) |

Even though the goal of this model is to obtain a CSG, much work has been performed in adapting this constrained TV-minimization model to x-ray CT [54-60]. Here, we summarize the related image reconstruction theory and the resulting CT applications, which are being actively investigated.

A. X-Ray Imaging Data Model

Before addressing possible solution or modification of Eq. (10), we return to the basic algebraic imaging model for x-ray CT and MRI

| $g = A h$ | (11) |

where A stands for either X, when modeling CT, or F when modeling MRI. The seeming simplicity of this generic linear model hides a multitude of complicating factors for the discrete-to-discrete x-ray transform. We state the issues surrounding X and contrast with MRI’s DFT:

• The linear system in Eq. (8) can be inconsistent. Even when X is rectangular, with the number of measurements m fewer than the number of voxels n, there are data sets g for which there is no corresponding h. With real data containing noise and other physical factors not accounted for in the model, this inconsistency is almost impossible to avoid. For the DFT, as long as the corresponding linear system is not over-determined, it is consistent.

• The matrix X does not have a standard form. Many different approximations for the line-integration are used as well as different image expansion sets. For MRI, a Cartesian grid of Dirac delta functions is overwhelmingly the most common choice leading to the form of the DFT amenable to the fast Fourier transform (FFT) algorithm.

• There is also no consensus on the back-projection matrix B. It can be chosen as the matrix transpose of X, i.e., B = X^T, or a discretization of the continuous back-projection operation [61,62]. Again, this discretization process can take multiple forms, and there is no standard implementation of B. For MRI, the Hermitian adjoint of the system matrix is standardized. The discretization of the inverse Fourier transform with a Cartesian grid of delta functions leads to the inverse DFT and Hermitian adjoint of the DFT.

• Poor conditioning of X. Regardless of the implementation of X, the singular value decomposition (SVD) of X [28] reveals that X has significantly poorer conditioning than the matrix corresponding to the DFT.

The issue of data inconsistency means that only under the conditions of ideal noiseless data used for obtaining a CSG is it possible to solve Eq. (10). For real data or realistic simulations the data constraint needs to be relaxed. One such relaxation is

| $h^\star = \arg\min_h \lVert h \rVert_{TV}$ such that $D(g, Xh) \le \epsilon$ | (12) |

where ϵ is a parameter controlling the allowable level of data discrepancy. Even with this form of CS optimization, care must be taken in selecting ϵ: too low and the problem remains inconsistent, or too high and the image becomes over-smoothed.

The issues of nonstandard implementations for X and B strike at one of the main problems of IIR. In IIR, it is very difficult to gain intuition for the image quality resulting from an IIR algorithm without actually executing the algorithm on specific data sets and parameter settings. The resulting image quality clearly depends on all of the parameters of the optimization problem it aims to solve, and with no clear idea on how these parameters affect the image there is no choice but to run the algorithm mapping out the parameter space. For systems of realistic size such a complete mapping is simply not possible. That X and B have no standard implementations adds to the parameter list affecting h, and different choices of line-integration models or image representations do affect the texture details of the resulting x-ray CT slice images.

The poor conditioning of X relative to the DFT has three important implications. (1) It impacts the sensitivity of reconstructed images to the data and to model parameters. (2) Of particular interest to CS, it affects what is considered to be sampling compressively. In order to specify to what extent the sampling is compressed, it is important to first define what is considered complete sampling. For the DFT with the standard Cartesian setup, complete sampling is obvious, and it is achieved when m ≥ n. For X modeling the x-ray transform, the complete sampling condition is not so obvious [63]. Multiple definitions for complete sampling are discussed in Ref. [63] based on different properties of X. Using invertibility of X leads roughly to m = n as a full sampling criterion, but the condition number of X can be quite large when m = n; in fact, for non-CS IIR, m is chosen substantially larger than n because of this issue. Based on the condition number for the most common sampling configurations, a simple, useful rule for specifying full sampling of X is to take twice as many samples for each dimension of the CT imaging problem; i.e., full sampling occurs at m = 4n in 2D CT and m = 8n in 3D CT. Thus, a CS algorithm employing m = n/2 measurements can be sampling compressively by a factor of two to 16, depending on the definition of full sampling. (3) The conditioning of X impacts the convergence rate of first-order solvers. Theoretical investigations for the ideal optimization model in Eq. (10) become more difficult for CT than MRI, because first-order solvers can take much longer to converge when the system matrix is X instead of the DFT.
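The arithmetic behind the "factor of two to 16" statement is spelled out below. The helper name and the value of n are hypothetical; only the three full-sampling definitions (m = n, m = 4n, m = 8n) come from the text.

```python
def undersampling_factor(m, n, full_sampling_multiple):
    """Ratio of the 'full' sample count (multiple * n) to the actual sample count m."""
    return full_sampling_multiple * n / m

n = 512 * 512   # hypothetical number of image unknowns
m = n // 2      # measurements used by the CS algorithm, m = n/2

factors = {
    "invertibility, m = n": undersampling_factor(m, n, 1),
    "2D rule, m = 4n": undersampling_factor(m, n, 4),
    "3D rule, m = 8n": undersampling_factor(m, n, 8),
}
for definition, factor in factors.items():
    print(f"{definition}: compressed by a factor of {factor:g}")
```

The same measured data set is therefore described as 2-fold, 8-fold, or 16-fold undersampled depending only on which definition of full sampling is adopted, which is why the definition should be stated alongside any reported compression factor.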

B. Compressive Sensing Guarantees for X-Ray CT

A CSG relates the necessary number of samples for accurate image reconstruction to some identified sparsity in the subject. As we are focusing on GMI sparsity, we seek to relate the number of nonzeros in the GMI to the necessary number of samples to recover the test image. Theoretical results from CS apply to sensing matrices with various forms of randomness [31], which do not apply to CT. In CT it may be possible to sense random transmission rays, but it is impossible to get away from the basic line integration sensitivity map leading to a single sample. As such, CT sampling is not as flexible as MRI. Another important difference is partial incoherence of the CT sampling with a voxel basis. One row of the DFT system matrix involves nearly every voxel in the volume image; but X is a sparse system matrix with one row of X having zero values for all voxels except those that lie on the path between the x-ray source and a specified detector element. One consequence of this partial incoherence is that it is more difficult to get accurate image recovery when undersampling the individual projections than undersampling the x-ray source trajectory. In any case, currently the only way to establish the link between GMI sparsity and necessary sampling is by numerical solution of the ideal constrained TV-minimization problem using ideal projections of test phantoms.
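The difference in row structure between the two system matrices can be illustrated with a toy comparison. The ray model below is a crude nearest-neighbor line sampling with a hypothetical source-to-detector segment; it is an assumption for illustration and not one of the line-integration models used in practice.

```python
import numpy as np

n = 32
yy, xx = np.mgrid[:n, :n]

# One row of the 2D DFT system matrix: every voxel has unit-magnitude weight.
kx, ky = 5, 3
dft_row = np.exp(-2j * np.pi * (kx * xx + ky * yy) / n).ravel()

# One row of a crude ray-driven X: equally spaced samples along a single ray,
# each assigned to the nearest voxel with weight equal to the step length.
x0, y0, x1, y1 = 0.0, 5.0, n - 1.0, 20.0     # hypothetical ray end points
n_samples = 4 * n
t = np.linspace(0.0, 1.0, n_samples)
cols = np.rint(x0 + t * (x1 - x0)).astype(int)
rows = np.rint(y0 + t * (y1 - y0)).astype(int)
step = np.hypot(x1 - x0, y1 - y0) / n_samples
ray_image = np.zeros((n, n))
np.add.at(ray_image, (rows, cols), step)     # accumulates repeated voxel hits
ray_row = ray_image.ravel()

print("nonzeros in DFT row:", np.count_nonzero(dft_row), "of", n * n)
print("nonzeros in ray row:", np.count_nonzero(ray_row), "of", n * n)
```

In this toy setting the ray row touches on the order of n voxels out of n², whereas the DFT row touches all of them.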

Many such results have been obtained in the literature for isolated sampling schemes and test phantoms. These results do not form a very complete picture of CSGs in CT, but there is some indication that accurate image reconstruction is possible from substantially reduced view-angle sampling [57,63]. Some work employing the nonconvex ℓp semi-norm shows possibly even greater gains in sampling reduction by GMI sparsity-exploiting IIR [64]. Such results, however promising, paint an incomplete picture. The difficulty lies in the size of X for realistic CT systems and the slow convergence of solvers for Eq. (10).

One interesting strategy is to reduce the CT system size, extrapolating results for realistic sizes from a series of smaller more manageable CT model systems. For smaller CT systems, there are more efficient algorithms, such as interior point primal–dual algorithms, for solving equality constrained TV-minimization. With the more efficient solvers and smaller system sizes, sufficient sampling can be tested on ensembles of images with fixed sparsity producing a map in the style proposed by Donoho and Tanner [65]. Early indications show that a sharp phase transition exists for image recovery, and the location of this recovery transition indicates significant reduction in sampling by exploiting sparsity in the GMI [66,67].

Establishing this link between image sparsity and sampling with CSGs is potentially very useful for IIR algorithm design. It is difficult to come by general principles of IIR algorithm design, and establishing the link between sampling and sparsity would be one such principle. Such knowledge can be a guide for designing IIR based on exploiting GMI sparsity for real CT data, containing noise and other data inconsistencies.

C. Realizing Practical Sparsity-Exploiting Image Reconstruction Algorithms for X-Ray Tomography

In developing SEIR based on the GMI, the ideal optimization problem in Eq. (10) needs to be modified. In this section we address the choice of optimization problem and algorithms for solving these problems.

1. Optimization Problems

In modifying Eq. (10) to account for data inconsistency, the equality constraint is relaxed to an inequality constraint in Eq. (12). This optimization problem can be recast in different, mathematically equivalent forms (through the Lagrangian). From the point of view of application to CT IIR, however, the different forms use alternate parameterizations, which can impact IIR algorithm design.

Unconstrained optimization

In the CS for CT literature, the most common form of optimization for exploiting GMI sparsity is as unconstrained minimization

| $h^\star = \arg\min_h \{ D(g, Xh) + \gamma \lVert h \rVert_{TV} \}$ | (13) |

which is essentially the same optimization arrived at when considering edge-preserving regularization. Even though the optimization problems are the same, there is a subtle difference in the design of the IIR study. The picture for edge-preserving regularization is that there is a trade-off between data fidelity and image regularity. Increasing γ leads to greater regularity at the cost of losing data fidelity. For pure CSG studies, the data g are ideal, and the solution of Eq. (13) is sought for γ → 0. This limit is equivalent to enforcing an equality constraint for the data, and the small admixture of the TV penalty breaks the degeneracy of the solution set of the linear system, yielding the solution with minimum TV. For SEIR with noisy data, the parameter γ takes on two roles: regularization and breaking the degeneracy of the solution space. Generally speaking, edge-preserving regularization for CT involves an overdetermined linear system and relatively large γ, while GMI SEIR uses an under-determined imaging model and small values of γ. Algorithmically the two situations can be quite different, as convergence is generally more difficult to achieve in the SEIR case. This unconstrained form is perhaps the most common because of the existing solver technology. For IIR algorithm design, it is not so convenient because the parameter γ has two roles and has a convoluted physical meaning.
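A minimal sketch of the unconstrained form is given below for a 1D signal, assuming a least-squares data term for D, a slightly smoothed TV (smoothing parameter `eps`), and plain gradient descent with a fixed step. The random under-determined matrix stands in for X and is not a CT model; all names and parameter values are hypothetical.

```python
import numpy as np

def smoothed_tv(h, eps):
    """Smoothed 1D TV: sum of sqrt(d^2 + eps^2) over finite differences d."""
    d = np.diff(h)
    return np.sum(np.sqrt(d ** 2 + eps ** 2))

def smoothed_tv_grad(h, eps):
    d = np.diff(h)
    w = d / np.sqrt(d ** 2 + eps ** 2)
    grad = np.zeros_like(h)
    grad[:-1] -= w   # d[i] = h[i+1] - h[i] depends on h[i] with weight -1
    grad[1:] += w    # and on h[i+1] with weight +1
    return grad

def objective(h, X, g, gamma, eps):
    return 0.5 * np.sum((X @ h - g) ** 2) + gamma * smoothed_tv(h, eps)

def gradient_descent(X, g, gamma, eps=1e-2, n_iter=3000):
    # Step 1/L, where L bounds the Lipschitz constant of the objective gradient:
    # ||X||^2 for the data term plus 4/eps (times gamma) for the smoothed TV term.
    L = np.linalg.norm(X, 2) ** 2 + 4.0 * gamma / eps
    h = np.zeros(X.shape[1])
    history = [objective(h, X, g, gamma, eps)]
    for _ in range(n_iter):
        grad = X.T @ (X @ h - g) + gamma * smoothed_tv_grad(h, eps)
        h = h - grad / L
        history.append(objective(h, X, g, gamma, eps))
    return h, history

# Under-determined toy problem (m < n) with a piecewise-constant signal.
rng = np.random.default_rng(0)
n, m = 64, 32
h_true = np.zeros(n)
h_true[20:40] = 1.0
h_true[45:55] = 0.5
X = rng.standard_normal((m, n)) / np.sqrt(m)
g = X @ h_true

h_est, history = gradient_descent(X, g, gamma=1e-3)
print("objective: %.4g -> %.4g" % (history[0], history[-1]))
print("relative error:", np.linalg.norm(h_est - h_true) / np.linalg.norm(h_true))
```

Rerunning with different `gamma` and `n_iter` gives a feel for the dual role of γ discussed above: small values approach the equality-constrained solution but require many more iterations.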

Data discrepancy constrained TV minimization

Casting GMI SEIR in terms of the constrained minimization in Eq. (12) presents an optimization problem with a more meaningful parameter ϵ. This parameter fixes the data fidelity of the solution, and the minimum TV volume is sought. The parameter ϵ has an actual physical meaning, which is useful especially when the level of inconsistency in the data is known. This constrained form is also useful when comparing different implementations of GMI-based norms; particularly notable is the comparison of different ℓp norms for equivalent data fidelity. Although more useful than the unconstrained form, the data discrepancy constraint may also not be optimal, particularly when considering different forms of X due to different scanning configurations. With different X, the meaning of ϵ, and its interesting range of values, shifts. Generally speaking, the more under-determined the imaging model, the smaller the optimal value of ϵ. Also, there may be values of ϵ for which there is no solution.

TV constrained data discrepancy minimization

To address the issues of the data discrepancy constrained form, we can swap the objective and constraint functions, yielding the optimization problem

| $h^\star = \arg\min_h D(g, Xh)$ such that $\lVert h \rVert_{TV} \le \beta$ | (14) |

where now the parameter β constrains the image TV. This form is particularly convenient when comparing alternate X, coding different CT scanning configurations, or alternate D, denoting different data discrepancy measures. Of particular interest are fixed-dose studies, where the number of projections is varied together with the x-ray intensity per projection in such a way that the total dose of the scan remains constant. Another advantage for simulation is that the image TV, β0, of the test phantom is known ahead of time. In many situations, the optimal value of β will be β0. For example, in ideal data testing, such as that done to obtain a CSG, we can set β = β0, drive the data discrepancy to zero, and see if we have recovered the phantom. The comparison between ideal data and realistic data image reconstruction is more natural in this TV-constrained form; it is easy to avoid an infeasible optimization problem because we know there will be a solution for β ≥ 0. In actual application with real CT data, it is possible to get a handle on interesting values of β from a volume previously reconstructed by another IIR algorithm or FDK.

2. First-Order Solvers and Sequential Algorithms

The recent interest in CS and CSGs has redoubled the effort in developing optimization problem solvers particularly for nonsmooth large-scale problems. The nonsmooth aspect is not critical for real data applications, where it is difficult to tell the difference between TV and slightly smoothed TV. But for CSGs the nonsmooth aspect of the optimization problems of interest is essential. The CS-motivated algorithm development is split into two main categories: accurate solvers for the associated optimization problems and approximate solvers using sequential algorithms. The two approaches differ in algorithm efficiency and purpose. Accurate solvers are needed more for CSG related studies and at a minimum require hundreds of iterations for CT IIR. The approximate sequential algorithms apply to real CT data IIR and may yield useful images in ten or fewer iterations. By “iteration,” we mean a single cycle of processing all of the measured projection data.

We acknowledge that algorithm development is a fluid situation, and the methods described here may as a result have a short shelf-life. One over-arching issue, though, is whether or not to use accurate optimization solvers for image reconstruction with real data. The problem with approximate solvers is that the reconstructed volumes depend on the parameters of the optimization problem, which the algorithm aims to solve, and on all the parameters of the algorithm itself because the result falls short of convergence. More parameters make characterization of the algorithm more difficult. On the other hand, accurate solution of an optimization problem eliminates the need to study dependence of the volume on algorithm parameters; if the final volume is the solution of the stated optimization problem, the path taken in getting there is irrelevant. Accurate solvers, of course, demand greater computational resources, and at present accurate solution to CS optimization problems is poorly characterized with real CT data.

Accurate solvers

Using slightly smoothed versions of optimization problems involving TV, many standard methods have been adapted for accurate solution. Accelerated gradient descent [68], nonlinear conjugate gradients [69], and approximate Newton methods such as limited-memory BFGS [70] have all been applied to CT IIR of realistic dimensions. Much research specific to IIR in tomography has also focused on optimization transfer methods [71]. For theoretical CSG studies the nonsmoothness of the optimization problems is essential. On a small scale these problems, which can be cast as a second-order cone program (SOCP), are amenable to recently developed interior point primal–dual methods [72]. For realistic system sizes, there has been great progress in the past few years on nonsmooth first-order algorithms such as split Bregman [73], ADMM [74], and Chambolle-Pock [75-77]. These algorithms are inter-related [78], but there are differences in parameterization which can have significant consequences on convergence in practice. Also, convergence rates for all of these algorithms vary significantly depending on CT scanning configuration and data quality.
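As an illustration of a nonsmooth first-order method, the sketch below applies a Chambolle-Pock type primal-dual iteration to the unconstrained problem with a least-squares data term and an unsmoothed 1D TV penalty. The 1D setting, the dense random matrix standing in for X, the step-size choice, and all names are assumptions made for brevity; this is not the implementation of Refs. [75-77].

```python
import numpy as np

def chambolle_pock_tv(X, g, gamma, n_iter=5000):
    """Primal-dual iteration for min_h 0.5*||X h - g||^2 + gamma*||D h||_1 (1D TV)."""
    m, n = X.shape
    D = np.diff(np.eye(n), axis=0)          # (n-1) x n finite-difference matrix
    L = np.linalg.norm(np.vstack([X, D]), 2)
    sigma = tau = 1.0 / L                   # satisfies sigma * tau * L^2 <= 1
    h = np.zeros(n)
    h_bar = h.copy()
    y = np.zeros(m)                         # dual variable for the data term
    z = np.zeros(n - 1)                     # dual variable for the TV term
    for _ in range(n_iter):
        # Dual updates: closed-form prox for the quadratic, clipping for the l1 term.
        y = (y + sigma * (X @ h_bar - g)) / (1.0 + sigma)
        z = np.clip(z + sigma * (D @ h_bar), -gamma, gamma)
        # Primal update followed by extrapolation.
        h_new = h - tau * (X.T @ y + D.T @ z)
        h_bar = 2.0 * h_new - h
        h = h_new
    return h

def objective(h, X, g, gamma):
    return 0.5 * np.sum((X @ h - g) ** 2) + gamma * np.sum(np.abs(np.diff(h)))

# Under-determined toy problem with a piecewise-constant signal.
rng = np.random.default_rng(0)
n, m = 64, 32
h_true = np.zeros(n)
h_true[20:40] = 1.0
h_true[45:55] = 0.5
X = rng.standard_normal((m, n)) / np.sqrt(m)
g = X @ h_true

h_est = chambolle_pock_tv(X, g, gamma=0.01)
print("objective at zero image:", objective(np.zeros(n), X, g, 0.01))
print("objective at estimate:  ", objective(h_est, X, g, 0.01))
```

Only matrix-vector products with X, D, and their transposes are needed per iteration, which is what makes this family of algorithms applicable at realistic system sizes.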

Sequential solvers

For approximate solvers applied to the CT system, sequential algorithms seem to be favored. Sequential algorithms update the image after processing subsets, or individual elements, of the projection data set. For the sparse system matrices corresponding to ray-based tomographic imaging, this strategy yields significant acceleration of initial convergence to a useful image. Although initial progress is rapid, convergence to an optimization solution can be slow. Several algorithms used for exploiting GMI sparsity use the sequential strategy. Adaptive-steepest-descent–projection-onto-convex-sets (ASD-POCS) [57] modifies the well-known POCS algorithm by including a steepest descent step on the image TV in an adaptive way, so that image TV is reduced without destroying progress on reducing the data discrepancy. The ordered subsets for transmission tomography (OSTR) algorithm [79] also uses the sequential processing strategy and can be applied to the TV-based optimization problems. The added benefit of the POCS-based strategy is that other constraints, such as image non-negativity, can be easily incorporated; another popular choice is to include, in combination with TV, a gradient descent step that reduces the distance to a prior image [56,80,81]. Modifications to the POCS-based algorithms have also been made [60]. Recently, there has also been work applying incremental algorithms [82] to GMI sparsity-exploiting CT image reconstruction [83].

As pointed out above, the approximate nature of these sequential algorithms complicates design. As a result, it may be useful to shift away from the framework of optimization problems with a unique solution and use a picture due to Combettes, provided by the POCS framework [84]. In this picture, a reconstructed volume is specified by listing a series of constraints on the volume. Any volume that satisfies all the constraints is considered a valid reconstruction. In fact, this is the picture used in practice for any IIR algorithm that uses a metric based only on the current image estimate as a stopping criterion. (Note a small distance between successive iterations does not fall into this picture.) The Combettes framework has been used for CT IIR design applied to real data [85].

5. Compressive Sensing Techniques for MRI

MR is a natural test case for CS algorithm development. Fully sampled MR data are readily available and easily subsampled to create test data for CS algorithms. Thus, many papers focused on CS in general use MR data to qualitatively demonstrate the impact of new algorithms on real-world data. A subset of these algorithms has made the transition from theory to adoption by MR researchers. Here we highlight a representative sample of the methods that have gained popularity in the MR research community. This is followed by some discussion on how the performance of these algorithms could be more rigorously evaluated.

A. Optimization Problems

Magnetic resonance CS algorithms enforce some notion of image sparsity while maintaining consistency with the measured data as in CT. Sparsity of the total variation transform and wavelet domain sparsity were originally proposed in Ref. [6]. This leads to the constrained minimization problem

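| $h^\star = \arg\min_h \{ \lVert W h \rVert_1 + \lambda \lVert h \rVert_{TV} \}$ such that $\lVert F_u h - g \rVert_2 \le \epsilon$ | (15) |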

where W is a wavelet transform, λ is a parameter controlling the relative strengths of the sparsity constraints, F_u h is the partial Fourier transform of the image of the magnetization h [see Eq. (5)] at the frequencies measured in data vector g, and ϵ is the noise level. The corresponding unconstrained problem can also be considered,

| (16) |

either as a step towards solving the constrained problem, or as a final reconstruction.

These formulations lead to SEIRs for standard anatomical MRI as described in Section 3.A. Much effort has gone into designing data sampling patterns F_u, referred to as trajectories, amenable to CS. There is considerable freedom in choosing a trajectory, bounded by magnetic field gradient hardware constraints, and many CS trajectories have been proposed.
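The partial Fourier operator F_u and its Hermitian adjoint can be sketched with a Cartesian FFT and a binary sampling mask. The uniformly random mask used here is a hypothetical stand-in chosen for simplicity; it ignores the gradient hardware constraints mentioned above and is not a realizable trajectory.

```python
import numpy as np

def sampling_mask(shape, fraction, seed=0):
    """Hypothetical random Cartesian mask keeping roughly `fraction` of k-space."""
    rng = np.random.default_rng(seed)
    mask = rng.random(shape) < fraction
    mask[0, 0] = True  # always keep the zero-frequency sample
    return mask

def F_u(h, mask):
    """Partial Fourier transform: orthonormal 2D DFT restricted to sampled frequencies."""
    return np.fft.fft2(h, norm="ortho")[mask]

def F_u_adjoint(g, mask):
    """Hermitian adjoint of F_u: zero-fill unsampled frequencies, then inverse DFT."""
    k_space = np.zeros(mask.shape, dtype=complex)
    k_space[mask] = g
    return np.fft.ifft2(k_space, norm="ortho")

rng = np.random.default_rng(1)
mask = sampling_mask((64, 64), fraction=0.3)
h = rng.standard_normal((64, 64))

g = F_u(h, mask)                    # undersampled data vector
zero_filled = F_u_adjoint(g, mask)  # simple zero-filled reconstruction

# Adjoint check: <F_u h, y> should equal <h, F_u^H y> for any y.
y = rng.standard_normal(g.shape) + 1j * rng.standard_normal(g.shape)
lhs = np.vdot(F_u(h, mask), y)
rhs = np.vdot(h, F_u_adjoint(y, mask))
print("adjoint mismatch:", abs(lhs - rhs))
```

With the orthonormal normalization the operator norm of F_u is at most one, which simplifies step-size selection in first-order solvers.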

There has been much work on SEIRs tailored to more advanced MR imaging techniques. In MR quantitative imaging, tissue parameters are estimated. This can be accomplished using a model-based CS reconstruction that implements a more sophisticated representation of the MR signal [86]. For the spin-echo signal example given in Eq. (2), T2 can be estimated if data are acquired at multiple TE times. One possible model-based method [87] for estimating T2 and the equilibrium magnetization I0 is

| (17) |

where j indexes TE time and F_j is the partial Fourier transform of the signal model at frequencies measured at time TE_j. Here we explicitly solve for the parameters of interest and apply sparsity constraints to the parameter maps rather than the image as in Eq. (15). Note that MR data are often nonlinear functions of the parameters of interest, which complicates the development of SEIRs for quantitative MRI.

SEIRs designed to exploit spatiotemporal sparsity of dynamic MRI have also been developed. A method that has gained wide acceptance is k-t FOCUSS [88], which uses concepts from video compression algorithms to enforce sparsity of residual dynamic image components. Low rank methods for dynamic MRI have recently gained popularity [89]. The dynamic image is decomposed into a static background that is assumed low rank (well-represented by its first few principal components) and a dynamic component that is assumed sparse in an appropriate basis representation. This leads to essentially a blind deconvolution formulation of the image reconstruction problem, which is in general a difficult problem; however, if the static background and dynamic components obey a form of incoherence, the decomposition is unique, and the problem is well-posed [90].

B. Solvers

All of these image reconstructions are formulated as optimization problems which can be solved through algorithms developed for ℓ1-based optimization. Algorithms are typically drawn from the broader CS literature and should be familiar to CS researchers. The unconstrained formulations are solved using standard optimization techniques such as conjugate-gradient or quasi-Newton methods. Constrained optimization problems are solved using first-order methods such as basis pursuit [91] or split-Bregman [92] methods, among others as discussed in Section 4.C.2 in the context of CT.
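As a simple stand-in for the solvers named above, and not one of them, the sketch below uses iterative soft thresholding (ISTA) on an unconstrained ℓ1 problem with undersampled Fourier data. It assumes that the image itself is sparse (W taken as the identity), omits the TV term, and uses a hypothetical random sampling mask; all names and parameter values are illustrative.

```python
import numpy as np

def F_u(h, mask):
    return np.fft.fft2(h, norm="ortho")[mask]

def F_u_adjoint(g, mask):
    k_space = np.zeros(mask.shape, dtype=complex)
    k_space[mask] = g
    return np.fft.ifft2(k_space, norm="ortho")

def soft_threshold(x, t):
    """Complex soft thresholding: shrink magnitudes by t, keep phases."""
    mag = np.abs(x)
    return x * np.maximum(1.0 - t / np.maximum(mag, 1e-12), 0.0)

def ista(g, mask, lam, n_iter=300):
    """ISTA for min_h 0.5*||F_u h - g||_2^2 + lam*||h||_1 with unit step size.

    A unit step is valid because the orthonormal partial DFT has norm <= 1.
    """
    h = np.zeros(mask.shape, dtype=complex)
    for _ in range(n_iter):
        residual = F_u(h, mask) - g
        h = soft_threshold(h - F_u_adjoint(residual, mask), lam)
    return h

def relative_error(h, h_ref):
    return np.linalg.norm(h - h_ref) / np.linalg.norm(h_ref)

# Sparse test image: 20 nonzero pixels out of 1024, about 40% of k-space sampled.
rng = np.random.default_rng(0)
shape = (32, 32)
h_true = np.zeros(shape)
support = rng.choice(h_true.size, size=20, replace=False)
h_true.flat[support] = 1.0 + rng.random(20)
mask = rng.random(shape) < 0.4
g = F_u(h_true, mask)

h_zero_filled = F_u_adjoint(g, mask)
h_ista = ista(g, mask, lam=0.02)
print("zero-filled relative error:", relative_error(h_zero_filled, h_true))
print("ISTA relative error:       ", relative_error(h_ista, h_true))
```

Replacing the identity with a wavelet transform W, or adding a TV term, changes the thresholding step and generally calls for one of the more capable solvers cited in the text.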

C. Image Quality Metrics

CS has attracted a great deal of interest in the MR imaging community, and a variety of potential clinical applications have been identified; however, the rigorous quantification of CS MR image quality in terms of clinical utility has received relatively little attention to date. In this respect, CS presents new challenges not encountered in traditional FFT reconstruction. The FFT is linear, with well-understood behavior and noise statistics. Quantitative image quality evaluation for fully-sampled data typically involves analysis of phantom images and metrics such as pixel SNR (mean pixel value in a region of interest divided by the standard deviation of the image noise) which can easily be measured in vivo. SEIRs are more complex and are designed to suppress structures in the image that are inconsistent with sparsity. This presents the possibility that clinically-relevant structures could unintentionally be suppressed, negatively impacting clinical effectiveness. Image quality metrics that are related to clinical task performance would be able to quantify any loss of relevant structures and be best suited to compare CS algorithms and system designs.
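The pixel SNR metric mentioned above can be computed as follows. The sketch assumes additive Gaussian noise and a signal-free background region for the noise estimate; real MR magnitude images have non-Gaussian background noise, so this is only an idealized illustration with hypothetical ROI choices.

```python
import numpy as np

def pixel_snr(image, signal_roi, noise_roi):
    """Mean pixel value in a signal ROI divided by the noise standard deviation."""
    return image[signal_roi].mean() / image[noise_roi].std(ddof=1)

# Synthetic image: uniform object of value 100 on a zero background, noise sigma = 5.
rng = np.random.default_rng(0)
image = rng.normal(0.0, 5.0, size=(128, 128))
image[32:96, 32:96] += 100.0

signal_roi = (slice(40, 88), slice(40, 88))   # inside the object
noise_roi = (slice(0, 128), slice(0, 32))     # background strip outside the object

snr = pixel_snr(image, signal_roi, noise_roi)
print("pixel SNR:", snr)  # nominal value is 100 / 5 = 20
```

For a nonlinear SEIR the noise in the reconstructed image is object dependent, so a single number of this kind does not characterize image quality as it does for linear FFT reconstruction.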

CS MR image quality has been evaluated in several ways. CSGs in terms of mean-squared error have been reported, such as in Ref. [3], though applicability to clinical MR systems is limited due to the conditions that must be satisfied, e.g., the restricted isometry property, which is difficult to demonstrate for current medical imaging systems. CSGs also typically ignore model mismatch. The true MR image formation process is a complex nonlinear function of time-varying MR parameters defined on a continuous object, rather than a discrete FFT identical to the operator F employed during image reconstruction. Qualitative comparison of artifacts in clinical images is often reported. This is a convenient way to illustrate the potential advantages of a particular method, though the subjective nature of such comparisons makes it difficult to infer broader clinical utility. Recent work has reported subjective image quality ratings given by clinical radiologists, for example in Ref. [93]; however, image preference, even given by trained image readers, is not necessarily reflective of task performance. Image quality metrics based on physical phantoms provide the advantage that ground truth can be established as in the example in Section 7.A. A major limitation of most physical phantoms is that they have simple structures. Clinical images are more complex, and likely less sparse in a given basis, making it difficult to infer clinical performance from phantom-based evaluation. On the other hand, ground truth for clinical images is often difficult to obtain, necessitating subjective performance evaluation.

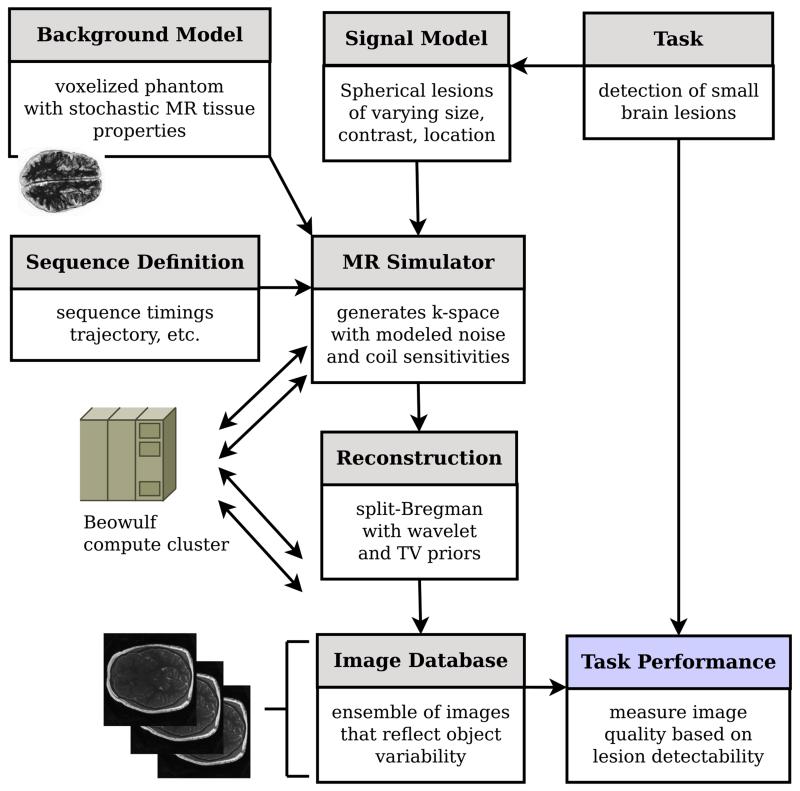

Objective assessment of image quality (OAIQ) has long been established as a rigorous framework for quantifying task performance in medical imaging [52]. OAIQ for MRI has previously been proposed outside the context of CS [94,95]. Recently a simulation framework has been developed to adapt OAIQ for the evaluation of MR CS reconstruction [96]. A task-based image quality metric was developed and compared with more traditional MR image quality metrics. We present an example of this work here.

The clinical task studied was detection of brain lesions. For a given location within the white matter of the brain a lesion is either present (hypothesis 1) or absent (hypothesis 0). Deciding which hypothesis is true is based on image data h reconstructed from raw MR data g,

$$g = H(f) + n, \tag{18}$$

$$h = R_\lambda(g), \tag{19}$$

where H is the MR system acting on the brain f to obtain Fourier data g contaminated by noise n. Note that H is nonlinear if we consider the object f to be characterized by its MR parameters. CS operator Rλ generates an image of the brain with a particular choice of reconstruction parameters λ. It is similarly a nonlinear function of the MR data. Image h is stochastic due to noise in the imaging system and variability in the object being imaged, thus we define the mean images and covariance matrices

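$$\mu_j = \langle h \rangle_j, \quad j = 0, 1, \tag{20}$$

$$K_j = \left\langle (h - \mu_j)(h - \mu_j)^{T} \right\rangle_j, \tag{21}$$

where $\langle \cdot \rangle_j$ denotes the average over noise and object variability with the lesion absent ($j = 0$) or present ($j = 1$).

The Hotelling SNR [52] measures the separability of the two image distributions and is defined

$$\mathrm{SNR}_h^2 = \Delta\mu^{T} \left[ \tfrac{1}{2}\left(K_0 + K_1\right) \right]^{-1} \Delta\mu, \tag{22}$$

where Δμ is the mean lesion signal

$$\Delta\mu = \mu_1 - \mu_0. \tag{23}$$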
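The Hotelling SNR can be estimated from two ensembles of images, one with the lesion present and one with it absent. The sketch below assumes the standard form from the OAIQ literature, in which the mean difference image is weighted by the inverse of the average of the two sample covariance matrices; it is not necessarily the estimator used in Ref. [96]. The function name, the optional `ridge` term, and the toy data are assumptions. For full-size images the covariance matrix is very large, so in practice the calculation is typically restricted to a small region or a reduced set of features.

```python
import numpy as np

def hotelling_snr(images_present, images_absent, ridge=0.0):
    """Estimate the Hotelling SNR from samples of shape (n_samples, n_pixels)."""
    delta_mu = images_present.mean(axis=0) - images_absent.mean(axis=0)
    k_present = np.cov(images_present, rowvar=False)
    k_absent = np.cov(images_absent, rowvar=False)
    k_avg = 0.5 * (k_present + k_absent)
    # Optional diagonal loading (hypothetical) to stabilize an ill-conditioned estimate.
    k_avg = k_avg + ridge * np.eye(k_avg.shape[0])
    snr_squared = delta_mu @ np.linalg.solve(k_avg, delta_mu)
    return np.sqrt(snr_squared)

# Toy ensemble: 4-pixel "images" with unit-variance white noise.
rng = np.random.default_rng(1)
n_samples, n_pixels = 5000, 4
signal = np.array([1.0, 1.0, 0.0, 0.0])  # hypothetical mean lesion signal
absent = rng.standard_normal((n_samples, n_pixels))
present = signal + rng.standard_normal((n_samples, n_pixels))

snr_h = hotelling_snr(present, absent)
```

For this toy case the noise is uncorrelated, so the result should be close to the norm of `signal`; with correlated noise the inverse covariance weighting changes the answer, which is the property that distinguishes this metric from pixel-wise measures.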

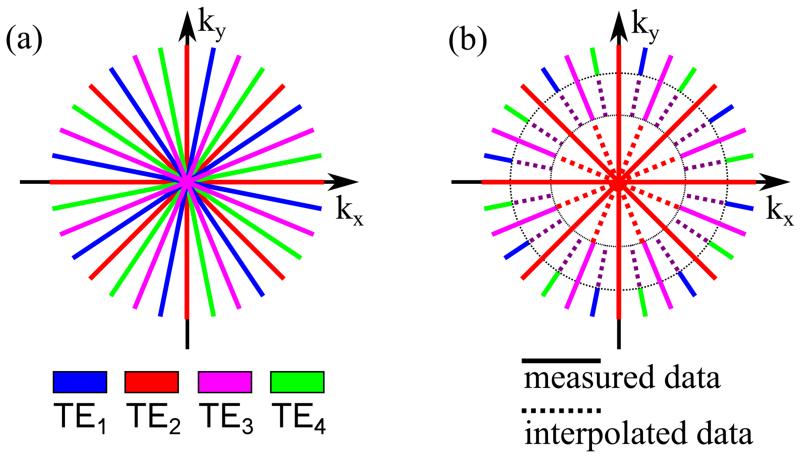

To estimate the mean images and covariance matrices under each hypothesis many image samples are required. To achieve this, a simulation environment was developed (Fig. 3). The brain model was based on a segmentation of the Visible Human [97] that defines a tissue type for each (0.12 mm × 0.12 mm × 0.25 mm) voxel. MR parameters (ρ, T1, T2) were assigned per-voxel based on estimated distributions of these parameters reported in the literature. Spherical lesions of varying size and T2 contrast were inserted randomly within the white matter. The phantom f was passed through a simulated MR imaging system H. Specifically,

| (24) |

where is a three-dimensional slice of excited tissue (250 mm × 250 mm × 4 mm) aligned such that the lesion was at the center of the 4 mm slice and the coil sensitivity C was a nearly uniform single channel coil. The sampled frequencies follow a radial pattern with 128 lines each consisting of 256 points. For this work, the imaging system H was fixed, and the reconstruction parameters λ varied.
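The radial sampling pattern described above can be generated in a few lines. This is a sketch under assumptions not stated in the text: spokes are taken to pass through the center of k-space, to be uniformly spaced in angle over a half circle, and to use normalized frequency units with a maximum radius of 0.5. The function name is hypothetical.

```python
import numpy as np

def radial_trajectory(n_lines=128, n_points=256, k_max=0.5):
    """Return kx, ky arrays of shape (n_lines, n_points) for a 2D radial pattern."""
    # Assumed: uniform angular spacing over [0, pi), one full diameter per line.
    angles = np.arange(n_lines) * np.pi / n_lines
    radii = np.linspace(-k_max, k_max, n_points, endpoint=False)
    kx = np.outer(np.cos(angles), radii)
    ky = np.outer(np.sin(angles), radii)
    return kx, ky

kx, ky = radial_trajectory()
n_samples = kx.size  # 128 lines x 256 points
```

Every line shares the sample at the k-space center, so the low spatial frequencies are sampled far more densely than the high ones.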

Fig. 3.

Simulated image creation and analysis process.

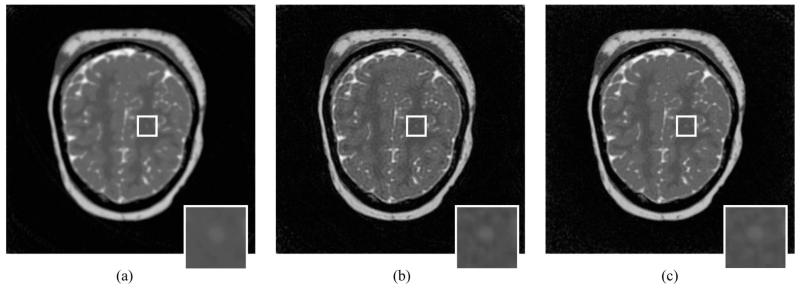

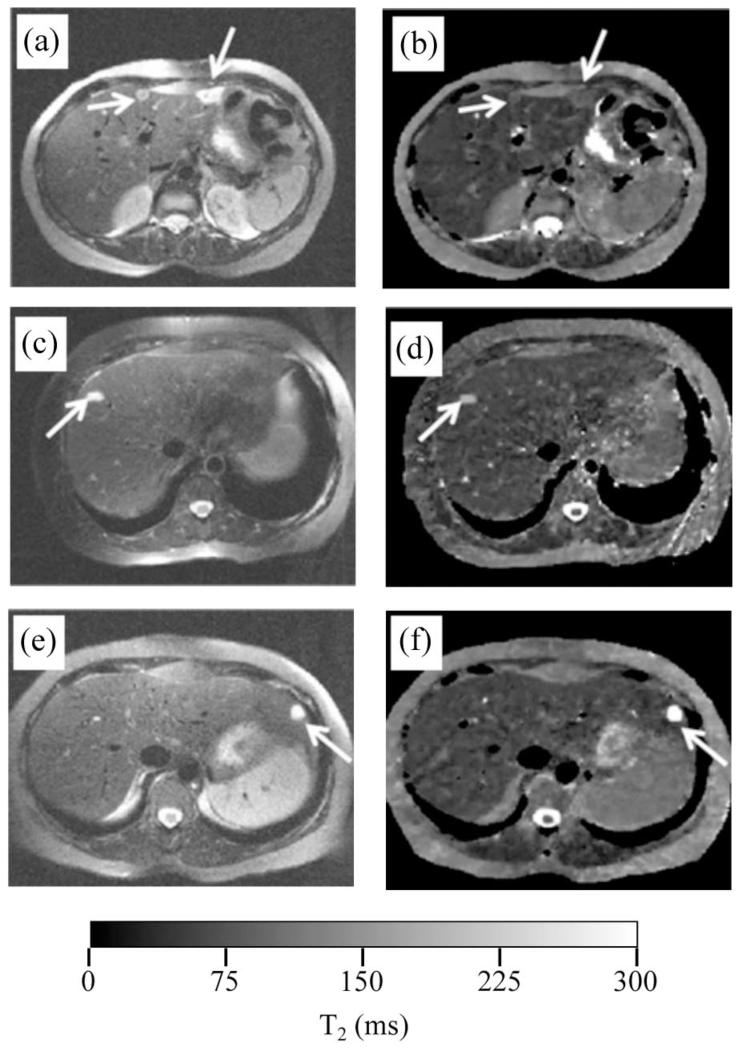

Multiple images with different noise realizations were reconstructed using a split-Bregman operator Rλ [92] with wavelet and total variation sparsity constraints. There are three reconstruction parameters: λw, the wavelet sparsity weight; λt, the total variation regularization weight; and λi, the stopping iteration number. Three different reconstruction operators Rλ, differentiated by the values of the regularization parameters λw and λt, were investigated. Sample images are shown in Fig. 4.

Fig. 4.

Sample image reconstructions for each set of regularization parameters. Inserted lesion of radius 2 mm with T2 = 100 ms. (a) Reconstruction 1 (low TV regularization, medium wavelet regularization), (b) Reconstruction 2 (high TV, high wavelet), (c) Reconstruction 3 (medium TV, low wavelet). For all images in this figure λi = 50 iterations. Inset shows enlarged view of lesion.

Several image quality metrics were used to compare the quality of the images produced by each set of reconstruction parameters: the pixel SNR (SNRp); the Hotelling SNR (SNRh) defined in Eq. (22); the contrast-to-noise ratio (CNR), defined as the difference between the mean value of lesion-containing pixels and surrounding nonlesion pixels divided by the standard deviation of the nonlesion pixels; and the lesion mean-squared error (MSE), calculated as the l2 norm of the difference between the image pixel values pj and the pixel values qj for an ‘ideal’ image reconstruction,

| (25) |

where is the 3D extent of the object domain represented by pixel pj.
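The per-image metrics can be written compactly once lesion and background regions are marked. The sketch below follows the verbal definitions given in the text; the mask-based interface and the function names are assumptions, the noise standard deviation for the pixel SNR is taken as an input, and the MSE is normalized by the number of lesion pixels, which may differ from the normalization in Eq. (25).

```python
import numpy as np

def pixel_snr(image, roi_mask, noise_std):
    """Mean pixel value in a region of interest divided by the noise standard deviation."""
    return image[roi_mask].mean() / noise_std

def contrast_to_noise(image, lesion_mask, background_mask):
    """Lesion/background mean difference divided by the background standard deviation."""
    contrast = image[lesion_mask].mean() - image[background_mask].mean()
    return contrast / image[background_mask].std()

def lesion_mse(image, ideal_image, lesion_mask):
    """Mean squared difference from an 'ideal' image over the lesion pixels (assumed form)."""
    diff = image[lesion_mask] - ideal_image[lesion_mask]
    return np.mean(diff ** 2)

# Tiny 1D example with two lesion pixels in the middle.
image = np.array([9.0, 11.0, 12.0, 12.0, 9.0, 11.0])
ideal = np.array([10.0, 10.0, 11.0, 13.0, 10.0, 10.0])
lesion = np.array([False, False, True, True, False, False])
background = ~lesion

snr_p = pixel_snr(image, lesion, noise_std=1.0)
cnr = contrast_to_noise(image, lesion, background)
mse = lesion_mse(image, ideal, lesion)
```

None of these three quantities uses the covariance between pixels, so two reconstructions with the same per-pixel statistics but different noise correlations would receive identical scores.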

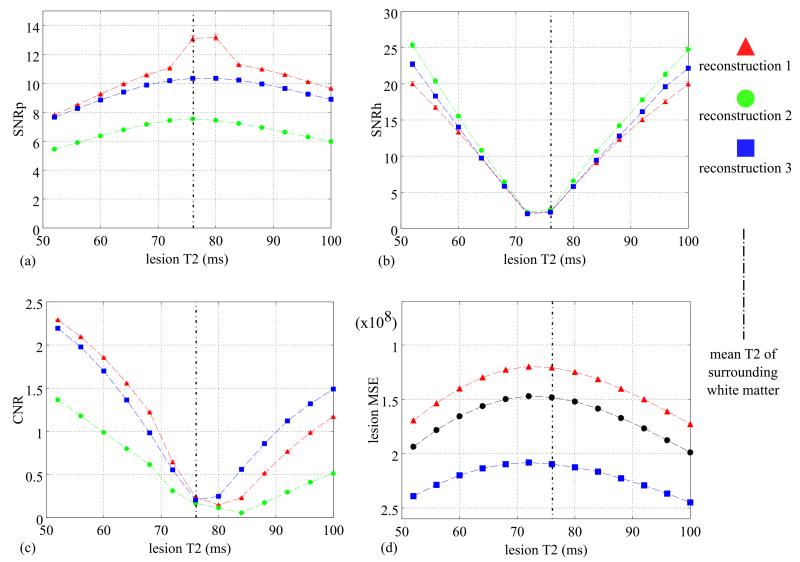

For each lesion location, size, contrast, and set of reconstruction parameters, 2500 images were reconstructed to characterize the noise properties and estimate image quality. Figure 5 compares each image quality metric for 4 mm diameter lesions of varying T2 contrast at the location illustrated in Fig. 4. The T2 of the surrounding white matter follows a log-normal distribution with mean 76.1 ms. The metrics SNRp, CNR, and lesion MSE can be calculated on a per-image basis; however, the results reported here are the mean value over all 2500 images for each lesion. SNRh is calculated once for the entire 2500-image ensemble. Figure 5 reports the highest performance achieved over all iterations from λi = 1 to 50.

Fig. 5.

Image quality metrics: (a) expected SNRp, (b) SNRh, (c) expected CNR, and (d) expected lesion MSE for 4 mm diameter lesions of varying T2 contrast (x-axis) inserted at the location illustrated in Fig. 4. The average T2 of the surrounding white matter is indicated by the dashed vertical line. Ninety-five percent confidence intervals are provided, though not visible for most points.

Measured in terms of SNRp, reconstruction 1 has the best performance. The qualitative appearance of reconstruction 1 given in Fig. 4(a) is smoother and less noisy than the other two reconstructions, which is consistent with this result. For all reconstructions the highest SNRp performance occurs when the lesion T2 is similar to the mean background T2, likely due to partial volume effects on the SNRp calculation. For task-based SNRh, reconstruction 2 gives the best performance, though the difference is not significant for the lowest contrast lesions. The Hotelling SNR is consistent with the task difficulty and varies approximately linearly with the difference between the lesion and background T2. CNR is also consistent with the expected task difficulty due to differences in T2, though the relationship is more complex. For lesion T2 shorter than the background (brighter lesions), reconstruction 1 performed best, while for lesions with longer T2 (dark relative to the background), reconstruction 3 performed best. Similar to SNRp, the lesion MSE was inconsistent with presumed task difficulty and reconstruction 1 performed best, though the ranking of reconstructions 2 and 3 is reversed. Note that the y scale is reversed for MSE to maintain consistency with higher curves indicating better performance.

This simple example demonstrates that MR image quality metrics do not give consistent system rankings for CS reconstructions. In this case only SNRh, the metric that directly measures the detectability of the lesions, ranks reconstruction 2 highest. One reason for this is that SNRh takes into account noise correlations which are not considered by the other metrics. Given the mathematical complexity of CS algorithms, this kind of simulation gives valuable information regarding the statistics of the images not easily obtainable through physical measurements due to the dependence on object complexity and the quantity of images required. This framework can be expanded to include more sophisticated imaging tasks of current interest in MR CS development.

6. Application of CS in X-Ray Tomography

Sparse view-angle sampling

Perhaps the most clear-cut examples of CS algorithms being applied to real CT data are for studies involving reduction in the number of projections. It has been repeatedly demonstrated that GMI SEIR algorithm design can allow for substantial reduction in the number of projections while maintaining acceptable image quality judged by taking into account clinical tasks [60,80,81,85,98]. CSG investigations show a large potential for view-angle undersampling, and these results appear to be robust against noise and other physical factors that cause slight deviation away from a piecewise constant reconstructed volume. Even objects with direct voxel sparsity appear to benefit from TV-based IIR [85,99]. One of the arguments against use of TV as an edge-preserving penalty has been that it yields unrealistic, blocky images. The amount of recent work exploiting GMI-sparsity to address view-angle sparse sampling seems to contradict this criticism. Perhaps this is a specific contribution of CSGs: by understanding the conditions of accurate image reconstruction, IIR design with the TV semi-norm is facilitated, and the blocky artifacts can be avoided.
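To make the notion of gradient magnitude image (GMI) sparsity concrete, the sketch below computes the GMI and the isotropic TV semi-norm of a small piecewise constant test image. Forward differences with a zero gradient at the far boundary are an assumed discretization; other conventions are in use. The function names and the 32 × 32 test image are illustrative.

```python
import numpy as np

def gradient_magnitude_image(img):
    """Per-pixel magnitude of the forward-difference gradient of a 2D image."""
    dx = np.zeros_like(img)
    dy = np.zeros_like(img)
    dx[:-1, :] = img[1:, :] - img[:-1, :]  # difference along rows
    dy[:, :-1] = img[:, 1:] - img[:, :-1]  # difference along columns
    return np.sqrt(dx ** 2 + dy ** 2)

def tv_seminorm(img):
    """Isotropic total variation: the sum of the gradient magnitude image."""
    return gradient_magnitude_image(img).sum()

# Piecewise constant test object: a 16 x 16 square of ones on a zero background.
phantom = np.zeros((32, 32))
phantom[8:24, 8:24] = 1.0

gmi = gradient_magnitude_image(phantom)
n_nonzero_voxels = np.count_nonzero(phantom)  # 256
n_nonzero_gmi = np.count_nonzero(gmi)         # 63, only the edge pixels
tv = tv_seminorm(phantom)
```

The square has 256 nonzero voxels but only 63 nonzero GMI entries, which illustrates why a piecewise constant object that is not sparse in the voxel basis can still be a good candidate for TV-based reconstruction.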

Reducing the number of projections in a CT scan has implications for dose reduction, and initial results are promising. Further work is needed to survey constant-dose configurations trading off the number of views with intensity per view. Also, the majority of work on real data requires manual tuning of IIR algorithm parameters. For the clinic, such parameter tuning disrupts workflow to the point where use of these algorithms is not practical. There is work addressing the issue of automatic parameter tuning [100,101].

Limited angular range scanning

Another form of data undersampling, which is important for x-ray CT, is the reduction of scanning angular range [36]. For the sake of discussion, we classify tomosynthesis under this heading because of its severe truncation of the scanning arc. For limited angular range scanning, exploitation of GMI sparsity is not as successful as in the sparse view-angle sampling case. A CSG study investigating ideal recovery of test phantoms does not seem to have much bearing on the application because the conditioning of X for such scans is much worse than in the sparse view-angle sampling case for similar ratios of samples to voxels. As a result, the image recovery is not robust against data inconsistency, and it is not possible to overcome the anisotropic resolution inherent to the limited angular range scan only by use of the TV semi-norm. Nevertheless, use of the TV semi-norm can overcome artifacts due to view-angle undersampling along the limited arc, and there is mitigation of artifacts due to the limited angular range. Even though it is not realistic to expect accurate image reconstruction with isotropically high resolution for such scans, even small improvements in image quality can have an impact on clinical utility. For example, in the setting of digital breast tomosynthesis [102,103], appropriate use of GMI SEIR has the potential to increase visibility of microcalcifications, which can be an indirect indicator for some forms of breast cancer.

Four dimensional CT

Another heavily investigated application for CS in CT is in the reconstruction of projection data from a nonstatic object. Time dependence of the subject is a particularly difficult issue for C-arm devices [104,105], which have much longer acquisition times as compared with diagnostic CT. The form of the time dependence can involve stationary tissue structures and changing voxel values, as is the case when imaging with contrast agents [80], or more commonly the structures themselves undergo motion such as imaging of the lungs or heart [106,107]. By exploiting the periodic nature of the heartbeat and breathing it is possible to use gating to form sparsely sampled data sets of a static object, representing the heart or lung at a specific phase of its motion cycle. Once converted to a sparse sampling problem, the previously discussed methods can be used for image reconstruction. There are also creative uses of object sparsity, which aim for true 4D [108] (or even 5D! [109]) image reconstruction where the extra dimension refers to a time axis.
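The conversion from a dynamic scan to several sparse-view static problems can be sketched as a binning step. The example below assumes perfectly periodic motion with a known period, which is a simplification: in practice the phase is derived from a measured cardiac or respiratory signal. The function name, the acquisition timing, and the number of phase bins are hypothetical.

```python
import numpy as np

def gate_projections(times, period, n_bins):
    """Group projection indices by motion phase, assuming strictly periodic motion."""
    phase = (times % period) / period  # phase in [0, 1)
    bins = np.minimum((phase * n_bins).astype(int), n_bins - 1)
    return [np.flatnonzero(bins == b) for b in range(n_bins)]

# Hypothetical acquisition: 600 projections, one every 0.1 s, motion period 0.83 s.
n_projections = 600
times = np.arange(n_projections) * 0.1
gated = gate_projections(times, period=0.83, n_bins=5)
views_per_phase = [len(indices) for indices in gated]
```

Each phase bin retains only a fraction of the projections, at irregularly spaced view angles, so every gated data set is a sparse-view problem of the kind discussed under sparse view-angle sampling.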

7. Application of CS in MRI

Many potential clinical applications of SEIRs are being explored. Dynamic cardiac and model-based quantitative MR parameter estimation have been studied as described earlier. Improvements in scan time for many other applications such as fiber tractography [110] and functional MRI [111] have also been reported. Rather than compiling an exhaustive list of these applications, we provide an example of a particular clinical application of compressed sensing and how it compares with prior non-CS methods.

A. Example: Quantitative Liver Imaging