Abstract

Mel Frequency Cepstral Coefficients (MFCCs) are widely used to extract essential information from a voice signal and have become a popular feature extractor in audio processing. However, MFCC features are usually calculated from a single window (taper), which yields a spectrum estimate with large variance. This study investigates reducing this variance for the classification of two voice qualities (normal voice and disordered voice) using multitaper MFCC features. We also compare their performance with that of newly proposed windowing techniques and the conventional single-taper technique. The results demonstrate that the adaptively weighted Thomson multitaper method distinguishes between normal and disordered voice better than the conventional single-taper (Hamming window) technique and the two newly proposed windowing methods. Multitaper MFCC features may be helpful in identifying voices at risk of a real pathology that has to be confirmed later.

1. Introduction

Disordered voice quality can be a symptom of a laryngeal disorder. In clinical practice, the primary approach to assessing voice quality is auditory-perceptual evaluation, in which the severity (degree) and quality of dysphonia are rated with a tool such as the GRBAS (Grade, Roughness, Breathiness, Asthenia, and Strain) scale [1]. Auditory-perceptual evaluation offers a standardized procedure for the assessment of abnormal voice quality, but it relies on the clinician's direct audition and is therefore subjective, with variability between listeners [2]. This subjectivity can cause inconsistency in judging pathological voice quality [3]. Alternatively, laryngoscopic techniques such as direct laryngoscopy, indirect laryngoscopy, and telescopic video laryngoscopy are invasive tools that allow the observation of the vocal folds [4]. These techniques, which are commonly used for monitoring the larynx, make the diagnosis of many laryngeal disorders possible [1]. On the other hand, they may cause discomfort to the patient and can be costly [5].

Apart from the above-mentioned methods, acoustic analysis of voice samples is generally applied as a complementary technique to aid ear, nose, and throat clinicians [6–10]. It is an effective and noninvasive approach for the assessment of voice quality. In clinical application, acoustic analysis of disordered voices enables doctors to document the degree of different voice qualities quantitatively and to screen for voice disorders automatically. The technique can also be used to evaluate surgical and pharmacological treatments and rehabilitation processes, for example by monitoring the patient's progress over the course of voice therapy [11, 12]. Furthermore, voice clinics run various commercial acoustic analysis programs to aid the clinician in rating voice quality [13, 14]. In practice, clinicians and speech therapists commonly combine auditory-perceptual evaluation, laryngoscopic techniques, and acoustic analysis methods to evaluate voice quality.

Recently, many researchers have been working on differentiating between two levels of voice quality, normal and pathological, using acoustic analysis methods [3, 15, 16]. For this purpose, the raw voice samples are converted into features that give a more useful and compact representation of the voice. In the literature, features such as measures of acoustic perturbation (jitter and shimmer), the harmonics-to-noise ratio, and the glottal-to-noise excitation ratio have been applied to the assessment of vocal quality [17, 18]. Moreover, nonlinear dynamic methods, including Lyapunov exponents and correlation dimension, have been applied to various classification tasks for disordered voice samples [19–21]. Recent studies show that these nonlinear methods may be more appropriate for aperiodic voices than traditional perturbation methods [6]. On the other hand, compared with perturbation analysis, the drawback of these nonlinear methods is that they are more complex and may need longer computation time [22].

The well-known MFCC feature extraction has been commonly used in automatic classification between healthy and impaired voices [15]. This technique can be considered an approximation of the structure of human auditory perception [23]. MFCC parameters are usually computed from a windowed periodogram of short time frames of speech via the discrete Fourier transform. In this case, windowing reduces bias, but large variance remains a problem. The variance of the spectrum estimate can be reduced by replacing the Hamming-windowed power spectrum with an estimate built from multiple time domain windows. This is usually called the multitaper spectral estimation method [24–26]. The idea is to analyze the speech frame using a number N of spectrum estimators, each having a different taper, and then to compute the final spectrum as a weighted mean of the subspectra. In [25], it is shown that multiple window spectral estimates have smaller variance than single windowed spectrum estimates by a factor that approaches 1/N.
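The 1/N factor can be illustrated under a simplifying assumption that is ours and is not stated in the cited work: if the N subspectra $\hat{S}_m(f)$ are mutually uncorrelated, share the same variance $\sigma^2$, and are averaged with uniform weights 1/N, then

$$\operatorname{Var}\left[\frac{1}{N}\sum_{m=1}^{N}\hat{S}_m(f)\right] = \frac{1}{N^{2}}\sum_{m=1}^{N}\operatorname{Var}\left[\hat{S}_m(f)\right] = \frac{\sigma^{2}}{N}.$$

In practice the subspectra are only approximately uncorrelated, which is why the factor is approached rather than reached.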

For a long time, multitaper spectrum estimation has been used in geophysical applications [27], where it has demonstrated good results, but it has received little attention in the field of speech processing. Recently, researchers have started to employ the method in speech processing as well [24]. That study demonstrated for the first time that multitaper MFCC features can be used in speaker verification systems. The method was then applied to speech recognition [28], emotion recognition [29], and language identification [30] and was shown to perform better than the single windowed method. In this study, our goal is to investigate the use of multitaper MFCC features in the automatic discrimination of two levels of voice quality (healthy and pathological voices). To evaluate the usefulness of the proposed method, an automatic classification system is employed. To our knowledge, no previous study has used multitaper MFCC features for this problem. The second objective of this study is to apply different multitaper techniques, including the multipeak method [31], the SWCE (sinusoidal weighted cepstrum estimator) method [32], and the Thomson method [33], to MFCC extraction and to compare their performance with that of recently proposed windowing techniques [34, 35] and the single-taper technique. In addition, the effect of the number of tapers on classification performance and the choice of weights in the Thomson method are investigated. Experimental results indicate that, with a suitable configuration, the multitaper method outperforms these windowing techniques.

The outline of the paper is as follows. The multitaper spectrum estimation method and the novel windowing techniques are described in Section 2. Section 3 describes the experimental setup, and Section 4 evaluates the efficiency of multitaper spectrum estimation for the classification of voice qualities. A discussion is presented in Section 5, and the conclusion is given in Section 6.

2. Methods

2.1. Multitaper Spectrum Estimation

In the MFCC feature extraction process, the power spectrum is computed from a windowed periodogram. The short-time power spectrum estimate is given by

$$\hat{S}(f) = \left| \sum_{t=0}^{L-1} w(t)\, x(t)\, e^{-i 2\pi t f / L} \right|^{2} \tag{1}$$

where x = [x(0), x(1), x(2),…, x(L − 1)] is a frame of the utterance with length L, f ∈ {0, 1, 2,…, L − 1} is the frequency bin index, i is the imaginary unit, and w(t) denotes a window function. The Hamming window is the most popular choice for MFCC computation and is the one we use; it is given by

$$w(t) = 0.54 - 0.46 \cos\left(\frac{2\pi t}{L-1}\right), \quad t = 0, 1, \ldots, L-1 \tag{2}$$
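As a minimal sketch of the single-taper estimate, the following NumPy code computes a Hamming-windowed periodogram for one frame. It assumes the standard Hamming definition with coefficients 0.54 and 0.46; the function names and the 200 Hz test tone are hypothetical and chosen only for illustration.

```python
import numpy as np

def hamming_window(L):
    """Hamming window of length L: w(t) = 0.54 - 0.46 cos(2*pi*t / (L - 1))."""
    t = np.arange(L)
    return 0.54 - 0.46 * np.cos(2.0 * np.pi * t / (L - 1))

def single_taper_spectrum(frame, window):
    """Windowed periodogram |sum_t w(t) x(t) exp(-i 2 pi t f / L)|^2, f = 0..L-1."""
    return np.abs(np.fft.fft(window * frame)) ** 2

# Hypothetical test frame: 30 ms at 16 kHz (480 samples) of a 200 Hz sinusoid.
fs, L = 16000, 480
frame = np.sin(2.0 * np.pi * 200.0 * np.arange(L) / fs)
spectrum = single_taper_spectrum(frame, hamming_window(L))

# Bin spacing is fs / L, so 200 Hz falls on bin 200 * L / fs.
peak_bin = int(np.argmax(spectrum[: L // 2]))
```

Only the first L/2 bins are searched for the peak because the spectrum of a real frame is symmetric.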

A single taper (e.g., the Hamming window) reduces the bias of the spectrum estimate, that is, the difference between the estimated spectrum and the actual spectrum S(f), but the estimated spectrum still has high variance. This problem can be alleviated by a multitaper spectrum estimator [25], which can be expressed as

$$\hat{S}_{MT}(f) = \sum_{m=1}^{N} \lambda(m) \left| \sum_{t=0}^{L-1} w_m(t)\, x(t)\, e^{-i 2\pi t f / L} \right|^{2} \tag{3}$$

where N is the number of tapers, $w_m$ is the mth data taper (m = 1, 2,…, N), and λ(m) is the weight of the mth taper. In this method, the spectrum estimate is obtained from a series of spectra that are weighted and averaged in the frequency domain. The block diagram of MFCC extraction from the single-taper and multitaper spectrum estimates is presented in Figure 1. As a special case, if N = 1 and λ(1) = 1, (3) reduces to (1), and a single windowed power spectrum is obtained.
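The weighted averaging of subspectra can be sketched as follows. This is an illustration under stated assumptions rather than the setup used in the experiments: it uses sine tapers in their common orthonormal form with uniform weights (not the SWCE weights), and a white-noise input whose true spectrum is flat, so that the spread of the estimate across bins and frames serves as a rough proxy for estimator variance. All function names are hypothetical.

```python
import numpy as np

def sine_tapers(L, N):
    """Return an (N, L) array of orthonormal sine tapers (assumed taper family)."""
    t = np.arange(1, L + 1)
    m = np.arange(1, N + 1)[:, None]
    return np.sqrt(2.0 / (L + 1)) * np.sin(np.pi * m * t / (L + 1))

def multitaper_spectrum(frame, tapers, weights):
    """Weighted sum over tapers of the individual windowed periodograms."""
    subspectra = np.abs(np.fft.fft(tapers * frame, axis=1)) ** 2  # shape (N, L)
    return weights @ subspectra

rng = np.random.default_rng(0)
L, N = 480, 8
tapers = sine_tapers(L, N)
uniform = np.full(N, 1.0 / N)

# Compare a one-taper estimate with an N-taper estimate on white-noise frames.
frames = rng.standard_normal((200, L))
single = np.array([multitaper_spectrum(x, tapers[:1], np.ones(1)) for x in frames])
multi = np.array([multitaper_spectrum(x, tapers, uniform) for x in frames])
variance_ratio = multi.var() / single.var()
```

With these settings `variance_ratio` should come out well below 1, in line with the variance reduction described above. Passing a single taper with weight 1 reproduces the single-taper periodogram.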

Figure 1.

Block diagram of single-taper and multitaper spectrum estimation based on MFCC feature extraction.

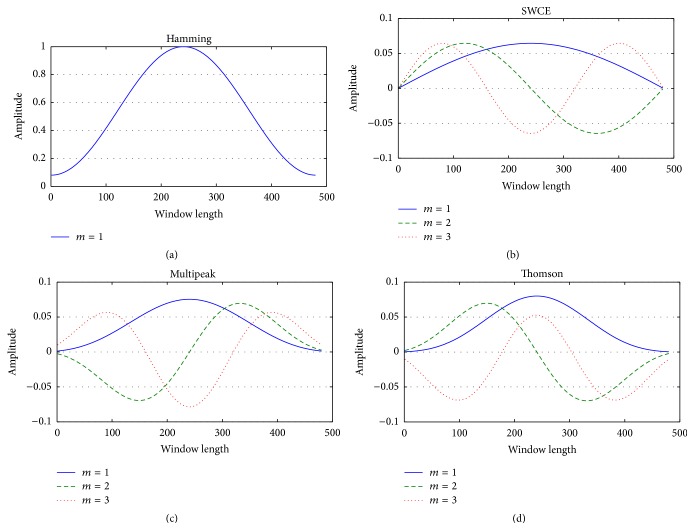

Some of the multitaper methods in the literature are Thomson multitaper, multipeak multitaper, and SWCE (sinusoidal weighted cepstrum estimator) multitaper, which are based on the Slepian tapers [17], peak matched multiple tapers, and sine tapers, respectively. These multitapers and Hamming taper are demonstrated in Figure 2. One goal of this study is to evaluate the effect of these tapers and compare their performances for a voice disorder classification system. Details of these tapers may be found in [31–33].

Figure 2.

Single taper and different multitapers used for spectrum estimation: (a) Hamming window, (b) the sine tapers, (c) the multipeak tapers, and (d) the Thomson tapers. Window length is 480; m is the taper number.

To make a visual comparison, samples from normal and pathologically affected voices for vowel /a/ and their estimated spectra by the Hamming windowed DFT spectrum as a reference and Thomson, multipeak, and SWCE multitaper methods are given in Figures 3 and 4. The number of tapers used for the multitaper methods is 3, 9, and 15, with a frame length of 30 msec and the sampling frequency is 16 kHz.

Figure 3.

(a) Normal voice and (b), (c), and (d) its estimated spectrum by the single taper (Hamming) and Thomson, multipeak, and SWCE multitaper methods for N = 3 tapers, for N = 9 tapers, and for N = 15 tapers, respectively.

Figure 4.

(a) Pathological voice and (b), (c), and (d) its estimated spectrum by the single taper (Hamming) and Thomson, multipeak, and SWCE multitaper methods for N = 3 tapers, for N = 9 tapers, and for N = 15 tapers, respectively.

Figures 3 and 4 show that each multitaper method produces a different spectrum. For the same value of N, multipeak spectrum estimation has sharper peaks than the Thomson and SWCE methods. Additionally, the single-taper spectrum contains more detail than the multitaper spectra, so the multitaper spectral estimates can be expected to have smaller variance. Because these techniques generate different spectra from the same voice frame, they also yield different cepstral coefficients [25].

In estimating the spectrum by multitapering, the first taper attributes more weight to the center of the short-term signal than to its ends, while higher order tapers attribute increasingly more weight to the ends of the frame. For the SWCE multitaper method weights can be found from

| (4) |

The weights of the multipeak multitaper method can be defined as

$$\lambda(m) = \frac{v_m}{\sum_{j=1}^{N} v_j}, \quad m = 1, 2, \ldots, N \tag{5}$$

where $v_m$ is the mth eigenvalue of the multiple windows.

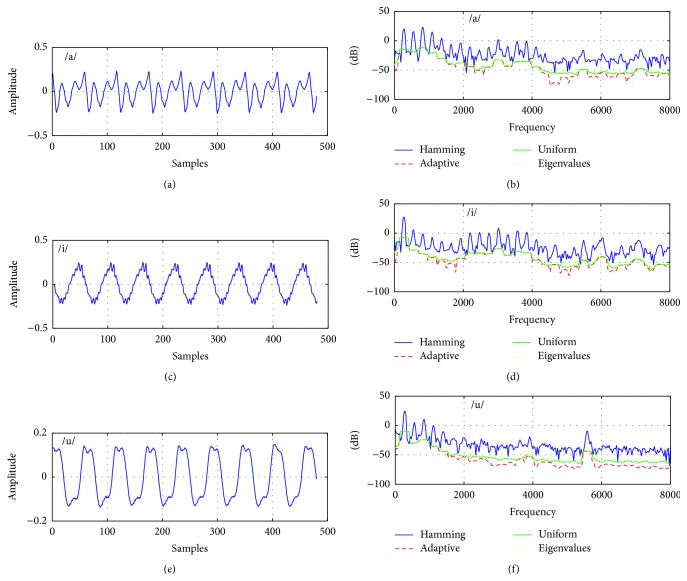

Three weighting schemes are commonly used in the Thomson multitaper method: uniform weights, where λ(m) = 1/N (N is the number of Slepian tapers); eigenvalue weights, where λ(m) = $v_m$ ($v_m$ is the eigenvalue of the mth Slepian taper); and adaptive weights, where $\lambda(m) = 1/\sum_{i=1}^{m} v_i$. Figures 5 and 6 compare these weighting schemes for normal and pathological voice samples (/a/, /i/, and /u/). In speaker verification experiments, uniform weights are used to obtain multitaper MFCC features [24–26], whereas in [36] adaptive weights give higher accuracy than the uniform and eigenvalue weighting schemes. It is therefore not clear which weighting technique in the Thomson multitaper method is most suitable for modeling the voice signal. For this reason, we also investigated which weighting technique performs best for the voice disorder classification task.
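The three weighting rules can be written down directly once the eigenvalues are known. The sketch below takes the eigenvalues as input rather than computing Slepian tapers, reads the adaptive rule as the reciprocal of the cumulative eigenvalue sum, and applies no further normalization; both readings are assumptions. The function name and the eigenvalues are hypothetical.

```python
import numpy as np

def thomson_weights(eigenvalues, scheme):
    """Return taper weights lambda(m), m = 1..N, for the three schemes in the text."""
    v = np.asarray(eigenvalues, dtype=float)
    N = v.size
    if scheme == "uniform":
        return np.full(N, 1.0 / N)
    if scheme == "eigenvalue":
        return v.copy()
    if scheme == "adaptive":
        return 1.0 / np.cumsum(v)  # 1 / sum_{i=1}^{m} v_i
    raise ValueError(f"unknown scheme: {scheme}")

# Hypothetical eigenvalues of four Slepian tapers (illustrative values only).
v = [0.99, 0.95, 0.80, 0.50]
weights = {s: thomson_weights(v, s) for s in ("uniform", "eigenvalue", "adaptive")}
```

Under this reading, the adaptive weights decrease with the taper index, so higher order tapers contribute less to the final spectrum.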

Figure 5.

(a), (c), and (e) Sustained vowels /a/, /i/, and /u/ from normal subjects and (b), (d), and (f) their Thomson multitaper spectral estimates using uniform weights, eigenvalues as the weights, and adaptive weights.

Figure 6.

(a), (c), and (e) Sustained vowels /a/, /i/, and /u/ from pathological subjects and (b), (d), and (f) their Thomson multitaper spectral estimates using uniform weights, eigenvalues as the weights, and adaptive weights.

2.2. The Novel Window Methods

Recently, apart from the multitaper method, novel windowing techniques have been proposed for signal analysis. In 2011, Mottaghi-Kashtiban and Shayesteh [34] proposed a new efficient window function and compared its main lobe width and peak side lobe amplitude with those of the Hamming window. The proposed window function can be expressed as

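$$w_k(t) = a_0 - a_1 \cos\left(\frac{2\pi t}{L-1}\right) - a_3 \cos\left(\frac{6\pi t}{L-1}\right) \tag{6}$$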

where $a_0$ = 0.5363 − 0.14/L, $a_1$ = 0.996 − $a_0$, and $a_3$ = 0.04. This window function was obtained by adding the third harmonic of the cosine function to (2). The authors also determined suitable amplitudes of the DC term to minimize the peak side lobe amplitude [34].

In 2013, Sahidullah and Saha [35] presented a novel family of windowing methods for calculating MFCC features. The basic idea of the proposed method is to apply simple time domain processing to the signal after it has been multiplied by a single window. The new window function can be expressed as

$$w_s(t) = t^{\tau}\, w(t) \tag{7}$$

In the case where τ = 0, the window function is equal to w(t), for example the Hamming window. Figure 7 shows these novel window functions in the time domain, with the Hamming window as a reference. For window $w_s$, first-order and second-order (τ = 1 and τ = 2) window functions are used, and the amplitude of all windows is normalized to 1 for visual clarity. In this study, we investigate the effects of these windowing techniques and compare them with the multitaper methods in distinguishing normal from disordered voice quality.
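A small sketch of this window family is given below. It assumes that the order-τ window is the base window multiplied by t raised to the power τ, a form chosen here because it leaves w(t) unchanged for τ = 0; the exact definition should be checked against [35]. The function name is hypothetical, and the optional rescaling to unit peak mirrors the normalization used for display in Figure 7.

```python
import numpy as np

def power_weighted_window(base_window, tau, normalize=False):
    """Return t**tau * w(t) for t = 0..L-1 (assumed form); optionally unit peak."""
    t = np.arange(len(base_window), dtype=float)
    window = t ** tau * base_window
    return window / window.max() if normalize else window

L = 480
hamming = np.hamming(L)
w_s0 = power_weighted_window(hamming, 0)                  # identical to the base window
w_s1 = power_weighted_window(hamming, 1, normalize=True)  # first order
w_s2 = power_weighted_window(hamming, 2, normalize=True)  # second order
```

Under this assumed form the windows are no longer symmetric: the peak moves toward the end of the frame as τ grows.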

Figure 7.

The two novel window functions and Hamming window in the time domain.

3. Experiments

The performance of the proposed multitaper MFCC features is evaluated on an open database, the Saarbruecken Voice (SV) database, developed by Putzer [37, 38]. This database consists of pathological and healthy voices at different pitches (low, normal, and high) from more than 2000 speakers. It includes simultaneous voice and electroglottography (EGG) recordings of the sustained vowels /a/, /i/, and /u/ for each case. The sustained vowel recordings are between about 1 and 3 s long, and the voice samples were sampled at 50 kHz with 16 bits of resolution.

In this study, voice samples of the sustained vowels /a/, /i/, and /u/ produced at the subjects' normal pitch were taken from the SV database. Each voice signal was resampled at 16 kHz. For this work, 650 normal subjects and 650 subjects with functional and organic dysphonia were chosen from the database. The details of the voice samples used in the study are given in Table 1.

Table 1.

Details of voice samples used in the study.

| Diagnosis | Number of samples |
|---|---|
| Cyst | 6 |
| Functional dysphonia | 76 |
| Hyperfunctional dysphonia | 68 |
| Hypofunctional dysphonia | 16 |
| Laryngitis | 102 |
| Leukoplakia | 41 |
| Normal | 650 |
| Paralysis | 196 |
| Reinke's edema | 66 |
| Vocal fold cancer | 22 |
| Vocal fold polyp | 41 |
| Vocal nodule | 16 |

In the experiments, the voice samples were segmented into frames of 30 ms length with a frame shift of 15 ms. Each frame was then weighted by a single window or by the multitaper method. The SWCE, multipeak, and Thomson tapers were generated with the multitaper functions described by Kinnunen et al. [25]. Next, a 29-channel Mel frequency filter bank was applied to the short-time spectrum, the filter bank outputs were logarithmically compressed, and the DCT was applied to them. The first 12 cepstral coefficients, excluding the energy coefficient $c_0$, were taken as features and normalized to the range 0-1.
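The feature extraction chain above can be sketched end to end in NumPy. Several details are assumptions not given in the text: the mel scale formula, the triangular filter shape, the unnormalized DCT-II, and min-max scaling per coefficient. The input is a hypothetical one-second noise signal, and a Hamming-windowed periodogram stands in for whichever spectrum estimate is used.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def frame_signal(x, frame_len, hop):
    """Split x into overlapping frames of frame_len samples every hop samples."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]

def mel_filterbank(n_filters, n_fft, fs):
    """Triangular filters spaced evenly on the mel scale (assumed construction)."""
    edges_hz = mel_to_hz(np.linspace(0.0, hz_to_mel(fs / 2.0), n_filters + 2))
    freqs = np.arange(n_fft // 2 + 1) * fs / n_fft
    bank = np.zeros((n_filters, freqs.size))
    for k in range(n_filters):
        lo, mid, hi = edges_hz[k : k + 3]
        rising = (freqs - lo) / (mid - lo)
        falling = (hi - freqs) / (hi - mid)
        bank[k] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def mfcc_from_power_spectra(power, bank, n_ceps=12):
    """Log filter-bank energies -> DCT-II -> coefficients c1..c_n_ceps (c0 dropped)."""
    log_energy = np.log(power[:, : bank.shape[1]] @ bank.T + 1e-12)
    n_filters = bank.shape[0]
    k = np.arange(n_filters)
    q = np.arange(1, n_ceps + 1)[:, None]
    dct = np.cos(np.pi * q * (k + 0.5) / n_filters)  # rows q = 1..n_ceps
    return log_energy @ dct.T

def minmax_normalize(features):
    """Scale each coefficient to the range 0-1 across all frames."""
    lo, hi = features.min(axis=0), features.max(axis=0)
    return (features - lo) / np.where(hi > lo, hi - lo, 1.0)

fs = 16000
frame_len, hop = 30 * fs // 1000, 15 * fs // 1000  # 480 and 240 samples
rng = np.random.default_rng(0)
x = rng.standard_normal(fs)  # one second of hypothetical input
frames = frame_signal(x, frame_len, hop)
power = np.abs(np.fft.fft(frames * np.hamming(frame_len), axis=1)) ** 2
bank = mel_filterbank(29, frame_len, fs)
features = minmax_normalize(mfcc_from_power_spectra(power, bank))
```

To obtain multitaper features in this sketch, only the `power` array changes; the filter bank, logarithm, and DCT steps stay the same.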

For evaluation, we used a Gaussian Mixture Model (GMM) to represent each class. In this approach, voice samples are modeled as a weighted sum of multivariate Gaussian probability density functions. The distribution of the features is described by the mean vectors $\mu_i$, covariance matrices $\Sigma_i$, and mixture weights $c_i$, denoted collectively by Θ = {$c_i$, $\mu_i$, $\Sigma_i$}, i = 1, 2,…, K, where K is the number of mixture components [39]. These model parameters (Θ) are commonly determined using the expectation maximization (EM) algorithm, which iteratively updates the parameters by maximizing the expected log-likelihood of the data and guarantees a monotonic increase in the model's log-likelihood [40, 41]. The classification of a sequence of test feature vectors is based on a simple set of likelihood functions evaluated on the test voice. In other words, a test frame is assigned the class label (normal or pathological) whose model yields the largest likelihood. In the proposed system, we used 16 mixture components with diagonal covariance matrices for the GMM classifier. We randomly selected half of the features for training and used the rest for testing, and all experiments were repeated 20 times. Finally, the system performance was computed by averaging the results obtained from each experiment.
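The decision rule can be sketched as follows for diagonal-covariance models. The sketch covers scoring only; EM training is omitted, and the two single-component models in two dimensions are hand-set, hypothetical values rather than trained parameters. The function names are also hypothetical.

```python
import numpy as np

def gmm_log_likelihood(frames, weights, means, variances):
    """Per-frame log-likelihood under a diagonal-covariance GMM.

    frames: (T, D); weights: (K,); means and variances: (K, D).
    """
    diff = frames[:, None, :] - means[None, :, :]                      # (T, K, D)
    log_norm = -0.5 * np.sum(np.log(2.0 * np.pi * variances), axis=1)  # (K,)
    log_comp = log_norm - 0.5 * np.sum(diff ** 2 / variances, axis=2)  # (T, K)
    a = np.log(weights) + log_comp
    a_max = a.max(axis=1, keepdims=True)  # log-sum-exp for numerical stability
    return (a_max + np.log(np.exp(a - a_max).sum(axis=1, keepdims=True)))[:, 0]

def classify_frames(frames, normal_gmm, pathological_gmm):
    """Label each frame with the class whose GMM gives the larger log-likelihood."""
    ll_normal = gmm_log_likelihood(frames, *normal_gmm)
    ll_path = gmm_log_likelihood(frames, *pathological_gmm)
    return np.where(ll_normal >= ll_path, "normal", "pathological")

# Hypothetical, hand-set single-component models in 2-D (not trained with EM).
normal_gmm = (np.array([1.0]), np.array([[0.2, 0.2]]), np.array([[0.01, 0.01]]))
pathological_gmm = (np.array([1.0]), np.array([[0.8, 0.8]]), np.array([[0.01, 0.01]]))
test_frames = np.array([[0.25, 0.15], [0.75, 0.90]])
labels = classify_frames(test_frames, normal_gmm, pathological_gmm)
```

With 16 components and 12-dimensional features, as in the experiments, only the array shapes change; the scoring logic is the same.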

4. Results

We first evaluated the multitaper spectrum estimation technique described in Figure 1 for different numbers of tapers. In previous multitaper applications, different numbers of tapers were applied to speech recognition [28] and speaker verification problems [24, 25, 36]. The datasets used in those studies differ from the one used in our voice quality classification experiments. Therefore, the previous conclusion that the optimal number of tapers, N, lies between 4 and 8 does not necessarily carry over to our task, and the best value of N for the sustained vowels /a/, /i/, and /u/ must be determined anew. Moreover, we compare the classification accuracies of the SWCE, Thomson (using uniform weights), and multipeak systems, with the conventional Hamming windowing method as a reference, in Figure 8.

Figure 8.

Classification accuracies (%) using different number of tapers for (a) sustained vowel /a/, (b) sustained vowel /i/, and (c) sustained vowel /u/.

Figure 8 shows that whether the multitaper methods outperform the baseline Hamming method depends on the number of tapers. For vowel /a/, the Thomson multitaper method performs relatively better than the other methods for 6 ≤ N ≤ 8. For vowels /i/ and /u/, the multitaper methods outperform the Hamming method in nearly all cases, which suggests that the exact setting is not very critical for these vowels.

We next compared the weighting schemes of the Thomson multitaper method: uniform, eigenvalue, and adaptive weights. In these experiments, each weighting scheme was evaluated with N = 8, 12, and 16 tapers. The classification results are shown in Figure 9.

Figure 9.

Voice quality classification accuracies (for /a/, /i/, and /u/) using the weights of Thomson multitaper method and Hamming window with (a) N = 8, (b) N = 12, and (c) N = 16.

When comparing the performances of the weights of the Thomson multitaper method, all three weighting techniques outperformed the baseline Hamming method. For vowels /a/, /i/, and /u/, the highest accuracies are obtained using N = 16 with adaptive weights.

Additionally, applying the classification task to the novel windowing schemes proposed in [34, 35] and comparing them with the baseline Hamming method yields interesting results. As shown in Figure 10, our classification experiment on the SV database gives the highest accuracies of 95% (vowel /a/) with the window $w_k$ system and 94.78% (vowel /i/) and 91.42% (vowel /u/) with the window $w_s$ (τ = 2) system.

Figure 10.

Classification performance comparisons of the two different window functions and Hamming window for /a/, /i/, and /u/ vowels.

Table 2 summarizes the classification results of all windowing methods and the multitaper systems. The baseline results on the test set were obtained by using Hamming windowed MFCCs on the vowels /a/, /i/, and /u/. In the multitaper experiments, the number of tapers was set to 16 and adaptive weights were used in the Thomson method. Additionally, we fix τ = 2 for window $w_s$.

Table 2.

Average relative improvement in SV database obtained by the novel window functions and the multitaper systems over the baseline Hamming window system.

| Vowel | Baseline acc. (%) | Method | Acc. (%) | Impr. (%) |
|---|---|---|---|---|
| /a/ | 94.83 | Window $w_k$ | 95.00 | 0.18 |
| | | Window $w_s$ | 93.65 | −1.24 |
| | | SWCE | 91.56 | −3.45 |
| | | Multipeak | 91.83 | −3.16 |
| | | Thomson | 99.38 | 4.80 |
| /i/ | 91.03 | Window $w_k$ | 92.45 | 1.56 |
| | | Window $w_s$ | 94.78 | 4.12 |
| | | SWCE | 98.45 | 8.15 |
| | | Multipeak | 97.37 | 6.96 |
| | | Thomson | 99.86 | 9.70 |
| /u/ | 87.86 | Window $w_k$ | 87.31 | −0.63 |
| | | Window $w_s$ | 91.42 | 4.05 |
| | | SWCE | 93.08 | 5.94 |
| | | Multipeak | 92.26 | 5.01 |
| | | Thomson | 99.54 | 13.29 |

As seen in Table 2, the Thomson multitaper method with adaptive weighting gives the highest accuracy improvement over the baseline: 4.8% for vowel /a/, 9.7% for vowel /i/, and 13.29% for vowel /u/. Comparing all multitaper methods against the baseline, we observe that the Thomson method is preferable.
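The improvement figures appear to be relative changes with respect to the baseline accuracy. The formula is not stated in the text, so the sketch below assumes it is the accuracy difference divided by the baseline, which reproduces the Thomson values in Table 2. The function and variable names are hypothetical.

```python
def relative_improvement(accuracy, baseline):
    """Relative accuracy change over the baseline, in percent (assumed formula)."""
    return 100.0 * (accuracy - baseline) / baseline

# (Thomson accuracy, Hamming baseline accuracy) per vowel, taken from Table 2.
thomson = {"/a/": (99.38, 94.83), "/i/": (99.86, 91.03), "/u/": (99.54, 87.86)}
improvements = {
    vowel: round(relative_improvement(acc, base), 2)
    for vowel, (acc, base) in thomson.items()
}
```

The same function gives a negative value when a method falls below the baseline, as for window $w_k$ on vowel /u/.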

5. Discussion

In this paper, we have compared the performance of different windowing techniques for MFCC extraction in order to investigate how to discriminate voice disorders from healthy controls. This classification problem has attracted interest in recent years, with the best reported results reaching approximately 79% recognition accuracy [42] on the SV database. In [38], 76.4% accuracy was obtained using a new parameterization of voice quality properties in the voice signal. Here, we showed that accuracies above 99% can be achieved using multitaper MFCCs. In contrast to previous studies in this application, we used recently proposed windowing techniques and multitaper spectrum estimation methods that had not previously been applied to the voice quality classification task.

Moreover, we examined the effect of the chosen multitaper parameters, namely, the number of tapers, the type of taper, and the weights of the Thomson multitaper method. In this work, the optimum number of tapers is 6 for vowel /a/, 15 for vowel /i/, and 16 for vowel /u/ (see Figure 8). The optimum number of tapers depends on the application and the dataset [25–30]. In [24], the bias, variance, and MSE (squared bias plus variance) of the MFCC estimator were investigated using a set of 50 different recordings of the phonemes /a/ and /l/. Sandberg et al. found that multitapers (multipeak, SWCE, and Thomson) with N between 8 and 16 offer a good tradeoff between bias and variance for most MFCCs. In this paper, we obtained similar results using multitaper MFCCs for voice quality classification, and it is clear that the number of tapers is an important parameter. Moreover, adaptive weights were found to be the best weighting scheme for the Thomson multitaper method for the phonemes /a/, /i/, and /u/.

As can be seen from Table 2, the proposed multitaper method provides better classification results than the newly proposed windowing methods in [34, 35] and the popular Hamming window. For voice quality classification problems, we find that the Thomson multitaper method, which is designed for smooth spectra and white noise in particular [24], can be chosen as the optimal tapering method. This is expected because disordered voice samples contain more noise than healthy voices, and the spectrum of such samples is estimated better by the multitaper method than by the single-taper method. In other words, the single-taper spectrum contains more detail for a voice frame, while the multitaper spectra are smoother, as can be seen in Figures 3 and 4. Thus, averaging spectral estimates helps to reduce the large variance of the single-taper spectrum estimate, especially for the Thomson multitaper method (see Figures 5 and 6). For this reason, multitaper MFCCs give better performance in differentiating pathological voices from healthy ones.

6. Conclusion

In the present study, we have investigated multitaper MFCC systems for a voice quality classification task. The Thomson, SWCE, and multipeak MFCC systems and GMM-based modeling techniques were employed for this task. The system was tested using sustained vowels (/a/, /i/, and /u/) from 650 normal and 650 pathological subjects. The experimental results showed that the Thomson method (using adaptive weights and N = 16) outperformed the SWCE and multipeak MFCC systems as well as the baseline Hamming window system. Moreover, it was found that important parameters such as the number of tapers used in the multitaper methods and the type of weights in the Thomson method affect the voice quality classification performance. Furthermore, the multitaper-based features performed slightly better in terms of accuracy than the features based on the novel windowing methods in most cases. These results confirm that multitaper methods (specifically the adaptively weighted Thomson multitaper MFCC) can be an alternative to the traditional MFCC, which uses the Hamming window, for automatic classification of voice quality. Acoustic assessment techniques (e.g., multitaper MFCC) by no means need to replace auditory-perceptual or laryngoscopic techniques, but they could help improve the voice quality analysis tools available to the clinician.

Conflict of Interests

The authors declare that they have no conflict of interests regarding the publication of this paper.

References

- 1.Omori K. Diagnosis of voice disorders. Japan Medical Association Journal. 2011;54(4):248–253. [Google Scholar]

- 2.Amara F., Fezari M. Recent Advances in Biology, Medical Physics, Medical Chemistry, Biochemistry and Biomedical Engineering. 2013. Voice pathologies classification using GMM and SVM classifiers; p. p. 65. [Google Scholar]

- 3.Henríquez P., Alonso J. B., Ferrer M. A., Travieso C. M., Godino-Llorente J. I., Díaz-de-María F. Characterization of healthy and pathological voice through measures based on nonlinear dynamics. IEEE Transactions on Audio, Speech, and Language Processing. 2009;17(6):1186–1195. doi: 10.1109/tasl.2009.2016734. [DOI] [Google Scholar]

- 4.Kundra P., Kumar V., Srinivasan K., Gopalakrishnan S., Krishnappa S. Laryngoscopic techniques to assess vocal cord mobility following thyroid surgery. ANZ Journal of Surgery. 2010;80(11):817–821. doi: 10.1111/j.1445-2197.2010.05441.x. [DOI] [PubMed] [Google Scholar]

- 5.Carvalho R. T. S., Cavalcante C. C., Cortez P. C. Wavelet transform and artificial neural networks applied to voice disorders identification. Proceedings of the 3rd World Congress on Nature and Biologically Inspired Computing (NaBIC '11); October 2011; Salamanca, Spain. IEEE; pp. 371–376. [DOI] [Google Scholar]

- 6.Zhang Y., Jiang J. J., Wallace S. M., Zhou L. Comparison of nonlinear dynamic methods and perturbation methods for voice analysis. Journal of the Acoustical Society of America. 2005;118(4):2551–2560. doi: 10.1121/1.2005907. [DOI] [PubMed] [Google Scholar]

- 7.Howard D. M., Abberton E., Fourcin A. Disordered voice measurement and auditory analysis. Speech Communication. 2012;54(5):611–621. doi: 10.1016/j.specom.2011.03.008. [DOI] [Google Scholar]

- 8.Little M. A., McSharry P. E., Roberts S. J., Costello D. A. E., Moroz I. M. Exploiting nonlinear recurrence and fractal scaling properties for voice disorder detection. BioMedical Engineering Online. 2007;6, article 23 doi: 10.1186/1475-925x-6-23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Fex B., Fex S., Shiromoto O., Hirano M. Acoustic analysis of functional dysphonia: before and after voice therapy (accent method) Journal of Voice. 1994;8(2):163–167. doi: 10.1016/s0892-1997(05)80308-x. [DOI] [PubMed] [Google Scholar]

- 10.Hirano M., Hibi S., Yoshida T., Hirade Y., Kasuya H., Kikuchi Y. Acoustic analysis of pathological voice: some results of clinical application. Acta Oto-Laryngologica. 1988;105(5-6):432–438. doi: 10.3109/00016488809119497. [DOI] [PubMed] [Google Scholar]

- 11.Godino-Llorente J. I., Fraile R., Sáenz-Lechón N., Osma-Ruiz V., Gómez-Vilda P. Automatic detection of voice impairments from text-dependent running speech. Biomedical Signal Processing and Control. 2009;4(3):176–182. doi: 10.1016/j.bspc.2009.01.007. [DOI] [Google Scholar]

- 12.Umapathy K., Krishnan S., Parsa V., Jamieson D. G. Discrimination of pathological voices using a time-frequency approach. IEEE Transactions on Biomedical Engineering. 2005;52(3):421–430. doi: 10.1109/tbme.2004.842962. [DOI] [PubMed] [Google Scholar]

- 13.Ma E. P.-M., Yiu E. M.-L. Suitability of acoustic perturbation measures in analysing periodic and nearly periodic voice signals. Folia Phoniatrica et Logopaedica. 2005;57(1):38–47. doi: 10.1159/000081960. [DOI] [PubMed] [Google Scholar]

- 14.Amir O., Wolf M., Amir N. A clinical comparison between two acoustic analysis softwares: MDVP and Praat. Biomedical Signal Processing and Control. 2009;4(3):202–205. doi: 10.1016/j.bspc.2008.11.002. [DOI] [Google Scholar]

- 15.Godino-Llorente J. I., Gómez-Vilda P., Blanco-Velasco M. Dimensionality reduction of a pathological voice quality assessment system based on gaussian mixture models and short-term cepstral parameters. IEEE Transactions on Biomedical Engineering. 2006;53(10):1943–1953. doi: 10.1109/tbme.2006.871883. [DOI] [PubMed] [Google Scholar]

- 16.Hansen J. H. L., Gavidia-Ceballos L., Kaiser J. F. A nonlinear operator-based speech feature analysis method with application to vocal fold pathology assessment. IEEE Transactions on Biomedical Engineering. 1998;45(3):300–313. doi: 10.1109/10.661155. [DOI] [PubMed] [Google Scholar]

- 17.Kasuya H., Endo Y., Saliu S. Novel acoustic measurements of jitter and shimmer characteristics from pathologic voice. Proceedings of the 3rd European Conference on Speech Communication and Technology (EUROSPEECH '93); September 1993; Berlin, Germany. pp. 1973–1976. [Google Scholar]

- 18.Ludlow C., Bassich C., Connor N., Coulter D., Lee Y. Laryngeal Function in Phonation and Respiration. Boston, Mass, USA: Brown; 1987. The validity of using phonatory jitter and shimmer to detect laryngeal pathology; pp. 492–508. [Google Scholar]

- 19.Alonso J. B., Díaz-de-María F., Travieso C. M., Ferrer M. A. Using nonlinear features for voice disorder detection. Proceedings of the 3rd International Conference on Nonlinear Speech Processing; April 2005; Barcelona, Spain. pp. 94–106. [Google Scholar]

- 20.Maragos P., Dimakis A., Kokkinos I. Some advances in nonlinear speech modeling using modulations, fractals, and chaos. Proceedings of the 14th International Conference on Digital Signal Processing (DSP '02); 2002; Santorini, Greece. pp. 325–332. [DOI] [Google Scholar]

- 21.Yu P., Ouaknine M., Revis J., Giovanni A. Objective voice analysis for dysphonic patients: a multiparametric protocol including acoustic and aerodynamic measurements. Journal of Voice. 2001;15(4):529–542. doi: 10.1016/s0892-1997(01)00053-4. [DOI] [PubMed] [Google Scholar]

- 22.Zhang Y., Jiang J. J., Biazzo L., Jorgensen M. Perturbation and nonlinear dynamic analyses of voices from patients with unilateral laryngeal paralysis. Journal of Voice. 2005;19(4):519–528. doi: 10.1016/j.jvoice.2004.11.005. [DOI] [PubMed] [Google Scholar]

- 23. Davis S. B., Mermelstein P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Transactions on Acoustics, Speech, and Signal Processing. 1980;28(4):357–366. doi: 10.1109/tassp.1980.1163420.

- 24. Sandberg J., Hansson-Sandsten M., Kinnunen T., Saeidi R., Flandrin P., Borgnat P. Multitaper estimation of frequency-warped cepstra with application to speaker verification. IEEE Signal Processing Letters. 2010;17(4):343–346. doi: 10.1109/lsp.2010.2040228.

- 25. Kinnunen T., Saeidi R., Sedlák F., et al. Low-variance multitaper MFCC features: a case study in robust speaker verification. IEEE Transactions on Audio, Speech and Language Processing. 2012;20(7):1990–2001. doi: 10.1109/tasl.2012.2191960.

- 26. Kinnunen T., Saeidi R., Sandberg J., Hansson-Sandsten M. What else is new than the Hamming window? Robust MFCCs for speaker recognition via multitapering. Proceedings of the 11th Annual Conference of the International Speech Communication Association (INTERSPEECH '10); September 2010; Makuhari, Japan. pp. 2734–2737.

- 27. Wieczorek M. A., Simons F. J. Minimum-variance multitaper spectral estimation on the sphere. The Journal of Fourier Analysis and Applications. 2007;13(6):665–692. doi: 10.1007/s00041-006-6904-1.

- 28. Alam M. J., Kenny P., O'Shaughnessy D. Advances in Nonlinear Speech Processing: 5th International Conference on Nonlinear Speech Processing, NOLISP 2011, Las Palmas de Gran Canaria, Spain, November 7–9, 2011. Proceedings. Vol. 7015. Berlin, Germany: Springer; 2011. A study of low-variance multi-taper features for distributed speech recognition; pp. 239–245. (Lecture Notes in Computer Science).

- 29. Attabi Y., Alam M. J., Dumouchel P., Kenny P., O'Shaughnessy D. Multiple windowed spectral features for emotion recognition. Proceedings of the 38th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '13); May 2013; Vancouver, Canada. IEEE; pp. 7527–7531.

- 30. Diez M., Penagarikano M., Bordel G., Varona A., Rodriguez-Fuentes L. J. On the complementarity of short-time Fourier analysis windows of different lengths for improved language recognition. Proceedings of the 15th Annual Conference of the International Speech Communication Association (INTERSPEECH '14); May 2014; Singapore. pp. 3032–3036.

- 31. Hansson M., Salomonsson G. A multiple window method for estimation of peaked spectra. IEEE Transactions on Signal Processing. 1997;45(3):778–781. doi: 10.1109/78.558503.

- 32. Riedel K. S., Sidorenko A. Minimum bias multiple taper spectral estimation. IEEE Transactions on Signal Processing. 1995;43(1):188–195. doi: 10.1109/78.365298.

- 33. Thomson D. J. Spectrum estimation and harmonic analysis. Proceedings of the IEEE. 1982;70(9):1055–1096.

- 34. Mottaghi-Kashtiban M., Shayesteh M. G. New efficient window function, replacement for the Hamming window. IET Signal Processing. 2011;5(5):499–505. doi: 10.1049/iet-spr.2010.0272.

- 35. Sahidullah M., Saha G. A novel windowing technique for efficient computation of MFCC for speaker recognition. IEEE Signal Processing Letters. 2013;20(2):149–152. doi: 10.1109/lsp.2012.2235067.

- 36. Alam M. J., Kinnunen T., Kenny P., Ouellet P., O'Shaughnessy D. Multitaper MFCC and PLP features for speaker verification using i-vectors. Speech Communication. 2013;55(2):237–251. doi: 10.1016/j.specom.2012.08.007.

- 37. Barry W. J., Pützer M. Saarbrücken Voice Database. Institute of Phonetics, Universität des Saarlandes, http://www.stimmdatenbank.coli.uni-saarland.de/

- 38. Pützer M., Barry W. J. Instrumental dimensioning of normal and pathological phonation using acoustic measurements. Clinical Linguistics & Phonetics. 2008;22(6):407–420. doi: 10.1080/02699200701830869.

- 39. Eskidere Ö. Source microphone identification from speech recordings based on a Gaussian mixture model. Turkish Journal of Electrical Engineering & Computer Sciences. 2014;22(3):754–767. doi: 10.3906/elk-1207-74.

- 40. Reynolds D. A. A Gaussian mixture modeling approach to text-independent speaker identification [Ph.D. thesis]. Atlanta, Ga, USA: Georgia Institute of Technology; 1992.

- 41. Chen T., Zhang J. On-line multivariate statistical monitoring of batch processes using Gaussian mixture model. Computers and Chemical Engineering. 2010;34(4):500–507. doi: 10.1016/j.compchemeng.2009.08.007.

- 42. Martínez D., Lleida E., Ortega A., Miguel A., Villalba J. Advances in Speech and Language Technologies for Iberian Languages. Vol. 328. Berlin, Germany: Springer; 2012. Voice pathology detection on the Saarbrücken voice database with calibration and fusion of scores using multifocal toolkit; pp. 99–109. (Communications in Computer and Information Science).