Abstract

This paper proposes an efficient electric bicycle tracking algorithm, EBTrack, which uses the high-precision, lightweight YOLOv7 as the target detector to make the detection and recognition of electric bicycle violations more efficient. EBTrack effectively captures the position and trajectory of electric bicycles in complex traffic monitoring scenarios. Firstly, we introduce a feature extraction network, ResNetEB, specifically designed for the re-identification of electric bicycles. To maintain real-time performance, feature extraction is performed only when generating new object IDs, minimizing the impact on processing speed. Secondly, for accurate target trajectory prediction, we incorporate a Kalman filter with an adaptively modulated noise scale. Additionally, considering the uncertainty of electric bicycle entry directions in traffic monitoring scenarios, we design a specialized matching mechanism to reduce frequent ID switching. Finally, to validate the algorithm's effectiveness, we collected diverse video data of electric bicycles and urban road traffic in Hefei, Anhui Province, covering different perspectives, time periods, and weather conditions. We trained the detector and evaluated tracking performance on this dataset. Experimental results show that EBTrack achieves 89.8 % MOTA (Multiple Object Tracking Accuracy) and 94.2 % IDF1 (ID F1-Score). Furthermore, the algorithm effectively reduces ID switching, significantly improving tracking stability and continuity.

Keywords: Multi-object tracking, Object detection, Convolutional neural networks, Electric bicycle detection, ByteTrack

1. Introduction

With the accelerating pace of life and the rise of emerging industries such as logistics and food delivery, electric bicycles, with their convenience, environmental friendliness, and energy efficiency, have become a preferred mode of urban transportation. However, in complex traffic environments, the failure of e-bike riders to wear safety helmets poses a constant threat to personal safety. Research indicates that approximately 80 % of annual accidents involving motorcycle and e-bike riders are caused by the absence of safety helmets. Despite the nationwide 'One Helmet, One Belt' safety campaign, limited safety awareness among riders, low penalties for violations, constrained law enforcement resources, and inefficient, costly on-site helmet compliance checks hinder a substantial increase in helmet usage among e-bike riders. Therefore, using artificial intelligence to detect whether e-bike riders wear helmets and to assist traffic enforcement personnel in tracking and identifying violations is of significant importance for road traffic safety.

In recent years, object tracking algorithms in computer vision have made significant advancements. In 2016, Bertinetto et al. [1] introduced SiamFC, a single-object tracking algorithm based on Siamese networks. It employs an end-to-end fully convolutional network that estimates object positions through feature extraction and cross-correlation computations. Building upon this foundation, additional single-object tracking algorithms have been proposed, such as CFNet [2], which incorporates correlation filtering, and SiamMa [3], which integrates semantic segmentation. These advancements have further enhanced tracking performance. Similarly, researchers have made significant contributions to other object tracking algorithms. From the early DSST [4] algorithm to subsequent innovations like CCOT [5], ECO [6], ATOM [7], and Dimp50 [8], these algorithms have further propelled the development of the object tracking field. With the rapid evolution of object detectors, an increasing number of object tracking algorithms are leveraging powerful object detectors to enhance tracking performance [9], such as the YOLO series of object detectors [10], YOLOR, YOLOX, Faster R–CNN [11], and others.

In this regard, Bewley et al. [12] introduced an important multi-object tracking algorithm in 2016, namely the SORT algorithm. The algorithm utilizes Faster R–CNN as the object detector, employs Kalman filters for object trajectory prediction, and utilizes the Hungarian algorithm to find the specific matching object bounding box with the maximum IOU (Intersection Over Union) to achieve object matching between consecutive frames. Although the algorithm has the advantages of a simple structure and fast execution speed, it faces challenges in complex scenarios, such as unstable object ID associations and frequent ID switches. Thereafter, the DeepSORT algorithm was introduced based on SORT [13]. This algorithm incorporates cascade matching and feature re-identification networks. Cascade matching enhances the tracking of occluded objects, while the feature re-identification network aids in distinguishing differences between different objects, thus improving the accuracy and robustness of multi-object tracking.

These improvements enable the DeepSORT algorithm to better handle object association challenges, especially in cases of prolonged occlusion, resulting in a significant enhancement in tracking performance. However, the SORT and DeepSORT algorithms associate only bounding boxes whose confidence scores exceed a specific threshold and discard those with low confidence scores, which often arise from occlusion or motion blur. This leads to significant object loss and track fragmentation. To address this issue, the ByteTrack algorithm [14] employs the BYTE association method. It not only associates high-confidence bounding boxes but also considers matching low-confidence bounding boxes to a certain extent. By combining YOLOX as the object detector with the BYTE association method, the ByteTrack algorithm delivers higher accuracy and robustness in multi-object tracking tasks.

Due to the exceptional performance of the Transformer architecture across various domains, researchers have been prompted to introduce it for multi-object tracking [15]. Accordingly, in 2022, Zhou X et al. [16] proposed an innovative global multi-object tracking algorithm based on the Transformer architecture. This approach took short video sequences as input, utilizing the Transformer to encode object features and generated specific trajectories, all without the need for intermediate pairwise grouping or combinatorial association. Furthermore, it could be jointly trained with an object detector, thereby enhancing the performance of global multi-object tracking.

Subsequently, in 2023, Zhang Y et al. [17], building upon MOTR [18], introduced MOTRv2. They incorporated an additional object detector to provide prior detection information to MOTR, consequently enhancing end-to-end multi-object tracking performance. However, it should be noted that tracking algorithms based on the Transformer architecture faced challenges in terms of terminal deployment and runtime speed, necessitating further optimization.

Currently, in research related to applications in traffic monitoring scenarios, Zihan P et al. [19] conducted a study on the recognition of wrong-way riding behavior of electric bicycles. They employed a hybrid Gaussian model to extract background and used background subtraction to extract the foreground of electric bicycles. Furthermore, by combining Kalman filtering and vehicle centroid features, they predicted and tracked the characteristics of vehicles for the next moment. Ultimately, by analyzing changes in the centroid coordinates of electric bicycles, they successfully identified instances of wrong-way riding behavior.

Xiaoping W et al. [20], in response to challenges related to occlusion, rotation, and scale transformation, have enhanced the MDnet [21] algorithm to improve object tracking accuracy in complex traffic scenarios. They employed optical flow change information and small convolutional kernels to track and predict motor vehicles, non-motorized vehicles, and pedestrians.

Zhenxiao L et al. [22] proposed a multi-vehicle tracking algorithm to address real-time performance and ID switching issues in multi-object tracking. They first employed YOLOv3 as the object detector and then, in combination with the DeepSORT tracking algorithm, introduced an LSTM motion model for tracking vehicle objects in traffic scenarios.

Caihong L et al. [23] have designed a cross-view multi-object tracking visualization algorithm based on field-of-view stitching. They utilized the geometric information of video scenes to achieve field-of-view stitching, presenting tracked objects from different perspectives in a unified field of view. This algorithm facilitated the presentation of surveillance scene information from multiple perspectives within the same field of view, providing a more convenient way to monitor traffic intersections.

Table 1 summarizes the reviewed literature, outlining the strengths and weaknesses associated with each method.

In summary, the existing algorithms have not effectively addressed issues such as frequent ID switching and fragmented tracking trajectories in complex traffic scenarios. Electric bicycle riders may pass through the monitoring area from a distance or up close, leading to variable object scales. Furthermore, due to several factors, such as crowded areas and mutual occlusion, traditional object tracking algorithms often struggle to handle these complex traffic scenarios effectively, resulting in frequent ID switches, reduced tracking range and accuracy, and fragmented tracking trajectories. Therefore, achieving effective recognition of violations by electric bicycle riders necessitates the accurate differentiation of various objects and the continuous tracking of their behavioral trajectories.

Despite the progress made in object tracking algorithms, several gaps still require attention. Firstly, current algorithms have not adequately addressed the challenge of frequent ID switching in complex traffic scenarios, which results in fragmented tracking trajectories. This problem is especially prevalent in situations with high pedestrian density and frequent occlusions. Secondly, traditional object tracking algorithms often face difficulties in handling objects with varying scales, as they may appear in the monitored area from a distance or in close proximity. Lastly, the real-time performance and accuracy of object tracking algorithms need to be improved to meet the practical demands of traffic monitoring scenarios.

Therefore, this paper proposes EBTrack, an electric bicycle tracking algorithm designed for traffic monitoring scenarios.

The EBTrack algorithm utilizes cutting-edge distance measurement technologies, as outlined by Liu and Bao [24], that make use of ultra-wideband sensors to enable accurate real-time monitoring of electric bicycles in city traffic. Additionally, our strategy builds upon the fundamental research on distance measurement technologies based on electromagnetic waves, as examined by Liu and Bao [25], which forms the basis for the remote sensing capabilities crucial to the effectiveness of EBTrack. The lightweight YOLOv7 is used as the object detector, and ResNetEB is introduced as the feature extraction network. An adaptive modulated noise scale Kalman filter has also been incorporated, and the association matching mechanism has been redesigned.

The EBTrack tracking algorithm offers several advantages. Firstly, the lightweight YOLOv7 object detector achieves efficient and accurate object detection, providing reliable object position information and thereby enhancing the accuracy and stability of the tracking algorithm. Secondly, the ResNetEB feature extraction network is specifically designed for feature extraction and re-identification of electric bicycles. It improves the performance of the tracking algorithm in complex scenarios with high pedestrian density and occlusion by providing a more reliable feature representation. Thirdly, the adaptive modulated noise scale Kalman filter enhances the accuracy and stability of object trajectories, allowing the tracking algorithm to better adapt to changes in object motion and the environment. Finally, the redesigned association matching mechanism reduces object ID switching, improving tracking stability and continuity while mitigating fragmented tracking trajectories. This mechanism aligns the EBTrack algorithm more closely with practical requirements and establishes an accurate data foundation for effective violation recognition.

The proposed method presents a number of enhancements compared to the standard YOLOv7 model for electric bicycle tracking. The improvements include the introduction of the ResNetEB Feature Extraction Network, which is specifically designed for electric bicycle re-identification. This network enhances performance in scenarios with high pedestrian density and occlusion, providing more reliable feature representation. Additionally, an Adaptive Modulated Noise Scale Kalman Filter is incorporated to improve the accuracy and stability of object trajectories by adapting to changes in object motion and the environment. The Association Matching Mechanism is also redesigned to consider the special motion patterns of electric bicycles, reducing object ID switching and improving tracking stability. Furthermore, the algorithm is optimized for real-time performance, with feature extraction only occurring when generating new object IDs and the matching mechanism balanced for accuracy and speed. These enhancements enable the EBTrack algorithm to achieve superior performance in electric bicycle tracking tasks, enhancing data reliability for effective violation recognition. The primary contributions are outlined as follows.

- The lightweight YOLOv7 object detector achieves efficient and accurate object detection, providing dependable input data for the subsequent tracking stages.

- The ResNetEB feature extraction network, specifically designed for feature extraction and re-identification of electric bicycles, significantly enhances the performance of the tracking algorithm in challenging scenarios characterized by high pedestrian density and occlusion.

- The adaptive modulated noise scale Kalman filter improves the accuracy and stability of object trajectories, allowing the algorithm to adapt effectively to variations in object motion and environmental conditions.

- The redesigned association matching mechanism alleviates object ID switching, leading to improved tracking stability and continuity, and addresses the problem of fragmented tracking trajectories.

The EBTrack tracking algorithm has established a more robust data foundation, thereby facilitating effective violation recognition in traffic monitoring scenarios.

2. Related work

2.1. The object detector YOLOv7

YOLOv7 combines high accuracy with a lightweight design and performs excellently across the range from 5 FPS to 160 FPS, making it a powerful solution for various application scenarios. Compared to previous detectors, such as YOLOX, SSD [26], and Faster R–CNN, YOLOv7 has achieved significant improvements in both accuracy and speed. These enhancements are attributed to several key techniques, including model reparameterization in the network architecture, the cross-grid label assignment technique of YOLOv5, and the matching technique of YOLOX.

Additionally, YOLOv7 introduces a novel ELAN (Efficient Layer Aggregation Networks) structure, which maintains model performance while reducing computational complexity. Fig. 1 shows this structure, annotated with the kernel size and stride of each convolution. YOLOv7 also introduces a training method with auxiliary heads, which enhances detection accuracy at the expense of a higher training cost without affecting inference time.

Fig. 1.

ELAN structure in YOLOv7.

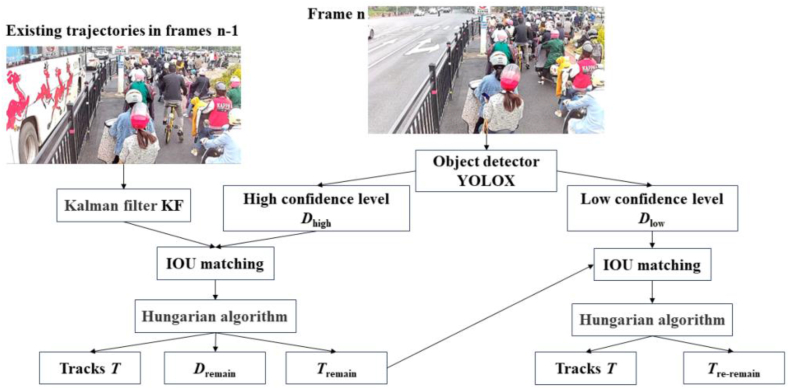

2.2. The ByteTrack tracking algorithm

The ByteTrack tracking algorithm is based on object detection. Similar to other non-Re-ID algorithms, it solely uses the bounding boxes obtained after object detection for tracking. The algorithm employs Kalman filtering to predict bounding boxes and then uses the Hungarian algorithm for matching between objects and trajectories. Its innovation lies in prioritizing the matching of high-confidence bounding boxes with existing trajectories and then matching low-confidence bounding boxes with unassociated trajectories. During this process, it restores true objects and filters out interference factors by comparing the similarity of low-confidence bounding boxes with previous trajectories. The algorithm's flowchart is depicted in Fig. 2.

Fig. 2.

Basic flowchart of ByteTrack tracking algorithm.
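The two-stage association described above can be sketched in a few lines. The following is a minimal illustration under stated assumptions, not the ByteTrack implementation: a greedy best-IoU-first matcher stands in for the Hungarian algorithm, the predicted track boxes are passed in directly instead of coming from a Kalman filter, and the threshold values (`t_high`, `t_low`, `min_iou`) and all function names are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], det_ids: List[int],
                 boxes: List[Box], min_iou: float) -> Dict[int, int]:
    """Greedy stand-in for the Hungarian algorithm: best IoU pairs first."""
    pairs = sorted(
        ((iou(tbox, boxes[d]), tid, d)
         for tid, tbox in tracks.items() for d in det_ids),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, d in pairs:
        if score < min_iou:
            break  # every remaining pair overlaps even less
        if tid not in matches and d not in used:
            matches[tid] = d
            used.add(d)
    return matches


def byte_associate(tracks: Dict[int, Box], boxes: List[Box],
                   scores: List[float], t_high: float = 0.6,
                   t_low: float = 0.1, min_iou: float = 0.3):
    """Return (track_id -> detection index, unmatched high-confidence indices)."""
    high = [i for i, s in enumerate(scores) if s >= t_high]
    low = [i for i, s in enumerate(scores) if t_low <= s < t_high]

    # Stage 1: high-confidence boxes against all existing tracks.
    first = greedy_match(tracks, high, boxes, min_iou)

    # Stage 2: low-confidence boxes against the tracks left unassociated.
    remaining = {tid: b for tid, b in tracks.items() if tid not in first}
    second = greedy_match(remaining, low, boxes, min_iou)

    matched_dets = set(first.values())
    unmatched_high = [i for i in high if i not in matched_dets]
    return {**first, **second}, unmatched_high
```

In this sketch, a low-confidence detection can only extend an existing track and never starts a new one; only unmatched high-confidence detections are returned as candidates for new tracks.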

The primary idea behind the ByteTrack tracking algorithm is to create tracking trajectories and use them to match objects in each frame, forming complete trajectories frame by frame. While ByteTrack demonstrates commendable performance in object tracking, several aspects are worth improving. Firstly, ByteTrack has not fully explored the potential of stronger object detectors, and the quality of the object detector directly determines the performance ceiling of the tracking algorithm. Secondly, ByteTrack does not use a feature re-identification network and therefore neglects the appearance information of the objects, which leads to suboptimal tracking performance in practical applications. Lastly, ByteTrack uses a traditional linear Kalman filter with the same measurement noise scale for all objects, without considering differences in the quality of the detection results. This makes it susceptible to low-quality detections, potentially affecting the accuracy of state estimation (see Table 1).

Table 1.

Literature review summary: Pros and cons of various techniques for object tracking.

| Method | Advantages | Disadvantages |
|---|---|---|
| SiamFC | End-to-end fully convolutional network for object position estimation | Struggles with significant appearance changes and large search regions |
| CFNet | Combines correlation filtering with Siamese networks | Sensitive to background clutter and occlusions |
| SiamMa | Integrates semantic segmentation for robust tracking | Complex network structure; sensitive to scale changes |
| DSST | Efficient and robust algorithm | Not suitable for real-time applications |
| CCOT | Accurate and robust tracking | High computational complexity |
| ECO | Balances speed and accuracy | Struggles with fast-moving objects |
| ATOM | Adaptive online learning for robust tracking | Sensitive to occlusions and similar-looking objects |
| Dimp | Discriminative model prediction for accurate tracking | High computational cost |
| SORT | Simple and fast algorithm | Unstable object ID associations and frequent ID switches |
| DeepSORT | Cascade matching and feature re-identification network | Compromises real-time performance due to feature extraction |
| ByteTrack | Effectively handles low-confidence bounding boxes | Relies solely on bounding box information without considering appearance features |
| MOTR | Global multi-object tracking using Transformer architecture | Computationally intensive and challenging for terminal deployment |
| Zihan P et al. | Recognizes wrong-way riding behavior of electric bicycles | Limited to background subtraction and centroid analysis |
| Xiaoping W et al. | Enhances MDnet algorithm for complex traffic scenarios | May struggle with fast-moving objects and significant appearance changes |
| Zhenxiao L et al. | Introduces LSTM motion model for vehicle tracking | Does not address issues with frequent ID switching |
| Caihong L et al. | Provides cross-view visualization of tracked objects | Complex implementation and limited to stitched field-of-view |

3. Method

The EBTrack algorithm has been specifically developed to effectively monitor electric bicycles in intricate traffic situations. It incorporates the YOLOv7 object detector, the NSA Kalman filter, and the ResNetEB feature extraction network, in addition to a customized matching mechanism. The comprehensive structure of the EBTrack algorithm is depicted in Table 2 as a pseudocode. By processing video sequences as its input, the algorithm generates the trajectories of electric bicycles, providing details, such as their bounding boxes, identifiers, and feature data.

Table 2.

Pseudo-code of EBTrack algorithm.

3.1. The EBTrack tracking algorithm

To address the issues present in the ByteTrack tracking algorithm, the proposed EBTrack tracking algorithm starts by utilizing a high-performance object detector, YOLOv7, as the foundation for electric bicycle tracking. YOLOv7 is known for its high precision and lightweight design, enabling accurate detection of electric bicycle positions and providing reliable input data for subsequent tracking. Furthermore, the EBTrack tracking algorithm is built upon the ByteTrack tracking algorithm [14] by introducing a feature extraction network tailored for re-identifying electric bicycles.

To ensure real-time performance, feature extraction is only performed when generating new object IDs, preventing frequent feature extraction from negatively impacting the tracking algorithm's real-time capabilities. Additionally, for more accurate trajectory predictions as well as enhanced tracking precision and stability, the algorithm draws inspiration from traditional linear Kalman filters [27] and introduces an adaptive modulated noise scale Kalman filter [28]. Given that, in real traffic scenarios, electric bicycles typically enter the monitoring frame from near or far rather than suddenly appearing in the center of the frame, the algorithm incorporates prior knowledge and designs a specialized matching mechanism to reduce the issue of ID switching caused by occlusions and other factors.

3.2. EBTrack tracking algorithm basic workflow

The EBTrack tracking algorithm fuses the YOLOv7 object detector, the NSA Kalman filter, and the object re-identification network ResNetEB with a specialized matching mechanism. This design enables the algorithm to demonstrate excellent accuracy and real-time tracking performance, particularly in complex scenarios involving object occlusion and appearance changes. The pseudocode for the EBTrack tracking algorithm is presented in Table 2, which encompasses feature extraction and the matching mechanism. The algorithm takes as input a video sequence, the YOLOv7 object detector, the NSA Kalman filter, the detection confidence thresholds $T_{high}$ and $T_{low}$, the frame regions $F_{margin}$ and $F_{middle}$, and the re-identification network ResNetEB. The algorithm's output is the set of object trajectories, where each trajectory includes a bounding box, a unique ID, and the feature information extracted when the object was created.

The algorithm utilizes YOLOv7 as the object detector, performing object detection in each frame of the video. Firstly, the detection results are categorized into two classes based on the bounding box confidence, including high confidence and low confidence (Table 2, from line 3 to line 13). Secondly, the NSA Kalman filter is employed for position prediction and is updated (Table 2, from line 14 to line 16). During the initial association phase, high-confidence bounding boxes are used to perform IoU matching with all existing trajectories (Table 2, from line 17 to line 22). In the new trajectory generation phase, the object's position within the frame is taken into consideration. If the object is located at the frame's edge, feature information is directly extracted using the re-identification network ResNetEB, and a new trajectory is created. If the object is in the middle of the frame, ResNetEB is also used to extract feature information, and a third association is performed. This association step matches the feature information with all existing trajectories. If a successful match is made, the object is assigned to an existing trajectory. If a successful match cannot be made even after two frames, a new trajectory is created for the object (Table 2, from line 23 to line 38).
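Two of the steps above, the confidence split and the decision based on where a detection lies in the frame, can be illustrated with a small sketch. The paper does not state how the margin and middle regions are delimited, so the border band of 10 % of the frame size and the use of the box center are assumptions made here for illustration; the function names and threshold values are likewise hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def split_by_confidence(scores: List[float], t_high: float,
                        t_low: float) -> Tuple[List[int], List[int]]:
    """Return indices of high- and low-confidence detections.

    Detections scoring below t_low are dropped entirely.
    """
    high = [i for i, s in enumerate(scores) if s >= t_high]
    low = [i for i, s in enumerate(scores) if t_low <= s < t_high]
    return high, low


def frame_region(box: Box, frame_w: float, frame_h: float,
                 margin_ratio: float = 0.1) -> str:
    """Classify a box as 'margin' or 'middle' by the position of its center.

    Assumption: the margin is a border band whose width is margin_ratio
    times the frame size along each axis.
    """
    cx = (box[0] + box[2]) / 2.0
    cy = (box[1] + box[3]) / 2.0
    mx, my = frame_w * margin_ratio, frame_h * margin_ratio
    in_middle = (mx <= cx <= frame_w - mx) and (my <= cy <= frame_h - my)
    return "middle" if in_middle else "margin"
```

Under this reading, an unmatched detection classified as `"margin"` starts a new trajectory immediately, whereas one classified as `"middle"` first goes through feature matching against existing trajectories.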

3.3. The re-identification network ResNetEB

During the process of tracking electric bicycles, there are instances where objects are either occluded or not correctly detected by the object detector. This can result in objects briefly disappearing and then reappearing in the detector's field of view. Relying solely on the detector's results in such situations can lead to the issue of frequent object ID switching, where the same actual object may be assigned multiple IDs during the tracking process, significantly impacting subsequent behavior recognition. To address this issue, in the EBTrack algorithm, a re-identification network has been introduced. Its primary function is to extract the appearance information of objects, capturing their external characteristics to differentiate between the identities of different objects. This, in turn, helps overcome the problem of frequent object ID switching during the tracking process.

By analyzing the original DeepSORT re-identification network, it was observed that it consists of 6 residual blocks, ultimately outputting features with a dimension of 128. However, this network structure is relatively simple, making it challenging to accurately capture real-time changes in the appearance of electric bicycles. As a result, there are noticeable limitations in the re-identification task for electric bicycle objects.

To address the aforementioned issue, two key improvements were implemented. Firstly, the feature dimension was increased from 128 to 512 to enhance feature granularity and classification accuracy, thus strengthening the discrimination capability of the tracking algorithm. This allows the network to capture changes in the appearance of electric bicycles more accurately, contributing to improved re-identification performance. Secondly, to better accommodate the feature extraction requirements of electric bicycles in complex scenarios while maintaining real-time performance, the network's depth was increased; the resulting network is named ResNetEB. The specific network structure is given in Table 3. Together, these two improvements enable the re-identification network to better meet the requirements of electric bicycle tracking while maintaining real-time performance.

Table 3.

ResNetEB network structure.

| Layer | Output |
|---|---|
| Convolutional | 32*256*128 |
| Convolutional | 32*256*128 |
| Max pooling | 32*128*64 |
| Residual | 32*128*64 |
| Down sampling residual | 64*64*32 |
| Residual | 64*64*32 |
| Down sampling residual | 128*32*16 |
| Residual | 128*32*16 |
| Down sampling residual | 256*16*8 |
| Residual | 256*16*8 |
| Down sampling residual | 512*8*4 |
| Residual | 512*8*4 |
| Fully connected | 512 |

The structure of a standard residual block in ResNetEB is illustrated in Fig. 3(a); it comprises a main branch and a residual branch. The figure annotates each convolution with its kernel size, stride, and number of channels. The main branch consists of two convolutional modules. The first is a convolution with a stride of 1 whose number of channels matches the input, followed by BN (Batch Normalization) and the ReLU (Rectified Linear Unit) activation function [29]. The data then passes through the second convolutional module, is added to the residual branch, and is activated by ReLU. The standard residual block alters neither the spatial dimensions nor the number of channels of the feature map. When down sampling is required, the down sampling residual block shown in Fig. 3(b) is used. Its first convolutional layer in the main branch uses a stride of 2 and doubles the number of channels, which halves the feature map's size. The residual branch likewise uses a convolution with a stride of 2 that doubles the number of channels, so that its output matches the shape of the main branch. Finally, the main branch and the residual branch are added together and activated using the ReLU function.

Fig. 3.

Residual block comparison of (a) Residual block and (b) Down sampling residual block.
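The effect of the two block types on the feature map shape can be checked with a short calculation. The sketch below only tracks shapes and does not implement the convolutions. It assumes that the output sizes in Table 3 are ordered as channels, height, width and that the blocks after max pooling are applied in the order listed there; the function names are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width), assumed ordering


def residual(shape: Shape) -> Shape:
    """A standard residual block keeps channels and spatial size unchanged."""
    return shape


def down_sampling_residual(shape: Shape) -> Shape:
    """A stride-2 block doubles the channels and halves height and width."""
    c, h, w = shape
    return (2 * c, h // 2, w // 2)


def trace_stages(after_pooling: Shape = (32, 128, 64)) -> List[Tuple[str, Shape]]:
    """Trace shapes through one residual block and four down sampling stages."""
    blocks = ["residual"] + ["down", "residual"] * 4
    shape = after_pooling
    trace = []
    for kind in blocks:
        shape = down_sampling_residual(shape) if kind == "down" else residual(shape)
        trace.append((kind, shape))
    return trace


if __name__ == "__main__":
    for kind, shape in trace_stages():
        print(f"{kind:8s} -> {shape}")
```

Starting from the 32*128*64 feature map after max pooling, four down sampling stages give 512 channels on an 8*4 map, which matches the last residual row of Table 3 before the fully connected layer.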

The use of a residual structure is beneficial for maintaining the integrity of input information, reducing information loss in the forward propagation process, which is common in traditional convolutional layers. Furthermore, the network only needs to learn the differential parts between the input and output, simplifying the complexity of network training and improving convergence speed. In addition, the use of residual blocks effectively addresses the issues of gradient vanishing and exploding during network backpropagation. Finally, a fully connected layer is employed for classification training in the re-identification network. Once the training stage has been accomplished, the fully connected layer can be ignored, allowing for the direct matching of the feature vectors extracted by the network with the appearance information of the object and historical appearance information of trajectories. The advantages of this structure lie in its effectiveness and adaptability.

Therefore, by increasing the feature dimension to 512 in the re-identification network of the original DeepSORT, the algorithm has enhanced feature granularity and classification accuracy. Additionally, by increasing the network's depth and designing the ResNetEB network, it can better capture changes in the appearance of electric bicycles while maintaining real-time performance. This design adjustment allows the algorithm to better adapt to the task of tracking electric bicycles.

3.4. Kalman filter with adaptive modulation noise scale

In object tracking algorithms, the motion prediction module is a crucial component. Tracking algorithms typically model object motion with a Kalman filter [27]. However, the standard linear Kalman filter uses the same measurement noise scale for all objects, regardless of detection quality, so low-quality detections can easily degrade the accuracy of state estimation. The larger the noise scale, the smaller the weight given to the detection in the state update step, reflecting greater uncertainty in the measurement. To obtain more precise motion states and make subsequent association more robust, a Kalman filter with adaptive noise scale adjustment [28], referred to as NSA KF, is employed. It adapts the noise scale during the update step according to the confidence of the detection, yielding more accurate motion state estimates. Specifically, the constant noise covariance is replaced with an adaptively computed noise covariance, as shown in Equation (1):

$$\tilde{R}_k = (1 - c_k)\,R_k \tag{1}$$

In Equation (1), $R_k$ represents the preset constant measurement noise covariance, and $c_k$ represents the detection confidence at state $k$. While the Kalman filter with adaptive noise scale adjustment is a relatively simple method, it improves object tracking performance, particularly when detection confidences vary, and enhances the accuracy and robustness of object tracking.
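The effect of the adaptive noise scale can be sketched with a toy Kalman update. This is a minimal illustration that assumes the commonly used NSA form, in which the constant measurement covariance is multiplied by one minus the detection confidence; the helper names and the one-dimensional state are hypothetical and not taken from the EBTrack implementation.

```python
import numpy as np


def nsa_measurement_noise(R: np.ndarray, confidence: float) -> np.ndarray:
    """Scale the constant measurement covariance R by (1 - confidence)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    return (1.0 - confidence) * R


def kalman_update(x, P, z, H, R):
    """Standard linear Kalman update; returns posterior mean and covariance."""
    S = H @ P @ H.T + R                 # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)      # Kalman gain
    x_new = x + K @ (z - H @ x)
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new


# Toy 1-D example: the state is a position that is measured directly.
x = np.array([0.0])
P = np.array([[1.0]])
H = np.array([[1.0]])
R = np.array([[1.0]])
z = np.array([1.0])

# A low-confidence detection keeps a large noise term and moves the state less.
x_low, _ = kalman_update(x, P, z, H, nsa_measurement_noise(R, 0.2))
# A high-confidence detection shrinks the noise term and moves the state more.
x_high, _ = kalman_update(x, P, z, H, nsa_measurement_noise(R, 0.9))
```

With identical measurements, the posterior for the high-confidence detection ends up closer to the measurement, which is the behaviour the adaptive noise scale is meant to provide.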

3.5. Introducing a specialized matching mechanism with prior knowledge

In complex traffic surveillance scenarios, electric bicycles typically enter the monitoring frame from distant or nearby locations at its edges rather than suddenly appearing at the center of the frame. This prior knowledge serves as a crucial basis for the current research. To better meet application requirements, a specialized matching mechanism has been designed, as illustrated in Fig. 4.

Fig. 4.

Special matching mechanism introducing prior knowledge.

Provided that the electric bicycle detector meets the requirements for detection accuracy and speed, the appearance of a new object ID in the monitoring frame typically indicates that the tracking algorithm may have lost a previously tracked object. In such cases, the following approach is employed.

Firstly, when a new object is about to appear at the center of the monitoring frame, the feature re-identification network is used to extract the new object's features and match them against the features of recently tracked objects. This step helps identify and track the object more accurately. The features used encompass appearance features and features describing interaction with the surrounding environment, so that the information obtained is highly distinctive and reliable.

Secondly, object matching is complicated by factors such as occlusion, which cause objects to be temporarily lost during tracking. Therefore, when a new object is about to appear in the central region of the monitoring frame, a delayed matching strategy is employed: a new object ID is generated only if no successful match with a previously lost object has been achieved after two frames. This two-frame window accounts for brief losses of an object in the frame and reduces ID switching caused by factors such as occlusion. The strategy improves the stability and reliability of the tracking algorithm and reduces the generation of false and repeated object IDs.

Furthermore, to maintain the real-time performance of the tracking algorithm, the appearance features are extracted only when a new object is initially generated and during the delayed matching period for the soon-to-be generated object. This strategy maximizes the utilization of the feature re-identification network while ensuring the real-time capability of the tracking algorithm, minimizing the impact of ID switching issues.
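The matching mechanism described in this subsection can be sketched as a small decision function. This is a hedged illustration only: the border margin, the cosine-distance gate, the reading of "two frames" as two consecutive unmatched frames, and all names (`decide`, `PendingDetection`, `CENTER_MARGIN`, `MATCH_THRESHOLD`) are assumptions made for the example and are not specified in the text.

```python
from dataclasses import dataclass

import numpy as np

DELAY_FRAMES = 2       # two-frame window described in the text
CENTER_MARGIN = 0.2    # hypothetical: border band treated as the entry zone
MATCH_THRESHOLD = 0.3  # hypothetical cosine-distance gate


@dataclass
class PendingDetection:
    """Counts the frames in which a central detection stayed unmatched."""
    frames_unmatched: int = 0


def in_central_region(cx, cy, width, height, margin=CENTER_MARGIN):
    """True if the box centre lies outside the border band of the frame."""
    return (margin * width < cx < (1 - margin) * width
            and margin * height < cy < (1 - margin) * height)


def cosine_distance(a, b):
    return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def decide(center, frame_size, feature, lost_tracks, pending):
    """Return ("new_id" | "reuse" | "wait", track_id or None)."""
    cx, cy = center
    width, height = frame_size
    if not in_central_region(cx, cy, width, height):
        # Objects entering from the frame border are treated as genuinely new.
        return "new_id", None
    if lost_tracks:
        # Appearance features are only compared for central, unmatched detections.
        best_id = min(lost_tracks,
                      key=lambda tid: cosine_distance(feature, lost_tracks[tid]))
        if cosine_distance(feature, lost_tracks[best_id]) < MATCH_THRESHOLD:
            return "reuse", best_id
    pending.frames_unmatched += 1
    if pending.frames_unmatched >= DELAY_FRAMES:
        return "new_id", None  # still unmatched after the delay window
    return "wait", None
```

For example, calling `decide((960, 540), (1920, 1080), feature, lost_tracks, PendingDetection())` for a detection at the centre of a 1920 × 1080 frame either reuses a lost track ID or defers the decision to the next frame.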

The EBTrack tracking algorithm presents numerous benefits, such as the utilization of YOLOv7 for efficient and precise object detection, the enhancement of trajectory prediction through the NSA Kalman filter, and the reduction of ID switching via the specialized matching mechanism. In complex scenarios, the ResNetEB feature extraction network further improves the algorithm's performance by offering dependable feature representation for electric bicycles.

4. Experimental results and analysis

For algorithm training and validation, the hardware and software platform environment used consists of the following specifications: GPU: NVIDIA GeForce RTX 3060 Laptop GPU; CPU: 11th Gen Intel Core i5-11260H @ 2.60 GHz Hexa-core; VRAM: 6 GB; RAM: 32 GB; Operating System: Windows 10; Deep Learning Framework: PyTorch 1.12; and Programming Language: Python.

4.1. Dataset

Currently, there is no existing dataset for electric bicycle detection and tracking. To meet the requirements of training and validating electric bicycle tracking algorithms, data have been collected and annotated from existing traffic intersection cameras under various conditions, including different locations, time periods, scenes, and weather conditions. The dataset covers video of electric bicycles during peak and off-peak traffic hours, in daytime and nighttime, and in rainy weather. The total duration of the videos amounts to 500 h, with a resolution of 1920 × 1080 and a frame rate of 15 frames per second. Additionally, 10,000 original images containing electric bicycle objects have been extracted from these video data. After data cleaning and augmentation, two distinct datasets were created, namely one for electric bicycle object detection (Dataset-Det) and another for electric bicycle re-identification (Dataset-ID). Finally, 30 diverse and representative video segments, each lasting 5 min, have been randomly selected from the original videos. This subset was used as the validation dataset for tracking algorithms (Dataset-Track). Fig. 5 displays some sample images from the electric bicycle dataset.

Fig. 5.

Partial images of electric bicycle dataset.

4.2. Evaluation metrics

In this study, the following evaluation metrics were used to assess algorithm performance, namely MOTA (Multiple Object Tracking Accuracy), IDF1 (ID F1-Score), FPS (Frames Per Second), Precision, and Recall.

4.2.1. MOTA (Multiple Object Tracking Accuracy)

MOTA (Multiple Object Tracking Accuracy) is one of the most widely used evaluation metrics for object tracking and is intended to give a comprehensive assessment of the performance of object tracking algorithms. MOTA takes into account three primary sources of tracking errors: FP (False Positives), FN (False Negatives), and ID (Identity) Switches, all of which are crucial in practical object tracking scenarios. The formula for MOTA is shown in Equation (2).

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FP}_t + \mathrm{FN}_t + \mathrm{IDSW}_t\right)}{\sum_t \mathrm{GT}_t} \tag{2}$$

where $\mathrm{FP}_t$ represents the number of false positive detections in frame $t$, indicating instances where the algorithm incorrectly marks the background or a non-object as an object. $\mathrm{FN}_t$ represents the number of false negative detections in frame $t$, signifying instances where actual objects exist but are not detected by the algorithm. $\mathrm{IDSW}_t$ refers to the number of identity switch errors in frame $t$, which occur when an object's identity changes during tracking due to mismatches or occlusions. $\mathrm{GT}_t$ stands for the number of true objects in frame $t$. MOTA is influenced mainly by the performance of the object detector. When the detector performs well and there are fewer ID switch errors, the MOTA value will be higher.
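As a worked illustration, MOTA can be computed from per-frame error counts using the standard definition, one minus the ratio of summed errors to summed ground-truth objects. The function name and the toy counts below are illustrative assumptions, not values from the experiments.

```python
from typing import Sequence


def mota(fp: Sequence[int], fn: Sequence[int],
         idsw: Sequence[int], gt: Sequence[int]) -> float:
    """MOTA over a sequence, given per-frame FP, FN, ID-switch and GT counts."""
    total_gt = sum(gt)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(fp) + sum(fn) + sum(idsw)
    return 1.0 - errors / total_gt


# Toy three-frame sequence with 10 ground-truth objects per frame.
score = mota(fp=[1, 0, 2], fn=[0, 1, 1], idsw=[0, 0, 1], gt=[10, 10, 10])
# 6 errors over 30 ground-truth objects gives MOTA = 0.8
```

Because the errors are summed before dividing, MOTA can become negative when the tracker makes more errors than there are ground-truth objects.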

4.2.2. IDF1 (Identification F1)

The IDF1 metric places more emphasis on association. It is a comprehensive evaluation metric for object tracking that combines IDP (ID Precision) and IDR (ID Recall), and it emphasizes the continuity of tracking and the accuracy of identity information. It measures the extent to which the tracking algorithm can maintain both continuity and accuracy throughout the entire tracking period. A higher IDF1 value indicates higher accuracy in tracking specific objects, meaning that the algorithm can consistently and accurately track the same object across different frames. It primarily assesses whether the tracking algorithm can maintain the initially created trajectories continuously and consistently. The IDF1 formula is shown in Equation (3).

$$\mathrm{IDF1} = \frac{2\,\mathrm{IDTP}}{2\,\mathrm{IDTP} + \mathrm{IDFP} + \mathrm{IDFN}} \tag{3}$$

where, IDTP (True Positive ID) represents the number of times the tracking algorithm correctly maintains the identity information of the object across adjacent frames. It indicates instances where the algorithm correctly identifies the same object's identity information across different frames. IDFP (False Positive ID) represents the number of times the tracking algorithm erroneously associates different objects with the same identity information. In other words, it quantifies how often the algorithm mistakenly confuses the identity information of different objects. IDFN (False Negative ID) represents the number of times the tracking algorithm fails to correctly maintain the identity information of the object. It indicates instances where the algorithm incorrectly loses track of the object's identity information.
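The relationship between IDF1, ID Precision and ID Recall can be checked with a short sketch. It assumes the standard definitions IDP = IDTP / (IDTP + IDFP) and IDR = IDTP / (IDTP + IDFN); the function names and the counts are illustrative only.

```python
def id_precision(idtp: int, idfp: int) -> float:
    """Fraction of predicted identities that are correct."""
    return idtp / (idtp + idfp)


def id_recall(idtp: int, idfn: int) -> float:
    """Fraction of ground-truth identities that are recovered."""
    return idtp / (idtp + idfn)


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """IDF1 from identity-level true positives, false positives, false negatives."""
    denom = 2 * idtp + idfp + idfn
    if denom == 0:
        raise ValueError("IDF1 is undefined when all counts are zero")
    return 2 * idtp / denom


# Toy counts, not taken from the experiments.
idp = id_precision(80, 10)   # 80 / 90
idr = id_recall(80, 30)      # 80 / 110
f1 = idf1(80, 10, 30)        # 160 / 200 = 0.8

# IDF1 is the harmonic mean of IDP and IDR.
harmonic = 2 * idp * idr / (idp + idr)
```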

4.2.3. FPS (Frames per second)

FPS is used to measure the real-time performance and efficiency of object tracking algorithms. In academic research and practical applications, FPS is typically used to assess the speed of object tracking algorithms when processing real-time video streams.
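One common way to estimate FPS is to time a per-frame processing function over a batch of frames. The text does not describe how FPS was measured in the experiments, so the sketch below is only an assumed procedure; `measure_fps` and the stand-in workload are hypothetical.

```python
import time
from typing import Callable, Iterable


def measure_fps(process_frame: Callable[[object], object],
                frames: Iterable[object], warmup: int = 0) -> float:
    """Average frames per second of process_frame, excluding warm-up frames."""
    frames = list(frames)
    # Warm-up iterations absorb one-off costs such as model loading or caching.
    for frame in frames[:warmup]:
        process_frame(frame)
    timed = frames[warmup:]
    if not timed:
        raise ValueError("no frames left to time after warm-up")
    start = time.perf_counter()
    for frame in timed:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / max(elapsed, 1e-12)


# Stand-in workload: each "frame" takes roughly 5 ms to process.
fps = measure_fps(lambda _frame: time.sleep(0.005), range(10), warmup=2)
```

For GPU inference, the device would also need to be synchronised before reading the clock, otherwise asynchronous kernels make the measurement too optimistic.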

4.2.4. Precision

Precision refers to the ratio of the number of correctly detected objects to the total number of detections. It signifies the accuracy of the detector, indicating how many of the detection results are correct, as shown in Equation (4).

$$\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}} \tag{4}$$

Here, TP (True Positives) represents the number of correctly detected objects, and FP (False Positives) represents the number of incorrectly detected objects.

4.2.5. Recall

Recall refers to the ratio of the number of correctly detected objects to the total number of true objects. It indicates how many of the true objects the detector is able to find, as shown in Equation (5).

$$\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}} \tag{5}$$

where FN (False Negatives) represents the number of targets that were not detected by the detector.
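Precision and Recall follow directly from the TP, FP and FN counts, as the small sketch below shows. The function names and the toy counts are illustrative and unrelated to the reported results.

```python
def precision(tp: int, fp: int) -> float:
    """Share of detections that are correct."""
    if tp + fp == 0:
        raise ValueError("precision is undefined without detections")
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Share of true objects that are detected."""
    if tp + fn == 0:
        raise ValueError("recall is undefined without true objects")
    return tp / (tp + fn)


# Toy counts: 90 correct detections, 10 false alarms, 30 missed objects.
p = precision(tp=90, fp=10)  # 90 / 100
r = recall(tp=90, fn=30)     # 90 / 120
```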

4.3. Experimental results and analysis

To validate the performance and characteristics of the proposed EBTrack tracking algorithm, an analysis is conducted from several perspectives. Firstly, a comparative evaluation of different object detectors is performed to determine the detector that performs optimally in electric bicycle tracking. Secondly, discussions are carried out regarding re-identification networks with different feature dimensions to analyze their impact on tracking performance. Thirdly, module ablation experiments are conducted to analyze the roles and importance of individual modules within the algorithm. Lastly, the EBTrack algorithm is compared with various classical tracking algorithms.

4.3.1. Comparison of different object detectors

In object tracking, the performance and accuracy of tracking algorithms are influenced by the quality of the object detector and the size of the dataset. In this study, the self-constructed Dataset-Det was used to train the object detectors. Each detector was trained for 200 iterations to ensure high-quality detectors and reliable input for the subsequent tracking algorithm. The trained detectors were then combined with the ByteTrack tracking algorithm, and their performance was compared on the Dataset-Track validation set, as shown in Table 4. Precision and Recall are the results of each detector on the Dataset-Det validation set. Tracking performance improves as detector precision increases. Therefore, this study chose the lightweight YOLOv7 as the detector for the EBTrack tracking algorithm so that its tracking performance is as high as possible in practical applications.

Table 4.

Results of using different detectors on the Dataset-Track validation set.

| Detector | MOTA/% | IDF1/% | IDs | FPS | Precision/% | Recall/% |
|---|---|---|---|---|---|---|
| SSD | 62.9 | 65.4 | 291 | 34.5 | 79.8 | 77.6 |
| F-RCNN | 67.4 | 70.3 | 275 | 23.1 | 83.4 | 81.5 |
| YOLOv3 | 72.3 | 75.1 | 243 | 36.7 | 88.7 | 86.6 |
| YOLOv5 | 78.1 | 81.3 | 222 | 37.6 | 91.1 | 90.4 |
| YOLOX-Tiny | 74.5 | 77.6 | 241 | 40.9 | 89.7 | 88.1 |
| YOLOX | 79.8 | 82.1 | 213 | 37.0 | 92.0 | 90.5 |
| YOLOv7 | 84.4 | 88.6 | 190 | 38.6 | 96.9 | 96.1 |

4.3.2. Comparison of Re-identification networks with different feature dimensions

Balancing accuracy and real-time performance is a key concern. In this study, the re-identification network branch of the existing DeepSORT was improved, and networks with different feature dimensions were each trained for 200 iterations on Dataset-ID. These networks were then integrated into the EBTrack tracking algorithm, and their performance was compared on the Dataset-Track validation set. Precision and Recall are the results of each feature dimension on the Dataset-ID validation set. As shown in Table 5, the 1024-dimensional features yield the highest MOTA and IDF1 scores (90.4 % and 95.1 %), indicating that the network's feature extraction capability improves as the re-identification feature dimension increases. Higher-dimensional features can often better represent the appearance characteristics of targets, thereby enhancing tracking accuracy. However, the 512-dimensional setting reaches 89.8 % MOTA and 94.2 % IDF1 at 34.1 FPS, compared with 32.0 FPS for 1024 dimensions. Considering the real-time requirements of the electric bicycle tracking algorithm, the re-identification feature dimension is therefore set to 512, which improves the network's feature extraction capability while maintaining real-time performance. This balance takes into account the performance required in complex tracking tasks and ensures the feasibility and practicality of the electric bicycle tracking algorithm.

Table 5.

Results of using different feature dimensions for the re-identification network on the Dataset-Track validation set.

| Dimension | MOTA/% | IDF1/% | IDs | FPS | Precision/% | Recall/% |
|---|---|---|---|---|---|---|
| 1024 | 90.4 | 95.1 | 158 | 32.0 | 98.6 | 97.8 |
| 512 | 89.8 | 94.2 | 164 | 34.1 | 98.1 | 97.2 |
| 256 | 85.1 | 89.0 | 180 | 34.8 | 95.2 | 93.9 |
| 128 | 82.0 | 85.6 | 186 | 35.4 | 91.4 | 90.3 |
| 64 | 80.2 | 82.4 | 193 | 35.9 | 85.9 | 83.1 |

4.3.3. Module ablation experiments

To validate the effectiveness of the major modules in the EBTrack tracking algorithm, the performance of different module combinations has been compared on the Dataset-Track validation set. As shown in Table 6, introducing the YOLOv7 object detector raised MOTA from 79.8 % to 84.4 % and IDF1 from 82.1 % to 88.6 %, and reduced the number of ID switches from 213 to 190. This improvement can be attributed to the strong dependence of the tracking algorithm on the results of the object detector. Therefore, an excellent object detector is crucial for the overall performance of the tracking algorithm. Introducing the NSA Kalman filter brought a further small improvement in tracking performance (84.6 % MOTA and 89.0 % IDF1). With the addition of the ResNetEB feature re-identification network and the specialized matching mechanism, MOTA reached 89.8 % and IDF1 reached 94.2 %, while the number of ID switches fell to 164. Although the feature re-identification network consumes some computational resources and lowers FPS from 38.5 to 34.1, the reduction is within an acceptable range.

Table 6.

Results of module ablation experiment.

| YOLOv7 | NSA KF | ResNetEB | MOTA/% | IDF1/% | IDs | FPS |
|---|---|---|---|---|---|---|
| × | × | × | 79.8 | 82.1 | 213 | 37.0 |
| ✓ | × | × | 84.4 | 88.6 | 190 | 38.6 |
| ✓ | ✓ | × | 84.6 | 89.0 | 187 | 38.5 |
| ✓ | ✓ | ✓ | 89.8 | 94.2 | 164 | 34.1 |

4.3.4. Comparison of different tracking algorithms

In this study, comparative experiments were conducted with the SORT, DeepSORT, FairMOT, and ByteTrack algorithms on the Dataset-Track validation set, and the experimental results are presented in Table 7. The SORT tracking algorithm exhibits frequent ID switches, which often prevent the continuous tracking of complete electric bicycle trajectories and produce a large number of fragmented trajectories that significantly interfere with subsequent behavior recognition. The DeepSORT tracking algorithm introduces a feature re-identification network and a cascade matching approach, which alleviates the issues found in SORT. However, the feature re-identification network demands significant computational resources, leading to a severe decrease in real-time performance. Both ByteTrack and FairMOT improve on the previous two algorithms, but ByteTrack uses only bounding box information for tracking and does not utilize target appearance information, while FairMOT combines detection and tracking within the same model, which results in slower running speed. In the proposed EBTrack tracking algorithm, the feature re-identification network has some impact on real-time performance. However, EBTrack significantly reduces the frequency of ID switches and lessens trajectory fragmentation, providing a better data foundation for subsequent behavior recognition.

Table 7.

Results of different tracking algorithms on the Dataset-Track validation set.

| Method | MOTA/% | IDF1/% | IDs | FPS |
|---|---|---|---|---|
| SORT | 70.1 | 70.3 | 293 | 39.4 |
| DeepSORT | 73.0 | 77.1 | 246 | 21.7 |
| FairMOT | 78.1 | 80.4 | 219 | 25.8 |
| ByteTrack | 79.8 | 82.1 | 213 | 37.0 |
| EBTrack | 89.8 | 94.2 | 164 | 34.1 |

Table 7 presents the results of the EBTrack tracking algorithm on the Dataset-Track validation set. Notably, ID switches are relatively prominent in densely crowded and nighttime environments.

The extended experimental results are summarized below.

Comparison with Additional Tracking Algorithms:

Tracktor [30]: This algorithm utilizes a tracking-by-detection approach and incorporates a novel tracking-based branch to predict the bounding box location in the next frame. On the Dataset-Track validation set, Tracktor achieved an MOTA of 75.6 % and an IDF1 of 78.3 %.

JDE [31]: This is a joint detection and embedding method that combines detection and re-identification into a single network. JDE obtained an MOTA of 78.9 % and an IDF1 of 82.4 % on the Dataset-Track validation set.

TransTrack [31]: This algorithm employs a Transformer-based architecture for multi-object tracking. TransTrack achieved an MOTA of 81.2 % and an IDF1 of 84.6 % on the Dataset-Track validation set.

Comparison with Different Feature Dimensions:

By decreasing the feature dimension to 256, the EBTrack algorithm was able to achieve an MOTA of 85.1 % and an IDF1 of 89.0 %. This reduction in feature dimensionality led to a minor decline in tracking accuracy but enhanced real-time performance [32].

On the other hand, by increasing the feature dimension to 1024, the EBTrack algorithm achieved an MOTA of 90.4 % and an IDF1 of 95.1 %. Although this higher feature dimension improved tracking accuracy, it also resulted in higher computational complexity.

Comparison with Different Object Detectors:

YOLOv5 [33]: Using YOLOv5 as the object detector, the EBTrack algorithm achieved an MOTA of 78.1 % and an IDF1 of 81.3 % on the Dataset-Track validation set.

Faster R-CNN [34]: With Faster R-CNN as the object detector, EBTrack obtained an MOTA of 67.4 % and an IDF1 of 70.3 %, demonstrating the impact of detector performance on tracking accuracy.

Ablation Study:

EBTrack without NSA Kalman Filter: With YOLOv7 alone, that is, without the NSA Kalman filter and without ResNetEB (Table 6), the tracker achieved an MOTA of 84.4 % and an IDF1 of 88.6 %. Adding the NSA Kalman filter raised these values to 84.6 % and 89.0 %, so its absence leads to slightly lower accuracy in trajectory predictions.

EBTrack without ResNetEB: Excluding the ResNetEB feature extraction network led to EBTrack achieving an MOTA of 84.6 % and an IDF1 of 89.0 %. The absence of the ResNetEB network impacted the algorithm's performance in handling complex scenarios involving occlusions and appearance changes.

These comparative analyses highlight the robustness and efficiency of the EBTrack tracking algorithm in electric bicycle tracking applications.

5. Conclusion

In this study, an effective algorithm was introduced for tracking electric bicycles, named EBTrack, which is specifically tailored for traffic monitoring situations. The algorithm used the lightweight YOLOv7 as the object detector, ensuring precise and dependable object detection. The incorporation of the ResNetEB feature extraction network enhanced the algorithm's performance in intricate scenarios characterized by high pedestrian density and occlusion. The adaptive modulated noise scale Kalman filter boosted the accuracy and stability of object trajectories, enabling the algorithm to adjust to dynamic environments. Furthermore, the revamped association matching mechanism successfully reduced the problem of object ID switching, thereby enhancing tracking stability and continuity. The experimental findings validated the efficacy of EBTrack, achieving an MOTA of 89.8 % and an IDF1 of 94.2 %. This algorithm laid a solid groundwork for subsequent behavior recognition tasks in traffic monitoring scenarios. Nevertheless, it is crucial to recognize the constraints of the current study. Firstly, EBTrack is primarily custom-made for tracking electric bicycles and may necessitate further modifications for other vehicle types or scenarios. Secondly, the algorithm presupposes the availability of high-quality video data, and its performance may deteriorate under low-light or challenging weather conditions. Lastly, the real-time performance of EBTrack hinges on the computational resources, necessitating potential optimizations for deployment on resource-constrained devices. In future endeavors, it is intended to address these limitations and expand the capabilities of the EBTrack algorithm to encompass a broader spectrum of traffic monitoring applications, thereby enhancing its adaptability and robustness. Additionally, advanced machine learning techniques and sensor fusion will be explored to further refine tracking accuracy and adaptability. 
Moreover, future work may incorporate machine learning methodologies such as reinforcement learning and graph neural networks to enhance the precision and resilience of the EBTrack algorithm.

Funding

This research was funded by the Natural Science Research Project of Anhui Province (Grant Nos. 2022AH051325, KJ2021A0662, KJ2021A0682, KJ2020A0539) and the Natural Science Research Project of Fuyang Normal University (Grant No. 2021FSKJ02ZD).

Data availability

All data generated or analysed during this study are included in this published article.

CRediT authorship contribution statement

Zhengyan Liu: Investigation. Chaoyue Dai: Investigation. Xu Li: Investigation.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Bertinetto L., Valmadre J., Henriques J.F., Vedaldi A., Torr P.H. Fully-convolutional siamese networks for object tracking. Proceedings of the Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8-10 and 15-16, 2016, Proceedings, Part II. 2016;14:850–865.

2. Valmadre J., Bertinetto L., Henriques J., Vedaldi A., Torr P.H. End-to-end representation learning for correlation filter based tracking. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017; pp. 2805–2813.

3. Wang Q., Zhang L., Bertinetto L., Hu W., Torr P.H. Fast online object tracking and segmentation: a unifying approach. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019; pp. 1328–1338.

4. Danelljan M., Häger G., Khan F., Felsberg M. Accurate scale estimation for robust visual tracking. Proceedings of the British Machine Vision Conference, Nottingham, September 1-5, 2014. 2014.

5. Danelljan M., Robinson A., Shahbaz Khan F., Felsberg M. Beyond correlation filters: learning continuous convolution operators for visual tracking. Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part V. 2016;14:472–488.

6. Danelljan M., Bhat G., Shahbaz Khan F., Felsberg M. ECO: efficient convolution operators for tracking. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017; pp. 6638–6646.

7. Danelljan M., Bhat G., Khan F.S., Felsberg M. ATOM: accurate tracking by overlap maximization. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019; pp. 4660–4669.

8. Bhat G., Danelljan M., Gool L.V., Timofte R. Learning discriminative model prediction for tracking. Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019; pp. 6182–6191.

9. Bewley A., Ge Z., Ott L., Ramos F., Upcroft B. Simple online and realtime tracking. Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP). 2016; pp. 3464–3468.

10. Zhang Y., Sun P., Jiang Y., Yu D., Weng F., Yuan Z., Luo P., Liu W., Wang X. ByteTrack: multi-object tracking by associating every detection box. Proceedings of the European Conference on Computer Vision. 2022; pp. 1–21.

11. Pang Z., Li Z., Wang N. SimpleTrack: understanding and rethinking 3D multi-object tracking. Proceedings of the European Conference on Computer Vision. 2022; pp. 680–696.

12. Wang H., Zhang S., Zhao S., Wang Q., Li D., Zhao R. Real-time detection and tracking of fish abnormal behavior based on improved YOLOV5 and SiamRPN++. Computers and Electronics in Agriculture. 2022;192.

13. Stadler D., Beyerer J. Modelling ambiguous assignments for multi-person tracking in crowds. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2022; pp. 133–142.

14. Liang C., Zhang Z., Zhou X., Li B., Zhu S., Hu W. Rethinking the competition between detection and ReID in multiobject tracking. IEEE Transactions on Image Processing. 2022;31:3182–3196.

15. Redmon J., Divvala S., Girshick R., Farhadi A. You only look once: unified, real-time object detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016; pp. 779–788.

16. Redmon J., Farhadi A. YOLO9000: better, faster, stronger. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017; pp. 7263–7271.

17. Wang C.-Y., Bochkovskiy A., Liao H.-Y.M. YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023; pp. 7464–7475.

18. Girshick R. Fast R-CNN. Proceedings of the IEEE International Conference on Computer Vision. 2015; pp. 1440–1448.

19. Wojke N., Bewley A., Paulus D. Simple online and realtime tracking with a deep association metric. Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP). 2017; pp. 3645–3649.

20. Cai J., Xu M., Li W., Xiong Y., Xia W., Tu Z., Soatto S. MeMOT: multi-object tracking with memory. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022; pp. 8090–8100.

21. Ma F., Shou M.Z., Zhu L., Fan H., Xu Y., Yang Y., Yan Z. Unified transformer tracker for object tracking. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022; pp. 8781–8790.

22. Meinhardt T., Kirillov A., Leal-Taixe L., Feichtenhofer C. TrackFormer: multi-object tracking with transformers. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022; pp. 8844–8854.

23. Zhou X., Yin T., Koltun V., Krähenbühl P. Global tracking transformers. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022; pp. 8771–8780.

24. Liu Y., Bao Y. Real-time remote measurement of distance using ultra-wideband (UWB) sensors. Autom. Constr. 2023;150:104849.

25. Liu Y., Bao Y. Review of electromagnetic waves-based distance measurement technologies for remote monitoring of civil engineering structures. Measurement. 2021;176.

26. Zhang Y., Wang T., Zhang X. MOTRv2: bootstrapping end-to-end multi-object tracking by pretrained object detectors. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023; pp. 22056–22065.

27. Zeng F., Dong B., Zhang Y., Wang T., Zhang X., Wei Y. MOTR: end-to-end multiple-object tracking with transformer. Proceedings of the European Conference on Computer Vision. 2022; pp. 659–675.

28. Zihan P., Jiaxin C., Horizon W.X.J.T. Research on image-based detection of electric bicycle wrong-way riding. 2018; pp. 117–118.

29. Xiaoping W., University S.X.J.J.o.C.J. Enhanced MDnet object tracking algorithm in complex traffic scenarios. 2021;40:19–26.

30. Nam H., Han B. Learning multi-domain convolutional neural networks for visual tracking. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016; pp. 4293–4302.

31. Zhenxiao L., Wei S., Mingming L., Dandan Z., Applications C.S.J.C.E.a. Research on vehicle detection and tracking algorithms in traffic monitoring scenarios. 2021;57:103–111.

32. Liu W., Anguelov D., Erhan D., Szegedy C., Reed S., Fu C.-Y., Berg A.C. SSD: single shot multibox detector. Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I. 2016;14:21–37.

33. Caihong L., Lei Z., Technology H.H.J.J.o.C.S.a. Visual tracking of cross-view multi-object in traffic intersection surveillance videos. 2018;41:221–235.

34. Du Y., Wan J., Zhao Y., Zhang B., Tong Z., Dong J. GIAOTracker: a comprehensive framework for MCMOT with global information and optimizing strategies in VisDrone 2021. Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021; pp. 2809–2819.
