Abstract

Purpose

The study investigated the effect of a short computer-based environmental sound training regimen on the perception of environmental sounds and speech in experienced cochlear implant (CI) patients.

Method

Fourteen CI patients with an average of 5 years of CI experience participated. The protocol consisted of 2 pretests, 1 week apart, followed by 4 environmental sound training sessions conducted on separate days in 1 week, and concluded with 2 posttest sessions, separated by another week without training. Each testing session included an environmental sound test, which consisted of 40 familiar everyday sounds, each represented by 4 different tokens, as well as the Consonant-Nucleus-Consonant (CNC) word test and the Revised Speech Perception in Noise (SPIN-R) sentence test.

Results

Environmental sound scores were lower than scores on either of the speech tests. Following training, there was a significant average improvement of 15.8 points in environmental sound perception, which persisted 1 week later after training was discontinued. No significant improvements were observed for either speech test.

Conclusions

The findings demonstrate that environmental sound perception, which remains problematic even for experienced CI patients, can be improved with a home-based computer training regimen. Such computer-based training may thus provide an effective low-cost approach to rehabilitation for CI users and, potentially, other hearing-impaired populations.

One of the major benefits of cochlear implantation is the ability to perceive common everyday sounds, or environmental sounds. Vitally important to the patient's well-being (e.g., fire alarms, car horns) or simply aesthetically pleasing (e.g., chirping birds, ocean surf), environmental sounds transmit valuable information about objects and events taking place around the listener (Gaver, 1993) and can contribute to the patient's overall well-being (Ramsdell, 1978). On the other hand, recent findings indicate considerable deficits in the ability of patients with cochlear implants (CIs) to identify many common environmental sounds, even after years of implant use (Inverso & Limb, 2010; Looi & Arnephy, 2010; Reed & Delhorne, 2005; Shafiro, Gygi, Cheng, Vachhani, & Mulvey, 2011). As a possible remedy to this problem, previous studies with CI simulations in normal-hearing listeners demonstrate that environmental sound perception can improve after a period of formal training (Loebach & Pisoni, 2008; Shafiro, 2008; Shafiro, Sheft, Gygi, & Ho, 2012). Furthermore, in CI simulation studies, training effects have been shown to generalize to other nontrained environmental sounds and coincide with improvement in speech perception (Loebach & Pisoni, 2008; Shafiro et al., 2012). The purpose of the present study was to determine if similar sound-specific training and generalization effects can be found in experienced adult CI patients.

Perception of Environmental Sounds by Cochlear Implant Users

Environmental sounds are often among the first auditory experiences of patients with newly implanted CIs. Patient reports often convey a tremendous sense of excitement about being able, often after a period of prolonged deafness, to relate a sound to an external event that generated it. This ability provides CI patients with a stronger sense of connection to the surrounding world, awareness of sounds critical to one's safety, and an overall greater satisfaction with their implants. As patients gain more experience with their implants, their focus typically shifts to improving speech understanding, with less emphasis on environmental sounds. Nevertheless, existing research consistently demonstrates that even experienced CI patients with high speech-perception scores frequently show substantial deficits in environmental sound perception and are not able to identify many common environmental sounds (Inverso & Limb, 2010; Looi & Arnephy, 2010; Reed & Delhorne, 2005; Shafiro et al., 2011; Tyler, Moore, & Kuk, 1989).

The lack of awareness of this deficit in environmental sound perception may be due to the background nature of most everyday listening (Truax, 2001). Environmental sounds are rarely an explicit focus of listening even among listeners with normal hearing (NH). In addition, whereas in speech communication listeners are typically aware when they are unable to understand speech, many unidentified environmental sounds can be easily ascribed to some generic background noise or an artifact of CI processing. Indeed, patients listening through CIs often have greater difficulty than NH listeners in segregating co-occurring sounds or sound streams (Oxenham, 2008), which results in many auditory experiences that cannot be easily classified in terms of their distal sound sources. Thus, patients may fail to perceive many common environmental sounds without being aware of it.

Available research data seem to support that view. In the last decade, environmental sound perception in CI patients with postlingual deafness has been the subject of several studies described below. Unlike earlier research often conducted with single-to-four-channel implants using tests with few arbitrarily selected environmental sounds, these later studies reflect performance with more recent multichannel processors, using more rigorously constructed environmental sound tests. For example, prior to being given to CI patients, most recent tests described below have been thoroughly tested with NH adult listeners to ensure that the included sounds are indeed familiar and easily identifiable in the absence of hearing loss.

Reed and Delhorne (2005) examined environmental sound perception in 11 experienced CI patients with postlingual deafness who were asked to identify sounds from four different environmental settings (general home, kitchen, office, and outside). There were 10 sounds in each category, with each sound represented by three different tokens. With a closed-set, 10-item response format, the mean identification accuracy across all four sets was 79% (range = 45–95%).

Inverso and Limb (2010) tested 22 experienced patients who were asked to identify 50 environmental sounds. After listening to each sound, patients were asked to select one of 50 response options and then to classify the sound they heard into one of five categories (animal, human nonspeech, mechanical or alarm, nature, musical instruments). The average identification accuracy of individual sounds was 49%, with categorization accuracy of 71% correct.

These results are consistent with a later finding by Shafiro et al. (2011), who tested 17 experienced CI patients and reported an average sound identification accuracy of 45%. Like Inverso and Limb (2010), Shafiro et al. used a large (i.e., 60-item) closed-set response format, which might have contributed to lower identification accuracy than previously found by Reed and Delhorne (2005), who used a 10-item closed-set format.

Looi and Arnephy (2010) examined environmental sound perception in two patient groups: (a) experienced CI patients and (b) CI patients about 3 months postimplantation. The test consisted of 45 sounds, each represented by two different tokens, comprising a broad sampling of nine environmental categories. After hearing each sound, subjects were asked to choose one of 45 sound names. The average identification accuracy was 59% for the 10 experienced CI users and 57% for the four subjects with new implants. The finding that environmental sound identification accuracy does not seem to improve following longer experience with an implant is consistent with previous research by Proops et al. (1999), who found that environmental sound perception scores reach an asymptote following rapid improvement in the first few months.

Overall, these results indicate deficits in environmental sound perception of experienced adult CI patients. Whereas NH adult listeners score 90% to 100% on individual sound identification in the above tests, the scores of CI patients remain substantially lower, in the 45% to 79% range, across recent studies. In these studies, individual patient performance is also highly variable, suggesting that whereas some patients may be able to learn to identify environmental sounds independently in the course of daily life, others do not seem to be able to do that on their own, even after years of implant use. Furthermore, these tests were administered in a closed-set format of varying set sizes, with smaller set size producing better overall performance. All sounds were also administered in quiet. It is thus reasonable to expect that in noisy real-world environments with fewer constraints on possible sounds and multiple concurrent sound sources, environmental sound identification of CI patients may be even poorer.
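To make the set-size argument concrete, the following minimal sketch computes the guessing rate implied by each closed-set format and rescales the reported mean accuracies accordingly. The correction for guessing used here is a standard psychometric rescaling and is an illustrative assumption; it was not applied in the studies summarized above, and the function names are hypothetical.

```python
# Reported mean identification accuracy and closed-set size for each study
# (values taken from the study summaries in the text).
STUDIES = {
    "Reed & Delhorne (2005)": (0.79, 10),
    "Inverso & Limb (2010)": (0.49, 50),
    "Looi & Arnephy (2010), experienced users": (0.59, 45),
    "Shafiro et al. (2011)": (0.45, 60),
}


def chance_level(n_alternatives: int) -> float:
    """Probability of a correct response when guessing among n options."""
    return 1.0 / n_alternatives


def chance_corrected(p_correct: float, n_alternatives: int) -> float:
    """Rescale accuracy so that 0 is chance and 1 is perfect performance."""
    chance = chance_level(n_alternatives)
    return (p_correct - chance) / (1.0 - chance)


for study, (accuracy, n_options) in STUDIES.items():
    print(
        f"{study}: chance = {chance_level(n_options):.3f}, "
        f"chance-corrected accuracy = {chance_corrected(accuracy, n_options):.3f}"
    )
```

Under these assumptions, guessing alone yields 10% correct in a 10-item format but under 2% in a 60-item format, so part of the difference between studies may reflect response-set size rather than perceptual ability.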

Computerized Training in Cochlear Implant Rehabilitation

Formal and structured training has been recommended and used as a means to improve auditory performance of individuals with hearing impairments since at least World War II (Alpiner & McCarthy, 2009). The advent of relatively inexpensive personal computers has made it possible to automate many common training routines, making computerized training a practical, low-cost approach for improving patient auditory perception (Henshaw & Ferguson, 2013; Pizarek, Shafiro, & McCarthy, 2013). A number of training programs, targeting different aspects of auditory perception and cognitive processing, have been tested with CI patients (Dawson & Clark, 1997; Fu & Galvin, 2007; Gfeller, Witt, Kim, Adamek, & Coffman, 1999; Goldsworthy & Shannon, 2014; Ingvalson, Lee, Fiebig, & Wong, 2013; Miller, Watson, Kistler, Wightman, & Preminger, 2008; Oba, Fu, & Galvin, 2011; Stacey et al., 2010; Wu, Yang, Lin, & Fu, 2007; Zhang, Dorman, Fu, & Spahr, 2012). Training studies with CI patients differ considerably in terms of study design, training regimens, materials, and outcome measures. Nevertheless, all studies to date report some improvement in speech perception abilities of adult postlingually deafened CI patients following training.

Perceptual improvements following training are generally attributed to neuroplastic changes in the listener's central auditory nervous system in response to exposure and practice with a specific type of stimuli. Furthermore, several studies provide converging evidence that the effects of training can generalize to novel stimuli and testing environments. For instance, Oba et al. (2011) found an improvement in Hearing in Noise Test (Nilsson, Soli, & Sullivan, 1994) and Institute of Electrical and Electronics Engineers (1969) sentence scores in multitalker babble and steady-state noise in a group of 10 CI patients following a month-long, home-based training with single-digit recognition in multitalker babble. Similarly, Ingvalson et al. (2013) reported an increase in Hearing in Noise Test scores and a decrease in QuickSIN SNR-50 scores (indicating tolerance of lower signal-to-noise ratios) in five CI adults with postlingual deafness after only four training sessions using audiovisual speech stimuli presented in noise.

Findings from these and other investigators indicate the susceptibility of adult CI patients with postlingual deafness to training-induced neuroplastic changes. It is important to note that benefits seem to persist even after completion of the training regimen, as reported by Zhang et al. (2012) who, following a 4-week-long training, tested a group of seven CI patients immediately after and 1 month after the end of training. Overall, these findings suggest that computerized training is an effective approach to increasing CI benefit. However, all CI training studies published thus far have been based on training primarily with speech materials. Potential training effects associated with other auditory stimuli of concern to CI patients, such as environmental sounds and music, have not been investigated in CI patients. Nevertheless, evidence from studies with simulated CI processing conducted in NH adults suggests that environmental sound training can lead to marked increases in the perception of both trained and novel environmental sounds and can coincide with or generalize to improvements in speech perception (Loebach & Pisoni, 2008; Shafiro et al., 2012).

Environmental Sound Training With CI Simulations

Simulations of vocoder-based CI processing have been widely used with NH listeners to investigate the effect of degraded spectral resolution on the perception of speech (Shannon, Zeng, Kamath, Wigonski, & Ekelid, 1995). Similar processing methods have also been applied to determine the spectral resolution required for identification of common environmental sounds (Gygi, Kidd, & Watson, 2004; Shafiro, 2008). During an initial investigation by Gygi et al. (2004), it was observed that naïve NH adult listeners' performance on spectrally degraded environmental sounds, processed through a six-channel vocoder, improved from 36% to 66% correct between the first and the second presentation of the same 70 environmental sound stimuli on two different days. It seems that hearing unprocessed tokens of the sounds only once before being tested on the second day was sufficient for some perceptual learning even when no other feedback was provided. However, it was not clear whether such improvements would generalize to novel sounds that were not heard during the two sessions, or were limited only to the specific sound tokens that listeners heard.

The generalization of training effects for environmental sounds and speech was later investigated by Loebach and Pisoni (2008) using an eight-channel sine wave vocoder simulation. After only a single short, 15–20 min training session in which listeners identified specific sounds and were provided trial feedback, a significant improvement in performance was obtained for both novel and trained environmental sounds. The positive effect of environmental sound training was also observed for speech: single words and two different types of sentence materials, which were also vocoded. On the other hand, no improvement in environmental sound perception was observed on a repeated test in the control group, who did not receive any training, or in the group that was trained with speech materials only.

Generalized training effects were also reported by Shafiro (2008) following a longer five-session course of environmental sound training. Similar to Loebach and Pisoni (2008), there was a significant improvement in listener identification scores on four-channel noise vocoded environmental sounds between the pretest and posttest that extended to both novel environmental sounds and alternative tokens of the same sounds as used during the training. In a follow-up study, Shafiro et al. (2012) used similarly processed environmental sounds in a three-session training regimen to contrast the effects of simple exposure to environmental sounds versus structured training with feedback. Two pretests, separated by a week-long interval, were administered to all subjects prior to beginning of training. At the completion of training, two posttests, also separated by a week-long interval, were administered to evaluate training outcomes and examine the retention of training benefits.

Significant improvements in environmental sound identification scores were obtained between the two pretests with simple exposure to the stimuli, as well as after the training period. It is noteworthy that the magnitude of improvement following training was significantly higher than that following exposure. As in previous studies, a significant training-related improvement, although of a smaller magnitude, was also observed for novel environmental sounds, and to a greater extent, for alternative tokens of environmental sounds used during training. A significant improvement in scores between the initial and the final tests was obtained for two speech tests: a monosyllabic isolated word identification test (CNC; Peterson & Lehiste, 1962) and a sentence test (SPIN-R; Elliott, 1995).

This improvement in speech scores following environmental sound training was consistent with the previous finding by Loebach and Pisoni (2008). It thus appears that training listeners with a broad range of environmental sounds which vary considerably in terms of their sound sources may positively influence listeners' ability to perceive smaller, more constrained sound classes, such as speech, as a subclass of environmental sounds. Indeed, moderate-to-strong correlations in the ability to perceive speech and environmental sounds have been frequently found for both CI patients and NH listeners responding to vocoded stimuli (Inverso & Limb, 2010; Shafiro et al., 2011; Shafiro et al., 2012; Tyler et al., 1989).

The results of environmental sound training studies using vocoder simulations of CI processing indicate a range of training benefits and suggest the possibility of long-lasting neuroplastic changes. However, these results may not always accurately represent the performance of actual CI users. There are a number of important differences between simulated CI and true electric hearing. For one, the experience of processed speech is different between NH and CI listeners. Whereas CI patients listen through their implants in everyday life, NH listeners do so only during testing and training, and otherwise have exposure to unprocessed natural sounds. Therefore, the perceptual changes that take place for NH listeners during short training intervals may be akin to rapid perceptual adaptation, and thus differ from the chronic perceptual changes of CI patients. Furthermore, after an extended period of deafness, CI patients may not always remember specific environmental sounds (e.g., rustling leaves, a car horn), making it more difficult for them to recognize such sounds even after the sensory information becomes available. On the other hand, most young NH listeners tested thus far can be expected to have strong memory representations for sounds they hear, which is likely to facilitate training. Finally, NH listeners' basic auditory processing abilities with respect to spectral and temporal information, as well as the ability to manipulate encoded auditory information at higher cognitive levels of processing, are generally superior to those of CI patients. These differences may result in CI patients' using different listening strategies and could affect their response to training.

Present Study

The present study was designed to determine the effect of environmental sound training on the perception of environmental sounds and speech in CI patients. Unlike previous training studies with CI patients, the training regimen was based exclusively on environmental sounds, an aspect of CI rehabilitation that has received little research emphasis in previous work. The study further extended the findings of earlier environmental sound training studies conducted with CI simulations to determine whether the training benefits previously obtained with NH listeners could be obtained in CI patients. The specific questions were (a) whether identification of environmental sounds would improve following a short training regimen and, if so, (b) whether environmental sound training effects would generalize to (i) sounds not used during training and (ii) spoken words. These effects could be expected on the basis of previous training studies with CI simulations (Loebach & Pisoni, 2008; Shafiro et al., 2012).

Method

Subjects

Fourteen CI patients (five men, nine women) participated in the study (Table 1). Subjects' mean age was 63 years (range: 51–87). Their pure-tone average audiometric threshold across the octave frequencies of 500, 1000, and 2000 Hz was 30 dB HL (SD = 8.6 dB). Subjects' mean implant experience was 5 years (SD = 2.5), with a minimum of 1 year of daily implant use. The age of hearing loss onset varied considerably among subjects, from early childhood to late-adult onset. However, all subjects reported having developed oral language skills prior to the onset of their severe-to-profound hearing loss.

Table 1.

Demographic characteristics of the 14 CI subjects with postlingual deafness.

| Subject | Sex | Age (years) | Age at hearing loss onset (years) | Duration of CI use (years) | Implant |
|---|---|---|---|---|---|
| S2 | F | 57 | 20 | 3 | Cochlear Contour Advanced |
| S3 | F | 51 | 23 | 6 | Nucleus Freedom |
| S5 | M | 57 | 30 | 5 | Nucleus 24 |
| S6 | F | 60 | 5 | 6 | AB BionicEar |
| S7 | F | 67 | 3 | 8 | AB Harmony |
| S15 | F | 68 | 25 | 7 | AB Harmony |
| S16 | M | 81 | 45 | 2 | AB Harmony |
| S17 | F | 73 | 35 | 4 | Nucleus Freedom |
| S9 | F | 58 | 3 | 6 | Nucleus Freedom |
| S10 | F | 87 | 66 | 4 | Nucleus Freedom |
| S11 | M | 87 | 68 | 8 | Nucleus Freedom |
| S12 | M | 71 | 35 | 7 | AB PSP |
| S13 | F | 66 | 63 | 3 | Medel Sonata |
| S14 | M | 70 | 46 | 1 | Medel Sonata |

Stimuli and Design

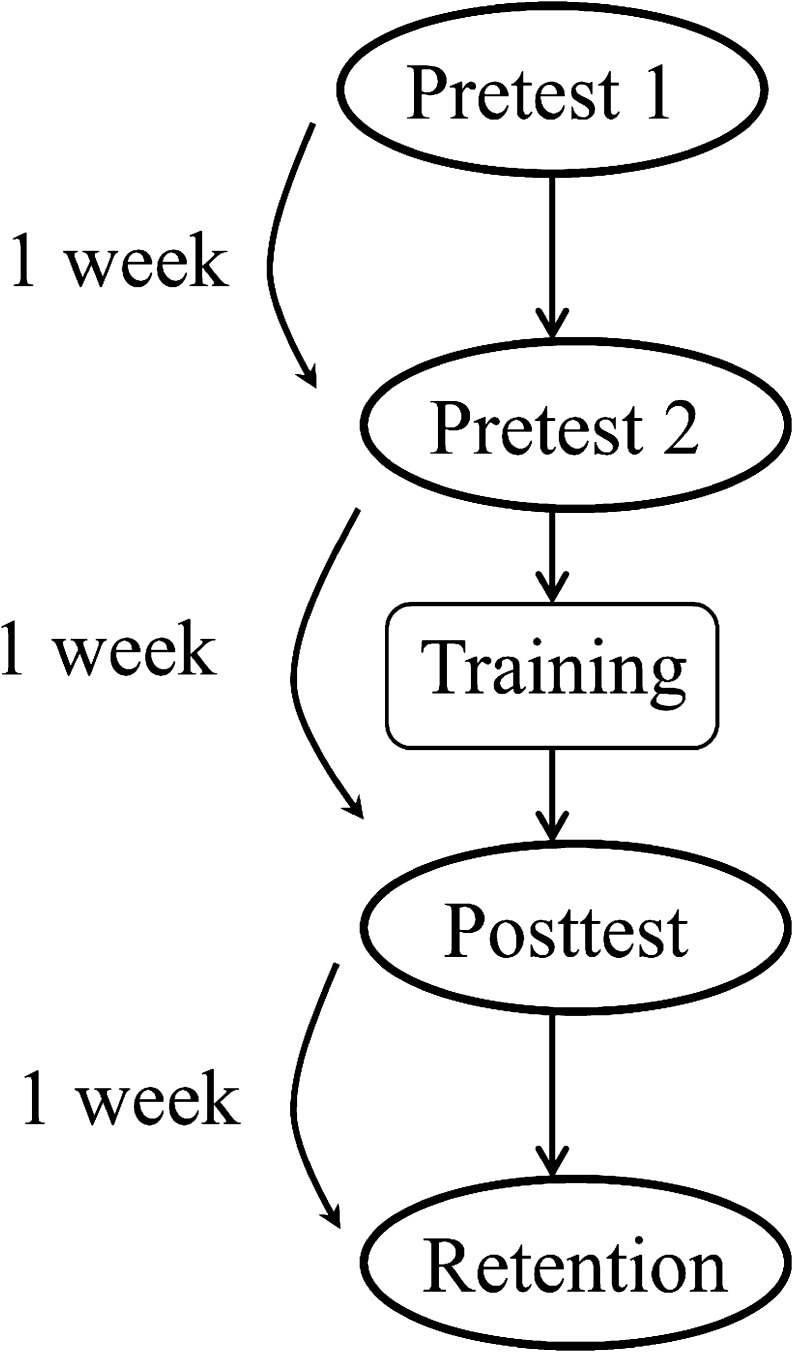

The design of the present study closely followed that of Shafiro et al. (2012). There were four test sessions, each separated by a week-long interval: Pretest 1, Pretest 2, Posttest, and Retention (Figure 1), and four training sessions administered between Pretest 2 and Posttest. No training was given between the first two sessions (Pretest 1 and Pretest 2) and the last two sessions (Posttest and Retention). Unlike testing, which was conducted in the laboratory, all four training sessions took place in subjects' homes using a dedicated laptop provided by the researchers.

Figure 1.

A diagram of the testing-training sequence used in the study.

Each of the four test sessions included an environmental sound test and two speech tests described below. An additional speech test, BKB-SIN (Etymotic Research, 2005), which examines sentence perception at varying signal-to-noise ratios (SNR), was administered only during the first test session as part of the general audiological assessment. For all tests, the presentation level was 70 dB SPL.

The Familiar Environmental Sound Test (FEST; Shafiro, 2008; Shafiro et al., 2012) included 160 sounds, which represented a broad range of 40 different sound sources, with four sound tokens corresponding to each sound source. The tokens of these sounds were initially obtained from several royalty-free sound libraries and environmental sound databases developed in previous research (Gygi et al., 2004; Marcell, Borella, Greene, Kerr, & Rogers, 2000; Shafiro, 2008; Shafiro & Gygi, 2004). These sounds comprised five large categories: human/animal vocalizations and bodily sounds, mechanical sounds, water sounds, aerodynamic sounds, and signaling sounds (Gaver, 1993). All test sounds were easily identifiable (i.e., average accuracy 98%, SD = 2) and highly familiar (i.e., 6.4 on a 7-point rating scale) to NH listeners (Shafiro, 2008). Being representative of a great variety of everyday environmental sounds, the test sounds varied considerably in duration. The shortest sounds were “door closing,” “burp,” “cork popping from a bottle,” and “water dripping,” with token durations of 0.1–0.5 s, whereas the longest sounds were “gargling,” “airplane,” “helicopter,” and “wind blowing,” with token durations reaching 7–9 s. The average duration across all test sounds was 2.7 s (SD = 1.3). As in the previous work (Shafiro, 2008; Shafiro et al., 2012), FEST was administered in a closed-set response format with 60 response alternatives displayed on the screen.

Speech tests included (a) the Consonant-Nucleus-Consonant (CNC) monosyllabic word recognition test (Peterson & Lehiste, 1962) and (b) the Revised Speech Perception in Noise (SPIN-R) sentence test (Elliott, 1995). Both tests were presented in a closed-set format, with 50 response alternatives displayed on a computer screen. Listeners were instructed to mark each word that they heard for the CNC test or to mark the last word of each sentence for the SPIN-R test. The CNC test was included to provide information on listeners' ability to perceive individual words in isolation, whereas the SPIN-R test was included to examine the effect of high- and low-probability sentence context on word identification.

Procedure

The initial test session, Pretest 1, had the same general format as the three test sessions that followed it, at 1-week intervals. Except for BKB-SIN, which was administered only during the first test session, two speech tests, CNC and SPIN-R, were administered in quiet at all four sessions, using two new lists for each test each time.

Prior to initial administration of the full 160-item FEST, subjects received a screening test, which contained 20 environmental sounds and was presented in quiet. The sounds included in the screening test came from some of the sound sources of the full test but were represented by different sound tokens. The purpose of the screening test was to anticipate possible ceiling effects, which could affect the training protocol. On the basis of the screening results, the full 160-item FEST was administered in quiet to 11 subjects, who scored less than 70% correct on the screening test, and in steady-state white noise, band-limited at 8 kHz, to three subjects who scored above 70%. Different SNR levels were used with each of these three subjects. For one subject (S2), SNR levels were initially adjusted for each of the 160 test sounds to ensure audibility of each sound (as estimated by the experimenter prior to testing) and accommodate time-varying envelope differences among sounds (e.g., brief impulsive sound of door closing vs. long and relatively steady sound of wind blowing). However, for the two other subjects, this procedure was deemed excessively time-consuming and unnecessary. Their SNR levels were set uniformly for all 160 sounds using their preferences for screening sound tokens presented at different SNRs. One participant (S3) was tested at 3.5 dB SNR, and the other (S6) at 10.5 dB SNR. Despite the somewhat ad hoc character of the SNR setting procedure, performance of these three subjects, including training effects, was comparable to that of other subjects (see Table 3).

Table 3.

Performance of individual subjects (percent correct) on the Familiar Environmental Sound Test across four testing sessions of the study.

| Subject | Pretest 1 | Pretest 2 | Posttest | Retention | Total improvement |
|---|---|---|---|---|---|
| S2* | 53.12 | 44.38 | 60 | 59.83 | 6.71 |
| S3* | 45 | 63.25 | 80.625 | 80.625 | 35.625 |
| S5 | 52.5 | 54.38 | 83.75 | 81.25 | 28.75 |
| S6* | 66.25 | 69.36 | 75 | 75.63 | 9.38 |
| S7 | 30 | 36.25 | 46.9 | 49 | 19 |
| S15 | 45.63 | 41.25 | 56.88 | 56.25 | 10.62 |
| S16 | 14.4 | 17.5 | 27.5 | 31.25 | 16.85 |
| S17 | 73.1 | 78 | 82.5 | 84.38 | 11.28 |
| S9 | 37 | 38 | 60 | 58 | 21 |
| S10 | 38 | 42 | 51 | 52 | 14 |
| S11 | 46.88 | 59.38 | 69.375 | 58.89 | 12.01 |
| S12 | 61.86 | 70 | 71.88 | 70 | 8.14 |
| S13 | 45.63 | 31.89 | 40 | 51.25 | 5.62 |
| S14 | 43.89 | 42.22 | 59.38 | 65.63 | 21.74 |
| M | 46.7 | 49.1 | 61.8 | 62.4 | 15.8 |
| SD | 14.9 | 17.1 | 16.7 | 14.9 | 8.7 |

Note. Environmental sound testing and training of the subjects marked by an asterisk were conducted in noise.
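The summary rows of Table 3 can be reproduced from the individual scores. The sketch below is illustrative only: it copies the per-subject values from the table and computes the session means and the mean within-subject change between sessions, taking total improvement to be Retention minus Pretest 1 (an assumption that matches the tabled values). The variable and function names are hypothetical.

```python
# FEST percent-correct scores copied from Table 3, in the order
# (Pretest 1, Pretest 2, Posttest, Retention).
SESSIONS = ("Pretest 1", "Pretest 2", "Posttest", "Retention")
FEST_SCORES = {
    "S2": (53.12, 44.38, 60.0, 59.83),
    "S3": (45.0, 63.25, 80.625, 80.625),
    "S5": (52.5, 54.38, 83.75, 81.25),
    "S6": (66.25, 69.36, 75.0, 75.63),
    "S7": (30.0, 36.25, 46.9, 49.0),
    "S15": (45.63, 41.25, 56.88, 56.25),
    "S16": (14.4, 17.5, 27.5, 31.25),
    "S17": (73.1, 78.0, 82.5, 84.38),
    "S9": (37.0, 38.0, 60.0, 58.0),
    "S10": (38.0, 42.0, 51.0, 52.0),
    "S11": (46.88, 59.38, 69.375, 58.89),
    "S12": (61.86, 70.0, 71.88, 70.0),
    "S13": (45.63, 31.89, 40.0, 51.25),
    "S14": (43.89, 42.22, 59.38, 65.63),
}


def session_means(scores):
    """Mean percent correct across subjects for each test session."""
    n_subjects = len(scores)
    return [
        sum(subject[i] for subject in scores.values()) / n_subjects
        for i in range(len(SESSIONS))
    ]


def mean_change(scores, start, end):
    """Mean within-subject change between two sessions (indices into SESSIONS)."""
    return sum(s[end] - s[start] for s in scores.values()) / len(scores)


for name, mean in zip(SESSIONS, session_means(FEST_SCORES)):
    print(f"{name}: {mean:.1f}")
print(f"Pretest 1 to Pretest 2: {mean_change(FEST_SCORES, 0, 1):+.2f}")
print(f"Pretest 2 to Posttest:  {mean_change(FEST_SCORES, 1, 2):+.2f}")
print(f"Posttest to Retention:  {mean_change(FEST_SCORES, 2, 3):+.2f}")
print(f"Pretest 1 to Retention: {mean_change(FEST_SCORES, 0, 3):+.2f}")
```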

In a sound-treated booth, all auditory stimuli were presented in a sound field at 70 dB SPL, through a single loudspeaker positioned at 45 degrees to the implanted ear of each participant, who was sitting one meter away. The nonimplanted ear was occluded with an E-A-R Classic disposable foam earplug (NRR 29 dB; 3M, Saint Paul, MN) to avoid potential residual hearing effects from the contralateral side. Each testing session took approximately 2 hr.

Environmental sound training took place between the Pretest 2 and Posttest sessions, and consisted of four sessions conducted at the subject's home on four different days during a single week. Training stimuli were selected individually for each subject on the basis of their performance on Pretest 2 using the following procedure: Half of all sound sources identified by a given subject with accuracy of 50% or less were used during training (Difficult-Trained, or D-TR), whereas the other half (Difficult-Not trained, or D-NT) were used only during follow-up testing to evaluate generalization of training effects. Similarly, only two of the four tokens representing each D-TR sound source in FEST were used during training (D-TR-used), supplemented by two novel sound tokens used during training only, which were added to increase intertoken variability. For instance, if one half, or 20, of all 40 sound sources were identified with accuracy of 50% or less by a given subject, 10 of these sources would be assigned to training, and the training set for this subject would include, for each of the 10 sources, two tokens taken from the test and two additional tokens of the same source drawn from a large environmental sound database (Shafiro, 2008) and used only during the training sessions.
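The selection procedure can be sketched as follows. This is a minimal illustration rather than the software used in the study: the function names are hypothetical, and two details that the text does not specify are assumed, namely that the difficult sources are split into halves at random and that an odd number of difficult sources is rounded down for the trained half.

```python
import random


def assign_sources(pretest2_accuracy, threshold=50.0, seed=0):
    """Split sound sources into D-TR, D-NT, and E-NT groups.

    pretest2_accuracy maps a source name to its Pretest 2 percent correct.
    """
    rng = random.Random(seed)
    difficult = sorted(s for s, acc in pretest2_accuracy.items() if acc <= threshold)
    easy = sorted(s for s, acc in pretest2_accuracy.items() if acc > threshold)
    rng.shuffle(difficult)        # assumed: halves are chosen at random
    half = len(difficult) // 2    # assumed: odd counts round down
    return {
        "D-TR": sorted(difficult[:half]),
        "D-NT": sorted(difficult[half:]),
        "E-NT": easy,
    }


def split_tokens(fest_tokens, seed=0):
    """Choose two of a source's four FEST tokens for training (D-TR-used);
    the remaining two are kept for testing only (D-TR-alt)."""
    rng = random.Random(seed)
    used = rng.sample(fest_tokens, 2)
    alt = [t for t in fest_tokens if t not in used]
    return used, alt


# Worked example from the text: 20 of 40 sources at or below 50% correct.
accuracy = {f"source_{i:02d}": (25.0 if i < 20 else 75.0) for i in range(40)}
groups = assign_sources(accuracy)
print({name: len(members) for name, members in groups.items()})
# Each D-TR source contributes 2 FEST tokens plus 2 novel tokens to training.
print("training stimuli:", len(groups["D-TR"]) * (2 + 2))
```

With 20 difficult sources, this yields 10 D-TR sources, 10 D-NT sources, and 20 E-NT sources, and a training set of 40 stimuli.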

During each training session, all stimuli in a subject's training set, thus obtained, were presented in three consecutive blocks, once per block, in a random order using the same interface as used during testing. On every trial, after entering a response, subjects received correct-response feedback. If an incorrect response was given, the correct response was provided and subjects replayed the stimulus three times before advancing to the next trial. A bar graph displayed the percent-correct score for the current block and was updated on every trial; another bar graph showing block scores for the current session was displayed at the end of each block. Training sessions were approximately 40–60 min long. Training stimuli were presented using two portable loudspeakers (Logitech V-10; Newark, CA) set on each side of the laptop within approximately one meter of the patient's implant. Patients were instructed to set the speakers to a comfortable listening level prior to beginning the training regimen. The remaining two FEST tokens of D-TR sources (D-TR-alt) were used only during testing. Similarly, sounds from sources that were identified with accuracy above 50% correct were also used only during the four testing sessions (Easy-Not trained, or E-NT).
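The structure of a single training session described above can be summarized in a short sketch. It is a schematic under stated assumptions, not the training software itself: `play`, `get_response`, and `show_score` are hypothetical callbacks standing in for audio playback, the response screen, and the running bar graph, and the example listener is simulated.

```python
import random

N_BLOCKS = 3   # consecutive blocks per session
N_REPLAYS = 3  # replays of the stimulus after an incorrect response


def run_session(training_set, play, get_response, show_score=None, seed=None):
    """Run one session and return the percent-correct score for each block.

    training_set: non-empty list of (token_id, correct_label) pairs.
    """
    rng = random.Random(seed)
    block_scores = []
    for _block in range(N_BLOCKS):
        order = list(training_set)
        rng.shuffle(order)  # each stimulus once per block, in random order
        n_correct = 0
        for trial, (token, label) in enumerate(order, start=1):
            play(token)
            if get_response(token) == label:
                n_correct += 1
            else:
                # The correct answer is shown, then the sound is replayed.
                for _replay in range(N_REPLAYS):
                    play(token)
            if show_score is not None:
                show_score(100.0 * n_correct / trial)  # updated on every trial
        block_scores.append(100.0 * n_correct / len(order))
    return block_scores


# Simulated listener who always answers "dog barking".
plays = []
demo_set = [("dog_1", "dog barking"), ("door_1", "door closing")]
scores = run_session(demo_set, plays.append, lambda token: "dog barking", seed=1)
print(scores, len(plays))
```

In this toy run, the simulated listener is correct on one of two stimuli per block, and each missed stimulus is presented four times in total (the initial presentation plus three replays).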

Results

Percent-correct scores on the two speech tests and one environmental sound test were analyzed in three separate one-way analyses of variance to evaluate changes in performance across all four test sessions. When main effects were found, planned comparisons were carried out to determine the significance of changes between consecutive sessions with and without intervening training. For environmental sounds, additional analyses were conducted to evaluate generalization of training effects by comparing changes in performance in sounds that were used during training with those that were not.

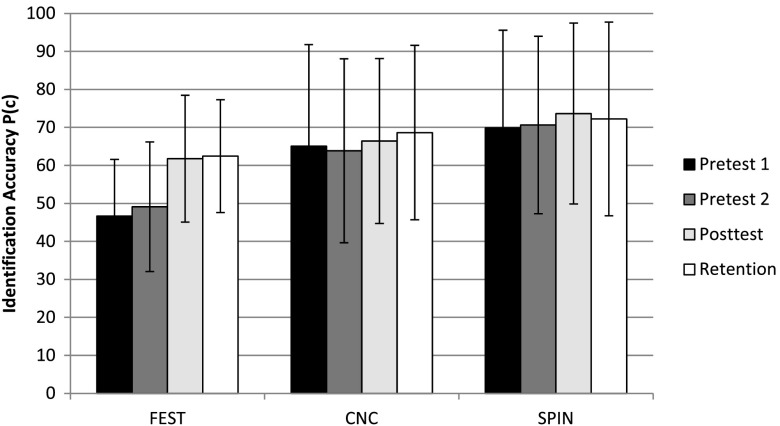

Training-Related Changes

Across the four test sessions, the highest overall improvement in performance, 15.8 percentage points, was observed for environmental sounds, the sound class that was trained, F(1, 13) = 52.094, p < .0001, η² = .67. In contrast, the changes in performance on the two speech tests, CNC and SPIN-R, were considerably smaller (3.6 and 2.6 points, respectively) and not significant (p > .05; see Figure 2). Planned comparisons for environmental sound scores between Pretest 1 and Pretest 2, when listeners received no training, revealed that the 2.5-percentage-point increase in performance was not significant (p > .50). However, following training, FEST scores improved significantly between Pretest 2 and Posttest, by 12.6 percentage points on average, F(1, 13) = 37.067, p < .0001, η² = .74. This pattern indicates that the improvement in environmental sound identification following training could not be accounted for by gradual improvement due only to repeated exposure to the sounds during testing. Importantly, there was no significant difference in FEST scores between Posttest and Retention (i.e., a 0.66-percentage-point change), demonstrating that subjects did not continue to improve without additional training. Nevertheless, they retained their training-induced improvement in environmental sound identification even after training was stopped.
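As a consistency check on the Pretest 2 to Posttest comparison, the effect size can be related to the F ratio. The relation below assumes that the reported effect size is a partial eta squared, which the text does not state explicitly; under that assumption, the reported values agree:

$$
\eta_p^2 = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}} = \frac{37.067 \times 1}{37.067 \times 1 + 13} \approx .74
$$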

Figure 2.

Performance accuracy across testing sessions for the familiar environmental sound test (FEST) and two speech tests (CNC and SPIN). Error bars represent ± one standard deviation.

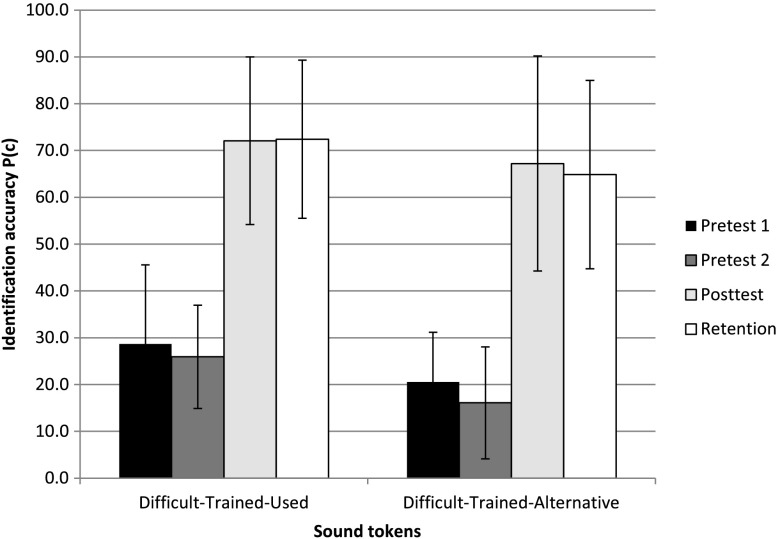

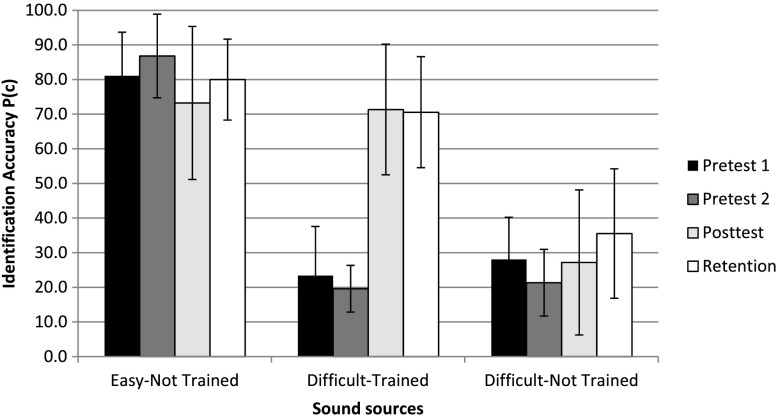

Analysis of sound token and sound source categories revealed that the highest training-related improvement of 46.1 percentage points was observed for the environmental sound tokens that were used during the training sessions (D-TR-used). As shown in Figure 3, this improvement was accompanied by a similarly large improvement of 51.1 points in the alternative tokens that were not used during training (D-TR-alt), but referenced the training sound sources (e.g., different kinds of dog barking sounds). Both D-TR-used and D-TR-alt improvements were significant (p < .05). This suggests that following training, listeners were able to generalize relevant perceptual cues beyond specific sound tokens as long as they referenced the same sound sources. On the other hand, as shown in Figure 4, generalization effects to nontrained sound sources (D-NT) were much smaller. Identification of D-NT sources (averaged across all four corresponding tokens) that were not included in the training sets increased by only 5.8 percentage points between Pretest 2 and Posttest, which was not significant. This contrasts sharply with an overall increase of 51.7 points for D-TR sound sources that were part of the training set. Only the latter improvement for D-TR sound sources was significant at p < .05.

Figure 3.

Identification accuracy across four test sessions for the two subgroups of the Difficult-Trained (D-T) sound sources: Difficult-Trained-used (D-T-used), that is, two tokens of each source selected for training and used during training, and Difficult-Trained-alternative (D-T-alt), that is, two tokens of each source selected for training but not used during training. Error bars represent ± one standard deviation of the mean.

Figure 4.

Environmental sound identification accuracy across four test sessions for three sound-source categories: (a) high-accuracy sounds that were not trained (Easy-Not Trained); (b) low-accuracy sounds that were later used during training (Difficult-Trained); and (c) low-accuracy sounds that were not trained (Difficult-Not Trained). Error bars represent ± one standard deviation of the mean.

In contrast, identification of E-NT sound sources, which were identified with a high accuracy of 86.8% on Pretest 2, decreased significantly (p < .05) to 73.2% following training. Such a decrease, although unexpected, could perhaps reflect random fluctuations in listener performance with familiar and identifiable sounds. This interpretation is supported by a later increase in performance for E-NT sources to 80% in the Retention session, which is not significantly different from the 81.1% accuracy for E-NT sources during Pretest 1. Alternatively, the decrease in this category following the training sessions might result from listeners' greater focus on the sounds with which they practiced during training, making them less attentive to other, more familiar sounds.

Variability Among Sounds

As in previous studies (Inverso & Limb, 2010; Shafiro et al., 2011), there was large variability in performance among individual sound sources. Identification accuracy of 20 of the 40 sound sources in the test was below 50% correct during Pretest 1 (see Table 2). However, at the last test session (Retention), the number of sounds identified with accuracy of less than 50% was reduced to eight. The five least recognizable sounds at Pretest 1 were all initially identified with accuracy of less than 20% correct: “brushing teeth” (5.4%), “zipper” (7.1%), “camera taking a picture” (16.1%), “blowing nose” (16.1%), and “airplane flying” (19.6%). Although all of these sounds improved by between 10.7 (“blowing nose”) and 39.3 (“camera taking a picture”) percentage points, three of them (“brushing teeth,” “zipper,” and “blowing nose”) remained among the five least accurate sounds even at the final Retention session, whereas “airplane flying” was the sixth lowest. Nevertheless, identification accuracy of the majority of sound sources improved, with 28 sounds improving by 10 percentage points or more between Pretest 1 and Retention. The five sounds with the greatest improvements, between 30.4 and 42.9 percentage points from Pretest 1 to Retention, were “bowling strike,” “camera taking a picture,” “brushing teeth,” “clapping,” and “clock ticking.” As in prior work, these sounds are inharmonic and appear to demonstrate distinct envelope patterning cues, which would be available to CI patients. On the other hand, other initially poorly identified sounds did not improve considerably. For instance, “wind blowing” and “blowing nose,” which were identified with accuracy of 21.4% and 16.1%, respectively, improved by only 3.6 and 10.7 points, respectively.
For other sounds with initially poor identification such as “airplane flying” and “zipper,” which were identified at Pretest 1 with the accuracy of 19.6% and 7.1%, respectively, overall accuracy remained low even at Retention despite an improvement of 26.8 points each after training. This result could be because of their generic envelope pattern, which can be attributed to a broad array of inharmonic noisy sounds, making these sounds easily confusable.

Table 2.

Average environmental sound identification accuracy and associated change between the first (Pretest 1) and the last (Retention) testing sessions.

| Sound source | Pretest 1 | Retention | Improvement |
|---|---|---|---|
| brushing teeth | 5.4 (10.6) | 37.5 (40.1) | 32.1 |
| zipper | 7.1 (11.7) | 33.9 (40) | 26.8 |
| camera taking picture | 16.1 (21) | 55.4 (44) | 39.3 |
| blowing nose | 16.1 (21) | 26.8 (31.7) | 10.7 |
| airplane flying | 19.6 (32.8) | 46.4 (39) | 26.8 |
| thunder | 21.4 (32.8) | 35.7 (41.3) | 14.3 |
| wind blowing | 21.4 (29.2) | 25 (25.9) | 3.6 |
| bowling strike | 23.2 (24.9) | 66.1 (34.8) | 42.9 |
| ice cubes into glass | 28.6 (29.2) | 55.4 (29.7) | 26.8 |
| pouring soda into cup | 30.4 (34.2) | 55.4 (41.8) | 25.0 |
| yawning | 30.4 (35.6) | 46.4 (39) | 16.1 |
| typing | 33.9 (37.5) | 50 (47) | 16.1 |
| clapping | 37.5 (30.6) | 67.9 (35.9) | 30.4 |
| clock ticking | 39.3 (32.1) | 69.6 (31.3) | 30.4 |
| car starting | 39.3 (36.3) | 62.5 (44.7) | 23.2 |
| sighing | 39.3 (28.9) | 46.4 (39) | 7.1 |
| snoring | 41.1 (30.4) | 57.1 (34.6) | 16.1 |
| toilet flushing | 41.1 (30.4) | 55.4 (38.2) | 14.3 |
| coughing | 46.4 (30.8) | 64.3 (23.4) | 17.9 |
| gargling | 48.2 (47.5) | 73.2 (42.1) | 25.0 |
| glass breaking | 51.8 (33.2) | 62.5 (38.9) | 10.7 |
| burp | 53.6 (32.3) | 73.2 (31.7) | 19.6 |
| horse neighing | 53.6 (32.3) | 67.9 (34.6) | 14.3 |
| clearing throat | 55.4 (36.9) | 73.2 (34.6) | 17.9 |
| car horn | 55.4 (31.3) | 73.2 (24.9) | 17.9 |
| siren | 55.4 (32.8) | 67.9 (35.9) | 12.5 |
| machine gun | 55.4 (17.5) | 64.3 (35) | 8.9 |
| telephone ringing | 57.1 (31.7) | 58.9 (36.2) | 1.8 |
| helicopter flying | 58.9 (34.8) | 69.6 (35.6) | 10.7 |
| birds chirping | 60.7 (33.6) | 62.5 (37.7) | 1.8 |
| cow mooing | 62.5 (35) | 85.7 (23.4) | 23.2 |
| baby crying | 64.3 (38.9) | 82.1 (30.1) | 17.9 |
| footsteps | 69.6 (29.7) | 91.1 (15.8) | 21.4 |
| rooster | 69.6 (44) | 76.8 (36) | 7.1 |
| sneezing | 69.6 (29.7) | 73.2 (34.6) | 3.6 |
| door closing | 71.4 (25.7) | 75 (24) | 3.6 |
| dog barking | 73.2 (31.7) | 82.1 (24.9) | 8.9 |
| laughing | 78.6 (30.8) | 83.9 (28.8) | 5.4 |
| doorbell | 82.1 (30.1) | 89.3 (21.3) | 7.1 |
| telephone busy signal | 100 (0) | 98.2 (6.7) | -1.8 |

Note. Sounds are arranged from lowest to highest accuracy on Pretest 1. Numbers in parentheses represent associated standard deviations obtained for each sound across all subjects.
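The summary counts quoted in the text for Table 2 can be reproduced directly from the tabulated values. The following Python sketch is illustrative only: the mean scores are transcribed from the table (standard deviations omitted), the reported Improvement column is used as given rather than recomputed from the rounded means, and all variable names are hypothetical.

```python
# (sound source, Pretest 1 %, Retention %, reported improvement), transcribed from Table 2.
TABLE_2 = [
    ("brushing teeth", 5.4, 37.5, 32.1), ("zipper", 7.1, 33.9, 26.8),
    ("camera taking picture", 16.1, 55.4, 39.3), ("blowing nose", 16.1, 26.8, 10.7),
    ("airplane flying", 19.6, 46.4, 26.8), ("thunder", 21.4, 35.7, 14.3),
    ("wind blowing", 21.4, 25.0, 3.6), ("bowling strike", 23.2, 66.1, 42.9),
    ("ice cubes into glass", 28.6, 55.4, 26.8), ("pouring soda into cup", 30.4, 55.4, 25.0),
    ("yawning", 30.4, 46.4, 16.1), ("typing", 33.9, 50.0, 16.1),
    ("clapping", 37.5, 67.9, 30.4), ("clock ticking", 39.3, 69.6, 30.4),
    ("car starting", 39.3, 62.5, 23.2), ("sighing", 39.3, 46.4, 7.1),
    ("snoring", 41.1, 57.1, 16.1), ("toilet flushing", 41.1, 55.4, 14.3),
    ("coughing", 46.4, 64.3, 17.9), ("gargling", 48.2, 73.2, 25.0),
    ("glass breaking", 51.8, 62.5, 10.7), ("burp", 53.6, 73.2, 19.6),
    ("horse neighing", 53.6, 67.9, 14.3), ("clearing throat", 55.4, 73.2, 17.9),
    ("car horn", 55.4, 73.2, 17.9), ("siren", 55.4, 67.9, 12.5),
    ("machine gun", 55.4, 64.3, 8.9), ("telephone ringing", 57.1, 58.9, 1.8),
    ("helicopter flying", 58.9, 69.6, 10.7), ("birds chirping", 60.7, 62.5, 1.8),
    ("cow mooing", 62.5, 85.7, 23.2), ("baby crying", 64.3, 82.1, 17.9),
    ("footsteps", 69.6, 91.1, 21.4), ("rooster", 69.6, 76.8, 7.1),
    ("sneezing", 69.6, 73.2, 3.6), ("door closing", 71.4, 75.0, 3.6),
    ("dog barking", 73.2, 82.1, 8.9), ("laughing", 78.6, 83.9, 5.4),
    ("doorbell", 82.1, 89.3, 7.1), ("telephone busy signal", 100.0, 98.2, -1.8),
]

# Sounds identified below 50% correct at each of the two sessions.
below_50_pretest = [name for name, pre, _, _ in TABLE_2 if pre < 50]
below_50_retention = [name for name, _, ret, _ in TABLE_2 if ret < 50]

# Sounds whose reported improvement is 10 percentage points or more.
improved_10_plus = [name for name, _, _, gain in TABLE_2 if gain >= 10]

# Five sounds with the largest reported improvement (ties keep table order).
top_five = [row[0] for row in sorted(TABLE_2, key=lambda row: row[3], reverse=True)[:5]]

print(len(below_50_pretest), len(below_50_retention), len(improved_10_plus))
print(top_five)
```

Run against the table as transcribed, the counts correspond to the 20, eight, and 28 sounds cited in the text, and the five largest gains correspond to the five sounds named there.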

Group Performance and Variability Among Listeners

Large individual differences were obtained on speech and environmental sound performance as well as in the magnitude of training effects (see Table 3). Environmental sound scores, averaged across the four test sessions, varied widely across listeners (M = 55%, range = 21.9%–96.4%). Although all subjects showed a positive change in environmental sound perception between Pretest 2 and Posttest, the magnitude of change varied from 1.9 to 29.4 percentage points across subjects and, in a correlation analysis, appeared unrelated to their initial FEST performance or to any other performance or demographic variable.

Similarly, for speech, individual scores across test sessions ranged from drastically reduced to nearly perfect on the CNC monosyllabic word test (M = 66%, range = 21%–97.25%) and the SPIN-R sentence test (M = 71.7%, range = 11%–97.7%). However, for the SPIN-R test averaged across the four test sessions, all but one of the 14 subjects demonstrated higher accuracy on the high-predictability than on the low-predictability sentences, with an average high-predictability advantage of 12.1 percentage points. Finally, as found in previous work (Inverso & Limb, 2010; Shafiro et al., 2011), all three speech tests (BKB-SIN, SPIN, and CNC) moderately correlated with the environmental sound test scores (absolute correlation values in the range of 0.45–0.66).

Discussion

This study examined the effect of environmental sound training on the perception of speech and environmental sounds by experienced adult users of CIs. Results indicate that following a short four-session training regimen, there was a significant overall improvement in environmental sound identification. The highest improvement was observed for those environmental sounds that were part of the training set. This improvement generalized to all tokens of the trained sounds; the magnitude of improvement was equally large for those tokens of the trained sounds that were included in the training set (D-TR-used) as for those that were not (D-TR-alt). On the other hand, initially poorly recognized environmental sounds whose tokens were not part of the training set did not improve significantly following the training. Thus, generalization of training effects was limited to the tokens of the trained sounds. Similarly, no significant improvement was observed for either of the two speech tests that assessed perception of isolated monosyllabic words or words in sentences.

It should be noted that the design of the present study did not include a control group that received no training, thus preventing more conclusive evaluation of training-induced effects. However, the lack of significant training effects for environmental sounds and speech stimuli that were not part of the training set, as well as the absence of any significant changes in either speech or environmental sound perception during study intervals without training (i.e., Pretest 1–Pretest 2 and Posttest–Retention), suggest that improvements in the identification of trained environmental sounds following the training interval could not have arisen solely due to repeated testing.

These results further indicate, in line with previous findings, that environmental sound identification remains problematic even for experienced CI patients. The FEST identification accuracy at the initial test session was 46.7%, which is similar to the values previously reported for CI patients with environmental sound tests of comparable set size (Inverso & Limb, 2010; Looi & Arnephy, 2010; Shafiro et al., 2011). The present findings with CI patients are also consistent with those previously obtained with NH listeners tested with CI vocoder simulations (Loebach & Pisoni, 2008; Shafiro et al., 2012), in that a significant and substantial improvement in perception of environmental sounds, the sound class which was trained, was obtained. However, in contrast to both previous studies with CI simulations, training effects did not generalize to speech or other nontrained environmental sounds. For instance, in Shafiro et al. (2012), when NH listeners were tested with the same materials using CI vocoder simulations and the same training protocol, there was a significant 7-point mean improvement in the perception of D-NT sounds. Although the magnitude of improvement in that study was comparable to that in the present sample of CI listeners (i.e., 5.8 points), it was not significant in the present study. The lack of significance could result from the large variability of CI user performance compared with performance by NH listeners.

Similarly, despite slight improvements in performance between the first and the last sessions for the two speech tests (i.e., 3.6 and 2.3 points for CNC and SPIN-R, respectively), these changes were not significant. It should be noted, however, that even with the vocoded stimuli tested by Shafiro et al. (2012), changes in speech scores were also small, all under 10 points. Furthermore, unlike naïve NH listeners tested with CI simulations that they had never experienced before, the present CI patients had on average 5 years of experience with electric hearing. Thus, their ability to improve with speech sounds, the sound class that was not part of the training, is likely to be greatly attenuated. Nevertheless, it is possible that a generalization effect for speech, similar to that observed earlier with CI simulations (Loebach & Pisoni, 2008; Shafiro et al., 2012), could be obtained with a longer training regimen. For experienced CI patients, four 1-hr sessions may not be enough for more pervasive neuroplastic changes in auditory perception to take place.

In addition to the extensive practice with electric hearing that the present CI patients had, the differences in the magnitude and extent of training and generalization effects may be due to the age and the cognitive and basic auditory abilities of the subjects. As a group, the present subjects were considerably older than the NH adults tested in previous simulation studies, and they were likely to have reduced cognitive abilities (Humes & Dubno, 2010). In addition, for all the subjects, implantation followed an extended period of hearing impairment that could affect sound representation in memory and their ability to link remaining auditory representations with new sensory experiences delivered through the CIs. It is thus remarkable that after only four short training sessions, CI patients demonstrated a considerable improvement in environmental sound perception.

Much of that improvement appears to be driven by the environmental sounds used as part of the training set. Indeed, the change in scores for these sounds was 51.7 points following training, and as mentioned, included both trained and untrained tokens of these sounds. This appears considerably larger than the improvements that have been previously observed for any speech stimuli in experienced CI patients following speech training. Such substantial increases in environmental sound identification abilities may partly be attributable to the difference in the roles speech and environmental sounds play in the daily lives of CI patients. In speech communications, listeners are typically aware of whether or not someone is speaking to them and know if they can understand the speaker. In contrast, listeners may not be aware of many common environmental sounds that may be occurring around them even after these sounds become audible following implantation. Explicitly focusing listeners' attention on specific environmental sounds during training may thus enable them to connect sensory experiences of these sounds with their existing memory representations. This positive effect appears to persist even after the training has been discontinued (see Figure 2).

Environmental sounds differed considerably in terms of their difficulties for CI patients. Whereas sounds such as “footsteps,” “laughing,” “doorbell,” or “telephone busy signal” were identified with an accuracy of more than 80%, others such as “brushing teeth,” “zipper,” “blowing nose,” or “wind” were poorly identified, with less than 26% accuracy. This grouping of sounds is similar to that found in Shafiro et al. (2011). Variation in the perceptual difficulty of different sounds is likely to result from several factors. Chief among those may be the sounds' acoustic distinctiveness. Accurately identified sounds appear to have a distinct pattern of dynamic variation in energy envelope and gross spectral features so that the sounds are not easily confused. On the other hand, poorly identified sounds are less distinct in their temporal and spectral features (e.g., “wind”). Sounds may also differ in the sound level at which they are typically heard in the environment. For instance, “zipper” sounds are quiet and may be below many CI users' hearing thresholds. Similarly, sounds of “brushing teeth” and “blowing nose,” which may seem loud and familiar to most people, may sound much quieter to CI patients due to much smaller or absent bone conduction effects. Furthermore, in their daily life, listeners may have more exposure to some sounds than to others. For instance, patients may not be wearing implants while brushing their teeth. Even sounds that are common and seemingly distinct in their temporal characteristics, for example, “clapping,” “helicopter flying,” or “typing” were not identified with high accuracy, whereas others that are not commonly heard in urban settings and not necessarily as distinct acoustically were identified with a higher accuracy (e.g., “cow mooing,” “rooster”). 
It appears that a variety of factors, including audibility, acoustic characteristics of sounds, and frequency of occurrence in an individual's daily life, may influence environmental sound identification.

Conclusions and Future Directions

The present study is the first attempt to investigate the effect of environmental sound training on CI patients. It demonstrated that, whereas environmental sound perception poses considerable difficulty even for experienced CI patients, it could be improved with computer-based training, and that the positive changes in environmental sound perception persisted 1 week after training stopped. Although it is unknown what the long-term impact of the training might be, the present findings suggest that computer-based environmental sound training presents an effective, low-cost rehabilitative option for CI patients. Despite variation in age, cognitive abilities, and hearing etiology, all patients were able to carry out the training regimen in the privacy of their homes without assistance. This training situation approximates the conditions in which subjects would likely use commercially available training software and indicates the efficacy of this training approach. However, several questions remain unanswered. The wide variation in subjects' performance and magnitude of training effects indicates that some CI patients are able to benefit from computer-based training more than others. Determining factors that influence the ability to benefit from training will be useful for deciding the best kind of rehabilitation approach for a specific patient. The present study focused on adult experienced CI patients with postlingual implants. It is likely that other CI patient groups such as children or CI patients whose implants were recently activated may also benefit from formal computer-based environmental sound training. In addition, adult CI patients with prelingual deafness, who typically do not develop the ability to understand speech, may be able to benefit from such training. This is especially important because environmental sounds signal events in listeners' environment that may be critical for one's safety. 
Better awareness of one's environment may also lead to better quality of life for CI patients.

Acknowledgments

This work was funded by National Institute on Deafness and Other Communication Disorders Grant DC008676 awarded to Valeriy Shafiro. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We are grateful to all subjects with cochlear implants for their listening expertise, with a special thank you to the physicians and patients of the Chicago Ear Institute for their participation and encouragement.


References

- Alpiner J., & McCarthy P. (2009). History of adult audiologic rehabilitation: The past as prologue. In Montano J., & Spitzer J. (Eds.), Adult audiologic rehabilitation (pp. 3–24). San Diego, CA: Plural Publishing.

- Dawson P. W., & Clark G. M. (1997). Changes in synthetic and natural vowel perception after specific training for congenitally deafened patients using a multichannel cochlear implant. Ear and Hearing, 18, 488–501.

- Elliott L. L. (1995). Verbal auditory closure and the Speech Perception in Noise (SPIN) test. Journal of Speech and Hearing Research, 38, 1363–1376.

- Etymotic Research. (2005). Bamford-Kowal-Bench Speech-in-Noise Test (Version 1.03) [Audio CD]. Elk Grove Village, IL: Author.

- Fu Q.-J., & Galvin J. J. (2007). Perceptual learning and auditory training in cochlear implant recipients. Trends in Amplification, 11, 193–205.

- Gaver W. W. (1993). What in the world do we hear?: An ecological approach to auditory event perception. Ecological Psychology, 5, 1–29.

- Gfeller K., Witt S. W., Kim K.-H., Adamek M., & Coffman D. (1999). Preliminary report of a computerized music training program for adult cochlear implant recipients. Journal of the Academy of Rehabilitation Audiology, 32, 11–27.

- Goldsworthy R. L., & Shannon R. V. (2014). Training improves cochlear implant rate discrimination on a psychophysical task. The Journal of the Acoustical Society of America, 135, 334–341.

- Gygi B., Kidd G., & Watson C. (2004). Spectral-temporal factors in the identification of environmental sounds. The Journal of the Acoustical Society of America, 115, 1252–1265.

- Henshaw H., & Ferguson M. A. (2013). Efficacy of individual computer-based auditory training for people with hearing loss: A systematic review of the evidence. PLoS ONE, 8(5), e62836.

- Humes L. E., & Dubno J. R. (2010). Factors affecting speech understanding in older adults. In Gordon-Salant S., Frisina R. D., Popper A. N., & Fay R. R. (Eds.), The aging auditory system (pp. 211–258). New York, NY: Springer.

- Ingvalson E. M., Lee B., Fiebig P., & Wong P. C. (2013). The effects of short-term computerized speech-in-noise training on post-lingually deafened adult cochlear implant recipients. Journal of Speech, Language, and Hearing Research, 56, 81–88.

- Institute of Electrical and Electronics Engineers. (1969). IEEE recommended practice for speech quality measurements. IEEE Transactions on Audio and Electroacoustics, 17, 225–246.

- Inverso Y., & Limb C. J. (2010). Cochlear implant-mediated perception of nonlinguistic sounds. Ear and Hearing, 31, 505–514.

- Loebach J. L., & Pisoni D. B. (2008). Perceptual learning of spectrally degraded speech and environmental sounds. The Journal of the Acoustical Society of America, 123, 1126–1139.

- Looi V., & Arnephy J. (2010). Environmental sound perception of cochlear implant users. Cochlear Implants International, 11, 203–227.

- Marcell M. M., Borella D., Greene J., Kerr E., & Rogers S. (2000). Confrontation naming of environmental sounds. Journal of Clinical and Experimental Neuropsychology, 22, 830–864.

- Miller J. D., Watson C. S., Kistler D. J., Wightman F. L., & Preminger J. E. (2008). Preliminary evaluation of the Speech Perception Assessment and Training System (SPATS) with hearing-aid and cochlear-implant users. Proceedings of Meetings on Acoustics, 2, 1–9.

- Nilsson M., Soli S. D., & Sullivan J. A. (1994). Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. The Journal of the Acoustical Society of America, 95, 1085–1099.

- Oba S. I., Fu Q. J., & Galvin J. J. (2011). Digit training in noise can improve cochlear implant users' speech understanding in noise. Ear and Hearing, 32, 1–9.

- Oxenham A. J. (2008). Pitch perception and auditory stream segregation: Implications for hearing loss and cochlear implants. Trends in Amplification, 12, 316–331.

- Peterson G., & Lehiste I. (1962). Revised CNC list for auditory tests. Journal of Speech and Hearing Disorders, 27, 62–70.

- Pizarek R., Shafiro V., & McCarthy P. (2013). Effect of computerized auditory training on speech perception of adults with hearing impairment. Perspectives on Aural Rehabilitation and Its Instrumentation, 20, 91–106.

- Proops D. W., Donaldson I., Cooper H. R., Thomas J., Burrell S. P., Stoddart R. L., … Cheshire I. M. (1999). Outcomes from adult implantation, the first 100 patients. Journal of Laryngology and Otology, 113, 5–13.

- Ramsdell D. A. (1978). The psychology of the hard-of-hearing and the deafened adult. In Davis H., & Silverman S. R. (Eds.), Hearing and deafness (pp. 499–510). New York, NY: Holt, Rinehart and Winston.

- Reed C. M., & Delhorne L. A. (2005). Reception of environmental sounds through cochlear implants. Ear and Hearing, 26, 48–61.

- Shafiro V. (2008). Development of a large-item environmental sound test and the effects of short-term training with spectrally-degraded stimuli. Ear and Hearing, 29, 775–790.

- Shafiro V., & Gygi B. (2004). How to select stimuli for environmental sound research and where to find them? Behavior Research Methods, Instruments and Computers, 36, 590–598.

- Shafiro V., Gygi B., Cheng M., Vachhani J., & Mulvey M. (2011). Perception of environmental sounds by experienced cochlear implant patients. Ear and Hearing, 32, 511–523.

- Shafiro V., Sheft S., Gygi B., & Ho K. (2012). The influence of environmental sound training on the perception of spectrally degraded speech and environmental sounds. Trends in Amplification, 16(2), 83–101.

- Shannon R. V., Zeng F., Kamath V., Wigonski J., & Ekelid M. (1995, October 13). Speech recognition with primarily temporal cues. Science, 270, 303–304.

- Stacey P. C., Raine C. H., O'Donoghue G. M., Tapper L., Twomey T., & Summerfield A. Q. (2010). Effectiveness of computer-based auditory training for adult users of cochlear implants. International Journal of Audiology, 49, 347–356.

- Truax B. (2001). Acoustic communication. Westport, CT: Ablex Publishing.

- Tyler R. S., Moore B., & Kuk F. (1989). Performance of some of the better cochlear-implant patients. Journal of Speech, Language, and Hearing Research, 32, 887–911.

- Wu J. L., Yang H. M., Lin Y. H., & Fu Q. J. (2007). Effects of computer-assisted speech training on Mandarin-speaking hearing-impaired children. Audiology and Neuro-Otology, 12(5), 307–312.

- Zhang T., Dorman M. F., Fu Q. J., & Spahr A. J. (2012). Auditory training in patients with unilateral cochlear implant and contralateral acoustic stimulation. Ear and Hearing, 33, e70–e79.